Abstract

We describe a method for computing an atlas for the stable or unstable manifold attached to an equilibrium point and implement the method for the saddle-focus libration points of the planar equilateral restricted four-body problem. We employ the method at the maximally symmetric case of equal masses, where we compute atlases for both the stable and unstable manifolds. The resulting atlases comprise thousands of individual chart maps, with each chart represented by a two-variable Taylor polynomial. Post-processing the atlas data yields approximate intersections of the invariant manifolds, which we refine via a shooting method for an appropriate two-point boundary value problem (BVP). Finally, we apply numerical continuation to some of these BVPs. This breaks the symmetry of the equal-mass case and leads to connecting orbits for some unequal values of the primary masses.



1 Introduction

Illuminating studies by Darwin, Strömgren, and Moulton in the first decades of the twentieth century established the importance of numerical calculations in the qualitative theory of Hamiltonian systems (Darwin 1897; Strömgren 1934; Moulton et al. 1920). In particular, their work gave new insights into the orbit structure of the circular restricted three-body problem (CRTBP), a problem already immortalized by Poincaré. Interest in the CRTBP was reinvigorated in the 1960s with the inauguration of the space race, and a number of authors, including Szebehely and Nacozy (1967) and Szebehely and Flandern (1967), harnessed the newly available power of digital computing to settle some questions raised by Strömgren. The interested reader will find a delightful retelling of this story with many additional references in the book of Szebehely (1967).

Motivated by the works just mentioned, in 1973 Henrard proved a theorem settling a conjecture of Strömgren about the role of asymptotic orbits. More precisely, Henrard showed that the existence of a transverse homoclinic for a saddle-focus equilibrium in a two-degree-of-freedom Hamiltonian system implies the existence of a tube of periodic orbits parameterized by energy and accumulating to the homoclinic (Henrard 1973). In the same paper he showed that the period of the orbits in the family goes to infinity and their stability changes infinitely often as they accumulate to the homoclinic. This phenomenon was called the blue sky catastrophe by Abraham (1985) and has been studied by a number of authors including Shilnikov et al. (2014), Devaney (1977).

In 1976 it was further shown by Devaney that such a transverse homoclinic—again for a saddle-focus in a two-degree-of-freedom Hamiltonian system—implies the existence of chaotic dynamics in the energy level of the equilibrium (Devaney 1976). See also the works of Lerman (1991, 2000). Such theorems should be thought of as Hamiltonian versions of the homoclinic bifurcations studied by Shilńikov (1967, 1970a, b). Taken together the results cited so far paint a vivid picture of the rich dynamics near a transverse homoclinic connection in a two-degree-of-freedom Hamiltonian system.

The present study concerns asymptotic orbits in the planar equilateral restricted four-body problem, henceforth referred to as the circular restricted four-body problem (CRFBP). The problem has a rich literature dating at least back to the work of Pedersen (1944, 1952). Detailed numerical studies of the equilibrium set, as well as the planar and spatial Hill’s regions, are found in Simó (1978), in Baltagiannis and Papadakis (2011a), and in Álvarez-Ramírez and Vidal (2009). Mathematically rigorous theorems about the equilibrium set and its bifurcations are proven by Leandro (2006), Barros and Leandro (2011, 2014) (with computer assistance). They show that for any value of the masses there are either 8, 9, or 10 equilibrium solutions with 6 outside the equilateral triangle formed by the primary bodies (see Fig. 1).

Fundamental families of periodic orbits are considered in Papadakis (2016a, b), and by Burgos-García and Delgado (2013a) and Burgos-García and Bengochea (2017). A study by Burgos-García, Lessard, and Mireles James proves the existence of some spatial periodic orbits for the CRFBP (Burgos-García et al. 2019) (again with computer assistance). An associated Hill’s problem is derived, and its periodic orbits are studied by Burgos-García (2016), Burgos-García and Gidea (2015).

Regularization of collisions is studied by Alvarez-Ramírez et al. (2014). Chaotic motions were studied numerically by Gidea and Burgos (2003) and by Alvarez-Ramírez and Barrabés (2015). Perturbative proofs of the existence of chaotic motions are found in the work of Cheng and She (2017), She and Cheng (2014), She et al. (2013) and also in the work of Alvarez-Ramírez et al. (2018). Blue sky catastrophes in the CRFBP were previously studied by Burgos-García and Delgado (2013b) and by Kepley and Mireles James (2018). This last reference develops (computer-assisted) methods of proof for verifying the hypotheses of the theorems of Henrard and Devaney.

The main goal of the present work is to study orbits which are homoclinic to a saddle-focus equilibrium solution in the equilateral restricted four-body problem. We apply the parameterization method of Cabré, Fontich, and de la Llave to compute a chart for the stable or unstable manifold in a neighborhood of the equilibrium (Cabré et al. 2003a, b, 2005). Then, we implement the analytic continuation scheme for local invariant manifolds developed by Kalies et al. (2018), where it was applied to some two-dimensional manifolds in the Lorenz system. We adapt this scheme for the CRFBP and compute atlases for the local stable/unstable manifolds attached to a saddle-focus equilibrium. By an atlas, we mean a collection of analytic maps, or charts, of the form \(P :[-1, 1]^2 \rightarrow \mathbb {R}^4\), where the image of P lies in the stable or unstable manifold. The union of these charts is a piecewise approximation for a large portion of the manifold away from the equilibrium. For a more formal definition, see any standard text on differential geometry. The charts are computed using high-order polynomial approximations with algorithms that exploit automatic manipulations of formal series.

Configuration space for the CRFBP: the three primary bodies with masses \(m_1,m_2,\) and \(m_3\) are arranged in the equilateral triangle configuration of Lagrange, which is a relative equilibrium solution of the three-body problem. After transforming to a co-rotating frame, we consider the motion of a fourth massless body. The equations of motion have 8, 9, or 10 equilibrium solutions (libration points) denoted by \(\mathcal {L}_j\) for \(0 \le j \le 9\). The number of libration points, and their stability, varies depending on \(m_1\), \(m_2\), and \(m_3\). In this work we study the points \(\mathcal {L}_{0, 4,5,6}\), which are the only libration points that can have saddle-focus stability.

After computing the stable/unstable manifold atlases, we post-process to find approximate intersections. Once a potential intersection is located, we refine the approximation using a Newton scheme for a two-point boundary value problem as in the classical work of Doedel and Friedman (1989), Doedel et al. (1997). In the case of the CRFBP, our algorithm identifies a large collection of connecting orbits which are naturally ordered by connection time. We focus on the maximally symmetric case of equal masses, which we refer to as the triple Copenhagen problem. We prove that a rotational symmetry in this case reduces the complexity of the atlas computations by a factor of 3.

The algorithm for producing the atlases utilizes an adaptive subdivision routine to carefully control errors. This results in a large number of charts, on the order of tens of thousands, in only a few minutes of computation time. These computations are expensive in terms of memory usage, and it is impractical to recompute the atlases for a large number of parameter values, at least given the resources of the present study, namely laptop/desktop computers running single threads. Instead, after computing an ensemble of connecting orbits for the triple Copenhagen problem, we apply numerical continuation to the boundary value problem describing the homoclinics. That is, we use the connections found for the equal mass case as a jumping off point for exploring nearby—but nonsymmetric—mass parameters. Continuation of the connecting orbits is much more efficient than continuing the entire invariant manifold atlas.

As is well known, the bifurcation structure of the homoclinic continuation problem in the Hamiltonian setting is rich. We do not attempt automatic tracking of new branches, nor do we follow folds. A more systematic study of the branching would make an excellent topic for future study, perhaps by combining our invariant manifold atlas data with powerful continuation software such as AUTO (Champneys et al. 1996).

We emphasize that our restriction to the equal-mass case is a matter of convenience and not a technical restriction of the method itself. Our atlas algorithm applies to any choice of parameters or even to other Hamiltonian systems. Thus, even though we abandon the branch whenever the homoclinic continuation algorithm fails, we always have the ability to dig deeper into the cause of failure by running the full atlas computation from scratch.

We remark that our method is deployed in the full phase space and does not require choosing a fixed surface of section in which to study intersections of the invariant manifolds. This is advantageous as many problems do not admit a single section for which the return map is topologically conjugate to the true dynamics. Considering the intersections of the stable/unstable manifolds in a particular section may not reveal all the connecting orbits. Moreover, the first intersections to appear in phase space may not be the first to appear in a given section. Indeed, projecting to a section can introduce discontinuities which make it impossible to precisely formulate notions like “first intersection.” The great virtue of a surface of section (restricted to an energy level) is that it leads—at least in the case of a two-degree-of-freedom Hamiltonian—to a two-dimensional representation of the dynamics. We remark that the methods of the present work generalize to systems with three or more degrees of freedom, where considering surfaces of section is less fruitful.

2 Saddle-focus equilibrium solutions of the equilateral CRFBP

In this section, we review well known results about the set of equilibrium solutions in the CRFBP, focusing on material which informs the calculations carried out in the remainder of the work. We are especially interested in the number and location of saddle-foci and in how these depend on the mass ratios. First, we recall the mathematical formulation of the problem and some of its elementary properties.

2.1 The planar equilateral circular restricted four-body problem

Consider three particles with masses \(0< m_3 \le m_2 \le m_1 < 1\), normalized so that

$$\begin{aligned} m_1 + m_2 + m_3 = 1. \end{aligned}$$

These massive particles are referred to as the “primaries.” Suppose that the primaries are located at the vertices of a planar equilateral triangle, rotating with constant angular velocity. That is, we assume that the three massive bodies are in the triangular configuration of Lagrange. We choose a co-rotating coordinate frame which puts the triangle in the xy-plane and fixes the center of mass at the origin. We orient the triangle so that the first primary is on the negative x-axis, the second body is in the lower right quadrant, and the smallest body is in the upper right quadrant. Once in co-rotating coordinates, we are interested in the dynamics of a fourth, massless particle with coordinates (x, y), moving in the gravitational field of the primaries. The situation is illustrated in Fig. 1.

We write \((x_1, y_1)\), \((x_2, y_2)\) and \((x_3, y_3)\) to denote the locations of the primary masses. Let

Taking into account the normalizations discussed above, the precise positions of the primary bodies are given by the formulas

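Define the potential function

$$\begin{aligned} \Omega (x, y) = \frac{1}{2}\left( x^2 + y^2\right) + \frac{m_1}{r_1(x, y)} + \frac{m_2}{r_2(x, y)} + \frac{m_3}{r_3(x, y)}, \end{aligned}$$

where

$$\begin{aligned} r_j(x, y) = \sqrt{(x - x_j)^2 + (y - y_j)^2}, \qquad j = 1, 2, 3, \end{aligned}$$

and let \(\mathbf {x} = (x, \dot{x}, y, \dot{y}) \in \mathbb {R}^4\) denote the state of the system. The equations of motion in the rotating frame are

$$\begin{aligned} \mathbf {x}' = f(\mathbf {x}) = \begin{pmatrix} \dot{x} \\ 2\dot{y} + \Omega _x(x, y) \\ \dot{y} \\ -2\dot{x} + \Omega _y(x, y) \end{pmatrix}, \end{aligned}$$

where

$$\begin{aligned} \Omega _x(x, y) = x - \sum _{j=1}^{3} \frac{m_j (x - x_j)}{r_j(x, y)^3}, \qquad \Omega _y(x, y) = y - \sum _{j=1}^{3} \frac{m_j (y - y_j)}{r_j(x, y)^3}. \end{aligned}$$

The system conserves the quantity

$$\begin{aligned} E(\mathbf {x}) = 2\Omega (x, y) - \left( \dot{x}^2 + \dot{y}^2\right) , \qquad (4) \end{aligned}$$

which is called the Jacobi integral. Note that E is smooth, and in fact real analytic, away from the primaries. The zero velocity curves are defined by fixing a value of the Jacobi integral and setting \(\dot{x} = \dot{y} = 0\), that is, they are the level curves \(2\Omega (x, y) = E\). These curves are useful for understanding the structure of the phase space and are illustrated in Fig. 2.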
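As a sanity check on these formulas, the following Python sketch evaluates the vector field and the Jacobi integral in the equal-mass (triple Copenhagen) case. It assumes the standard form \(\Omega = \frac{1}{2}(x^2+y^2) + \sum_j m_j/r_j\), \(\ddot{x} - 2\dot{y} = \Omega_x\), \(\ddot{y} + 2\dot{x} = \Omega_y\) and \(E = 2\Omega - (\dot{x}^2 + \dot{y}^2)\), together with a unit side length for the triangle (our assumption, consistent with unit angular velocity and unit total mass); the general-mass position formulas are not reproduced. The helper names `potential`, `f`, `jacobi`, `rk4`, and `rotate` are illustrative. The script checks that \(\mathcal {L}_0\) sits at the origin, that E drifts only at the level of the integration error, and that the field is equivariant under rotation by \(2\pi /3\), the symmetry mentioned in the introduction.

```python
import math

# Assumption (not from the text): unit side length, primary 1 on the negative
# x-axis, primary 2 in the lower right quadrant, primary 3 in the upper right.
MASSES = (1 / 3, 1 / 3, 1 / 3)
SQRT3 = math.sqrt(3.0)
PRIMARIES = ((-1 / SQRT3, 0.0), (1 / (2 * SQRT3), -0.5), (1 / (2 * SQRT3), 0.5))


def potential(x, y):
    """Return Omega and its partial derivatives Omega_x, Omega_y."""
    om, om_x, om_y = 0.5 * (x * x + y * y), x, y
    for m, (xj, yj) in zip(MASSES, PRIMARIES):
        dx, dy = x - xj, y - yj
        r = math.hypot(dx, dy)
        om += m / r
        om_x -= m * dx / r**3
        om_y -= m * dy / r**3
    return om, om_x, om_y


def f(state):
    """Rotating-frame vector field for state = (x, xdot, y, ydot)."""
    x, xd, y, yd = state
    _, om_x, om_y = potential(x, y)
    return (xd, 2 * yd + om_x, yd, -2 * xd + om_y)


def jacobi(state):
    """Jacobi integral E = 2 Omega - (xdot^2 + ydot^2)."""
    x, xd, y, yd = state
    return 2 * potential(x, y)[0] - (xd * xd + yd * yd)


def rk4(state, h, steps):
    """Fixed-step classical Runge-Kutta integration of f."""
    s = tuple(state)
    for _ in range(steps):
        k1 = f(s)
        k2 = f(tuple(a + 0.5 * h * b for a, b in zip(s, k1)))
        k3 = f(tuple(a + 0.5 * h * b for a, b in zip(s, k2)))
        k4 = f(tuple(a + h * b for a, b in zip(s, k3)))
        s = tuple(a + h * (b1 + 2 * b2 + 2 * b3 + b4) / 6
                  for a, b1, b2, b3, b4 in zip(s, k1, k2, k3, k4))
    return s


def rotate(state, angle):
    """Rotate position and velocity of a state by the same angle."""
    x, xd, y, yd = state
    c, s = math.cos(angle), math.sin(angle)
    return (c * x - s * y, c * xd - s * yd, s * x + c * y, s * xd + c * yd)


s0 = (0.05, 0.0, 0.02, 0.01)
print(f((0.0, 0.0, 0.0, 0.0)))                   # L0: all components at round-off level
print(jacobi(rk4(s0, 1e-3, 1000)) - jacobi(s0))  # drift caused only by the integrator
```

A fixed-step RK4 integrator is used only to keep the sketch dependency-free; the computations described in this work rely on high-order Taylor methods instead.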

Zero velocity curves for the triple Copenhagen problem: fixing a value of the Jacobi constant and setting the velocity equal to zero in Eq. (4) implicitly defines the zero velocity curves in the phase space of the CRFBP. An orbit which reaches one of these curves arrives with zero velocity and hence turns around immediately. These curves define natural boundaries which orbits at a given energy level may not cross. Left: the zero velocity curves associated with the energy levels of \(\mathcal {L}_{1,2,3}\) (top left), \(\mathcal {L}_0\) (top right), \(\mathcal {L}_{4,5,6}\) (bottom left), and \(\mathcal {L}_{7,8,9}\) (bottom right). Right: a typical orbit in the \(\mathcal {L}_0\) energy level confined by the zero velocity curves

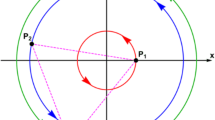

Two-dimensional local invariant manifolds in the triple Copenhagen problem (CRFBP with equal masses): Left: all two-dimensional attached invariant manifolds for libration points in the equal mass case (one-dimensional manifolds not shown). In the case of equal masses, the libration points \(\mathcal {L}_{0,4,5,6}\) have saddle-focus stability. Orbits are shown accumulating to the libration points in forward/backward time (green/red, respectively). The libration points \(\mathcal {L}_{1,2,3,7,8,9}\), on the other hand, have saddle \(\times \) center stability. In this case each libration point has an attached center manifold foliated by periodic orbits, the so-called planar Lyapunov orbits. We make no systematic study of the Lyapunov orbits in the present work and only remark that they appear to organize some of the homoclinic orbits in the discussion to follow. Right: closeup on the inner libration points and their invariant manifolds. All references to color refer to the online version

As mentioned in the introduction, the CRFBP has exactly 8, 9 or 10 equilibrium solutions, depending on the values of the mass parameters \(m_1, m_2,\) and \(m_3\). The equilibria are referred to as libration points in the dynamical astronomy literature, and we denote them by \(\mathcal {L}_j\) for \(0 \le j \le 9\). A typical configuration of these libration points is illustrated in Fig. 1, which also illustrates our naming convention. In the present work we are interested in the linear stability of the libration points. We are especially interested in determining the mass ratios where \(\mathcal {L}_j\) with \(j = 0, 4, 5, 6\) are saddle-focus equilibria, as opposed to real saddle or center \(\times \) center equilibria. This question is considered from a numerical point of view in Sect. 2.2.

We note that, depending on the values of the masses, \(\mathcal {L}_j\) with \(j = 1, 2, 3, 7, 8, 9\) have either saddle \(\times \) center or center \(\times \) center stability. The local two-dimensional invariant manifolds attached to all ten libration points are illustrated in Fig. 3 for the case of equal masses.

2.2 Saddle-foci in parameter space

The CRFBP admits as many as four and as few as zero saddle-focus equilibrium points, depending on the mass ratios. We now consider briefly what happens in between these extremes as the masses are varied. The problem is normalized so that \(m_1 + m_2 + m_3 = 1\), with \(m_3 \le m_2 \le m_1\), so we have that \(m_1 \in [1/3, 1]\), \(m_2 \in [0, 1/2]\) and \(m_3 \in [0, 1/3]\). Considering the 2-simplex in \(\mathbb {R}^3\) satisfying these constraints, we see that when \(m_1 \in [1/3, 1/2]\) we have

$$\begin{aligned} 1 - 2m_1 \le m_3 \le \frac{1 - m_1}{2}, \end{aligned}$$

while for \(m_1 \in [1/2, 1]\) we have

$$\begin{aligned} 0 \le m_3 \le \frac{1 - m_1}{2}. \end{aligned}$$

Here the lower bound \(1 - 2m_1\) comes from \(m_2 \le m_1\), and the upper bound comes from \(m_3 \le m_2\). In either case, once we choose \(m_1\) and \(m_3\), the value of \(m_2\) is determined by

$$\begin{aligned} m_2 = 1 - m_1 - m_3. \end{aligned}$$
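The bounds above translate directly into a sampling routine for the \((m_1, m_3)\) plane used in Fig. 4. The sketch below is illustrative: the function names, the rectangular grid, and the round-off guard are our assumptions, whereas the text itself continues each equilibrium from the equal-mass point rather than sampling a grid.

```python
def m3_bounds(m1):
    """Admissible interval for m3 given m1, when m3 <= m2 <= m1 and m1 + m2 + m3 = 1."""
    if not (1 / 3 - 1e-12 <= m1 <= 1):
        raise ValueError("m1 must lie in [1/3, 1]")
    lower = max(0.0, 1 - 2 * m1)        # from m2 <= m1
    upper = max(lower, (1 - m1) / 2)    # from m3 <= m2 (guarded against round-off)
    return lower, upper


def mass_grid(n1, n3):
    """Sample (m1, m2, m3) on an n1-by-(n3 + 1) grid; the endpoint m1 = 1 is excluded."""
    triples = []
    for i in range(n1):
        m1 = 1 / 3 + (2 / 3) * i / n1
        lo, hi = m3_bounds(m1)
        for k in range(n3 + 1):
            m3 = lo + (hi - lo) * k / n3
            triples.append((m1, 1 - m1 - m3, m3))
    return triples


print(m3_bounds(0.5))          # (0.0, 0.25)
print(len(mass_grid(20, 10)))  # 20 * 11 = 220 triples
```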

The question is, how does the stability of the libration points depend on the mass ratios? We address the question for each of the points, \(\mathcal {L}_{0,4,5,6}\), as follows. Beginning with the case of equal masses, \(m_1 = m_2 = m_3 = 1/3\), we numerically continue each equilibrium to the opposite boundary of the parameter simplex at \(m_3 = 0\). Throughout the computation, we track the stability of each libration point and label a parameter point with a black dot whenever the stability is of saddle-focus type. The results are summarized in Fig. 4. We refer to the curve in the parameter simplex where the stability changes as the Routh–Gascheau curve.

Roughly speaking, we see that when \({1}/{3} \le m_1 \le 0.42\) the libration point \(\mathcal {L}_0\) is a saddle-focus for all allowable values of \(m_2\), \(m_3\). When \(m_1 > 0.43\), the libration point \(\mathcal {L}_0\) is no longer a saddle-focus, no matter the values of \(m_2\), \(m_3\). The points \(\mathcal {L}_{4, 6}\), on the other hand, have saddle-focus stability for most parameter values, and only bifurcate once \(m_1 > 0.95\) (with \(\mathcal {L}_6\) a little more robust than \(\mathcal {L}_4\) except when \(m_2 = m_3\)). The libration point \(\mathcal {L}_5\) is the most robust: it maintains saddle-focus stability until \(m_1 \approx 0.99\). For \(m_1 > 0.995\) there are no saddle-foci at all. By reading parameter values off the frames in Fig. 4, we can arrange for the CRFBP to have 1, 2, 3 or 4 saddle-focus equilibria. In the sequel we are interested in homoclinic connections for such parameters.
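A sketch of the stability bookkeeping, assuming only that the Jacobian at a libration point is available: the eigenvalues of a two-degree-of-freedom Hamiltonian equilibrium come in symmetric quadruples, and the classification below reads off which pattern occurs. The tolerance `tol` and the labels are illustrative choices. As a usage example we pass the linearization at \(\mathcal {L}_0\) for equal masses, which we derived by hand under a unit-side-length assumption for the triangle (the Hessian of \(\Omega \) at the origin is then \(cI\) with \(c = 1 + 3\sqrt{3}/2\)); its eigenvalues are \(\pm \sqrt{c-1} \pm i\).

```python
import numpy as np


def classify(jac, tol=1e-9):
    """Classify an equilibrium of a two-degree-of-freedom Hamiltonian system
    from the eigenvalues of its 4x4 Jacobian."""
    ev = np.linalg.eigvals(np.asarray(jac, dtype=float))
    has_re = np.abs(ev.real) > tol
    has_im = np.abs(ev.imag) > tol
    n_focus = int(np.sum(has_re & has_im))    # complex quadruple +-a +- ib
    n_real = int(np.sum(has_re & ~has_im))    # real pair +-a
    n_center = int(np.sum(~has_re & has_im))  # imaginary pair +-ib
    if n_focus == 4:
        return "saddle-focus"
    if n_real == 2 and n_center == 2:
        return "saddle x center"
    if n_center == 4:
        return "center x center"
    if n_real == 4:
        return "saddle x saddle"
    return "degenerate"


# Linearization at L0 for equal masses in the coordinates (x, xdot, y, ydot),
# derived by hand assuming unit side length: Hessian of Omega at 0 is c * I.
c = 1 + 3 * np.sqrt(3) / 2
jac_L0 = np.array([[0.0, 1.0, 0.0, 0.0],
                   [c, 0.0, 0.0, 2.0],
                   [0.0, 0.0, 0.0, 1.0],
                   [0.0, -2.0, c, 0.0]])
print(classify(jac_L0))   # saddle-focus: eigenvalues are +-sqrt(c - 1) +- i
```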

Mass values and saddle-focus stability: results of a numerical search of the parameter space. Values of \(m_1\) are on the horizontal axis and values of \(m_3\) are on the vertical axis. These determine the remaining mass parameter through the relation \(m_2 = 1 - m_1 - m_3\). In each frame a parameter pair is marked with a black or red dot if the libration point \(\mathcal {L}_{0,4,5,6}\) has saddle-focus stability. The top left figure reports the results for \(\mathcal {L}_0\), the top right for \(\mathcal {L}_{4,6}\), and the bottom frame for \(\mathcal {L}_5\). In each case the inset zooms in on the Routh–Gascheau bifurcation curve. Note that these bifurcation curves are nonlinear, and that in the top right frame the results for \(\mathcal {L}_4\) are black and those for \(\mathcal {L}_6\) are red. We remark that the changes in the dot pattern in the bottom right inset are due to the use of an adaptive step size in our continuation algorithm

2.3 Two ways to formulate a connecting orbit: phase space geometry and boundary value problems

There are two standard ways to think about connecting orbits and—while they are completely equivalent from a mathematical point of view—in practice they have different advantages and disadvantages. In the following let \(f :\mathbb {R}^n \rightarrow \mathbb {R}^n\) denote a smooth vector field and let \(\mathbf {x}_0 \in \mathbb {R}^n\) be an equilibrium solution for f. We write \(W^s(\mathbf {x}_0)\) and \(W^u(\mathbf {x}_0)\) to denote, respectively, the stable and unstable manifolds attached to \(\mathbf {x}_0\).

- Analytic definition. If \(\mathbf {x} :\mathbb {R}\rightarrow \mathbb {R}^n\) satisfies

  $$\begin{aligned} \frac{\mathrm{d}}{\mathrm{d}t} \mathbf {x}(t) = f(\mathbf {x}(t)), \end{aligned}$$

  for all \(t \in \mathbb {R}\), and satisfies the asymptotic boundary conditions

  $$\begin{aligned} \lim _{t \rightarrow \pm \infty } \mathbf {x}(t) = \mathbf {x}_0, \end{aligned}$$

  then we say that \(\mathbf {x}\) is a homoclinic connecting orbit for \(\mathbf {x}_0\).

- Geometric definition. If

  $$\begin{aligned} \hat{x} \in W^s(\mathbf {x}_0) \cap W^u(\mathbf {x}_0), \end{aligned}$$

  and \(\mathbf {x} = \text{ orbit }(\hat{x})\) denotes the orbit which passes through \(\hat{x}\), then \(\mathbf x\) is a homoclinic connecting orbit for \(\mathbf {x}_0\). If the intersection of the manifolds is transverse, then we say that \(\mathbf {x}\) is a transverse homoclinic connection.

The analytic definition is recast as a finite time boundary value problem by projecting the boundary conditions onto local stable/unstable manifolds. If P, Q are parameterizations of the local unstable and stable manifolds, respectively, then we look for \(T > 0\), parameters \(\theta \) and \(\phi \) in the domains of P and Q, and \(\mathbf {x} :[0, T] \rightarrow \mathbb {R}^n\), so that \(\mathbf {x}\) solves the differential equation subject to the boundary conditions

$$\begin{aligned} \mathbf {x}(0) = P(\theta ), \qquad \mathbf {x}(T) = Q(\phi ). \end{aligned}$$

In applications one frequently replaces P and Q by their linear approximations. In Sect. 3 we review an approach called the parameterization method for computing high-order polynomial approximations of the local charts P, Q.

Remark 1

(Relative strengths and weaknesses) One great advantage of the analytic formulation is that, since it is equivalent to a two-point boundary value problem, we can utilize the Newton method to find very accurate solutions—often on the order of machine precision. The formulation as a boundary value problem also lends itself to numerical continuation schemes, which are very useful for exploring the parameter space. The disadvantages are twofold. First, in this formulation it is necessary to begin the Newton iteration with a fairly good approximate solution and this raises the question: Where do the approximate solutions come from? Second, it is difficult to rule out solutions using the BVP approach.

In the geometric approach, there is no need to make a guess. Instead, one moves along the stable and unstable manifolds and identifies connections by locating intersections in phase space. At the same time, the geometric approach allows one to rule out connecting orbits by showing that a particular region of phase space does not contain any intersections. The difficulty with the geometric perspective is that it provides information only as good as our knowledge of the embeddings of the stable/unstable manifolds. Computing embeddings of invariant manifolds is challenging, and methods tend to decrease in accuracy the farther from the equilibrium they are applied.

The important point, from the perspective of the present work, is that these two approaches complement one another. The geometric formulation is good for locating and ruling out connections, while the analytic formulation is good for refining approximations and for continuation with respect to parameters. This suggests the approach of the present work: namely that we use the two formulations in concert, playing the strengths of one against the weaknesses of the other as appropriate.

We remark that in many applications it is convenient to examine the intersections of the invariant manifolds in an intermediate surface of section. This is especially true for two-degree-of-freedom systems as the section intersected with the energy level leads to a two-dimensional image which is easy to visualize. Often an appropriate section is suggested by the geometry of the problem, or by the goals of a particular space mission. We refer the interested reader to the works (Koon et al. 2000; Canalias and Masdemont 2006; Barrabés et al. 2009) for examples and fuller discussion.

3 Numerical computation of the stable/unstable manifolds

The results of Sect. 2.2 show that for most parameter values the CRFBP has either three or four saddle-focus equilibria, though for some parameters it may have only two, one, or none. For a given saddle-focus equilibrium with fixed values of the mass parameters, we compute the invariant manifolds in two steps. First, we find a high-order expansion of an initial local chart containing the equilibrium solution. Then we use a high-order Taylor integration scheme to advect the boundary of the initial chart one subarc at a time. The second step is repeated until a prescribed integration time has been reached, or until an error tolerance has been exceeded. Along the way, it is sometimes necessary to subdivide boundary arcs in order to manage the truncation errors.
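The subdivision logic in the last step can be illustrated with a simple queue of parameter arcs. The Python sketch below is hypothetical in two respects: `flow_map` is a made-up planar map standing in for the time-T flow of the CRFBP, and the acceptance test compares the image of an arc's midpoint with the midpoint of the image chord, a crude stand-in for the Taylor truncation-error control described above. It only shows how arcs whose images are stretched most strongly end up refined the most.

```python
import math
from collections import deque


def flow_map(theta, T=3.0):
    """Hypothetical stand-in for advecting a point of the boundary circle.

    Radial growth and angular twist both depend on theta, so the image curve
    is stretched very unevenly, loosely mimicking a real invariant manifold.
    """
    r = 0.01 * math.exp(T * (1.0 + 0.8 * math.cos(theta)))
    phi = theta + 2.0 * T * math.cos(theta)
    return (r * math.cos(phi), r * math.sin(phi))


def subdivide(a, b, tol, max_depth=30):
    """Adaptively split [a, b] until every arc passes a chord-midpoint test.

    Returns a sorted list of (lo, hi, err), where err is the distance between
    the image of the arc midpoint and the midpoint of the image chord.
    """
    accepted = []
    queue = deque([(a, b, 0)])
    while queue:
        lo, hi, depth = queue.popleft()
        mid = 0.5 * (lo + hi)
        p, q, m = flow_map(lo), flow_map(hi), flow_map(mid)
        chord_mid = (0.5 * (p[0] + q[0]), 0.5 * (p[1] + q[1]))
        err = math.dist(m, chord_mid)
        if err <= tol or depth >= max_depth:
            accepted.append((lo, hi, err))
        else:
            queue.append((lo, mid, depth + 1))
            queue.append((mid, hi, depth + 1))
    return sorted(accepted)


arcs = subdivide(0.0, 2 * math.pi, 1e-3)
print(len(arcs), "arcs")
```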

Our computation of the initial chart employs the parameterization method, which is reviewed in Sect. 3.1. Advection of the boundary uses a Taylor integration scheme similar to the one developed in Kalies et al. (2018), but adapted to the problem at hand. Both procedures exploit differential-algebraic manipulations of formal power series, and these manipulations are delicate due to the presence of the power \(-3/2\) in the nonlinearity of the CRFBP vector field, namely the factors \(((x - x_j)^2 + (y - y_j)^2)^{-3/2}\).

One technique for manipulating power series of several complex variables involves automatic differentiation combined with the radial gradient. This procedure is developed in Haro et al. (2016) and is reviewed in “Appendix B.” Another technique involves appending additional variables and equations to the problem, so that the enlarged field is polynomial and equivalent to the original CRFBP on a certain submanifold. This option is discussed at length for the CRFBP in Kepley and Mireles James (2018) which also includes a more precise definition of what “equivalent” means here. See also Lessard et al. (2016) and Rabe (1961).
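To illustrate the first technique on the exponent that matters here, the sketch below computes the Taylor coefficients of \(g = f^{\alpha }\) for a two-variable series f, with \(\alpha = -3/2\). It assumes the recursion obtained from \(f\,(Eg) = \alpha \, g\,(Ef)\), where \(E = z_1\partial _{z_1} + z_2\partial _{z_2}\) is the radial (Euler) derivative; isolating the highest-order term gives \(d f_{0,0}\, g_{m,n} = \sum _{(j,k) \ne (0,0)} (\alpha (j+k) - (d - j - k)) f_{j,k}\, g_{m-j,n-k}\) with \(d = m + n\). This is our own minimal rendering, not code from Haro et al. (2016).

```python
import numpy as np


def series_power(f, alpha, N):
    """Coefficients of g = f**alpha for a two-variable power series f.

    f[m, n] multiplies z1**m * z2**n and f[0, 0] must be nonzero (positive if
    f is real). The recursion comes from f * (E g) = alpha * g * (E f), where
    E = z1 d/dz1 + z2 d/dz2 multiplies a monomial of total degree d by d.
    """
    F = np.zeros((N + 1, N + 1), dtype=np.result_type(f, 1.0))
    F[: min(f.shape[0], N + 1), : min(f.shape[1], N + 1)] = f[: N + 1, : N + 1]
    g = np.zeros_like(F)
    g[0, 0] = F[0, 0] ** alpha
    for d in range(1, N + 1):
        for m in range(d + 1):
            n = d - m
            acc = 0.0
            for j in range(m + 1):
                for k in range(n + 1):
                    if j == 0 and k == 0:
                        continue
                    acc += (alpha * (j + k) - (d - j - k)) * F[j, k] * g[m - j, n - k]
            g[m, n] = acc / (d * F[0, 0])
    return g


def evaluate(c, z1, z2):
    """Evaluate a coefficient array truncated to total degree c.shape[0] - 1."""
    N = c.shape[0] - 1
    return sum(c[m, n] * z1**m * z2**n for m in range(N + 1) for n in range(N + 1 - m))


f = np.array([[1.0, 0.25], [0.5, 0.0]])   # f = 1 + 0.5 z1 + 0.25 z2
g = series_power(f, -1.5, 25)
print(evaluate(g, 0.2, 0.3), (1 + 0.5 * 0.2 + 0.25 * 0.3) ** -1.5)
```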

3.1 Parameterization method for the local invariant manifold

We now review the parameterization method adapted to the needs of the present work, namely for a stable/unstable manifold attached to a saddle-focus equilibrium in \(\mathbb {R}^4\). A much more general treatment of the parameterization method is found in Cabré et al. (2003a, b, 2005). See also the book on this topic (Haro et al. 2016).

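Let \(\mathbf {x}_0 \in \mathbb {R}^4\) denote a saddle-focus equilibrium point. Specifically, we suppose \(f(\mathbf {x}_0) = 0\) and that \(Df(\mathbf {x}_0)\) has eigenvalues \(\pm \alpha \pm i\beta \) with \(\alpha , \beta > 0\). We write

$$\begin{aligned} \lambda _1 = -\alpha + i\beta , \qquad \lambda _2 = \overline{\lambda _1} = -\alpha - i\beta \end{aligned}$$

for the stable eigenvalues of \(Df(\mathbf {x}_0)\), and \(\xi _{1,2} \in \mathbb {C}^4\) for a choice of associated complex conjugate eigenvectors, so that \(Df(\mathbf {x}_0)\xi _j = \lambda _j \xi _j\) and \(\xi _2 = \overline{\xi _1}\).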

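Since the eigenvalues are complex, it is convenient to look for a complex parameterization of a local stable manifold. Let

$$\begin{aligned} D^2 = \left\{ (z_1, z_2) \in \mathbb {C}^2 : |z_1|< 1, \ |z_2| < 1 \right\} \end{aligned}$$

denote the unit complex polydisc. We look for a parameterization \(P :D^2 \rightarrow \mathbb {C}^4\) satisfying the infinitesimal conjugacy given by

$$\begin{aligned} DP(\mathbf {z})\varLambda \mathbf {z} = f(P(\mathbf {z})), \qquad \mathbf {z} \in D^2, \qquad (5) \end{aligned}$$

where \(\mathbf {z} = (z_1, z_2)^{\text{ T }}\), and

$$\begin{aligned} \varLambda = \begin{pmatrix} \lambda _1 & 0 \\ 0 & \lambda _2 \end{pmatrix}. \end{aligned}$$

Equation (5) is subject to the first-order constraints

$$\begin{aligned} P(0) = \mathbf {x}_0, \qquad \frac{\partial }{\partial z_1} P(0) = \xi _1, \qquad \frac{\partial }{\partial z_2} P(0) = \xi _2. \qquad (6) \end{aligned}$$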

Note that

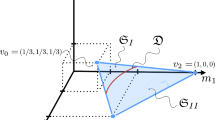

is the push forward of the linear vector field by P. The geometric meaning of Eq. (5) is illustrated in Fig. 5.

Geometric interpretation of the parameterization method for differential equations: Eq. (5) requires that the push forward of the vector field \(\varLambda \) by P matches the vector field f on the image of P. A function satisfying this equation is a parameterization of a local stable manifold

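Let \(\varPhi \) denote the flow generated by f. Any P satisfying Eq. (5) on \(D^2\) also satisfies the flow conjugacy

$$\begin{aligned} P\left( e^{\varLambda t}\mathbf {z}\right) = \varPhi (P(\mathbf {z}), t), \qquad \mathbf {z} \in D^2, \quad t \ge 0, \end{aligned}$$

which makes sense because \(|e^{\lambda _j t}| = e^{-\alpha t} \le 1\), so that \(e^{\varLambda t}\mathbf {z} \in D^2\) for all \(t \ge 0\). In particular, if P satisfies both Eq. (5) and the constraints of Eq. (6), then for any \((z_1, z_2) \in D^2\) it follows that

$$\begin{aligned} \lim _{t \rightarrow \infty } \varPhi (P(\mathbf {z}), t) = \lim _{t \rightarrow \infty } P\left( e^{\varLambda t}\mathbf {z}\right) = P(0) = \mathbf {x}_0, \end{aligned}$$

so that \(P(D^2) \subset W^s(\mathbf {x}_0)\). Combining this with the fact that the image of P contains \(\mathbf {x}_0\) and is tangent to the stable eigenspace at \(\mathbf {x}_0\), we see that P parameterizes a local stable manifold for \(\mathbf {x}_0\). Moreover, we recover the dynamics on the manifold through the conjugacy.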

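When the vector field f is analytic near \(\mathbf {x}_0\), \(W^{s}(\mathbf {x}_0)\) is an analytic manifold, and it makes sense to look for an analytic chart of the form

$$\begin{aligned} P(z_1, z_2) = \sum _{m = 0}^{\infty } \sum _{n = 0}^{\infty } p_{m,n} z_1^m z_2^n, \end{aligned}$$

with \(p_{m,n} \in \mathbb {C}^4\) for all \(m,n \in \mathbb {N}\). Since we are interested in the real image of the chart, we look for a solution of Eq. (5) with

$$\begin{aligned} P(z, \overline{z}) \in \mathbb {R}^4 \end{aligned}$$

for all \(|z| < 1\). This is achieved whenever the power series coefficients of the solution satisfy

$$\begin{aligned} p_{n,m} = \overline{p_{m,n}} \qquad (8) \end{aligned}$$

for all \((m,n) \in \mathbb {N}^2\). The real parameterization \(\tilde{P} :B \rightarrow \mathbb {R}^4\), where \(B = \{(s, t) \in \mathbb {R}^2 : s^2 + t^2 < 1\}\) is the unit disk, is recovered using complex conjugate variables

$$\begin{aligned} \tilde{P}(s, t) = P(s + it, s - it). \end{aligned}$$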
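A quick numerical illustration of why the symmetry (8) makes the chart real: the Python sketch below symmetrizes random coefficients, evaluates them at \(z_1 = s + it\), \(z_2 = s - it\), and reports the size of the imaginary part. The random data and helper names are purely illustrative; actual charts come from solving the invariance equation.

```python
import numpy as np


def symmetrize(coeffs):
    """Project coefficients p[m, n, :] onto the symmetry p[n, m] = conj(p[m, n])."""
    return 0.5 * (coeffs + np.conj(np.swapaxes(coeffs, 0, 1)))


def evaluate(coeffs, z1, z2):
    """Evaluate sum_{m,n} p[m, n, :] * z1**m * z2**n."""
    total = np.zeros(coeffs.shape[2], dtype=complex)
    for m in range(coeffs.shape[0]):
        for n in range(coeffs.shape[1]):
            total += coeffs[m, n] * z1**m * z2**n
    return total


def real_chart(coeffs, s, t):
    """Return Re P(s + it, s - it) and the size of its imaginary part."""
    value = evaluate(coeffs, s + 1j * t, s - 1j * t)
    return value.real, float(np.max(np.abs(value.imag)))


rng = np.random.default_rng(0)
raw = rng.normal(size=(6, 6, 4)) + 1j * rng.normal(size=(6, 6, 4))
point, imag_size = real_chart(symmetrize(raw), 0.3, -0.4)
print(point, imag_size)   # imag_size is at round-off level
```

Without the symmetrization step the imaginary part of the same evaluation is of order one, which is why the coefficients are constrained to satisfy Eq. (8).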

Elementary proofs of the facts discussed in this section are found, for example, in Kepley and Mireles James (2018).

3.2 Power series solution of Eq. (5)

We describe three methods for computing the power series coefficients of an analytic solution of the invariance equation given in Sect. 3.1. Combining these methods leads to very efficient numerical procedures.

3.2.1 Solution by power matching

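Plugging the unknown power series expansion for P into Eq. (5) leads to

$$\begin{aligned} \sum _{m,n} (m\lambda _1 + n\lambda _2) p_{m,n} z_1^m z_2^n = f\left( \sum _{m,n} p_{m,n} z_1^m z_2^n\right) . \end{aligned}$$

It is shown in Cabré et al. (2003a) (see also the discussion in Haro et al. 2016) that when we match like powers and isolate \(p_{m,n}\) we are led to an expression of the form

$$\begin{aligned} (m\lambda _1 + n\lambda _2) p_{m,n} = Df(\mathbf {x}_0) p_{m,n} + R(P)_{m,n}, \end{aligned}$$

where \(R(P)_{m,n}\) depends in a nonlinear way on coefficients \(p_{j,k}\) with \( 0 \le j+k < m+n\). Isolating the variable \(p_{m,n}\) on the left leads to the homological equations

$$\begin{aligned} \left( (m\lambda _1 + n\lambda _2) I - Df(\mathbf {x}_0)\right) p_{m,n} = R(P)_{m,n}, \qquad m + n \ge 2. \qquad (9) \end{aligned}$$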

Remark 2

(The formal solution is well defined) Observe that Eq. (9) is linear in \(p_{m,n}\) and has a unique solution as long as \(m \lambda _1 + n \lambda _2\) is not an eigenvalue of \(Df(\mathbf {x}_0)\). Since \(\lambda _2 = \overline{\lambda _1}\), we have \(\mathrm {Re}(m\lambda _1 + n\lambda _2) = -(m+n)\alpha \le -2\alpha \) whenever \(m + n \ge 2\). This is strictly smaller than the real part \(-\alpha \) of the stable eigenvalues, and any remaining eigenvalues are assumed to be unstable, so \(m \lambda _1 + n \lambda _2\) is never an eigenvalue of \(Df(\mathbf {x}_0)\). Hence the matrix on the left-hand side of the homological equation (9) is invertible for all \(m + n \ge 2\).
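The nonresonance argument can also be checked numerically. The short sketch below (names illustrative) computes the smallest distance from \(m\lambda _1 + n\lambda _2\), \(2 \le m + n \le N\), to the spectrum \(\{\pm \alpha \pm i\beta \}\); since \(\mathrm {Re}(m\lambda _1 + n\lambda _2) = -(m+n)\alpha \), this distance is at least \(\alpha \).

```python
def min_resonance_gap(alpha, beta, order):
    """Smallest distance from m*lam1 + n*lam2 (2 <= m + n <= order) to the
    spectrum {+-alpha +- i beta} of a saddle-focus."""
    lam1 = complex(-alpha, beta)
    lam2 = lam1.conjugate()
    spectrum = [complex(sa * alpha, sb * beta) for sa in (1, -1) for sb in (1, -1)]
    gap = float("inf")
    for m in range(order + 1):
        for n in range(order + 1 - m):
            if m + n < 2:
                continue
            mu = m * lam1 + n * lam2
            gap = min(gap, min(abs(mu - ev) for ev in spectrum))
    return gap


print(min_resonance_gap(1.61, 1.0, 30))   # bounded below by alpha = 1.61
```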

Given any first-order data as in the constraint Eq. (6), the homological equations are uniquely solvable to all orders and the corresponding formal series solution of Eq. (5) is well defined. Since each Taylor coefficient is uniquely determined by the homological equations (9), it follows that the formal series solution is unique up to the choice of the scalings of the eigenvectors in Eq. (6). Solving the homological equations recursively to order \(N \ge 2\) provides a polynomial chart \(P^N\) which approximately parameterizes the local stable manifold.
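The recursive structure of the homological equations is easiest to see on a small example. The sketch below applies power matching to a hypothetical three-dimensional toy system (chosen only because its invariance equation is short; it is not the CRFBP) with a two-dimensional stable manifold attached to an equilibrium whose stable eigenvalues are a complex conjugate pair. Only the third component of P has higher-order terms, and each coefficient is obtained by dividing by \(m\lambda _1 + n\lambda _2 - \lambda \), the scalar analogue of the matrix in the homological equations. The defect of the invariance equation at a point of the polydisc serves as an a posteriori check.

```python
import numpy as np

# Hypothetical toy system in R^3 (NOT the CRFBP):
#   u' = A u,  A = [[-ALPHA, -BETA], [BETA, -ALPHA]]   (eigenvalues -ALPHA +- i BETA)
#   w' = LAM * w + u1**2 + w**2                        (LAM > 0, unstable)
ALPHA, BETA, LAM = 1.0, 2.0, 1.0
LAM1 = complex(-ALPHA, BETA)
LAM2 = LAM1.conjugate()


def cauchy(a, b, m, n):
    """Coefficient of z1**m z2**n in the product of the series a and b."""
    return sum(a[j, k] * b[m - j, n - k] for j in range(m + 1) for k in range(n + 1))


def solve_homological(N):
    """Solve (m LAM1 + n LAM2 - LAM) w[m, n] = [u1**2 + W**2]_{m, n} order by order.

    With xi1 = (1, -i, 0) and xi2 = conj(xi1), the u-part of P is exactly
    z1*xi1 + z2*xi2, so only the w-component W has higher-order terms and
    u1 = z1 + z2.
    """
    u1sq = np.zeros((N + 1, N + 1), dtype=complex)
    u1sq[2, 0], u1sq[1, 1], u1sq[0, 2] = 1.0, 2.0, 1.0   # (z1 + z2)**2
    w = np.zeros((N + 1, N + 1), dtype=complex)         # orders 0 and 1 vanish
    for d in range(2, N + 1):
        for m in range(d + 1):
            n = d - m
            # W starts at order 2, so [W**2]_{m,n} only uses already computed orders.
            rhs = u1sq[m, n] + cauchy(w, w, m, n)
            w[m, n] = rhs / (m * LAM1 + n * LAM2 - LAM)
    return w


def defect(w, z1, z2):
    """|DW(z) Lambda z - (LAM W + u1**2 + W**2)| for the truncated W."""
    N = w.shape[0] - 1
    W = lhs = 0j
    for m in range(N + 1):
        for n in range(N + 1 - m):
            term = w[m, n] * z1**m * z2**n
            W += term
            lhs += (m * LAM1 + n * LAM2) * term
    return abs(lhs - (LAM * W + (z1 + z2) ** 2 + W**2))


w = solve_homological(20)
z = 0.1 + 0.05j
print(defect(w, z, z.conjugate()))
```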

Remark 3

(Reality of the parameterization) Taking complex conjugates in the homological equations (9) shows that the coefficients \(p_{m,n}\) have the symmetry of Eq. (8).

3.2.2 A Newton scheme

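A quadratically convergent scheme for Eq. (5) is obtained as follows. Define the nonlinear operator

$$\begin{aligned} \varPsi (P)(\mathbf {z}) = DP(\mathbf {z})\varLambda \mathbf {z} - f(P(\mathbf {z})), \end{aligned}$$

where f is the CRFBP vector field, and note that a zero of \(\varPsi \) is a solution of Eq. (5). Moreover, we note that, at least formally, the Fréchet derivative is given by

$$\begin{aligned} D\varPsi [P]h(\mathbf {z}) = Dh(\mathbf {z})\varLambda \mathbf {z} - Df(P(\mathbf {z}))h(\mathbf {z}). \end{aligned}$$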

In fact this is the correct Fréchet derivative of \(\varPsi \) when, for example, we consider \(\varPsi \) defined on a Banach space of analytic functions, see Cabré et al. (2003a, 2005), de la Llave and Mireles James (2012).

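Choose \(P_0\) an approximate zero of \(\varPsi \), and define the sequence

$$\begin{aligned} P_{n+1} = P_n + \Delta _n, \qquad n \ge 0, \end{aligned}$$

where \(\Delta _n\) is the formal series solution of the linear equation

$$\begin{aligned} D\varPsi [P_n]\Delta _n = -\varPsi (P_n). \qquad (10) \end{aligned}$$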

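If \(P_0\) is a good enough approximate solution of Eq. (5) we expect \(P_n\) to converge quadratically to a zero of \(\varPsi \). The linear operator \(D \varPsi [P]\) has nonconstant coefficients, and Eq. (10) may be solved recursively via the following power matching scheme. Define

$$\begin{aligned} Df(P(\mathbf {z})) = \sum _{m,n} A_{m,n} z_1^m z_2^n, \end{aligned}$$

and

$$\begin{aligned} -\varPsi (P)(\mathbf {z}) = \sum _{m,n} q_{m,n} z_1^m z_2^n, \qquad \Delta (\mathbf {z}) = \sum _{m,n} \Delta _{m,n} z_1^m z_2^n. \end{aligned}$$

Here \(\Delta _{m,n}, q_{m,n} \in \mathbb {C}^4\), and \(A_{m,n}\) are \(4 \times 4\) complex valued matrices for all \((m,n) \in \mathbb {N}^2\). Plugging these series expansions into Eq. (10) leads to

$$\begin{aligned} \sum _{m,n} (m\lambda _1 + n\lambda _2)\Delta _{m,n} z_1^m z_2^n - \left( \sum _{m,n} A_{m,n} z_1^m z_2^n\right) \left( \sum _{m,n} \Delta _{m,n} z_1^m z_2^n\right) = \sum _{m,n} q_{m,n} z_1^m z_2^n, \end{aligned}$$

or, upon matching like powers,

$$\begin{aligned} (m\lambda _1 + n\lambda _2)\Delta _{m,n} - \sum _{j=0}^{m}\sum _{k=0}^{n} A_{m-j,n-k}\Delta _{j,k} = q_{m,n}, \end{aligned}$$

for all \(m+n \ge 2\) (the corrections vanish to first order, so the constraints of Eq. (6) are preserved). We note that the sum contains exactly one term involving \(\Delta _{m,n}\), appearing when \(j = m\) and \(k = n\), namely \(A_{0,0}\Delta _{m,n}\). Let

$$\begin{aligned} \tilde{\delta }_{j,k}^{m,n} = {\left\{ \begin{array}{ll} 0, & (j,k) = (m,n), \\ 1, & \text{ otherwise}. \end{array}\right. } \end{aligned}$$

Then we use \(\tilde{\delta }_{j,k}^{m,n}\) to extract terms of order (m, n) from the sum and write the equation for \(\Delta _{m,n}\) as

$$\begin{aligned} (m\lambda _1 + n\lambda _2)\Delta _{m,n} - A_{0,0}\Delta _{m,n} - \sum _{j=0}^{m}\sum _{k=0}^{n} \tilde{\delta }_{j,k}^{m,n} A_{m-j,n-k}\Delta _{j,k} = q_{m,n}. \end{aligned}$$

Recall that \(A_{0,0} = Df(P(0)) = Df(\mathbf {x}_0)\), so that rearranging terms leads to the linear equations

$$\begin{aligned} \left( (m\lambda _1 + n\lambda _2) I - Df(\mathbf {x}_0)\right) \Delta _{m,n} = q_{m,n} + \sum _{j=0}^{m}\sum _{k=0}^{n} \tilde{\delta }_{j,k}^{m,n} A_{m-j,n-k}\Delta _{j,k} \qquad (11) \end{aligned}$$

for \(m + n \ge 2\). The right-hand side of Eq. (11) involves only coefficients \(\Delta _{j,k}\) with \(j + k < m + n\), so the equations can be solved order by order. Since the matrix on the left-hand side of Eq. (11) is exactly the matrix appearing in the homological equations (9) of Sect. 3.2.1, arguing as in Remarks 2 and 3 shows that the equations of (11) are uniquely solvable for all \(m + n \ge 2\) just as before, and that the resulting power series coefficients have the desired symmetry. Hence this Newton scheme is well defined on the space of formal power series.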

3.2.3 A pseudo-Newton scheme

While the Newton scheme of the previous section converges rapidly (in the sense of the number of necessary iterations), solving the required nonconstant coefficient linear equations is expensive. Consequently, the overall computation may be slow simply because of the cost of computing the individual corrections. The iterations can be sped up as follows.

First, we note that

and we define a new iterative scheme

where \( \tilde{\Delta }_k\) is a solution of the constant coefficient linear equation

On the level of power series, this equation becomes

and matching like powers yields the linear equations

These homological equations uniquely determine the coefficients \(\tilde{\Delta }_{m,n}\) and have the virtue of being “diagonal” in Taylor coefficient space. In practice we find that the pseudo-Newton scheme requires more iterates than the Newton method to converge. However, a single iteration step is much faster and for reasonable values of N the pseudo-Newton method is faster overall. We discuss this further below.
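To illustrate the "diagonal" structure, here is a sketch on the same kind of hypothetical scalar toy problem (\(f(x) = \lambda x + x^2\), not the CRFBP). Replacing \(Df(P(z))\) by the constant \(Df(0) = \lambda \) decouples the coefficients: each correction is a single division by \((n-1)\lambda \), with no sum over lower orders. The function names are illustrative assumptions.

```python
import numpy as np

# Hypothetical scalar toy (not the CRFBP): f(x) = lam*x + x**2, solving
# lam * z * P'(z) = f(P(z)) with p[0] = 0 and p[1] = 1 fixed.

def defect(p, lam):
    """Taylor coefficients of Psi[P] = lam*z*P' - f(P) (Cauchy product truncated)."""
    n = np.arange(len(p))
    return lam * n * p - (lam * p + np.convolve(p, p)[: len(p)])

def pseudo_newton_step(p, lam):
    """Replace Df(P(z)) by the constant Df(0) = lam: each coefficient decouples."""
    n = np.arange(len(p))
    delta = np.zeros_like(p)
    # (n*lam - Df(0)) * Delta_n = -Psi_n, a "diagonal" solve for n >= 2
    delta[2:] = -defect(p, lam)[2:] / (n[2:] * lam - lam)
    return p + delta

lam, N = 2.0, 20
p = np.zeros(N)
p[1] = 1.0
iterations = 0
while np.max(np.abs(defect(p, lam))) > 1e-14 and iterations < 100:
    p = pseudo_newton_step(p, lam)
    iterations += 1
exact = np.array([0.0] + [lam ** (1 - n) for n in range(1, N)])
print(iterations, np.max(np.abs(p - exact)))
```

On this toy problem each pseudo-Newton step fixes one more coefficient, so it needs on the order of N iterations instead of the handful Newton needs, but each step costs only a vectorized division.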

Remark 4

In practice the linear approximation of P by the eigenvectors provides a good initial guess for the Newton and pseudo-Newton schemes, especially when computations are started “from scratch.” However, within the context of calculations based on parameter continuation, we will take \(P_0\) as the high-order parameterization from the previous mass values.

Indeed, the best results seem to be obtained by a “hybrid” approach. That is, we compute an initial guess \(P_0\) by recursively solving Eq. (9) to some fixed order \(N_0\). Then we refine this approximation via the Newton or pseudo-Newton scheme to obtain a polynomial approximation to order \(N > N_0\). The runtime performance for this hybrid approach is recorded in Table 1.

Remark 5

(Quantifying the errors) Suppose that the polynomial

is an approximate solution of Eq. (5). One way to measure the quality of the approximation is to measure the defect associated with \(P^N\), defined by the quantity

This quantity could be approximated by evaluating on a mesh of points in D. On the other hand, we can use the fact that for power series on the unit disk we have the bound

where the infinite sum can be approximated by a finite sum. Then another useful a-posteriori indicator is obtained by choosing an \(N' > N\) and computing the quantity

where \(p_{m,n}^N\) are the power series coefficients of \(P^N\), and \([f \circ P^N]_{m,n}\) are the coefficients of \(f(P^N(\mathbf {z}))\). Of course, this bound also applies on the real image of \(P^N\).

If f is a polynomial of order K, then we take \(N' = K N\). If f is not a polynomial, then the power series for \(f\circ P^N\) has infinitely many terms even though \(P^N\) is a polynomial, and we choose \(N' > N\) somewhat arbitrarily. Note that the \(p_{m,n}^N\) vanish when \(m + n > N\), so that eventually the sum involves only the coefficients of the composition.
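A sketch of the coefficient-based indicator for a polynomial nonlinearity follows, assuming it is the \(\ell _1\) sum of the defect coefficients up to order \(N' = K N\). It uses a hypothetical scalar toy (not the CRFBP), \(f(x) = \lambda x + x^2\) with \(K = 2\), and the exact truncated coefficients of its stable parameterization \(P(z) = \lambda s z/(\lambda - s z)\) with an assumed eigenvector scaling s. Because \(P^N\) is a polynomial, the coefficients of \(f(P^N)\) can be computed without truncation.

```python
import numpy as np

# Hypothetical scalar toy (not the CRFBP): f(x) = lam*x + x**2 is a polynomial of
# order K = 2, so f(P^N) has no coefficients beyond order 2*(N - 1) < K*N.

def l1_defect_indicator(p, lam):
    """Sum over all orders of |lam*n*p_n - [f(P^N)]_n| (exact product of P^N)."""
    N = len(p)
    full = np.zeros(2 * N - 1)          # room for every coefficient of f(P^N)
    full[:N] = p
    n = np.arange(len(full))
    f_of_p = lam * full + np.convolve(p, p)   # exact coefficients of f(P^N)
    return np.sum(np.abs(lam * n * full - f_of_p))

def stable_toy_coefficients(N, lam=-1.0, scale=0.5):
    """Truncated Taylor coefficients of P(z) = lam*scale*z / (lam - scale*z)."""
    n = np.arange(N)
    return np.where(n >= 1, (scale ** n) * lam ** (1 - n), 0.0)

lam = -1.0
indicators = {N: l1_defect_indicator(stable_toy_coefficients(N, lam), lam)
              for N in (10, 20, 40)}
print(indicators)
```

Here only orders \(n \ge N\) contribute, since the retained coefficients are exact; the indicator therefore measures the spillover of the truncated series through the nonlinearity.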

Yet another useful error indicator is obtained by considering the dynamical conjugacy of Eq. (7). Since the true solution satisfies the dynamical conjugacy exactly, we also consider the quantity defined by

To approximate this quantity, we fix \(\tau > 0\), let \(\varPhi _{\text{ num }}\) denote a numerical integrator, and let \(z_{k}\), \(1 \le k \le K\), be a mesh of the complex unit circle, so that \(|z_k| = 1\). Define the indicator

Error bounds for a number of example computations are recorded in Table 2.
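The conjugacy indicator can be sketched on a hypothetical scalar toy (not the CRFBP) for which the exact conjugacy is known: for \(f(x) = \lambda x + x^2\) with \(\lambda < 0\), the stable parameterization \(P(z) = \lambda s z/(\lambda - s z)\) satisfies \(P(e^{\lambda t} z) = \varPhi _t(P(z))\). Here a fixed-step RK4 integrator stands in for \(\varPhi _{\text{ num }}\), and s = 1/2 is an assumed eigenvector scaling chosen so that the series converges on the closed unit disk.

```python
import numpy as np
from numpy.polynomial.polynomial import polyval

# Hypothetical scalar toy (not the CRFBP): stable manifold of x = 0 for
# f(x) = lam*x + x**2, lam < 0, truncated to the polynomial P^N.
lam, scale = -1.0, 0.5

def f(x):
    return lam * x + x * x

def toy_coefficients(N):
    """Truncated Taylor coefficients of P(z) = lam*scale*z / (lam - scale*z)."""
    n = np.arange(N)
    return np.where(n >= 1, (scale ** n) * lam ** (1 - n), 0.0)

def rk4_flow(x, tau, steps=1000):
    """Classical fixed-step RK4; stands in for the numerical integrator Phi_num."""
    h = tau / steps
    for _ in range(steps):
        k1 = f(x)
        k2 = f(x + 0.5 * h * k1)
        k3 = f(x + 0.5 * h * k2)
        k4 = f(x + h * k3)
        x = x + h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
    return x

def conjugacy_indicator(p, tau, K=64):
    """max_k |Phi_num(P^N(z_k), tau) - P^N(exp(lam*tau) z_k)| on a mesh of |z| = 1."""
    z = np.exp(2j * np.pi * np.arange(K) / K)
    lhs = rk4_flow(polyval(z, p), tau)
    rhs = polyval(np.exp(lam * tau) * z, p)
    return np.max(np.abs(lhs - rhs))

for N in (10, 40):
    print(N, conjugacy_indicator(toy_coefficients(N), tau=1.0))
```

With a low truncation order the indicator is dominated by the truncation error of \(P^N\); at higher order it drops toward the integrator error.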

Remark 6

(Eigenvector scaling and coefficient decay) Solutions of Eq. (5) are unique only up to the choice of the scalings of the eigenvectors, and this freedom is exploited in our numerical algorithms. Indeed, this is the reason we can always take our domain to be the unit disk. The results in Table 2 describe the dependence of the numerical errors on the approximation order and the eigenvector scalings. These numerical experiments lead to the following heuristic. If we scale the eigenvectors so that the final coefficients (that is, the N-th-order coefficients of \(P^N\)) are on the order of machine epsilon, then we obtain a-posteriori errors on the order of machine epsilon.
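The heuristic above can be sketched as follows, assuming the standard fact that rescaling both eigenvectors by a common factor s (natural for a complex conjugate pair) maps \(P(z_1, z_2)\) to \(P(s z_1, s z_2)\), so that \(p_{m,n}\) becomes \(s^{m+n} p_{m,n}\). The helper `rescale_eigenvectors` is hypothetical, uses scalar coefficients for brevity (for the CRFBP each \(p_{m,n}\) is a vector in \(\mathbb {C}^4\)), and is applied to synthetic, geometrically growing coefficients.

```python
import numpy as np

def rescale_eigenvectors(coeffs, target=np.finfo(float).eps):
    """Pick a common eigenvector scaling s so the top-order coefficients ~ target.

    coeffs[m, n] holds p_{m,n} computed with unit eigenvector scaling, with
    p_{m,n} = 0 for m + n > N.  Scaling both eigenvectors by s multiplies
    p_{m,n} by s**(m + n).
    """
    N = coeffs.shape[0] - 1
    m, n = np.indices(coeffs.shape)
    order = m + n
    top = np.max(np.abs(coeffs[order == N]))
    s = (target / top) ** (1.0 / N)
    return s, coeffs * s ** order

N = 30
m, n = np.indices((N + 1, N + 1))
order = m + n
coeffs = np.where(order <= N, 3.0 ** order, 0.0)   # synthetic, growing coefficients
s, scaled = rescale_eigenvectors(coeffs)
print(s, np.max(np.abs(scaled[order == N])))
```

In practice the coefficients would be recomputed (or rescaled as above) after choosing s, and the a-posteriori indicators checked again.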

3.3 Integration of analytic arcs

In Sect. 4 we present a scheme for computing an atlas for the stable/unstable manifolds which relies on integrating analytic arcs of initial conditions by the flow generated by f. We describe this integrator in terms of power series expansions. Let us assume that \(\gamma :(-1, 1) \rightarrow \mathbb {R}^4\) is an analytic arc with power series expansion

Denote the formal series expansion

Here, we use the variables (s, t) in place of \((z_1,z_2)\) to emphasize the intuition that s corresponds to the “spatial” parameterization along the initial data, and t corresponds to the “time” parameterization along the flow. In other words, we consider \(\varGamma \) as the solution of the parameterized family of initial value problems

Substituting the formal series into this IVP and matching like powers leads to the recursion relations

which allow us to compute the coefficients of \(\varGamma \) to arbitrary order using the same methods described in Sect. 3.2. We also note that the accuracy of these formal series computations depends on the convergence of the series expansions and on the decomposition of their domains, issues which we have not yet addressed and take up in the following section.
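A minimal sketch of this two-variable Taylor recursion follows, for a hypothetical scalar toy vector field \(f(x) = x^2\) rather than the CRFBP. As an assumption of the sketch, the time variable is rescaled by \(\tau \), so \(\partial _t \varGamma = \tau f(\varGamma )\) on \(t \in [0,1]\). Matching powers of t gives \((n+1)\,a_{\cdot ,n+1} = \tau \,[\varGamma ^2]_{\cdot ,n}\), where the bracket is a Cauchy product in t whose terms are themselves Cauchy products in s. The closed-form flow \(x(t) = u/(1 - \tau t u)\) with \(u = \gamma (s)\) gives a check.

```python
import numpy as np
from numpy.polynomial.polynomial import polyval2d

# Hypothetical scalar toy vector field (not the CRFBP): f(x) = x**2, with
# dGamma/dt = tau * f(Gamma), Gamma(s, 0) = gamma(s), t in [0, 1].

def advect_arc(gamma, tau, N):
    """Return a[m, n], the coefficient of s**m t**n in Gamma(s, t), for n <= N."""
    M = len(gamma)                        # spatial truncation order
    a = np.zeros((M, N + 1))
    a[:, 0] = gamma
    for n in range(N):
        sq = np.zeros(M)
        for k in range(n + 1):            # Cauchy product in t ...
            sq += np.convolve(a[:, k], a[:, n - k])[:M]   # ... of s-series
        a[:, n + 1] = tau * sq / (n + 1)
    return a

gamma = np.zeros(16)
gamma[:2] = [0.3, 0.1]          # the arc gamma(s) = 0.3 + 0.1 s, s in [-1, 1]
tau = 0.5
a = advect_arc(gamma, tau, N=30)

S, T = np.meshgrid(np.linspace(-1, 1, 9), np.linspace(0, 1, 9))
u = 0.3 + 0.1 * S
exact = u / (1 - tau * T * u)   # closed-form flow of x' = tau * x**2
print(np.max(np.abs(polyval2d(S, T, a) - exact)))
```

Evaluating the chart at t = 1, i.e. `a.sum(axis=1)`, gives the spatial coefficients of the advected boundary arc.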

4 Building an atlas for the local stable/unstable manifold

In this section, let \(W^*(\mathbf {x}_0)\) denote an invariant stable/unstable manifold for a saddle-focus equilibrium, \(\mathbf {x}_0\). Our goal is to describe an algorithm for producing an atlas of chart maps which parameterizes a large portion of the invariant manifold. The union of the images of these maps is a piecewise parameterization of a two-dimensional subset of \(W^*(\mathbf {x_0})\). Our procedure is iterative and at each step outputs a (strictly) larger piecewise parameterization.

It is important to emphasize that our computations are carried out only to finite order. In particular, the charts described in this section are analytic functions of two complex variables. However, in practice we fix \((M,N) \in \mathbb {N}^2\), and for each chart we compute a finite polynomial approximation of order (M, N). Nevertheless, throughout this section we denote these analytic charts and their polynomial approximations using the same notation. We end this section by outlining methods for reliably, efficiently, and automatically computing these atlases. This includes algorithms for estimating and controlling truncation errors, identifying Taylor series blowup, domain decomposition, and stiffness.

4.1 Iterative method for computing charts

Before elaborating on the technical details of our method, we briefly describe the overall strategy. Starting from the parameterized local invariant manifolds obtained via methods described in Sect. 3.1, we want to build an even larger representation of the manifold. There are many ways to grow such a representation. We could, for example, simply integrate a collection of initial conditions meshing the boundary of the parameterization. However, as is well known, the exponential separation of initial conditions will force these orbits apart and eventually degrade the description of the manifold. Instead, we mesh the boundary into a collection of one-dimensional arcs and advect each of these under the flow. Propagating these arcs maintains the fidelity of the representation, and leads to new “patches” of the manifold.

Since the initial chart is parameterized by a high-order polynomial, we would like the same representation for new charts. To this end we develop a high-order Taylor integration scheme which applies to analytic arcs of initial conditions. This results in a power series representation of the flow of a boundary arc, and we take this as our next chart. After advecting each one of the boundary arcs, we have a new and strictly larger representation of the local stable/unstable manifold. The idea is illustrated in Fig. 4.

After one step of this procedure, we have moved the boundary of the local invariant manifold. In some cases, the image of the advected arc undergoes excessive stretching due to the exponential separation of initial conditions. This stretching in phase space is matched by a corresponding blowup in the coefficients of the Taylor expansion, and the computations become numerically unstable.

Building an atlas: here P is a chart for a neighborhood of the equilibrium \(\mathbf {x}_0\) computed using the parameterization method. To grow the atlas, we mesh the boundary of P using a collection of analytic arcs \(\gamma _j(s)\). Each of these arcs is advected under the flow \(\varPhi \) to produce a new chart \(\varGamma _j(s, t) = \varPhi (\gamma _j(s), t)\). The union of P with all the \(\varGamma _j\) is an atlas for a larger local stable/unstable manifold.

This problem is overcome by occasionally remeshing the boundary of the atlas. This comes at the cost of increasing the number of charts in the next step of the algorithm. Hence, efficiently computing large atlases while controlling numerical error requires automatic algorithms for managing the growth of the power series coefficients, deciding how long to integrate each individual arc, and deciding when and how to subdivide the new boundaries. These topics account for much of the technical detail that follows (Fig. 6).

4.1.1 The initial local manifold

The first step in our algorithm is to compute a polynomial approximation of the local parameterization, either by directly solving the homological equations or by iterating the Newton or pseudo-Newton schemes described in Sect. 3.1. Let \(\varGamma _0\) be a solution of Eq. (5), and \(D^2\) denote the unit polydisc in \(\mathbb {C}^2\). Recall that \(\varGamma _0: D^2 \rightarrow W^*_{\text {loc}}(\mathbf {x}_0)\) is analytic, and that \(\varGamma _0(\partial D^2)\) is flow transverse. In particular, \(\varGamma _0\) serves as our initial local parameterization, and we refer to it as a zeroth-generation interior chart and we write \(\varGamma _0(D^2) = W^*_0(\mathbf {x}_0)\).

In practice, we compute \(\varGamma _0\) to order (N, N) with \(N \in \mathbb {N}\) chosen by applying the heuristic methods discussed in Sect. 3.2. This chart is represented in the computer as a polynomial in two complex variables of total degree \(\deg (\varGamma _0) = (N-1)^2\). The truncation error of this approximation is controlled directly by choosing the eigenvector scaling as described in Remark 6, and in practice, is on the order of machine epsilon.

4.1.2 The initial manifold boundary

With \(\varGamma _0\) in hand, we fix \(K_0 \in \mathbb {N}\) and subdivide \(\partial D\) into \(K_0\)-many analytic segments, each of which has the form \(c_j: [-\,1,1] \rightarrow \partial D\), for \(1 \le j \le K_0\). We parameterize \(\partial W^*_0(\mathbf {x}_0)\) by defining \(\gamma _j(s) = \varGamma _0 \circ c_j(s)\), and we refer to \(\gamma _j\) as a lifted boundary. Note that for each \(1\le j\le K_0\), \(\gamma _j: [-\,1,1] \rightarrow \partial W^*_{\text {loc}}(\mathbf {x}_0)\) and \(\gamma _j([-\,1,1])\) is a flow transverse arc, since \(\varGamma _0\) is a dynamical conjugacy and the image of \(c_j\) is transverse to the linear flow. Now, we define the zeroth-generation boundary to be

and refer to each \(\gamma _j\) as a zeroth-generation boundary chart.

4.1.3 The next generation

Now, we apply the high-order Taylor advection described in Sect. 3.3 to grow a larger local manifold denoted by \(W^*_1(\mathbf {x}_0)\). Specifically, for \(1 \le j \le K_0\), we choose \(|\tau _j| > 0\), and our advection algorithm takes \(\gamma _j,\tau _j\) as input and produces a chart, \(\varGamma _{1,j}: D \rightarrow W^*(\mathbf {x}_0)\) which satisfies

In other words, \(\varGamma _{1,j}\) parameterizes the advected image of \(\gamma _j\) under the flow over the time interval \([0,\tau _j]\). These new charts are referred to as first-generation interior charts which we add to our atlas to obtain the first-generation local parameterization

Since \(\tau _j \ne 0\) and \(\gamma _j\) is flow transverse, we have the strict inclusion \(W^*_0(\mathbf {x}_0) \subsetneq W^*_1(\mathbf {x}_0)\). In fact, transversality of \(\gamma _j\) implies the stronger condition that \(\partial W^*_0(\mathbf {x}_0) \subset \text {Int}(W^*_1(\mathbf {x}_0))\), i.e., the manifold has grown through every point on the previous boundary.

Remark 7

(Time rescaling) In this description, \(\tau _j\) serves as a time rescaling of the flow. This allows direct control over the truncation error (in the time direction) and is analogous to the eigenvector scaling for the initial parameterization described in Remark 6. However, choosing this time rescaling is typically more difficult than choosing the eigenvector scaling and we postpone the discussion of this problem to Sect. 4.2.1.

Once the first-generation interior charts are computed by advection, the first-generation boundary arcs are obtained by evaluating the time variable at \(t = 1\). In particular, for \(1 \le j \le K_0\), the evaluation \(\varGamma _{1,j}([-\,1,1],1) \subset \partial W^*_1(\mathbf {x}_0)\) is a flow transverse arc segment. We perform spatial rescaling as needed (see Remark 8 below) to obtain the next-generation boundary arcs, \(\gamma _{1,j} : [-\,1,1] \rightarrow \partial W^*_1(\mathbf {x}_0)\) where \(1 \le j \le K_1\) for some \(K_1 \ge K_0\) and

is flow transverse. The advection and evaluation algorithms are then iterated to increase the number of charts in the atlas. The \(L^\mathrm{th}\) step in the iteration chain has the form

where \(W^*_L(\mathbf {x}_0)\) is parameterized by \(K_{L-1}\)-many interior charts (polynomials in both the space and time variables), \(\partial W^*_L(\mathbf {x}_0)\) is parameterized by \(K_{L}\)-many boundary charts (polynomials in the space variable only), and \(K_{L-1} \le K_L\).

If we stop the iteration, say at the Lth step, then the final atlas,

is a collection of \(\left| \mathcal {A} \right| = 1 + \sum \limits _{l=1}^{L} K_l\)-many analytic charts and is a piecewise parameterization of a portion of the invariant manifold.

Remark 8

(Spatial rescaling) The parameters, \(K_0,\cdots ,K_L\), control the number of boundary subdivisions, and therefore, allow direct control over scaling in the spatial direction. As in the time rescaling problem, choosing these parameters effectively is a nontrivial problem which we take up in Sect. 4.2.2.

4.2 Convergence, manifold subdivision, and numerical integration

Thus far, we have ignored the issue of convergence for our formal power series computations. The best method for studying this issue is to combine rigorous numerical computations with a-posteriori analysis and obtain a proof of the existence of an analytic solution and explicit error bounds on the polynomial approximation. Rigorously validated numerical methods for invariant manifold atlases are described in detail in Kalies et al. (2018), Kepley and Mireles James (2018). In the present work we explore the utility of invariant manifold atlases as a purely numerical tool and trade the computer-assisted proof of rigorous error bounds for improved runtime performance.

In the absence of a rigorous validation scheme, we develop heuristic checks to ensure the reliability of the computations. More precisely, we must automatically identify and fix numerical accuracy issues related to numerical Taylor integration. This amounts to rescaling our Taylor coefficients whenever the decay in either space or time becomes too slow. However, this is less straightforward than the eigenvector rescaling for the initial local parameterization described in Remark 6. In particular, it is helpful to consider the rescaling in space and time “directions” separately.

4.2.1 Time-stepping

Recall that at the saddle-focus equilibrium, the stable/unstable eigenvalues occur in complex conjugate pairs. In particular, both eigenvalues in each pair have equal real parts. It follows that identically rescaling each pair of eigenvectors is the ideal strategy. In fact, this strategy is also necessary and sufficient to ensure that the initial parameterization is real-valued, see Van den Berg et al. (2016). Moreover, in the general case of a hyperbolic equilibrium, the real part of each eigenvalue is a measure of the expansion or contraction rate in the direction of its associated eigenvector. Thus, in cases for which they are not equal, the real parts are still explicitly known and the eigenvectors are scaled proportionally to these rates.

On the other hand, every chart in our atlas except the initial one is obtained via our advection scheme. In this case, neither the expansion/contraction rates nor their directions are explicitly known. Obtaining these estimates would require solving for the (spatial) derivative of the flow on each chart. For a general vector field defined on \(\mathbb {R}^n\), this amounts to increasing the phase space dimension of our ODE solver from n to \(n + n^2\), which would significantly reduce the size of the manifold that it is computationally feasible to produce.

Instead, we take an approach similar to Kalies et al. (2018), which describes heuristics for rescaling time and space independently of one another. Specifically, we adopt a time rescaling which ensures that the norm of the \(M^\mathrm{th}\) “coefficient” (with respect to t) for each chart is less than machine epsilon. Note that for a classical IVP this coefficient is of course just a scalar. However, in our case the coefficient is actually an analytic function of the spatial variable, represented as a power series, and the norm of this coefficient is measured using the \(\ell _1\) norm. This is made more precise in the following section.

This choice is highly conservative, which gives us tight control over the truncation error in the time direction. On the other hand, the spatial rescaling in the present work deviates from the scheme presented in Kalies et al. (2018) and is detailed in Sect. 4.2.2.
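The time rescaling can be sketched as follows, assuming (as holds for an autonomous flow) that the chart over \([0,\tau ]\) satisfies \(\varGamma _\tau (s,t) = \varGamma _1(s, \tau t)\), so the \(t^n\) coefficient scales as \(\tau ^n\). The helper `choose_time_rescaling` is hypothetical; it picks \(\tau \) so that the \(\ell _1\) norm of the \(M^\mathrm{th}\) time coefficient is about machine epsilon. The example uses the toy IVP \(x' = x^2\), \(x(0) = c\), whose solution \(c/(1 - ct)\) has time coefficients \(c^{n+1}\).

```python
import numpy as np

def choose_time_rescaling(a_unit, M, target=np.finfo(float).eps):
    """Pick tau so the l1 norm of the M-th time coefficient is about `target`.

    a_unit[:, n] holds the spatial Taylor coefficients of the t**n coefficient
    of Gamma(s, t) computed with tau = 1; rescaling time multiplies it by tau**n.
    """
    norm_M = np.sum(np.abs(a_unit[:, M]))          # l1 norm over the space index
    tau = (target / norm_M) ** (1.0 / M)
    scaled = a_unit * tau ** np.arange(a_unit.shape[1])
    return tau, scaled

c = 2.0
# Toy IVP x' = x**2, x(0) = c (a constant arc): x(t) = sum_n c**(n+1) t**n.
a_unit = np.array([[c ** (n + 1) for n in range(31)]])
tau, scaled = choose_time_rescaling(a_unit, M=30)
print(tau, np.sum(np.abs(scaled[:, 30])))
```

In this toy case the chosen \(\tau \) lands well inside the radius of convergence \(1/c\), which is the intended effect of the conservative choice.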

4.2.2 Manifold subdivision

Next, we describe the spatial rescaling scheme, which we refer to as manifold subdivision. We assume that the time rescaling described in the previous section has been carried out on each chart, and our interest is in rescaling each boundary arc to control truncation errors accumulating in the “space direction.” This amounts to subdividing the manifold, since it is reasonable to assume that the rescaling always shrinks the domain. Thus, a single boundary arc will give rise to multiple subarcs defined on reduced domains.

To be more precise, we let \(C^{\omega }\) denote the collection of real-valued, analytic functions defined on \((-1,1)\), and let \(\mathcal {S}\) denote the collection of real-valued sequences. We define the Taylor transform, \(\mathcal {T}: C^{\omega } \rightarrow \mathcal {S}\), to be the mapping which sends an analytic function to its sequence of Taylor coefficients centered at \(z = 0\). Specifically, if \(g \in C^\omega \) has the Taylor expansion,

$$\begin{aligned} g(z) = \sum _{n=0}^{\infty } a_n z^n, \end{aligned}$$

then \(\mathcal {T} \left( g \right) = \{a_n\} = a \in \mathcal {S}\). Now, we equip \(\mathcal {S}\) with the \(\ell _1\)-norm defined by

$$\begin{aligned} \left| \left| a \right| \right| _1 = \sum _{n=0}^{\infty } \left| a_n \right| , \end{aligned}$$

and we note that elements of \(\mathcal {S}\) with finite norm form a closed subalgebra denoted as

$$\begin{aligned} \ell _1 = \left\{ a \in \mathcal {S} : \left| \left| a \right| \right| _1 < \infty \right\} , \end{aligned}$$
and we write \(\left| \left| a \right| \right| _{\ell _1}\) when we want to emphasize that \(a \in \ell _1\) (i.e., we write \(\left| \left| a \right| \right| _{\ell _1}\) for the norm \(\left| \left| a \right| \right| _1\) when \(\left| \left| a \right| \right| _1\) is finite).

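We remark that our error analysis is carried out using the \(\ell _1\)-norm due to the efficiency of computing this norm for polynomials. However, if \(\overline{g}\approx g\) is a numerical approximation, then the errors we are interested in are of the form

$$\begin{aligned} \left| \left| \overline{g}- g \right| \right| _{\infty } = \sup _{z \in (-1,1)} \left| \overline{g}(z) - g(z) \right| . \end{aligned}$$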
We are justified in using the \(\ell _1\) norm due to the well known result that \(\left| \left| \overline{g}- g \right| \right| _{\infty } \le \left| \left| \overline{g}- g \right| \right| _{\ell _1}\).

Now, suppose \(\gamma \in C^\omega \) and assume that \(\mathcal {T} \left( \gamma \right) = a \in \ell _1\). Since \(\varPhi \) is a nonlinear flow, a typical arc segment undergoes rapid deformation and stretching when advected. This implies that for a single step in our algorithm with the general form,

we expect both the arc length and curvature of \(\gamma '\) to be larger than for \(\gamma \). On the level of Taylor coefficients, this statement about deformation/stretching says that if \(b = \mathcal {T} \left( \gamma ' \right) \), then in general we expect \(\left| \left| a \right| \right| _{\ell _1} \le \left| \left| b \right| \right| _{\ell _1}\). The relationship between this norm and the truncation error implies that advecting an arc adversely impacts the propagation error.

To see this, we recall that in practice our computation stores a truncated polynomial approximation for \(\gamma '\) in the form \(\overline{b}= \left( b_0,\cdots ,b_{N-1}\right) \). In order that \(\overline{b}\approx b\) is a “good” approximation (in the \(\ell _1\) topology), \(|b_{n}|\) must be “small” for each \(n \ge N\). These higher-order terms correspond to the truncation error for \(\gamma '\) and primarily arise from two sources. One source, which we cannot control once N is fixed, is the truncation error associated with \(\gamma \). However, by inspection of the Cauchy product formula in Eq. (19), it is clear that the polynomial coefficients stored for \(\gamma \) also contribute to this truncation error for \(\gamma '\) after applying the nonlinearity. We refer to these contributions as spillover terms.

This observation implies that for \(\bar{b} \approx b\) to be a good approximation, we must also require that \(\left| a_n \right| \) is “small” for each \(n > N'\), where \(N' < N\) depends on the degree of the nonlinearity. This motivates the following heuristic method for controlling truncation error for propagated arcs. We begin by assuming that a has approximately geometric decay. Specifically, we expect that there exists some \(r < 1\) such that the tail of the series defined by \(\gamma \) decays faster than the geometric series with ratio r. In this case, the truncation error is of order \(\mathcal {O}(r^N)\). Now fix \(0< N' < N\) and define the tail ratio for a by

Evidently, \(T_{N'}(a)\) is small whenever “most” of the \(\ell _1\) weight of a is carried in the first \(N'\)-many coefficients. It follows that if \(T_{N'}(a)\) is sufficiently small, then under the action of a nonlinear function, \(f : \ell _1 \rightarrow \ell _1\), the spillover terms for f(a) remain small. Of course, “small” depends on context; in particular, the choice of \(N'\) and the threshold values for \(T_{N'}\) are problem specific. In the present work, we prove that it is always possible to control \(T_{N'}\).
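Since the displayed definition of the tail ratio did not survive, the sketch below assumes the natural form consistent with the description above, \(T_{N'}(a) = \sum _{n \ge N'} |a_n| / \left| \left| a \right| \right| _1\), i.e. the share of the \(\ell _1\) weight outside the first \(N'\) coefficients. For geometric coefficients \(a_n = 2^{-n}\), \(0 \le n < 20\), and \(N' = 10\) this gives exactly \(1/1025\). The sketch also checks the bound \(\left| \left| g \right| \right| _\infty \le \left| \left| a \right| \right| _{\ell _1}\) on a sample grid.

```python
import numpy as np
from numpy.polynomial.polynomial import polyval

def l1_norm(a):
    return np.sum(np.abs(a))

def tail_ratio(a, N_prime):
    """Assumed form of T_{N'}(a): share of the l1 weight in coefficients n >= N'."""
    total = l1_norm(a)
    return 0.0 if total == 0 else l1_norm(a[N_prime:]) / total

a = 0.5 ** np.arange(20)        # geometric decay with ratio r = 1/2, N = 20
print(tail_ratio(a, 10))        # approximately 1/1025

s = np.linspace(-1, 1, 201)
g_max = np.max(np.abs(polyval(s, a)))   # sampled sup norm of g on [-1, 1]
print(g_max, l1_norm(a))
```
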

Remark 9

Strictly speaking, for the CRFBP we have \(\gamma = \left( \gamma ^{(1)}, \cdots , \gamma ^{(4)}\right) \), where each \(\gamma ^{(j)} \in C^\omega \) is a coordinate for the boundary chart. Similarly, \(\mathcal {T} \left( \gamma \right) = \left( a^{(1)},\cdots ,a^{(4)}\right) \in \ell _1^4\), so the discussion in Sect. 4.2.2 so far does not technically apply. However, our restriction to scalar-valued functions is justified by the fact that if \(a \in \ell _1^4\), then defining

makes \(\ell ^4_1\) into a normed vector space. This choice of norm gives us the freedom to restrict the discussion of remeshing and tail ratios to scalar-valued functions.

Next, we describe our scheme for controlling the tail ratio. This algorithm takes as input a polynomial representation for \(\gamma \), defined on \([-\,1,1]\), and returns as output a list of polynomials, \(\{\gamma _1,\cdots ,\gamma _K\}\). The key point is that these polynomials are also defined on \([-\,1,1]\), and they can be chosen such that \(T_{N'}(\gamma _j)\) is arbitrarily small for \(1 \le j \le K\). In this work, we assume the output polynomials are specified as coefficient vectors of length N (i.e., the same degree as the input); however, this is not required.

This gives rise to an additional remeshing step in our algorithm which is performed as needed after an evaluation step and prior to an advection step leading to an updated schematic

In the remeshing step, the tail ratio for each boundary arc from the previous step is computed and checked against a threshold. Boundary arcs which exceed this threshold are flagged as poorly conditioned, and subdivided into smaller subarcs which satisfy the threshold. The collection of resulting subarcs and well-conditioned arcs from the previous step is passed to the advection step where each results in a separate chart.

Before proving this threshold can always be satisfied, we describe the subdivision algorithm. As noted in Remark 9, it suffices to consider a single coordinate for a parameterized boundary arc. Thus, we assume \(\gamma (s): [-\,1,1] \rightarrow \mathbb {R}\) is analytic with Taylor series

$$\begin{aligned} \gamma (s) = \sum _{n=0}^{\infty } a_n s^n, \end{aligned}$$

and fix a subinterval, \([s_1,s_2] \subset [-\,1,1]\). Define the constants

$$\begin{aligned} \hat{s} = \frac{s_1 + s_2}{2}, \qquad \delta = \frac{s_2 - s_1}{2}, \end{aligned}$$

and define \(\hat{\gamma }: [-\,1,1] \rightarrow \mathbb {R}\) by

$$\begin{aligned} \hat{\gamma }(s) = \sum _{n=0}^{\infty } c_n s^n, \qquad c_n = \delta ^n \sum _{k=n}^{\infty } \binom{k}{n} a_k \hat{s}^{k-n}. \qquad (14) \end{aligned}$$

Then \(\hat{\gamma }\) is a parameterization for the arc segment parameterized by \(\gamma \) restricted to \([s_1,s_2]\). In fact, \(\hat{\gamma }\) is the Taylor series for \(\gamma \) after recentering at \(\hat{s}\) and rescaling by \(\delta \), and it satisfies the functional equation

$$\begin{aligned} \hat{\gamma }(s) = \gamma (\hat{s} + \delta s), \qquad s \in [-\,1,1]. \qquad (15) \end{aligned}$$

Moreover, the mapping \(a \mapsto c\) is a linear transformation on \(\mathcal {S}\), and in particular, if \(a_n = 0\) for all \(n \ge N\), then \(c_n = 0\) for all \(n \ge N\) also. Now, we prove that we have explicit control over the tail ratio for \(\hat{\gamma }\).
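The recentering map \(a \mapsto c\) follows from expanding \(\gamma (\hat{s} + \delta s) = \sum _k a_k (\hat{s} + \delta s)^k\) with the binomial theorem. The sketch below (the helper name `recenter` is illustrative) implements it for a polynomial of length N, confirms that the output also has length N, and checks the functional equation \(\hat{\gamma }(s) = \gamma (\hat{s} + \delta s)\) on a grid.

```python
import numpy as np
from math import comb
from numpy.polynomial.polynomial import polyval

def recenter(a, s_hat, delta):
    """Coefficients c of gamma_hat(s) = gamma(s_hat + delta*s).

    c_n = delta**n * sum_{k >= n} binom(k, n) * a_k * s_hat**(k - n); for a
    polynomial of length N the sum stops at k = N - 1, so c has length N too.
    """
    N = len(a)
    return np.array([
        delta ** n * sum(comb(k, n) * a[k] * s_hat ** (k - n) for k in range(n, N))
        for n in range(N)
    ])

a = np.array([1.0, -2.0, 0.5, 3.0, -1.0])   # gamma(s) = 1 - 2s + 0.5s^2 + 3s^3 - s^4
s1, s2 = 0.0, 0.5                            # the subinterval [s1, s2]
s_hat, delta = (s1 + s2) / 2, (s2 - s1) / 2
c = recenter(a, s_hat, delta)
s = np.linspace(-1, 1, 7)
print(np.max(np.abs(polyval(s, c) - polyval(s_hat + delta * s, a))))
```
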

Proposition 1

(Controlling tail ratios) Suppose \(\gamma : [-\,1,1] \rightarrow \mathbb {R}\) is analytic, fix \(\hat{s} \in (-1,1)\), \(1 \le N' \le N\), and let \(\epsilon > 0\). Then there exists \(\delta > 0\) such that \(T_{N'}(c) < \epsilon \) where c is the truncation to order N for \(\hat{\gamma }: [-\,1,1] \rightarrow \mathbb {R}\) defined by \(\hat{s},\delta \) as in Eq. (14).

Proof

Define \(\gamma ^N: [-\,1,1] \rightarrow \mathbb {R}\) to be the Taylor polynomial obtained by truncating the Taylor series for \(\gamma \) to order N. For \(k \in \mathbb {N}\), define the usual \(C^k\)-norm on \([-\,1,1]\) to be

Since \(\gamma ^N\) is a polynomial, we have the bound

In particular, for any \(\hat{s} \in (-1,1)\), we have \(\left| \gamma ^{(n)}(\hat{s}) \right| \le M\), for \(0 \le n \le (N-1)\), and we define

It follows that

Now, let \(\hat{\gamma }\) be defined as in Eq. (14). Recall that \(\hat{\gamma }\) is also analytic on \([-\,1,1]\), and by differentiating Eq. (15) we have the derivative formula, \(\hat{\gamma }^{(n)}(s) = \delta ^n \gamma ^{(n)}\left( \hat{s} + \delta s\right) \), for all \(n \in \mathbb {N}\). By Taylor’s theorem, we obtain another explicit formula for \(c_n\) given by

$$\begin{aligned} c_n = \frac{\hat{\gamma }^{(n)}(0)}{n!} = \frac{\delta ^n \gamma ^{(n)}(\hat{s})}{n!}, \end{aligned}$$
and we note that \(c_0 = \hat{\gamma }(0) = \gamma (\hat{s})\) does not depend on \(\delta \). We have the estimate for the tail ratio of \(\hat{\gamma }\):

which completes the proof. \(\square \)

Proposition 1 establishes the fact that we may reparameterize \(\gamma \) on subintervals of \([-\,1,1]\) with width, \(2\delta \), and that as \(\delta \rightarrow 0\) the tail ratio also approaches zero. We note that \(\delta \) does not depend on the subinterval, and therefore, for a fixed \(\epsilon \) the number of required subarcs is finite. In particular, no more than \(K = \lceil \frac{2}{\delta } \rceil \) subarcs are required. To summarize the usefulness of this result, we present the following algorithm for controlling the spatial truncation error which was implemented for the atlases in this work.

1. Fix a threshold \(0 < \epsilon \ll 1\), a cutoff \(1 \le N' < N\), and \(K \in \mathbb {N}\). The threshold and cutoff are both chosen based on the alignment of \(\gamma \) with the flow, the degree of the nonlinearity in f, and the truncation size. In practice, these are problem specific choices which require some ad hoc experimentation in order to balance computational efficiency and truncation error.

2. Following each evaluation step in our algorithm, a boundary arc has the form \(\gamma : [-\,1,1] \rightarrow \mathbb {R}\) which is stored in the computer as a polynomial approximation, \(\overline{a}= \left( a_0,\cdots ,a_{N-1}\right) \). If \(T_{N'}(\overline{a}) < \epsilon \), continue to the advection step.

3. If \(T_{N'}(\overline{a}) \ge \epsilon \), specify a partition of \([-\,1,1]\) into K-many subintervals by choosing their endpoints, \(\{s_0,s_1,\cdots ,s_K\}\). Apply the formula in Eq. (14) to obtain \(\{\gamma _1,\cdots ,\gamma _K\}\) where for \(1 \le j \le K\), \(\gamma _j(s) = \gamma (\hat{s}_j + \delta _j s)\) where \(\hat{s}_j = \frac{s_j + s_{j-1}}{2}\) and \(\delta _j = \frac{s_{j} - s_{j-1}}{2}\).

4. Each resulting subarc which satisfies the tail ratio threshold passes to the advection step. Subarcs which violate the threshold are subdivided again by repeating step 3. By Proposition 1, this condition is eventually met for every subarc and the algorithm proceeds to the advection step.
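The four steps above can be sketched as a recursive bisection (K = 2). This is an illustration under stated assumptions, not the authors' implementation: the tail ratio is taken to be \(\sum _{n \ge N'} |c_n| / \left| \left| c \right| \right| _1\), every subarc is recentered directly from the original coefficients (equivalent to recentering a subarc, since composed affine reparameterizations are affine), and a depth cap guards the recursion. The test arc with coefficients \(0.9^n\) decays slowly, so the full arc fails the threshold.

```python
import numpy as np
from math import comb
from numpy.polynomial.polynomial import polyval

def tail_ratio(a, N_prime):
    """Assumed form of T_{N'}: l1 weight of a[N':] relative to the l1 norm of a."""
    total = np.sum(np.abs(a))
    return 0.0 if total == 0 else np.sum(np.abs(a[N_prime:])) / total

def recenter(a, s_hat, delta):
    """Coefficients of gamma(s_hat + delta*s) from those of gamma (binomial form)."""
    N = len(a)
    return np.array([delta ** n * sum(comb(k, n) * a[k] * s_hat ** (k - n)
                                      for k in range(n, N)) for n in range(N)])

def subdivide(a, N_prime, eps, lo=-1.0, hi=1.0, K=2, depth=0, max_depth=30):
    """Return [(lo, hi, c), ...], ordered in s, with T_{N'}(c) < eps on each piece."""
    c = recenter(a, (lo + hi) / 2, (hi - lo) / 2)   # reparameterize [lo, hi] over [-1, 1]
    if tail_ratio(c, N_prime) < eps or depth >= max_depth:
        return [(lo, hi, c)]
    edges = np.linspace(lo, hi, K + 1)              # step 3: partition into K pieces
    pieces = []
    for left, right in zip(edges[:-1], edges[1:]):  # step 4: recurse on failures
        pieces += subdivide(a, N_prime, eps, left, right, K, depth + 1, max_depth)
    return pieces

a = 0.9 ** np.arange(20)          # slowly decaying arc coefficients, N = 20
pieces = subdivide(a, N_prime=10, eps=1e-3)
print(tail_ratio(a, 10), len(pieces))
```

Because the pieces come back in order, their endpoints form the partition of \([-\,1,1]\), and each coefficient vector c is the next boundary chart passed to the advection step.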

4.2.3 Stiffness

The final numerical consideration which we address is the stiffness problem. We recall that the CRFBP vector field is analytic away from the primary masses which correspond to singularities of Eq. (3). Since this system is Hamiltonian, any trajectory which collides with one of these primaries must blow up in finite time. However, smooth trajectories may pass arbitrarily close to these primaries and as they do, the velocity coordinates, \(\dot{x}, \dot{y}\), become arbitrarily large.

Recall that a single boundary arc, \(\gamma : [-\,1,1] \rightarrow \mathbb {R}^4\), is a parameterized manifold of initial data. Then its advected image, \(\varGamma :[-\,1,1] \times [0,1] \rightarrow \mathbb {R}^4\), is a parameterized bundle of trajectory segments. For any \(s_0 \in [-\,1,1]\), \(\varGamma (s_0,t)\) parameterizes the trajectory passing through \(\gamma (s_0)\) over the (nonscaled) time interval \([0,\tau ]\).

Now, suppose that for \(s_0 \in [-\,1,1]\), the trajectory through \(\gamma (s_0)\) passes “close” to a primary at time \(t = t_0\). Then, we have

Recalling our time rescaling algorithm described in Sect. 4.2.1, it is clear that controlling truncation in the time direction will require taking increasingly shorter time-steps. Of course, this is not surprising; however, the difficulty arises from the fact that other choices of \(s \in [-\,1,1]\) often correspond to trajectory segments which remain far away from the primary, while our time rescaling applies uniformly on \([-\,1,1]\). Hence, the advection of the entire boundary chart is slowed dramatically whenever any portion of its image approaches a primary. We refer to these charts as stiff. Obviously, this is a major problem for our “breadth-first” approach for computing the manifold atlas. Namely, the integrator gets stuck on the stiff charts, causing the computation to stall.

A naive method for dealing with this is to define the speed of a boundary chart, which is a parameterized curve of the form \(\gamma (s) = (x(s),\dot{x}(s), y(s), \dot{y}(s))\), by

$$\begin{aligned} S(\gamma ) = \max _{s \in [-\,1,1]} \sqrt{\dot{x}(s)^2 + \dot{y}(s)^2}, \end{aligned}$$

set a threshold \(\kappa \), and cease advection of \(\gamma \) whenever \(S(\gamma ) > \kappa \). While this fixes the problem of computational efficiency, we also lose large portions of the manifold which remain far from the primaries. Instead, we leverage the manifold subdivision procedure already introduced in Sect. 4.2.2 to modify the naive algorithm so that these portions of the manifold are retained, as follows.

1. Fix a maximum speed threshold, \(\kappa >0\). For each boundary chart, \(\gamma \), present after the evaluation step, check whether \(S(\gamma ) \le \kappa \), and if so, continue to the remeshing step.

2. If \(S(\gamma ) > \kappa \), write \(\gamma (s) = \left( x(s),\dot{x}(s), y(s), \dot{y}(s)\right) \) and compute

$$\begin{aligned} \left\{ s \in [-\,1,1] : \dot{x}(s)^2 + \dot{y}(s)^2 - \kappa ^2 = 0\right\} . \end{aligned}$$ Since \(\dot{x}, \dot{y}\) are polynomial approximations, this set is a finite collection of roots of a polynomial, which we denote in increasing order by \(s_1< \dots < s_K\). Set \(s_0 = -\,1\) and \(s_{K+1} = 1\).

3. For \(0\le j \le K\), check whether \(\dot{x}(s)^2 + \dot{y}(s)^2 - \kappa ^2 < 0\) holds on \((s_j,s_{j+1})\), and if so, compute \(\hat{\gamma }_j\) as in Eq. (14) and continue to the remeshing step. Subintervals which fail this check are discarded.
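Steps 2 and 3 above can be sketched as follows. This is a minimal illustration that assumes the velocity components of \(\gamma \) are monomial coefficient arrays in s. It returns the subintervals of \([-\,1,1]\) on which the squared speed stays below \(\kappa ^2\), using the endpoints \(\pm \,1\) as \(s_0\) and \(s_{K+1}\). The rescaling of each kept subinterval into \(\hat{\gamma }_j\) follows Eq. (14), which is not reproduced here. The function name `slow_subintervals` and the tolerance `tol` are hypothetical.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def slow_subintervals(xdot_coeffs, ydot_coeffs, kappa, tol=1e-12):
    """Subintervals (a, b) of [-1, 1] on which xdot^2 + ydot^2 - kappa^2 < 0.

    Coefficients are monomial coefficients in s, lowest degree first.
    """
    # Step 2: p(s) = xdot(s)^2 + ydot(s)^2 - kappa^2 and its real roots in (-1, 1).
    p = P.polysub(P.polyadd(P.polymul(xdot_coeffs, xdot_coeffs),
                            P.polymul(ydot_coeffs, ydot_coeffs)),
                  [kappa ** 2])
    roots = [float(r.real) for r in P.polyroots(p)
             if abs(r.imag) < tol and -1.0 < r.real < 1.0]
    # The endpoints of [-1, 1] play the roles of s_0 and s_{K+1}.
    breaks = [-1.0] + sorted(roots) + [1.0]
    # Step 3: p does not change sign between consecutive breakpoints, so the
    # sign at the midpoint decides whether the whole subinterval is kept.
    keep = []
    for a, b in zip(breaks[:-1], breaks[1:]):
        if b - a > tol and P.polyval(0.5 * (a + b), p) < 0.0:
            keep.append((a, b))
    return keep
```

For example, an arc with \(\dot{x}(s) = 2s\), \(\dot{y}(s) = 0\) and \(\kappa = 1\) keeps only the middle piece \((-0.5, 0.5)\). The two outer pieces, where the speed exceeds \(\kappa \), are discarded.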

To summarize, our algorithm identifies regions of the manifold boundary which pass close to a primary by checking the maximum speed. Regions which exceed the speed threshold are cut away, while the remaining regions of the boundary continue to be advected. These cuts account for the holes visible around each primary in the manifold plots, as in Figs. 7 and 8.

Fig. 7 Atlases at \(\mathcal {L}_0\) in the Triple Copenhagen problem: the center of each frame shows the initial local stable chart (green) and unstable chart (red), computed to order 45 using the parameterization method. The three blue dots in each frame represent the locations of the primaries. One-third of the boundary of each local manifold is meshed into ten analytic arcs and propagated in time, with the boundary of each chart illustrated in blue. By Lemma 1, the rest of the atlas is obtained via \(\pm \,120^{\circ }\) rotations. The five frames illustrate the complete atlases obtained after advecting the boundary arcs for \(\pm \,0.25, \pm \,1.0, \pm \,1.5\) time units (top row) and \(\pm \,2.5\) and \(\pm \,4.0\) time units (bottom row). After a fairly short integration time, the resulting atlases become complicated enough that visual analysis is difficult or impossible. This complexity motivates the development of the post-processing schemes described in Sect. 5.2. Each chart is approximated using Taylor order 20 in space and 40 in time. Runtime and number of charts are given in Table 3.

4.3 Computational results: manifold atlases for the triple Copenhagen problem

Performance results for atlas computations at the libration points \(\mathcal {L}_{0}\) and \(\mathcal {L}_5\) are given in Tables 3 and 4, respectively. The computations are performed for the case of equal masses, that is, for the triple Copenhagen problem. The tables report the advection time (the number of time units for which the boundaries of the local parameterizations are integrated), the time required to complete the computations, and the number of polynomial charts comprising the atlas. All computations were performed on a MacBook Air laptop running macOS Sierra 10.12.6, with a 1.8 GHz Intel Core i5 and 8 GB of 1600 MHz DDR3 memory.

Fig. 8 Atlases at \(\mathcal {L}_5\) in the Triple Copenhagen Problem: the center of each frame shows the local stable/unstable charts computed using the parameterization method (red and green, respectively). The locations of the primaries are denoted by the blue dots in each frame. Parameterizations are approximated to polynomial order 45. The boundary of the local stable/unstable manifold is meshed into thirty analytic arcs. The five frames illustrate the atlases obtained by advecting the boundary arcs by \(\pm \,0.75, \pm \,1.15, \pm \,1.5\) time units (top row) and by \(\pm \,3.0\) and \(\pm \,4.0\) time units (bottom row). Again, it is difficult to analyze the results by eye, and some post-processing is necessary. Each chart is approximated using Taylor order 20 in space and 40 in time. Runtime and number of charts are given in Table 4.

The resulting atlases for \(\mathcal {L}_0\) and \(\mathcal {L}_5\) are illustrated in Figs. 7 and 8 for various integration times. The boundaries for the charts are also shown, making it clear that the computational effort goes up dramatically near the primaries. Note that the chart boundary lines running out of the local parameterizations are actual orbits of the system and hence give a sense of the dynamics on the manifold. The pictures provide some insight into the dynamics of the problem; however, their complexity illustrates the need for more sophisticated search techniques in order to extract further useful qualitative information from the atlases.

5 Homoclinic dynamics in the CRFBP

In this section, we discuss connecting orbits found for the symmetric \(m_1 = m_2 = m_3 = 1/3\) case by searching the manifold atlases computed in the previous section.

5.1 Mining the atlases

Assume we have computed atlases, \(\mathcal {A}^{s,u}\), for the stable/unstable manifolds of \(\mathbf {x}_0\). We are interested in “mining” the chart data to find transverse connections. Since each atlas is stored as a collection of polynomial charts, it suffices to identify pairwise intersections between stable and unstable charts. Thus, throughout we assume \(\varGamma ^{s,u} : [-\,1,1]^2 \rightarrow W^{s,u}(\mathbf {x}_0)\) is a pair of charts which parameterize a portion of the stable/unstable manifold. We write \(\varGamma ^{s,u}_{1,2,3,4}\) to denote the scalar coordinates of each chart. The following theorem, whose proof can be found in Kepley and Mireles James (2018), provides a computable condition for verifying transverse intersection of a pair of charts.

Theorem 1

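Define \(G :[-\,1,1]^3 \rightarrow \mathbb {R}^3\) by

$$\begin{aligned} G(s,t,\sigma ) = \begin{pmatrix} \varGamma ^u_1(s,t) - \varGamma ^s_1(\sigma ,0)\\ \varGamma ^u_2(s,t) - \varGamma ^s_2(\sigma ,0)\\ \varGamma ^u_3(s,t) - \varGamma ^s_3(\sigma ,0) \end{pmatrix}, \end{aligned}$$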

and suppose \((\hat{s}, \hat{t}, \hat{\sigma }) \in [-1, 1]^3\) satisfies \(G(\hat{s}, \hat{t}, \hat{\sigma }) = 0\). If \(\varGamma _4^u(\hat{s}, \hat{t})\) and \(\varGamma _4^s(\hat{\sigma }, 0)\) have the same sign, then \(\hat{\mathbf {x}} := \varGamma ^u(\hat{s}, \hat{t})\) is homoclinic to \(\mathbf {x}_0\). Moreover, if \(DG(\hat{s}, \hat{t}, \hat{\sigma })\) is nonsingular and if \(\nabla E(\hat{\mathbf {x}}) \ne 0\) (where E is the CRFBP energy), then the energy level set is a smooth 3-manifold near \(\hat{\mathbf {x}}\) and the stable/unstable manifolds of \(\mathbf {x}_0\) intersect transversally in the energy manifold.

We emphasize that Theorem 1 provides a computable condition for verifying a transverse intersection using rigorous numerics. In the purely numerical setting of this paper, however, we use the same theorem only to detect transverse intersections. This is made explicit in the following algorithm, which is used in the mining scheme for all results in the present work.

Assume \(\varGamma ^{s,u}, G\) are as defined in Theorem 1. Apply Newton’s method to find an approximate root of G. Let \(\hat{v} = \left( \hat{s}, \hat{t}, \hat{\sigma }\right) \) denote an approximate solution with \(G(\hat{v}) \approx 0\), and check the following conditions:

1. \(\varGamma _4^u(\hat{s},\hat{t})\) and \(\varGamma _4^s(\hat{\sigma },0)\) are both “far” from 0.

2. \(\varGamma _4^u(\hat{s},\hat{t})\) and \(\varGamma _4^s(\hat{\sigma },0)\) have the same sign.

If condition 1 holds but condition 2 fails, then the charts do not intersect. In this case, the charts lie on separate portions of the stable/unstable manifolds which are symmetric with respect to the fourth coordinate: the fourth coordinates of the two candidate points have opposite signs. We refer to these as “pseudo-intersections.” On the other hand, if both conditions hold, then we conclude from Theorem 1 that we have numerically found a transverse homoclinic for \(\mathbf {x}_0\) passing through \(\varGamma ^u(\hat{s}, \hat{t}) = \hat{\mathbf {x}}\).

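Note that condition 1 serves two purposes in this setting. First, it is an easily computable check that \(\nabla E(\hat{\mathbf {x}}) \ne 0\), as required in the theorem. To see this, recall that the fourth coordinate is \(\dot{y}\) and that the energy depends on the velocities only through the kinetic term \(\dot{x}^2 + \dot{y}^2\). Hence \(\partial E/\partial \dot{y}\) evaluated at \(\hat{\mathbf {x}}\) is a nonzero constant multiple of \(\varGamma _4^u(\hat{s},\hat{t})\). It follows that \(\nabla E(\hat{\mathbf {x}}) \ne 0\) is satisfied automatically whenever condition 1 is satisfied.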

In addition, condition 1 gives us some confidence that the outcome of the sign check in condition 2 reflects the geometry of the manifolds rather than numerical error. Indeed, if condition 1 is not satisfied, then \(\varGamma _4^u(\hat{s},\hat{t})\) and \(\varGamma _4^s(\hat{\sigma },0)\) take values near zero, and integration errors can easily flip the sign of either coordinate. In that case, even if condition 2 is satisfied, we cannot trust the result, and so we cannot conclude whether the zero of G corresponds to a transverse intersection or a pseudo-intersection. Fortunately, this situation can be remedied as discussed in Remark 11. As a result, we are free to choose the threshold for what is meant by “far” in condition 1 very conservatively. This gives us a great deal of confidence that our mining algorithm returns only transverse homoclinic orbits.
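The mining check for a single chart pair can be sketched as follows. This is a minimal, non-rigorous illustration that assumes each chart is a Python callable returning a point of \(\mathbb {R}^4\) in the coordinate order \((x,\dot{x},y,\dot{y})\). It also assumes that G compares the first three coordinates of \(\varGamma ^u(s,t)\) and \(\varGamma ^s(\sigma ,0)\). The function names, the finite-difference Jacobian and the threshold argument `far` are hypothetical choices. Nonsingularity of DG is only tested implicitly, through the linear solve.

```python
import numpy as np


def find_intersection(gamma_u, gamma_s, v0, tol=1e-12, max_iter=50, h=1e-7):
    """Newton's method for G(s, t, sigma) = Gamma^u_{1:3}(s, t) - Gamma^s_{1:3}(sigma, 0).

    gamma_u(s, t) and gamma_s(sigma, t) return length-4 arrays. The Jacobian
    is approximated by central differences to keep the sketch short.
    """
    def G(v):
        s, t, sigma = v
        return np.asarray(gamma_u(s, t))[:3] - np.asarray(gamma_s(sigma, 0.0))[:3]

    v = np.asarray(v0, dtype=float)
    for _ in range(max_iter):
        g = G(v)
        if np.linalg.norm(g) < tol:
            return v
        J = np.empty((3, 3))
        for j in range(3):
            e = np.zeros(3)
            e[j] = h
            J[:, j] = (G(v + e) - G(v - e)) / (2.0 * h)
        v = v - np.linalg.solve(J, g)  # raises LinAlgError if J is singular
    return None  # Newton did not converge


def classify(gamma_u, gamma_s, v, far):
    """Apply conditions 1 and 2 to an approximate zero v of G."""
    s, t, sigma = v
    if np.any(np.abs(v) > 1.0):
        return "outside domain"          # zero not in [-1, 1]^3
    yu = gamma_u(s, t)[3]
    ys = gamma_s(sigma, 0.0)[3]
    if min(abs(yu), abs(ys)) < far:
        return "undetermined"            # condition 1 fails (see Remark 11)
    if np.sign(yu) == np.sign(ys):
        return "transverse homoclinic"   # conditions 1 and 2 hold
    return "pseudo-intersection"         # condition 1 holds, condition 2 fails
```

In use, `find_intersection` is run only on chart pairs that survive any cheaper screening, and its result is passed to `classify`.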

We further increase our confidence in each approximate connection by using it as the initial guess for a BVP solver based on Newton’s method. This refines the approximation to nearly machine precision, and we then apply continuation methods to this BVP formulation. Every connection reported in this section has been refined in this way, and the BVP solver converged for every connection identified by the mining algorithm. In other words, the mining algorithm did not return any false homoclinics.

5.2 Efficient atlas mining

Checking every pair of charts from the two atlases with the above procedure is expensive, since the number of pairs grows like the product of the atlas sizes. We therefore introduce two methods which significantly reduce the number of chart pairs that must be checked via the Newton intersection scheme based on Theorem 1.

5.2.1 The \(\ell _1\) box approximation

The first method for improving the mining efficiency is to apply a coarse preprocessing step to each pair of charts which must be compared. The main idea is that most pairs of charts which do not intersect “obviously” do not intersect, in the sense that their images in phase space are very far apart. We exploit this with a fast test which identifies many such pairs, and we skip the slower Newton-based intersection attempt for them.

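To be more precise, consider an arbitrary polynomial \(P: [-\,1,1]^2 \rightarrow \mathbb {R}\) defined by

$$\begin{aligned} P(s,t) = \sum _{m=0}^{M}\sum _{n=0}^{N} a_{mn}\, s^m t^n. \end{aligned}$$

We define the \(\ell _1\) box for P to be

$$\begin{aligned} B_P = \left[ a_{00} - r,\ a_{00} + r\right] , \qquad r = \sum _{(m,n) \ne (0,0)} \left| a_{mn}\right| . \end{aligned}$$

The significance of \(B_P\) is that, since \(|s|, |t| \le 1\), the triangle inequality gives the bound

$$\begin{aligned} \left| P(s,t) - a_{00}\right| \le \sum _{(m,n) \ne (0,0)} \left| a_{mn}\right| |s|^m |t|^n \le r \qquad \text {for all } (s,t) \in [-\,1,1]^2, \end{aligned}$$

or equivalently, \(P(s,t) \in B_P\) for all \((s,t) \in [-\,1,1]^2\). We extend this definition component-wise to vector-valued polynomials and use it to rule out pairs of charts which cannot intersect because their images are “well separated.” Specifically, consider a pair of stable/unstable charts

$$\begin{aligned} \varGamma ^s, \varGamma ^u : [-\,1,1]^2 \rightarrow \mathbb {R}^4, \end{aligned}$$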

which have \(\ell _1\) boxes \(B_{\varGamma ^s}, B_{\varGamma ^u}\) described by rectangles in \(\mathbb {R}^4\) and satisfying \(\varGamma ^s(s,t) \in B_{\varGamma ^s}\) and \(\varGamma ^u(s,t) \in B_{\varGamma ^u}\) for all \((s,t) \in [-\,1,1]^2\). Then, if the set distance \(d(B_{\varGamma ^s}, B_{\varGamma ^u})\) is large enough, we can conclude that \(\varGamma ^s\) and \(\varGamma ^u\) do not intersect.

Using \(\ell _1\) boxes has two advantages. The first is that computing and checking \(\ell _1\) boxes for pairwise intersections is much faster than our Newton-like intersection method. This is because, for each coordinate, the box radius r can equivalently be computed as

$$\begin{aligned} r = \sum _{m,n} \left| a_{mn}\right| - \left| a_{00}\right| , \end{aligned}$$

where \(a_{mn}\) are the Taylor coefficients of that coordinate. In other words, r is the \(\ell _1\) norm of the coefficient array minus the magnitude of its constant term, which is extremely fast to compute using modern implementations. Determining whether two boxes intersect is also fast, thanks to efficient interval arithmetic libraries such as the INTLAB library for MATLAB (Rump 1999), which was utilized in our implementation.
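A minimal sketch of the \(\ell _1\) box and the separation test follows. It assumes each chart is stored as a NumPy array of shape (4, M+1, N+1), whose entry [i, m, n] is the coefficient of \(s^m t^n\) in coordinate i. Unlike an INTLAB implementation, it uses ordinary floating point without outward rounding, so it is a heuristic screen rather than a rigorous one. The function names are hypothetical.

```python
import numpy as np


def l1_box(coeffs):
    """Return (center, radius) of the l1 box of a chart, coordinate-wise.

    coeffs[i, m, n] is the coefficient of s^m t^n in the i-th coordinate,
    for (s, t) in [-1, 1]^2.
    """
    coeffs = np.asarray(coeffs, dtype=float)
    center = coeffs[:, 0, 0]
    # l1 norm of all coefficients minus the magnitude of the constant term.
    radius = np.abs(coeffs).sum(axis=(1, 2)) - np.abs(center)
    return center, radius


def box_distance(box_a, box_b):
    """Euclidean set distance between two axis-aligned boxes (0 if they overlap)."""
    (ca, ra), (cb, rb) = box_a, box_b
    gap = np.maximum(np.abs(ca - cb) - (ra + rb), 0.0)
    return float(np.linalg.norm(gap))
```

A chart pair would then be skipped whenever `box_distance(l1_box(A), l1_box(B))` exceeds the chosen separation threshold.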

The second advantage is that an \(\ell _1\) box is typically a very coarse enclosure for the true values of P. This “problem” is often referred to as the dependency problem or the wrapping effect. In our situation, however, we consider the coarseness to be a feature, since it makes our numerical estimates more conservative. Thus, we are able to rule out many pairs of charts which clearly do not intersect without discarding any pair which does intersect, that is, without introducing false negatives.

In practice, a single pairwise \(\ell _1\) box intersection check is approximately 1,000 times faster than the Newton-based scheme, and this method rules out around 90 percent of nonintersecting chart pairs. Moreover, the \(\ell _1\) box for each chart need only be computed once, during the atlas construction, and then stored. This leaves a single box intersection check as the only significant computational operation per pair.
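Because the boxes are stored, screening a whole pair of atlases reduces to array comparisons. The following minimal sketch assumes the stored centers and radii are held as arrays of shape (n_charts, 4). It returns the index pairs whose boxes overlap in every coordinate, and only those pairs would go on to the Newton-based test. The function name `candidate_pairs` is hypothetical, and the sketch does not implement the triangle-inequality refinement mentioned below.

```python
import numpy as np


def candidate_pairs(centers_s, radii_s, centers_u, radii_u):
    """Index pairs (i, j) whose stored l1 boxes overlap in every coordinate.

    centers_*, radii_* have shape (n_charts, 4).
    """
    # Broadcast to shape (n_s, n_u, 4): coordinate-wise gap between boxes.
    gap = (np.abs(centers_s[:, None, :] - centers_u[None, :, :])
           - (radii_s[:, None, :] + radii_u[None, :, :]))
    overlap = np.all(gap <= 0.0, axis=2)
    return np.argwhere(overlap)
```

The broadcast array has \(n_s \times n_u \times 4\) entries, so for atlases with thousands of charts it is sensible to process the stable charts in blocks rather than all at once.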

Finally, we remark that once \(\ell _1\) boxes have been computed and stored for each chart in both atlases, one can make careful use of the triangle inequality to reduce the computation even further. This provides roughly an additional order of magnitude improvement in the efficiency of our algorithm, which could be crucial to the feasibility of mining extremely large atlases. However, we took only limited advantage of this fact in the present work.

5.2.2 Fundamental domains

The other main source of efficiency gain in our algorithm relies on using the dynamics explicitly. Recalling our notation in Sect. 4, assume \(\mathcal {A}^s\) is the stable manifold atlas, computed up to and including the \(L_s^\mathrm{th}\) generation, and let \(W^{s}_{k}(\mathbf {x}_0)\) denote the \(k^\mathrm{th}\) generation local stable manifold. Then \(W^{s}_k(\mathbf {x}_0)\) is a fundamental domain for \(W^{s}(\mathbf {x}_0)\). In other words, if \(\mathbf {x}(t)\) is any orbit which satisfies \(\lim \limits _{t \rightarrow \infty } \mathbf {x}(t) = \mathbf {x}_0\) and \(\mathbf {x}(0) \ne \mathbf {x}_0\), then there exists \(t_k \in \mathbb {R}\) such that \(\mathbf {x}(t_k) \in W^{s}_k(\mathbf {x}_0)\). The analogous claim holds for the unstable manifold, with \(t \rightarrow -\infty \) in place of \(t \rightarrow \infty \). Taken together, if we assume we have computed the unstable manifold atlas, \(\mathcal {A}^u\), up to the \(L_u^\mathrm{th}\) generation, then we have the following observation.

Proposition 2

Let \(\mathbf {x}(t)\) be a transverse homoclinic to \(\mathbf {x}_0\). Then \(\mathbf {x}(t) \in W^s(\mathbf {x}_0) \cap W^u(\mathbf {x}_0)\) for all \(t \in \mathbb {R}\). Let \(W^{s,u}_0(\mathbf {x}_0),W^{s,u}_1(\mathbf {x}_0),\cdots ,W^{s,u}_{L_{s,u}}(\mathbf {x}_0)\) denote the generation sequence of local stable/unstable manifolds. Then exactly one of the following is true.

-

There exist \(k_s,k_u\) and \(t_0 \in \mathbb {R}\) such that \(\mathbf {x}(t_0) \in W^s_{k_s}(\mathbf {x}_0)\cap W^u_{k_u}(\mathbf {x}_0)\), and \(k_s + k_u\) is constant for all pairs \((k_s,k_u)\) which satisfy this property.

-