Abstract

Neuroimaging evidence suggests that dynamic facial expressions elicit greater activity than static face stimuli in brain structures associated with social cognition, interpreted as greater ecological validity. However, a quantitative meta-analysis of brain activity associated with dynamic facial expressions is lacking. The current study investigated, using three fMRI experiments, activity elicited by (a) dynamic and static happy faces, (b) dynamic and static happy and angry faces, and (c) dynamic faces and dynamic flowers. In addition, using activation likelihood estimation (ALE) meta-analysis, we determined areas concordant across published studies that (a) used dynamic faces and (b) specifically compared dynamic and static emotional faces. The middle temporal gyri (Experiment 1) and superior temporal sulci (STS; Experiments 1 and 2) were more active for dynamic than static faces. In contrasts with the baseline, the amygdalae were more active for dynamic faces (Experiments 1 and 2) and the fusiform gyri were active for all conditions (all Experiments). The ALE meta-analyses revealed concordant activation in all of these regions as well as in areas associated with cognitive manipulations (inferior frontal gyri). Converging data from the experiments and the meta-analyses suggest that dynamic facial stimuli elicit increased activity in regions associated with interpretation of social signals and emotional processing.


Introduction

Most facial movements result in expressions of emotion or language, and subtle changes of the mouth or eyes provide a rich and powerful source of social information. Recognizing and understanding these changes are basic to interactions in our social environment. Faces are processed rapidly and require minimal attentional resources (Vuilleumier and Schwartz 2001; Palermo and Rhodes 2007), making humans very effective at quickly and readily discerning emotions from facial expressions. Humans have more experience looking at dynamic expressive faces; looking at static faces has been a privilege largely of the last century with the advent of photography. Behavioural (Biele and Grabowska 2006; Yoshikawa and Sato 2006; Uono et al. 2010) and neuroimaging studies (e.g., Kilts et al. 2003; LaBar et al. 2003; Sato et al. 2004; Trautmann et al. 2009) show that, compared to static expressions (i.e., photographs), dynamic facial expressions convey compelling information that is more similar to what we encounter with faces in everyday social interactions. For instance, dynamic presentation of facial emotions was shown to improve identification of emotion due to more realistic feature changes during motion (Frijda 1953; Kozel and Gitter 1968; Harwood et al. 1999; Wehrle et al. 2000), consistent with the argument that motion in facial expressions increases the ecological validity of a face (e.g., Carter and Pelphrey 2008; LaBar et al. 2003; Sato et al. 2004; Trautmann et al. 2009). Static photographs of facial expressions are predominantly used in imaging studies (e.g., Allison et al. 2000; Adolphs 2002, 2003; Phan et al. 2002; Fusar-Poli et al. 2009, for reviews); however, due to possible increased ecological validity, the use of dynamic stimuli may be advantageous in this work. The purpose of this study is to examine brain responses to dynamic facial expressions with the primary interest in the differences between dynamic and static facial expressions.

General agreement exists among researchers that static facial expressions elicit activity in the fusiform gyri, the amygdalae and parts of the temporal cortex (for reviews: Allison et al. 2000; Adolphs 2002, 2003; Britton et al. 2006; Gobbini and Haxby 2007; Palermo and Rhodes 2007; Vuilleumier and Pourtois 2007). The fusiform gyri and the superior temporal sulci (STS) are involved in the detailed perception of faces (Haxby et al. 2000; Adolphs 2002; Gobbini and Haxby 2007). Previous research showed that STS activity was greater for emotion than identity morphs, supporting the hypothesis that STS is involved in social cognition (Allison et al. 2000; LaBar et al. 2003). Just posterior and ventral to the STS is the V5/MT (middle temporal) cortex, a motion sensitive area (Allison et al. 2000) involved in visual motion awareness (Lanyon et al. 2009). LaBar et al. (2003) showed that V5/MT responded to dynamic visual cues, but emotional cues recruited the STS. As these regions are topographically adjacent, differentiating them can be difficult and activity may be categorised as coming from the same region (e.g., Allison et al. 2000). The amygdalae have also been shown to activate to facial emotions (Adolphs 2002; Morris et al. 1998; Gobbini and Haxby 2007). Although early studies implicated the amygdalae particularly with fear processing (e.g., Morris et al. 1999; Phan et al. 2002), recent studies show amygdala involvement in a wide range of salient emotions (discussions of which can be found in Carter and Pelphrey 2008; LaBar et al. 2003).

The vast majority of neuroimaging studies have used static facial stimuli. Fewer studies have used brain imaging protocols with dynamic facial expressions (e.g., Carter and Pelphrey 2008; Kilts et al. 2003; LaBar et al. 2003; Sato et al. 2004; Trautmann et al. 2009); however, enough research is accumulating to warrant a collective report of the data. Researchers using dynamic stimuli (LaBar et al. 2003; Sato et al. 2004; Schultz and Pilz 2009) usually presented the faces as short video clips that began with a neutral expression and transformed through a set of frames (frame rates ranged from 25 to 40 frames/s), over 800–5,000 ms, into a face with a salient emotional expression, for instance, a smile or a frown. Although most studies used stimuli from available stimulus datasets (e.g., Ekman series; Ekman and Friesen 1976), some researchers generated their stimuli by filming actresses and actors (e.g., Grosbras and Paus 2006; Trautmann et al. 2009). Imaging studies that examined dynamic facial expressions employed a range of control conditions or baselines (e.g., static facial expressions and scrambled images). Details on studies used in the meta-analyses are found in Table 1. Although the methodology (e.g., stimulus presentation interval, emotion) varies among these studies, the brain areas that activate to dynamic facial expressions largely overlap with those seen in static face studies, namely the fusiform gyri, superior temporal sulci (STS), posterior middle temporal gyri (more commonly referred to as V5/MT), as well as the amygdalae. Using a coordinate-based meta-analytic technique (Laird et al. 2005), we aimed to characterize the areas that underlie dynamic facial expressions with a major focus on the contrast between dynamic and static facial expressions; this method provides quantitative information that a subjective, qualitative review cannot provide.

Stimuli that have increased ecological validity may be especially advantageous in the study of social cognition in young and clinical populations, where imaging time is limited and maximal activations are critical. With a future aim to apply dynamic and static facial expression tasks to developmental populations, we were particularly interested in methodological approaches favoured for developmental neuroimaging, to minimize extraneous developmentally laden factors (e.g., Gaillard et al. 2001; Luna et al. 2010). For instance, it is preferable to use tasks with simple instructions and short runs. It is also recommended to use tasks where the neural correlates are well understood in the adult literature to readily allow for comparisons across populations (Luna et al. 2010). Thus, to determine the brain regions implicated in the processing of dynamic facial expressions, we conducted three experiments and quantitative meta-analyses of relevant literature. As an initial step, in Experiment 1 participants viewed static and dynamic happy faces. In Experiment 2, participants viewed static and dynamic happy and angry faces; data on happy faces were also merged with data from Experiment 1. With these comparisons we were able to look at the effect of emotion in brain regions that underlie processing of positive and negative dynamic facial expressions. Lastly, in Experiment 3 we used only dynamic stimuli of happy and angry faces as well as dynamic pictures of opening and closing flowers, to examine differences between motion producing facial expressions and other biological motion in objects. In the meta-analyses we compiled data from earlier studies to quantitatively assess the areas involved in processing dynamic faces with a specific focus on contrasts between dynamic and static facial expressions.

Experiment 1

The purpose of the first experiment was to examine the neural correlates of dynamic and static happy facial expressions. We were particularly interested in the contrast between dynamic and static facial expressions, and expected more activity to dynamic faces in regions associated with face processing and related motion.

Materials and Methods

Participants

Twenty four participants, 18 female, mean age 26.5 ± 4.6 years completed Experiment 1. None of the participants had any history of neurological or psychiatric disorders. Informed consent was obtained from all participants and the Research Ethics Board at the Hospital for Sick Children approved all procedures.

Stimuli

Stimuli were greyscale pictures of happy faces (4 males and 4 females) presented either in static or dynamic form (Fig. 1a). The images were taken from the MacBrain Face Stimulus Set database of faces; images had an accuracy of emotion rating >80%. The images were cropped using an oval mask to remove hair, ears and shoulders and were embedded in a uniform light grey background. Dynamic stimuli were created using WinMorph software. Faces were morphed from a neutral expression to a smiling expression over 15 frames. These images were presented in a video at 50 frames per second. The final frame was repeated for 9 frames for a total of 24 frames over 480 ms. In the static condition, only the last frame was displayed for 480 ms. In a block design, static and dynamic conditions were presented in an alternating fashion (ABXBAX…; Fig. 1; A and B correspond to static and dynamic conditions, respectively, and X represents baseline blocks). Four 13.5 s blocks were presented for each condition and each block contained 8 stimuli presented at a 1,500 ms inter-stimulus interval. A white fixation cross was presented for 1 s between stimuli. Within each block, a star was presented randomly for 1,500 ms; participants were instructed to press a key when they saw the star, to maintain attention. Baseline blocks, during which a fixation cross was presented, also lasted 13.5 s. A fixation cross was also presented for 16 s at the beginning and end of the run.
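The timing values above can be cross-checked with a few lines of arithmetic. The sketch below is illustrative only: it assumes that the 1,500 ms interval is measured from stimulus onset to stimulus onset and that the star occupies one such slot in each block. Neither assumption is stated explicitly in the text, and the function names are hypothetical.

```python
# Timing arithmetic for the Experiment 1 stimuli, using values stated in the text.
FRAME_RATE_HZ = 50         # video frame rate
MORPH_FRAMES = 15          # neutral-to-smile morph
HOLD_FRAMES = 9            # repeats of the final frame
ONSET_INTERVAL_MS = 1500   # assumption: interval is onset-to-onset
FACES_PER_BLOCK = 8        # face stimuli per block
STAR_SLOTS_PER_BLOCK = 1   # assumption: the star fills one 1,500 ms slot


def clip_duration_ms(n_frames: int, frame_rate_hz: float) -> float:
    """Duration in ms of a clip with n_frames shown at frame_rate_hz."""
    return n_frames * 1000.0 / frame_rate_hz


def block_duration_s(n_events: int, onset_interval_ms: float) -> float:
    """Duration in s of a block with n_events equally spaced event slots."""
    return n_events * onset_interval_ms / 1000.0


total_frames = MORPH_FRAMES + HOLD_FRAMES
clip_ms = clip_duration_ms(total_frames, FRAME_RATE_HZ)
block_s = block_duration_s(FACES_PER_BLOCK + STAR_SLOTS_PER_BLOCK,
                           ONSET_INTERVAL_MS)

print(f"{total_frames} frames -> {clip_ms} ms per clip")
print(f"block length -> {block_s} s")
```

Under these assumptions, the 24-frame clip and the nine event slots reproduce the reported 480 ms clip duration and 13.5 s block length.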

fMRI Procedure

All MR imaging was conducted on a 1.5T Signa Twin EXCITE3 scanner (GE Medical Systems, WI; software rev.12M4) with an 8-channel head coil. T1-weighted 3D SPGR images covering the whole brain were acquired (TE/TR/alpha = 9 ms/4.2 ms/15°, 116 slices, voxel size = 1 × 1 × 1.5 mm³, 2 NEX, 7 min) as an anatomical underlay. Functional images were then acquired while participants completed the task, with a standard single-shot gradient-recalled echo-planar imaging sequence (TE/TR/alpha = 40 ms/2000 ms/90°, voxel size = 3.75 × 3.75 × 5 mm³). Depending on the participant’s head size, we collected 24–26 contiguous axial slices.

Participants were asked to attend to all stimuli and press a button when they saw a star. Stimuli were displayed on MR compatible goggles (CinemaVision, Resonance Technology Inc., CA). Stimuli were controlled and responses recorded using the software Presentation (Neurobehavioral Systems Inc., CA) on a personal computer. Responses to the star stimulus were recorded using an MR compatible keypad (Lumitouch, Photonics Control, CA).

Data Processing and Analyses

Data pre-processing and analyses were carried out in AFNI (Cox 1996). The standard pre-processing pipeline included motion correction, spatial smoothing with an 8 mm Gaussian kernel (full width at half maximum; FWHM), signal intensity normalization for percent signal change and GLM fitting using a fixed haemodynamic response function (AFNI program 3dDeconvolve) within subjects’ datasets. Images were spatially normalised to the MNI N27 brain in Talairach stereotaxic space and resampled to 3 mm cubic voxels.

Group images were analyzed using random effects ANOVA. Differences were examined among the static, dynamic and baseline/rest conditions. The results were thresholded at P < 0.01 (corrected for multiple comparisons using cluster size; Xiong et al. 2003) for the whole brain. The AFNI program AlphaSim performs probability simulations to estimate the random field noise producing a cluster in a given volume after the noise is thresholded at a prescribed P-value; this provides the minimum cluster size in the dataset of interest. Using this method, 1,000 Monte Carlo iterations were performed at an uncorrected P-value of 0.05 on the whole brain mask. The smoothness of the ANOVA residuals was measured and used as the smoothness of the simulated noise fields. The average brain mask for Experiment 1 contained 61,537 voxels, yielding a minimum volume of 2,214 μl (82 voxels) as the cluster size threshold.
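The relation between the cluster size in voxels and the reported volume follows from the 3 mm cubic voxels used after resampling, since 1 mm³ equals 1 μl. A minimal sketch of that conversion is given below. The helper names are hypothetical, and the code does not reproduce the AlphaSim simulation itself, only the unit conversion and the resulting size check.

```python
VOXEL_EDGE_MM = 3.0  # edge of the resampled cubic voxels, from the text


def cluster_volume_ul(n_voxels: int, edge_mm: float = VOXEL_EDGE_MM) -> float:
    """Volume of a cluster in microlitres (1 mm^3 equals 1 microlitre)."""
    return n_voxels * edge_mm ** 3


def passes_cluster_threshold(n_voxels: int, min_voxels: int) -> bool:
    """True when a suprathreshold cluster is at least the minimum size."""
    return n_voxels >= min_voxels


EXP1_MIN_VOXELS = 82  # minimum cluster size reported for Experiment 1

print(cluster_volume_ul(EXP1_MIN_VOXELS))              # volume in microlitres
print(passes_cluster_threshold(100, EXP1_MIN_VOXELS))  # hypothetical 100-voxel cluster
print(passes_cluster_threshold(40, EXP1_MIN_VOXELS))   # hypothetical 40-voxel cluster
```

With 27 μl per voxel, 82 voxels correspond to the 2,214 μl threshold reported for Experiment 1.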

Results

Behavioural results showed that participants were very accurate in responding to the star stimulus (99 and 100% for static and dynamic stimuli, respectively). No significant differences in response times were observed during static (450.5 ± 76 ms) and dynamic (460 ± 96 ms) faces blocks.

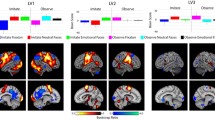

Neuroimaging results showed that compared to baseline, static happy faces elicited bilateral activity in the extended visual occipital temporal processing stream, as well as in the right precentral gyrus, right middle frontal gyrus (BA6 and BA 9) and the right parietal lobule; dynamic happy faces elicited activity in the same occipital temporal areas as well as bilateral amygdalae, parahippocampal gyri, thalami, lentiform nuclei and more extensive frontal activation (Fig. 2; Table S1). The contrast between dynamic and static happy faces showed more activity for dynamic happy faces in the right inferior and middle temporal gyri (BA 37; Table 2a; Fig. 3).

Fig. 2 Rendered brain maps of activity shared by the three experiments. Activation maps are superimposed to generate activity related with (a) dynamic happy > baseline for Experiments 1, 2, and 3, (b) static happy > baseline for Experiments 1 and 2 and (c) dynamic angry > baseline for Experiments 2 and 3. P = 0.01 corrected for multiple comparisons using cluster size

Overall, these results demonstrate that both static and dynamic faces elicit activity as expected in core areas of face processing, such as the lingual and the fusiform gyri, as well as extended regions, such as the middle frontal gyri (BA 9), which are associated with the cognitive processing of faces. However, dynamic faces showed more activity than static faces in posterior middle temporal regions. More activity for dynamic faces than baseline was detected in the STS and the amygdalae, which are regions associated with interpreting social signals, and emotional processing, respectively. This is consistent with the hypothesis that V5/MT underlies movement, whereas the STS plays a key role for interpreting information in social signals (LaBar et al. 2003). Results are discussed in more detail in the general discussion.

Experiment 2

The purpose of this experiment was to examine the neural correlates of dynamic and static happy and angry facial expressions. Our primary goal was to replicate results from Experiment 1 regarding the contrast between dynamic and static presentation of faces and as a secondary goal to examine the effect of emotion by utilizing both happy and angry faces.

Materials and Methods

Participants

Fifteen adults, 13 female, mean age 26.3 ± 4.5 years, who participated in Experiment 1 also completed Experiment 2, with the same inclusion criteria and consents.

Stimuli

Stimuli were presented in the same sequence as in Experiment 1, but in different blocks the four male and four female faces exhibited angry or happy expressions (Fig. 1b). There were four blocks for each of the emotions and face types (i.e., dynamic happy, dynamic angry, static happy, static angry) for a total of sixteen 13.5 s blocks, interspersed with rest blocks as in Experiment 1.

fMRI Procedure

We employed the same procedure as Experiment 1.

Data Processing and Analyses

We used the same data processing methods as Experiment 1. Whole brain contrasts were performed among all conditions and are reported at P < 0.01 (corrected for multiple comparisons using cluster size; Xiong et al. 2003). The average brain mask for Experiment 2 contained 60,700 voxels; Monte Carlo simulations required a minimum cluster volume of 2,133 μl (79 voxels) to pass an uncorrected P-value of P < 0.05.

As both happy and angry dynamic and static expressions were included in this study, we were able to examine the effect of both emotion and stimulus presentation on signal change in task-relevant regions. The average signal change and standard error were extracted from regions of interest (ROIs—averaged over 8 voxels; cubes 6 mm in length) in the amygdalae, fusiform gyri, posterior middle temporal regions (V5/MT) and STS. Central voxels were chosen from functional contrasts (dynamic > static) for V5/MT in the left (−42, −60, −4) and right (45, −57, 1) hemisphere, and superior temporal gyrus in the left (−48, −50, 12) and right (49, −50, 10) as well as from the dynamic happy > baseline contrast for the fusiform gyri, left (−43, −57, −17) and right (41, −57, −13) and the amygdalae, left (−21, −4, −10) and right (23, −4, −10). Note that ROI results are descriptive, for the purpose of illustration; they do not include further statistical analyses.

Results

Behavioural results show that participants responded with 96% accuracy to the star stimulus in the dynamic and static angry conditions, with 98% accuracy for static happy faces and 100% accuracy for dynamic happy faces; accuracy scores were not statistically different among conditions. No significant differences were observed for reaction times in blocks of happy static (457.2 ± 87 ms), happy dynamic (475.5 ± 110 ms), angry static (471.7 ± 122 ms) and angry dynamic (461.4 ± 91 ms) faces.

Neuroimaging results show that compared to baseline, static happy and angry faces elicited activity in classic face processing areas; dynamic happy and angry faces evoked more activity than baseline in more wide-ranging areas than static faces (Table S2). These results replicated the findings from Experiment 1 and demonstrated that happy and angry faces elicited activity from a similar set of areas, with the exception of the left inferior parietal lobule (BA 7/40) and left superior parietal lobule (BA 7; Table 2b) that showed more activity for happy than angry faces.

Dynamic faces (both happy and angry) elicited more activity than static faces in bilateral middle temporal gyri, left middle occipital gyrus, left fusiform gyrus and right superior temporal gyrus (Table 2b; Fig. 3). Percent signal change related to fusiform gyri, posterior middle temporal gyri, STS and the amygdalae for all four face types, using the ROIs described above, is shown in Fig. 4. Note that these figures are for the purpose of illustration and their differences have not been statistically tested; however, the following trends were observed. The right fusiform appeared to be the most active region irrespective of facial expression and mode of presentation; however, dynamic facial expressions elicited more signal than static expressions in both hemispheres. In posterior middle temporal gyri and the STS, activity was lower bilaterally for static than for dynamic faces. Although a difference was observed in the left amygdala between dynamic presentation of happy and angry faces, the effect was larger for the right amygdala and higher for dynamic angry faces than other conditions (Fig. 4d). Overall, dynamically presented faces elicited more activity in regions associated with emotional processing of faces (e.g., amygdalae, STS) as well as motion (posterior middle temporal regions). Results are discussed in more detail in the general discussion.

Fig. 4 Average signal change and standard error for regions sensitive to dynamic emotions in Experiment 2. (a) Fusiform gyri; left: −43, −57, −17; right: 41, −57, −13, (b) V5/MT; left: −42, −60, −4; right: 45, −57, 1, (c) STS; left: −48, −50, 12; right: 49, −50, 10 and (d) Amygdalae; left: −21, −4, −10; right: 23, −4, −10. Note: ROI averages were not tested for statistical significance

Experiment 3

Flowers, similar to faces, are configurations of different parts or features (e.g., petals, stamens). Motion in these parts produces a different flower state. Thus, as a complementary purpose, in Experiment 3 we examined whether dynamic motion in faces and flowers would show differences in areas of social cognition (e.g., superior temporal regions). We used dynamic stimuli of happy and angry faces as well as dynamic pictures of opening and closing flowers. This experiment allowed for investigating activity differences elicited by biological motion in faces and flowers.

Materials and Methods

Participants

Fifteen participants, 7 female, mean age 25.8 ± 5.8 years completed Experiment 3. They fulfilled the same criteria and consents as in Experiment 1.

Stimuli

Face stimuli were the same as the dynamic stimuli used in Experiment 2: 4 male and 4 female face movie clips of happy and angry expressions. In addition, we included greyscale videos of flowers opening and closing. The flower videos were presented at 31.25 ms per frame (32 frames per second) and included 10 frames (312.5 ms) of transformation and 5 repetitions of the final frame for a total duration of 468.75 ms. As in Experiments 1 and 2, each block consisted of 8 stimuli, interleaved by a white fixation cross for 1 s. Each block also included a randomly presented star image, to which subjects responded, to ensure that they were paying attention. There were six 13.5 s blocks for each task condition. A 16 s block of fixation was presented at the beginning and end of the run with a 13.5 s block of fixation in the middle.

fMRI Procedure

We used the same fMRI procedure as Experiment 1.

Data Processing and Analyses

We again used the same data processing and analyses methods as Experiment 1. Contrasts were performed among all conditions and are reported at P < 0.01 (corrected for multiple comparisons using cluster size; Xiong et al. 2003). The average brain mask for Experiment 3 contained 60,924 voxels and Monte Carlo simulations required a minimum cluster volume of 1,620 μl (60 voxels) to pass an uncorrected P-value of P < 0.05.

Results

Behavioural results indicated ceiling accuracy for all conditions in response to the star stimulus (happy dynamic, 99%, angry dynamic 100%, and flower dynamic 100%). Response times for happy dynamic (457.6 ± 55 ms), angry dynamic (452.5 ± 50 ms) and flower dynamic (456.5 ± 39 ms) did not show any significant differences.

Neuroimaging results showed that compared to baseline, dynamic happy faces elicited more activity bilaterally in the inferior occipital, middle occipital and fusiform gyri; dynamic angry faces elicited more activity than baseline in the same regions as dynamic happy faces, but in addition, showed activity bilaterally in the middle temporal gyrus (Fig. 2; Table S3). Dynamic presentation of flowers evoked more activity than baseline bilaterally in the lingual, middle occipital, middle temporal and fusiform gyri, as well as the right parahippocampal gyrus (Table S3). No significant differences were detected in comparisons between happy and angry dynamic faces. Dynamic flowers elicited more activity than dynamic happy faces in the left cuneus, left fusiform gyrus and right middle occipital gyrus (Table 2c). Compared to dynamic angry faces, dynamic flowers showed more activity in bilateral middle occipital gyri, the left fusiform gyrus and the right parahippocampal gyrus.

These results replicated in part the findings from Experiments 1 and 2. Activity in the middle temporal gyri was common to all three conditions in this experiment, and suggests that biological motion, whether facial or flower motion, recruits this area. No significant difference between faces and flowers was observed in the STS, indicating that any difference was not sufficient to survive the contrast. The lack of increased activity to dynamic faces in STS, typically involved in processing social signals, may be due to the salience of watching a flower open or close, as this is an unusual display. Results are discussed in more detail in the general discussion. To more fully characterise the effects of dynamic facial expressions on brain activation, we completed two meta-analyses.

Quantitative Meta-Analyses

Using activation likelihood estimation (ALE; Laird et al. 2005), we conducted meta-analyses of published neuroimaging studies that examined dynamic faces to identify the brain correlates specifically of dynamic facial expressions. ALE is a quantitative meta-analysis method available in BrainMap (http://brainmap.org/ale/; Research Imaging Center of the University of Texas in San Antonio). Initially proposed by Turkeltaub et al. (2002), it has been updated by Laird et al. (2005). ALE generates a probabilistic map of activation where the value at each voxel indexes the probability that at least one of the reported coordinates will fall within that voxel in the template stereotaxic space. The underlying mathematical equations are detailed in Laird et al. (2005).
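The probability described above can be illustrated with a small sketch. Each reported focus is modelled as a three-dimensional Gaussian, and the ALE value at a voxel is the probability that at least one focus falls within it, that is, one minus the product of the per-focus probabilities of falling elsewhere. This is a simplified illustration of the union-of-probabilities idea and is not the BrainMap implementation. The function names, the 2 mm voxel size and the example coordinates are assumptions made for the example only.

```python
import math


def fwhm_to_sigma(fwhm_mm: float) -> float:
    """Convert a Gaussian full width at half maximum to a standard deviation."""
    return fwhm_mm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def focus_probability(voxel, focus, fwhm_mm, voxel_volume_mm3=8.0):
    """Approximate probability that one focus lies within the given voxel.

    Assumption: the isotropic 3D Gaussian density at the voxel centre is
    multiplied by the voxel volume (8 mm^3 for hypothetical 2 mm voxels).
    """
    sigma = fwhm_to_sigma(fwhm_mm)
    dist_sq = sum((v - f) ** 2 for v, f in zip(voxel, focus))
    density = math.exp(-dist_sq / (2.0 * sigma ** 2)) / (
        (2.0 * math.pi) ** 1.5 * sigma ** 3
    )
    return min(1.0, density * voxel_volume_mm3)


def ale_value(voxel, foci, fwhm_mm):
    """Probability that at least one focus falls within the voxel."""
    prob_none = 1.0
    for focus in foci:
        prob_none *= 1.0 - focus_probability(voxel, focus, fwhm_mm)
    return 1.0 - prob_none


# Hypothetical foci in mm, invented for illustration (not taken from Table 1).
example_foci = [(41, -57, -13), (43, -55, -15), (-21, -4, -10)]

near = ale_value((42, -56, -14), example_foci, fwhm_mm=10.0)
far = ale_value((0, 40, 30), example_foci, fwhm_mm=10.0)
print(near, far)
```

In this toy case the voxel lying between two nearby foci receives a higher ALE value than a distant voxel, which is the sense in which the map indexes concordance across reported coordinates.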

Methods

Literature Search and Article Selection

A literature search was performed using the standard search engine of Web of Science (http://www.isiknowledge.com). We looked for keywords such as dynamic faces or facial motion and fMRI. First, abstracts of these articles were reviewed to confirm that each article was an imaging study (fMRI or PET) using tasks to investigate dynamic facial expressions of emotion. The remaining papers underwent a full text review. To preserve data interpretability, we only considered studies written in English that included healthy adult samples with stereotaxic coordinates of within group whole-brain results using random effects analysis. Coordinates in these studies had to follow Talairach or Montreal Neurological Institute (MNI) convention. Data from eleven articles survived these criteria; seven of these studies reported a specific contrast between dynamic and static faces. Thus, data from all eleven studies were included in a domain-centered meta-analysis (i.e., dynamic faces) while data from seven studies were included in the contrast specific meta-analysis (i.e., dynamic versus static facial expressions; Table 1).

Meta-Analysis: Activation Likelihood Estimate

To create a probabilistic map of activation in the brain elicited by dynamic facial expressions of emotion using ALE, foci related to single contrasts of interest were chosen from the selected articles (Table 1). A total of 140 participants (77 females) took part in these studies. One study did not report the age of participants; the average age was 23.3 years (range 21–30 years) for the remaining studies. A single contrast was selected from every study; 141 foci were considered for the domain meta-analysis and 83 foci were considered for the specific contrast meta-analysis.

To prepare foci for analysis, MNI coordinates were transformed into Talairach space (Talairach and Tournoux 1988) using GingerALE (Lancaster et al. 2007). A random effects ALE approach was used with FWHM ranging 9.24–10.36 mm (median = 9.86 mm; Eickhoff et al. 2009) for the domain meta-analysis, and FWHM ranging 9.43–10.36 mm (median = 9.86 mm) for the contrast-specific meta-analysis. A voxel-wise likelihood of activation was calculated using 5,000 permutations. Multiple comparisons were corrected using false discovery rate (FDR) q = 0.05.
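For readers unfamiliar with FDR correction, the sketch below shows the standard Benjamini-Hochberg step-up procedure at q = 0.05. It is offered as a generic illustration: the text does not state which FDR variant was applied in GingerALE, and the p-values in the example are invented.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Return a list of booleans marking tests rejected at FDR level q.

    Step-up rule: find the largest rank k such that p_(k) <= q * k / m,
    then reject the k smallest p-values.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    max_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= q * rank / m:
            max_rank = rank
    rejected = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= max_rank:
            rejected[idx] = True
    return rejected


# Invented voxel-wise p-values, for illustration only.
example_p = [0.001, 0.008, 0.039, 0.041, 0.2]
print(benjamini_hochberg(example_p, q=0.05))
```

Because the procedure is step-up, a p-value that misses its own rank threshold can still be rejected when a larger p-value satisfies the threshold at a higher rank.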

Results

The meta-analyses demonstrated a set of areas associated with dynamic faces; significant clusters resulting from these quantitative analyses are presented in Table 3. Consistent with expectations, concordant probability values were observed across studies in the fusiform, middle temporal and superior temporal gyri and in the amygdalae (Fig. 5). The right fusiform gyrus (BA 37) demonstrated the highest likelihood of being detected in both meta-analyses. Left amygdala was significantly concordant for the contrast-specific meta-analysis and bilateral amygdala concordance was observed for the domain meta-analysis, albeit with a higher ALE value for the left hemisphere (Fig. 5). Clusters centred on the middle temporal gyrus spanning into the superior temporal sulcus elicited stronger ALE values in the right hemisphere. Other regions that showed significant concordance across studies included the inferior frontal gyri (BA 9) and precentral gyri (BA 6). Results are discussed in more detail in the general discussion.

General Discussion

With three experiments we examined the neural correlates of dynamic versus static happy and angry facial expressions. Dynamic faces elicited more activity than static faces in middle temporal gyri (Experiment 1) and superior temporal sulci (STS; Experiment 1 and 2). All contrasts with baseline in all experiments elicited activity in the fusiform gyri, and in these contrasts the amygdalae were more active for dynamic faces (Experiment 1 and 2). Although the fusiform gyri and amygdalae were not significantly different in contrasts between dynamic and static faces, we discuss their contributions, as they were significantly concordant among studies in the meta-analyses as well as in contrasts with baseline. Results from the quantitative meta-analyses are based on concordance across studies using dynamic faces, particularly focusing on the contrast between dynamic and static faces. The meta-analyses results show concordant activity in areas related to the visual processing of faces (e.g., fusiform gyri), motion (e.g., posterior middle temporal gyri), facial motion interpretation (e.g., STS) and facial emotion (e.g., amygdalae). We discuss the implication of these regions in facial expressions based on mode of presentation (e.g., static or dynamic).

The fusiform gyri are implicated in processing the configuration of features that make up a face; these have been called the invariant aspects of the face (Allison et al. 2000; Adolphs 2002). In our data we see fusiform activity and similar signal change (Fig. 4a) in all contrasts with baselines for all three experiments, regardless of face type (i.e., static or dynamic; Fig. 2). This is in agreement with the fusiform gyri being core visual regions for processing faces (e.g., McCarthy et al. 1997; Gobbini and Haxby 2007). More activity was seen in the right fusiform for both static and dynamic presentation of faces, consistent with face processing being more right lateralized (Rhodes 1993; Puce et al. 1995; Le Grand et al. 2003). The meta-analyses also showed the fusiform gyri were significantly concordant across studies with higher activation likelihood values in the right hemisphere.

Middle temporal gyri, specifically V5/MT, are sensitive to dynamic visual stimuli (Maunsell and Van Essen 1983; Zeki et al. 1991; Movshon et al. 1985). In agreement with this, our results showed activity in posterior middle temporal cortex in most contrasts with baseline including the comparisons with dynamic flowers (Fig. 2). Direct contrasts between dynamic and static faces, in Experiments 1 and 2, showed that dynamic faces elicited significantly more activity in this region (Fig. 3). Higher signal change was also observed for dynamic compared to static faces in both hemispheres (Fig. 4b). As expected, the meta-analyses indicated that there was significant concordance across studies in posterior middle temporal activity for dynamic faces (Fig. 5; Table 3). Previous meta-analyses on static facial emotion show middle temporal region involvement in processing facial emotion (Fusar-Poli et al. 2009; Phan et al. 2002); however, its role is mainly discussed in papers studying dynamic facial movements (e.g., LaBar et al. 2003). We agree with the hypothesis that posterior temporal regions may process feature motion in dynamic facial expressions and here we quantitatively show that this region has a significant likelihood of being detected.

The superior temporal sulci are associated with the visual processing of social signals (Pelphrey et al. 2004; Saxe et al. 2004), such as the variant facial aspects that can generate emotional expressions on a face (Allison et al. 2000; Adolphs 2002). We observed STS activity in response to dynamic facial expressions when compared to baseline in Experiments 1 and 2 (Fig. 2). A significant difference was also observed in a contrast between dynamic and static faces in Experiment 2 (Fig. 3), and, as with posterior middle temporal activity, dynamic presentation showed higher signal change than static presentation (Fig. 4c). The contrast-specific meta-analysis demonstrated significant concordance among studies in the right STS, which may be associated with the right hemisphere dominance for processing faces (Rhodes 1993; Puce et al. 1995; Le Grand et al. 2003). Meta-analyses of static facial expressions do not show contributions from superior temporal regions (Fusar-Poli et al. 2009); however, as the middle and superior temporal areas are topographically adjacent, it may be difficult to categorize this activity (e.g., large clusters centered on the middle temporal gyrus may also encompass parts of the superior temporal cortices). Overall, STS is linked to social cognition, whereas V5/MT is linked to perceiving motion (LaBar et al. 2003); given their close proximity, the STS may be responsible for interpreting subtle movements as social cues.

Amygdalae activity is elicited by both positive and negative emotional information (e.g., Britton et al. 2006), with numerous studies reporting amygdalae activation to emotional faces (Ishai et al. 2002; Leibenluft et al. 2004; Taylor et al. 2009). In Experiments 1 and 2, we showed higher amygdalae activity for dynamic faces than baseline (Fig. 2) and greater signal change in the amygdalae to dynamic than static faces (Fig. 4d). The meta-analysis results showed that the amygdalae, primarily in the left hemisphere, were significantly detected in contrasts of processing dynamic faces. Previous research identified the amygdalae as key brain regions responsible for processing emotional information in a face (Adolphs 2002; Gobbini and Haxby 2007; Palermo and Rhodes 2007; Phan et al. 2002). The meta-analyses extend this conclusion by showing that amygdala activation, particularly in the left hemisphere, is more readily detected with dynamic than static faces.

Additional regions that showed significant concordance among studies in the domain-centred meta-analysis included the middle and inferior occipital gyri (BA 18/19), the inferior frontal gyri (BA 9), associated with cognitive processing, and the precentral gyri (BA 6), associated with eye movements. The middle and inferior occipital gyri are part of the visual pathways (e.g., Ungerleider and Mishkin 1982) and are linked to core visual processing of faces (e.g., Haxby et al. 2000; Gobbini and Haxby 2007). Similarly, the precentral gyri (BA 6) are well-established regions associated with eye movements (Corbetta et al. 1998; Wolfe 2007). It is likely that dynamic presentation of facial expressions, as in the studies included in the meta-analyses, elicited more eye movements than the comparison conditions, suggesting that dynamic facial expressions require more visual scanning. Lastly, the inferior frontal gyri (BA 9) are associated with cognitive processes such as maintaining and manipulating externally generated information (Petrides 1996; Christoff and Gabrieli 2000). Thus, activity in the inferior frontal gyri, seen in the meta-analyses, suggests that dynamic faces require more top-down cognitive control, perhaps because dynamic faces generate more information to be coordinated.

Are Dynamic Faces Advantageous in Neuroimaging Studies?

This returns us to the question of whether motion in facial expressions improves detection of regions associated with processing emotional expressions. Neuroimaging studies of dynamic expressions claim that these stimuli have increased ecological validity (e.g., Robins et al. 2009; Trautmann et al. 2009). Signal change from task-relevant regions showed increased activity for the amygdalae and the superior temporal gyri, which are associated with processing of emotional and social information. However, regions associated with core visual processing of faces, such as the lingual gyri and the fusiform gyri, did not show as large a difference in signal change between static and dynamic faces. Also, increased activity in prefrontal regions, as demonstrated by the meta-analyses and Experiments 1 and 2, suggests that dynamic faces require more cognitive processing. These results are congruent with behavioural evidence showing that dynamic facial expressions elicit an exaggerated perception of the displayed emotion, as participants rated dynamic happy faces as happier than the same happy faces presented statically (Yoshikawa and Sato 2006; Uono et al. 2010). This converging evidence suggests that the increased ecological validity could present itself as higher activity in extended face processing regions.

Certainly for the core visual areas, the response differences were small, suggesting that for studies interested in only the core areas, either type of stimulus would suffice. Thus, the use of dynamic stimuli, which are more difficult to create and use reliably, is not necessary for basic face processing studies. For activation in more extended regions, dynamic faces show a significant advantage, and they may be beneficial in studies where the activation of the extended face processing networks, involved in emotion and cognitive interpretations of facial emotions, is critical. For instance, research shows that social cognition is impaired in individuals with autism; they have difficulty recognizing faces (e.g., Pelphrey et al. 2002) and identifying emotions or mental states (Rutherford et al. 2002). Behavioural research using dynamic stimuli challenges these findings by showing that children with autism are as successful as typically developing children in identifying emotion (Gepner et al. 2001; Back et al. 2007). In the only fMRI study using dynamic facial expressions with adults with autism, Pelphrey et al. (2007) observed more activity in the right superior temporal gyrus and amygdala in controls compared to adults with autism, but no differences in regions associated with motion (e.g., V5/MT); behavioural data did not show significant differences between the groups. Thus, as dynamic presentations of faces elicit an exaggerated perception of emotional states (Yoshikawa and Sato 2006; Uono et al. 2010) and similar performance is observed for children with and without autism (Gepner et al. 2001; Back et al. 2007), the study of social cognition in autism is one example in which dynamic stimuli may offer an advantage in understanding brain-behaviour responses.

The present paper focuses primarily on the brain regions that underlie the processing of dynamic versus static facial expressions, although we also present data on dynamic facial expressions alone. In our experiments, we only considered happy and angry emotions. This, however, was typical of the studies available for the meta-analysis; few included more than two emotions. As only a few studies reported coordinates suitable for ALE, we had to combine foci from different dynamic facial expressions (e.g., fear, anger, happiness). With more research on dynamic facial expressions, it would be interesting for future meta-analyses to examine specific expressions separately. One criticism of the ALE coordinate-based meta-analysis technique concerns whether results from studies of differing methodology (e.g., stimulus presentation, statistical analyses) are comparable. It is rare to find imaging studies with identical designs; however, many imaging studies target the same domain. When making quantitative comparisons across studies in the domain of interest, underlying methodological differences are only partly controlled by choosing, for instance, whole-brain random-effects analyses. In an effort to account for more of these differences, we present meta-analyses for both dynamic faces and contrast-specific (dynamic > static) results; the latter accounts for the contrasted control condition. Although the limitations of the ALE technique have been discussed in the literature (e.g., Ellison-Wright et al. 2008; Christ et al. 2009; Di Martino et al. 2009), ALE is nonetheless a meta-analytical methodology that has a number of advantages over traditional meta-analytic methods. ALE is not merely a descriptive technique; it allows for the quantification of locations of common activations by using foci generated by independent research groups using common conditions but different methodologies. Furthermore, it minimizes subjectivity in generating conclusions, because once the contrast coordinates have been collected the computational steps are automated. Thus, ALE provides an important means of objectively summarising data in a specific neuroimaging field, allowing a more solid basis for future studies (as argued by Laird et al. 2009; Kober et al. 2008).

In summary, the results of our three experiments are consistent with previous literature. The meta-analyses quantified this consistency, demonstrating significant concordance among functional neuroimaging studies of dynamic facial expressions. The results from both approaches converge on a set of regions that includes the fusiform gyri, middle temporal gyri (V5/MT), STS and amygdalae, which respectively have been shown to process visual features, motion, and social and emotional aspects associated with faces. Areas associated with social and emotional aspects, in particular, were highly detectable among studies of dynamic facial expressions, which in turn demonstrates that dynamic stimuli have a statistical power advantage in the study of these processes. Future research using dynamic faces can build on this work in adults to investigate how these areas are implicated in developmental and clinical populations.

Notes

Development of the MacBrain Face Stimulus Set was overseen by Nim Tottenham and supported by the John D. and Catherine T. MacArthur Foundation Research Network on Early Experience and Brain Development. Please contact Nim Tottenham at tott0006@tc.umn.edu for more information concerning the stimulus set.

References

Adolphs R (2002) Neural systems for recognizing emotion. Curr Opin Neurobiol 12:169–177

Adolphs R (2003) Cognitive neuroscience of human social behaviour. Nat Rev Neurosci 4:165–178

Allison T, Puce A, McCarthy G (2000) Social perception from visual cues: role of the STS region. Trends Cogn Sci 4:267–278

Back E, Ropar D, Mitchell P (2007) Do the eyes have it? Inferring mental states from animated faces in autism. Child Dev 78:397–411

Biele C, Grabowska A (2006) Sex differences in perception of emotion intensity in dynamic and static facial expressions. Exp Brain Res 171:1–6

Britton JC, Phan KL, Taylor SF, Welsh RC, Berridge KC, Liberzon I (2006) Neural correlates of social and nonsocial emotions: an fMRI study. Neuroimage 31:397–409

Carter EJ, Pelphrey KA (2008) Friend or foe? Brain systems involved in the perception of dynamic signals of menacing and friendly social approaches. Soc Neurosci 3:151–163

Christ SE, Van Essen DC, Watson JM, Brubaker LE, McDermott KB (2009) The contributions of prefrontal cortex and executive control to deception: evidence from activation likelihood estimate meta-analyses. Cereb Cortex 19:1557–1566

Christoff K, Gabrieli JDE (2000) The frontopolar cortex and human cognition: evidence for a rostrocaudal hierarchical organization within the human prefrontal cortex. Psychobiology 28:168–186

Corbetta M, Akbudak E, Conturo TE, Snyder AZ, Ollinger JM, Drury HA, Linenweber MR, Petersen SE, Raichle ME, Van Essen DC, Shulman GL (1998) A common network of functional areas for attention and eye movements. Neuron 21:761–773

Cox RW (1996) AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res 29:162–173

Di Martino A, Ross K, Uddin LQ, Sklar AB, Castellanos FX, Milham MP (2009) Functional brain correlates of social and nonsocial processes in autism spectrum disorders: an activation likelihood estimation meta-analysis. Biol Psychiatry 65:63–74

Eickhoff SB, Laird AR, Grefkes C, Wang LE, Zilles K, Fox PT (2009) Coordinate-based activation likelihood estimation meta-analysis of neuroimaging data: a random-effects approach based on empirical estimates of spatial uncertainty. Hum Brain Mapp 30:2907–2926

Ekman P, Friesen WV (1976) Pictures of facial affect. Consulting Psychologists Press, Palo Alto, CA

Ellison-Wright I, Glahn DC, Laird AR, Thelen SM, Bullmore E (2008) The anatomy of first-episode and chronic schizophrenia: an anatomical likelihood estimation meta-analysis. Am J Psychiatry 165:1015–1023

Frijda NH (1953) The understanding of facial expression of emotion. Acta Psychol 9:294–362

Fusar-Poli P, Placentino A, Carletti F, Landi P, Allen P, Surguladze S, Benedetti F, Abbamonte M, Gasparotti R, Barale F, Perez J, McGuire P, Politi P (2009) Functional atlas of emotional faces processing: a voxel-based meta-analysis of 105 functional magnetic resonance imaging studies. J Psychiatry Neurosci 34:418–432

Gaillard WD, Grandin CB, Xu B (2001) Developmental aspects of pediatric fMRI: considerations for image acquisition, analysis, and interpretation. Neuroimage 13:239–249

Gepner B, Deruelle C, Grynfeltt S (2001) Motion and emotion: a novel approach to the study of face processing by young autistic children. J Autism Dev Disord 31:37–45

Gobbini MI, Haxby JV (2007) Neural systems for recognition of familiar faces. Neuropsychologia 45:32–41

Grosbras MH, Paus T (2006) Brain networks involved in viewing angry hands or faces. Cereb Cortex 16:1087–1096

Harwood NK, Hall LJ, Shinkfield AJ (1999) Recognition of facial emotional expressions from moving and static displays by individuals with mental retardation. Am J Ment Retard 104:270–278

Haxby JV, Hoffman EA, Gobbini MI (2000) The distributed human neural system for face perception. Trends Cogn Sci 4:223–233

Hurlemann R, Rehme AK, Diessel M, Kukolja J, Maier W, Walter H, Cohen MX (2008) Segregating intra-amygdalar responses to dynamic facial emotion with cytoarchitectonic maximum probability maps. J Neurosci Methods 172:13–20

Ishai A, Haxby JV, Ungerleider LG (2002) Visual imagery of famous faces: effects of memory and attention revealed by fMRI. Neuroimage 17:1729–1741

Kilts CD, Egan G, Gideon DA, Ely TD, Hoffman JM (2003) Dissociable neural pathways are involved in the recognition of emotion in static and dynamic facial expressions. Neuroimage 18:156–168

Kober H, Barrett LF, Joseph J, Bliss-Moreau E, Lindquist K, Wager TD (2008) Functional grouping and cortical-subcortical interactions in emotion: a meta-analysis of neuroimaging studies. Neuroimage 42:998–1031

Kozel NJ, Gitter GA (1968) Perception of emotion: differences in mode of presentation, sex of perceiver, and race of expressor. CRC Report 18:1–61

LaBar KS, Crupain MJ, Voyvodic JT, McCarthy G (2003) Dynamic perception of facial affect and identity in the human brain. Cereb Cortex 13:1023–1033

Laird AR, Fox PM, Price CJ, Glahn DC, Uecker AM, Lancaster JL, Turkeltaub PE, Kochunov P, Fox PT (2005) ALE meta-analysis: controlling the false discovery rate and performing statistical contrasts. Hum Brain Mapp 25:155–164

Laird AR, Lancaster JL, Fox PT (2009) Lost in localization? The focus is meta-analysis. Neuroimage 48:18–20

Lancaster JL, Tordesillas-Gutierrez D, Martinez M, Salinas F, Evans A, Zilles K, Mazziotta JC, Fox PT (2007) Bias between MNI and Talairach coordinates analyzed using the ICBM-152 brain template. Hum Brain Mapp 28:1194–1205

Lanyon LJ, Giaschi D, Young SA, Fitzpatrick K, Diao L, Bjornson BH, Barton JJ (2009) Combined functional MRI and diffusion tensor imaging analysis of visual motion pathways. J Neuroophthalmol 29:96–103

Le Grand R, Mondloch CJ, Maurer D, Brent HP (2003) Expert face processing requires visual input to the right hemisphere during infancy. Nat Neurosci 6:1108–1112

Lee LC, Andrews TJ, Johnson SJ, Woods W, Gouws A, Green GGR, Young AW (2010) Neural responses to rigidly moving faces displaying shifts in social attention investigated with fMRI and MEG. Neuropsychologia 48:447–490

Leibenluft E, Gobbini MI, Harrison T, Haxby JV (2004) Mothers’ neural activation in response to pictures of their children and other children. Biol Psychiatry 56:225–232

Luna B, Velanova K, Geier CF (2010) Methodological approaches in developmental neuroimaging studies. Hum Brain Mapp 31:863–871

Maunsell JH, Van Essen DC (1983) Functional properties of neurons in middle temporal visual area of the macaque monkey. I. Selectivity for stimulus direction, speed, and orientation. J Neurophysiol 49:1127–1147

McCarthy G, Puce A, Gore JC, Allison T (1997) Face-specific processing in the human fusiform gyrus. J Cogn Neurosci 9:605–610

Morris JS, Friston KJ, Buchel C, Frith CD, Young AW, Calder AJ, Dolan RJ (1998) A neuromodulatory role for the human amygdala in processing emotional facial expressions. Brain 121(Pt 1):47–57

Morris JS, Ohman A, Dolan RJ (1999) A subcortical pathway to the right amygdala mediating “unseen” fear. Proc Natl Acad Sci USA 96:1680–1685

Movshon JA, Adelson EH, Gizzi MS, Newsome WT (1985) The analysis of moving visual patterns. In: Chagas C, Gattass R, Gross C (eds) Pattern recognition mechanisms. Pontificiae Academiae Scientiarum Scripta Varia 54, pp 117–151

Palermo R, Rhodes G (2007) Are you always on my mind? A review of how face perception and attention interact. Neuropsychologia 45:75–92

Pelphrey KA, Sasson NJ, Reznick S, Paul G, Goldman BD, Piven J (2002) Visual scanning of faces in autism. J Autism Dev Disord 32:249–261

Pelphrey KA, Morris JP, McCarthy G (2004) Grasping the intentions of others: the perceived intentionality of an action influences activity in the superior temporal sulcus during social perception. J Cogn Neurosci 16:1706–1716

Pelphrey KA, Morris JP, McCarthy G, Labar KS (2007) Perception of dynamic changes in facial affect and identity in autism. Soc Cogn Affect Neurosci 2:140–149

Petrides M (1996) Lateral frontal cortical contribution to memory. Neurosciences 8:57–63

Phan KL, Wager T, Taylor SF, Liberzon I (2002) Functional neuroanatomy of emotion: a meta-analysis of emotion activation studies in PET and fMRI. Neuroimage 16:331–348

Puce A, Allison T, Gore JC, McCarthy G (1995) Face-sensitive regions in human extrastriate cortex studied by functional MRI. J Neurophysiol 74:1192–1199

Rhodes G (1993) Configural coding, expertise, and the right hemisphere advantage for face recognition. Brain Cogn 22:19–41

Robins DL, Hunyadi E, Schultz RT (2009) Superior temporal activation in response to dynamic audio-visual emotional cues. Brain Cogn 69:269–278

Rutherford MD, Baron-Cohen S, Wheelwright S (2002) Reading the mind in the voice: a study with normal adults and adults with asperger syndrome and high functioning autism. J Autism Dev Disord 32:189–194

Sato W, Kochiyama T, Yoshikawa S, Naito E, Matsumura M (2004) Enhanced neural activity in response to dynamic facial expressions of emotion: an fMRI study. Cogn Brain Res 20:81–91

Saxe R, Xiao DK, Kovacs G, Perrett DI, Kanwisher N (2004) A region of right posterior superior temporal sulcus responds to observed intentional actions. Neuropsychologia 42:1435–1446

Schultz J, Pilz KS (2009) Natural facial motion enhances cortical responses to faces. Exp Brain Res 194:465–475

Talairach J, Tournoux P (1988) Co-planar stereotactic atlas of the human brain. Thieme, New York

Taylor MJ, Arsalidou M, Bayless SJ, Morris D, Evans JW, Barbeau EJ (2009) Neural correlates of personally familiar faces: parents, partner and own faces. Hum Brain Mapp 30:2008–2020

Trautmann SA, Fehr T, Herrmann M (2009) Emotions in motion: dynamic compared to static facial expressions of disgust and happiness reveal more widespread emotion-specific activations. Brain Res 1284:100–115

Turkeltaub PE, Eden GF, Jones KM, Zeffiro TA (2002) Meta-analysis of the functional neuroanatomy of single-word reading: method and validation. Neuroimage 16:765–780

Ungerleider LG, Mishkin M (1982) Two cortical visual systems. In: Ingle DJ, Goodale MA, Mansfield RJW (eds) Analysis of visual behavior. MIT Press, Cambridge, MA, pp 549–586

Uono S, Sato W, Toichi M (2010) Brief report: representational momentum for dynamic facial expressions in pervasive developmental disorder. J Autism Dev Disord 40:371–377

Vuilleumier P, Pourtois G (2007) Distributed and interactive brain mechanisms during emotion face perception: evidence from functional neuroimaging. Neuropsychologia 45:174–194

Vuilleumier P, Schwartz S (2001) Emotional facial expressions capture attention. Neurology 56:153–158

Wehrle T, Kaiser S, Schmidt S, Scherer KR (2000) Studying the dynamics of emotional expression using synthesized facial muscle movements. J Pers Soc Psychol 78:105–119

Wolfe JM (2007) Guided search 4.0: current progress with a model of visual search. In: Gray W (ed) Integrated models of cognitive systems. Oxford, New York, pp 99–119

Xiong Z, Wu X, Cheng S, Hua J (2003) Lossy-to-lossless compression of medical volumetric data using three-dimensional integer wavelet transforms. IEEE Trans Med Imaging 22:459–470

Yoshikawa S, Sato W (2006) Enhanced perceptual, emotional, and motor processing in response to dynamic facial expressions of emotion. Jpn Psychol Res 48:213–222

Zeki S, Watson JD, Lueck CJ, Friston KJ, Kennard C, Frackowiak RS (1991) A direct demonstration of functional specialization in human visual cortex. J Neurosci 11:641–649

Acknowledgments

We thank Dr. Sarah Bayless for creating the dynamic stimuli. This work was supported by a CIHR grant to MJT (MOP-81161).


Cite this article

Arsalidou, M., Morris, D. & Taylor, M.J. Converging Evidence for the Advantage of Dynamic Facial Expressions. Brain Topogr 24, 149–163 (2011). https://doi.org/10.1007/s10548-011-0171-4
