Abstract

Despite the high prevalence of substance use disorders among juvenile offenders, most do not receive services. System-level process improvement plans to address unmet service needs can be optimized by combining data-driven decisions and facilitated meetings with behavioral health stakeholders. This paper operationalizes and analyzes the level of specified complexity among process improvement plans evident within 36 juvenile probation and drug courts across 7 states. To inform more effective implementation strategies, this analysis identifies and prioritizes promising courses of agency enhancement toward addressing unmet substance use needs.

Substance using youth entering the juvenile justice (JJ) system often face an array of family impediments, delinquency, and social troubles (Belenko and Dembo 2003). Justice agencies can serve as a place to identify individuals in need of substance use (SU) services and link them to appropriate care (Chandler et al. 2009; Taxman et al. 2009). Linkage to care, however, is complex in that it involves coordination across multiple service sectors and agencies, many of which experience significant resource and staffing shortages (Belenko et al. 2004; Young et al. 2007). Thus, efforts aimed at incorporating evidence-based practices (EBPs) into such settings require careful consideration and planning (Aarons et al. 2011).

Including key stakeholders from multiple organizational levels, structures, and agencies is critically important both in determining the needs of substance using justice-involved youth who are under community supervision and in identifying available treatment options for them. Because the mission, goals, structural characteristics, and resources available to each agency may vary (e.g., differing emphasis on public safety versus child rehabilitation; Henderson and Taxman 2009; Taxman et al. 2009), it is important to be mindful of these differences when multiple service agencies are working for the common good of youth (Chorpita et al. 2008). In this regard, the specific goals and improvement plans agreed upon must be modified to fit within the contextual constraints unique to that particular service system (and the agencies enacting the plan) that may impact agency adoption, staff training, and implementation (Aarons et al. 2011; Damschroder et al. 2009).

This paper describes an extensive decision-making and progress-monitoring process that can be utilized by policy makers and administrators to improve the likelihood of successful implementation, including (a) considering what an ideal service flow would look like, (b) getting the right players at the table (the full range of inter- and intra-agency stakeholders), (c) determining where systems have the greatest needs, (d) setting doable goals that match needs, (e) making sure goals are clear and have measurable progress indicators, (f) measuring progress, and (g) changing processes if necessary.

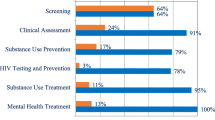

Best practices for identifying and addressing SU can be conceptualized along what is known as the Behavioral Health Services Cascade (hereafter, “the Cascade”). Based on HIV care models (Gardner et al. 2011; Mugavero et al. 2013), the Cascade provides a visual contrast between an ideal and actual sequence of SU services available to community-supervised youth in JJ settings (Belenko et al. 2017; Fig. 1 represents hypothetical data for the Cascade), beginning with universal screening for these youth when entering JJ and ending with engagement of services for those in need of treatment. For youth who score above a critical threshold on an evidence-based screener (identifying a potential need for service), the next step would be to administer a full clinical assessment (typically conducted by an external entity, such as a behavioral health provider). Confirmed need, as informed by evidence-based screening and assessment services, would be followed by referral to an accredited behavioral health (BH) provider with services matched to the presenting need, concluding with continuation in wraparound services and a lower level of care (American Society of Addiction Medicine 2013).

Fig. 1 Hypothetical retention in the Cascade as youth transition across service systems. Copyright from Knight et al. (2016)
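The retention pattern that the Cascade depicts can be sketched in a few lines of code. The example below is only an illustration under stated assumptions: the step names, the record layout, and the ten youth records are hypothetical and are not drawn from JJ-TRIALS data. It counts how many youth remain at each step and flags the step where the most youth are lost.

```python
# Hypothetical Cascade steps, listed in service order (names are assumptions).
CASCADE_STEPS = ["screened", "assessed", "referred", "initiated", "engaged"]


def cascade_retention(records):
    """Return (step, count, share of cohort) for each Cascade step.

    A youth counts toward a step only if every earlier step was also reached.
    """
    total = len(records)
    remaining = list(records)
    rows = []
    for step in CASCADE_STEPS:
        remaining = [r for r in remaining if r.get(step, False)]
        share = len(remaining) / total if total else 0.0
        rows.append((step, len(remaining), share))
    return rows


def largest_drop(rows, cohort_size):
    """Return (step, youth lost) for the step with the biggest loss."""
    previous = cohort_size
    worst_step, worst_loss = None, -1
    for step, count, _share in rows:
        loss = previous - count
        if loss > worst_loss:
            worst_step, worst_loss = step, loss
        previous = count
    return worst_step, worst_loss


def reached(n_steps):
    """Build a hypothetical record for a youth who reached the first n steps."""
    return {step: True for step in CASCADE_STEPS[:n_steps]}


# Ten hypothetical youth: 2 never screened, 2 stop after screening, and so on.
records = (
    [reached(0)] * 2 + [reached(1)] * 2 + [reached(2)] * 3
    + [reached(3)] + [reached(4)] + [reached(5)]
)

rows = cascade_retention(records)
for step, count, share in rows:
    print(f"{step:<10} {count:>3} {share:.0%}")
print("largest drop:", largest_drop(rows, len(records)))
```

In this made-up cohort the largest loss occurs at referral, which is the kind of gap a site feedback report would bring to a workgroup's attention.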

Thus, improving the identification of SU problems among JJ-involved community-supervised youth and linking them to appropriate services entails complexity along multiple dimensions. First, improving best practices involves delivery of interrelated services across a continuum (i.e., the Cascade). Second, youths’ movement through the Cascade requires coordination and collaboration across multiple agencies (JJ and BH). Third, because each system is unique, allowing flexibility in improvement targets is imperative. For instance, requiring all systems to improve screening practices may not be appropriate for systems that have a robust screening protocol in place. Similarly, a single EBP may not be the best fit for all systems. Thus, allowing flexibility in the strategies selected to address a goal is also important in some contexts. This lack of standardization means more customization at the site level, which likely facilitates adoption and implementation (Aarons et al. 2012), but results in challenges when comparing process improvement activities across sites. To date, studies have examined the adoption of single EBPs (Chamberlain et al. 2011, 2012; Glisson et al. 2010; Barwick et al. 2009), a specific set of best practices (Atkins et al. 2008; Brooks et al. 2008), and generic best practices (Gustafson et al. 2013) within social service organizations. However, few have explored the challenges of implementing a set of interrelated EBPs across multiple organizational settings with different aims, cultures, and resources.

Process Improvement Planning

The Exploration, Preparation, Implementation, and Sustainment (EPIS) framework (Aarons et al. 2011) explicitly identifies the importance of adaptations that improve the fit of an evidence-based practice or treatment (e.g., characteristics of the intervention or how it is implemented) for practice adoption in a specific setting. Accordingly, exploration and preparation are the first two essential phases of practice adoption. Exploration involves identifying inner (i.e., organizational) and outer (i.e., system-level) contextual factors that set the stage for change efforts. These activities not only include assessment of existing services and practices, but also evaluation of needs, resources, and potential barriers. The Preparation phase follows the Exploration phase, building upon lessons learned and utilizing that knowledge to develop a plan for change that fits within the context of the service systems, agencies, organizations, and stakeholders involved (Aarons et al. 2012). A key element of exploration and preparation activities involves goal identification and improvement planning. Goals help to focus team efforts toward a common target, whereas improvement plans (or steps) describe needed actions and specify benchmarks for measuring progress (Horan Fisher et al. 2018). In system-wide process improvement efforts (as is the case when improving SU services for JJ youth; Becan et al. 2018), exploration and preparation activities will be most successful if accomplished through an interagency team that involves individuals from JJ and BH agencies and includes representation from multiple levels within each agency (i.e., leadership as well as line staff such as probation officers and counselors). A benefit of such diverse workgroup membership is that multiple perspectives can be considered (Aarons et al. 2014); a challenge is that without clear communication, the different perspectives and experiences espoused by workgroup participants could result in disparate priorities, making goal selection and joint planning difficult at best (Aarons et al. 2012; Hurlburt et al. 2014; Leukefeld et al. 2017; Pearson et al. 2014; Prendergast et al. 2017). Thus, strategies are needed to support multiple stakeholder participation and collaboration during goal selection.

The Juvenile Justice-Translational Research on Interventions for Adolescents in the Legal System (JJ-TRIALS), a multi-site research study aimed at improving uptake of SU services for justice-involved youth (Knight et al. 2016), addresses challenges inherent in systems-level change processes by implementing three goal selection support strategies: (1) use of an overarching framework to guide decision-making (i.e., the Cascade), (2) data-driven exploration to identify target areas of need (e.g., site feedback report), and (3) researcher-led facilitation of workgroup goal selection and improvement planning. During the Exploration phase (Becan et al. 2018), sites are introduced to the Cascade as a framework for measuring site performance and identifying unmet needs of clients in their care. Youth service records are then plotted along the Cascade to visually illustrate gaps in service provision or areas of pervasive unmet need (i.e., services where youth are likely to “fall through the cracks”). Trained facilitators assist interagency workgroups in interpreting their data, developing a shared understanding of the implications, and identifying opportunities for improvement. Facilitators also guide the selection of goals and help the site transition to the Preparation phase through specification of steps required for goal attainment.

While primary outcomes of JJ-TRIALS focus on achievement (and sustainment) of goals, it is important to understand the nature of selected goals and steps (their focus, specificity, and complexity) before examining how they relate to the achievement of outcomes. The current study uses two strategies for operationalizing process improvement goals. The first involves establishing quality indicators (QIs) that can be used to facilitate improvement in organizational systems (Kötter et al. 2012) and measure the degree to which goals and steps align with best practices in a particular area (e.g., use of an evidence-based instrument to screen for SU; see Knight et al. 2019 for more information). Given the flexibility in targeted goals (i.e., targeting any domain along the Cascade; variability in which EBP is used to address a goal), using QIs enables comparisons of plans to be conducted across systems. The second strategy involves operationalizing goal complexity. The Consolidated Framework for Implementation Research (CFIR; Damschroder et al. 2009) summarizes and provides a typology of factors that influence implementation success, including the complexity of the innovation. Complexity factors include the number of steps needed to reach a goal (Greenhalgh et al. 2004; Grol et al. 2007) and how disruptive the changes will be to the organizations involved. Complexity generally increases when a larger number of units (e.g., individuals, departments, clinics) are impacted by the new practice (Kochevar and Yano 2006). Therefore, it is reasonable to expect a high degree of complexity when multiple service systems and agencies are engaging collaboratively in systems-level change.

The Current Study

The purpose of this paper is to apply a common language and approach to identify, distill, and compare process improvement plans (PIPs) within a large scale study, representing 36 multi-agency systems (sites). Documenting the specificity of goals that are developed jointly by multiple stakeholders can inform systems-level change planning within JJ environments and provide models for improving SU services in other multifaceted contexts. Three research questions are examined. First, are the strategies used in JJ-TRIALS effective in supporting goal selection and planning? Second, what target goals along the Cascade are selected by interagency workgroups, and how do plans map onto Cascade quality and data use indicators? Third, can PIPs that address different goals be meaningfully compared on measures of complexity? Exploring complexities inherent in these PIPs can shed light on potential implementation barriers and is a first step toward understanding how goal articulation relates to outcome achievement. Indeed, further clarity in specifying and reporting process improvement strategies can lead to more informed theories and frameworks of process change (Proctor et al. 2013).

Methods

Participants

Data reported herein were obtained as part of the JJ-TRIALS Cooperative Agreement, conducted in 7 US states across 6 research centers (RCs). Each RC recruited six JJ agencies and 1–2 BH partners per JJ agency, totaling 36 study sites. Each JJ agency and its BH partner(s) were considered a single site. State level JJ departments assisted RCs in identifying counties that matched pre-specified site selection criteria (see Knight et al. 2016 for more information). Once potential county juvenile services departments were identified, the RC investigators contacted the Chief Probation Officer or Drug Court Judge at that county, described the overarching goals of the JJ-TRIALS Cooperative Agreement, and invited them to participate. Chiefs or Judges designated 1–2 site liaisons to help identify and invite 1–2 BH agencies to which JJ refers juveniles for substance use/abuse assessment and/or treatment (see Becan et al. 2018 for agency and staff recruitment details). All RCs had institutional review board approval from their local institution.

Following the orientation meetings, chiefs and site liaisons were asked to form an Interagency Workgroup (IAW) composed of JJ agency staff and partnering BH providers. A total of 36 workgroups composed of 258 individuals (199 JJ staff, 59 BH staff) were formed, intentionally representing diverse backgrounds and varying organizational roles (see Horan Fisher et al. 2018 for more information). The composition of the IAWs depended on the size and complexity of the agencies represented. IAWs at the time of goal selection comprised an average of 7 members (SD = 2.73 members, range 3–13 members), with a majority representing JJ departments (M = 5.53 members, SD = 2.41; BH partners, M = 1.74 members, SD = 1.21). Workgroup representation included an average of 3 agency leaders (2 for JJ, 1 for BH). BH agency representation among IAWs typically consisted of 1 agency (n = 22 IAWs), with 2 IAWs reporting an absence of BH representation at the goal selection support session. Workgroup representation among JJ agencies typically reflected line staff positions across sites [63.3% of the 199 JJ workgroup members, mostly probation officers (n = 83)], with 36.7% as JJ leadership [mostly supervisors (n = 47)]. In contrast, workgroup representation among BH agencies typically reflected leadership positions across sites [55.9% of the 59 BH workgroup members were BH agency leadership, mostly supervisors (n = 21)], with 44.1% as BH line staff [mostly counselors (n = 14)].

Procedure

During the project baseline period (see Knight et al. 2016), workgroups participated in a 3-h facilitated discussion to identify areas of unmet need for their community, select targeted goals, develop PIPs, and identify ways to measure progress (see Horan Fisher et al. 2018). This process was informed by data derived from two sources: (1) RC-based facilitation of a local needs assessment with the workgroup (a) to support visual depiction of the most common flow of youth in their community through the Cascade and (b) to identify service barriers in interagency communication, staff training, service delivery, use of EBPs, and service timing (Bowser et al. 2018), and (2) a review of service utilization data along the Cascade for youth in their community (Dennis et al. 2018). These two data sources were used by the corresponding research center to generate a site feedback report and served as a springboard for goal selection.

As informed by the site feedback report, workgroups were encouraged to select a goal that met specified criteria with three stipulations. First, the goal needed to address a substantial unmet need along the Cascade among youth served by the local probation department. Second, the goal should have an emphasis on interagency collaboration or transition between systems (from the JJ department to a BH treatment provider). Finally, the goal needed to be informed by SMART principles (Specific, Measurable, Attainable, Relevant and Time-bound; Lawlor and Hornyak 2012; Locke and Latham 1990; Morrison, 2010). These criteria were enacted to help limit diversification of PIPs to the 5 specified domains along the Cascade (screening, assessment, referral, treatment initiation, treatment engagement) while allowing for examination of progress toward goal completion using existing youth records data. By the conclusion of the goal selection process, each workgroup drafted a written PIP with identified goal steps and specified measures to evaluate progress toward goal completion.

Each workgroup was supported by an RC-based facilitator who held a Ph.D. or Master's degree in psychology or a related field and who had knowledge of the JJ system and of how to collect and analyze data. To ensure equivalency in knowledge and skills, RC facilitators engaged in two cooperative-wide trainings, prior to facilitated goal selection, specifically designed to teach techniques on using data-driven decision-making (DDDM) to inform goal selection; how to nurture the social dimensions of goal achievement, such as working to create an ethos of teamwork and shared responsibility; how to resolve conflicts that might emerge in the pursuit of particular objectives (including goal selection); and how to address other human facets of workplace and interagency collaboration. A large body of research in implementation science indicates that training alone is often insufficient at imparting complex skill sets (King-Sears 2001). For that reason, facilitators were provided a manual featuring step-by-step instructions on how to conduct the group discussion and use the activity worksheets to promote efficient and SMART goal selection. To support fidelity to the protocol, facilitators participated in intermittent web-conferences to discuss challenges and share successes and were required to complete a series of fidelity checklists as a way for RC principal investigators or other staff to identify opportunities to increase adherence. A majority of RC-based facilitators supporting the goal selection process facilitated workgroup activities during project baseline (75%; needs assessment: 64% facilitated, 11% observed; site feedback report: 25% facilitated, 47% observed). Using correlational analyses, no significant relationship was detected between facilitator involvement in pre-goal selection activities and the resulting complexity of goals.

Measures

PIPs developed during the baseline period were used as raw data for this study. Goals were coded discretely, at study conclusion, as targeting one of the five domains along the Cascade. Steps were coded for one or more of 56 indicators (41 quality, 15 data use). At least one representative from each of the six RCs served as a coder (n = 8). Coders reported familiarity with a vast majority of the sites (32 of 36) through either workgroup project facilitation (n = 4) or periodic post-goal selection site implementation check-in calls (n = 1).

Quality Indicators

Using three converging and complementary sources (1: Belenko et al. 2017; 2: Knight et al. 2016; 3: Knight et al. 2019), a common template with which to compare improvement plans across the 36 sites was developed, providing a means to code the degree to which PIP goals and steps incorporated quality indicators (QIs). The Cascade (Belenko et al. 2017) served as the first of the three sources and provided the overarching framework for coding steps as QIs. Definitions of “quality” were drawn from a review of best practices associated with each domain in the Cascade, as summarized by Knight et al. (2016; the second source). QIs for Screening, for example, included the use of a psychometrically sound screening instrument, administration within 30 days of JJ intake, and 2 or more sources of corroborating evidence (Committee on Substance Abuse 2011; National Institute on Alcohol Abuse and Alcoholism 2015; New York State Department of Health 1999, April). The specific verbiage incorporated in the template was drawn from a study of JJ staff attitudes toward specific SU best practices (Knight et al. 2019; the third source). Domains and indicators included Screening (11 QIs; see Table 1 indicators), Clinical Assessment (8 QIs), Referral (13 QIs), Treatment Initiation (7 QIs), and Treatment Engagement and Continuing Care (10 QIs).

Data Use Indicators

Because the JJ-TRIALS Cooperative Agreement encouraged site utilization of DDDM, many workgroups emphasized improvement and utilization of data in their PIPs. Indeed, using data as a source of feedback during initial pilot testing and revising plans to better fit within the organizational context leads to more robust and lasting change (Taylor et al. 2014). While there is a growing emphasis on the need to use data to inform decisions and agencies are encouraged to plan how they will define and measure outcomes (Orwin et al. 2012; Schneider and Simpson 2011; Young et al. 2006), guidance on how to do so is sparse. The degree to which agencies incorporate data collection and potential data management changes in their planning processes is unknown. As such, 3 data use indicators were added for each Cascade domain to address implementation and/or improvement in (1) case-management systems (to better capture youth-level data); (2) information sharing (across agencies/programs for better services, including non-database forms of communication, e.g., e-mail, fax); and (3) formal agreements (MOUs across agencies/programs for better practices or sharing of data, e.g., on how and what data or receipt of practices will be communicated between agencies).

Plan Complexity

The identified quality and data use indicators derived from the coded improvement plans were used to systematically and uniformly compare the complexity of each plan across the 36 sites. Plan Length (number of sequential sub-processes or steps for using or implementing an intervention; Kochevar and Yano 2006) was defined for this project as the number of indicators targeted within a PIP. Plans were also coded for complexity as measured by Plan Scope (number of Cascade domains targeted) and Primary Goal Domain (Cascade domain with the most targeted indicators).

The following two measures of plan complexity were largely informed by local knowledge of the workgroup at the time of goal selection. Indicator Target Integration (number of individuals, teams, or agencies targeted or influenced by the new practice; Grol et al. 2007; Kochevar and Yano 2006) was defined for this project as the number of indicators with proposed involvement of both JJ and BH partners. Indicator Radicalness (degree to which change would require a fundamental departure from standard agency practice, including structural, financial, and personnel changes; essentially, the magnitude of proposed transformation; Greenhalgh et al. 2004) was defined for this project as the number of indicators perceived to involve initiation of new practices (e.g., adoption of a new assessment tool), rather than an exclusive focus on improving consistency (e.g., ensuring that all JJ referrals who screened positive for substance use complete an assessment) or revising existing practices (e.g., revising the timing of assessment services). Lastly, Data Emphasis was defined as the number of indicators involving data management or information sharing across agencies.
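The complexity measures defined above are simple counts over a plan's coded indicators, which a short sketch can make concrete. The example is illustrative only: the `Indicator` record, its field names, and the five-indicator plan are hypothetical, and treating every data use indicator as contributing to Data Emphasis is a simplifying assumption rather than the study's coding rule.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Indicator:
    """One coded indicator in a PIP (hypothetical layout)."""
    domain: str         # Cascade domain, e.g. "referral"
    kind: str           # "quality" or "data_use"
    involves_jj: bool   # JJ partner involved in the proposed change
    involves_bh: bool   # BH partner involved in the proposed change
    new_practice: bool  # True if a new practice is initiated (radicalness)


def plan_complexity(indicators):
    """Summarize one plan using the count-based measures described above."""
    domain_counts = Counter(i.domain for i in indicators)
    primary = domain_counts.most_common(1)[0][0] if indicators else None
    return {
        "plan_length": len(indicators),
        "plan_scope": len(domain_counts),
        "primary_goal_domain": primary,
        "target_integration": sum(
            1 for i in indicators if i.involves_jj and i.involves_bh
        ),
        "radicalness": sum(1 for i in indicators if i.new_practice),
        # Assumption: all data use indicators count toward Data Emphasis.
        "data_emphasis": sum(1 for i in indicators if i.kind == "data_use"),
    }


# A hypothetical referral-focused plan with five coded indicators.
plan = [
    Indicator("screening", "quality", True, False, True),
    Indicator("referral", "quality", True, True, False),
    Indicator("referral", "quality", True, True, False),
    Indicator("referral", "data_use", True, True, True),
    Indicator("referral", "data_use", True, False, False),
]

summary = plan_complexity(plan)
for name, value in summary.items():
    print(f"{name}: {value}")
```

Dividing each count by `plan_length` would give proportions, which are easier to compare across plans of different lengths.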

Analytic Procedures

Analyses conducted at the end of the experimental period were completed in two stages: qualitative coding and quantitative analyses. First, each of the 36 PIPs was coded individually using the taxonomy of quality and data use indicators described above. Qualitative coding occurred in two rounds: (1) establishing interrater checks and finalizing codes and (2) cross-coding all improvement plans. Second, after the qualitative coding was complete, codes were quantified to examine PIP complexity.

Qualitative Coding Round 1: Establishing Interrater Checks and Finalizing Codes

PIPs were analyzed following the template analysis approach (Keith et al. 2017), in which combinations of deductive and inductive codes were generated from a priori expectations and preliminary inspections of the data. The template (or final constellation of codes) that resulted from these initial actions was then applied to all data to ensure interpretive and analytical consistency across all documents (in this case, PIPs).

Using the taxonomy of quality and data use indicators to guide coding, each primary coder partnered with an RC coder from two other RCs by employing a round-robin approach, with each coder independently coding 12 improvement plans (the plans for each of the 6 sites from the home RC and the plans from each of the 6 sites from their round-robin RC). To promote interrater checks across coders, the process started with the partnering coders reading and independently coding two improvement plans (one site from the home RC and one from the round-robin RC). The coders then compared coded plans and marked points of agreement and disagreement. Synthesis of coding was based upon agreement between coders. If consensus could not be achieved or there were insufficient coding options, these issues were resolved in discussion with the entire coding team. Six of 36 (17%) improvement plans were included in this initial phase of reliability checking.
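The comparison step, in which partnering coders mark points of agreement and disagreement, can be sketched as a set comparison. This is an illustration only: the step identifiers and indicator codes are hypothetical, and the percent-agreement figure is an added convenience, since the study resolved differences by consensus and does not report an agreement statistic.

```python
def compare_codings(coder_a, coder_b):
    """Compare two coders' indicator codes, step by step.

    Each argument maps a step id to the set of indicator codes that the
    coder assigned to that step.
    """
    report = {}
    for step in sorted(set(coder_a) | set(coder_b)):
        a = coder_a.get(step, set())
        b = coder_b.get(step, set())
        report[step] = {"agree": a & b, "only_a": a - b, "only_b": b - a}
    return report


def percent_agreement(report):
    """Share of all assigned codes that both coders applied."""
    agreed = sum(len(r["agree"]) for r in report.values())
    total = sum(
        len(r["agree"]) + len(r["only_a"]) + len(r["only_b"])
        for r in report.values()
    )
    return agreed / total if total else 1.0


# Hypothetical codes assigned by two coders to the same two plan steps.
coder_a = {"step1": {"SCR-1", "SCR-DATA-1"}, "step2": {"REF-3"}}
coder_b = {"step1": {"SCR-1"}, "step2": {"REF-3", "REF-5"}}

report = compare_codings(coder_a, coder_b)
for step, result in report.items():
    print(step, result)
print("agreement:", percent_agreement(report))
```

Entries under `only_a` and `only_b` are the points that a coder pair would discuss, and that would go to the full coding team if consensus could not be reached.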

Qualitative Coding Round 2: Cross-Coding

Gaps in round 1 of the coding process were resolved by updating the coding rules. The revised rules were used to code the remaining improvement plans, maintaining the same round-robin partnership from round one. As with the first round, each pair of coders met and worked through coding differences until consensus was reached. Once all steps were assigned to one or more quality or data use indicators, each indicator was then coded for target integration and radicalness by the home RC coder (using local knowledge of the site). In a final pass, all plans were coded or, as needed, recoded with the refined coding framework to ensure analytic and interpretive consistency among plans and raters.

Quantitative Analysis

Once all 36 plans were coded on complexity, a series of 4 univariate ANOVAs were used to statistically compare differences in plan complexity (dependent variables = plan length, plan radicalness, data emphasis, and plan target integration) by goal domain (each of the 5 Cascade domains).
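For readers less familiar with the test, the F statistic of a one-way ANOVA can be computed by hand as the ratio of between-group to within-group mean squares. The sketch below uses only the standard library and hypothetical plan lengths grouped by goal domain; it is not the study's data or analysis code, and in practice a statistics package would also supply the p value.

```python
def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of numeric groups."""
    groups = [list(g) for g in groups if len(g) > 0]
    k = len(groups)                   # number of groups
    n = sum(len(g) for g in groups)   # total observations
    grand_mean = sum(sum(g) for g in groups) / n
    means = [sum(g) / len(g) for g in groups]

    # Between-group sum of squares: group means around the grand mean.
    ss_between = sum(
        len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means)
    )
    # Within-group sum of squares: observations around their group mean.
    ss_within = sum(
        (x - m) ** 2 for g, m in zip(groups, means) for x in g
    )

    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical plan lengths (indicator counts) for three sites per domain.
plan_length_by_domain = {
    "screening": [4, 5, 6],
    "assessment": [5, 6, 7],
    "referral": [6, 7, 8],
    "initiation": [7, 8, 9],
    "engagement": [10, 12, 14],
}

f_stat, df_b, df_w = one_way_anova(plan_length_by_domain.values())
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

With five goal domains the between-group degrees of freedom are always 4, while the within-group degrees of freedom equal the number of plans analyzed minus 5.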

Results

Question 1. Strategies for Supporting Goal Selection and Planning

JJ-TRIALS strategies were effective in promoting the identification and articulation of process improvement goals. All workgroups identified a goal and developed a detailed improvement plan (including specification of incremental steps needed to promote goal success and measurement markers to inform DDDM). Furthermore, all selected goals and 94% of improvement plan steps (204 of 216 steps) centered on services along the Cascade. The remaining 12 steps (6%) targeted building cohesion, promoting engagement among workgroup members, or improving non-SU services. Using a fidelity form to monitor site engagement during goal selection, RC staff documented that a majority of workgroups were highly engaged (n = 26, 72%); the remaining 10 sites reported moderate engagement among some but not all of the workgroup members. Within these groups, RC staff observed a deference to agency leaders’ opinions, but after facilitators expressed flexibility in goal selection and garnered agency leadership support, the workgroups typically engaged in active, productive, and meaningful discussion.

Question 2. Process Improvement Targets along the Cascade

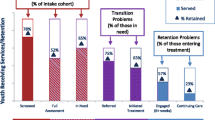

There was variation in where workgroups focused their primary quality improvement efforts (Table 1). Of the 36 workgroups, 3 (8%) targeted screening goals, 5 (14%) assessment goals including identification of client need, 14 (39%) referral goals (whether to assessment or treatment), 8 (22%) treatment initiation goals, and 6 (17%) treatment engagement/continuing care goals. Thus, workgroups placed the greatest emphasis on improving referral and treatment practices (78%), the areas along the Cascade that inherently involve interagency coordination and linkages.
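The percentages above follow directly from the reported counts, as the short check below shows. The counts are those given in the text; the variable names are illustrative only.

```python
# Number of workgroups by primary goal domain, as reported in the text.
goal_counts = {
    "screening": 3,
    "assessment": 5,
    "referral": 14,
    "treatment initiation": 8,
    "treatment engagement/continuing care": 6,
}

total_sites = sum(goal_counts.values())

# Share of workgroups per domain, rounded to whole percentages.
shares = {
    domain: round(100 * count / total_sites)
    for domain, count in goal_counts.items()
}

# Referral and treatment goals combined (the interagency-heavy domains).
linkage_domains = [
    "referral",
    "treatment initiation",
    "treatment engagement/continuing care",
]
linkage_share = round(
    100 * sum(goal_counts[d] for d in linkage_domains) / total_sites
)

print(total_sites, shares, linkage_share)
```

The combined share is computed from the summed counts (28 of 36 workgroups), which gives the same 78% as adding the three rounded percentages.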

Workgroups were also successful in using knowledge of their system to develop PIPs targeting diverse points along the Cascade that would discretely support their agency selected goal. Table 1 provides detailed information on the number and proportion of JJ-TRIALS sites that targeted particular quality and data use indicators (representing a total of 271 indicators across 36 sites). Across sites, an average of 25% of the selected indicators targeted improvement to screening practices, 9% to assessment or identification of need, 41% to improvement in referral processes, 25% to treatment initiation, and 10% to cross-agency improvements in treatment engagement. Nearly one third of workgroups (31%) focused on implementing an evidence-based screening instrument, 22% prioritized screening youth for substance problems, and 25% planned to enhance staff awareness about screening practices. Unlike screening practices, service changes related to assessment were not highly prioritized. For example, although five workgroups selected assessment goals, only two workgroups (6%) targeted implementation of a comprehensive assessment of SU and related problems, with only one workgroup (3%) targeting use of a scored, standardized evidence-based assessment instrument or using comprehensive assessment results to inform treatment referrals. One quarter of workgroups targeted referring youth with a substance problem to treatment services, nearly half (47%) targeted use of standardized referral procedures, and 44% targeted improvement in staff awareness/training on referral practices. The emphasis for treatment initiation and engagement practices was largely focused on improving interagency communication. Specifically, 31% of workgroups targeted improvement in sharing information across agencies, 19% focused on staff training on treatment initiation services, and 17% aimed to initiate contact between JJ and BH through formal agreements.

Workgroups fully embraced the opportunity to improve agency decision-making as informed by client data. All workgroups with the exception of one planned to develop or improve data collection as an outcome measurement. Overall, workgroups widely endorsed improvement to case management records around screening (28% of workgroups), referral (53%), treatment initiation practices (25%), and assessment data (17%). Additionally, workgroups embraced the multiagency collaboration that JJ-TRIALS promoted by including improvement to information sharing between JJ and BH agencies to support referral procedures and treatment initiation (53% and 31% of the workgroups, respectively).

To illustrate the process of tailoring indicators to a targeted plan, consider one participating site that was presented a site feedback report indicating low treatment referral rates. Through facilitated data-driven approaches, this site chose to target referral processes, with a specific goal of increasing the number of youth on community supervision who are referred to accredited treatment providers. While success for this site would ultimately be gauged by an increase in referrals to accredited treatment providers, the PIP included several additional indicators. Broadly, the workgroup aimed to train staff on using an evidence-based screener to inform need for comprehensive assessment, to train staff on using standard referral practices including involving youth and family in treatment placement, and to improve documentation and sharing of referral data through establishing an MOU between JJ and an accredited treatment provider.

Question 3. Assessing Complexity

In general, plan complexity varied based on the goal domain along the Cascade. Table 2 shows the proportion of indicators by primary goal domain. The type of goal targeted typically corresponded to the central area of focus (plan concentration). For instance, screening indicators represented, on average, 92% of the plans among the 3 sites targeting screening goals.

The scope of targeted indicators (count of Cascade domains targeted for improvement) varied considerably across targeted goals. While workgroups targeting screening goals chose to concentrate their efforts almost exclusively on improving screening practices (M = 92% screening focused), workgroups targeting other goals included up-stream changes (improving earlier processes in the Cascade by, e.g., adopting an evidence-based screener as part of a referral goal) and down-stream changes (improving services later in the Cascade by, e.g., proposing a match between clinical need and treatment services as part of an assessment goal). Workgroups targeting assessment goals planned to spend considerable effort improving the down-stream referral process (M = 29% on referral); likewise, workgroups targeting referral goals proposed service changes to the earlier assessment process (M = 33% on assessment). Workgroups targeting treatment initiation or engagement goals included a wider scope of quality and data use indicators. For both initiation and engagement goals, almost equal focus was placed on improving assessment (M = 39% and 34%, respectively) as on improving the treatment process (M = 45% and 37%, respectively). On average, PIPs included 8 quality and data use indicators along the Cascade, with wide variation depending on goal domain (Screening M = 5, Assessment M = 6, Referral M = 7, Initiation M = 8, Engagement M = 12).
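The concentration and scope figures described above can be sketched in a few lines of Python. This is a minimal illustration only, not the study's coding procedure: the function names (`domain_shares`, `scope`) and the example plan are hypothetical, and the sketch assumes each indicator in a plan has already been assigned to exactly one Cascade domain.

```python
from collections import Counter

# Cascade domains in order, as named in the text.
CASCADE = ["screening", "assessment", "referral", "initiation", "engagement"]


def domain_shares(indicator_domains):
    """Return the share of a plan's indicators that fall in each Cascade domain."""
    counts = Counter(indicator_domains)
    total = len(indicator_domains)
    return {domain: counts.get(domain, 0) / total for domain in CASCADE}


def scope(indicator_domains):
    """Count how many distinct Cascade domains the plan targets."""
    return len(set(indicator_domains))


# Hypothetical plan with a referral goal and 8 indicators (illustrative data only).
plan = [
    "screening", "assessment", "assessment", "referral",
    "referral", "referral", "referral", "initiation",
]

shares = domain_shares(plan)
print("length:", len(plan))
print("scope:", scope(plan))
print("referral share:", shares["referral"])
```

In this hypothetical plan, half of the indicators concentrate on the goal domain (referral), while the remainder are up-stream (screening, assessment) or down-stream (initiation) changes.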

Figure 2 demonstrates the level of complexity represented in PIPs for each goal domain across the 36 sites including target integration (interagency collaboration between JJ and BH), radicalness (service reorientation), and data emphasis (data improvement or sharing of information across agencies). On average, across the varying types of goals, 66% of the indicators proposed involved target integration, 57% involved radicalness, and 42% involved data emphasis (improving electronic data systems, n = 28 sites; information sharing, n = 27 sites). Workgroups that targeted screening planned to involve BH and implement new practices in 50% of proposed changes, with a quarter of the changes involving improvement in data use. Workgroups targeting assessment planned to involve BH in 56% of their proposed changes, with 68% of the targeted changes necessitating service reorientation, and 65% targeting improvement in data use. For workgroups that targeted referral, the proportion of indicators with proposed BH involvement was higher (61%) as compared to the earlier goal domains, while the proportion involving new service implementation and data use improvements was lower (55% and 34%, respectively). Workgroups targeting treatment initiation placed even greater emphasis on involving BH (66% of the QIs), with 46% and 48% of the proposed changes targeting new practices and data use improvements, respectively. Not surprisingly, workgroups targeting engagement were most inclined to prioritize interagency collaboration (M = 93% of selected QIs), with 70% of the proposed changes involving service reorientation and a little under half involving data use improvements (46%). No significant relationship was detected between goal domain and complexity: length [F (4, 30) = 2.14, p = .1001], target integration [F (4, 30) = 1.12, p = .3643], radicalness [F (4, 30) = 1.13, p = .3599] or data emphasis [F (4, 30) = 2.58, p = .0573].
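As a rough sketch of how the four complexity measures and the one-way comparison across goal domains could be computed, consider the Python example below. It is an illustration under stated assumptions, not the study's analysis code: the indicator flags (`bh`, `new_practice`, `data`), the function names, and all data values are hypothetical, and the F statistic is the standard one-way ANOVA ratio of between-group to within-group mean squares.

```python
from statistics import mean


def complexity(plan):
    """Summarize one plan: length plus the share of indicators carrying each flag."""
    n = len(plan)
    return {
        "length": n,
        "target_integration": sum(i["bh"] for i in plan) / n,    # BH involved
        "radicalness": sum(i["new_practice"] for i in plan) / n,  # service reorientation
        "data_emphasis": sum(i["data"] for i in plan) / n,        # data improvement/sharing
    }


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of groups of scores."""
    all_scores = [x for g in groups for x in g]
    grand = mean(all_scores)
    k, n = len(groups), len(all_scores)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical plan with four indicators (flags are illustrative only).
plan = [
    {"bh": True, "new_practice": True, "data": False},
    {"bh": True, "new_practice": False, "data": True},
    {"bh": False, "new_practice": True, "data": False},
    {"bh": True, "new_practice": False, "data": False},
]
summary = complexity(plan)
print(summary)

# Hypothetical plan lengths grouped by goal domain (three groups for brevity).
lengths_by_goal = [[4, 5, 6], [5, 6, 7], [6, 7, 8]]
f_stat, df_b, df_w = one_way_anova_f(lengths_by_goal)
print(f_stat, df_b, df_w)
```

With five goal domains, as in the study, the between-groups degrees of freedom would be 5 - 1 = 4, which matches the first value in the reported F (4, 30).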

Discussion

Youth on community supervision present JJ agencies with numerous challenges that complicate terms of probation, including SU and mental health issues (among others). While many JJ departments strive to provide evidence-based services, addressing SU often requires referral to private sector BH providers, and success is contingent on adequate interagency coordination. This study demonstrates commonalities and complexities in development and articulation of PIPs for improving SU services using JJ-TRIALS goal selection support strategies across 36 diverse systems of care.

Most of the sites targeted mid to later points along the BH Cascade including referral, initiation, and engagement in treatment. Consistent with the Cascade model (Belenko et al. 2017), plan complexity increased along the Cascade, particularly with regard to coordination between JJ and BH treatment targets (i.e., referral). Workgroups that targeted screening, for example, involved BH less frequently than workgroups targeting initiation. Radicalness, or substantial changes to existing practice, was most pronounced when target goals were assessment or engagement. For instance, assessment is quite commonly the entry point into treatment and is often administered by a BH agency. In some systems, assessment protocols are nonexistent, thus necessitating vast changes.

While all sites incorporated the use of data within their PIP, planned data use was incorporated more broadly when assessment was the target goal. Greater use of data for assessment may reflect workgroup plans to document new assessment practices (including referral to assessment), and/or plans to evaluate the success of new practices. The inclusion of data-related indicators is encouraging given that data-driven approaches (e.g., rapid-cycle testing, PDSA) can indirectly facilitate implementation of new practices. Routine monitoring of data during early implementation of a new practice, or when piloting a new intervention, can alert workgroups to potential problems and direct modification efforts (e.g., Gardner et al. 2018; Kieffer et al. 2014). For instance, improved documentation of treatment need indicators can help determine whether a screening tool is adequately identifying need for comprehensive assessment or if a more sensitive screening instrument is warranted. Thus, improvement in data collection, accuracy, and consistency can serve as a necessary first step to inform subsequent service changes.

Regardless of the chosen target, sites generally developed plans that spanned several Cascade domains, and most plans included improvement in assessment and referral practices. This finding is important, in that it documents workgroup member awareness of the interrelated nature of SU referral and treatment practices. Improving referral practices, for instance, depends in part on the availability of quality information from screening and assessment. If these practices are not of sufficient quality (e.g., using a non-evidence-based screener) or departmental procedures are not well specified (e.g., no guidance regarding when to make a referral), then efforts to improve referral may be futile. Likewise, changes in one element of the Cascade (e.g., implementing decision rules regarding classifying need and making a treatment referral) will have down-stream implications for treatment initiation rates.

Limitations/Challenges

While this study represents a robust examination of PIPs, several limitations should be considered. First, the complexity measures are a count of selected quality and data use indicators/new practices and proposed BH involvement in process. A more comprehensive measure of complexity would include inner and outer contextual factors occurring at the state, community, organizational, staff, and client levels that workgroups consider as they design PIPs. Second, although a rigorous coding process was applied, memory bias could have occurred among RC staff as a result of the time lag between fieldwork (plan development) and coding. Third, as is common for large scale multi-site studies, a majority of sites either volunteered to participate or were strategically selected due to a record of being at the forefront of service improvement, which may have resulted in a self-selection bias. Fourth, the generalizability of study findings is limited to public service sector JJ systems. However, a pilot extension of JJ-TRIALS goal selection strategies suggests that these strategies may be equally effective when workgroups are comprised of JJ and health agency partnerships (Gardner et al. 2018).

Implications for Implementation Science and Clinical Practice

System change requires dynamic adaptation, including changes in leadership, policies, and scientific developments, as well as consideration for the needs of providers and clients (Chambers et al. 2013). Prior studies based on the EPIS framework [including the Dynamic Adaptation Process model (DAP; Aarons et al. 2012) and Interagency Collaborative Team scale-up strategy (ICT; Hurlburt et al. 2014)] highlight the importance of tailoring strategies for specific contexts. Such efforts include not only tailoring the particular approach employed (e.g., EBP assessment), but also tailoring policies, communications, practices, and supports at system and organizational levels. Adaptation can range from rather mundane to more aspirational. These types of adaptations were evident in the 36 PIPs, as informed by system-level needs. For instance, one participating site illustrated a mundane PIP that revised existing practices in screening only, with minimal changes and no plans to involve its BH partner in goal progress, whereas another site provided a more aspirational PIP that involved a significant shift from current service provision along the Cascade and that fostered multiagency collaboration with several sequential practice and data management changes.

Although the participating sites represent multiple agencies and complicated systems, this project demonstrated that interagency workgroups successfully transitioned through the first two EPIS stages as conceptualized by the JJ-TRIALS cooperative (Becan et al. 2018). Specifically, all sites were able to identify and set a prevalent system unmet need as a common goal (Exploration) and develop an actionable plan to address the identified system need (Preparation). This movement from Exploration to Preparation serves as a necessary first step toward Implementation (initializing changes to address system identified unmet needs) and represents a novel application, testing the EPIS framework on public service sector JJ systems.

Additionally, the current study helps advance an understanding of the CFIR framework by developing a useful methodology for operationally defining and comparing complexity across highly diverse system improvement plans. While the CFIR was developed by researchers to provide a menu of implementation barriers and facilitators, giving focused attention to previously understudied CFIR constructs (such as complexity) has the potential to provide a rich understanding for the implementation science field. Specifically, operationalizing plans through complexity in a harmonious manner can result in more informed theories and frameworks of process change (Proctor et al. 2013) by providing the structure to relate goal articulation to system change, shedding light on potential implementation barriers, and illustrating the graded impact of implementation strategies. This paper offers stakeholders and scientists studying process improvement along a continuum of services an analytic taxonomy and a defined list of quality and implementation success indicators, which can be used when planning and executing process improvement projects.

The implications for clinical practice are similar to those for implementation science. The JJ-TRIALS Cooperative Agreement goal selection strategies can be effective for agencies attempting to address gaps in services or implement practice improvements, even when cross-agency collaboration and complex system change are required. The use of the worksheets, for example, can help refine target areas, explore feasibility, and focus discussion around task planning. The structure provided by these tools helps improve communication and keeps goal selection activities focused (Dansereau and Simpson 2009). The specification of steps is important. This includes not only obvious tasks required to achieve a specific goal (e.g., establishing an MOU between JJ and BH to open the pathway for treatment referral), but also steps that address peripheral or underlying issues, such as improving data collection and reporting capacity, providing staff training, and ensuring ongoing communication. Intentional efforts to communicate across providers are essential when addressing complex goals. Finally, external facilitation of the goal selection process can help teams use the tools more effectively and can provide clarification and assistance in how to narrow focus, identify how goals will be measured, and break goals down into concrete steps. At project end, all JJ-TRIALS Cooperative Agreement materials including Goal Selection protocols will be available free of charge for agencies and implementation scientists to use (contact the primary author for information about these materials).

Future Directions

Because sites exhibited wide variation in plan complexity, these data will be included as process measures in subsequent JJ-TRIALS outcome studies. One interesting and highly relevant issue pertains to whether sites fully or only partially implemented their planned changes. As such, future investigations will systematically integrate complexity measures with CFIR inner and outer contextual measures to more comprehensively compare planned versus actual change through (a) monthly workgroup reports of progress toward or the achievement of steps, (b) qualitative accounts of new practices implemented and barriers encountered, and (c) quantitative measures of service receipt among youth cohorts over time.

Given that DDDM forms the foundation of the JJ-TRIALS implementation strategies that are being tested (Knight et al. 2016), the broader study has potential to further implementation science by examining the actual use of data during implementation, as well as its role in sustainment of practices. While particular importance was placed on fidelity to practice improvement around the system-selected unmet need, sites were granted a certain level of flexibility during the Implementation phase to dynamically adapt their PIP through on-going review of existing youth records and stakeholder feedback, and to be responsive to local needs and constraints they experience and overcome in performing their duties. Therefore, future work will offer an opportunity to test the JJ-TRIALS strategies as tools to assist systems and organizations in planning and moving through this process, by applying EPIS as a phased framework approach and DAP as an examination of implementation fidelity with flexibility. For instance, some participating sites grappled with changing entrenched practices, serving more diverse clientele through non-existent or limited connections with external providers, and identifying more serious data infrastructure deficiencies than did other sites. Future work is needed to explore how workgroups address and overcome constraints and how strategies such as facilitation during implementation promote quality improvement in agencies that face significant barriers to progress.

Conclusion

This study specified a series of implementation strategies suitable for process improvement planning governed by the Behavioral Health Services Cascade and developed a useful taxonomy for comparing complexity of system-informed plans as informed by the Consolidated Framework for Implementation Research (CFIR). As such, a major contribution of this study is provision of a theoretically developed, articulated, and quantified demonstration of plan complexity as a potentially important covariate in the EBP adoption process. In a larger sense, such measures of anticipated complexity can be considered a lens through which to examine other facets of agency functioning, such as intra-organizational data management and inter-organizational relationships outside the agency (particularly with a behavioral health provider). There is every indication that this analytic approach to quantifying complexity in system-level process improvement planning could be used quite fruitfully in other settings beyond juvenile justice, including criminal justice, healthcare, and education.

References

Aarons, G. A., Fettes, D. L., Hurlburt, M. S., Palinkas, L. A., Gunderson, L., Willging, C. E., & Chaffin, M. J. (2014). Collaboration, negotiation, and coalescence for interagency-collaborative teams to scale-up evidence-based practice. Journal of Clinical Child and Adolescent Psychology,43(6), 915–928. https://doi.org/10.1080/15374416.2013.876642.

Aarons, G. A., Green, A. E., Palinkas, L. A., Self-Brown, S., Whitaker, D. J., Lutzker, J. R., … Chaffin, M. J. (2012). Dynamic adaptation process to implement an evidence-based child maltreatment intervention. Implementation Science,7(32). https://doi.org/10.1186/1748-5908-7-32.

Aarons, G. A., Hurlburt, M., & Horwitz, S. M. (2011). Advancing a conceptual model of evidence-based practice implementation in public service sectors. Administration and Policy in Mental Health and Mental Health Services Research,38(1), 4–23. https://doi.org/10.1007/s10488-010-0327-7.

American Society of Addiction Medicine. (2013). The ASAM criteria: Treatment criteria for addictive, substance-related, and co-occurring conditions. Chevy Chase, MD: Author.

Atkins, M. S., Frazier, S. L., Leathers, S. J., Graczyk, P. A., Talbott, E., Jakobsons, L., … Bell, C. C. (2008). Teacher key opinion leaders and mental health consultation in low-income urban schools. Journal of Consulting and Clinical Psychology,76(5), 905–908. https://doi.org/10.1037/a0013036.

Barwick, M. A., Peters, J., & Boydell, K. (2009). Getting to uptake: Do communities of practice support the implementation of evidence-based practice? Journal of the Canadian Academy of Child and Adolescent Psychiatry,18(1), 16–29. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2651208/.

Becan, J. E., Bartkowski, J., Knight, D. K., Wiley, T., DiClemente, R., Ducharme, L., … Aarons, G. A. (2018). A Model for Rigorously Applying the Exploration, Preparation, Implementation, and Sustainment (EPIS) Framework in the Design and Measurement of a Large Scale Collaborative Multi-site Study. Health and Justice. https://doi.org/10.1186/s40352-018-0068-3.

Belenko, S., & Dembo, R. (2003). Treating adolescent substance abuse problems in the juvenile drug court. International Journal of Law and Psychiatry,26(1), 87–110. https://doi.org/10.1016/s0160-2527(02)00205-4.

Belenko, S., Knight, D., Wasserman, G. A., Dennis, M. L., Wiley, T., Taxman, F. S., … Sales, J. (2017). The Juvenile Justice Behavioral Health Services Cascade: A new framework for measuring unmet substance use treatment services needs among adolescent offenders. Journal of Substance Abuse Treatment,74, 80–91. https://doi.org/10.1016/j.jsat.2016.12.012.

Belenko, S., Sprott, J. B., & Peterson, C. (2004). Drug and alcohol involvement among minority and female juvenile offenders: Treatment and policy issues. Criminal Justice Policy Review,15(1), 3–36. https://doi.org/10.1177/0887403403255068.

Bowser, D., Henry, B., Wasserman, G., Knight, D., Gardner, S., Krupka, K., … Robertson, A. (2018). Comparison of the overlap between juvenile justice case processing and screening & referral to Behavioral Health Services. Journal of Applied Juvenile Justice Services,5(1), 96–125. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6342497/.

Brooks, J. M., Titler, M. G., Ardery, G., & Herr, K. (2008). The effect of evidence-based acute pain management practices on inpatient costs. Health Services Research,44(1), 245–263. https://doi.org/10.1111/j.1475-6773.2008.00912.x.

Chamberlain, P., Hendricks Brown, C. H., & Saldana, L. (2011). Observational measure of implementation progress in community based settings: The Stages of Implementation Completion (SIC). Implementation Science,6, 116. https://doi.org/10.1186/1748-5908-6-116.

Chamberlain, P., Roberts, R., Jones, H., Marsenich, L., Sosna, T., & Price, J. M. (2012). Three Collaborative models for scaling up evidence-based practices. Administration and Policy in Mental Health and Mental Health Services Research,39(4), 278–290. https://doi.org/10.1007/s10488-011-0349-9.

Chambers, D. A., Glasgow, R. E., & Stange, K. C. (2013). The dynamic sustainability framework: Addressing the paradox of sustainment amid ongoing change. Implementation Science,8, 117. https://doi.org/10.1186/1748-5908-8-117.

Chandler, R. K., Fletcher, B. W., & Volkow, N. D. (2009). Treating drug abuse and addiction in the criminal justice system: Improving public health and safety. Journal of the American Medical Association,301(2), 183–190. https://doi.org/10.1001/jama.2008.976.

Chorpita, B. F., Bernstein, A., Daleiden, E. L., & Research Network on Youth Mental Health. (2008). Driving with roadmaps and dashboards: Using information resources to structure the decision models in service organizations. Administration and Policy in Mental Health and Mental Health Services Research,35(1–2), 114–123. https://doi.org/10.1007/s10488-007-0151-x.

Committee on Substance Abuse. (2011). Substance use screening, brief intervention, and referral to treatment for pediatricians. American Academy of Pediatrics,128(5), e1330–e1340. https://doi.org/10.1542/peds.2011-1754.

Damschroder, L. J., Aron, D. C., Keith, R. E., Kirsh, S. R., Alexander, J. A., & Lowery, J. C. (2009). Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science,4, 50. https://doi.org/10.1186/1748-5908-4-50.

Dansereau, D. F., & Simpson, D. D. (2009). A picture is worth a thousand words: The case for graphic representations. Professional Psychology: Research and Practice,40(1), 104–110. https://doi.org/10.1037/a0011827.

Dennis, M., Smith, C., Knight, D., Belenko, S., Dembo, R., DiClemente, R., … Wiley, T. (2018). Demonstrating the need for and feasibility of applying a behavioral health services cascade framework in juvenile justice. Presentation at the College on Problems of Drug Dependence 80th Annual Scientific Meeting, San Diego, CA.

Gardner, S. K., Elkington, K. S., Knight, D. K., Huang, S., DiClemente, R. D., Spaulding, A. C., & Baird-Thomas, C. (2018). Juvenile justice staff attitudes about HIV/STI prevention, testing, and treatment linkage. Unpublished manuscript, Mississippi State: Social Science Research Center. https://doi.org/10.1186/s40352-019-0096-7.

Gardner, E. M., McLees, M. P., Steiner, J. F., del Rio, C., & Burman, W. J. (2011). The spectrum of engagement in HIV care and its relevance to test-and-treat strategies for prevention of HIV infection. Clinical Infectious Diseases,52(6), 793–800. https://doi.org/10.1093/cid/ciq243.

Glisson, C., Schoenwald, S. K., Hemmelgarn, A., Green, P., Dukes, D., Armstrong, K. S., & Chapman, J. E. (2010). Randomized trial of MST and ARC in a two-level evidence based treatment implementation strategy. Journal of Consulting and Clinical Psychology,78(4), 537–550. https://doi.org/10.1037/a0019160.

Greenhalgh, T., Robert, G., MacFarlane, F., Bate, P., & Kyriakidou, O. (2004). Diffusion of innovations in service organizations: Systematic review and recommendations. The Milbank Quarterly,82(4), 581–629. https://doi.org/10.1111/j.0887-378x.2004.00325.x.

Grol, R. P. T. M., Bosch, M. C., Hulscher, M. E. J. L., Eccles, M. P., & Wensing, M. (2007). Planning and studying improvement in patient care: The use of theoretical perspectives. The Milbank Quarterly,85(1), 93–138. https://doi.org/10.1111/j.1468-0009.2007.00478.x.

Gustafson, D. H., Quanbeck, A. R., Robinson, J. M., Ford II, J. H., Pulvermacher, A., French, M. T., … McCarty, D. (2013). Which elements of improvement collaboratives are most effective? A cluster-randomized trial. Addiction,108(6), 1145–1157. https://doi.org/10.1111/add.12117.

Henderson, C. E., & Taxman, F. S. (2009). Competing values among criminal justice administrators: The importance of substance abuse treatment. Drug and Alcohol Dependence,103(Suppl 1), S7–S16. https://doi.org/10.1016/j.drugalcdep.2008.10.001.

Horan Fisher, J., Becan, J. E., Harris, P. W., Nager, A., Baird-Thomas, C., Hogue, A., … The JJ-TRIALS Cooperative. (2018). Using goal achievement training in juvenile justice settings to improve substance use services for youth on community supervision. Health and Justice,6(1), 10. https://doi.org/10.1186/s40352-018-0067-4.

Hurlburt, M., Aarons, G. A., Fettes, D., Willging, C., Gunderson, L., & Chaffin, M. J. (2014). Interagency Collaborative Team model for capacity building to scale-up evidence-based practice. Children and Youth Services Review,39, 160–168. https://doi.org/10.1016/j.childyouth.2013.10.005.

Keith, R. E., Crosson, J. C., O’Malley, A. S., Cromp, D., & Taylor, E. F. (2017). Using the Consolidated Framework for Implementation Research (CFIR) to produce actionable findings: A rapid-cycle evaluation approach to improving implementation. Implementation Science,12(1), 15. https://doi.org/10.1186/s13012-017-0550-7.

Kieffer, M. P., Mattingly, M., Giphart, A., van de Ven, R., Chouraya, C., Walakira, M., … the EGPAF Technical Directors Forum. (2014). Lessons learned from early implementation of Option B+: The Elizabeth Glaser Pediatric AIDS Foundation Experience in 11 African countries. Journal of Acquired Immune Deficiency Syndromes,67(Suppl 4), S188–S194. https://doi.org/10.1097/qai.0000000000000372.

King-Sears, M. E. (2001). Institutionalizing peer-mediated instruction and interventions in schools: Beyond “Train and Hope”. Remedial and Special Education,22(2), 89–101. https://doi.org/10.1177/074193250102200203.

Knight, D. K., Belenko, S., Wiley, T., Robertson, A. A., Arrigona, N., Dennis, M., … The JJ-TRIALS Cooperative. (2016). Juvenile Justice—Translational Research on Interventions for Adolescents in the Legal System (JJ-TRIALS): A cluster randomized trial targeting system-wide improvement in substance use services. Implementation Science,11, 57. https://doi.org/10.1186/s13012-016-0423-5.

Knight, D. K., Joe, G. W., Morse, D. T., Smith, C., Knudsen, H., Johnson, I., … Wiley, T. R. A. (2019). Organizational context and individual adaptability in promoting perceived importance and use of best practices for substance use. Journal of Behavioral Health Services & Research,46(2), 192–216. https://doi.org/10.1007/s11414-018-9618-7.

Kochevar, L. K., & Yano, E. M. (2006). Understanding health care organization needs and context: Beyond performance gaps. Journal of General Internal Medicine,21(Suppl 2), S25–S29. https://doi.org/10.1111/j.1525-1497.2006.00359.x.

Kötter, T., Blozik, E., & Scherer, M. (2012). Methods for the guideline-based development of quality indicators—A systematic review. Implementation Science,7, 21. https://doi.org/10.1186/1748-5908-7-21.

Lawlor, K. B., & Hornyak, M. J. (2012). Smart goals: How the application of smart goals can contribute to achievement of student learning outcomes. Developments in Business Simulation and Experiential Learning,39, 259–267. https://absel-ojs-ttu.tdl.org/absel/index.php/absel/article/view/90/86.

Leukefeld, C. G., Cawood, M., Wiley, T., Robertson, A., Horan Fisher, J., Arrigona, N., … The JJ-TRIALS Cooperative. (2017). The benefits of community and juvenile justice involvement in organizational research. Journal of Juvenile Justice,6(1), 112–124. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5562037/.

Locke, E. A., & Latham, G. P. (1990). A theory of goal setting & task performance. Englewood Cliffs, NJ: Prentice-Hall, Inc. https://doi.org/10.5860/choice.28-0608.

Morrison, M. (2010). History of SMART objectives. Retrieved from https://rapidbi.com/history-of-smart-objectives.

Mugavero, M. J., Amico, K. R., Horn, T., & Thompson, M. A. (2013). The state of engagement in HIV care in the United States: From cascade to continuum to control. Clinical Infectious Diseases,57(8), 1164–1171. https://doi.org/10.1093/cid/cit420.

National Institute on Alcohol Abuse and Alcoholism. (2015). Alcohol screening and brief intervention for youth: A practitioner’s guide (NIH Publication No. 11-7805, revised). Retrieved from https://www.niaaa.nih.gov/alcohol-screening-and-brief-intervention-youth-practitioners-guide.

New York State Department of Health. (1999, April). Disease screening—Statistics teaching tools. Retrieved from https://www.health.ny.gov/diseases/chronic/discreen.htm.

Orwin, R. G., Edwards, J. M., Buchanan, R. M., Flewelling, R. L., & Landy, A. L. (2012). Data-driven decision making in the prevention of substance-related harm: Results from the Strategic Prevention Framework State Incentive Grant Program. Contemporary Drug Problems,39(1), 73–106. https://doi.org/10.1177/009145091203900105.

Pearson, F. S., Shafer, M. S., Dembo, R., del Mar Vega-Debién, G., Pankow, J., Duvall, J. L., … Patterson, Y. (2014). Efficacy of a process improvement intervention on delivery of HIV services to offenders: A multisite trial. American Journal of Public Health, 104(12), 2385–2391. https://doi.org/10.2105/ajph.2014.302035.

Prendergast, M., Welsh, W. N., Stein, L., Lehman, W., Melnick, G., Warda, U., … Duvall, J. (2017). Influence of organizational characteristics on success in implementing process improvement goals in correctional treatment settings. Journal of Behavioral Health Services & Research,44(4), 625–646. https://doi.org/10.1007/s11414-016-9531-x.

Proctor, E. K., Powell, B. J., & McMillen, J. C. (2013). Implementation strategies: Recommendations for specifying and reporting. Implementation Science,8, 139. https://doi.org/10.1186/1748-5908-8-139.

Schneider, S., & Simpson, L. (2011). Getting on board with juvenile justice information technologies. In F. T. Sherman & F. H. Jacobs (Eds.), Juvenile justice: Advancing research, policy, and practice. Hoboken, NJ: Wiley. https://doi.org/10.1002/9781118093375.ch22.

Taxman, F. S., Henderson, C. E., & Belenko, S. (2009). Organizational context, systems change, and adopting treatment delivery systems in the criminal justice system. Drug and Alcohol Dependence,103(Suppl 1), S1–S6. https://doi.org/10.1016/j.drugalcdep.2009.03.003.

Taylor, M. J., McNicholas, C., Nicolay, C., Darzi, A., Bell, D., & Reed, J. E. (2014). Systematic review of the application of the plan-do-study-act method to improve quality in healthcare. BMJ Quality & Safety,23(4), 290–298. https://doi.org/10.1136/bmjqs-2013-001862.

Young, D. W., Dembo, R., & Henderson, C. E. (2007). A national survey of substance abuse treatment for juvenile offenders. Journal of Substance Abuse Treatment,32(3), 255–266. https://doi.org/10.1016/j.jsat.2006.12.018.

Young, D., Moline, K., Farrell, J., & Bierie, J. (2006). Best implementation practices: Disseminating new assessment technologies in a juvenile justice agency. Crime & Delinquency,52(1), 135–158. https://doi.org/10.1177/0011128705281752.

Acknowledgements

The authors would like to thank the following members of the Study Design Workgroup for their assistance and participation in curriculum and methodological development activities: Barbara Estrada, Kate Elkington, Leah Hamilton, Philip Harris, Kevin Knight, James Maccarone, Larkin McReynolds, Alexis Nager, Traci Rieckmann, Eve Rose, Anne Spaulding, Gail Wasserman, and Matt Webster.

Funding

This study was funded under the JJ-TRIALS cooperative agreement, funded at the National Institute on Drug Abuse (NIDA) by the National Institutes of Health (NIH). The authors gratefully acknowledge the collaborative contributions of NIDA and support from the following grant awards: Chestnut Health Systems (U01DA036221); Columbia University (U01DA036226); Emory University (U01DA036233); Mississippi State University (U01DA036176); Temple University (U01DA036225); Texas Christian University (U01DA036224); University of Kentucky (U01DA036158), and University of Miami (R21DA044378). NIDA Science Officer on this project is Tisha Wiley. The contents of this publication are solely the responsibility of the authors and do not necessarily represent the official views of the NIDA, NIH, or the participating universities or JJ systems.

Ethics declarations

Conflict of interest

GAA serves on the Editorial Board for Administration and Policy in Mental Health and Mental Health Services Research; all decisions on this paper were made by another editor. The authors declare that they have no other competing interests.

Ethical Approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional review boards for each of the seven research institutions and the coordinating center and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Informed Consent

Informed consent was obtained from all individual participants included in the study.

Cite this article

Becan, J.E., Fisher, J.H., Johnson, I.D. et al. Improving Substance Use Services for Juvenile Justice-Involved Youth: Complexity of Process Improvement Plans in a Large Scale Multi-site Study. Adm Policy Ment Health 47, 501–514 (2020). https://doi.org/10.1007/s10488-019-01007-z
