Abstract

Community-partnered school behavioral health (CP-SBH) is a model whereby schools partner with local community agencies to deliver services. This mixed-methods study examined 80 CP-SBH clinicians’ adoption and implementation of evidence-based practice (EBP) approaches following mandated training. Forty-four clinicians were randomly assigned to one of two training conditions for a modular common elements approach to EBPs; 36 clinicians were preselected for training in a non-modular EBP. EBP knowledge improved for all training conditions at 8-month follow-up and practice element familiarity improved for modular approach training conditions, but the modular condition including ongoing consultation did not yield better results. Qualitative interviews (N = 17) highlighted multi-level influences of the CP-SBH service system and individual clinician characteristics on adoption and implementation.

Underutilization of mental health treatment among children and adolescents is a public health crisis that has improved little over the last several decades. Due to substantial unmet mental health needs, schools have been increasingly recognized as a logical setting to improve access to mental health services. Among the small percentage of youth with mental health needs who receive treatment, a large majority of those services are received at school (Burns and Costello 1995; Foster et al. 2005; Hoagwood and Erwin 1997; Rones and Hoagwood 2000).

To date, research has established that providing behavioral health services in schools improves children’s access to care by reducing logistical barriers (e.g., transportation, childcare) and decreases the stigma of help seeking (Bringewatt and Gershoff 2010). However, a more current emphasis has been on ensuring that school behavioral health (SBH) services are of consistently high quality in terms of evidence-based practice (Weist et al. 2009). Unfortunately, despite improvements in access to care, school behavioral health treatment quality is inconsistent, at best (Barrett et al. 2013).

A substantial body of implementation science research has been dedicated specifically to improving the transportability of evidence-based practices (EBPs) to usual care mental health settings. Continued implementation research focusing on how to close the research-to-practice gap specifically in schools is critical to ensuring high quality public mental health services for children. One approach to improving the uptake and implementation of EBPs in usual care is clinician training and ongoing implementation supports. The research evidence on effective clinician training methods in community settings suggests several useful conclusions. For instance, reading training materials is a common and necessary, but insufficient, approach to acquiring new skills, and one-time workshop trainings appear to increase knowledge but not to produce meaningful changes in attitudes or practice (Herschell et al. 2010). Ongoing contact such as coaching, consultation, and/or follow-up visits also appears to be a critical supplement to one-time training (Beidas et al. 2012; Kelley et al. 2010; Miller et al. 2004).

However, the literature on effective training methods is still in its infancy, as training strategies have so far been systematically examined with only a limited number of EBPs and service systems. Franklin and Hopson (2007) note that successful practice of EBPs in community settings requires a complex set of well-honed clinical skills, often a knowledge base in cognitive-behavioral or behavioral strategies, and considerable organizational support for adaptation to local community contexts and client needs (including supervision and feedback). Effective training must account for staff turnover, variable client attendance, and the recognition that not all skills will be implemented with fidelity (Franklin and Hopson 2007). The practical implications at the system or agency level are significant. Public mental health administrators must hire and retain highly skilled clinicians in a field with 25–50% turnover (Gallon et al. 2003; Glisson et al. 2008) and provide ongoing fidelity monitoring and supervision structures, often with limited financial and administrative staffing resources. Early research on effective clinician training methods suggests that high-intensity training with follow-up is likely the gold standard; however, the financial and time costs of training clinicians this way may be insurmountable for the typical mental health care system or agency (McMillen et al. 2016).

From this we can surmise that training format and content are critical factors influencing clinician adoption and implementation of EBPs, but not the whole story.

Implementation processes are inherently multi-level, including patient, clinician, organizational and contextual factors (Damschroder et al. 2009; Proctor et al. 2009). Increasingly robust findings confirm the significant influence of organizational level factors and service context on clinician implementation of EBPs (Beidas et al. 2015, 2016; Brimhall et al. 2016). Intervention-setting fit has been noted as one of the most critical yet under-researched areas related to implementation of new practices (Lyon et al. 2014).

Against this backdrop, behavioral health agencies and systems are increasingly adopting, investing in, and requiring training in EBPs for their clinicians (McHugh and Barlow 2010). School behavioral health administrators in community clinics and schools are no exception, and there is increasing emphasis on the installation of EBPs in schools (Forman 2015; Weist et al. 2008).

Community-Partnered School Behavioral Health

School behavioral health services are provided in a variety of ways, including school-employed psychologists or social workers who take the lead on mental health service delivery (Policy Leadership Cadre for Mental Health In Schools 2001), mental health clinicians embedded in school-based health centers (Bains and Diallo 2016; Lyon et al. 2014), and community agency-employed mental health clinicians who partner with schools to provide services directly in the school building (Flaherty et al. 1996; Flaherty and Weist 1999; Lever et al. 2015; Weist and Evans 2005). The latter approach—that of involving community partners—was originally referred to as “expanded school mental health” (Weist 1997; Weist et al. 2006) and has proliferated since, most recently referred to as Community-Partnered School Behavioral Health (CP-SBH) (Connors et al. 2016; Lever et al. 2015). Over a decade ago it was documented that a large majority of SBH services were delivered in the community-partnered model (Foster et al. 2005).

Behavioral health refers to mental/emotional well-being, and is inclusive of one’s wellness, mental disorders, and substance use concerns (Substance Abuse and Mental Health Services Administration 2014). However, some states and locales use “mental health” instead of “behavioral health” to refer to the same type of service array. For example, comprehensive school mental health has also been widely used to reflect a full continuum of prevention, early intervention and treatment services related to social, emotional, and behavioral wellness including substance use concerns (Barrett et al. 2013).

Adoption and Implementation in CP-SBH

Effective implementation of EBPs in SBH is likely to vary across models because duties, professional development opportunities, organizational supports, service models, and even record keeping differ among them. For example, a qualitative study of 17 SBH clinicians in school-based health centers who were trained in modular psychotherapy found that its intervention-setting fit depended on key elements related to the students served, the clinician, and the school context (Lyon et al. 2014). The authors recommended replicating these findings with other samples of school clinicians, including those working in other SBH models such as CP-SBH. SBH clinicians’ adoption and use of EBPs following mandated training is particularly understudied.

Adoption can be conceptualized as either a finite event or an ongoing process at the service system level (Landsverk et al. 2018). According to the Exploration, Adoption/Preparation, Implementation, Sustainment (EPIS) framework, adoption is nested within a larger, multi-stage process. Each phase (exploring the possibility of adopting an EBP [exploration], deciding to adopt [adoption/preparation], implementation, and sustainment) is influenced by intra-organizational and individual adopter (clinician) characteristics (the “inner context”) and by the service environment, inter-organizational environment, and consumer support or advocacy (the “outer context”). Individual adopter characteristics are critical during the active implementation phase (Aarons et al. 2011). Once a service system has decided to adopt an innovation and train its staff, individual clinicians’ decisions to adopt and implement those practices with their patients remain variable at best (Jensen-Doss et al. 2009). Rogers’ theory (Rogers 2003) posits five factors that influence an individual’s decision to adopt or reject an innovation: (1) relative advantage of the innovation compared with the idea or existing practice it supersedes, whether in the form of economic benefit, social prestige, convenience, or satisfaction; (2) compatibility, in terms of consistency with one’s values, experiences, and needs (in this case, those of clinicians and their agencies); (3) complexity, or the degree to which the innovation is difficult to understand and/or use; (4) trialability, or the degree to which the innovation can be experimented with on a temporary basis, which can reduce uncertainty; and (5) observability, or the degree to which the innovation is visible to others, which facilitates peer discussion of new ideas or practices. According to Rogers’ theory, adoption decisions are preceded by one’s knowledge and attitudes and are situated in a broader context of both information and uncertainty.

Rogers’ theory is particularly relevant in the context of organizational-level decisions to adopt and implement an EBP and to provide mandated training for clinicians. We applied Rogers’ theory to individual school behavioral health clinicians’ decisions to adopt and ultimately implement (i.e., use) EBPs following mandated training. The purpose of the current study is to explore a variety of factors that may predict clinicians’ adoption and implementation of EBPs in school settings within a CP-SBH delivery model.

Method

This study was conducted in the context of a behavioral health system-initiated requirement for 80 CP-SBH clinicians to receive training in one of two EBPs. The behavioral health system adopted training in a modular “common elements” (MCE) approach to evidence-based practice (Chorpita et al. 2005; Chorpita and Weisz 2009) for 44 clinicians based on its established effectiveness relative to manualized EBPs (Weisz et al. 2012), its acceptability to SBH clinicians (Lyon et al. 2014), and a desire to equip clinicians with a flexible, individualized, data-driven option for clinical decision making grounded in scientific knowledge. These 44 clinicians were stratified by years of experience within their agency and randomly assigned to one of two MCE conditions: “MCE Basic” (3-h didactic orientation and materials, N = 25) or “MCE Plus” (2-day training, materials, and monthly phone consultation, N = 19). Several clinicians assigned to MCE Plus were unavailable for the 2-day training and were therefore reassigned to the MCE Basic condition, resulting in uneven groups.

The MCE Plus condition included the components of training and ongoing consultation established in the literature as the “gold standard” (Herschell et al. 2010): an active 2-day training that included behavioral rehearsal, followed by monthly consultation calls over 7 months of follow-up. Behavioral rehearsal was built into the training by having clinicians break into pairs to read scripts of patient concerns, use the training materials to select and sequence practices, and role-play those practices, with time allotted for trainer feedback, questions, and discussion. Consultation calls were led by two faculty members with extensive experience delivering modular CBT approaches in schools; the calls provided expert and peer feedback on case-specific questions using MCE materials. The MCE Basic condition included components commonly used in professional development initiatives to implement EBPs in public mental health settings: a 3-h didactic orientation and materials, but no in-depth training, behavioral rehearsal, or ongoing consultation support.

Because of a school district initiative to offer an early intervention EBP to 6th grade students at risk for negative behavioral health outcomes, 36 of the 80 clinicians were preselected to receive LifeSkills training (Botvin et al. 2006). LifeSkills is a universal, school-based preventive intervention designed to address individual-level risk factors for youth violence and substance abuse. This condition served as a comparison group because its training method did not include ongoing consultation, yet the intervention is evidence-based and uses a cognitive-behavioral approach (Botvin et al. 2000, 2006). However, as with any implementation initiative in “real world” settings, some group contamination occurred: at 8-month follow-up, 19 (53%) clinicians who received LifeSkills training reported having heard about MCE, and 9 (25%) reported some exposure to MCE training and resources.

Design

A sequential mixed-methods design was used to obtain a comprehensive understanding of the training, individual clinician, organizational, and school context factors associated with clinicians’ subsequent adoption and implementation (use) of these EBPs. Quantitative data collection focused primarily on evaluating the impact of the two MCE training conditions on 44 CP-SBH clinicians’ attitudes toward, knowledge of, and practices related to a modular approach to EBPs. Secondary quantitative analyses were also conducted for the 36 LifeSkills training group clinicians to explore whether results might be related to the specific EBP. Qualitative interviews were conducted at the end of the school year with a subset of the sample (N = 17 CP-SBH clinicians) to better understand, from the individual clinicians’ perspectives, the individual clinician, organizational, and school context factors associated with the process of EBP adoption and implementation. In other words, the mixed methodology was sequential, with quantitative data as the primary method (i.e., QUAN → qual), and served the function of complementarity: qualitative data were embedded, or nested, to understand the context of adoption and implementation within the broader quantitative study of implementation outcomes (Palinkas et al. 2011).

Quantitative Methods

Procedure

Self-report measures were collected from clinicians in all training conditions (MCE Basic, MCE Plus, LifeSkills) at three time points, including baseline (prior to training, at the beginning of the school year), 1-month, and 8-month follow-up (end of school year).

Participants

Nearly all participants were master’s-level clinicians (97%) from fields including social work (63%), counseling psychology (20%), clinical psychology (7%), and school counseling/psychology (6%), among others. Participants were mostly female (80%), with a median age of 30 years. Using non-mutually exclusive race categories, approximately 69% identified as Caucasian and approximately 32% as African-American. On the baseline survey, some clinicians reported previous familiarity with MCE (N = 23, 28%), but only 9 (11%) had previously received formal training. Experience in children’s mental health care ranged from 1 to 20 years. All clinicians worked in a public, urban school system and were employed by one of five community mental health agencies.

Agencies were coded A–E. All were community-based outpatient mental health clinics that provide school-based individual, group, and family psychosocial treatment to students aged 4–18 years, as well as mental health prevention and early intervention services. All five agencies offer the same model of school-based services, based on a common memorandum of understanding with the local behavioral health agency and on consistent policies and procedures established with the school district. The school behavioral health network in which they participate also provides professional development opportunities such as those included in this study. All agencies provide fee-for-service treatment to primarily low-income, publicly insured students and their families. According to a recent school district profile, students are 79% Black/African-American, 10% Hispanic/Latino, 8% White/Caucasian, and 2% other race/ethnicity (Baltimore City Public Schools 2018). The chronic absence rate is 30.1%, and in the 2016–2017 school year only about 15% of students met or exceeded expectations in Mathematics or English Language Arts (Baltimore City Public Schools 2018).

Table 1 displays distribution of clinicians by training group and agency.

Measurement

All 80 participants completed self-report measures about their familiarity with and use of practice elements, knowledge of EBPs and attitudes toward EBPs.

Practice Element Familiarity and Use

The Practice Elements Checklist (Stephan et al. 2012) is intended to capture self-reported familiarity with and use of the common elements for four disorder areas (i.e., attention-deficit/hyperactivity disorder, depression, disruptive behavior, and anxiety) addressed in PracticeWise training and the MATCH-ADTC (Chorpita and Weisz 2009) resource materials. On a six-point Likert scale, respondents rated their familiarity with (1 = “none” to 6 = “significant”) and use of (1 = “never” to 6 = “frequently”) each practice element. Mean scores for familiarity and use were calculated separately. Internal consistency for each subscale in this sample was excellent, ranging from α = 0.90 to 0.96. At 8-month follow-up, clinicians were also asked to rate, on a 4-point Likert scale, how frequently they used the practice element materials provided.
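The two computations described for this checklist, a per-clinician mean rating for each subscale and Cronbach’s α for internal consistency, can be sketched as below. This is a minimal illustration, not the instrument’s official scoring syntax: it assumes complete responses in a clinicians × practice elements matrix, and the function names are hypothetical. α follows the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of total scores).

```python
from statistics import fmean, variance
from typing import List, Sequence


def subscale_means(ratings: Sequence[Sequence[float]]) -> List[float]:
    """Mean 1-6 rating per clinician (one row per clinician, one column per element)."""
    return [fmean(row) for row in ratings]


def cronbach_alpha(ratings: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix with no missing data."""
    k = len(ratings[0])
    if k < 2 or len(ratings) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item (column) across respondents.
    item_vars = [variance([row[j] for row in ratings]) for j in range(k)]
    # Sample variance of each respondent's summed score.
    total_var = variance([sum(row) for row in ratings])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)
```

Familiarity and use would be scored by calling these functions separately on the two rating matrices, mirroring the separate subscale means described above.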

Attitudes Toward EBP

The Evidence-Based Practice Attitude Scale (EBPAS; Aarons 2004) is a 15-item self-report measure of therapists’ attitudes toward adopting new types of psychotherapy techniques and manualized treatments. Response options range from 0 (“Not at All”) to 4 (“To a Very Great Extent”). Mean total scores were used. The EBPAS has considerable psychometric strengths in terms of both construct validity and reliability (Cronbach’s α = 0.76 for the total score, with subscale alphas ranging from 0.67 to 0.91; Aarons et al. 2010). The EBPAS has been used extensively to capture practitioner attitudes toward EBPs (Borntrager et al. 2009; Gioia 2007; Lim et al. 2012; Nakamura et al. 2011; Pignotti and Thyer 2009) and is regarded as one of the first standardized, reliable measures of this construct (Beidas and Kendall 2010).

Knowledge

The Knowledge of Evidence-Based Services Questionnaire (KEBSQ; Stumpf et al. 2009) is a 40-item self-report measure of provider knowledge of psychotherapy techniques found in evidence-supported and evidence-unsupported protocols for four common presenting problems among youth (i.e., anxious/avoidant, depressed/withdrawn, disruptive behavior, and attention/hyperactivity). Each of the 40 items describes a specific therapeutic technique without naming it, and some of the techniques listed are not evidence-based. For each item, the respondent indicates the problem areas for which the technique is evidence-based. Total scores range from 0 to 160. Items and scoring criteria were developed from two comprehensive reviews of practice components in the treatment literature: the Hawaii CAMHD Biennial Report (Child and Adolescent Mental Health Division 2004) and the Hawaii CAMHD Annual Evaluation Report (Daleiden et al. 2004). Preliminary studies of the KEBSQ indicate adequate psychometric properties, including a 2-week test–retest reliability of 0.56 and sensitivity to change following training (p < .001) (Stumpf et al. 2009).
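The 0–160 range is consistent with scoring each of the 40 items against all four problem areas, awarding one point per area on which the respondent’s endorse/not-endorse decision matches the answer key (40 × 4 = 160). The sketch below assumes that scoring rule; the shortened area labels and function names are hypothetical, and the actual answer key derived from the CAMHD reviews is not reproduced here, so any key passed in is illustrative only.

```python
from typing import AbstractSet, Sequence

# Hypothetical short labels for the four KEBSQ problem areas.
PROBLEM_AREAS = ("anxious", "depressed", "disruptive", "attention")


def score_item(endorsed: AbstractSet[str], key: AbstractSet[str]) -> int:
    """One point per problem area where the respondent's choice matches the key."""
    return sum((area in endorsed) == (area in key) for area in PROBLEM_AREAS)


def kebsq_total(
    responses: Sequence[AbstractSet[str]], answer_key: Sequence[AbstractSet[str]]
) -> int:
    """Sum item scores; with 40 items and 4 areas the range is 0-160."""
    if len(responses) != len(answer_key):
        raise ValueError("one response set is needed per key item")
    return sum(score_item(r, k) for r, k in zip(responses, answer_key))
```

Under this rule, correctly leaving an area unendorsed earns credit just as correctly endorsing one does, which is how techniques that are not evidence-based contribute to the total.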

Analyses

Prior to conducting analyses, one-way ANOVAs revealed no significant differences among the three training conditions in gender, race, professional discipline, degree type, years of experience, knowledge scores (KEBSQ), attitude scores (the EBPAS total score and all four subscales were tested), or familiarity with or use of common elements (PEC-R). Repeated measures analysis of variance (RM ANOVA) was used to examine within-group effects of time as well as between-group differences for the two MCE conditions. Dependent variables were attitudes toward, knowledge of, and use of and familiarity with evidence-based practices at all three time points. A separate RM ANOVA was used to analyze the LifeSkills condition because assignment to that group was not controlled by the study design.
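To illustrate the within-group (time) component of an RM ANOVA, the sketch below computes the one-way repeated measures F statistic and partial η² from a clinicians × time points matrix (baseline, 1-month, 8-month). It is a simplified sketch under stated assumptions: complete data at every time point, no between-group factor (the MCE Basic vs. MCE Plus comparison is omitted), no sphericity correction, and the assumption that the reported effect size is partial η². It does not reproduce the study’s software or results. A p-value could then be obtained from the F distribution, for example with `scipy.stats.f.sf(F, df_time, df_error)`.

```python
from statistics import fmean
from typing import Sequence, Tuple


def rm_anova_time(scores: Sequence[Sequence[float]]) -> Tuple[float, int, int, float]:
    """One-way repeated measures ANOVA for the effect of time.

    `scores` has one row per clinician and one column per time point.
    Returns (F, df_time, df_error, partial eta squared).
    """
    n, k = len(scores), len(scores[0])
    if n < 2 or k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need a complete matrix with at least 2 rows and 2 columns")
    grand = fmean(x for row in scores for x in row)
    time_means = [fmean(row[j] for row in scores) for j in range(k)]
    subject_means = [fmean(row) for row in scores]
    # Partition total variability into time, subject, and residual (error) parts.
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_time = n * sum((m - grand) ** 2 for m in time_means)
    ss_subject = k * sum((m - grand) ** 2 for m in subject_means)
    ss_error = ss_total - ss_time - ss_subject
    df_time, df_error = k - 1, (k - 1) * (n - 1)
    f_stat = (ss_time / df_time) / (ss_error / df_error)
    partial_eta_sq = ss_time / (ss_time + ss_error)
    return f_stat, df_time, df_error, partial_eta_sq
```

Removing between-subject variability (`ss_subject`) from the error term is what distinguishes the repeated measures design from a between-groups ANOVA on the same scores.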

Qualitative Methods

Procedure

Qualitative interview participants were randomly selected, with replacement, until five clinicians within each of the three training conditions agreed to participate. The projected sample size of 15 clinicians was reached after 8 rounds of random selection, which together drew 44 clinicians, resulting in a 34% response rate (15 of 44). Of the 29 clinicians who were selected but not interviewed, one declined participation, five no longer worked at their agency, seven had undeliverable email addresses, and 16 did not reply to the email before the stated deadline. Two additional interviews were conducted with clinicians who independently contacted the first author to volunteer, resulting in a final sample of 17 clinicians. We found no significant differences between the 17 participants and those who declined to be interviewed in demographic characteristics, attitudes, knowledge, or familiarity with or use of practice elements. Clinicians were invited via email to participate in a 20–30 min individual, semi-structured interview to provide additional feedback on their experience implementing the EBP materials. Written consent was obtained, and participants received $25 in compensation. All qualitative interviews were audio-recorded on two devices to ensure data integrity. Interview data were transcribed, checked for accuracy, and systematically analyzed in Atlas.ti (Version 7) using a four-stage grounded theory approach (Charmaz 2014). Interviews averaged 34.2 min (median = 30.4, range 16.6–57.0).
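The selection procedure resembles quota-based random sampling, which can be sketched as follows. This is a simplified illustration, not the study’s actual procedure: it assumes that “with replacement” means each non-responding clinician is replaced by a further random draw from the same training condition (so no clinician is invited twice), it draws one clinician at a time rather than in rounds, and it uses a hypothetical `responds` callback to stand in for whether an invited clinician agreed.

```python
import random
from typing import Callable, Dict, List, Tuple


def quota_sample(
    pools: Dict[str, List[int]],
    responds: Callable[[int], bool],
    quota: int = 5,
    seed: int = 0,
) -> Tuple[Dict[str, List[int]], List[int], float]:
    """Randomly invite clinicians per condition until `quota` agree.

    Returns (enrolled per condition, everyone invited, response rate).
    """
    rng = random.Random(seed)
    invited: List[int] = []
    enrolled: Dict[str, List[int]] = {c: [] for c in pools}
    for condition, ids in pools.items():
        order = list(ids)
        rng.shuffle(order)  # random invitation order within the condition
        for cid in order:
            if len(enrolled[condition]) >= quota:
                break  # quota met; stop inviting from this condition
            invited.append(cid)
            if responds(cid):
                enrolled[condition].append(cid)
    n_enrolled = sum(len(v) for v in enrolled.values())
    rate = n_enrolled / len(invited) if invited else 0.0
    return enrolled, invited, rate
```

The response rate here is simply enrolled divided by invited, the same ratio as 15 of 44 in the text.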

Participants

The 17 interviewees represented all five agencies and three training conditions within the sample. The proportions of demographic and individual characteristics among clinicians interviewed almost identically mirrored those of the larger sample.

Measurement

A semi-structured interview protocol with open-ended questions and follow-up probes was developed, pilot tested, and revised to elicit clinicians’ beliefs, attitudes, and other relevant factors that play a role in their decision-making and clinical practice related to adopting and implementing new EBPs. Questions were developed to probe aspects of the quantitative measurement that would benefit from elaboration or a more in-depth understanding of implementation processes. For instance, although the PEC shows which practice elements clinicians are currently using, it does not indicate other interventions they may be using instead of, or in addition to, those covered by the training provided. Similarly, the EBPAS captures attitudes toward EBPs and manualized treatment in general, whereas the interviews were intended to shed light on clinician attitudes toward the specific EBP training, materials, and professional development activities received as part of this study.

Analyses

Grounded theory was used to develop a unified understanding of how the processes of adoption and implementation of EBPs operate within a group of school behavioral health clinicians, based on their experiences and perspectives. Consistent with a constructionist approach to grounded theory, the adoption literature (e.g., Rogers’ theory) was used as a resource, while efforts were made not to force this prior knowledge onto the data. The analysis team consisted of a qualitative researcher, a graduate student investigator, and a research assistant, who received input on codes from the project’s two principal investigators. Initial, provisional codes (N = 210) were identified by staying close to the data and coding for actions, with components of Rogers’ theory serving as sensitizing concepts (Charmaz 2014). Next, the open codes were closely examined to develop 30 focused codes, which were then organized and collapsed into four main theoretical codes (i.e., adoption process, implementation, clinician experience, and school mental health) through a series of iterations and review of memos from all phases of analysis. For instance, the initial codes “feeling overwhelmed”, “lack of time for treatment planning”, and “caseload” were collapsed into a focused code named “demands”, one of six focused codes grouped under the “clinician experience” theoretical code. The final codebook (which retained the four primary theoretical codes but was further defined and expanded to include 48 focused codes) was applied to all 17 interviews. Seven transcripts were coded by two coders, followed by consensus meetings. The remaining ten transcripts were coded independently by the graduate student investigator, whose inter-coder reliability against the consensus-coded transcripts was approximately κ = 0.79.
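The reported κ is presumably Cohen’s kappa, which corrects observed agreement between two coders for the agreement expected by chance from each coder’s label frequencies: κ = (p_o − p_e)/(1 − p_e). A minimal sketch follows; it assumes each coded segment is one unit and each coder assigns a single label per unit (for example, whether a given focused code applies), which is a simplification of how agreement may be computed in Atlas.ti.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(coder_a: Sequence[Hashable], coder_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two coders labelling the same units."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("coders must label the same, non-empty set of units")
    n = len(coder_a)
    # Observed agreement: share of units given the same label by both coders.
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement from each coder's marginal label frequencies.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    p_e = sum(freq_a[k] * freq_b[k] for k in freq_a.keys() | freq_b.keys()) / n**2
    if p_e == 1.0:
        return 1.0  # both coders used one identical label throughout
    return (p_o - p_e) / (1.0 - p_e)
```

For example, two coders who agree on 8 of 10 segments, each applying a code to half of them, obtain κ = (0.8 − 0.5)/(1 − 0.5) = 0.6, noticeably lower than the raw 80% agreement.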

Once all focused codes had been applied to the 17 transcripts in Atlas.ti, and certain transcripts and codes had been iteratively recoded (i.e., the consistency of each code’s application across interviews was double-checked through the constant comparison of data emphasized by Charmaz (2014) and McHatton (2009)), the four theoretical codes were re-evaluated. By examining the frequency of code use and reviewing coded quotations, focused codes were assigned to theoretical codes, or “families”, in Atlas.ti. Cross-filing each focused code under more than one theoretical code was also considered. Next, the quotations for each code within each family were thoroughly reviewed. Memos from all phases of analysis were reviewed, and memo-writing continued for each focused and theoretical code. Memo-writing is considered a critical step between data collection and writing results because it facilitates analysis by generating the comparisons, connections, questions, and directions that lead to the unified grounded theory under study (Charmaz 2014). For instance, a memo written early in the coding process noted that “the compatibility code is the most-frequently used, and thus may be too broad”, which led to several types of compatibility codes in the final codebook; a later memo stated that “the function of adaptation may be explained by perceived incompatibility with students, families, or the school setting, which necessitates changes to the materials; review cross-codings with adaptation to understand other potential mechanisms”. This example illustrates the importance of memo-writing for making constant comparisons, staying close to the data, and maintaining a critical lens on how clinicians’ personal and shared processes of adoption and implementation operate.
Finally, memo-writing informed the results described in the text, as well as the graphic representation of the theory that this process uncovered for this group of clinicians. The latter corresponds to axial coding, which is not a coding procedure applied to the text but rather the reassembly of categories and subcategories of data into a unified framework (Charmaz 2014).

Results

Quantitative Results

Familiarity with Practice Elements

MCE-trained clinicians reported significantly greater familiarity with practice elements over time (F(2, 42) = 4.01, p < .05, η² = 0.09). However, there were no differences based on training condition. Familiarity with practice elements did not change over time for the LifeSkills-trained clinicians (F(2, 35) = 1.03, p = .36, η² = 0.03).

Use of Practice Elements

There was no significant effect of time (F(2, 42) = 1.60, p = .21, η² = 0.04) or training condition (F(2, 42) = 3.04, p = .053, η² = 0.07) on self-reported use of practice elements among MCE-trained clinicians. LifeSkills clinicians also did not report a significant change in use over time (F(2, 35) = 2.31, p = .11, η² = 0.06). Among the 32 MCE-trained clinicians who answered follow-up questions about their use of MCE at 8-month follow-up, 10 (31%) reported that they “never” or “rarely” used the MATCH-ADTC manual, 15 (47%) reported “sometimes” using the manual, and 7 (22%) reported that they “often” or “always” used it. However, these clinicians reported much lower rates of using the PracticeWise online resources, including the database of best practices: 15 (47%) reported that they “never” used the online resources, 12 (38%) reported “rarely” using them, 4 (13%) reported using them “sometimes”, and only 1 reported using them “often”. Ten clinicians (31%) reported that they never signed on to activate their online account. Additional follow-up questions about the perceived feasibility and effectiveness of MCE materials indicated that 19 (59%) clinicians found it “a little” or “somewhat” feasible to implement MCE, and 23 (72%) reported that MCE materials were “effective” or “very effective” for clients with whom they used any aspect of MCE.

Attitudes

Clinician self-reported attitudes toward evidence-based practice did not change over time for either MCE-trained clinicians (F(2, 42) = 0.77, p = .47, η² = 0.02) or LifeSkills-trained clinicians (F(2, 35) = 0.39, p = .68, η² = 0.01), nor were there between-group differences between the MCE Basic and MCE Plus conditions (F(2, 42) = 0.12, p = .89, η² = 0.003). A follow-up analysis revealed that baseline openness to EBPs (EBPAS Openness subscale) was positively related to use of practice elements at follow-up (r = .311, p < .01).

Knowledge

EBP knowledge increased significantly over time for MCE-trained clinicians (F(2, 42) = 4.76, p = .01, η² = 0.10). No significant between-group effects of MCE training condition were detected (F(2, 42) = 0.51, p = .60, η² = 0.01). EBP knowledge also increased for the LifeSkills-trained clinicians (F(2, 35) = 10.36, p < .001, η² = 0.23). Effect sizes for knowledge gains over time were moderate to large for all clinicians.

Qualitative Results

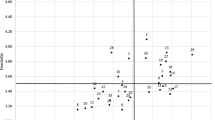

Four predominant theoretical codes emerged from the data: (1) school behavioral health, (2) clinician experience, (3) adoption, and (4) implementation. Axial coding was used to reassemble these categories in a meaningful way to describe clinicians’ perspectives, resulting in an initial grounded theory model (see Fig. 1). In this model, called the Framework for Clinical Level Adoption and Implementation of EBPs in CP-SBH, adoption processes influence implementation processes. Both processes are embedded in, and influenced by, the clinician’s individual experiences and the school behavioral health service delivery setting. School behavioral health includes factors unique to clinical work and implementation in urban public schools, such as the school setting and culture; clinicians’ partnerships with students, parents, teachers, and administrators; and access to resources that support their clinical work and professional development. Within the school behavioral health context, individual clinician experiences were shaped by perceived organizational support from their agency, the degree of graduate training they received related to EBPs, years of experience, the demands of clinical work, and peer support. Clinician experience essentially comprises the past and current factors that make up one’s day-to-day professional experience providing direct care. (Of note, individual clinician characteristics also directly influence the individual clinician’s decision to adopt an EBP.) Thus, any EBP is adopted and implemented within both the clinician’s individual experience (shaped by their training background, current organization, demands of clinical work, etc.) and the setting in which they work (i.e., schools). For this reason, contextual factors related to adoption and implementation were analyzed for the entire sample of 17 clinicians, with feedback from LifeSkills and MCE clinicians combined. Pseudonyms are used in place of actual participant names.

Adoption refers to why an innovation (i.e., an intervention or EBP) is put to use by an individual practitioner. It can be regarded as the clinician’s decision-making process, upon exposure to an EBP, about whether to adopt it into their practice. In other words, adoption can be thought of as individual “uptake” of a specific EBP. Implementation refers to how an innovation (i.e., an intervention or EBP) is used by an individual practitioner, and how that work proceeds over time.

Adoption

Although Rogers’ factors of adoption were not specifically queried in the interview protocol, in order to facilitate a truly inductive understanding of adoption, the study team coded for these factors as they arose. Compatibility with the specific setting and population served, and perceived relative advantage over the resources the clinician already used, were prominent in the data. Fourteen (82%) of the clinicians interviewed talked about the importance of the compatibility of EBPs with the students and families served, the school setting, and their own theoretical orientation. Eleven (65%) talked about the importance of the EBP’s relative advantage over their current practice techniques and experience. By contrast, complexity (i.e., how complicated the innovation or EBP is), trialability (i.e., the ability to pilot test or explore the EBP before deciding to adopt it), and observability (i.e., seeing peers or colleagues use the EBP, or awareness that others are using it) were less prominent: four clinicians (24%) mentioned complexity, two (12%) mentioned trialability, and one (6%) mentioned observability, and these comments arose more in the context of actually implementing or using the materials than in the decision to adopt the EBP. The low frequency of comments on trialability and observability is logical: MCE was mandated professional development provided without clinician input (precluding opportunities for trialability to inform the decision to adopt), and school settings may afford less inter-clinician observability of practices than clinic-based settings. In addition to Rogers’ factors of adoption, participants reported that individual clinician characteristics, such as openness to and interest in evidence-based interventions, were related to a clinician’s decision to adopt aspects of an EBP into their daily practice. The perceived flexibility of materials to complement clinical judgment was also discussed as an important factor.
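The percentages in the preceding paragraph are simple proportions of the interviewed sample. A minimal sketch of that arithmetic follows, assuming the denominator is the full sample of 17 interviewed clinicians; the dictionary and function names are illustrative only and are not part of the study’s analysis.

```python
# Counts of interviewed clinicians who raised each of Rogers' factors of adoption.
# Assumption: the denominator is the full interview sample (N = 17).
# Names below are hypothetical and used only for illustration.
N_INTERVIEWED = 17

mentions = {
    "compatibility": 14,
    "relative advantage": 11,
    "complexity": 4,
    "trialability": 2,
    "observability": 1,
}


def percent_of_sample(count: int, n: int = N_INTERVIEWED) -> int:
    """Return count / n as a percentage rounded to the nearest whole number."""
    return round(100 * count / n)


for factor, count in mentions.items():
    print(f"{factor}: {count}/{N_INTERVIEWED} = {percent_of_sample(count)}%")
```

With 17 interviewees as the denominator, 14 and 11 mentions correspond to 82% and 65%, respectively; the smaller counts round to 24%, 12%, and 6%.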

Compatibility with School Setting

The extent to which the materials and overall approach of an EBP are compatible with, or fit appropriately within, different aspects of school behavioral health emerged as the most frequently discussed topic in the interviews. Several clinicians noted that standardized materials were somewhat incompatible with students who “are just in chaos constantly,” especially in the school setting where “a lot of crisis [management] goes on throughout the day.” In addition, students’ unmet basic needs and family system difficulties may render active treatment interventions less feasible. A third aspect of compatibility with students and families relates to developmental appropriateness and how engaging the materials are for students. For instance, some of the MCE modules were reportedly “juvenile for the high school population” (Harry) and “expecting the family to be the primary system which isn’t super developmentally appropriate as kids get older and the peer system becomes more predominant” (Carey). Parent-directed materials, particularly for the disruptive behavior disorder treatment components, were regarded by some clinicians as a poor fit for the school environment, but others noted that “the handouts for parents were really helpful” and reported adapting them for use with teachers as needed. Finally, the LifeSkills curriculum was described as needing more activities to engage students and make the material less “dry”.

Relative Advantage

The relative advantage of the EBPs in question varied by clinician. For every clinician who denied that the materials added anything unique to their existing practice, another reported seeing some advantages. For instance, John noted that the CE manual offered some new material alongside material he was already familiar with, but overall “the way that it was so scripted was unique about it…I thought it was a neat idea for someone who was brand new to doing it [therapy]”. Carey concurred:

I really do think there’s a lot of valuable things, and there’s things that I’ve taken from it that I didn’t used to talk about before. Or certain things, like I feel like now I talk a lot more with clients about false alarms versus true alarms, there’s things that I really like.

Clinician Characteristics

Most of the clinicians interviewed talked about individual differences, either in themselves or in fellow clinicians, that relate to the adoption process. These include clinicians’ general openness to and interest in training and new interventions, as well as their tendency to seek evidence for innovations. Clinicians with a range of years of experience expressed openness to professional development (e.g., Ken stated, “Remaining teachable is the big thing…you can call me an expert if you want, but if I’m not willing to learn from whoever I’m talking to, then I’ve lost everything,” and Carey said, “I’m excited about new things all the time…I think it seems silly not to use the resources to me. I would use any resource; I would go to any training.”). This openness was regarded as a positive quality, even a necessity, for clinical work. For others, licensure requirements and the need for continuing education units drive openness to training in a practical sense. Relatedly, participants reported a range of values and attitudes pertaining to EBPs, the evidence-based practice movement, and individual tendencies to seek evidence, which are directly relevant to their decision to adopt a specific practice. Clinicians’ perceptions of an intervention’s ecological validity or effectiveness, and of its flexibility and room for clinical judgment, may also predict adoption. Clinicians in the current sample often raised questions or reported feeling “a little skeptical” about whether the EBP had been “styled for” or based on effectiveness research within an urban, public school sample of predominantly African-American children. There were specific concerns about the practice materials being out of date or not optimally relevant to the everyday experiences of urban youth (e.g., LifeSkills materials about asking someone on a “date,” driving a car, etc.).
Finally, individual clinician perspectives about the flexibility of an EBP, and how much room it allows for clinical judgment, were noted as an individual characteristic influencing adoption. Some clinicians expressed hesitance to “rely on” any intervention that is “very scripted” or “automated,” whereas others thought structured materials were a “good guide post,” even for a “more advanced clinician,” to check that all aspects of a skill or intervention are covered, because clinical work inherently involves “so many things you’re balancing.”

Implementation

Implementation-oriented themes reflect the process of implementation, which was defined based on Rogers’ (2003) innovation-decision process, describing how an individual proceeds when presented with a new innovation (or, in this case, an EBP) to consider. This definition is also consistent with the more current Exploration, Adoption/Preparation, Implementation, Sustainment (EPIS) model (Aarons et al. 2011). Implementation follows the decision stage, in which the individual decides to adopt or reject the innovation. The following themes discuss how the EBP is put to use by a clinician and how that work unfolds over time.

Materials

Clinicians in the sample largely preferred hard-copy to online materials. Barriers to using online materials included lack of time to learn how to navigate and use the site and lack of internet and/or printer access in the schools. Hard-copy materials were cited as more feasible because clinicians (usually) had access to a photocopier in the schools, and because the fast pace of the school setting and impromptu sessions lend themselves to pulling a worksheet straight from a file in the office. However, when asked whether an online component should be offered to clinicians in the future, several participants reported valuing the option to use hard-copy or online materials and recommended that both be offered. Many clinicians who reported using the online materials rarely or not at all stated that this was simply their personal preference, which they were reluctant to generalize to their peers. Also of note, general attitudes about EBP manuals were queried during the interviews, but the responses often did not differentiate attitudes toward other manualized interventions from attitudes toward CE as a modular approach. Thus, attitudes toward manuals are discussed throughout this section where relevant, including in relation to supplementing manuals with other resources.

Training Received

Clinicians typically reported that their agencies strongly encouraged or mandated their attendance at the trainings scheduled and required by the behavioral health system. Additionally, some agencies provided ongoing implementation supports, such as supervision, for the EBPs in which clinicians were trained. Interviewees were specifically asked to provide feedback on the trainer, format, length, and content of the training they received. All trainers reportedly had an “engaging style” and had “worked with kids in urban areas which is helpful”. Overall, clinicians’ preferences for training format and length were highly variable: some clinicians in the 2-day training condition would have preferred a shorter training, and some in the orientation-only condition would have preferred a longer one. Reactions to behavioral rehearsal were also mixed, with some clinicians enjoying the role plays and others specifically noting that they did not enjoy them or find them useful.

Reach and Use

How applicable the EBPs were to a clinician’s entire caseload (reach) and their feasibility of use were specifically queried in the interview protocol. In terms of use, clinicians were split, with half reporting little to no use of the materials and the other half reporting some to frequent use. There was no clear pattern between the MCE Basic and Plus conditions; that is, based on qualitative feedback, consultation calls did not appear to be associated with greater use.

Consultation and Supervision

Feedback about consultation calls was specifically queried among the five MCE Plus clinicians who were interviewed. Three were very satisfied and found the calls helpful, one did not find them helpful, and one was ambivalent. The use of EBPs in supervision was not specifically queried, but a couple of the clinicians who serve as clinical supervisors reported also providing implementation support for MCE informally through supervision. They reported that the materials “made supervision a lot easier” and that they were interested in additional professional development “specifically on how you would use this as a supervisor”.

Adaptation

Specific methods for tailoring the intervention (changing or adapting aspects modestly or greatly) were reported by nearly all clinicians and discussed at length during many interviews. Some clinicians referred to the need to “change things a little bit” with any EBP materials, particularly manualized EBPs. One clinician said, “I think [manuals are] helpful, but to the point where you have to adapt them to work for the family. A lot of things don’t fit; you have to change things a little bit.” The types of adaptations reported seemed to maintain general fidelity to the treatment approach, but with specific changes to fit the broad age range, cognitive development, and general school setting within school behavioral health.

Use of Supplemental Resources. Most clinicians provided some commentary regarding their use of supplemental treatment resources in addition to EBP materials. As noted above, some clinicians indicated that no intervention was used as the sole treatment modality for their entire caseload, reflecting an eclectic approach to treatment in general among school behavioral health clinicians sampled.

Individualized Clinical Care. A code that emerged from approximately half of the clinicians’ data addresses other factors important for providing high-quality, individualized clinical care, including assessing family readiness for change, family or student engagement, the developmental stage of the child, and school setting-specific treatment (e.g., “like their relationship with the school, and what [the parent’s] schooling experience was like”). Two clinicians discussed the “danger” of being ready with active intervention techniques without some of this other information (reportedly a common misstep among less experienced clinicians and trainees). One quote that reflects this general perspective, that active treatment techniques must be appropriately individualized, is: “…Families are all individual; you can’t make a handout that’s going to work for everybody.” Notably, Harry mentioned: “…There are basic tenets of life that we need to remember; you don’t wear the same shirt every day, you don’t wear the same underwear every day, and if you do there are consequences…And the thing is, that’s the same thing that applies when it comes to therapy. We don’t do the same thing over and over. It doesn’t work for everyone.” Therefore, even if an EBP is adopted because the clinician is open to learning a new set of techniques, implementation may be inconsistent if the clinician perceives those techniques to be overly scripted, inflexible, or a poor fit with the individual needs and strengths of the patient and their family.

Discussion

High rates of unmet mental health need among children continue to warrant attention from research and practice communities to develop and expand viable solutions that improve access to care. School settings offer tremendous potential to increase access to care for children and their families. Thus, effective implementation efforts to increase school-based clinicians’ use of empirically supported techniques can have significant public health impact. The adoption and implementation of EBPs among community-based mental health providers is critical to advancing the quality of usual-care mental health services.

Current study findings indicate that in a complex service system environment such as CP-SBH, mandated training in EBPs may be associated with increased knowledge of and familiarity with EBPs but may not change clinician practices. Moreover, any effects of ongoing implementation support on adoption and implementation of EBPs are embedded in the context of numerous other multi-level factors. This is consistent with extant literature on EBP implementation in community settings, which suggests that such processes are inherently multi-level, operating at the student, clinician, agency, and broader health care system levels (Landsverk et al. 2018; Langley et al. 2010; Proctor et al. 2009).

One goal of the study was to understand the impact of the degree of implementation support (specifically, extended training with behavioral rehearsal and ongoing consultation calls) on clinicians’ adoption and implementation. However, quantitative analysis of implementation outcomes among the 44 clinicians who were randomly assigned to a modular common elements (MCE) training condition showed no between-condition differences in knowledge, familiarity, or use of EBPs. This finding, that the gold standard of EBP training and ongoing support did not result in practice change, is inconsistent with the literature (Herschell et al. 2010). One implication is the need to continue testing this gold standard of training methods in various implementation efforts in the public mental health system. Without clear evidence that additional training and implementation supports produce new and better clinical practices, mental health clinicians and administrators are unlikely to allocate their limited time and finances to this more expensive and intensive implementation approach. That said, limitations of this particular design (discussed further below) may have introduced too much error to detect significant between-group effects in such a naturalistic study. In addition, clinician feedback made clear that the fit between training condition and clinician was not always optimal given clinicians’ perspectives and preferences; an important future direction may be to study the effectiveness of training modalities matched to clinician preferences compared with randomly assigned modalities.

Another primary goal of this study was to examine individual clinicians’ adoption and implementation of EBPs following mandated training. Quantitative data revealed significant increases in clinician knowledge of and familiarity with EBPs for all training conditions. Main effects on use of and attitudes toward EBPs were not found for any EBP training condition. Of note, baseline total scores on the EBPAS were 2.84 for MCE-trained clinicians and 2.98 for LifeSkills-trained clinicians. Compared with a national sample of 1089 mental health service providers drawn from 100 clinics across 26 states (cf. Aarons et al. 2010), clinicians in this sample reported more positive attitudes toward new therapy techniques, including manualized treatments (large effect size, d = 1.15). This suggests a possible ceiling effect for attitudes at baseline. A related finding was that MCE-trained clinicians self-reported more use of the MATCH-ADTC manual than of the online materials. Clinicians also reported that MCE was feasible and effective for the clients with whom they used the materials.

The primary purpose of the mixed methodology was complementarity: quantitative data were used to evaluate training outcomes, and qualitative interviews were used to elaborate on related questions about the implementation process and context (Palinkas et al. 2011). Interview data and the resulting Framework for Clinical Level Adoption and Implementation of EBPs in CP-SBH offer possible explanations of adoption and implementation processes in the context of CP-SBH service delivery that the quantitative outcome measures were not designed to capture. Qualitative methods were much better suited to understanding how the school behavioral health context and CP-SBH clinician experience affect the adoption and implementation of EBPs, particularly with respect to organizational support and the daily demands of clinicians’ work, which the quantitative measures could not detect.

Perhaps most importantly, we learned that actual use of MCE was quite variable and may in fact be explained by several multi-level factors related to the school setting, agency characteristics, and individual training history and experience, as displayed in Fig. 1. During the coding process, Rogers’ factors of adoption, especially compatibility and relative advantage, were represented in codes mentioned by clinicians. Thus, Rogers’ framework likely offers viable constructs for studying adoption and implementation of EBPs in schools in future studies. However, trialability, observability, and complexity were not often noted. This might be because training was mandated (limiting trialability), clinicians work in their own school settings (possibly limiting observability), and the EBPs were consistent with general cognitive-behavioral and problem-solving skills that are not especially complex. Further study of the application of Rogers’ factors of adoption is warranted to understand adoption decisions specific to various innovations in various service contexts. In addition, the multi-level nature of implementing EBPs, particularly in school settings, highlights the importance of further study of both the inner and outer contexts outlined by the EPIS framework. Clinicians’ experience of organizational support, characteristics such as years of experience and training, the demands of clinical work, and peer support arose in these results as critical inner context factors. Outer context factors of school behavioral health may include the school setting or culture; partnerships with students, parents, teachers, and administrators; and access to resources in the clinician’s school and agency.

Two other qualitative findings are worth noting. First, several clinicians reported that they do not distinguish between modular and manualized approaches; data-driven selection and sequencing of intervention strategies is strongly emphasized in MCE but was not a commonly reported practice in this sample. Second, perspectives on the acceptability and usefulness of ongoing consultation were mixed, suggesting that although the extant literature emphasizes consultation as part of the gold standard of training, additional research is needed to understand how consultation format, content, and delivery fit with various types of clinicians and service settings.

Limitations

In terms of research design, only two of the three training conditions were randomly assigned. This was an artifact of working with highly supportive community partners who agreed to have a subset of their clinicians randomly assigned to training conditions. We were fortunate to be able to include all clinicians in the study and to leverage the LifeSkills training condition as a proxy comparison group. However, LifeSkills includes some cognitive-behavioral sessions, such as teaching coping skills and problem solving, and there was some contamination between the LifeSkills and MCE groups due to the stratification strategy by agency (because clinicians within agencies shared materials). Although the groups may have differed as a function of the EBP, all of the EBPs were novel in the CP-SBH community, and the design was intended to examine implementation outcomes by training condition in the context of the CP-SBH service delivery model. In addition, no quantitative measure of school or mental health organization characteristics was included, although qualitative findings revealed that these factors did influence clinician adoption and implementation. A small subset of clinicians was lost to follow-up due to turnover, but the rate was consistent with what would be expected in the field for a 1-year study. Finally, all dependent measures were self-reported, and psychometric evidence for the PEC-R, a newer measure, is limited.

Conclusion and Future Directions

Within broader school behavioral health implementation research, the CP-SBH service delivery model warrants dedicated implementation research because CP-SBH clinicians work within the dual organizational context of their school and their agency. This poses complex challenges for prospectively measuring organizational factors related to implementation. Attitudes toward one’s school placement and agency are also likely to vary by clinician based on individual preferences, practices, and professional history. Future research is also needed to understand the similarities and differences in EBP implementation across the various service delivery models within SBH (i.e., school-based health centers, school-centered service delivery when community partners are not involved) to inform more precise tailoring of implementation strategies to the clinician experience and school behavioral health context. Rigorous methods for doing so could be adapted to SBH settings from Aarons et al.’s (2016) mixed-methods investigation of the role of organizational factors in EBP sustainment. Future research on SBH should systematically measure the multiple levels of influence on adoption and implementation of EBPs, in addition to testing the differential effectiveness of implementation strategies to support uptake and sustainability of these innovations in school settings.

Notes

This is based on Byrt, Bishop, and Carlin’s prevalence-adjusted kappa statistic (Byrt et al. 1993). An adjusted kappa was used because of differences in the prevalence of “Yes” and “No” categories inherent in the nature of the coding system, which is one of several justifications for an adjusted kappa [i.e., in a 2 × 2 table, the count of cases in which both coders applied no code is very low, if not zero (Hallgren 2012)].
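To illustrate why a prevalence adjustment can matter, the sketch below compares Cohen’s kappa with the prevalence-adjusted, bias-adjusted kappa (PABAK) of Byrt et al. (1993), which for two categories reduces to 2 × observed agreement − 1. The 2 × 2 counts are hypothetical and are not the study’s coding data; the class and method names are likewise illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class AgreementTable:
    """Hypothetical 2 x 2 agreement table for two coders applying one code."""

    both_yes: int     # a: both coders applied the code
    only_coder1: int  # b: coder 1 applied it, coder 2 did not
    only_coder2: int  # c: coder 2 applied it, coder 1 did not
    both_no: int      # d: neither coder applied the code

    @property
    def n(self) -> int:
        return self.both_yes + self.only_coder1 + self.only_coder2 + self.both_no

    def observed_agreement(self) -> float:
        # Proportion of units on which the two coders agree.
        return (self.both_yes + self.both_no) / self.n

    def chance_agreement(self) -> float:
        # Agreement expected from each coder's marginal "Yes"/"No" rates.
        coder1_yes = self.both_yes + self.only_coder1
        coder2_yes = self.both_yes + self.only_coder2
        coder1_no = self.n - coder1_yes
        coder2_no = self.n - coder2_yes
        return (coder1_yes * coder2_yes + coder1_no * coder2_no) / self.n ** 2

    def cohen_kappa(self) -> float:
        # Undefined when chance agreement equals 1 (division by zero).
        p_o, p_e = self.observed_agreement(), self.chance_agreement()
        return (p_o - p_e) / (1 - p_e)

    def pabak(self) -> float:
        # Byrt et al. (1993), two-category case: PABAK = 2 * p_o - 1.
        return 2 * self.observed_agreement() - 1


# Hypothetical skewed table: the "both No" cell is zero, as described in the note.
table = AgreementTable(both_yes=40, only_coder1=3, only_coder2=2, both_no=0)
print(f"observed agreement = {table.observed_agreement():.3f}")  # 0.889
print(f"Cohen's kappa      = {table.cohen_kappa():.3f}")         # -0.056
print(f"PABAK              = {table.pabak():.3f}")               # 0.778
```

In this made-up example the coders agree on about 89% of units, yet Cohen’s kappa is slightly negative because one cell of the table is empty and chance agreement is high; PABAK depends only on observed agreement and so is not distorted by the skewed prevalence.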

References

Aarons, G. A. (2004). Mental health provider attitudes toward adoption of evidence-based practice: The evidence-based practice attitude scale (EBPAS). Mental Health Services Research, 6(2), 61–74.

Aarons, G. A., Glisson, C., Hoagwood, K., Kelleher, K., Landsverk, J., & Cafri, G. (2010). Psychometric properties and US national norms of the evidence-based practice attitude scale (EBPAS). Psychological Assessment, 22(2), 356.

Aarons, G. A., Green, A. E., Trott, E., Willging, C. E., Torres, E. M., Ehrhart, M. G., & Roesch, S. C. (2016). The roles of system and organizational leadership in system-wide evidence-based intervention sustainment: A mixed-method study. Administration and Policy in Mental Health and Mental Health Services Research, 43(6), 991–1008.

Aarons, G. A., Hurlburt, M., & Horwitz, S. M. (2011). Advancing a conceptual model of evidence-based practice implementation in public service sectors. Administration and Policy in Mental Health and Mental Health Services Research, 38(1), 4–23.

Bains, R. M., & Diallo, A. F. (2016). Mental health services in school-based health centers: Systematic review. The Journal of School Nursing, 32(1), 8–19.

Baltimore City Public Schools. (2018). District profile—Spring 2018. Baltimore, MD: Baltimore City Public Schools. Retrieved from http://www.baltimorecityschools.org/cms/lib/MD01001351/Centricity/Domain/8048/999-DistrictProfile.pdf.

Barrett, S., Eber, L., & Weist, M. (2013). Advancing education effectiveness: Interconnecting school mental health and school-wide positive behavior support. Baltimore: Center for School Mental Health.

Beidas, R. S., Edmunds, J. M., Marcus, S. C., & Kendall, P. C. (2012). Training and consultation to promote implementation of an empirically supported treatment: A randomized trial. Psychiatric Services, 63(7), 660–665.

Beidas, R. S., & Kendall, P. C. (2010). Training therapists in evidence-based practice: A critical review of studies from a systems-contextual perspective. Clinical Psychology: Science and Practice, 17(1), 1–30.

Beidas, R. S., Marcus, S., Aarons, G. A., Hoagwood, K. E., Schoenwald, S., Evans, A. C., et al. (2015). Predictors of community therapists’ use of therapy techniques in a large public mental health system. JAMA Pediatrics, 169(4), 374–382.

Beidas, R. S., Stewart, R. E., Adams, D. R., Fernandez, T., Lustbader, S., Powell, B. J., et al. (2016). A multi-level examination of stakeholder perspectives of implementation of evidence-based practices in a large urban publicly-funded mental health system. Administration and Policy in Mental Health and Mental Health Services Research, 43(6), 893–908.

Borntrager, C. F., Chorpita, B. F., Higa-McMillan, C., & Weisz, J. R. (2009). Provider attitudes toward evidence-based practices: Are the concerns with the evidence or with the manuals? Psychiatric Services, 60(5), 677–681.

Botvin, G. J., Griffin, K. W., Diaz, T., Scheier, L. M., Williams, C., & Epstein, J. A. (2000). Preventing illicit drug use in adolescents: Long-term follow-up data from a randomized control trial of a school population. Addictive Behaviors, 25(5), 769–774.

Botvin, G. J., Griffin, K. W., & Nichols, T. D. (2006). Preventing youth violence and delinquency through a universal school-based prevention approach. Prevention Science, 7(4), 403–408.

Brimhall, K. C., Fenwick, K., Farahnak, L. R., Hurlburt, M. S., Roesch, S. C., & Aarons, G. A. (2016). Leadership, organizational climate, and perceived burden of evidence-based practice in mental health services. Administration and Policy in Mental Health and Mental Health Services Research, 43(5), 629–639.

Bringewatt, E. H., & Gershoff, E. T. (2010). Falling through the cracks: Gaps and barriers in the mental health system for America’s disadvantaged children. Children & Youth Services Review, 32(10), 1291–1299.

Burns, B. J., & Costello, E. J. (1995). Children’s mental health service use across service sectors. Health Affairs, 14(3), 147.

Byrt, T., Bishop, J., & Carlin, J. B. (1993). Bias, prevalence and kappa. Journal of Clinical Epidemiology, 46(5), 423–429.

Charmaz, K. (2014). Constructing grounded theory. Thousand Oaks: Sage.

Child and Adolescent Mental Health Division. (2004). Evidence-based services committee: 2004 biennial report, summary of effective interventions for youth with behavioral and emotional needs. Honolulu: Hawaii Department of Health, Child and Adolescent Mental Health Division.

Chorpita, B. F., Daleiden, E. L., & Weisz, J. R. (2005). Identifying and selecting the common elements of evidence-based interventions: A distillation and matching model. Mental Health Services Research, 7(1), 5–20.

Chorpita, B. F., & Weisz, J. R. (2009). Modular approach to therapy for children with anxiety, depression, trauma, or conduct problems (MATCH-ADTC). Satellite Beach: PracticeWise, LLC.

Connors, E. H., Stephan, S. H., Lever, N., Ereshefsky, S., Mosby, A., & Bohnenkamp, J. (2016). A national initiative to advance school mental health performance measurement in the US. Advances in School Mental Health Promotion, 9(1), 50–69.

Daleiden, E., Lee, J., & Tolman, R. (2004). Annual evaluation report: Fiscal year 2004. Honolulu: Hawaii Department of Health, Child and Adolescent Mental Health Division.

Damschroder, L. J., Aron, D. C., Keith, R. E., Kirsh, S. R., Alexander, J. A., & Lowery, J. C. (2009). Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science, 4(1), 50.

Flaherty, L. T., & Weist, M. D. (1999). School-based mental health services: The Baltimore models. Psychology in the Schools, 36(5), 379–389.

Flaherty, L. T., Weist, M. D., & Warner, B. S. (1996). School-based mental health services in the United States: History, current models and needs. Community Mental Health Journal, 32(4), 341–352.

Forman, S. G. (2015). Implementation of mental health programs in schools: A change agent’s guide. Washington, DC: American Psychological Association.

Foster, S., Rollefson, M., Doksum, T., Noonan, D., Robinson, G., & Teich, J. (2005). School mental health services in the United States, 2002–2003. Washington, DC: Substance Abuse and Mental Health Services Administration.

Franklin, C., & Hopson, L. M. (2007). Facilitating the use of evidence-based practice in community organizations. Journal of Social Work Education, 43(3), 377–404.

Gallon, S. L., Gabriel, R. M., & Knudsen, J. R. (2003). The toughest job you’ll ever love: A Pacific Northwest treatment workforce survey. Journal of Substance Abuse Treatment, 24(3), 183–196.

Gioia, D. (2007). Using an organizational change model to qualitatively understand practitioner adoption of evidence-based practice in community mental health. Best Practices in Mental Health, 3(1), 1–15.

Glisson, C., Schoenwald, S. K., Kelleher, K., Landsverk, J., Hoagwood, K. E., Mayberg, S., et al. (2008). Therapist turnover and new program sustainability in mental health clinics as a function of organizational culture, climate, and service structure. Administration and Policy in Mental Health and Mental Health Services Research, 35(1–2), 124–133.

Hallgren, K. A. (2012). Computing inter-rater reliability for observational data: An overview and tutorial. Tutorials in Quantitative Methods for Psychology, 8(1), 23–34.

Herschell, A. D., Kolko, D. J., Baumann, B. L., & Davis, A. C. (2010). The role of therapist training in the implementation of psychosocial treatments: A review and critique with recommendations. Clinical Psychology Review, 30(4), 448–466.

Hoagwood, K., & Erwin, H. D. (1997). Effectiveness of school-based mental health services for children: A 10-year research review. Journal of Child and Family Studies, 6(4), 435–451.

Jensen-Doss, A., Hawley, K. M., Lopez, M., & Osterberg, L. D. (2009). Using evidence-based treatments: The experiences of youth providers working under a mandate. Professional Psychology: Research and Practice, 40(4), 417.

Kelley, S. D., de Andrade, A. R., Sheffer, E., & Bickman, L. (2010). Exploring the black box: Measuring youth treatment process and progress in usual care. Administration and Policy in Mental Health and Mental Health Services Research, 37(3), 287–300.

Landsverk, J., Brown, C. H., Chamberlain, P., Palinkas, L., Ogihara, M., Czaja, S., et al. (2018). Design and analysis in dissemination and implementation research. In R. C. Brownson, G. A. Colditz, & E. K. Proctor (Eds.), Dissemination and implementation research in health: Translating science to practice (2nd ed., pp. 201–228). New York: Oxford University Press.

Langley, A. K., Nadeem, E., Kataoka, S. H., Stein, B. D., & Jaycox, L. H. (2010). Evidence-based mental health programs in schools: Barriers and facilitators of successful implementation. School Mental Health, 2(3), 105–113.

Lever, N., Stephan, S., Castle, M., Bernstein, L., Connors, E., Sharma, R., et al. (2015). Community-partnered school behavioral health: State of the field in Maryland. Baltimore: Center for School Mental Health. Retrieved from http://csmh.umaryland.edu/media/SOM/Microsites/CSMH/docs/Resources/Briefs/FINALCP.SBHReport3.5.15_2.pdf.

Lim, A., Nakamura, B. J., Higa-McMillan, C. K., Shimabukuro, S., & Slavin, L. (2012). Effects of workshop trainings on evidence-based practice knowledge and attitudes among youth community mental health providers. Behaviour Research and Therapy, 50(6), 397–406.

Lyon, A. R., Ludwig, K., Romano, E., Koltracht, J., Vander Stoep, A., & McCauley, E. (2014). Using modular psychotherapy in school mental health: Provider perspectives on intervention-setting fit. Journal of Clinical Child & Adolescent Psychology, 43(6), 890–901.

McHatton, P. (2009). Grounded theory. In J. Paul, J. Kleinhammer-Tramill, & K. Fowler (Eds.), Qualitative research methods in special education. Denver: Love Publishing Company.

McHugh, R. K., & Barlow, D. H. (2010). The dissemination and implementation of evidence-based psychological treatments: A review of current efforts. American Psychologist, 65(2), 73–84.

McMillen, J., Hawley, K., & Proctor, E. (2016). Mental health clinicians’ participation in web-based training for an evidence supported intervention: Signs of encouragement and trouble ahead. Administration and Policy in Mental Health and Mental Health Services Research, 43(4), 592–603.

Miller, W. R., Yahne, C. E., Moyers, T. B., Martinez, J., & Pirritano, M. (2004). A randomized trial of methods to help clinicians learn motivational interviewing. Journal of Consulting and Clinical Psychology, 72(6), 1050.

Nakamura, B. J., Higa-McMillan, C. K., Okamura, K. H., & Shimabukuro, S. (2011). Knowledge of and attitudes towards evidence-based practices in community child mental health practitioners. Administration and Policy in Mental Health and Mental Health Services Research, 38(4), 287–300.

Palinkas, L. A., Aarons, G. A., Horwitz, S., Chamberlain, P., Hurlburt, M., & Landsverk, J. (2011). Mixed method designs in implementation research. Administration and Policy in Mental Health and Mental Health Services Research, 38(1), 44–53.

Pignotti, M., & Thyer, B. A. (2009). Use of novel unsupported and empirically supported therapies by licensed clinical social workers: An exploratory study. Social Work Research, 33(1), 5–17.

Policy Leadership Cadre for Mental Health in Schools. (2001). Mental health in schools: Guidelines, models, resources, & policy considerations. Los Angeles: Center for Mental Health in Schools and Student/Learning Supports at UCLA. Retrieved from http://smhp.psych.ucla.edu.

Proctor, E. K., Landsverk, J., Aarons, G., Chambers, D., Glisson, C., & Mittman, B. (2009). Implementation research in mental health services: An emerging science with conceptual, methodological, and training challenges. Administration and Policy in Mental Health and Mental Health Services Research, 36(1), 24–34.

Rogers, E. M. (2003). Diffusion of innovations (5th ed.). New York: The Free Press.

Rones, M., & Hoagwood, K. (2000). School-based mental health services: A research review. Clinical Child and Family Psychology Review, 3(4), 223–241.

Stephan, S., Westin, A., Lever, N., Medoff, D., Youngstrom, E., & Weist, M. (2012). Do school-based clinicians’ knowledge and use of common elements correlate with better treatment quality? School Mental Health, 4(3), 170–180.

Stumpf, R. E., Higa-McMillan, C. K., & Chorpita, B. F. (2009). Implementation of evidence-based services for youth: Assessing provider knowledge. Behavior Modification, 33(1), 48–65.

Substance Abuse and Mental Health Services Administration. (2014). National behavioral health quality framework. Retrieved from https://www.samhsa.gov/data/national-behavioral-health-quality-framework.

Weist, M., Lever, N., Stephan, S., Youngstrom, E., Moore, E., Harrison, B., et al. (2009). Formative evaluation of a framework for high quality, evidence-based services in school mental health. School Mental Health, 1(4), 196–211.

Weist, M. D. (1997). Expanded school mental health services. In T. H. Ollendick & R. J. Prinz (Eds.), Advances in clinical child psychology (Vol. 19, pp. 319–352). Boston: Springer.

Weist, M. D., Ambrose, G. M., & Lewis, C. P. (2006). Expanded school mental health: A collaborative community-school example. Children & Schools, 28(1), 45–50.

Weist, M. D., & Evans, S. W. (2005). Expanded school mental health. Journal of Youth and Adolescence, 34(1), 3–6.

Weist, M. D., Evans, S. W., & Lever, N. A. (2008). Handbook of school mental health: Advancing practice and research. Berlin: Springer Science & Business Media.

Weisz, J. R., Chorpita, B. F., Palinkas, L. A., Schoenwald, S. K., Miranda, J., Bearman, S. K., et al. (2012). Testing standard and modular designs for psychotherapy treating depression, anxiety, and conduct problems in youth: A randomized effectiveness trial. Archives of General Psychiatry, 69(3), 274–282.

Funding

This study was funded by Behavioral Health System Baltimore (PI: Hoover).

Ethics declarations

Conflict of interest

All authors declare that they have no conflict of interest.

Ethical Approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Informed Consent

Informed consent was obtained from all individual participants included in the study.

About this article

Cite this article

Connors, E.H., Schiffman, J., Stein, K. et al. Factors Associated with Community-Partnered School Behavioral Health Clinicians’ Adoption and Implementation of Evidence-Based Practices. Adm Policy Ment Health 46, 91–104 (2019). https://doi.org/10.1007/s10488-018-0897-3
