Abstract

School safety is of great concern for prevention researchers, school officials, parents, and students, yet there is a dearth of assessments that have operationalized school safety from an organizational framework using objective tools and measures. Such a tool would be important for deriving unbiased assessments of the school environment, which in turn could be used as an evaluative tool for school violence prevention efforts. The current paper presents a framework for conceptualizing school safety consistent with the Crime Prevention through Environmental Design (CPTED) model and social disorganization theory, both of which highlight the importance of context as a driver for adolescents’ risk for involvement in substance use and violence. This paper describes the development of a novel observational measure, called the School Assessment for Environmental Typology (SAfETy), which applies the CPTED and social disorganization frameworks to schools to measure eight indicators of school physical and social environment (i.e., disorder, trash, graffiti/vandalism, appearance, illumination, surveillance, ownership, and positive behavioral expectations). Drawing upon data from 58 high schools, we provide preliminary data regarding the validity and reliability of the SAfETy and describe patterns of the school safety indicators. Findings demonstrate the reliability and validity of the SAfETy and are discussed with regard to the prevention of violence in schools.

Introduction

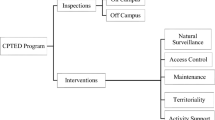

School safety continues to be a high priority among policy makers, educators, and prevention researchers. Much of the research on school safety has relied upon student self-report and disciplinary data, as relatively few observational tools have been validated for assessing school safety and the physical environment of schools. Yet, self-report measures and disciplinary data may be sensitive to bias and thus be less valid indicators of the school context as compared to observations conducted by outside assessors. The current paper builds on the Crime Prevention through Environmental Design (CPTED) framework (see Brantingham and Brantingham 1981; Cisneros 1995; Mair and Mair 2003; Newman 1995, 1996; Westinghouse Electric 1977a, 1977b), which emphasizes the importance of defensible space and physical features of an environment to create informal social controls (Newman 1972). A related area of research based on social disorganization theory (Shaw and McKay 1969) further highlights the importance of contextual variables for determining individuals’ risk for involvement in deviant behavior. Building on these two frameworks, a novel observational measure was created, called the School Assessment for Environmental Typology (SAfETy). Specifically, the SAfETy was developed to serve as an observational tool that delineates and measures school physical and social environment indicators theorized to be linked with behavioral and academic outcomes. The current focus on schools and school safety extends an earlier line of neighborhood observational environmental research by Furr-Holden et al. (2008, 2010). The overall goals of the present study were to describe the development of the SAfETy, provide preliminary evidence of its reliability and validity, and summarize information on the pattern of school environment indicators across a number of dimensions. This line of research has important implications for school-based prevention research and informs the selection of tools for evaluating school safety and violence prevention efforts.

Theoretical Basis for Environmental Assessments

The physical environment has been purported to impact crime, response to crime, and social control. The notion of defensible space was first developed by Oscar Newman, who suggested that the physical features of an environment create informal social controls to build “a social fabric that defends itself” (Newman 1972, p. 3). Newman theorized that changes in the physical aspects of a space could impact indicators of social control (such as territoriality, natural surveillance, a sense of security, and the social milieu) and therefore reduce criminality (Newman 1972). Crime Prevention through Environmental Design (CPTED) built upon this framework and integrated principles from criminal justice, architecture, and urban planning to suggest that changes to the physical environment (e.g., space use, layout, design) can decrease the likelihood of violence and crime (Cisneros 1995; Crowe 1991; Mair and Mair 2003).

A related line of research has focused on social disorganization theory (Sampson et al. 1997; Shaw and McKay 1969), which contends that individuals’ risk for involvement in deviant behavior is influenced by contextual variables. The theory suggests that chaotic and disorganized social and structural aspects of a community create an environment in which deviant behaviors become normal and rational responses (Shaw and McKay 1969). Under this theory, structural aspects of a community, such as concentration of poverty, ethnic heterogeneity, and residential mobility, affect the level of organization present within an environment. This results in reduced community cohesiveness, thereby weakening informal social controls, reducing supervision and intervention behaviors, and increasing opportunities for crime and deviance (Sampson and Groves 1989; Sampson et al. 1997).

Transferring Neighborhood Theory and Evidence to Schools

Assessing the Neighborhood Environment

Both the CPTED model and social disorganization theory have been used to guide the study of neighborhood environment and individual-level behavior. For example, several studies have utilized administrative data (e.g., US Census data) to characterize the neighborhood environment and its relationship with substance use, sexual risk behavior, and behavioral problems (Beyers et al. 2003; Boardman et al. 2001; Cohen et al. 2003). Other researchers have used a combination of indicators of institutional resources (e.g., recreational centers, schools), incident data (e.g., shootings, rates of violent crime), and/or self-report surveys of relationships (e.g., coping skills) and collective efficacy, which are often sampled at random from individuals within the neighborhood and then aggregated up to the neighborhood level (for examples, see Leventhal and Brooks-Gunn 2000; Sampson and Groves 1989).

One of the relatively few purely observational assessments of the neighborhood environment, developed to address the limitations of administrative and self-report data, is the Neighborhood Inventory for Environmental Typology (NIfETy; Furr-Holden et al. 2010; see Sampson and Raudenbush 1999 for an additional example of a neighborhood observational measure). This instrument captures seven indicators of the neighborhood environment (i.e., physical layout; types of structures; adult activity; youth activity; physical disorder and order; social disorder and order; indicators of violence, alcohol, and other drugs). The NIfETy instrument was previously validated using child self-report of violence victimization and witnessing violence as well as local crime data (Furr-Holden et al. 2010). Several other studies have used the NIfETy to examine adolescent substance use (Furr-Holden et al. 2011a, b), childhood anxiety (Furr-Holden et al. 2011b), risk-taking propensity (Furr-Holden et al. 2012), and academic performance (Milam et al. 2010).

Connection Between School Environment and Student Perceptions and Behaviors

Several school- and classroom-level indicators of disorder (such as poverty level, school size, teacher characteristics, and concentration of students with behavior problems) have been linked with diminished perceptions of safety, attitudes favoring aggressive retaliation, and youth violence involvement (Birnbaum et al. 2003; Bradshaw et al. 2007; Koth et al. 2008). Furthermore, a disordered school environment has been shown to undermine teachers’ ability to efficiently manage student behavior and to negatively impact student learning (Gregory et al. 2011; Milam et al. 2010). In addition, studies have shown that indicators of social control, such as the presence of adults and a lack of physical deterioration, decrease the belief that violence is acceptable and will go unnoticed (Kitsantas et al. 2004; Mayer and Leone 1999; Van Dorn 2004; Wilcox et al. 2006). Thus, consistent with CPTED (Crowe 1991), the design of the school’s physical environment could have an influence on the likelihood of deviant behavior. In fact, there is a growing body of literature indicating that aspects of the physical environment, such as building age, air temperature, lighting, ambient noise, and design features, are associated with student academic performance and student discipline problems (see Uline and Tschannen-Moran 2008; Bosch 2006; Plank et al. 2009).

Extant Observational Measures of the School Environment

Due in part to these findings, the Environmental Protection Agency (EPA 2012) and the National Clearinghouse for Educational Facilities developed tools to help schools assess for such things as air, water, chemical, and construction safety hazards and more general design and planning recommendations (http://www.ncef.org/checklist). One of the earliest projects using CPTED involved structural alterations of four diverse schools to increase opportunities for surveillance, limit opportunities to access isolated areas, and enhance the definition of boundaries (Wallis and Ford 1981). Although no change was found in student perceptions of safety, there was a reduction in crime victimization (Wallis and Ford 1981). Also drawing upon the theory behind CPTED, Astor and colleagues interviewed students and teachers in Michigan public schools utilizing maps and surveys to identify dangerous “hot spots” of violence and deviance in the schools (Astor et al. 1999, 2001; Astor and Meyer 2001). Their work demonstrated that “hot spots” were perceived by students and staff to be “unowned” spaces and that students and staff believed that interventions should work to redefine the spaces by creating an internal sense of ownership, rather than imposing external controls such as police or cameras (Astor et al. 1999, 2001; Astor and Meyer 2001). Similarly, Wilcox and colleagues studied the relationship between school crime and defensible space by examining school-specific territoriality, natural surveillance, and school image/milieu (Wilcox et al. 2006; CPTED School Security Assessment). They examined 65 public schools across the state, utilizing surveys of students and staff, as well as observations of the physical and social environment. Although they found few significant associations between the physical environment and school crime in the student-reported measures, there were significant associations between teacher-reported measures of crime and aspects of the physical environment (Wilcox et al. 2006).

Taken together, the extant research in this area suggests that there is great interest in the development and use of observational measures of school safety, particularly as it relates to the physical environment of schools. However, there are relatively few well-validated observational measures of the physical environment of schools that are currently available to researchers or school personnel. More specifically, the previously validated measures, including the Neighborhood Inventory for Environmental Typology (NIfETy) Instrument (Furr-Holden et al. 2008, 2010), the CPTED School Security Assessment (Wilcox et al. 2006), the Environmental Protection Agency (EPA) Healthy School Environment Assessment Tool (EPA 2012), and the National Clearinghouse for Educational Facilities Safe School Facilities checklist (http://www.ncef.org/checklist), do not provide a comprehensive assessment of the school environment that can be used to understand school safety and inform violence prevention efforts. Specifically, while the NIfETy can help understand the environment around schools and has been utilized to examine safety en route to school (e.g., Milam et al. 2014), it does not include assessments of the school environment. While the CPTED School Security Assessment (Wilcox et al. 2006) and the EPA’s Healthy School Environment Assessment Tool (EPA 2012) are specific to school environments, they each focus on a specific aspect of the environment such as surveillance, disorder, environmental hazards, or interactions. Furthermore, other measures, such as the National Clearinghouse for Educational Facilities Safe School Facilities checklist (http://www.ncef.org/checklist), offer specifications for what an environment should look like but are not assessments of the current status of a space. As a result, there is a great need for additional development of observational tools that can be used to objectively assess the physical environment of schools.

Overview of the Current Study

The majority of both descriptive and prevention-related studies of school safety have used survey-based approaches and relied heavily on administrative indicators of safety such as suspension rates and discipline data (e.g., Kitsantas et al. 2004; Mayer and Leone 1999; Van Dorn 2004). Yet, observations of the school environment may further inform our understanding of the school context and provide objective evaluations of school-level social processes and physical structures to more accurately capture environmental factors. The limited attention to the association between observational measures of the physical environment of a school and levels of school violence and crime indicates a need for further research and more validated measures. The SAfETy was developed to fill this gap in the literature. Specifically, the SAfETy was developed to serve as an observational tool that describes the school environment across three broad domains of school ownership, disorder, and surveillance. This paper provides a description of the SAfETy assessment tool and reports preliminary evidence of its reliability and validity. We also provide a summary of patterns of observational data captured through the observational system. This work may inform the design and evaluation of school organizational and environmental efforts aimed at preventing violence in schools.

Methods

Sample

The data for the current study come from Maryland’s Safe and Supportive Schools Initiative (MDS3), which is a joint project of the Maryland State Department of Education (MSDE), Sheppard Pratt Health System, and Johns Hopkins University to improve school climate. Schools’ participation in the MDS3 project was voluntary. Districts were approached for participation by MSDE in order of perceived need for school climate prevention programming beginning in the spring of 2011. All approached districts agreed to participate, and upon their expressing interest in the MDS3 Initiative, district-specific principal meetings were conducted to obtain school-level and principal commitment to the project. The 58 schools were located in 12 of the state’s school districts; 13 schools were in urban or urban fringe locations, 29 in suburban locations, and 16 in rural locations. For this paper, we used the first two waves of data collected: Wave 1, which occurred in the fall, and Wave 2, which occurred in the spring of the same academic year. The average student enrollment of the schools was 1282.8 (SD = 467.9). The average minority enrollment was 45.2 % (SD = 25.3), including 33.8 % Black (SD = 25.0 %), 4.9 % Hispanic (SD = 3.3 %), 3.3 % Asian (SD = 2.9 %), and 3.2 % American Indian/Pacific Islander (SD = 1.9 %). See Table 1 for additional school-level descriptive characteristics of the school sample. We also collected data on participant ratings of school climate via an on-line survey, which was completed by 28,104 adolescents across all 58 schools. An average of 25.40 classrooms per school participated in the survey during the spring (i.e., Wave 2). Data were also available on 4306 staff across all 58 schools during the spring (Wave 2). The project was approved by the Institutional Review Boards at Johns Hopkins University and the University of Virginia.

Measures

SAfETy

Consistent with CPTED, the SAfETy was conceptually developed to include items designed to measure the following broad aspects of the school environment: school ownership (e.g., murals, positive behavioral expectations), disorder (e.g., litter, graffiti, alcohol paraphernalia), and surveillance (e.g., school police officers, surveillance cameras). The overall structure and administration of the SAfETy were modeled in large part after the parallel NIfETy neighborhood measure (Furr-Holden et al. 2008, 2010). For the SAfETy, data collectors observed the physical environment of both the school interior and exterior in nine different locations: the entrance to the school grounds, the entrance to the school building, the perimeter of the school building, hallways, stairwells, cafeteria, a playing field, and the staff and student parking lots. Time in each location varied by its size, with observers spending approximately 30 min observing the perimeter of the school building, 20 min for the cafeteria, playing field, and parking lots, and 10 min at the entrance to the school grounds and building and the hallways and stairwells. The majority of the 259 items involve counts of observed aspects of the environment, with a few questions utilizing a Likert scale (e.g., raters’ perceptions of an area or adequate lighting) or a dichotomous yes/no indicator (e.g., presence of staff in hallway). Decisions about scale metrics were made through extensive pilot testing of the measure in three non-project schools within the state. Specifically, these non-project schools were involved in other youth violence research projects and were selected on the basis of having physical environment characteristics similar to those of the 58 MDS3 Project high schools (e.g., physical grounds, age of students). Sample observations were conducted to ensure the items, response options, and scoring were practical and that the theoretical domains mapped onto observable features of the school environment.

MDS3 School Climate Survey

School climate data come from a school-wide survey administered to students and staff at each school. The measure comprised the domains of safety, engagement, and environment (for additional details see Bradshaw et al. 2014). These self-report scales assessed perceptions of an anti-bullying culture, student connectedness, low disorder, physical comfort, and the presence of supportive services for youth. These student- and staff-report scales were adapted from previously developed measures and have demonstrated adequate psychometric properties (see Bradshaw et al. 2014).

Procedure

SAfETy Training

The data collectors received training in four stages: an initial didactic session, on-site practice, on-site inter-observer agreement or reliability, and on-site recalibration. A representative set of items was selected for both the reliability and recalibration assessment that captured measurements of school ownership, disorder, and surveillance across multiple locations.

Didactic Training and On-site Practice

Each data collector was required to attend a 4-h didactic training led by an expert observer. At this training each data collector received a manual, which included information about the study and a detailed description of the measure and each individual item. The training consisted of a standard presentation of procedures for the study as well as a detailed description of all items utilizing photographs and examples. Additionally, data collectors spent time learning how to define the appropriate boundaries for each observational area (e.g., 50 feet for hallways) and the order in which areas were to be observed (e.g., begin with entrance to the school grounds as students arrive on campus). Data collectors also spent time learning how to operate the electronic data collection device. After spending at least two additional hours reviewing the SAfETy procedures and items, data collectors had on-site practice using the SAfETy measure. Three to four hours were spent working with an expert observer collecting data in a non-project school. Data were collected in all locations of the school, and expert observers provided feedback and answered questions throughout the practice.

Initial Reliability

Following the initial training and practice sessions, observers completed the on-site inter-observer agreement assessment (reliability session). Data collectors and an expert observer/trainer collected data simultaneously at a non-project school in the following areas: entrance to the school building, two hallways, two stairwells, and one playing field. Inter-observer agreement was calculated using 45 of the items observed in the six areas mentioned above by dividing the number of agreements between the expert observer and the data collector by the total number of items and multiplying by 100 % (Barlow and Hersen 1984). Data collectors were required to achieve an inter-observer agreement of 80 % or higher. Data collectors failing the first reliability assessment were given additional on-site training and then retested. Average inter-observer agreement across all trainings was .88 (see Table 2).
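The percent-agreement calculation described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the study's scoring code: the function names and the example ratings are hypothetical, and the 80 % threshold is the criterion stated in the text.

```python
def percent_agreement(expert, collector):
    """Percent agreement: number of matching item ratings / total items * 100."""
    if len(expert) != len(collector) or not expert:
        raise ValueError("ratings must be non-empty and of equal length")
    agreements = sum(1 for e, c in zip(expert, collector) if e == c)
    return 100.0 * agreements / len(expert)


def meets_criterion(expert, collector, threshold=80.0):
    """True if agreement reaches the required threshold (80 % in the text)."""
    return percent_agreement(expert, collector) >= threshold


# Hypothetical ratings on 45 items; the collector disagrees on 5 of them.
expert_ratings = [1] * 45
collector_ratings = [1] * 40 + [0] * 5
agreement = percent_agreement(expert_ratings, collector_ratings)
passed = meets_criterion(expert_ratings, collector_ratings)
```

Note that simple percent agreement does not correct for chance agreement, which matters most for items with very low or very high prevalence.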

On-site Recalibration

On at least one occasion during active data collection, data collectors were joined by an expert observer/trainer for an on-site recalibration session. Recalibration followed procedures nearly identical to those of the reliability session; the data collector and expert observer collected items for the following areas: cafeteria, two hallways, two stairwells, and one playing field. Sixty items from these areas were used to calculate inter-observer agreement, which was also assessed using percent agreement. If a data collector did not calibrate at 80 % or above, retraining was conducted and an additional recalibration test was scheduled. Average inter-observer agreement was .87 across all recalibrations (see Table 2).

Observation Procedures

The data collectors were randomly assigned to schools within one of the four state regions (central, south, east, and west). Data were collected over the course of three separate days at each school (i.e., 1 full-day observation, an AM observation, and a PM observation), for a total of approximately 2 days of observation. The staggering across different days was intended to ensure multiple observations of each item and location. All data were entered in real time on a Samsung handheld tablet using the Pendragon mobile data collection software. Electronic data collection helped facilitate accuracy (i.e., boundary parameters) and completeness of records. Observers transmitted data to a secure server upon completion of each day of the observation.

MDS3 School Climate Survey Administration

The anonymous, on-line MDS3 School Climate Survey was administered in the spring of the school year using a waiver of active parental consent process for parents and youth assent. The survey was administered online in language arts classrooms to approximately 7 classrooms of 9th grade students and 6 classrooms for all other grade levels of students (10th, 11th, and 12th grade students) at each school. School staff administered the survey following a written protocol (see Bradshaw et al. 2014 for more details about survey administration). School staff also completed an anonymous online survey, which assessed scales parallel to those completed by the students.

Results

Creation of the School Assessment for Environmental Typology (SAfETy)

The creation of constructs in the SAfETy was accomplished through a multi-stage process. We first conducted descriptive analyses to examine assumptions about normality using recommended values for asymmetry and kurtosis. This informed decisions about continuous and dichotomous categorizations of the variables. We then conducted exploratory factor analyses (EFA) in Mplus 7.1 (Muthén and Muthén 2012) using data from Wave 1. Items with factor loadings above .4 were kept. Based on the EFA results, we conducted confirmatory factor analyses (CFA) on the Wave 1 data to test the resulting factor structure separately for each of the indicators (see Table 3 for a listing of indicators and items). Finally, a CFA was conducted on the separate Wave 2 sample for each of the indicators. Model fit was assessed utilizing the comparative fit index (CFI), Tucker–Lewis index (TLI), root mean-square error of approximation (RMSEA), and standardized root mean square residual (SRMR). For the CFI and TLI, a value of .90 or higher was considered acceptable fit (Bentler and Bonett 1980), with values closer to .95 considered to indicate a well-fitting model (Hu and Bentler 1999). For RMSEA, a value of .06 or less, and for SRMR, a value of .08 or less, indicated a good fit (Hu and Bentler 1999) (Table 3).
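As an illustration of how the fit criteria above might be applied to a set of model results, the following Python sketch labels each index against the stated cutoffs. It is not part of the Mplus analysis; the function name and example values are hypothetical, and treating "closer to .95" as a hard threshold of .95 or higher is a simplifying assumption.

```python
def classify_fit(cfi, tli, rmsea, srmr):
    """Label each fit index against the cutoffs described in the text."""
    labels = {}
    # CFI/TLI: >= .90 acceptable; >= .95 treated here (an assumption) as well-fitting.
    for name, value in (("CFI", cfi), ("TLI", tli)):
        if value >= 0.95:
            labels[name] = "well-fitting"
        elif value >= 0.90:
            labels[name] = "acceptable"
        else:
            labels[name] = "below cutoff"
    # RMSEA <= .06 and SRMR <= .08 indicate good fit.
    labels["RMSEA"] = "good" if rmsea <= 0.06 else "above cutoff"
    labels["SRMR"] = "good" if srmr <= 0.08 else "above cutoff"
    return labels


# Hypothetical fit statistics for a single indicator model.
example = classify_fit(cfi=0.96, tli=0.91, rmsea=0.05, srmr=0.09)
```

Keeping the label for each index separate, rather than collapsing them into one pass/fail flag, makes it easier to see which criterion a given model misses.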

The EFA and CFA identified eight indicators across the broad domains of school ownership, disorder, and surveillance: disorder, trash, graffiti/vandalism, appearance, illumination, surveillance, ownership, and positive behavioral expectations. Table 3 presents the individual items included in each of the indicators, the prevalence of each item across schools, and the factor loadings. Items in the disorder indicator were the least frequently observed across the two waves of data collection, with the presence of bars on windows having the lowest prevalence (i.e., 5.5 % of schools in Wave 1 and 3.4 % of schools in Wave 2). Trash and graffiti/vandalism were observed in the majority of schools in the majority of locations; however, most schools were rated as being adequately maintained. Indicators of surveillance efforts (e.g., cameras) were frequently observed in both the interior and exterior locations of the school. The interior locations had the additional security benefit of being rated as adequately illuminated. Signs of ownership were most common in exterior locations, whereas positive behavioral expectations were more often found in interior locations.

Reliability Analyses

Internal Consistency

Internal consistency reliability was assessed using Cronbach’s α, which was calculated for each of the indicators developed using EFA/CFA. An alpha was calculated for each wave of data collection. While there is no universal cutoff for acceptable Cronbach’s α (Nunnally 1978; Schmitt 1996), an alpha greater than .70 is commonly treated as acceptable. For the purposes of this investigation, the following evaluation criteria were used for the alpha coefficients: minimal (<.60); moderate (.60–.69); extensive (.70–.79); and exemplary (≥.80). Specifically, graffiti/vandalism had exemplary internal consistency for both Wave 1 (α = .82) and Wave 2 (α = .87). Trash (α = .72), appearance (α = .76), and surveillance (α = .72) had extensive internal consistency for Wave 1. The remaining constructs (i.e., disorder α = .61; illumination α = .65; ownership α = .69) had moderate internal consistency in Wave 1, with the exception of positive behavioral expectations (α = .59), which had minimal internal consistency. For Wave 2, surveillance (α = .78) had extensive internal consistency. Trash (α = .68), illumination (α = .69), appearance (α = .63), and the positive behavioral expectations construct (α = .67) had moderate internal consistency. The ownership (α = .37) and disorder (α = .47) constructs had minimal internal consistency for Wave 2. Additional details on the reliability, including the intraclass correlation coefficients (ICCs; a measure of inter-rater reliability) for Wave 1, are provided in Tables 4 and 5.
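For readers who want to reproduce this kind of summary, the sketch below computes Cronbach’s α from a schools-by-items score matrix using the standard formula, α = k/(k − 1) × (1 − Σ item variances / variance of the total score), and then applies the evaluation bands listed above. The function names and the toy data are hypothetical; the software used to compute α in the study is not stated in the text.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of rows (one per school), each a list of item scores."""
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two rows")
    item_variances = [variance(column) for column in zip(*rows)]
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


def classify_alpha(alpha):
    """Apply the evaluation bands used in the text."""
    if alpha >= 0.80:
        return "exemplary"
    if alpha >= 0.70:
        return "extensive"
    if alpha >= 0.60:
        return "moderate"
    return "minimal"


# Toy data: three items that move together perfectly across three schools.
toy_scores = [[1.0, 1.0, 1.0], [2.0, 2.0, 2.0], [3.0, 3.0, 3.0]]
toy_alpha = cronbach_alpha(toy_scores)
wave1_labels = {name: classify_alpha(a) for name, a in
                {"graffiti/vandalism": 0.82, "trash": 0.72,
                 "illumination": 0.65, "positive behavioral expectations": 0.59}.items()}
```

The Wave 1 values passed to `classify_alpha` are those reported above; the resulting labels match the categories given in the text.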

Test–Retest

Stability of the constructs was measured through a test–retest process in which, for each item in the scales, the correlation between repeated observations of that item was calculated (see Table 3). Specifically, 31 items were assessed for test–retest reliability by comparing the full-day (Day 1) observation with the corresponding item in the AM or PM observation during the same wave of data collection. We were limited to the 31 items that were included in the scales described above and appeared in both the Day 1 observation and the AM or PM observations. Spearman correlations were used for skewed continuous items and polychoric correlations were used for categorical items. Twenty-four of the 31 items (77.4 %) had significant correlations between the Day 1 observation and the corresponding AM/PM observation. The correlations ranged from −1.0 to 1.0. Lack of test–retest reliability was found primarily for items on the disorder construct, which had a low prevalence. Items assessed on the playing fields and in the hallways, for which the assessment protocol dictated observing different locations within these broader areas each day, also had non-significant correlations.
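The Spearman portion of this test–retest analysis can be illustrated with a short, dependency-free Python sketch: rank each set of observations (averaging tied ranks) and compute the Pearson correlation of the ranks. The function names and example counts are hypothetical, significance testing and polychoric correlations are not covered, and the original analysis software is not stated in the text.

```python
def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1  # positions are 0-based, ranks 1-based
        for position in range(start, end + 1):
            ranks[order[position]] = mean_rank
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; undefined (raises) when either input is constant."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined for a constant item")
    return cov / (ss_x * ss_y) ** 0.5


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical trash counts for five schools: Day 1 vs. the AM/PM observation.
day1_counts = [0, 1, 2, 5, 9]
ampm_counts = [1, 2, 4, 8, 20]
rho = spearman(day1_counts, ampm_counts)
```

An item that is never (or always) observed is constant across schools, so its rank correlation is undefined; this is one reason very low-prevalence items are difficult to evaluate for stability.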

Validity Analysis

Convergent and divergent validity were assessed using bivariate correlations of the indices for the SAfETy with both students’ and staff perceptions of school climate (Table 6). Criterion validity was assessed using bivariate correlations of the individual constructs for the SAfETy with administrative data obtained from the Maryland State Department of Education (e.g., suspension rate, high school assessment [HSA] pass rate, truancy rate, and attendance rate) (Table 7).

The most consistent associations identified for the school climate data involved the trash and graffiti/vandalism indicators. An increase in the amount of trash observed at school was associated with staff perceptions of more bullying. Additionally, both students and staff perceived a more disordered climate and had less favorable perceptions of physical comfort when more trash was observed. Likewise, more observed graffiti/vandalism was associated with staff perceptions of more bullying and a more disordered climate. It was also related to less favorable perceptions of physical comfort for both students and staff, less favorable perceptions of student connectedness, and poorer student and staff perceptions of the availability of supportive services. Fewer significant correlations were found with the other constructs, with those that were present occurring between constructs and ratings of disorder and physical comfort. A similar pattern of findings was identified for correlations with administrative data. The presence of trash and graffiti/vandalism was associated with higher rates of truancy, reduced attendance, and lower performance on the state’s standardized academic test.

Discussion

Relevance to Theory and Prior Research

This paper aimed to apply the CPTED framework (Cisneros 1995; Crowe 1991; Mair and Mair 2003) and related theories regarding social disorganization (Sampson et al. 1997; Shaw and McKay 1969) to the school setting. Our findings demonstrated that items based on the CPTED framework can be identified and measured in a school, and that groupings of items can be used to characterize the school environment. This work also highlights potential aspects of the physical environment of a school that are associated with perceptions of a positive school climate. The results from this study further suggested that this association may be particularly relevant for indicators of physical disorganization (i.e., trash, graffiti/vandalism) and in relation to staff reports of school climate, as compared to student perceptions.

This paper extends previous work and theory focusing on the role of the school physical environment in determining school safety. For example, based on the findings of Astor and colleagues (Astor et al. 1999, 2001; Astor and Meyer 2001) that different spaces may have unique properties that make them susceptible to violence, the SAfETy included assessments of nine different locations across the school. Interestingly, analytic procedures did not indicate a divide between inside/outside locations or normally supervised (e.g., cafeteria, school entrance)/unsupervised (e.g., stairwells, parking lots) locations, as evidenced by the fact that indoor and outdoor locations loaded on the same factor. This perhaps suggests that the characteristics measured in the SAfETy are similar across locations within specific school environments. The grouping of locations within a school allows for school-level analyses of various constructs, which facilitates understanding aspects of the school physical environment as a component or even a predictor of school climate or student outcomes. Unfortunately, what could not be assessed with this grouping was the hypothesis suggested by Astor et al. that specific characteristics of a place are related to the violence that occurs in that space (Astor et al. 1999, 2001; Astor and Meyer 2001).

The current study also extended previous research by Wilcox et al. (2006), which identified features of the school physical environment associated with victimization and perceptions of school safety. Similar to their work, our findings suggested stronger associations between measures of the school physical environment and perceptions of the school environment for staff than for students. Wilcox et al. (2006) proposed that this may be due to measurement differences, such that student-reported measures of violence capture more everyday acts of aggression, whereas teacher measures might be more reflective of serious violence. Interestingly, we found stronger associations for staff perceptions of engagement and environment as well as safety, as compared to student perceptions. The stronger associations for staff may suggest a greater similarity between staff and outside observers, such that staff and the observers may have more similar notions of what a school environment “should” look like. Student perceptions of the school environment therefore may reflect more of a comparison with their home or neighborhood environment. Additional exploration of other adults’ perceptions of the school environment (e.g., parents) may provide further insight into these discrepancies.

Although further research is needed to understand the role of the built environment in determining students’ behaviors, both positive and negative, the use of the theoretical principles of CPTED represents a critical advancement in the field. Previous studies that have measured the school environment have primarily focused on assessments of school disorder, either by combining students’ self-report of disorder (Kitsantas et al. 2004; Mayer and Leone 1999; Van Dorn 2004) or by creating a summary score of a limited number of items (Wilcox et al. 2006). The inclusion of other aspects of the schools’ physical environment, such as surveillance and ownership, allows for a more complete understanding of the ways in which the design and physical layout of a school could influence students’ behavior. It also potentially allows for the identification of positive steps (e.g., fostering ownership) that schools can take to improve their environment, and it suggests important design considerations for building new schools. However, it is interesting that these factors were not as strongly related to self-reported measures of the school environment or administrative data as were measures of disorder. This could highlight the role of collective efficacy, which has been associated with physical and social disorder (Sampson et al. 1997; Shaw and McKay 1969). The additional constructs of the SAfETy (i.e., illumination and surveillance) may have more relevance for determining safety from outside perpetration. Additional theoretical work applying CPTED to school settings is needed.

Strengths and Limitations

The SAfETy was modeled after the NIfETy and builds upon other previously developed measures of the physical environments of schools and neighborhoods. We also leveraged best practices related to measuring aspects of surveillance, security, and ownership of schools (e.g., CPTED). However, some measurement difficulties did arise, particularly for scales that included low (i.e., drug and alcohol paraphernalia) and high (i.e., adequate illumination or landscaping) prevalence items, thereby limiting variability across these items. Further tailoring of the measure may be needed as more information is gained about the comparative importance of various physical environment indicators. For example, the existence of any drug or alcohol paraphernalia may be important to assess, as it may provide information about norms of behaviors at the school. Other highly prevalent indicators, such as adequate illumination, may be normative in schools and therefore may not have much discriminant validity. Another limitation stems from the sample size needed to assess associations between school-level environmental features and student behaviors. Although this study was rather large and diverse, as we included nearly 60 high schools and a set of non-project schools that were used for training, additional schools may have provided more variability in the pattern of findings. In contrast, neighborhood studies of the influence of the physical and social environment, which primarily are conducted at the block level, traditionally include several hundred locations (Furr-Holden et al. 2010; Sampson et al. 1997). Unfortunately, the logistics of working with local educational authorities make obtaining larger samples of schools difficult. Additional work with the SAfETy in other schools may help further disentangle the influence of the school setting from the larger neighborhood context. We also focused only on high schools, and thus additional research is needed on this measure in elementary and middle schools. The reliability and validity findings may have also been attenuated somewhat by the small number of schools and the relatively small number of observers. Given the cost and school burden associated with collection of observational data, we conducted recalibrations on a subset of 60 of the full set of items, for efficiency purposes, rather than on all items.

Conclusions and Future Directions

In conclusion, our findings support the value of understanding how aspects of the school physical environment influence student behavior. The SAfETy is a potentially promising tool for measuring specific aspects of the school physical environment. Consistent with CPTED, these factors may influence both students’ and teachers’ perceptions of safety as well as their engagement in school. More work is needed to understand theoretical and analytical relationships between the domains of the SAfETy and students’ behaviors, as well as to understand how to best measure these constructs in schools. Future research will also explore the predictive validity of the SAfETy scale scores for administrative outcomes over multiple years. Specifically, we intend to explore the extent to which the SAfETy data longitudinally predict administrative outcomes (e.g., academic performance, suspensions, attendance) and to see how these data fare in comparison to perceptual school climate data, as reported by students as well as staff. Taken together, the findings of this study suggest that the SAfETy may be a promising tool for assessing the impact of school-based violence prevention programming and may provide potentially useful information to practitioners in guiding school-violence prevention efforts.

References

Astor, R. A., & Meyer, H. A. (2001). The conceptualization of violence-prone school subcontexts: Is the sum of the parts greater than the whole? Urban Education, 36(3), 374–399.

Astor, R. A., Meyer, H. A., & Behre, W. J. (1999). Unowned places and times: Maps and interviews about violence in high schools. American Educational Research Journal, 36(1), 3–42.

Astor, R. A., Meyer, H. A., & Pitner, R. O. (2001). Elementary and middle school students’ perceptions of violence-prone school subcontexts. The Elementary School Journal, 101(5), 511–528.

Barlow, D. H., & Hersen, M. (1984). Single-case experimental designs: Strategies for studying behavior change (2nd ed.). New York: Pergamon Press.

Bentler, P. M., & Bonett, D. G. (1980). Significance tests and goodness of fit in the analysis of covariance structures. Psychological Bulletin, 88(3), 588–606.

Beyers, J. M., Bates, J. E., Pettit, G. S., & Dodge, K. A. (2003). Neighborhood structure, parenting processes, and the development of youths’ externalizing behaviors: A multilevel analysis. American Journal of Community Psychology, 31(1–2), 35–53.

Birnbaum, A. S., Lytle, L. A., Hannan, P. J., Murray, D. M., Perry, C. L., & Forester, J. L. (2003). School functioning and violent behavior among young adolescents: A contextual analysis. Health Education Research, 18(3), 389–403.

Boardman, J. D., Finch, B. K., Ellison, C. G., Williams, D. R., & Jackson, J. S. (2001). Neighborhood disadvantage, stress, and drug use among adults. Journal of Health and Social Behavior, 42(2), 151–165.

Bosch, S. F. (2006). Research priorities: How facilities affect learning outcomes. In C. K. Tanner & J. A. Lackney (Eds.), Educational facilities planning: Leadership, architecture and management (pp. 323–347). Boston: Pearson Education.

Bradshaw, C. P., Sawyer, A. L., & O’Brennan, L. M. (2007). Bullying and peer victimization at school: Perceptual differences between students and school staff. School Psychology Review, 36(3), 361–382.

Bradshaw, C. P., Waasdorp, T. E., Debnam, K. J., & Lindstrom Johnson, S. (2014). Measuring school climate: A focus on safety, engagement, and the environment. Journal of School Health, 84, 593–604. doi:10.1111/josh.12186.

Brantingham, P. L., & Brantingham, P. J. (1981). Environmental criminology. Beverly Hills: Sage.

Cisneros, H. G. (1995). Defensible space: Deterring crime and building community. Washington: U.S. Department of Housing and Urban Development.

Cohen, D. A., Mason, K., Bedimo, A., Scribner, R., Basolo, V., & Farley, T. A. (2003). Neighborhood physical conditions and health. American Journal of Public Health, 93(3), 467–471.

Crowe, T. D. (1991). Crime prevention through environmental design: Applications of architectural design and space management concepts. Louisville: National Crime Prevention Institute.

Environmental Protection Agency. (2012). Healthy School Environments Assessment Tool (HealthySEAT). Retrieved from http://www.epa.gov/schools/healthyseat/

Furr-Holden, C. D. M., Campbell, K. D. M., Milam, A. J., Smart, M. J., Ialongo, N. A., & Leaf, P. J. (2010). Metric properties of the neighborhood inventory for environmental typology (NIfETy): An environmental assessment tool for measuring indicators of violence, alcohol, tobacco, and other drug exposures. Evaluation Review, 34(3), 159–184.

Furr-Holden, C. D. M., Lee, M. H., Milam, A. J., Johnson, R. M., Lee, K. S., & Ialongo, N. S. (2011a). The growth of neighborhood disorder and marijuana use among urban adolescents: A case for policy and environmental interventions. Journal of Studies on Alcohol and Drugs, 72(3), 371.

Furr-Holden, C. D. M., Milam, A. J., Reynolds, E. K., MacPherson, L., & Lejuez, C. W. (2011b). Exposure to hazardous neighborhood environments in late childhood and anxiety. Journal of Community Psychology, 39(7), 876–883.

Furr-Holden, C. D. M., Milam, A. J., Reynolds, E. K., MacPherson, L., & Lejuez, C. W. (2012). Disordered neighborhood environments and risk-taking propensity in late childhood through adolescence. Journal of Adolescent Health, 50(1), 100–102.

Furr-Holden, C. D. M., Smart, M. J., Pokorni, J. L., Ialongo, N. S., Leaf, P. J., Holder, H. D., & Anthony, J. C. (2008). The NIfETy method for environmental assessment of neighborhood-level indicators of violence, alcohol, and other drug exposure. Prevention Science, 9(4), 245–255.

Gregory, A., Cornell, D., & Fan, X. (2011). The relationship of school structure and support to suspension rates for Black and White high school students. American Educational Research Journal, 48(4), 904–934.

Hu, L., & Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling: A Multidisciplinary Journal, 6(1), 1–55.

Kitsantas, A., Ware, H. W., & Martinez-Arias, R. (2004). Students’ perceptions of school safety: Effects by community, school environment, and substance use variables. The Journal of Early Adolescence, 24(4), 412–430.

Koth, C. W., Bradshaw, C. P., & Leaf, P. J. (2008). A multilevel study of predictors of student perceptions of school climate: The effect of classroom-level factors. Journal of Educational Psychology, 100(1), 96–104.

Leventhal, T., & Brooks-Gunn, J. (2000). The neighborhoods they live in: The effects of neighborhood residence on child and adolescent outcomes. Psychological Bulletin, 126(2), 309–337.

Mair, J. S., & Mair, M. (2003). Violence prevention and control through environmental modifications. Annual Review of Public Health, 24(1), 209.

Mayer, M. J., & Leone, P. E. (1999). A structural analysis of school violence and disruption: Implications for creating safer schools. Education & Treatment of Children, 22(3), 333–356.

Milam, A., Furr-Holden, D., Cooley-Strickland, M., Bradshaw, C. P., & Leaf, P. (2014). Risk for exposure to alcohol, tobacco, and other drugs on the route to and from school: The role of alcohol outlets. Prevention Science, 15(1), 12–21. doi:10.1007/s10964-012-9843-y.

Milam, A. J., Furr-Holden, C. D. M., & Leaf, P. J. (2010). Perceived school and neighborhood safety, neighborhood violence and academic achievement in urban school children. The Urban Review, 42(5), 458–467.

Muthén, L. K., & Muthén, B. O. (1998–2012). Mplus user’s guide (7th ed.). Los Angeles: Muthén & Muthén.

Newman, O. (1972). Defensible space: Crime prevention through urban design. New York: Macmillan.

Newman, O. (1995). Defensible space: A new physical planning tool for urban revitalization. Journal of the American Planning Association, 61(2), 149–155.

Newman, O. (1996). Creating defensible space. Washington, DC: U.S. Department of Housing and Urban Development.

Nunnally, J. C. (1978). Psychometric theory (2nd ed.). New York: McGraw-Hill.

Plank, S. B., Bradshaw, C. P., & Young, H. (2009). An application of “Broken-windows” and related theories to the study of disorder, fear, and collective efficacy in schools. American Journal of Education, 115(2), 227–247.

Sampson, R. J., & Groves, W. B. (1989). Community structure and crime: Testing social-disorganization theory. American Journal of Sociology, 94(4), 774. doi:10.1086/229068.

Sampson, R. J., & Raudenbush, S. W. (1999). Systematic social observation of public spaces: A new look at disorder in urban neighborhoods. American Journal of Sociology, 105(3), 603–651.

Sampson, R. J., Raudenbush, S. W., & Earls, F. (1997). Neighborhoods and violent crime: A multilevel study of collective efficacy. Science, 277(5328), 918–924.

Schmitt, N. (1996). Uses and abuses of coefficient alpha. Psychological Assessment, 8(4), 350.

Shaw, C. R., & McKay, H. D. (1969). Juvenile delinquency and urban areas (rev ed.). Chicago: University of Chicago Press.

Uline, C., & Tschannen-Moran, M. (2008). The walls speak: The interplay of quality facilities, school climate, and student achievement. Journal of Educational Administration, 46(1), 56–73.

Van Dorn, R. A. (2004). Correlates of violent and nonviolent victimization in a sample of public high school students. Violence and Victims, 19(3), 303–320.

Wallis, A., & Ford, D. (1981). Crime prevention through environmental design: The school demonstration in Broward County, Florida: Executive summary. Washington, DC: US Department of Justice, National Institute of Justice.

Westinghouse Electric. (1977a). Crime prevention through environmental design. CPTED program manual, Vol. 1: Planning and implementation manual. Arlington: Westinghouse Electric.

Westinghouse Electric. (1977b). Crime prevention through environmental design. CPTED program manual, Vol. 2: Strategies and directives manual. Arlington: Westinghouse Electric.

Wilcox, P., Augustine, M. C., & Clayton, R. R. (2006). Physical environment and crime and misconduct in Kentucky schools. Journal of Primary Prevention, 27(3), 293–313.

Acknowledgments

Support for this project comes from the U.S. Department of Education, the Centers for Disease Control and Prevention, and the William T. Grant Foundation.

Ethics declarations

Conflict of interest

The authors do not have any conflicts that would influence the findings.

Cite this article

Bradshaw, C.P., Milam, A.J., Furr-Holden, C.D.M. et al. The School Assessment for Environmental Typology (SAfETy): An Observational Measure of the School Environment. Am J Community Psychol 56, 280–292 (2015). https://doi.org/10.1007/s10464-015-9743-x

DOI: https://doi.org/10.1007/s10464-015-9743-x