Abstract

This paper describes the extent to which communities implementing the Communities That Care (CTC) prevention system adopt, replicate with fidelity, and sustain programs shown to be effective in reducing adolescent drug use, delinquency, and other problem behaviors. Data were collected from directors of community-based agencies and coalitions, school principals, service providers, and teachers, all of whom participated in a randomized, controlled evaluation of CTC in 24 communities. The results indicated significantly increased use and sustainability of tested, effective prevention programs in the 12 CTC intervention communities compared to the 12 control communities, during the active phase of the research project when training, technical assistance, and funding were provided to intervention sites, and 2 years following provision of such resources. At both time points, intervention communities also delivered prevention services to a significantly greater number of children and parents. The quality of implementation was high in both conditions, with only one significant difference: CTC sites were significantly more likely than control sites to monitor the quality of implementation during the sustainability phase of the project.

Increasing the implementation fidelity, dissemination, and sustainability of tested and effective prevention programs are major goals of prevention science (Elliott and Mihalic 2004; Glasgow et al. 2003; Rohrbach et al. 2006; Saul et al. 2008; Spoth et al. 2008). Although there have been few large-scale studies documenting the extent to which communities are meeting these goals, progress appears limited. National studies investigating the degree to which school-based tested and effective prevention programs have been implemented with fidelity (that is, in adherence to guidelines regarding the program’s content, duration, and delivery methods) have indicated very poor implementation quality (Gottfredson and Gottfredson 2002; Hallfors and Godette 2002). Implementation fidelity challenges have also been reported in effectiveness trials of other types of evidence-based programs (Elliott and Mihalic 2004; Griner Hill et al. 2006; Henggeler et al. 1997; Polizzi Fox et al. 2004). These findings suggest that implementation quality is likely to be compromised when programs are replicated without the high levels of training, technical assistance, and supervision provided during controlled research trials. However, this hypothesis requires further testing.

Barriers to increasing the dissemination of effective prevention strategies also exist. Ringwalt et al. (2011) found that 47% of a national sample of middle schools reported using an evidence-based program in 2008, but only 26% reported using such programs “the most” out of all of the prevention activities they implemented, and only 10% of high schools reported using effective, universal drug prevention curricula (Ringwalt et al. 2008). Researchers have also noted limited adoption of effective prevention strategies by other types of community agencies (Kumpfer and Alvarado 2003; Printz et al. 2009; Saul et al. 2008). The lack of widespread dissemination emphasizes the need to identify strategies that will help increase the spread of prevention services.

Research related to sustainability has included examination of the continuation of discrete prevention activities, on-going improvements in organizational and service provider capacity to conduct effective prevention activities, and long-term community support for prevention (Altman 1995; Gruen et al. 2008; Scheirer 2005; Shediac-Rizkallah and Bone 1998). Naturalistic experiments (i.e., those conducted without intensive oversight and technical assistance from program developers) investigating the degree to which particular prevention programs have been sustained in communities suggest that sustainability is more likely given program and organizational factors including integration between the program and implementing agency, organizational stability, presence of strong supporters (i.e., “champions”), and financial resources (August et al. 2006; Elliott and Mihalic 2004; Fagen and Flay 2009; Gruen et al. 2008; Kalafat and Ryerson 1999; Scheirer 2005). Less research has examined community-level factors that enhance program maintenance, such as support from community leaders, community member participation in prevention activities, and community-wide commitment to the principles of prevention science. Increasing knowledge regarding factors that enhance sustainability is important given that greater levels of sustainability should lead to larger and longer-term benefits to communities (Scheirer 2005; Shediac-Rizkallah and Bone 1998).

The current study investigates the degree to which communities can enhance their ability to adopt, implement with fidelity, and sustain tested and effective prevention strategies using the Communities That Care (CTC) prevention system. CTC guides community-based prevention work in a five-phase process which includes: (1) assessing community readiness to undertake collaborative prevention efforts; (2) forming a diverse and representative prevention coalition; (3) using epidemiologic data to assess prevention needs; (4) choosing tested and effective prevention policies and programs to address these needs; and (5) implementing the new policies and programs with fidelity, monitoring implementation and impact, and using this information to improve prevention activities as needed (Hawkins and Catalano 1992; Hawkins et al. 2002). The CTC system provides communities with structured trainings and detailed manuals that not only facilitate the transfer of scientific knowledge from the research setting to the practice community, but also foster local capacity and promote community ownership and support for prevention in order to produce long-term, sustainable changes.

The Promise of Community Coalitions to Foster Effective Prevention Programming

Research has indicated that coalitions can be successful in changing targeted problem behaviors (David-Ferdon and Hammond 2008; Spoth et al. 2007; Stevenson and Mitchell 2003; Wandersman and Florin 2003), but it is also true that not all coalitions are equally effective. Until recently, coalition success was considered contingent upon bringing together diverse and committed stakeholders and allowing them to identify their community’s needs and implement strategies they believed would best address these needs (Hallfors and Godette 2002). Evidence from scientifically rigorous evaluations of coalitions now suggests that success is most likely for coalitions that have clearly defined, focused, and manageable goals; collect high-quality, epidemiologic data to identify areas of need; address these needs using prevention strategies that have previously been shown to be effective; and carefully monitor the quality of implementation of prevention activities (Hallfors et al. 2002; Hawkins et al. 2002; Wandersman and Florin 2003). Quasi-experimental and experimental evaluations of the CTC prevention model, which meets the above criteria, have demonstrated that it significantly reduces substance use and delinquency among adolescents (Feinberg et al. 2007; Feinberg et al. 2010; Hawkins et al. 2009).

Prevention scientists have posited that community-based coalitions like CTC can produce behavior changes in part by increasing the adoption, high quality implementation, and sustainability of effective prevention strategies. Communities vary in their prevention needs and in the barriers that may hinder the adoption of prevention strategies, and local stakeholders should be better able than outsiders to identify such issues and effectively address them (David-Ferdon and Hammond 2008; Hawkins et al. 2002). Likewise, involving community members directly in prevention activities, rather than having them serve as the objects of scientist-led research activities, should foster local ownership and support for prevention and increase program adoption and sustainability (Altman 1995; Shediac-Rizkallah and Bone 1998). By pooling human and financial resources across various sectors of the community, coalitions can help local organizations increase their general capacity to implement prevention activities (Zakocs and Guckenburg 2007).

Research regarding the extent to which coalitions engage in the types of activities associated with success, including their ability to adopt, faithfully replicate, and sustain prevention programming, is limited. Available evidence has indicated much variation in these practices (Flewelling et al. 2005; Hallfors et al. 2002), although evaluations of the CTC model have been more positive. A quasi-experimental study of the CTC model in Pennsylvania found that better functioning CTC coalitions were more likely than lower functioning CTC coalitions to implement effective prevention programs (Brown et al. 2010), but having a coalition provide oversight or funding to programs was not associated with program sustainability (Tibbits et al. 2010). Data collected from coalition leaders during a randomized, controlled evaluation of CTC in 24 communities demonstrated that coalitions using the CTC approach were more likely than other types of prevention coalitions (across the intervention and control communities) to implement at least two tested and effective programs and to monitor the quality of these interventions (Arthur et al. 2010). Surveys of prevention providers in these communities also demonstrated higher rates of program adoption and participation, but not implementation fidelity, in CTC versus control communities, 3.5 years after the CTC model was adopted (Fagan et al. in press).

The current study investigates the degree to which CTC communities involved in the randomized trial maintained their focus on using and implementing with fidelity tested and effective prevention strategies, relative to control communities, 1.5 years after the end of proactive training and technical assistance to intervention sites. This study is one of very few to utilize a rigorous, experimental design to examine the extent to which community-level factors—in this case, the use of a coalition-based prevention system (CTC) that emphasizes community collaboration, widespread commitment to and participation in prevention activities, and support for a science-based approach to prevention—are related to the adoption, dissemination, high quality delivery, and sustainability of prevention programs. The methods used to collect data on these outcomes are also innovative, in that few evaluations have attempted to survey both program administrators and staff regarding their use of an array of tested and effective prevention programs.

Methods

Study Description

This study utilizes data from the Community Youth Development Study (CYDS), a ten-year study involving 12 pairs of small- to medium-sized communities in seven states matched within state with regard to size, poverty, diversity, and crime indices. In the fall of 2002, one member of each matched pair was randomized to the CTC intervention (N = 12) or the control condition (N = 12) (Hawkins et al. 2008). Control communities conducted prevention planning and programming according to their usual methods and received no resources or services other than small incentives for participation in data collection efforts. For the first five years of the study (Spring 2003 to Spring 2008)—the intervention phase—each of the 12 intervention communities was provided with training in the CTC model and regular technical assistance via telephone calls, e-mail correspondence, and site visits at least once annually. They also received funding for a full-time CTC coordinator and up to $75,000 annually in Years 2–5 to implement tested and effective prevention services that targeted fifth- to ninth-grade students (the focus age group of this phase of the study) and their families. Data from this phase of the study indicated that the 12 intervention communities implemented the CTC system as a whole with very high rates of implementation fidelity (Fagan et al. 2009). In Years 6–7 (Spring 2008 to Spring 2010)—the beginning of the sustainability phase—intervention communities received no funding to implement the CTC model or prevention services and very limited technical assistance.

Data Collection Process

The Community Resource Documentation (CRD) surveys assessed the number, scope and quality of delivery of tested and effective prevention services in communities. This paper relied on CRD data collected in all 24 study communities in 2006–2007 (3.5 years after the study began) and 2009–2010 (6.5 years after the study began and 1.5 years into the sustainability phase). The CRD involved multiple components, including structured telephone interviews with the directors and service providers of agencies and coalitions implementing prevention services, mail surveys of school administrators (in 2007 only), and internet-based surveys of teachers (see Footnote 1).

A three-tiered snowball sampling approach was used to generate the sample (Fagan et al. in press). In Tier 1, Community Key Informant interviews (Arthur et al. 2002) were conducted in each community with 10 positional leaders (e.g., the mayor, school superintendent, police chief) and 5 community leaders identified by the positional leaders as those most knowledgeable about community prevention. Respondents were asked to provide contact information for directors of all community agencies, organizations, and coalitions providing prevention services in their communities. In Tier 2, telephone interviews were conducted with these nominees, who were asked to name the prevention programs their organizations delivered or sponsored in the past year, with ‘program’ defined as: A defined set of services with set activities (sessions, classes or meeting times) that are provided to a defined group of people (members of the community, customers or participants). Respondents were asked to nominate only programs which served the target community and focused on the prevention (not treatment) of problem behaviors, and to focus on four program types:

1. Parent Training: Programs that use curricula to teach parents skills for effective parenting.
2. Social and Emotional Competence: Programs that use curricula to teach emotional, social and behavioral skills to prevent adolescent drug use and/or other problem behaviors.
3. Mentoring: Programs that match adults or older teens with children in a supervised one-on-one relationship for at least one school year.
4. Tutoring: Programs that link children with trained tutors (older children or adults) to improve academic skills or performance.

For each program that met these criteria, respondents provided contact information for the program coordinator(s), who were later interviewed. Also during Tier 2, in 2007 only, principals of all public elementary, middle, and high schools in the 24 communities were mailed surveys and asked to identify prevention programs occurring in their schools and contact information for their coordinators, who were later interviewed. As shown in Table 1, response rates were high across all of the CRD interviews. In each year, 95% of the eligible population of agency and coalition directors was interviewed, and 82% of the eligible administrators completed the principal survey in 2007.

Tier 3 of the CRD process was designed to verify the adoption and assess the implementation fidelity of identified tested and effective programs. Program coordinators and staff identified during Tier 2 were surveyed using computer-assisted telephone interviews (CATI), with very good response rates (92% in 2007 and 93% in 2010; see Table 1). Respondents were asked to verify that each identified program was currently being offered, was prevention-focused, and served at least one parent or youth in the targeted age group (Grades 5–8 in 2007 and Grades 5–10 in 2010). Respondents in the Parent Training, Social Competence, and Mentoring interviews were presented with a list of prevention programs previously demonstrated in at least one high quality research trial to reduce problem behaviors, as identified in the CTC Prevention Strategies Guide (http://www.sdrg.org/ctcresource/) or through reviews conducted by the research team (see Footnote 2). Respondents were asked, “In the past year, did your program use any of the following curricula?” and (in the Parent Training and Social Competence interviews), “Is there a primary curriculum from which [your program] draws?” Respondents who identified one of the listed programs in response to either question were considered adopters. Adoption of a tested and effective Tutoring program was contingent on affirmative responses to five items indicating that tutors were screened before acceptance and trained, tutors were supervised, tutoring sessions occurred at least twice a week, there was a tutor to tutee ratio of less than 1:5, and changes in tutees’ performance were evaluated. Across all program types, respondents were also asked to report the total number of participants served by the program in the past year.

An internet-based survey of teachers was used to measure the adoption and fidelity of tested and effective programs delivered in classrooms. Eligible teachers were all those in participating schools who taught students in Grades 5–9 in 2007 and Grades 6–12 in 2010 and whose principals allowed them to participate. Response rates were 80% in 2007 and 70% in 2010 (see Table 1). One of the intervention communities refused to participate in the survey each year, and data from this community and its matched pair were not included in the analyses. In 2010, one of the control communities did not allow teachers to complete the survey, but the contact person for the school district provided information on the number of programs implemented and participants served across all schools; because implementation fidelity data were not provided by the contact, this outcome could not be assessed in this community or in its matched pair.

To be identified as adopting a classroom-based program, teachers first had to report that they had taught prevention curricula, then were asked whether or not they used each of the tested, effective programs listed on a menu derived from the CTC Prevention Strategies Guide and reviews conducted by the research team. For each program, teachers were presented with the program name, logo if available, name of the program developer and/or distribution company, and a short description of the program. Program adoption was considered to have occurred when teachers reported delivery of one of these programs in the current school year, excepting those who reported delivery of: (1) universal programs (i.e., programs designed to be taught to all students in a classroom and/or school) to fewer than 10 children (unless they taught special education populations); (2) programs to zero students; or (3) 10 or more programs. If the Olweus Bullying Prevention Program was reported by only one teacher in a school, it was not considered to have been adopted unless confirmed on the Principal Survey (in 2007), given that it is a school-wide strategy and use by only one teacher was considered too large a deviation from the program as designed. Teachers were also asked to report, using open-ended questions, the total number of students receiving each identified program.
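
The exclusion rules for teacher-reported adoption can be illustrated with a short sketch. This is not the study's code; the class, field, and function names are hypothetical, and the reading of rule (3) as "the teacher reported delivering 10 or more programs" is an assumption. The school-level confirmation rule for the Olweus Bullying Prevention Program is omitted.

```python
from dataclasses import dataclass


@dataclass
class TeacherReport:
    """One teacher's report of one program (hypothetical structure)."""
    program: str
    students_served: int
    is_universal: bool
    teaches_special_ed: bool = False


MIN_UNIVERSAL_STUDENTS = 10     # rule (1): universal programs need 10+ students
MAX_PROGRAMS_PER_TEACHER = 10   # rule (3): assumed to mean 10+ programs per teacher


def counts_as_adoption(report: TeacherReport, programs_reported_by_teacher: int) -> bool:
    """Apply the three exclusion rules described in the text."""
    if programs_reported_by_teacher >= MAX_PROGRAMS_PER_TEACHER:
        return False  # rule (3): implausibly many programs reported
    if report.students_served == 0:
        return False  # rule (2): program delivered to zero students
    if (report.is_universal
            and report.students_served < MIN_UNIVERSAL_STUDENTS
            and not report.teaches_special_ed):
        return False  # rule (1): universal program reaching too few students
    return True


# Hypothetical example: a universal program taught to 6 students
# counts only if the teacher serves a special education population.
small_class = TeacherReport("Program A", 6, is_universal=True)
small_sped = TeacherReport("Program A", 6, is_universal=True, teaches_special_ed=True)
print(counts_as_adoption(small_class, 2), counts_as_adoption(small_sped, 2))
```

Keeping the thresholds as named constants makes the screening assumptions explicit and easy to vary in a sensitivity check.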

Program Fidelity Measures

Program adopters were asked additional questions to assess aspects of implementation fidelity identified as important in the literature (Dusenbury et al. 2003; Fixsen et al. 2005), including: adherence (implementing the core components of the program), dosage (teaching the required number of lessons with the recommended length and frequency), participant responsiveness (regular attendance), and program oversight (monitoring and evaluating implementation procedures). Table 2 lists the fidelity components included in this study and how they were measured via the CRD. Items differed somewhat across program types, given differences in the nature and content of the strategies, and fewer fidelity questions were asked of teachers to reduce respondent burden. Unless otherwise noted in the table, items assessed fidelity constructs using dichotomous ratings which were averaged across all respondents in each community. Respondents were to rate implementation practices occurring during the past year, and if programs were offered multiple times during the year, to consider all program offerings in their responses.

Statistical Procedures

Program adoption was calculated as the total number of tested and effective programs offered in each of the 24 communities in 2007 and 2010, as well as in the 12 intervention communities combined and the 12 control communities combined in these years. Sustainability of programs was calculated as the number of programs reported consecutively in 2007 and 2010, divided by the total number of programs delivered in 2010. To calculate implementation fidelity scores, if multiple respondents in the same community or school indicated implementation of the same program, their responses were averaged to calculate the overall fidelity score for that program. Fidelity scores for each community were calculated by averaging scores across all programs operating in each community, and for CTC versus control communities by averaging scores across intervention conditions. Adoption, sustainability, and fidelity outcomes were also examined within each of the four program types included in Program Interviews, based on averaging scores across all programs in each category. These results are mentioned when relevant to indicate major differences in implementation across program types, but discussion is limited given the small number of programs identified within each program type.
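
As an illustration of the sustainability and fidelity calculations described above, the following minimal sketch uses invented program names and ratings. The function names are hypothetical, and the code is not the analysis code used in the study.

```python
from statistics import mean


def sustainability_rate(programs_2007, programs_2010):
    """Programs reported in both years, divided by programs delivered in 2010."""
    delivered_2010 = set(programs_2010)
    if not delivered_2010:
        return None  # undefined when no programs were delivered in 2010
    sustained = set(programs_2007) & delivered_2010
    return len(sustained) / len(delivered_2010)


def community_fidelity(responses):
    """Average respondents within each program, then average across programs.

    `responses` maps a program name to a list of respondent ratings
    (for example, dichotomous 0/1 items).
    """
    program_scores = [mean(scores) for scores in responses.values() if scores]
    return mean(program_scores) if program_scores else None


# Hypothetical community: two of the four 2010 programs were also reported in 2007.
programs_2007 = {"Program A", "Program B", "Program C"}
programs_2010 = {"Program B", "Program C", "Program D", "Program E"}
rate = sustainability_rate(programs_2007, programs_2010)

# Hypothetical ratings: Program B averages 0.75 and Program C averages 1.0.
ratings = {"Program B": [1, 0, 1, 1], "Program C": [1, 1]}
fidelity = community_fidelity(ratings)
print(rate, fidelity)
```

Averaging within programs before averaging across programs keeps a program with many respondents from dominating the community score.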

Tests of statistical significance were conducted using the Wilcoxon Signed Ranks Test (http://www.fon.hum.uva.nl/Service/Statistics/Signed_Rank_Test.html), a non-parametric statistic that makes paired comparisons using two-tailed tests. It was chosen to account for the non-normally distributed data and the nesting of programs within communities. This test allowed investigation of the degree of difference between each intervention community and its randomly assigned matched control community in each of the measured outcomes.
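
For readers unfamiliar with the test, the sketch below shows one way a two-tailed Wilcoxon signed-rank test on matched pairs could be computed exactly by enumerating sign assignments, which is feasible for 12 pairs. The community values are invented for illustration, the function names are hypothetical, and this is not the online calculator the analyses relied on. Pairs with a zero difference are dropped, which is a common convention and an assumption here.

```python
from itertools import product


def signed_ranks(diffs):
    """Rank non-zero differences by absolute size, averaging tied ranks."""
    nonzero = [d for d in diffs if d != 0]
    ordered = sorted(abs(d) for d in nonzero)
    rank_of = {}
    i = 0
    while i < len(ordered):
        j = i
        while j < len(ordered) and ordered[j] == ordered[i]:
            j += 1
        rank_of[ordered[i]] = (i + 1 + j) / 2  # mean of ranks i+1 .. j
        i = j
    return [rank_of[abs(d)] if d > 0 else -rank_of[abs(d)] for d in nonzero]


def wilcoxon_exact(x, y):
    """Return (W, p) where W = min(W+, W-) and p is the exact two-tailed p-value."""
    ranks = signed_ranks([a - b for a, b in zip(x, y)])
    magnitudes = [abs(r) for r in ranks]
    total = sum(magnitudes)
    w_plus = sum(r for r in ranks if r > 0)
    w_obs = min(w_plus, total - w_plus)
    # Under the null hypothesis every sign pattern is equally likely.
    extreme = 0
    for signs in product((0, 1), repeat=len(magnitudes)):
        w = sum(m for m, s in zip(magnitudes, signs) if s)
        if min(w, total - w) <= w_obs:
            extreme += 1
    return w_obs, extreme / 2 ** len(magnitudes)


# Hypothetical program counts for 12 matched pairs (intervention, control).
ctc = [4, 3, 5, 2, 4, 6, 3, 4, 2, 5, 3, 3]
control = [2, 1, 3, 2, 1, 2, 3, 1, 2, 2, 1, 4]
w_stat, p_value = wilcoxon_exact(ctc, control)
print(w_stat, p_value)
```

Because the unit of comparison is the matched community pair, each pair contributes a single difference regardless of how many programs or respondents it contains.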

Missing data were not included in the analyses, given very low rates of missingness at both time points. In 2007, rates of missing data were 6.1% for the Program Interviews (3.7% in intervention communities and 11.1% in control communities) and 0.2% for the Teacher Surveys. In 2010, rates of missing data were 3.5% for the Program Interviews (4.6% in intervention communities and 1.8% in control communities) and 0.04% for the Teacher Surveys.

Results

Tested and Effective Program Adoption, Sustainability, and Participation

Table 3 provides results related to the adoption and sustainability of tested and effective programs and program participation in CTC and control communities. According to the Program Interviews, the CTC intervention communities adopted significantly (p < 0.05) more tested and effective programs compared to control communities in 2007 and in 2010. In 2007, 3.5 years after the research study had begun, respondents in CTC communities reported adoption of 44 tested and effective prevention programs, whereas 19 such programs were reported in the control communities. In 2010, 1.5 years after the end of training, technical assistance, and funding to intervention sites, respondents in CTC communities reported the implementation of 43 tested and effective programs, compared to 26 in control communities. Intervention differences in program adoption favoring CTC communities were found for each program type in 2007 and 2010, with the largest differences evidenced for Parent Training and Tutoring programs (results not shown). Teachers reported a greater number of tested and effective programs in CTC versus control communities in both years, but these differences were not statistically significant (see Table 3).

CTC communities reported higher rates of program sustainability than control communities, as shown in Table 3. According to the Program Interviews, about three-fourths (78%) of the programs offered in intervention communities in 2010 were also delivered in 2007, compared to 43% of programs in control communities, a statistically significant difference. The Teacher Survey data also showed higher rates of sustainability in CTC communities, with 48% of programs sustained from 2007 to 2010, compared to 18% of programs in the control communities; however, this difference was not statistically significant.

Intervention effects favoring CTC sites were also demonstrated for program participation, as shown in Table 3. According to the Program Interviews, CTC communities delivered tested and effective programs to significantly more youth and parents in 2007 compared to control communities. Participation nearly doubled in intervention sites from 2007 to 2010 (from 11,261 to 20,932 participants), compared to smaller increases in control communities (from 3,864 to 5,220 participants), though the intervention effect was only marginally significant (p < 0.10) in 2010. Comparisons across program types indicated that CTC communities served a greater number of participants than did control communities with all types of programs in both years, with the exception of Mentoring programs in 2010. In both conditions, participation was most difficult to achieve in Parent Training and Mentoring programs; for example, only 110 families received Parent Training programs in the 12 control communities in 2007 and 63 families participated in such programs in 2010 (results not shown).

According to the Teacher Surveys, more students received tested and effective school-based programming in CTC communities than in control communities in both 2007 and 2010 (see Table 3). The intervention effect was statistically significant in 2007 and marginally significant (p < 0.10) in 2010. In contrast to the Program Interviews, teachers in both conditions reported fewer participants in 2010 compared to 2007, likely due to the lower numbers of tested and effective school programs reported in both conditions during the sustainability phase.

Program Fidelity

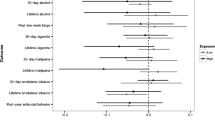

Table 4 presents information regarding the implementation fidelity of prevention programs in CTC and control communities during the intervention and sustainability phases of the project. Across all outcomes, respondents in both conditions reported relatively high rates of program compliance according to Program Interviews and relatively low rates of compliance according to the Teacher Surveys. Results were similar for intervention and control conditions, and there was only one statistically significant (p < 0.05) difference favoring CTC sites in terms of providing higher levels of program monitoring in 2010.

Adherence

Program Interview respondents in both CTC and control communities reported very high rates of program adherence with no significant differences between conditions (see Table 4). In 2007, over 70% of respondents in both conditions reported that staff were trained by program developers, that implementer and participant materials were purchased, and that the majority of the required core components and content were delivered to participants. Strong levels of adherence were sustained in 2010 in both CTC and control communities. Only one adherence measure, staff training, was measured on the Teacher Surveys, and only about half of the respondents in each condition reported that staff were trained by program developers in each year. A larger proportion of teachers (approximately two-thirds to three-fourths of all respondents; results not shown) reported receiving any type of training (e.g., reading the curriculum prior to class or receiving mentoring by other staff who had used the program), but these rates were reduced when limited to those receiving more rigorous and structured training (i.e., training delivered by program developers or their designated trainers).

Dosage

Data collected from the Program Interviews showed very high rates of dosage in both intervention and control communities, with no significant differences between conditions. In 2007, over 90% of the required number of lessons (or meetings, for Mentoring programs) were implemented in each condition, and high dosage levels were sustained in 2010 (see Table 4). These results were consistent across program types, although information on dosage was difficult to collect for Mentoring programs, as most respondents reported that they did not know or refused to report how often mentors and mentees were meeting.

Participant Responsiveness

Although rates of participation were higher in CTC compared to control communities (see Table 3), regular attendance at program sessions was evidenced in both CTC and control communities according to Program Interviews (see Table 4). About 80% of participants were reported as having attended the majority of offered sessions in both conditions in 2007, and nearly identical rates were demonstrated in the sustainability phase of the project.

Program Oversight

Significant (p < 0.05) intervention effects favoring CTC communities were found for one of the four measures of program oversight assessed via Program Interviews (see Table 4). In 2010, respondents in the CTC communities were significantly more likely than those in control communities to report monitoring program implementation. CTC sites were also more likely to report using information about implementation to improve the quality of delivery of programs (i.e., “quality assurance”), but this difference only approached significance in 2010 (p < 0.10). Both intervention and control communities reported high rates of program evaluation and staff coaching (i.e., providing staff with supervision and support) at both time points. Program oversight was less likely to be reported by teachers compared to respondents in the Program Interviews. In each year, only one-fourth to one-half of teachers reported that programs were monitored, while 24–35% reported that pre/post surveys of participants were used to evaluate program effectiveness.

Discussion

Enhancing communities’ ability to replicate, effectively implement, and sustain tested and effective preventive interventions is a priority of prevention science, but information on how to do so is lacking (Elliott and Mihalic 2004; Glasgow et al. 2003; Rohrbach et al. 2006; Spoth et al. 2008). Using data from a randomized controlled trial involving 24 communities, 12 of which implemented the Communities That Care (CTC) prevention system, and 12 of which conducted prevention activities as usual, this paper investigated the degree to which CTC increased the adoption, dissemination, implementation fidelity, and sustainability of tested and effective prevention strategies. Outcomes were assessed in 2007, 3.5 years after the CTC system was begun in intervention communities, and in 2010, 6.5 years after baseline and 1.5 years following the end of training, proactive technical assistance, and funding to intervention communities.

According to community agency directors and prevention program providers, CTC communities implemented significantly more tested and effective prevention programs in 2007 and 2010 and had higher rates of program sustainability compared to control communities. CTC sites also reached more children and families with prevention services at each time point, although the difference was significant only during the intervention phase of the research project (in 2007). Teachers reported that CTC sites implemented more school-based programs in 2007 and 2010 and were more likely to sustain these programs, compared to control communities, although these differences were not statistically significant. School programs reached significantly more students in CTC versus control communities in 2007 and somewhat more students in 2010. Only one significant intervention effect related to implementation fidelity was found, which indicated that CTC sites provided more program oversight during the sustainability phase of the project compared to control sites.

The results suggest that utilization of the CTC prevention system can increase the adoption, dissemination, and sustainability of science-based prevention activities. The positive findings regarding enhanced program adoption and dissemination during the intervention phase of the study have been reported previously (Fagan et al. in press). Analyses based on CRD data from 2001 to 2007 showed that, prior to adoption of the CTC system, intervention and control communities reported similar use of and participation in effective interventions, but 3.5 years post-baseline (in 2007), CTC communities reported higher rates of program adoption and participation compared to control communities, and similar levels of implementation fidelity. The positive effects on program adoption and participation were anticipated given that CTC sites received training, technical assistance, and funding during the intervention period to facilitate these outcomes. In addition, a separate process evaluation of intervention sites demonstrated that these communities implemented the CTC process itself with very high rates of implementation fidelity (Fagan et al. 2009). Nonetheless, program adoption was challenging for sites, particularly when trying to install new school-based programs (Fagan et al. 2009). Adoption of school programs required cultivation of champions within the school district, including administrators and teachers, and many conversations to determine how to best integrate new programs into the school. While all intervention communities eventually adopted new tested and effective school programs, success was contingent upon coalition members’ dedication to ensuring that youth received high quality, effective prevention programming, which was likely enhanced through CTC training.

Intervention communities also faced significant difficulties ensuring high rates of participation, particularly when trying to recruit families into universal, parent training interventions. Communications with intervention sites by the research team revealed that parent training classes offered prior to the study were typically implemented only once or twice a year to small numbers of families. Because the CTC model promotes widespread dissemination of prevention services in order to effect community-level changes in behavior, intervention sites were encouraged to set relatively ambitious participation goals and to work diligently to meet these goals (Fagan et al. 2009). Similar recruitment challenges were faced by communities implementing mentoring and tutoring programs. It is likely that the intervention effects related to program participation reflect the intervention communities’ increased commitment to expanding participation and changing their delivery methods to do so, whereas control communities were more likely to conduct “business as usual.”

The sustained effects in program adoption and dissemination favoring CTC communities reported in the current paper are encouraging and suggest that use of the CTC system does enhance local capacity to implement and sustain effective prevention programming, in both the short and long term. Prior research has suggested that program sustainability is contingent upon a variety of program-, organizational-, and community-level factors (August et al. 2006; Fixsen et al. 2005; Johnson et al. 2004; Scheirer 2005), but few studies have investigated the impact of these factors, particularly community-level processes, using rigorous scientific methods. While this study did not examine the influence of discrete community factors on outcomes, it did investigate the degree to which the use of the CTC system, designed to enhance multiple organizational and community-level factors thought to be related to sustainability, led to increased dissemination and maintenance of prevention programs. We believe this is the first randomized, controlled evaluation to demonstrate the ability of a community-level intervention to result in the sustained use of effective prevention programs.

CTC provides diverse and broad-based community coalitions with a structured, science-based approach to selecting and effectively implementing prevention activities that have been previously tested and demonstrated as effective in reducing youth problem behaviors (Hawkins et al. 2002). Using a manualized and structured training process, CTC educates coalition members in how to identify elevated risk factors and depressed protective factors faced by community youth, assess current prevention services operating in the community, and fill gaps in these services with interventions selected from a menu of options listing tested and effective prevention strategies. By training coalition members from multiple community organizations in the importance of using, monitoring, and evaluating tested and effective prevention services, CTC enhances the general capacity of agencies, which has also been linked to increased sustainability (Elliott and Mihalic 2004; Fixsen et al. 2005; Johnson et al. 2004). By involving community members in prevention efforts, encouraging collaboration across sectors, and seeking changes in community norms related to problem behaviors, CTC fosters a more supportive environment in which to conduct prevention activities, which should promote sustainability (Gruen et al. 2008; Scheirer 2005; Shediac-Rizkallah and Bone 1998). It should also be noted that the CTC framework does not advocate the use of particular programs or require that communities reach a certain percentage of residents with services. Instead, the system emphasizes that community-wide changes in problem behaviors are more likely when coalitions select interventions that match the particular needs of their community and (in the case of universal interventions) implement them widely to reach the greatest number of residents.

The lack of intervention effects related to implementation fidelity is surprising given that, following the CTC model, training and technical assistance were provided during the intervention phase of this study to help intervention sites monitor the quality of implementation efforts and make changes as needed to ensure the quality and sustainability of services. We expected that this capacity-building would enhance implementation quality in the short and long term. It is possible, however, that practitioners in control communities have also become aware of the need to adhere to program guidelines and evaluate prevention practices. Alternatively, the high rates of fidelity reported in both study conditions may reflect social desirability, given that implementation quality was self-reported by program staff, and research has demonstrated that self-reports tend to produce higher rates of fidelity than data collected from independent observers (Dusenbury et al. 2003; Lillehoj et al. 2004; Melde et al. 2006). It was not feasible to collect data on implementation quality in all communities using independent observers, given the scope of services and number of communities involved in the project, and this is a limitation of the study. It may also be that the CRD survey items were too general to capture true differences in the quality of implementation between sites. In fact, responses to detailed follow-up questions typically revealed lower rates of fidelity compared to the more general screening questions; for example, most respondents reported that programs were evaluated, but fewer indicated the use of rigorous methods of evaluation. Future investigations assessing implementation fidelity across multiple programs and sites should seek to monitor fidelity using observations and, if conducting staff interviews, ensure that questions probe as closely as possible into practices to ensure the validity of results.

The finding that lower rates of implementation fidelity were reported by teachers compared to staff from other community agencies is consistent with past studies reporting poor implementation of school-based prevention activities (Gottfredson and Gottfredson 2002; Hallfors and Godette 2002). While the separate process evaluation of intervention sites, which relied on self-reported data from teachers and independent observations of lessons, indicated high rates of fidelity in the school-based programs funded by the project (Fagan et al. 2008, 2009), the current data suggest that these results did not generalize to the other school-based programs operating in intervention communities and included in the CRD surveys. Although few studies have assessed whether implementation fidelity predictors vary according to program setting or if schools have unique challenges that make fidelity less likely, Dariotis et al. (2008) also found lower rates of fidelity in school-based versus community-based programs implemented in Pennsylvania. They reported that schools had more difficulty cultivating champions, ensuring that prevention was a priority within the organization, and engendering parent and community support for prevention programs. Additional research identifying barriers to and facilitators of implementation fidelity across program types could help promote effective community-based prevention programming.

The findings from the current study, based on data from a large and representative sample of community agency and coalition directors, program providers, and teachers, indicate that use of the Communities That Care system can help increase the adoption, dissemination, and sustainability of effective prevention practices. The study also describes a methodology for collecting information on these outcomes, which is important given that few studies have systematically investigated the spread and quality of prevention services across an array of program types. Clearly, more investigation is needed to identify the extent to which communities effectively adopt, implement with fidelity, and sustain tested and effective prevention programs, as well as the factors that make these outcomes more or less achievable, and we hope that the results of the current study are useful in generating additional research in this area.

Notes

While agency and coalition interviews occurred in the fall of 2006 and 2009 and teacher surveys occurred in the spring of 2007 and 2010, we refer to the two data collection time points as 2007 and 2010.

A list of the programs included on the CRD surveys is available upon request from Blair Brooke-Weiss (bbrooke@u.washington.edu) at the Social Development Research Group.

References

Altman, D. G. (1995). Sustaining interventions in community systems: On the relationship between researchers and communities. Health Psychology, 14, 526–536.

Arthur, M. W., Hawkins, J. D., Brown, E. C., Briney, J. S., Oesterle, S., & Abbott, R. D. (2010). Implementation of the Communities That Care prevention system by coalitions in the Community Youth Development Study. Journal of Community Psychology, 38, 245–258.

Arthur, M. W., Hawkins, J. D., Catalano, R. F., & Olson, J. J. (2002). Community key informant survey. Seattle, WA: Social Development Research Group, University of Washington.

August, G. J., Bloomquist, M. L., Lee, S. S., Realmuto, G. M., & Hektner, J. M. (2006). Can evidence-based prevention programs be sustained in community practice settings? The Early Risers’ advanced-stage effectiveness trial. Prevention Science, 7, 151–165.

Brown, L. D., Feinberg, M. E., & Greenberg, M. T. (2010). Determinants of community coalition ability to support evidence-based programs. Prevention Science, 11, 287–297.

Dariotis, J. K., Bumbarger, B. K., Duncan, L. G., & Greenberg, M. (2008). How do implementation efforts relate to program adherence? Examining the role of organizational, implementer, and program factors. Journal of Community Psychology, 36, 744–760.

David-Ferdon, C., & Hammond, W. R. (2008). Community mobilization to prevent youth violence and to create safer communities. American Journal of Preventive Medicine, 34, S1–S2.

Dusenbury, L., Brannigan, R., Falco, M., & Hansen, W. B. (2003). A review of research on fidelity of implementation: Implications for drug abuse prevention in school settings. Health Education Research, 18, 237–256.

Elliott, D. S., & Mihalic, S. (2004). Issues in disseminating and replicating effective prevention programs. Prevention Science, 5, 47–53.

Fagan, A. A., Arthur, M. W., Hanson, K., Briney, J. S., & Hawkins, J. D. (in press). Effects of Communities That Care on the adoption and implementation fidelity of evidence-based prevention programs in communities: Results from a randomized controlled trial. Prevention Science. doi:10.1007/s11121-011-0226-5.

Fagan, A. A., Brooke-Weiss, B., Cady, R., & Hawkins, J. D. (2009a). If at first you don’t succeed … keep trying: Strategies to enhance coalition/school partnerships to implement school-based prevention programming. Australian and New Zealand Journal of Criminology, 42, 387–405.

Fagan, A. A., Hanson, K., Hawkins, J. D., & Arthur, M. W. (2008). Bridging science to practice: Achieving prevention program fidelity in the Community Youth Development Study. American Journal of Community Psychology, 41, 235–249.

Fagan, A. A., Hanson, K., Hawkins, J. D., & Arthur, M. W. (2009b). Translational research in action: Implementation of the Communities That Care prevention system in 12 communities. Journal of Community Psychology, 37, 809–829.

Fagen, M. C., & Flay, B. R. (2009). Sustaining a school-based prevention program: Results from the Aban Aya sustainability project. Health Education and Behavior, 36, 9–23.

Feinberg, M. E., Greenberg, M. T., Osgood, D. W., Sartorius, J., & Bontempo, D. (2007). Effects of the Communities That Care model in Pennsylvania on youth risk and problem behaviors. Prevention Science, 8, 261–270.

Feinberg, M. E., Jones, D., Greenberg, M. T., Osgood, D. W., & Bontempo, D. (2010). Effects of the Communities That Care model in Pennsylvania on change in adolescent risk and problem behaviors. Prevention Science, 11, 163–171.

Fixsen, D. L., Naoom, S. F., Blase, K. A., Friedman, R. M., & Wallace, F. (2005). Implementation research: A synthesis of the literature. Tampa, FL: University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Research Network (FMHI Publication #231).

Flewelling, R. L., Austin, D., Hale, K., LaPlante, M., Liebig, M., Piasecki, L., et al. (2005). Implementing research-based substance abuse prevention in communities: Effects of a coalition-based prevention initiative in Vermont. Journal of Community Psychology, 33, 333–353.

Glasgow, R. E., Lichtenstein, E., & Marcus, A. C. (2003). Why don’t we see more translation of health promotion research to practice? Rethinking the efficacy-to-effectiveness transition. American Journal of Public Health, 93, 1261–1267.

Gottfredson, D. C., & Gottfredson, G. D. (2002). Quality of school-based prevention programs: Results from a national survey. Journal of Research in Crime and Delinquency, 39, 3–35.

Griner Hill, L., Maucione, K., & Hood, B. K. (2006). A focused approach to assessing program fidelity. Prevention Science, 8, 25–34.

Gruen, R. L., Elliott, J. H., Nolan, M. L., Lawton, P. D., Parkhill, A., McLaren, C. J., et al. (2008). Sustainability science: An integrated approach for health-programme planning. Lancet, 372, 1579–1589.

Hallfors, D., Cho, H., Livert, D., & Kadushin, C. (2002). Fighting back against substance use: Are community coalitions winning? American Journal of Preventive Medicine, 23, 237–245.

Hallfors, D., & Godette, D. (2002). Will the “Principles of effectiveness” improve prevention practice? Early findings from a diffusion study. Health Education Research, 17, 461–470.

Hawkins, J. D., & Catalano, R. F. (1992). Communities that care: Action for drug abuse prevention. San Francisco, CA: Jossey-Bass Publishers.

Hawkins, J. D., Catalano, R. F., & Arthur, M. W. (2002). Promoting science-based prevention in communities. Addictive Behaviors, 27, 951–976.

Hawkins, J. D., Catalano, R. F., Arthur, M. W., Egan, E., Brown, E. C., Abbott, R. D., et al. (2008). Testing Communities That Care: Rationale and design of the Community Youth Development Study. Prevention Science, 9, 178–190.

Hawkins, J. D., Oesterle, S., Brown, E. C., Arthur, M. W., Abbott, R. D., Fagan, A. A., et al. (2009). Results of a type 2 translational research trial to prevent adolescent drug use and delinquency: A test of Communities That Care. Archives of Pediatrics and Adolescent Medicine, 163, 789–798.

Henggeler, S. W., Melton, G. B., Brondino, M. J., Scherer, D. G., & Hanley, J. H. (1997). Multisystemic Therapy with violent and chronic juvenile offenders and their families: The role of treatment fidelity in successful dissemination. Journal of Consulting and Clinical Psychology, 65, 821–833.

Johnson, K., Hays, C., Center, H., & Daley, C. (2004). Building capacity and sustainable prevention innovations: A sustainability planning model. Evaluation and Program Planning, 27, 135–149.

Kalafat, J., & Ryerson, D. M. (1999). The implementation and institutionalization of a school-based youth suicide prevention program. The Journal of Primary Prevention, 19, 157–175.

Kumpfer, K. L., & Alvarado, R. (2003). Family-strengthening approaches for the prevention of youth problem behaviors. American Psychologist, 58, 457–465.

Lillehoj, C. J., Griffin, K. W., & Spoth, R. (2004). Program provider and observer ratings of school-based preventive intervention implementation: Agreement and relation to youth outcomes. Health Education and Behavior, 31, 242–257.

Melde, C., Esbensen, F.-A., & Tusinski, K. (2006). Addressing program fidelity using onsite observations and program provider descriptions of program delivery. Evaluation Review, 30, 714–740.

Polizzi Fox, D., Gottfredson, D. C., Kumpfer, K. L., & Beatty, P. (2004). Challenges in disseminating model programs: A qualitative analysis of the Strengthening Washington DC families program. Clinical Child and Family Psychology Review, 7, 165–176.

Prinz, R. J., Sanders, M. R., Shapiro, C. J., Whitaker, D. J., & Lutzker, J. R. (2009). Population-based prevention of child maltreatment: The US Triple P system population trial. Prevention Science, 10, 1–12.

Ringwalt, C., Hanley, S., Vincus, A. A., Ennett, S. T., Rohrbach, L. A., & Bowling, J. M. (2008). The prevalence of effective substance use prevention curricula in the nation’s high schools. Journal of Primary Prevention, 29, 479–488.

Ringwalt, C., Vincus, A. A., Hanley, S., Ennett, S. T., Bowling, J. M., & Haws, S. (2011). The prevalence of evidence-based drug use prevention curricula in US middle schools in 2008. Prevention Science, 12, 63–70.

Rohrbach, L. A., Grana, R., Sussman, S., & Valente, T. W. (2006). Type II translation: Transporting prevention interventions from research to real-world settings. Evaluation and the Health Professions, 29, 302–333.

Saul, J., Duffy, J., Noonan, R., Lubell, K., Wandersman, A., Flaspohler, P., et al. (2008a). Bridging science and practice in violence prevention: Addressing ten key challenges. American Journal of Community Psychology, 41, 197–205.

Saul, J., Wandersman, A., Flaspohler, P., Duffy, J., Lubell, K., & Noonan, R. (2008b). Research and action for bridging science and practice in prevention. American Journal of Community Psychology, 41, 165–170.

Scheirer, M. A. (2005). Is sustainability possible? A review and commentary on empirical studies of program sustainability. American Journal of Evaluation, 26, 320–347.

Shediac-Rizkallah, M. C., & Bone, L. R. (1998). Planning for the sustainability of community-based health programs: Conceptual frameworks and future directions for research, practice, and policy. Health Education Research, 13, 87–108.

Spoth, R. L., Redmond, C., Shin, C., Greenberg, M., Clair, S., & Feinberg, M. (2007). Substance use outcomes at eighteen months past baseline from the PROSPER community-university partnership trial. American Journal of Preventive Medicine, 32, 395–402.

Spoth, R. L., Rohrbach, L. A., Hawkins, J. D., Greenberg, M., Pentz, M. A., Robertson, E., et al. (2008). Type II translational research: Overview and definitions. Fairfax, VA: Society for Prevention Research. http://www.preventionscience.org/SPR_Type%202%20Translation%20Research_Overview%20and%20Definition.pdf.

Stevenson, J. F., & Mitchell, R. E. (2003). Community-level collaboration for substance abuse prevention. Journal of Primary Prevention, 23, 371–404.

Tibbits, M. K., Bumbarger, B., Kyler, S., & Perkins, D. F. (2010). Sustaining evidence-based interventions under real-world conditions: Results from a large-scale diffusion project. Prevention Science, 11, 252–262.

Wandersman, A., & Florin, P. (2003). Community intervention and effective prevention. American Psychologist, 58, 441–448.

Zakocs, R. C., & Guckenburg, S. (2007). What coalition factors foster community capacity? Lessons learned from the Fighting Back initiative. Health Education and Behavior, 34, 354–375.

Acknowledgments

This work was supported by research grants from the National Institute on Drug Abuse (R01 DA015183-03) with co-funding from the National Cancer Institute, the National Institute of Child Health and Human Development, the National Institute of Mental Health, the Center for Substance Abuse Prevention, and the National Institute on Alcohol Abuse and Alcoholism. The content of this paper is solely the responsibility of the authors and does not necessarily represent the official views of the funding agencies. The authors gratefully acknowledge the on-going participation in the study and data collection efforts of the residents of the 24 communities described in this paper.

Cite this article

Fagan, A.A., Hanson, K., Briney, J.S. et al. Sustaining the Utilization and High Quality Implementation of Tested and Effective Prevention Programs Using the Communities That Care Prevention System. Am J Community Psychol 49, 365–377 (2012). https://doi.org/10.1007/s10464-011-9463-9

DOI: https://doi.org/10.1007/s10464-011-9463-9