Abstract

There is a widespread consensus about the need for accreditation systems for evaluating postgraduate medical education programs, but accreditation systems differ substantially across countries. A cross-country comparison of accreditation systems could provide valuable input into policy development processes. We reviewed the accreditation systems of five countries: the United States, Canada, the United Kingdom, Germany and Israel. We used three information sources: a literature review, an online search for published information and applications to some accreditation authorities. We used template analysis for coding and identification of major themes. All five systems accredit according to standards and apply essentially the same accreditation tools: site-visits, annual data collection and self-evaluations. Differences were found in the format of standards and specifications, the application of tools and accreditation consequences. Over a 20-year period, the review identified a three-phased process of evolution: from a process-based accreditation system, through an adaptation phase, to the adoption of an outcome-based accreditation system. Based on the five-system comparison, we recommend that accrediting authorities: broaden the consequences scale; reconsider the site-visit policy; use multiple data sources; learn from other countries’ experiences with the move to an outcome-based system; and take the division of roles into account.

Introduction

Accreditation of Postgraduate Medical Education (PGME) is an ongoing process of quality evaluation and monitoring of medical resident (doctor in training/postgraduate trainee) training in an institution (a university, program, department, clinic and others). The 1910 Flexner report was the first to highlight the importance of standardizing medical education and establishing an accreditation process to ensure that standards are being met, while the 2010 Carnegie report stressed standardizing learning outcomes and general competencies instead of the length and structure of the curriculum (Irby et al. 2010). National accreditation systems are expected to develop criteria for assessment, define desired outputs, and make sure that graduates achieve adequate competencies to meet societal health needs (Frenk et al. 2010). There is a broad consensus that accreditation of PGME is needed, but there is no universal way of accomplishing this (WHO 2013).

In the early 2000s, a shift gradually emerged in PGME from time/process-based models, which focus on the process of training a resident within a certain time frame, to Competency Based Medical Education (CBME), an outcome-based approach to the design, implementation, assessment and evaluation of medical education programs, using an organizing framework of competencies (Frank et al. 2010). It is based on monitoring residents’ personal progress at each stage of training, until they reach the level of specialist.

Traditional process-based accreditation systems focused on the resources and structure required for training physicians, using structural parameters (the number of procedures, the number of senior physicians, a list of facilities and others), as well as on the process of formal teaching (Nasca et al. 2012). During the last few years, some countries began revising their accreditation systems in an attempt to examine not only the structure and process of training but also the outcomes of programs and learners (Manthous 2014).

Accreditation systems in medical education vary from country to country and sometimes within countries. Accreditation processes vary greatly worldwide, and the term “accreditation” is sometimes applied to processes that would not necessarily be accepted as “proper accreditation” (Karle 2006).

Accreditation systems have evolved based on environmental conditions, health system demands and other factors, but due to globalization, countries become acquainted with models existing in other countries and adopt new ideas. A vast body of literature describes medical education in the United States and Canada, but very little is written about accreditation of PGME, and even less is written about it in other countries. We aim to compare five accreditation systems, taking into consideration changes to these systems during the last 20 years. Our goal is to find both common principles and differences, which may help decision makers consider new ideas.

Methods

We focused our review on five systems of accreditation from different countries: the United States, Canada, the United Kingdom, Germany and Israel. We selected Western countries that have had PGME and accompanying accreditation systems for many years. Of all countries fitting these criteria, we looked for a diverse group, and thus included North American as well as European countries, countries differing in the number of physicians and residents, countries leading in medical education discussion and literature (such as the USA, the UK and Canada), and other countries where the systems are less publicized. Accessible information in English, either published or available on request based on personal connections, was another factor.

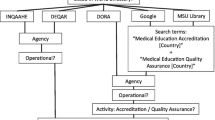

We based our comparison on three information sources. First, a literature review was conducted, using both PubMed and Web of Science searches, for articles concerning accreditation of PGME generally or specifically concerning one or more of the five selected countries. Second, an online search was conducted for all information and documents concerning accreditation of PGME published until December 2018 by each relevant accreditation authority and other health authorities in the five countries (including position papers, regulations, protocols, standards, syllabi and information published on each authority’s web site). Finally, a request for further details was e-mailed to accreditation authorities in Canada and Germany.

For our analysis of the information we used Template Analysis, as described by King (2012), which was chosen for its flexibility and its intuitive use. An a priori list of themes was set based on first reading impressions and the authors’ perceptions of the subject. An initial template was then constructed by reviewing documents concerning two countries (the United States and Canada), coding statements that related to the a priori list of themes, as well as any new themes identified. The codes were then clustered into meaningful groups, each with hierarchical connections between themes in the same cluster. Some themes that appeared on the a priori list were not included in the initial template, as they proved to have fewer references than anticipated. We selected themes by looking for those recurring in three or more of the countries examined. After reviewing all documents and marking all references to the relevant themes, we assembled our final template, which allowed a comparison among all five countries. Major themes emerging from the analysis were tabulated.

Results

Among the five countries we chose, we found diverse characteristics of PGME systems and health systems (Tables 1, 2).

How is accreditation performed?

Accreditation by standards

Accreditation by standards is a basic principle shared by most accreditation systems, though the standards differ in format and specification. The United States, Canada and Britain employ general standards for sites or institutions, while in Israel most of those general standards were canceled, with emphasis put on specific standards for each specialty and type of training site. Specialty-specific standards are used in the United States and Canada as well, while Britain and Germany employ specific standards for trainers (ACGME 2016, 2000–2018; RCPSC et al. 2007–2013, 2007–2011; GMC 2015, 2016a; Ärztekammer 2014; GMA 2003–2015; IMA 2014). In Germany, standards are regulated by each state physicians’ chamber and therefore may vary from one to the other, though they are all based on the (Model) Specialty Training Regulations, which are adopted by the German Medical Assembly (Nagel 2012). Variation may be so extensive that in some cases, as in Family Medicine PGME, some of the German states employ no standards at all (Egidi et al. 2014).

Though the content of the different standards has much in common, there are apparent differences as well. Those of the United States, Canada and Britain, for example, are more elaborate and have an additional facet of outcomes-based standards, corresponding with the move to CBME, which will be further explained in the section “Process-based/outcome-based accreditation system”.

Accreditation tools

We found that all five countries applied two or three of the same three main accreditation tools to verify that standards are being met: site-visits, annual data collection and self-evaluations (Marsh et al. 2014; Kennedy et al. 2011; GMC 2016a; IMA 2014; November 2016, e-mail correspondence from Jibikilayi E of the GMA, unreferenced) (Table 3).

A site-visit, an external review performed in all five countries, mainly requires a team of surveyors to visit the facilities, interview the staff, meet with the management and compile a report of their findings and recommendations. Most countries scheduled visits on a predefined cycle, though visits could also be triggered by unexpected circumstances, such as complaints or concerns (Table 4). The British General Medical Council (GMC) scheduled regional reviews in England and national reviews in Northern Ireland, Scotland and Wales, uniquely designed to cover both undergraduate and Postgraduate Medical Education in the same visit. Site visits in Germany were scheduled on a needs basis by the State Chambers of Physicians. It is up to the respective State Chambers of Physicians to decide whether (and, if so, to what extent) specialty training facilities and physicians authorized to provide specialty training should be monitored within the framework of quality assurance, either on a case-by-case basis or without a specific reason (October 2017, e-mail correspondence from O’Leary S of the GMA, unreferenced). In the United States, the ACGME conducts two main kinds of site-visits: a program site-visit, run on a 10-year schedule, and a Clinical Learning Environment Review (CLER), which is aimed at sponsoring institutions and designed to improve the way clinical sites engage resident and fellow physicians in learning to provide safe, high quality patient care. In this manuscript we mainly elaborate on the program site-visits.

Visiting teams vary among countries by the number of surveyors, their expertise (senior physicians, residents, public representatives, educators) and the length of the visit (see Table 4). In Canada and Israel, surveyors are all volunteer physicians, at least one of them from the same specialty and another from a different specialty. Since the visit focuses on the processes and framework of education and not on specific treatments, a surveyor from a different specialty adds objectivity and helps spread best practices between specialties (Interview with Prof. Shapira Y., unreferenced, 2018). In the United States, one or more surveyors employed by the Accreditation Council for Graduate Medical Education (ACGME), either physicians or PhDs in a relevant field, participate in each visit. In the United Kingdom, the visiting team includes public representatives, an undergraduate student and a resident (trainee) as well as senior physicians. We acquired no information regarding visiting teams in Germany. Visiting teams’ roles, nevertheless, were found to be similar and mostly include some or all of the following: using data from all sources, meeting with management, interviewing the program director, residents, faculty and other administrative representatives, reviewing documentation, touring physical facilities, writing a detailed report and compiling recommendations or proposals for action.

While in the United Kingdom teams visit a region and some of the local education providers (LEPs) located therein, in Canada visits are made to each university and all of its programs. Nevertheless, since one program might be provided by several LEPs, there may be little difference de facto between the UK and Canadian visit sampling. In the United States, visits are made to programs with all the attached fellowships, and in Israel to each site. One major difference among the systems is the number of visits conducted annually. This is influenced by several factors: whether a visit is made to a site requesting initial accreditation (Canada and the United Kingdom rely on documentation only at that point); the length of the cycle (varying between 4 and 10 years); the general inspection policy, which determines whether a visit is made to each site/program (as in the United States and Israel) or only when a risk is suspected (as in the United Kingdom); and the number of training programs/sites in the country. This has implications for system costs and efficiency.

The five countries employ varied data sources (Table 5) to enrich data beyond the information gathered by site-visits. The most common method is surveys, in particular resident surveys. The United States and the United Kingdom routinely gather information using a survey, while Canada, Germany and Israel are currently constructing a resident survey. In Germany, surveys were not declared to be specifically designed for accreditation purposes, but the questionnaire itself contains mainly questions regarding the training facility and training activities (GMA 2015).

In the United States, Residency Review Committees review programs annually by using multiple sources of data: annual updates (program changes, program characteristics, participating sites, educational environment and others), resident/fellow survey, clinical experience, certification examinations pass rate, faculty survey, scholarly activity, semi-annual resident evaluation (including milestones which will be further explained later on) and omission of data (Potts 2013).

Self-evaluation, used in the United States, Canada and the United Kingdom, requires the applying institution to conduct an internal review before the site visit and to compile a report promoting self-improvement.

Consequences of accreditation

An application for accreditation may result in various outcomes (ACGME 2015–2016; Potts 2013; GMA 2003–2015; RCPSC et al. 2012; GMC 2016a; IMA 2014): “Initial Accreditation”, “Continued Accreditation”, “Conditioned Accreditation” (also called “Probationary” or “With Warning”) and “Withdrawal of Accreditation”. In some countries, a program that lacks the ability to support the full syllabus requirements may receive accreditation by sending its residents to complete certain periods of training elsewhere. This is sometimes referred to as “Partial Accreditation”. Most countries allow two programs/institutions/sites to join efforts and resources to train residents together under certain rules (“integrated sites”, “Inter-institution affiliation agreements”, “Consortium”, “Conjoint/combined accreditation” or other).

Withdrawal of accreditation was used sparingly in all countries for which we could obtain numerical data. In 2016, there were 5 withdrawals in Israel (0.3% of accredited sites), 42 in the United States (0.4% of accredited programs) and 0 in the United Kingdom. In Canada, withdrawal is rare, as the “notice of an intent to withdraw” category serves as a powerful tool to enable programs to make improvements (2018, telephone conversation with S Taber; unreferenced). Since some of these withdrawals are voluntary, this consequence is rarely imposed de facto. To expand the practical options for consequences and give programs/sites motivation for improvement, a broader scale of consequences is used in some countries.

Process-based/outcome-based accreditation system

Although outcome-based PGME models were first introduced during the late 1990s, it took almost 15 years for compatible outcome-based accreditation systems to be developed.

Our findings, as specified in Table 6, led us to portray a three-phased process of change in accreditation systems over the last two decades. Traditional time/process-based accreditation systems corresponding with time/process-based residency training frameworks prevailed until the late 1990s. Systems such as these emphasized program structure, increased the amount and quality of formal teaching, fostered a balance between service and education, promoted resident evaluation and feedback and gained positive results (Nasca 2012).

Implementation of new outcome-based PGME frameworks raised, in some countries, the idea of changing accreditation systems. For that purpose, standards were revised to include evaluation of each program’s efforts and resources to allow its residents to gain competencies in all the required domains. In some cases, data collection was upgraded as well and site-visits adapted to verify the information.

GMC standards, for example, require all postgraduate programs to give residents sufficient practical experience to achieve and maintain the clinical or medical competences (or both) required by their curriculum (GMC 2015). Both the standards for curricula design and development, “Excellence by Design”, and the standards for quality assurance and approval of training programs, “Promoting Excellence”, are aligned with the outcome-based frameworks of “Good Medical Practice” and “General Professional Capabilities” (GMC 2015, 2017b). Canadian standards set the CanMEDS roles framework of competencies as the basis for each program in clearly defining objectives in outcome-based terms, in clinical, academic and scholarly content, in teaching and assessment activities and in faculty development (RCPSC 2007a, b Editorial Revision—June 2013, 2007 reprinted January 2011). The ACGME requires programs, at their accreditation review, to describe how they are teaching and assessing their residents’ competencies and to report changes made to improve residents’ learning opportunities (Swing 2007). As planned at this point of implementation, the use of outcome data in accreditation had not occurred yet, and therefore accreditation focused on the processes of teaching and assessing the competencies and not on programs’ educational outcomes (Swing 2007).

Another phase of change took place when a need for better synchronization between competency-based medical education and the accreditation process was recognized. In the United States, the ACGME announced its goal to accredit programs based on outcomes, to realize the promise of the “Outcomes Project” and to provide public accountability for outcomes (Potts 2013). To meet these goals and others, the ACGME created the Next Accreditation System (NAS) in 2013, which emphasized an increased use of educational outcome data in accreditation. As part of NAS, the ACGME also introduced Milestones, competency-based developmental outcomes that can be demonstrated progressively by residents from the beginning of their education to the unsupervised practice of their specialties (ACGME 2015a). The milestones, which were said to permit fruition of the promise of “Outcomes”, are used by programs and institutions for self-evaluation and improvement. Data on milestones achieved by residents of all programs implementing NAS have been collected twice a year by the ACGME. These data have been used by Residency Review Committees as part of the annual data review of a program and, in aggregated form, as specialty-specific national normative data. Milestones were designed to serve as a benchmark for programs nationally (Potts 2013).

In 2017, after recognizing that outcomes-based education requires outcomes-based accreditation, the Canadian initiative CanRAC announced a new accreditation system called CanERA, which was developed to reflect the increasing number of residency programs shifting to a CBME model (CanRAC 2018a). The new system introduced new features, among them a new evaluation framework, new standards, an institution review process, new decision categories and thresholds, an 8-year cycle and data integration, enhanced accreditation review, a digital accreditation management system, emphasis on the learning environment, emphasis on continuous improvement, and evaluation and research (CanRAC 2018b).

Adaptation of the accreditation process to an outcome-based process was gradual, involving a few universities at a time (Canada) or a few specialties at a time (UK, USA). The implementation of CanERA, for example, follows a multi-phased approach with 3 prototype testing phases in several universities prior to full implementation, which is planned for July 1, 2019 (CanRAC 2018c).

Figure 1 summarizes the process of change and Fig. 2 describes the current stage of change for each of the five reviewed countries.

Discussion

We found many similar principles in the way different countries accredit PGME: defining standards and verifying their fulfillment by site visits, information gathering and self-evaluations. The application details reveal differences originating from the structure and complexity of the local health system, as well as from culture and context (Saltman 2009; Segouin and Hodges 2005).

Site visits are one prominent tool applied to some extent by all five countries, but variations were found in their frequency, triggers, visiting teams, visited units and other factors. In Canada and Israel, surveyors are physicians, mostly unpaid for their work, while in the United Kingdom teams include specialists as well as trainees and public representatives, all paid the same daily fee. Peer-surveyors were seen as advantageous by accrediting authorities, as well as by the surveyors themselves (Dos Santos et al. 2017), for cross-fertilization and diffusion of innovations and best practices (Kennedy et al. 2011) and for the ability to see what internal eyes may not notice. Nevertheless, physicians may not have enough time to devote to surveying and to developing surveyors’ skills. Moreover, it is challenging to retain peer-reviewers at a time when the number of volunteers is declining (Kennedy et al. 2011). A combination of professional surveyors (as used in the United States) and peer-surveyors was suggested as a way of adding expertise to the process (Dos Santos et al. 2017), although it would increase costs. None of the other countries has yet appointed public representatives to the surveying teams as the GMC did in the UK, though the idea deserves further discussion regarding the involvement of the consumers of health care as stakeholders in the results of PGME.

Self-evaluation is used by three countries and is relatively new to some of them (Guralnick et al. 2015). It is perceived by some regulators as the heart of the enhancement approach to quality assurance, while others suggest that it is unreliable (Colin Wright Associates Ltd 2012). Self-evaluation may increase emphasis on quality improvement at the local level, based on more trust in the institutions providing PGME (Akdemir et al. 2017). More research is needed to learn whether self-evaluation methods provide meaningful information that educational leaders can act on to improve their programs.

All accreditation authorities wish to maintain updated, accurate information regarding the quality of training in each institution. Accumulation of this information was once primarily based on information reported by a site visit once every few years. A shift to ongoing data collection in “real time” is advancing in several countries, using a variety of sources including surveys, annual reports or data collected through on-line platforms, concerns from residents or others and information from other health organizations.

Among other changes, some of the reviewed countries came to put more emphasis on the institutional perspective. The ACGME introduced New Institutional Requirements, Institutional self-study visits and CLER (Clinical Learning Environment Review) visits. The RCPSC introduced an institution review process as an element of the new CanERA. The GMC’s standards for medical education and training, “Promoting Excellence”, declare an expectation that organizations educating and training medical students and doctors in the UK take responsibility for meeting the standards.

Some of the countries we reviewed made the transition to an outcome-based PGME and realized it requires an adaptation of the accreditation system, as described earlier. The United States and Canada have taken another step by implementing an accreditation system based on outcomes. As mentioned earlier, milestones are a central component of the Next Accreditation System. Though the Next Accreditation System intentionally does not judge an individual program by its residents’ milestone records, it uses the milestones at an aggregated level. Alongside initial validity evidence for the milestones (Su-Ting 2017), some concerns were raised that programs’ need to demonstrate effective education influences the measurement through a tendency to give trainees the scores they are expected to have (Witteles 2016). The ACGME itself declares its concern that programs may artificially inflate residents’ milestones assessment data if the milestones are used for high-stakes decisions regarding residents or programs (ACGME 2018b) and states that Review Committees will not judge a program based on the level assessed for each resident/fellow (ACGME 2018a), at least in the early phase.

The RCPSC has introduced milestones as part of the CanMEDS 2015 framework, but does not integrate learners’ data for accreditation purposes. The RCPSC is looking for the right balance between the important procedural and structural requirements and any measurement of outcomes (2018, telephone conversation with S Taber; unreferenced).

Since an ultimate goal of any improvement to Postgraduate Medical Education is quality improvement of patient care, a future aspiration of accreditation processes may be measurement of patient care outcomes as another data source for accreditation decisions. More than a decade ago, the ACGME declared a vision of another phase of its Outcome Project, linking patient care quality and education in the competencies, in an attempt to establish that residency programs that have effective education in the competencies give better care to their patients (Batalden et al. 2002; Swing 2007). Linking patient outcomes with educational interventions is a challenging task that will probably require further investment of time and effort.

The level of centralization in PGME accreditation governance varies between countries: the division of roles between national and local authorities and the number of other PGME aspects governed by the accrediting authority (i.e., syllabi, board examinations). On one end of this centralization scale we found Canada and Israel, where one national authority is responsible for all three aspects of PGME (in collaboration with other health sector authorities), while on the decentralized end we found Germany, in which all PGME tasks are performed by the 17 state chambers with much variation. We found the decentralized nature of the German system to be a dominant factor influencing its functioning, as the federal structure of Germany has granted the state chambers of physicians far-reaching self-regulation powers concerning the professional practice of physicians. To increase uniformity of specialty training regulations across the entire country, the German Medical Assembly (annual assembly of the German Medical Association) has adopted the recommended (Model) Specialty Training Regulations to serve as a template for the state chambers. However, these are not always implemented by the chambers exactly as advised (Nagel 2012). In a position paper published in 2013 (David et al. 2013), the German committee on graduate medical education of the Society for Medical Education emphasized the need to establish a transparent and nationally standardized quality assurance procedure and therefore recommended the foundation of a single national quality assurance institution with capacities to evaluate structure, process, outputs, outcome and impact of graduate medical education in Germany. The institution should provide guidelines for implementing graduate medical training programs and initiate quality assurance programs, audits and peer-reviews. To the best of the authors’ knowledge, such an institution has not yet been founded.

In the United Kingdom, although the GMC is a national authority, it relies on the local boards and deaneries and delegates much of its power of execution, allowing the GMC itself to focus mainly on monitoring quality assurance and approvals. The GMC’s mandate over both undergraduate medical education and Postgraduate Medical Education is another unique and interesting characteristic, which may contribute positively to streamlining standards and processes as well as to effectively allocating resources between the different stages of medical education. As another role of the GMC is the approval of national curricula in all specialties, it introduced in 2017 a new framework of standards called “Excellence by Design” (GMC 2017b), which sets standards for designing and developing curricula in accordance with the fundamental principles that also underlie the accreditation standards (GMC 2015). Though a single authority may not easily cover a large jurisdiction, we do believe that integration of all roles in the hands of one authority makes synchronization of all aspects of PGME easier and may facilitate a reform such as the move to outcome-based medical education.

Accreditation procedures are complex and costly to maintain, in addition to the cost of residency training (Regenstein et al. 2016), both for the accrediting authority and for institutions. Reducing the burden (e.g., time and resources) is important and may also help prevent program leadership burnout (Dos Santos et al. 2017; Yager and Katzman 2015). Some of the reviewed countries are looking for more efficient accreditation procedures and are cutting down the regulatory burden. Dos Santos et al. (2017) recommend that accreditation authorities pay attention to the balance of invested resources between structure and process (i.e., required documentation, time for preparations) and human resources (i.e., training surveyors, recognition of surveyors’ work and efforts). It is still unclear whether the financial and bureaucratic burden related to accreditation systems, which seems to increase whenever systems become more elaborate and demanding (Yager and Katzman 2015), is balanced by the benefit of the process.

Conclusions

All five countries have challenged their systems with innovation. It is probable that other countries are planning changes as well. Based on the comparison of the five countries examined, we point out some recommendations for accrediting authorities to consider:

1. Scale of consequences. The use of “Withdrawal of Accreditation” has severe consequences for the sponsoring institution, the residents and the patients cared for by those residents. Additional options used in some countries which we have highlighted, such as “Accreditation with Warning” and a limited time until the next review, may be helpful for others to consider so that withdrawal of accreditation is reserved for use in more extreme circumstances.

2. Policy for site visits. Frequency of visits and their triggers should be carefully considered. The examples shown in this article may facilitate an internal discussion, taking into account feasibility, cost effectiveness and educational impact. More research may help decision makers evaluate benefits and costs of alternatives.

3. Multiple sources for “real time” data collection may serve as means for a risk-based approach as well as for lengthening the accreditation cycle. Nevertheless, considerations of feasibility and costs should be applied here, as well.

4. A move to an outcome-based PGME requires adaptation of the accreditation system accordingly. This adaptation may benefit from a gradual transition as depicted earlier and in our three-phased model. No doubt, there is much to learn from its implementation in other countries.

5. Division of roles between national and local authorities and other PGME aspects governed by the accrediting authority should be considered when planning a change.

We would stress that each country may need to make suitable preparations that accommodate local context and culture before trying to adopt a new system, idea or tool. The challenges that accreditation authorities have in common should facilitate mutual learning from each country’s experience. Therefore, decision makers should continually examine developments in accreditation in other relevant countries as an impetus for ongoing innovation and improvement.

Some recent evaluation models, such as the experimental/quasi-experimental models, the Logic model, Kirkpatrick’s 4-level model or the CIPP model, may serve as a theoretical background for the creation and improvement of accreditation systems (Frey and Hemmer 2012). In the authors’ view, the CIPP model, which takes structure and complexity as well as differences in context and culture into account, may offer support for accreditation decision makers in their efforts at change management and improvement, though decision makers are encouraged to familiarize themselves with different models.

Limitations of methods

Our review relied mostly on literature and published documents and therefore may lack details that were not made public. Information was abundant for some countries but lacking for others. Efforts were made to contact relevant authorities for further information but some details remained incomplete.

Abbreviations

- PGME: Postgraduate Medical Education

- RCPSC: Royal College of Physicians and Surgeons of Canada

- CFPC: College of Family Physicians of Canada

- CMQ: Collège des médecins du Québec

- ACGME: Accreditation Council for Graduate Medical Education (USA)

- IMA: Israeli Medical Association

- GMC: General Medical Council (UK)

- GMA: German Medical Association

- LEPs: Local Education Providers in UK (hospitals, trusts and other facilities employing residents)

- LETBs: Local Education and Training Boards in UK

References

Accreditation Council for Graduate Medical Education (ACGME). (2000–2018). About us. Retrieved April 9, 2016 from http://www.acgme.org/About-Us/Overview.

Accreditation Council for Graduate Medical Education (ACGME). (2015a). Milestones: Frequently asked questions. Retrieved April 9, 2016 from http://www.acgme.org/Portals/0/MilestonesFAQ.pdf?ver=2015-11-06-115640-040.

Accreditation Council for Graduate Medical Education (ACGME). (2015b). Site visit FAQs. Retrieved August 10, 2017 from http://www.acgme.org/What-We-Do/Accreditation/Site-Visit/Site-Visit-FAQs.

Accreditation Council for Graduate Medical Education (ACGME). (2015–2016). Data resource book: Academic year 2015–2016. Retrieved July 22, 2017 from http://www.acgme.org/About-Us/Publications-and-Resources/Graduate-Medical-Education-Data-Resource-Book.

Accreditation Council for Graduate Medical Education (ACGME). (2016). ACGME program requirements for graduate medical education in internal medicine. Retrieved February 22, 2016 from http://www.acgme.org/Specialties/Program-Requirements-and-FAQs-and-Applications/pfcatid/2/Internal%20Medicine.

Accreditation Council for Graduate Medical Education (ACGME). (2018a). Frequently asked questions: Milestones. Retrieved December 3, 2018 from https://www.acgme.org/Portals/0/MilestonesFAQ.pdf?ver=2015-11-06-115640-040.

Accreditation Council for Graduate Medical Education (ACGME). (2018b). Use of individual milestones data by external entities for high stakes decisions: A function for which they are not designed or intended. Retrieved December 3, 2018 from https://www.acgme.org/Portals/0/PDFs/Milestones/UseofIndividualMilestonesDatabyExternalEntitiesforHighStakesDecisions.pdf?ver=2018-04-16-143837-240.

Akdemir, N., Lombarts, K. M. J. M., Paternotte, E., Schreuder, B., & Scheele, F. (2017). How changing quality management influenced PGME accreditation: A focus on decentralization and quality improvement. BMC Medical Education, 17(1), 98.

Batalden, P., Leach, D., Swing, S., Dreyfus, H., & Dreyfus, S. (2002). General competencies and accreditation in graduate medical education. Health Affairs, 21(5), 103–111.

Berlin, Ä. (2014). Kriterien für die Erteilung einer Befugnis zur Leitung der Weiterbildung zum Facharzt für Innere Medizin. [Criteria for granting the right to lead continuing education as a specialist in internal medicine] German. Retrieved from https://www.aerztekammer-berlin.de/10arzt/15_Weiterbildung/12WB-Informationen/Befugniskriterien/Facharzt-Weiterbildungen/Befugniskriterien_Innere-Medizin-und-Angio_2014.pdf.

Canadian Post-MD Education Registry (CAPER). (2015–2016). Annual census of post-M.D. trainees. Retrieved July 22, 2017 from https://caper.ca/en/post-graduate-medical-education/annual-census/.

Canadian Residency Accreditation Consortium (CanRAC). (2018a). Connecting CanERA and CBME. Retrieved December 29, 2018 from http://www.canrac.ca/canrac/reform-cbme-e.

Canadian Residency Accreditation Consortium (CanRAC). (2018b). About CanERA. Retrieved December 29, 2018 from http://www.canrac.ca/canrac/about-e.

Canadian Residency Accreditation Consortium (CanRAC). (2018c). Timeline for accreditation reform. Retrieved December 29, 2018 from http://www.canrac.ca/canrac/am-i-affected-e.

Colin Wright Associates Ltd. (2012). Developing an evidence base for effective quality assurance of education and training: Final report. General Medical Council (UK). Retrieved April 29, 2017 from https://www.gmc-uk.org/Developing_and_evidence_base_for_effective_quality_assurance_of_education_and_training_May_2012.pdf_48643906.pdf

David, M. D., et al. (2013). The future of graduate medical education in Germany: Position paper of the Committee on Graduate Medical Education of the Society for Medical Education (GMA). GMS Zeitschrift für Medizinische Ausbildung, 30(2), 26.

Dos Santos, R. A., Snell, L., & Tenorio Nunes, M. D. (2017). The link between quality and accreditation of residency programs: The surveyors’ perceptions. Medical Education Online, 22(1), 1270093.

Egidi, G., Bernau, R., Börger, M., Mühlenfeld, H. M., & Schmiemann, G. (2014). Der Kriterienkatalog der DEGAM für die Befugnis zur Facharztweiterbildung Allgemeinmedizin − ein Vorschlag zur Einschätzung der Strukturqualität in Weiterbildungspraxen. GMS Zeitschrift für Medizinische Ausbildung, 31(1), Doc8.

Frank, J. R., Snell, L. S., ten Cate, O., Holmboe, E. S., Carraccio, C., Swing, S. R., et al. (2010). Competency based medical education: Theory to practice. Medical Teacher, 32, 638–645.

Frenk, J., Chen, L., Bhutta, Z. A., Cohen, J., Crisp, N., Evans, T., et al. (2010). Health professionals for a new century: transforming education to strengthen health systems in an interdependent world. Lancet, 376(9756), 1923–1958.

Frey, A., & Hemmer, P. (2012). Program evaluation models and related theories: AMEE Guide No. 67. Medical Teacher, 34, e288–e299.

General Medical Council (GMC). (1983–2016). Medical act 1983. Retrieved May 10, 2017 from https://www.gmc-uk.org/about/legislation/medical_act.asp.

General Medical Council (GMC). (2015). Promoting excellence: Standards for medical education and training. Retrieved May 6, 2016 from https://www.gmc-uk.org/education/standards.asp.

General Medical Council (GMC). (2016a). Quality assurance framework. Retrieved December 8, 2016 from http://www.gmc-uk.org/education/qaf.asp.

General Medical Council (GMC). (2016b). National training survey 2016-key findings. Retrieved July 22, 2017 from https://www.gmc-uk.org/National_training_survey_2016___key_findings_68462938.pdf.

General Medical Council (GMC). (2016c). GMC news for doctors-how the GMC uses the annual fee paid by doctors. Retrieved August 5, 2017 from https://www.gmc-uk.org/publications/29035.asp.

General Medical Council (GMC). (2017a). Generic professional capabilities framework. Retrieved May 17, 2017 from https://www.gmc-uk.org/education/postgraduate/GPC.asp.

General Medical Council (GMC). (2017b). Excellence by design: Standards for postgraduate curricula. Retrieved December 20, 2018 from https://www.gmc-uk.org/education/standards-guidance-and-curricula/standards-and-outcomes/excellence-by-design.

General Medical Council (GMC). (2017c). Exploratory questions. Retrieved April 29, 2017 from http://www.gmc-uk.org/education/30043.asp

German Medical Association (GMA). (2003–2015). (Model) Specialty training regulations. Retrieved January 1, 2018 from http://www.bundesaerztekammer.de/fileadmin/user_upload/downloads/pdf-Ordner/Weiterbildung/MWBO_Englisch.pdf.

German Medical Association (GMA). (2014). Projekt “Evaluation der Weiterbildung” in Deutschland [Project “Evaluation of further education” in Germany], German. Retrieved August 11, 2016 from www.bundesaerztekammer.de/aerzte/aus-weiter-fortbildung/weiterbildung/evaluation-der-weiterbildung/.

German Medical Association (GMA). (2015). Bundesweit einheitlicher Kernfragebogen [Nationwide uniform core questionnaire]. Retrieved December 29, 2018 from http://www.bundesaerztekammer.de/aerzte/aus-weiter-fortbildung/weiterbildung/evaluation-der-weiterbildung/bundesweit-einheitlicher-kernfragebogen/.

Guralnick, S., Hernandez, T., Corapi, M., Yedowitz-Freeman, J., Klek, S., Rodriguez, J., et al. (2015). The ACGME self-study: An opportunity, not a burden. Journal of Graduate Medical Education, 7(3), 502–505.

Irby, D. M., Cooke, M., & O’Brien, B. C. (2010). Calls for reform of medical education by the Carnegie Foundation for the Advancement of Teaching: 1910 and 2010. Academic Medicine, 85(2), 220.

Israeli Medical Association (IMA). (2018). What is IMA? Retrieved December 20, 2018 from https://www.ima.org.il/ENG/ViewContent.aspx?CategoryId=4131

Israeli Medical Association (IMA), Scientific Council. (2014). “ועדת הכרה עליונה” [Supreme Accreditation Board], Hebrew. Retrieved May 12, 2016 from https://www.ima.org.il/mainsitenew/ViewCategory.aspx?CategoryId=1421.

Israeli Medical Association, Scientific Council (IMA). (2017). Numerical data as to physicians, residents, accredited sites, specialties & sub specialties received upon request.

Karle, H. (2006). Global standards and accreditation in medical education: A view from the WFME. Academic Medicine, 81(12), S43.

Kennedy, M., Rainsberry, P., & Abner, E. (2011). Accreditation of Postgraduate Medical Education. Members of the FMEC PG consortium. Retrieved September 17, 2016 from https://afmc.ca/pdf/fmec/11_Kennedy_Accreditation.pdf

King, N. (2012). Doing template analysis. In G. Symon & C. Cassell (Eds.), Qualitative organizational research: Core methods and current challenges (pp. 426–450). London: SAGE.

Manthous, C. A. (2014). On the outcome project. Yale Journal of Biology and Medicine, 87(2), 213–220.

Marsh, J. L., Potts, J. R., 3rd, & Levine, W. N. (2014). Challenges in resident education: is the Next Accreditation System (NAS) the answer? AOA critical issues. Journal of Bone and Joint Surgery American, 96(9), e75.

Nagel, E. (Ed.). (2012). The Healthcare System in Germany: A short introduction. Prepared on behalf of the German Medical Association and the Hans-Neuffer-Foundation by editors of the Deutsches Ärzteblatt (Cologne, German Medical Association).

Nasca, T. J., Philibert, I., Brigham, T., & Flynn, T. C. (2012). The next GME accreditation system: Rationale and benefits. New England Journal of Medicine, 366(11), 1051–1056.

Organization for Economic Co-operation and Development (OECD). (2016). Health at a glance, OECD ilibrary. Retrieved July 21, 2017 from https://www.oecd-ilibrary.org/social-issues-migration-health/health-at-a-glance_19991312.

Potts, J. R. (2013). Implementing the next accreditation system. ACGME Webinar. Retrieved March 11, 2016 from https://www.acgme.org/Portals/0/PFAssets/Nov4NASImpPhaseII.pdf?ver=2015-11-06-120601-263.

Regenstein, M., Nocella, K., Jewers, M. M., & Mullan, F. (2016). The cost of residency training in teaching health centers. New England Journal of Medicine, 375(7), 612–614.

Royal College of Physicians and Surgeons of Canada (RCPSC), College of Family Physicians of Canada (CFPC) and Collège des médecins du Québec (CMQ). (2004). Royal college residency accreditation committee policies and procedures: Canadian Residency Education. Retrieved August 5, 2017 from http://www.royalcollege.ca/rcsite/accreditation-pgme-programs/accreditation-residency-programs-e.

Royal College of Physicians and Surgeons of Canada (RCPSC), College of Family Physicians of Canada (CFPC) and Collège des médecins du Québec (CMQ). (2007 Editorial Revision—June 2013). General standards applicable to the University and Affiliated Sites: A standards. Retrieved April 25, 2016 from http://www.royalcollege.ca/rcsite/accreditation-pgme-programs/accreditation-residency-programs-e.

Royal College of Physicians and Surgeons of Canada (RCPSC), College of Family Physicians of Canada (CFPC) and Collège des médecins du Québec (CMQ). (2007 reprinted January 2011). General Standards applicable to all residency programs: B standards. Retrieved April 25, 2016 from http://www.royalcollege.ca/rcsite/accreditation-pgme-programs/accreditation-residency-programs-e.

Royal College of Physicians and Surgeons of Canada (RCPSC), College of Family Physicians of Canada (CFPC) and Collège des médecins du Québec (CMQ). (2012). New terminology for the categories of accreditation. Retrieved May 12, 2016 from http://www.royalcollege.ca/portal/page/portal/rc/common/documents/accreditation/new_terminology_categories_of_accreditation_june_27_2012_e.pdf.

Saltman, R. B. (2009). Context, culture and the practical limits of health sector accountability. In B. R. Rosen, A. V. Israeli, & S. T. Shortell (Eds.), Improving health and health care: Who is responsible? Who is accountable? (pp. 35–50). Jerusalem: The Israel National Institute for Health Policy Research.

Segouin, C., & Hodges, B. (2005). Educating doctors in France and Canada: are the differences based on evidence or history? Medical Education, 39(12), 1205–1212.

State of Israel. (1973–2017). ““תקנות הרופאים, אישור תואר מומחה ובחינות, התשל”ג- 1973 [Physicians’ Regulations: Approval of Specialty Title and Examinations-1973]. Hebrew. English translation available from: https://www.ima.org.il/internesnew/ViewCategory.aspx?CategoryId=7446.

Su-Ting, T. L. (2017). The promise of milestones: Are they living up to our expectations? Journal of Graduate Medical Education, 9(1), 54–57.

Swing, S. R. (2007). The ACGME outcome project: retrospective and prospective. Medical Teacher, 29(7), 648–654.

Witteles, R. M. (2016). Accreditation council for graduate medical education (ACGME) milestones-time for a revolt? JAMA Internal Medicine, 176(11), 1599–1600.

World Health Organization (WHO). (2013). Transforming and scaling up health professionals’ education and training: World Health Organization Guidelines. Geneva: WHO.

Yager, J., & Katzman, J. E. (2015). Bureaucrapathologies: Galloping regulosis, assessment degradosis, and other unintended organizational maladies in post-graduate medical education. Academic Psychiatry, 39(6), 678–684.

Acknowledgements

The authors would like to thank colleagues from several countries for their kind and very helpful replies to our requests for information: Sarah Taber of the Royal College of Physicians and Surgeons of Canada for conversation and correspondence; Dr. Ramin Parsa-Parsi, Siobhan O’Leary and Elisabeth Jibikilayi of the German Medical Association for their detailed and comprehensive correspondence; Yoram Shapira, Professor Emeritus of Anesthesiology, Chairman of Israeli Supreme Accreditation Board of the Scientific Council of the Israeli Medical Association (at that time), for a comprehensive interview.

Ethics declarations

Conflict of interest

D. Fishbain declares being an employee of the Israeli Medical Association, one of the authorities mentioned in the manuscript, as the director of its Scientific Council. Y. Danon and R. Nissanholz-Gannot report no declarations of interest.

Cite this article

Fishbain, D., Danon, Y.L. & Nissanholz-Gannot, R. Accreditation systems for Postgraduate Medical Education: a comparison of five countries. Adv in Health Sci Educ 24, 503–524 (2019). https://doi.org/10.1007/s10459-019-09880-x

DOI: https://doi.org/10.1007/s10459-019-09880-x