Abstract

Cloud resource provisioning is a challenging task that may be compromised by the unavailability of the expected resources. The Quality of Service (QoS) requirements of workloads drive the provisioning of appropriate resources to cloud workloads. Discovering the best workload–resource pair based on the application requirements of cloud users is an optimization problem. Acceptable QoS cannot be provided to cloud users unless resource provisioning is offered as a core capability. A resource provisioning technique based on QoS parameters is therefore required for efficient provisioning of resources. This paper presents a broad methodical literature analysis of cloud resource provisioning in general and cloud resource identification in particular. The existing research in the area of cloud resource provisioning is categorized into various groups. The analysis covers resource management, resource provisioning, the evolution of resource provisioning, the different types of resource provisioning mechanisms and their comparison, benefits and open issues. It also highlights the previous research, current status and future directions of resource provisioning and management in cloud computing.


1 Introduction and background

Resource management is an umbrella activity that covers all the characteristics and usage of cloud resources. It encompasses tasks such as resource provisioning, resource scheduling and resource monitoring, and it also describes the evolution of resource provisioning. Resource management controls how user workloads are mapped to resources based on Quality of Service (QoS) requirements. The process of resource provisioning is shown in Fig. 1. A cloud workload is an abstraction of the work that an instance or set of instances is going to execute [1, 2]. For example, running a Web service is a valid workload, and resources are provisioned according to the type of workload. The types of workload considered in this research work are Web sites, technological computing, endeavor software, performance testing, online transaction processing, e-commerce, central financial services, storage and backup services, production applications, software/project development and testing, graphics oriented, critical internet applications and mobile computing services. To serve different users’ requests, different types of resources are used for cloud resource provisioning at the infrastructure level, including physical resources such as compute, memory, storage, servers, processors and networking [3–5].

Fig. 1 Cloud resource provisioning [1]

The challenges of resource management range from managing the heterogeneity of resources to efficient matchmaking of available resources to workloads with the help of the workload analyzer (broker). After the user submits workloads, the broker performs matchmaking (mapping of workloads to available resources) and determines feasibility (whether or not a workload can be provisioned on resources based on its QoS requirements). After successful provisioning of resources, the broker sends requests to the resource scheduler for scheduling. The broker releases the extra resources from the resource pool based on the performance required. The broker stores information about the resources for submitted workloads and monitors the desired performance, which causes the system to either acquire or release resources. As shown in Fig. 1, a bulk of workloads arrives for execution and is processed and stored in the workload queue. The Workload Analyzer (WA) holds information about resources, details of QoS metrics and the SLA, and uses it to provision resources for the execution of workloads based on the QoS requirements described by the cloud consumer. In SLA Measure, the WA receives information from the relevant Service Level Agreement (SLA). After studying and confirming the various QoS constraints required by the workload, the WA checks the availability of resources. QoS Metric Data contains information on the QoS metrics used to calculate the weights for clustering workloads. Different cloud workloads have different sets of QoS requirements and characteristics. All workloads are submitted and analyzed based on their QoS requirements. When the number of workloads is large, they are then grouped into different clusters for execution on different sets of resources. The resource details include the number of CPUs, size of memory, cost of resources, type of resources and number of resources [1]. All the common resources are stored in the resource pool. The Resource Provisioner provides the demanded resources to the workloads for execution in the cloud environment only if the required resources are available in the resource pool. If the required resources are not available according to the QoS requirements, the Workload Resource Manager (WRM) asks the user to resubmit the workload with modified QoS requirements based on the availability of existing resources. After resources are provisioned, the workloads are submitted to the resource scheduler, which then asks for the workloads to be submitted for execution on the provisioned resources. After this, the WRM sends the provisioning results (resource information) back to the cloud user. After successful provisioning of resources, the resource scheduler executes all the workloads on the provisioned resources efficiently [2].
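
The provision-or-resubmit decision described above can be illustrated with a minimal Python sketch. The class names, fields (`cpus`, `memory_gb`) and the first-come-first-served order of the queue are assumptions made for illustration; the surveyed works do not prescribe this particular implementation.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    cpus: int        # CPUs requested (hypothetical QoS-derived requirement)
    memory_gb: int   # memory requested, in GB (hypothetical)


@dataclass
class ResourcePool:
    free_cpus: int
    free_memory_gb: int

    def can_satisfy(self, w: Workload) -> bool:
        # Resources are provisioned only if the pool can cover the request.
        return w.cpus <= self.free_cpus and w.memory_gb <= self.free_memory_gb

    def allocate(self, w: Workload) -> None:
        self.free_cpus -= w.cpus
        self.free_memory_gb -= w.memory_gb


def provision(queue: deque, pool: ResourcePool):
    """Drain the workload queue; return (provisioned, resubmit) name lists."""
    provisioned, resubmit = [], []
    while queue:
        w = queue.popleft()
        if pool.can_satisfy(w):
            pool.allocate(w)
            provisioned.append(w.name)   # handed on to the resource scheduler
        else:
            resubmit.append(w.name)      # user is asked to relax QoS and resubmit
    return provisioned, resubmit


queue = deque([Workload("web", 4, 8), Workload("batch", 16, 64), Workload("oltp", 2, 4)])
pool = ResourcePool(free_cpus=8, free_memory_gb=16)
provisioned, resubmit = provision(queue, pool)
print(provisioned, resubmit)
```

In this example the `batch` workload exceeds what remains in the pool, so it is returned for resubmission while the other two workloads are provisioned.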

Thus, actual resource scheduling can be done efficiently after resource provisioning. Mapping a user workload to a corresponding cloud resource based on QoS requirements is a challenging task. Efficient resource provisioning in the cloud requires considering as many QoS requirements as possible, and a cloud workload should be executed on the available resources without affecting the other QoS parameters. It is therefore essential to uncover the research challenges in cloud resource provisioning. Because of high resource cost and execution time, resource provisioning has emerged as a hot research topic in cloud computing. Different provisioning parameters and criteria lead to different types of Resource Provisioning Mechanisms (RPMs). This research work discusses the details of cloud resource provisioning. Effective cloud resource provisioning helps to improve resource utilization and to reduce execution cost, execution time, energy consumption and the environmental impact of execution, while taking into account other QoS parameters such as reliability, security, availability and scalability [6].

In cloud computing environments, there are two parties: cloud providers and cloud users. On the one hand, providers hold massive computing resources in their large datacenters and rent resources out to users on a per-usage basis. On the other hand, users have applications with fluctuating loads and lease resources from providers to run their applications. One notable characteristic of the cloud computing environment is that these parties are often distinct, each with its own interests. Usually, the aim of providers is to generate as much profit as possible with the lowest investment. To that end, they might want to exploit their computing resources fully, for example by hosting as many workloads as possible on each resource. In other words, providers want to maximize the utilization of their resources. Nevertheless, executing too many workloads on a single resource can cause workloads to interfere with each other and may result in unpredictable performance, which in turn discourages the cloud consumer. The cloud providers may therefore withdraw existing resources or reject resource requests to maintain service quality, but this could make the environment even more unpredictable. Cloud consumers, for their part, want their workloads completed at the least expenditure; in other words, they seek to maximize their cost performance. This means obtaining resources that suit the workload characteristics of their applications and consuming those resources efficiently. They also have to take the unpredictability of resources into account when they request and provision resources. However, the two parties do not want to share information with each other, which makes optimal resource allocation more challenging [7]. Challenges of resource provisioning such as dispersion, uncertainty and heterogeneity of resources are not resolved by traditional RPMs in the cloud environment. Thus, there is a need to make cloud services and cloud-oriented applications efficient by taking care of these properties of the cloud environment.

1.1 Need of resource provisioning

The objective of resource provisioning is to detect and provision the appropriate resources to the suitable workloads on time, so that applications can utilize the resources effectively. In other words, the amount of resources provisioned for a workload should be the minimum needed to maintain a desirable level of service quality, or to maximize the throughput (or minimize the completion time) of the workload. Better resource provisioning requires the best resource–workload mapping. The aim of resource provisioning is to identify an adequate and suitable workload–resource match that supports the scheduling of multiple workloads and is capable of fulfilling the different QoS requirements of a cloud workload, such as CPU utilization, availability, reliability and security. Resource provisioning therefore considers the execution time of every distinct workload but, most importantly, the overall performance also depends on the type of workload, i.e., heterogeneous (different QoS requirements) or homogeneous (similar QoS requirements) [8, 9].

1.2 Motivation for research

- Cloud resource provisioning is a static allocation of resources to cloud workloads prior to resource scheduling. Therefore, this study focuses on resource provisioning mechanisms based on different provisioning criteria.
- We recognized the need for a methodical literature survey after considering the progress of research in cloud resource provisioning. Therefore, we summarized the available research based on a broad and methodical search of existing databases and presented the research challenges for further research.

1.3 Related surveys

Hussain et al. [10], Islam et al. [11] and Huang et al. [12] have carried out innovative literature reviews in the field of resource allocation. Nevertheless, research in the field of resource provisioning has grown constantly. There is a need for a methodical literature survey to evaluate and integrate the existing research in this field. This paper presents such a survey and uncovers the research challenges based on the existing research in the field of cloud resource provisioning.

1.4 Paper organization

The rest of this paper is organized as follows: Sect. 2 presents the resource provisioning background and the concepts of resource provisioning, scheduling and monitoring under the title of resource management. Section 3 describes the review technique used to find and analyze the existing research, the research questions and the search criteria. Section 4 presents the results of the methodical literature survey, including resource provisioning mechanisms and their comparison. Section 5 discusses this research work, including the benefits of resource provisioning and the implications of this work. Section 6 describes future research directions in the area of cloud resource provisioning. A glossary of the acronyms used in this paper can be found in “Appendix 3”.

2 Background

First, we classify the various categories of cloud resource provisioning mechanisms and the factors leading to cloud resource provisioning. We then present the mechanisms of cloud resource provisioning and explain why cloud resource provisioning is advantageous in some situations.

2.1 Resource management

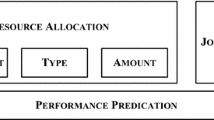

Cloud computing provides dynamic allocation of resources and delivers guaranteed and reliable services on a pay-per-use basis. Many cloud consumers can request a number of cloud services concurrently. Consequently, all the resources must be provided to the requesting cloud consumers in a well-organized way to fulfill their requirements. Different ways of allocating resources to cloud workloads have been identified from the literature [1, 2]. Resource management in cloud computing comprises three main functions: resource provisioning, resource scheduling and resource monitoring, as shown in Fig. 2. Cloud consumers submit their workloads along with their QoS requirements to the cloud provider for execution.

After submission, the cloud provider wants to execute the workloads in minimum time, while the cloud consumer wants them executed at minimum execution cost. Based on the QoS requirements and these constraints, resources are provisioned from the set of resources \(\{r_1, r_2, r_3 ,\ldots ,r_n \}\) for the user’s workloads \(\{w_1, w_2, w_3,\ldots , w_m \}\) so as to maximize resource utilization and customer satisfaction.

Resource provisioning maps every cloud workload to an appropriate resource based on the QoS requirements of the workload, allowing workloads to meet a given performance standard. QoS-based resource provisioning determines resources and allocates workloads to suitable resources. Provisioning appropriate resources enables efficient scheduling of workloads, which can improve performance. To maximize revenue and improve user satisfaction, an effective allocation of resources is desired in the cloud environment. The execution cost is considered in order to optimize the execution of workloads. The cost of executing workloads includes the leasing cost of resources, the cost of SLA violations and the cost of configuration changes [13, 14]. The benefit of these approaches is that they manage performance challenges in systems ranging from simple to complex and dynamic. The performance of a system may change depending on environmental conditions such as variation in workloads or errors in system configuration.
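
As a minimal sketch of the cost components listed above, the execution cost of a workload \(w\) can be written as a sum. The additive form and the symbols \(C_{\text{lease}}\), \(C_{\text{SLA}}\) and \(C_{\text{config}}\) are introduced here for illustration only and are not taken from [13, 14]:

\[
C_{\text{exec}}(w) = C_{\text{lease}}(w) + C_{\text{SLA}}(w) + C_{\text{config}}(w)
\]

where \(C_{\text{lease}}(w)\) is the leasing cost of the resources used by \(w\), \(C_{\text{SLA}}(w)\) is the cost of SLA violations and \(C_{\text{config}}(w)\) is the cost of configuration changes. Under this assumption, a provisioning decision that lowers the leasing cost but raises the SLA violation cost by a larger amount increases the total execution cost.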

2.1.1 Resource provisioning

The term “resource provisioning” was introduced in the context of Grid computing. Cloud resource provisioning is a challenging task because adequate resources may be unavailable [1]. The provisioning of appropriate resources to cloud workloads depends on the QoS requirements of cloud applications [15]. Provisioning suitable resources to workloads is difficult, and identifying the best workload–resource pair based on QoS requirements is an important research issue in the cloud. Minimization of execution time is the optimization criterion considered in this problem, as reported in existing research [1, 2]. The problem is formulated so as to obtain an optimal solution. It can be expressed as follows: consider a collection of independent cloud workloads \(\{w_1, w_2, w_3 ,\ldots , w_m \}\) to be mapped onto a collection of dynamic and heterogeneous resources \(\{r_1, r_2, r_3,\ldots ,r_n \}\). \(R = \{r_k \mid 1 \le k \le n\}\) is the collection of resources and \(n\) is the total number of resources. \(W = \{w_i \mid 1 \le i \le m\}\) is the collection of cloud workloads and \(m\) is the total number of cloud workloads [2]. The fundamental kinds of resource provisioning found in the existing literature are adaptive based, cost based, time based, compromised cost time based, bargaining based, QoS based, SLA based, energy based, optimization based, nature inspired and bio-inspired based, dynamic and rule based. RPMs based on these kinds are described in Sect. 4.1. The basic resource provisioning model in the cloud is shown in Fig. 3. The cloud consumer interacts with the Resource Provisioning Agent (RPA) and submits a cloud application (workload). The RPA performs resource discovery and selects the best resource based on consumer requirements [16]. When a workload is submitted to the RPA, it accesses the Resource Information Centre (RIC), which contains information about all the resources in the resource pool, and obtains the result based on the workload requirements specified by the user. Resource discovery is the process of identifying the available resources and generating a list of the identified resources. Resource selection is the process of selecting, from the list generated by resource discovery, the best workload–resource match based on the QoS requirements described by the cloud consumer in terms of the SLA.
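
The two steps of resource discovery and resource selection can be sketched as follows, using minimum estimated execution time as the selection criterion. The `Resource` fields, the CPU-count filter and the `work_units / speed` execution-time estimate are hypothetical simplifications chosen for illustration, not the model used in the cited works.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    name: str
    cpus: int
    speed: float  # work units processed per second (hypothetical metric)


def discover(resources, min_cpus):
    """Resource discovery: list the resources that meet the stated requirement."""
    return [r for r in resources if r.cpus >= min_cpus]


def select(candidates, work_units):
    """Resource selection: pick the candidate with the lowest estimated execution time."""
    if not candidates:
        return None  # no match; the workload would have to be resubmitted
    return min(candidates, key=lambda r: work_units / r.speed)


resources = [
    Resource("r1", cpus=2, speed=100.0),
    Resource("r2", cpus=4, speed=150.0),
    Resource("r3", cpus=8, speed=120.0),
]
candidates = discover(resources, min_cpus=4)
best = select(candidates, work_units=600.0)
print([r.name for r in candidates], best.name)
```

Here `r1` is filtered out during discovery, and `r2` is selected because its estimated execution time (600 / 150 = 4 s) is lower than that of `r3` (600 / 120 = 5 s).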

Figure 4 describes the process of cloud resource provisioning. The cloud consumer interacts through the cloud portal and, after authentication, submits the QoS requirements of the workload. Based on the consumer requirements (QoS) and the information delivered by the RIC, the RPA checks the available resources. It provisions the demanded resources to the workload for execution in the cloud environment only if the desired resources are available in the resource pool. If the required resources are not available according to the QoS requirements, the RPA requests that the workload be submitted again with new QoS requirements as an SLA document [17]. After resources have been provisioned successfully, workloads are submitted to the resource scheduler. The resource scheduler then asks for the workload to be submitted to the provisioned resources. After this, the WRM sends the provisioning results (resource information) back to the RPA, which forwards them to the cloud user.

2.1.2 Resource scheduling

The challenges of resource provisioning include dispersion, uncertainty and heterogeneity of resources, which are not resolved by traditional RPMs in the cloud environment. Thus, there is a need to make cloud services and cloud-oriented applications more efficient by taking care of these properties of the cloud environment. Resource scheduling comprises two functions: Resource Allocation and Resource Mapping. The aim of Resource Allocation is to allocate appropriate resources to the suitable workloads on time, so that applications can utilize the resources effectively [18]. In other words, the amount of resources allocated to a workload should be the minimum needed to maintain a desirable level of service quality or to maximize the throughput of the workload. Resource provisioning provides new solutions to this problem, which can be stated as follows: what resources should be acquired or released in the cloud, and how should the computing activities be mapped to the cloud resources, so that application performance is maximized within the budget constraints? Resource Mapping is the process of mapping workloads to appropriate resources based on the QoS requirements specified by the user in terms of the SLA, so as to minimize cost and execution time and maximize profit. QoS parameters such as throughput, CPU utilization and memory utilization are generally considered when allocating resources to each cloud consumer, so that cloud services are utilized as fully as possible. Allocating resources to all cloud consumers without violating the SLA is an important objective of resource provisioning [19]. An effective resource provisioning mechanism is needed that can handle fluctuations in workload requirements to maximize resource utilization. Underprovisioning and overprovisioning of resources are a major challenge, caused by changes in the QoS requirements of workloads and overestimation of load [2]. To make resource provisioning effective, an adequate number of resources is required to execute the current load, avoiding both underprovisioning and overprovisioning.
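
To make the notions of underprovisioning and overprovisioning concrete, the following sketch compares the number of provisioned resources with the number needed for the current load. The per-resource capacity model and the `max_spare` tolerance are assumptions introduced for illustration only.

```python
import math


def classify_provisioning(provisioned, demand, capacity_per_resource, max_spare=2):
    """Classify a provisioning level against the current load.

    provisioned: number of resources currently provisioned
    demand: current load (e.g. requests per second)
    capacity_per_resource: load one resource can serve (hypothetical, uniform)
    max_spare: spare resources tolerated before calling it overprovisioning
    """
    required = math.ceil(demand / capacity_per_resource)
    if provisioned < required:
        return "under"      # QoS is at risk: too few resources for the load
    if provisioned > required + max_spare:
        return "over"       # resources are wasted: cost rises without benefit
    return "adequate"


# A load of 950 requests/s with 100 requests/s per resource needs 10 resources.
print(classify_provisioning(8, 950, 100))
print(classify_provisioning(11, 950, 100))
print(classify_provisioning(15, 950, 100))
```

A real mechanism would estimate `demand` from monitoring data and would account for heterogeneous resources; the sketch only shows the comparison at the heart of the decision.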

2.1.3 Resource monitoring

Performance optimization is best achieved by efficiently monitoring the utilization of computing resources [1, 2, 7, 20]. A comprehensive, intelligent monitoring agent is therefore needed to analyze the performance of resources. In the SLA, both parties (cloud provider and cloud consumer) should specify the possible deviations in order to achieve the appropriate quality attributes. The cloud provider’s SLA gives an indication of how much actual deviation of service from the SLA is feasible, and of the extent to which the provider is willing to commit its own financial resources to compensate for unexpected outages. For successful execution of a cloud workload, the actual deviation should be less than the threshold value of deviation. The resource monitoring system collects resource usage by measuring performance metrics such as CPU and memory utilization [21]. The cloud provider needs to retain an adequate number of resources to deliver continuous service to cloud consumers during peak load [22]. Resource monitoring is used to take care of important QoS requirements such as security, availability and performance during workload execution. There are two main aspects of resource monitoring: (i) the consumer wants to execute workloads at minimum cost and in minimum time without violation of the SLA, and (ii) the provider wants to execute the workloads with the minimum number of resources. Resource monitoring is therefore a vital part of resource management, used to measure SLA deviation, QoS requirements and resource usage [23]. The resources utilized by the physical and virtual infrastructures and by the applications running on them must be measured efficiently. Resource monitoring can also be approached from different perspectives, such as security monitoring to achieve confidentiality, integrity and availability of data.
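
The condition that the actual deviation stays below the threshold deviation can be expressed in a few lines. The definition of deviation as a relative excess over the agreed value, and the example metric (response time in milliseconds), are assumptions made for this sketch; an actual SLA may define deviation differently.

```python
def sla_deviation(agreed, measured):
    """Relative amount by which a measured metric exceeds its agreed value.

    Assumes a 'lower is better' metric such as response time; returns 0.0
    when the agreed value is met.
    """
    return max(0.0, (measured - agreed) / agreed)


def within_sla(agreed, measured, threshold):
    """True if the actual deviation is less than the threshold deviation."""
    return sla_deviation(agreed, measured) < threshold


# Agreed response time: 200 ms; tolerated deviation: 15 % (hypothetical values).
print(within_sla(agreed=200, measured=220, threshold=0.15))
print(within_sla(agreed=200, measured=250, threshold=0.15))
```

In the first call the deviation is 10 %, which is below the 15 % threshold; in the second it is 25 %, so the monitor would flag a violation.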

2.2 Cloud resource provisioning evolution: previous research

The evolution of resource provisioning describes the QoS parameters addressed by the RPMs proposed over the history of the cloud. The notable QoS parameters and Focus of Study (FoS) of resource provisioning over the years are shown in Fig. 5. In 2007, Zhang et al. [24] proposed a forecasting prototype to support runtime resource provisioning, which uses a clustering technique to categorize and identify phase behavior and considers the penalties and compensation related to SLA violations and the resource consumption design. Zhang et al. [25] presented an approach that analyzes the behavior of submitted applications through a clustering technique after exploring their consumption of resources; based on historical records, the future behavior of a phase can be forecast correctly. In 2008, Juve and Deelman [26] examined several resource provisioning techniques (advance reservations, multi-level scheduling) that may be used to reduce overheads (cost, performance and usability). In 2009, Dejun et al. [27] studied the stability over time of the performance of virtual instances in Amazon Elastic Compute Cloud (EC2), with respect to variations in average response time. In 2010, Berl et al. [28] presented a VM selection method that seeks good combinations of VMs to be provisioned together, providing resource guarantees for VMs and better overall resource utilization. Xiao et al. [29] proposed a reputation-based resource provisioning mechanism that uses the Dirichlet Multinomial Model (DMM) to reduce resource consumption cost and fulfill QoS requirements by considering the statistical probability of the QoS metric, i.e., response time.

In 2011, Tian and Chen [30] described a resource provisioning approach that investigates the MapReduce processing procedure and a price function used to relate the complexity of the Reduce function, the input values and the available resource infrastructures. This approach reduces the consumption of resources and executes the user application within the desired deadline and budget. Iqbal et al. [31] described an automatic approach for multi-tier Web applications that discovers and resolves bottlenecks with minimum response time and identifies overprovisioning of resources in the cloud. This approach provides maximum resource utilization without violation of the SLA. Buyya et al. [32] described an SLA-aware architecture that integrates market-oriented resource provisioning strategies with virtualization to provision the required resources to the corresponding workloads. In 2012, Vecchiola et al. [33] presented a deadline-aware resource provisioning technique for Aneka, which considers the QoS constraints of scientific applications and reduces application execution times by efficiently allocating resources from different cloud providers. Zhang et al. [34] described a dynamic resource provisioning method based on control theory that decreases energy consumption and achieves the required performance while keeping the average provisioning delay tolerable for different jobs. Calheiros et al. [35] presented a platform in which Aneka is used to develop scalable cloud applications and to provision resources from various cloud providers for the execution of different user applications. In 2013, Grewal et al. [36] proposed a rule-based resource provisioning mechanism for the hybrid cloud environment to minimize the execution cost and improve dynamic scalability. In 2014, Bellavista et al. [37] presented a novel method for adaptive replication that trades fault tolerance for increased capacity during load spikes, reducing resource consumption while guaranteeing an upper bound on information loss in case of failures. Kousiouris et al. [38] described a behavior-based resource provisioning approach for cloud services that analyzes cloud consumer and application behavior.

2.2.1 Resource provisioning analysis

This section covers studies of resource provisioning mechanisms in terms of QoS and FoS. Many resource provisioning mechanisms aim to improve the cloud by reducing execution time, cost and other QoS parameters. Some studies investigated only resource provisioning mechanisms; these are also included. Zhang et al. [24, 25] discussed prediction-based and on-demand resource provisioning mechanisms, respectively; cost is considered as the QoS parameter and the FoS is SLA. Juve and Deelman [26] presented a resource capacity-based resource provisioning mechanism in which scalability is considered as the QoS parameter and the FoS is workflow-based applications. Dejun et al. [27] proposed a resource provisioning mechanism based on EC2 performance analysis, in which response time is considered as the QoS parameter and the FoS is server-oriented applications. Berl et al. [28] and Xiao et al. [29] presented VM multiplexing and reputation-based QoS resource provisioning mechanisms, respectively; resource utilization and response time are considered as the QoS parameters and the FoS is virtualization and SLA. Tian and Chen [30], Iqbal et al. [31] and Buyya et al. [32] proposed optimal, adaptive and SLA-based resource provisioning mechanisms, respectively, in which execution time, resource utilization and cost are considered as the QoS parameters and the FoS is autonomic resources, multi-tier and MapReduce applications. Vecchiola et al. [33], Zhang et al. [34] and Calheiros et al. [35] presented deadline-driven, dynamic energy-aware and QoS-based resource provisioning mechanisms, respectively; execution time, energy, response time, deadline and cost are considered as the QoS parameters and the FoS is workloads, scientific and elastic applications. Grewal et al. [36] proposed a rule-based resource provisioning mechanism in which scalability and cost are considered as the QoS parameters and the FoS is hybrid clouds. Bellavista et al. [37] and Kousiouris et al. [38] presented adaptive and dynamic resource provisioning mechanisms, respectively, in which execution time is considered as the QoS parameter and the FoS is fault-tolerant and high-level applications.

3 Review technique

The methodical survey described in this research article follows the guidelines of Kitchenham et al. [39]. The stages of this literature review are creating the review framework, executing the survey, investigating the results of the review, recording the review results and exploring the research challenges. Table 1 lists the research questions used to plan the survey. Details of the review technique used in this research work can be found in our previous review paper [40].

Table 2 describes the 1308 research papers retrieved in manual search and electronic database search. Figure 6 describes the review technique used in this systematic review.

3.1 Sources of information

Following the recommendation of Kitchenham et al. [39] to search broadly in electronic sources, the following electronic databases were used for searching:

- Springer (www.springerlink.com)
- ScienceDirect (www.sciencedirect.com)
- Google Scholar (www.scholar.google.co.in)
- IEEE Xplore (www.ieeexplore.ieee.org)
- ACM Digital Library (www.acm.org/dl)
- Wiley Interscience (www.Interscience.wiley.com)
- HPC (www.hpcsage.com)
- Taylor & Francis Online (www.tandfonline.com)

3.2 Search criteria

In every search, the keyword “resource provisioning” was required to appear in the abstract of each research paper. This is a time-consuming process but a general method for review. The search strings used in this review are described in Table 2. This methodical literature survey included both quantitative and qualitative research articles written in English from 2007 to 2014. Basic research in this area commenced in 2000, but rigorous development took place after 2005. We included research papers from journals, conferences, symposiums and workshops, as well as white papers from industry and technical reports. The exclusion criteria used at the different stages are described in Fig. 6. We also searched some journals of Springer, Wiley, Taylor and Francis, Science Direct, etc. individually to cross-check the electronic search. Our search retrieved 1308 research articles, as shown in Fig. 6, which were reduced to 701 research articles based on their titles, 495 based on their abstracts and conclusions and 377 based on their full text. These 377 research articles were then investigated completely, through reference investigation and elimination of common challenges based on the inclusion and exclusion criteria, to arrive at a final collection of 105 research articles.
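
The screening stages reported above can be tabulated to show how many articles each stage removed. The short script below uses only the counts stated in the text; the stage labels are paraphrased, and the "excluded" figures are net reductions between consecutive stages.

```python
# Article counts at each screening stage, as reported in the text.
stages = [
    ("retrieved by search", 1308),
    ("kept after title screening", 701),
    ("kept after abstract and conclusion screening", 495),
    ("kept after full-text screening", 377),
    ("final collection", 105),
]

excluded = []
for (_, previous), (label, kept) in zip(stages, stages[1:]):
    excluded.append(previous - kept)
    print(f"{label}: {kept} articles "
          f"({previous - kept} excluded, {kept / previous:.1%} retained)")

overall_retention = stages[-1][1] / stages[0][1]
print(f"overall retention: {overall_retention:.1%}")
```

The stage-by-stage reductions are 607, 206, 118 and 272 articles, so roughly 8 % of the articles initially retrieved reach the final collection.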

3.3 Quality assessment

A quality assessment was then carried out on the remaining research articles, after the inclusion and exclusion criteria had been applied, to find suitable research articles. Research articles related to cloud resource provisioning appear in various distinct conferences and journals. Every research article was examined for bias and for internal and external validity of results, according to the CRD guidelines given by Kitchenham et al. [39], to provide high-quality resource provisioning research articles.

3.4 Data extraction

The 105 research articles included in this methodical literature survey according to the data extraction guidelines are listed in “Appendix 1”, which was used in the process of information gathering to answer the research questions. When the methodical literature survey started, we faced certain problems, such as extracting suitable data. Where required, we contacted numerous authors to obtain in-depth knowledge of their research. The following procedure for data extraction was used in our review:

- One author extracted data from the 105 research articles after in-depth review.
- The review results were cross-checked by the other author on random samples.
- If any conflict arose during cross-checking, a compromise meeting was called to resolve it.

4 Results

The objective of this review is to explore the existing research as per the research questions stated in Table 1. Out of the 105 research articles, twenty-six are published in prominent journals and the remainder are published in leading conferences, symposiums and workshops on cloud computing. It is worth noting that research articles on resource provisioning mechanisms are published in a wide variety of journals and conference proceedings. “Appendix 2” lists the journals and conferences publishing the most cloud resource provisioning-related research, including the number of papers from each source that report cloud resource provisioning as their prime study. We observed that conferences such as the IEEE/ACM International Symposium on Cluster, Cloud and Grid Computing, the ICSE Workshop on Software Engineering Challenges of Cloud Computing, the International Conference on Service-Oriented Computing and Applications (SOCA), the International Conference on Cloud Computing (CLOUD) and the International Conference on Cloud Computing Technology and Science (CloudCom) contribute a large part of the research articles. Premier journals such as Future Generation Computer Systems, Journal of Grid Computing, Concurrency and Computation: Practice and Experience, ACM SIGOPS Operating Systems Review, Journal of Parallel and Distributed Computing, IEEE Transactions on Parallel and Distributed Systems, ACM Computing Surveys and Journal of Supercomputing contributed significantly to our review area. Figure 7 shows the percentage of research papers discussing the different resource provisioning mechanisms (adaptive, cost, time, compromised cost time, bargaining, QoS, SLA, energy, optimization, nature inspired and bio-inspired, rule and dynamic RPM) from 2007 to 2014.

Of the studies, 55 % were published in conferences, 35 % in journals, 4 % in workshops and 6 % in symposiums. The largest share of publications came from conferences (56 papers), followed by journals (19 papers). Figure 7 shows that the largest share of research papers (15 %) falls in the areas of cost-based and dynamic resource provisioning mechanisms, while only 2 % of research papers address adaptive resource provisioning mechanisms. Nature-inspired and bio-inspired resource provisioning mechanisms contribute 10 % of the research papers, and SLA-, energy- and QoS-based resource provisioning mechanisms contribute 11, 12 and 13 %, respectively. Figure 8 shows the percentage of research papers that consider different QoS parameters (execution time, scalability, cost, response time, energy and resource utilization) from 2007 to 2014.

Figure 8 shows that cost is the QoS parameter used in the largest share of research papers (26 %), while only 4 % of research papers used scalability as a QoS parameter. The literature reports four different types of study in cloud Resource Provisioning (RP) from 2007 to 2014: theory, simulation, survey and testbed, as shown in Fig. 9. Theory is further divided into non-QoS-based RP, QoS-based RP, SLA-based RP and autonomic QoS-based RP. Simulation is further divided according to the simulators used to validate resource provisioning in the cloud: CloudSim, CloudAnalyst, GreenCloud, NetworkCloudSim, EMUSIM, SPECI, GroundSim and DCSim [18, 41, 42].

The number of research papers published on each resource provisioning mechanism from 2007 to 2014 is shown in Fig. 10. Various trends can be observed for the different resource provisioning mechanisms. Research on energy-based resource provisioning increased abruptly, from three research articles in 2012 to 12 research articles in 2013. The number of research articles on nature-inspired and bio-inspired resource provisioning also rose abruptly, from about 3 research articles in 2012 to 8 research articles in 2013. Dynamic resource provisioning received the most research attention in 2012. This indicates the growing demand for, and appreciation of, research in this field of cloud computing in recent years. On the other hand, the least research has been done on adaptive resource provisioning. By contrast, the number of research articles published on optimization, compromised cost time and rule-based resource provisioning remained stable throughout the years. The systematic map in Fig. 10 helps in recognizing which areas of resource provisioning are important, which are heavily used in resource management and which need further research. In the existing research, we did not find any research article that includes more than one tool in any aspect of resource provisioning. We have identified a lack of interoperability among the various tools for cloud resource provisioning. We found a lack of research work on SLA-based resource provisioning except in 2013. We found a large number of research articles on cost-based, bargaining-based, energy-based, nature-inspired and bio-inspired resource provisioning. There is a shortage of research articles validating the methodical results of resource provisioning mechanisms.

Figure 11 shows the number of research papers published from 2007 to 2014 that consider different QoS parameters for resource provisioning mechanisms.

Various trends can be observed for the QoS parameters considered in different resource provisioning mechanisms. Research on cost as a QoS parameter rose abruptly in 2012, from 4 research articles in 2011 to 8, and peaked in 2013 with 10 research articles. The number of publications on execution time as a QoS parameter also rose abruptly, tripling from 4 research articles in 2010 to 8 research articles in 2011 and 12 research articles in 2013. Energy and response time received the most research attention in 2013. This indicates the growing demand for, and appreciation of, research in these fields in recent years. By contrast, the number of research articles published on resource utilization remained almost stable throughout the years. We found a lack of research work on scalability as a QoS parameter in existing resource provisioning mechanisms. We found a large number of research articles on cost, energy and execution time as QoS parameters.

4.1 Cloud resource provisioning mechanisms: current status

Resource management is a collection of activities such as resource provisioning, resource monitoring and resource scheduling, and it covers the types of resource provisioning, the RPMs and their evolution. It plays an essential role in efficient resource utilization. It also overlaps with resource provisioning evolution, resource provisioning analysis and detection of the best workload–resource pair, which are discussed in Sect. 1. For any resource provisioning mechanism, cost, time and energy are the most important characteristics. An RPM plays an important role in provisioning the most appropriate resources to applications. To ensure QoS for the cloud workload according to the requirements of the user, the mechanisms map workloads to resources. Some resource provisioning mechanisms adopt dynamic behavior, whereby resources are provisioned as soon as they are identified [43]. Such mechanisms are called dynamic RPMs and are considered more efficient than static resource provisioning. Another requirement is that RPMs should be designed to avoid underutilization and overutilization of resources. The types of resource provisioning mechanisms identified from the existing literature are shown in Fig. 12. The provisioning of adequate resources to cloud applications depends on the QoS requirements of the applications [44]. The resource monitoring system collects the resource usage of virtual machines by measuring performance metrics such as CPU and memory utilization [45]. Resource monitoring can also be approached from other perspectives, such as security monitoring to achieve confidentiality, integrity and availability of data. Some of the widely used cloud resource discovery and resource provisioning mechanisms are dynamic or distributed. Table 4 gives a comparison of these mechanisms based on their common features.

4.1.1 Cost-based RPMs

Cost-based resource provisioning has been studied by the following authors. Abdullah and Othman [46] presented a Divisible Load Theory (DLT)-based RPM to minimize the execution time of user applications, maximize profit and satisfy the QoS requirements described by the user while executing on homogeneous resources. This approach reduces cost and execution time, but it suffers from communication overhead and is not able to handle dynamic workloads. Hwang and Kim [47] investigated cost-effective resource provisioning for MapReduce applications with deadline constraints, since the MapReduce programming model is useful and powerful for developing data-intensive applications. They considered two resource provisioning approaches: one based on listed pricing policies and the other based on deadline-aware task packing. This approach reduces the cost of Virtual Machines (VMs) and meets the deadline, but it is suitable only for MapReduce applications. Integration of RPMs with workflow technologies is challenging because it is difficult to find the exact resources required to execute workflows with minimum execution cost and maximum resource utilization. Byun et al. [48] suggested a framework that executes workflow-based applications automatically on resources that are provisioned elastically and dynamically, and that finds the minimum resources required to execute the application within the deadline described by the user. This mechanism minimizes resource cost, satisfies the deadline, reduces makespan and performs better than existing mechanisms, but it is unable to handle dynamic (runtime) workloads. Malawski et al. [49] used dynamic and static approaches to address the execution of applications within their deadline and budget for both resource provisioning and task scheduling. This approach executes applications with minimum provisioning delay and a lower failure rate, but it executes only homogeneous workloads. Ming et al. [50] described a mechanism that scales resources automatically based on the QoS and performance requirements of workloads and completes workload execution within the desired deadline. It avoids long VM startup delays and satisfies the deadline, but it is not efficient for multi-tier applications.

Cost-based taxonomy

Based on the above literature, the following taxonomy has been derived, as shown in Fig. 13. Multi-QoS-based resource provisioning considers different QoS parameters such as time, energy and availability in a cost-based provisioning mechanism.

In a virtualization-based cloud environment, the provisioning mechanism is implemented to make resource provisioning cost-efficient. Existing research on cost-efficient resource provisioning considers three types of applications deployed on the cloud: adaptive, data stream and scientific workflow-based applications. In time-based resource provisioning, execution time is considered as a secondary QoS parameter for optimization, after cost. Another QoS parameter, scalability, is also addressed in cost-based resource provisioning to improve resource utilization by avoiding underutilization and overutilization of resources, which can also help to optimize cost.

4.1.2 Time-based RPMs

Time-based resource provisioning has been studied by the following authors. Abrishami et al. [51] presented two Partial Critical Paths-based algorithms, IaaS Cloud Partial Critical Paths (IC-PCP) and IC-PCP with Deadline Distribution (IC-PCPD2), to provision and schedule large workflows. The computation time is lower in this approach, but it is not able to estimate execution and transmission times accurately. Buyya et al. [52] presented a robust provisioning algorithm with resource allocation policies that provision workflow tasks on heterogeneous cloud resources while trying to minimize the total elapsed time (makespan) and the cost. This mechanism increases robustness and minimizes the makespan of the workflow simultaneously, but the cost increases. Gao et al. [53] discussed an RPM that reduces the execution cost of user applications by improving energy efficiency and completes them within the desired deadline without violating the SLA. This approach handles multi-user large-scale workloads easily, but admission control is difficult. Power consumption is reduced and profit is increased, but the approach is inefficient for hard real-time applications. Vecchiola et al. [33] presented a deadline-aware resource provisioning technique for Aneka that considers the QoS constraints of scientific applications and resources from different cloud providers, and executes workloads by allocating resources efficiently to reduce makespan. It allocates resources efficiently with lower execution time, but it does not consider data-intensive HPC applications.

Time-based taxonomy

Based on the above literature, the following taxonomy has been derived, as shown in Fig. 14. Fulfilling the QoS requirements while simultaneously minimizing the execution time is a challenging task in cloud computing.

In deadline-based resource provisioning, resources are provisioned according to the urgent needs of the user and the characteristics of the workloads. Time-based resource provisioning mechanisms also consider budget as a constraint on the provisioning of resources. Resources are provisioned based on the budget specified by the user, and the user is informed whether the workload can be executed within this budget while meeting its deadline or whether the budget must be increased. Time-based resource provisioning mechanisms also consider energy consumption along with the deadline to improve energy efficiency and resource utilization.
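The budget and deadline check described above can be sketched as follows. This is only an illustration under stated assumptions and is not taken from any surveyed mechanism: the function name `check_budget`, the perfectly divisible workload and the whole-hour billing of each VM are all hypothetical.

```python
import math


def check_budget(work_hours, deadline_hours, price_per_vm_hour, budget):
    """Return (feasible, vms_needed, cost) for one workload.

    Assumptions (hypothetical): the work splits evenly across VMs and
    each VM is billed in whole hours.
    """
    if work_hours <= 0 or deadline_hours <= 0:
        raise ValueError("work and deadline must be positive")
    # Fewest VMs that can finish the work before the deadline.
    vms_needed = math.ceil(work_hours / deadline_hours)
    # Whole hours each VM must run when the work is split evenly.
    hours_per_vm = math.ceil(work_hours / vms_needed)
    cost = vms_needed * hours_per_vm * price_per_vm_hour
    return cost <= budget, vms_needed, cost


# Usage: 10 hours of work, a 4-hour deadline, 0.5 per VM-hour.
feasible, vms, cost = check_budget(10, 4, 0.5, budget=7)
print(feasible, vms, cost)
```

When the first value returned is false, the user would be asked to raise the budget or relax the deadline, as the paragraph above describes.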

4.1.3 Compromised cost time-based RPMs

Compromised cost time-based resource provisioning has been studied by the following authors. Budget and deadline need to be considered as QoS parameters to execute cost-constrained workflows in a cloud environment. Liu et al. [54] suggested a compromised cost time-based RPM that targets cost-constrained workflows and considers execution time and cost as QoS parameters. This approach meets the user-defined deadline and achieves lower cost simultaneously, but it does not consider heterogeneous workflow instances. Grekioti et al. [55] studied the structural properties of the time-cost model and explored how existing provisioning techniques can be extended to handle the additional cost criterion. It produces a lower-cost schedule, but it fails under tight deadlines.

Compromised cost time-based taxonomy

Based on the above literature, the following taxonomy has been derived, as shown in Fig. 15. A cloud workload is an abstraction of the work that an instance or set of instances is going to perform. For example, running a Web service or being a Hadoop data node are valid workloads, and resources are provisioned according to the type of workload. The different types of workload identified from the existing literature are discussed in Sect. 1. A workflow is a set of interrelated tasks together with their distribution among the available resources for better resource provisioning.

4.1.4 Bargaining-based RPMs

Bargaining-based resource provisioning has been studied by the following authors. Negotiation between resource provider and resource consumer can become a bottleneck if it is carried out manually; to avoid this bottleneck, negotiation should be automated [2]. Dastjerdi et al. [56] presented an automatic, negotiation-based RPM that assesses the reliability of cloud services and considers resource utilization as a QoS parameter during a new negotiation. It minimizes cost and increases availability and profit, but it considers only homogeneous negotiation. Zaman and Grosu [57] presented an auction-based dynamic VM provisioning mechanism that considers consumer requirements in provisioning decisions. They found that a user maximizes its utility only by bidding its accurate estimate for the requested VM resources. The mechanism considers an online auction along with an SLA based on the QoS requirements given dynamically by the user, and it improves resource utilization and the efficiency of resource allocation, but it is inefficient when demand is low. Wu et al. [58] presented a market-oriented resource provisioning mechanism that contains service-level and task-level dynamic resource provisioning to assign tasks to services and tasks to VMs, respectively. It reduces the overall running cost of datacenters and optimizes the makespan, but it is used only for local task-to-VM assignment, not for global assignment.

Bargaining-based taxonomy

Based on the above literature, the following taxonomy has been derived, as shown in Fig. 16. In market-oriented resource provisioning, resources are provisioned based on the QoS requirements of workloads and the demand patterns in the cloud market.

Different types of resources with different configurations are offered by different providers, and a minimum price is fixed for the resources. In auction-based resource provisioning, the consumer uses a bidding policy to choose the required resource set based on its requirements, while also taking budget and deadline into account. In negotiation-based resource provisioning, user and provider negotiate QoS parameters in the form of a written document called a Service Level Agreement.
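As a rough illustration of auction-based provisioning with a fixed minimum price, the sketch below allocates identical VMs to the highest bidders. It is a simplified sealed-bid example under our own assumptions, not the mechanism of any surveyed paper; the function name `run_auction` and the one-VM-per-consumer rule are hypothetical.

```python
def run_auction(bids, vm_count, min_price):
    """Return the winning consumers, highest bid first.

    bids maps a consumer name to its bid for one VM. Bids below the
    provider's minimum price are rejected; ties are broken by name.
    """
    eligible = [(bid, name) for name, bid in bids.items() if bid >= min_price]
    eligible.sort(key=lambda item: (-item[0], item[1]))
    return [name for _, name in eligible[:vm_count]]


# Usage: two VMs on offer with a minimum price of 3.
print(run_auction({"a": 5, "b": 2, "c": 7, "d": 6}, vm_count=2, min_price=3))
```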

4.1.5 QoS-based RPMs

QoS-based resource provisioning has been studied by the following authors. QoS is the capability to guarantee a definite level of performance based on the parameters described by the consumer. The QoS parameters generally considered in QoS-based RPMs are accountability, performance, response time, cost and execution time. Calheiros et al. [35] presented a platform in which Aneka is used to develop scalable cloud applications and to provision resources from various cloud providers for the execution of different user applications. This approach meets even strict application deadlines with minimum budget expenditure, but actual resource utilization is not efficient, execution takes longer and the actual resource requirement is not determined accurately. This approach considers both scientific and elastic applications. Rosenberg et al. [59] presented a Domain-Specific Language (DSL)-based RPM for specifying QoS constraints and functional requirements. QoS-aware dynamic optimization is possible in this approach, but it is difficult to handle queues at runtime. Handling queues requires re-composition, which leads to further time consumption. Resource provisioning in the context of the cloud considers accountability, performance, response time, cost and execution time as QoS parameters.

QoS-based taxonomy

Based on the above literature, the following taxonomy has been derived, as shown in Fig. 17. QoS-based resource provisioning is done based on different applications and their QoS requirements.

The literature reports that QoS-based provisioning considers two main types of applications: scientific and elastic. Scientific applications are a sector that is increasingly using cloud computing systems and technologies. Cloud computing systems meet the needs of different types of applications in the scientific domain, such as data-intensive applications. Elastic applications are those that can be adjusted dynamically by changing the number of resources, to avoid underutilization and overutilization of resources. The following QoS parameters have been considered in QoS-based resource provisioning. Scalability is the capability of a computing system to maintain performance as the number of users or the resource usage increases, in order to fulfill the requirements of users. The system should be able to produce correct results when the load is increased. Availability is the ability of a system to ensure that data are available with the desired level of performance in normal as well as fatal situations, excluding scheduled downtime. Reliability is the capability of a system to perform consistently according to its predefined objectives. Security is the ability to protect the data stored on the cloud by using data encryption and passwords. Energy is the amount of energy consumed by a resource to finish the execution of a workload. Resource utilization, for a single resource, is the ratio of the actual time the resource spends executing workloads to its total uptime.
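The resource utilization ratio defined above can be written as a small helper. This is a minimal sketch; the function name `resource_utilization` and the input validation are our own assumptions.

```python
def resource_utilization(busy_time, uptime):
    """Ratio of time spent executing workloads to total uptime (0 to 1).

    Both arguments must use the same time unit.
    """
    if uptime <= 0:
        raise ValueError("uptime must be positive")
    if not 0 <= busy_time <= uptime:
        raise ValueError("busy_time must lie between 0 and uptime")
    return busy_time / uptime


# Usage: a resource that executed workloads for 45 of its 60 minutes of uptime.
print(resource_utilization(45, 60))  # 0.75
```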

4.1.6 SLA-based RPMs

SLA-based resource provisioning has been studied by the following authors. Cloud providers compensate the cloud user in case of SLA violations. Simao and Veiga [60] proposed an SLA-based cost model to provision VMs to user applications by considering power consumption as a QoS requirement. It has lower environmental and operational cost, but it does not consider heterogeneous workloads (synthetic and real workloads). Garg et al. [61] presented a provisioning mechanism based on admission control that maximizes profit and resource utilization while also considering the different SLA requirements described by the user. It permits the execution of heterogeneous workloads with different SLA requirements, but it does not handle memory conflicts efficiently. Yoo and Sungchun [62] presented an SLA-Aware Adaptive (SAA) RPM for heterogeneous workloads that employs a flexible determining model to maintain QoS. It produces better response time under varying workloads at minimum resource usage cost, but it is difficult to determine the appropriate measurement level. It considers both heterogeneous and homogeneous cloud workloads. Kertesz et al. [63] introduced an SLA-aware virtualization-based RPM in which QoS requirements are described in terms of an SLA. It achieves the expected utilization gains, but it does not consider penalty and compensation in case of SLA violations.

SLA-based taxonomy

Based on the above literature, the following taxonomy has been derived, as shown in Fig. 18. An SLA-based architecture has been designed in which user and provider interact through a user interface. The user describes QoS requirements such as budget and deadline, while the provider reports cost and execution time. User and provider can then negotiate the SLA through this architecture. In a virtualization-based cloud environment, the SLA-based resource provisioning mechanism is implemented to measure the SLA violation rate and the SLA deviation. A cloud workload is an abstraction of the work that an instance or set of instances is going to perform. Workloads are of two types: homogeneous (with similar QoS requirements) and heterogeneous (with different QoS requirements). In autonomic resource provisioning, if the SLA is violated (the deadline is missed), the penalty delay cost specified in the SLA is imposed automatically, or the required compensation is given to the consumer. The penalty delay cost is the concession that the service provider has to give to users in case of an SLA violation. It depends on the penalty rate and the penalty delay time period.
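The text states only that the penalty delay cost depends on the penalty rate and the penalty delay time period. The sketch below assumes the simplest such dependence, a linear product of the two; the linear form and the function name `penalty_delay_cost` are our assumptions and are not taken from the surveyed papers.

```python
def penalty_delay_cost(finish_time, deadline, penalty_rate):
    """Concession owed to the user when a workload misses its deadline.

    Assumes (hypothetically) a linear penalty: rate multiplied by the
    time by which the deadline was exceeded. No delay means no penalty.
    """
    if penalty_rate < 0:
        raise ValueError("penalty_rate must be non-negative")
    delay = max(0.0, finish_time - deadline)
    return penalty_rate * delay


# Usage: a workload that finishes 2 time units late at a rate of 1.5 per unit.
print(penalty_delay_cost(finish_time=12, deadline=10, penalty_rate=1.5))  # 3.0
```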

4.1.7 Energy-based RPMs

Energy-based resource provisioning has been studied by the following authors. Gao et al. [53] discussed an RPM that reduces the execution cost of user applications by improving energy efficiency and completes them within the desired deadline without violating the SLA. This approach handles multi-user large-scale workloads easily, but admission control is difficult [64]. Power consumption is reduced and profit is increased, but the approach is inefficient for hard real-time applications. Kim et al. [65] described a virtualization-based RPM that provisions real-time VMs to user applications, considering energy as a QoS parameter through dynamic voltage rate scaling policies. It reduces power consumption and increases profit, but it is inefficient for hard real-time applications. Liao et al. [66] described an energy-based RPM for VM provisioning that considers the SLA in order to execute user applications without violating it. Power consumption is reduced without violating the SLA, but live migration is not possible.

Energy-based taxonomy

Based on the above literature, the following taxonomy has been derived, as shown in Fig. 19. Energy-based resource provisioning mechanisms also consider the deadline along with energy, to execute workloads with minimum execution time and within the desired deadline.

To measure energy consumption in cloud datacenters, a virtual cloud environment is created to test the validity of the resource provisioning mechanism. The signed SLA document is also taken into account during resource provisioning, because energy consumption above the threshold value can reduce resource utilization and increase cost.

4.1.8 Optimization-based RPMs

Optimization-based resource provisioning has been studied by the following authors. Singh and Deelman [67] presented a dynamic RPM that executes scientific workflows with minimum execution time by using Advance Reservations (ARs). The execution time of the workflow is reduced, but network and storage costs are not considered. It considers both elastic and scientific applications. Gao et al. [53] discussed an RPM that reduces the execution cost of user applications by improving energy efficiency and completes them within the desired deadline without violating the SLA. This approach handles multi-user large-scale workloads easily, but admission control is difficult. Power consumption is reduced and profit is increased, but the approach is inefficient for hard real-time applications. Zhang et al. [68] considered Pig, a popular technique for processing large datasets that provides a high-level, SQL-like abstraction on top of the MapReduce engine. They estimate the execution time of jobs (Pig programs) and find the resources required to execute a job within the deadline specified by the user. It saves resources and reduces completion time, but the Service Level Objective (SLO) is not considered. Liao et al. [66] described an energy-based RPM for VM provisioning that considers the SLA in order to execute user applications without violating it. Power consumption is reduced without violating the SLA, but live migration is not supported. Henzinger et al. [69] proposed the FlexPRICE RPM, which provides flexibility at the requirements level to offer different execution speeds and at the execution level to offer a choice of provisioning strategy. It provides the flexibility to satisfy the user deadline, hides complexity and improves transparency, but the cost is higher. Javadi et al. [70] proposed a scalable RPM for hybrid cloud infrastructure that meets the QoS requirements described by the user by considering failure relationships when forwarding a request to an adequate cloud provider. It improves the user deadline violation rate, but it does not support resource co-allocation.

Optimization-based taxonomy

Based on the above literature, the following taxonomy has been derived, as shown in Fig. 20. Optimization-based resource provisioning considers energy consumption as a QoS parameter, with resources provisioned without violating the SLA. Optimization-based resource provisioning mechanisms also consider the deadline along with energy, to execute workloads with minimum execution time and within the desired deadline.

Different QoS parameters such as cost and time are considered and optimized to improve customer satisfaction and revenue. The literature reports that optimization-based resource provisioning considers scientific and elastic applications. Scientific applications, such as data-intensive applications, are a sector that is increasingly using cloud computing systems and technologies. Elastic applications are those that can be adjusted dynamically by changing the number of resources, to avoid underutilization and overutilization of resources. A single task is divided into subtasks, and the characteristics of every subtask are identified. Resources are provisioned based on the individual requirements of the subtasks, and the results of the subtasks are integrated using dynamic programming to obtain the final outcome. In a hybrid cloud environment, resources are provisioned for different workloads and the performance of every resource is checked periodically. If any resource fails, reserved resources can be used to complete the processing of the current workload without degrading performance.

4.1.9 Nature-inspired- and bio-inspired-based RPMs

Nature-inspired and bio-inspired resource provisioning has been studied by the following authors. Tsai et al. [71] presented an Improved Differential Evolution Algorithm (IDEA)-based RPM that optimizes the provisioning of resources by considering the proposed cost (receiving cost and processing cost) and time (receiving time, queuing time and processing time). It takes less time and cost and improves resource utilization, but it is difficult to make resource allocation decisions. Dhinesh Babu and Venkata Krishna [72] proposed the Honey Bee Behavior-inspired Load Balancing (HBB-LB) RPM, which balances load across VMs to improve resource utilization and balances the priorities of workloads on VMs to reduce queuing time. The queuing time of tasks is lower, and the execution time is also lower, but it is not used for workflows with dependent tasks. Dasgupta et al. [73] proposed a load balancing-based RPM that uses a Genetic Algorithm (GA) to balance the load of the cloud infrastructure while trying to minimize the makespan of a given task set. This approach provides efficient resource utilization and load balancing, but it is inefficient for heterogeneous workloads. Feller et al. [74] presented an Ant Colony Optimization (ACO)-based RPM for workload consolidation. It achieves energy improvements and better resource utilization and requires fewer machines, but it does not consider SLA and heterogeneous workloads. Pandey et al. [75] proposed a Particle Swarm Optimization (PSO)-based RPM that provisions resources to workloads by considering data transmission and computation cost. It achieves three times better cost savings than the Best Resource Selection (BRS) policy and a good distribution of workloads, but execution time is not considered as a QoS parameter in this technique. Paulin Florence et al. [76] proposed a firefly-based resource provisioning approach that maximizes resource utilization and provides effective load balancing among all the resources in cloud servers based on several factors such as memory usage, processing time and access rate. It optimizes the balance of loads, but it does not consider heterogeneous workloads.

Nature-inspired- and bio-inspired-based taxonomy

Based on the above literature, the following taxonomy has been derived, as shown in Fig. 21. In IDEA-based resource provisioning, DEA and the Taguchi method are combined to find the Pareto front of total execution time and cost by applying a non-dominated sorting technique.
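To make the notion of a Pareto front of execution time and cost concrete, the sketch below filters a set of candidate provisioning plans down to the non-dominated ones. It shows only this filtering step under our own assumptions; it does not implement IDEA, differential evolution or the Taguchi method, and the name `pareto_front` and the sample plans are hypothetical.

```python
def pareto_front(plans):
    """Return the non-dominated (time, cost) pairs; both are minimized.

    Plan a dominates plan b when a is no worse in both objectives
    and the two plans differ.
    """
    def dominates(a, b):
        return a[0] <= b[0] and a[1] <= b[1] and a != b

    return [p for p in plans if not any(dominates(q, p) for q in plans)]


# Usage: each tuple is (total execution time, total cost) of one candidate plan.
candidates = [(10, 5), (8, 7), (12, 4), (9, 9), (10, 6)]
print(pareto_front(candidates))  # [(10, 5), (8, 7), (12, 4)]
```

Every plan that survives the filter represents a different trade-off between time and cost; choosing among them is left to the provisioning policy.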

Honey Bee Behavior-inspired Load Balancing identifies the load of every virtual machine and places the VMs in three groups: underloaded VMs, overloaded VMs and balanced VMs. Tasks are removed from overloaded VMs and added to underloaded VMs to achieve effective load balancing. In HBB-LB, a task is considered a honey bee and the underloaded VMs are considered the destinations of the honey bees. In GA-based resource provisioning, the possible solutions are encoded as binary strings, a few of them are selected, and the value of the fitness function is calculated to identify the mutation value; resources are then provisioned based on the minimum mutation value. ACO-based resource provisioning maps the workloads to Physical Machines (PMs) as an instance of the Multi-Dimensional Bin Packing (MDBP) problem, in which the workloads are the items to be packed and the PMs are the bins. In PSO-based resource provisioning, a directed acyclic graph represents the workflow, and the best position of each particle at any instant is calculated based on its fitness value in order to provision the resources. In firefly-based resource provisioning, a population is generated and the attractiveness of every firefly with respect to the others is identified based on the objective function. Attractiveness decays monotonically with distance, so this mechanism selects the resource with minimum distance (the most effective task-resource pair).
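The grouping and task migration described for HBB-LB can be sketched roughly as follows. This is a simplified illustration and not the published HBB-LB algorithm: the thresholds `low` and `high`, the shortest-task-first migration rule and the function names are our own assumptions, and task priorities are ignored.

```python
def classify_vms(loads, low, high):
    """Group VMs by load; `low` and `high` are hypothetical thresholds."""
    groups = {"underloaded": [], "balanced": [], "overloaded": []}
    for vm in sorted(loads):
        if loads[vm] < low:
            groups["underloaded"].append(vm)
        elif loads[vm] > high:
            groups["overloaded"].append(vm)
        else:
            groups["balanced"].append(vm)
    return groups


def rebalance(tasks, low, high):
    """Move the shortest tasks from overloaded VMs to underloaded VMs.

    tasks maps a VM name to a list of positive task lengths.
    The input mapping is copied, not modified.
    """
    tasks = {vm: list(lengths) for vm, lengths in tasks.items()}

    def load(vm):
        return sum(tasks[vm])

    for source in sorted(tasks):
        while load(source) > high and tasks[source]:
            # The least loaded VM is the candidate destination.
            target = min(tasks, key=lambda vm: (load(vm), vm))
            task = min(tasks[source])
            # Stop when no underloaded VM can absorb the task.
            if (target == source or load(target) >= low
                    or load(target) + task > high):
                break
            tasks[source].remove(task)
            tasks[target].append(task)
    return tasks


# Usage with hypothetical task lengths and thresholds.
queues = {"vm1": [4, 3, 5], "vm2": [1], "vm3": [6]}
print(classify_vms({vm: sum(q) for vm, q in queues.items()}, low=5, high=8))
print(rebalance(queues, low=5, high=8))
```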

4.1.10 Dynamic RPMs

Dynamic resource provisioning research work has been done by the following authors. In cloud computing, provisioning resources to dynamically fluctuating workloads is a complex task. Lin et al. [77] presented a threshold-based RPM that provisions virtual resources dynamically to workloads based on the QoS requirements specified by the user. It increases the utilization of resources, but its complexity increases as the reallocation of physical resources increases. Zhang et al. [34] described a control theory-based dynamic resource provisioning method that reduces energy consumption and achieves the required performance while keeping the average provisioning delay for distinct jobs tolerable. It minimizes carbon footprint and handles demand fluctuation dynamically, but it does not consider heterogeneous resources. Zhang et al. [78] presented HARMONY, a heterogeneity-aware resource management system for dynamic capacity provisioning in cloud computing environments. It uses a k-means-based clustering algorithm to divide the workload into distinct task classes with similar resource and performance requirements, and dynamically adjusts the number of machines of each type to minimize total energy consumption and the performance penalty in terms of provisioning delay. It saves energy and improves workload provisioning delay, but complexity increases due to heterogeneity in workloads and resources. Bi et al. [79] described a clustering-based dynamic RPM for execution of virtualized multi-tier applications that helps to identify the VM requirement of every tier. It reduces cost and improves resource utilization, flexibility and efficiency, but SLA and heterogeneous workloads are not considered. It comprises four types of applications: virtualized multi-tier, data streaming, high performance computing and server-oriented applications. Zhang et al. [80] studied resource allocation in a cloud market through the auction of VM instances by introducing combinatorial auctions of heterogeneous VMs and modeling dynamic VM provisioning. Auction-based dynamic resource provisioning is effective in CPU utilization but lacks SLA fulfillment.
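The k-means step used to divide a workload into task classes can be illustrated with a minimal, dependency-free Python sketch. This is a generic Lloyd-style k-means, not the HARMONY implementation; the two-dimensional task vectors (for example CPU and memory demand) and the deterministic initialization from the first `k` points are assumptions made so that the example is reproducible.

```python
def kmeans(points, k, iterations=20):
    """Cluster task requirement vectors into k task classes.

    Assumption: centroids are initialized with the first k points so the
    result is deterministic; practical systems usually initialize randomly.
    """
    centroids = [tuple(p) for p in points[:k]]
    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            # Assign each task to the nearest centroid (squared Euclidean distance).
            nearest = min(
                range(k),
                key=lambda c: sum((a - b) ** 2 for a, b in zip(p, centroids[c])),
            )
            clusters[nearest].append(p)
        # Recompute each centroid as the mean of its cluster (keep it if empty).
        updated = [
            tuple(sum(dim) / len(cluster) for dim in zip(*cluster))
            if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if updated == centroids:
            break  # assignments have stabilized
        centroids = updated
    return centroids, clusters


# Hypothetical (cpu, memory) demands of six tasks.
tasks = [(1, 1), (8, 8), (1, 2), (9, 8), (2, 1), (8, 9)]
centroids, classes = kmeans(tasks, k=2)
```

Here the six tasks separate into a class of small tasks and a class of large tasks; a provisioning system could then size the number of machines per class.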

Le et al. [81] proposed an adaptive resource management policy to handle requests of deadline-bound applications with an elastic cloud by dividing resource management into two parts: resource provisioning and job scheduling. Three job scheduling policies are proposed to dequeue appropriate jobs for execution, First-Come-First-Service (FCFS), Shortest Job First (SJF) and Nearest Deadline First (NDF), reflecting different preferences for execution order. Among these, FCFS performs better than the others, but it is complex due to SLA management. Pawar and Wagh [82] proposed an RPM that considers several SLA parameters (CPU time, memory required and network bandwidth) and also the execution of preemptable tasks. It provides better resource utilization and reduces job execution time, but the largest jobs may suffer from starvation. Zhu et al. [83] presented a dynamic RPM and a hybrid queuing framework that provides flexibility in finding virtualized resources for the services of a virtualized application. It increases global profit and reduces resource usage cost, but SLA violations occur due to fluctuation in user requirements (QoS).
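The three dequeue policies differ only in the key used to order the job queue, which a short Python sketch makes concrete. The `Job` fields and the helper `dequeue_order` are hypothetical names chosen for illustration and are not taken from Le et al. [81].

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    arrival: int   # arrival time of the request
    runtime: int   # estimated execution time
    deadline: int  # absolute deadline


# Each policy is a sort key over the queued jobs.
POLICIES = {
    "FCFS": lambda job: job.arrival,   # earliest arrival first
    "SJF": lambda job: job.runtime,    # shortest job first
    "NDF": lambda job: job.deadline,   # nearest deadline first
}


def dequeue_order(jobs, policy):
    """Return job names in the order the given policy would dequeue them."""
    return [job.name for job in sorted(jobs, key=POLICIES[policy])]


queue = [Job("a", 0, 5, 20), Job("b", 1, 2, 30), Job("c", 2, 9, 10)]
print(dequeue_order(queue, "FCFS"))  # ['a', 'b', 'c']
print(dequeue_order(queue, "SJF"))   # ['b', 'a', 'c']
print(dequeue_order(queue, "NDF"))   # ['c', 'a', 'b']
```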

Dynamic RPM-based taxonomy Based on the above literature, the following taxonomy has been derived, as shown in Fig. 22. In threshold-based resource provisioning, a threshold value for resource utilization is identified to execute resources in a virtual cloud environment. If the value of resource utilization exceeds the threshold value, resources are reallocated dynamically.
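The threshold check can be written in a few lines of Python. The threshold of 0.8 and the function name `vms_to_reallocate` are illustrative assumptions; the surveyed work does not prescribe a particular value.

```python
def vms_to_reallocate(utilizations, threshold=0.8):
    """Return the VMs whose utilization exceeds the threshold.

    `utilizations` maps a VM name to a utilization in [0, 1].
    The default threshold is a hypothetical value.
    """
    return [vm for vm, used in utilizations.items() if used > threshold]


# Only vm1 exceeds the threshold; vm3 sits exactly on it and is left alone.
print(vms_to_reallocate({"vm1": 0.95, "vm2": 0.40, "vm3": 0.80}))  # ['vm1']
```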

Dynamic resource provisioning considers energy consumption as a QoS parameter: resources are provisioned dynamically and workloads are executed with minimum energy consumption. A heterogeneous workload is an abstraction of the work that a resource set performs to fulfill the different QoS requirements of a workload. The literature reports that dynamic resource provisioning considers multi-tier virtual, data streaming, high performance computing and server-oriented applications. Resources are provisioned according to the important QoS requirements of applications. In auction-based dynamic resource provisioning, an auction-based policy chooses the required resource set based on workload requirements while also taking care of budget and deadline. In deadline-based dynamic resource provisioning, resources are provisioned according to the urgent needs of the user and the characteristics of their workloads, in particular so that each workload executes within its deadline. The priority of each workload is identified from its execution time, and the workloads are sorted so that the workload with the minimum deadline value is executed first. In a virtualization-based cloud environment, an SLA-based dynamic resource provisioning mechanism is designed and implemented to measure the SLA violation rate and SLA deviation based on the availability of resources. The SLA violation rate changes dynamically for effective provisioning of resources.

4.1.11 Rule-based RPMs

Rule-based resource provisioning research work has been done by the following authors. However, very little research considers the reliability of dynamically provisioned resources. Tian and Meng [84] described a failure rules-based resource provisioning mechanism for heterogeneous cloud services. It provides robust nodes for heterogeneous services, a lower chance of unplanned failure, and no undesirable influence on server performance or resource utilization, but it is inefficient for heterogeneous and independent workloads. Grewal et al. [36] proposed a rule-based resource provisioning mechanism for the hybrid cloud environment [85] to improve dynamic scalability and minimize execution cost. It provides better resource utilization under different priority requirements and avoids overprovisioning, but it suffers from underprovisioning of resources. Nelson and Uma [86] suggested a resource provisioning system in which tasks and resources are described semantically and stored using a resource ontology and a semantic scheduler, and a collection of inference rules is used to allocate the resources. It fulfills customer requirements, but it is inefficient for heterogeneous applications/workloads.

Rule-based taxonomy Based on the above literature, the following taxonomy has been derived, as shown in Fig. 23. In a cloud environment, resources are provisioned for different workloads, and the performance of every resource is checked periodically. If any resource fails, reserved resources can be used to complete the processing of the current workload without degradation of performance. Different rules for resource provisioning have been designed to reduce overprovisioning and underprovisioning of resources, and a rule-based provisioning mechanism has been deployed in a hybrid cloud environment. For provisioning of resources to homogeneous workloads, inference rules are designed using a resource ontology, and a semantic scheduler is created.
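A rule-based mechanism of this kind can be pictured as an ordered list of condition/action pairs evaluated against the observed state. The Python sketch below is purely illustrative: the rule names, the state fields (`failed`, `demand`, `allocated`) and the 1.5 overprovisioning factor are assumptions and do not come from the surveyed papers.

```python
# Each rule is (name, condition over the observed state, action to take).
# Rules are checked in order; the first matching rule wins.
RULES = [
    ("resource_failed", lambda s: s["failed"], "switch_to_reserved"),
    ("underprovisioned", lambda s: s["demand"] > s["allocated"], "scale_up"),
    # Assumption: more than 1.5x the demand counts as overprovisioning.
    ("overprovisioned", lambda s: s["allocated"] > s["demand"] * 1.5, "scale_down"),
]


def decide(state):
    """Return the action of the first rule whose condition holds."""
    for _name, condition, action in RULES:
        if condition(state):
            return action
    return "no_change"


print(decide({"failed": True, "demand": 4, "allocated": 4}))   # switch_to_reserved
print(decide({"failed": False, "demand": 6, "allocated": 4}))  # scale_up
print(decide({"failed": False, "demand": 2, "allocated": 4}))  # scale_down
```

Ordering the failure rule first reflects the text's emphasis on completing the current workload with reserved resources before any scaling decision is considered.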

4.1.12 Adaptive-based RPMs

The literature reports that little research has been done in the area of adaptive-based resource provisioning. Adaptive-based resource provisioning research work has been done by the following authors. Song et al. [87] presented a virtualization-based methodology to provision resources dynamically based on application demand and to reduce energy consumption by optimizing the usage of servers. This approach performs better in hot spot migration and load balancing, but live migration is not possible. Islam et al. [88] proposed a prediction-based RPM using Linear Regression and Neural Network to satisfy forecast resource requirements. This approach is able to generate dynamic rules and auto-scale resources, but SLA is not considered.

Adaptive-based taxonomy Based on the above literature, the following taxonomy has been derived, as shown in Fig. 24. Based on the different criteria of different workloads, the important characteristics of workloads are identified first, and then the resource requirement is predicted for efficient resource provisioning, which avoids underutilization and overutilization of resources.
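The prediction step can be illustrated with an ordinary least-squares line fitted to recent demand, in the spirit of the linear regression approach mentioned for prediction-based provisioning. This is a minimal sketch under the assumption that demand is sampled at equal intervals; the function names `fit_line` and `forecast` are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    return slope, mean_y - slope * mean_x


def forecast(history, steps_ahead=1):
    """Predict demand `steps_ahead` intervals after the last observation.

    Assumption: `history` holds at least two equally spaced samples.
    """
    xs = list(range(len(history)))
    slope, intercept = fit_line(xs, history)
    return intercept + slope * (len(history) - 1 + steps_ahead)


# Demand grew by 2 units per interval, so the next interval is forecast at 18.
print(forecast([10, 12, 14, 16]))  # 18.0
```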

The combinatorial NP-hard problem of autonomic provisioning of resources to workloads is solved using an online bin packing mechanism that identifies the adequate and required number of resources.
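Online bin packing places each workload as it arrives, without knowledge of future arrivals. The Python sketch below uses the first-fit heuristic as one common example; the text does not say which heuristic the surveyed work uses, so first-fit, the single-dimension demands and the uniform `capacity` are assumptions.

```python
def first_fit(demands, capacity):
    """Place each arriving demand into the first resource with enough room.

    Returns (placement, resources_used), where placement[i] is the index of
    the resource that received demand i. A new resource is opened only when
    no existing one can host the demand.
    """
    free = []        # remaining capacity of each opened resource
    placement = []
    for demand in demands:
        if demand > capacity:
            raise ValueError("demand exceeds the capacity of a single resource")
        for index, room in enumerate(free):
            if demand <= room:
                free[index] -= demand
                placement.append(index)
                break
        else:
            free.append(capacity - demand)
            placement.append(len(free) - 1)
    return placement, len(free)


placement, used = first_fit([5, 7, 5, 2, 4, 2, 5], capacity=10)
print(placement, used)  # [0, 1, 0, 1, 2, 2, 3] 4
```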

4.2 Comparison of resource provisioning mechanisms

Comparison of resource provisioning mechanisms is a difficult task due to the different types of resource provisioning mechanisms and the lack of benchmarks. Even so, comparison of RPMs is significant for finding effective resource provisioning mechanisms. We considered different traits of resource provisioning mechanisms, as discussed below.

4.2.1 Traits of resource provisioning mechanisms

RPMs in cloud systems can be compared based on some common characteristics for solving provisioning problems. Sub type, searching mechanism, application type, optimal, operational environment, objective function, provisioning criteria, resource provisioning strategy, merits, demerits, technology, level, citations to RPM, validations, scalability and elasticity are some of the common and basic characteristics that should be examined in each RPM, as described in Table 3. Table 4 shows the comparison of resource provisioning mechanisms based on these traits. Current status and open issues have further been classified based on resource provisioning mechanisms in Table 5.

5 Discussion

A total of 105 research articles out of 1308 have been studied to classify resource provisioning mechanisms and to provide a reckonable summary. Unlike former reviews, our main focus is on resource management, resource provisioning and RPMs, and we have categorized the existing research work under various important sub-topics. Existing review articles by Hussain et al. [10], Islam et al. [11] and Huang et al. [12] have also identified research issues. These review articles presented initial research work in this area. Hussain et al. [10] concentrated on allocation of resources in distributed environments. Islam et al. [11] systematically reviewed the existing literature on adaptive resource provisioning in the cloud. Huang et al. [12] focused on job scheduling algorithms and resource allocation policies in the cloud. Three research questions have been outlined to explore how many types of resource allocation methods, adaptive resource provisioning, and job scheduling algorithms and resource allocation policies can be categorized and utilized. Only 15 main research articles on resource provisioning were used by these authors in their surveys. We have used standard review strategies and conducted a broader literature survey on cloud resource provisioning up to 2014. We have explored the research issues of RPMs along with resource management, resource provisioning analysis, resource provisioning evolution, best detection of workloads and resources, and resource scheduling with and without resource provisioning. A methodical technique has been used to develop a resource provisioning evolution which recognizes FoS and QoS parameters in resource provisioning mechanisms. We explored the resource provisioning mechanisms and their subtypes in detail and compared the resource provisioning mechanisms. We identified the problems addressed and the challenges still pending in resource provisioning mechanisms. Furthermore, the most important innovations in resource provisioning mechanisms have happened after 2007. From this survey, readers can easily find the recent research carried out after 2007 along with previous surveys, because we have categorized all the existing studies logically into various sections. The main outcomes of our methodical analysis have been discussed in Sect. 4.2 along with the weaknesses and strengths of the evidence. The next sections describe the benefits of cloud resource provisioning and the implications for research scholars and professional experts.

5.1 Benefits of cloud resource provisioning

We have identified various benefits of cloud resource provisioning from the literature. Some of the key findings are:

1. Effective cloud resource provisioning reduces the execution time of cloud workloads.
2. It provides better resource utilization under different priority requirements and avoids overprovisioning and underprovisioning.
3. There is no provisioning delay and a lower chance of resource failure due to efficient management of resources.
4. There is no long VM startup delay, so provisioned resources are provided immediately in effective cloud resource provisioning.
5. It increases robustness and minimizes the makespan of a workflow simultaneously.
6. It meets even strict application deadlines with minimum budget expenditure and increases global profit.
7. Power consumption is reduced without violation of SLA in effective cloud resource provisioning.
8. Load is balanced efficiently by efficient distribution of the workloads on available resources.
9. It improves the user deadline violation rate because resources are provisioned before resource scheduling.
10. Effective cloud resource provisioning reduces queuing time in the workload queue.
11. It minimizes carbon footprint and enables dynamic scalability to handle demand fluctuation in effective cloud resource provisioning.
12. It provides robust nodes for heterogeneous services, a lower chance of unplanned failure, and no negative impact on server performance and node resource utility.

5.2 Implications for research scholars and professional experts

This methodical analysis has implications both for research scholars who are doing research in cloud computing and looking for new ideas in resource provisioning, and for professional experts employed in cloud-oriented corporations who want to use different RPMs for better cloud service. A number of opportunities exist for research scholars and professional experts. Resource management is a challenging and emerging field of research in cloud computing. It is very difficult to manage large amounts of data in industry. Scalable RPMs are therefore required that can recognize the nature of workloads and the QoS requirements described by the consumer, and that help the cloud provider to integrate into other development environments. A broad, industrial-strength RPM with integrated recognition and developer-responsive conception of workloads and resources would help the cloud provider to detect workloads and resources as they increase during resource allocation.