Abstract

Elliptic polytopes are convex hulls of several concentric plane ellipses in \({{\mathbb {R}}}^d\). They arise in applications as natural generalizations of usual polytopes. In particular, they define invariant convex bodies of linear operators, optimal Lyapunov norms for linear dynamical systems, etc. To construct elliptic polytopes one needs to decide whether a given ellipse is contained in the convex hull of other ellipses. We analyse the computational complexity of this problem and show that for \(d=2, 3\), it admits an explicit solution. For larger d, two geometric methods for approximate solution are presented. Both use convex optimization tools. The efficiency of the methods is demonstrated in two applications: the construction of extremal norms of linear operators and the computation of the joint spectral radius/Lyapunov exponent of a family of matrices.

1 Introduction

Convex hulls of two-dimensional ellipses in \({{\mathbb {R}}}^d\) can efficiently replace usual polytopes in some problems of numerical linear algebra and operator theory. They provide convenient tools in the evaluation of Lyapunov functions and of extremal norms of linear operators, in the study of stability of linear switching systems and in the computation of the joint spectral radius. However, the construction of such convex hulls is computationally hard, especially in high dimensions. It reduces to the following question: decide whether a given ellipse is contained in the convex hull of other given ellipses. We study the complexity of this problem, show that it admits an explicit solution in low dimensions, and for higher dimensions derive several methods for its approximate solution.

Note that simply approximating each ellipse by a polygon is inefficient: achieving good precision requires enormous computations. That is why the problem requires other approaches based on various geometric ideas. The paper concludes with numerical results and applications. We also establish the relation to the well-known concept of balanced complex polytopes.

Definition 1.1

An elliptic polytope in \({{\mathbb {R}}}^d\) is a convex hull of several two-dimensional ellipses centred at the origin. Those ellipses which are not in the convex hull of the others are called vertices of the elliptic polytope.

An ellipse can be degenerate, in which case it is a segment centred at the origin. So, every (usual) polytope symmetric about the origin is also an elliptic polytope. We define an ellipse either by a pair of vectors \({\varvec{a}}, {\varvec{b}}\in {{\mathbb {R}}}^d\) as the set of points \({\varvec{a}}\cos t + {\varvec{b}}\sin t\), \(t \in {{\mathbb {R}}}\) and denote it as \(E({\varvec{a}}, {\varvec{b}})\), or by a complex vector \({\varvec{v}}= {\varvec{a}}+ i {\varvec{b}}\in {{\mathbb {C}}}^d\), and denote it as \(E({\varvec{v}}) = E({\varvec{a}}, {\varvec{b}})\), where \({\varvec{a}}= \mathfrak {Re}\,{\varvec{v}}\), \({\varvec{b}}= \mathfrak {Im}\,{\varvec{v}}\) are real and imaginary parts of \({\varvec{v}}\), respectively.

Two complex vectors \({\varvec{v}}_1, {\varvec{v}}_2\in {{\mathbb {C}}}^d\) define the same ellipse if either \({\varvec{v}}_2 = z {\varvec{v}}_1\) or \({\varvec{v}}_2 = z {\bar{{\varvec{v}}}}_1\) for some \(z\in {{\mathbb {C}}}\), \(\left|z\right| = 1\).
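The two representations can be checked numerically. The following is a minimal sketch for \(d = 2\), assuming that the real and imaginary parts of \({\varvec{v}}_1\) are linearly independent; the helper names `ellipse_point` and `on_ellipse` and the sample vector are ours and serve only as an illustration.

```python
import cmath
import math

def ellipse_point(v, t):
    """Point a*cos(t) + b*sin(t) of E(v), where a = Re v and b = Im v."""
    return [z.real * math.cos(t) + z.imag * math.sin(t) for z in v]

def on_ellipse(p, v, tol=1e-9):
    """Check whether the plane point p lies on E(v).

    Assumes d = 2 and that a = Re v, b = Im v are linearly independent,
    so p = c*a + s*b has a unique solution (c, s) by Cramer's rule;
    p lies on E(v) exactly when c^2 + s^2 = 1.
    """
    (a1, a2), (b1, b2) = [z.real for z in v], [z.imag for z in v]
    det = a1 * b2 - a2 * b1
    c = (p[0] * b2 - p[1] * b1) / det
    s = (a1 * p[1] - a2 * p[0]) / det
    return abs(c * c + s * s - 1.0) < tol

v1 = [2 + 1j, 0.5 - 1j]                  # illustrative vector, a = (2, 0.5), b = (1, -1)
z = cmath.exp(0.7j)                      # any complex number of modulus one
v2 = [z * w for w in v1]                 # v2 = z * v1
v3 = [z * w.conjugate() for w in v1]     # v3 = z * conj(v1)

ts = [2 * math.pi * k / 12 for k in range(12)]
same = all(on_ellipse(ellipse_point(v, t), v1) for v in (v2, v3) for t in ts)
print(same)
```

Every sampled point of \(E({\varvec{v}}_2)\) and \(E({\varvec{v}}_3)\) lands on \(E({\varvec{v}}_1)\), which is what the statement above predicts.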

The plan of the paper is the following. In the next section we discuss the main applications of elliptic polytopes in problems of linear algebra and linear dynamical systems. Then we formulate the main problem, describe the main results, discuss their novelty and the main methods of the study.

In Sect. 4 we give necessary definitions and notation and formulate auxiliary facts. In Sect. 5 we reduce the main problem to optimization problems and study their complexity. As we shall see, the problem is computationally hard.

Section 6 deals with the low-dimensional case, where the problem can be explicitly solved. This fact is rather surprising, taking into account that the number of ellipses can be arbitrarily large. Then we present two methods of approximate solution for higher dimensions: the complex polytope method (Sect. 8) and the cutting angle method (Sects. 9 and 10). As we shall see, the latter can be significantly improved by implementing the high-dimensional projection approach [2] for solving quadratic programming problems.

Numerical results and illustrative examples are presented in Sect. 11 followed by examples from applications.

2 Motivation and applications

The construction of elliptic polytopes arises naturally in the study of spectral properties of matrices, of asymptotics of long matrix products, the stability of linear dynamical systems, and in related problems. Below we consider some of these applications.

2.1 Application 1. Norms in \({{\mathbb {C}}}^d\) restricted to \({{\mathbb {R}}}^d\)

It is well-known that every convex body in \({{\mathbb {R}}}^d\) symmetric about the origin generates a norm in \({{\mathbb {R}}}^d\), called its Minkowski norm. In contrast, not every convex body in \({{\mathbb {C}}}^d\) defines a norm in \({{\mathbb {C}}}^d\). Such bodies have a particular structure: If S is a unit sphere of a norm \(\left\Vert \mathord {\,\cdot \,}\right\Vert \) in \({{\mathbb {C}}}^d\), then for every \({\varvec{v}}\in S\), the curve \(\{e^{-i s } {\varvec{v}}: s \in {{\mathbb {R}}}\}\) lies on S. Indeed, \(\left\Vert e^{-i s } {\varvec{v}}\right\Vert = \left\Vert {\varvec{v}}\right\Vert = 1\). Note that the real part of the point \(e^{-i s } {\varvec{v}}\) runs over the ellipse \(E({\varvec{v}})\) as s runs over \({{\mathbb {R}}}\). Thus, a unit ball of a norm in \({{\mathbb {R}}}^d\) induced by an arbitrary complex norm is a convex hull of a (possibly infinite) set of ellipses. In particular, a piecewise linear approximation of a norm in the complex space is a balanced complex polytope with real and imaginary parts being elliptic polytopes. Therefore, elliptic polytopes are the real and imaginary parts of a polyhedral approximation for unit balls of norms in \({{\mathbb {C}}}^d\).

2.2 Application 2. Lyapunov functions for linear dynamical systems

For a discrete time linear system of the form \({\varvec{x}}(k+1) = A{\varvec{x}}(k)\), \(k \in {{\mathbb {N}}}\), where A is a constant \(d\times d\) matrix, an important issue is the construction of a Lyapunov function \(f({\varvec{x}})\), for which \(f(A{\varvec{x}}) \le \lambda f({\varvec{x}})\), \({\varvec{x}}\in {{\mathbb {R}}}^d\). If such a function exists for \(\lambda = 1\), then the system is stable; if it exists for \(\lambda < 1\), then it is asymptotically stable. A Lyapunov function provides detailed information on the asymptotic behaviour of the trajectories \({\varvec{x}}(k)\) as \(k\rightarrow \infty \). A standard approach is to find a quadratic Lyapunov function \(f({\varvec{x}}) = \sqrt{{\varvec{x}}^T M {\varvec{x}}}\), where M is a positive definite matrix. By the Lyapunov theorem such a matrix M exists whenever \(\rho (A) < 1\), where \(\rho \) is the spectral radius (maximal modulus of eigenvalues). The quadratic Lyapunov function can be found either by solving a semidefinite programming problem, or by finding all eigenvectors of A. In high dimensions (larger than 25–100), however, both those methods become hard. In this case one should consider Lyapunov functions from other classes, for example, from the class of polyhedral functions. To construct a polyhedral Lyapunov function one needs to find a polytope P such that \(AP \subset P\). Such a polytope can be constructed iteratively starting with an arbitrary polytope \(P_0\) and running the process \(P_{k+1} = \textrm{co}\,\{AP_k, P_k\}\), where \(\textrm{co}\,\) denotes the convex hull. When \(P_{n+1} = P_n\) the algorithm halts and we set \(P = P_n\). In general, this algorithm never stops except when started with the leading eigenvector(s) \({\varvec{v}}\) related to the problem. If \({\varvec{v}}\) is not real then \(P_0\) should be chosen as \(E({\varvec{v}})\) and the final polytope P will be elliptic.

2.3 Application 3. Computation of the joint spectral radius

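This is one of the most important applications of elliptic polytopes. The joint spectral radius of matrices is the maximal rate of asymptotic growth of norms of their long products. For an arbitrary family \({{\mathcal {A}}}= \{A_1, \ldots , A_m\}\) of \(d\times d\) matrices, the joint spectral radius (JSR) is the limit

\[ \rho ({{\mathcal {A}}}) = \lim _{k\rightarrow \infty } \, \max _{A_{j_1}, \ldots , A_{j_k} \in {{\mathcal {A}}}} \left\Vert A_{j_k} \cdots A_{j_1}\right\Vert ^{1/k}. \]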

Introduced by G.-C. Rota and G. Strang in 1960, the joint spectral radius has found numerous applications, see [3, 9, 19, 21, 22, 29] for surveys. The computation of the joint spectral radius, even its approximation, is a hard problem.
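To make the definition concrete, here is a minimal sketch of the standard brute-force bounds (this is not the Invariant polytope algorithm discussed below). For every k, the largest value of \(\rho (\Pi )^{1/k}\) over all products \(\Pi \) of length k bounds \(\rho ({{\mathcal {A}}})\) from below, and the largest value of \(\left\Vert \Pi \right\Vert ^{1/k}\) bounds it from above. The helper names, the restriction to \(2\times 2\) matrices, the choice of the maximal row sum norm, and the two example matrices are assumptions made for illustration only.

```python
import cmath
from itertools import product

def matmul(A, B):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def spectral_radius(A):
    """Largest modulus of the eigenvalues of a 2x2 matrix (via trace and determinant)."""
    tr = A[0][0] + A[1][1]
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    disc = cmath.sqrt(tr * tr - 4 * det)
    return max(abs((tr + disc) / 2), abs((tr - disc) / 2))

def inf_norm(A):
    """Operator norm induced by the max-norm: the maximal absolute row sum."""
    return max(sum(abs(x) for x in row) for row in A)

def jsr_bounds(mats, k):
    """Lower and upper bounds for the JSR from all m^k products of length k."""
    lower, upper = 0.0, 0.0
    for word in product(mats, repeat=k):
        P = [[1.0, 0.0], [0.0, 1.0]]
        for A in word:
            P = matmul(A, P)
        lower = max(lower, spectral_radius(P) ** (1.0 / k))
        upper = max(upper, inf_norm(P) ** (1.0 / k))
    return lower, upper

A1 = [[1.0, 1.0], [0.0, 1.0]]
A2 = [[1.0, 0.0], [1.0, 1.0]]
for k in (1, 2, 4, 8):
    lo, hi = jsr_bounds([A1, A2], k)
    print(k, round(lo, 4), round(hi, 4))
```

The number of products grows as \(m^k\), so the gap between the two bounds closes slowly relative to the cost. This is one reason why methods based on extremal norms are of interest.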

The Invariant polytope algorithm introduced in [9] makes it possible to find a precise value of \(\rho ({{\mathcal {A}}})\) for a vast majority of matrix families. Its idea traces back to [11, 27]. Recent works [10, 23, 28] develop updated versions of that algorithm which efficiently perform computations in dimensions up to 25 for arbitrary matrices and up to several thousands for non-negative matrices. The main idea of the Invariant polytope algorithm is the following:

First, we find a candidate for the spectrum maximizing product \(\Pi \) of matrices from \({{\mathcal {A}}}\), for which the largest eigenvalue in modulus (the leading eigenvalue) \(\lambda \) is maximal, i.e. \(\left|\lambda \right| = \rho (\Pi )^{1/\left|\Pi \right|}\).

We make the assumption that the leading eigenvalue of \(\Pi \) is unique and simple. Then we construct an extremal norm \(\left\Vert \mathord {\,\cdot \,}\right\Vert \) in \({{\mathbb {R}}}^d\) such that \(\left\Vert A{\varvec{x}}\right\Vert \le \left|\lambda \right| \left\Vert {\varvec{x}}\right\Vert \) for all \(A\in {{\mathcal {A}}}\), \({\varvec{x}}\in {{\mathbb {R}}}^d\). Once such a norm is found, we have proved that \(\rho ({{\mathcal {A}}}) = \left|\lambda \right|\).

If \(\lambda \in {{\mathbb {R}}}\), then the extremal norm is constructed iteratively, starting with the leading eigenvector \({\varvec{v}}\) of \(\Pi \) (i.e. the eigenvector corresponding to the leading eigenvalue), considering its m images \(\lambda ^{-1}A{\varvec{v}}\), \(A\in {{\mathcal {A}}}\), then constructing their \(m^2\) images, etc. To avoid the exponential growth of the number of points we remove all redundant points (those in the convex hull of others) in each iteration. If this process halts after several iterations (no new points appear), then the convex hull of the collected points forms an invariant polytope P, for which \(AP \subset \lambda P\) for all \(A\in {{\mathcal {A}}}\). The Minkowski norm of this polytope is extremal.

If the leading eigenvalue of \(\Pi \) is non-real, then P can be found as a balanced complex polytope (Definition 4.1) by the same iterative procedure starting with complex leading eigenvector \({\varvec{v}}\). This approach was elaborated in [11,12,13] and shown to be efficient for the JSR computation. There were, however, some disadvantages. First of all, this method was mostly applied in low dimensions. Second, for matrix families with complex leading eigenvalue this algorithm suffers, since the assumption of uniqueness of the leading eigenvalue is violated. Indeed, in the latter case, if \(\lambda \) is a leading eigenvalue, then \({\bar{\lambda }}\) is also a leading eigenvalue.

We modify this method by using elliptic polytopes instead of balanced complex polytopes.

2.4 Application 4. Stability of linear switching systems

Extremal norms \(\left\Vert \mathord {\,\cdot \,}\right\Vert \) constructed by means of elliptic polytopes for computing the joint spectral radius play more than an auxiliary role. They are of independent interest as Lyapunov functions for the discrete time linear switching system \({\varvec{x}}(k+1) = A(k){\varvec{x}}(k)\), \(A(k) \in {{\mathcal {A}}}\), \(k \ge 0\), see [1, 6, 15, 20, 26, 31, 32]. For this system, \(\rho ({{\mathcal {A}}})\) has the meaning of the Lyapunov exponent, and the extremal norm \(\left\Vert \mathord {\,\cdot \,}\right\Vert \) is a Lyapunov function. Thus, the Lyapunov function of a discrete time linear switching system is constructed as the Minkowski functional of an elliptic polytope.

3 Problem statement and the description of main results

The following problem, which will be referred to as Problem EE (ellipse in ellipses) is crucial in constructing and studying elliptic polytopes.

Problem EE For given ellipses \(E_0, \ldots , E_N\) in \({{\mathbb {R}}}^d\), decide whether \(E_0 \subset \textrm{co}\,\{E_1,\ldots , E_N\}\).

An efficient solution to Problem EE makes it possible to “clean” every set of ellipses removing redundant ones and leaving only the vertices of an elliptic polytope containing all others. All the applications described in Sect. 2 are based on the use of Problem EE.

Concerning Application 1, an arbitrary norm in \({{\mathbb {C}}}^d\) can be approximated by a polyhedral norm \(\left\Vert {\varvec{x}}\right\Vert = \max _{j}\left|({\varvec{v}}_j, {\varvec{x}})\right|\). Consider the restriction of this norm to \({{\mathbb {R}}}^d\). Solving Problem EE one removes redundant vectors \({\varvec{v}}_k\). A term \(\left|({\varvec{v}}_k, {\varvec{x}})\right|\) is redundant if and only if the ellipse \(E({\varvec{v}}_k)\) is contained in the convex hull of the others \(E({\varvec{v}}_j)\), \(j\ne k\).

In the other applications, solving Problem EE also plays a major role. In the iterative construction of the Lyapunov functions and in the Invariant polytope algorithms, the removal of redundant ellipses in each iteration prevents the exponential growth of the number of ellipses and actually makes those algorithms applicable. Moreover, reducing the number of ellipses makes the Lyapunov function simpler and more convenient for applications.

Our second topic is the algorithmic implementation of the solution of Problem EE. In particular, we aim to modify the algorithm of the joint spectral radius (JSR) computation (Application 3) by using elliptic polytopes instead of complex polytopes. The same construction will be applied for finding invariant Lyapunov functions for switching systems (Application 4).

3.1 Possible approaches

An analogue to Problem EE for usual polytopes is solved by the standard linear programming technique. For elliptic polytopes, we are not aware of any known method. To the best of our knowledge, the only problem considered in the literature, which is related to Problem EE, is the construction of a balanced complex polytope. This technique was developed in [9, 11,12,13,14] for finding extremal Lyapunov functions in \({{\mathbb {C}}}^d\) and for computing the joint spectral radius. It is based on the following fact: An ellipse \(E({\varvec{v}}_0)\) is contained in the convex hull \(\textrm{co}\,\{E({\varvec{v}}_1), \ldots , E({\varvec{v}}_N)\}\) if there exist complex numbers \(z_k\) such that \({\varvec{v}}_0 = \sum _{k=1}^N z_k {\varvec{v}}_k\) and \(\sum _{k=1}^N \left|z_k\right| \le 1\). This condition, however, is only sufficient but not necessary. Moreover, it turns out that for solving Problem EE, this method gives a rather rough approximate solution. We are going to show that its approximation factor is \(\nicefrac {1}{2}\) and this estimate is tight (Theorems 8.2 and 8.3 in Sect. 8). Moreover, it works only if we add the complex conjugate vectors \({\bar{{\varvec{v}}}}_k\) to the set of vectors \({\varvec{v}}_k\), otherwise the approximation factor is zero. That is, we do not obtain even an approximate solution. This aspect has been missed in the recent literature on the joint spectral radius computation.
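A small example shows why the condition is not necessary when the conjugate vectors are left out. The sketch below treats only the case of a single vector \({\varvec{v}}_1\), where the condition reads \({\varvec{v}}_0 = z{\varvec{v}}_1\) with \(\left|z\right| \le 1\); the function names and the sample vectors are ours and are chosen for illustration.

```python
def balanced_coefficient(v0, v1):
    """Least-squares z minimizing |v0 - z*v1|, together with the residual norm."""
    num = sum(w1.conjugate() * w0 for w0, w1 in zip(v0, v1))
    den = sum(abs(w1) ** 2 for w1 in v1)
    z = num / den
    res = sum(abs(w0 - z * w1) ** 2 for w0, w1 in zip(v0, v1)) ** 0.5
    return z, res

def in_cob_single(v0, v1, tol=1e-9):
    """Single-vector case: v0 = z*v1 for some complex z with |z| <= 1."""
    z, res = balanced_coefficient(v0, v1)
    return res < tol and abs(z) <= 1 + tol

v1 = [1 + 0j, 1j]                        # E(v1) is the unit circle in the plane
v0 = [w.conjugate() for w in v1]         # conj(v1) defines the same ellipse as v1
same_ellipse_detected = in_cob_single(v0, v1)
scaled_detected = in_cob_single([0.5j, -0.5], v1)   # this vector equals 0.5i * v1
print(same_ellipse_detected, scaled_detected)
```

Here \(E({\varvec{v}}_0) = E({\varvec{v}}_1)\), so the inclusion holds trivially, yet the test on \({\varvec{v}}_1\) alone fails because \({\bar{{\varvec{v}}}}_1\) is not a complex multiple of \({\varvec{v}}_1\). Adding \({\bar{{\varvec{v}}}}_1\) to the set of vectors removes this particular failure.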

Natural questions arise: how to get a precise solution of Problem EE, and what is the complexity of this problem? What could be done to obtain approximate solutions with better approximation factors? Having answered those questions one can speed up the Invariant polytope algorithm for the joint spectral radius computation, construct extremal Lyapunov functions for discrete time systems that would be easier to define and to compute than those presented in the literature, and address other applications.

3.2 Summary of the main results

We begin with reducing Problem EE to an optimization problem (Sect. 5). The problem is highly non-convex and, therefore, can be hard. Indeed, we show that it is not simpler than the problem of maximizing a quadratic form of rank 2 over a centrally symmetric polyhedron. We conjecture that the latter problem is NP-hard. An argument for that is given in Theorem 5.4: a positive semidefinite quadratic form of rank 2 in \({{\mathbb {R}}}^k\) under O(k) linear constraints may have at least \(2^{k-2}\) points of local maxima.

In low dimensions Problem EE admits a precise solution. This is shown in Sect. 6. Moreover, if the dimension is fixed, then the problem has a polynomial (in the number of ellipses) solution, although hardly realizable for \(d\ge 4\). For higher dimensions we can deal with approximate solutions only (Sect. 7).

The first method we present for the approximate solution of Problem EE is the complex polytope method. The theory of balanced complex polytopes originated with Guglielmi, Zennaro, and Wirth [11,12,13]. This method reduces Problem EE to a conic programming problem. First we observe one aspect missed in the literature: This method does not work, unless we add complex conjugate vectors to all given vectors (Proposition 8.1). After this modification, the method becomes applicable and gives an approximate solution to Problem EE with an approximation factor \(\nicefrac {1}{2}\). This is shown in Theorem 8.2. This factor is sharp and in general cannot be improved as shown in Theorem 8.3. Nevertheless, the empirical estimate obtained for random elliptic polytopes gives the expected value of the approximation factor around \(\nicefrac {1}{\sqrt{2}}\), which is, however, also quite rough. The main advantage of the complex polytope method is that it is simple and computationally cheap. The corresponding numerical results are presented in Sect. 11.

Our second method gives approximate solutions with a factor arbitrarily close to one (the factor 1 corresponds to the precise solution). This is a corner cutting algorithm derived in Sect. 9. By solving k conic programming problems with N constraints, where N is the number of ellipses, we get an approximate solution with an approximation factor of \( 1-\nicefrac {\pi ^2}{2(k+1)^2}\). Already for \(k=3\), we obtain the factor at least \(\nicefrac {1}{\sqrt{2}} \simeq 0.707\), which is better than the one by the polytope method. For \(k = 5\), the factor is approximately 0.923. These are the “worst case estimates” and in practice the corner cutting algorithm reaches a much higher accuracy already for small k.

In Sect. 10 we realize a modification of the corner cutting algorithm to a linear programming problem. This is done by applying the idea of Ben-Tal and Nemirovski of approximating quadrics by projections of higher dimensional polyhedra. The modified method reaches a very high accuracy.

After the numerical results presented in Sect. 11 we illustrate some applications. First of all, the elliptic polytopes allow us to efficiently construct Lyapunov functions for linear dynamical systems even in high dimensions, for which the traditional way of finding a quadratic Lyapunov function by semidefinite programming is hardly feasible. Second, for the computation of the joint spectral radius of matrices, our results speed up the Invariant polytope algorithm in the case of a non-real leading eigenvalue and considerably reduce the number of ellipses defining the extremal Lyapunov function of the system. Finally, in the linear switching systems, the elliptic polytopes provide the invariant (Barabanov) norms.

4 Preliminary facts and notation

Throughout the paper we denote vectors by bold letters and numbers by standard letters. Thus \({\varvec{x}}= (x_1, \ldots , x_d)^T \in {{\mathbb {R}}}^d\). As usual, for two complex vectors \({\varvec{v}}, {\varvec{u}}\in {{\mathbb {C}}}^d\), their scalar product is \(({\varvec{v}}, {\varvec{u}}) = \sum _{k=1}^d v_k {{\bar{u}}}_k\). For two real vectors \({\varvec{a}}, {\varvec{b}}\), we consider the ellipse

\[ E({\varvec{a}}, {\varvec{b}}) = \bigl \{ {\varvec{a}}\cos t + {\varvec{b}}\sin t : \ t \in {{\mathbb {R}}}\bigr \}. \]

This is an ellipse with conjugate radii (the halves of conjugate diameters) \({\varvec{a}}, {\varvec{b}}\). For a complex vector \({\varvec{v}}= {\varvec{a}}+ i{\varvec{b}}\) with real \({\varvec{a}}\) and \({\varvec{b}}\), we write \(E({\varvec{v}}) = E({\varvec{a}},{\varvec{b}})\). For every \(s \in {{\mathbb {R}}}\), the real and imaginary parts of the vector \(e^{- i s}{\varvec{v}}= {\varvec{a}}_s + i{\varvec{b}}_s\) are conjugate directions of the same ellipse and, therefore, \(E({\varvec{a}}_s, {\varvec{b}}_s) = E({\varvec{a}}, {\varvec{b}})\) for all \(s \in {{\mathbb {R}}}\). Indeed,

\[ e^{-is}{\varvec{v}}= (\cos s - i \sin s)({\varvec{a}}+ i {\varvec{b}}) = ({\varvec{a}}\cos s + {\varvec{b}}\sin s) + i\,({\varvec{b}}\cos s - {\varvec{a}}\sin s), \]

hence, \({\varvec{a}}_s={\varvec{a}}\cos s + {\varvec{b}}\sin s \) and \({\varvec{b}}_s = {\varvec{b}}\cos s - {\varvec{a}}\sin s\). Therefore, \({\varvec{a}}_s \cos t + {\varvec{b}}_s \sin t = {\varvec{a}}\cos (t+s) + {\varvec{b}}\sin (t+s)\). The pair \(({\varvec{a}}_s, {\varvec{b}}_s)\) is the image of \(({\varvec{a}}, {\varvec{b}})\) after the elliptic rotation by the angle s along the ellipse \( E = E({\varvec{a}}, {\varvec{b}})\). Consequently, the vectors \({\varvec{a}}_s, {\varvec{b}}_s\) are also conjugate directions of the ellipse E.

Elliptic polytopes are real parts of the so-called balanced complex polytopes defined as follows:

Definition 4.1

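A balanced convex hull of a set \(K \subset {{\mathbb {C}}}^d\) is

\[ \textrm{cob}\,K = \Bigl \{ \sum _{k=1}^n z_k {\varvec{v}}_k : \ n \in {{\mathbb {N}}}, \ {\varvec{v}}_k \in K, \ z_k \in {{\mathbb {C}}}, \ \sum _{k=1}^n \left|z_k\right| \le 1 \Bigr \}. \]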

A balanced convex set is a subset of \({{\mathbb {C}}}^d\) that coincides with its balanced convex hull, a balanced complex polytope is the balanced convex hull of a finite set of points.

If G is a balanced complex polytope, then \(\mathfrak {Re}\,G\) is a convex hull of ellipses. Indeed, if \(G=\textrm{cob}\,\{{\varvec{v}}_1, \ldots , {\varvec{v}}_N\}\) and \({\varvec{a}}_k = \mathfrak {Re}\,{\varvec{v}}_k\), \({\varvec{b}}_k = \mathfrak {Im}\,{\varvec{v}}_k\), \(k = 1, \ldots , N\), then for arbitrary complex numbers \(z_k = r_k e^{-it_k},\) where \(r_k = \left|z_k\right|\), \(k = 1, \ldots , N\), we have \( \mathfrak {Re}\,(z_k {\varvec{v}}_k) = r_k \bigl ( {\varvec{a}}_k \cos t_k + {\varvec{b}}_k \sin t_k \bigr ) \), and hence, the set \(\mathfrak {Re}\,G\) consists precisely of the points \(\sum _k r_k {\varvec{u}}_k \) with \({\varvec{u}}_k \in E({\varvec{v}}_k)\) and \(\sum _k r_k \le 1\). This is \(\textrm{co}\,\{E({\varvec{v}}_1), \ldots , E({\varvec{v}}_N)\}\).
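The identity behind this argument can be verified numerically. The following sketch draws random vectors \({\varvec{v}}_k\) and coefficients \(z_k = r_k e^{-it_k}\) with \(\sum _k r_k = 1\), then compares the real part of \(\sum _k z_k {\varvec{v}}_k\) with the corresponding combination of ellipse points. The dimensions, the random seed, and the variable names are arbitrary choices made for this illustration.

```python
import cmath
import math
import random

random.seed(0)
d, N = 3, 4
vs = [[complex(random.uniform(-1, 1), random.uniform(-1, 1)) for _ in range(d)]
      for _ in range(N)]

# Random coefficients z_k = r_k * exp(-i t_k), rescaled so that sum r_k = 1.
rs = [random.random() for _ in range(N)]
total = sum(rs)
rs = [r / total for r in rs]
ts = [random.uniform(0, 2 * math.pi) for _ in range(N)]
zs = [r * cmath.exp(-1j * t) for r, t in zip(rs, ts)]

# Left-hand side: real part of the balanced combination sum_k z_k v_k.
lhs = [sum(z * v[j] for z, v in zip(zs, vs)).real for j in range(d)]

# Right-hand side: sum_k r_k u_k with u_k = a_k cos t_k + b_k sin t_k in E(v_k).
rhs = [sum(r * (v[j].real * math.cos(t) + v[j].imag * math.sin(t))
           for r, t, v in zip(rs, ts, vs))
       for j in range(d)]

max_gap = max(abs(x - y) for x, y in zip(lhs, rhs))
print(max_gap)
```

The two sides agree up to rounding error, in line with the computation of the real part of \(z_k {\varvec{v}}_k\) above.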

Note that the balanced polytopes G and \({{\bar{G}}} = \{{\bar{{\varvec{v}}}}: {\varvec{v}}\in G\}\) have the same real parts and, hence, generate the same elliptic polytope P. Therefore, P does not change if we replace G by \(\textrm{cob}\,\{G, {{\bar{G}}}\}\). In what follows, unless otherwise stated, we assume that the balanced complex polytope is symmetric with respect to conjugation, i.e. \(G = {{\bar{G}}}\). Clearly, this holds when the set of vertices is self-conjugate.

Let \(P\subset {{\mathbb {R}}}^d\) be compact, convex, with non-empty interior and such that \(tP\subset P\) for all \(\left|t\right|\le 1\). We denote by \(\left\Vert \mathord {\,\cdot \,}\right\Vert _P\) the vector norm whose unit ball is P.

Remark 4.2

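The imaginary part of a balanced complex polytope is the same elliptic polytope P. Indeed,

\[ \mathfrak {Im}\,\sum _k z_k {\varvec{v}}_k = \mathfrak {Re}\,\Bigl ( -i \sum _k z_k {\varvec{v}}_k \Bigr ) = \mathfrak {Re}\,\sum _k {\tilde{z}}_k {\varvec{v}}_k \]

where \({\tilde{z}}_k = -i z_k \in {{\mathbb {C}}}\), so that \(\left|{\tilde{z}}_k\right| = \left|z_k\right|\). We see that the set \(\mathfrak {Im}\,G\) consists of the points \(\sum _k r_k {\varvec{u}}_k \) with \({\varvec{u}}_k \in E({\varvec{v}}_k) =: E_k\) and \(\sum _k r_k \le 1\), and thus, \(\mathfrak {Im}\,G = P = \mathfrak {Re}\,G\). Of course, the same is true for an arbitrary balanced convex set: its real and imaginary parts coincide.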

5 Equivalent optimization problems and their complexity

To analyse the complexity and possible solutions of Problem EE we reformulate it as an optimization problem.

5.1 Reformulation of Problem EE

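Let \(P = \textrm{co}\,\{E_1, \ldots , E_N\}\) be an elliptic polytope, where \(E_k = E({\varvec{a}}_k, {\varvec{b}}_k)\), \(k = 0, \ldots , N\). The ellipse \(E_0\) is not contained in P if and only if P possesses a hyperplane of support that intersects \(E_0\) at two points. For the outward normal vector \({\varvec{x}}\in {{\mathbb {R}}}^d\) of that hyperplane, we have

\[ \max _{{\varvec{u}}\in E_0} ({\varvec{x}}, {\varvec{u}}) > \max _{{\varvec{u}}\in P} ({\varvec{x}}, {\varvec{u}}). \]

Note that

\[ \max _{t \in {{\mathbb {R}}}} \, ({\varvec{x}}, {\varvec{a}}\cos t + {\varvec{b}}\sin t) = \sqrt{({\varvec{x}}, {\varvec{a}})^2 + ({\varvec{x}}, {\varvec{b}})^2}. \]

Therefore,

\[ \max _{{\varvec{u}}\in P} ({\varvec{x}}, {\varvec{u}}) = \max _{k = 1, \ldots , N} \sqrt{({\varvec{x}}, {\varvec{a}}_k)^2 + ({\varvec{x}}, {\varvec{b}}_k)^2}. \]

Thus, the assertion \(E_0 \not \subset P\) is equivalent to the existence of a solution \({\varvec{x}}\in {{\mathbb {R}}}^d\) for the system of inequalities

\[ ({\varvec{x}}, {\varvec{a}}_0)^2 + ({\varvec{x}}, {\varvec{b}}_0)^2 > ({\varvec{x}}, {\varvec{a}}_k)^2 + ({\varvec{x}}, {\varvec{b}}_k)^2, \qquad k = 1, \ldots , N. \tag{4} \]

Normalizing the vector \({\varvec{x}}\), it can be assumed that \(({\varvec{x}}, {\varvec{a}}_0)^2 + ({\varvec{x}}, {\varvec{b}}_0)^2 = 1 + \varepsilon \) where \(\varepsilon > 0\) is a small number, in which case the system (4) takes the form \(({\varvec{x}}, {\varvec{a}}_k)^2 + ({\varvec{x}}, {\varvec{b}}_k)^2 \le 1, \ k=1, \ldots , N\). Thus, we have proved: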

Theorem 5.1

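Problem EE is equivalent to the following optimization problem:

\[ \left\{ \begin{array}{l} ({\varvec{x}}, {\varvec{a}}_0)^2 + ({\varvec{x}}, {\varvec{b}}_0)^2 \rightarrow \max \\ ({\varvec{x}}, {\varvec{a}}_k)^2 + ({\varvec{x}}, {\varvec{b}}_k)^2 \le 1, \quad k = 1, \ldots , N \end{array} \right. \tag{5} \]

with d variables \((x_1, \ldots , x_d)^T = {\varvec{x}}\) and given vectors \({\varvec{a}}_k, {\varvec{b}}_k \in {{\mathbb {R}}}^d\). The inclusion \(E_0 \subset \textrm{co}\,\{E_1, \ldots , E_N\}\) holds if and only if the optimal value does not exceed 1.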

Therefore, we need to maximize a positive semidefinite quadratic form of rank two on the intersection of cylinders. Problem EE is hard because it is a maximization problem: although all the functions involved are convex and the feasible set is convex, maximizing a convex function over a convex set is in general a non-convex problem.
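The reformulation suggests a simple one-sided test, sketched below under our own naming. For any vector \({\varvec{x}}\), the ratio of \(({\varvec{x}}, {\varvec{a}}_0)^2 + ({\varvec{x}}, {\varvec{b}}_0)^2\) to the largest of the values \(({\varvec{x}}, {\varvec{a}}_k)^2 + ({\varvec{x}}, {\varvec{b}}_k)^2\) is a lower bound for the optimal value of the problem. A ratio greater than 1 therefore certifies \(E_0 \not \subset P\), whereas a random search that finds no such vector is inconclusive. The random search is a heuristic of ours, not one of the methods of this paper, and the example (two segments spanning the square \(\left|x\right| + \left|y\right| \le 1\), and a circle) is an assumption chosen because the answer is known.

```python
import math
import random

def q(x, ellipse):
    """(x, a)^2 + (x, b)^2: the squared support function of E(a, b) at x."""
    a, b = ellipse
    xa = sum(xi * ai for xi, ai in zip(x, a))
    xb = sum(xi * bi for xi, bi in zip(x, b))
    return xa * xa + xb * xb

def ratio(x, e0, others):
    """q_0(x) / max_k q_k(x); a value > 1 certifies that E_0 is not inside P."""
    top = q(x, e0)
    bottom = max(q(x, e) for e in others)
    if bottom == 0.0:
        return math.inf if top > 0.0 else 0.0
    return top / bottom

def random_search(e0, others, d, trials=2000, seed=0):
    """Best ratio over random Gaussian directions (a lower bound on the optimum)."""
    rng = random.Random(seed)
    best = 0.0
    for _ in range(trials):
        x = [rng.gauss(0, 1) for _ in range(d)]
        best = max(best, ratio(x, e0, others))
    return best

def circle(r):
    """Circle of radius r in the plane, written as E(a, b)."""
    return ((r, 0.0), (0.0, r))

# Two degenerate ellipses (segments); their convex hull is |x| + |y| <= 1.
P = [((1.0, 0.0), (0.0, 0.0)), ((0.0, 1.0), (0.0, 0.0))]

print(ratio([1.0, 1.0], circle(0.8), P))     # certificate for the larger circle
print(random_search(circle(0.6), P, d=2))    # stays below 1: no certificate found
```

In this example the ratio can never exceed \(2r^2\), so for \(r = 0.6\) no certificate exists, while for \(r = 0.8\) the vector \((1, 1)^T\) is one.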

5.2 The complexity of Problem EE

Maximization of a convex function over a convex set is usually non-trivial. Problem EE, and its reformulation (5), do not seem to be an exception. Moreover, the feasible domain is defined by N quadratic inequalities in \({{\mathbb {R}}}^d\) and does not look simple either. Geometrically this is an intersection of N elliptic cylinders in \({{\mathbb {R}}}^d\) with two-dimensional bases. The following result sheds some light on the complexity of this problem and, hence, on the complexity of Problem EE.

Theorem 5.2

Maximization of a positive semidefinite quadratic form of rank two over a centrally symmetric polyhedron defined by 2N linear inequalities in \({{\mathbb {R}}}^d\) can be reduced to Problem EE.

Proof

An origin-symmetric polyhedron is defined by N inequalities of the form \({({\varvec{x}}, {\varvec{h}}_k)^2 \le 1}\). Choosing arbitrary numbers \(t_1, \ldots , t_N \in \bigl (0, \frac{\pi }{2} \bigr )\), we set \({\varvec{a}}_k = {\varvec{h}}_k\cos t_k, \ {\varvec{b}}_k = {\varvec{h}}_k\sin t_k \). Then \(({\varvec{x}}, {\varvec{a}}_k)^2 + ({\varvec{x}}, {\varvec{b}}_k)^2 = ({\varvec{x}}, {\varvec{h}}_k)^2\), so the polyhedron is defined by the system of constraints of the reformulation (5). Finally, every positive semidefinite quadratic form of rank two can be written as \(({\varvec{x}}, {\varvec{a}}_0)^2 + ({\varvec{x}}, {\varvec{b}}_0)^2\) for suitable \({\varvec{a}}_0, {\varvec{b}}_0\), which completes the proof. \(\square \)

Conjecture 5.3

Maximizing a positive semidefinite quadratic form of rank two over a centrally symmetric polyhedron is NP-hard.

Let us recall that the problem of maximizing a positive semidefinite quadratic form over a polyhedron is NP-hard even if that polyhedron is a unit cube, since it is not simpler than the Max-Cut problem [7, 16]. Moreover, even its approximate solution is NP-hard [17]. However, the rank two assumption may significantly simplify it. For example, the complexity of this problem on the unit cube becomes not only polynomial, but linear with respect to Nd. It is reduced to finding the diameter of a flat zonotope, see [5] for more results on this and related problems. Nevertheless, we believe in the high complexity of this problem. One argument for that is a large number of local extrema. The following theorem states that if we drop the assumption of symmetry of the polyhedron, then the number of local maxima with different values of the function can be exponential.

Theorem 5.4

For each \(N\ge 2\) there exists a polyhedron in \({{\mathbb {R}}}^N\) with fewer than 2N facets and a positive semidefinite quadratic form of rank two on that polyhedron which has at least \(2^{N-2}\) points of local maxima with different values of the function.

The proof is in the Appendix.

On the other hand, as we shall see in the next section, in low dimensions Problem EE admits efficient solutions.

6 Problem EE in low dimensions

In dimensions \(d=2, 3\), Problem EE can be efficiently solved. The solution in the two-dimensional case is simple, the three-dimensional case is computationally harder.

6.1 The dimension \(d=2\)

In the two-dimensional plane the solvability of the system (4) is explicitly decidable, which solves Problem EE.

Proposition 6.1

In the case \(d=2\), Problem EE admits an explicit solution for arbitrary ellipses \(E_0, \ldots , E_N\). The complexity of the solution is linear in N.

The proof is constructive and gives the method for the solution.

Proof

Denote \({\varvec{x}}= (x, y)^T\) and rewrite the inequalities (4) in coordinates. After simplifications we get \(A_ky^2 + 2B_kxy + C_kx^2 > 0\), \(k = 1, \ldots , N\), where \(A_k, B_k, C_k\) are known coefficients. The set of solutions to the kth inequality is \(\frac{y}{x} \in I_k\), where \(I_k\) is either the interval with ends at the roots of the quadratic equation \(A_k t^2 + 2B_kt + C_k = 0\), if \(A_k < 0\) (if there are no real roots, then \(I_k = \emptyset \)); or the union of two open rays with the same roots, if \(A_k > 0\) (if there are no real roots, then \(I_k = {{\mathbb {R}}}\)); or one ray if \(A_k = 0, B_k \ne 0\); the other cases are trivial. Then the solution of the system (4) consists of points \({\varvec{x}}= (x, y)^T\) such that the ratio \( \frac{y}{x}\) belongs to the intersection \(\bigcap \nolimits _{k=1, \ldots , N} I_k\). Hence, \(E_0 \subset \textrm{co}\,\{E_1, \ldots , E_N\}\) if and only if this intersection is empty, i.e. \(\bigcap \nolimits _{k=1, \ldots , N} I_k = \emptyset \). \(\square \)
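The planar test can be sketched in code. The version below is a variant of the argument in the proof, not a transcription of it: instead of the ratio \(\frac{y}{x}\) it uses the angle of the vector \({\varvec{x}} = (\cos \theta , \sin \theta )^T\), which avoids a separate treatment of \(x = 0\). Each inequality then takes the form \(\alpha + \beta \cos 2\theta + \gamma \sin 2\theta > 0\), and it suffices to test the midpoints of the arcs between consecutive roots. For simplicity this sketch checks every midpoint against every inequality, so it runs in \(O(N^2)\) time rather than the linear time stated in the proposition. All function names and the tolerance are our assumptions.

```python
import math

def gram(ellipse):
    """Entries (m11, m12, m22) of a a^T + b b^T for an ellipse E(a, b) in the plane."""
    (a1, a2), (b1, b2) = ellipse
    return a1 * a1 + b1 * b1, a1 * a2 + b1 * b2, a2 * a2 + b2 * b2

def contained_2d(e0, others, tol=1e-12):
    """Return True if E_0 lies in co{E_1, ..., E_N} for d = 2.

    With x = (cos th, sin th), the k-th inequality of the system reads
    g_k(th) = alpha + beta*cos(2 th) + gamma*sin(2 th) > 0.  All g_k keep
    their sign between consecutive roots, so the system is solvable iff
    it holds at the midpoint of some arc between consecutive roots on [0, pi).
    """
    m0 = gram(e0)
    coeffs, roots = [], []
    for e in others:
        mk = gram(e)
        d11, d12, d22 = m0[0] - mk[0], m0[1] - mk[1], m0[2] - mk[2]
        alpha, beta, gamma = (d11 + d22) / 2, (d11 - d22) / 2, d12
        coeffs.append((alpha, beta, gamma))
        r = math.hypot(beta, gamma)
        if r > 0 and abs(alpha) <= r:
            # Solve r*cos(2 th - phi) = -alpha for th modulo pi.
            phi = math.atan2(gamma, beta)
            delta = math.acos(max(-1.0, min(1.0, -alpha / r)))
            roots += [((phi + delta) / 2) % math.pi, ((phi - delta) / 2) % math.pi]
    roots.sort()
    if roots:
        tests = [(u + w) / 2 for u, w in zip(roots, roots[1:])]
        # Arc that wraps around from the last root to the first one.
        tests.append(((roots[-1] + roots[0] + math.pi) / 2) % math.pi)
    else:
        tests = [0.0]

    def g(c, th):
        return c[0] + c[1] * math.cos(2 * th) + c[2] * math.sin(2 * th)

    separable = any(all(g(c, th) > tol for c in coeffs) for th in tests)
    return not separable

# Two segments spanning |x| + |y| <= 1, and circles of radius 0.6 and 0.8.
P = [((1.0, 0.0), (0.0, 0.0)), ((0.0, 1.0), (0.0, 0.0))]
print(contained_2d(((0.6, 0.0), (0.0, 0.6)), P))
print(contained_2d(((0.8, 0.0), (0.0, 0.8)), P))
```

A circle of radius r centred at the origin fits into this square exactly when \(r \le \nicefrac {1}{\sqrt{2}}\), which separates the two test cases. Sorting the roots and sweeping once over the arcs would bring the cost down to \(O(N \log N)\).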

6.2 The three dimensional case

In three dimensions the solvability of the system (4) is also explicitly decidable, but much harder than in dimension 2.

Proposition 6.2

For \(d=3\) and arbitrary ellipses \(E_0, \ldots , E_N\), Problem EE is reduced to solving \(O(N^2)\) bivariate systems of quadratic equations.

Proof

Denote \({\varvec{x}}= (x, y, z)^T\) and rewrite the inequalities (4) in coordinates. This is a system of homogeneous inequalities of degree 2. After the division by \(z^2\), we get a system of quadratic inequalities \(f_i(x, y) < 0, \ i = 1, \ldots , N\). It is compatible precisely when so is the system \(f_i(x, y) + \varepsilon \le 0, \ i = 1, \ldots , N\), for some small \(\varepsilon > 0\). Denote by \({{\mathcal {D}}}\) the set of its solutions and assume it is non-empty. This is a closed subset of \({{\mathbb {R}}}^2\) bounded by arcs of the quadrics \(\Gamma _i = \bigl \{(x, y)^T \in {{\mathbb {R}}}^2: f_i(x,y) + \varepsilon = 0\bigr \}\). The point of \({{\mathcal {D}}}\) closest to the origin belongs to one of three sets: 1) the origin itself; 2) points of pairwise intersections \(\Gamma _i \cap \Gamma _j\), \(i\ne j\); 3) the points of \(\Gamma _i\) closest to the origin, \(i = 1, \ldots , N\). If some of those quadrics coincide or are circles centred at the origin, then we reduce the number of quadrics by the standard argument. Otherwise, the set 2 contains at most \(4\cdot \frac{N(N-1)}{2}= 2N(N-1)\) points; the set 3 contains at most 4N points. Hence, if \({{\mathcal {D}}}\) is non-empty, then it contains one of the points of the sets 1, 2, 3. Thus, to decide if \({{\mathcal {D}}}\) is empty or not, one needs to take each of those \(2N(N-1) + 4N +1=2N^2 + 2N +1\) points and check whether it belongs to \({{\mathcal {D}}}\), i.e. satisfies all the inequalities \(f_i(x, y) + \varepsilon \le 0, \ i = 1, \ldots , N\). If the answer is affirmative for at least one point, then system (4) is compatible and \(E_0 \not \subset P\), otherwise \(E_0 \subset P\).

Finding each of those \(O(N^2)\) points, except for the first one, is done by solving a system of two quadratic equations. \(\square \)

6.3 Problem EE in a fixed dimension

Similarly to Proposition 6.2, one can show that Problem EE in \({{\mathbb {R}}}^d\) is reduced to \(O(N^{d-1})\) systems of d quadratic equations with d variables. The complexity of this problem is formally polynomial in N, with the degree depending on d. However, the method used in the case \(d=3\) (the exhaustion of points of intersections and of points minimizing the distance to the origin) becomes impractical for higher dimensions.

7 Approximate solutions

Apart from the low-dimensional cases, most likely, no efficient algorithms exist to obtain an explicit solution of Problem EE. That is why we are interested in approximate solutions with a given relative error (approximation factor) according to the following definition:

Definition 7.1

A method solves Problem EE approximately with a factor \(q \in [0,1]\) if it decides between two cases: either \(E_0 \not \subset P \) or \(q E_0 \subset P\).

So, the extreme case \(q=1\) corresponds to a precise solution, while the other extreme case \(q=0\) means that the method does not give any approximate solution. We consider two methods. The first one is based on the construction of a balanced complex polytope. Such polytopes were analysed in depth in [11, 13, 14]. At first sight, the results of those works give a straightforward solution to Problem EE. However, this is not the case. We are going to show that the balanced complex polytope method provides only an approximate solution with the factor \(q=\nicefrac {1}{2}\) and this value cannot be improved. Moreover, this approximation is attained only after a slight modification of this method; otherwise the approximation factor may drop to zero. Then we introduce the second method, which provides a better approximation (with the factor q arbitrarily close to 1, i.e. to the precise solution).

8 The complex polytope method

Given an elliptic polytope \(P = \textrm{co}\,\{E_1,\ldots ,E_N\}\) and an ellipse \(E_0\), we need to decide whether \(E_0 \subset P\). For each ellipse \(E_k\), we choose arbitrary conjugate radii \({\varvec{a}}_k, {\varvec{b}}_k\), thus \(E_k = E_k({\varvec{a}}_k, {\varvec{b}}_k), \ k = 0, \ldots , N\). Define \({\varvec{v}}_k = {\varvec{a}}_k + i {\varvec{b}}_k \) and consider the balanced complex polytope \(G = \textrm{cob}\,\{{\varvec{v}}_1, \ldots , {\varvec{v}}_N\} = \bigl \{\sum _{k=1}^N z_k {\varvec{v}}_k: \ z_k \in {{\mathbb {C}}}, \ \sum _{k=1}^N |z_k| \le 1\bigr \}\).

To get an approximate solution of Problem EE we consider the following auxiliary problem:

Problem EE* For points \({\varvec{v}}_0, \ldots , {\varvec{v}}_N \in {{\mathbb {C}}}^d\), decide whether or not \({\varvec{v}}_0 \in \textrm{cob}\,\{{\varvec{v}}_1, \ldots , {\varvec{v}}_N\}\).

What is the relation between Problem EE and EE*? Clearly, if \({\varvec{v}}_0 \in G\), then \(E_0 \subset P\). Indeed, if \({\varvec{v}}_0 \in G\), then \(e^{it}{\varvec{v}}_0 \in G\) for all \(t \in {{\mathbb {R}}}\), hence \(E_0 = Re \{e^{it}{\varvec{v}}_0, \ t \in {{\mathbb {R}}}\} \subset Re G\). However, the converse is, in general, not true and Problem EE* is not equivalent to Problem EE. Moreover, Problem EE* does not even provide an approximate solution to Problem EE with a positive factor. This means that the assertion \(E_0 \subset P\) does not imply the existence of a positive q such that \(q{\varvec{v}}_0 \in G\).

Proposition 8.1

Problem EE* gives an approximate solution to Problem EE with the factor \(q=0\).

Proof

Let \({\varvec{a}}_0 = (1,0)^T\) and \({\varvec{b}}_0 = (0,1)^T\), and \({\varvec{a}}_1 = {\varvec{b}}_0, {\varvec{b}}_1 = {\varvec{a}}_0\). Clearly, \(E_0\) and \(E_1\) both coincide with the unit disc, hence P is also a unit disc and \(E_0 \subset P\). On the other hand, no positive number q exists such that \(q{\varvec{v}}_0 \in G\). Indeed, \(G = \{z {\varvec{v}}_1: \left|z\right| \le 1\}\). Denote \(z = t + i u\). If \(q{\varvec{v}}_0 = z{\varvec{v}}_1\), then \( q{\varvec{v}}_0 = t {\varvec{a}}_1 - u {\varvec{b}}_1 + i (t {\varvec{b}}_1 + u {\varvec{a}}_1) = t {\varvec{b}}_0 - u {\varvec{a}}_0 + i (t {\varvec{a}}_0 + u {\varvec{b}}_0). \) Hence, \( q {\varvec{a}}_0 = t {\varvec{b}}_0 - u {\varvec{a}}_0 \quad \text {and}\quad q{\varvec{b}}_0 = t {\varvec{a}}_0 + u {\varvec{b}}_0, \) which is coordinatewise \((q, 0) = (-u, t)\) and \((0, q) = (t, u)\). The first equality gives \(q = -u\) and \(t = 0\), the second gives \(q = u\). Therefore, \(q = t = u = 0\). \(\square \)
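The counterexample is easy to verify numerically. The sketch below is only an illustration; the names `V0`, `V1`, `real_orbit_radii` and `determinant` are ours. It checks that the real parts of both orbits \(e^{it}{\varvec{v}}_0\) and \(e^{it}{\varvec{v}}_1\) run over the unit circle, and that the homogeneous linear system \(q{\varvec{v}}_0 - z{\varvec{v}}_1 = 0\) in the unknowns q, z has a nonzero determinant, so its only solution is \(q = z = 0\).

```python
import cmath
import math

# v0 = a0 + i*b0 with a0 = (1, 0), b0 = (0, 1)
V0 = (1 + 0j, 0 + 1j)
# v1 = a1 + i*b1 with a1 = b0, b1 = a0
V1 = (0 + 1j, 1 + 0j)


def real_orbit_radii(v, samples=360):
    """Euclidean norms of Re(e^{it} v) for equally spaced t (illustrative)."""
    radii = []
    for k in range(samples):
        phase = cmath.exp(1j * 2.0 * math.pi * k / samples)
        point = [(phase * c).real for c in v]
        radii.append(math.hypot(point[0], point[1]))
    return radii


def determinant(v, w):
    """Determinant of the 2x2 complex matrix with columns v and -w."""
    return v[0] * (-w[1]) - (-w[0]) * v[1]


if __name__ == "__main__":
    print(max(real_orbit_radii(V0)), max(real_orbit_radii(V1)))
    print(determinant(V0, V1))  # nonzero, so q*v0 = z*v1 forces q = z = 0
```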

Thus, Problem EE* does not give an approximate solution to Problem EE. Nevertheless, under an extra assumption that G is self-conjugate, it does provide an approximate solution with the factor \(\nicefrac {1}{2}\). This factor is tight and cannot be improved. This follows from Theorems 8.2 and 8.3 proved below. Before formulating them, we briefly discuss the practical issue.

To solve Problem EE* we consider a self-conjugate balanced complex polytope \(G = \textrm{cob}\,\{{\varvec{v}}_k,\bar{{\varvec{v}}}_k: k = 1, \ldots N\}\). As we noted in Remark 4.2, it has the same real part P as the balanced polytope \(G = \textrm{cob}\,\{{\varvec{v}}_k: k = 1, \ldots , N\}\). Problem EE* is solved for G by the following optimization problem:

This problem finds the biggest \(t_0\) such that \(t_0{\varvec{v}}_0\) is a balanced complex combination of the points \({\varvec{v}}_1, \ldots , {\varvec{v}}_N, {\bar{{\varvec{v}}}}_1, \ldots , {\bar{{\varvec{v}}}}_N\). The coefficients of this combination are \(z_k = t_k + i u_k\), \(k = 1, \ldots , 2N\), the points \({\varvec{v}}_k, {\bar{{\varvec{v}}}}_k\) correspond to the coefficients \(z_k, z_{k+N}\) respectively. This is a convex conic programming problem with variables \(t_0, t_k, u_k\), where \(k = 1, \ldots , 2N\). It is solved by the interior point method on Lorentz cones. If \(t_0 \ge 1\), then \({\varvec{v}}_0 \in G\) and vice versa.

In Sect. 11 we present numerical results showing that the problem is solved efficiently in relatively low dimensions (2 to 20) and for up to 2000 ellipses.

Now we are going to see that if \({\varvec{v}}_0 \notin G\), then \(E_0 \not \subset \frac{1}{2} P\). Dividing by two, we obtain an approximate solution to Problem EE with the factor at least \(\nicefrac {1}{2}\): if \(\frac{1}{2} {\varvec{v}}_0 \notin G\), then \(E_0 \not \subset P\), otherwise, if \(\frac{1}{2} {\varvec{v}}_0 \in G\), then \(\frac{1}{2} E_0 \subset P\).

Theorem 8.2

If the set of vertices of G is self-conjugate, then a precise solution of Problem EE* gives an approximate solution to Problem EE with the factor \(q\ge \frac{1}{2}\).

Proof

It suffices to show that if \({\varvec{v}}_0 \notin G\), then \(E_0 \not \subset \frac{1}{2} P\). If a point \({\varvec{v}}_0 = {\varvec{a}}_0 + i {\varvec{b}}_0\) does not belong to G, then it can be separated from G by a nonzero functional \({\varvec{c}}= {\varvec{x}}+ i{\varvec{y}}\), which means \( Re ({\varvec{c}}, {\varvec{v}}_0) > \sup _{{\varvec{v}}\in G} Re ({\varvec{c}}, {\varvec{v}}). \) Rewriting the scalar product in the left-hand side we obtain \( ({\varvec{x}}, {\varvec{a}}_0) - ({\varvec{y}}, {\varvec{b}}_0) > \sup _{{\varvec{v}}\in G} \ Re ({\varvec{c}}, {\varvec{v}}). \) Note that \(e^{-it}{\varvec{v}}\in G\) for all \(t \in {{\mathbb {R}}}\). Substituting this for \({\varvec{v}}\) in the right-hand side, we get \( ({\varvec{x}}, {\varvec{a}}_0) - ({\varvec{y}}, {\varvec{b}}_0)\ > \sup _{{\varvec{v}}\in G, \ t \in {{\mathbb {R}}}} \ Re ({\varvec{c}}, e^{-it}{\varvec{v}}). \) Since, for \({\varvec{v}}= {\varvec{a}}+ i{\varvec{b}}\), \(Re ({\varvec{c}}, e^{-it}{\varvec{v}}) = \big (({\varvec{x}}, {\varvec{a}}) - ({\varvec{y}}, {\varvec{b}})\big )\cos t + \big (({\varvec{x}}, {\varvec{b}}) + ({\varvec{y}}, {\varvec{a}})\big )\sin t \) and the supremum of this value over all \(t \in {{\mathbb {R}}}\) is equal to \(\sqrt{\bigl (({\varvec{x}}, {\varvec{a}}) - ({\varvec{y}}, {\varvec{b}})\bigr )^2 + \bigl (({\varvec{x}}, {\varvec{b}}) + ({\varvec{y}}, {\varvec{a}})\bigr )^2}\),

we conclude that, for every point \({\varvec{a}}+ i{\varvec{b}}\in G\), \(({\varvec{x}}, {\varvec{a}}_0) - ({\varvec{y}}, {\varvec{b}}_0) > \sqrt{\bigl (({\varvec{x}}, {\varvec{a}}) - ({\varvec{y}}, {\varvec{b}})\bigr )^2 + \bigl (({\varvec{x}}, {\varvec{b}}) + ({\varvec{y}}, {\varvec{a}})\bigr )^2} \qquad (8)\)

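Since G is symmetric with respect to conjugation, we have \(\bar{\varvec{v}}\in G\) and, hence, \(i {\bar{{\varvec{v}}}}={\varvec{b}}+ i{\varvec{a}}\in G\). Hence, one can interchange \({\varvec{a}}\) and \({\varvec{b}}\) in (8) and get \(({\varvec{x}}, {\varvec{a}}_0) - ({\varvec{y}}, {\varvec{b}}_0) > \sqrt{\bigl (({\varvec{x}}, {\varvec{b}}) - ({\varvec{y}}, {\varvec{a}})\bigr )^2 + \bigl (({\varvec{x}}, {\varvec{a}}) + ({\varvec{y}}, {\varvec{b}})\bigr )^2} \qquad (9)\)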

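If \(-({\varvec{x}}, {\varvec{a}}) \cdot ({\varvec{y}}, {\varvec{b}}) + ({\varvec{x}}, {\varvec{b}}) \cdot ({\varvec{y}}, {\varvec{a}}) \ge 0\), then (8) yields \(({\varvec{x}}, {\varvec{a}}_0) - ({\varvec{y}}, {\varvec{b}}_0) > \sqrt{({\varvec{x}}, {\varvec{a}})^2 + ({\varvec{x}}, {\varvec{b}})^2 + ({\varvec{y}}, {\varvec{a}})^2 + ({\varvec{y}}, {\varvec{b}})^2} \qquad (10)\)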

Otherwise, if \(\ - ({\varvec{x}}, {\varvec{a}}) \cdot ({\varvec{y}}, {\varvec{b}}) + ({\varvec{x}}, {\varvec{b}}) \cdot ({\varvec{y}}, {\varvec{a}}) \le 0\), then we apply (9) and arrive at the same inequality (10). Since inequality (10) is strict, we square both of its sides and obtain that there exists \(\varepsilon > 0\) such that

Denote by \({\varvec{p}}\) the vector from the set \(\{{\varvec{x}}, {\varvec{y}}\}\) on which the maximum \( \max _{{\varvec{p}}\in \{{\varvec{x}}, {\varvec{y}}\}} ({\varvec{p}}, {\varvec{a}}_0)^2 + ({\varvec{p}}, {\varvec{b}}_0)^2 \) is attained. Note that \({\varvec{p}}\) depends on \({\varvec{c}}\) and \({\varvec{v}}_0\) only. Hence, for every point \({\varvec{v}}= {\varvec{a}}+ i {\varvec{b}}\in G\), we have

On the other hand,

Thus,

and, consequently,

Now observe that the right-hand side of this inequality is equal to \(\sup _{{\varvec{w}}\in E({\varvec{a}}, {\varvec{b}})} ({\varvec{p}}, {\varvec{w}})\) and the left-hand side is smaller than \(\sup _{{\varvec{w}}_0 \in E({\varvec{a}}_0, {\varvec{b}}_0)}({\varvec{p}}, {\varvec{w}}_0)\). Therefore, for every pair \({\varvec{a}}, {\varvec{b}}\in {{\mathbb {R}}}^d\) such that \({\varvec{a}}+ i {\varvec{b}}\in G\), we have

Thus, there exists a point \({\widehat{{\varvec{w}}}} \in E({\varvec{a}}_0, {\varvec{b}}_0)\) such that \(({\varvec{p}}, {\widehat{{\varvec{w}}}}) > \frac{1}{2} \sup _{{\varvec{w}}\in E({\varvec{a}}, {\varvec{b}})} ({\varvec{p}}, {\varvec{w}})\). This holds for every point \({\varvec{a}}+ i {\varvec{b}}\in G\) and, in particular, for each point \({\varvec{a}}_k + i {\varvec{b}}_k, \ k = 1, \ldots , N \). Hence, the linear functional \({\varvec{p}}\) strictly separates the point \({\widehat{{\varvec{w}}}}\) of the ellipse \(E_0\) from all ellipses \(\frac{1}{2} E_k\), i.e. from their convex hull. Therefore, \({\widehat{{\varvec{w}}}} \notin \frac{1}{2} P\) and, hence, \(E_0 \not \subset \frac{1}{2} P\). \(\square \)
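The proof relies on the value of a linear functional on an ellipse: for \(E({\varvec{a}}, {\varvec{b}}) = \{{\varvec{a}}\cos t + {\varvec{b}}\sin t\}\), the supremum of \(({\varvec{p}}, {\varvec{w}})\) over \({\varvec{w}}\in E({\varvec{a}}, {\varvec{b}})\) equals \(\sqrt{({\varvec{p}}, {\varvec{a}})^2 + ({\varvec{p}}, {\varvec{b}})^2}\). The following sketch compares this closed form with a brute-force maximum over sampled points; the function names and the test vectors are illustrative assumptions.

```python
import math


def dot(u, v):
    """Euclidean scalar product of two real vectors."""
    return sum(x * y for x, y in zip(u, v))


def support_closed_form(p, a, b):
    """sup of (p, w) over the ellipse {a cos t + b sin t}."""
    return math.hypot(dot(p, a), dot(p, b))


def support_sampled(p, a, b, samples=20000):
    """Brute-force maximum of (p, w) over sampled points of the ellipse."""
    pa, pb = dot(p, a), dot(p, b)
    best = -math.inf
    for k in range(samples):
        t = 2.0 * math.pi * k / samples
        best = max(best, pa * math.cos(t) + pb * math.sin(t))
    return best


if __name__ == "__main__":
    a, b, p = (2.0, 0.0, 1.0), (0.0, 1.0, -1.0), (0.3, -1.2, 0.5)
    print(support_closed_form(p, a, b), support_sampled(p, a, b))
```

The sampled value never exceeds the closed form and approaches it as the number of samples grows.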

After Theorem 8.2 the natural question arises whether the approximation factor \(\nicefrac {1}{2}\) can be increased. The following theorem shows that the answer is negative.

Theorem 8.3

The factor \(q = \frac{1}{2}\) in Theorem 8.2 is sharp.

Proof

It suffices to give an example where this factor can be arbitrarily close to \(\nicefrac {1}{2}\). Consider the set S of pairs of vectors \(({\varvec{a}}, {\varvec{b}}) \in {{\mathbb {R}}}^2\times {{\mathbb {R}}}^2\) such that \({\varvec{a}}, {\varvec{b}}\) are collinear and \(\left\Vert {\varvec{a}}\right\Vert ^2 + \left\Vert {\varvec{b}}\right\Vert ^2 \le 1\). Then define \(Q = \{{\varvec{a}}+ i {\varvec{b}}: ({\varvec{a}}, {\varvec{b}}) \in S\}\). Thus, \(Q \subset {{\mathbb {C}}}^2\).

Since each pair \(({\varvec{a}}, 0)\) with \(\left|{\varvec{a}}\right| = 1\) belongs to S, we see that the set ReQ contains a unit disc centred at the origin. In our notation this disc can be denoted as \(E({\varvec{e}}_1, {\varvec{e}}_2)\), where \({\varvec{e}}_1 = (1,0)^T\) and \( {\varvec{e}}_2 = (0,1)^T\). Furthermore, if \({\varvec{v}}\in Q\), then \({\bar{{\varvec{v}}}} \in Q\) and \(e^{it}{\varvec{v}}\in Q\) for each \(t \in {{\mathbb {R}}}\). The first assertion is obvious. To prove the second one we observe that \(e^{i\tau }{\varvec{v}}={\varvec{a}}_{\tau } + i {\varvec{b}}_{\tau }\) with \({\varvec{a}}_{\tau } = {\varvec{a}}\cos \tau - {\varvec{b}}\sin \tau \) and \({\varvec{b}}_{\tau } = {\varvec{a}}\sin \tau + {\varvec{b}}\cos \tau \). Clearly, \({\varvec{a}}_{\tau }\) and \({\varvec{b}}_{\tau }\) are collinear and \(\left\Vert {\varvec{a}}_{\tau }\right\Vert ^2 + \left\Vert {\varvec{b}}_{\tau }\right\Vert ^2 = \left\Vert {\varvec{a}}\right\Vert ^2 + \left\Vert {\varvec{b}}\right\Vert ^2 \le 1\). Every point of the balanced convex hull \(G = \textrm{cob}\,(Q)\) has the form \(\sum _{k = 1}^N z_k {\varvec{u}}_k = \sum _{k = 1}^N |z_k|e^{i\tau _k}{\varvec{u}}_k\), where \(z_k = |z_k| e^{i\tau _k}\) and \({\varvec{u}}_k \in Q\), \(\sum _{k=1}^N|z_k| \le 1\). Writing \(t_k = |z_k|\) and \({\varvec{v}}_k = e^{i\tau _k}{\varvec{u}}_k\) and using that \({\varvec{v}}_k \in Q\), we see that every point of G has the form \(\sum _{k = 1}^N t_k {\varvec{v}}_k\) with all \({\varvec{v}}_k\) from Q and \(\sum _{k=1}^N t_k \le 1\).

Now let us solve Problem EE* for the set G and for the vector \(t_0({\varvec{e}}_1 + i {\varvec{e}}_2)\). We find the maximal positive \(t_0\) for which this vector belongs to G. We have \(t_0({\varvec{e}}_1 + i {\varvec{e}}_2) = \sum _{k=1}^N t_k {\varvec{v}}_k\) with \({\varvec{v}}_k = {\varvec{a}}_k + i {\varvec{b}}_k \in Q\) and \(t_k \ge 0\), \(\sum _{k=1}^N t_k \le 1\). We are going to show that \(t_0 \le \frac{1}{2}\).

Let \({\varvec{a}}_k\) be co-directed with the vector \((\cos \gamma _k, \sin \gamma _k)^T\); the vector \({\varvec{b}}_k\) has the direction \(\varepsilon _k (\cos \gamma _k, \sin \gamma _k)^T\), where \(\varepsilon _k \in \{1, -1\}\). Since \(\left\Vert {\varvec{a}}_k\right\Vert ^2 + \left\Vert {\varvec{b}}_k\right\Vert ^2 \le 1\), it follows that there is an angle \(\delta _k \in \bigl [0, \frac{\pi }{2}\bigr ]\) and a number \(h_k \in [0,1]\) such that \(\left\Vert {\varvec{a}}_k\right\Vert = h_k \cos \delta _k, \ \left\Vert {\varvec{b}}_k\right\Vert = h_k \sin \delta _k \). We have \(\sum _{k=1}^N t_k {\varvec{a}}_k = t_0 {\varvec{e}}_1\). Projecting onto the abscissa, we have \(\sum _{k=1}^N t_k ({\varvec{a}}_k, {\varvec{e}}_1) = t_0 \), and hence, \( \sum _{k=1}^N t_k h_k \cos \gamma _k \cos \delta _k = t_0. \) Similarly, after the projection of the equality \(\sum _{k=1}^N t_k {\varvec{b}}_k = t_0 {\varvec{e}}_2\) to the vector \({\varvec{e}}_2\), we get \( \sum _{k=1}^N \varepsilon _k t_k h_k \sin \gamma _k \sin \delta _k = t_0. \) Taking the sum of these two equalities, we obtain \( \sum _{k=1}^N t_k h_k \cos (\gamma _k - \varepsilon _k \delta _k) = 2t_0. \) Since none of the numbers \(h_k \cos (\gamma _k - \varepsilon _k \delta _k)\) exceeds one, we conclude that \( \sum _{k=1}^N t_k \ge 2t_0 \) and, therefore, \(t_0 \le \frac{1}{2}\).

Hence, for the unit disc \(E_0({\varvec{e}}_1, {\varvec{e}}_2)\) and the set of ellipses \(\{E({\varvec{a}}, {\varvec{b}}): {\varvec{a}}+ i{\varvec{b}}\in G \}\), the solution of Problem EE* gives the approximation for Problem EE with the factor at most \(\frac{1}{2}\). This is not the end yet, since Q is infinite and so G is not a balanced complex polytope. However, G can be approximated by a balanced polytope with an arbitrary precision. For the obtained balanced polytope, the approximation factor is close to \(\frac{1}{2}\). Since it can be made arbitrarily close, the proof is completed. \(\square \)
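The key step of the proof is the identity \(\cos \gamma \cos \delta + \varepsilon \sin \gamma \sin \delta = \cos (\gamma - \varepsilon \delta )\) for \(\varepsilon \in \{1,-1\}\), which bounds every summand by one. A minimal numerical check is sketched below; the helper names, the random sampling and the seed are illustrative assumptions.

```python
import math
import random


def combined_term(gamma, delta, eps):
    """Sum of the two projected coefficients for one pair (a_k, b_k)."""
    return (math.cos(gamma) * math.cos(delta)
            + eps * math.sin(gamma) * math.sin(delta))


def check_identity(trials=10000, seed=0):
    """Return (largest deviation from cos(gamma - eps*delta), largest term)."""
    rng = random.Random(seed)
    max_gap, max_term = 0.0, -math.inf
    for _ in range(trials):
        gamma = rng.uniform(0.0, 2.0 * math.pi)
        delta = rng.uniform(0.0, 0.5 * math.pi)
        eps = rng.choice((1, -1))
        term = combined_term(gamma, delta, eps)
        max_gap = max(max_gap, abs(term - math.cos(gamma - eps * delta)))
        max_term = max(max_term, term)
    return max_gap, max_term


if __name__ == "__main__":
    print(check_identity())
```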

9 The corner cutting method

A straightforward approach to approximate the solution of Problem EE could be to replace \(E_0\) by a sufficiently close circumscribed polygon and then to decide whether all its vertices belong to P. However, this idea turns out to be inefficient: a good approximation factor can only be obtained if this polygon has many vertices. We derive another approach based on step-by-step relaxation by cutting corners of a polygon: we iteratively search for the point of the ellipse \(E_0\) with the largest norm with respect to P and check whether the norm is less than 1. We begin with the following auxiliary problem PE (point in ellipses), which can be seen as a special case of Problem EE.

Problem PE In the space \({{\mathbb {R}}}^d\) there are ellipses \(E_1, \ldots , E_N\) and a point \({\varvec{w}}\). Find \(\left\Vert {\varvec{w}}\right\Vert _P\), where \(P = \textrm{co}\,\{E_1, \ldots , E_N\}\).

In particular, deciding whether \(\left\Vert {\varvec{w}}\right\Vert _P \le 1\) is equivalent to a special case of Problem EE when the ellipse \(E_0\) degenerates to a segment \([-{\varvec{w}}, {\varvec{w}}]\). This problem can be solved efficiently, either precisely, by the conic programming method in Sect. 9.3, or approximately, by the linear programming method presented in Sect. 10.

9.1 The algorithm of corner cutting

We begin with a description of the main idea and then define the routine of the algorithm.

9.1.1 The idea of the algorithm.

It may be assumed that \(E_0\) is a unit circle. We construct a sequence of polygons circumscribed around \(E_0\) as follows. The initial polygon is a square. In each iteration we cut off a corner of the polygon with the largest P-norm. So, we omit one vertex and add two new vertices. The cutting is by a line touching \(E_0\) orthogonal to the segment connecting that vertex with the centre.

Let us denote by \(\nu _j\) the largest P-norm of vertices after the jth iteration (the initial square corresponds to \(j=0\)). Since the norm is convex, its maximum on a polygon is attained at one of its vertices. Hence, the norm of the cut vertex is not less than the norm of each of the new vertices. Therefore, \(\nu _{j+1} \le \nu _j\), so the sequence \(\{\nu _j\}_{j\ge 0}\) is non-increasing. If at some step we have \(\nu _j \le 1\), then all the vertices of the polygon after j iterations are inside P. Hence, this polygon is contained in P and, therefore, \(E_0 \subset P\).

Otherwise, if \(\nu _j > 1\), we have \(E_0 \not \subset \nu _j \cos (\tau ) P\), where \(\tau \) is the smallest exterior angle of the resulting polygon. This is proved in Theorem 9.1 below. Thus, the algorithm solves Problem EE with the approximation factor \(q \ge \nu _j \cos (\tau )\).

Comments. In each iteration we need to find the vertex with the maximal P-norm, so the P-norm of every vertex must be known; it is computed by solving Problem PE. Since the norms of the old vertices are already available, only the norms of the two new vertices are computed in each iteration. Due to the central symmetry, the computation can be halved: of two symmetric vertices we compute the norm of only one, and in each iteration we cut off both symmetric vertices.

Let \(\tau \) be an arc of the unit circle connecting points \(\alpha \) and \(\beta \), and denote by \(\left|\tau \right|\) its length. We assume that \(\left|\tau \right| < \pi \). Denote by \(\sigma = \sigma (\tau )\) the midpoint of the arc \(\tau \) and \({\varvec{w}}(\tau ) = \frac{{\varvec{a}}_0 \cos \sigma + {\varvec{b}}_0 \sin \sigma }{\cos (\left|\tau \right|/2)},\)

where \({\varvec{a}}_0, {\varvec{b}}_0\) are the vectors defining the ellipse \(E_0 = E({\varvec{a}}_0, {\varvec{b}}_0)\). Two lines touching \(E_0\) at the points corresponding to the ends of the arc \(\tau \) meet at \({\varvec{w}}\).
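The corner point can be computed directly from the endpoints of the arc. The sketch below assumes the form \({\varvec{w}}(\tau ) = ({\varvec{a}}_0 \cos \sigma + {\varvec{b}}_0 \sin \sigma )/\cos (|\tau |/2)\), which is the one consistent with the relation \({\varvec{x}}= \cos (\tau ) {\varvec{w}}(2\tau ) \in E_0\) used in the proof of Theorem 9.1. All function names are ours, and the tangency check `off_tangent` is written for the planar case only.

```python
import math


def ellipse_point(a0, b0, t):
    """Point a0*cos(t) + b0*sin(t) of the ellipse E(a0, b0)."""
    return [a * math.cos(t) + b * math.sin(t) for a, b in zip(a0, b0)]


def ellipse_tangent(a0, b0, t):
    """Direction of the tangent line to E(a0, b0) at parameter t."""
    return [-a * math.sin(t) + b * math.cos(t) for a, b in zip(a0, b0)]


def corner_point(a0, b0, alpha, beta):
    """Assumed form of w(tau) for the arc from alpha to beta (beta - alpha < pi)."""
    sigma = 0.5 * (alpha + beta)                  # midpoint of the arc
    scale = 1.0 / math.cos(0.5 * (beta - alpha))  # 1 / cos(|tau| / 2)
    return [scale * c for c in ellipse_point(a0, b0, sigma)]


def off_tangent(a0, b0, w, t):
    """Planar cross product; zero when w lies on the tangent line at t."""
    p = ellipse_point(a0, b0, t)
    d = ellipse_tangent(a0, b0, t)
    return (w[0] - p[0]) * d[1] - (w[1] - p[1]) * d[0]


if __name__ == "__main__":
    a0, b0 = (2.0, 0.0), (1.0, 1.0)
    w = corner_point(a0, b0, 0.3, 1.1)
    print(w, off_tangent(a0, b0, w, 0.3), off_tangent(a0, b0, w, 1.1))
```

Both cross products vanish up to rounding, so the computed point is the intersection of the two tangent lines.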

9.2 The algorithm

9.2.1 Initialization

Choose the maximum number of iterations J. We split the upper unit semicircle (the part of the unit circle in the upper coordinate half-plane) into arcs of equal length \(\tau _1, \tau _2\) and compute the P-norms of the points \({\varvec{w}}(\tau _i)\), \(i = 1,2\). Denote by \(\nu _0\) the maximum of those two norms and set \({{\mathcal {T}}}= \{\tau _1, \tau _2\}\).

9.2.2 Main loop—the jth iteration

We have a collection \({{\mathcal {T}}}\) of \(j+1\) disjoint open arcs forming the upper semicircle, the P-norms of all \(j+1\) points \({\varvec{w}}(\tau )\), \(\tau \in {{\mathcal {T}}}\), and the maximal norm \(\nu _{j-1}\). Find an arc \(\tau \in {{\mathcal {T}}}\) for which the P-norm of \({\varvec{w}}(\tau )\) is the biggest and replace that arc by its two halves \(\tau _1, \tau _2\). Update \({{\mathcal {T}}}\) and compute the P-norms of the points \({\varvec{w}}(\tau _1)\) and \({\varvec{w}}(\tau _2)\). Set \(\nu _j\) equal to the maximum of the P-norms of the points \({\varvec{w}}(\tau )\) over all arcs \(\tau \) of the updated collection \({{\mathcal {T}}}\).

- If \(\nu _j \le 1\), then \(E_0 \subset P\) and STOP.
- If \(\nu _j > \cos (\tau )^{-1}\), where \(\tau \) is the minimal arc in \({{\mathcal {T}}}\), then \(E_0 \not \subset P\) and STOP.
- If \(1 < \nu _j \le \cos (\tau )^{-1}\) and \(j=J\), then \(E_0 \not \subset \cos (\tau ) P\).
- Otherwise go to the next iteration.
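The routine above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the implementation used in our experiments: the solver of Problem PE is passed in as a hypothetical callable `p_norm`, the corner point is taken in the form \(({\varvec{a}}_0 \cos \sigma + {\varvec{b}}_0 \sin \sigma )/\cos (|\tau |/2)\), and \(\nu _j\) is read off as the largest P-norm over all current arcs, stored in a heap.

```python
import heapq
import math


def corner_point(a0, b0, start, end):
    """Assumed form of w(tau) for the arc (start, end)."""
    sigma = 0.5 * (start + end)
    scale = 1.0 / math.cos(0.5 * (end - start))
    return [scale * (a * math.cos(sigma) + b * math.sin(sigma))
            for a, b in zip(a0, b0)]


def corner_cutting(a0, b0, p_norm, max_iter=50):
    """Return (verdict, nu, length of the minimal arc).

    p_norm is a hypothetical callable returning the P-norm of a point,
    i.e. a solver of Problem PE supplied by the caller.
    """
    arcs = []  # max-heap via negated norms: (-norm, start, end)
    for start, end in ((0.0, 0.5 * math.pi), (0.5 * math.pi, math.pi)):
        norm = p_norm(corner_point(a0, b0, start, end))
        heapq.heappush(arcs, (-norm, start, end))
    min_len = 0.5 * math.pi
    nu = -arcs[0][0]
    if nu <= 1.0:
        return "inside", nu, min_len
    for _ in range(max_iter):
        _, start, end = heapq.heappop(arcs)   # arc with the biggest P-norm
        mid = 0.5 * (start + end)
        for s, e in ((start, mid), (mid, end)):
            norm = p_norm(corner_point(a0, b0, s, e))
            heapq.heappush(arcs, (-norm, s, e))
        min_len = min(min_len, mid - start)
        nu = -arcs[0][0]                       # largest norm over all arcs
        if nu <= 1.0:
            return "inside", nu, min_len       # E_0 is contained in P
        if nu > 1.0 / math.cos(min_len):
            return "outside", nu, min_len      # E_0 is not contained in P
    return "undecided", nu, min_len            # E_0 not in cos(tau) * P


if __name__ == "__main__":
    unit = ((1.0, 0.0), (0.0, 1.0))
    for radius in (2.0, 1.05, 0.5):
        # P is a disc of the given radius, so the P-norm is |w| / radius.
        verdict = corner_cutting(*unit, lambda w, r=radius: math.hypot(*w) / r)
        print(radius, verdict)
```

In the usage example, P is a disc (the convex hull of a single circle), so its norm is known in closed form; for a genuine elliptic polytope, `p_norm` would be replaced by one of the methods for Problem PE.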

Theorem 9.1

The corner cutting algorithm after j iterations solves Problem EE with the approximation factor \(q \ge \nu _j\cos (\tau )\), where \(\tau \) is the minimal arc in \({{\mathcal {T}}}\).

Proof

Let \(\tau \) be the smallest arc after j iterations. Suppose this arc appears after the kth iteration, \(k\le j\). Then, its mother arc (let us call it \(2\tau \)) had the biggest value \(\left\Vert {\varvec{w}}(\mathord {\,\cdot \,})\right\Vert _{P}\) among all arcs in the kth iteration. This means that \(\left\Vert {\varvec{w}}(2\tau )\right\Vert _{P} = \nu _k\). Since the sequence \(\{\nu _i\}_{i\ge 0}\) is non-increasing, we have \(\nu _k \ge \nu _j\). The point \({\varvec{x}}= \cos (\tau ) {\varvec{w}}(2\tau )\) lies on \(E_0\). It does not belong to P precisely when \(\left\Vert {\varvec{x}}\right\Vert _P > 1\), i.e. when \(\left\Vert {\varvec{w}}(2\tau )\right\Vert _P > \cos (\tau )^{-1}\). Thus, if \(\nu _j > \cos (\tau )^{-1}\), then \(\left\Vert {\varvec{w}}(2\tau )\right\Vert _P = \nu _k \ge \nu _j > \cos (\tau )^{-1}\) and, hence, \({\varvec{x}}\notin P\). Therefore, the inequality \(\nu _j > \cos (\tau )^{-1}\) implies that \(E_0\) is not contained in P. \(\square \)

The length of each arc has the form \(2^{-s}\pi \), where s is the number of double divisions to arrive at that arc. We call this number the level of the arc. So, the original arcs of length \(\nicefrac {\pi }{2}\) are those of level one.

9.2.3 The complexity of the corner cutting algorithm

To perform j iterations one needs to solve Problem PE for \(j+2\) points \({\varvec{w}}(\mathord {\,\cdot \,})\). So, the complexity of the algorithm is determined by the complexity of solving Problem PE. Below, in Sects. 9.3 and 10, we derive two methods for its solution, based on different ideas, and compare them by numerical experiments. The approximation factor is \(\cos (\tau ) = \cos (2^{-s}\pi ) = 1 - 2^{-2\,s-1}\pi ^2 + O(2^{-4\,s})\), where s is the maximal level of the arcs after j iterations. Already for \(s=2\) (after one iteration) the approximation factor is \(q= \cos (\frac{\pi }{4}) = \nicefrac {1}{\sqrt{2}}\), which is better than in the complex polytope method, where \(q = \frac{1}{2}\). For \(s=3\) (after at most three iterations), we have \(q = \cos (\frac{\pi }{8}) = 0.923\ldots \), for \(s=5\), we have \(q= 0.995\ldots \), for \(s=10\), we have \(q > 1 - 10^{-5}\). In the worst case, reaching the level s requires \(j=2^{s-1}-1\) iterations, in agreement with the counts above (one iteration for \(s=2\), three for \(s=3\)). However, in practice it is much faster: numerical experiments show that j usually does not exceed \(s+2\).
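The quoted values of the approximation factor are easy to reproduce. The sketch below tabulates \(\cos (2^{-s}\pi )\) together with the leading term \(1 - 2^{-2s-1}\pi ^2\) of its expansion; the function names are ours.

```python
import math


def approximation_factor(level: int) -> float:
    """Factor cos(2^{-s} * pi) for arcs of level s."""
    return math.cos(math.pi / 2 ** level)


def leading_term(level: int) -> float:
    """Leading part 1 - 2^{-2s-1} * pi^2 of the expansion."""
    return 1.0 - math.pi ** 2 / 2 ** (2 * level + 1)


if __name__ == "__main__":
    for s in (2, 3, 5, 10):
        print(s, approximation_factor(s), leading_term(s))
```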

In each iteration of the corner cutting algorithm we need to find the P-norm of the newly appeared vertices of the polygon. This means that we solve Problem PE for those vertices. The way of arriving at the solution actually defines the efficiency of the whole algorithm. We present two different methods and compare them.

9.3 Solving Problem PE via conic programming

The norm \(\left\Vert {\varvec{w}}\right\Vert _P\) is equal to the minimal \(r\in {{\mathbb {R}}}\) such that \({\varvec{w}}\in r P\), i.e. the minimal possible sum of non-negative numbers \(r_1, \ldots , r_N\in {{\mathbb {R}}}\) such that \({\varvec{w}}\in \sum _{j=1}^N r_j E_j\). Thus, we obtain

Changing variables \(c_j = r_j\cos \tau _j\), \(s_j = r_j\sin \tau _j \) we obtain the conic programming problem

with 3N variables \(r_j, c_j, s_j\in {{\mathbb {R}}}\) and \(N(d+2)\) constraints. Among these constraints, there are \(N(d+1)\) linear and only N quadratic ones, but the latter actually determine the complexity of this problem. The problem is solved by conic programming. This can be done efficiently for dimensions \(d\le 20\) and for the number of ellipses \(N \le 1000\).

The value r of the problem (12) is equal to the norm \(\left\Vert {\varvec{w}}\right\Vert _P\). In particular, \({\varvec{w}}\in P\) if and only if \(r\le 1\).

In the next section we introduce the second approach, in which the conic programming problem (13) is approximated by a linear programming one with a precision that increases exponentially with the number of extra variables.

10 The projection method

The corner cutting method makes use of a polygonal approximation of the ellipse \(E_0\). Can we go further and approximate all the N ellipses \(E_1, \ldots , E_N\) and thus approximate Problem PE with a linear programming (LP) problem? In principle, this is possible, but very inefficient. Cutting corners of N polygons is expensive and slow. If we do not involve cutting but just approximate each ellipse by a polygon, the situation will be still worse due to a large total number of vertices of all polygons. Nevertheless, each approximating polygon can be built much cheaper if we present it as a projection of a higher dimensional polyhedron. This technique was suggested by Ben-Tal and Nemirovski [2] for approximating quadratic problems by LP problems. See also [8] for generalizations to other classes of functions. We briefly describe this method (with slight modifications) and then apply it to Problem PE. Note that, in contrast to the conic programming, here we obtain only an approximate solution of Problem PE. However, this is not a restriction since the corner cutting algorithm also gives only an approximate solution for Problem EE. If \(q_1\) and \(q_2\) are approximation factors of those two problems, then the resulting approximation factor is \(q_1q_2\). If \(q_i = 1-\varepsilon _i\) with a small \(\varepsilon _i\), \(i=1,2\), then \(q_1q_2 > 1-\varepsilon _1-\varepsilon _2\).

10.1 A fast approximation of ellipses

The projection method realizes a polygonal approximation of ellipses by solving a certain LP problem and the precision of this approximation increases exponentially in the LP problem input. This is done by an iterative algorithm, whose main loop is a doubling of a convex figure.

10.1.1 Doubling of a figure

Consider an arbitrary figure \(F\subset {{\mathbb {R}}}^2\) located in the lower half-plane of the Cartesian plane. Then the set \(F_0 = \bigl \{(x, y')^T: \ (x, y)^T \in F, \ |y'| \le -y\bigr \} \qquad (14)\)

is the convex hull of F with its reflection about the abscissa, see Fig. 1. Indeed, each point \(A = (x, y)^T \in F\) produces a vertical segment \(\{(x, y'): y' \in [y, -y]\}\) which connects A with its reflection \(A'\) about the abscissa. Those segments fill the set \(F_0\).

In the same way one can double a figure F about an arbitrary line passing through the origin provided F lies on one side with respect to this line. Let a line \(\ell _{\alpha }\) be defined by the equation \(y = x\tan \alpha \); it makes the angle \(\alpha \in \bigl [ 0, \pi \bigr ]\) with the abscissa. After the clockwise rotation by the angle \(\alpha \) the line \(\ell _{\alpha }\) becomes the abscissa and F becomes a figure \(F'\) located in the lower half-plane. Since this rotation is defined by the matrix \( R_{\alpha } = \left( {\begin{matrix} \cos \alpha &{} \sin \alpha \\ -\sin \alpha &{} \cos \alpha \end{matrix}} \right) , \) it follows from formula (14) that the figure \(F_{\alpha }\), the convex hull of F with its reflection about the line \(\ell _{\alpha }\), consists of points \((x_1, y_1)\) satisfying the following system of inequalities:

10.1.2 Construction of a regular \(2^n\)-gon

Now we describe the algorithm of recursive doubling of a polygon.

We take an arbitrary radius \(r > 0\), denote \(\alpha _m = 2^{-m} \pi , m\ge 0\), and consider an isosceles triangle AOB, where \(A=(r,0)^T\), \(B = (r\cos \alpha _n, r\sin \alpha _n)^T\), and O is the origin. Double this triangle about the line \(\ell _{\alpha _n} = OB\), then double the obtained quadrilateral about \(\ell _{\alpha _{n-1}}\) (the lateral side different from OA), then about \(\ell _{\alpha _{n-2}}\), etc. After n doublings (the last one is about \(\ell _1\), which is the abscissa) we get the regular \(2^n\)-gon inscribed in the circle of radius r. We denote this polygon by \(rT_n\). Thus, \(T_n\) is the \(2^n\)-gon inscribed in the unit circle. Note that the initial triangle AOB is defined by the system of linear inequalities

Thus, we obtain the following description of the set \(rT_n\), which is a regular \(2^n\)-gon inscribed in the circle of radius r:

in the variables \(r, x_1, \ldots , x_{2n+2}\), where \(k=1,\ldots ,n\). An inequality with modulus \(|a|\le b\) is replaced by the system \(a \le b\), \(-a \le b\). The system (16) consists of \(3n+3\) linear constraints (equations and inequalities) in \(2n+3\) variables. For all vectors \(X = (x_1, \ldots , x_{2n+2})^T\) satisfying system (16), the vector composed of the last two components \((x_{2n+1}, x_{2n+2})^T\) fills the regular \(2^n\)-gon. So, this \(2^n\)-gon is a projection of a \((2n+2)\)-dimensional polyhedron to the plane. This polyhedron has \(3n+3\) facets.

10.1.3 Construction of an affine-regular \(2^n\)-gon inscribed in an ellipse

For an arbitrary ellipse \(E({\varvec{a}}, {\varvec{b}})\), the point \(x_{2n+1}{\varvec{a}}+ x_{2n+2}{\varvec{b}}\) runs over an affine-regular \(2^n\)-gon inscribed in the ellipse \(rE({\varvec{a}}, {\varvec{b}})\) as the vector \(X = \bigl (x_1, \ldots , x_{2n+2}\bigr )\) runs over the set of solutions of the linear system (16) with this value of r.
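For illustration, the vertices of such an affine-regular polygon can also be listed explicitly, without the LP description (16). The sketch below simply maps the vertices \((r\cos t_k, r\sin t_k)\) of a regular \(2^n\)-gon by \((x, y) \mapsto x{\varvec{a}}+ y{\varvec{b}}\); the function name and the choice of the first vertex at \(t = 0\) are assumptions made for this example.

```python
import math


def affine_regular_polygon(a, b, n, r=1.0):
    """Vertices x*a + y*b, where (x, y) runs over a regular 2^n-gon of radius r."""
    m = 2 ** n
    vertices = []
    for k in range(m):
        t = 2.0 * math.pi * k / m
        x, y = r * math.cos(t), r * math.sin(t)
        vertices.append([x * ai + y * bi for ai, bi in zip(a, b)])
    return vertices


if __name__ == "__main__":
    # Conjugate radii of an ellipse in R^3 lying in the coordinate plane z = 0.
    polygon = affine_regular_polygon((1.0, 0.0, 0.0), (0.0, 2.0, 0.0), 3)
    for v in polygon:
        print(v, v[0] ** 2 + (v[1] / 2.0) ** 2)  # equals r^2 = 1 on the ellipse
```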

10.2 Solving Problem PE by the fast polygonal approximation

We approximate all ellipses \(E_j = E({\varvec{a}}_j, {\varvec{b}}_j)\), \(j = 1, \ldots , N\) by polygons and then decide whether \({\varvec{w}}\in P\) with some approximation factor.

We fix a natural n and non-negative numbers \(r^{(1)}, \ldots , r^{(N)}\) such that \(\sum _{j=1}^N r^{(j)} = 1\). For each j, we consider the affine-regular polygon \( r^{(j)}T_{n}^{(j)} = x_{2n+1}^{(j)}{\varvec{a}}_j + x_{2n+2}^{(j)}{\varvec{b}}_j \) inscribed in \(r^{(j)}E_j\), where \( (r^{(j)}, X^{(j)}) = \bigl (r^{(j)}, x_1^{(j)}, \ldots , x_{2n+1}^{(j)}, x_{2n+2}^{(j)}\bigr )^T \) is a feasible vector for the linear system (16). If \({\varvec{w}}\in r^{(1)}T_n^{(1)} + \cdots + r^{(N)}T_{n}^{(N)}\), then \({\varvec{w}}\in r^{(1)}E_1 + \cdots + r^{(N)}E_N\). Therefore, \({\varvec{w}}\in P\) whenever there exist numbers \(r^{(j)}\ge 0\) such that \(\sum _{j=1}^N r^{(j)} = 1\) and \({\varvec{w}}\in r^{(1)}T_n^{(1)} + \cdots + r^{(N)}T_{n}^{(N)}\). Hence, the assertion \({\varvec{w}}\in P\) is decided by the following LP problem:

in the variables \(r^{(j)}, x_{s}^{(j)}\), \(j=1, \ldots , N\), \(s=1, \ldots , 2n+2\), where \(k=1,\ldots ,n\). Recall that \(\alpha _m = 2^{-m}\pi \). The value of this problem \(r = \sum _{j=1}^N r^{(j)}\) is the minimal number such that \({\varvec{w}}\) belongs to the set \(rP_n\), where \(P_n = \textrm{co}\,\{T_n^{(1)}, \ldots , T_n^{(N)}\}\). In other words, \(r = \left\Vert {\varvec{w}}\right\Vert _{P_n}\). In particular, \({\varvec{w}}\in P_n\) precisely when \(r\le 1\).

The LP problem (17) has \((2n+3)N\) variables \(r^{(j)}\), \(x_{s}^{(j)}\) and \((3n+4)N + d+1\) linear constraints (equations and inequalities). Note that the matrix of this problem possesses only \((12n + 2d + 7)N +d \) nonzero coefficients, i.e. the total number of nonzero coefficients is linear in the size of the matrix. On the other hand, the product of the number of variables times the number of constraints exceeds \(6n^2N^2 + 2Nd\). Thus, this problem is very sparse.
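To get a feeling for the sparsity, one can evaluate these counts for concrete sizes. The following sketch uses only the formulas stated above; the function name `lp_size` and the sample values of n, N, d are illustrative.

```python
def lp_size(n: int, num_ellipses: int, d: int):
    """Counts for the LP problem (17) as stated in the text.

    Returns (variables, constraints, nonzeros, density), where density is the
    share of nonzero entries in the constraint matrix.
    """
    variables = (2 * n + 3) * num_ellipses
    constraints = (3 * n + 4) * num_ellipses + d + 1
    nonzeros = (12 * n + 2 * d + 7) * num_ellipses + d
    density = nonzeros / (variables * constraints)
    return variables, constraints, nonzeros, density


if __name__ == "__main__":
    print(lp_size(n=10, num_ellipses=1000, d=20))
```

For \(n=10\), \(N=1000\), \(d=20\) the matrix has 23000 columns and 34021 rows, but only 167020 nonzero entries, i.e. well below one nonzero entry per thousand.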

Since \(P_n \subset P\), it follows that \({\varvec{w}}\in P\) whenever \(r\le 1\). In fact, problem (17) provides an approximate solution to Problem PE with the factor \(q = \cos (2^{-n}\pi )\).

Theorem 10.1

If r is the value of the LP problem (17), then for every \({\varvec{w}}\in {{\mathbb {R}}}^d\) we have \( r \cos (2^{-n}\pi ) \le \left\Vert {\varvec{w}}\right\Vert _P \le r \).

Proof

Since the ratio between the radii of the inscribed and the circumscribed circles of a regular \(2^n\)-gon is equal to \(q = \cos (2^{-n}\pi )\), we see that \(q E_j \subset T^{(j)}_n\) for each j. Consequently, \(qP \subset P_n\) and \(\left\Vert {\varvec{w}}\right\Vert _{P} \ge q\left\Vert {\varvec{w}}\right\Vert _{P_n}\). Together with the inclusion \(P_n \subset P\), which gives \(\left\Vert {\varvec{w}}\right\Vert _{P} \le \left\Vert {\varvec{w}}\right\Vert _{P_n}\), this proves the theorem. \(\square \)
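The ratio of the two radii used in the proof can be checked directly: the inscribed circle of a regular polygon touches each side at its midpoint. The sketch below computes the distance from the centre to the midpoint of one side of the regular \(2^n\)-gon inscribed in the unit circle; the function name is ours.

```python
import math


def inradius_ratio(n: int) -> float:
    """Inradius of the regular 2^n-gon inscribed in the unit circle."""
    m = 2 ** n
    first = (1.0, 0.0)
    second = (math.cos(2.0 * math.pi / m), math.sin(2.0 * math.pi / m))
    midpoint = (0.5 * (first[0] + second[0]), 0.5 * (first[1] + second[1]))
    return math.hypot(midpoint[0], midpoint[1])


if __name__ == "__main__":
    for n in range(2, 9):
        print(n, inradius_ratio(n), math.cos(math.pi / 2 ** n))
```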

Corollary 10.2

If \(r \le 1\), then \({\varvec{w}}\in P\), otherwise \({\varvec{w}}\notin \cos (2^{-n}\pi ) P\).

Since \(\cos (2^{-n}\pi ) = 1 - 2^{-2n-1}\pi ^2 + O(2^{-4n})\), we see that already for small values of n we obtain a very sharp estimate. The rate of approximation for \(n \le 8\) is given in Table 1. For \(n=12\) we have \(q > 1 - 10^{-6}\); for \(n=17\) we have \(q > 1 - 10^{-9}\).
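Conversely, one may ask for the smallest n that guarantees a prescribed precision. A minimal sketch follows; the function name is ours, and the identity \(1 - \cos x = 2\sin ^2(x/2)\) is used to avoid cancellation for small angles.

```python
import math


def smallest_n(tolerance: float) -> int:
    """Smallest n with 1 - cos(2^{-n} * pi) < tolerance."""
    n = 1
    while 2.0 * math.sin(0.5 * math.pi / 2 ** n) ** 2 >= tolerance:
        n += 1
    return n


if __name__ == "__main__":
    for tol in (1e-3, 1e-6, 1e-9):
        print(tol, smallest_n(tol))
```

The values for the tolerances \(10^{-6}\) and \(10^{-9}\) are 12 and 17, in agreement with the estimates above.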

11 Numerical results

In this section we demonstrate practical implementations of our methods of finding the convex hulls of ellipses (see Footnote 1).

We obtain numerical results and compare them for the following methods presented in this paper:

- Complex polytope method (Sect. 8)
- Corner cutting method (Sect. 9)
- Projection method (Sect. 10)
- Mixed method

The Mixed method is a combination of the complex polytope method and the projection method. The former is the fastest of the three algorithms; the latter is the most accurate. The mixed method accepts an additional parameter bound describing the range of values one is interested in. Whenever the complex polytope method determines that the norm is inside or outside the range of interest, the exact algorithm is not started, and thus the computation is sped up. For example, when computing the joint spectral radius by the Invariant polytope algorithm, one is only interested in whether an ellipse lies inside or outside the elliptic polytope. Whenever the complex polytope method already settles this question, the computation can be stopped.
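The decision logic of such a combination can be sketched as follows. This is a simplified illustration and not our implementation: it handles only the inside/outside question, the name `mixed_decision` is hypothetical, `t0` stands for the value of the complex polytope problem of Sect. 8 (computed for the self-conjugate G), and `exact_check` is a placeholder for a more accurate method that is called only when the fast test is inconclusive.

```python
def mixed_decision(t0: float, exact_check) -> str:
    """Decide whether E_0 lies in P, calling the slow method only if needed.

    t0          -- largest t with t * v_0 in G (assumed to be given)
    exact_check -- hypothetical callable without arguments returning True
                   when the accurate method finds E_0 inside P
    """
    if t0 >= 1.0:
        return "inside"    # v_0 in G, hence E_0 is contained in P
    if t0 < 0.5:
        return "outside"   # (1/2) v_0 not in G, hence E_0 is not in P (Theorem 8.2)
    return "inside" if exact_check() else "outside"


if __name__ == "__main__":
    print(mixed_decision(1.3, lambda: True))
    print(mixed_decision(0.2, lambda: True))
    print(mixed_decision(0.8, lambda: False))
```

Only the last call reaches the accurate method; the first two are settled by the fast test alone.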

The implemented methods are optimized to different degrees, and thus their timings are not directly comparable.

To obtain quantitative measures of how the methods differ, we generated two test sets of random ellipses and elliptic polytopes.

(Dataset A) The first set contains ellipses and elliptic polytopes whose ellipses have normally distributed real and imaginary parts. Dataset (A) consists of 365 elliptic polytopes in dimensions 3 to 25, and the norm is computed approximately for 12 ellipses per elliptic polytope.

(Dataset B) The second set is generated by the Invariant polytope algorithm, where we stored the ellipses and elliptic polytopes occurring at intermediate steps for some random sets of input matrices with complex leading eigenvalue. Dataset (B) consists of 119 elliptic polytopes in dimensions 2 to 12, and the norm is computed for 100 ellipses per elliptic polytope.

The measured runtime of the algorithms with respect to the chosen accuracy and number of vertices can be seen in Fig. 9.

11.1 Behaviour of the complex polytope method

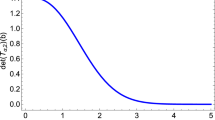

Although the theoretical approximation factor of this method is \(1/2\), in numerical experiments it turns out that the average approximation factor is mostly larger than \(1/\sqrt{2}\). In small dimensions, \(d=2,3\), the approximation factor is even close to 1 in many cases, see Fig. 2 for the (estimated) probability density function of this method’s approximation factors.

Note that the numerical accuracy of the QP solver is approximately \(10^{-5}\). Thus, the maximal approximation factor which can be observed is approximately 0.99999, which matches the position of the rightmost peaks in Fig. 2.

11.2 Behaviour of the corner cutting method

The corner cutting method leads, like the complex polytope method, to a QP problem, and thus the absolute error of the solution returned by our numerical solvers is in the range of \(10^{-5}\). As our experiments show, in the generic case the corner cutting method reaches this accuracy on average after 10 to 12 iterations. Apart from the chosen accuracy, the runtime of the algorithm depends mainly on, first, the geometry of the problem and, second, the number of vertices of the elliptic polytope. The dimension of the problem has only a minor influence on the runtime.

11.3 Behaviour of the projection method

The absolute error of the LP solvers is roughly \(10^{-9}\), which is several orders of magnitude smaller than that of the QP solver, typically around \(10^{-5}\). For this reason alone, the projection method is the most accurate of all the described methods.

For the projection method one could increase the number of vertices of the polytopes approximating the ellipses of the elliptic polytope until the norm is computed up to the desired accuracy, similarly to the corner cutting method. Unfortunately, this prevents warm-starting the LP problem, since refining the polytopes alters the underlying LP. Therefore, in our implementation we choose the approximation factor \(q_1\) corresponding to Problem EE* to be of the same magnitude as the approximation factor \(q_2\) corresponding to Problem EE, and such that \(q_1q_2\simeq q\), where q is the chosen accuracy.
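One simple way to satisfy \(q_1q_2\simeq q\) with factors of the same magnitude is the balanced choice \(q_1=q_2=\sqrt{q}\). The sketch below illustrates this choice. It assumes, purely for illustration, that each factor is governed by a bound of the form \(\cos (2^{-n}\pi )\) as in the theorem of Sect. 10; the function names are hypothetical and the actual selection rule of the implementation may differ.

```python
import math
from typing import Tuple


def refinement_level(q_target: float) -> int:
    """Smallest n >= 1 with cos(pi / 2**n) >= q_target, for 0 < q_target < 1."""
    n = 1
    while math.cos(math.pi / 2**n) < q_target:
        n += 1
    return n


def split_accuracy(q: float) -> Tuple[float, float, int, int]:
    """Split q into equal factors q1 = q2 = sqrt(q).

    Returns (q1, q2, n1, n2), where n_i is the refinement level needed to
    reach q_i under the assumed cos(2**-n * pi) bound.
    """
    q1 = q2 = math.sqrt(q)
    return q1, q2, refinement_level(q1), refinement_level(q2)


if __name__ == "__main__":
    q1, q2, n1, n2 = split_accuracy(1 - 1e-6)
    print(f"q1 = q2 = {q1:.9f}, refinement levels n1 = {n1}, n2 = {n2}")
```

Fixing both levels in advance keeps the LP unchanged between solves, which is what makes warm-starting possible.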

12 Applications

12.1 Number of extremal vertices

We address the question of the expected number of vertices in the convex hull of random ellipses. This issue is important for both of the above applications since it shows the growth of the number of ellipses with respect to the number of the iterations of the algorithms.

The corresponding problem for the convex hull of random points originated with the famous question of Sylvester [30]. The answer highly depends on the domain on which the points are sampled and on the dimension. Various lower and upper bounds on the asymptotically expected number of points in the convex hull are known, see [4, 18] and references therein. It would be extremely interesting to come up with similar theoretical estimates for convex hulls of ellipses. Here we compare the two cases solely numerically. There is no canonical analogue between points sampled from some domain and ellipses sampled from some domain, since the ellipses are determined by two parameters instead of one. We introduce several numerical results with various samplings.

12.1.1 Uniformly sampled ellipsoids in the unit ball

Fraction of points or ellipses which belong to the convex hull of randomly selected points or ellipses which are uniformly distributed in the unit ball or have uniformly distributed real and imaginary parts in the unit ball, respectively. Since the computational time increases significantly with the number of points or ellipses, fewer examples were computed for sets with more than 300 points or ellipses. The plot shows the relative fraction of points or ellipses belonging to the convex hull, coloured with respect to the dimension. The point examples are plotted with a \(\cdot \) symbol, the ellipse examples with a \(\circ \) symbol

We are given ellipses whose real and imaginary parts are sampled uniformly from the unit ball, and points sampled uniformly from the unit ball as well. The numbers of points and ellipses belonging to the corresponding convex hulls are computed and plotted in Fig. 3. Experiments are made for dimensions 2 to 10 and for 1 to 1000 points or ellipses. Interestingly, the two cases differ greatly: whereas for dimensions 2 to 5 the fraction of ellipses belonging to the convex hull is smaller than that of the point counterpart, the situation is reversed from dimension 7 upwards.
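The point half of this experiment is easy to reproduce in the plane. The following sketch is not the script used for Fig. 3: it is restricted to \(d=2\), treats points only (no ellipses), and uses a textbook monotone chain hull; all function names are introduced here for illustration.

```python
import math
import random


def sample_unit_disc(rng: random.Random):
    """Uniform point in the unit disc (radius sqrt(u) gives uniform area)."""
    r = math.sqrt(rng.random())
    t = 2.0 * math.pi * rng.random()
    return (r * math.cos(t), r * math.sin(t))


def hull_vertices(points):
    """Vertices of the planar convex hull (Andrew's monotone chain)."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        # Positive if o -> a -> b is a counter-clockwise turn.
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    def half(seq):
        chain = []
        for p in seq:
            while len(chain) >= 2 and cross(chain[-2], chain[-1], p) <= 0:
                chain.pop()
            chain.append(p)
        return chain[:-1]  # the last point starts the other half

    return half(pts) + half(reversed(pts))


def hull_fraction(n: int, seed: int = 0) -> float:
    """Fraction of n uniform points in the disc that are hull vertices."""
    rng = random.Random(seed)
    pts = [sample_unit_disc(rng) for _ in range(n)]
    return len(hull_vertices(pts)) / n


if __name__ == "__main__":
    for n in (10, 100, 1000):
        print(n, hull_fraction(n))
```

For higher dimensions and for ellipses, the vertex test has to be replaced by a general convex hull routine and by one of the membership methods of this paper, respectively.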

12.1.2 Uniformly sampled ellipsoids in the unit cube

Interestingly, when the points or the real and imaginary parts of the ellipses are sampled uniformly from the unit cube, the behaviour of the point case and of the ellipse case is very similar, at least for small dimensions, as can be seen in Fig. 4.

12.1.3 Gaussian sampled ellipsoids

Also for points and ellipses with real and imaginary part sampled from a d-dimensional normal distribution, the behaviour is similar. See Fig. 5 for a visualization of the obtained numerical results.

12.2 Lyapunov function for a discrete time linear system

Consider a linear system defined by a \(d\times d\) matrix A with a complex leading eigenvalue, which is supposed to be unique and simple (up to complex conjugates). We need to construct a norm \(\Vert \cdot \Vert \) in \({{\mathbb {R}}}^d\) such that \(\Vert A{\varvec{x}}\Vert \le \rho (A)\Vert {\varvec{x}}\Vert \) for all \({\varvec{x}}\in {{\mathbb {R}}}^d\). This is the same as constructing a symmetric convex body \(P \subset {{\mathbb {R}}}^d\) for which \(AP\subset \rho (A)P\). It is obtained as an elliptic polytope by an iteration method, see Sect. 1, Application 2. In Fig. 6 the time needed to compute the invariant elliptic polytope P is plotted against the dimension. The colour indicates the number of vertices of the set V.

12.3 Spectral characteristics of matrices

As already noted, one application is the computation of the joint spectral radius, which is used, for example, in the computation of the Lyapunov function of a discrete time linear system.

Invariant elliptic polytope for the matrices \( \left[ {\begin{matrix} 0 &{} \,0 &{} -1 \\ 0 &{} -1 &{} \,0 \\ 0 &{} \,1 &{} -1 \end{matrix}}\right] \), \( \left[ {\begin{matrix} 0 &{} 1 &{} -1 \\ 1 &{} 0 &{} -1 \\ 1 &{} 1 &{} \,0 \end{matrix}}\right] \). The colour indicates the z-value of the convex hull. The black lines are drawn where the ellipses touch the boundary of the convex hull

Example 12.1

Let \(A=\left[ {\begin{matrix} 0 &{} \,0 &{} -1 \\ 0 &{} -1 &{} \,0 \\ 0 &{} \,1 &{} -1 \end{matrix}}\right] \) and \(B=\left[ {\begin{matrix} 0 &{} 1 &{} -1 \\ 1 &{} 0 &{} -1 \\ 1 &{} 1 &{} \,0 \end{matrix}}\right] \). The invariant polytope algorithm computes \(\rho :=\mathop {JSR}(\{A,B\})=\rho (B)=\sqrt{2}\) and shows that, with the eigen-ellipse \(E_0=E(v_0)=E\left( \left[ {\begin{matrix}2\\ 2\\ 1-\sqrt{-7}\end{matrix}}\right] \right) \), i.e. \(BE_0=E_0\), the elliptic polytope \(P=\mathop {co}\{ E_0, AE_0,A^2E_0, BAE_0,A^3E_0, BA^2E_0, B^2AE_0,BA^3E_0, B^2A^2E_0, B^2A^3E_0 \}\) fulfils \(AP\subseteq \rho P\) and \(BP\subseteq \rho P\), see Fig. 7 for a plot of P.
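The spectral data of this example can be verified directly. The sketch below uses only the Python standard library; the helper `matvec` and all variable names are introduced here for illustration. It checks that \(v_0\) is an eigenvector of B, that the corresponding eigenvalue has modulus \(\sqrt{2}\), and, using that the trace of B is zero, that the remaining real eigenvalue has smaller modulus.

```python
import cmath
import math

B = [[0, 1, -1],
     [1, 0, -1],
     [1, 1, 0]]
v0 = [2, 2, 1 - cmath.sqrt(-7)]  # generator of the eigen-ellipse E_0


def matvec(M, v):
    """Matrix-vector product for small dense lists."""
    return [sum(M[i][j] * v[j] for j in range(len(v))) for i in range(len(M))]


Bv0 = matvec(B, v0)
lam = Bv0[0] / v0[0]  # candidate eigenvalue from the first component
residual = max(abs(Bv0[i] - lam * v0[i]) for i in range(3))

# lam and its conjugate are two eigenvalues; the third follows from the trace.
trace = sum(B[i][i] for i in range(3))
third = trace - 2 * lam.real
rho_B = max(abs(lam), abs(third))

if __name__ == "__main__":
    print("lambda =", lam, " |lambda| =", abs(lam))
    print("residual =", residual, " third eigenvalue =", third)
    print("rho(B) =", rho_B, " sqrt(2) =", math.sqrt(2))
```

The eigenvalue found this way is \((1+\sqrt{-7})/2\), whose modulus is \(\sqrt{2}\), in agreement with the value of \(\rho (B)\) stated in the example.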

In Fig. 6 the time needed to compute an invariant elliptic polytope P is plotted against the dimension. Currently, the invariant polytope algorithm can compute the joint spectral radius of pairs of matrices of dimension up to 17, for which, in the median, the computational time is \(1000\,\mathrm{s}\) and the invariant polytope consists of 1400 ellipses. It seems that the time increases sub-exponentially with the dimension and depends linearly on the number of ellipses of the invariant elliptic polytope. In other words, the number of ellipses of the elliptic polytope increases sub-exponentially with the dimension.

Now we analyse the performance of the Invariant polytope algorithm for computation of the joint spectral radius of a set of matrices. The elliptic polytopes are applied in the case when the spectrum maximizing product has a complex leading eigenvalue. In the construction of the invariant elliptic polytopes we can use each of our methods. The numerical tests show that the projection method always performs better than the corner cutting method and that the mixed method always performs better than the projection method. Thus, only two significant algorithms remain, the complex polytope method and the projection method. We are comparing them.

In Figs. 8 and 9 the results of our experiments are plotted.

The time of computation of the joint spectral radius for a pair of matrices \(A_1,A_2\in {{\mathbb {R}}}^{d\times d}\), whose spectrum maximizing product has complex leading eigenvalue. The colour indicates the number of vertices of the polytope. On the x-axis we have the dimension of the generated example, the y-axis shows the time needed to compute an invariant polytope. The x-values are slightly distorted for better readability. Similar to the case of random matrices with real leading eigenvalue, it seems that the existence of a spectrum maximizing product with finite length is generic. The results obtained using the complex-polytope method are marked with a \(\cdot \) symbol, the results obtained using the projection method are marked with a \(\circ \) symbol. Examples where the algorithm could not find an invariant polytope are marked in both cases with a red \(\times \) symbol. We suspect that the Invariant polytope algorithm fails to terminate within reasonable time for certain examples because the spectrum maximizing product of the set under test is long

Remark 12.2

In practice, it occurs rather seldom that a spectrum maximizing product of a set of matrices has length greater than one and possesses a complex leading eigenvalue. One such example is given in the Appendix, Example A.3.

Data availability

The main algorithms are available at gitlab.com/tommsch. All supplementary data, including the scripts to generate the data, are available at tommsch.com/science.php.

Notes

We use the following solvers: Matlab’s linprog and Gurobi for the linear programming (LP) problems and Gurobi for the quadratic programming (QP) problems. Gurobi is a commercial solver, but a free academic license can be obtained at gurobi.com. SeDuMi is free and can be downloaded at github.com/sqlp/SeDuMi. The GitHub version is a maintained fork of the original project, whereas the original host does not seem to maintain SeDuMi any more. For the tests we used a PC with an AMD Ryzen 3600, 6 cores (5 cores used), 3.6 GHz, 64 GB RAM, Windows 10 build 1809, Matlab R2020a, Gurobi solver 9.0.2 from May 2019, SeDuMi solver 1.32 from July 2013, ttoolboxes v1.2 from June 2021, TTEST v0.9 from June 2021. The algorithms are implemented in Matlab and included in the ttoolboxes [24]. The scripts to generate and evaluate the data can be downloaded from tommsch.com/science.php. All software is thoroughly tested using the TTEST framework [25].

References

Barabanov, N.E.: Lyapunov indicator for discrete inclusions, I–III. Autom. Remote Control 49(2), 152–157 (1988)

Ben-Tal, A., Nemirovski, A.: On polyhedral approximations of the second-order cone. Math. Oper. Res. 26(2), 193–205 (2001). https://doi.org/10.1287/moor.26.2.193.10561

Charina, M., Conti, C., Sauer, T.: Regularity of multivariate vector subdivision schemes. Numer. Algorithms 39, 97–113 (2005). https://doi.org/10.1007/s11075-004-3623-z

Donoho, D.L., Tanner, J.: Counting faces of randomly projected polytopes when the projection radically lowers dimension. J. Amer. Math. Soc. 22, 1–53 (2009). https://doi.org/10.1090/S0894-0347-08-00600-0

Ferrez, J.-A., Fukuda, K., Liebling, T.M.: Solving the fixed rank convex quadratic maximization in binary variables by a parallel zonotope construction algorithm. Eur. J. Oper. Res. 166(1), 35–50 (2005). https://doi.org/10.1016/j.ejor.2003.04.011

Gielen, R., Lazar, M.: On stability analysis methods for large-scale discrete-time systems. Autom. J. IFAC 55, 6–72 (2015). https://doi.org/10.1016/j.automatica.2015.02.034

Goemans, M.X., Williamson, D.P.: Improved approximation algorithms for maximum cut and satisfiability problems using semidefinite programming. J. ACM 42(6), 1115–1145 (1995). https://doi.org/10.1145/227683.227684

Gorskaya, E.S.: Approximation of convex functions by projections of polyhedra. Moscow Univ. Math. Bull. 65(5), 196–203 (2010). https://doi.org/10.3103/S0027132210050049