Abstract

We have developed a novel and affordable way to texture virtual hands from individually taken photographs and integrated these virtual hands into a mixed reality neurorehabilitation system. This mixed reality system allows serious games to be played with mirrored and non-mirrored hands and is designed for patients with unilateral motor impairments. Before we can ethically have patients use the system, we must show that embodiment can be achieved for healthy users. We compare our approach’s results to previous work in the field and present a study with 48 healthy (non-clinical) participants targeting visual fidelity and self-location. We show that embodiment can be achieved for mirrored and non-mirrored hand representations and that the higher realism of virtual hands achieved by our texturing approach alters perceived embodiment. We further evaluate whether using virtual hands resized to the individual’s hand size affects embodiment: in a 16-participant study, we could not find a significant difference with personalised resized hands. In addition to rehabilitation contexts, our findings have implications for the design and development of applications where embodiment is of high importance, such as surgical training and remote collaboration.

1 Introduction

Virtual reality (VR) and mixed reality (MR) applications are increasingly finding their way out of laboratories and into the real world. While some specialised application fields, such as virtual reality exposure therapy, have been used successfully in therapists’ offices for two decades, others, like VR education systems, have only recently moved into seminar rooms and classrooms (Powers and Emmelkamp 2008; Freina and Ott 2015). The affordability of current VR technology (including displays, tracking and capturing systems, and computers capable of high-quality graphics) has led to a wider dissemination of VR and MR and also allows us to push what can be done even further. In the research area targeted in this paper, truly manual interaction with virtual objects and environments can now be implemented without the need to wear special devices or to instrument users, as was necessary in the past with data gloves or tracking markers. Furthermore, we can display realistic-looking and believable hands in the virtual environment with high visual, spatial and temporal fidelity. This new quality opens up new application fields that depend heavily on accurate and predictable perception and interaction.

In our virtual neurorehabilitation mirror therapy scenario, patients are deliberately misled about what they are seeing to allow an incremental recovery of motor function lost, e.g. through traumatic brain injury or stroke. In the virtual environment, a patient is presented with a rehabilitation task in the form of a serious game, using a mirrored version of their healthy hand to visually mimic the movements of their impaired hand. Put simply, the brain “sees” the impaired limb moving and therefore rewires itself; this capacity is known as neuroplasticity (Doidge 2008; Giraux and Sirigu 2003). In our VR neurorehabilitation scenario, highly realistic virtual hands are necessary so that patients can feel embodied in the mirrored limb and the neuroplastic effects have a chance to occur.

As in similar application scenarios, we have to give users the feeling that what they are seeing are their own hands, that they are in control of those hands (mirrored or not), and that the hands they perceive are in correct, or at least believable, spatial positions. Together, these three aspects form the feeling of embodiment. Different disciplines define embodiment differently; in this paper, we use the definition of Kilteni et al. (2012), who state that the Sense of Embodiment consists of three subcomponents: Sense of Self-Location, Sense of Agency and Sense of Ownership. Sense of Self-Location refers to where we perceive our body to be located. Sense of Agency refers to the relationship between our expected physical movement and the movement actually performed. Sense of Ownership is the sense that our own body is the source of sensations. While numerous studies (detailed in Sect. 2) investigate embodiment of users’ hands, their application scenarios are mostly hypothetical and do not necessarily rely on embodiment; in contrast, our application scenario does.

We must not only be able to provoke and measure embodiment, but also make sure that embodiment is actually achieved. To do so, we have developed a virtual neurorehabilitation application for stroke patients, which doubles as an experimental platform to investigate embodiment in general. Clinical virtual reality research experts have created a framework to guide the development and testing of therapeutic VR applications (Birckhead et al. 2019). Their framework consists of stages that a therapeutic VR application should go through, from the design of the system all the way to clinical trials. Following this framework, before we can expose real patients to our system, we must first show with healthy users that embodiment can be achieved with our personalised virtual hands. In particular, we are interested in the following questions:

1. What degree of visual hand fidelity is needed and sufficient for embodiment (specifically ownership and agency)? We extend existing research by automatically texturing users’ virtual hands with photographs taken of their own hands. We then compare this visualisation against current best practice in research.

2. What effect does mirroring virtual hands have on embodiment? Does the concept of self-location also apply to mirrored hands, which are in a different, incongruent spatial position from the real hands in the real world? If so, this would enable us to use this technique in scenarios that require embodiment and would challenge commonplace assumptions about the necessity of positioning the virtual hands congruently with the real ones.

3. To what degree, if at all, does the size of the virtual hand affect embodiment (specifically ownership)? How do people perceive the size of their hands in a virtual environment?

We address these research questions in the remainder of the paper, starting with a review of the relevant literature, followed by a walk-through of our process for texturing users’ virtual hands from four photographs of their hands (palm and back of both the left and right hand), and concluding with the results and interpretation of our two user studies.

The first user study compares our personalised virtual hand with three other virtual hand visualisations taken from two closely related works (Lin and Jörg 2016; Argelaguet et al. 2016) in terms of visual fidelity. These two papers measured embodiment using the virtual hand illusion in threatening or dangerous scenarios and used virtual hand visualisations of varying visual fidelity. We present a different application scenario (a non-threatening rehabilitation task) that involves mirroring the virtual hands’ movements for neurorehabilitation (stroke, phantom limb pain, etc.). This neurorehabilitation task is a virtual memory game in which participants activate tiles on a virtual game board to find matching food items. We measure perceived embodiment and task performance for these four virtual hand visualisations of varying visual fidelity under both mirroring conditions (mirrored and non-mirrored).

The second user study investigates whether resizing the personalised virtual hand to a participant’s actual hand size affects embodiment or task performance. In this study, a different group of participants use only the personalised hand visualisation (mirrored and non-mirrored) and interact with the same rehabilitation task as in the first study. The measures are the same as in the first study, except that at the end of the study we also ask participants to indicate how they perceive their actual hand size to be in VR. Together, these two user studies provide insights into our three research questions as well as into the effects of a personalised virtual hand in VR.

2 Related work

As defined earlier, Kilteni et al. (2012) state that the Sense of Embodiment consists of three subcomponents: Sense of Self-Location, Sense of Agency and Sense of Ownership. Adapting that definition to our hand visualisation scenario, Sense of Self-Location refers to where we perceive our real hand to be physically located while we interact using a virtual representation. Sense of Agency refers to the correspondence between our expected hand movement and the resulting movement of the virtual hand visualisation. Sense of Ownership refers to the feeling that the hand visualisation belongs to our body, which is also called the virtual hand illusion (VHI). Research into the VHI builds on research into the similar rubber hand illusion (RHI), first introduced by Botvinick and Cohen (1998), which showed that participants could take ownership of a rubber hand placed near their own hidden real hand.

The VHI replaces the rubber hand with a virtual representation of a hand and investigates participants’ feeling of embodiment towards that hand. Previous research has shown that ownership of virtual limbs can occur (Slater et al. 2009), has examined the effects of danger on ownership (Ma and Hommel 2013), and has shown that the RHI can be induced in immersive VR (Yuan and Steed 2010; IJsselsteijn et al. 2005). Ma and Hommel (2015) investigated two different virtual hand visualisations (a hand and a rectangle) and found that ownership was elicited with both visualisations and that synchronous agency contributed to increased perceived ownership.

Two research papers that investigate different VHI hand visualisations are especially relevant to our work: Argelaguet et al. (2016) and Lin and Jörg (2016). Argelaguet et al. (2016) used three different hand visualisations (varying in visual fidelity) and evaluated their role in participants’ sense of embodiment across different experimental tasks. Participants completed two tasks with these hand visualisations: a pick-and-place task in which they had to avoid potentially threatening obstacles, and a task in which they placed their hands next to a (virtually) dangerous rotating saw blade. Surprisingly, they found that “simplified” (less realistic) hands provided faster and more accurate interactions as well as a higher sense of agency than their more realistic hand.

Lin and Jörg (2016) used six different hand visualisations (varying in visual fidelity) to evaluate the VHI. Their study involved two tasks: blocking flying balls with the virtual hand and a virtual threat scenario in which a knife hits the virtual hand. They found that the wooden block hand visualisation (the least realistic) elicited significantly lower ownership. They also found that appearance does not affect agency, in contrast to the finding of Argelaguet et al. (2016) that agency is stronger for less realistic hand visualisations. However, both of these works feature obscure and threatening tasks that differ from our rehabilitation scenario.

Other research has investigated ownership not just of a hand, but of the entire body. Preston et al. (2015) investigated body ownership in a mirror using a mannequin body (instead of a rubber hand). They evaluated ownership across conditions such as mirrored, non-mirrored and first-person perspective views of the mannequin. They found that ownership of the mirrored mannequin was as strong as when it was viewed from a first-person perspective and stronger than when it was viewed from a third-person perspective. Gonzalez-Franco et al. (2010) brought this into VR and evaluated ownership of a mirrored avatar that mimics participants’ movements. They found that participants did experience ownership of the mirrored avatar, which supports our approach of using a mirrored hand illusion for neurorehabilitation. Nimcharoen et al. (2018) present an AR system that uses a single depth-sensing camera to show users a mirrored capture of their body with different body sizes. They found that participants reported a high sense of ownership and agency with the mirrored full-body representation. Banakou et al. (2013) adapted the mirrored full-body illusion in VR to embody users in either a child avatar or an adult avatar scaled to the same height as the child avatar, and investigated how this affects ownership and object size estimation. They found that participants experienced subjective ownership of both avatars; however, participants embodied in the child avatar overestimated the size of virtual cubes compared to those embodied in the scaled adult avatar.

Jung et al. (2018) evaluated a “personalised” stereo video camera texture for object size estimation in virtual reality. They found increased body ownership and spatial presence with their “personalised” texture, and participants were able to correctly estimate the size of virtual objects in the proximity of their hand. Their personalised texture was used to estimate the sizes of virtual boxes near the virtual hand, but the personalised virtual hand was not used to actually interact with the virtual environment. They also reported technical limitations, including having to apply physical paint to participants’ hands to help identify them in VR, camera colour accuracy dropping and the image becoming noisy after 1 minute of system use, and having to ask participants not to move their heads during use. These limitations made their approach infeasible for our practical, serious neurorehabilitation scenario. Schwind et al. (2017) evaluated how people of different genders perceive different virtual hand visualisations (default hand textures). They found that men accepted and experienced presence with hands of both genders, whereas women experienced less presence with male hands.

Khan et al. (2017) evaluated two different hand visualisations for gesture interaction with virtual content in a 360-degree movie: segmented hands from an RGB-D video camera and a skin-textured Leap Motion hand model. They found that the video-textured hand visualisation generated stronger ownership. While their findings are interesting, they are likely not generally applicable because (a) the Leap Motion hand was not in any way tailored to the participants and (b) their specific task afforded hand occlusions (self-occlusion and occlusion between hands), which disadvantage the Leap Motion hand.

Work has also been carried out on reconstructing virtual hands from camera images. Mueller et al. (2019) provide real-time pose and shape reconstruction for two-handed interaction from a single depth camera. Their implementation can adapt the virtual hand to the user’s hand shape and handles two-handed interactions, gesture interactions and occlusion. Sharp et al. (2015) also use a single depth camera to reconstruct virtual hands; however, their approach is limited to one hand and uses a standard hand model size. Romero et al. (2017) use a specialised 3D hand capture system consisting of five cameras to reconstruct a highly realistic, personalised virtual body and hand model. However, their implementation requires expensive, specialised 3D scanning hardware to reconstruct the person’s hand shape and is geared more towards animation than real-time interaction.

Virtual hand visualisations have previously been used in stroke rehabilitation. Of particular relevance to us are those that use the Leap Motion hand tracking system. Studies of the Leap Motion controller’s usability for stroke rehabilitation applications have shown acceptable accuracy and performance for clinical and rehabilitation use (Tung et al. 2015; Holmes et al. 2016). Stroke rehabilitation applications using the Leap Motion controller have shown promise for evaluating patients’ hand function (Khademi et al. 2014), for hand function recovery (Iosa et al. 2015) and for independent training (Liu et al. 2015).

While we have chosen TheraMem as our neurorehabilitation task (explained in detail in Sect. 4.3), other neurorehabilitation scenarios have also been used in VR for stroke rehabilitation. Rubio et al. (2013) present a VR system with two tasks for stroke patients to carry out with virtual hands: wiping a dirty virtual table clean and squeezing a virtual lemon. These tasks are used to assess stroke survivors’ motor function, and the authors found a strong correlation between measures in these tasks and clinical measures. Holmes et al. (2016) developed the TAGER immersive VR rehabilitation system, which gives stroke patients a 3D pointing task in which they must reach and point to randomly moving targets in the VR environment. Proença et al. (2018) carried out a systematic review of serious games for upper limb rehabilitation, which showed potential for many different kinds of neurorehabilitation tasks but no clear “gold standard” task. Hung et al. (2016) surveyed stroke patients and therapists to find out what each group looks for in game-based rehabilitation. They found that patients prefer games that challenge their mind, are user-driven and cost-effective, and offer diverse tasks during gameplay. Holden (2005) provides a comprehensive history of neurorehabilitation scenarios for motor rehabilitation in VR from the early 2000s.

Mirror therapy has previously been used in virtual and mixed reality contexts to promote recovery in stroke patients. Feasibility studies of mirror therapy have shown that VR/MR is feasible for clinical use (Hoermann et al. 2017; Assis et al. 2016). MacNeil (2017) presents an immersive VR mirror therapy system with a number of activities of daily living, such as rolling dough, stacking plates, clapping hands and hanging clothes on a line. A small preliminary user study comparing virtual mirror therapy with traditional mirror therapy could not detect a difference in recovery between groups. Weber et al. (2019) also present an immersive VR mirror therapy system; however, hand visualisation and movement were limited by the use of an Oculus hand controller. Their system also comprised similar activities of daily living, and a pilot study found that it was feasible for stroke patients in terms of safety, adherence and tolerance. Trojan et al. (2014) developed an AR system (using an HMD) for carrying out mirror therapy at home and report increased performance in healthy participants.

There remains a gap in the literature with respect to the higher realism achieved by individualising hands and its effect on perceived embodiment. There is also a gap in the literature regarding the measurement of embodiment in a practical (as opposed to hypothetical) application scenario. Finally, the assumption that congruent self-location is necessary has not yet been challenged. We aim to bridge these gaps by providing and evaluating individually textured virtual hand visualisations (in comparison with best practice in research), presented in non-mirrored and mirrored ways, to explore how they affect a person’s perceived embodiment in a practical application scenario.

3 Personalised virtual hands

We set out with the goal to create a highly realistic texture of an individual’s hand that can be applied to a virtual hand model. The hand visualisation should be of sufficient realism so that an individual could identify that hand as their own hand within the mixed reality (or Augmented Virtuality—AV) environment.

To create individually textured hand models, we generate a unique texture map for both hands of each participant from photographs of their hands. This process begins with the participant placing one hand in the centre of a photographic light box (light tent) where we simultaneously capture an image of both sides of their hand as shown in Fig. 1-left. We take the images simultaneously via a script on a connected PC to ensure consistent lighting conditions within the pair of photographs. We then use computer vision techniques to identify features on each side of the hand and map these to the UV texture space of a predefined realistic hand model. We developed this particular method as existing general approaches to human surface texture acquisition, such as multiview photogrammetry or depth sensing projection, did not meet our requirements for our application scenario: cheap, portable devices, and the ability to work with patients who may not be able to hold their hand steady and airborne for a long duration.

The remainder of this section describes the texture mapping process (Fig. 3) for a single hand, which is then repeated for the other hand. The palm and back of the hand are processed in the same way, with images from the left-side camera flipped so that the fingers always point to the right of the image. We begin by determining which pixels in the image are likely to belong to skin, using a combined RGB and YUV colour space segmentation as described by Al-Tairi et al. (2014). The result of this segmentation is used as the likely foreground region in GrabCut (Rother et al. 2004), which refines the edges and fills any holes in the detected skin region.
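As a minimal sketch of the per-pixel skin test that seeds GrabCut, the Python below combines an RGB rule with a YUV chroma rule. The conversion uses BT.601-style coefficients with a +128 chroma offset, and every threshold is an illustrative assumption rather than the value used in our pipeline; the exact rules should be taken from Al-Tairi et al. (2014). The function names are hypothetical.

```python
def rgb_to_yuv(r, g, b):
    """BT.601-style luma/chroma with a +128 offset on U and V (assumed convention)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    u = -0.169 * r - 0.331 * g + 0.500 * b + 128
    v = 0.500 * r - 0.419 * g - 0.081 * b + 128
    return y, u, v


def is_skin(r, g, b):
    """Combined RGB and YUV skin rule; all thresholds are illustrative assumptions."""
    _, u, v = rgb_to_yuv(r, g, b)
    rgb_ok = r > 80 and g > 30 and b > 15 and abs(r - g) > 15
    yuv_ok = 80 < u < 130 and 136 < v < 200 and v > u
    return rgb_ok and yuv_ok


def skin_mask(image):
    """image: rows of (r, g, b) tuples -> rows of booleans (likely-foreground seed)."""
    return [[is_skin(*px) for px in row] for row in image]
```

In a full pipeline, the boolean mask would be converted into GrabCut’s probable-foreground label before refinement (OpenCV exposes this as `cv2.grabCut` with `cv2.GC_INIT_WITH_MASK`).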

From the resulting binary hand mask, we determine the outer contour of the hand using the border-following algorithm described by Suzuki and Abe (1985), along with the contour normal at each point on the edge of the hand using a Sobel filter. By combining information about edge locations and normals, we can identify key points in the hand photographs that can then be remapped to the corresponding points in the destination UV map.
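The sketch below shows one way to obtain a contour normal from a Sobel response, assuming the filter is applied to the binary mask itself (the text does not say which image is filtered). Angles follow the convention used for the fingertip test in this section: 0° points to the right of the image, where the fingers point. The function name and kernel layout are our own illustration.

```python
import math

# 3x3 Sobel kernels; rows index image rows (y grows downwards), columns index x.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def outward_normal_deg(mask, r, c):
    """Outward contour normal of a binary hand mask at pixel (r, c), in degrees.

    0 deg points right (+x) and 90 deg points up the image (-row), so with the
    fingers pointing right, fingertips have normals close to 0 deg.
    """
    gx = gy = 0
    for i in range(3):
        for j in range(3):
            v = mask[r + i - 1][c + j - 1]
            gx += SOBEL_X[i][j] * v
            gy += SOBEL_Y[i][j] * v
    # The gradient points from background (0) into the hand (1), so the outward
    # normal is (-gx, -gy) in image coordinates; negating the row component again
    # converts it to a y-up angle, which gives atan2(gy, -gx).
    return math.degrees(math.atan2(gy, -gx))
```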

a, b Corresponding points of a single side of a hand used to map a texture from a photograph taken in the light box to the destination UV map. c The result of the completed texturing process for both sides of one hand, including mapping, de-seaming, non-local blur at the finger edges and edge outpainting

The first points we detect are the finger and thumb tips (shown in blue in Fig. 2). We consider all contour points with a normal of \(0^\circ \pm 3^\circ\) and then filter these so that no two points lie within 1 cm of each other and no point has nearby regions with sudden normal changes; this removes false positives caused by incorrect hand segmentation. Of the remaining points, we take the four rightmost points as the fingertips and the topmost remaining point as the thumb tip. Distances along the contour are estimated from the camera field of view, assuming the hand lies at the halfway point between the two cameras (Fig. 3).
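A simplified sketch of the fingertip and thumb selection follows. The names `pixels_per_cm` and `select_tips` are hypothetical; the scale estimate assumes a pinhole camera with a known horizontal field of view and places the hand halfway between the two cameras, and the separation filter is a greedy rightmost-first suppression. The filter for nearby sudden normal changes is omitted.

```python
import math


def pixels_per_cm(image_width_px, horizontal_fov_deg, distance_cm):
    """Pinhole estimate of image scale for a plane at the given distance."""
    width_cm = 2.0 * distance_cm * math.tan(math.radians(horizontal_fov_deg) / 2.0)
    return image_width_px / width_cm


def select_tips(candidates, min_sep_px, tol_deg=3.0):
    """candidates: (x, y, normal_deg) contour points, fingers pointing right.

    Returns (four fingertips ordered right to left, thumb tip).
    """
    # Keep points whose outward normal lies within 0 +/- tol_deg.
    near = [(x, y) for x, y, a in candidates if abs(a) <= tol_deg]
    kept = []
    # Greedy suppression of close duplicates, visiting the rightmost points first.
    for x, y in sorted(near, key=lambda p: -p[0]):
        if all(math.hypot(x - kx, y - ky) >= min_sep_px for kx, ky in kept):
            kept.append((x, y))
    if len(kept) < 5:
        raise ValueError("fewer than five tip candidates; photo pair rejected")
    fingers = kept[:4]
    thumb = min(kept[4:], key=lambda p: p[1])  # smallest row = topmost in the image
    return fingers, thumb
```

For example, `select_tips(candidates, min_sep_px=pixels_per_cm(w, fov, gap_cm / 2))` would enforce the 1 cm separation, where `w`, `fov` and `gap_cm` (image width, camera field of view and camera separation) are placeholders.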

With the fingertips found, we locate the centres of the fingernail edges by starting from each fingertip and then traversing the contour in opposite directions until finding opposing normals (yellow in Fig. 2). We detect the webs between fingers by taking the leftmost contour point between consecutive finger pairs (green in Fig. 2). Finally, we traverse the hand contour between and around the existing points for empirically measured distances to locate knuckles, wrists and other relevant features (shown in red).
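Web detection reduces to a minimum search along the contour. The sketch assumes the contour is stored as an ordered list of (x, y) points and that the fingertip indices are sorted in traversal order with adjacent fingers consecutive; the function name is hypothetical.

```python
def find_webs(contour, tip_indices):
    """Web (interdigital) point between each pair of consecutive fingertips.

    contour: list of (x, y) points in traversal order, fingers pointing right.
    tip_indices: contour indices of fingertips, sorted in traversal order.
    Returns the contour index of the leftmost point between each tip pair.
    """
    webs = []
    for start, end in zip(tip_indices, tip_indices[1:]):
        between = range(start + 1, end)
        webs.append(min(between, key=lambda k: contour[k][0]))
    return webs
```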

The points gathered at this stage encapsulate the nonlinear characteristics of both the source (people hold their hands unpredictably) and destination (the UV texture map has nonlinear scaling between finger segments). However, the mapping can still be improved simply by adding keypoints via equidistant subdivision along the contour as shown in Fig. 2 by white dots—this allows convex or concave finger segments to be better captured.
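Equidistant subdivision can be sketched as arc-length resampling of the contour between two existing keypoints. In this illustrative version (hypothetical names), `polyline` is the run of contour points between the two keypoints and `n` is the number of equal-length pieces, so `n - 1` new interior keypoints are returned; presumably the same subdivision is applied to the corresponding destination edge so that the new points stay in correspondence.

```python
import math


def subdivide(polyline, n):
    """Return n - 1 points splitting a polyline into n pieces of equal arc length."""
    seg = [math.dist(p, q) for p, q in zip(polyline, polyline[1:])]
    total = sum(seg)
    if n < 1 or total == 0:
        raise ValueError("need n >= 1 and a polyline with non-zero length")
    points = []
    i, walked = 0, 0.0  # current segment index and arc length before it
    for k in range(1, n):
        target = total * k / n
        # Advance past zero-length segments and segments that end before the target.
        while seg[i] == 0 or walked + seg[i] < target:
            walked += seg[i]
            i += 1
        t = (target - walked) / seg[i]
        (x0, y0), (x1, y1) = polyline[i], polyline[i + 1]
        points.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return points
```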

After acquiring a set of points that correspond to targets on the destination map, we can begin the texture transfer process. We compute the Delaunay triangulation of the source point set and remap the triangles by corresponding vertex to the destination points, painting in texture patches for each.
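The per-triangle remapping can be sketched with barycentric coordinates. Each destination (UV) pixel inside a triangle is expressed in barycentric coordinates, and applying the same weights to the corresponding photo triangle gives the source pixel to sample. This is a minimal inverse-mapping sketch with nearest-neighbour sampling and hypothetical names. The triangulation itself would come from a Delaunay routine such as `scipy.spatial.Delaunay`, whose `simplices` index the source points. We assume every triangle is non-degenerate and lies inside both images.

```python
import math


def barycentric(p, a, b, c):
    """Barycentric coordinates of point p with respect to triangle (a, b, c)."""
    det = (b[1] - c[1]) * (a[0] - c[0]) + (c[0] - b[0]) * (a[1] - c[1])
    l1 = ((b[1] - c[1]) * (p[0] - c[0]) + (c[0] - b[0]) * (p[1] - c[1])) / det
    l2 = ((c[1] - a[1]) * (p[0] - c[0]) + (a[0] - c[0]) * (p[1] - c[1])) / det
    return l1, l2, 1.0 - l1 - l2


def paint_triangle(src, dst, src_tri, dst_tri, eps=1e-9):
    """Fill one destination (UV) triangle by sampling the matching photo triangle.

    Images are lists of rows; triangles are three (x, y) vertices given in
    corresponding order.
    """
    xs = [v[0] for v in dst_tri]
    ys = [v[1] for v in dst_tri]
    y_lo, y_hi = max(0, math.floor(min(ys))), min(len(dst) - 1, math.ceil(max(ys)))
    x_lo, x_hi = max(0, math.floor(min(xs))), min(len(dst[0]) - 1, math.ceil(max(xs)))
    for y in range(y_lo, y_hi + 1):
        for x in range(x_lo, x_hi + 1):
            l1, l2, l3 = barycentric((x, y), *dst_tri)
            if min(l1, l2, l3) < -eps:
                continue  # pixel lies outside the destination triangle
            # Same weights on the source triangle give the photo position to sample.
            sx = l1 * src_tri[0][0] + l2 * src_tri[1][0] + l3 * src_tri[2][0]
            sy = l1 * src_tri[0][1] + l2 * src_tri[1][1] + l3 * src_tri[2][1]
            dst[y][x] = src[round(sy)][round(sx)]
```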

Procedural flow of the personal virtual hand texture creation process described in detail in Sect. 3 from beginning (hand photograph) to end (Final UV texture)

Once the palm and back of the hand are mapped to a single target texture, we reduce local discontinuities (seams) where the two mapped textures meet, i.e. along the sides of the fingers. We achieve this via non-local Gaussian blurring, using a pre-made map that matches edge points on the front of the fingers to their corresponding neighbouring points on the back, and vice versa. Finally, we outpaint the mapped edges of the hands to the border of the texture so that texture lookups near the hand boundary do not incorporate the background colour.
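The text does not specify how the outpainting is performed. One straightforward option, shown here as an assumption with a hypothetical function name, is iterative dilation. On each pass, every unmapped texel that touches a mapped one takes the mean of those neighbours, and passes repeat until the whole texture is covered. The sketch works on a single channel, so an RGB texture would be processed per channel.

```python
def outpaint(values, mask):
    """Dilate hand colours outwards until every texel holds a hand-derived value.

    values: rows of numbers (one channel); mask: rows of booleans, True = mapped texel.
    Each pass fills unmapped texels that touch mapped ones with the mean of those
    neighbours, so texture lookups near the border never pick up the background.
    """
    h, w = len(values), len(values[0])
    vals = [row[:] for row in values]
    known = [row[:] for row in mask]
    if not any(any(row) for row in known):
        raise ValueError("mask contains no mapped texels")
    while not all(all(row) for row in known):
        updates = []
        for y in range(h):
            for x in range(w):
                if known[y][x]:
                    continue
                nbrs = [vals[ny][nx]
                        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                        if 0 <= ny < h and 0 <= nx < w and known[ny][nx]]
                if nbrs:
                    updates.append((y, x, sum(nbrs) / len(nbrs)))
        # Apply after the scan so each pass only grows the region by one texel.
        for y, x, v in updates:
            vals[y][x] = v
            known[y][x] = True
    return vals
```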

We piloted this approach and found that it generates realistic representations of participants’ hands, with uniform lighting and minimal visible seams. The result of one such mapping is shown as the Augmented Virtuality hand in Fig. 1 (right) and Fig. 4. For the experiments, we captured all participants’ hands (regardless of assigned conditions), taking five sets of photo pairs per hand in case of failure. We asked participants to remove any jewellery that did not sit flush against the skin. During processing, we found that on average keypoints could not be correctly identified in 1 in 10 photo pairs. Processing took place between the separate capture and experiment sessions and takes about 5 minutes per photo pair (Fig. 5).
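As a rough check on taking five photo pairs per hand, assume (our assumption, not a measured property) that failures are independent and occur at the observed rate of about 1 in 10. The probability that no usable pair is obtained for a hand is then:

```latex
P(\text{all five pairs fail}) = \left(\tfrac{1}{10}\right)^{5} = 10^{-5}
```

That is about 1 in 100,000 per hand, which makes a complete capture failure very unlikely for a study of this size.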

4 Apparatus

Our system consists of low-cost, off-the-shelf hardware. Participants wore an Oculus Rift CV1 head-mounted display (HMD) to experience our immersive MR environment. Our neurorehabilitation scenario has been extended so that participants can experience four different hand visualisations (Fig. 4). These hand visualisations vary in level of realism, and the representations were chosen based on the work of Lin and Jörg (2016). We fitted a Leap Motion depth-sensing camera to the front of the HMD (Fig. 6-left) to capture participants’ hand movements, using the Leap Motion controller in HMD mode provided by the Orion SDK (version 3.2.1). The physical experimental environment consists of a table covered with an anti-reflective cloth to reduce infrared interference (Fig. 6-left). Our desktop PC (Windows 10, Intel Core i7-6700 @ 3.4 GHz, 8 GB RAM, NVIDIA GTX 970) was placed under the desk, and the experimenter used a monitor and keyboard/mouse to control the experiment. Our virtual environment was developed using Unity3D (2017.3.0f3).

4.1 Hand visualisations

Our system comprises four different hand visualisations (Fig. 4) that vary in level of graphical realism. We based three of the four hand visualisations on the work of Lin and Jörg (2016) and added our AV hand visualisation using individually textured hands. All of the non-mirrored hand visualisations are co-located with the participant’s own hand on the physical table in the experimental set-up (Fig. 6-left). The choice of four fidelity levels is based on the two related papers (Lin and Jörg 2016; Argelaguet et al. 2016), which also measured perceived embodiment with different levels of visual fidelity. Lin and Jörg (2016) used six levels of fidelity and Argelaguet et al. (2016) used three. These two papers overlapped on three levels of fidelity (wood, robot and realistic/default skin texture), so we chose those levels to compare against our personalised hand texture, allowing us to compare results for these levels of fidelity across studies. The four resulting hand visualisation levels are:

- Augmented Virtuality (AV): Very high realism. The hand visualisation resulting from the “Personalised Virtual Hands” process explained in Sect. 3, which textures the “PepperBaseCut” Leap Motion hand model with the participant’s hand photographs. The “PepperBaseCut” hand model is from the Leap Motion Orion “Hands Module” (2.1.0) Unity Core Asset Extension.

- Realistic (RE): High realism. The “PepperBaseCut” hand model from the Orion “Hands Module” (2.1.0) Unity Core Asset Extension, textured instead with a default skin texture similar to the one used by Lin and Jörg (2016).

- Robot (RB): Low realism. The “CapsuleHand” hand model from the Orion SDK Core Asset Unity Package (4.2.1).

- Wood (W): Very low realism. We created this hand model by mapping a flattened cube GameObject to the corresponding palm movement provided by the Leap Motion Orion SDK. This hand visualisation is based on the block hand used by Lin and Jörg (2016).

4.2 Mirroring of the hand visualisation

In addition to the user’s 1:1 interaction with the environment, we have implemented the option of mirroring the hand visualisations, both to evaluate the self-location assumption and to support our neurorehabilitation (stroke rehabilitation) context. Mirroring the hand visualisations means that, for example, a participant’s left hand is presented to them as their right hand in our VR environment. The participant’s hand movement is also mirrored, so that when the participant moves their left hand to the left, they see their right hand moving to the right (Fig. 5). We implemented the mirroring in a vertex shader by multiplying the hand’s world coordinates by a reflection matrix about the x-axis and rendering the resulting mirrored hand visualisation. For this implementation to work, the participant had to be seated directly in front of the position of our virtual mirror plane.
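The reflection can be illustrated with a plain 4x4 matrix. The sketch below assumes that x is the participant’s left-right axis and that the virtual mirror plane is the vertical plane x = x0 in front of the seated participant. The function names are hypothetical, and the sketch does not reproduce the actual shader code.

```python
def mirror_matrix(x0):
    """4x4 reflection across the vertical plane x = x0 (column-vector convention).

    Equivalent to translating by -x0, scaling x by -1, then translating by +x0.
    """
    return [
        [-1.0, 0.0, 0.0, 2.0 * x0],
        [0.0, 1.0, 0.0, 0.0],
        [0.0, 0.0, 1.0, 0.0],
        [0.0, 0.0, 0.0, 1.0],
    ]


def transform(m, p):
    """Apply a 4x4 matrix to a 3D point using homogeneous coordinates (w = 1)."""
    h = [p[0], p[1], p[2], 1.0]
    out = [sum(m[i][k] * h[k] for k in range(4)) for i in range(4)]
    return (out[0] / out[3], out[1] / out[3], out[2] / out[3])


def det3(m):
    """Determinant of the upper-left 3x3 block; -1 means handedness is flipped."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))
```

Because the upper-left 3x3 block has determinant -1, the reflection reverses the winding order of the mesh’s triangles. A renderer with back-face culling would therefore need to flip its culling mode (or the winding) when drawing the mirrored hand. Applying the matrix twice returns the original position, as expected for a reflection.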

Example showing mirroring of the hand visualisation with the participant’s real hand (top left) and what they experience in our mixed reality environment (bottom right). In this example, the participant’s right hand is mirrored so that, in our mixed reality environment, it appears to them as their left hand carrying out the mirrored hand movements

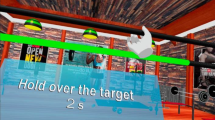

4.3 TheraMem

We have incorporated the TheraMem (Regenbrecht et al. 2011) memory game (Fig. 6-right) which has been successfully used with patients and healthy users. In this casual, serious game, users interact with a virtual memory game one- or two-handed, in both mirrored and non-mirrored ways. TheraMem has been used previously to provide stroke patients with a motivating, non-repetitive, clinician-controlled environment to physically and neurologically rehabilitate their impaired limbs.

The TheraMem virtual game environment consists of 12 white tiles, each of which can be activated to reveal a food item hidden underneath. Six pairs of matching virtual food items are randomly placed under the tiles at the start of each game, and the goal of the game is to find all six matching pairs. The different hand visualisations all interact with the game board in the same way: we cast a ray from the centre of the head-mounted display through the farthest point of the given hand visualisation and check whether it hits the game board. A tile only “flips over” (turns green) and reveals the food item underneath if the user has kept it activated (shown in red) for one second. We adapted TheraMem to a one-handed rehabilitation context by having the user flip over two tiles and then checking whether they form a matching pair. The game ends once all matching pairs of food items have been found, at which point all tiles have disappeared from the game board.
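The interaction logic can be sketched as a ray-plane intersection followed by a dwell timer. Everything geometric here is an assumption for illustration: the board is treated as the horizontal plane y = board_y, the 12 tiles are laid out as a 4 x 3 grid of square tiles, and the caller supplies the hand’s farthest point. `hit_tile` and `DwellActivator` are hypothetical names.

```python
import math

COLS, ROWS = 4, 3  # assumed 4 x 3 layout of the 12 tiles


def hit_tile(hmd, tip, board_y, x_min, z_min, tile_size):
    """Cast a ray from the HMD centre through the hand's farthest point.

    The board is assumed to be the horizontal plane y = board_y, with tile (0, 0)
    starting at (x_min, z_min). Returns a tile index 0..11, or None on a miss.
    """
    d = [t - h for t, h in zip(tip, hmd)]
    if d[1] == 0:
        return None  # ray parallel to the board
    s = (board_y - hmd[1]) / d[1]
    if s <= 0:
        return None  # board plane lies behind the ray origin
    x = hmd[0] + s * d[0]
    z = hmd[2] + s * d[2]
    col = math.floor((x - x_min) / tile_size)
    row = math.floor((z - z_min) / tile_size)
    if 0 <= col < COLS and 0 <= row < ROWS:
        return row * COLS + col
    return None


class DwellActivator:
    """Reports a tile once it has been continuously targeted for dwell_s seconds."""

    def __init__(self, dwell_s=1.0):
        self.dwell_s = dwell_s
        self.tile = None
        self.elapsed = 0.0
        self.fired = False

    def update(self, tile, dt):
        if tile != self.tile:  # target changed: restart the timer
            self.tile, self.elapsed, self.fired = tile, 0.0, False
        if tile is None or self.fired:
            return None
        self.elapsed += dt
        if self.elapsed >= self.dwell_s:
            self.fired = True  # report once per continuous hover
            return tile
        return None
```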

5 Realism experiment

In our first experiment, we investigate different levels of visual fidelity (from related works) against our personalised virtual hand model in terms of embodiment and task performance. We compare our study results to the results of previous work, in particular to Lin and Jörg (2016) and Argelaguet et al. (2016). Ethical approval was obtained from our university’s human ethics committee and written consent was collected from each participant before the start of the experiment.

5.1 Participants

We recruited 48 participants (30m, 18f) from the local university population through an email invitation to participate in a virtual reality study. The age of our participants ranged from 18 to 48 years (\({M}=23.90\); \(\textit{SD}=6.52\)). Forty-four participants indicated they were right-handed and 4 were left-handed.

5.2 Study design and hypotheses

Each participant interacted using two randomly assigned hand visualisations and experienced both mirroring conditions with each of them. We used a mixed between-subject/within-subject experimental design with 12 groups, to which participants were randomly assigned. The 12 groups result from combining the four hand visualisations that could be assigned first with the three remaining hand visualisations that could be assigned second (participants never used the same hand visualisation twice), i.e. 4 × 3 = 12 ordered pairs. Within each group, every participant experienced both mirroring conditions (mirrored and non-mirrored) for each of their two hand visualisations. Hence, the experimental design comprises two independent variables: the virtual representation of the participant’s hand and the mirroring of the participant’s hand. The virtual hand representation has four levels: “Wood”, “Robot”, “Realistic” and “Augmented Virtuality”. The mirroring of a participant’s hand has two levels: mirrored and non-mirrored.
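The group structure can be made concrete with a short enumeration. This Python sketch assumes the 48 participants were spread evenly over the 12 groups (four per group), which the text does not state explicitly (participants whose AV texturing failed were reassigned); `exposures_per_visualisation` and `schedule` are hypothetical names.

```python
import random
from collections import Counter
from itertools import permutations

VISUALISATIONS = ("Wood", "Robot", "Realistic", "Augmented Virtuality")

# Ordered (first, second) pairs without repetition: 4 x 3 = 12 groups.
GROUPS = list(permutations(VISUALISATIONS, 2))


def exposures_per_visualisation(n_participants: int) -> Counter:
    """Participants per visualisation, assuming every group gets the same number of people."""
    per_group = n_participants // len(GROUPS)
    counts: Counter = Counter()
    for first, second in GROUPS:
        counts[first] += per_group
        counts[second] += per_group
    return counts


def schedule(group, rng: random.Random):
    """Four TheraMem rounds: both mirroring conditions per visualisation, in random order."""
    rounds = []
    for vis in group:
        mirroring = [False, True]  # False = non-mirrored, True = mirrored
        rng.shuffle(mirroring)
        rounds.extend((vis, mirrored) for mirrored in mirroring)
    return rounds
```

Under the even-distribution assumption, each visualisation appears first in three groups and second in three, so 6 × 4 = 24 participants use it, which is consistent with the 24 participants per hand visualisation reported in Sect. 5.5.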

Our hypotheses are based on the closely related work by Argelaguet et al. (2016) and Lin and Jörg (2016). In particular, H2 builds on the finding of Argelaguet et al. (2016) that lower realism leads to higher agency. We further assume that lower agency leads to higher task completion time, so completion time serves as an additional indicator of agency.

- H1: Perceived realism will be higher for the Augmented Virtuality hand visualisation than for the other hand visualisations.
- H2: Higher realism leads to a lower sense of agency.
- H3: Higher realism leads to a higher sense of ownership.
- H4: Participants’ feelings of ownership toward the Augmented Virtuality hand visualisation will be higher than for the other hand visualisations.

5.3 Instruments

Participants completed two questionnaires at different points during the experiment: a Hand Visualisation Realism Questionnaire and an Embodiment Questionnaire. The Hand Visualisation Realism Questionnaire showed images of our four hand visualisations in random order and asked “How realistic do you perceive the hand visualisation in each image to be?”. This questionnaire was adapted from the work of Lin and Jörg (2016) and used a scale from 1 (less realistic) to 10 (more realistic). The Embodiment Questionnaire consisted of 16 questions adapted from the questionnaires used by Lin and Jörg (2016) (Q1–Q8) and Argelaguet et al. (2016) (Q9–Q16). It used a 7-point Likert scale ranging from 1 (Strongly Disagree) to 7 (Strongly Agree) and evaluated different aspects of embodiment. The questions map onto the three subcomponents of the Kilteni et al. (2012) definition of embodiment: agency (Q1, Q9, Q10, Q11, Q12, Q15, Q16), self-location (Q2, Q5, Q6) and ownership (Q3, Q4, Q7, Q13, Q14); Q8 addresses immersion. We also recorded participants’ performance in the TheraMem game environment (Completion Time and Number of Attempts).
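The question-to-subscale mapping above, together with the “above the midpoint” criterion used in the discussion (Sect. 5.6), can be expressed as a small lookup. This Python sketch assumes the criterion is applied to mean ratings per question; `question_means` and `above_midpoint` are hypothetical helpers, not the authors' analysis code.

```python
from statistics import mean
from typing import Dict, Iterable, List

# Question-to-subscale mapping as given in the text (Q8 addresses immersion).
SUBSCALES: Dict[str, List[int]] = {
    "agency": [1, 9, 10, 11, 12, 15, 16],
    "self-location": [2, 5, 6],
    "ownership": [3, 4, 7, 13, 14],
}
MIDPOINT = 4  # midpoint of the 1-7 Likert scale


def question_means(participants: Iterable[Dict[int, int]]) -> Dict[int, float]:
    """Mean rating per question across participants (each dict maps Q number -> 1..7)."""
    rows = list(participants)
    return {q: mean(r[q] for r in rows) for q in range(1, 17)}


def above_midpoint(means: Dict[int, float], subscale: str) -> bool:
    """True if every question of the subscale has a mean rating above the midpoint."""
    return all(means[q] > MIDPOINT for q in SUBSCALES[subscale])
```

Requiring every question of a subscale to exceed the midpoint is stricter than averaging the subscale, which fits the way the discussion reasons about agency and ownership question by question.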

5.4 Procedure

Participants were randomly assigned to one group before arrival. Those assigned to a group including the “Augmented Virtuality” condition had photographs taken of both sides of both hands. While the experimenter carried out the “Personalised Virtual Hands” process, participants filled out a demographic questionnaire and the Hand Visualisation Realism Questionnaire, which asked them to rate each hand visualisation based on realism. If the AV texturing procedure failed (for any reason), the participant was reassigned to the next available group that did not include the AV hand visualisation.

The system set-up was explained to participants, and they received a demonstration of how the interaction with the tiles and their hand visualisation works. Participants were instructed to operate the system with their hand flat on the table. Each participant was randomly assigned one of their two hand visualisations to begin a “warm-up” round in the TheraMem environment. The warm-up used the same tile layout and interaction as the regular TheraMem game; however, the goal was to activate a single black tile, after which the black tile moved to another position and the process repeated. The purpose of this warm-up mode was for participants to become comfortable with the virtual environment and learn how the interaction works without being exposed to the actual TheraMem game: we wanted participants to understand the task interaction, but we also wanted to be able to detect any possible learning effect, which pretraining with the TheraMem game itself would have confounded. Participants were asked to activate the black tile at least 10 times and to continue until they felt comfortable enough with that hand visualisation/mirroring condition to proceed. This warm-up was repeated for both assigned hand visualisations and both mirroring conditions (four warm-up rounds in total).

Participants then used their randomly assigned hand visualisations to interact with the TheraMem game. The starting mirroring condition for each hand visualisation was also randomly assigned to balance starting conditions. After completing a round of TheraMem, participants answered the 16 questions of the Embodiment Questionnaire (Sect. 5.3). Their previous answers remained visible so that they could see how they had answered in earlier rounds and give a relative (differential) judgement. The participant then used the same hand visualisation with the other mirroring condition and answered the same questionnaire. This process was then repeated for the second assigned hand visualisation. There was no break between hand visualisations or after completing a questionnaire. In total, each participant played four rounds of TheraMem and answered the questionnaire four times. At the end of the experiment, participants were told how the illusion worked and could ask any questions they had. They were then thanked for their time and compensated with a grocery store voucher. The duration of each session was not recorded; however, each participant was allocated a 45-minute slot and all participants finished within that time.

5.5 Results

The results of the Hand Visualisation Realism Questionnaire (scale 1–10) were as follows (AV: Augmented Virtuality, RE: Realistic, RB: Robot, W: Wood): AV (M: 9.21 SD: 0.96), RE (M: 7.60 SD: 1.33), RB (M: 3.96 SD: 1.51), W (M: 1.19 SD: 0.53). Because all 48 participants rated all four hand visualisations in this questionnaire, paired Wilcoxon signed rank tests were used for it; they showed significant (\(p < 0.05\)) differences between all pairs of hand visualisations. Wilcoxon rank sum tests (unpaired, equivalent to Mann–Whitney U tests) were used to analyse the Embodiment Questionnaire responses between hand visualisations. Since each participant used 2 of the 4 hand visualisations with both mirroring conditions, 24 participants experienced each hand visualisation (with both mirroring conditions). For the analysis, this means there were 24 partially dependent samples per hand visualisation for each Embodiment Questionnaire question. We found no statistical difference or effect between left- and right-handed participants. Results from the Embodiment Questionnaires are presented in Table 1.
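For readers unfamiliar with the unpaired test, the following standard-library Python sketch computes the Wilcoxon rank sum (Mann–Whitney U) statistic with mid-ranks for tied Likert ratings and a two-sided p-value from the tie-corrected normal approximation. The authors' statistics software and settings are not stated, so this is illustrative only: it applies no continuity correction and no exact small-sample distribution, so p-values may differ slightly from statistical packages. `midranks` and `rank_sum_test` are hypothetical names.

```python
import math
from typing import List, Sequence, Tuple


def midranks(values: Sequence[float]) -> List[float]:
    """Assign 1-based ranks, giving tied values the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def rank_sum_test(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Return (U for x, two-sided p) using the tie-corrected normal approximation."""
    n1, n2 = len(x), len(y)
    pooled = list(x) + list(y)
    ranks = midranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    n = n1 + n2
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())
    variance = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    sigma = math.sqrt(max(variance, 0.0))
    if sigma == 0:
        return u1, 1.0  # all observations tied: no evidence of a difference
    z = (u1 - n1 * n2 / 2) / sigma
    return u1, math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
```

With 7-point Likert data, ties are very common, which is why the mid-rank and tie-correction steps matter here.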

5.5.1 Non-mirrored results

Shapiro–Wilk normality tests showed that many (51/64) of the distributions were not normal. Wilcoxon rank sum tests were run to compare all hand visualisations for each question (24 participants experienced each hand visualisation). Significant effects (\(p < 0.05\)) were found for seven questions. Participants generally reported differences in embodiment between the AV hand and the Wood hand, regarding both agency (Q1) and ownership (Q3, Q4 and Q13). A significant difference between the AV and Robot hands was only found for one ownership question (Q3). Participants rated the AV hand as resembling their own hand (Q3) more than all other hand visualisations (including Realistic); this was the only significant difference we could detect between the AV and Realistic hands in non-mirrored use. Participants reported that the AV and Realistic hands both looked more realistic than the Robot and Wood hands (Q7). Participants more strongly sensed that their hand was located on the screen (Q2) when using the AV hand than when using the Robot and Wood hands, and when using the Realistic hand than when using the Wood hand. Participants more strongly reported that the AV, Realistic and Robot hands (compared to the Wood hand) were controlled by their body, as if they were part of it (Q13). TheraMem completion times for each non-mirrored hand visualisation were as follows: AV (M: 59.97 s SD: 20.96 s), RE (M: 63.46 s SD: 16.75 s), RB (M: 63.92 s SD: 28.01 s), W (M: 72.11 s SD: 25.63 s); the only significant difference was between AV and W (\(p = 0.03\)). We did not detect any learning effects regarding completion time between rounds played.

5.5.2 Mirrored results

Wilcoxon rank sum tests were run to compare the mirrored hand visualisations (24 participants experienced each hand visualisation). Shapiro–Wilk normality tests again showed that many (53/64) distributions were not normal. We found similar significant (\(p < 0.05\)) relationships between mirrored hand visualisations as for non-mirrored ones; however, significant differences were found for three more questions than in the non-mirrored condition, i.e. for 10 questions in total. Again, differences between the AV and Wood hands were detected for agency (Q1) and ownership (Q3, Q4 and Q13). Similarly, the AV hand was again rated as resembling participants’ own hands more than all three other hand visualisations (Q3). This time, however, participants also reported a difference between the AV and Realistic hands on Q16, where they felt the AV hand was able to go through virtual obstacles compared to the Realistic hand. Participants rated their interaction with the environment as more realistic (Q12) with the Realistic hand than with the Wood hand. Regarding immersion (Q8), participants reported that the AV and Realistic hands made them feel more immersed than the Wood hand. Participants also reported that the mirrored AV and Realistic hands were more realistic (Q7) than the Robot and Wood hands. Participants reported more strongly that their real hand was becoming virtual (Q5) when using the AV hand than when using the Robot and Wood hands, and when using the Realistic hand than when using the Wood hand. When using the AV, Realistic and Robot hands, participants sensed more strongly than with the Wood hand that their hand was located on the screen (Q2). TheraMem completion times for each mirrored hand visualisation were as follows: AV (M: 69.70 s SD: 21.87 s), RE (M: 77.52 s SD: 28.56 s), RB (M: 80.77 s SD: 27.17 s), W (M: 77.72 s SD: 24.35 s) (not significant).
We did not detect any learning effects regarding completion time between rounds played.

5.6 Discussion

The results indicate that overall embodiment was achieved for two hand visualisations: Augmented Virtuality and Realistic. We base this on the responses to the ownership and agency questions for the AV and Realistic hand visualisations (Table 1): for all questions corresponding to agency (Q1, Q9, Q10, Q11, Q12, Q15, Q16) and ownership (Q3, Q4, Q7, Q13, Q14), both hand visualisations received ratings above the midpoint, in both mirroring conditions (mirrored and non-mirrored). There was a strong sense of agency for all four hand visualisations in both mirrored and non-mirrored use. Apart from the non-mirrored AV versus Wood comparison, there were no significant differences in TheraMem completion time. H2 was therefore not supported by the results of the study: there were no significant differences in agency, and task completion time was, if anything, lower on average for the more realistic hands, though mostly not significantly so. This contradicts the findings of Argelaguet et al. (2016), who found that the sense of agency was stronger for less realistic hand visualisations. We argue that this is due to the nature of Argelaguet et al.’s study: they presented a threatening environment, which is hardly applicable to our more general scenario.

Participants’ ownership responses were generally inconclusive across the four hand visualisations, with the exception of the AV and Wood hand visualisations. Perceived realism, however, is where the distinction between these visualisations lies. Q7 (virtual hand looked realistic) showed significant differences between each of the AV and Realistic hands and each of the Robot and Wood hands. Q3 (virtual hand began to resemble my own hand) showed significant differences between the AV hand and all others. However, the Robot hand was only found to be significantly different from the Wood hand on Q13, and the Realistic and Robot hands were only found to be significantly different on Q7. Therefore, H3 was not supported by the results of the experiment, because differences between hand visualisations with higher perceived realism could not be detected across many of the ownership questions (only for Q7).

Q3 shows that, for both mirrored and non-mirrored hand visualisations, participants agreed significantly more strongly that the AV hand began to resemble their own hand. The Hand Visualisation Realism Questionnaire also indicated that the AV hand visualisation had significantly higher realism than the other hand visualisations. This partially supports H1 that the AV hand visualisation would have higher perceived realism. However, H4, which concerns ownership in general, was not supported by the results of the experiment, because the AV hand was not rated significantly higher for many of the ownership questions.

We observed an interesting trend in TheraMem completion time: participants completed the game faster on average with the AV hand than with the other hand visualisations, for both non-mirrored (M: 59.97 s SD: 20.96 s) and mirrored use (M: 69.70 s SD: 21.87 s). This difference was mostly not significant (the only significant difference being between the non-mirrored AV and Wood hands); however, we hypothesise that participants felt more confident interacting in the virtual environment using a hand they felt resembled their own (Q3).

We also examined whether the mirrored and non-mirrored results differed for the AV hand visualisation. We ran Wilcoxon rank sum tests comparing each Embodiment Questionnaire question between the mirrored and non-mirrored AV hand visualisations and found significant differences (\(p < 0.05\)) for Q1, Q9, Q10, Q11 and Q13. Regarding agency, the non-mirrored AV hand visualisation was rated significantly higher than the mirrored AV hand, indicating that it was harder to execute intended movements when mirrored (Q1, Q9, Q10, Q11). Regarding ownership, one of the five ownership questions showed a significant difference (Q13, \(p = 0.03\)). Nevertheless, participant responses indicated that ownership and agency were still achieved with the mirrored AV hand, and the mirrored AV hand was still rated as significantly more similar to the participant’s hand than the other three hand visualisations (Q3). Given ratings above the midpoint for the agency and ownership questions for the mirrored AV and Realistic hands, we believe participants were able to achieve an overall sense of embodiment with those mirrored hand visualisations.

The indication that participants achieved an overall sense of embodiment with their mirrored hand challenges the commonly held belief that a sense of self-location is a necessary component of embodiment. With a mirrored hand visualisation, the virtual hand is not co-located with the moving real hand, so there is no sense of self-location towards the real hand; nevertheless, participants achieved embodiment similar to (albeit weaker than) that for their non-mirrored hand, which was co-located with their real hand. We hypothesise that the sense of self-location contributed only minimally to embodiment in general (at least in our scenario) and that ownership and agency were the main contributing factors.

We conclude that the AV hand is useful for certain application scenarios, but not all. In our rehabilitation context, it is vital for the mirror illusion that participants perceive the AV hand as resembling their own hand significantly more than the alternatives. For other manual interactions where high realism is not required, a non-personalised hand visualisation could suffice.

6 Hand size effect experiment

Our first study looked at the effect of “personally” texturing a default-sized virtual hand model on embodiment. However, hand sizes differ between people, so we wanted to investigate whether “personally” sizing the virtual hand model would also affect embodiment. In this second experiment, we only used the “Augmented Virtuality” hand model (Fig. 7), as we were only evaluating whether personally resizing the virtual hand (in addition to personally texturing it) would change our previous embodiment results. Ethical approval was obtained from our university’s human ethics committee, and written consent was collected from each participant before the start of the experiment.

In our second experiment (hand size effect experiment), we measured participants’ hand width and length and scaled a “reference” virtual hand accordingly to give each participant a personally sized virtual hand to interact with. This example image shows two different virtual hand sizes as experienced in our mixed reality system.

6.1 Measured hand resizing

To achieve a “personally” sized virtual hand, we first needed to know the measurement correspondence between the Leap Motion virtual hand and a real-world measured hand. Our virtual environment was implemented with real-world correspondence, such that 1 unit in Unity corresponded to 1 m. We implemented a virtual ruler with 1 cm (0.01 Unity unit) intervals. We defined a “reference” hand against which participants’ hand measurements would be scaled. The reference hand was 7.75 cm wide (measured straight across the top of the palm, under the fingers) and 17.5 cm long (measured straight from the centre of the bottom of the wrist to the top of the middle finger). The “Augmented Virtuality” hand model was then scaled along the X (width) and Z (length) axes until its width and length, measured with the virtual ruler, matched the reference hand measurements. The corresponding Unity transform values for the reference hand were 1.0 (x, hand width) and 0.75 (z, hand length). Participants’ hands were measured with a ruler, and these measurements were used to scale their personally sized virtual hand model relative to the reference hand measurements.
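The text does not give the exact scaling rule, but a proportional mapping from measured size to transform scale is the natural reading. The following Python sketch assumes the rendered width and length grow linearly with the Unity x and z scale values; `personal_scale` and `cm_to_unity` are hypothetical helpers, while the reference values come from the paragraph above.

```python
# Reference hand (from the text): 7.75 cm wide, 17.5 cm long,
# rendered with Unity transform scale x = 1.0 (width) and z = 0.75 (length).
REF_WIDTH_CM = 7.75
REF_LENGTH_CM = 17.5
REF_SCALE_X = 1.0
REF_SCALE_Z = 0.75
CM_PER_UNITY_UNIT = 100.0  # 1 Unity unit = 1 m


def personal_scale(width_cm: float, length_cm: float) -> tuple:
    """Unity (x, z) scale for a measured hand, assuming size grows linearly with scale."""
    x = REF_SCALE_X * width_cm / REF_WIDTH_CM
    z = REF_SCALE_Z * length_cm / REF_LENGTH_CM
    return x, z


def cm_to_unity(cm: float) -> float:
    """Convert a real-world length in centimetres to Unity units (metres)."""
    return cm / CM_PER_UNITY_UNIT
```

For the average left hand reported in Sect. 6.7 (8.03 cm wide, 17.81 cm long), `personal_scale(8.03, 17.81)` gives roughly (1.036, 0.763), i.e. about 3.6% wider and 1.8% longer than the reference hand.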

6.2 Perceived virtual hand size

We also wanted to investigate how participants perceived their own hand size in a virtual environment by letting them resize the virtual hand model to what they perceived to be their real hand size. For this, participants used an Oculus Remote as the input device to scale the default-sized hand while wearing the HMD. The four D-pad buttons of the Oculus Remote scaled the default-sized virtual hand model as follows: left (decrease hand width), right (increase hand width), up (increase hand length) and down (decrease hand length).
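The D-pad mapping can be sketched as a small state update. In this Python sketch, the step size per button press and the lower bound on the scale are hypothetical (the text does not state them), and the conversion back to centimetres assumes the same linear relationship to the reference hand of Sect. 6.1; `HandScale` and `DPAD_ACTIONS` are illustrative names.

```python
from dataclasses import dataclass

STEP = 0.01  # scale change per button press (hypothetical; not stated in the text)

# D-pad mapping described in the text: button -> (axis, direction).
DPAD_ACTIONS = {
    "left": ("x", -1),   # decrease hand width
    "right": ("x", +1),  # increase hand width
    "up": ("z", +1),     # increase hand length
    "down": ("z", -1),   # decrease hand length
}


@dataclass
class HandScale:
    x: float = 1.0   # width scale of the default-sized hand
    z: float = 0.75  # length scale of the default-sized hand

    def press(self, button: str) -> None:
        """Apply one D-pad press; rounding avoids accumulating float drift."""
        axis, direction = DPAD_ACTIONS[button]
        value = round(getattr(self, axis) + direction * STEP, 4)
        setattr(self, axis, max(value, STEP))  # never collapse to zero (assumed safeguard)

    def width_cm(self) -> float:
        return self.x / 1.0 * 7.75   # reference: x = 1.0 corresponds to 7.75 cm

    def length_cm(self) -> float:
        return self.z / 0.75 * 17.5  # reference: z = 0.75 corresponds to 17.5 cm
```

Recording `width_cm()` and `length_cm()` once a participant confirms their choice would yield perceived sizes in the same units as the ruler measurements, which is what the comparison in Sect. 6.7 requires.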

6.3 Participants

We recruited 16 participants (9m, 7f) from the local university population through an email invitation to participate in a virtual reality study. The age of our participants ranged from 18 to 36 years old (\({M}=23.38\); \(\textit{SD}=4.76\)). All 16 participants indicated they were right-handed.

6.4 Study design and hypotheses

We used a within-subject experimental design with eight groups, to which participants were randomly assigned. The eight groups were used to balance the starting hand size and mirroring conditions (Latin square-like design). The design has two independent variables: virtual hand size and mirroring of the participant’s hands. Virtual hand size has two levels, default sized and measured resize, and mirroring has two levels, mirrored and non-mirrored. We evaluated two hypotheses:

- H5: Participants will experience more ownership and agency with the measured resize hand size than with the default hand size.
- H6: Participants will overestimate their virtual hand size. We base this hypothesis on related work in VR showing that people tend to overestimate their body size (Piryankova et al. 2014).
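The text does not spell out how the eight groups of the study design were formed. One reading consistent with the procedure in Sect. 6.6, where each hand size is used in both mirroring conditions before switching to the other size, is that the groups are exactly the 2 × 2 × 2 = 8 possible orders of this kind. The Python sketch below enumerates them under that assumption; `blocked_orders` is a hypothetical helper.

```python
from itertools import product

SIZES = ("Default Sized", "Measured Resize")
MIRRORING = ("Non-Mirrored", "Mirrored")


def blocked_orders():
    """All orders in which both mirroring conditions of one hand size precede the other size."""
    orders = []
    for first, m1, m2 in product((0, 1), repeat=3):
        sizes = (SIZES[first], SIZES[1 - first])
        block1 = [(sizes[0], MIRRORING[m1]), (sizes[0], MIRRORING[1 - m1])]
        block2 = [(sizes[1], MIRRORING[m2]), (sizes[1], MIRRORING[1 - m2])]
        orders.append(block1 + block2)
    return orders
```

Under this assumption, the 16 participants would fill each order twice, so every condition appears equally often at each position in the session.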

6.5 Instruments

The same Embodiment Questionnaire from the Realism Experiment (Sect. 5) was used for this experiment. Again, the Embodiment Questionnaire consisted of 16 questions adapted from the questionnaires used by Lin and Jörg (2016) (Q1–Q8) and Argelaguet et al. (2016) (Q9–Q16). It used a 7-point Likert scale ranging from 1 (Strongly Disagree) to 7 (Strongly Agree) and evaluated different aspects of embodiment. The questions correspond to the Kilteni et al. (2012) definition of embodiment: agency (Q1, Q9, Q10, Q11, Q12, Q15, Q16), self-location (Q2, Q5, Q6) and ownership (Q3, Q4, Q7, Q13, Q14). We also recorded participants’ performance in the TheraMem game environment (Completion Time and Number of Attempts). At the end of the experiment, we also collected perceived virtual hand size measurements, i.e. the size at which participants perceived their real hand to be in VR.

6.6 Procedure

Participants were randomly assigned to a group before arrival to balance starting conditions (Latin square-like design). Participants were scheduled for two sessions. In the first session, 20 hand photographs were taken (10 of each hand) and both hands were measured; participants were allocated a 15-minute slot for this. Pilot testing had shown that taking 10 photographs of each hand made it very likely that the texturing procedure would succeed. Participants were then sent away while the experimenter carried out the “Personalised Virtual Hands” process.

When participants returned for their second session, they followed the same procedure as in the previous experiment (Sect. 5.4), the only difference being the four new conditions: Default Sized (Non-Mirrored), Default Sized (Mirrored), Measured Resize (Non-Mirrored) and Measured Resize (Mirrored). Participants filled out a demographic questionnaire. The system set-up was explained to them, and they received a demonstration of how the interaction with the tiles and their hand visualisation works. Participants were instructed to operate the system with their hand flat on the table. Each participant was randomly assigned one of their two hand visualisations to begin a “warm-up” round in the TheraMem environment. The warm-up used the same tile layout and interaction as the regular TheraMem game; however, the goal was to activate a single black tile, after which the black tile moved to another position and the process repeated. The purpose of this warm-up mode was for participants to become comfortable with the virtual environment and learn how the interaction works without being exposed to the actual TheraMem game. Participants were asked to activate the black tile at least 10 times and to continue until they felt comfortable enough with that hand visualisation/mirroring condition to proceed. This warm-up was repeated for both hand visualisations and both mirroring conditions (four warm-up rounds in total).

They then used their first hand visualisation/mirroring condition to interact with the TheraMem game. After completing the round, they were asked to complete the Embodiment Questionnaire. Their previous answers remained visible so that they could give a relative (differential) judgement. The participant then used the same hand visualisation with the other mirroring condition and answered the same questionnaire. This was repeated for the remaining hand visualisation/mirroring conditions. There was no break between hand visualisations or after completing a questionnaire.

After experiencing all four conditions, participants were asked to resize the default-sized virtual hand to what they perceived to be their actual hand size. Before putting on the HMD, they were asked to look at both of their hands. They started with their left hand and, once they indicated to the experimenter that it matched their actual hand size, repeated the process for their right virtual hand (which also started at the default size). This concluded the second session, and participants were thanked for their time and compensated with a grocery store voucher. Their hand photographs and textures were deleted after the second session. The duration of each session was not recorded; however, each participant was allocated a 45-minute slot and all participants finished within that time.

6.7 Results

Table 2 presents the Embodiment Questionnaire results for the four conditions. For the non-mirrored hand size conditions, Shapiro–Wilk normality tests showed that many (25/32) of the distributions were not normal. As all participants experienced the same four conditions (default/resized hand with both mirroring conditions), paired Wilcoxon signed rank tests were used to detect significant differences between conditions. Only one significant effect was found between the two non-mirrored hand sizes (Q6): participants agreed more strongly that they felt like they might have more than one dominant hand with the measured resize hand than with the default-sized hand (\(p = 0.0109\)). TheraMem completion times for the non-mirrored hand sizes were as follows: Default Sized (M: 61.62 s SD: 13.55 s) and Measured Resize (M: 60.20 s SD: 16.00 s).

For the mirrored hand size conditions, Shapiro–Wilk normality tests showed an even split (16/32) between normal and non-normal distributions. No significant effects were found between the two mirrored hand sizes. TheraMem completion times for the mirrored hand sizes were as follows: Default Sized (M: 70.88 s SD: 19.16 s) and Measured Resize (M: 82.03 s SD: 31.81 s) (not significant).

The perceived hand size evaluation revealed a significant misjudgement of participants’ virtual hand length relative to their real hand length. Participants’ average measured left hand length was M: 17.81 cm (SD: 1.19 cm), whereas their average perceived left virtual hand length was M: 22.32 cm (SD: 2.42 cm). The same discrepancy occurred for the right hand: average measured right hand length M: 17.88 cm (SD: 1.08 cm) versus average perceived right virtual hand length M: 22.05 cm (SD: 2.85 cm). Paired t tests showed a highly significant difference (\(p<0.0001\)) between measured and perceived hand length for both hands.

This misjudgement did not occur for hand width. Participants’ average measured left hand width was M: 8.03 cm (SD: 0.77 cm) and their average perceived left virtual hand width was M: 7.84 cm (SD: 0.82 cm). Participants achieved similar accuracy for their right hand, with an average measured right hand width of M: 8.10 cm (SD: 0.68 cm) and an average perceived right virtual hand width of M: 7.74 cm (SD: 0.93 cm). No significant differences were detected.
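To put the size of this misjudgement into proportion, the reported group means can be turned into relative errors, and a paired t statistic can be computed from per-participant differences. The following Python sketch is illustrative only: `relative_error` and `paired_t` are hypothetical helpers, the relative errors are computed from the reported group means rather than averaged per participant, and the per-participant data that `paired_t` would need are not reported here.

```python
import math
from statistics import mean, stdev

# Reported group means in cm (from the text): (measured, perceived).
REPORTED = {
    "left length": (17.81, 22.32),
    "right length": (17.88, 22.05),
    "left width": (8.03, 7.84),
    "right width": (8.10, 7.74),
}


def relative_error(measured: float, perceived: float) -> float:
    """Signed relative error of the perceived size; positive means overestimation."""
    return perceived / measured - 1


def paired_t(measured, perceived) -> float:
    """Paired t statistic on per-participant differences (perceived - measured)."""
    d = [p - m for m, p in zip(measured, perceived)]
    return mean(d) / (stdev(d) / math.sqrt(len(d)))


for name, (m, p) in REPORTED.items():
    print(f"{name}: {relative_error(m, p):+.1%}")
```

The loop prints +25.3% and +23.3% for left and right hand length and -2.4% and -4.4% for width: participants perceived their hands as roughly a quarter longer than measured, with only small, non-significant deviations in width.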

6.8 Discussion

Our results could not show that hand size had an effect on embodiment with participants’ personalised virtual hands. However, we cannot definitively state that personally resized virtual hands do not affect perceived embodiment: our statistical tests could not detect a significant difference between the two conditions (resized vs non-resized), but that does not necessarily mean there is no difference. This result is not surprising, as the difference in size between the default-sized hand model and the average resized model was very small. The default-sized hand model was 17.5 cm long and 7.75 cm wide, the average left hand was 17.81 cm long and 8.03 cm wide, and the average right hand was 17.88 cm long and 8.10 cm wide. Since the default-sized hand is so close in size to the average resized hand, the absence of detectable differences is to be expected.

Participants’ perceived virtual hand length differed significantly from their measured hand length. This overestimation of hand length corresponds with related work on body ownership in VR, which showed that people tend to overestimate their body size (Piryankova et al. 2014). Interestingly, however, this overestimation did not occur for hand width. The results therefore partially support H6: participants overestimated their hand length, but not their hand width.

The results of the study therefore do not support H5. The default-sized virtual hand was generally longer than a participant’s measured resize virtual hand. Given participants’ overestimation of their virtual hand length, the default-sized hand (being longer) could account for the generally higher ownership ratings for the default-sized virtual hand; however, this difference was not significant. For future application scenarios that require personalised, embodied hands, we could not detect any effect of resizing the virtual hands on embodiment. We conclude that the effort of resizing the virtual hands is likely not necessary for most applications, even those that require embodied hands.

7 Limitations

This work has a number of limitations. The main limitation of our system, as with any other system using the Leap Motion controller, lies in the hand tracking quality. Certain visual discrepancies in the virtual hand visualisation occur when the real hand is positioned in a way that causes tracking errors. These discrepancies depend on the position of the participant’s real hand (for example, a finger may momentarily be shown in the wrong position or orientation). We addressed this concern as far as possible by using the latest Leap Motion SDK (Orion, which included hand tracking improvements), by placing infrared-absorbent cloth on the desk to limit interference and by having participants keep their hand close to the table in a stable tracking position (fingers close together). Visual discrepancies did sometimes occur; however, participants were able to perform the memory game task adequately and reported high agency/ownership for the different hand visualisations.

The Realistic hand visualisation used the same default texture for all participants, regardless of their own skin colour. This was done to match the two closely related works (Lin and Jörg 2016; Argelaguet et al. 2016), which also did not take participants’ skin colour into account in the virtual hand textures. However, it is natural to suspect that this might affect ownership (although the Realistic hand visualisation was consistently rated above the midpoint, indicating a high sense of ownership).

The TheraMem memory game is a cognitively demanding task, and participants' memory capabilities were not pre-tested. However, the memory game can be adapted to different cognitive abilities, since tiles can be added or removed to increase or decrease difficulty. In addition, every participant (48 in the first study and 16 in the second) was able to complete all assigned rounds. The game is also relatively competitive in nature, which could influence how participants perceived the hand visualisations.
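
The difficulty adjustment described above (adding or removing tiles) can be illustrated with a minimal board generator. This is a hypothetical sketch, not TheraMem's implementation. The functions `make_board` and `is_match` are illustrative names. The sketch assumes that difficulty scales only with the number of tile pairs.

```python
import random
from typing import List, Optional


def make_board(num_pairs: int, seed: Optional[int] = None) -> List[int]:
    """Return a shuffled board in which each tile ID 0..num_pairs-1 appears exactly twice."""
    if num_pairs < 1:
        raise ValueError("a board needs at least one pair")
    tiles = [pair_id for pair_id in range(num_pairs) for _ in range(2)]
    # A local RNG means the same seed reproduces the same layout across rounds or participants.
    random.Random(seed).shuffle(tiles)
    return tiles


def is_match(board: List[int], first: int, second: int) -> bool:
    """Two different tile positions match when they hold the same tile ID."""
    return first != second and board[first] == board[second]


# Fewer pairs make an easier round; more pairs make a harder one.
easy = make_board(4, seed=1)  # 8 tiles
hard = make_board(8, seed=1)  # 16 tiles
print(len(easy), len(hard))
```

Keeping the pair count as the only difficulty parameter makes rounds easy to compare across participants. A real therapy game would likely also vary factors such as tile similarity or time limits.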

8 Conclusion

We have developed an affordable system to provide virtual neurorehabilitation therapy and to experiment with the concept of embodiment. As part of this system, we developed a procedure to personally texture a virtual hand using two photographs taken of each of the user's hands. Through a 48-participant user study, we evaluated this hand visualisation, together with other relevant hand visualisations from related studies. The evaluation took place in a relevant, serious-game-style mirror therapy scenario, in contrast to the more hypothetical scenarios used in other works. We assessed the different hand visualisations regarding embodiment (agency, ownership and self-location). Healthy participants indicated a higher overall sense of embodiment in both the mirrored and non-mirrored instances of the individually textured hand, as reflected in ratings above the midpoint on the agency and ownership questions. We have shown that healthy users can achieve a high sense of embodiment with our personalised virtual hands. In future work, we can therefore ethically approach clinicians about potentially using this system with their patients. We also ran a second user study to investigate whether resizing the personalised virtual hand to the participant's own hand size affects embodiment, and could not find any significant differences.
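
The mirrored condition can be sketched as a reflection of the tracked hand across the body's midline. The sketch assumes joint positions in a coordinate frame whose x axis runs from left to right, with a vertical mirror plane at x = `plane_x` (for example, the centre of the desk). `HandFrame` and `mirror_hand` are hypothetical names, not Leap Motion SDK types. A reflection turns a left hand into a right hand, so the mirrored data must drive the opposite-hand model.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

Point = Tuple[float, float, float]


@dataclass(frozen=True)
class HandFrame:
    side: str                 # "left" or "right"
    joints: Dict[str, Point]  # joint name -> (x, y, z) position


def mirror_hand(frame: HandFrame, plane_x: float = 0.0) -> HandFrame:
    """Reflect a tracked hand across the vertical plane x = plane_x and swap its side."""
    if frame.side not in ("left", "right"):
        raise ValueError("side must be 'left' or 'right'")
    mirrored_side = "right" if frame.side == "left" else "left"
    # Reflection across x = plane_x maps x to 2 * plane_x - x; y and z are unchanged.
    joints = {name: (2.0 * plane_x - x, y, z) for name, (x, y, z) in frame.joints.items()}
    return HandFrame(mirrored_side, joints)


# Hypothetical example: the left index fingertip 10 cm left of the midline.
left = HandFrame("left", {"index_tip": (-0.1, 0.05, 0.2)})
right = mirror_hand(left)
print(right.side, right.joints["index_tip"])
```

This sketch reflects joint positions and then drives the opposite-hand model. An alternative is to apply a negative-scale transform to the rendered mesh, but that reverses triangle winding order and can break back-face culling, which the joint-based approach avoids.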

Participants rated the personalised virtual hand significantly higher than the other hand visualisations for resemblance to their own hand. Participants were also able to achieve a high sense of agency with this high-realism hand visualisation, which differs from some findings in the related literature. However, participants also reported a high sense of ownership and agency (ratings above the midpoint) with a non-individually textured hand. This raises the question of when an individually textured hand is actually necessary. In our mirror therapy scenario, the illusion depends on the patient believing that their impaired hand is carrying out the mirrored hand movements. This finding is therefore highly important for our scenario, and it is relevant to many other application scenarios in the field.
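
Statements such as "ratings above the midpoint" compare a condition's mean questionnaire score against the centre of the rating scale. The minimal descriptive sketch below illustrates this comparison. It assumes a 1 to 7 rating scale (midpoint 4) by default and uses made-up ratings. The function names are hypothetical. The sketch only illustrates the comparison and does not replace the significance testing reported in the studies.

```python
from statistics import mean
from typing import Dict, List


def scale_midpoint(low: int, high: int) -> float:
    """Centre of a rating scale, e.g. 4.0 for a 1 to 7 scale."""
    return (low + high) / 2


def above_midpoint(ratings: Dict[str, List[int]], low: int = 1, high: int = 7) -> Dict[str, bool]:
    """For each condition, report whether its mean rating lies strictly above the scale midpoint."""
    mid = scale_midpoint(low, high)
    result = {}
    for condition, values in ratings.items():
        if not values:
            raise ValueError(f"no ratings for condition {condition!r}")
        if any(v < low or v > high for v in values):
            raise ValueError(f"rating outside {low}..{high} for condition {condition!r}")
        result[condition] = mean(values) > mid
    return result


# Made-up ratings for two hypothetical conditions on an assumed 1 to 7 scale.
example = {"condition_a": [5, 6, 4, 5], "condition_b": [3, 4, 2, 4]}
print(above_midpoint(example))
```

For a scale centred on zero (for example, -3 to +3), pass `low=-3, high=3`, and the midpoint becomes 0.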

Participants were able to achieve an overall sense of embodiment with mirrored hand visualisations. This finding challenges the commonly held belief that a sense of self-location is a necessary component of embodiment in a virtual hand visualisation, along with agency and ownership. Our comparison of mirrored and non-mirrored hand visualisations shows that self-location contributes little, if at all, to overall embodiment: embodiment was achieved with mirrored hand visualisations as long as agency and ownership were present. We hope this opens the discussion for future research on the (lack of) importance of self-location for embodiment.

References

Al-Tairi ZH, Rahmat RW, Saripan MI, Sulaiman PS (2014) Skin segmentation using YUV and RGB color spaces. J Inf Process Syst 10(2):283–299. https://doi.org/10.3745/JIPS.02.0002

Argelaguet F, Hoyet L, Trico M, Lécuyer A (2016) The role of interaction in virtual embodiment: effects of the virtual hand representation. In: Virtual reality (VR), 2016 IEEE. IEEE, pp 3–10

Assis GAd, Corrêa AGD, Martins MBR, Pedrozo WG, Lopes RdD (2016) An augmented reality system for upper-limb post-stroke motor rehabilitation: a feasibility study. Disabil Rehabil Assist Technol 11(6):521–528

Banakou D, Groten R, Slater M (2013) Illusory ownership of a virtual child body causes overestimation of object sizes and implicit attitude changes. Proc Nat Acad Sci 110(31):12846–12851

Birckhead B, Khalil C, Liu X, Conovitz S, Rizzo A, Danovitch I, Bullock K, Spiegel B (2019) Recommendations for methodology of virtual reality clinical trials in health care by an international working group: iterative study. JMIR Mental Health 6(1):e11973

Botvinick M, Cohen J (1998) Rubber hands ‘feel’ touch that eyes see. Nature 391(6669):756

Doidge N (2008) The brain that changes itself: stories of personal triumph from the frontiers of brain science. Scribe Publications, Carlton North, Victoria

Freina L, Ott M (2015) A literature review on immersive virtual reality in education: state of the art and perspectives. In: The international scientific conference eLearning and software for education, vol 1, pp 10–1007

Giraux P, Sirigu A (2003) Illusory movements of the paralyzed limb restore motor cortex activity. Neuroimage 20:S107–S111

Gonzalez-Franco M, Perez-Marcos D, Spanlang B, Slater M (2010) The contribution of real-time mirror reflections of motor actions on virtual body ownership in an immersive virtual environment. In: Virtual reality conference (VR), 2010 IEEE. IEEE, pp 111–114

Hoermann S, Ferreira dos Santos L, Morkisch N, Jettkowski K, Sillis M, Devan H, Kanagasabai PS, Schmidt H, Krüger J, Dohle C et al (2017) Computerised mirror therapy with augmented reflection technology for early stroke rehabilitation: clinical feasibility and integration as an adjunct therapy. Disabil Rehabil 39(15):1503–1514

Holden MK (2005) Virtual environments for motor rehabilitation. Cyberpsychol Behav 8(3):187–211

Holmes D, Charles D, Morrow P, McClean S, McDonough S (2016) Usability and performance of Leap Motion and Oculus Rift for upper arm virtual reality stroke rehabilitation. In: Proceedings of the 11th international conference on disability, virtual reality & associated technologies, Central Archive at the University of Reading

Hung YX, Huang PC, Chen KT, Chu WC (2016) What do stroke patients look for in game-based rehabilitation: a survey study. Medicine 95(11):e3032

IJsselsteijn W, de Kort Y, Haans A (2005) Is this my hand I see before me? The rubber hand illusion in reality, virtual reality, and mixed reality, pp 41–47

Iosa M, Morone G, Fusco A, Castagnoli M, Fusco FR, Pratesi L, Paolucci S (2015) Leap Motion controlled videogame-based therapy for rehabilitation of elderly patients with subacute stroke: a feasibility pilot study. Top Stroke Rehabil 22(4):306–316

Jung S, Bruder G, Wisniewski PJ, Sandor C, Hughes CE (2018) Over my hand: using a personalized hand in VR to improve object size estimation, body ownership, and presence. In: Proceedings of the symposium on spatial user interaction. ACM, pp 60–68

Khademi M, Mousavi Hondori H, McKenzie A, Dodakian L, Lopes CV, Cramer SC (2014) Free-hand interaction with Leap Motion controller for stroke rehabilitation. In: Proceedings of the extended abstracts of the 32nd annual ACM conference on human factors in computing systems. ACM, pp 1663–1668

Khan H, Lee G, Hoermann S, Clifford R, Billinghurst M, Lindeman R (2017) Evaluating the effects of hand-gesture-based interaction with virtual content in a 360 movie. In: ICAT-EGVE

Kilteni K, Groten R, Slater M (2012) The sense of embodiment in virtual reality. Presence Teleoper Virtual Environ 21(4):373–387

Lin L, Jörg S (2016) Need a hand? How appearance affects the virtual hand illusion. In: Proceedings of the ACM symposium on applied perception. ACM, pp 69–76

Liu Z, Zhang Y, Rau PLP, Choe P, Gulrez T (2015) Leap-Motion based online interactive system for hand rehabilitation. In: International conference on cross-cultural design. Springer, pp 338–347

Ma K, Hommel B (2013) The virtual-hand illusion: effects of impact and threat on perceived ownership and affective resonance. Front Psychol 4:604

Ma K, Hommel B (2015) The role of agency for perceived ownership in the virtual hand illusion. Conscious Cogn 36:277–288

MacNeil LB (2017) Virtual mirror therapy system for stroke and acquired brain injury patients with hemiplegia. PhD thesis

Mueller F, Davis M, Bernard F, Sotnychenko O, Verschoor M, Otaduy MA, Casas D, Theobalt C (2019) Real-time pose and shape reconstruction of two interacting hands with a single depth camera. ACM Trans Graph 38(4):1–13

Nimcharoen C, Zollmann S, Collins J, Regenbrecht H (2018) Is that me? Embodiment and body perception with an augmented reality mirror. In: 2018 IEEE international symposium on mixed and augmented reality adjunct (ISMAR-Adjunct). IEEE, pp 158–163

Piryankova IV, Wong HY, Linkenauger SA, Stinson C, Longo MR, Bülthoff HH, Mohler BJ (2014) Owning an overweight or underweight body: distinguishing the physical, experienced and virtual body. PLoS ONE 9(8):e103428

Powers MB, Emmelkamp PM (2008) Virtual reality exposure therapy for anxiety disorders: a meta-analysis. J Anxiety Disord 22(3):561–569

Preston C, Kuper-Smith BJ, Ehrsson HH (2015) Owning the body in the mirror: the effect of visual perspective and mirror view on the full-body illusion. Sci Rep 5(1):1–10

Proença JP, Quaresma C, Vieira P (2018) Serious games for upper limb rehabilitation: a systematic review. Disabil Rehabil Assist Technol 13(1):95–100

Regenbrecht H, McGregor G, Ott C, Hoermann S, Schubert T, Hale L, Hoermann J, Dixon B, Franz E (2011) Out of reach? A novel AR interface approach for motor rehabilitation. In: 2011 10th IEEE international symposium on mixed and augmented reality (ISMAR). IEEE, pp 219–228

Romero J, Tzionas D, Black MJ (2017) Embodied hands: modeling and capturing hands and bodies together. ACM Trans Graph 36(6):245

Rother C, Kolmogorov V, Blake A (2004) “GrabCut”: interactive foreground extraction using iterated graph cuts. ACM Trans Graph 23(3):309–314. https://doi.org/10.1145/1015706.1015720

Rubio B, Nirme J, Duarte E, Cuxart A, Rodriguez S, Duff A, Verschure P (2013) Virtual reality based tool for motor function assessment in stroke survivors. In: Converging clinical and engineering research on neurorehabilitation. Springer, pp 1037–1041

Schwind V, Knierim P, Tasci C, Franczak P, Haas N, Henze N (2017) These are not my hands!: effect of gender on the perception of avatar hands in virtual reality. In: Proceedings of the 2017 CHI conference on human factors in computing systems. ACM, pp 1577–1582

Sharp T, Keskin C, Robertson D, Taylor J, Shotton J, Kim D, Rhemann C, Leichter I, Vinnikov A, Wei Y et al (2015) Accurate, robust, and flexible real-time hand tracking. In: Proceedings of the 33rd annual ACM conference on human factors in computing systems, pp 3633–3642

Slater M, Perez-Marcos D, Ehrsson HH, Sanchez-Vives MV (2009) Inducing illusory ownership of a virtual body. Front Neurosci 3(2):214

Suzuki S, Abe K (1985) Topological structural analysis of digitized binary images by border following. Comput Vis Graph Image Process 30(1):32–46. https://doi.org/10.1016/0734-189X(85)90016-7

Trojan J, Diers M, Fuchs X, Bach F, Bekrater-Bodmann R, Foell J, Kamping S, Rance M, Maaß H, Flor H (2014) An augmented reality home-training system based on the mirror training and imagery approach. Behav Res Methods 46(3):634–640

Tung JY, Lulic T, Gonzalez DA, Tran J, Dickerson CR, Roy EA (2015) Evaluation of a portable markerless finger position capture device: accuracy of the leap motion controller in healthy adults. Physiol Meas 36(5):1025

Weber LM, Nilsen DM, Gillen G, Yoon J, Stein J (2019) Immersive virtual reality mirror therapy for upper limb recovery after stroke: a pilot study. Am J Phys Med Rehabil 98(9):783–788

Yuan Y, Steed A (2010) Is the rubber hand illusion induced by immersive virtual reality? In: 2010 IEEE virtual reality conference (VR). IEEE, pp 95–102

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Supplementary material 1 (mp4 75602 KB)

About this article

Cite this article

Heinrich, C., Cook, M., Langlotz, T. et al. My hands? Importance of personalised virtual hands in a neurorehabilitation scenario. Virtual Reality 25, 313–330 (2021). https://doi.org/10.1007/s10055-020-00456-4

DOI: https://doi.org/10.1007/s10055-020-00456-4