Abstract

Nowadays, autonomous underwater vehicles (AUVs) play an important role in human society in applications such as the inspection of underwater structures (dams, bridges). There is a demand for AUVs that can work at sea for long periods, for example to retrieve methane hydrate or rare metals. Such AUVs need to be recharged automatically under the sea, which requires them to dock at a recharging station autonomously. We have therefore developed a stereo-vision-based docking methodology for underwater battery recharging that enables an AUV to continue operating without returning to a surface vehicle for recharging. Since underwater battery recharging units are expected to be installed in the deep sea, deep-sea docking cannot avoid turbidity and low-light conditions. In this study, the proposed system uses a newly designed active (self-lighting) 3D marker to improve the visibility of the marker from an underwater vehicle, especially in turbid water. Experiments to verify the robustness of the proposed docking approach were conducted in a simulated pool in which the lighting conditions changed from day to night. Furthermore, a sea docking experiment was executed to verify the practicality of the active marker. The experimental results confirmed the effectiveness of the proposed docking system against turbidity and illumination variation.

1 Introduction

Japan has a huge sea area from which resources can be extracted using advanced technologies. Autonomous underwater vehicles (AUVs) play an important role in deep-sea work such as oil pipeline inspection, seafloor surveys, and the search for rare metals [1,2,3,4]. The Japanese government is now seriously considering methane hydrate as a future energy source. For such novel tasks, which require long operating times in the deep sea, one of the main limitations of AUVs is their limited power capacity. Underwater battery recharging with a docking function is one solution for extending the operating time of AUVs. Several approaches using different sensors have been developed worldwide for underwater docking [5, 6]. Typically, long-range navigation relies on acoustic sensors, and camera vision is used for the final step of the docking process. Vision-based navigation is one of the dominant positioning methods, especially when high accuracy is essential, and it can be integrated with other sensor units.

Most studies on vision-based navigation for underwater vehicles use a single camera [7,8,9]. For example, an optically guided system for autonomous underwater docking was proposed in which lights were installed at the entrance of a funnel-shaped docking hole and the relative pose was calculated from the geometry of the lights [10]. In this kind of approach, calculating the pose, especially the orientation, is more complicated and difficult than detecting the position; even though attitude-keeping control was used for the final docking step, the orientation obtained in [10] was not highly accurate. In contrast, a dual-eye camera pose detection method can exploit the parallax between the two cameras, which cannot be utilized in a single-camera approach. Distinct from these studies, we have developed a stereo-vision-based docking approach for AUVs [11,12,13,14]. In our approach, the relative pose between the underwater vehicle and a known 3D marker is estimated using Real-time Multi-step GA (RM-GA), a real-time 3D pose estimation method. To avoid the disadvantages of feature-based recognition methods that rely on 2D-to-3D reconstruction, our approach uses 3D-model-based matching based on 3D-to-2D projection. One of the main drawbacks of 2D-to-3D reconstruction is incorrect matching of corresponding points between the images.

Because the underwater environment is complex, vision-based underwater vehicles face many disturbances, so it is important to make the vision system robust against them. The most common disturbances for vision-based underwater vehicles are lighting variations and turbidity. Since underwater battery recharging units are expected to be installed on the deep-sea bottom, deep-sea docking cannot avoid turbidity and low-light conditions. To the authors' knowledge, no existing study has performed docking using stereo-vision-based real-time visual servoing that tolerates turbidity and illumination variations.

In our previous studies [11,12,13,14], a passive marker was used in the experiments. Docking experiments using the passive marker were conducted in a pool [11, 12], verifying the effectiveness of the proposed system in the daytime in water with low turbidity. Robustness against occlusion of the passive marker under daytime pool conditions was confirmed in [13], and the effect of the lighting direction from the ROV on pose estimation under pool conditions was discussed in [14].

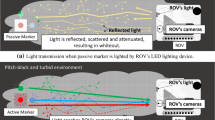

In turbid water at night, the conventional approach of illuminating the marker with the ROV's LEDs turns the input image completely white, because suspended particles in the seawater reflect the light from the ROV. Lighting from the vehicle has therefore been confirmed to be ineffective for detecting objects in turbid, dark conditions. Point-light markers without lighting from the ROV have sometimes been used for pose estimation [15], but such point lights can easily be hidden by small pieces of seaweed or other objects. We have therefore developed a solid lighting 3D marker and, based on this motivation, conducted experiments to confirm the effectiveness of the proposed lighting marker system in turbid water at night, simulating sea-bottom docking.

One of the main contributions of the present paper is the improvement of the proposed system by using a new active (lighting) 3D marker for real-time pose estimation. In addition, docking experiments were conducted to verify the turbidity tolerance of the proposed docking approach in a simulated pool in which the water was made turbid and the lighting was varied from daytime to nighttime, as shown in Fig. 1. Finally, a sea docking experiment in turbid water was conducted to confirm the performance of the 3D-MoS system (Sect. 3) driven by RM-GA under conditions in which turbidity degrades visual quality. The experimental results confirmed the effectiveness of the proposed docking system using the active 3D marker in turbid water under changing lighting conditions.

2 Remotely operated vehicle

A hovering-type underwater vehicle (manufactured by Kowa Corporation) was used as a test bed, as shown in Fig. 2. Two fixed cameras installed at the front of the vehicle are used for real-time pose tracking. Four thrusters with a maximum thrust of 4.9 N each are controlled to move the vehicle along the desired path. The vehicle can dive to 50 m, and two LED light sources are also installed on it.

3 3D moving on sensing (3D-MoS) using real-time multi-step GA

In a previous study [11], we introduced 3D-MoS, which performs three-dimensional measurement with solid object recognition based on visual servoing technology. In this system, RM-GA is used to estimate the relative pose between the vehicle and a known 3D marker in real time. Here, we briefly describe 3D pose estimation using RM-GA as background for readers.

Fig. 3 Model-based matching method using dual-eye cameras and a 3D marker. The solid object in the 3D search space (a) is the real target and the dotted one is the model of the 3D marker. The degree of matching between the projected 2D model and the real 3D marker as captured in both camera images (b, c) is calculated using the fitness function.

Figure 3 shows the model-based matching method using dual-eye cameras for 3D pose estimation. In Fig. 3, \(\Sigma _{\mathrm{{IL}}}\) and \(\Sigma _{\mathrm{{IR}}}\) are the left and right image coordinate systems, \(\Sigma _{\mathrm{{H}}}\) is the reference frame of the ROV, \(\Sigma _{\mathrm{{M}}}\) is the reference frame of the real target object, and \(\Sigma _{\mathrm{{Mi}}}\) is the reference frame of the ith model. The real solid target object in space is projected naturally onto the dual-eye camera images. The dotted 3D marker model, whose pose is given by one of the GA's genes, is projected from 3D to 2D. The multiple poses defined by the genes are evaluated by comparing each projected 2D model with the real marker captured in the dual-eye camera images. Finally, the model that overlaps most with the real 3D marker, that is, the one with the highest fitness value, is taken to represent the true pose of the target object.
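The 3D-to-2D projection step can be illustrated with a minimal sketch. It assumes ideal, parallel pinhole cameras looking along the x-axis of \(\Sigma_H\), a model pose restricted to \((x, y, z, \epsilon_3)\), and hypothetical values for the focal length, principal point, baseline, and sphere positions (`F_PX`, `CX`, `CY`, `BASELINE_MM`, `MARKER_POINTS`); none of these come from the paper, and the calibration of the real cameras is not modelled.

```python
import math

# Hypothetical camera parameters (not from the paper).
F_PX = 500.0            # focal length in pixels
CX, CY = 320.0, 240.0   # principal point in pixels
BASELINE_MM = 120.0     # distance between the two cameras

# Hypothetical sphere centres of the 3D marker in its own frame Sigma_M (mm).
MARKER_POINTS = [(0.0, -50.0, 0.0), (0.0, 50.0, 0.0), (-50.0, 0.0, 50.0)]


def marker_to_vehicle(point, pose):
    """Map a marker-frame point into Sigma_H for a model pose (x, y, z, eps3_deg).

    Only a translation plus a rotation about the z-axis is modelled.
    """
    x, y, z, eps3_deg = pose
    c = math.cos(math.radians(eps3_deg))
    s = math.sin(math.radians(eps3_deg))
    px, py, pz = point
    return (x + c * px - s * py, y + s * px + c * py, z + pz)


def project(point_h, camera_y):
    """Pinhole projection for a camera at lateral offset camera_y looking along +x.

    Assumed conventions: +y maps to +u (image right), +z maps to -v (image up).
    """
    xh, yh, zh = point_h
    u = CX + F_PX * (yh - camera_y) / xh
    v = CY - F_PX * zh / xh
    return (u, v)


def project_model(pose):
    """Project every model point into the left and right images (3D-to-2D)."""
    points_h = [marker_to_vehicle(p, pose) for p in MARKER_POINTS]
    left = [project(p, -BASELINE_MM / 2) for p in points_h]   # left camera at -b/2
    right = [project(p, +BASELINE_MM / 2) for p in points_h]  # right camera at +b/2
    return left, right


left, right = project_model((600.0, 0.0, 0.0, 0.0))
print(round(left[0][0] - right[0][0], 6))  # 100.0 (= F_PX * BASELINE_MM / 600)
```

Because the cameras are parallel in this sketch, the horizontal disparity of each projected point equals `F_PX * BASELINE_MM / depth`; this is the parallax cue that a single camera cannot provide.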

3.1 Real-time multi-step GA

The problem of recognizing the 3D marker and detecting its pose is converted into an optimization problem whose fitness distribution has multiple peaks.

The fitness function evaluates the correlation between the model and the real target; refer to [16, 17] for the derivation of the fitness function from a correlation function. When the model coincides with the real target, the fitness value reaches its maximum, so the highest peak in the fitness distribution represents the true pose of the target object. Conversely, the fitness function must be designed so that its highest peak lies at the true pose of the 3D marker. The pose estimation problem is thereby converted into an optimization problem that RM-GA can solve in real time.
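To make the idea of a correlation-like fitness concrete, the following sketch scores a candidate model by the mean marker-likeness of the pixels at its projected points in each image and averages the left and right scores. This is a hypothetical stand-in, not the fitness function derived in [16, 17]; the toy images, point lists, and function names are illustrative only. It does share the key property discussed above: the score is highest when the projected model coincides with the real marker.

```python
import math


def image_score(image, points):
    """Mean pixel value of `image` at the projected model points (0 outside)."""
    height, width = len(image), len(image[0])
    total = 0.0
    for u, v in points:
        col, row = math.floor(u + 0.5), math.floor(v + 0.5)  # nearest pixel
        if 0 <= row < height and 0 <= col < width:
            total += image[row][col]
    return total / len(points)


def fitness(left_img, right_img, left_pts, right_pts):
    """Hypothetical fitness: average of the left and right image scores."""
    return 0.5 * (image_score(left_img, left_pts) + image_score(right_img, right_pts))


def shifted(points, du):
    """Return a copy of the points moved du pixels horizontally."""
    return [(u + du, v) for u, v in points]


# Toy 8x8 images in which marker pixels are 1.0 and background pixels are 0.0.
marker_left = [(2, 3), (4, 3), (3, 5)]
marker_right = shifted(marker_left, -1)  # one-pixel stereo disparity
left_img = [[0.0] * 8 for _ in range(8)]
right_img = [[0.0] * 8 for _ in range(8)]
for u, v in marker_left:
    left_img[v][u] = 1.0
for u, v in marker_right:
    right_img[v][u] = 1.0

print(fitness(left_img, right_img, marker_left, marker_right))  # 1.0
print(fitness(left_img, right_img, shifted(marker_left, 1), shifted(marker_right, 1)))  # 0.0
```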

A genetic algorithm, in the form of RM-GA, is used to estimate the relative pose between the ROV and the 3D marker.

Figure 4a shows the flowchart of RM-GA. First, a random population of models with different poses is generated in the 3D search space. A new pair of left and right images captured by the ROV's cameras is input every 33 ms, and the GA evolves the population nine times within each 33 ms interval, that is, for every image pair. The evolved population, which lies closer to the real 3D marker projected naturally onto the camera images, is then carried over as the initial population for the next image pair. By repeating this procedure, RM-GA searches for the best solution representing the true pose of the target object in the successively input dynamic images. Figure 4b illustrates the convergence of the GA procedure from the first to the final generation.
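The per-frame loop can be sketched in Python as follows. This is a generic elitist GA, not the authors' RM-GA implementation: the population size, elite count, mutation width, search bounds, and especially `toy_fitness` (a smooth single peak standing in for the image-based fitness) are hypothetical. What it does reproduce from the text is the structure: a random initial population, nine evolutions per 33 ms image pair, and a population that is carried over, not reset, from one image to the next.

```python
import math
import random

GENERATIONS_PER_FRAME = 9  # nine evolutions per 33 ms stereo frame (from the text)
POP_SIZE = 60              # hypothetical population size
N_ELITE = 15               # hypothetical number of survivors per generation
# Hypothetical search bounds for the genes (x [mm], y [mm], z [mm], eps3 [deg]).
BOUNDS = [(300.0, 900.0), (-200.0, 200.0), (-200.0, 200.0), (-30.0, 30.0)]
# Hypothetical peak widths of the toy fitness landscape.
SCALES = [50.0, 50.0, 50.0, 5.0]


def toy_fitness(gene, true_pose):
    """Stand-in for the image-based fitness: one smooth peak at the true pose."""
    d2 = sum(((g - t) / s) ** 2 for g, t, s in zip(gene, true_pose, SCALES))
    return math.exp(-d2)


def evolve_once(population, true_pose, rng):
    """One generation: keep the fittest genes, refill by crossover and mutation."""
    ranked = sorted(population, key=lambda g: toy_fitness(g, true_pose), reverse=True)
    next_gen = ranked[:N_ELITE]
    while len(next_gen) < POP_SIZE:
        a, b = rng.sample(ranked[:N_ELITE], 2)
        child = []
        for ga, gb, (lo, hi) in zip(a, b, BOUNDS):
            g = rng.choice((ga, gb)) + rng.gauss(0.0, 0.02 * (hi - lo))
            child.append(min(max(g, lo), hi))  # keep the gene inside the search space
        next_gen.append(child)
    return next_gen


def rm_ga(frame_poses, seed=0):
    """Track the pose over a sequence of frames; return the best gene per frame."""
    rng = random.Random(seed)
    population = [[rng.uniform(lo, hi) for lo, hi in BOUNDS] for _ in range(POP_SIZE)]
    history = []
    for true_pose in frame_poses:  # one entry per input stereo image pair
        for _ in range(GENERATIONS_PER_FRAME):
            population = evolve_once(population, true_pose, rng)
        # The population is not reset: it seeds the search on the next frame.
        history.append(max(population, key=lambda g: toy_fitness(g, true_pose)))
    return history


target = (600.0, 15.0, -20.0, 0.0)
track = rm_ga([target] * 40)
print([round(v, 1) for v in track[-1]], round(toy_fitness(track[-1], target), 3))
```

Because the population is kept between frames, a marker that moves only slightly between image pairs can be tracked without restarting the search; elitism also guarantees that, for a static target, the best fitness never decreases from frame to frame.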

3.2 Controller

A proportional controller is used to control the vehicle. The four thrusters mounted on the underwater robot are driven by command voltages computed from the feedback of the relative pose between the robot and the object. The block diagram of the control system is shown in Fig. 5. The control voltages of the four thrusters are given by the following equations:

where \(v_1\), \(v_3\), and \(v_4\) are the control voltages of the thrusters for the \(x\)-, \(z\)-, and \(y\)-directions, respectively, and \(v_2\) is the control voltage for rotation around the z-axis. \(x_d\), \(y_d\), and \(z_d\) are the desired relative position between the vehicle and the target, and \({\epsilon }_{3d}\) is the desired orientation around the z-axis. The gain coefficients were tuned experimentally so that the visual servoing position errors were kept within \(\pm 40\) mm and the orientation error around the z-axis within \(\pm 7^{\circ }\). The gain coefficients \(k_{p1}\), \(k_{p2}\), \(k_{p3}\), and \(k_{p4}\) are 0.003, 0.07, 0.02, and 0.01, respectively.
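A minimal Python sketch of the proportional law follows. The control equations are not reproduced here, so two points are assumptions: that gain \(k_{pi}\) multiplies the error for voltage \(v_i\), and that the error is taken as desired minus estimated. Only the gain values and the axis assignment of \(v_1\) to \(v_4\) come from the text; `Pose` and `thruster_voltages` are illustrative names.

```python
from typing import NamedTuple, Tuple


class Pose(NamedTuple):
    x: float     # mm
    y: float     # mm
    z: float     # mm
    eps3: float  # deg, rotation about the z-axis


# Gains from the text; pairing k_pi with v_i is an assumption of this sketch.
KP1, KP2, KP3, KP4 = 0.003, 0.07, 0.02, 0.01


def thruster_voltages(desired: Pose, estimated: Pose) -> Tuple[float, float, float, float]:
    """Proportional control voltages (v1, v2, v3, v4); error = desired - estimated."""
    v1 = KP1 * (desired.x - estimated.x)        # x-direction thruster
    v2 = KP2 * (desired.eps3 - estimated.eps3)  # rotation about the z-axis
    v3 = KP3 * (desired.z - estimated.z)        # z-direction thruster
    v4 = KP4 * (desired.y - estimated.y)        # y-direction thruster
    return v1, v2, v3, v4


# Errors at the edge of the tolerance band: 40 mm in each axis and 7 deg in yaw.
desired = Pose(600.0, 15.0, -20.0, 0.0)
estimated = Pose(640.0, -25.0, 20.0, -7.0)
print([round(v, 3) for v in thruster_voltages(desired, estimated)])  # [-0.12, 0.49, -0.8, 0.4]
```

At the edges of the tolerance band the sketch yields voltage magnitudes from 0.12 V to 0.8 V, which shows how differently the four reported gains weight the axes.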

3.3 Active marker

In the present study, an active marker was designed and constructed to improve pose estimation in turbid water during day and night. The appearance of the active (lighting) 3D marker is shown in Fig. 6. The circuit combines variable resistors, resistors, and red, green, and blue light-emitting diodes (LEDs). The 3D marker consists of a waterproof box (100 mm \(\times\) 100 mm \(\times\) 100 mm) with three white spheres (diameter: 40 mm) attached to it, as shown in Fig. 6. The red, green, and blue LEDs were installed inside the white spheres, which were covered with colored balloons. Light emitted by the LEDs of the 3D marker can improve 3D pose estimation in both day and night environments. The effectiveness of the active marker is discussed in Sect. 4 based on experimental results.

4 Experimental results and discussion

4.1 3D pose estimation accuracy

The reliability of the proposed system was checked by evaluating its recognition performance against a plain background. The 3D marker was fixed in the water at a relative pose of \(x_t\) = 341 mm, \(y_t\) = 0 mm, \(z_t\) = \(-67\) mm, and \(\epsilon _{3t}\) = 0\(^{\circ }\) with respect to \(\Sigma _H\) in Fig. 9. The errors \(x_e\), \(y_e\), \(z_e\), and \(\epsilon _{3e}\) are obtained by subtracting the pose estimated by the top gene, \(\hat{x}\), \(\hat{y}\), \(\hat{z}\), and \(\hat{\epsilon }_{3}\), from the ground-truth measurement; at a sample time of 10 s they are \(x_{e} = x_{t}-\hat{x} = 341 - 350.20 = -9.20\) mm, \(y_{e} = 0 - 12.11 = -12.11\) mm, \(z_{e} = -67-(-68.37) = 1.37\) mm, and \(\epsilon _{3e} = 0-(-0.607) = 0.607^{\circ }\). This accuracy was obtained with a plain background, clear water, the passive marker, and natural daytime illumination; each of these factors affects the accuracy of the proposed 3D pose estimation.
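The error arithmetic above can be checked with a few lines of Python; the numbers are the ground truth and top-gene estimate reported in the text, and the only addition is rounding to three decimals to suppress floating-point noise.

```python
# Ground truth and top-gene estimate at t = 10 s, from the text (mm, mm, mm, deg).
truth = {"x": 341.0, "y": 0.0, "z": -67.0, "eps3": 0.0}
estimate = {"x": 350.20, "y": 12.11, "z": -68.37, "eps3": -0.607}

# Error convention from the text: ground truth minus estimate.
errors = {axis: round(truth[axis] - estimate[axis], 3) for axis in truth}
print(errors)  # {'x': -9.2, 'y': -12.11, 'z': 1.37, 'eps3': 0.607}
```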

4.2 3D pose estimation in turbid water

The 3D pose estimation was evaluated in a simulated pool (1540 mm \(\times\) 1060 mm \(\times\) 590 mm) filled with 800 L of fresh water. The ROV and the 3D marker were fixed in position 600 mm apart, and the recognition experiment was conducted at different turbidity levels under day and night conditions. The turbidity was controlled by adding mud to the water in the tank; mud was chosen to simulate natural conditions and was collected from the seashore at Ushimado in Okayama prefecture. The turbidity level (Formazin Turbidity Unit, FTU) was measured with a portable turbidity sensor, TD-M500 (manufactured by OPTEX). The distance of 600 mm was selected because, during docking, the ROV performs visual servoing at about 600 mm from the marker, where it waits and stabilizes while recognizing the target before the final approach. The illumination was simulated using artificial light sources, with a maximum of 1280 lx in the daytime and a minimum of 80 lx in the nighttime. Figure 7 shows the fitness values calculated by RM-GA, which were used to evaluate the performance of the proposed system, at different turbidity levels under day and night conditions. The horizontal axis shows the amount of mud (g/m\(^3\)), the left vertical axis shows the fitness value, and the right vertical axis shows the turbidity in FTU measured with the sensor. As the turbidity was gradually increased from 0 FTU (0 g/m\(^3\)) to 50.2 FTU (378.5 g/m\(^3\)), the fitness value decreased from 1.3 to 0.1 in the daytime and from 0.6 to 0.1 in the nighttime.
The fitness values are nearly the same in the daytime and the nighttime when the mud input exceeds 100 FTU (125 g/m\(^3\)). When the turbidity reaches 50.2 FTU (378.5 g/m\(^3\)), RM-GA can no longer recognize the 3D marker; at that point the fitness value is below 0.2 both in the daytime and in the nighttime.

Fig. 7 Fitness values against turbidity at a distance of 600 mm between the ROV and the 3D marker. The illumination for daytime and nighttime is 1280 lx and 80 lx, respectively. The left and right camera images taken at (A), (B), and (C) are shown in Fig. 8

Fig. 8 Left and right images corresponding to conditions (A), (B), and (C) in Fig. 7 for daytime and nighttime. Dotted circles indicate the poses recognized by RM-GA. Although current flows through the red, green, and blue LEDs in both day and night conditions, the LEDs do not look lit in the daytime but do look lit at night. (Color figure online)

4.3 Docking performance against turbidity under changing lighting condition

This experiment was conducted in an indoor pool, shown in Fig. 1, in which a turbidity of 10 FTU was created by adding mud. The desired pose between the target and the ROV (see Fig. 9), \(x_d\) = 600 mm (350 mm for docking completion), \(y_d\) = 15 mm, \(z_d\) = \(-20\) mm, and \(\epsilon _{3d}\) = 0 deg, was predefined so that the ROV performs docking by means of visual servoing. For a detailed explanation of the docking strategy, the reader is referred to our previous study [11]. After each docking operation was completed, the vehicle returned to a distance of 600 mm from the target in the x-direction for the next docking iteration.

Docking was performed successfully 17 times in succession while the lighting was changed from daytime to nighttime. Figure 10 shows the illumination for each docking trial; the horizontal axis is the docking trial number and the vertical axis is the illumination (lx). The illumination was adjusted between a maximum of 1280 lx (daytime) and a minimum of 80 lx (nighttime) and was measured with a lux meter (model LX-1010B, manufactured by Milwaukee).

Figure 11 shows the docking performance in turbid water at the maximum illumination of 1280 lx (daytime), and Fig. 12 shows the docking performance at the minimum illumination of 80 lx (nighttime). In Fig. 11a, the fitness value is above 0.8 for the first few seconds of the recognition process and then increases to 1, which means that the system recognized the 3D pose of the active marker well. Figure 11b, c, e, f shows the desired pose together with the pose of the active marker estimated by RM-GA, and Fig. 11d shows the trajectory of the underwater robot measured by RM-GA with respect to \(\Sigma _{H}\) in Fig. 9 during the docking process.

In the docking strategy, visual servoing starts when the 3D marker is detected, that is, when the fitness value exceeds a defined threshold (0.4 in the present study). Docking starts when the y- and z-positions of the vehicle stay within the allowable error range of \(\pm 40\) mm of the desired pose (Fig. 11c, e) and the orientation around the z-axis (Fig. 11f) stays within 7 deg for the required period (165 ms, i.e., five control loop periods of 33 ms, in this experiment); the distance between the ROV and the 3D marker is then decreased from 550 to 350 mm, as shown in Fig. 11b. The dotted line labeled “A” in each subfigure of Fig. 11 indicates the visual servoing state, in which the desired position along the x-axis is 600 mm and the y-position is within the error allowance range (Fig. 11c). Visual servoing continues until the errors in the y- and z-directions and in the orientation around the z-axis fall within the error allowance range (Fig. 11c, e, f). At time “B” (Fig. 11b–d), the docking criteria are satisfied and the docking operation starts. Note that the x-position at “B” is approximately 500 mm, because only the y- and z-positions and the orientation around the z-axis are considered in the docking criteria. The docking operation started approximately 7 s after the start of the experiment (“B”) and was successfully completed approximately 20 s after the start. The dotted line labeled “C” in each subfigure of Fig. 11 indicates the state in which docking is completed.

In the case of 80 lx (nighttime), the fitness value for the active 3D marker is about 0.8 at the start of the experiment and then decreases to about 0.5, as shown in Fig. 12a; the ROV could recognize the active marker even in the dark. The orientation around the z-axis is outside the error range at 3 s, as shown in Fig. 12f, so visual servoing continues until the y- and z-positions and the orientation around the z-axis are all within the error allowance range. In this case, docking is completed 25 s after the start of the experiment. Figures 11 and 12a–f confirm that the underwater robot maintained the desired pose while docking in turbid water under changing lighting conditions. These results show that, even though the lighting changed from day to night in turbid water, the relative pose of the 3D marker was recognized well and docking was completed successfully.
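The docking-start rule used in the pool experiment can be sketched as a small state machine. The thresholds (fitness 0.4, ±40 mm for y and z, 7° for the orientation, 165 ms = 5 × 33 ms) are taken from the text; the class name, state labels, and the choice to reset the counter whenever a sample falls outside the tolerance are assumptions of this sketch, as is the omission of any handling for losing the marker.

```python
from dataclasses import dataclass

FITNESS_THRESHOLD = 0.4   # pool experiment value from the text
POS_TOL_MM = 40.0         # allowed |error| in y and z
YAW_TOL_DEG = 7.0         # allowed |error| in eps3
CONTROL_PERIOD_MS = 33
HOLD_CYCLES = 5           # 5 x 33 ms = 165 ms in tolerance before docking


@dataclass
class DockingMonitor:
    """Hypothetical SEARCH -> SERVOING -> DOCKING state machine (one call per period)."""
    state: str = "SEARCH"
    cycles_in_tolerance: int = 0

    def update(self, fitness: float, err_y: float, err_z: float, err_eps3: float) -> str:
        if self.state == "SEARCH":
            # Visual servoing starts once the marker is detected.
            if fitness > FITNESS_THRESHOLD:
                self.state = "SERVOING"
        elif self.state == "SERVOING":
            # Only y, z and eps3 are checked; x is not part of the criterion.
            within = (abs(err_y) <= POS_TOL_MM and abs(err_z) <= POS_TOL_MM
                      and abs(err_eps3) <= YAW_TOL_DEG)
            self.cycles_in_tolerance = self.cycles_in_tolerance + 1 if within else 0
            if self.cycles_in_tolerance >= HOLD_CYCLES:
                self.state = "DOCKING"
        return self.state


monitor = DockingMonitor()
print(monitor.update(0.8, 120.0, 30.0, 12.0))  # SERVOING
```

As in the text, the x-error does not enter the docking criterion, which is why docking can begin while the vehicle is still about 500 mm from the marker.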

4.4 Sea docking experiment

Sects. 4.2 and 4.3 discussed the effectiveness of the proposed system for recognition and docking under turbidity and illumination variations in an artificial environment. However, the actual sea environment may degrade the visibility of the system more than the simulated pool because of other disturbances such as sunlight and reflections. We therefore aimed to confirm the validity of the proposed system in an actual turbid sea environment, and the docking experiment was conducted in turbid coastal water rather than clear oceanic water.

4.4.1 Environmental conditions and experiment layout of the sea docking experiment

The docking experiment was conducted on the coast of Okayama prefecture, Japan, at about 18:39. The illumination at the sea surface and at a depth of 1 m was 0 lx, and the turbidity level was 9 FTU. The water depth from the surface to the sea bottom was 1.8 m. The unidirectional docking station, shown on the right side of Fig. 13, carried two rectangular docking holes (100 mm \(\times\) 100 mm) and the active 3D marker. The docking station (600 mm \(\times\) 450 mm \(\times\) 3000 mm) was oriented with its long sides toward the pier. Two underwater cameras were attached to the docking station to monitor the behavior of the ROV during the docking experiment and for further analysis, as shown in Fig. 14. Two docking poles were attached to the left and right sides of the ROV so that, after docking was completed, the ROV could stay in front of the docking station without visual servoing while the data were stored. This stay step is intended for the battery recharging operation, during which the ROV would be recharged. The center distance between the two rectangular docking holes and the 3D marker was 145 mm. The ROV was tethered by a 200-mm-long cable to the GA-PC (controller and 3D pose estimator) on the pier. The layout of the sea docking coordinate system is shown in Fig. 14.

4.4.2 Sea docking experiment against turbidity

The results of the docking experiment are shown in Fig. 15a–j. In Fig. 15a–e, the vertical axes show the fitness value, the positions along the x-, y-, and z-axes (mm), and the orientation around the z-axis (deg); the horizontal axis is time (s). The vertical dotted lines labeled “A”–“G” indicate the docking stages, as explained in the caption of Fig. 15. The pairs of horizontal solid lines in Fig. 15c–e mark the error allowance range for docking. Figure 15g–j shows the output voltage of each thruster, where the pairs of horizontal solid lines mark the dead zone, which is eliminated by software filters. Figure 15f shows the trajectory of the ROV from the start point to the end point of the docking experiment, computed from the pose estimated by RM-GA.

The vehicle was manually driven toward the docking station until the 3D marker entered the field of view of the cameras at a distance of about 1 m. After the 3D marker was detected, the relative pose between the vehicle and the 3D marker was estimated using RM-GA, and visual servoing started when the fitness value exceeded 0.2. The desired pose between the ROV and the target was predefined as \(x_{d}\) = 600 mm, \(y_{d}\) = 0 mm, \(z_{d}\) = 0 mm, and \(\epsilon _{3d}\) = 0\(^{\circ }\). When the vehicle pose was stable within the error tolerance, that is, the x-, y-, and z-positions within \(\pm 40\) mm and the orientation within \(\pm 7^{\circ }\) (see Fig. 14), the controller switched from visual servoing to docking.

Fig. 15 Docking experiment in the actual sea environment with turbidity at night (0 lx): a fitness value, b position along the x-axis, c position along the y-axis, d position along the z-axis, e orientation around the z-axis, f 3D trajectory of the underwater vehicle, g voltage along the x-axis, h voltage along the y-axis, i voltage along the z-axis, j voltage around the z-axis, k photographs taken at time “A”, l photographs taken at time “E”, and m photographs taken at time “G”. The dotted lines “A”–“G” in each subfigure correspond to the docking stages as follows: (A–C) transition to visual servoing, (D) start of docking, (D–G) completion of docking. The lower photographs (k), (l), (m) were taken simultaneously by the left and right cameras of the ROV (1), (2) and the two underwater monitoring cameras (3), (4) (top view and side view of the docking hole). The positions of the two underwater cameras (3), (4) are shown in Fig. 14

When the fitness value increased to 0.5 at about 0.15 s, visual servoing started (dotted line “A” in Fig. 15). During the visual servoing stage, the desired x-position remained constant at about 600 mm because the y-position and the orientation around the z-axis exceeded the error allowance range at times “B” and “C”. The photographs corresponding to time “A” are shown in Fig. 15k. When the y- and z-positions and the orientation around the z-axis were stable within the error allowance range, docking started at about 28 s (dotted line “D” in Fig. 15). During the docking stage, the y-position exceeded the error allowance range from 42 s (dotted line “E”) to 50 s and from 57 s (dotted line “F”) to 65 s, as shown in Fig. 15c. During these periods (42–50 s, 57–65 s), the desired x-position was held constant because the fluctuations in the y-position exceeded the error allowance range. Figure 16 shows an enlarged view of Fig. 15b, c so that the desired x-position can be seen clearly; in Fig. 16b, the desired x-position is drawn as a solid line to distinguish its constant and descending portions. During the docking operation, the oscillation amplitude along the z-axis was smaller than that along the y-axis.

During the docking stage, the y-position exceeded the error allowance range and the orientation around the z-axis fluctuated. There were more fluctuations in every direction than in the pool test, but the controller adjusted the output voltage of each thruster to compensate, as shown in Fig. 15g–j. These fluctuations were probably caused by waves. Finally, the ROV completed the docking operation after 72 s (dotted line “G” in Fig. 15) in turbid water in a dark environment. The photographs corresponding to times “E” and “G” are shown in Fig. 15l and m, respectively. The part enclosed by the dotted circle in Fig. 15m (time “G”) is the tip of the docking pole, which fits in the correct position in the docking hole. After the docking stage, the vehicle stopped visual servoing and performed the stay step, during which the final data were written from memory to the hard disk while a constant voltage of 0.1 V was applied to the forward thruster in the x-direction, as shown in Fig. 15g. After the stay step, the vehicle performed the launching step for the next docking trial.

These experimental results show that the ROV was automatically controlled by visual servoing and completed the docking operation despite disturbances such as waves and turbidity.
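The behavior of the desired x-position during the docking stage (held constant while the y-error is outside the tolerance and decreasing otherwise, as in Fig. 16) can be sketched as a per-period update. The step size `step_mm`, the function name, and the inclusion of the z and orientation errors in the hold condition are hypothetical choices for illustration; only the tolerance values come from the text.

```python
POS_TOL_MM = 40.0   # tolerance from the text
YAW_TOL_DEG = 7.0   # tolerance from the text


def next_desired_x(x_d, x_final, err_y, err_z, err_eps3, step_mm=2.0):
    """One control period of a (hypothetical) approach schedule for x_d."""
    within = (abs(err_y) <= POS_TOL_MM and abs(err_z) <= POS_TOL_MM
              and abs(err_eps3) <= YAW_TOL_DEG)
    if not within:
        return x_d                       # hold: lateral or yaw error too large
    return max(x_final, x_d - step_mm)   # approach, but never past the final x


# A y-error excursion in the middle of the approach produces a flat segment in x_d.
x_d = 600.0
y_errors = [0.0] * 5 + [55.0] * 3 + [0.0] * 5
history = []
for err_y in y_errors:
    x_d = next_desired_x(x_d, 350.0, err_y, 0.0, 0.0)
    history.append(x_d)
print(history)
```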

Fig. 16 Enlarged view of Fig. 15b, c showing the desired position along the x-axis

5 Conclusion

In the present study, visual-servoing-based 3D pose estimation and docking experiments for an underwater vehicle in turbid water under changing lighting conditions were presented. Real-time pose detection was implemented by means of 3D model-based recognition and real-time multi-step GA using dual-eye cameras and an active 3D marker. The pool experiments showed that the proposed system could keep recognizing the pose of the active (lighting) 3D marker while the turbidity and illumination were varied (recognition failed only at the highest tested turbidity, 50.2 FTU). The sea experiment confirmed the 3D pose estimation and docking performance of the proposed system in an actual turbid sea environment.

References

[1] Jasper A (2012) Oil/Gas pipeline leak inspection and repair in underwater poor visibility conditions: challenges and perspectives. J Environ Protect 3(5):394

[2] Kume A, Maki T, Sakamaki T, Ura T (2013) A method for obtaining high-coverage 3D images of rough seafloor using AUV-real-time quality evaluation and path-planning-. J Robot Mechatron 25(2):364–374

[3] Ribas D, Palomeras N, Ridao P, Carreras M, Mallios A (2012) Girona 500 AUV: from survey to intervention. IEEE/ASME Trans Mechatron 17(1):46–53

[4] Krupiński S, Allibert G, Hua MD, Hamel T (2012) Pipeline tracking for fully-actuated autonomous underwater vehicle using visual servo control. In: American control conference (ACC), 2012. IEEE, pp 6196–6202

[5] Yu SC, Ura T, Fujii T, Kondo H (2001) Navigation of autonomous underwater vehicles based on artificial underwater landmarks. In: OCEANS, MTS/IEEE Conference and Exhibition, vol 1, pp 409–416

[6] Cowen S, Briest S, Dombrowski J (1997) Underwater docking of autonomous undersea vehicles using optical terminal guidance. In: OCEANS’97, MTS/IEEE Conference Proceedings, vol 2, pp 1143–1147

[7] Eustice RM, Pizarro O, Singh H (2008) Visually augmented navigation for autonomous underwater vehicles. IEEE J Ocean Eng 33(2):103–122

[8] Jung J, Cho S, Choi HT, Myung H (2016) Localization of AUVs using depth information of underwater structures from a monocular camera. In: 2016 13th international conference on ubiquitous robots and ambient intelligence (URAI). IEEE, pp 444–446

[9] Ghosh S, Ray R, Vadali SR, Shome SN, Nandy S (2016) Reliable pose estimation of underwater dock using single camera: a scene invariant approach. Mach Vis Appl 27(2):221–236

[10] Park JY, Jun BH, Lee PM, Lee FY, Oh JH (2009) Experiments on vision guided docking of an autonomous underwater vehicle using one camera. IEEE J Ocean Eng 36(1):48–61

[11] Myint M, Yonemori K, Lwin KN, Yanou A, Minami M (2017) Dual-eyes vision-based docking system for autonomous underwater vehicle: an approach and experiments. J Intell Robot Syst. https://doi.org/10.1007/s10846-017-0703-6

[12] Myint M, Yonemori K, Yanou A, Ishiyama S, Minami M (2015) Robustness of visual-servo against air bubble disturbance of underwater vehicle system using three-dimensional marker and dual-eye cameras. In: OCEANS'15 MTS/IEEE Washington. IEEE, pp 1–8

[13] Myint M, Yonemori K, Yanou A, Lwin KN, Minami M, Ishiyama S (2016) Visual servoing for underwater vehicle using dual-eyes evolutionary real-time pose tracking. J Robot Mechatron 28(4):543–558

[14] Myint M, Yonemori K, Yanou A, Lwin KN, Minami M, Ishiyama S (2016) Visual-based deep sea docking simulation of underwater vehicle using dual-eyes cameras with lighting adaptation. In: OCEANS 2016-Shanghai. IEEE, pp 1–8

[15] Maki T, Shiroku R, Sato Y, Matsuda T, Sakamaki T, Ura T (2013) Docking method for hovering type AUVs by acoustic and visual positioning. In: 2013 IEEE international underwater technology symposium (UT). IEEE, pp 1–6

[16] Minami M, Agbanhan J, Asakura T (2003) Evolutionary scene recognition and simultaneous position/orientation detection. In: Soft computing in measurement and information acquisition. Springer, Berlin, Heidelberg, pp 178–207

[17] Song W, Minami M, Aoyagi S (2008) On-line stable evolutionary recognition based on unit quaternion representation by motion-feedforward compensation. Int J Intell Comput Med Sci Image Process 2(2):127–139

Acknowledgements

The authors would like to thank Monbukagakusho; Mitsui Engineering and Shipbuilding Co., Ltd.; and Kowa Corporation for their collaboration and support for this study.

Additional information

This work was presented in part at the 23rd International Symposium on Artificial Life and Robotics, Beppu, Oita, January 18–20, 2018.

About this article

Cite this article

Lwin, K.N., Mukada, N., Myint, M. et al. Docking at pool and sea by using active marker in turbid and day/night environment. Artif Life Robotics 23, 409–419 (2018). https://doi.org/10.1007/s10015-018-0442-1
