Abstract

A new method is proposed for the resolution and quantitative kinetic analysis of overlapping electron paramagnetic resonance (EPR) spectra. It combines smoothing by B-splines and/or the discrete cosine transform with linear algebra methods for finding the eigenvalues and eigenvectors of the covariance matrix using the Frobenius normal form, followed by a final basis adjustment with the Moore–Penrose pseudo-inverse matrix. The algorithm does not require time-consuming multiple iterations of the least squares method. The approach has been tested on several chemical systems, including stable organic radicals and paramagnetic V4+ and VO2+ ions. In all cases, the new approach gave good results in the analysis of experimental EPR spectra.

1 Introduction

The problem of identifying two or more electron paramagnetic resonance (EPR) signals with similar g-factors, hyperfine splitting (HFS) constants, etc. arises very often for researchers using EPR spectroscopy, especially those working with X-band CW spectrometers. Q-band and W-band spectrometers are still rather rare and much more expensive, and are not available to many scientists working in chemistry and molecular biology.

The fundamental work by Lawton and Sylvestre [1], entitled “Self Modeling Curve Resolution” and published 50 years ago, proposed a method for separating an additive spectral response Y(X) into a sum of two initially unknown, non-negative, linearly independent components. The history of the signal separation methodology, its results and its main limitations are described in detail in Ref. [2]. The next step, from the analysis of two-component mixtures to multi-component systems, was made only 15 years later: a complex spectrum was split into three components for the first time in Ref. [3], where the ambiguity of the solution was also shown.

Surprisingly, among the many publications of the past 50 years we could find only one paper [4] in which the Multivariate Curve Resolution (MCR) method was applied to the analysis of EPR spectra. The second article in this area appears to be our recent paper [5]; at the time of its writing we were not aware of the first one. To our knowledge, the authors of [4] have not returned to the topic of computer-aided resolution of complex EPR spectra since then. There have been a few publications on the resolution of nuclear magnetic resonance spectra, e.g. Ref. [6].

Since a real EPR signal is usually a superposition of the individual spectra of several components of a mixture, the key problem is to split the composite signal into its basic components. The main goal of our work is to suggest an appropriate approach to this problem, relying on the formal methods of linear algebra and on additional kinetic information.

2 Mathematical Formulation of the Problem

Quantitative determination of the individual EPR spectra of the components of a chemical mixture without prior knowledge of its composition is known to be a complex task. The three most difficult problems are:

1. Determination of the number of significant components in the mixture;

2. Identification of the EPR spectrum of each component;

3. Calculation of the concentration–time profiles.

When only mixed (overlapped) spectra are available, analysts speak of “blind recognition” [7].

Two main approaches to such problems are currently distinguished [8, 9]: Independent Component Analysis (ICA) and Multivariate Curve Resolution Alternating Least Squares (MCR-ALS). ICA is considered the most powerful signal processing method; it allows one to separate so-called “blind source signals” (BSS), i.e. to determine the base spectra in the absence of reference ones. MCR allows the resolution, identification and quantitative determination of all components in an unknown mixture without prior chemical and/or physical separation, on the basis of multidimensional indicators (optical absorption, density, electrical conductivity, pH, etc.). ICA focuses on the statistical independence of the component profiles, while MCR aims at selectivity by employing instrumental methods of analysis and maximizing the explained variance in the data. We note that the two methods have many more similarities than differences.

Resolving multidimensional curves using MCR is a well-established method for evaluation and soft simulation of evolving systems and 2D correlation spectroscopy (2DCoS), which extends the modulated spectra to the second spectral dimension, thereby increasing the spectral resolution [10].

3 Solution of the Problem in Terms of Linear Algebra

All methods of algebraic analysis of spectral data are based on the assumption that the recorded signal is proportional to the amount of the component, as in the Beer–Lambert law. The experimental spectra are stacked in a matrix row by row; the columns correspond to the wavelength, frequency or wavenumber for optical spectra, or simply to the channel number in the case of chromatographic or mass spectrometric signals. The MCR method decomposes the experimental data matrix D into the product of two smaller matrices, C and \({{\varvec{S}}}^{{\varvec{T}}}\). Here C is the matrix of concentration profiles, with one column per component, and \({{\varvec{S}}}^{{\varvec{T}}}\) is the transposed matrix of the basis spectra: its rows are the spectra of the corresponding individual (or assumed) components, while its columns correspond to channel numbers, as before:

\[{\varvec{D}}={\varvec{C}}{{\varvec{S}}}^{{\varvec{T}}}+{\varvec{E}}\tag{1}\]

Here E is a matrix of errors, i.e., residual unexplained dataset of changes not related to any chemical contribution.

The data matrix D(NR, NC) is assumed to be informationally redundant, which is usually the case. The number of its rows NR, equal to the number of experimentally recorded spectra, certainly exceeds the number of independent components in the chemical mixture, and the number of columns NC (the number of spectroscopic channels) is sufficient to distinguish one spectrum from another above the noise.

Thus, D has to be factorized into C and \({{\varvec{S}}}^{{\varvec{T}}}\) as correctly as possible, and this has to be done without prior information about reference spectra and concentrations. Such a solution is evidently ambiguous, but the information obtained from the decomposition is valuable per se. Users of this approach have to define in some way the number of linearly independent components contributing to D, which are then modeled to provide initial estimates of C or \({{\varvec{S}}}^{{\varvec{T}}}\). The concentration matrix C (a matrix, not a vector) has as many columns as there are independent components in the mixture, MC (not to be confused with the number of channels NC), and as many rows NR as the matrix D, i.e. one per spectrum.

After setting the starting values for C or \({{\varvec{S}}}^{{\varvec{T}}}\), the two matrices are optimized in turn in the Multivariate Curve Resolution Alternating Least Squares (MCR-ALS) algorithm until the required accuracy is achieved. On each odd cycle, a new estimate of the matrix of basis spectra \({{\varvec{S}}}^{{\varvec{T}}}\) is made for the current concentration matrix C by minimizing the error functional, i.e. the difference between the experimental and calculated data matrices:

\[\underset{{{\varvec{S}}}^{{\varvec{T}}}}{\mathrm{min}}\Vert {\varvec{D}}-{\varvec{C}}{{\varvec{S}}}^{{\varvec{T}}}\Vert \tag{2}\]

On every even cycle, the object of variation changes: the concentration matrix C is estimated by the least squares method for the newly obtained basis spectra \({{\varvec{S}}}^{{\varvec{T}}}\):

\[\underset{{\varvec{C}}}{\mathrm{min}}\Vert {\varvec{D}}-{\varvec{C}}{{\varvec{S}}}^{{\varvec{T}}}\Vert \tag{3}\]

The updates of the concentration and spectra matrices are repeated cyclically until the specified accuracy is achieved. Convergence of the least squares iterations is not guaranteed in general. The method is also known to be prone to false (local) minima, depending on the choice of the starting approximation.
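The alternating scheme described above can be illustrated with a minimal Python sketch. It is not the implementation used in this work: it assumes NumPy, a synthetic two-component data set (two Gaussian lines and first-order kinetics, all values illustrative), and plain unconstrained least squares in each half-cycle. The function name `mcr_als` and its parameters are hypothetical; practical MCR-ALS codes add constraints such as non-negativity.

```python
import numpy as np

def mcr_als(D, C0, n_iter=50, tol=1e-12):
    """Unconstrained alternating least squares for D ~ C @ St (illustrative)."""
    C = np.array(C0, dtype=float)
    prev = np.inf
    for _ in range(n_iter):
        # Odd half-cycle: best spectra St for the current concentrations C.
        St = np.linalg.lstsq(C, D, rcond=None)[0]
        # Even half-cycle: best concentrations C for the current spectra St.
        C = np.linalg.lstsq(St.T, D.T, rcond=None)[0].T
        resid = np.linalg.norm(D - C @ St)
        if prev - resid < tol:          # stop when the residual no longer improves
            break
        prev = resid
    return C, St, resid

# Synthetic two-component example (assumed values, for illustration only).
rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, 120)                       # channel axis
S_true = np.vstack([np.exp(-(x - 0.45) ** 2 / 0.005),
                    np.exp(-(x - 0.55) ** 2 / 0.005)])
t = np.linspace(0.0, 10.0, 30)                       # time axis
C_true = np.column_stack([np.exp(-0.3 * t), 1.0 - np.exp(-0.3 * t)])
D = C_true @ S_true + 0.002 * rng.normal(size=(30, 120))

C0 = C_true + 0.2 * rng.random(C_true.shape)         # perturbed starting guess
C_fit, St_fit, resid = mcr_als(D, C0)
rel_resid = resid / np.linalg.norm(D)
```

Even when the residual is small, the factors `C_fit` and `St_fit` are in general only a linear combination of the true profiles, which is the ambiguity discussed in the text.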

Spectroscopic methods quickly provide a large amount of information on chemical reactions, but without matrix algebra the problem usually cannot be solved. The evolution of the concentration of a given compound in time is accompanied by an increase or decrease in the intensity of its spectrum. The difficulty is that spectral signals are not always selective and very often overlap strongly with the signals of other substances. In such cases, multivariate factor analysis is especially helpful [8].

For example, it is noted in [11] that the main problem in the NMR spectroscopic analysis of mixtures is signal overlap, which makes it difficult to obtain independent spectra for the identification of the individual components. We have used different algorithms, more closely resembling independent component analysis: Mutual Information Least-dependent Component Analysis (ICA-MILCA) and Stochastic Non-Negative Independent Component Analysis (SNICA).

The mathematical expression of the ICA method is similar to the equation for MCR-ALS and differs only in notation:

\[{\varvec{X}}={\varvec{A}}{{\varvec{S}}}^{{\varvec{T}}}+{\varvec{E}}\tag{4}\]

Here the matrix X (I × J) contains the initial experimental data; A (I × N) is the so-called mixing matrix in ICA notation (in MCR it is the matrix of concentrations); \({{\varvec{S}}}^{{\varvec{T}}}\) (N × J) is the original matrix of reference spectra; and E (I × J) is the matrix of errors. I and J are the numbers of rows and columns of the data matrix X, respectively, and N is the number of components included in the bilinear decomposition of Eq. (4). ICA algorithms try to find a “decoupling” (unmixing) matrix W, inverse to the mixing matrix A, according to Eq. (5):

\[{\widehat{{\varvec{S}}}}^{{\varvec{T}}}={\varvec{W}}{\varvec{X}}\tag{5}\]

where \({\widehat{{\varvec{S}}}}^{{\varvec{T}}}\) is the evaluation of the original matrix \({{\varvec{S}}}^{{\varvec{T}}}\)

According to Eq. (6), when \({\varvec{W}}={{\varvec{A}}}^{+}\) is the pseudo-inverse of the mixing matrix A, the estimated source signal \({\widehat{{\varvec{S}}}}^{{\varvec{T}}}\) is equal to the original source signal \({{\varvec{S}}}^{\mathbf{T}}\).
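This statement is easy to check numerically. The sketch below assumes NumPy, noise-free data and a mixing matrix A that is known and has full column rank; in a real blind problem A is unknown and W has to be estimated. All array sizes and line shapes are illustrative.

```python
import numpy as np

rng = np.random.default_rng(1)
x = np.linspace(0.0, 1.0, 100)                       # channel axis, J = 100

# Two hypothetical source spectra (N = 2), stored as rows of S^T.
S = np.vstack([np.exp(-(x - 0.4) ** 2 / 0.01),
               np.exp(-(x - 0.6) ** 2 / 0.01)])

A = 0.1 + rng.random((12, 2))                        # I x N mixing matrix (assumed known here)
X = A @ S                                            # noise-free mixed spectra, I x J

W = np.linalg.pinv(A)                                # unmixing matrix W = A^+
S_hat = W @ X                                        # estimated sources
```

With noise present, `S_hat` only approximates `S`, and the error grows as the columns of A become closer to linearly dependent.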

An important feature of ICA implementations is the application of “Occam’s razor”, i.e. the minimization of the so-called mutual information [12]. It means that each spectral peak or “feature” must be included in the base set the minimum number of times, ideally once. This requirement is similar to orthogonality of the constructed basis, but for some practical problems it is not realistic. For example, a chromophore group can contribute both to the spectrum of the initial compound and to that of the reaction product.

A useful option of “reducing mutual information” is minimization of Amari indices [13] defined as:

Here \({p}_{i,j}={(C{C}^{+})}_{i,j}\), where C are the true concentration profiles and \({C}^{+}\) was obtained by pseudo-inversion of the concentration profile matrix C (MCR notation is used here instead of ICA notation). The Amari index is zero when the true and estimated concentrations differ only by scaling or by a permutation of the components. Low values of the Amari index are considered desirable.
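For orientation, one commonly used form of the Amari index can be sketched as follows. The normalization by 2N(N − 1) is an assumption (several conventions exist), and so is the way the N × N matrix P is formed here, as the pseudo-inverse of the estimated concentration matrix multiplied by the true one. The function name `amari_index` and the test matrices are hypothetical.

```python
import numpy as np

def amari_index(P):
    """Amari index of a square matrix P; zero for a scaled permutation matrix."""
    P = np.abs(np.asarray(P, dtype=float))
    n = P.shape[0]
    # Row term: how far each row is from having a single dominant entry.
    rows = (P / P.max(axis=1, keepdims=True)).sum(axis=1) - 1.0
    # Column term: the same for each column.
    cols = (P / P.max(axis=0, keepdims=True)).sum(axis=0) - 1.0
    return (rows.sum() + cols.sum()) / (2.0 * n * (n - 1))

t = np.linspace(0.0, 10.0, 30)
C_true = np.column_stack([np.exp(-0.3 * t), 1.0 - np.exp(-0.3 * t)])

# Estimate that differs from the truth only by permutation and scaling.
C_est_good = C_true @ np.array([[0.0, 2.0], [0.5, 0.0]])
# Estimate in which the two components are genuinely mixed.
C_est_bad = C_true @ np.array([[1.0, 0.6], [0.4, 1.0]])

idx_good = amari_index(np.linalg.pinv(C_est_good) @ C_true)
idx_bad = amari_index(np.linalg.pinv(C_est_bad) @ C_true)
```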

4 Algorithms Used for a Specific Implementation of the Method

The described algorithms of the recognition and deconvolution of spectra have already been published [14,15,16,17].

However, we have developed our own original scheme for the decomposition of the spectral signal in terms of the basis, which is shown in Fig. 1.

First of all, the spectra must be properly smoothed to avoid possible spurious solutions of the characteristic matrix. Then the covariance matrix is constructed and its eigenvalues are determined; they are needed to establish the basis of eigenvectors. The next step is the preparation of a preliminary basis of eigenvectors, which can still include negative signal values and even give negative concentrations. After normalization of the basis, the last step, which gives the most probable decomposition, is carried out using the Moore–Penrose inverse transformation.

A program package for blind processing of sets of EPR spectra was developed. It is oriented towards the most efficient calculation methods, avoiding iterative procedures and abandoning least squares fitting as the basic tool. In addition, we developed our own original techniques suited to spectroscopic recognition tasks. VBA Excel was chosen as the development environment as the most suitable for pre-processing: Excel offers advanced graphical display of all processed arrays at any stage, with interactive control and transformation capabilities.
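The eigenvalue step of this scheme can be illustrated by a short Python sketch. It is only an assumption-laden stand-in for the procedure described here: it uses NumPy's symmetric eigensolver `numpy.linalg.eigh` instead of the Frobenius normal form, takes the covariance matrix as the uncentered product of the data matrix with its transpose, and counts components with a hypothetical relative threshold `rel_tol`. The synthetic data are illustrative.

```python
import numpy as np

def abstract_spectra(D, rel_tol=1e-3):
    """Eigen-decomposition of the channel covariance matrix of D (rows = spectra)."""
    cov = D.T @ D / D.shape[0]                 # NC x NC, uncentered (assumption)
    evals, evecs = np.linalg.eigh(cov)         # ascending eigenvalues
    evals, evecs = evals[::-1], evecs[:, ::-1] # sort in descending order
    n = int(np.sum(evals > rel_tol * evals[0]))
    return evals, evecs[:, :n].T, n            # eigenvectors returned as rows

# Synthetic two-component data set with a small amount of noise.
rng = np.random.default_rng(2)
x = np.linspace(0.0, 1.0, 120)
S_true = np.vstack([np.exp(-(x - 0.45) ** 2 / 0.005),
                    np.exp(-(x - 0.55) ** 2 / 0.005)])
t = np.linspace(0.0, 10.0, 30)
C_true = np.column_stack([np.exp(-0.3 * t), 1.0 - np.exp(-0.3 * t)])
D = C_true @ S_true + 0.002 * rng.normal(size=(30, 120))

evals, V, n_components = abstract_spectra(D)
# Fraction of the data not explained by the retained eigenvectors.
unexplained = np.linalg.norm(D - D @ V.T @ V) / np.linalg.norm(D)
```

The retained eigenvectors are orthogonal and therefore contain negative lobes; they span the same subspace as the true spectra but are not yet physically meaningful, which is why the normalization step is needed.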

5 Data Preprocessing: Interpolation, Smoothing, and Integration

The primary data of an EPR spectrum, especially when recorded on older instruments, are not directly suitable for use in MCR procedures. First, further discrimination of the basis expansions is much more convenient with the integral form of the spectrum rather than with its derivative, since the “norm” of the derivative is ideally zero.

Second, the number of experimental points often exceeds several thousand, which unreasonably increases the computation time of matrix operations. In addition, the points may be unevenly spaced along the abscissa. Spectrum preprocessing therefore means reducing the experimental array to an acceptable size of 250–300 equidistant points, which requires interpolation.

Third, the experimental data contain noise and other parasitic signals. These can be suppressed, and the task of dividing the spectrum into components facilitated, by spline approximations, which not only provide smoothing, i.e. high-frequency filtering, but can also remove the baseline drift by cutting off the lowest frequencies.
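The first two preprocessing steps (resampling to an equidistant grid and integration of the derivative spectrum) can be sketched in a few lines of Python. This is a simplified illustration assuming NumPy, linear interpolation instead of splines, cumulative trapezoidal integration and no baseline correction; the function name `resample_and_integrate` and the test signal (derivative of a Gaussian line on an unevenly sampled field axis, in mT) are hypothetical.

```python
import numpy as np

def resample_and_integrate(field, deriv, n_points=250):
    """Resample a derivative spectrum to an equidistant grid and integrate it."""
    order = np.argsort(field)
    field, deriv = field[order], deriv[order]
    grid = np.linspace(field[0], field[-1], n_points)
    d = np.interp(grid, field, deriv)               # linear interpolation
    step = grid[1] - grid[0]
    # Cumulative trapezoidal rule; the absorption is set to zero at the first point.
    absorption = np.concatenate(([0.0], np.cumsum(0.5 * (d[1:] + d[:-1]) * step)))
    return grid, d, absorption

# Unevenly sampled derivative of a Gaussian absorption line (illustrative values).
rng = np.random.default_rng(3)
B = np.sort(rng.uniform(330.0, 350.0, 3000))         # magnetic field, mT
B0, w = 340.0, 1.0
line = lambda b: np.exp(-(b - B0) ** 2 / (2.0 * w ** 2))
deriv = -(B - B0) / w ** 2 * line(B)

grid, d_resampled, absorption = resample_and_integrate(B, deriv)
max_error = np.max(np.abs(absorption - line(grid)))
```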

5.1 Cubic Hermite and Farrow Interpolation

The simplest to implement are cubic Hermite spline interpolation procedures [18], which build a polynomial whose values at the selected points coincide with the values of the original function at these points, and whose derivatives up to some order m at these points coincide with the derivatives of the function. Better accuracy can be obtained by polynomial interpolation with Farrow splines. Since both procedures are described in detail in the literature, we do not discuss them further here.

Both algorithms perform very well and can be recommended when simple interpolation is sufficient. If, in addition to resizing the input array, it is also necessary to filter out noise and/or other high-frequency contributions, more elaborate and time-consuming procedures are required.

5.2 B-Splines with Evenly and Optimally Spaced Knots

The algorithm for expanding the experimental spectra in the most probable set of basis spectra implies a set of linear algebraic transformations of data arrays, which require identical abscissa values, in our case values of the magnetic field H. Modern serial EPR spectrometers automatically provide data in a format suitable for further processing, with equidistant points along the abscissa. In some cases, however, the field values are digitized from an analog signal, so that each spectrum has its own set of abscissas. Thus, the first preprocessing task is to convert the primary arrays to a unified set of equidistant field values. This problem can be solved using B-spline interpolation.

In 1972, Cox [19] and de Boor [20] suggested expanding an arbitrary smooth function s(x) in polynomials \({N}_{i}^{k}\) of degree k of a special form, defined recursively (B-splines):

To construct a spline, a grid of nodes is first set on the abscissa so that there are g nodes inside the interval [a, b] and k + 1 nodes at each of its edges, where k is the degree of the spline:

Here \(g\) is the number of internal nodes of the spline.

The main requirement for a spline is that the n-th derivative of the function s(x) be continuous at every point of the interval [a, b] for all orders less than the degree of the spline, i.e. for any n = 1, …, k − 1. This is provided automatically by the polynomial spline construction. The form of these polynomials is determined recursively. Each spline at any interior point of the interval between adjacent nodes \({x\in [\lambda }_{j},{\lambda }_{j+1}]\) can be represented as a recursive relation with splines of the previous order:

where

and the splines of the first order, from which the recursion begins, are rectangular “steps” (a meander):
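The recursion can be made concrete with a short Python sketch of the standard Cox–de Boor scheme. The indexing convention is an assumption: here the superscript denotes the polynomial degree, the recursion starts from piecewise-constant functions of degree 0, and terms with a zero denominator (repeated nodes) are dropped. The helper names `bspline_basis` and `clamped_knots` are hypothetical.

```python
import numpy as np

def bspline_basis(i, k, knots, x):
    """Value at x of the i-th B-spline of degree k (Cox-de Boor recursion)."""
    if k == 0:
        # Starting "step": 1 on the half-open node interval, 0 elsewhere.
        return 1.0 if knots[i] <= x < knots[i + 1] else 0.0
    left = right = 0.0
    d1 = knots[i + k] - knots[i]
    d2 = knots[i + k + 1] - knots[i + 1]
    if d1 > 0.0:
        left = (x - knots[i]) / d1 * bspline_basis(i, k - 1, knots, x)
    if d2 > 0.0:
        right = (knots[i + k + 1] - x) / d2 * bspline_basis(i + 1, k - 1, knots, x)
    return left + right

def clamped_knots(a, b, g, k):
    """g equidistant interior nodes plus k + 1 coincident nodes at each edge."""
    inner = np.linspace(a, b, g + 2)[1:-1]
    return np.concatenate((np.full(k + 1, a), inner, np.full(k + 1, b)))

k, g = 3, 5
knots = clamped_knots(0.0, 1.0, g, k)
n_basis = len(knots) - k - 1                      # g + k + 1 basis functions

# The basis functions sum to one everywhere inside [a, b).
sums = [sum(bspline_basis(i, k, knots, x) for i in range(n_basis))
        for x in (0.0, 0.13, 0.5, 0.99)]
```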

With a selected set of nodes, the spline is uniquely defined by a set of coefficients [21]. To calculate the coefficients that best approximate the original noisy array from n experimental points, it is necessary and sufficient to find the minimum of the functional:

or in the matrix form:

The minimum is reached when the gradient in the coefficients for the residual (the sum of the squares of the deviations of the calculated spline points from the experimental ones) is equal to zero:

Here the matrix A is block-diagonal. We define the operator 〈⋯, ⋯〉 of the scalar product:

and

Let us introduce the notation:

Then Eq. (7) and the whole problem are reduced to solving a simple, albeit multidimensional, system of linear equations:

A characteristic feature of such problems is that the matrix B is (2k + 1)-diagonal, since the scalar product \(\langle {N}_{i},{N}_{j}\rangle =0\) for \(\left|i-j\right|>k\). In this case, instead of standard methods for solving a system of linear algebraic equations (SLAE), such as Gaussian elimination, the solution can be found much more efficiently using the Cholesky decomposition [22]. Since the spline is meant not only to fit the primary data but also to smooth them, the so-called fluctuations of the spline have to be reduced. For this purpose, an additional requirement is introduced: to minimize the “jumps” of the derivatives of the spline polynomial up to the k-th order, i.e. the difference between the derivative to the left and the derivative to the right of each node.

This problem is again reduced to solving an SLAE with the Cholesky algorithm, but this time the matrix is degenerate. To avoid the resulting numerical errors, a small perturbation is introduced into the initial data (at a level of \({10}^{-7}\) of the main signal). The B-spline smoothing algorithm is described in detail in [23]; a Python implementation of the method is also given there. For mass preparation and processing of data (preprocessing and post-processing), we ported this algorithm to the VBA-Excel environment, which is well suited for these purposes.
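The least squares part of this procedure (without the additional penalty on derivative jumps) can be sketched as follows. This is neither the algorithm of [23] nor our VBA code, only a minimal NumPy illustration under stated assumptions: equidistant interior nodes, a dense Cholesky factorization via `numpy.linalg.cholesky` rather than a banded solver, and a small diagonal regularization `ridge` standing in for the perturbation mentioned above. The function names are hypothetical.

```python
import numpy as np

def basis_matrix(x, knots, k):
    """Matrix whose columns are the degree-k B-splines evaluated at x."""
    x = np.asarray(x, dtype=float)
    m = len(knots) - 1
    # Degree 0: indicator functions of the node intervals.
    B = np.array([(knots[i] <= x) & (x < knots[i + 1]) for i in range(m)],
                 dtype=float).T
    for d in range(1, k + 1):                       # raise the degree step by step
        Bn = np.zeros((len(x), m - d))
        for i in range(m - d):
            d1 = knots[i + d] - knots[i]
            d2 = knots[i + d + 1] - knots[i + 1]
            if d1 > 0.0:
                Bn[:, i] += (x - knots[i]) / d1 * B[:, i]
            if d2 > 0.0:
                Bn[:, i] += (knots[i + d + 1] - x) / d2 * B[:, i + 1]
        B = Bn
    return B

def fit_bspline(x, y, g=20, k=3, ridge=1e-7):
    """Least squares B-spline fit through the normal equations B c = r."""
    a = x.min()
    b = x.max() + 1e-9 * (x.max() - x.min())        # keep the last point inside [a, b)
    inner = np.linspace(a, b, g + 2)[1:-1]
    knots = np.concatenate((np.full(k + 1, a), inner, np.full(k + 1, b)))
    A = basis_matrix(x, knots, k)
    B = A.T @ A                                     # (2k + 1)-diagonal Gram matrix
    r = A.T @ y
    B_reg = B + ridge * np.mean(np.diag(B)) * np.eye(B.shape[0])
    L = np.linalg.cholesky(B_reg)                   # B_reg = L L^T
    c = np.linalg.solve(L.T, np.linalg.solve(L, r))
    return A @ c, c, B

rng = np.random.default_rng(4)
x = np.linspace(0.0, 10.0, 500)
y_clean = np.sin(x)
y_noisy = y_clean + 0.05 * rng.normal(size=x.size)
y_fit, coeffs, B = fit_bspline(x, y_noisy, g=20, k=3)

rms_noise = np.sqrt(np.mean((y_noisy - y_clean) ** 2))
rms_fit = np.sqrt(np.mean((y_fit - y_clean) ** 2))
```

The number of interior nodes `g` plays the role of the smoothing parameter here: fewer nodes give a smoother curve.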

Three similar quantities have to be distinguished:

1. Ny is the number of points in the experimental data array.

2. N = 250 is the number of interpolation points.

3. g is the number of internal nodes of the spline, which can be neither less than the spline order nor greater than the number of experimental points.

The order of the B-spline is most often chosen as 4 (a cubic spline, i.e. degree 3), since 3 is the minimal degree that satisfies the requirement of continuity of the second derivative at the connection points.

5.3 Frequency Filter Based on Discrete Cosine Transform (DCT)

This algorithm, with all the mathematics and example code suitable for use in MATLAB 2007, is described in Refs. [24, 25]. The method is based on the discrete cosine transform (DCT) and provides reliable smoothing of equally spaced data in one or more dimensions. It consists in minimizing a criterion that balances the fidelity to the data, measured by the residual sum of squares (RSS), and a penalty term (P) that reflects the roughness of the smoothed data. Thus, we have to minimize the function:

Double vertical bars denote the Euclidean norm, and the penalty term measures the roughness of the signal on the basis of second-order divided differences (a generalization of the second derivative to the discrete case).

D is a tridiagonal matrix defined by the formulas

For equidistant data, the matrix D takes the simplest form:

The expansion of the matrix D in terms of eigenvectors gives the representation:

where \(\Lambda =diag({\lambda }_{1 }, \dots , {\lambda }_{n})\) is a diagonal matrix of eigenvalues:

For a smoothed signal, the following expression is applied:

The components of the diagonal matrix Γ are given by the formula:

We further use the forward and inverse discrete cosine transform (DCT), which is similar to the fast Fourier transform but without its imaginary (sine) part. The forward DCT is:

The inverse DCT (IDCT) is:

The purpose of applying the forward and inverse cosine transforms is to select the optimal parameter s, which sets the cutoff of the frequency response Г:

It makes sense to vary the parameter s with a logarithmic step. Figure 2 shows how the frequency characteristic Г changes when s is varied over five orders of magnitude and how the smoothed spectrum is transformed.
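A minimal Python sketch of this filter for equidistant data is given below. It is an illustration rather than the code of Refs. [24, 25], and it rests on the following assumptions: the penalty is built from the second-difference matrix with rows (−1, 1), (1, −2, 1), …, (1, −1); its eigenvalues are taken as −2 + 2cos((i − 1)π/n); the frequency response is Г = 1/(1 + sλ²); and the orthonormal DCT-II is constructed explicitly as a matrix with NumPy. The function names are hypothetical. The result is cross-checked against a direct solution of the penalized least squares problem.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II matrix M, so that M @ y is the forward DCT of y."""
    j = np.arange(n)
    M = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * j[None, :] + 1) * j[:, None] / (2 * n))
    M[0, :] /= np.sqrt(2.0)
    return M

def dct_smooth(y, s):
    """Smoothed signal IDCT(Gamma * DCT(y)) for the smoothing parameter s."""
    n = len(y)
    M = dct_matrix(n)
    lam = -2.0 + 2.0 * np.cos(np.arange(n) * np.pi / n)   # eigenvalues of D
    gamma = 1.0 / (1.0 + s * lam ** 2)                     # frequency response
    return M.T @ (gamma * (M @ y))

def second_difference_matrix(n):
    """Second-difference operator D for equidistant data (reflecting edges)."""
    D = np.zeros((n, n))
    i = np.arange(n)
    D[i, i] = -2.0
    D[i[:-1], i[:-1] + 1] = 1.0
    D[i[1:], i[1:] - 1] = 1.0
    D[0, 0] = D[-1, -1] = -1.0
    return D

rng = np.random.default_rng(5)
x = np.linspace(0.0, 1.0, 200)
y = np.exp(-(x - 0.5) ** 2 / 0.01) + 0.05 * rng.normal(size=x.size)

s = 10.0 ** 1.5                                            # s = 10^p with p = 1.5
y_smooth = dct_smooth(y, s)

# Cross-check: minimizer of ||y_hat - y||^2 + s ||D y_hat||^2 found directly.
D = second_difference_matrix(len(y))
y_direct = np.linalg.solve(np.eye(len(y)) + s * D.T @ D, y)
```

For large arrays a fast DCT routine would replace the explicit matrix; the matrix form is used here only to keep the sketch self-contained.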

Fig. 2 (A) A set of spectral Gamma-functions Г vs. the harmonic number calculated for different values of the smoothing parameter \(s={10}^{p}\), where p is equal to: −1.0 (1), −0.5 (2), 0.0 (3), 0.5 (4), 1.0 (5), 2.0 (6), 3.0 (7), and 4.5 (8). (B) Calculated EPR spectra under B-splining by this technique at p: < 1.0 (a), 3.0 (b), 4.0 (c), 5.0 (d), and 7.0 (e)

At p < 1, no signal loss (and hence no smoothing) occurs. At p > 5, the drop in the modulating function Г (\(s={10}^{p}\)) is too sharp, and the “smoothing” is accompanied by the loss of the informative part of the signal. As an example, we show the result of B-splining for an experimental system (Fig. 3). Spectra a reproduce the low-field part of the rather noisy EPR spectra recorded 1 day after the preparation of the VO2+-modified TiO2 (anatase) nanosized photocatalyst [26].

It is clear that the curve with an excessive number of nodes (n = 120, curve 3b) is undersmoothed and reproduces almost all the noise of the initial signal. On the other hand, if the number of nodes is insufficient, the curve is oversmoothed and important details of the signal can be lost (n = 20, curve 3d). In our case, the spline with approximately 40 nodes (Fig. 3c) looks best prepared for further decomposition. The estimation of the “proper” number of nodes has a mathematical basis but nevertheless includes a heuristic component. In any case, any failure in the preprocessing of the initial data becomes critical at the step of eigenvalue discrimination.

6 Fine Recognition of EPR Spectra

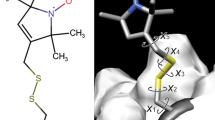

Some comments on the algorithm for obtaining the base spectra are in order. The eigenvectors extracted by the software (basis spectra) represent an arbitrary linear combination of the true spectra and can also contain negative intensities, which are devoid of physical meaning. An example of such a basis is shown in Fig. 4-1.

Fig. 4 Sequential processing of basis EPR spectra: 4-1, basis spectra which have not passed normalization (negative parts are observed); 4-2, normalized basis spectra with minimal mutual presence of components; 4-3, optimized basis spectra. Lines a, c, e denote signals of the high-field complex, and lines b, d, f are related to the low-field one

Both spectra on each subplot (1, 2, 3) are completely equivalent: they are the two components of a two-dimensional basis. Any pair of linearly independent combinations of the basis vectors is, in turn, also a basis. In the first pair (plot 1) we have an almost arbitrary construction returned by the program. The next step (plot 2) brings the basis to a positive norm. However, the so-called rotational ambiguity of the basis still remains. It is removed only in the third plot, after the Moore–Penrose transformation.

The presence of excursions into the negative region, as well as of coinciding peaks in both basis spectra, shows that neither of them belongs to an individual substance; each is a linear combination of the individual spectra. To normalize the basis, we have proposed an algorithm that cycles through two procedures: (1) bringing all spectra, one after another, into the positive region, and (2) sequential “removal” of common spectral peaks, so that, ideally, each line is contained in only one basis spectrum.

Bringing a spectrum into the positive region (procedure 1) involves iterating the following step: for each spectrum, the negative value largest in magnitude is found, and another basis spectrum, the one with the most positive value in the same spectral channel, is admixed to compensate this “dip”. The second procedure sequentially selects each spectrum and subtracts from it as much of the remaining spectra as possible while keeping it in the positive region.

The whole algorithm cyclically repeats procedures 1 and 2 for all basis spectra in turn, until the required accuracy is achieved. It gives a plausible set of basis spectra (Fig. 4-2), but the relative fraction of the initial reagent does not necessarily start from 1. Figure 4-3 reflects the correct ratio between the components. To fit the spectra to the expected kinetics \(A\to B\) for pure shares and mutual presence, it is necessary to use the Moore–Penrose inverse transformation [27,28,29,30], which gives kinetic curves from which the conversion rate constant can be determined (Fig. 4-3).
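Procedure 2 can be illustrated by a simplified Python sketch. It assumes that the basis spectra are already non-negative (i.e. procedure 1 has been completed) and makes a single pass over the basis; the function names, the threshold `eps` and the two-line test case are hypothetical and serve only to show the idea of subtracting the largest admissible weight.

```python
import numpy as np

def max_subtractable_weight(target, other, eps=1e-12):
    """Largest w >= 0 for which target - w * other stays non-negative."""
    mask = other > eps
    if not mask.any():
        return 0.0
    return max(0.0, float(np.min(target[mask] / other[mask])))

def remove_common_peaks(basis):
    """One pass of procedure 2: subtract from each spectrum as much of the others as possible."""
    B = np.array(basis, dtype=float)
    for i in range(len(B)):
        for j in range(len(B)):
            if i != j:
                w = max_subtractable_weight(B[i], B[j])
                B[i] -= w * B[j]
    return B

# Two well-separated lines and a basis in which they are mutually admixed.
x = np.linspace(0.0, 1.0, 201)
s1 = np.exp(-(x - 0.3) ** 2 / 0.005)
s2 = np.exp(-(x - 0.7) ** 2 / 0.005)
mixed_basis = np.vstack([s1 + 0.40 * s2,
                         s2 + 0.25 * s1])

cleaned = remove_common_peaks(mixed_basis)
cleaned_unit = cleaned / cleaned.max(axis=1, keepdims=True)   # unit peak height
```

For strongly overlapping lines the subtraction stops earlier, and the residual ambiguity has to be resolved with additional information, e.g. the kinetic model used in the next section.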

7 Scale and Rotational Ambiguity. Moore–Penrose Transform

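Let us briefly describe the algorithm for applying the Moore–Penrose transform and introduce the concept of a pseudoinverse matrix. The pseudoinverse \({{\varvec{A}}}^{+}\) of a (real) matrix A is the matrix for which the following four relationships hold:

\[{\varvec{A}}{{\varvec{A}}}^{+}{\varvec{A}}={\varvec{A}},\quad {{\varvec{A}}}^{+}{\varvec{A}}{{\varvec{A}}}^{+}={{\varvec{A}}}^{+},\quad {\left({\varvec{A}}{{\varvec{A}}}^{+}\right)}^{{\varvec{T}}}={\varvec{A}}{{\varvec{A}}}^{+},\quad {\left({{\varvec{A}}}^{+}{\varvec{A}}\right)}^{{\varvec{T}}}={{\varvec{A}}}^{+}{\varvec{A}}\]

It is important that the pseudoinverse of any matrix exists and is unique. For a square non-degenerate matrix, the pseudoinverse is the same as the inverse:

\[{{\varvec{A}}}^{+}={{\varvec{A}}}^{-1}\]

The following methods can be used to find the pseudoinverse matrix. If A is a matrix of full column rank, i.e. all its columns are linearly independent, then:

\[{{\varvec{A}}}^{+}={\left({{\varvec{A}}}^{{\varvec{T}}}{\varvec{A}}\right)}^{-1}{{\varvec{A}}}^{{\varvec{T}}}\tag{8}\]

If A is a matrix of full row rank, then \({{\varvec{A}}}^{+}\) is:

\[{{\varvec{A}}}^{+}={{\varvec{A}}}^{{\varvec{T}}}{\left({\varvec{A}}{{\varvec{A}}}^{{\varvec{T}}}\right)}^{-1}\tag{9}\]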
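These properties can be verified numerically. The sketch below assumes NumPy and random real test matrices of illustrative size; it compares the closed-form expressions for the full-column-rank and full-row-rank cases with the general-purpose routine `numpy.linalg.pinv` and checks the four defining relationships.

```python
import numpy as np

rng = np.random.default_rng(6)

# Tall matrix with linearly independent columns (full column rank).
A = rng.normal(size=(6, 3))
A_plus = np.linalg.inv(A.T @ A) @ A.T

# Wide matrix with linearly independent rows (full row rank).
R = rng.normal(size=(3, 6))
R_plus = R.T @ np.linalg.inv(R @ R.T)

# The four defining relationships of the pseudoinverse, checked for A.
penrose_ok = (np.allclose(A @ A_plus @ A, A)
              and np.allclose(A_plus @ A @ A_plus, A_plus)
              and np.allclose((A @ A_plus).T, A @ A_plus)
              and np.allclose((A_plus @ A).T, A_plus @ A))
```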

In our case, it is more convenient to assume that the sweep of the spectra along the magnetic field is oriented horizontally; the set of basis vectors of the reference spectra is then also horizontal, and the mixing matrix C extends vertically. Let us return to Eq. (1):

\[{\varvec{D}}\approx {{\varvec{C}}}_{\mathbf{o}\mathbf{l}\mathbf{d}}{{\varvec{S}}}_{\mathbf{o}\mathbf{l}\mathbf{d}}^{{\varvec{T}}}\tag{10}\]

The approximate equality sign means that we have neglected the error matrix, and the mixing matrix \({{\varvec{C}}}_{\mathbf{o}\mathbf{l}\mathbf{d}}\) was obtained in a rather arbitrary way in the course of solving the system of linear equations with an arbitrarily chosen Frobenius basis of eigenvectors \({{\varvec{S}}}_{\mathbf{o}\mathbf{l}\mathbf{d}}^{{\varvec{T}}}\). In addition to permutation arbitrariness, this basis also has a scale uncertainty and a rotational ambiguity. Hence, we have achieved the minimum of the mutual presence of spectral lines in the various reference spectra, but this does not mean that our pseudo-reference spectra are spectra of individual substances. They may turn out to be linear combinations of them, and in “blind recognition” we do not know which ones.

If we have some idea of the kinetics of the processes occurring in the mixed system, we can calculate the expected time dependence \({{\varvec{C}}}_{\mathbf{n}\mathbf{e}\mathbf{w}}\) for each of the individual substances. This time dependence must correspond to the new basis, so that the observed pattern of changes in the total spectra over time is preserved.

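We have to find the linear transformation matrix W that translates the existing old basis \({{\varvec{S}}}_{\mathbf{o}\mathbf{l}\mathbf{d}}^{{\varvec{T}}}\) into the desired, but as yet unknown, new basis \({{\varvec{S}}}_{\mathbf{n}\mathbf{e}\mathbf{w}}^{{\varvec{T}}}\), the decomposition in which gives the "correct" dependences of concentrations on time. The new factorization must reproduce the same data, \({{\varvec{C}}}_{\mathbf{n}\mathbf{e}\mathbf{w}}{{\varvec{S}}}_{\mathbf{n}\mathbf{e}\mathbf{w}}^{{\varvec{T}}}={{\varvec{C}}}_{\mathbf{o}\mathbf{l}\mathbf{d}}{{\varvec{S}}}_{\mathbf{o}\mathbf{l}\mathbf{d}}^{{\varvec{T}}}\) (cf. Eq. (10)). Therefore, we multiply both sides of this relation from the left by the pseudoinverse \({{\varvec{C}}}_{\mathbf{n}\mathbf{e}\mathbf{w}}^{+}\); since \({{\varvec{C}}}_{\mathbf{n}\mathbf{e}\mathbf{w}}\) has full column rank, \({{\varvec{C}}}_{\mathbf{n}\mathbf{e}\mathbf{w}}^{+}{{\varvec{C}}}_{\mathbf{n}\mathbf{e}\mathbf{w}}\) is the identity matrix and

\[{{\varvec{S}}}_{\mathbf{n}\mathbf{e}\mathbf{w}}^{{\varvec{T}}}={{\varvec{C}}}_{\mathbf{n}\mathbf{e}\mathbf{w}}^{+}{{\varvec{C}}}_{\mathbf{o}\mathbf{l}\mathbf{d}}{{\varvec{S}}}_{\mathbf{o}\mathbf{l}\mathbf{d}}^{{\varvec{T}}}={\varvec{W}}{{\varvec{S}}}_{\mathbf{o}\mathbf{l}\mathbf{d}}^{{\varvec{T}}},\quad {\varvec{W}}={{\varvec{C}}}_{\mathbf{n}\mathbf{e}\mathbf{w}}^{+}{{\varvec{C}}}_{\mathbf{o}\mathbf{l}\mathbf{d}}\]

Substituting for \({{\varvec{C}}}_{\mathbf{n}\mathbf{e}\mathbf{w}}^{+}\) its expression from Eq. (8), we obtain:

\[{{\varvec{S}}}_{\mathbf{n}\mathbf{e}\mathbf{w}}^{{\varvec{T}}}={\left({{\varvec{C}}}_{\mathbf{n}\mathbf{e}\mathbf{w}}^{{\varvec{T}}}{{\varvec{C}}}_{\mathbf{n}\mathbf{e}\mathbf{w}}\right)}^{-1}{{\varvec{C}}}_{\mathbf{n}\mathbf{e}\mathbf{w}}^{{\varvec{T}}}{{\varvec{C}}}_{\mathbf{o}\mathbf{l}\mathbf{d}}{{\varvec{S}}}_{\mathbf{o}\mathbf{l}\mathbf{d}}^{{\varvec{T}}}\]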

Thus, the transition from a basis giving some arbitrary expansion to a basis whose expansion yields a reasonable kinetic picture is carried out by the algebraic Moore–Penrose transformation, without serious computational resources and without delicate iterative minimization and fitting procedures.
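The whole basis adjustment can be illustrated on synthetic data. The Python sketch below is an assumption-based illustration, not our VBA implementation: it uses NumPy, a first-order reaction A → B with a rate constant that is taken as known, two Gaussian lines as the “true” spectra, and a truncated singular value decomposition as the source of the arbitrary “old” factorization. All names and numerical values are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(7)

# Synthetic data: first-order kinetics A -> B and two overlapping lines.
x = np.linspace(0.0, 1.0, 120)
S_true = np.vstack([np.exp(-(x - 0.45) ** 2 / 0.005),
                    np.exp(-(x - 0.55) ** 2 / 0.005)])
t = np.linspace(0.0, 10.0, 30)
k_rate = 0.3                                           # assumed rate constant
C_new = np.column_stack([np.exp(-k_rate * t), 1.0 - np.exp(-k_rate * t)])
D = C_new @ S_true + 0.002 * rng.normal(size=(30, 120))

# An arbitrary "old" factorization D ~ C_old @ S_old (here from a truncated SVD).
U, sv, Vt = np.linalg.svd(D, full_matrices=False)
C_old = U[:, :2] * sv[:2]
S_old = Vt[:2]                                         # contains negative lobes

# Basis adjustment: W = C_new^+ C_old, S_new = W S_old. No iterations involved.
W = np.linalg.pinv(C_new) @ C_old
S_new = W @ S_old

max_dev = np.max(np.abs(S_new - S_true))
```

In practice the rate constant is not known in advance; it enters through the kinetic model that defines the expected concentration profiles.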

We get the result without using iterative procedures!

8 Application of the Method to Some Real EPR Spectra

We illustrate the developed approach by applying it to several EPR systems.

For the first example we have chosen melamine–barbituric acid (M-BA) supramolecular self-organized assemblies, which are of interest as a pH-dependent organic radical trap material [31]. An EPR study using an X-band CW spectrometer demonstrated noticeable changes in the spectra upon varying the pH of the system as well as under illumination with UV and visible light, as one can see in Fig. 5; these results were partially reported in Refs. [31, 32].

The computer analysis of the complete set of X-band EPR spectra recorded at different pH values and concentrations revealed the existence of two types of stable radicals, R1 and R2, whose spectra are very close, hardly distinguishable separately and rather noisy (Fig. 5a–c). It is interesting that signal R2 was photosensitive, while R1 did not change under illumination. Analysis of the spectra using the developed computer method showed that the signals differ in the following parameters: gR1 = 2.0050 ± 0.0005 and gR2 = 2.0027 ± 0.0005, respectively; thus, the splitting between the centers of the two singlets, ΔB1–2, is equal to ca. 0.4 mT. Later, we were able to record the EPR spectrum of the M-BA sample prepared at pH 6.2 on a Q-band spectrometer; it is shown in Fig. 5 (insert). The values measured experimentally from this spectrum are gR1 = 2.0047 and gR2 = 2.0037, which is reasonably close to those estimated from the X-band data. The measured value of ΔB1–2 is 1.64 mT, which corresponds very well to the 0.4 mT calculated from the X-band spectra, given that the field separation of two lines with different g-factors grows in proportion to the microwave frequency. This result confirms the applicability of the developed method to the analysis of poorly resolved (overlapping) EPR spectra with low signal-to-noise ratios. The authors are thankful to Prof. Ekaterina V. Skorb (Infochemistry Scientific Center, ITMO University, Saint Petersburg, Russia) for providing us with samples of M-BA, and to Dr. Ruslan B. Zaripov (Zavoisky Physical-Technical Institute, FRC Kazan Scientific Center of RAS, Kazan, Russia) for recording the Q-band EPR spectrum.

Another example on applications of the methodology to a real system relates to the surface of TiO2 (anatase, Hombikat UV 100) nanoparticles modified by VOSO4 which formed three various vanadyl (VO2+) complexes coordinated to the titania particles by different number of the surface oxygen atoms [5]. The real situation was even more complicated due to the parallel formation of some areas with very high local concentration of such (VO2+)n-TiO2 clusters (aggregates), which content varied with the initial [VO2+]0/TiO2 ratio and also on the incubation time.

One of the main problems was how to characterize slow time-dependent transitions between the isolated individual complexes marked “A”, “B” and “C”, which components related to the parallel orientation in the magnetic field are shown in Fig. 6. Using the approach described above and after analysis of the experimental results, we could obtain the effective kinetic data about slow transformations of the initial complex “A” finally to complex “C”. Kinetics of these transitions are shown in Fig. 7. Both kinetics are exponential, of the first order, similar in the case of 2.65·1019 < [VO2+]0 < 1.25·1020 spin/g, and the calculated effective rate constant was equal to ca. 0.15 (day)–1 [5]. Thus, the application of this computational method to a spectroscopically complex composite substantially supplemented semiquantitative results presented in Ref. [26].

In the last example studying the influence of the mechanochemical activation (MCA) time of mixed binary metal oxides structure and properties, it was found that the process of material transformations strongly depends on the metal oxide nature as well as on time of treatment [33]. The process of milling has started from a coarse mixture of two oxides B2O3 and V2O5 in the molar ratio 2:1 characterized by a broad asymmetric single line related to the V4+ PCs which exist as highly concentrated aggregates in V2O5 oxide (Fig. 8 insert, a) and resulted as a mixture of the residual initial V4+ PCs and the main amount of the isolated VO2+ PCs with well resolved typical spectrum (Fig. 8).

Using our computational methodology, quantitative data were obtained from an EPR investigation of several binary mixed oxides prepared by the MCA method, containing V2O5 as a permanent component together with MoO3, TiO2, B2O3, Bi2O3, In2O3, or Tm2O3. Figure 9 shows the kinetic dependence of the initial and resulting compounds on milling time for the V2O5/2B2O3 mixture. The calculated transformation rate constant k was 0.036 ± 0.005 min^−1, while for the other systems k varied from 0.005 to 0.045 min^−1.
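One possible way to extract such a rate constant and its uncertainty from the resolved component fractions is a straight-line fit of the logarithm of the initial-component fraction against milling time. The sketch below is only an illustration under stated assumptions: the function name `fit_first_order`, the time grid, and the 2% multiplicative noise are hypothetical, the data are synthetic rather than measured, and the fitting procedure actually used for Fig. 9 is not specified in the text.

```python
import numpy as np

def fit_first_order(t, fraction):
    """Fit ln(fraction) = intercept - k*t; return k and its standard error."""
    coeffs, cov = np.polyfit(t, np.log(fraction), 1, cov=True)
    k = -coeffs[0]                  # slope of the log plot is -k
    k_err = np.sqrt(cov[0, 0])      # standard error of the slope
    return k, k_err

# Synthetic illustration only (not the measured data): decay with an assumed
# k = 0.036 1/min and 2% multiplicative noise on the fractions.
rng = np.random.default_rng(1)
t_min = np.linspace(0.0, 60.0, 13)
fraction_initial = np.exp(-0.036 * t_min) * (1.0 + 0.02 * rng.standard_normal(t_min.size))

k_est, k_err = fit_first_order(t_min, fraction_initial)
print(f"k = {k_est:.4f} +/- {k_err:.4f} 1/min")
```

The log-linear fit is chosen here because it needs no iterative optimization; it weights late, small fractions more heavily than a direct exponential fit would, which may or may not be appropriate for real data.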

9 Conclusion

A new universal method for the resolution of overlapping EPR spectra and the kinetic analysis of reactions occurring in the system is proposed. It combines smoothing by B-splines and/or discrete cosine transformation with linear algebra methods that find the eigenvalues and eigenvectors of the covariance matrix using the Frobenius normal form, followed by final basis fitting with the Moore–Penrose pseudo-inverse matrix. The method avoids disadvantages of previously proposed approaches; in particular, it does not require multiple iterations of the least squares method. Several specific examples of the analysis of experimental EPR spectra demonstrate the results of applying the proposed approach.
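The two linear-algebra stages named above can be illustrated with a minimal sketch on synthetic data. It is not the authors' implementation: `np.linalg.eigvalsh` stands in for the Frobenius-normal-form eigenvalue computation, the smoothing stage is omitted, the basis spectra are assumed to be already known (the paper's method determines them), an uncentered second-moment matrix is used in place of the covariance matrix, and all names (`significant_components`, `contributions`, `line`) are hypothetical.

```python
import numpy as np

def significant_components(data, rel_tol=1e-3):
    """Count eigenvalues of the second-moment matrix above rel_tol * largest."""
    second_moment = data @ data.T / data.shape[1]  # uncentered covariance-type matrix
    eigvals = np.linalg.eigvalsh(second_moment)    # ascending order, symmetric input
    return int(np.sum(eigvals > rel_tol * eigvals[-1]))

def contributions(data, basis):
    """Least-squares weights of the basis spectra in each row of data."""
    return data @ np.linalg.pinv(basis)            # Moore-Penrose pseudo-inverse

# Synthetic test set (not experimental data): two first-derivative Gaussian lines.
field = np.linspace(-10.0, 10.0, 400)

def line(center, width):
    return -(field - center) * np.exp(-((field - center) ** 2) / (2.0 * width ** 2))

basis = np.vstack([line(-1.0, 1.0), line(1.0, 1.5)])

# First-order conversion of component 1 into component 2 (assumed k = 0.15).
times = np.linspace(0.0, 20.0, 12)
frac_a = np.exp(-0.15 * times)
weights_true = np.column_stack([frac_a, 1.0 - frac_a])

rng = np.random.default_rng(0)
noise = 1e-3 * rng.standard_normal((times.size, field.size))
data = weights_true @ basis + noise                # one simulated spectrum per row

n_comp = significant_components(data)
weights_est = contributions(data, basis)
print(n_comp, np.abs(weights_est - weights_true).max())
```

Both steps are single direct matrix operations, which is the sense in which this kind of scheme avoids repeated least-squares iterations; the relative eigenvalue threshold `rel_tol` is an arbitrary choice for this example and would need tuning to the noise level of real spectra.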

References

W.H. Lawton, E.A. Sylvestre, Technometrics 13, 617 (1971)

A. De Juan, R. Tauler, Anal. Chim. Acta 1145, 59 (2021)

O.S. Borgen, B.R. Kowalski, Anal. Chim. Acta 174, 1 (1985)

M. Abou Fadel, A. de Juan, N. Touati, H. Vezin, L. Duponchel, J. Magn. Reson. 248, 27 (2014)

A.I. Kulak, S.O. Travin, A.I. Kokorin, Appl. Magn. Reson. 51, 1005 (2020)

T.K. Karakach, R. Knight, E.M. Lenz, M.R. Viant, J.A. Walter, Magn. Reson. Chem. 47, S105 (2009)

Y.B. Monakhova, S.A. Astakhov, A. Kraskov, S.P. Mushtakova, Chemometr. Intel. Lab. Systems 103, 108 (2010)

H. Parastar, M. Jalali-Heravi, R. Tauler, Trends Analyt. Chem. 31, 134 (2012)

A. de Juan, R. Tauler, Crit. Rev. Anal. Chem. 36, 163 (2006)

P. Tomza, W. Wrzeszcz, M.A. Czarnecki, J. Mol. Liq. 276, 947 (2019)

Y.B. Monakhova, A.M. Tsikin, T. Kuballa, D.W. Lachenmeier, S.P. Mushtakova, Magn. Reson. Chem. 52, 231 (2014)

D. Erdogmus, K.E. Hild, Y.N. Rao, J.C. Príncipe, Neural Comput. 16, 1235 (2004)

S. Amari, A. Cichocki, H.H. Yang, Proc Intern. Symp. Nonlinear Theory Appl. I, 37 (1995)

Y.B. Monakhova, S.P. Mushtakova, Zh. Analit. Khimii 72, 119 (2017). (in Russian)

Y.B. Monakhova, S.A. Astakhov, S.P. Mushtakova, J. Anal. Chem. 64, 495 (2009). (in Russian)

Y.B. Monakhova, S.A. Astakhov, S.P. Mushtakova, L.A. Gribov, J. Anal. Chem. 66, 361 (2011). (in Russian)

Y.B. Monakhova, I.V. Kuznetsova, S.P. Mushtakova, Zh. Analit. Khimii 66, 582 (2011). (in Russian)

E.V. Shikin, L.I. Plis, Curves and Surfaces on a Computer Screen. User Guide to Splines (Dialog-MIFI, Moscow, 1996). (in Russian)

M.G. Cox, J. Inst. Maths. Appl. 15, 95 (1972)

C. de Boor, J. Approx. Theory 6, 52 (1972)

K. Lee, Basics of CAD/CAM/CAE (Piter, St. Petersburg, 2004). (in Russian)

V.M. Verzhbitskiy, Fundamentals of Numerical Methods (High School, Moscow, 2009). (in Russian)

L. Piegl, W. Tiller, The NURBS Book, 2nd edn. (Springer, New York, 1996)

A. Efremov, V. Havran, H. Seidel, Robust and Numerically Stable Bezier Clipping Method for Ray Tracing NURBS Surfaces (Comp. Sci. Dept., Univ Saarland, Germany, 2005)

D. Garcia, Comput. Stat. Data Anal. 54, 1167 (2010)

A.I. Kokorin, V.I. Pergushov, A.I. Kulak, Catal. Lett. 150, 263 (2020)

R.G. Kozin, Algorithms of Numerical Methods of Linear Algebra and Their Software Implementation (NIYaU MIFI, Moscow, 2012). (in Russian)

I.K. Kostin, Voprosy Radioelektroniki, ser. RLT, No. 1, 130 (2004), eLIBRARY id: 9178222

J.C.A. Barata, M.S. Hussein, J. Phys. 42, 146 (2011)

V.N. Katsikis, D. Pappas, A. Petralias, Appl. Math. Comp. 217, 9828 (2011)

V.V. Shilovskikh, A.A. Timralieva, P.V. Nesterov, A.S. Novikov, P.A. Sitnikov, E.A. Konstantinova, A.I. Kokorin, E.V. Skorb, Chem. Eur. J. 26, 16603 (2020)

V.V. Shilovskikh, A.A. Timralieva, E.V. Belogub, E.A. Konstantinova, A.I. Kokorin, E.V. Skorb, Appl. Magn. Reson. 51, 939 (2020)

A.I. Kokorin, S.O. Travin, I.V. Kolbanev, E.N. Degtyarev, A.B. Borunova, G.A. Vorobieva, A.A. Dubinsky, A.N. Streletskii, Appl. Magn. Reson. (2021). https://doi.org/10.1007/s00723-021-01314-5

Acknowledgements

This work was performed within the framework of the Program of Fundamental Research of the Russian Academy of Sciences on the research issue of FRCCP RAS No. 0082-2019-0014 (State reg. AAAA-A20-120021390044-2).

Cite this article

Travin, S.O., Kokorin, A.I. Kinetic Analysis and Resolution of Overlapping EPR Spectra. Appl Magn Reson 53, 1069–1088 (2022). https://doi.org/10.1007/s00723-021-01426-y

DOI: https://doi.org/10.1007/s00723-021-01426-y