Abstract

Optimization has been a field of interest in science and engineering and many metaheuristic algorithms have been developed and applied to various problems. These, however, often require parameter adjustments to achieve a suitable performance. This paper proposes a new framework to improve the performance of metaheuristics, termed Multi-Stage Parameter Adjustment (MSPA), which integrates Metaheuristics, an efficient sampling approach, and Machine Learning. The sampling method utilized here known as Extreme Latin Hypercube Sampling (XLHS) is used to divide parameter spaces into equally probable subspaces, ensuring better coverage due to the continuous nature of variables. These parameters are then improved through a primary optimizer for different numbers of variables using a selected benchmark problem. The resultant data are utilized to train an artificial neural network (ANN). The adjusted metaheuristic algorithm is subsequently employed for structural optimization. In this respect, the input data for the ANN comprise the average of the lower and upper bounds of each subspace and the number of variables, while output data are the optimized values obtained using the Primary Optimizer, which does not require extensive parameter adjustments. To evaluate the efficiency of the proposed framework in comparison with the original version and some other algorithms in the literature, the parameters of Particle Swarm Optimization, chosen for its widespread applicability, are adjusted and tested against some mathematical benchmarks, two engineering, and two truss structural optimization problems. Results demonstrate the efficacy of the presented framework in enhancing the performance of metaheuristic algorithms, particularly in the optimal design of truss structures.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Optimization has found many applications in civil engineering and many algorithms have been developed and presented [1]. Typically, optimization can be employed in various fields including engineering design, computer sciences, and economics where no efficient algorithms exist for their hard problems [2,3,4].

In this regard, Metaheuristics can be employed as a problem-solving tool in computational optimization scenarios. Achieving successful outcomes when applying metaheuristics to structural optimization problems necessitates the discovery of optimal initial parameter configurations, a laborious and time-intensive undertaking [5]. Each metaheuristic possesses a predefined parameter set that must be appropriately initialized before execution and these influence the exploitation or exploration rate of the search space [6].

Various investigations were carried out regarding parameter adjustment for one specific metaheuristic. For instance, Wong [7] and, Fığlalı et al. [8] studied parameter tuning in Ant Colony Optimization (ACO) [9]. In this regard, Akay and Karaboga [10] investigated the performance of the Artificial Bee Colony (ABC) algorithm [11] by analyzing the influence of its parameters. Iwasaki et al. [12] proposed an adaptive Particle Swarm Optimization (PSO) [13] algorithm employing the average absolute velocity value of all particles as dynamic parameter tuning. Yang et al. [14] introduced a framework to fine-tune the Firefly algorithm [15]. Tan et al. [16] introduced a machine-learning-based approach to control parameters in the context of a metaheuristic algorithm, specifically the Bee Colony Optimization (BCO) algorithm [17].

As mentioned, there are various types of parameter adjustment methods that significantly affect the performance of metaheuristic algorithms. This can also enhance the process of structural optimization resulting in better results [18]. Hence, this paper introduces a new framework for the adjustment of metaheuristics parameters (MSPA) which employs the tremendous advantages of both metaheuristics and machine learning for optimal design.

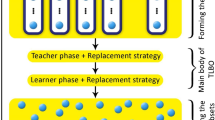

This framework consists of four stages, 1. Data Generation using Extreme Latin Hypercube Sampling (XLHS), 2. Parameter Optimization employing Primary Optimizer (PO), and 3. Training through machine learning (ANN) 4. Optimization of structures. It combines the benefits of XLHS, which is proposed in this study, for appropriate coverage of search spaces for continuous variables and the prediction capability of machine learning techniques to adjust initial parameter settings for new problem instances.

This paper is structured as follows: The following section briefly introduces Extreme Latin Hypercube Sampling, Primary Optimizer, and Machine Learning. Section 3 illustrates the proposed framework, and its phases, in further detail. In Sect. 4, some mathematical, engineering, and structural optimization problems are utilized to show the efficiency of the framework. In the last section, concluding remarks are highlighted.

2 Basic concepts and definitions

2.1 Extreme latin hypercube sampling (XLHS)

There are various forms of sampling in terms of the design of experiments such as random sampling, full factorial sampling, and its variations [19]. Since the variables of some optimization problems like metaheuristic parameters are continuous, such sampling that can appropriately represent the search space to find the optimum solution for these problems is required. Therefore, one form of sampling that can satisfy the needs of this framework, termed Extreme Latin Hypercube Sampling (XLHS) due to the inspiration of partitioning the search space by Latin Hypercube Sampling (LHS) [20], is proposed. In this form, the reasonable ranges of variables are determined and then they are partitioned according to the nature of the problem like LHS. Following with initializing populations in each part i.e., for each run, the initial populations are generated in the corresponding part to have appropriate coverage and representation of that part so that the optimal solution is found. The number of parts relates to the required number of datasets for training ANNs and also the range of each variable. In the context of two dimensions, XLHS with a population of 20 is illustrated in Fig. 1 where the search space is partitioned and in each one, 20 points are randomly generated as the initial population of the run corresponds to that part. The partitioning of the search space of the parameters is done according to their ranges and number. This partitioning is the division of search space into equal spaces (equally probable subspaces) and then the random population is generated in each subspace. The larger search space there is the more partitions are required. Besides, the number of populations is varied based on the number of partitions, i.e., smaller partitions need a lower number of populations and vice versa.

Basically, data sets using XLHS are generated to have an appropriate search all over the search space and more importantly, provide data sets for ANNs. In this regard, although there is more computational cost and time to collect data and train ANNs, this process is done for each algorithm once, and when the predictive model is made, the adjusted parameters for an optimization problem with a specific number of variables are obtained using the ANN model. To reduce the computational cost and time through the process of training, PSO parameters are optimized by employing GA for a mathematical benchmark problem, which in this study is Sphere (here F1) but can be altered, as its objective function. Also, for its applicability, the number of variables for this objective function varies in increments to have the potential of being applied to various optimization problems whose number of variables is different such as the ones investigated in this study.

2.2 Primary optimizer (PO)

In this stage, one metaheuristic algorithm whose parameters need not extensive adjustment, such as Genetic Algorithm [21], Colliding Bodies Optimization [22], etc., is selected as the PO to optimize the parameter of the secondary or main metaheuristic. To demonstrate the framework, from here on, GA, which has been selected for its popularity and vast employment in optimization problems specifically structural optimization [23, 24], is considered as the Primary Optimizer in this study but any other metaheuristic algorithms can be employed in studies. However, GA has some parameters such as mutation and crossover rates along with the number of population and iterations, these do not significantly influence the results for predefined parameters and a fixed number of function evaluations.

The genetic algorithm represents a search heuristic that draws inspiration from Charles Darwin's theory of natural evolution. This algorithm emulates the intricate process of natural selection. The mechanism of natural selection commences with identifying the most proficient individuals within a population. This algorithm encompasses five distinct phases, as presented in Fig. 2.

2.3 Machine learning (ML)

Machine learning, a subset of artificial intelligence, empowers computers to make predictions by learning from available data and algorithms, allowing them to autonomously improve their performance without explicit programming [25]. In this study, Artificial Neural Networks (ANN) are utilized as a form of machine learning for generating optimized data, while other forms depending on the problem and secondary metaheuristic can be used. The structure of an ANN consisting of just one hidden layer, which has n–H neurons, is presented in Fig. 3.

Artificial neural networks have been used for various applications in engineering such as structural optimization whose pioneering studies were done by Kaveh et al. [26]. Besides, other applications with respect to civil and structural engineering [27,28,29] include data classification [30], magnitude prediction [31, 32], and pattern recognition [33, 34] through a process of learning known as training.

In the present study, a feed-forward ANN was employed, utilizing a standard form of neurons. This neural network, equipped with appropriately adjusted weight magnitudes, calculates an approximate mathematical nonlinear relationship between the input layer (\({I}_{ni}\)) and the output layer (\({O}_{ui}\)). The inputs are multiplied by these weighted values (\({w}_{ij}\)), and the outcomes of each neuron layer are combined with a bias term (\({B}_{i}\)), as shown below:

where f is the activation function.

3 The proposed framework

This research proposes a new framework that tackles the parameter adjustment of metaheuristics issues utilizing a combination of XLHS, Primary Optimizer (GA), and Machine Learning (ANN) for optimal design. This encompasses four stages, whose initial is data generation through Extreme Latin Hypercube Sampling, proceeding with optimization employing Primary Optimization (GA) to optimize the secondary metaheuristic parameters for the objective function of the benchmark problem. Then, ANN models are developed to predict the optimum parameters and lastly, the main optimization is performed. To present a thorough articulation, the aforementioned process with its stages is illustrated in detail through its application in PSO algorithm owing to the fact that PSO has many extensions and applications; and most metaheuristic algorithms proceed likewise so, if MSPA enhances PSO performance, its extensions and other metaheuristics performance can be improved. This process is represented in Fig. 4.

3.1 Generating data

The first step is to determine the range of each parameter for extracting its upper and lower bounds. Then Extreme Latin Hypercube Sampling is applied to the obtained bounds to generate a complete data set. PSO parameters and their reasonable ranges are given in Table 1. There are also other parameters like the number of particles and the number of iterations which directly affect the number of objective function evaluations and in the optimization problems when this number increases, mostly a better answer is found. So, in this study, these parameters are fixed and only the ones in Table 1 are adjusted to have a fair comparison with the same number of function evaluations.

3.2 Processing data

Having prepared data sets, the genetic algorithm, which is a potent tool for optimization to find the adjusted parameters, is applied. To do so, the objective function of the considered metaheuristic, which here is PSO, is also the objective function of GA. However, in GA, the variables are PSO parameters called alternative variables, and PSO variables are the ones that correspond to the benchmark problem. As stated earlier, the Sphere function was used to adjust the PSO parameters because it requires much less computational costs compared with structural optimization problems. Besides, the parameters in increments are optimized for different numbers of variables so that they can be applied to various optimization problems such as structural optimization.

There is one loop for each increment of the data set. In each loop, the initialization of the GA population is limited to the lower and upper bounds of each increment according to the data set i.e., the partitions as depicted in Fig. 1. Although their overall lower and upper bounds through optimization are the primary ones, as supplied in Table 1. Finally, the outcome of GA is the best design of variables, PSO adjusted parameters, resulting in the best cost of PSO objective function, which here is Sphere. The initial objective function values using each increment’s upper and lower bounds and their optimized ones are shown in Fig. 5 illustrating the influence of parameter adjustment on the best cost value and metaheuristics performance.

3.3 Training data

After processing data, the adjusted parameters of PSO for each increment with different numbers of variables are obtained. As mentioned in the introduction section, ANNs are powerful tools in terms of prediction, so this capability is utilized to develop a predicting model that can be used to adjust any initial metaheuristic parameters. In this respect, the average values of the increments (average values of parameters for each part) and the number of variables are input data, and their corresponding adjusted parameters are considered output data. Although there can be different numbers of hidden layers, to avoid the complexity of ANN structure and the satisfactory accuracy of the developed model, one hidden layer is chosen, as shown in Fig. 3. This network was trained with different numbers of neurons in its hidden layer and as can be seen in Fig. 6, the correlation coefficient of the model almost converged with 19 neurons (0.995), and no further enhancement was observed; so, its number of neurons was selected 19. Furthermore, the Levenberg–Marquardt algorithm, which is suitable for nonlinear least squares problems, is employed for training artificial neural networks, and tangent hyperbolic and pure linear are activation functions of hidden and output layers, respectively. To avoid overfitting, N-fold cross-validation is utilized. In Fig. 7, the regressions of ANN in training, validation, and test are presented, and their corresponding error histogram is depicted in Fig. 8.

4 Mathematical, engineering, and structural optimization examples

As mentioned earlier, the proposed framework has been applied to PSO to evaluate its performance against several numerical, engineering, and structural optimization problems. The comparison made is due to the results obtained utilizing default and optimized parameters of PSO with other studies employing PSO variations and other state-of-the-art metaheuristic algorithms. Statistical measures, encompassing averages and standard deviations, are meticulously scrutinized. Additionally, the convergence rate of the algorithms is thoroughly deliberated during this phase evaluating the algorithms' performance.

4.1 Mathematical benchmark problems

In this section, PSO parameters have been adjusted, and its performance has been tested against some mathematical problems, including unimodal, multimodal, and multimodal with fixed dimensions. These functions are presented in Table 2, where their formulas, dimension, range, and global minimum are listed as well. Also, the 3D representations of these functions are depicted in Fig. 9. In PSO, the number of particles is 50, and the maximum iteration for each run is considered 200. The algorithm is executed 30 times independently for each problem to present a fair comparison. The corresponding results consisting of the best, average, and standard deviation for both default and adjusted PSO are mentioned in Table 3. As can be observed, there is a significant improvement in PSO performance in all types of mathematical optimization benchmark problems, for instance, in F1, the best solution improved from 0.00439 to 5.29E-12, demonstrating the efficiency of the proposed model in enhancing metaheuristics performance.

4.2 Engineering optimization problems

We further examined the influence of the proposed framework on PSO for solving two constrained engineering design problems with continuous and discrete variables: pressure vessel design and compound gear design. To have a fair comparison, the adjusted PSO is executed 30 times independently, and their best cost, worst cost, mean, and standard deviation were obtained. Moreover, the best design related to the best cost of each is presented and compared to the ones corresponding to other algorithms. The constraints are implemented employing the penalty function, and to solve the discrete problem, the position of particles is rounded in each iteration. Some of the comparing results were in the work of Kaveh and Dadras [35]. The problems are explained in the following.

4.2.1 Pressure vessel design

The first engineering problem under investigation within this study pertains to the intricacies of pressure vessel design, as depicted in Fig. 10. The main objective function herein resides in the minimization of the cost associated with the pressure vessel design; a challenge explored in [36]. To engender the optimization process, four design variables come to the fore: Ts or x1 (representing shell thickness), Th or x2 (denoting head thickness), R or x3 (connoting inner radius), and L or x4 (signifying cylinder length). The mathematical model of this pressure vessel design problem is given as follows.

Consider \(\overrightarrow{{\varvec{X}}}=\left[{x}_{1},{x}_{2},{x}_{3},{x}_{4}\right]=[{T}_{s},{T}_{h},R,L]\)

Minimize \({f}_{cost}\left(\overrightarrow{{\varvec{X}}}\right)=0.6224{x}_{1}{x}_{3}{x}_{4}+1.7781{{x}_{2}x}_{3}^{2}+3.1661{x}_{1}^{2}{x}_{4}+19.84{x}_{1}^{2}{x}_{3}\)

Subjected to:

Variable range: \(0\le {x}_{1},{x}_{2}\le 99\) and \(10\le {x}_{3},{x}_{4}\le 200\)

The optimal design for this problem is compared with some well-known algorithms like RSA [37], TLBO [38], GSA [39], PSO [13], GA [21], and some variations of PSO including CPSO[40], HPSO [41], G-QPSO [42], and PSO-DE [43]. According to the results of the pressure vessel design problem, as presented in Table 4, the adjusted PSO achieved better results. The best cost function value achieved by the adjusted PSO was 5881.39517, which is not only better than the default and hybrid PSOs but also other algorithms as well as the obtained average cost of 6068.024. Also, the standard deviation for 30 runs was improved by almost 84% compared with the default PSO, demonstrating the proposed framework's efficiency.

4.2.2 Compound gear design

Finally, we confront an exemplar of a discrete design problem within the domain of mechanical engineering, as addressed in [44]. The aim is to find the minimization of the gear ratio, a parameter concretely defined as the ratio between the angular velocity of the output shaft and that of the input shaft. Figure 11 illustrates the compound gear whose number of teeth is taken as discrete variable. The lower and upper bounds of the variables are 12 and 60, respectively. The ensuing mathematical formulation represents this optimization problem.

Consider \(\overrightarrow{{\varvec{X}}}=\left[{x}_{1},{x}_{2},{x}_{3},{x}_{4}\right]=[{n}_{A},{n}_{B},{n}_{C},{n}_{D}]\)

Minimize \({f}_{\text{cost}}\left(\overrightarrow{{\varvec{X}}}\right)={\left(\frac{1}{6.931}-\frac{{{x}_{3}x}_{2}}{{x}_{1}{x}_{4}}\right)}^{2}\)

Discrete variable range: \(12\le {x}_{1},{x}_{2},{x}_{3},{x}_{4}\le 60\)

The optimal design of adjusted PSO is compared to the results of some famous algorithms such as GA [21], PSO [13], ICA [45], MFO [46], BBO [47], CBO [22], and NNA [48], which were reported in the work of Kaveh et al. [35]. Table 5 provides these results regarding the compound gear design problems for some known algorithms and the present study. It can be seen that the adjusted PSO could find the global optima like some of the comparing algorithms which is 2.7009E−12. Also, the obtained average cost for the adjusted and default PSO was enhanced noticeably from 7.9383E−08 to 5.6471E−10 which is the best among the mentioned algorithms. This improvement is observed in the worst cost from 1.0222E−06 to 2.3576E−09 showing the influence of the adjustment.

4.3 Structural optimization problems

This section presents two illustrative instances of optimal design of space truss structures, namely the 72-bar spatial truss, and the 120-bar dome truss. Subsequently, a comparative analysis is conducted between the outcomes of the default and adjusted Particle Swarm Optimization, and other studies conducted likewise using the state-of-art algorithms showcasing the efficacy of the proposed framework. The penalty function has been employed for constraint handling in the optimization process. To have a fair comparison 30 independent runs were executed.

For truss structures, the constraints are as follows:

where m is the number of nodes; nc denotes the number of compression elements; σi and δi are the stress and nodal deflection, respectively; σib represents allowable buckling stress in member i when it is in compression.

4.3.1 The 72-bar spatial truss

The 72-bar spatial truss configuration illustrated in Fig. 12 has been the subject of an investigation by various scholars, including Schmit and Farshi [49], Khot and Berke [50], Lee and Geem [51], Li et al. [52], Degertekin and Hayalioglu [53], Talatahari et al. [54], and Kaveh et al. [55]. The material possesses a density of 0.1 lb/in.3 and an elasticity modulus of 10,000 ksi. The individual members are exposed to stress thresholds within the range of ± 25 ksi, while the uppermost nodes encounter displacement constraints of ± 0.25 in. in both the x and y directions.

The structural framework consists of 72 members, categorized into 16 groups as follows: (1) A1–A4, (2) A5–A12, (3) A13–A16, (4) A17–A18, (5) A19–A22, (6) A23–A30, (7) A31–A34, (8) A35–A36, (9) A37–A40, (10) A41–A48, (11) A49–A52, (12) A53–A54, (13) A55–A58, (14) A59–A66 (15) A67–A70, (16) A71–A72. In Case 1, the prescribed minimum cross-sectional area for each member is set at 0.1 in2, while in Case 2, the stipulated minimum cross-sectional area is 0.01 in2 for each member. These load cases are presented in Table 6.

As presented in Table 7, the adjusted PSO found the lightest design with a value of 369.6438 lb and the best average and standard deviation. It also could converge with a much smaller number of analyses which is vividly shown in Fig. 13. The related nodal displacement ratios for the active degrees of freedom and the elemental stress ratios are presented in Fig. 14.

This 72-bar spatial truss was also subjected to both load cases, as listed in Table 6, and the same table and figures are presented for this scenario. Figure 15 compares the convergence rates for the adjusted PSO and the aforementioned algorithms under these load cases. It is clear that the adjusted PSO had a better convergence compared with PSOs and its rate is acceptable and comparable with other algorithms having considered that its design is the lightest value 363.839441 lb, as presented in Table 8. Also, the ratios for nodal displacement and elemental stress for the 72-bar spatial truss are plotted in Fig. 16.

4.3.2 The 120-bar dome truss

The last structural optimization problem is a dome space truss. The configuration of the dome truss structure is displayed in Fig. 17. The design parameters are presented in Table 9, and the allowable stresses for member i are defined according to [56] as follows:

where σc is calculated as follows:

where fy is the yield stress, E is the elastic modulus, Cc is the critical slenderness ratio (\({C}_{c}=\sqrt{2{\pi }^{2}E/{f}_{y}}\)), λi is the slenderness ratio (\({\lambda }_{i} =k{L}_{i}/{r}_{i}\)), k is the effective length factor, and ri is the radius of gyration. The members are divided into 7 groups, as represented in Table 10. The structure is subjected to the vertical loads at all unsupported nodes as follows: − 13.49 kips at node 1, − 6.744 kips at nodes 2 through 14, and − 2.248 kips at the remaining ones.

In this optimization problem, likewise other ones presented in this study, PSO parameters have been adjusted employing the framework. Then, the PSO algorithm was applied to optimize the weight of the 120-bar space truss. The corresponding results consisting of the best, the average, the standard deviation, and the number of analyses of the adjusted and the PSO variations by Kaveh et al. [55, 57, 58], Talatahari et al. [54], and Jafari et al. [59] are provided in Table 10. It can be observed that by adjusting the parameters, PSO carried out much better performance in not only the values but also standard deviation showing the reliability of the algorithm for improving a basic version of an algorithm. For instance, as is shown in Fig. 18, the adjustment led to convergence after almost 3000 analyses while for PSO [54] and PSO [59] was 15,000. Also, the best design of the adjusted PSO was satisfactory, and just HPSSO [55] and PSOPC [59] could reach a lighter design with a mere small difference. Moreover, the related stress and displacement ratios for the 120-bar dome truss using the adjusted PSO when subjected to the nodal loads are presented in Fig. 19.

5 Concluding remarks

In this study, a framework for the optimal design using metaheuristic parameter adjustment, termed Multi-Stage Parameter Adjustment (MSPA) was proposed. This framework consists of four stages: data generation, Primary Optimizer, data processing, and optimization. To perform this, initially, parameter ranges were determined then, to generate a data set, XLHS was utilized to completely cover the search space of continuous variables (parameters) and prepare data for machine learning. Secondly, the Primary Optimizer (GA), whose parameters do not require extensive adjustment, was employed for each increment to optimize the main optimizer’s (PSO) parameter based on the benchmark problem for different numbers of variables. In the third stage, an ANN model was developed whose input data is the average values of the increments (average values of parameters for each part) and the number of variables, and their corresponding adjusted parameters are considered output data. Lastly, structural optimization employing the adjusted metaheuristic was performed. This adjustment was tested against some mathematical, which were unimodal, multimodal, and multimodal with fixed dimensions, two engineering, and two structural optimization problems. The results demonstrate that the proposed framework enhanced the performance of the metaheuristics in the majority of optimization problems, even up to about 10E + 6 times smaller value in the mathematical optimization problem (F1), which shows the efficiency of the presented framework (MSPA) and its influence led to the results which were better than the basic PSO and even most of the other state-of-the-art algorithms. As explained earlier, this framework is flexible in the selection of Primary Optimizer and Machine Learning method, and this establishes the effectiveness and applicability of MSPA.

References

Kaveh, A.: Advances in metaheuristic algorithms for optimal design of structures. Springer (2014). https://doi.org/10.1007/978-3-319-46173-1

Yang, X.-S.: Engineering optimization: an introduction with metaheuristic applications. John Wiley & Sons (2010).

Sherif, K., Witteveen, W., Puchner, K., Irschik, H.: Efficient topology optimization of large dynamic finite element systems using fatigue. AIAA J. 48, 1339–1347 (2010). https://doi.org/10.2514/1.45196

Kugi, A., Schlacher, K., Irschik, H.: Optimal control of nonlinear parametrically excited beam vibrations by spatially distributed sensors and actors. In: International Design Engineering Technical Conferences and Computers and Information in Engineering Conference. p. V01CT12A020. American Society of Mechanical Engineers (1997). https://doi.org/10.1115/DETC97/VIB-4171

Eiben, Á.E., Hinterding, R., Michalewicz, Z.: Parameter control in evolutionary algorithms. IEEE Trans. Evol. Comput. 3, 124–141 (1999). https://doi.org/10.1109/4235.771166

Bäck, T., Schwefel, H.-P.: An Overview of Evolutionary Algorithms for Parameter Optimization. Evol. Comput. 1, 1–23 (1993). https://doi.org/10.1162/evco.1993.1.1.1

Wong, K.Y.: Parameter tuning for ant colony optimization: A review. In: 2008 International Conference on Computer and Communication Engineering. pp. 542–545. IEEE (2008). https://doi.org/10.1109/ICCCE.2008.4580662

Fığlalı, N., Özkale, C., Engin, O., Fığlalı, A.: Investigation of ant system parameter interactions by using design of experiments for job-shop scheduling problems. Comput. Ind. Eng. 56, 538–559 (2009). https://doi.org/10.1016/j.cie.2007.06.001

Dorigo, M., Birattari, M., Stutzle, T.: Ant colony optimization. IEEE Comput. Intell. Mag. 1, 28–39 (2006). https://doi.org/10.1109/MCI.2006.329691

Akay, B., Karaboga, D.: Parameter tuning for the artificial bee colony algorithm. In: Computational Collective Intelligence. Semantic Web, Social Networks and Multiagent Systems: First International Conference, ICCCI 2009, Wrocław, Poland, October 5–7, 2009. Proceedings 1. pp. 608–619. Springer (2009). https://doi.org/10.1007/978-3-642-04441-0_53

Karaboga, D., Akay, B.: A comparative study of artificial bee colony algorithm. Appl. Math. Comput. 214, 108–132 (2009). https://doi.org/10.1016/j.amc.2009.03.090

Iwasaki, N., Yasuda, K., Ueno, G.: Dynamic parameter tuning of particle swarm optimization. IEEJ Trans. Electr. Electron. Eng. 1, 353–363 (2006). https://doi.org/10.1002/tee.20078

Eberhart, R., Kennedy, J.: Particle swarm optimization. In: Proceedings of the IEEE international conference on neural networks. pp. 1942–1948. Citeseer (1995). https://doi.org/10.1109/ICNN.1995.488968

Yang, X.-S., Deb, S., Loomes, M., Karamanoglu, M.: A framework for self-tuning optimization algorithm. Neural Comput. Appl. 23, 2051–2057 (2013). https://doi.org/10.1007/s00521-013-1498-4

Yang, X.-S.: Firefly algorithms for multimodal optimization. In: International symposium on stochastic algorithms. pp. 169–178. Springer (2009). https://doi.org/10.1007/978-3-642-04944-6_14

Tan, C.G., Choong, S.S., Wong, L.-P.: A machine-learning-based approach for parameter control in bee colony optimization for traveling salesman problem. In: 2021 International Conference on Technologies and Applications of Artificial Intelligence (TAAI). pp. 54–59. IEEE (2021). https://doi.org/10.1109/TAAI54685.2021.00019

Teodorović, D.: Bee colony optimization (BCO). In: Innovations in swarm intelligence. pp. 39–60. Springer (2009). https://doi.org/10.1007/978-3-642-04225-6_3

Huynh, T.N., Do, D.T.T., Lee, J.: Q-Learning-based parameter control in differential evolution for structural optimization. Appl. Soft Comput. 107, 107464 (2021). https://doi.org/10.1016/j.asoc.2021.107464

Fisher, R.A., Fisher, R.A., Genetiker, S., Fisher, R.A., Genetician, S., Britain, G., Fisher, R.A., Généticien, S.: The design of experiments. Oliver and Boyd Edinburgh (1966)

McKay, M.D., Beckman, R.J., Conover, W.J.: A comparison of three methods for selecting values of input variables in the analysis of output from a computer code. Technometrics 42, 55–61 (2000). https://doi.org/10.1080/00401706.2000.10485979

Sastry, K., Goldberg, D., Kendall, G.: Genetic algorithms. Search methodologies: Introductory tutorials in optimization and decision support techniques. 97–125 (2005). https://doi.org/10.1007/0-387-28356-0_4

Kaveh, A., Mahdavi, V.R.: Colliding bodies optimization: a novel meta-heuristic method. Comput. Struct. 139, 18–27 (2014). https://doi.org/10.1016/j.compstruc.2014.04.005

Rahami, H., Kaveh, A., Gholipour, Y.: Sizing, geometry and topology optimization of trusses via force method and genetic algorithm. Eng. Struct. 30, 2360–2369 (2008). https://doi.org/10.1016/j.engstruct.2008.01.012

Khatri, C.B., Yadav, S.K., Thakre, G.D., Rajput, A.K.: Design optimization of vein-bionic textured hydrodynamic journal bearing using genetic algorithm. Acta Mech. 235, 167–190 (2024). https://doi.org/10.1007/s00707-023-03734-9

Jordan, M.I., Mitchell, T.M.: Machine learning: Trends, perspectives, and prospects. Science 1979(349), 255–260 (2015). https://doi.org/10.1126/science.aaa8415

Iranmanesh, A., Kaveh, A.: Structural optimization by gradient-based neural networks. Int J Numer Methods Eng. 46, 297–311 (1999)

Kaveh, A., Dadras Eslamlou, A., Javadi, S.M., Geran Malek, N.: Machine learning regression approaches for predicting the ultimate buckling load of variable-stiffness composite cylinders. Acta Mech. 232, 921–931 (2021). https://doi.org/10.1007/s00707-020-02878-2

You, L.-F., Zhang, J.-G., Zhou, S., Wu, J.: A novel mixed uncertainty support vector machine method for structural reliability analysis. Acta Mech. 232, 1497–1513 (2021). https://doi.org/10.1007/s00707-020-02906-1

Volchok, D., Danishevskyy, V., Slobodianiuk, S., Kuchyn, I.: Fuzzy sets application in the problems of structural mechanics and optimal design. Acta Mech. 234, 6191–6204 (2023). https://doi.org/10.1007/s00707-023-03713-0

Yuan, X., Chen, G., Jiao, P., Li, L., Han, J., Zhang, H.: A neural network-based multivariate seismic classifier for simultaneous post-earthquake fragility estimation and damage classification. Eng. Struct. 255, 113918 (2022). https://doi.org/10.1016/j.engstruct.2022.113918

He, Z.C., Peng, Y., Han, J., et al.: A Cluster and Search Stacking Algorithm (CSSA) for predicting the ultimate bearing capacity of an HSS column. Acta Mech. 234, 1627–1648 (2023). https://doi.org/10.1007/s00707-022-03446-6

Kaveh, A., Eskandari, A., Movasat, M.: Buckling resistance prediction of high-strength steel columns using Metaheuristic-trained Artificial Neural Networks. In: Structures. p. 104853. Elsevier (2023). https://doi.org/10.1016/j.istruc.2023.07.043

Taha, M.M.R., Lucero, J.: Damage identification for structural health monitoring using fuzzy pattern recognition. Eng. Struct. 27, 1774–1783 (2005). https://doi.org/10.1016/j.engstruct.2005.04.018

Satpathy, R.P.K., Kumar, K., Hirwani, C.K., Kumar, V., Kumar, E.K., Panda, S.K.: Computational deep learning algorithm (vision/frequency response)-based damage detection in engineering structure. Acta. Mech. 234, 5919–5935 (2023). https://doi.org/10.1007/s00707-023-03709-w

Kaveh, A., Eslamlou, A.D.: Water strider algorithm: A new metaheuristic and applications. In: Structures. pp. 520–541. Elsevier (2020). https://doi.org/10.1016/j.istruc.2020.03.033

Sandgren, E.: Nonlinear integer and discrete programming in mechanical design. In: International design engineering technical conferences and computers and information in engineering conference. pp. 95–105. American Society of Mechanical Engineers (1988). https://doi.org/10.1115/DETC1988-0012

Abualigah, L., Abd Elaziz, M., Sumari, P., Geem, Z.W., Gandomi, A.H.: Reptile Search Algorithm (RSA): A nature-inspired meta-heuristic optimizer. Expert Syst. Appl. 191, 116158 (2022). https://doi.org/10.1016/j.eswa.2021.116158

Rao, R.V., Savsani, V.J., Vakharia, D.P.: Teaching–learning-based optimization: a novel method for constrained mechanical design optimization problems. Comput. Aided Des. 43, 303–315 (2011). https://doi.org/10.1016/j.cad.2010.12.015

Rashedi, E., Nezamabadi-Pour, H., Saryazdi, S.: GSA: a gravitational search algorithm. Inf. Sci. (N Y). 179, 2232–2248 (2009). https://doi.org/10.1016/j.ins.2009.03.004

He, Q., Wang, L.: An effective co-evolutionary particle swarm optimization for constrained engineering design problems. Eng. Appl. Artif. Intell. 20, 89–99 (2007). https://doi.org/10.1016/j.engappai.2006.03.003

He, Q., Wang, L.: A hybrid particle swarm optimization with a feasibility-based rule for constrained optimization. Appl. Math. Comput. 186, 1407–1422 (2007). https://doi.org/10.1016/j.amc.2006.07.134

dos Santos Coelho, L.: Gaussian quantum-behaved particle swarm optimization approaches for constrained engineering design problems. Expert Syst. Appl. 37, 1676–1683 (2010). https://doi.org/10.1016/j.eswa.2009.06.044

Liu, H., Cai, Z., Wang, Y.: Hybridizing particle swarm optimization with differential evolution for constrained numerical and engineering optimization. Appl. Soft Comput. 10, 629–640 (2010). https://doi.org/10.1016/j.asoc.2009.08.031

Kannan, B.K., Kramer, S.N.: An augmented Lagrange multiplier based method for mixed integer discrete continuous optimization and its applications to mechanical design. J. Mech. Des (1994). https://doi.org/10.1115/1.2919393

Atashpaz-Gargari E, Lucas C (2007) Imperialist competitive algorithm: an algorithm for optimization inspired by imperialistic competition. In: 2007 IEEE congress on evolutionary computation. pp. 4661–4667. IEEE. https://doi.org/10.1109/CEC.2007.4425083

Mirjalili, S.: Moth-flame optimization algorithm: A novel nature-inspired heuristic paradigm. Knowl Based Syst. 89, 228–249 (2015). https://doi.org/10.1016/j.knosys.2015.07.006

Simon, D.: Biogeography-based optimization. IEEE Trans. Evol. Comput. 12, 702–713 (2008). https://doi.org/10.1109/TEVC.2008.919004

Sadollah, A., Sayyaadi, H., Yadav, A.: A dynamic metaheuristic optimization model inspired by biological nervous systems: Neural network algorithm. Appl. Soft Comput. 71, 747–782 (2018). https://doi.org/10.1016/j.asoc.2018.07.039

Schmit, L.A., Jr., Farshi, B.: Some approximation concepts for structural synthesis. AIAA J. 12, 692–699 (1974). https://doi.org/10.2514/3.49321

Khot, N.S., Berke, L.: Structural optimization using optimality criteria methods. (1984)

Lee, K.S., Geem, Z.W.: A new structural optimization method based on the harmony search algorithm. Comput. Struct. 82, 781–798 (2004). https://doi.org/10.1016/j.compstruc.2004.01.002

Li, L.-J., Huang, Z.B., Liu, F., Wu, Q.H.: A heuristic particle swarm optimizer for optimization of pin connected structures. Comput. Struct. 85, 340–349 (2007). https://doi.org/10.1016/j.compstruc.2006.11.020

Degertekin, S.O., Hayalioglu, M.S.: Sizing truss structures using teaching-learning-based optimization. Comput. Struct. 119, 177–188 (2013). https://doi.org/10.1016/j.compstruc.2012.12.011

Talatahari, S., Kheirollahi, M., Farahmandpour, C., Gandomi, A.H.: A multi-stage particle swarm for optimum design of truss structures. Neural Comput. Appl. 23, 1297–1309 (2013). https://doi.org/10.1007/s00521-012-1072-5

Kaveh, A., Bakhshpoori, T., Afshari, E.: An efficient hybrid particle swarm and swallow swarm optimization algorithm. Comput. Struct. 143, 40–59 (2014). https://doi.org/10.1016/j.compstruc.2014.07.012

Construction, A.: Manual of steel construction: allowable stress design. American Institute of Steel Construction: Chicago, IL, USA. 95, (1989)

Kaveh, A., Talatahari, S.: A hybrid particle swarm and ant colony optimization for design of truss structures. Asian J. Civ. Eng. 9(4), 329–348 (2008)

Kaveh, A., Talatahari, S.: Particle swarm optimizer, ant colony strategy and harmony search scheme hybridized for optimization of truss structures. Comput. Struct. 87, 267–283 (2009). https://doi.org/10.1016/j.compstruc.2009.01.003

Jafari, M., Salajegheh, E., Salajegheh, J.: Optimal design of truss structures using a hybrid method based on particle swarm optimizer and cultural algorithm. In: Structures. pp. 391–405. Elsevier (2021). https://doi.org/10.1016/j.istruc.2021.03.017

Funding

No funding was received for conducting this study.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Kaveh, A., Eskandari, A. Multi-stage parameter adjustment to enhance metaheuristics for optimal design. Acta Mech (2024). https://doi.org/10.1007/s00707-024-04052-4

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s00707-024-04052-4