Abstract

Deep learning (DL) is gaining significant prevalence in every field of study owing to its ability to learn from large data sets. For the past several years, many applications have used machine learning (ML) methods and reported good performance. However, the limitations of ML methods in handling complex data have given rise to DL methods. Intrusion detection is one of the prominent areas to which researchers are extending DL methods. Although several excellent surveys cover the growing body of research on this subject, the literature lacks a detailed comparison of ML methods such as ANN, SVM, fuzzy approaches, swarm intelligence and evolutionary computation in intrusion detection, particularly for recent research. In this context, the present paper systematically reviews ML and DL methods for intrusion detection. In addition to reviewing these methods, it focuses on benchmark datasets, performance evaluation measures and various applications of DL methods for intrusion detection. The paper summarizes recent work and compares the reported experimental results for detecting network intrusions. Furthermore, current research challenges are identified to help fellow researchers in the era of DL-based intrusion detection.

1 Introduction

With the growth of the digital world, the Internet has become an integral part of our lives. Dependence on the Internet is growing day by day with the development of smart cities, autonomous cars, health monitoring via smartwatches, mobile banking and more (Ziegler 2019; Taddeo et al. 2019; Serrano 2019). Although these technologies bring many benefits to users and to society in general, they also pose several risks. Hackers can exploit vulnerabilities, resulting in theft and sabotage that affect the lives of people globally. Figure 1 illustrates the most frequently targeted cyber warfare attacks between 2009 and 2019 (geopolitical-attacks 2019).

Fig. 1 Most frequently targeted cyber warfare attacks between 2009 and 2019 (geopolitical-attacks 2019)

Cyberattacks can be costly for businesses: besides financial loss, they also lead to loss of reputation (Ghose et al. 2019). Therefore, network security has become an important topic. Conventional methods such as firewalls, encryption and anti-virus software packages adopted by organizations play a significant role in securing network infrastructure. However, these methods provide only the first line of defence and cannot completely protect networks and systems from progressive attacks and malware (Srinivas et al. 2019; Kandan et al. 2019). As a result, some intruders still manage to penetrate the network, resulting in a breach.

Organizations use intrusion detection systems (IDSs), first proposed by Denning in 1987, as an additional security technique for securing their networks (Pradhan et al. 2020). Denning's research gave direction to the construction of effective and accurate detection models. In the literature, IDS methods are mainly classified as knowledge-based, statistical and ML methods (Kumar et al. 2010), as discussed in Sect. 2.2. Artificial intelligence (AI) and ML methods learn their models from the training dataset (Arrieta et al. 2020).

ML methods have achieved high detection accuracy. Still, they have several limitations: difficulty handling raw, unlabelled or high-dimensional data (Nguyen and Reddi 2019); degraded accuracy on large datasets; reliance on manual feature extraction; expensive, time-consuming and tedious data labelling; and an inability to detect attacks in multi-class classification (Alzaylaee et al. 2020; Meng et al. 2020). Deep learning (DL)-based methods, which emerged in 2006, can address these limitations. DL methods are known for their ability to handle labelled or unlabelled data and to solve complex problems with the help of high-powered GPUs (Nguyen and Reddi 2019).

To simplify the use of ML and DL methods in intrusion detection, it is necessary to understand IDSs, standard benchmark datasets, ML methods and their challenges, and the reasons behind the evolution of DL methods (Nguyen and Reddi 2019; Chaabouni et al. 2019). A summarized review of ML/DL methods helps researchers explore their advantages and disadvantages for IDS.

This paper has a dual objective. The first is to survey recent contributions of ML and DL methods to intrusion detection. The second is to explore the reasons behind the evolution of DL methods for intrusion detection.

The review paper is organized as follows. Section 2 discusses IDSs and their taxonomy. Section 3 describes various benchmark datasets, and Sect. 4 presents performance evaluation measures for IDS. ML methods used for intrusion detection are discussed in Sect. 5. Section 6 introduces DL-based intrusion detection. The crucial challenges for accurate intrusion detection are discussed in Sect. 7. Finally, Sect. 8 concludes the paper. To meet this paper’s objectives, we attempt to answer the research questions given in Table 1.

2 Background

This section introduces intrusion detection systems, followed by a taxonomy of IDS, to give an overview of both.

2.1 Intrusion detection systems

An IDS can be a hardware or software system used to detect suspicious activity in the network. Monitoring the network, finding breaches and reporting to the administrator are some of the main functions performed by an IDS (Vinayakumar et al. 2019; Almomani et al. 2020). Advanced IDSs can also take action when malicious activity is found, such as blocking traffic from the source IP address (Vinayakumar et al. 2019; Chevalier et al. 2020). IDSs can be classified along several criteria; here they are classified into three categories based on the detection methodology, the IDS’ reaction and the IDS’ architecture, as depicted in Fig. 2.

2.1.1 IDSs classification based on detection method

IDSs may be categorized into two classes, namely misuse detection and anomaly detection. Misuse detection operates with predefined patterns of known attacks, also called signatures. It can be further divided into stateless and stateful IDSs (Pandey et al. 2019). Stateless methods use only the existing signature, whereas stateful methods use previous signatures as well as existing ones (Pandey et al. 2019).

This approach provides high accuracy and a low false alarm rate for known attacks but is not practical for detecting novel attacks. One known solution is to update the signature database regularly, but this is a time-consuming and costly process, so it is not considered feasible (Kurniabudi et al. 2019).
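The difference between stateless and stateful signature matching can be illustrated with a minimal Python sketch. The signature names and byte patterns in `SIGNATURES`, the per-flow tail buffer and the helper names are hypothetical assumptions for illustration, not taken from any cited IDS: the stateless matcher inspects each payload on its own, whereas the stateful matcher remembers the end of the previous payload in the same flow, so a signature split across two packets is still caught.

```python
# Hypothetical byte-pattern signatures (illustrative, not a real rule set).
SIGNATURES = {
    "dir-traversal": b"../../",
    "shell-path": b"/bin/sh",
}


def match_stateless(payload: bytes) -> list:
    """Stateless: inspect one payload in isolation."""
    return [name for name, pat in SIGNATURES.items() if pat in payload]


class StatefulMatcher:
    """Stateful: keep the tail of each flow's previous payload so that a
    signature split across two packets is still detected."""

    def __init__(self):
        # len(longest pattern) - 1 bytes of history is enough for any split.
        self.keep = max(len(p) for p in SIGNATURES.values()) - 1
        self.tails = {}

    def feed(self, flow_id: str, payload: bytes) -> list:
        tail = self.tails.get(flow_id, b"")
        data = tail + payload
        self.tails[flow_id] = data[-self.keep:]
        hits = []
        for name, pat in SIGNATURES.items():
            # Only report occurrences that include at least one new byte,
            # so a match lying wholly inside the old tail is not repeated.
            start = max(0, len(tail) - len(pat) + 1)
            if data.find(pat, start) != -1:
                hits.append(name)
        return hits
```

In this sketch, `match_stateless` misses `b"../../"` when it arrives split as `b"GET /../"` and `b"../ HTTP"`, while `StatefulMatcher.feed` reports it on the second packet. Real signature engines use much more efficient multi-pattern algorithms; the linear scan here only keeps the example short.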

In contrast, anomaly detection deals with profiling user behaviour. In this approach, a model of regular user activity is defined, and any deviation from this model is flagged as anomalous. Anomaly detection methods can be further categorized into static and dynamic methods (Kurniabudi et al. 2019). Static anomaly detection methods work only on the fixed part of the system. Dynamic anomaly detection methods extract patterns (also known as “profiles”) from network usage history. These methods can detect novel attacks but may produce a high false alarm rate (FAR) and lower accuracy (Kurniabudi et al. 2019; Mäkelä 2019). Another drawback is that an attacker who suspects being profiled can slowly shift their behaviour so that abnormal activity gradually comes to be treated as normal. Researchers have also suggested hybrid approaches in the recent past to further improve intrusion detection (Guo et al. 2016; Kim et al. 2014).
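As a minimal sketch of dynamic anomaly detection, the profile below is simply the mean and standard deviation of one hypothetical usage measure (for example, connections per minute) learned from history, and an observation is flagged when it lies more than `k` standard deviations away. The choice of feature, the z-score rule and the default `k = 3` are illustrative assumptions, not a method from the cited works.

```python
import statistics


def build_profile(history):
    """Learn a simple profile (mean, sample standard deviation) from past
    observations of one usage measure; needs at least two values."""
    return statistics.mean(history), statistics.stdev(history)


def is_anomalous(value, profile, k=3.0):
    """Flag a value lying more than k standard deviations from the mean."""
    mean, std = profile
    if std == 0:
        return value != mean  # degenerate profile: any change is a deviation
    return abs(value - mean) / std > k
```

If such a profile is periodically re-learned from recent history, an attacker who raises the measure in small steps keeps each observation within the threshold, which is the gradual-drift weakness noted above.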

2.1.2 IDSs classification based on reaction/response method

IDSs can be classified into passive and active IDSs based on their type of response (Tidjon et al. 2019; Aljumah 2017). A passive IDS only monitors and informs the administrator about intrusions by generating alerts. In contrast, an active IDS can act in real time by blocking the suspected attack or intrusion (Tidjon et al. 2019; Kim et al. 2014).

2.1.3 IDSs classification based on architecture

IDSs can be divided into three categories based on their architecture, viz. host-based, network-based and hybrid IDSs (a mix of host and network). In a host-based IDS, an agent or sensor is installed on each computer system involved (Feng et al. 2019). It identifies intrusions by analyzing application logs, audit trails, system calls and other activities within the host. If additional event information or logs need to be generated, the developer may have to modify the operating system kernel code. This increases cost, which might be unacceptable for some customers (Arabo 2019). Deploying the agent across all computer systems can also be cumbersome.

In a network-based IDS, the IDS is installed on the server, and sensors are deployed to identify intrusions by monitoring network traffic across multiple hosts (Chevalier et al. 2020). Network-based IDSs are independent of the operating system, highly portable and easy to implement. However, they show limitations when traffic peaks sharply or high-speed data are involved. In a hybrid system, an IDS is required on the server as well as on each client. It combines the host and network approaches and is considered the most effective and logical approach for intrusion detection (Chevalier et al. 2020; Kurniabudi et al. 2019).

2.2 IDS taxonomy

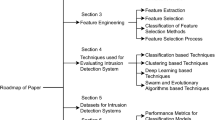

Figure 3 shows the IDS taxonomy proposed on the basis of the literature analysis. As mentioned in Sect. 2.1, intrusion detection methods are divided into anomaly-based and signature-based methods.

2.2.1 Signature based IDS

Organizations use signature-based IDSs to protect themselves from known attacks whose signatures are available in the database. This type of IDS searches audited patterns for a series of malicious bytes or known patterns, and it can report the cause of an intrusion alert (Jacob and Wanjala 2018). Signature-based IDSs easily detect known attacks but fail on new attacks whose patterns are unknown or not yet in the database. Regular updates of the patterns in the database can deal with this issue. However, when attackers use advanced techniques such as no-operation (NOP) generators, payload encoders and encrypted data channels, signature-based detection does not work well. Its efficiency decreases significantly because a new signature must be created for every variation (Rao and Raju 2019). Also, as the number of signatures grows, the performance of the system engine decreases. The failure to detect novel attacks and the need to update the database regularly with new patterns have motivated work on anomaly-based IDS (Rao and Raju 2019; Kang and Kang 2016).
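The following minimal Python sketch illustrates why payload encoders defeat byte-pattern signatures. The single signature `b"/bin/sh"` and the example payload are hypothetical, and Base64 is used only as a simple stand-in for an attacker's encoder.

```python
import base64

SIGNATURE = b"/bin/sh"  # hypothetical byte-pattern signature


def signature_alert(payload: bytes) -> bool:
    """Raise an alert if the raw payload contains the signature bytes."""
    return SIGNATURE in payload


plain = b"exec /bin/sh -i"            # attack payload as originally written
encoded = base64.b64encode(plain)     # same command, different bytes on the wire
```

The encoded payload carries the same command but no longer contains the signature bytes, so `signature_alert(encoded)` is false; detection then needs either a new signature for this variant or a decoding step before matching, as in `signature_alert(base64.b64decode(encoded))`.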

2.2.2 Anomaly based IDS

Anomaly-based IDSs detect both network and computer intrusions by monitoring the system. Instead of patterns or signatures, they use heuristics or rules to classify monitored events as either normal or anomalous and thereby attempt to detect abnormal operation (Farzaneh et al. 2019; Worku 2019). Anomaly detection methods can detect novel attacks, but defining their rule set is a cumbersome task. Anomaly-based IDSs are further classified into three classes: knowledge-based, statistical and machine learning methods, as depicted in Fig. 3 and described below.

Statistical anomaly IDSs were used early on to detect intrusions in information systems. Statistical tests check whether the observed behaviour differs from the expected behaviour. Statistical approaches require neither prior knowledge nor frequent updates of signatures. They can detect low-and-slow attacks, especially DoS attacks. Their limitation is the long learning period needed before they deliver accurate and valuable results. The most commonly used methods in this category include the Markov method, the deviation method, the multivariate method and the time series method.
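The Markov method mentioned above can be sketched as follows under simplifying assumptions: a first-order Markov chain is estimated from sequences of normal events (here, hypothetical system-call names), and a new sequence is scored by the average log-probability of its transitions. The floor probability for unseen transitions is an illustrative choice, not a value from the literature.

```python
import math
from collections import Counter, defaultdict


def train_markov(sequences):
    """Estimate first-order transition probabilities P(next | current)
    from sequences of normal events."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for cur, nxt in zip(seq, seq[1:]):
            counts[cur][nxt] += 1
    return {cur: {nxt: c / sum(nexts.values()) for nxt, c in nexts.items()}
            for cur, nexts in counts.items()}


def avg_log_likelihood(seq, model, floor=1e-6):
    """Mean log-probability of the transitions in seq (at least two events);
    transitions never seen in training get a small floor probability."""
    logs = [math.log(model.get(cur, {}).get(nxt, floor))
            for cur, nxt in zip(seq, seq[1:])]
    return sum(logs) / len(logs)
```

Sequences scoring well below typical normal sequences would be reported as anomalous; the need for enough normal history to estimate the transition probabilities reflects the long learning period mentioned above.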

Knowledge-based IDSs work by gathering knowledge about specific attacks and system vulnerabilities (Hussain and Khan 2020) and identify an attack by consulting their knowledge base. Expert systems, Petri nets, signature analysis and state transition are examples of knowledge-based IDS. These methods produce results with high accuracy and a low false alarm rate. To keep a knowledge-based IDS effective, its attack data must be updated regularly; this updating is very time-consuming, which is the main limitation of knowledge-based IDS (Hussain and Khan 2020).

Machine learning is a large field of study that overlaps with and inherits ideas from many related fields, such as artificial intelligence. Its focus is learning, that is, acquiring skills or knowledge from experience; most commonly, this means synthesizing practical concepts from historical data. Nowadays, most researchers focus on ML methods because of properties such as robustness, resilience to noisy data and adaptability. Interested researchers can explore the topic further in (Lin et al. 2015; Liao et al. 2013; Aissa and Guerroumi 2016). ML methods proposed for intrusion detection are depicted in Fig. 3 and explained in Sect. 5.

3 Intrusion detection datasets

Several benchmark datasets have been designed to evaluate and compare the performance of IDSs. This section focuses on the most commonly used datasets for intrusion detection.

Training and evaluating an IDS requires data, which is gathered from sources such as network data packets and low-level system information (e.g. log files or system dumps) and used as benchmark datasets. Datasets are categorized into three types: synthetic (self-produced), benchmark and real-life datasets, as shown in Fig. 4.

3.1 Synthetic datasets

Synthetic datasets are used to fulfil particular demands or conditions when evaluating an IDS. They are used to design models for theoretical analysis, and these designs can be refined to create and test various types of scenarios. Synthetic datasets enable designers to construct realistic behaviour profiles of attackers and regular users for testing a proposed system. They provide initial validation of a method; if the outcome is satisfactory, developers then evaluate the method on real-life data from a specific field. Self-produced datasets originated from the shortcomings of other datasets, which led to artificial data being generated and merged into training sets.

3.2 Benchmark datasets

Benchmark datasets include DARPA 98, KDD Cup99 (Uci 2019), NSL-KDD (Unb 2019a), the DEFCON 2000/2002 dataset (Sharafaldin et al. 2018), the UNM dataset, the CAIDA 2002/2016 datasets, the LBNL dataset (Gharib et al. 2016), CDX 2009 (Sangster et al. 2009), Twente 2009 (Sperotto et al. 2009), UMASS 2011, ISCX 2012 (Unb 2019b), ADFA 2013 (Creech and Hu 2013) and the CSE-CIC-2018 dataset. These datasets have commonly been used for evaluating IDSs in the literature. Among them, DARPA 98, KDD Cup99 and NSL-KDD are the most commonly used, as shown in Table 2.

DARPA 98, the base dataset of the KDD 99 dataset, contained raw TCP/IP dump files and 38 attacks. The training data size was 6.2 Gb and the testing data size was 3.67 Gb, collected over seven weeks and two weeks, respectively.

In 1999, the DARPA 98 dataset was summarized into 41 features, yielding the KDD 99 benchmark dataset for intrusion detection. The KDD 99 dataset covers probing, DoS, user-to-root (U2R) and remote-to-local (R2L) attacks. It was divided into labelled and unlabelled sets containing 4,898,431 and 311,029 records, respectively. The various types of attacks available in the KDD99 dataset are described in Table 3.

In KDD99, the numbers of U2R and R2L records in the training and test sets are very small. The dataset also contains a huge number of redundant records, as shown in Table 4.

The shortcomings of KDD99 for IDS evaluation are well documented in the literature (Brugger and Chow 2007; Mahoney and Chan 2003; Sommer and Paxson 2010).

The NSL-KDD dataset is a refined version of KDD’99 (Tavallaee et al. 2009). Many researchers have applied different methods and tools to the NSL-KDD dataset to build effective IDSs. An analysis of the NSL-KDD dataset using various ML methods available in the WEKA tool is described in Revathi and Malathi (2013). Kumar et al. (2013) used the NSL-KDD dataset with the K-means clustering algorithm to train and test on several novel and existing attacks. Sanjaya and Jena (2014) compared the KDD99 and NSL-KDD datasets using ANN and SOM, and ML algorithms have also been used to analyse datasets such as KDD99, NSL-KDD and GureKDD (Sanjaya and Jena 2014). The various types of attacks in the NSL-KDD dataset are described in Table 5.

Improvements over the KDD’99 dataset (Unb 2019a). The key advantages of the NSL-KDD dataset over the original KDD dataset are:

1. Classifiers are not biased toward frequent records, because redundant records are not included in the training set.
2. The performance of the learners is not biased, because the test sets contain no duplicate records.
3. A reasonable number of records is available in the training and test sets.
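The removal of redundant records that distinguishes NSL-KDD from KDD99 can be sketched in Python as below. The three-field records and the assumption that the class label is the last field are hypothetical; the actual construction of NSL-KDD also involved further record selection, which this sketch does not reproduce.

```python
from collections import Counter


def deduplicate(records):
    """Drop exact duplicate records, keeping first occurrences in order."""
    seen = set()
    unique = []
    for rec in records:
        key = tuple(rec)
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique


def label_counts(records):
    """Count records per class, assuming (hypothetically) label is last."""
    return Counter(rec[-1] for rec in records)
```

With four identical `smurf` records and two distinct `normal` records, deduplication leaves one `smurf` and two `normal` records, so a classifier trained on the result is no longer dominated by the repeated class.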

Because NSL-KDD is a refined version of KDD99, some shortcomings of KDD99 are also present in the NSL-KDD dataset. Some of the other benchmark datasets depicted in Fig. 4 are described below.

LBNL (Lawrence Berkeley National Laboratory and ICSI, 2004/2005). This dataset contains normal user behaviour. It is unlabelled and suffers from heavy anonymization (Gharib et al. 2016).

UNM. The UNM dataset was proposed in 2004 and does not reflect current computer technology trends.

CDX 2009. This dataset was created during a network warfare competition to generate a labelled dataset. Attackers used various tools, such as WebScarab, Nikto and Nessus, to investigate and carry out attacks automatically. The dataset can also be used to test IDS alert rules. Its limitations are its small volume and lack of traffic diversity (Sangster et al. 2009).

Twente 2009. This is a labelled and more realistic dataset. It captured data from a honeypot network and includes a few unknown and uncorrelated alerts. It also includes traffic from OpenSSH, an Apache web server and ProFTP using auth/ident on port 113. It suffers from a lack of attack diversity and volume (Sperotto et al. 2009).

UMASS 2011. This dataset includes various trace files from both wireless applications and network packets (U. of massachusetts amherst 2019; Nehinbe 2011). It lacks a variety of traffic and attacks, which limits its usefulness for testing IDS methods (Prusty et al. 2011).

ADFA 2013. The KDD and UNM datasets fail to meet the present needs of computer technology, so Creech and Hu (2013) proposed ADFA Linux (ADFA-LD) as a new dataset. This dataset is used for the evaluation of ML-based IDSs. The attributes of the ADFA-LD cybersecurity dataset are challenging to understand, so they need to be improved for better understanding (Abubakar et al. 2015).

CSE-CIC-2018 dataset. This dataset was generated with a systematic approach to produce a benchmark dataset for intrusion detection. It is based on creating user profiles and on the behaviours seen on the network, and it includes seven different attack scenarios.

3.3 Real life datasets

These datasets contain real-life data records. They include the Kyoto 2006/2009, ISCX2012 and UNSW-NB15 datasets.

Kyoto 2006/2009. This dataset contains 14 statistical features (Kyoto2006+ 2015; Song et al. 2011) derived from the KDD Cup99 dataset, omitting redundant features. It also includes ten additional features for better evaluation and analysis of NIDS, and it tries to overcome the limitations of the KDD Cup99 dataset. However, it captures only attacks directed at the honeypots, so it provides a limited view of network traffic. The normal traffic simulated during the attacks does not represent real-world normal traffic. It also contains no false positives, which are essential for evaluating methods that reduce the number of alerts (Song et al. 2011; Sato et al. 2012; Chitrakar and Huang 2012). A comparison of various datasets is given in Al-Dhafian et al. (2015).

ISCX2012. A dynamic approach was used to generate this dataset, and the authors present good guidelines for generating realistic and practical evaluation datasets for IDS. The approach has two parts: alpha and beta profiles. The dataset comprises relevant profiles and network traces. New network protocols are not considered in this dataset (Shiravi et al. 2012).

UNSW-NB15. The tcpdump tool was used to capture raw traffic. The dataset was created for academic research and contains a hybrid of normal activities and attack behaviours. Twelve algorithms and tools were used to generate it.

Based on the literature, different researchers have used different datasets according to their requirements. KDD Cup99 and NSL-KDD are primarily used for evaluating ML-based IDSs. The KDD Cup99 dataset does not represent real traffic data, and although NSL-KDD is a refined version of KDD Cup99, some shortcomings of KDD Cup99 are also present in NSL-KDD. Both datasets are very old. Therefore, more than one dataset should be used to validate the performance of an IDS (Table 6).

4 Performance metrics

The effectiveness of an IDS can be judged by performance metrics, which are computed from the confusion matrix described below.

4.1 Confusion matrix

A confusion matrix is often used to describe the performance of classification models. It summarizes the performance of a classification algorithm by comparing its predicted results with the actual classes, i.e. it contains the counts of the different combinations of actual and predicted classifications, as shown in Fig. 5.

A confusion matrix has four components: true positives (TP), false positives (FP), false negatives (FN) and true negatives (TN). TP means that the actual class and the predicted class of a data point are both 1 (attack); it represents the attacks that the IDS successfully detects. FP refers to normal behaviour wrongly classified as an attack by the IDS. FN refers to attack events that the IDS misses, i.e. incorrectly classifies as normal (actual class 1, predicted class 0), and TN means that the actual class and the predicted class are both 0 (normal). FP is referred to as a Type I error and FN as a Type II error. The confusion matrix is a powerful tool in classification, but the matrix itself is not convenient for comparing IDSs. To solve this problem, different performance metrics are defined using the confusion matrix variables. These metrics give numeric values that are easy to compare. The most common metrics are described below.
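The four confusion-matrix components can be counted directly from true and predicted labels. This minimal Python sketch assumes, as in the text, that 1 denotes an attack and 0 denotes normal traffic.

```python
def confusion_counts(y_true, y_pred):
    """Count (TP, FP, FN, TN) with 1 = attack and 0 = normal."""
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if t == 1 and p == 1:
            tp += 1          # attack correctly detected
        elif t == 0 and p == 1:
            fp += 1          # Type I error: normal flagged as attack
        elif t == 1 and p == 0:
            fn += 1          # Type II error: attack missed
        else:
            tn += 1          # normal correctly passed
    return tp, fp, fn, tn
```

For example, `confusion_counts([1, 1, 1, 0, 0, 0, 0, 1], [1, 0, 1, 0, 1, 0, 0, 1])` yields three TPs, one FP, one FN and three TNs.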

- Accuracy: It describes how often the classifier is correct. It is the ratio of correctly predicted samples to the total number of samples and can be computed as Eq. 1:

$$\begin{aligned} \text {Accuracy} = \frac{(\text {TP}+\text {TN})}{\text {Total Number of Instances}} \end{aligned}\tag{1}$$

It is a good metric for balanced data but loses much of its value for imbalanced data.

- Detection rate (DR): It is also known as sensitivity or recall. It refers to the percentage of actual attacks correctly identified by the system and can be expressed as Eq. 2:

$$\begin{aligned} \text {DR} = \frac{\text {TP}}{(\text {TP}+\text {FN})} \end{aligned}\tag{2}$$

It provides information on the classifier’s performance with respect to false negatives.

- False positive rate (FPR): It is the proportion of normal samples wrongly classified as attacks and can be calculated with Eq. 3:

$$\begin{aligned} \text {FPR} = \frac{\text {FP}}{(\text {TN}+\text {FP})} \end{aligned}\tag{3}$$

- Specificity: It measures the proportion of negatives that are correctly identified by the system and can be calculated with Eq. 4:

$$\begin{aligned} \text {Specificity} = \frac{\text {TN}}{(\text {TN}+\text {FP})} \end{aligned}\tag{4}$$

- False alarm rate (FAR): The ratio of false-negative samples to total positive samples is known as FAR (as defined here, FAR = 1 − DR) and can be calculated with Eq. 5:

$$\begin{aligned} \text {FAR} = \frac{\text {FN}}{(\text {TP}+\text {FN})} \end{aligned}\tag{5}$$

- Precision: It indicates what percentage of the samples predicted as attacks are actually attacks, which helps to evaluate the model better. It can be calculated with Eq. 6:

$$\begin{aligned} \text {Precision} = \frac{\text {TP}}{(\text {TP}+\text {FP})} \end{aligned}\tag{6}$$

- System utilization: The amount of CPU and memory required by the IDS.
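The metrics above can be computed from the four confusion-matrix counts as in the following Python sketch. Returning 0.0 when a denominator is zero is an assumed convention, and the FAR entry follows Eq. 5 as written in this section.

```python
def _ratio(num, den):
    # Assumed convention: report 0.0 when a ratio is undefined (den == 0).
    return num / den if den else 0.0


def ids_metrics(tp, fp, fn, tn):
    """Metrics of Eqs. 1-6 from confusion-matrix counts."""
    total = tp + fp + fn + tn
    return {
        "accuracy": _ratio(tp + tn, total),     # Eq. 1
        "detection_rate": _ratio(tp, tp + fn),  # Eq. 2 (recall/sensitivity)
        "fpr": _ratio(fp, tn + fp),             # Eq. 3
        "specificity": _ratio(tn, tn + fp),     # Eq. 4
        "far": _ratio(fn, tp + fn),             # Eq. 5 as defined above
        "precision": _ratio(tp, tp + fp),       # Eq. 6
    }
```

For an imbalanced test set with 990 normal and 10 attack records in which every record is predicted as normal (`ids_metrics(tp=0, fp=0, fn=10, tn=990)`), accuracy is 0.99 while DR is 0, which shows why accuracy alone can be misleading for imbalanced data.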

4.2 Receiver operating characteristic (ROC)

ROC analysis originates in the field called “Signal Detection Theory” (Signal detection theory 2019). During World War II, electrical and radar engineers first developed the ROC curve to detect enemy objects on battlefields. ROC curves allow the performance of different systems to be compared effectively. An ROC curve plots the true positive rate (TPR, i.e. DR) against the FPR for the different possible cut-points of a diagnostic test. Over the past decades, it has been increasingly used in ML research. In intrusion detection, the ROC curve is used to account for detection costs and to evaluate various detection learning methods. DR and FAR are the most widely used performance metrics; a high DR and a low FAR are preferred for an IDS.
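An ROC curve can be traced by sweeping the decision threshold over classifier scores and recording (FPR, TPR) at each step; the area under the curve (AUC) then summarizes it in one number. The sketch below assumes that a higher score means a more likely attack and uses the trapezoidal rule for the AUC; it is a plain-Python illustration, not an optimized implementation.

```python
def roc_points(scores, labels):
    """(FPR, TPR) pairs from sweeping the threshold over each distinct score,
    highest first; labels use 1 = attack, 0 = normal (both must occur)."""
    pos = sum(labels)
    neg = len(labels) - pos
    points = [(0.0, 0.0)]
    for thr in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= thr and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= thr and y == 0)
        points.append((fp / neg, tp / pos))
    return points


def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))
```

For scores `[0.9, 0.8, 0.7, 0.6]` with labels `[1, 0, 1, 0]`, the AUC is 0.75, i.e. the fraction of attack/normal pairs in which the attack receives the higher score.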

5 Machine learning methods for IDSs

ML is a branch of AI concerned with systems that learn from, and adapt to, new environments. It allows programs to find and learn patterns within data. It comprises various methods, called ML methods, that learn from data and then make predictions on it. ML methods usually operate on features that represent the characteristics of the object.

It is an interdisciplinary field that draws on ideas from various disciplines, including mathematics, science and engineering. ML has been applied to face recognition (which allows users to tag and post images of their friends on social media), optical character recognition (OCR), recommendation engines, self-driving vehicles, image recognition, speech recognition, medical diagnosis, virtual personal assistants, e-mail spam and malware filtering, online fraud detection and several other problems.

In general, ML is divided into three sub-domains: supervised, unsupervised, and reinforcement learning as shown in Fig. 6.

Supervised learning requires labelled data for training (both inputs and desired outputs) and discovers the relationship between the data and its class. Unsupervised learning is used when labelled data are not available; these methods find hidden patterns in the data. Reinforcement learning is based on a feedback mechanism: the program interacts with its environment and learns from experience. Several ML methods have been proposed for accurate intrusion detection; the most commonly used ones are summarized in the following subsections.

5.1 Artificial neural networks (ANN)

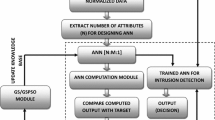

ANNs are designed based on biological neural networks. They learn from examples and generalize from noisy and incomplete data to perform tasks. The original aim of the ANN approach was to solve problems in the way the human brain does. Such systems have been successfully employed in data-intensive applications. This section discusses the various types of ANN and their contributions to, and performance in, intrusion detection. Several ANN designs have been proposed based on different learning strategies, as depicted in Fig. 7.

5.1.1 Supervised ANN models

In supervised learning, the ANN model is trained on labelled data and then applied to new examples. The model analyzes the training data and learns to produce the correct outcome for labelled inputs. Feedforward neural networks and recurrent neural networks (RNNs) are examples of supervised models.

The feedforward neural network was the first and simplest type of ANN. In this network, information moves in only one direction, from the input nodes through the hidden nodes to the output nodes, so the network contains no cycles.

A single-layer perceptron (SLP) consists of a single neuron with adjustable weights and bias. It is used to classify linearly separable patterns, and training of the perceptron continues until no error occurs. MLP and RBF networks are two examples of feedforward ANNs used for modelling patterns. MLP networks are trained with static backpropagation. Their advantages are that they are easy to use and can approximate any input/output map; their disadvantages are slow training and the need for a lot of training data.
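The perceptron training rule described above (update the weights after each misclassification and stop once a full pass produces no error) can be sketched in Python as follows. The learning rate, the `max_epochs` safeguard and the 0/1 label encoding are assumptions; the stopping condition is only guaranteed to be reached when the data are linearly separable.

```python
def predict(w, b, x):
    """Threshold unit: output 1 if the weighted sum plus bias is positive."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


def train_perceptron(samples, labels, lr=1.0, max_epochs=100):
    """Single-layer perceptron training with labels in {0, 1}."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(max_epochs):
        errors = 0
        for x, y in zip(samples, labels):
            err = y - predict(w, b, x)        # -1, 0 or +1
            if err:
                w = [wi + lr * err * xi for wi, xi in zip(w, x)]
                b += lr * err
                errors += 1
        if errors == 0:                       # error-free pass: stop training
            break
    return w, b
```

Trained on the four input pairs of the logical AND function, which is linearly separable, the loop stops after a few epochs with all four samples classified correctly.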

Several researchers have used ANNs in supervised mode to detect intrusions. For instance, Gupta et al. (2012) used a feedforward neural network to predict the number of zombies involved in flooding DDoS attacks. The paper identifies the relationship between the number of zombies and sample entropy, and the number of zombies involved in a DDoS attack is predicted with a low test error. Generalized feedforward (GFF) networks are a generalization of the MLP in which connections can jump over one or more layers; in practice, GFF networks often solve problems much more efficiently than MLPs. Akilandeswari and Shalinie (2012) used a radial basis function neural network (RBFNN) to classify DDoS attack traffic and regular traffic; this method achieved the highest accuracy for DDoS flooding attacks. RNNs are connectionist models that capture the dynamics of sequences via cycles. They are sequential learning models that learn features from the memory of previous inputs, and they show promising results in ML tasks where the input and output are of variable length.
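Entropy-based traffic features of the kind used in the zombie-prediction work can be computed as in the sketch below, which takes the Shannon entropy of the source addresses observed in one traffic window. The exact entropy definition, window size and network used by Gupta et al. (2012) are not reproduced here; treating the source-address distribution as the input is an illustrative assumption.

```python
import math
from collections import Counter


def source_entropy(source_ips):
    """Shannon entropy (bits) of the source-address distribution in one
    traffic window: low when few hosts dominate, high when many do."""
    counts = Counter(source_ips)
    n = len(source_ips)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())
```

A window in which every packet comes from one address has entropy 0, while a window split evenly over four addresses has entropy 2 bits; how such values relate to the number of zombies is what the neural network in that study learns.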

Tong et al. (2009) reported a hybrid RBF/Elman neural network model for anomaly and misuse detection. The Elman network retains a memory of past events, and the RBF network serves as a real-time pattern classifier. The results show that IDSs using this hybrid neural network improve the detection rate (DR) and effectively decrease the false positive rate. Aljumah (2017) used a trained ANN algorithm to detect various attacks. A mirror image of a real-life environment was used for learning, and the author achieved 98% detection accuracy. Both old and up-to-date datasets were used to train the algorithm for further evaluation. This approach is not able to handle DDoS attacks.

5.1.2 Unsupervised ANN models

Training a machine without a teacher is known as unsupervised learning; it uses information that is neither classified nor labelled. The Kohonen self-organizing map (SOM) and adaptive resonance theory (ART) belong to this category. A SOM builds a 2D map of a problem space using unsupervised learning and can generate a visual representation of data on a rectangular grid. Nonlinearity is the main advantage of SOM networks, and they preserve the topological structure of the data. A SOM clusters the samples into predefined classes and then orders the classes into meaningful maps. It comprises two layers: an input layer and an output layer.
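The SOM training procedure can be sketched in plain Python as below. The grid shape, random initialisation, linearly decaying learning rate and Gaussian neighbourhood are common textbook choices assumed for illustration, not settings from the studies cited in this section.

```python
import math
import random


def best_matching_unit(weights, x):
    """Grid node whose weight vector is closest (Euclidean) to x."""
    return min(weights,
               key=lambda n: sum((w - v) ** 2 for w, v in zip(weights[n], x)))


def train_som(data, rows, cols, epochs=50, lr0=0.5, seed=0):
    """Train a rows x cols Kohonen SOM on equal-length numeric vectors."""
    rng = random.Random(seed)
    dim = len(data[0])
    # Output layer: one randomly initialised weight vector per grid node.
    weights = {(r, c): [rng.random() for _ in range(dim)]
               for r in range(rows) for c in range(cols)}
    radius0 = max(rows, cols) / 2
    total, step = epochs * len(data), 0
    for _ in range(epochs):
        for x in rng.sample(data, len(data)):     # shuffled pass over data
            frac = step / total
            lr = lr0 * (1 - frac)                 # learning rate decays
            radius = max(radius0 * (1 - frac), 0.5)  # neighbourhood shrinks
            bmu = best_matching_unit(weights, x)
            for node, w in weights.items():
                # Gaussian neighbourhood measured on the grid around the BMU.
                d2 = (node[0] - bmu[0]) ** 2 + (node[1] - bmu[1]) ** 2
                h = math.exp(-d2 / (2 * radius ** 2))
                weights[node] = [wi + lr * h * (xi - wi)
                                 for wi, xi in zip(w, x)]
            step += 1
    return weights
```

After training on two well-separated groups of points with a 1 × 2 grid, each group maps to its own node, which shows how the output layer comes to represent clusters in the input data.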

Several researchers have used ANNs in unsupervised mode to detect intrusions. For instance, Chen et al. (1996) described a multi-layered SOM algorithm, called M-SOM, which permits an unlimited number of layers of Kohonen maps. This algorithm has been tested in applications such as internet entertainment-related home pages and electronic brainstorming comments. According to Kalteh et al. (2008), SOM applications are based on ad hoc approaches characterized by trial and error. Nevertheless, SOMs perform better than other methods on various problems, such as climate and environmental issues.

Ibrahim et al. (2013) implemented a SOM to detect anomalies in the KDD and NSL-KDD datasets, achieving 92.37% attack detection on the KDD dataset and 75.49% on the NSL-KDD dataset. The advantages of the SOM network are its high speed and fast convergence rate compared with other learning methods.

Adaptive Resonance Theory (ART): ART networks are self-organizing neural architectures. They cluster the pattern space and produce appropriate weight vector templates. Stephen Grossberg introduced the theory in 1976. The term resonance refers to the resonant state of the neural network. Conventional ANNs fail to solve the stability-plasticity dilemma, i.e. learning new patterns (plasticity) without forgetting previously learned ones (stability). ART algorithms address this problem: they remain plastic enough to learn new patterns while keeping already learned patterns stable. ART is an unsupervised learning model.
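The vigilance-driven clustering of ART can be sketched for binary inputs in the style of ART1 with fast learning. This is a simplified illustration under stated assumptions: the names, the choice parameter `beta` and the vigilance value are hypothetical, and the separate bottom-up and top-down weights of a full ART1 network are collapsed into one binary template per category.

```python
def art1_cluster(patterns, vigilance=0.7, beta=1.0):
    """ART1-style clustering of binary vectors with fast learning.

    Returns the category index of every pattern and the learned templates.
    """
    def overlap(a, b):
        return [p & q for p, q in zip(a, b)]

    templates, labels = [], []
    for x in patterns:
        size_x = sum(x)

        def choice(j):
            # Category choice function |x AND w_j| / (beta + |w_j|).
            return sum(overlap(x, templates[j])) / (beta + sum(templates[j]))

        chosen = None
        # Try categories from the best choice score downwards.
        for j in sorted(range(len(templates)), key=choice, reverse=True):
            match = overlap(x, templates[j])
            # Vigilance test: resonate only if the template matches x closely enough.
            if size_x and sum(match) / size_x >= vigilance:
                templates[j] = match      # fast learning: template shrinks to the overlap
                chosen = j
                break
        if chosen is None:                # no resonance: recruit a new category
            templates.append(list(x))
            chosen = len(templates) - 1
        labels.append(chosen)
    return labels, templates
```

A high vigilance yields many narrow categories and a low vigilance fewer, broader ones. Because an input that matches no template recruits a new category instead of overwriting an existing one, old templates stay stable while new patterns can still be learned.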

Among these researchers, Aljumah (2017) used an ANN to find DDoS attacks, while Tong et al. (2009) used a hybrid neural network for both misuse and anomaly detection. The following points can be concluded from the contributions of these researchers:

1. Network data traffic can be filtered and modelled more efficiently using ANNs. ANNs should be trained on new datasets rather than old ones; otherwise, they display poor results.

2. RBF networks take less time to train than MLPs.

3. Ad hoc approaches are generally used in SOM applications.

4. SOM has high speed and fast convergence rates compared with other learning methods.

5. Hybrid networks are required to improve the DR and decrease the FPR.

5.2 Support vector machine (SVM)

SVM is a supervised model used for classification, regression and outlier detection. It separates the data with a hyperplane: SVM maps the data into a feature space and divides it into classes using the hyperplane with the largest margin between the instances of the classes. It is a binary classifier, but it can also perform multi-class classification. SVM is particularly useful when dealing with nonlinear data, because kernel functions map such data into a space where it becomes linearly separable.
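For the linear case, the maximum-margin hyperplane can be approximated by stochastic sub-gradient descent on the regularised hinge loss, in the style of the Pegasos algorithm. The sketch below is a minimal illustration, not the formulation used by the studies reviewed here; the regularisation constant `lam`, the epoch count, the function names and the toy data are assumptions. Nonlinear data would additionally require a kernel, which this sketch omits.

```python
import random


def train_linear_svm(X, y, lam=0.01, epochs=200, seed=0):
    """Pegasos-style SGD on the L2-regularised hinge loss. Labels must be +1 or -1.

    A constant 1.0 is appended to every sample so the bias is learned as an
    ordinary (regularised) weight.
    """
    rng = random.Random(seed)
    data = [(list(x) + [1.0], label) for x, label in zip(X, y)]
    w = [0.0] * len(data[0][0])
    t = 0
    for _ in range(epochs):
        for x, label in rng.sample(data, len(data)):
            t += 1
            eta = 1.0 / (lam * t)                          # decreasing step size
            margin = label * sum(wi * xi for wi, xi in zip(w, x))
            w = [(1 - eta * lam) * wi for wi in w]         # regulariser shrinks w
            if margin < 1:                                 # inside the margin: hinge loss active
                w = [wi + eta * label * xi for wi, xi in zip(w, x)]
    return w


def svm_predict(w, x):
    """Side of the separating hyperplane w . [x, 1] = 0 on which x falls."""
    score = sum(wi * xi for wi, xi in zip(w, list(x) + [1.0]))
    return 1 if score >= 0 else -1
```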

Several researchers used SVM for detecting intrusions. For instance, Wang et al. (2017) proposed an SVM model for detecting network intrusions. The authors emphasized that high-quality training data is important for improving detection efficiency and proposed an efficient IDS based on enhanced SVMs. To obtain new, better-quality training data for SVM detection, they introduced a logarithm marginal density ratio transformation (LMDRT). The empirical results showed a high DR and good efficiency.

Gu et al. (2019) extended Wang’s work by introducing an ensemble intrusion detection model based on the LMDRT transformation, which also achieves competitive intrusion detection results. Kabir et al. (2018) proposed an optimum allocation-based least squares support vector machine (OA-LS-SVM) built on the idea of sampling. This method can handle both static and incremental data. The technique was explored and validated on the KDD 99 dataset, where it achieved realistic results in terms of accuracy and performance. Similarly, Gu and Lu (2021) proposed an efficient IDS based on SVM and naive Bayes feature embedding. Four datasets, UNSW-NB15, NSL-KDD, Kyoto 2006+ and CICIDS2017, were selected for the experiments. The results showed that the proposed approach achieved strong and robust performance, with an accuracy of 93.75% on UNSW-NB15, 98.92% on CICIDS2017, 99.35% on NSL-KDD, and 98.58% on Kyoto 2006+. Key findings of SVM studies include the following:

1. SVM requires a long training time.

2. SVM is particularly useful when dealing with nonlinear data.

3. A single SVM still has a significantly higher FAR.

4. Because of its promising performance in classification and prediction, SVM is becoming increasingly popular.

5.3 Naive Bayes (NB)

NB is a classification algorithm based on Bayes’ theorem. The classifier assumes that, given the class value, each feature is independent of the other features. A prediction is obtained by calculating the probability of the instance belonging to each class and selecting the class with the highest probability.
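A Gaussian naive Bayes classifier shows the independence assumption directly: the per-class log-likelihoods of the individual features are simply added. The sketch assumes continuous features that are roughly normally distributed within each class; the function names, the small variance floor and the toy records are illustrative choices, not part of any cited study.

```python
import math
from collections import defaultdict


def fit_gaussian_nb(X, y):
    """Estimate a log prior and per-feature Gaussian mean/variance for each class."""
    rows_by_class = defaultdict(list)
    for x, label in zip(X, y):
        rows_by_class[label].append(x)
    model = {}
    for label, rows in rows_by_class.items():
        n = len(rows)
        columns = list(zip(*rows))
        means = [sum(col) / n for col in columns]
        # A tiny variance floor avoids division by zero for constant features.
        variances = [sum((v - m) ** 2 for v in col) / n + 1e-9
                     for col, m in zip(columns, means)]
        model[label] = (math.log(n / len(X)), means, variances)
    return model


def predict_nb(model, x):
    """Pick the class with the highest log posterior."""
    def log_posterior(label):
        log_prior, means, variances = model[label]
        # Naive assumption: features are independent given the class,
        # so their log-likelihoods simply add up.
        return log_prior + sum(
            -0.5 * math.log(2 * math.pi * v) - (xi - m) ** 2 / (2 * v)
            for xi, m, v in zip(x, means, variances))
    return max(model, key=log_posterior)
```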

Several researchers used NB for detecting intrusions. For instance, Kevric et al. (2017) developed a combined classifier model using the random tree and NBTree algorithms for NIDS. The model was evaluated on the NSL-KDD dataset and achieved an accuracy of 89.24%. The authors also concluded that combining the two best individual classifiers does not necessarily result in the best overall performance. Çavuşoğlu (2019) proposed a hybrid layered IDS that employs different ML methods depending on the type of attack. The NSL-KDD dataset was used for training and testing, after transformation and normalization operations were applied to it. The results revealed that the proposed method achieved high accuracy and a low FPR for all attack types.

As discussed in the SVM section, Gu and Lu (2021) used SVM and NB feature embedding to develop an efficient intrusion detection system. Key findings of NB studies include the following:

1. The NB classifier performs best on real-time datasets.

2. On a variety of classification tasks, NB algorithms were found to be surprisingly accurate on small datasets.

3. The accuracy of NB does not scale up as well as that of decision trees on some larger databases.

5.4 k-nearest neighbour (kNN)

kNN is used for both classification and regression problems, but it is most appropriate for classification. It is a lazy learner that simply stores all the training data and uses it to measure the similarity between the available data and a new sample. A test sample is assigned to the majority class among its k nearest neighbours, typically measured by Euclidean distance. Because distances to all stored samples must be computed at prediction time, the method is computationally expensive.
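The lazy-learning behaviour described above fits in a few lines: nothing is computed at training time, and every prediction scans all stored samples. This is a minimal sketch with a hypothetical function name and toy data; it uses `math.dist` (Python 3.8+) for the Euclidean distance and breaks vote ties arbitrarily.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, x, k=3):
    """Majority vote among the k training samples closest to x (Euclidean distance)."""
    # Lazy learning: all the work happens at query time, one distance per stored sample.
    dists = sorted((math.dist(xi, x), yi) for xi, yi in zip(train_X, train_y))
    votes = Counter(label for _, label in dists[:k])
    return votes.most_common(1)[0][0]
```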

Several researchers used kNN for detecting intrusions. For instance, Guo et al. (2016) developed a hybrid method to achieve a high DR with a low FPR. The system was based on a two-tier hybrid approach that includes two anomaly detection components and a misuse detection component. In stage 1, a low-complexity anomaly detection method was built and used to construct the detection component. In stage 2, the k-nearest neighbours algorithm was used to construct the two detection components. The stage 1 detection component was involved in creating these stage 2 components, which reduce the number of false positives and false negatives produced by stage 1. The experimental results on the KDD’99 dataset and the Kyoto University Benchmark dataset showed that this approach could effectively detect network anomalies with a low FPR.

Saleh et al. (2019) proposed a hybrid IDS (HIDS) to handle the multi-class classification problem. It was based on a triple-edged strategy reflecting its three main contributions: (i) NBFS, employed for dimensionality reduction; (ii) OSVM, applied for outlier rejection; and (iii) PKNN, used for detecting input attacks. The KDD Cup ’99, Kyoto 2006+ and NSL-KDD datasets were used to compare the HIDS against recent techniques. The system detected attacks rapidly and can be employed for real-time intrusion detection.

5.5 Logistic regression (LR)

LR estimates a discrete value of 0 or 1 from a set of independent variables. It predicts whether an event will occur by fitting the data to the logistic function, which outputs a probability. A threshold of 0.5 is typically used: probabilities greater than 0.5 are mapped to 1 and those lower than 0.5 to 0.
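In formula form, LR estimates p(y = 1 | x) = 1 / (1 + exp(-(w · x + b))) and then applies the 0.5 threshold. The sketch below fits w and b by batch gradient descent on the average log-loss; the learning rate, the epoch count, the function names and the toy one-feature data are assumptions for illustration, not settings from the cited work.

```python
import math


def sigmoid(z):
    """Logistic function mapping any real score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(X, y, lr=0.1, epochs=1000):
    """Batch gradient descent on the average log-loss; y holds 0/1 labels."""
    w = [0.0] * len(X[0])
    b = 0.0
    n = len(X)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for xi, yi in zip(X, y):
            # Gradient of the log-loss w.r.t. the score is (predicted - actual).
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j, xj in enumerate(xi):
                grad_w[j] += err * xj / n
            grad_b += err / n
        w = [wj - lr * g for wj, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict_lr(w, b, x, threshold=0.5):
    """Return (class, probability): class 1 if the probability exceeds the threshold."""
    p = sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)
    return (1 if p > threshold else 0), p
```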

Several researchers used LR for detecting intrusions. For instance, Palmieri (2019) introduced a novel network anomaly detection approach based on the nonlinear invariant properties of Internet traffic. The overall findings showed that the method effectively isolates a wide range of volumetric DoS attacks within complex traffic flows with high accuracy and precision.

Key findings of LR studies include the following:

1. LR is known for its high performance, low computational burden, and good interpretability.

2. It also produces well-calibrated prediction probabilities without requiring any scaling or tuning of its input features.

3. LR is more tolerant of feature correlation than other probabilistic classifiers, which allows it to make better predictions even when multiple correlated features are present.

5.6 Decision tree (DT)

DT is used for both regression and classification problems, but mainly for classification. A tree whose target takes continuous values is called a regression tree, whereas one whose target takes a set of symbolic labels is called a classification tree. A DT classifies a sample through a sequence of decisions represented in a tree structure, in which each decision determines the next one. Classification and Regression Tree (CART) is a popular algorithm for constructing decision trees.
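The core step of CART-style tree induction is choosing, at each node, the feature and threshold that minimise the weighted Gini impurity of the two children. The sketch below grows a small binary tree this way. It is a simplified illustration (thresholds are taken at observed values and there is no pruning), and the names and toy records are hypothetical.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 - sum_k p_k^2 over the class proportions p_k."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(X, y):
    """Return (feature, threshold) minimising the weighted Gini impurity, or None."""
    best, best_score = None, gini(y)
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if score < best_score:
                best, best_score = (f, t), score
    return best


def build_tree(X, y, depth=0, max_depth=3):
    """Grow the tree recursively; each internal node asks 'is x[f] <= t?'."""
    split = best_split(X, y) if depth < max_depth else None
    if split is None:                      # pure node or depth limit: majority-label leaf
        return Counter(y).most_common(1)[0][0]
    f, t = split
    left = [(row, lab) for row, lab in zip(X, y) if row[f] <= t]
    right = [(row, lab) for row, lab in zip(X, y) if row[f] > t]
    return (f, t,
            build_tree([r for r, _ in left], [l for _, l in left], depth + 1, max_depth),
            build_tree([r for r, _ in right], [l for _, l in right], depth + 1, max_depth))


def predict_tree(node, x):
    """Follow the sequence of decisions until a leaf label is reached."""
    while isinstance(node, tuple):
        f, t, left, right = node
        node = left if x[f] <= t else right
    return node
```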

Several researchers used DT for detecting intrusions. For instance, Kim et al. (2014) proposed a hybrid intrusion detection method based on misuse and anomaly detection. The experiment was conducted on the NSL-KDD dataset. The proposed method performed better in terms of DR and FPR and reduced time complexity, although its time reduction could still be improved. As a result, the authors’ future research was to concentrate on improving the C4.5 decision tree algorithm. Similarly, Mousavi et al. (2019) proposed an IDS based on ant colony optimization and an ensemble of decision trees. In this method, 16 essential features were selected to represent different network visits using a gradual feature removal method. The proposed method obtained an accuracy of 99.92%.

5.7 Random forest (RF)

RF, as the name suggests, constructs a forest of decision trees. It combines several decision trees and predicts by aggregating the predictions of the component trees (averaging for regression, majority voting for classification). It is generally much more accurate than a single tree. In general, the more trees in a forest, the more robust it is.
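The sketch below combines the two sources of randomness that distinguish RF from a single tree: each tree is grown on a bootstrap sample, and each split considers only a random subset of `n_features` features. It is a deliberately small, self-contained illustration with hypothetical names and toy data, not a production implementation; class predictions are combined by majority vote.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def grow_tree(X, y, rng, n_features, depth=0, max_depth=4):
    """Grow one tree; each split looks only at a random subset of n_features features."""
    best, best_score = None, gini(y)
    if depth < max_depth:
        for f in rng.sample(range(len(X[0])), n_features):   # random feature subset
            for t in sorted({row[f] for row in X}):
                left = [lab for row, lab in zip(X, y) if row[f] <= t]
                right = [lab for row, lab in zip(X, y) if row[f] > t]
                if left and right:
                    score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
                    if score < best_score:
                        best, best_score = (f, t), score
    if best is None:                                           # leaf: majority label
        return Counter(y).most_common(1)[0][0]
    f, t = best
    li = [i for i, row in enumerate(X) if row[f] <= t]
    ri = [i for i, row in enumerate(X) if row[f] > t]
    return (f, t,
            grow_tree([X[i] for i in li], [y[i] for i in li], rng, n_features, depth + 1, max_depth),
            grow_tree([X[i] for i in ri], [y[i] for i in ri], rng, n_features, depth + 1, max_depth))


def predict_tree(node, x):
    while isinstance(node, tuple):
        f, t, left, right = node
        node = left if x[f] <= t else right
    return node


def train_random_forest(X, y, n_trees=15, n_features=1, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        # Bootstrap sample: draw len(X) rows with replacement.
        idx = [rng.randrange(len(X)) for _ in range(len(X))]
        forest.append(grow_tree([X[i] for i in idx], [y[i] for i in idx], rng, n_features))
    return forest


def predict_forest(forest, x):
    """Classification by majority vote over the trees (regression would average instead)."""
    return Counter(predict_tree(tree, x) for tree in forest).most_common(1)[0][0]
```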

Several researchers used RF for detecting intrusions. For instance, Farnaaz and Jabbar (2016) proposed a model based on an RF classifier for intrusion detection. RF was used as an ensemble classifier and outperformed other conventional classifiers in classifying attacks. The results showed that the proposed model was efficient, with a low FAR and a high DR. Belavagi and Muniyal (2016) proposed a model for intrusion detection using ML classifiers on the NSL-KDD dataset. The results showed that the RF classifier outperformed the other classifiers, with an accuracy of 99%. Hasan et al. (2019) discussed the accuracy of several ML models for predicting attacks and anomalies on IoT systems. The DT, RF and ANN classifiers all obtained an accuracy of 99.4%, but the RF classifier outperformed the others on the remaining performance metrics. Saranya et al. (2020a) presented a comparative study of ML algorithms used in IDSs on the KDD Cup dataset. The RF, LDA and CART algorithms obtained accuracies of 99.65%, 98.1% and 98%, respectively. The results showed that RF outperformed the other classifiers in terms of accuracy, and the authors concluded that classifier performance also depends on the application and the size of the dataset. Key findings of RF studies include the following:

1. While growing the trees, RF adds extra randomness to the model: when splitting a node, it searches for the best feature among a random subset of features rather than the best feature overall. This results in greater diversity among the trees, which generally leads to a better model.

2. Random forest’s versatility is one of its most appealing features. It can be used for both regression and classification tasks, and the relative importance it assigns to the input features can be easily viewed.

3. Overfitting is one of the most common problems in ML, but an RF classifier is unlikely to overfit if there are enough trees in the forest.

5.8 K-means clustering method

K-means is an unsupervised ML algorithm; as with other unsupervised algorithms, no labelled data is used. The algorithm works by finding groups in the data: it partitions objects into clusters so that objects within a cluster are similar to each other and different from objects in other clusters. The K-means algorithm is widely used for pattern matching in time series data. Its disadvantage is that it does not work well when the clusters are non-spherical.
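Lloyd’s algorithm is the standard way to compute K-means clusters: assign each point to its nearest centroid, move each centroid to the mean of its members, and repeat until the assignments stop changing. The sketch below initialises the centroids from randomly chosen data points, which is an assumption (k-means++ seeding is a common alternative); the function name and toy points are illustrative.

```python
import math
import random


def kmeans(points, k, iters=100, seed=0):
    """Lloyd's algorithm: alternate assignment and update steps until stable."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]      # initialise from the data
    assignment = None
    for _ in range(iters):
        # Assignment step: every point joins the cluster of its nearest centroid.
        new_assignment = [min(range(k), key=lambda c: math.dist(p, centroids[c]))
                          for p in points]
        if new_assignment == assignment:
            break                                              # converged
        assignment = new_assignment
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:                                        # keep old centroid if empty
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return centroids, assignment
```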

Several researchers used the K-means method for detecting intrusions. For instance, Mohamad Tahir et al. (2015) proposed a hybrid ML method for NIDS based on a combination of K-means clustering and SVM classification. The NSL-KDD dataset was used for evaluation, and the results showed a good DR and a reduced FAR. In another work, Al-Yaseen et al. (2017) proposed a modified K-means method for reducing the size of the training dataset and balancing the data for training SVMs and Extreme Learning Machines (ELMs). The experimental results showed 95.75% accuracy with a FAR of 1.87%.

5.9 Fuzzy systems

Zadeh introduced fuzzy set theory in the mid-1960s to deal with problems such as incomplete information. It has become an essential tool for security analysis and for many scientific applications. Fuzzy logic was introduced into intrusion detection mainly for two reasons: many quantitative features are involved in intrusion detection, and security itself is inherently fuzzy (Luo 1999). Fuzzy set theory assigns membership values ranging from 0 to 1 (Tsoukalas and Uhrig 1997). In fuzzy logic, an object can belong to several classes simultaneously, which is beneficial when the boundary between classes is not clearly defined. For this reason, fuzzy theory can be applied to intrusion detection, where the difference between the normal and abnormal classes is not well defined (Gomez and Dasgupta 2002). Fuzzy sets help in recognizing dangerous events and reducing the level of false alarms during intrusion detection.
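The idea of partial membership can be illustrated with trapezoidal membership functions and a single fuzzy rule. The feature names, break-points and the rule itself are hypothetical values chosen for illustration; fuzzy AND is taken as the minimum, which is one common choice among several t-norms.

```python
def trapezoid(x, a, b, c, d):
    """Trapezoidal membership: 0 up to a, rises to 1 on [b, c], falls back to 0 at d."""
    if x <= a or x >= d:
        return 0.0
    if b <= x <= c:
        return 1.0
    if x < b:
        return (x - a) / (b - a)
    return (d - x) / (d - c)


def alarm_degree(conn_per_sec, failed_logins):
    """Degree (0..1) to which one illustrative fuzzy rule fires."""
    inf = float("inf")
    # Hypothetical fuzzy sets "high connection rate" and "many failed logins".
    rate_high = trapezoid(conn_per_sec, 50, 200, inf, inf)
    logins_high = trapezoid(failed_logins, 2, 10, inf, inf)
    # Rule: IF rate is high AND failed logins are high THEN the traffic is suspicious.
    # Fuzzy AND is evaluated as the minimum of the two memberships.
    return min(rate_high, logins_high)
```

For example, `alarm_degree(125, 6)` gives 0.5: the record is "half suspicious" rather than being forced into a crisp normal or abnormal class.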

Several researchers used fuzzy logic in detecting intrusions. For instance, Porras et al. (2002) proposed the EMERALD Mission Impact Intrusion Report Correlation System (M-Correlator) for alert prioritization and aggregation; it is an alert ranking technique. Such methods work better for misuse-based IDSs than for anomaly-based IDSs. Qin and Lee (2003) discussed an alert score describing the severity of an attack and its applicability. Yu et al. (2004) presented an approach that uses multiple IDSs to detect real-time network intrusions. Alsubhi et al. (2012) discussed a novel IDS alert management system known as FuzMet, which extends the work of Porras et al. (2002) and Yu et al. (2004) and can be used for both misuse and anomaly-based IDSs. Kudłacik et al. (2016) presented a fuzzy-based intrusion detection method that uses two profiles of the user’s activity, i.e. a local profile and a fuzzy profile. The method has low computational complexity, so the monitoring server can process a large number of incoming local profiles in real time.

The key finding of the review of fuzzy logic-based methods is that fuzzy logic builds flexible patterns for detecting intrusions. Fuzzy theory can differentiate between the abnormal and normal classes in intrusion detection, and it enhances the readability and understandability of some ML algorithms. Various researchers used fuzzy logic or fuzzy sets to recognize dangerous events and reduce false alarm rates.

5.10 Evolutionary computation

Evolutionary computation (EC) is a problem-solving technique of computational intelligence inspired by natural and biological evolution. Traditional methods struggle to solve some complex problems, so researchers have been using EC methods to tackle them. With EC, a computer can evolve its own solutions to a problem instead of a programmer writing the program manually through complicated steps. As a result, a working program could be ready in a matter of minutes. EC enables computers to solve complex real-world problems that are difficult for human beings to tackle. Researchers have used EC for automatic model design, optimization, and even learning for classification in intrusion detection. This section discusses how EC works, the EC methods, and the algorithms used in EC. After the candidate solutions are initialized, new solutions are created by applying mutation and crossover operators. The resulting solutions are evaluated based on their fitness, and selection is then applied to choose the solutions for the next generation. A flow chart of EC is depicted in Fig. 8.
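The generic loop described above (initialise, vary by crossover and mutation, evaluate fitness, select) can be sketched as a small generational GA over bit strings. The operators chosen here (binary tournament selection, one-point crossover, bit-flip mutation, one elite individual), the function name and all parameter values are assumptions made for illustration.

```python
import random


def evolve(fitness, length=20, pop_size=30, generations=60,
           crossover_rate=0.9, mutation_rate=0.02, seed=0):
    """Generational GA over bit strings; returns the fittest individual found."""
    rng = random.Random(seed)
    # Initialisation: random candidate solutions.
    pop = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]
    for _ in range(generations):
        next_pop = [max(pop, key=fitness)[:]]          # elitism: carry the best over
        while len(next_pop) < pop_size:
            # Selection: binary tournaments, judged by fitness.
            p1 = max(rng.sample(pop, 2), key=fitness)
            p2 = max(rng.sample(pop, 2), key=fitness)
            # Crossover: one-point recombination of the two parents.
            if rng.random() < crossover_rate:
                cut = rng.randrange(1, length)
                child = p1[:cut] + p2[cut:]
            else:
                child = p1[:]
            # Mutation: flip each bit with a small probability.
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            next_pop.append(child)
        pop = next_pop                                  # the next generation
    return max(pop, key=fitness)
```

For example, `evolve(sum)` uses the OneMax fitness (the number of 1-bits) and searches for the all-ones string of length 20.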

Genetic algorithms (GA), genetic programming (GP), grammatical evolution (GE), evolutionary algorithms (EA), evolutionary programming, evolution strategies, learning classifier systems, etc., are examples of EC methods. These methods differ in how they represent individuals: GP uses trees, whereas GE uses Backus-Naur Form (BNF) grammars. GA represents individuals as chromosome-like data structures and uses parameters, operators and processes such as selection, crossover, mutation and a fitness function to arrive at a solution. Several researchers used EC methods for detecting intrusions. For instance, Li (2004) applied GA to identify anomalous network behaviours. Crosbie et al. (1995) applied multiple-agent technology and GP to detect network anomalies. Their methodology is advantageous when many small autonomous agents are used, but the training process can be time-consuming if the agents are not correctly initialized or if communication occurs among them. Abdullah et al. (2009) used GAs to derive classification rules for intrusion detection, and Ojugo et al. (2012) applied GAs to build rule-based intrusion detection. Maniyar and Musande (2016) revised the genetic algorithm to generate rules that detect or classify attacks using network audit data, with a fitness function used to select the rules. A GA-based IDS can be implemented in two steps: generating classification rules, and using these rules for intrusion detection. For intrusion detection, the GA goes through the following series of steps:

1. The sniffer in the IDS collects information about the network traffic.

2. The IDS applies GA to the captured data, using the collected information to frame classification rules.

3. The IDS then applies the rule set from the previous phase to the incoming traffic, which initializes the population. The population is evaluated, and genetic operators are applied to produce a new generation with better qualities. This is repeated on each newly created generation until the most suitable individual is found.

The implementation of GA is depicted in Fig. 9.
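As an illustration of the rule-generation steps, the sketch below evolves a single threshold rule of the form IF feature[f] > threshold THEN attack, using the fraction of correctly classified labelled records as fitness. The record format, the rule encoding, truncation selection and the averaging crossover are all hypothetical simplifications; rule-based GA IDSs in the literature typically evolve richer rule sets.

```python
import random


def rule_fitness(rule, records):
    """Fraction of labelled records that 'feature[f] > threshold => attack' gets right."""
    f, threshold = rule
    return sum((features[f] > threshold) == is_attack
               for features, is_attack in records) / len(records)


def evolve_rule(records, n_features, pop_size=20, generations=40, seed=0):
    """Evolve a (feature index, threshold) rule from labelled (features, is_attack) records."""
    rng = random.Random(seed)
    top = max(max(features) for features, _ in records)
    # Population initialisation: random (feature index, threshold) rules.
    pop = [(rng.randrange(n_features), rng.uniform(0, top)) for _ in range(pop_size)]
    for _ in range(generations):
        # Evaluation and truncation selection: keep the better half as parents.
        pop.sort(key=lambda rule: rule_fitness(rule, records), reverse=True)
        parents = pop[: pop_size // 2]
        children = []
        while len(parents) + len(children) < pop_size:
            (f1, t1), (f2, t2) = rng.sample(parents, 2)
            child = (rng.choice([f1, f2]), (t1 + t2) / 2)         # crossover
            if rng.random() < 0.3:                               # mutation
                child = (child[0], child[1] + rng.gauss(0, 0.1 * top))
            children.append(child)
        pop = parents + children
    return max(pop, key=lambda rule: rule_fitness(rule, records))
```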

In the literature, GP is the most popular EC technique. GP is an extension of GA and was introduced by Koza in 1992. It is a domain-independent method that solves a problem by genetically breeding a population of computer programs. Le Goues et al. (2011) described and evaluated GenProg, a genetic programming repair technique based on existing test cases. It automatically generates repairs for real-world bugs in legacy applications, and it can efficiently repair programs containing multiple errors drawn from multiple domains.

Jebur and Nasereddin (2015) introduced a fuzzy-genetic IDS combined with feature selection, which allows the system to find an optimal subset of attributes within enormous amounts of network information. To reduce the training time, the authors used 15 features to describe the rules. Fuzzy logic is used to generate the rules, and this soft computing approach generates more efficient rules than hard computing. A GA is then applied to tune the rules and generate the essential ones. However, feature selection strategies perform poorly on unbalanced data.

To solve this problem, Viegas et al. (2018) proposed a new feature selection technique based on genetic programming that works well with both balanced and unbalanced data. It is capable of selecting a set of discriminative features. Biological and textual datasets were used for evaluation. The proposed solution improves the efficiency of the learning process while also reducing the size of the data space. Besides GA and GP, grammatical evolution (GE) is another technique based on a biological process. GE can generate complete programs in an arbitrary language by evolving programs written according to a BNF grammar. The evolution process is performed on variable-length binary strings instead of actual programs, and a mapping step translates each string into a program, which simplifies the application of search.
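The genotype-to-program mapping of GE can be shown with a toy BNF grammar. In the standard GE mapping, each codon selects a production for the leftmost non-terminal via `codon mod (number of productions)`, and the genome may be wrapped when codons run out. Here the codons are given directly as integers rather than decoded from a binary string, and the grammar and names are hypothetical.

```python
# Hypothetical toy grammar in BNF form:
#   <expr> ::= <expr> <op> <expr> | <var>
#   <op>   ::= + | *
#   <var>  ::= x | 1
GRAMMAR = {
    "<expr>": [["<expr>", "<op>", "<expr>"], ["<var>"]],
    "<op>": [["+"], ["*"]],
    "<var>": [["x"], ["1"]],
}


def ge_map(genome, grammar=GRAMMAR, start="<expr>", max_wraps=2):
    """Map integer codons to a program string by leftmost derivation.

    Returns None if the derivation is still incomplete after the genome has
    been read max_wraps + 1 times (an invalid individual).
    """
    symbols, output, used = [start], [], 0
    while symbols:
        sym = symbols.pop(0)
        if sym not in grammar:                     # terminal symbol: emit it
            output.append(sym)
            continue
        if used >= len(genome) * (max_wraps + 1):
            return None
        rules = grammar[sym]
        codon = genome[used % len(genome)]         # wrapping reuses the genome
        used += 1
        symbols = rules[codon % len(rules)] + symbols   # the GE "mod" rule
    return " ".join(output)
```

For example, the genome `[0, 1, 0, 1, 1, 1]` maps to the program `x * 1`, while `[0]` keeps choosing the recursive production and is rejected as invalid.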

Şen and Clark (2009) applied the GE technique to route disruption and DoS attacks on MANETs. Intrusion detection programs are evolved for each attack and distributed to every node on the network. The GE technique performs well at evolving efficient detectors for known attacks. Nyathi and Pillay (2018) compared GA with GE for automating the design of GP classification algorithms. The approach was trained and tested on real-world binary and multi-class data. The results show that GE is suitable for binary classification, while GA is suitable for multi-class classification.

Evolutionary Algorithms (EAs) are population-based, black-box search and optimization methods that do not require assumptions such as continuity or differentiability. They are very appropriate for dealing with multi-objective optimization problems (MOPs) (Yang et al. 2013). To summarize, our findings are that, owing to their simple structures, EC methods excel at representing possible solutions to a large variety of problems. They play a vital role in classifier learning, optimization and automatic model design, and they are easily transferable from one application to another. The important application areas of evolutionary algorithms are numerical and combinatorial optimization, of which black-box optimization is the most challenging. The following features make EAs attractive:

1. They make no explicit assumptions about the problem, so they are widely applicable and can be transferred at low cost.

2. They are flexible and can easily be used in collaboration with existing methods.

3. They are robust owing to their randomized choices.

4. They are also less sensitive to noise.

5. The algorithm terminates with several candidate solutions rather than focusing on a single solution.

5.11 Swarm intelligence

Beni and Wang (1993) first coined the term “Swarm Intelligence” (SI) for cellular robotic systems; it was later used for problem-solving in AI. SI provides distributed solutions to complex problems through interactions between agents and their environment. Self-organization and division of labour are the two necessary properties of SI. Self-organization is the capability of a system to evolve its agents without any external help, while division of labour refers to the parallel execution of simple, feasible tasks, which enables the swarm to solve complex problems. The two popular swarm-inspired methods are ACO and PSO. ACO simulates the foraging behaviour of ants and is suited to discrete optimization problems, whereas PSO simulates the behaviour of flocks of birds and is used to solve nonlinear optimization problems. In ACO, indirect communication between ants through chemical pheromone trails enables them to find short paths between their nest and food sources. ACO algorithms are used to solve computational and discrete optimization problems, and researchers have applied them to complex problems such as the Traveling Salesman Problem, Vehicle Routing and telecommunication networks.

Several researchers applied SI for detecting intrusions. For instance, Tabakhi et al. (2014) described an unsupervised feature selection method based on ant colony optimization (UFSACO). This technique finds the optimal feature subset over several iterations without using any learning algorithm. Redundancy is minimized by computing feature relevance from the similarity between features; hence, the method is classified as a filter-based multivariate method. It has low computational complexity. The results indicate that the method outperforms other unsupervised methods and is comparable with supervised methods. Aghdam and Kabiri (2016) applied ACO to the intrusion detection problem for dimensionality reduction. Owing to its strong search capability, it could efficiently find a minimal feature subset. The technique was evaluated on the KDD Cup 99 and NSL-KDD benchmark datasets and obtained higher accuracy with a lower false alarm rate. Hajimirzaei and Navimipour (2019) proposed a hybrid approach for intrusion detection. The NSL-KDD dataset and the CloudSim simulator were used, and root mean square error (RMSE), mean absolute error (MAE) and the kappa statistic were chosen as evaluation criteria. This hybrid approach gave better results than earlier methods. Kennedy, Eberhart and Shi introduced PSO as an optimization technique that guides particles to seek globally optimal solutions. Various researchers in intrusion detection use this technique because of advantages such as ease of implementation, simplicity, robustness, scalability, fast convergence to the optimal solution and flexibility. To improve the accuracy of attack detection, Bamakan et al. (2015) presented a new method based on multiple criteria linear programming (MCLP) and PSO. MCLP is a classification method based on mathematical programming and is capable of solving real-life data mining problems.
PSO, a simple and robust technique, was used to improve the performance of the MCLP classifier. The KDD CUP 99 benchmark dataset was used to evaluate the performance. The PSO-MCLP model showed a high accuracy of 99.13% and a low FAR of 1.947%.
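The PSO update that guides particles towards good solutions can be sketched as follows: each velocity combines inertia, attraction to the particle’s own best position and attraction to the swarm’s best position. This is a minimal global-best PSO with an inertia weight; the parameter values are common textbook choices assumed here, not the settings of Bamakan et al., and the usage example minimises a simple sphere function rather than an MCLP objective.

```python
import random


def pso_minimise(f, dim, n_particles=20, iters=100, w=0.7, c1=1.5, c2=1.5,
                 bounds=(-5.0, 5.0), seed=0):
    """Global-best PSO with inertia weight w; returns (best position, best value)."""
    rng = random.Random(seed)
    lo, hi = bounds
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]                   # each particle's best position so far
    pbest_val = [f(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]  # the swarm's best position so far
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Velocity = inertia + pull towards personal best + pull towards global best.
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] += vel[i][d]
            val = f(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val
```

For example, `pso_minimise(lambda p: sum(v * v for v in p), dim=2)` drives the swarm towards the origin, the minimum of the sphere function.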

Similarly, Bamakan et al. (2016) proposed a time-varying chaos particle swarm optimization method (TVCPSO). This technique is combined with two conventional classifiers, i.e. MCLP and SVM, to detect intrusions on the NSL-KDD dataset. It shows high accuracy in detecting intrusions with a more discriminative feature subset.

Ali et al. (2018) proposed PSO-FLN for the intrusion detection problem. The KDD99 benchmark dataset was used for validation, and the model was compared with ELM and the FLN classifier. The technique provides high testing accuracy, which can be further increased by increasing the number of hidden neurons in the ANN.

After reviewing ACO and PSO methods, it can be concluded that discrete and nonlinear optimization problems can be solved effectively with SI methods. Researchers used SI methods to generate classification rules or to discover clusters for anomaly detection. Some researchers used hybrid approaches to enhance intrusion detection, and these approaches showed better results than traditional or single approaches. Owing to properties such as self-organization and division of labour, challenging problems can be decomposed into smaller ones and handed over to agents that work in parallel. SI methods are therefore well suited to solving real-life problems. The comparative study of ML methods is shown in Table 7.

5.12 Challenges of ML methods and its remedies

It can be concluded from the literature above that ML methods have been widely used to detect various types of attacks, which helps network administrators take countermeasures. However, most conventional ML methods belong to shallow learning (SL) and often focus on feature engineering and selection. The learning capacity of traditional detection approaches is limited, and learning efficiency decreases further as the network structure becomes more complicated. They represent only partial information, i.e. one or two levels of information, and cannot effectively solve real network application problems. In multi-class classification tasks, accuracy decreases as datasets grow dynamically.

Shallow learning methods require a vast quantity of training data to operate, which becomes a challenge in a heterogeneous environment. Besides, shallow learning is expensive and labour-intensive, and it is not suited to high-dimensional learning requirements with massive data. When dealing with a large number of multi-type variables, logistic regression tends to underfit and its accuracy is low; decision trees are prone to overfitting and neglect the problems caused by inter-data correlation; and SVM is inefficient with large samples, while it can be challenging to find a suitable kernel function that can handle missing data.

To address these limitations, DL methods, an advanced subset of ML, are receiving interest across multiple domains. They have attracted researchers because of several advantages over ML methods, such as automatic feature learning and flexible adaptation to novel problems, which make it possible to work with big data. Their superior layer-wise feature learning ability can yield performance that is better than, or at least equal to, that of ML methods, as shown in Table 8.

6 Deep learning methods for IDSs

DL methods emerged in 2006 and have become a prominent research topic. The word deep refers to the many hidden layers in the neural network. DL is a subcategory of ANN with more hidden layers than traditional neural networks, sometimes up to 150. Although it is a branch of ML, its structural complexity and its learning of data representations make it a broader version of ML. Like ML, DL deals with algorithms that learn from examples. The relative performance of ML and DL algorithms changes as the scale of the data increases: DL algorithms require massive amounts of data to find network patterns, whereas ML algorithms require less data. An ANN can be made deep by adding one or more hidden layers; since the data are processed at each layer, the learning task becomes deeper.

DL models are applied in research on computer vision, audio recognition, natural language processing, speech recognition, face recognition, image recognition, information retrieval, failure prediction, handwriting recognition, feature learning, social network filtering, machine translation, dimensionality reduction, intrusion detection and so on. Table 17 shows the architecture and application areas of DL methods.

DL methods are categorized into supervised and unsupervised learning. The Convolutional Neural Network (CNN) and Recurrent Neural Network (RNN) come under supervised learning, and the Auto-Encoder (AE) and Deep Belief Network (DBN) come under unsupervised learning. Many other DL models exist as variants of these basic models (Fig. 10).

6.1 Supervised DL models

In supervised learning, the machine is trained with labelled data. After training, the machine is given a new set of examples, and it uses what it learned from the labelled training data to produce a correct result. One popular network under supervised learning is CNN.

6.1.1 Convolutional neural network (CNN)

CNNs are a particular form of feed-forward ANN trained with supervised learning. These networks are made up of neurons with learnable weights and biases. They process data that comes in the form of multiple arrays and eliminate the need for manual feature extraction: relevant features are extracted directly from images and are learned during training rather than designed by hand. Feature identification is the main application of ConvNets, and their automated feature extraction makes them highly accurate for computer vision tasks (Tirumala 2014). The architecture of CNN is shown in Fig. 11. A limitation of ConvNets is their limited ability to process natural data in its raw form.
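The two operations that let a CNN extract features without manual engineering, convolution with shared weights and pooling, can be sketched on a tiny single-channel image. The kernel below is hand-set to respond to vertical edges purely for illustration; in a real CNN the kernel values are learned by backpropagation, and the function names here are hypothetical.

```python
def conv2d(image, kernel, bias=0.0):
    """'Valid' 2-D convolution (cross-correlation, as in most CNN libraries) + ReLU."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    out = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            # The same small set of weights is shared across every image position.
            s = sum(kernel[a][b] * image[i + a][j + b] for a in range(kh) for b in range(kw))
            out[i][j] = max(0.0, s + bias)          # ReLU activation
    return out


def max_pool2x2(fmap):
    """Non-overlapping 2x2 max pooling, which downsamples the feature map."""
    return [[max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
             for j in range(0, len(fmap[0]) - 1, 2)]
            for i in range(0, len(fmap) - 1, 2)]
```

On a 5x5 image whose left two columns are 0 and right three columns are 1, the kernel `[[-1, 1], [-1, 1]]` produces a feature map that is non-zero only along the boundary column, and pooling keeps that response while halving the resolution.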

Several researchers used CNN methods for detecting intrusions. For instance, Shen et al. (2018) proposed a compressed CNN model for image classification, called CS-CNN, that incorporates compressive sensing theory at the input layer of CNN models to both minimize resource consumption and improve accuracy. The MNIST and CIFAR-10 datasets were used for evaluation, and the method improved training speed and classification accuracy. Praanna et al. (2020) proposed a method that combines the CNN and LSTM algorithms. The method was evaluated on KDD99, and the experimental results showed that the proposed model outperformed SVM, CNN and DBN with 99.78% accuracy. Nguyen and Kim (2020) proposed a novel NIDS algorithm based on genetic algorithm (GA)-based exhaustive search and fuzzy C-means. The most successful CNN structure, called the deep feature extractor, was chosen through a GA-based optimization process. The authors concluded that deploying the proposed algorithm on real-world Internet networks would boost computer network security by clustering criminal activities. The proposed algorithm performed better for multi-class classification. A limitation of this study was the time needed by the GA-based exhaustive search to select a specific feature subset and an appropriate CNN structure.

CNNs can accommodate image translation, rotation, size differences and other types of deformation while still providing accurate classification results. In short, they generalize well when dealing with noisy inputs. The following factors contribute to the performance of CNN models in classification tasks:

1. The availability of comprehensive, labelled ground-truth training sets, e.g., ImageNet.
2. High-speed GPU cluster implementations for training a vast number of parameters.
3. Carefully designed regularization techniques, such as dropout, that increase generalization capacity.

6.1.2 Recurrent neural network (RNN)

RNN is an extension of the conventional feed-forward network. It is a sequential learning model suited to sequential tasks such as speech and language processing. It has cyclic connections that let it learn from a memory of previous inputs, which makes it powerful for modelling sequences. RNNs are very good at predicting the next character in a text or the next word in a sequence, but they can also be used for more complex tasks. From a theoretical point of view, an RNN can capture dependencies of arbitrary length, but such dependencies are difficult to handle and the network is hard to train: gradients tend to explode or vanish when it is trained with the backpropagation through time (BPTT) algorithm. LSTM models were introduced to mitigate this problem, in particular vanishing gradients. RNNs have achieved excellent performance in many applications, such as speech recognition, natural language processing and machine translation.
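The vanishing and exploding gradient problem of BPTT can be seen in a scalar Elman RNN, h_t = tanh(w*h_{t-1} + u*x_t + b). By the chain rule, the gradient of the last hidden state with respect to the initial one is a product of one factor w*(1 - h_t^2) per time step, so it shrinks or grows geometrically with sequence length. The weights below are arbitrary illustrative values, and the function names are hypothetical.

```python
import math
from typing import List, Sequence


def rnn_forward(xs: Sequence[float], w: float, u: float, b: float = 0.0, h0: float = 0.0) -> List[float]:
    """Return hidden states h_1..h_T; each step reuses the previous state (the network's memory)."""
    hs, h = [], h0
    for x in xs:
        h = math.tanh(w * h + u * x + b)
        hs.append(h)
    return hs


def grad_wrt_initial_state(hs: Sequence[float], w: float) -> float:
    """dh_T/dh_0 by backpropagation through time: a product of per-step local derivatives."""
    g = 1.0
    for h in hs:
        g *= w * (1.0 - h * h)  # d tanh(a)/da = 1 - tanh(a)^2, and da/dh_prev = w
    return g


zero_input = [0.0] * 50
print(grad_wrt_initial_state(rnn_forward(zero_input, w=0.5, u=1.0), w=0.5))  # 0.5**50, about 8.9e-16 (vanishes)
print(grad_wrt_initial_state(rnn_forward(zero_input, w=1.5, u=1.0), w=1.5))  # 1.5**50, about 6.4e8 (explodes)
```

LSTM cells address the vanishing case with gated, additive state updates; exploding gradients are commonly handled separately, for example by gradient clipping.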

Several researchers have used RNNs for detecting intrusions. For instance, Yin et al. (2017) applied a DL-based RNN approach to the NSL-KDD dataset to detect various attacks in the network. They compared the results with those of traditional classification methods, such as SVM and ANN, proposed by previous researchers and found that RNN-IDS was well suited to building a highly accurate classification model, outperforming the ML classification methods in both binary and multi-class classification. However, its training time is long, and reducing it through GPU acceleration was identified as future work.

In another research work, Liu et al. (2019) proposed payload classification approaches, PL-CNN and PL-RNN, that analyze payloads for attack detection. These methods support end-to-end detection by learning feature representations directly from the original payloads without requiring feature engineering. On the DARPA1998 dataset, PL-CNN and PL-RNN achieved accuracies of 99.36% and 99.98%, respectively, and PL-RNN outperformed PL-CNN on a variety of datasets. These models had two issues. First, they had many more parameters than conventional ML models, so tuning the model parameters was complex; i.e., model training was difficult and required specific skills. Second, these methods were difficult to interpret.
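Because PL-CNN and PL-RNN learn directly from raw payloads, the only preprocessing needed is turning a variable-length byte string into a fixed-size numeric input. The sketch below shows one plausible encoding (truncation or padding to a fixed length, plus optional one-hot vectors); the length of 16, the padding token 256 and the function names are assumptions for illustration, and Liu et al.'s actual encoding may differ.

```python
from typing import List

PAD = 256  # hypothetical padding token, outside the byte range 0..255 so real 0x00 bytes stay distinct


def encode_payload(payload: bytes, max_len: int = 16) -> List[int]:
    """Truncate or pad a raw payload to max_len integer tokens (one per byte)."""
    seq = list(payload[:max_len])  # iterating over bytes yields ints in 0..255
    return seq + [PAD] * (max_len - len(seq))


def one_hot(seq: List[int], vocab: int = PAD + 1) -> List[List[int]]:
    """One vector per token; a model could instead feed the integers to an embedding layer."""
    return [[1 if i == tok else 0 for i in range(vocab)] for tok in seq]


print(encode_payload(b"GET /", max_len=8))  # [71, 69, 84, 32, 47, 256, 256, 256]
```

A CNN would then convolve over the token axis, while an RNN would consume the tokens one step at a time.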

6.2 Unsupervised DL models

In unsupervised learning, no teacher is available for guidance or training. The machine is trained on information that is neither labelled nor classified, and it groups this unsorted information according to patterns, similarities and differences without any prior training on labelled data. The machine can therefore discover the hidden structure in unlabelled data by itself. AE and DBN fall under the category of unsupervised learning.

6.2.1 Auto encoder (AE)

An AE is trained to copy its input to its output. Dimensionality reduction or feature learning is achieved by an encoder network that transforms high-dimensional data into a lower-dimensional code, and the data are recovered from the code by a decoder network. Initially, random weights are assigned to both the encoder and decoder networks. The AE is trained by measuring the difference between the input and the output obtained after encoding and decoding; this error is then fed back through the decoder and encoder networks, respectively (Tirumala 2014). Bengio et al. (2009) made a significant change to this model by replacing unsupervised training with supervised training in order to identify the significance of the training paradigm. Stacked auto-encoders (SAEs) with unsupervised training are more efficient than SAEs with supervised pre-training. The performance of deep SAE-based architectures is slightly lower than that of RBM-based architectures because SAEs are unable to ignore random noise in their training data. The architecture of SAE is shown in Fig. 12.
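The encode, compare and feed-back training loop described above can be sketched with the smallest possible autoencoder: a linear encoder that maps 2-D inputs to a 1-D code and a linear decoder that maps the code back. This is a simplified illustration, assuming no biases or nonlinearities, full-batch gradient descent on the mean squared reconstruction error, and hand-picked small initial weights instead of random ones so that the run is reproducible.

```python
from typing import List, Sequence, Tuple

Vec = List[float]


def forward(x: Sequence[float], enc: Vec, dec: Vec) -> Tuple[float, Vec]:
    z = sum(e * xi for e, xi in zip(enc, x))  # encoder: 2-D input -> 1-D code
    return z, [d * z for d in dec]  # decoder: code -> 2-D reconstruction


def reconstruction_loss(data, enc: Vec, dec: Vec) -> float:
    """Mean over samples of 0.5 * squared reconstruction error."""
    total = 0.0
    for x in data:
        _, x_hat = forward(x, enc, dec)
        total += 0.5 * sum((r - xi) ** 2 for r, xi in zip(x_hat, x))
    return total / len(data)


def train(data, enc: Vec, dec: Vec, lr: float = 0.05, epochs: int = 1000) -> Tuple[Vec, Vec]:
    enc, dec = list(enc), list(dec)
    n = len(data)
    for _ in range(epochs):
        g_enc = [0.0] * len(enc)
        g_dec = [0.0] * len(dec)
        for x in data:
            z, x_hat = forward(x, enc, dec)
            err = [r - xi for r, xi in zip(x_hat, x)]  # difference between output and input
            # Feed the error back: through the decoder first, then via the code to the encoder.
            g_z = sum(e_j * d_j for e_j, d_j in zip(err, dec))
            for j in range(len(dec)):
                g_dec[j] += err[j] * z / n
            for i in range(len(enc)):
                g_enc[i] += g_z * x[i] / n
        dec = [d - lr * g for d, g in zip(dec, g_dec)]
        enc = [e - lr * g for e, g in zip(enc, g_enc)]
    return enc, dec


# Toy data lying on the line x2 = 2 * x1, so a 1-D code can reconstruct it.
data = [(t, 2.0 * t) for t in (-1.0, -0.5, 0.0, 0.5, 1.0)]
enc0, dec0 = [0.1, 0.3], [0.2, 0.1]
enc, dec = train(data, enc0, dec0)
print(reconstruction_loss(data, enc0, dec0), reconstruction_loss(data, enc, dec))
```

Because the toy data fit a one-dimensional structure, the loss falls close to zero; inputs that do not fit the learned structure would keep a large reconstruction error.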

Several researchers have used AEs for detecting intrusions. For instance, Javaid et al. (2016) described a DL-based approach for developing a flexible and efficient NIDS on the NSL-KDD benchmark dataset. They applied a self-taught learning (STL) scheme, based on unsupervised learning with a sparse autoencoder, to the training data, and the learned features were then used to classify a labelled test dataset into normal and attack traffic. Performance was evaluated with n-fold cross-validation using accuracy, precision, recall and F-measure, and the results obtained were reasonable. The results were also compared with soft-max regression (SMR) applied directly to the dataset without feature learning. STL was found to perform better than previous work.
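The evaluation metrics mentioned above follow directly from the confusion matrix of a normal/attack classifier. A minimal sketch, assuming "attack" is the positive class encoded as 1 and "normal" as 0; the function name is hypothetical.

```python
from typing import Dict, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, recall and F-measure with attack (1) as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # attacks correctly flagged
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # normal traffic correctly passed
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # missed attacks
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0  # also called detection rate
    f_measure = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f_measure": f_measure}


y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
print(binary_metrics(y_true, y_pred))
# {'accuracy': 0.8, 'precision': 0.75, 'recall': 0.75, 'f_measure': 0.75}
```

On imbalanced intrusion datasets, precision and recall are more informative than accuracy alone, because a classifier that labels everything "normal" can still score a high accuracy.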

Autoencoders have also been applied to anomaly detection (Sakurada and Yairi 2014), where nonlinear feature reduction by autoencoders was used to learn a profile of normal network behaviour. The authors analyzed the features learned in the hidden layer of the AE and found that the AE learned the normal state properly and was activated differently by anomalous inputs.
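A common way to turn an autoencoder into an anomaly detector is to flag inputs whose reconstruction error exceeds a threshold fitted on normal traffic. In the sketch below, a fixed linear projection onto the direction (1, 1)/sqrt(2) stands in for a trained autoencoder (an assumption: the normal profile is taken to lie near that line), and the threshold is the largest error on normal data multiplied by a hypothetical 1.5 safety margin.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]

# Stand-in for a trained AE: the learned "normal profile" is assumed to be the line x2 = x1.
DIRECTION = (1 / math.sqrt(2), 1 / math.sqrt(2))


def reconstruct(x: Point) -> Point:
    z = x[0] * DIRECTION[0] + x[1] * DIRECTION[1]  # encode to a 1-D code
    return (z * DIRECTION[0], z * DIRECTION[1])  # decode back to 2-D


def reconstruction_error(x: Point) -> float:
    return math.dist(x, reconstruct(x))


def fit_threshold(normal_data: Sequence[Point], margin: float = 1.5) -> float:
    """Hypothetical rule: largest error seen on normal data, times a safety margin."""
    return margin * max(reconstruction_error(x) for x in normal_data)


def is_anomaly(x: Point, threshold: float) -> bool:
    return reconstruction_error(x) > threshold


normal = [(1.0, 1.1), (2.0, 1.9), (3.0, 3.05), (0.5, 0.45)]
threshold = fit_threshold(normal)
print(is_anomaly((2.0, 2.02), threshold), is_anomaly((3.0, 0.0), threshold))  # False True
```

In practice the threshold is often set from a percentile of the normal errors rather than the maximum, trading false alarms against missed attacks.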

Considerable evidence shows that SAEs perform classification tasks better than their shallow counterparts and learn multiple levels of higher-quality representations. However, SAEs have difficulty performing feature learning effectively on “big data” containing large amounts of heterogeneous data, because they use vectors to represent the input data and learned features of every hidden layer, and a vector cannot model the highly nonlinear distribution of the input data. To solve this problem, multi-modal DL models were proposed by Ngiam et al. (2011) and Srivastava and Salakhutdinov (2012). These models first learn features from each modality using conventional DL models and then integrate the learned features at different levels as shared representations of the multi-modal data. Multi-modal DL models help capture the high-order correlations across multiple modalities to form hierarchical representations of multi-modal data. However, because they learn features from the different modalities independently, they cannot model the nonlinear distribution of heterogeneous input data, which leads to failure in learning useful features on big data.

Sakurada and Yairi (2014) proposed a tensor DL model for heterogeneous data, in which the data computation model was built by stacking multiple tensor auto-encoder models. This model achieved higher classification accuracy on heterogeneous data than multi-modal DL models.

6.2.2 Deep belief network (DBN)

The DBN model was proposed by Hinton et al. in 2006. It is based on the MLP model with greedy layer-wise training and can learn feature representations from both labelled and unlabelled data. It comprises many interconnected hidden layers, in which each layer serves as the input to the next layer and is visible only to the next layer; there are no lateral connections between the nodes within a layer. A DBN first uses an efficient greedy layer-by-layer learning strategy to initialize the deep network and then fine-tunes all the weights jointly with the desired outputs, i.e., unsupervised pre-training is followed by supervised fine-tuning. It optimizes its weights in time linear in the depth and size of the network; nevertheless, developing a DBN model is computationally expensive. The DBN architecture proposed by Hinton et al. is depicted in Fig. 13.

Several researchers have used DBNs for detecting intrusions. For instance, Kang and Kang (2016) used a DBN for intrusion detection in in-vehicle networks. As demonstrated by various researchers, DBN-based unsupervised pre-training can improve intrusion detection accuracy. A limitation of this model is that its centralized approach might limit its practicality in fog networks.

A Boltzmann machine (BM) is a form of log-linear Markov random field (MRF) whose energy function is linear in its free parameters. Hidden nodes can be introduced to make it powerful enough to represent complicated distributions, and the modelling capacity of a BM increases as more hidden variables are added. The restricted Boltzmann machine (RBM) is the most popular version of the BM.
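The following sketch spells out the RBM energy function, E(v, h) = -b.v - c.h - v^T W h, which is linear in the free parameters W, b and c, together with the factorized conditionals that follow from the absence of visible-visible and hidden-hidden connections. The joint distribution is p(v, h) proportional to exp(-E(v, h)). Binary units are assumed, the parameter values are arbitrary, and the function names are hypothetical.

```python
import math
from typing import List, Sequence


def sigmoid(a: float) -> float:
    return 1.0 / (1.0 + math.exp(-a))


def energy(v: Sequence[int], h: Sequence[int], W: List[List[float]],
           b: Sequence[float], c: Sequence[float]) -> float:
    """E(v, h) = -b.v - c.h - v^T W h, with W of shape n_visible x n_hidden."""
    visible_term = sum(bi * vi for bi, vi in zip(b, v))
    hidden_term = sum(cj * hj for cj, hj in zip(c, h))
    interaction = sum(v[i] * W[i][j] * h[j] for i in range(len(v)) for j in range(len(h)))
    return -visible_term - hidden_term - interaction


def p_hidden_given_visible(v: Sequence[int], W: List[List[float]], c: Sequence[float]) -> List[float]:
    """p(h_j = 1 | v): hidden units are independent given v (no hidden-hidden links)."""
    return [sigmoid(c[j] + sum(v[i] * W[i][j] for i in range(len(v)))) for j in range(len(c))]


def p_visible_given_hidden(h: Sequence[int], W: List[List[float]], b: Sequence[float]) -> List[float]:
    """p(v_i = 1 | h): visible units are independent given h (no visible-visible links)."""
    return [sigmoid(b[i] + sum(W[i][j] * h[j] for j in range(len(h)))) for i in range(len(b))]


W = [[1.0, -1.0], [0.5, 0.0]]  # 2 visible x 2 hidden, arbitrary values
b, c = [0.0, 0.0], [0.0, 0.0]
print(energy([1, 1], [1, 0], W, b, c))  # -1.5
print(p_hidden_given_visible([0, 0], W, c))  # [0.5, 0.5]
```

These conditionals are what make block Gibbs sampling, and hence contrastive-divergence training, cheap for RBMs compared with general BMs.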

In a regular RBM, the relationship between visible and hidden units is restricted to constants, which inevitably limits the representation capability of the RBM. To overcome this limitation and enhance DL capability, Chen et al. (2015) proposed the fuzzy restricted Boltzmann machine (FRBM) and its learning algorithm, in which the parameters governing the model are replaced by fuzzy numbers. According to their results, the representation capacity of FRBM is better than that of the traditional RBM, and when the training data are contaminated by noise, FRBM is more robust than RBM. Training DNNs with many parameters causes overfitting; to solve this problem, Srivastava et al. (2014) introduced the dropout restricted Boltzmann machine model, which performs better than the standard RBM. Dropout is a technique for dropping out units in a neural network, where dropping a unit out means temporarily removing it from the network.
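Dropout, as described above, can be sketched in a few lines. This uses the common "inverted" variant, which rescales the surviving units by 1/(1 - p) during training so that nothing needs to change at test time; Srivastava et al. (2014) instead scale the weights at test time, which is equivalent in expectation. The function name and parameters are hypothetical.

```python
import random
from typing import List, Sequence


def dropout(activations: Sequence[float], p_drop: float, rng: random.Random,
            training: bool = True) -> List[float]:
    """Inverted dropout: zero each unit with probability p_drop, rescale survivors by 1/(1 - p_drop)."""
    if not 0.0 <= p_drop < 1.0:
        raise ValueError("p_drop must be in [0, 1)")
    if not training:
        return list(activations)  # at test time every unit is kept and no scaling is needed
    keep = 1.0 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


out = dropout([1.0] * 10000, p_drop=0.5, rng=random.Random(0))
print(sum(out) / len(out))  # close to 1.0: the expected activation is preserved
```

Because a different random subset of units is removed at each training step, no unit can rely on specific co-adapted partners, which is the source of dropout's regularizing effect.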

A denoising autoencoder (DAE) uses closely related input and output data: it is trained to reconstruct the clean data from a corrupted version of the same data, and its denoising power is produced by adding noise during the training procedure. DAEs are an essential tool for feature selection and extraction. Variational autoencoders (VAEs) are a deep learning tool that can be used to learn latent representations. They are appropriate for sensor failure detection, IoT device security applications and the security of intrusion detection systems, and they can perform visualization, recognition, representation and denoising tasks.
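Two ideas from the paragraph above can be made concrete: the input corruption that gives a DAE its denoising ability, and the reparameterization trick and KL term with which a VAE learns a latent representation. The sketch assumes masking noise for the DAE, and a diagonal Gaussian encoder output (mu, log_var) with a standard normal prior for the VAE; the encoder and decoder networks themselves are omitted, and the function names are hypothetical.

```python
import math
import random
from typing import List, Sequence


def mask_noise(x: Sequence[float], p: float, rng: random.Random) -> List[float]:
    """DAE corruption: zero each input with probability p; the DAE is trained to output the clean x."""
    return [0.0 if rng.random() < p else xi for xi in x]


def reparameterize(mu: Sequence[float], log_var: Sequence[float], rng: random.Random) -> List[float]:
    """z = mu + sigma * eps with eps ~ N(0, 1): the randomness lives in eps, so z is differentiable in mu, log_var."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu: Sequence[float], log_var: Sequence[float]) -> float:
    """Closed-form KL(N(mu, diag(sigma^2)) || N(0, I)), summed over latent dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))


rng = random.Random(0)
print(mask_noise([1.0] * 8, 0.25, rng))  # a corrupted copy of the input
print(kl_to_standard_normal([1.0], [0.0]))  # 0.5
```

The VAE loss adds this KL term to the reconstruction error, pulling the latent codes towards the prior so that the latent space stays smooth.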

In Li et al. (2015), malicious code detection was performed using an AE for feature extraction and a DBN as the classifier, and the KDDCUP’99 benchmark dataset was used for the experiments. The results showed that the hybrid approach is more effective than a single DBN in terms of both time and detection accuracy. The advantage of these deep networks is that they are more effective than shallow ones for cyber-attack detection. The main drawback of this research is that the dataset used was not recent.

Table 9 summarizes DL methods for IDS (2015-2021).

6.3 Comparative analysis of experimental results for intrusion detection

A comparison of several ML and DL algorithms used for the IDS on benchmark datasets is presented in this section. The evaluation metrics used for the comparisons are accuracy, precision and recall. Table 10 shows the evaluation comparison of several ML classifiers using tenfold cross-validation on KDD99 dataset. Similarly, Table 11 shows the evaluation comparison of several ML classifiers using tenfold cross-validation on the UNSW-NB15 dataset.
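Tenfold cross-validation splits the data into ten folds and uses each fold once as the test set while the remaining nine form the training set. A minimal index-splitting sketch, assuming a plain shuffled (non-stratified) split with a fixed seed; stratified variants, which preserve class proportions in each fold, are also common. The function name is hypothetical.

```python
import random
from typing import Iterator, List, Tuple


def k_fold_indices(n: int, k: int = 10, seed: int = 0) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, test_indices) for each of k nearly equal folds."""
    if not 2 <= k <= n:
        raise ValueError("need 2 <= k <= n")
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    # The first n % k folds get one extra sample so that all n samples are used.
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = idx[start:start + size]
        train = idx[:start] + idx[start + size:]
        yield train, test
        start += size


folds = list(k_fold_indices(25, k=10))
print([len(test) for _, test in folds])  # [3, 3, 3, 3, 3, 2, 2, 2, 2, 2]
```

The reported score is then the average of the metric over the ten test folds, so every sample is used for testing exactly once.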

Table 10 shows that HT, KNN, DT and RF performed well in classifying normal and abnormal traffic, achieving accuracies of 99.22%, 99.83%, 99.86% and 99.94%, respectively. The same order of superiority is retained on the UNSW-NB15 dataset (see Table 11), where HT, KNN, DT and RF achieve accuracies of 93.53%, 93.71%, 95.54% and 96.07%, respectively, using tenfold cross-validation.

Tables 12 and 13 present the evaluation comparison of several ML classifiers on the supplied test sets of the UNSW-NB15 and KDD99 datasets (supplied test mode).

The empirical analysis of classifying malicious traffic using the supplied test sets (Tables 12 and 13) demonstrates that RF outperformed KNN, SMO and DT by a small margin, while its superiority over the other state-of-the-art algorithms is more significant, with a large margin. The accuracies obtained by SMO, KNN, DT and RF are 95.11%, 96.01%, 96.22% and 96.79%, respectively. The analysis of the results in Tables 10–13 shows that the RF classifier performs better in most cases because, as the trees are grown, RF adds extra randomness to the model: when splitting a node, it searches for the best feature among a random subset of features rather than for the most significant feature overall. This produces a wide variety of trees, which leads to a better model.
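The random-subset splitting credited above for RF's accuracy can be sketched as follows: each tree is trained on a bootstrap sample, and at every node only a random subset of m features is searched for the best split (scored here by Gini impurity). This is a simplified illustration, not the implementation used in the experiments; the default m = floor(sqrt(d)) and all function names are assumptions.

```python
import math
import random
from typing import Dict, List, Optional, Sequence, Tuple

Split = Tuple[Optional[int], Optional[float], float]  # (feature, threshold, weighted Gini)


def gini(labels: Sequence[int]) -> float:
    """Gini impurity of a set of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts: Dict[int, int] = {}
    for label in labels:
        counts[label] = counts.get(label, 0) + 1
    return 1.0 - sum((cnt / n) ** 2 for cnt in counts.values())


def best_split(X: Sequence[Sequence[float]], y: Sequence[int], features: Sequence[int]) -> Split:
    """Best (feature, threshold) over the given candidate features only."""
    best: Split = (None, None, gini(y))
    for f in features:
        for thr in sorted({row[f] for row in X}):
            left = [label for row, label in zip(X, y) if row[f] <= thr]
            right = [label for row, label in zip(X, y) if row[f] > thr]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if score < best[2]:
                best = (f, thr, score)
    return best


def bootstrap_indices(n: int, rng: random.Random) -> List[int]:
    """Sample n row indices with replacement: the training set of one tree."""
    return [rng.randrange(n) for _ in range(n)]


def random_forest_split(X, y, rng: random.Random, m: Optional[int] = None) -> Tuple[Split, List[int]]:
    """RF-style node split: search only a random subset of m features (default floor(sqrt(d)))."""
    d = len(X[0])
    m = m or max(1, int(math.sqrt(d)))
    features = sorted(rng.sample(range(d), m))
    return best_split(X, y, features), features


# Toy data (hypothetical): feature 2 separates the two classes perfectly.
X = [[0.1, 5.0, 0.0, 3.0], [0.4, 1.0, 0.0, 2.0], [0.3, 4.0, 1.0, 3.0], [0.2, 2.0, 1.0, 2.0]]
y = [0, 0, 1, 1]
print(random_forest_split(X, y, random.Random(1), m=2))
```

Repeating this search for every node of every tree is also why RF needs more training time than a single decision tree.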

The training and testing times of all classifiers on the UNSW-NB15 and KDD99 datasets are given in Table 14. The results show that the RF classifier requires more training time because it builds many decision trees to determine the class.

The accuracy comparison of the state-of-the-art ML algorithms on all datasets is visualized in Fig. 14. Fig. 14 confirms that RF, DT, KNN and HT achieve higher accuracy than the other classifiers in the tenfold cross-validation test mode on both datasets, while RF, DT, KNN and SMO achieve higher accuracy than the other classifiers in the supplied test mode on both datasets.