Abstract

TOPSIS is a widely used model for multiple attribute decision-making (MADM) problems. Recently, Chen and Lee (Exp Syst Appl 37(4):2790–2798, 2010) extended the TOPSIS method to the interval type-2 fuzzy sets (IT2 FSs) environment. They first compute the ranking values of the elements in the fuzzy-weighted decision matrix, and then use these ranking values to compute the crisp relative closeness through the traditional TOPSIS computing process. Such ranking computation loses information contained in the weighted decision matrix. In this paper, we introduce an analytical solution to the IT2 FSs-based TOPSIS model. First, we formulate fractional nonlinear programming (NLP) problems for the fuzzy relative closeness. Second, based on the Karnik–Mendel (KM) algorithm, the switch points of the NLP models are identified, and the analytical solution to the IT2 FSs-based TOPSIS model is obtained. Compared with Chen and Lee’s method, the proposed method operates on the IT2 FSs directly and keeps the IT2 FSs format throughout the whole process, and its result is exact and in analytical form. In addition, some properties of the proposed analytical method are discussed, and the computing process is summarized. To illustrate the analytical solution, an example is given and the result is compared with that of Chen and Lee’s method (Exp Syst Appl 37(4):2790–2798, 2010).

1 Introduction

The fuzzy set theory introduced by Zadeh (1965) has achieved great success in various fields. Later, Zadeh (1975) introduced type-2 fuzzy sets (T2 FSs), an extension of fuzzy sets in which the membership values are themselves type-1 fuzzy sets on the interval \([0,1]\). Mendel (2001) further generalized the interval fuzzy set and defined the notion of interval type-2 fuzzy sets (IT2 FSs), which have been found useful for dealing with vagueness and uncertainty in decision problems, such as perceptual computing (Mendel and Wu 2010; Mendel et al. 2010), control systems (Wu and Tan 2006; Wagner and Hagras 2010; Wu 2012), time-series forecasting (Khosravi et al. 2012; Chakravarty and Dash 2012; Miller et al. 2012), information aggregation (Zhou et al. 2010, 2011; Huang et al. 2014) and decision-making (Chen and Lee 2010; Wang et al. 2012; Chen and Wang 2013).

Multiple attribute decision-making (MADM) is widely applied to find the most desirable alternatives according to the information about attributes and weights provided by decision makers (Damghani et al. 2013; Xu 2010). TOPSIS, introduced by Yoon and Hwang (1981), uses the similarity to an ideal solution to solve MADM problems in which the performance ratings and weights are given as crisp values. Later, Triantaphyllou and Lin (1996) introduced a fuzzy TOPSIS method based on fuzzy arithmetic operations. Chen (2000) extended the TOPSIS method to fuzzy group decision-making situations. Wang and Elhag (2006) proposed a fuzzy TOPSIS method based on alpha level sets. Wang and Lee (2007) generalized the TOPSIS method to the fuzzy MADM environment. Chen and Tsao (2008) and Ashtiani et al. (2009) extended the TOPSIS method to the interval-valued fuzzy numbers environment. Boran et al. (2009) proposed an intuitionistic fuzzy TOPSIS method for the supplier selection problem. Li (2010) proposed a TOPSIS-based NLP methodology with interval-valued intuitionistic fuzzy sets. Tan (2011) introduced a multi-criteria interval-valued intuitionistic fuzzy group decision-making method using a Choquet integral-based TOPSIS method. Robinson and AmirtharajE (2011) developed a TOPSIS method under triangular intuitionistic fuzzy sets. Behzadian et al. (2012) summarized the research on TOPSIS applications and methodologies. In addition to these developments of fuzzy TOPSIS in traditional type-1 fuzzy formats, a notable advance was the interval type-2 fuzzy TOPSIS method proposed by Chen and Lee (2010). They first computed the ranking values of the IT2 FSs elements in the weighted decision matrix, and then computed the crisp relative closeness through the traditional TOPSIS computing process. However, both the defuzzification at the very beginning and the crisp distance computation are approximate: they do not keep the IT2 FSs format throughout the computing process, and they lead to a loss of decision information.

In this paper, we provide an analytical solution to the IT2 FSs-based TOPSIS model with the KM algorithm. The KM algorithm (Karnik and Mendel 2001) is a standard way to compute the centroid and perform type reduction for type-2 fuzzy sets and systems (Hagras 2007; Mendel 2007a, 2013). It transforms fractional nonlinear programming problems into the identification of the switch points of the \(\alpha \) levels, and it converges monotonically and superexponentially to the optimal solution (Mendel and Liu 2007). Some applications of the KM algorithm in decision-making have also been proposed. Wu and Mendel (2007) used the KM algorithm to compute the linguistic weighted average (LWA) of type-2 fuzzy sets. Liu and Mendel (2008) proposed a new \(\alpha \)-cut algorithm for solving the fuzzy weighted averaging (FWA) problem with the KM algorithm. Liu et al. (2012) proposed the analytical solution to FWA with the KM algorithm. Liu and Wang (2013) introduced the analytical solution to generalized FWA with the KM algorithm as well.

Based on the KM algorithm (Karnik and Mendel 2001; Liu et al. 2012), we propose an analytical solution to the TOPSIS model with IT2 FSs variables. First, similar to the case of the type-1 fuzzy TOPSIS method (Kao and Liu 2001; Li et al. 2009), we transform the IT2 FSs TOPSIS model into several interval fractional NLP problems with \(\alpha \) levels for finding the fuzzy relative closeness among the alternatives. Then, we use the KM algorithm to identify the switch points of the interval fractional NLP problems with \(\alpha \) levels. The switch points determine the optimal values of the interval parameters, which can be used to express the optimal solutions to the interval fractional NLP problems directly in an analytical way. Finally, we propose a computational procedure to obtain the analytical solution to the IT2 FSs-based TOPSIS method. Compared with the interval type-2 fuzzy TOPSIS method proposed by Chen and Lee (2010), the proposed procedure is a genuine IT2 FSs-based TOPSIS computation, because the defuzzification of the fuzzy relative closeness is performed at the end of the computing process rather than at the very beginning. It is also accurate, because the fractional NLP problems consider all the conditions when computing the IT2 FSs-based fuzzy relative closeness, and all the switch points are identified through explicit expressions.

The paper is organized as follows. Section 2 introduces the concepts of IT2 FSs, the KM algorithm and the fuzzy TOPSIS method. Section 3 proposes the fractional NLP models of the IT2 FSs-based TOPSIS model with the KM algorithm. Section 4 introduces the analytical solution to the IT2 FSs-based TOPSIS model and discusses some of its properties. Section 5 illustrates a MADM problem under the IT2 FSs environment and compares the results with those of the original method. Section 6 summarizes the main results and draws conclusions.

2 Preliminaries

In this section, we introduce the concepts of IT2 FSs, KM algorithm and the process of computing fuzzy TOPSIS method.

2.1 IT2 FSs and KM algorithm

2.1.1 IT2 FSs

The type-2 fuzzy sets are characterized by a fuzzy membership function, where the membership value is a fuzzy set in \([0,1]\), not a crisp number.

Definition 1

(Zadeh 1975) A type-2 fuzzy set \(\tilde{\tilde{A}}\) is represented by a type-2 membership function \(\mu _{\tilde{\tilde{A}}}\), which can be written as:

where \(x\) is the primary variable, \(J_{x}\subseteq [0,1]\) is the primary membership of \(x\), \(u\) is the secondary variable, and \(\int _{u\in J_{x}} \mu _{\tilde{\tilde{A}}}(x,u)/(x,u)\) is the secondary membership function at \(x\).

Mendel (2001) generalized the interval fuzzy set and introduced the notion of IT2 FSs, defined as follows.

Definition 2

(Mendel 2001) An IT2 FS \(\tilde{\tilde{A}}\) is an object with the parametric form:

where \(x\) is the primary variable, \(J_{x}\subseteq [0,1]\) is the primary membership of \(x\), \(u\) is the secondary variable, and \(\int _{u\in J_{x}} 1/(x,u)\) is the secondary membership function at \(x\).

Definition 3

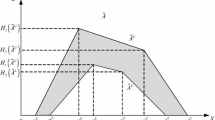

(Mendel 2007b) For an IT2 FS \(\tilde{\tilde{A}}\), the footprint of uncertainty of \(\tilde{\tilde{A}}\), denoted \(\mathrm{FOU}(\tilde{\tilde{A}})\), is defined as:

where FOU is shown as the shaded region in Fig. 1. It is bounded by an upper membership function (UMF) \(\tilde{A}^U(x)\) and a lower membership function (LMF) \(\tilde{A}^L(x)\).

Definition 4

(Lee and Chen 2008) Let \(\tilde{\tilde{A}}_i\) be a trapezoidal IT2 FS as shown in Fig. 1, where \(\tilde{\tilde{A}}_i=\big ((x^U_{i1},x^U_{i2},x^U_{i3},x^U_{i4};H_1(\tilde{A}^U_i),H_2(\tilde{A}^U_i)), (x^L_{i1},x^L_{i2},x^L_{i3},x^L_{i4};H_1(\tilde{A}^L_i),H_2(\tilde{A}^L_i))\big )\). The ranking value \(\mathrm{Rank}(\tilde{\tilde{A}}_i)\) is defined as in Eq. (2).

In Eq. (2), \(M_p(\tilde{A}^j_i)\) denotes the average of the elements \(x^j_{ip}\) and \(x^j_{i(p+1)}\), \(M_p(\tilde{A}^j_i)=\frac{x^j_{ip}+x^j_{i(p+1)}}{2}\), \(p=1,2,3\). \(S_q(\tilde{A}^j_i)\) denotes the standard deviation of the elements \(x^j_{iq}\) and \(x^j_{i(q+1)}\), \(S_q(\tilde{A}^j_i)=\sqrt{\frac{1}{2} \sum \nolimits _{k=q}^{q+1}\left( x^j_{ik}-M_q(\tilde{A}^j_i)\right) ^2}\), \(q=1,2,3\). \(S_4(\tilde{A}^j_i)=\sqrt{\frac{1}{4} \sum \nolimits _{k=1}^4 \left( x^j_{ik}-\frac{1}{4}\sum \nolimits _{k=1}^4 x^j_{ik}\right) ^2}\) denotes the standard deviation of the elements \(x^j_{ik}\) \((k=1,2,3,4)\), \(j\in \{U,L\}\). \(H_p(\tilde{A}^j_i)\) denotes the membership value of the element \(x^j_{i(p+1)}\) in the trapezoidal membership function \(\tilde{A}^j_i\), \(p=1,2\), \(j\in \{U,L\}\), \(i=1,2,\ldots ,n\).
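The quantities \(M_p\), \(S_q\), \(S_4\) and \(H_p\) described above can be combined in a few lines of code. The following is a minimal sketch, assuming that the ranking value takes the form commonly attributed to Lee and Chen (2008): the sum of the three means, minus one quarter of the sum of the four standard deviations, plus the two heights, accumulated over the UMF and the LMF. The function names (`mean_pair`, `std_pair`, `std_all`, `rank_value`) and the tuple layout are illustrative choices, not notation from the paper.

```python
from math import sqrt


def mean_pair(x, p):
    """M_p: average of x_p and x_{p+1}, with p counted from 1 (p = 1, 2, 3)."""
    return (x[p - 1] + x[p]) / 2.0


def std_pair(x, q):
    """S_q: standard deviation of x_q and x_{q+1}, with q counted from 1."""
    m = mean_pair(x, q)
    return sqrt(0.5 * ((x[q - 1] - m) ** 2 + (x[q] - m) ** 2))


def std_all(x):
    """S_4: standard deviation of the four reference points."""
    m = sum(x) / 4.0
    return sqrt(0.25 * sum((xk - m) ** 2 for xk in x))


def rank_value(upper, lower):
    """Ranking value of a trapezoidal IT2 FS (assumed Lee-Chen form).

    upper and lower are pairs (points, heights): points is the 4-tuple
    (x1, x2, x3, x4) and heights is the pair (H1, H2).
    """
    total = 0.0
    for points, heights in (upper, lower):
        total += sum(mean_pair(points, p) for p in (1, 2, 3))
        spread = sum(std_pair(points, q) for q in (1, 2, 3)) + std_all(points)
        total -= 0.25 * spread
        total += heights[0] + heights[1]
    return total


# A crisp value 0.5 with full heights: the means add 3.0, the spreads add 0,
# and the heights add 4.0.
singleton = ((0.5, 0.5, 0.5, 0.5), (1.0, 1.0))
print(rank_value(singleton, singleton))  # 7.0
```

Because every spread term enters with a negative sign, two sets with the same means are ranked in favour of the less dispersed one under this assumed form.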

IT2 FSs are the simplest form of type-2 fuzzy sets. In this paper, we discuss the TOPSIS method only under the IT2 FSs environment.

2.1.2 KM algorithm

The KM algorithm (Karnik and Mendel 2001) is a type reduction method for IT2 FSs, which was originally used to compute the centroid of IT2 FSs. Its principle can be described as follows.

Definition 5

(Mendel and Liu 2007) For an interval type-2 fuzzy set \(\tilde{A}\), the endpoints of the centroid \(c_{\widetilde{A}}=[c_l, c_r]\) are defined as the minimum and maximum solutions, respectively, to the following interval fractional programming problem.

where the \(x_i\) are in increasing order in the domain \(X\), and each \(\theta _i\) can vary between the lower membership function (LMF) \(\underline{\mu }_{\widetilde{A}}(x_i)\) and the upper membership function (UMF) \(\overline{\mu }_{\widetilde{A}}(x_i)\).

The partial derivative of the function \(y(\theta _1,\theta _2,\ldots ,\theta _n)\) with respect to \(\theta _k\) \((k=1,2,\ldots ,n)\) is:

In Eq. (4), because \(\sum \nolimits _{i=1}^n\theta _i > 0\), the position of \(x_k\) relative to \(y(\theta _1,\theta _2,\ldots ,\theta _n)\) determines the monotonicity of \(y\) with respect to \(\theta _k\). That is,

According to Eq. (5), and since the maximum (minimum) of \(\theta _k\) is \(\overline{\mu }_{\widetilde{A}}(x_k)\) \((\underline{\mu }_{\widetilde{A}}(x_k))\), the function \(y(\theta _1,\theta _2,\ldots ,\theta _n)\) reaches its minimum, i.e., \(y^L\), if (1) \(\theta _k=\overline{\mu }_{\widetilde{A}}(x_k)\) for those values of \(k\) with \(x_k < y(\theta _1,\theta _2,\ldots ,\theta _n)\), and (2) \(\theta _k=\underline{\mu }_{\widetilde{A}}(x_k)\) for those values of \(k\) with \(x_k > y(\theta _1,\theta _2,\ldots ,\theta _n)\). Similarly, \(y(\theta _1,\theta _2,\ldots ,\theta _n)\) reaches its maximum, i.e., \(y^U\), if (1) \(\theta _k=\overline{\mu }_{\widetilde{A}}(x_k)\) for those values of \(k\) with \(x_k > y(\theta _1,\theta _2,\ldots ,\theta _n)\), and (2) \(\theta _k=\underline{\mu }_{\widetilde{A}}(x_k)\) for those values of \(k\) with \(x_k < y(\theta _1,\theta _2,\ldots ,\theta _n)\). Because the \(x_k\) are in increasing order, it follows that, for both \(y^L\) and \(y^U\), the optimal \(\theta _k\) switches only once between \(\overline{\mu }_{\widetilde{A}}(x_k)\) and \(\underline{\mu }_{\widetilde{A}}(x_k)\).
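The monotonicity argument above can be checked by direct differentiation. The following is a short derivation, assuming that the objective of the fractional program has the usual weighted-average form of the KM centroid:

```latex
\begin{aligned}
y(\theta_1,\ldots,\theta_n) &= \frac{\sum_{i=1}^{n} x_i\,\theta_i}{\sum_{i=1}^{n} \theta_i},\\[4pt]
\frac{\partial y}{\partial \theta_k}
 &= \frac{x_k \sum_{i=1}^{n}\theta_i - \sum_{i=1}^{n} x_i\,\theta_i}{\left(\sum_{i=1}^{n}\theta_i\right)^{2}}
  = \frac{x_k - y(\theta_1,\ldots,\theta_n)}{\sum_{i=1}^{n}\theta_i}.
\end{aligned}
```

Under this assumption the sign of the derivative is the sign of \(x_k - y\): increasing \(\theta_k\) raises \(y\) when \(x_k > y\) and lowers it when \(x_k < y\), which is the single-switch behaviour described in the text.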

Putting these facts together, the centroid of the IT2 FS \(\widetilde{A}\), \(c_{\widetilde{A}}=[c_l, c_r]\), can be computed as:

where \(k_l\) and \(k_r\) are called “switch points” with \({x}_{k_l}\le c_l\le {x}_{k_l+1}\) and \({x}_{k_r}\le c_r\le {x}_{k_r+1}\). The switch points \(k_l\) and \(k_r\) can be determined using the KM algorithm (Mendel and Liu 2007). The detailed iterations are omitted because only the principle of the algorithm is used here.
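The switch-point structure can be illustrated with a small numerical sketch. The code below does not implement the iterative KM procedure; as a simplifying assumption it tries every possible switch point and keeps the smallest (largest) weighted average, which gives the same endpoints for a discretized set. The function name `centroid_bounds` and the sample data are hypothetical.

```python
def centroid_bounds(x, lower, upper):
    """Return (c_l, c_r) by exhaustive search over switch points k = 0..n.

    x is a list sorted in increasing order; lower[i] <= upper[i] are the
    LMF and UMF grades at x[i], given as lists. At least one grade in every
    candidate weight vector must be positive.
    """
    n = len(x)

    def weighted_mean(theta):
        return sum(xi * ti for xi, ti in zip(x, theta)) / sum(theta)

    left_candidates = []
    right_candidates = []
    for k in range(n + 1):
        # c_l: UMF grades on the first k points, LMF grades afterwards.
        left_candidates.append(weighted_mean(upper[:k] + lower[k:]))
        # c_r: LMF grades on the first k points, UMF grades afterwards.
        right_candidates.append(weighted_mean(lower[:k] + upper[k:]))
    return min(left_candidates), max(right_candidates)


# Hypothetical symmetric footprint of uncertainty centred at x = 3.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
lower = [0.1, 0.3, 0.5, 0.3, 0.1]
upper = [0.4, 0.7, 1.0, 0.7, 0.4]
c_l, c_r = centroid_bounds(x, lower, upper)
print(c_l, c_r)
```

The exhaustive scan costs on the order of \(n^2\) operations, which is acceptable for illustration; the KM iterations reach the same switch points with far fewer evaluations.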

2.2 The process of computing fuzzy TOPSIS method

2.2.1 The process of computing type-1 TOPSIS method

Suppose a fuzzy MADM problem has \(m\) alternatives \(A_1,\ldots ,A_m\) and \(n\) decision criteria \(C_1,\ldots ,C_n\), where \(\tilde{x}_{ji}\) \((j=1,2,\ldots ,m;\ i=1,2,\ldots ,n)\) is the type-1 fuzzy rating of alternative \(A_j\) with respect to criterion \(C_i\), and \(\tilde{w}_i\) is the type-1 fuzzy weight of criterion \(C_i\).

The process of computing type-1 fuzzy TOPSIS method can be summarized as follows (Wang and Elhag 2006).

-

Step 1. Construct the decision matrix \(\tilde{X}\), and normalize average decision matrix as \(\tilde{\bar{X}}=(\tilde{x}_{ji})_{m \times n}\).

-

Step 2. Construct the weighting matrix \(\tilde{W_p}\), and normalize average weighting matrix as \(\tilde{\bar{W}}=(\tilde{w}_i)_{1 \times n}\).

-

Step 3. Define the positive ideal solution and the negative ideal solution.

-

Step 4. Compute the fuzzy relative closeness for alternatives as below.

As \(\mathrm{RC}_j\) is a triangular fuzzy number, the lower and upper limits can be obtained by the following fractional NLP models:

where \(x_{ji}=[x^L_{ji},x^U_{ji}]\) and \(w_i=[w^L_i,w^U_i]\) are the intervals of \(\tilde{x}_{ji}\) and \(\tilde{w}_i\), and \(\mathrm{RC}_j=\left[ \mathrm{RC}^L_j,\mathrm{RC}^U_j\right] \).

-

Step 5. Defuzzify and rank the alternatives in terms of their relative closeness values. The larger \(\mathrm{RC}^*_j\) is, the better alternative \(A_j\) is.
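Step 4 above can be sketched numerically for a single \(\alpha \) level. The code below is a minimal illustration under stated assumptions: ratings are normalized to \([0,1]\), the positive and negative ideal solutions are the all-ones and all-zeros vectors (as in Step 3 of Sect. 4.2), distances are Euclidean, and the bounds are found by enumerating the endpoints of the weight intervals rather than by an NLP solver. The function names `relative_closeness` and `rc_interval` are hypothetical.

```python
from itertools import product
from math import sqrt


def relative_closeness(x, w):
    """Crisp relative closeness for ratings x and weights w of one alternative."""
    d_neg = sqrt(sum((wi * xi) ** 2 for xi, wi in zip(x, w)))
    d_pos = sqrt(sum((wi * (xi - 1.0)) ** 2 for xi, wi in zip(x, w)))
    return d_neg / (d_neg + d_pos)


def rc_interval(x_low, x_high, w_low, w_high):
    """Lower and upper limits of the relative closeness at one alpha level.

    The closeness increases with every rating, so the lower (upper) limit
    uses x_low (x_high). The ratio of squared distances is linear-fractional
    in the squared weights, so its extremes lie at interval endpoints; all
    2**n endpoint combinations are tried, which is only practical for small n.
    """
    corners = list(product(*zip(w_low, w_high)))
    rc_low = min(relative_closeness(x_low, w) for w in corners)
    rc_high = max(relative_closeness(x_high, w) for w in corners)
    return rc_low, rc_high


# Hypothetical alpha-level intervals for one alternative and two criteria.
print(rc_interval([0.2, 0.6], [0.4, 0.8], [0.3, 0.5], [0.5, 0.7]))
```

The enumeration is exponential in the number of criteria, which is the practical motivation for locating the optimal endpoint pattern through switch points instead.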

2.2.2 The process of computing IT2 FSs TOPSIS method

Suppose a fuzzy MADM problem has \(m\) alternatives \(A_1,\ldots ,A_m\) and \(n\) decision criteria \(C_1,\ldots ,C_n\), where \(\tilde{\tilde{x}}_{ji}\) is the interval type-2 fuzzy average evaluation of alternative \(A_j\) with respect to criterion \(C_i\), and \(\tilde{\tilde{w}}_i\) is the interval type-2 fuzzy average weight of criterion \(C_i\).

According to Chen and Lee (2010), the process of computing the IT2 FSs TOPSIS method is as follows.

-

Steps 1–3. Construct the fuzzy-weighted decision matrix \(\bar{Y}_w=(\tilde{\tilde{v}}_{ji})_{m \times n}\) with \(\tilde{\tilde{v}}_{ji}=\tilde{\tilde{w}}_i \otimes \tilde{\tilde{x}}_{ji}\) \((j=1,2,\ldots ,m;\ i=1,2,\ldots ,n)\).

-

Step 4. Compute the ranking values of the elements in the fuzzy-weighted decision matrix \(\bar{Y}_w\) using Eq. (2), and construct the crisp ranking-weighted decision matrix \(\bar{Y}^*_w=(\mathrm{Rank}(\tilde{\tilde{v}}_{ji}))_{m \times n}\).

-

Step 5. Define the positive ideal solution and the negative ideal solution from matrix \(\bar{Y}^*_w\).

-

Step 6. Calculate the distances of each alternative from the positive ideal solution and the negative ideal solution.

-

Step 7. Calculate the crisp relative closeness to the ideal solution.

-

Step 8. Rank the alternatives in terms of their crisp relative closeness values. The larger \(\mathrm{RC}^*_j\) is, the better alternative \(A_j\) is.
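Steps 5–7 operate on a crisp matrix of ranking values, so they reduce to the traditional TOPSIS computation. The sketch below illustrates that part only, under the assumptions that every criterion is of benefit type (larger is better), that the ideal solutions are the column-wise maxima and minima, and that distances are Euclidean. The function name `crisp_topsis` and the sample matrix are hypothetical.

```python
from math import sqrt


def crisp_topsis(rank_matrix):
    """Relative closeness of each row (alternative) of a crisp matrix.

    rank_matrix[j][i] is the crisp ranking value of alternative j on
    criterion i. The rows must not all be identical, otherwise both
    distances are zero and the closeness is undefined.
    """
    n_criteria = len(rank_matrix[0])
    columns = [[row[i] for row in rank_matrix] for i in range(n_criteria)]
    positive_ideal = [max(col) for col in columns]
    negative_ideal = [min(col) for col in columns]

    closeness = []
    for row in rank_matrix:
        d_pos = sqrt(sum((v - p) ** 2 for v, p in zip(row, positive_ideal)))
        d_neg = sqrt(sum((v - q) ** 2 for v, q in zip(row, negative_ideal)))
        closeness.append(d_neg / (d_pos + d_neg))
    return closeness


# Three alternatives, two criteria: the first dominates, the second is dominated.
print(crisp_topsis([[3.0, 4.0], [1.0, 2.0], [2.0, 3.0]]))  # [1.0, 0.0, 0.5]
```

Everything after the ranking step is crisp in this pipeline, which is where the spread information of the IT2 FSs is no longer available.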

3 The fractional NLP models of IT2 FSs-based TOPSIS method

Here, we extend the type-1 fuzzy TOPSIS method to the IT2 FSs environment. By solving the fractional NLP models of the IT2 FSs fuzzy relative closeness with the KM algorithm, the analytical solutions to the IT2 FSs-based TOPSIS can be obtained.

3.1 The fractional NLP models for IT2 FSs-based TOPSIS method

Suppose \(\tilde{\tilde{x}}_i\) and \(\tilde{\tilde{w}}_i\) are normalized IT2 FSs with \(\tilde{\tilde{x}}_i \in [\tilde{x}^U_i(\alpha _j), \tilde{x}^L_i(\alpha _j)]\), \(\tilde{\tilde{w}}_i \in [\tilde{w}^U_i(\alpha _j), \tilde{w}^L_i(\alpha _j)]\), \(\tilde{x}^L_i(\alpha _j) \in [a_{ir}(\alpha _j), b_{il}(\alpha _j)]\), \(\tilde{x}^U_i(\alpha _j) \in [a_{il}(\alpha _j), b_{ir}(\alpha _j)]\), \(\tilde{w}^L_i(\alpha _j) \in [c_{ir}(\alpha _j), d_{il}(\alpha _j)]\) and \(\tilde{w}^U_i(\alpha _j) \in [c_{il}(\alpha _j), d_{ir}(\alpha _j)]\). Their UMFs and LMFs, which share the same maximum and minimum membership values, respectively, are shown in Fig. 2.

Let the membership values of \(\tilde{x}^L_i(\alpha )\) \((\tilde{w}^L_i(\alpha ))\) and \(\tilde{x}^U_i(\alpha )\) \((\tilde{w}^U_i(\alpha ))\) be denoted by \(h^L_{\tilde{x}_i(\alpha )}\) \((h^L_{\tilde{w}_i(\alpha )})\) and \(h^U_{\tilde{x}_i(\alpha )}\) \((h^U_{\tilde{w}_i(\alpha )})\), respectively, and let the corresponding maximum and minimum membership values be denoted by \(h_{\max }\) and \(h_{\min }\), respectively. That is,

According to Problem (8), the fuzzy relative closeness of the IT2 FSs-based TOPSIS method for each alternative is obtained by solving the NLP models in Problem (11), which is also illustrated in Fig. 3.

where \(\tilde{x}^L_{ji}(\alpha )\) \((\tilde{w}^L_i(\alpha ))\) is the left region of the IT2 FS \(\tilde{\tilde{x}}_{ji}\) \((\tilde{\tilde{w}}_i)\), \(\tilde{x}^R_{ji}(\alpha )\) \((\tilde{w}^R_i(\alpha ))\) is the right region of the IT2 FS \(\tilde{\tilde{x}}_{ji}\) \((\tilde{\tilde{w}}_i)\), and \(x_{ji}(\alpha )=[\tilde{x}^L_{ji}(\alpha ), \tilde{x}^R_{ji}(\alpha )]\) and \(w_i(\alpha )=[\tilde{w}^L_i(\alpha ), \tilde{w}^R_i(\alpha )]\) are the \(\alpha \)-level sets of \(\tilde{\tilde{x}}_{ji}\) and \(\tilde{\tilde{w}}_i\).

Similar to the principle of Problems (9) and (10), the left and right region can be obtained by solving fractional NLP models as Problems (12) and (13), respectively.

where \(\tilde{x}^L_{ji}(\alpha )=[a_{jil}(\alpha ), a_{jir}(\alpha )]\), \(\tilde{x}^R_{ji}(\alpha )=[b_{jil}(\alpha ), b_{jir}(\alpha )]\), \(\tilde{w}^L_i(\alpha )=[c_{il}(\alpha ), c_{ir}(\alpha )]\) and \(\tilde{w}^R_i(\alpha )=[d_{il}(\alpha ), d_{ir}(\alpha )]\).

It is obvious that \(\widetilde{\widetilde{\mathrm{RC}}}_j (\alpha )=[\widetilde{\mathrm{RC}}^L_j(\alpha ), \widetilde{\mathrm{RC}}^R_j(\alpha )]\) can be generated by solving NLP Problems (12) and (13).

According to Eq. (8), the final interval type-2 fuzzy relative closeness \(\widetilde{\widetilde{\mathrm{RC}}}_j (\alpha )\) can be expressed as:

Next, we introduce a new NLP problem, through which the optimal solution to Problems (12) and (13) can be computed indirectly.

Theorem 1

Let \(\tilde{\tilde{x}}\) and \(\tilde{\tilde{w}}\) be the IT2 FSs-based aggregated element and weight, respectively, and let

If \(\tilde{\tilde{x}}_{ji}=\tilde{x}^L_{ji}\) and \(\tilde{x}^L_{ji}\) reaches its minimum (maximum), then the left region \(\tilde{f}^L(\alpha )\) attains its maximum (minimum) and \(\widetilde{\mathrm{RC}}^L(\alpha )\) attains its minimum (maximum), correspondingly. Similarly, if \(\tilde{\tilde{x}}_{ji}=\tilde{x}^R_{ji}\) and \(\tilde{x}^R_{ji}\) reaches its minimum (maximum), then the right region \(\tilde{f}^R(\alpha )\) attains its maximum (minimum) and \(\widetilde{\mathrm{RC}}^R(\alpha )\) attains its minimum (maximum), correspondingly.

Proof

See Appendix A. \(\square \)
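The correspondence between the auxiliary ratio and the relative closeness in Theorem 1 can be made explicit. The following is a sketch under two assumptions: the ideal solutions are \(A^*=\{1,\ldots ,1\}\) and \(A^-=\{0,\ldots ,0\}\) (as in Step 3 of Sect. 4.2), and \(f\) is the ratio of squared weighted distances that appears in Eqs. (30)–(33). The symbols \(d^*_j\) and \(d^-_j\) are introduced here only for illustration.

```latex
\begin{aligned}
d^{*}_j &= \sqrt{\sum_{i=1}^{n} \bigl(w_i\,(x_{ji}-1)\bigr)^{2}}, \qquad
d^{-}_j = \sqrt{\sum_{i=1}^{n} \bigl(w_i\,x_{ji}\bigr)^{2}}, \qquad
f = \frac{(d^{*}_j)^{2}}{(d^{-}_j)^{2}},\\[4pt]
\mathrm{RC}_j &= \frac{d^{-}_j}{d^{-}_j + d^{*}_j} = \frac{1}{1+\sqrt{f}} .
\end{aligned}
```

Because \(1/(1+\sqrt{f})\) is strictly decreasing for \(f \ge 0\), maximizing \(f\) minimizes \(\mathrm{RC}_j\) and minimizing \(f\) maximizes it, which matches the maximum/minimum pairing stated in the theorem.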

From Theorem 1, it follows that the optimal solution to Problem (11) can be obtained by solving Problem (15).

Accordingly, the optimal solutions to Problems (12) and (13) can be computed by solving Problems (16) and (17) indirectly.

Then, the fuzzy relative closeness of IT2 FSs-based TOPSIS method can be obtained through solving Eqs. (18), (19).

3.2 The fractional NLP models of IT2 FSs-based TOPSIS method with KM algorithm

Here, we show that Problem (15) satisfies the principle of the KM algorithm.

Let us rewrite the objective function of Problem (15) as a relation between \(\tilde{\tilde{f}}(\tilde{\tilde{w}}_j)\) and \(\tilde{\tilde{w}}_j\):

Then, the partial derivative of \(\tilde{\tilde{f}}(\tilde{\tilde{w}}_1,\tilde{\tilde{w}}_2,\ldots ,\tilde{\tilde{w}}_n)\) with respect to \(\tilde{\tilde{w}}_k\) \((k=1,2,\ldots ,n)\) can be expressed as:

From Eq. (20), it is obvious that

It follows that the extreme points of \(\tilde{\tilde{f}}(\tilde{\tilde{w}}_1,\tilde{\tilde{w}}_2,\ldots ,\tilde{\tilde{w}}_n)\) can be obtained by changing the direction of the weight \(\tilde{\tilde{w}}_k\). When computing \(\tilde{f}^{*R}\) \((\tilde{f}^{*L})\), \(\tilde{\tilde{w}}_k\) switches only once between \(\tilde{w}^R_k(\alpha )\) and \(\tilde{w}^L_k(\alpha )\). Hence, the computation of the maximum (minimum) of \(\tilde{\tilde{f}}(\tilde{\tilde{w}}_1,\tilde{\tilde{w}}_2,\ldots ,\tilde{\tilde{w}}_n)\) can be converted into solving the maximum (minimum) of \(\tilde{\tilde{w}}_i=\tilde{w}^R_k(\alpha )\) (\(\tilde{\tilde{w}}_i=\tilde{w}^L_k(\alpha )\)).

According to the principle of the KM algorithm, if \(\tilde{x}^L_i(\alpha )\) and \(\tilde{x}^R_i(\alpha )\) are in increasing order, the solutions to \(\tilde{\tilde{f}}(\tilde{\tilde{x}})\) are reduced to finding the switch points \(k_L\) and \(k_R\).

Putting all of these facts together, Problems (16) and (17) can be transformed into Eqs. (22) and (23).

In Eqs. (22), (23), \(\tilde{a}_i(\alpha )\) and \(\tilde{b}_i(\alpha )\) are in increasing order; \(\tilde{f}^{*L}\) and \(\tilde{f}^{*R}\) denote the left region and right region of the function \(\tilde{\tilde{f}}\); and \(k_L \triangleq k_L(\alpha )\) and \(k_R \triangleq k_R(\alpha )\) are the switch points, such that

As \(\tilde{\tilde{f}}\) is a monotonically increasing function, Eqs. (22), (23) can be changed into Eqs. (26)–(29).

In Eqs. (26)–(29), \(a_{il}(\alpha )\), \(a_{ir}(\alpha )\), \(b_{il}(\alpha )\) and \(b_{ir}(\alpha )\) are in increasing order.

Suppose the left region \(\tilde{f}^L(\alpha )\) and the right region \(\tilde{f}^R(\alpha )\) can be denoted as:

In the following, we propose the expressions to compute the fuzzy relative closeness for Eqs. (26)–(29).

Theorem 2

The following properties are true.

-

(1)

In Eq. (26), \(f_\mathrm{Ll}\) can be specified as Eq. (30), where the \(a_{il}(\alpha )\) are in increasing order and \(k_\mathrm{Ll}\) is the switch point satisfying \(a_{{k_\mathrm{Ll},l}}(\alpha ) \le f^*_\mathrm{Ll}(\alpha ,k) \le a_{{k_\mathrm{Ll}+1},l}(\alpha )\).

-

(2)

In Eq. (27), \(f_\mathrm{Lr}\) can be specified as Eq. (31), where the \(a_{ir}(\alpha )\) are in increasing order and \(k_\mathrm{Lr}\) is the switch point satisfying \(a_{{k_\mathrm{Lr},r}}(\alpha ) \le f^*_\mathrm{Lr}(\alpha ,k) \le a_{{k_\mathrm{Lr}+1},r}(\alpha )\).

-

(3)

In Eq. (28), \(f_\mathrm{Rl}\) can be specified as Eq. (32), where the \(b_{il}(\alpha )\) are in increasing order and \(k_\mathrm{Rl}\) is the switch point satisfying \(b_{{k_\mathrm{Rl},l}}(\alpha ) \le f^*_\mathrm{Rl}(\alpha ,k) \le b_{{k_\mathrm{Rl}+1},l}(\alpha )\).

-

(4)

In Eq. (29), \(f_\mathrm{Rr}\) can be specified as Eq. (33), where the \(b_{ir}(\alpha )\) are in increasing order and \(k_\mathrm{Rr}\) is the switch point satisfying \(b_{{k_\mathrm{Rr},r}}(\alpha ) \le f^*_\mathrm{Rr}(\alpha ,k) \le b_{{k_\mathrm{Rr}+1},r}(\alpha )\).

$$\begin{aligned} f^*_\mathrm{Ll}(\alpha ,k)=\frac{\sum \limits _{i=1}^{k_\mathrm{Ll}} \left( d_{ir}(\alpha ) (a_{il}(\alpha )-1)\right) ^2+\sum \limits _{i=k_\mathrm{Ll}+1}^n \left( c_{il}(\alpha ) (a_{il}(\alpha )-1)\right) ^2}{\sum \limits _{i=1}^{k_\mathrm{Ll}} \left( d_{ir}(\alpha ) a_{il}(\alpha )\right) ^2+\sum \limits _{i=k_\mathrm{Ll}+1}^n \left( c_{il}(\alpha ) a_{il}(\alpha )\right) ^2}, \end{aligned}\tag{30}$$

$$\begin{aligned} f^*_\mathrm{Lr}(\alpha ,k)=\frac{\sum \limits _{i=1}^{k_\mathrm{Lr}} \left( d_{il}(\alpha ) (a_{ir}(\alpha )-1)\right) ^2+\sum \limits _{i=k_\mathrm{Lr}+1}^n \left( c_{ir}(\alpha ) (a_{ir}(\alpha )-1)\right) ^2}{\sum \limits _{i=1}^{k_\mathrm{Lr}} \left( d_{il}(\alpha ) a_{ir}(\alpha )\right) ^2+\sum \limits _{i=k_\mathrm{Lr}+1}^n \left( c_{ir}(\alpha ) a_{ir}(\alpha )\right) ^2}, \end{aligned}\tag{31}$$

$$\begin{aligned} f^*_\mathrm{Rl}(\alpha ,k)=\frac{\sum \limits _{i=1}^{k_\mathrm{Rl}} \left( c_{ir}(\alpha ) (b_{il}(\alpha )-1)\right) ^2+\sum \limits _{i=k_\mathrm{Rl}+1}^n \left( d_{il}(\alpha ) (b_{il}(\alpha )-1)\right) ^2}{\sum \limits _{i=1}^{k_\mathrm{Rl}} \left( c_{ir}(\alpha ) b_{il}(\alpha )\right) ^2+\sum \limits _{i=k_\mathrm{Rl}+1}^n \left( d_{il}(\alpha ) b_{il}(\alpha )\right) ^2}, \end{aligned}\tag{32}$$

$$\begin{aligned} f^*_\mathrm{Rr}(\alpha ,k)=\frac{\sum \limits _{i=1}^{k_\mathrm{Rr}} \left( c_{il}(\alpha ) (b_{ir}(\alpha )-1)\right) ^2+\sum \limits _{i=k_\mathrm{Rr}+1}^n \left( d_{ir}(\alpha ) (b_{ir}(\alpha )-1)\right) ^2}{\sum \limits _{i=1}^{k_\mathrm{Rr}} \left( c_{il}(\alpha ) b_{ir}(\alpha )\right) ^2+\sum \limits _{i=k_\mathrm{Rr}+1}^n \left( d_{ir}(\alpha ) b_{ir}(\alpha )\right) ^2}. \end{aligned}\tag{33}$$

Proof

See Appendix B. \(\square \)
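The switch-point form of the ratios in Theorem 2 can be evaluated directly. The sketch below computes a ratio in the style of Eq. (30) for a given switch point, scans all switch points for the largest value, and compares the result with an exhaustive search over every combination of weight endpoints. It is an illustration under assumptions: the data are hypothetical values at one fixed \(\alpha \) level, indices are 0-based, and the function names are not from the paper.

```python
from itertools import product


def f_star(a, w_first, w_rest, k):
    """Ratio in the style of Eq. (30) with switch point k.

    The first k elements use the weights w_first, the remaining elements use
    w_rest; a holds the aggregated elements in increasing order.
    """
    weights = list(w_first[:k]) + list(w_rest[k:])
    numerator = sum((w * (x - 1.0)) ** 2 for w, x in zip(weights, a))
    denominator = sum((w * x) ** 2 for w, x in zip(weights, a))
    return numerator / denominator


def best_switch_point(a, w_first, w_rest):
    """Scan k = 0..n and return the pair (k, ratio) with the largest ratio."""
    candidates = ((k, f_star(a, w_first, w_rest, k)) for k in range(len(a) + 1))
    return max(candidates, key=lambda pair: pair[1])


def brute_force_max(a, w_low, w_high):
    """Largest ratio over every combination of weight-interval endpoints."""
    n = len(a)
    return max(f_star(a, combo, combo, n)
               for combo in product(*zip(w_low, w_high)))


# Hypothetical values at one alpha level (a sorted increasingly).
a_l = [0.2, 0.5, 0.7, 0.9]   # plays the role of a_il(alpha)
c_l = [0.3, 0.4, 0.5, 0.6]   # smaller weight endpoints, c_il(alpha)
d_r = [0.5, 0.7, 0.8, 0.9]   # larger weight endpoints, d_ir(alpha)

k_best, f_best = best_switch_point(a_l, d_r, c_l)
print(k_best, f_best, brute_force_max(a_l, c_l, d_r))
```

For this data the scan over \(n+1\) switch points and the search over \(2^n\) endpoint combinations agree, which is the practical content of the switch-point representation.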

Remark 1

In Theorem 2, the aggregated elements in Eqs. (30)–(33) may intersect as \(\alpha \) varies. If they do, the aggregated elements \(a_{il}(\alpha )\), \(a_{ir}(\alpha )\), \(b_{il}(\alpha )\) or \(b_{ir}(\alpha )\) must be sorted in increasing order within each subinterval of \(\alpha \) levels, and the corresponding function \(f^*\) must be written separately for each subinterval.

From the conclusions of Theorem 2, it can easily be seen that \(k=k_\mathrm{Ll}\), \(k=k_\mathrm{Lr}\), \(k=k_\mathrm{Rl}\) and \(k=k_\mathrm{Rr}\) in Eqs. (30)–(33) are the optimal solutions to Eqs. (34)–(37), respectively.

Coupled with the conclusions of Theorem 1, the switch points \(k=k_\mathrm{Ll}\), \(k=k_\mathrm{Lr}\), \(k=k_\mathrm{Rl}\) and \(k=k_\mathrm{Rr}\) in Eqs. (34)–(37) are also the optimal solutions to Eqs. (38)–(41), respectively. It follows that

4 The analytical solution to IT2 FSs-based TOPSIS model

4.1 The identification of the switch points

Next, we introduce further functions, called difference functions, to compute the switch points in Eqs. (34)–(37).

Theorem 3

The optimal solution to Eqs. (34)–(37) with \(k=k_\mathrm{Ll}\), \(k=k_\mathrm{Lr}\), \(k=k_\mathrm{Rl}\) and \(k=k_\mathrm{Rr}\) can be determined as Eqs. (42)–(45), respectively.

-

(1)

In Eq. (42),

$$\begin{aligned} d_\mathrm{Ll}(\alpha ,k)&= \sum \limits _{i=1}^{k_\mathrm{Ll}} (a_{k_\mathrm{Ll}+1,l}(\alpha )-a_{il}(\alpha ))(2a_{k_\mathrm{Ll}+1,l}(\alpha )a_{il}(\alpha )-a_{k_\mathrm{Ll}+1,l}(\alpha )-a_{il}(\alpha )) (d_{ir}(\alpha ))^2 \\&\quad +\sum \limits _{i=k_\mathrm{Ll}+2}^n(a_{k_\mathrm{Ll}+1,l}(\alpha )-a_{il}(\alpha ))(2a_{k_\mathrm{Ll}+1,l}(\alpha )a_{il}(\alpha )-a_{k_\mathrm{Ll}+1,l}(\alpha )-a_{il}(\alpha )) (c_{il}(\alpha ))^2, \end{aligned}\tag{42}$$

\(d_\mathrm{Ll}(\alpha ,k)\) is a decreasing function with respect to \(k\) \((k=0,1,\ldots ,n-1)\), and there exists \(k=k_\mathrm{Ll}\) \((k_\mathrm{Ll}=1,2,\ldots ,n-1)\) such that \(d_\mathrm{Ll}(\alpha ,k_\mathrm{Ll}-1) \ge 0\) and \(d_\mathrm{Ll}(\alpha ,k_\mathrm{Ll}) < 0\). So, \(k_\mathrm{Ll}\) is the optimal solution to Eq. (34), i.e., \(k_\mathrm{Ll}=k^*\). Moreover, when \(k=0,1,\ldots ,k_\mathrm{Ll}\), \(f(\alpha ,k)\) is an increasing function of \(k\); when \(k=k_\mathrm{Ll},k_\mathrm{Ll}+1,\ldots ,n\), \(f(\alpha ,k)\) is a decreasing function of \(k\). So, \(k_\mathrm{Ll}\) is the global maximum solution to Eq. (34) with \(f_\mathrm{Ll}(\alpha )=f(\alpha ,k_\mathrm{Ll})\).

-

(2)

In Eq. (43),

$$\begin{aligned} d_\mathrm{Lr}(\alpha ,k)&= \sum \limits _{i=1}^{k_\mathrm{Lr}} (a_{k_\mathrm{Lr}+1,r}(\alpha )-a_{ir}(\alpha ))(2a_{k_\mathrm{Lr}+1,r}(\alpha )a_{ir}(\alpha )-a_{k_\mathrm{Lr}+1,r}(\alpha )-a_{ir}(\alpha )) (d_{il}(\alpha ))^2 \\&\quad +\sum \limits _{i=k_\mathrm{Lr}+2}^n(a_{k_\mathrm{Lr}+1,r}(\alpha )-a_{ir}(\alpha ))(2a_{k_\mathrm{Lr}+1,r}(\alpha )a_{ir}(\alpha )-a_{k_\mathrm{Lr}+1,r}(\alpha )-a_{ir}(\alpha ))(c_{ir}(\alpha ))^2, \end{aligned}\tag{43}$$

\(d_\mathrm{Lr}(\alpha ,k)\) is a decreasing function with respect to \(k\) \((k=0,1,\ldots ,n-1)\), and there exists \(k=k_\mathrm{Lr}\) \((k_\mathrm{Lr}=1,2,\ldots ,n-1)\) such that \(d_\mathrm{Lr}(\alpha ,k_\mathrm{Lr}-1) \ge 0\) and \(d_\mathrm{Lr}(\alpha ,k_\mathrm{Lr}) < 0\). So, \(k_\mathrm{Lr}\) is the optimal solution to Eq. (35), i.e., \(k_\mathrm{Lr}=k^*\). Moreover, when \(k=0,1,\ldots ,k_\mathrm{Lr}\), \(f(\alpha ,k)\) is an increasing function of \(k\); when \(k=k_\mathrm{Lr},k_\mathrm{Lr}+1,\ldots ,n\), \(f(\alpha ,k)\) is a decreasing function of \(k\). So, \(k_\mathrm{Lr}\) is the global maximum solution to Eq. (35) with \(f_\mathrm{Lr}(\alpha )=f(\alpha ,k_\mathrm{Lr})\).

-

(3)

In Eq. (44),

$$\begin{aligned} d_\mathrm{Rl}(\alpha ,k)&= -\sum \limits _{i=1}^{k_\mathrm{Rl}} (b_{k_\mathrm{Rl}+1,l}(\alpha )-b_{il}(\alpha ))(2b_{k_\mathrm{Rl}+1,l}(\alpha )b_{il}(\alpha )-b_{k_\mathrm{Rl}+1,l}(\alpha )-b_{il}(\alpha )) (c_{ir}(\alpha ))^2 \\&\quad -\sum \limits _{i={k_\mathrm{Rl}}+2}^n(b_{k_\mathrm{Rl}+1,l}(\alpha )-b_{il}(\alpha ))(2b_{k_\mathrm{Rl}+1,l}(\alpha )b_{il}(\alpha )-b_{k_\mathrm{Rl}+1,l}(\alpha )-b_{il}(\alpha ))(d_{il}(\alpha ))^2, \end{aligned}\tag{44}$$

\(d_\mathrm{Rl}(\alpha ,k)\) is an increasing function with respect to \(k\) \((k=0,1,\ldots ,n-1)\), and there exists \(k=k_\mathrm{Rl}\) \((k_\mathrm{Rl}=1,2,\ldots ,n-1)\) such that \(d_\mathrm{Rl}(\alpha ,k_\mathrm{Rl}-1) \le 0\) and \(d_\mathrm{Rl}(\alpha ,k_\mathrm{Rl}) > 0\). Hence, \(k_\mathrm{Rl}\) is the optimal solution to Eq. (36), i.e., \(k_\mathrm{Rl}=k^*\). Moreover, when \(k=0,1,\ldots ,k_\mathrm{Rl}\), \(f(\alpha ,k)\) is a decreasing function of \(k\); when \(k=k_\mathrm{Rl},k_\mathrm{Rl}+1,\ldots ,n\), \(f(\alpha ,k)\) is an increasing function of \(k\). So, \(k_\mathrm{Rl}\) is the global minimum solution to Eq. (36) with \(f_\mathrm{Rl}(\alpha )=f(\alpha ,k_\mathrm{Rl})\).

-

(4)

In Eq. (45),

$$\begin{aligned} d_\mathrm{Rr}(\alpha ,k)&= -\sum \limits _{i=1}^{k_\mathrm{Rr}} (b_{k_\mathrm{Rr}+1,r}(\alpha )-b_{ir}(\alpha ))(2b_{k_\mathrm{Rr}+1,r}(\alpha )b_{ir}(\alpha )-b_{k_\mathrm{Rr}+1,r}(\alpha )-b_{ir}(\alpha )) (c_{il}(\alpha ))^2 \\&\quad -\sum \limits _{i=k_\mathrm{Rr}+2}^n(b_{k_\mathrm{Rr}+1,r}(\alpha )-b_{ir}(\alpha ))(2b_{k_\mathrm{Rr}+1,r}(\alpha )b_{ir}(\alpha )-b_{k_\mathrm{Rr}+1,r}(\alpha )-b_{ir}(\alpha ))(d_{ir}(\alpha ))^2, \end{aligned}\tag{45}$$

\(d_\mathrm{Rr}(\alpha ,k)\) is an increasing function with respect to \(k\) \((k=0,1,\ldots ,n-1)\), and there exists \(k=k_\mathrm{Rr}\) \((k_\mathrm{Rr}=1,2,\ldots ,n-1)\) such that \(d_\mathrm{Rr}(\alpha ,k_\mathrm{Rr}-1) \le 0\) and \(d_\mathrm{Rr}(\alpha ,k_\mathrm{Rr}) > 0\). Hence, \(k_\mathrm{Rr}\) is the optimal solution to Eq. (37), i.e., \(k_\mathrm{Rr}=k^*\). Moreover, when \(k=0,1,\ldots ,k_\mathrm{Rr}\), \(f(\alpha ,k)\) is a decreasing function of \(k\); when \(k=k_\mathrm{Rr},k_\mathrm{Rr}+1,\ldots ,n\), \(f(\alpha ,k)\) is an increasing function of \(k\). So, \(k_\mathrm{Rr}\) is the global minimum solution to Eq. (37) with \(f_\mathrm{Rr}(\alpha )=f(\alpha ,k_\mathrm{Rr})\).

Proof

See Appendix C. \(\square \)
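The sign test in Theorem 3 can be sketched in code for the case of Eq. (42). The example below evaluates a difference function of that form for each \(k\) and returns the first \(k\) at which it becomes negative. It is an illustration under assumptions: lists are 0-based, so `a[k]` plays the role of \(a_{k+1,l}(\alpha )\); the data are hypothetical values at one fixed \(\alpha \) level; and the function names are not from the paper.

```python
def difference(a, w_first, w_rest, k):
    """Difference function in the style of Eq. (42), with 0-based lists.

    a[k] is the pivot element. Elements before the pivot carry the weights
    w_first, elements after it carry w_rest; the pivot's own term is zero.
    """
    pivot = a[k]

    def term(x):
        return (pivot - x) * (2.0 * pivot * x - pivot - x)

    head = sum(term(a[i]) * w_first[i] ** 2 for i in range(k))
    tail = sum(term(a[i]) * w_rest[i] ** 2 for i in range(k + 1, len(a)))
    return head + tail


def switch_point(a, w_first, w_rest):
    """Smallest k with a negative difference, or n if it never turns negative."""
    for k in range(len(a)):
        if difference(a, w_first, w_rest, k) < 0:
            return k
    return len(a)


# Hypothetical values at one alpha level (a sorted increasingly).
a_l = [0.2, 0.5, 0.7, 0.9]   # plays the role of a_il(alpha)
c_l = [0.3, 0.4, 0.5, 0.6]   # smaller weight endpoints, c_il(alpha)
d_r = [0.5, 0.7, 0.8, 0.9]   # larger weight endpoints, d_ir(alpha)

print([difference(a_l, d_r, c_l, k) for k in range(len(a_l))])
print(switch_point(a_l, d_r, c_l))  # 2
```

For this data the difference function is positive for \(k=0,1\) and negative for \(k=2,3\), so the sign change identifies the switch point after at most \(n\) evaluations and without computing any ratio.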

Remark 2

In Theorem 3, the optimal solutions to Eqs. (42)–(45) may not be unique; that is, there may exist multiple values of \(k^*\) for a given difference function. In that case, these optimal solutions must form a consecutive sequence and yield the same fuzzy relative closeness, and together they constitute the global analytical solutions to Eqs. (30)–(33).

From the conclusions of Theorem 3, it can easily be seen that the switch points in Eqs. (30)–(33) can be obtained by computing the difference functions of Eqs. (42)–(45), which are also the optimal switch points in Eqs. (38)–(41).

Combining the conclusions of Theorems 2 and 3, the procedure for computing the fuzzy relative closeness in the IT2 FSs-based TOPSIS method is summarized in Tables 1 and 2.
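The switch-point idea can be illustrated numerically at a single \(\alpha \) level. The sketch below is not the tabulated procedure: it assumes the ratio has the form of Eq. (57), \(f=\sum (w_i(x_i-1))^2/\sum (w_i x_i)^2\), that the closeness is \(1/(1+\sqrt{f})\) as in Eq. (50), and it replaces the analytical difference functions of Eqs. (42)–(45) with a plain enumeration of \(f(k)\) for \(k=0,\ldots ,n\). The function names and the three-criterion data are hypothetical.

```python
from math import sqrt


def ratio(x, w):
    """f = sum((w_i (x_i - 1))^2) / sum((w_i x_i)^2), the assumed form of Eq. (57)."""
    num = sum((wi * (xi - 1.0)) ** 2 for xi, wi in zip(x, w))
    den = sum((wi * xi) ** 2 for xi, wi in zip(x, w))
    return num / den


def switch_scan(x, w_first, w_rest, pick):
    """Evaluate f(k) for k = 0..n and return (k*, f(k*), forward differences).

    x must be sorted increasingly; the first k criteria use w_first and the
    remaining ones use w_rest. `pick` is the built-in max or min.
    """
    n = len(x)
    f = [ratio(x, list(w_first[:k]) + list(w_rest[k:])) for k in range(n + 1)]
    k_star = pick(range(n + 1), key=f.__getitem__)
    diffs = [f[k + 1] - f[k] for k in range(n)]  # sign change marks the switch point
    return k_star, f[k_star], diffs


def closeness_interval(x_left, x_right, w_low, w_high):
    """Return (RC_min, RC_max) at one alpha level, with RC = 1 / (1 + sqrt(f))."""
    def sort_by(x):
        order = sorted(range(len(x)), key=x.__getitem__)
        return ([x[i] for i in order],
                [w_low[i] for i in order],
                [w_high[i] for i in order])

    xs, lo, hi = sort_by(x_left)
    _, f_max, _ = switch_scan(xs, hi, lo, max)  # largest f gives the smallest RC
    xs, lo, hi = sort_by(x_right)
    _, f_min, _ = switch_scan(xs, lo, hi, min)  # smallest f gives the largest RC
    return 1.0 / (1.0 + sqrt(f_max)), 1.0 / (1.0 + sqrt(f_min))


# Hypothetical three-criterion data at a single alpha level (not from the paper).
rc_min, rc_max = closeness_interval(
    x_left=[0.4, 0.6, 0.7], x_right=[0.6, 0.8, 0.9],
    w_low=[0.5, 0.3, 0.6], w_high=[0.8, 0.6, 0.9],
)
print(rc_min, rc_max)
```

Enumerating all \(n+1\) candidates is slower than locating the sign change of a difference function, but it does not depend on the monotonicity property and so serves as an independent check.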

Remark 3

As an IT2 FS is bounded by its UMF \(\tilde{A}^U(x)\) and LMF \(\tilde{A}^L(x)\), both of which are type-1 fuzzy sets, the analytical solution to the IT2 FSs-based TOPSIS method shown in Tables 1 and 2 is also applicable to the type-1 fuzzy TOPSIS method.

4.2 The procedure of the analytical solution to IT2 FSs-based TOPSIS model

Based on the proposed process for computing fuzzy relative closeness in Tables 1, 2, the procedure of the analytical solution to IT2 FSs-based TOPSIS method can be summarized as follows.

- Step 1. Construct the decision matrix \(\tilde{\tilde{X}}\), and normalize the average decision matrix as \(\tilde{\tilde{\bar{X}}}=(\tilde{\tilde{x}}_{ji})_{m \times n}\).

- Step 2. Construct the weighting matrix \(\tilde{\tilde{W}}\), and normalize the average weighting matrix as \(\tilde{\tilde{\bar{W}}}=(\tilde{\tilde{w}}_i)_{1 \times n}\).

- Step 3. Define the positive ideal solution \(A^*=\{1,1,\ldots ,1\}\) and the negative ideal solution \(A^-=\{0,0,\ldots ,0\}\).

- Step 4. Write the normalized average evaluations and weights at level \(\alpha \) \((\alpha \in [0,1])\) as \(a_{il}(\alpha )\), \(a_{ir}(\alpha )\), \(b_{il}(\alpha )\), \(b_{ir}(\alpha )\), \(c_{il}(\alpha )\), \(c_{ir}(\alpha )\), \(d_{il}(\alpha )\) and \(d_{ir}(\alpha )\).

- Step 5. Compute the UMF \(\widetilde{\mathrm{RC}}^U_j(\alpha )\) of the IT2 FSs-based fuzzy relative closeness according to Table 1.

- Step 6. Compute the LMF \(\widetilde{\mathrm{RC}}^L_j(\alpha )\) of the IT2 FSs-based fuzzy relative closeness according to Table 2.

- Step 7. Draw the closed form of the IT2 FSs-based fuzzy relative closeness \(\widetilde{\widetilde{\mathrm{RC}}}^*_j\) according to the final expressions in Steps 5–6.

- Step 8. Compute the ranking values Rank(\(\widetilde{\widetilde{\mathrm{RC}}}^*_j\)) according to Eq. (2). The larger Rank(\(\widetilde{\widetilde{\mathrm{RC}}}^*_j\)) is, the better the alternative \(A_j\).
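Step 4 relies on writing each fuzzy number as an interval at level \(\alpha \). A minimal sketch of that operation for a trapezoidal membership function is given below. The function name `alpha_cut`, the `height` parameter and the sample vertices are illustrative assumptions rather than notation from this paper.

```python
def alpha_cut(trapezoid, alpha, height=1.0):
    """Return the interval (left, right) of a trapezoidal fuzzy number at level alpha.

    trapezoid = (a1, a2, a3, a4) with a1 <= a2 <= a3 <= a4; `height` is the
    maximum membership grade (1.0 for a normal fuzzy number).
    """
    if not 0.0 <= alpha <= height:
        raise ValueError("alpha must lie in [0, height]")
    a1, a2, a3, a4 = trapezoid
    t = alpha / height  # rescale so that t = 1 reaches the plateau
    return a1 + t * (a2 - a1), a4 - t * (a4 - a3)


# Hypothetical vertices, chosen only for illustration.
print(alpha_cut((0.57, 0.77, 0.77, 0.93), 0.5))
```

With a triangular shape (\(a_2=a_3\)) the two endpoints are linear in \(\alpha \) and meet at \(\alpha =1\).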

5 Example

The example is taken from Chen and Lee (2010). Three alternatives \(A_{1-3}\) are evaluated against four criteria \(C_{1-4}\) by three decision makers \(D_{1-3}\). The linguistic evaluation variables are reproduced in Table 3. Tables 4 and 5 show the average weights and assessments provided by the three decision makers. The aggregated fuzzy numbers are obtained by averaging the fuzzy opinions of the three decision makers, that is, \(\tilde{\tilde{w}}_j=\frac{1}{3}\sum \nolimits _{k=1}^3 \tilde{\tilde{w}}_j^k\) \((j=1,2,\ldots ,4)\) and \(\tilde{\tilde{x}}_{ij}=\frac{1}{3}\sum \nolimits _{k=1}^3 \tilde{\tilde{x}}_{ij}^k\) \((i=1,2,3; j=1,2,\ldots ,4)\), where \(\tilde{\tilde{w}}_j^k\) and \(\tilde{\tilde{x}}_{ij}^k\) are the relative weights and the ratings given by the \(k\)th decision maker, respectively.
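The averaging step can be sketched as a component-wise mean of the parameter tuples that describe each fuzzy opinion. The helper name `average_fuzzy` and the three ratings below are hypothetical; the actual linguistic terms are those of Table 3.

```python
def average_fuzzy(opinions):
    """Component-wise mean of equally long fuzzy-number parameter tuples."""
    n = len(opinions)
    size = len(opinions[0])
    return tuple(sum(op[i] for op in opinions) / n for i in range(size))


# Hypothetical ratings from three decision makers (trapezoid vertices only).
ratings = [(0.5, 0.7, 0.7, 0.9), (0.7, 0.9, 0.9, 1.0), (0.5, 0.7, 0.7, 0.9)]
print(average_fuzzy(ratings))
```

For an IT2 fuzzy number the same mean would be taken separately over the UMF and LMF parameters.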

5.1 Computing process

Here, we take alternative \(A_1\) as an example, and show the process of computing the IT2 FSs-based fuzzy relative closeness in an analytical way.

- Step 1 Construct the decision matrix \(\tilde{X}\), and normalize the fuzzy average decision matrix, which is shown in Table 5.

- Step 2 Construct the weighting matrix \(\tilde{W}\), and normalize the fuzzy average weighting matrix as \(\tilde{\bar{W}}=(\tilde{w}_i)_{1 \times n}\) shown in Table 4.

- Step 3 Define the positive ideal solution \(A^*=\{1,1,\ldots ,1\}\) and the negative ideal solution \(A^-=\{0,0,\ldots ,0\}\).

- Step 4 Write the average fuzzy evaluations for alternative \(A_1\) and the average weights with \(\alpha \) level, respectively.

$$\begin{aligned}&\tilde{x}^U_{11}(\alpha )=(0.57+0.2\alpha ,0.93-0.16\alpha ),\\&\tilde{x}^U_{21}(\alpha )=(0.77+0.16\alpha ,1-0.07\alpha ),\\&\tilde{x}^U_{31}(\alpha )=(0.77+0.16\alpha ,1-0.07\alpha ),\\&\tilde{x}^U_{41}(\alpha )=(0.77+0.16\alpha ,1-0.07\alpha ),\\&\tilde{w}^U_1(\alpha )=(0.83+0.14\alpha ,1-0.03\alpha ),\\&\tilde{w}^U_2(\alpha )=(0.83+0.14\alpha ,1-0.03\alpha ),\\&\tilde{w}^U_3(\alpha )=(0.43+0.2\alpha ,0.83-0.2\alpha ),\\&\tilde{w}^U_4(\alpha )=(0.77+0.16\alpha ,1-0.07\alpha ). \end{aligned}$$

- Step 5 Compute the fuzzy relative closeness \(\mathrm{RC}_\mathrm{Ll}\) for alternative \(A_1\).

- (1) Sort the aggregated elements \(a_{il}(\alpha )\) \((i=1,2,3,4)\) in increasing order. According to the expressions of the aggregated elements \(a_{il}(\alpha )\) \((i=1,2,3,4)\), the graph can be drawn as Fig. 4. For any \(\alpha \in [0,1]\), it follows that \(a_{1l}(\alpha ) \le a_{2l}(\alpha )=a_{3l}(\alpha )=a_{4l}(\alpha )\). Hence, the order of \(a_{il}\) \((i=1,2,3,4)\) need not be changed.

- (2) Construct the left difference functions \(d_\mathrm{Ll}(\alpha ,k)\) \((k=0,1,2,3)\) for alternative \(A_1\). According to Eq. (42), the difference functions \(d_\mathrm{Ll}(\alpha ,k)\) \((k=0,1,2,3)\) for alternative \(A_1\) are given by Eqs. (46)–(48), which are also shown in Fig. 5.

- (3) Find the switch point of the difference function \(d_\mathrm{Ll}(\alpha ,k^*)\) for alternative \(A_1\). From Fig. 5, it can be seen that for any \(\alpha \in [0,1]\), \(d_\mathrm{Ll}(\alpha ,0)\ge 0\) and \(d_\mathrm{Ll}(\alpha ,1)<0\). According to Theorem 3(1), the switch point is therefore \(k^*=k_\mathrm{Ll}=1\) for every \(\alpha \in [0,1]\).

- (4) Write the expression of function \(f_\mathrm{Ll}(\alpha )\) for alternative \(A_1\). According to Eq. (30), when \(k_\mathrm{Ll}=1\), the closed-form expression of function \(f_\mathrm{Ll}(\alpha )\) is given by Eq. (49).

- (5) Write the analytical solution to \(\widetilde{\mathrm{RC}}_\mathrm{Ll}(\alpha )\) for alternative \(A_1\). Substituting Eq. (49) into Eq. (38), for all \(\alpha \in [0,1]\) the closed-form function of the fuzzy relative closeness \(\widetilde{\mathrm{RC}}_\mathrm{Ll}(\alpha )\) can be expressed as Eq. (50).

$$\begin{aligned}&\mathrm{RC}_\mathrm{Ll}(\alpha )\\&\quad =\frac{1}{1+\sqrt{\frac{0.26249+0.00222\alpha ^4+0.00783\alpha ^3+0.04464\alpha ^2-0.25662\alpha }{1.19451+0.00222\alpha ^4+0.03546\alpha ^3+0.27503\alpha ^2+0.95576\alpha }}},\\&\qquad \alpha \in [0,1]. \end{aligned}$$ (50)

- (6) Write the analytical solution to the fuzzy relative closeness \(\widetilde{\mathrm{RC}}_\mathrm{Rr}(\alpha )\) for alternative \(A_1\). Similarly, the maximal fuzzy relative closeness \(\mathrm{RC}_\mathrm{Rr}\) for alternative \(A_1\) is given by Eq. (51).

$$\begin{aligned}&\mathrm{RC}_\mathrm{Rr}(\alpha )\\&\quad =\frac{1}{1+\sqrt{\frac{0.00338+0.00073\alpha ^4+0.00378\alpha ^3+0.03611\alpha ^2+0.01657\alpha }{3.28473+0.00073\alpha ^4-0.0089\alpha ^3+0.09888\alpha ^2-0.91246\alpha }}},\\&\qquad \alpha \in [0,1]. \end{aligned}$$ (51)

- (7) Draw the closed form of the UMF \(\widetilde{\mathrm{RC}}^U_1\) for alternative \(A_1\). Combining Eqs. (50) and (51), the closed form of the UMF \(\widetilde{\mathrm{RC}}^U\) for alternative \(A_1\) can be written as Eq. (52).

- Step 6 Compute the LMF \(\widetilde{\mathrm{RC}}^L_1\) of relative closeness for alternative \(A_1\). According to the computing process in Table 2, the closed-form function of fuzzy relative closeness \(\widetilde{\mathrm{RC}}^L_1\) can be obtained as Eq. (53).

- Step 7 With the same method, compute the whole closed-form function of fuzzy relative closeness for alternatives \(A_2\) and \(A_3\). The plots of the IT2 FSs-based fuzzy relative closeness for the three alternatives are shown in Fig. 6.

- Step 8 Using Eq. (2), the final ranking values of the IT2 FSs-based fuzzy relative closeness for the three alternatives are computed as: Rank\((A_1)=8.83836\), Rank\((A_2)=8.95285\), Rank\((A_3)=8.75709\). That is, the best alternative is \(A_2\), and the ranking of the alternatives is

$$\begin{aligned} A_2 \succ A_1 \succ A_3. \end{aligned}$$
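The closed forms in Eqs. (50) and (51) can be cross-checked against a direct evaluation from the Step 4 \(\alpha \)-cuts. The sketch below assumes the ratio form \(f=\sum (w_i(x_i-1))^2/\sum (w_i x_i)^2\) and the switch point \(k=1\) found in item (3); the function names are illustrative. For \(\mathrm{RC}_\mathrm{Ll}\) the first criterion takes its upper weight and the others their lower weights, and the assignment is reversed for \(\mathrm{RC}_\mathrm{Rr}\).

```python
from math import sqrt


def cuts(alpha):
    """Step 4 alpha-cuts for A_1: lists of (left, right) endpoints."""
    x = [(0.57 + 0.2 * alpha, 0.93 - 0.16 * alpha)] + \
        [(0.77 + 0.16 * alpha, 1 - 0.07 * alpha)] * 3
    w = [(0.83 + 0.14 * alpha, 1 - 0.03 * alpha),
         (0.83 + 0.14 * alpha, 1 - 0.03 * alpha),
         (0.43 + 0.2 * alpha, 0.83 - 0.2 * alpha),
         (0.77 + 0.16 * alpha, 1 - 0.07 * alpha)]
    return x, w


def closeness(x, w):
    """RC = 1 / (1 + sqrt(f)) with f the assumed distance ratio."""
    num = sum((wi * (xi - 1)) ** 2 for xi, wi in zip(x, w))
    den = sum((wi * xi) ** 2 for xi, wi in zip(x, w))
    return 1 / (1 + sqrt(num / den))


def rc_ll_direct(alpha, k=1):
    """Left x endpoints; upper weights for the first k criteria, lower ones after."""
    x, w = cuts(alpha)
    return closeness([xl for xl, _ in x],
                     [wr if i < k else wl for i, (wl, wr) in enumerate(w)])


def rc_rr_direct(alpha, k=1):
    """Right x endpoints; lower weights for the first k criteria, upper ones after."""
    x, w = cuts(alpha)
    return closeness([xr for _, xr in x],
                     [wl if i < k else wr for i, (wl, wr) in enumerate(w)])


def quartic(c0, c1, c2, c3, c4, a):
    return c0 + c1 * a + c2 * a ** 2 + c3 * a ** 3 + c4 * a ** 4


def rc_ll_closed(alpha):
    """Eq. (50), coefficients as printed (rounded to five decimals)."""
    num = quartic(0.26249, -0.25662, 0.04464, 0.00783, 0.00222, alpha)
    den = quartic(1.19451, 0.95576, 0.27503, 0.03546, 0.00222, alpha)
    return 1 / (1 + sqrt(num / den))


def rc_rr_closed(alpha):
    """Eq. (51), coefficients as printed (rounded to five decimals)."""
    num = quartic(0.00338, 0.01657, 0.03611, 0.00378, 0.00073, alpha)
    den = quartic(3.28473, -0.91246, 0.09888, -0.0089, 0.00073, alpha)
    return 1 / (1 + sqrt(num / den))


for alpha in (0.0, 0.5, 1.0):
    print(alpha, rc_ll_direct(alpha), rc_ll_closed(alpha),
          rc_rr_direct(alpha), rc_rr_closed(alpha))
```

The direct and closed-form values should agree up to the rounding of the printed coefficients, and the two endpoints meet at \(\alpha =1\) because the Step 4 intervals collapse to points there.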

5.2 Discussion

The ranking results happen to coincide with those of Chen and Lee (2010). The proposed method, however, is completely different from theirs, and the differences are summarized as follows.

- (1) It realizes IT2 FSs-based TOPSIS computation in the actual sense. The IT2 FSs formats are kept through the whole computing process when solving the fuzzy relative distance functions for the three alternatives, and defuzzification is performed only at the end of the computing process, whereas Chen and Lee (2010) defuzzify from the very beginning.

- (2) It is accurate. The fractional NLP models consider all conditions when computing the IT2 FSs-based fuzzy relative closeness for the three alternatives, and their switch points are identified by solving the difference functions. By computing the algebraic formula of the objective function within \(\alpha \in [0,1]\), the analytical solution to the IT2 FSs-based TOPSIS model can be obtained, which avoids information loss in the computing process. In Chen and Lee (2010), by contrast, the elements of the weighted decision matrix are defuzzified first and the crisp relative closeness is then computed through the traditional TOPSIS method, which causes a loss of decision information.

- (3) Moreover, a global and accurate picture of the IT2 FSs-based fuzzy relative closeness can be obtained for each of the three alternatives, which makes it possible to analyze the properties of the results further.

6 Conclusion

In this paper, we have proposed an analytical solution to the IT2 FSs-based TOPSIS model for solving fuzzy MADM problems. First, we have formulated the fractional NLP problems for the fuzzy relative closeness. Second, based on the principle of the KM algorithm, we have transformed the fractional NLP problems into the identification of the switch points at each \(\alpha \) level. Finally, by computing the algebraic formula of the objective function within \(\alpha \in [0,1]\), we have obtained the analytical solution to the IT2 FSs-based TOPSIS model. Moreover, we have discussed some properties of the proposed method. The main difference from Chen and Lee (2010) is that the proposed method keeps the IT2 FSs format of the evaluations and weights throughout the computing process, and thus realizes an IT2 FSs-based TOPSIS solution in the actual sense. It is accurate, as the computation is a continuous process and all the switch points are found by solving expressions. Moreover, a global picture of the fuzzy relative closeness can be obtained for further analysis.

References

Ashtiani B, Haghighirad F, Makui A, Montazer (2009) Extension of fuzzy TOPSIS method based on interval-valued fuzzy sets. Appl Soft Comput 9(2):457–461

Behzadian M, Otaghsara SK, Yazdani M, Ignatius J (2012) A state-of the-art survey of TOPSIS applications. Exp Syst Appl 39(17):13051–13069

Boran FE, Genc S, Kurt M, Akay D (2009) A multi-criteria intuitionistic fuzzy group decision making for supplier selection with TOPSIS method. Exp Syst Appl 36(8):11363–11368

Chakravarty S, Dash P (2012) A PSO based integrated functional link net and interval type-2 fuzzy logic system for predicting stock market indices. Appl Soft Comput 12(2):931–941

Chen C (2000) Extensions of the TOPSIS for group decision-making under fuzzy environment. Fuzzy Sets Syst 114(1):1–9

Chen T, Tsao C (2008) The interval-valued fuzzy TOPSIS method and experimental analysis. Fuzzy Sets Syst 159(11):1410–1428

Chen SM, Lee LW (2010) Fuzzy multiple attributes group decision-making based on the interval type-2 TOPSIS method. Exp Syst Appl 37(4):2790–2798

Chen SM, Wang CY (2013) Fuzzy decision making systems based on interval type-2 fuzzy sets. Inf Sci 242:1–21

Damghani KK, Nezhad SS, Tavana M (2013) Solving multi-period project selection problems with fuzzy goal programming based on TOPSIS and a fuzzy preference relation. Inf Sci 252:42–61

Hagras H (2007) Type-2 FLCs: a new generation of fuzzy controllers. IEEE Comput Intell Mag 2(1):30–43

Huang HD, Lee CS, Wang MH, Kao HY (2014) IT2FS-based ontology with soft-computing mechanism for malware behavior analysis. Soft Comput 18(2):267–284

Hwang CL, Yoon K (1981) Multiple attribute decision making. Springer, Berlin

Kao C, Liu S (2001) Fractional programming approach to fuzzy weighted average. Fuzzy Sets Syst 120(3):435–444

Karnik NN, Mendel JM (2001) Centroid of a type-2 fuzzy set. Inf Sci 132:195–220

Khosravi A, Nahavandi S, Creighton D, Srinivasan D (2012) Interval type-2 fuzzy logic systems for load forecasting: a comparative study. IEEE Trans Power Syst 27(3):1274–1282

Lee LW, Chen SM (2008) Fuzzy multiple attributes group decision-making based on the extension of TOPSIS method and interval type-2 fuzzy sets. In: Proceedings of 2008 international conference on machine learning and cybernetics, vols 1–7, pp 3260–3265

Li D, Wang Y, Liu S, Shan F (2009) Fractional programming methodology for multi-attribute group decision-making using IFS. Appl Soft Comput 9(1):219–225

Li D (2010) TOPSIS-based nonlinear-programming methodology for multiattribute decision making with interval-valued intuitionistic fuzzy sets. IEEE Trans Fuzzy Syst 18(2):299–311

Liu F, Mendel JM (2008) Aggregation using the fuzzy weighted average as computed by the Karnik–Mendel algorithms. IEEE Trans Fuzzy Syst 16(1):1–12

Liu X, Mendel JM, Wu D (2012) Analytical solution methods for the fuzzy weighted average. Inf Sci 187:151–170

Liu X, Wang Y (2013) An analytical solution method for the generalized fuzzy weighted average problem. Int J Uncertain Fuzziness Knowl Based Syst 21(3):455–480

Mendel JM (2001) Uncertain rule-based fuzzy logic system: introduction and new directions. Prentice-Hall, NJ

Mendel JM, Wu D (2010) Perceptual computing: aiding people in making subjective judgments, vol 13. Wiley, New York

Mendel JM (2007a) Advances in type-2 fuzzy sets and systems. Inf Sci 177:84–110

Mendel JM (2007b) Type-2 fuzzy sets and systems: an overview. IEEE Comput Intell Mag 2(1):20–29

Mendel JM, Liu F (2007) Super-exponential convergence of the Karnik–Mendel algorithms for computing the centroid of an interval type-2 fuzzy set. IEEE Trans Fuzzy Syst 15(2):309–320

Mendel JM, Zadeh LA, Lotfi A, Trillas E, Yager RR, Lawry J, Hagras H, Guadarrama S (2010) What computing with words means to me. IEEE Comput Intell Mag 5(1):20–26

Mendel JM (2013) On KM algorithms for solving type-2 fuzzy set problems. IEEE Trans Fuzzy Syst 21(3):426–446

Miller S, Gongora M, Garibaldi J, John R (2012) Interval type-2 fuzzy modelling and stochastic search for real-world inventory management. Soft Comput 16(8):1447–1459

Robinson PJ, Amirtharaj ECH (2011) Extended TOPSIS with correlation coefficient of triangular intuitionistic fuzzy sets for multiple attribute group decision making. Int J Decis Support Syst Technol 3(3):15–41

Tan C (2011) A multi-criteria interval-valued intuitionistic fuzzy group decision making with Choquet integral-based TOPSIS. Exp Syst Appl 38(4):3023–3033

Triantaphyllou E, Lin C (1996) Development and evaluation of five fuzzy multiattribute decision-making methods. Int J Approx Reason 14(4):281–310

Wagner C, Hagras H (2010) Toward general type-2 fuzzy logic systems based on zslices. IEEE Trans Fuzzy Syst 18(4):637–660

Wang Y, Elhag TM (2006) Fuzzy TOPSIS method based on alpha level sets with an application to bridge risk assessment. Exp Syst Appl 31(2):309–319

Wang Y, Lee H (2007) Generalizing TOPSIS for fuzzy multiple-criteria group decision-making. Comput Math Appl 53(11):1762–1772

Wang W, Liu X, Qin Y (2012) Multi-attribute group decision making models under interval type-2 fuzzy environment. Knowl Based Syst 30:121–128

Wu D, Tan WW (2006) Genetic learning and performance evaluation of interval type-2 fuzzy logic controllers. Eng Appl Artif Intell 19(8):829–841

Wu D, Mendel JM (2007) Aggregation using the linguistic weighted average and interval type-2 fuzzy sets. IEEE Trans Fuzzy Syst 15(6):1145–1161

Wu D (2012) On the fundamental differences between interval type-2 and type-1 fuzzy logic controllers. IEEE Trans Fuzzy Syst 20(5):832–848

Xu Z (2010) A method based on distance measure for interval-valued intuitionistic fuzzy group decision making. Inf Sci 180(1):181–190

Zadeh LA (1965) Fuzzy sets. Inf Control 8(3):338–353

Zadeh LA (1975) The concept of a linguistic variable and its applications to approximate reasoning, part I. Inf Sci 8(3):199–249

Zhou SM, John RI, Chiclana F, Garibaldi JM (2010) On aggregating uncertain information by type-2 OWA operators for soft decision making. Int J Intell Syst 25(6):540–558

Zhou SM, Chiclana F, John RI, Garibaldi JM (2011) Alpha-level aggregation: a practical approach to type-1 OWA operation for aggregating uncertain information with applications to breast cancer treatments. IEEE Trans Knowl Data Eng 23(10):1455–1468

Acknowledgments

The authors are very grateful to the Associate Editor and the anonymous reviewers for their constructive comments and suggestions, which helped improve the paper. This work was supported by the National Natural Science Foundation of China (NSFC) (71171048 and 71371049) and the Research Fund for the Doctoral Program of Higher Education of China (20120092110038).

Additional information

Communicated by V. Loia.

Appendices

Appendix A: Proof of Theorem 1

Proof

Let

where \(y\) is a continuous variable, and \(y \in (0,1]\).

For Eq. (54), the derivative of function \(\rho (y)\) to \(y\) can be written as:

It is obvious that \(\rho (y)\) is a monotonically decreasing function with respect to \(y\).

Let \(y \equiv \widetilde{\widetilde{\mathrm{RC}}}\), substitute Eq. (11) into Eq. (54), and get

From Eq. (15), the derivative of function \(f(\tilde{\tilde{x}})\) with \(\tilde{\tilde{x}} \in (0,1]\) is written as:

It is concluded that \(f(\tilde{\tilde{x}})\) is a decreasing function with variable \(\tilde{\tilde{x}}\).

From the conclusions of Eqs. (55), (56), it is deduced that when \(\tilde{\tilde{x}}\) lies in the left region \(\tilde{x}^L\) and reaches its minimum (maximum) value, \(\tilde{f}^L(\tilde{x}^L)\) reaches its maximum (minimum) value, and \(\widetilde{\mathrm{RC}}^L(\tilde{x}^L)\) reaches its minimum (maximum) value; when \(\tilde{\tilde{x}}\) lies in the right region \(\tilde{x}^R\) and reaches its minimum (maximum) value, \(\tilde{f}^R(\tilde{x}^R)\) reaches its maximum (minimum) value, and \(\widetilde{\mathrm{RC}}^R(\tilde{x}^R)\) reaches its minimum (maximum) value.

The proof of Theorem 1 is completed. \(\square \)
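The monotonicity used in this proof can be checked directly under one assumption, namely that the relative closeness and the distance ratio \(f\) are linked by \(\mathrm{RC}=1/(1+\sqrt{f})\), which is the form appearing in Eqs. (50) and (51). The following is a sketch under that assumption and is not a reconstruction of Eqs. (54)–(56).

$$\begin{aligned} \mathrm{RC}=\frac{1}{1+\sqrt{f}} \;\Longrightarrow \; f=\left( \frac{1}{\mathrm{RC}}-1\right) ^2, \qquad \frac{\mathrm{d}f}{\mathrm{d}\,\mathrm{RC}}=-\frac{2(1-\mathrm{RC})}{\mathrm{RC}^3}\le 0 \quad \text{for } \mathrm{RC}\in (0,1]. \end{aligned}$$

So a larger ratio \(f\) corresponds to a smaller relative closeness, and vice versa. If in addition \(f=\sum (w_i(x_i-1))^2/\sum (w_i x_i)^2\) with \(x_i \in (0,1]\), then increasing any \(x_i\) decreases the numerator and increases the denominator, so \(f\) decreases and \(\mathrm{RC}\) increases.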

Appendix B: Proof of Theorem 2

Proof

For simplification, we denote \(a_{il}(\alpha )\), \(\tilde{c}_i(\alpha )\), \(c_{il}(\alpha )\), \(c_{ir}(\alpha )\), \(\tilde{d}_i(\alpha )\), \(d_{il}(\alpha )\), \(d_{ir}(\alpha )\) as \(a_{il}\), \(\tilde{c}_i\), \(c_{il}\), \(c_{ir}\), \(\tilde{d}_i\), \(d_{il}\), \(d_{ir}\), respectively.

- (1) Let

$$\begin{aligned}&g_\mathrm{Ll}(\tilde{c},\tilde{d})\equiv \frac{\sum \limits _{i=1}^{k_\mathrm{Ll}} \left( \tilde{d}_i (a_{il}-1)\right) ^2+\sum \limits _{i=k_\mathrm{Ll}+1}^n \left( \tilde{c}_i (a_{il}-1)\right) ^2}{\sum \limits _{i=1}^{k_\mathrm{Ll}} \left( \tilde{d}_i a_{il}\right) ^2+\sum \limits _{i=k_\mathrm{Ll}+1}^n \left( \tilde{c}_i a_{il}\right) ^2}, \end{aligned}$$ (57)

where \(a_{il}\) is in increasing order, \(\tilde{c}_i\equiv [\tilde{c}_{k_\mathrm{Ll}+1,l}, \tilde{c}_{k_\mathrm{Ll}+2,l}, \ldots , \tilde{c}_{k_n,l}]^T\), \(\tilde{d}_i\equiv [\tilde{d}_1, \tilde{d}_2, \ldots , \tilde{d}_{k_\mathrm{Ll}}]^T\), \(\tilde{c}_i \in [c_{il}, c_{ir}]\) and \(\tilde{d}_i \in [d_{il}, d_{ir}]\).

Correspondingly, Eq. (26) can be rewritten as:

In Eq. (24), it is concluded that there exists \(g_\mathrm{Ll}(\tilde{c}, \tilde{d})=f_\mathrm{Ll}(\alpha )\) satisfying

Next, it is proved that \(g_\mathrm{Ll}(\tilde{c}, \tilde{d})\) reaches its minimum only on condition that Eq. (57) holds.

- (a) When \(i \le k_\mathrm{Ll}\) and \(a_{k_\mathrm{Ll},l} \le g_\mathrm{Ll}(\tilde{c}, \tilde{d})\), according to Eq. (57), the derivative of \(g_\mathrm{Ll}(\tilde{c}, \tilde{d})\) with respect to \(d_i\) can be expressed as:

$$\begin{aligned} \frac{\partial g_\mathrm{Ll}(\tilde{c}, \tilde{d})}{\partial d_i}&= \frac{2d_{ir}\left( (a_{k_{il}}-1)^2-(a_{k_{il}})^2 g_\mathrm{Ll}(\tilde{c}, \tilde{d})\right) }{\sum \limits _{i=1}^{k_\mathrm{Ll}} \left( d_{ir} a_{il}\right) ^2+\sum \limits _{i=k_\mathrm{Ll}+1}^n \left( c_{il} a_{il}\right) ^2} \\&\le \frac{2d_{ir}\left( 1-\left( \frac{a_{il}a_{il}-a_{il}}{a_{il}a_{k_\mathrm{Ll},l}-a_{k_\mathrm{Ll},l}}\right) ^2\right) }{\sum \limits _{i=1}^{k_\mathrm{Ll}} \left( d_{ir} a_{il}\right) ^2+\sum \limits _{i=k_\mathrm{Ll}+1}^n \left( c_{il}a_{il}\right) ^2}. \end{aligned}$$

As \(a_{il}\) is in increasing order and \(i < k_\mathrm{Ll}\), it is concluded that \(a_{il} \le a_{k_\mathrm{Ll},l}\), and \(a_{il}a_{il}-a_{il} \ge a_{il}a_{k_\mathrm{Ll},l}-a_{k_\mathrm{Ll},l}\), that is, \(1-\left( \frac{a_{il}a_{il}-a_{il}}{a_{il}a_{k_\mathrm{Ll},l}-a_{k_\mathrm{Ll},l}}\right) \le 0\). Therefore, when \(k_{il} < k_\mathrm{Ll}\), the derivative satisfies \(\frac{\partial g_\mathrm{Ll}(\tilde{c}, \tilde{d})}{\partial d_i} \le 0\). In other words, \(g_\mathrm{Ll}(\tilde{c}, \tilde{d})\) decreases when \(d_i\) \((i \le k_\mathrm{Ll})\) increases. Hence, the computation of the minimal \(g_\mathrm{Ll}(\tilde{c}, \tilde{d})\) must use the maximal \(d_i\) \((i \le k_\mathrm{Ll})\), as stated in Eq. (30).

- (b) When \(i > k_\mathrm{Ll}\) and \(g_\mathrm{Ll}(\tilde{c}, \tilde{d}) \le a_{k_\mathrm{Ll}+1,l}\), according to Eq. (57), the derivative of \(g_\mathrm{Ll}(\tilde{c}, \tilde{d})\) with respect to \(c_i\) can be written as:

$$\begin{aligned} \frac{\partial g_\mathrm{Ll}(\tilde{c}, \tilde{d})}{\partial c_i}&= \frac{2c_{ir}\left( (a_{k_{il}}-1)^2-(a_{k_{il}})^2 g_\mathrm{Ll}(\tilde{c}, \tilde{d})\right) }{\sum \limits _{i=1}^{k_\mathrm{Ll}} \left( c_{ir} a_{il}\right) ^2+\sum \limits _{i=k_\mathrm{Ll}+1}^n \left( c_{il} a_{il}\right) ^2} \\&\ge \frac{2c_{ir}\left( 1-\left( \frac{a_{il}a_{il}-a_{il}}{a_{il}a_{k_\mathrm{Ll},l}-a_{k_\mathrm{Ll},l}}\right) ^2\right) }{\sum \limits _{i=1}^{k_\mathrm{Ll}} \left( d_{ir} a_{il}\right) ^2+\sum \limits _{i=k_\mathrm{Ll}+1}^n \left( c_{il}a_{il}\right) ^2}. \end{aligned}$$

As \(a_{k_{il}}\) is in increasing order and \(k_{il} > k_\mathrm{Ll}\), it is concluded that \(a_{k_{il}}(\alpha _j) \ge a_{k_\mathrm{Ll}}\), and \(a_{il}a_{il}-a_{il} \le a_{il}a_{k_\mathrm{Ll},l}-a_{k_\mathrm{Ll},l}\), that is, \(1-\left( \frac{a_{il}a_{il}-a_{il}}{a_{il}a_{k_\mathrm{Ll},l}-a_{k_\mathrm{Ll},l}}\right) \ge 0\).

Therefore, when \(i > k_\mathrm{Ll}\), the derivative satisfies \(\frac{\partial g_\mathrm{Ll}(\tilde{c}, \tilde{d})}{\partial c_i} \ge 0\). In other words, \(g_\mathrm{Ll}(\tilde{c}, \tilde{d})\) increases when \(c_i\) \((i > k_\mathrm{Ll})\) increases. Hence, the computation of the minimal \(g_\mathrm{Ll}(\tilde{c}, \tilde{d})\) must use the minimal \(c_i\) \((i > k_\mathrm{Ll})\), as stated in Eq. (30).

Hence,

This completes the proof of Theorem 2 (1).

As the proofs of Theorem 2 (2–4) are similar to that of Theorem 2 (1), they are omitted here.

This completes the proof of Theorem 2. \(\square \)
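The structural claim of Theorem 2, that the extreme value of the ratio is reached with every weight at an endpoint of its interval and a single switch index, can be checked by brute force on small instances. The sketch below does this for the maximization case over all \(2^n\) endpoint combinations. It assumes the ratio form of Eq. (57), uses illustrative function names, and takes the \(\alpha =0\) values of the Step 4 intervals as test data.

```python
from itertools import product


def ratio(x, w):
    """f = sum((w_i (x_i - 1))^2) / sum((w_i x_i)^2), the assumed form of Eq. (57)."""
    num = sum((wi * (xi - 1.0)) ** 2 for xi, wi in zip(x, w))
    den = sum((wi * xi) ** 2 for xi, wi in zip(x, w))
    return num / den


def best_corner(x, w_low, w_high):
    """Maximise f over all 2^n endpoint choices of the weight intervals."""
    return max(ratio(x, w) for w in product(*zip(w_low, w_high)))


def best_switch(x, w_low, w_high):
    """Maximise f over the n + 1 switch-point patterns (x sorted increasingly)."""
    n = len(x)
    return max(ratio(x, list(w_high[:k]) + list(w_low[k:])) for k in range(n + 1))


# Alpha = 0 endpoints of the Step 4 intervals for alternative A_1.
x = [0.57, 0.77, 0.77, 0.77]
w_low = [0.83, 0.83, 0.43, 0.77]
w_high = [1.0, 1.0, 0.83, 1.0]
print(best_corner(x, w_low, w_high), best_switch(x, w_low, w_high))
```

Only interval endpoints are searched here, which is sufficient because the ratio is monotone in each weight once the others are fixed. The minimization case is analogous with the roles of the lower and upper weights exchanged.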

Appendix C: Proof of Theorem 3

Proof

For simplification, we denote \(a_{il}(\alpha )\), \(\tilde{c}_i(\alpha )\), \(c_{il}(\alpha )\), \(c_{ir}(\alpha )\), \(\tilde{d}_i(\alpha )\), \(d_{il}(\alpha )\), \(d_{ir}(\alpha )\) as \(a_{il}\), \(\tilde{c}_i\), \(c_{il}\), \(c_{ir}\), \(\tilde{d}_i\), \(d_{il}\), \(d_{ir}\), respectively.

- (1) The proof of Theorem 3 (1).

For Eq. (30), when \(k=1,2,\ldots ,n-1\), it holds that

Hence, the difference of \(f_\mathrm{Ll}(k+1)-f_\mathrm{Ll}(k)\) can be obtained as Eq. (58).

As \(c_{il} \in [0,1]\), \(d_{ir} \in [0,1]\) and \(d^2_{k_\mathrm{Ll}+l}>c^2_{k_\mathrm{Ll}+l}\), the sign of \(f_\mathrm{Ll}(k+1)-f_\mathrm{Ll}(k)\) is determined by the sign of

To simplify the notation in the rest of the proof, \(d_\mathrm{Ll}(k)\) is defined as Eq. (59).

As \(a_{1l} \le a_{2l} \le \cdots \le a_{nl}\), the difference of \(d_\mathrm{Ll}(k)-d_\mathrm{Ll}(k-1)\) can be computed as Eq. (60).

Consider the term \((a_{k_\mathrm{Ll}+1,l}-a_{k_\mathrm{Ll},l})(2a_{k_\mathrm{Ll}+1,l}a_{k_\mathrm{Ll},l}-a_{k_\mathrm{Ll}+1,l}- a_{k_\mathrm{Ll},l})(d^2_{k_\mathrm{Ll}+1,l}+c^2_{k_\mathrm{Ll}+1,l})\). Since \(a_{il}\) is in increasing order, \(a_{k_\mathrm{Ll}+1,l}-a_{k_\mathrm{Ll}}>0\). Moreover, since \(a_{il} \in [0,1]\) for all \(i=1,2,\ldots ,n\), it follows that \(a^2_{il} \le a_{il}\).

Hence, it is easy to prove that

Combining these conclusions, it holds that \((a_{k_\mathrm{Ll}+1,l}-a_{k_\mathrm{Ll}})(2a_{k_\mathrm{Ll}+1,l}a_{k_\mathrm{Ll}}-a_{k_\mathrm{Ll}+1,l}- a_{k_\mathrm{Ll}})(d^2_{k_\mathrm{Ll}+1,r}+c^2_{k_\mathrm{Ll}+1,l})\le 0\).

Meanwhile, it follows that

Since \(0 \le a_{k_\mathrm{Ll}} <a_{k_\mathrm{Ll}+1,l}\le 1\), it follows that \(0 \le \frac{a_{k_\mathrm{Ll}+1,l}+a_{il}}{2} \le 1\) and \(1-\frac{a_{k_\mathrm{Ll}+1,l}+a_{il}}{2} \ge 0\).

Hence, \((2a_{il}-1)(a_{k_\mathrm{Ll}+1,l}-a_{il})-2a^2_{il} \le 0\), that is \(((2a_{il}-1)(a_{k_\mathrm{Ll}+1,l}-a_{il})-2a^2_{il})c^2_{il} \le 0\).

Correspondingly, it can easily be seen that

and

Coupled with the conclusions proved above, it can easily be shown that

That is, \(d_\mathrm{Ll}(k)\) is a decreasing function with respect to \(k\).

Combined with Eq. (60), Eq. (58) can also be rewritten as:

where

Since

Since \(d_\mathrm{Ll} (k)\) is decreasing in \(k\), there must exist \(k=k_\mathrm{Ll}\) \((k_\mathrm{Ll}=1,2,\ldots ,n-1)\), such that for any \(k=0,1,\ldots ,k_\mathrm{Ll}-1\), \(d_\mathrm{Ll}(k)\ge 0\), and for any \(k=k_\mathrm{Ll},k_\mathrm{Ll}+1,\ldots ,n-1\), \(d_\mathrm{Ll}(k)< 0\).

In Eq. (61), it follows that for any \(k=0,1,\ldots ,k_\mathrm{Ll}-1\), \(f_\mathrm{Ll}(k+1)-f_\mathrm{Ll}(k) \ge 0\), and for any \(k=k_\mathrm{Ll},k_\mathrm{Ll}+1,\ldots ,n-1\), \(f_\mathrm{Ll}(k+1)-f_\mathrm{Ll}(k)< 0\). So, \(f_\mathrm{Ll}(k_\mathrm{Ll})\) is the global maximum, with \(k_\mathrm{Ll}=k^*\).

This completes the proof of Theorem 3 (1).

As the proofs of Theorem 3 (2–4) are similar to that of Theorem 3 (1), they are omitted here.

This completes the proof of Theorem 3. \(\square \)

Cite this article

Sang, X., Liu, X. An analytical solution to the TOPSIS model with interval type-2 fuzzy sets. Soft Comput 20, 1213–1230 (2016). https://doi.org/10.1007/s00500-014-1584-2
