Abstract

A number of statistical downscaling methodologies have been introduced to bridge the gap in scale between the outputs of climate models and the climate information needed to assess potential impacts at local and regional scales. Four statistical downscaling methods [bias-correction/spatial disaggregation (BCSD), bias-correction/constructed analogue (BCCA), multivariate adaptive constructed analogs (MACA), and bias-correction/climate imprint (BCCI)] are applied to downscale the latest climate forecast system reanalysis (CFSR) data to stations for precipitation, maximum temperature, and minimum temperature over South Korea. All methods are calibrated with observational station data for the 19 years from 1973 to 1991 and validated for the more recent 19-year period from 1992 to 2010. We construct a comprehensive suite of performance metrics to inter-compare the methods, comprising five criteria related to time series, distribution, multi-day persistence, extremes, and spatial structure. Based on these performance metrics, we employ the technique for order of preference by similarity to ideal solution (TOPSIS) and apply 10,000 different weighting combinations to the criteria to identify a robust statistical downscaling method and the important criteria. The results show that MACA and BCSD have comparable skill in the time-series related criterion, and BCSD outperforms the other methods in the distribution and extremes related criteria. In addition, MACA and BCCA, which incorporate spatial patterns, show higher skill in the multi-day persistence criterion for temperature, while BCSD shows the highest skill for precipitation. For the spatial structure related criterion, BCCA and MACA outperform BCSD and BCCI. From the TOPSIS analysis, we found that MACA is the most robust method for all variables in South Korea, and that BCCA and BCSD rank second for temperature and precipitation, respectively.
We also found that the contributions of the multi-day persistence and spatial structure related criteria are crucial to ranking the skill of statistical downscaling methods.

1 Introduction

Global climate models (GCMs) are a primary tool not only in providing insight into global climate responses to natural and anthropogenic changes, but also in assessing potential impacts of climate change on local hydrologic systems and water resources (Christensen and Lettenmaier 2007; Kim et al. 2007; Liu et al. 2015; Hay et al. 2014). Most climate change impact studies require fine-resolution input data sufficient to model and simulate climate-relevant problems at regional or local scales, whereas GCMs provide climate information at a coarse resolution, in general on ~1° to 2° grids (Olsson et al. 2001; Dibike and Coulibaly 2006; Fowler et al. 2007). In addition, simulated outputs from climate models (global and regional) have shown systematic biases with respect to observational data sets (Mearns et al. 2012; Sillmann et al. 2013) mainly due to unresolved sub-grid scale processes (Cherubini et al. 2002), physical parameterizations (Jenkins and Lowe 2003; Mizuta et al. 2006), and cascading errors from boundary forcing in regional climate models (Deque et al. 2007; Christensen et al. 2007).

To provide relevant climate information at the scales needed to assess local and regional impacts, downscaling techniques are often employed (Wilby et al. 1998; Haylock et al. 2006; Hidalgo et al. 2008; Abatzoglou and Brown 2011; Stoner et al. 2013). Of the two main categories of downscaling (dynamical and statistical), many prior studies have applied statistical downscaling methods, which have advantages in computational efficiency and in their ability to reproduce essential statistics of observed regional climate data (Bardossy et al. 2005; Haylock et al. 2006; Eum and Simonovic 2012).

Recently, model output statistics (MOS)-based methods such as bias-correction/spatial disaggregation (BCSD, Wood et al. 2004), bias-correction/constructed analogue (BCCA, Maurer et al. 2010), multivariate adaptive constructed analogs (MACA, Abatzoglou and Brown 2011), bias-correction/stochastic analog (BCSA, Hwang and Graham 2013), and bias-correction/climate imprint (BCCI, Hunter and Meentemeyer 2005) have been actively applied to downscale GCM outputs to local scales. Given the plethora of techniques, inter-comparison studies have been conducted to identify more robust downscaling methods with regard to extremes (Segui et al. 2010; Goodess et al. 2012; Thrasher et al. 2012; Bürger et al. 2013; Werner and Cannon 2016), wildfire applications (Abatzoglou and Brown 2011), and water resources assessment (Eum et al. 2010; Chen et al. 2011; Gutmann et al. 2014; Rana and Moradkhani 2016; Mizukami et al. 2016). We selected four of these methods, (1) BCSD, (2) BCCA, (3) MACA, and (4) BCCI, because they have been widely used in the natural hazards, hydrology, and water sectors, for example in water resources planning (Brown et al. 2012; Miller et al. 2013), integrated water management (Hanson et al. 2014), climate change impact studies (Salathé et al. 2007; Brekke et al. 2009; Werner et al. 2013), and wildfire warning systems (Abatzoglou and Brown 2011). In addition, the selected techniques have been well tested and documented (Gutmann et al. 2014), and downscaled data archives are available for the US (at http://gdo-dcp.ucllnl.org/downscaled_cmip_projections/dcpInterface.html#Limitations, and http://climate.northwestknowledge.net/MACA/downloadTools.php) and Canada (http://tools.pacificclimate.org/dataportal/downscaled_gcms/map/). This study applied the four statistical downscaling methods to South Korea and assessed the characteristics of each method using a suite of evaluation metrics.

Formulating precise and informative evaluation metrics is a prerequisite for accurately quantifying statistical robustness and performance in statistical downscaling inter-comparison studies. From a review of 466 recent journal articles, Hayhoe (2010) grouped performance metrics into five categories: (1) climatological biases, (2) correlation, (3) variance, (4) extremes, and (5) persistence. At the same time, a standardized evaluation framework for intercomparison studies was suggested to provide pertinent, transparent, and independent statistical and physical tests across three categories: (1) mean values and trends; (2) thresholds, exceedance probabilities, and quantiles; and (3) multi-day persistence. An evaluation framework working group of scientists and practitioners in National Climate Predictions & Projections (NCPP) (refer to https://earthsystemcog.org/projects/downscaling-2013/metrics for more details) also suggested an expanded evaluation framework, built from three groups that proposed evaluation metrics related to uniform comparison, application-specific comparison, and process-related issues, respectively. In particular, the first group proposed time-series and distribution related metrics, temporal structure, and spatial correlation, while the second group suggested indices related to accumulated parameters such as cold and dry spells and heat waves. The third group suggested process-related indices, such as monsoon indices and weather typing of extreme events. In accordance with the NCPP evaluation framework for uniform comparison, Murdock et al. (2013) evaluated statistical downscaling methods using three diagnostics: sequencing of events, distribution of values, and spatial structure. Bürger et al. (2013) intercompared multiple methods in terms of their ability to reproduce the climate extremes indices recommended by the World Meteorological Organization’s Expert Team on Climate Change Detection and Indices (ETCCDI) (Zhang et al. 2011).
Integrating the performance metrics and diagnostics employed in prior studies, we suggest a suite of evaluation metrics grouped into five categories: (1) time-series related, (2) distribution related, (3) multi-day persistence, (4) extremes, and (5) spatial structure. More details are given in the methodology section. We use the climate forecast system reanalysis (CFSR) version 2 (Saha et al. 2010) for training and validation, which places the focus on the skill of the methods rather than on the fidelity of one or more climate models (Wilby et al. 2000; Fasbender and Ouarda 2010; Nicholas and Battisti 2012).

In prior studies, downscaling methods showed different levels of skill for different performance metrics, which makes selecting the most skillful method overall difficult and potentially ambiguous. As a branch of multi-criteria decision making (MCDM), the technique for order of preference by similarity to ideal solution (TOPSIS), originally developed by Hwang and Yoon (1981), provides a tool to rank alternatives that are simultaneously far from the worst solution and close to the best condition (Triantaphyllou 2000). TOPSIS is a compensatory procedure that compares a set of alternatives by calculating the geometric distance of each alternative from an ideal solution, under the assumption that each criterion is monotonically increasing or decreasing (Garvey 2008). It is therefore easy both to define the best and worst solutions and to apply the method and order the alternatives by preference (Ozturk and Batuk 2011; Chu 2002). TOPSIS has been successfully applied to water sectors in South Korea, for instance, to identify hydrologically vulnerable locations (Chung and Lee 2009; Jun et al. 2013) and alternatives for watershed management (Kim and Chung 2014). Using TOPSIS, we present an approach to rank multiple statistical downscaling methods based on the full evaluation framework suggested in this study. The resulting ranking may help end-users select the most suitable method from an available inventory.

Therefore, the main objectives of this study are to: (1) develop and apply the four statistical downscaling methods in South Korea, (2) intercompare the skill of each method on the five performance criteria, and (3) rank the methods using TOPSIS.

2 Study area and climate data sets

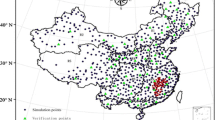

We developed and applied the four statistical downscaling methods over South Korea (~100,210 km²), a country with complex topography that comprises the southern portion of the Korean peninsula and islands (approximately 3000 km²) (refer to Fig. 1a). Because the spatial resolution of GCMs is too coarse to describe regional climate characteristics over South Korea, downscaling plays a crucial role in regional applications.

For historical validation, we selected the CFSR data set at 1.0 degree grid spacing over the period from 1979 to the present (Saha et al. 2010). The latest CFSR is a coupled (i.e. atmosphere, ocean, land, and sea ice) reanalysis system with an interactive sea ice model, assimilation of satellite radiance data for the entire period, and relatively high horizontal and vertical resolutions. We downscaled daily precipitation (PRCP), maximum temperature (TMAX) and minimum temperature (TMIN) from CFSR by the four statistical downscaling methods to the observational station network.

In South Korea, the Automatic Weather Station (AWS) and Automated Synoptic Observing System (ASOS) networks provide observational climate data with an inter-station spacing of approximately 12 km (refer to Figs. 1b, 2). ASOS provides long-term climate data (more than 30 years), whereas AWS stations have only operated since 2000 and thus provide a short data set of only ~10 to 15 years. In general, a reliable long-term observed data set is a prerequisite for statistical downscaling models that reflect the climate variability of the historical period. In this study, we selected 60 stations (Fig. 2) that provide nine observational climate variables (PRCP, TMAX, TMIN, average air temperature, dew point temperature, average and maximum wind speed, and average and minimum humidity) with few missing values for the common period from 1973 to 2010. Although leave-one-year-out cross validation over the 38 years would avoid the drawback of a short validation record, it can provide overly optimistic estimates of skill because of year-to-year persistence related to low-frequency climate variability such as the El Niño Southern Oscillation (ENSO). Therefore, we used a split-sample validation approach, with the first 19 years for calibration and the most recent 19 years for validation.

3 Methodology

3.1 Simple spatial interpolation (SSI)

As the simplest downscaling method, simple spatial interpolation (SSI) is conducted to produce downscaled values at each station by the inverse distance technique (Lapen and Hayhoe 2003). Therefore, downscaled values preserve bias of the climate model, which varies with levels of topographical complexity at each station. This means that the skill of SSI is entirely dependent on the skill of the climate model. Consequently, we can quantitatively evaluate effects of statistical downscaling methods by measuring improvement in downscaling skill relative to SSI.
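The inverse distance step that SSI relies on (and that BCSD and BCCI reuse for spatial disaggregation) can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `idw_interpolate`, the distance exponent `power=2`, and the use of planar (projected) coordinates instead of great-circle distances are all assumptions.

```python
import numpy as np

def idw_interpolate(grid_xy, grid_values, station_xy, power=2.0):
    """Hypothetical inverse-distance-weighted interpolation from grid to stations.

    grid_xy:     (n_grid, 2) planar coordinates of coarse grid-cell centres
    grid_values: (n_grid,) coarse-grid values for one day
    station_xy:  (n_sta, 2) planar station coordinates
    power:       distance exponent (assumed; not stated in the text)
    """
    grid_xy = np.asarray(grid_xy, dtype=float)
    grid_values = np.asarray(grid_values, dtype=float)
    station_xy = np.asarray(station_xy, dtype=float)
    # Pairwise station-to-grid distances, shape (n_sta, n_grid)
    d = np.linalg.norm(station_xy[:, None, :] - grid_xy[None, :, :], axis=-1)
    out = np.empty(len(station_xy))
    for k, dk in enumerate(d):
        hit = dk == 0.0
        if hit.any():
            # Station coincides with a grid point: use that value directly
            out[k] = grid_values[hit][0]
        else:
            w = 1.0 / dk**power
            out[k] = np.sum(w * grid_values) / np.sum(w)
    return out
```

Because the weights depend only on geometry, they could be computed once and reused for every day of the record; the output inherits whatever bias the coarse field carries, which is why SSI serves as the no-correction baseline.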

3.2 Daily bias-correction/spatial disaggregation (BCSD)

BCSD was originally developed to downscale GCM output to provide input data for a macro-scale process-based hydrologic model (Wood et al. 2004). Traditionally, BCSD downscales at a monthly time step and temporally disaggregates to a daily time step by randomly selecting daily sequences from the historical data set (Maurer and Hidalgo 2008; Werner et al. 2013). The use of BCSD has been extended to directly downscale at daily scale (Abatzoglou and Brown 2011; Thrasher et al. 2012). We employ daily BCSD for the intercomparison of statistical downscaling methods in this study. Because daily BCSD performs spatial disaggregation and bias-correction with daily coarse-resolution climate data, this method does not require temporal disaggregation. As a result, it maintains the daily spatial and temporal structure of the coarse-resolution data. Therefore, the skill of the coarse-resolution historical data plays an important role in the performance of daily BCSD.

Daily BCSD first spatially disaggregates coarse-resolution data to a finer resolution by an inverse distance interpolation scheme (Lapen and Hayhoe 2003), and then applies bias-correction by a quantile mapping algorithm that equates the empirical cumulative distribution functions (CDFs) of observed and modeled data (\(F_{o}\) and \(F_{m}\), respectively),

$$\hat{x}_{m}(t) = F_{o}^{-1}\left[F_{m}\left(x_{m}(t)\right)\right] \qquad (1)$$

where \(\hat{x}_{m}(t)\) and \(x_{m}(t)\) are the bias-corrected and modeled data at time t, respectively. Note that in this study the CDFs \(F_{o}\) and \(F_{m}\) are formulated from observed and modeled data during the historical period. Although the majority of model outputs can be corrected by Eq. (1), some extreme values may fall outside the range of the historical data. For these cases, the CDFs can be estimated by parametric distribution functions and the corresponding values extrapolated (Wood et al. 2004): Gumbel distributions are employed for high precipitation events, whereas a normal distribution is employed for temperature. To formulate the sample CDFs, we use observed and modeled daily data within 15-day moving windows centered on each calendar day.
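A minimal sketch of the quantile-mapping step of Eq. (1) with a 15-day moving window, assuming NumPy. The helper names `window_mask` and `quantile_map`, the 101-point quantile grid, and the clamping of out-of-range values (in place of the Gumbel/normal extrapolation described above) are simplifications chosen for illustration. Ties, such as the many zero-precipitation days in a PRCP record, would need dedicated handling that this sketch omits.

```python
import numpy as np

def window_mask(doy, center, half_width=7):
    """True for days whose day-of-year lies within +/- half_width of `center`.

    Uses circular distance on a 365-day year so the window wraps around the
    year boundary; half_width=7 gives a 15-day window.
    """
    diff = np.abs(np.asarray(doy) - center)
    diff = np.minimum(diff, 365 - diff)
    return diff <= half_width

def quantile_map(x_model, model_sample, obs_sample, n_quantiles=101):
    """Empirical quantile mapping, x_hat = F_o^{-1}(F_m(x)).

    Both empirical CDFs are represented by matching quantiles; values between
    quantiles are linearly interpolated. Values outside the calibration range
    are clamped to the end quantiles by np.interp (no parametric extrapolation).
    """
    probs = np.linspace(0.0, 1.0, n_quantiles)
    model_q = np.quantile(np.asarray(model_sample, dtype=float), probs)
    obs_q = np.quantile(np.asarray(obs_sample, dtype=float), probs)
    return np.interp(x_model, model_q, obs_q)
```

For a calendar day `d`, one would select calibration samples with `window_mask(doy_cal, d)` for both the observed and modeled series and then map that day's modeled value; repeating this for all 365 days gives a seasonally varying correction.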

3.3 Bias-correction/climate imprint (BCCI)

A spatial climate imprint, which represents the correspondence between the coarse and fine resolutions, is obtained from the mean value at each grid point over the calibration period from 1973 to 1991. The fine-resolution historical data are aggregated to the CFSR spatial resolution prior to bias correction by quantile mapping. For PRCP, ratios of the daily model output to the mean values at the coarse resolution are calculated and then spatially interpolated to the fine (station-based) resolution; the interpolated ratios are multiplied by the mean values at each station to obtain downscaled values [Eq. (2)]. For TMAX and TMIN, differences between the daily model output and the long-term mean values are used instead of ratios [Eq. (3)]. We have

$$P_{\text{downscaled}} = R_{\text{interpolated}} \times P_{\text{ave}} \qquad (2)$$

$$T_{\text{downscaled}} = D_{\text{interpolated}} + T_{\text{ave}} \qquad (3)$$

where \(P_{\text{downscaled}}\) is downscaled PRCP, \(R_{\text{interpolated}}\) is the spatially interpolated ratio at each station, \(P_{\text{ave}}\) is the long-term mean precipitation at each station, \(T_{\text{downscaled}}\) is downscaled TMAX or TMIN, \(D_{\text{interpolated}}\) is the spatially interpolated difference at each station, and \(T_{\text{ave}}\) is the long-term mean temperature at each station. As a post-process, BCCI again applies quantile mapping at the fine scale, as in daily BCSD.
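The climate-imprint step (ratios for PRCP, differences for temperature) can be sketched as below, before the final fine-scale quantile mapping. This is an illustrative sketch under stated assumptions: `idw_weights`, `bcci_precip`, and `bcci_temp` are hypothetical names, the weights are inverse-distance with exponent 2 in planar coordinates, no station is assumed to coincide with a grid point, and dry grid cells with zero climatological mean are given a ratio of zero.

```python
import numpy as np

def idw_weights(grid_xy, station_xy, power=2.0):
    """Row-normalised inverse-distance weights, shape (n_sta, n_grid).
    Assumes no station coincides exactly with a grid point."""
    d = np.linalg.norm(np.asarray(station_xy, float)[:, None, :]
                       - np.asarray(grid_xy, float)[None, :, :], axis=-1)
    w = 1.0 / d**power
    return w / w.sum(axis=1, keepdims=True)

def bcci_precip(coarse_day, coarse_mean, station_mean, weights):
    """Ratio to the coarse climatology, interpolated, times station mean."""
    coarse_day = np.asarray(coarse_day, float)
    coarse_mean = np.asarray(coarse_mean, float)
    # Ratio at coarse resolution; zero where the climatological mean is zero
    ratio = np.divide(coarse_day, coarse_mean,
                      out=np.zeros_like(coarse_day), where=coarse_mean > 0)
    return (weights @ ratio) * np.asarray(station_mean, float)

def bcci_temp(coarse_day, coarse_mean, station_mean, weights):
    """Anomaly from the coarse climatology, interpolated, plus station mean."""
    diff = np.asarray(coarse_day, float) - np.asarray(coarse_mean, float)
    return (weights @ diff) + np.asarray(station_mean, float)
```

Using a multiplicative imprint for precipitation keeps downscaled values non-negative, while the additive form for temperature preserves daily anomalies; both then pass through the same quantile mapping as daily BCSD.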

3.4 Bias correction/constructed analogue (BCCA)

A primary assumption of analogue downscaling methods is that weather patterns in the historical record can be used to represent those in the future (Lorenz 1969; van den Dool 1994). By exploiting the relationship between coarse- and fine-scale historical weather patterns, useful coarse-scale analogues can be constructed for a specified GCM weather event and used for downscaling (Timbal et al. 2003; van den Dool et al. 2003; Diez et al. 2005). Because a linear combination of multiple patterns, rather than a single analogue (Fernández and Sáenz 2003), provides added value in forecasts (van den Dool et al. 2003), a best-fit analogue is constructed as a linear combination of multiple coarse-resolution historical weather patterns (called the library) that match the bias-corrected GCM weather pattern on a given day. The library is formed by aggregating the fine-resolution historical data to the climate model resolution. The constructed analogue weights at the coarse resolution are then applied to the fine-resolution weather patterns on the same days to produce downscaled outputs. Because Maurer and Hidalgo (2008) found that the greatest skill was obtained by using precipitation and temperature as predictors, the library in this study is composed of precipitation and temperature patterns. Bias-correction is applied to the coarse-resolution GCM data using quantile mapping, and, following Maurer et al. (2010), absolute values rather than anomalies are used to identify analogues in BCCA.

Mathematically, the target pattern (\(T_{m}\)) is expressed, as shown in Eq. (4), by the regression coefficient matrix (\(A_{\text{analogue}}\)) and the analogue patterns (\(C_{\text{analogue}}\)), which consist of the best 10 days selected based on the root mean square error (RMSE) between the target pattern and the historical weather patterns in the library during the calibration period.

$$T_{m} = C_{\text{analogue}}\, A_{\text{analogue}} \qquad (4)$$

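Applying the Moore–Penrose inverse to \(C_{\text{analogue}}\), \(A_{\text{analogue}}\) can be obtained by Eq. (5).

$$A_{\text{analogue}} = \left(C_{\text{analogue}}^{\mathsf{T}} C_{\text{analogue}}\right)^{-1} C_{\text{analogue}}^{\mathsf{T}}\, T_{m} \qquad (5)$$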

For precipitation, the matrix to be inverted in Eq. (5) is often near-singular, for example when the domain is dominated by dry grid cells. In these cases, we therefore employ the ridge regression technique (Tikhonov et al. 1995) with a small penalty. The same regression coefficient matrix (\(A_{\text{analogue}}\)) is applied to the fine-resolution weather patterns on the days that make up the constructed analogue (\(C_{\text{analogue}}\)) at the coarse resolution. That is, the downscaled values (\(V_{\text{downscaled}}\)) are obtained by Eq. (6).

$$V_{\text{downscaled}} = V_{\text{analogue}}\, A_{\text{analogue}} \qquad (6)$$

where \(V_{\text{analogue}}\) is the fine-resolution analogue, i.e. the spatial patterns of the climate variables over the 60 stations on the selected analogue days.
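The constructed-analogue procedure (select the 10 lowest-RMSE library days, solve for the weights, apply them at station scale) can be sketched for a single day as follows. This is a hypothetical illustration, not the authors' implementation: the function name, the array layout (one row per library day), and the default penalty `ridge=1e-3` are assumptions. Setting `ridge=0.0` uses the Moore–Penrose pseudo-inverse; a positive value applies the ridge (Tikhonov) form used for near-singular precipitation cases.

```python
import numpy as np

def constructed_analogue(target, lib_coarse, lib_fine, n_analogues=10, ridge=1e-3):
    """Hypothetical BCCA-style constructed analogue for one day.

    target:     (n_grid,) bias-corrected coarse pattern for the day (T_m)
    lib_coarse: (n_days, n_grid) coarse historical patterns (the library)
    lib_fine:   (n_days, n_sta) station patterns on the same days
    ridge:      Tikhonov penalty; 0.0 falls back to the pseudo-inverse
    """
    target = np.asarray(target, float)
    lib_coarse = np.asarray(lib_coarse, float)
    lib_fine = np.asarray(lib_fine, float)
    # 1. Pick the library days with the lowest RMSE to the target pattern
    rmse = np.sqrt(np.mean((lib_coarse - target) ** 2, axis=1))
    best = np.argsort(rmse)[:n_analogues]
    C = lib_coarse[best].T                       # (n_grid, k): C_analogue
    # 2. Solve T_m = C A for the analogue weights A
    if ridge > 0.0:
        k = C.shape[1]
        A = np.linalg.solve(C.T @ C + ridge * np.eye(k), C.T @ target)
    else:
        A = np.linalg.pinv(C) @ target           # Moore-Penrose solution
    # 3. Apply the same weights to the station patterns (V_analogue)
    V = lib_fine[best].T                         # (n_sta, k)
    return V @ A                                 # V_downscaled
```

MACA would follow the same steps with additional variables stacked into the coarse and fine patterns (for example, standardised before stacking so that no variable dominates the RMSE), which is the extension described in the next subsection.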

3.5 Multivariate adaptive constructed analogs (MACA)

Mathematically, MACA follows the same procedure as BCCA except that it incorporates additional variables into the analogues at both coarse and fine resolutions. An epoch adjustment that removes and re-introduces the difference of mean values between the current and future time slices (e.g. 1990s and 2050s) in the original MACA methodology (Abatzoglou and Brown 2011) is skipped in this study because we downscale the CFSR data set over a relatively stationary historical period. After analyzing correlations between variables (Table 1), MACA is conducted for PRCP with minimum humidity and for TMAX and TMIN with average temperature to improve coherence in spatial weather patterns on a given day. Thus, we can evaluate the added-value of MACA, which introduces more variables into the constructed analogues, relative to BCCA.

3.6 Assessment of downscaling skill

The downscaled daily PRCP, TMAX, and TMIN from CFSR for the validation period from 1992 to 2010 are assessed using the five criteria, which comprise 23 ETCCDI indices (refer to Table 2 for details) and spatial correlations for PRCP, TMAX, and TMIN, as presented in Table 3. In Table 3, the 23 ETCCDI indices are evaluated quantitatively by the Euclidean distances of the errors in mean and standard deviation between observed and downscaled (simulated) indices at the 60 ASOS stations. All metrics are normalized by Eq. (7), and the Euclidean distances are then evaluated by Eq. (8).

where \(\mu_{E}\) and \(\sigma_{E}\) are the normalized errors of the mean and standard deviation, subscripts s and o denote simulated and observed values, respectively, and D is the Euclidean distance of the errors.
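A sketch of how such an error distance might be computed for one index at one station. The exact normalization of Eq. (7) is not reproduced here, so this example assumes, hypothetically, that both errors are scaled by the observed standard deviation, and that D combines them as \(\sqrt{\mu_{E}^{2} + \sigma_{E}^{2}}\). The function name `error_distance` is also illustrative.

```python
import numpy as np

def error_distance(sim, obs):
    """Hypothetical form of the normalized-error Euclidean distance.

    sim, obs: annual series of one ETCCDI index over the validation period.
    ASSUMPTION: errors are scaled by the observed standard deviation.
    """
    sim = np.asarray(sim, float)
    obs = np.asarray(obs, float)
    mu_o, sd_o = obs.mean(), obs.std(ddof=1)
    mu_e = (sim.mean() - mu_o) / sd_o            # normalized error of the mean
    sd_e = (sim.std(ddof=1) - sd_o) / sd_o       # normalized error of the std
    return np.hypot(mu_e, sd_e)                  # Euclidean distance D
```

A perfect reproduction of both mean and variability gives D = 0; a constant offset of one observed standard deviation gives D = 1. Per-station distances would then be averaged over the 60 stations.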

The first metric measures the ability to reproduce time-series related indicators, for instance annual precipitation, trends in annual averages, and the annual numbers of summer and frost days. Among them, τ is the Mann–Kendall trend test statistic, which measures the strength and direction of the monotonic trend of each variable. The second metric measures the ability to reproduce the distribution of values, estimated by the Kolmogorov–Smirnov (K–S) D statistic, the supremum of the distances between the empirical distributions of the observed and downscaled data. This study evaluates the K–S D for each month, and the average of the RMSEs over all months is used as the evaluation metric. The third metric measures the ability to reproduce multi-day persistence, using consecutive dry days (CDD) and consecutive wet days (CWD) for precipitation, the warm spell duration index (WSDI) for TMAX, and the cold spell duration index (CSDI) for TMIN. The fourth metric measures the ability to reproduce extremes, i.e. maximum and minimum values and threshold exceedances. The fifth metric measures the ability to reproduce observed spatial patterns, quantified by the RMSE of spatial correlations between the observed and downscaled data sets. In other words, we calculated correlations between stations for the observed and downscaled data sets individually (1830 cases for 60 stations in this study), and then computed the RMSE from the differences between the observed and downscaled correlations. In this way, we compared RMSEs for all statistical downscaling methods. As for the second metric, the RMSEs of spatial pattern are evaluated for every month and the average value is used.
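The fifth (spatial structure) metric can be sketched as follows, assuming NumPy and daily series arranged as (days, stations). The function names are hypothetical. `np.triu_indices(..., k=1)` excludes the diagonal, giving 60 × 59 / 2 = 1770 distinct station pairs for 60 stations; the count of 1830 quoted above equals 61 × 60 / 2, i.e. it would also include the 60 diagonal entries, so the pair selection here is an assumption.

```python
import numpy as np

def spatial_corr_rmse(obs, sim):
    """RMSE between observed and simulated inter-station correlations.

    obs, sim: (n_days, n_sta) daily series; columns are stations.
    """
    c_obs = np.corrcoef(obs, rowvar=False)
    c_sim = np.corrcoef(sim, rowvar=False)
    iu = np.triu_indices(c_obs.shape[0], k=1)    # distinct station pairs only
    return np.sqrt(np.mean((c_obs[iu] - c_sim[iu]) ** 2))

def monthly_spatial_corr_rmse(obs, sim, month):
    """Evaluate the RMSE separately for each calendar month, then average.

    month: (n_days,) integer month (1-12) of each row.
    """
    vals = [spatial_corr_rmse(obs[month == m], sim[month == m])
            for m in range(1, 13)]
    return float(np.mean(vals))
```

Because correlation is insensitive to a positive linear rescaling at each station, this metric isolates spatial coherence from the bias that the distribution-related criterion already captures.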

3.7 Technique for order of preference by similarity to ideal solution (TOPSIS)

A goal of this study is to identify the most suitable technique for South Korea among the four statistical downscaling methods considered. The use of multiple performance criteria means that methods may perform well on some criteria but not others, potentially leading to ambiguity when making a final recommendation. We therefore introduce TOPSIS, a systematic process for determining suitable alternatives to a multi-criteria problem. Because of its simplicity, TOPSIS has been applied to a range of problems, including business model comparison (Zhou et al. 2012) and watershed management (Chung and Lee 2009; Jun et al. 2013; Lee et al. 2013). TOPSIS starts by creating an evaluation matrix \((x_{ij})_{m \times n}\) for the ith alternative and jth criterion. The weighted normalized decision matrix \((t_{ij})_{m \times n}\) is then given by

$$t_{ij} = w_{j}\, n_{ij} \qquad (9)$$

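where \(n_{ij}\) is the matrix normalized from \(x_{ij}\) by vector normalization [Eq. (10)], and \(w_{j}\) is the weight on the jth criterion (Hwang and Yoon 1981). The weights reflect the preferences of decision makers, i.e. the relative importance of the criteria under consideration. However, assigning the weights objectively is not straightforward and is open to debate, because it depends strongly on the aims of a particular task. Without assuming a particular distribution of the weights, we therefore relied on the law of large numbers to determine a sufficient number of weighting combinations and generated 10,000 combinations of weights on the five criteria, including equal weighting (= 1/n). Using 10,000 combinations ensures that the mean weight for each criterion is within 0.01 of the population mean at the 95 % confidence level. Determining the worst (\(A_{w}\)) and best (\(A_{b}\)) conditions [Eqs. (11) and (12)] is then necessary to calculate the Euclidean distances of each target alternative i from the worst and best conditions, \(d_{iw}\) and \(d_{ib}\), using Eqs. (13) and (14), respectively. For each weighting combination, the statistical downscaling methods are then ranked to identify a robust method among the four.

$$n_{ij} = \frac{x_{ij}}{\sqrt{\sum_{i=1}^{m} x_{ij}^{2}}} \qquad (10)$$

$$A_{w} = \{t_{w1}, \ldots, t_{wn}\}, \quad t_{wj} = \begin{cases} \max_{i} t_{ij}, & j \in J^{-} \\ \min_{i} t_{ij}, & j \in J^{+} \end{cases} \qquad (11)$$

$$A_{b} = \{t_{b1}, \ldots, t_{bn}\}, \quad t_{bj} = \begin{cases} \min_{i} t_{ij}, & j \in J^{-} \\ \max_{i} t_{ij}, & j \in J^{+} \end{cases} \qquad (12)$$

$$d_{iw} = \sqrt{\sum_{j=1}^{n} \left(t_{ij} - t_{wj}\right)^{2}} \qquad (13)$$

$$d_{ib} = \sqrt{\sum_{j=1}^{n} \left(t_{ij} - t_{bj}\right)^{2}} \qquad (14)$$

where \(J^{+}\) and \(J^{-}\) denote the sets of criteria with positive (benefit) and negative (cost) impact, respectively.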
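The sampling of weighting combinations can be sketched as follows. The text does not state how the 10,000 combinations were drawn, so this example assumes, hypothetically, uniform sampling on the simplex via a Dirichlet(1, …, 1) distribution with a fixed seed, and overwrites the first row with equal weights. For five criteria each Dirichlet component has variance 4/150, i.e. a standard deviation of about 0.163, so the 95 % half-width of the sample mean is roughly 1.96 × 0.163 / 100 ≈ 0.003, below the 0.01 tolerance.

```python
import numpy as np

rng = np.random.default_rng(42)          # seed chosen arbitrarily
n_criteria, n_samples = 5, 10_000

# ASSUMPTION: weights drawn uniformly on the simplex (each row sums to one)
weights = rng.dirichlet(np.ones(n_criteria), size=n_samples)
weights[0] = 1.0 / n_criteria            # include the equal-weighting case

# 95 % half-width of the sample mean weight for each criterion
half_width = 1.96 * weights.std(axis=0, ddof=1) / np.sqrt(n_samples)
print(half_width.max())
```

Each row of `weights` would then be passed to the TOPSIS closeness calculation, and the resulting rankings aggregated over all 10,000 rows.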

For the five performance criteria (n = 5) and the four downscaling methods (m = 4) in this study, for example, \((x_{ij})_{4 \times 5}\) is evaluated and the weighted normalized matrix \((t_{ij})\) is then obtained. Based on the rescaled metrics \((t_{ij})\) and the characteristics of each performance metric, the worst and best conditions (\(A_{w}\) and \(A_{b}\)) are determined. In this study, all performance criteria are in \(J^{-}\), i.e. lower values indicate better performance. For instance, \(t_{w1}\) and \(t_{b1}\) are the maximum and minimum values of the time-series related criterion among the four downscaling methods, respectively. The Euclidean distances of each downscaling method from the worst and best conditions are then calculated using Eqs. (13) and (14).

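As a final step in TOPSIS, we rank all alternatives based on the similarity [Eq. (15)], which can be used to determine suitable alternatives or to exclude the worst alternatives by introducing a cut-off threshold.

$$s_{iw} = \frac{d_{iw}}{d_{iw} + d_{ib}}, \qquad 0 \le s_{iw} \le 1 \qquad (15)$$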

When \(s_{iw} = 1\), the alternative is equal to the best condition, and \(s_{iw} = 0\) when the alternative is equal to the worst condition. That is, the statistical downscaling method with \(s_{iw}\) nearest to 1 is the most suitable and robust method, and vice versa.
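The TOPSIS steps for the case used here, where every criterion is an error measure (all in \(J^{-}\)), can be sketched as below. This is a minimal illustration rather than the authors' code; `topsis_closeness` and `rank_frequency` are hypothetical names, and the sketch returns NaN if all alternatives are identical (both distances zero).

```python
import numpy as np

def topsis_closeness(x, w):
    """TOPSIS similarity s_iw when all criteria are costs (lower is better).

    x: (m, n) evaluation matrix (alternatives x criteria); w: (n,) weights.
    """
    x = np.asarray(x, float)
    n = x / np.sqrt((x ** 2).sum(axis=0))        # vector normalisation, Eq. (10)
    t = n * np.asarray(w, float)                 # weighted matrix, Eq. (9)
    a_w = t.max(axis=0)                          # worst: largest error, Eq. (11)
    a_b = t.min(axis=0)                          # best: smallest error, Eq. (12)
    d_w = np.sqrt(((t - a_w) ** 2).sum(axis=1))  # distance from worst, Eq. (13)
    d_b = np.sqrt(((t - a_b) ** 2).sum(axis=1))  # distance from best, Eq. (14)
    return d_w / (d_w + d_b)                     # similarity, Eq. (15)

def rank_frequency(x, weights):
    """Count how often each alternative ranks first over many weight vectors."""
    x = np.asarray(x, float)
    firsts = np.zeros(x.shape[0], dtype=int)
    for w in weights:
        firsts[np.argmax(topsis_closeness(x, w))] += 1
    return firsts
```

With a (4 × 5) matrix of criterion scores for BCSD, BCCI, BCCA, and MACA and a (10,000 × 5) array of weights, `rank_frequency` would summarise how robustly each method ranks first across weighting choices.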

4 Results and discussion

4.1 Time-series related criterion

Performance evaluation of the four statistical downscaling methods for all variables is shown in Table 4, where all values are averaged over the 60 ASOS stations. The performance of SSI in SDII, the simple daily precipitation intensity index, is the poorest, which may be related to a drizzling bias in the CFSR precipitation fields, i.e. CFSR generates very small amounts of precipitation on many days (Demirel and Moradkhani 2016). The skill for the ID (icing days) indicator is very low for all methods, although the four statistical downscaling methods improve on SSI. Interestingly, the skill in reproducing trends for PRCP, TMAX, and TMIN is quite good for all methods, even SSI, because the CFSR reanalysis data provide high skill in replicating day-to-day weather in the region. Overall, BCSD provides the highest skill for PRCP and TMAX, while MACA does so for TMIN. However, the difference in evaluation scores between BCSD and MACA is small for most indicators.
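For reference, the ETCCDI SDII indicator is the total precipitation on wet days (daily PRCP ≥ 1 mm) divided by the number of wet days. A minimal sketch for one year of daily data, with a hypothetical function name and 0.0 returned for a year with no wet days (a convention chosen here for illustration):

```python
import numpy as np

def sdii(prcp, wet_threshold=1.0):
    """ETCCDI SDII: mean daily precipitation on wet days (PRCP >= 1 mm)."""
    prcp = np.asarray(prcp, float)
    wet = prcp >= wet_threshold
    return float(prcp[wet].mean()) if wet.any() else 0.0
```

Because the index averages only over wet days, a data set that spreads rainfall over many light-rain days lowers SDII, which is consistent with the drizzling bias discussed above.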

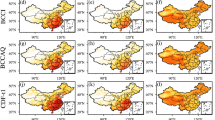

PRCPTOT (annual precipitation) is often used to categorize climatologically wet and dry areas. Figure 3 displays spatial patterns of mean PRCPTOT during the validation period (1992–2010) for observations and all methods. SSI underestimates PRCPTOT over South Korea, while the four statistical downscaling methods show good agreement with the observations, in particular in the southeast. However, the statistical downscaling methods underestimate PRCPTOT in the central and northern areas. In addition, the time series of spatially averaged indices in Fig. 4 show that the statistical downscaling methods improve the skill in reproducing time-series related indicators compared with SSI. In particular, SSI strongly underestimates SDII, SU, and FD, mainly due to the drizzling effect in CFSR, a cold bias in TMAX, and a warm bias in TMIN, respectively. The biases in TMAX and TMIN may result from the coarse grid size, with grid cells that include both ocean and land.

4.2 Distribution related criterion

The skill of the statistical downscaling methods in reproducing the distributions of observed daily climate data is evaluated by the K–S D statistic, defined as the maximum distance between the empirical cumulative distribution functions (CDFs) of the observed and downscaled climate data sets. A lower value of K–S D therefore represents better performance on this criterion.
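The two-sample K–S D statistic can be computed directly from the two empirical CDFs, as in this minimal NumPy sketch (the function name `ks_d` is illustrative). The result should match the statistic returned by SciPy's `scipy.stats.ks_2samp`.

```python
import numpy as np

def ks_d(a, b):
    """Two-sample K-S D: maximum vertical distance between empirical CDFs."""
    a = np.sort(np.asarray(a, float))
    b = np.sort(np.asarray(b, float))
    grid = np.concatenate([a, b])                # all jump points of both CDFs
    cdf_a = np.searchsorted(a, grid, side="right") / len(a)
    cdf_b = np.searchsorted(b, grid, side="right") / len(b)
    return float(np.max(np.abs(cdf_a - cdf_b)))
```

Evaluating the maximum only at the pooled sample values is sufficient because both empirical CDFs are step functions that change only at those points.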

Seasonal K–S D values are shown in Table 5. As for the time-series related criterion, all values are K–S D values averaged over the 60 stations. Notably, the four downscaling methods considerably improve K–S D values for all variables compared with SSI, by 67 % for TMAX, 55 % for TMIN, and 88 % for PRCP. Interestingly, K–S D values for TMAX and TMIN are poorest in winter among the four seasons, which suggests that CFSR lacks skill in capturing hot and cold extreme events during winter. As expected, SSI has the lowest skill in simulating summer precipitation, and the K–S D statistic is improved by the statistical downscaling methods. BCSD, which combines spatial disaggregation with quantile mapping bias-correction, outperforms the other methods in reproducing the distribution of station data for all variables and seasons. Consistent with the main types of statistical downscaling algorithms described in the methodology section, the spatial patterns of K–S D statistics from BCSD and BCCI are similar, as are those from BCCA and MACA (not shown here).

4.3 Multi-day persistence related criterion

Four indicators related to the multi-day persistence criterion are evaluated for TMAX, TMIN, and PRCP. Table 6 presents the Euclidean distances of errors between observed and simulated values for each downscaling method, spatially averaged over all stations. In general, MACA and BCCA, which incorporate spatial patterns, show higher skill for TMAX and TMIN than BCSD and BCCI, which employ spatial disaggregation. In particular, MACA and BCCA outperform the others for CSDI, which represents the skill in reproducing spells of at least 6 consecutive extreme cold days, i.e. days on which TMIN is below its 10th percentile. Prolonged cold extremes of TMIN (i.e. lasting 6 days or more) are normally affected both by surface conditions and by large-scale forcing, such as cold waves derived from large-scale circulation patterns (Christensen et al. 2007). Downscaling methods employing spatial patterns may therefore provide better skill for long-lasting cold events as well as warm events. For PRCP, on the contrary, BCSD shows the highest skill in reproducing consecutive dry and wet days.
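The persistence indicators reduce to run-length counts on daily boolean series. The sketch below is a simplified illustration with hypothetical function names: CDD/CWD use the ETCCDI 1 mm wet-day threshold, and the spell-duration function counts days in runs of at least 6 days. It expects a precomputed exceedance mask (e.g. `tmin < p10` for CSDI); the ETCCDI calendar-day percentiles from a base period and the handling of spells that cross year boundaries are not reproduced here.

```python
import numpy as np

def longest_run(mask):
    """Length of the longest run of consecutive True values."""
    best = run = 0
    for flag in mask:
        run = run + 1 if flag else 0
        best = max(best, run)
    return best

def cdd_cwd(prcp, wet_threshold=1.0):
    """ETCCDI-style CDD and CWD: longest dry (< 1 mm) and wet (>= 1 mm) spells."""
    wet = np.asarray(prcp, float) >= wet_threshold
    return longest_run(~wet), longest_run(wet)

def spell_duration_index(mask, min_len=6):
    """Days in runs of at least `min_len` consecutive True values.

    For CSDI, mask would be TMIN below its 10th percentile; for WSDI,
    TMAX above its 90th percentile (percentile calculation not shown).
    """
    total = run = 0
    for flag in list(mask) + [False]:            # sentinel closes the final run
        if flag:
            run += 1
        else:
            if run >= min_len:
                total += run
            run = 0
    return total
```

Because only runs of six or more days count, a method that breaks a long cold spell into shorter pieces can lose all of those days from CSDI, which helps explain why spatially coherent analogue methods fare better on this indicator.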

Figure 5 shows the time series of the four indicators spatially averaged over the validation period. Consistent with Table 6, SSI and BCSD perform poorly for CSDI, overestimating it from 1998 to 2001. In addition, the sequencing of WSDI and CSDI is very similar for SSI and BCSD, which indicates that BCSD may depend strongly on the skill of the climate model in reproducing long-lasting warm and cold events. For the precipitation indicators, SSI shows much better skill for CDD than for CWD, which indicates that CFSR overpredicts wet days (refer to CWD in Fig. 5) because of the drizzling effect in the CFSR precipitation data, while CFSR may provide reliable CDD. However, the four statistical downscaling methods properly bias-correct the sequencing of dry and wet days in the CFSR data through quantile mapping of daily data (Hwang and Graham 2013).

4.4 Extremes related criterion

The extremes related criterion measures how well the statistical downscaling methods simulate extreme events. Table 7 shows the evaluation metrics for TMAX, TMIN, and PRCP. In general, the performance of the statistical downscaling methods follows that of SSI, indicating that downscaling performance may be highly dependent on the skill of CFSR. We also found that CFSR reproduces observed PRCP extremes well, with the lowest Euclidean distance of errors on average. Despite this high baseline, performance is considerably improved by the statistical downscaling methods relative to SSI. Based on the average error distance over all indicators for each variable, BCSD and BCCI show the best performance for TMAX, PRCP, and TMIN, respectively. Overall, BCSD shows the highest skill in simulating extremes, which may be related to its performance on the distribution related criterion described above, as the maximum difference between distributions often occurs at the most extreme values.

In addition, time series of the spatially averaged extremes related indicators show that SSI has poor skill compared with the statistical downscaling methods. Figure 6 displays time series of indices related to TMAX (TXn, TXx, TX10p, and TX90p). SSI has a cold bias and underestimates these indices in most cases, mainly because the coarse CFSR grid, in which a single cell can include both ocean and land, cannot reflect regional topographic characteristics. Specifically, annual maximum values of TMAX (TXx) are underestimated by 2.7 °C on average for SSI, whereas the four downscaling methods remove this bias and substantially improve TXx. For the TMIN extreme indicators (Fig. 7), the performance of SSI is comparable to that of the downscaling methods. However, SSI has a warm bias in most cases, leading to an underestimation of FD and TNx and an overestimation of TNn and TN90p. By contrast, the four statistical downscaling methods show a cold bias in TNn, which results in larger error distances, as shown in Table 7. These results indicate that downscaling methods may introduce additional biases into climate data. Figure 8 shows time series of the precipitation related extreme indicators (Rx1 day, Rx5 day, R95pTOT, and R99pTOT). SSI underestimates Rx1 day and Rx5 day, likely because CFSR cannot capture topographic effects on precipitation. The total amounts of precipitation above a threshold (i.e. R95pTOT and R99pTOT) from SSI agree relatively well with observations, whereas the statistical downscaling methods overestimate Rx1 day, R95pTOT, and R99pTOT during years with high precipitation. These overestimates may result from extrapolation with extreme value distributions (e.g. Gumbel) for values outside the calibration range in the statistical downscaling methods, and such extrapolation may substantially distort climate events (Maraun et al. 2010; Maraun 2013). Nevertheless, the statistical downscaling methods considerably improve all indices in most cases.
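For reference, the precipitation extreme indices discussed above can be computed from a daily series as sketched below, following the ETCCDI definitions summarized by Zhang et al. (2011): Rx1day and Rx5day are the annual maximum 1-day and consecutive 5-day totals, and R95pTOT (R99pTOT) is the annual total on days exceeding the 95th (99th) percentile of wet-day (≥1 mm) precipitation in a base period. The helper names are hypothetical, and the example treats a short made-up series as one year.

```python
import numpy as np

# Sketch with hypothetical helper names (not the study's implementation).


def rx1day(pr):
    """Maximum 1-day precipitation in the series (one year)."""
    return float(np.max(pr))


def rx5day(pr):
    """Maximum precipitation total over any 5 consecutive days."""
    sums = np.convolve(np.asarray(pr, dtype=float), np.ones(5), mode="valid")
    return float(np.max(sums))


def wet_day_percentile(base_pr, q, wet_threshold=1.0):
    """q-th percentile of wet-day precipitation in the base (e.g. calibration) period."""
    base = np.asarray(base_pr, dtype=float)
    return float(np.percentile(base[base >= wet_threshold], q))


def total_above(pr, threshold):
    """R95pTOT/R99pTOT-style total: precipitation summed over days above the threshold."""
    pr = np.asarray(pr, dtype=float)
    return float(pr[pr > threshold].sum())


# Made-up 10-day series (mm/day) standing in for one year.
pr = [0.0, 2.0, 10.0, 30.0, 5.0, 0.0, 0.0, 50.0, 1.0, 0.0]
print(rx1day(pr), rx5day(pr))           # 50.0 85.0
print(total_above(pr, threshold=20.0))  # 80.0
```

In practice the threshold would come from `wet_day_percentile` applied to the observed calibration-period record, so downscaled values beyond the calibration range feed directly into R95pTOT and R99pTOT.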

Fig. 7 As in Fig. 6, except for TMIN

Fig. 8 As in Fig. 6, except for PRCP

4.5 Spatial structure related criterion

The last criterion measures the ability of the methods to reproduce the spatial correlation of each variable. This is important when simulating hydrologic responses for water resource management, because spatial precipitation distributions play a crucial role in simulating flood and drought events. Root mean square errors (RMSEs) of seasonal spatial correlations between the observed and downscaled data sets are shown in Table 8. For SSI, RMSEs during summer are higher than those during other seasons for all variables, which may be attributed to the localized nature of heat waves and heavy rainfall in summer. However, the downscaling methods considerably reduce the RMSEs. In particular, BCCA and MACA yield large improvements for all variables, up to 45 % on average by MACA for PRCP versus 8 % by BCCI. An important finding is that MACA improves the spatial structure of summer precipitation. As indicated above, summer precipitation is strongly affected by typhoons, which produce localized heavy rainfall under a monsoon climate, so its spatial structure over the study area is difficult to capture. Approximately 60 % of the annual precipitation occurs during summer, which has significant implications for water resources management because a balance must be kept between maintaining water supply during the drawdown period (spring, autumn, and winter) and flood prevention during summer (Eum et al. 2011).
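The spatial structure metric can be sketched as follows: compute the station-by-station Pearson correlation matrix for the observed and the downscaled daily series of one season, compare the unique station pairs, and take the RMSE of the differences. The percentage improvement is expressed here as the relative RMSE reduction with respect to a reference such as SSI, which is an assumption about how figures such as the 45 % above are defined. The function names and the synthetic data are hypothetical.

```python
import numpy as np

# Sketch with hypothetical helper names and synthetic data (not the study's values).


def pairwise_corr(data, include_diagonal=False):
    """Upper triangle of the station-by-station correlation matrix.

    data: array of shape (days, stations) for one season.
    """
    corr = np.corrcoef(np.asarray(data, dtype=float), rowvar=False)
    rows, cols = np.triu_indices(corr.shape[0], k=0 if include_diagonal else 1)
    return corr[rows, cols]


def corr_rmse(obs, sim):
    """RMSE between observed and simulated inter-station correlations."""
    diff = pairwise_corr(sim) - pairwise_corr(obs)
    return float(np.sqrt(np.mean(diff ** 2)))


def percent_reduction(rmse_reference, rmse_method):
    """Relative RMSE reduction (%) of a method versus a reference such as SSI."""
    return 100.0 * (rmse_reference - rmse_method) / rmse_reference


rng = np.random.default_rng(0)
obs = rng.normal(size=(92, 5))                     # synthetic: 92 summer days, 5 stations
sim = obs + rng.normal(scale=0.5, size=obs.shape)  # synthetic "downscaled" series
print(len(pairwise_corr(obs)))                     # 10 unique pairs for 5 stations
print(corr_rmse(obs, obs))                         # 0.0
print(percent_reduction(0.5, 0.25))                # 50.0
```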

Figure 9 displays quantile–quantile (Q–Q) plots of downscaled versus observed spatial correlations (1830 cases for 60 stations) for summer PRCP during the validation period. SSI and BCCI overestimate the spatial correlations of summer precipitation, with points lying above the diagonal, because of the intrinsic bias of CFSR that arises mainly from its coarse resolution, whereas MACA is concentrated near the 1:1 line. Overall, methods that incorporate spatial weather patterns (BCCA and MACA) better reproduce the spatial structure of PRCP, TMAX, and TMIN, whereas methods that rely on spatial interpolation schemes (SSI, BCSD, and BCCI) tend to overestimate spatial correlations, mainly because of the mismatch between the CFSR grid resolution and the station spacing.
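Reading a Q–Q plot of this kind amounts to sorting both sets of correlations and pairing them by rank; points above the 1:1 line mean the downscaled correlations are too high. The sketch below uses hypothetical names and made-up values. It also shows one way to arrive at 1830 cases for 60 stations: counting each unique station pair plus the 60 diagonal entries gives 60 × 61 / 2 = 1830, whereas unique off-diagonal pairs alone give 60 × 59 / 2 = 1770. Which convention the study used is an assumption here.

```python
import numpy as np

# Sketch with hypothetical helper names and made-up correlations.


def n_cases(n_stations, include_diagonal):
    """Number of entries in the upper triangle of an n x n correlation matrix."""
    if include_diagonal:
        return n_stations * (n_stations + 1) // 2
    return n_stations * (n_stations - 1) // 2


def qq_points(r_obs, r_sim):
    """Rank-paired quantiles: x = sorted observed, y = sorted downscaled correlations."""
    return np.sort(np.asarray(r_obs, dtype=float)), np.sort(np.asarray(r_sim, dtype=float))


def fraction_above_diagonal(r_obs, r_sim):
    """Share of Q-Q points with downscaled > observed, i.e. overestimated correlation."""
    x, y = qq_points(r_obs, r_sim)
    return float(np.mean(y > x))


print(n_cases(60, include_diagonal=True), n_cases(60, include_diagonal=False))  # 1830 1770
r_obs = [0.1, 0.2, 0.3, 0.4]
r_sim = [0.3, 0.5, 0.4, 0.6]
print(fraction_above_diagonal(r_obs, r_sim))  # 1.0
```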

4.6 Selecting a robust statistical downscaling method with TOPSIS

In the previous sections, each method showed different levels of skill for each performance metric. For example, BCSD outperformed the other methods in the distribution related criterion, while MACA did so in the spatial structure criterion. Selecting a preferred technique is straightforward when only a single metric is considered. In general, however, multiple criteria, such as those in the evaluation framework suggested in this study, should be considered, because accurate simulation of hydrologic and ecological responses depends on all of the aspects of skill considered here. Therefore, we use a systematic procedure based on the TOPSIS technique, with experiments that apply many combinations of weights to the five criteria, to identify a downscaling method that is robust across the range of criteria.
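A compact sketch of the TOPSIS procedure (Hwang and Yoon 1981) as it could be applied here, assuming vector normalization of each criterion and treating every criterion as a cost (an error measure, so smaller is better). The closeness coefficient D−/(D+ + D−) measures how much nearer an alternative lies to the ideal solution than to the anti-ideal one. The decision matrix is made up for illustration and is not taken from this study's tables.

```python
import numpy as np

# Sketch of standard TOPSIS under stated assumptions; data are hypothetical.


def topsis(matrix, weights, cost=None):
    """Return TOPSIS closeness coefficients (higher is better) for each row.

    matrix: alternatives x criteria; cost[j] is True when smaller is better.
    """
    x = np.asarray(matrix, dtype=float)
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()
    if cost is None:
        cost = np.ones(x.shape[1], dtype=bool)
    # 1) vector-normalize each criterion, 2) apply the weights
    v = x / np.sqrt((x ** 2).sum(axis=0)) * w
    # 3) ideal and anti-ideal solutions per criterion
    ideal = np.where(cost, v.min(axis=0), v.max(axis=0))
    anti = np.where(cost, v.max(axis=0), v.min(axis=0))
    # 4) Euclidean distances to both, 5) relative closeness to the ideal
    d_pos = np.sqrt(((v - ideal) ** 2).sum(axis=1))
    d_neg = np.sqrt(((v - anti) ** 2).sum(axis=1))
    return d_neg / (d_pos + d_neg)


methods = ["BCSD", "BCCI", "BCCA", "MACA"]
# Hypothetical error scores for the five criteria (time-series, distribution,
# multi-day persistence, extremes, spatial structure); lower is better.
errors = [
    [1.0, 2.0, 3.0, 2.0, 3.0],
    [3.0, 3.0, 4.0, 3.0, 4.0],
    [2.0, 2.0, 2.0, 3.0, 2.0],
    [1.0, 1.0, 1.0, 1.0, 1.0],
]
closeness = topsis(errors, weights=[0.2] * 5)
ranking = [methods[i] for i in np.argsort(-closeness)]
print(ranking[0], ranking[-1])  # MACA BCCI
```

An alternative that is best on every criterion sits exactly at the ideal solution and receives a closeness of 1; one that is worst on every criterion receives 0.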

Table 9 shows, for each of the five downscaling methods, the percentage of TOPSIS experiments in which it attained each rank, based on 10,000 different weighting combinations of the comprehensive performance metrics evaluated in this study. Bold numbers indicate the method with the highest chance of attaining each rank in this experiment. The ranking order of the statistical downscaling methods differs among variables. However, MACA ranks highest for all variables. BCCA ranks second for TMAX and TMIN, while BCSD ranks second for PRCP. These results imply that MACA and BCCA, which employ spatial climate patterns, can provide more reliable downscaled temperature information, while BCSD may provide reliable precipitation data with regard to distribution and extremes. This is plausible because temperature is dominated by large-scale forcing such as heat and cold waves, while precipitation is mainly affected by local effects such as topography and local convection (Christensen et al. 2007; Eum et al. 2016). Therefore, among the four statistical downscaling methods compared in this study, MACA is the most robust overall, followed by BCCA for temperature (TMAX and TMIN) and BCSD for PRCP. In addition, the auxiliary variables that MACA uses to improve the coherence of the analogues may contribute to its better performance in this experiment. Because MACA requires more variables as predictors, BCCA and BCSD can be considered the second alternatives for temperature and precipitation, respectively, when only TMAX, TMIN, and PRCP fields are available as predictors.
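The weighting experiment behind Table 9 can be sketched by repeatedly drawing a weight vector, running TOPSIS, and tallying how often each method lands at each rank. How the 10,000 weighting combinations were generated is not stated in this section; the sketch assumes weights drawn uniformly on the simplex with `numpy.random.Generator.dirichlet` and treats all criteria as costs. The decision matrix is made up, with one method best on criteria 1, 2, and 4 and another best on criteria 3 and 5.

```python
import numpy as np

# Sketch under an assumed weight-sampling scheme; data are hypothetical.


def topsis_cost(x, w):
    """TOPSIS closeness when every criterion is a cost (smaller is better)."""
    v = x / np.sqrt((x ** 2).sum(axis=0)) * (w / w.sum())
    d_pos = np.sqrt(((v - v.min(axis=0)) ** 2).sum(axis=1))
    d_neg = np.sqrt(((v - v.max(axis=0)) ** 2).sum(axis=1))
    return d_neg / (d_pos + d_neg)


def rank_percentages(matrix, n_samples=10_000, seed=0):
    """Percentage of weight samples in which alternative i attains rank k + 1."""
    x = np.asarray(matrix, dtype=float)
    m, n = x.shape
    rng = np.random.default_rng(seed)
    counts = np.zeros((m, m), dtype=int)
    for w in rng.dirichlet(np.ones(n), size=n_samples):
        order = np.argsort(-topsis_cost(x, w))  # order[k] = alternative ranked k + 1
        counts[order, np.arange(m)] += 1
    return 100.0 * counts / n_samples


methods = ["BCSD", "BCCI", "BCCA", "MACA"]
errors = [  # hypothetical error scores for the five criteria, lower is better
    [1.0, 1.0, 3.0, 1.0, 3.0],
    [3.0, 3.0, 4.0, 3.0, 4.0],
    [2.0, 2.0, 2.0, 2.0, 2.0],
    [1.5, 2.0, 1.0, 2.0, 1.0],
]
table = rank_percentages(errors)
for name, row in zip(methods, table):
    print(name, np.round(row, 1))  # BCCI is last in every sample for this toy matrix
```

Each row of `table` plays the role of one row of Table 9: the share of weightings under which a method is ranked first, second, and so on.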

We also examined which criteria play the most important role in the TOPSIS ranking. Although we tested the sensitivity of the ranking to changes in the weightings of all criteria, we present results only for the two criteria, 3 (multi-day persistence) and 5 (spatial structure), that contributed most prominently to the ranking of the statistical downscaling methods. Figure 10 shows the ranking of each method as the weightings on criteria 3 and 5 change. For PRCP, the contributions of the criteria to the ranking are more pronounced: the weighting of criterion 5 (spatial structure) plays the most crucial role in determining the ranking of the four statistical downscaling methods. When the weighting of criterion 5 is higher (>0.1), MACA and BCCA rank higher: MACA first and BCCA third. When criterion 5 is negligible, however, BCSD and BCCI are ranked first and third, respectively. For TMAX, the higher the weightings of criteria 3 and 5 (>0.2), the more distinct the ranking becomes, i.e. MACA is first, BCCA second, and BCSD third, mainly because MACA and BCCA take spatial patterns into account. At lower weightings, however, BCSD is ranked first in some cases. These results indicate that both criteria play a crucial role in ranking the four methods for TMAX. For TMIN, the ranking of MACA is insensitive to the weightings of all criteria, i.e. MACA is ranked first in most cases. As for TMAX, however, criteria 3 and 5 play an important role in determining the ranking of the other methods (BCCA, BCSD, and BCCI). When the weightings of criteria 3 and 5 are higher, BCCA has the highest chance of being the second alternative. In particular, the weighting of criterion 3 is more crucial for ranking BCSD relative to BCCI, because the performance of BCSD for CSDI differs substantially from that of the other methods (refer to Table 6).
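A sketch of this sensitivity analysis: vary the weights on criterion 3 (multi-day persistence) and criterion 5 (spatial structure) over a grid and record which method ranks first. The rule for the remaining weight is an assumption made here (it is split equally among criteria 1, 2, and 4), and the decision matrix is hypothetical, constructed so that one method is best on criteria 1, 2, and 4 and another on criteria 3 and 5.

```python
import numpy as np

# Sketch under an assumed weighting rule; hypothetical names and data.


def topsis_cost(x, w):
    """TOPSIS closeness when every criterion is a cost (smaller is better)."""
    v = x / np.sqrt((x ** 2).sum(axis=0)) * (w / w.sum())
    d_pos = np.sqrt(((v - v.min(axis=0)) ** 2).sum(axis=1))
    d_neg = np.sqrt(((v - v.max(axis=0)) ** 2).sum(axis=1))
    return d_neg / (d_pos + d_neg)


def top_method_grid(matrix, names, steps=10):
    """Map (i, j) -> top-ranked method for w3 = i / steps and w5 = j / steps."""
    x = np.asarray(matrix, dtype=float)
    result = {}
    for i in range(steps + 1):
        for j in range(steps + 1 - i):  # keep w3 + w5 <= 1
            w3, w5 = i / steps, j / steps
            # Assumed rule: the remaining weight is shared equally by criteria 1, 2, 4.
            rest = max(0.0, (1.0 - w3 - w5) / 3.0)
            w = np.array([rest, rest, w3, rest, w5])
            result[(i, j)] = names[int(np.argmax(topsis_cost(x, w)))]
    return result


names = ["BCSD", "BCCI", "BCCA", "MACA"]
errors = [  # hypothetical error scores for the five criteria, lower is better
    [1.0, 1.0, 3.0, 1.0, 3.0],
    [3.0, 3.0, 4.0, 3.0, 4.0],
    [2.0, 2.0, 2.0, 2.0, 2.0],
    [1.5, 2.0, 1.0, 2.0, 1.0],
]
grid = top_method_grid(errors, names)
print(grid[(0, 0)], grid[(5, 5)])  # BCSD MACA
```

With no weight on criteria 3 and 5 the method that is best on the other criteria wins; once those two criteria carry all the weight, the method that is best on persistence and spatial structure takes over, mirroring the kind of switch described for PRCP and TMAX.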

5 Conclusions

MOS-based statistical downscaling methods have been developed and successfully used to downscale GCM outputs to the local scale over South Korea. Four widely used methods (BCSD, BCCI, BCCA, and MACA), together with SSI as a surrogate downscaling scheme that measures the skill of CFSR itself, were evaluated with a framework consisting of time-series, distribution, multi-day persistence, extremes, and spatial structure related indicators. We downscaled PRCP, TMAX, and TMIN from CFSR to 60 ASOS stations over South Korea. Dividing the historical observational station data into two parts, we calibrated all statistical downscaling models with 19 years of data from 1973 to 1991 and validated them on the 19 years from 1992 to 2010. Based on the evaluation framework, we employed the TOPSIS technique with 10,000 weighting combinations to identify a robust method for the study area.

Regarding the time-series criterion, BCSD and MACA showed comparable skill for all variables. However, BCSD outperformed the other methods in reproducing the distributions of the variables. In addition, all methods showed less skill at higher-elevation stations, where extremes are more strongly influenced by orographic effects and complex topography. For the multi-day persistence criterion, MACA and BCCA, which incorporate spatial patterns, showed higher skill for TMAX and TMIN; in particular, both methods outperformed BCSD for CSDI, an indicator of the ability to reproduce consecutive cold extremes. This result implies that spatial patterns may play an important role in improving skill for long-lasting cold and warm temperature extremes. By contrast, BCSD showed the highest skill in reproducing consecutive dry and wet days. For the extremes related criterion, BCSD showed the highest skill in simulating extremes overall, which is related to its best performance in the distribution related criterion. Although the four statistical downscaling methods reduced the cold bias of SSI, they may introduce additional biases into climate data when the climate model already provides relatively accurate extreme indicators, mainly because of extrapolation for values outside the range of the calibration period. In terms of spatial structure, BCCA and MACA outperformed BCSD and BCCI, mainly because they incorporate spatial weather patterns into the downscaling process. In particular, MACA showed large gains in skill for summer precipitation, which is affected by localized heavy rainfall under a monsoon climate. Based on the TOPSIS analysis, MACA is the most reliable and robust method for all variables in South Korea, BCCA is second for TMAX and TMIN, and BCSD is second for PRCP.
We also found that the weightings on criterion 3 (multi-day persistence) and criterion 5 (spatial structure) are crucial to ranking the skill of statistical downscaling methods.

As one of the first downscaling intercomparison studies for South Korea, we downscaled CFSR data to a network of stations. However, climate variables downscaled to a gridded data set may be useful for distributed hydrologic models that require input data on a regular grid. Recently, gridded precipitation and temperature data have been generated at 1 and 5 km grid spacing for South Korea (Hong et al. 2007; Shin et al. 2008; Kim et al. 2014). A straightforward next step would be to repeat our analysis for these data sets. In addition, recent studies have focused on 21st century downscaled climate change projections based on the fifth phase of the Coupled Model Intercomparison Project (CMIP5) (Maloney et al. 2014). At the same time, studies have raised potentially serious issues with bias-correction techniques such as quantile mapping, which is used in BCSD, BCCI, BCCA, and MACA, with regard to preserving the long-term trends projected by the driving GCMs (Maraun 2013; Maurer and Pierce 2014). In response, various algorithms have been developed to preserve the long-term trends of climate projections, such as equidistant quantile matching (Li et al. 2010), the ISI-MIP approach (Hempel et al. 2013), detrended quantile mapping (Bürger et al. 2013), and quantile delta mapping (Cannon et al. 2015). By combining such trend-preserving bias correction techniques with the methods recommended in this study, we plan to downscale various CMIP5 projections for regional climate change impact studies in South Korea.

References

Abatzoglou JT, Brown TJ (2011) A comparison of statistical downscaling methods suited for wildfire applications. Int J Climatol 32:772–780. doi:10.1002/joc.2312

Bardossy A, Bogardi I, Matyasovszky I (2005) Fuzzy rule-based downscaling of precipitation. Theor Appl Climatol 82:119–129

Brekke LD, Kiang JE, Olsen JR, Pulwarty RS, Raff DA, Turnipseed DP, Webb RS, White KD (2009) Climate change and water resources management: a federal perspective: U.S. Geological Survey Circular 1331, 65 p

Brown C, Ghile Y, Laverty M, Li K (2012) Decision scaling: linking bottom-up vulnerability analysis with climate projections in the water sector. Water Resour Res 48:W09537

Bürger G, Murdock TQ, Werner AT, Sobie SR, Cannon AJ (2013) Downscaling extremes—an intercomparison of multiple statistical methods for present climate. J Clim 25(12):4366–4388

Cannon AJ, Sobie SR, Murdock TQ (2015) Bias correction of GCM precipitation by quantile mapping: how well do methods preserve changes in quantiles and extremes? J Clim 28(17):6938–6959. doi:10.1175/JCLI-D-14-00754.1

Chen J, Brissette FP, Leconte R (2011) Uncertainty of downscaling method in quantifying the impact of climate change on hydrology. J Hydrol 401:190–202

Cherubini T, Ghelli A, Lalaurette F (2002) Verification of precipitation forecasts over the Alpine region using a high-density observing network. Weather Forecast 17:238–249

Christensen N, Lettenmaier DP (2007) A multimodel ensemble approach to assessment of climate change impacts on the hydrology and water resources of the Colorado River basin. Hydrol Earth Syst Sci 11:1417–1434

Christensen JH, Carter TR, Rummukainen M, Amanatidis G (2007) Evaluating the performance and utility of regional climate models: the PRUDENCE project. Clim Change 81(Suppl 1):1–6

Chu TC (2002) Selecting plant location via a fuzzy TOPSIS approach. Int J Adv Manuf Technol 20(11):859–864

Chung ES, Lee GS (2009) Identification of spatial ranking of hydrological vulnerability using multi-criteria decision making techniques: case study of Korea. Water Resour Manag 23:2395–2416

Demirel MC, Moradkhani H (2016) Assessing the impact of CMIP5 climate multi-modeling on estimating the precipitation seasonality and timing. Clim Change 135:357–372

Deque M, Rowell DP, Luthi D, Giorgi F, Christensen JH, Rockel B, Jacob D, Kjellstrom E, Castro M, van den Hurk B (2007) An intercomparison of regional climate simulations for Europe: assessing uncertainties in model projections. Clim Change 81:53–70

Dibike YB, Coulibaly P (2006) Temporal neural networks for downscaling climate variability and extremes. Neural Netw 19:135–144

Diez E, Primo C, Garcia-Moya JA, Gutierrez JM, Orfila B (2005) Statistical and dynamical downscaling of precipitation over Spain from DEMETER seasonal forecasts. Tellus Ser A 57:409–423

Eum H-I, Simonovic SP (2012) Assessment on variability of extreme climate events for the Upper Thames River basin in Canada. Hydrol Process 26:485–499. doi:10.1002/hyp.8145

Eum H-I, Simonovic SP, Kim Y-O (2010) Climate change impact assessment using k-nearest neighbor weather generator: case study of the Nakdong River basin in Korea. J Hydrol Eng 15(10):772–785

Eum H-I, Kim Y-O, Palmer RN (2011) Optimal drought management using sampling stochastic dynamic programming with a hedging rule. J Water Resour Plan Manag 137(1):113–122

Eum H-I, Gachon P, Laprise R (2016) Impacts of model bias on the climate change signal and effects of weighted ensembles of regional climate model simulations: a case study over Southern Québec, Canada. Adv Meteorol 2016:1–17

Fasbender D, Ouarda TBMJ (2010) Spatial Bayesian model for statistical downscaling of AOGCM to minimum and maximum daily temperatures. J Clim 23:5222–5242. doi:10.1175/2010JCLI3415.1

Fernández J, Sáenz J (2003) Improved field reconstruction with the analog method: searching the CCA space. Clim Res 24:199–213

Fowler HJ, Blenkinsop S, Tebaldi C (2007) Linking climate change modelling to impacts studies: recent advances in downscaling techniques for hydrological modelling. Int J Climatol 27:1547–1578

Garvey PR (2008) Analytical methods for risk management: a system engineering perspective. CRC Press, Boca Raton, pp 243–250

Goodess CM, Anagnostopoulou C, Bardossy A, Frei C, Harpham C, Haylock MR, Hundecha Y, Maheras P, Ribalaygua J, Schmidli J, Schmith T, Tolika K, Tomozeiu R, Wilby RL (2012) An intercomparison of statistical downscaling methods for Europe and European regions-assessing their performance with respect to extreme temperature and precipitation events. Climate Research Unit Research Publication 11 (CRU RP11)

Gutmann E, Pruitt T, Clark MP, Brekke L, Arnold JR, Raff DA, Rasmussen RM (2014) An intercomparison of statistical downscaling methods used for water resources assessments in the United States. Water Resour Res 50:7167–7186. doi:10.1002/2014WR015559

Hanson RT, Lockwood B, Schmid W (2014) Analysis of projected water availability with current basin management plan, Pajaro Valley, California. J Hydrol 519(A):131–147

Hay L, LaFontaine J, Markstrom S (2014) Evaluation of statistically downscaled GCM output as input for hydrological and stream temperature simulation in the Apalachicola-Chattahoochee-Flint River Basin (1961–1999). Earth Interact 18:1–32. doi:10.1175/2013EI000554.1

Hayhoe KA (2010) A standardized framework for evaluating the skill of regional climate downscaling techniques. University of Illinois at Urbana-Champaign

Haylock MR, Cawley GC, Harpham C, Wilby RL, Goodess CM (2006) Downscaling heavy precipitation over the UK: a comparison of dynamical and statistical methods and their future scenarios. Int J Climatol 26:1397–1415

Hempel S, Frieler K, Warszawski L, Schewe J, Piontek F (2013) A trend-preserving bias correction-the ISI-MIP approach. Earth Syst Dyn 4(2):219–236

Hidalgo H, Dettinger M, Cayan D (2008) Downscaling with constructed analogues: daily precipitation and temperature fields over the United States, Rep. CEC-500-2007-123, Calif. Energy Comm., PIER Energy-Related Environ. Res., Sacramento, CA

Hong KO, Suh MS, Rha DK, Chang DH, Kim C, Kim MK (2007) Estimation of high resolution gridded temperature using GIS and PRISM. Atmosphere 17:255–268 (in Korean)

Hunter RD, Meentemeyer RK (2005) Climatologically aided mapping of daily precipitation and temperature. J Appl Meteorol 44:1501–1510

Hwang S, Graham WD (2013) Development and comparative evaluation of a stochastic analog method to downscale daily GCM precipitation. Hydrol Earth Syst Sci Discuss 10:2141–2181. doi:10.5194/hessd-10-2141-2013

Hwang CL, Yoon K (1981) Multiple attribute decision making: methods and applications. Springer, New York

Jenkins G, Lowe J (2003) Handling uncertainties in the UKCIP02 scenarios of climate change. Hadley Centre Technical Note 44, Exeter

Jun K-S, Chung E-S, Kim Y-G, Kim Y (2013) A fuzzy multi-criteria approach to flood risk vulnerability in South Korea by considering climate change impacts. Expert Syst Appl 40:1003–1013

Kim Y, Chung E-S (2014) An index-based robust decision making framework for watershed management in a changing climate. Sci Total Environ 473–474:88–102

Kim B, Kim HS, Seoh BH, Kim NW (2007) Impact of climate change on water resources in Yongdam Dam basin, Korea. Stoch Environ Res Risk Assess 21:457. doi:10.1007/s00477-006-0081-2

Kim JP, Lee W-S, Cho H, Kim G (2014) Estimation of high resolution daily precipitation using a modified PRISM model. J Korean Soc Civil Eng 34(4):1139–1150

Lapen DR, Hayhoe HN (2003) Spatial analysis of seasonal and annual temperature and precipitation normals in Southern Ontario, Canada. J Great Lakes Res 29(4):529–544

Lee G, Jun K-S, Chung E-S (2013) Integrated multi-criteria flood vulnerability approach using fuzzy TOPSIS and Delphi technique. Nat Hazards Earth Syst Sci 13:1293–1312

Li H, Sheffield J, Wood EF (2010) Bias correction of monthly precipitation and temperature fields from Intergovernmental Panel on Climate Change AR4 models using equidistant quantile matching. J Geophys Res 115:D10101. doi:10.1029/2009JD012882

Liu W, Xu Z, Zhang L, Zhao J, Yang H (2015) Impacts of climate change on hydrological processes in the Tibetan Plateau: a case study in the Lhasa River basin. Stoch Environ Res Risk Assess 29:1809–1822

Lorenz EN (1969) Atmospheric predictability as revealed by naturally occurring analogues. J Atmos Sci 26:636–646

Maloney ED, Camargo SJ, Chang E, Colle BC, Fu R, Geil KL, Hu Q, Jiang X, Johnson N, Karnauskas KB, Kinter J, Kirtman B, Kumar S, Langenbrunner B, Lombardo K, Long LN, Mariotti A, Meyerson JE, Mo KC, Neelin JD, Pan Z, Seager R, Serra Y, Seth A, Sheffield J, Stroeve J, Thibeault J, Xie S-P, Wang C, Wyman B, Zhao M (2014) North American climate in CMIP5 experiments: part III: assessment of twenty-first century projections. J Clim 27:2230–2270

Maraun D (2013) Bias correction, quantile mapping, and downscaling: revisiting the inflation issue. J Clim 26(6):2137–2143

Maraun D, Wetterhall F, Ireson AM, Chandler RE, Kendon EJ, Widmann M, Brienen S, Rust HW, Sauter T, Themeßl M, Venema VKC, Chun KP, Goodess CM, Jones RG, Onof C, Vrac M, Thiele-Eich I (2010) Precipitation downscaling under climate change: Recent developments to bridge the gap between dynamical models and the end user. Rev Geophys 48. doi:10.1029/2009RG000314

Maurer EP, Hidalgo HG (2008) Utility of daily vs. monthly large-scale climate data: an intercomparison of two statistical downscaling methods. Hydrol Earth Syst Sci 12:551–563

Maurer EP, Pierce DW (2014) Bias correction can modify climate model simulated precipitation changes without adverse effect on the ensemble mean. Hydrol Earth Syst Sci 18(3):915–925

Maurer EP, Hidalgo HG, Das T (2010) The utility of daily large-scale climate data in the assessment of climate change impacts on daily streamflow in California. Hydrol Earth Syst Sci 14:1125–1138. doi:10.5194/hess-14-1125-2010

Mearns LO, Arritt R, Biner S, Bukovsky MS, McGinnis S, Sain S, Caya D, Correia J Jr, Flory D, Gutowski W (2012) The North American regional climate change assessment program: overview of phase I results. Bull Am Meteorol Soc 93(9):1337–1362

Miller WP, DeRosa GM, Gangopadhyay S, Valdés JB (2013) Predicting regime shifts in flow of the Gunnison River under changing climate conditions: regime shifts over the Gunnison River basin. Water Resour Res 49:2966–2974. doi:10.1002/wrcr.20215

Mizukami N, Clark MP, Gutmann ED, Mendoza PA, Newman AJ, Nijssen B, Livneh B, Hay LE, Arnold JR, Brekke LD (2016) Implications of the methodological choices for hydrologic portrayals of climate change over the contiguous United States: statistically downscaled forcing data and hydrologic models. J Hydrometeorol 17(1):73–98. doi:10.1175/JHM-D-14-0187.1

Mizuta R, Oouchi K, Yoshimura H, Noda A, Katayama K, Yukimoto S, Hosaka M, Kusunoki S, Kawai H, Nakagawa M (2006) 20-km-mesh global climate simulations using JMA–GSM model—mean climate states. J Meteorol Soc Jpn 84:165–185

Murdock TQ, Cannon AJ, Sobie SR (2013) Statistical downscaling of future climate projections. Pacific Climate Impacts Consortium (PCIC) Report (No.KM170-12-1236)

Nicholas RE, Battisti DS (2012) Empirical downscaling of high-resolution regional precipitation from large-scale reanalysis fields. J Appl Meteorol Climatol 51:100–114. doi:10.1175/JAMC-D-11-04.1

Olsson J, Uvo C, Jinno K (2001) Statistical atmospheric downscaling of short-term extreme rainfall by neural networks. Phys Chem Earth 26B:695–700

Ozturk D, Batuk F (2011) Technique for order preference by similarity to ideal solution (TOPSIS) for spatial decision problems. Proceedings of Gi4DM 2011, Antalya, Turkey

Rana A, Moradkhani H (2016) Spatial, temporal and frequency based climate change assessment in Columbia River basin using multi downscaled-scenarios. Clim Dyn 47(1–2):579–600. doi:10.1007/s00382-015-2857-x

Saha S, Moorthi S, Pan H-L, Wu X, Wang J, Nadiga S, Tripp P, Kistler R, Woollen J, Behringer D, Liu H, Stokes D, Grumbine R, Gayno G, Wang J, Hou Y-T, Chuang H-Y, Juang H-MH, Sela J, Iredell M, Treadon R, Kleist D, Van Delst P, Keyser D, Derber J, Ek M, Meng J, Wei H, Yang R, Lord S, Van Den Dool H, Kumar A, Wang W, Long C, Chelliah M, Xue Y, Huang B, Schemm J-K, Ebisuzaki W, Lin R, Xie P, Chen M, Zhou S, Higgins W, Zou C-Z, Liu Q, Chen Y, Han Y, Cucurull L, Reynolds RW, Rutledge G, Goldberg M (2010) The NCEP climate forecast system reanalysis. Bull Am Meteorol Soc 91(8):1015–1057

Salathé EP, Mote PW, Wiley MW (2007) Review of scenario selection and downscaling methods for the assessment of climate change impacts on hydrology in the United States Pacific Northwest. Int J Climatol 27:1611–1621

Segui PQ, Ribes A, Martin E, Habets F, Boe J (2010) Comparison of three downscaling methods in simulating the impact of climate change on the hydrology of Mediterranean basins. J Hydrol 383:111–124

Shin SC, Kim MK, Suh MS, Rha DK, Jang DH, Kim CS, Lee WS, Kim YH (2008) Estimation of high resolution gridded precipitation using GIS and PRISM. Atmosphere 18:71–81 (in Korean)

Sillmann J, Kharin V, Zhang X, Zwiers F, Bronaugh D (2013) Climate extremes indices in the CMIP5 multimodel ensemble: part 1. Model evaluation in the present climate. J Geophys Res Atmos 118(4):1716–1733

Stoner A, Hayhoe K, Yang X (2013) An asynchronous regional regression model for statistical downscaling of daily climate variables. Int J Climatol 33:2473–2494. doi:10.1002/joc.3603

Thrasher B, Maurer EP, McKellar C, Duffy P (2012) Technical note: bias correcting climate model simulated daily temperature extremes with quantile mapping. Hydrol Earth Syst Sci 16:3309–3314. doi:10.5194/hess-16-3309-2012

Tikhonov AN, Goncharsky AV, Stepanov VV, Yagola AG (1995) Numerical methods for the solution of ill-posed problems. Springer, Netherlands

Timbal B, Dufour A, McAvaney B (2003) An estimate of future climate change for western France using a statistical downscaling technique. Clim Dyn 20:807–823

Triantaphyllou E (2000) Multi-criteria decision making methods. Springer, US

van den Dool HM (1994) Searching for analogues, how long must one wait? Tellus Ser A 46:314–324

van den Dool H, Huang J, Fan Y (2003) Performance and analysis of the constructed analogue method applied to US soil moisture over 1981–2001. J Geophys Res 108(D16)

Werner AT, Cannon AJ (2016) Hydrologic extremes—an intercomparison of multiple gridded statistical downscaling methods. Hydrol Earth Syst Sci 20(4):1483–1508. doi:10.5194/hess-20-1483-2016

Werner AT, Schnorbus MA, Shrestha RR, Eckstrand HD (2013) Spatial and temporal change in the hydro-climatology of the Canadian portion of the Columbia River basin under multiple emissions scenarios. Atmos Ocean 51(4):357–379

Wilby R, Wigley T, Conway D, Jones P, Hewitson B, Main J, Wilks D (1998) Statistical downscaling of general circulation model output: a comparison of methods. Water Resour Res 34:2995–3008. doi:10.1029/98WR02577

Wilby RL, Hay LE, Gutowski WJ, Arritt RW, Takle ES, Pan Z, Leavesley GH, Clark MP (2000) Hydrological responses to dynamically and statistically downscaled climate model output. Geophys Res Lett 27(8):1199–1202. doi:10.1029/L006078

Wood AW, Leung LR, Sridhar V, Lettenmaier DP (2004) Hydrologic implications of dynamical and statistical approaches to downscaling climate model outputs. Clim Change 62:189–216

Zhang X, Alexander L, Hegerl GC, Jones P, Tank AK, Peterson TC, Trewin B, Zwiers FW (2011) Indices for monitoring changes in extremes based on daily temperature and precipitation data. Wiley Interdiscip Rev Clim Change 2(6):851–870. doi:10.1002/wcc.147

Zhou YG, Wen JJ, Chen DW (2012) Study on the competitive and layout of commercial pedestrian streets’ business forms though IEW & TOPSIS- two comparative cases in Hangzhou. J Zhejiang Univ Sci 39(6):724–731

Acknowledgments

This research was supported by the APEC Climate Center (APCC) and partially by a grant (14AWMP-B082564-01) from the Advanced Water Management Research Program funded by the Ministry of Land, Infrastructure and Transport of the Korean government. The authors would like to thank the Pacific Climate Impacts Consortium and the APEC Climate Center for approving the visiting research and for their valuable comments and suggestions on an earlier draft of this paper.

Cite this article

Eum, HI., Cannon, A.J. & Murdock, T.Q. Intercomparison of multiple statistical downscaling methods: multi-criteria model selection for South Korea. Stoch Environ Res Risk Assess 31, 683–703 (2017). https://doi.org/10.1007/s00477-016-1312-9
