Abstract

Since digital microscopy (DM) has become a useful alternative to conventional light microscopy (CLM), several approaches have been used to evaluate students’ performance and perception. This systematic review aimed to integrate data regarding the use of DM for education in human pathology, determining whether this technology can be an adequate learning tool, and an appropriate method to evaluate students’ performance. Following a specific search strategy and eligibility criteria, three electronic databases were searched and several articles were screened. Eight studies involving medical and dental students were included. The test of performance comprised diagnostic and microscopic description, clinical features, differential, and final diagnoses of the specimens. The students’ achievements were equivalent, similar or higher using DM in comparison with CLM in four studies. All publications employed question surveys to assess the students’ perceptions, especially regarding the easiness of equipment use, quality of images, and preference for one method. Seven studies (87.5%) indicated the students’ support of DM as an appropriate method for learning. The quality assessment categorized most studies as having a low bias risk (75%). This study presents the efficacy of DM for human pathology education, although the high heterogeneity of the included articles did not permit outlining a specific method of performance evaluation.

Introduction

Since the seventeenth century, the conventional light microscope (CLM) has been used as the primary device to examine human tissues at microscopic level for histological/pathological analysis, diagnosis, research, and educational purposes [1, 2]. However, CLM has a number of limitations, including the need for production and storage of large numbers of glass slides, care in preservation, and periodical slide replacement [3, 4]. More recently, users’ needs for quick case discussion, remote and online access, integration of data (e.g. slides and annotations), and a demand for more attractive and engaging learning platforms, have led to advances in technology, as well as development of electronic tools for incorporation into education, as an attempt to improve the students’ learning and commitment to modules [5,6,7].

Digital microscopy (DM), which represents whole slide imaging systems (WSI), is designed to accurately digitize images from glass slides, converting them into numerous high-quality images using specific hardware, combined with software which assembles these multiple images into a single digital image resembling the original glass slide [4, 8, 9]. Given the many possibilities for DM application, this technology has also become a useful alternative to CLM for human pathology teaching, with a significant acceptance described by both students and teachers [10,11,12,13], although some authors reported disagreements regarding total replacement of CLM by DM [14,15,16].

Following implementation of DM, different strategies have been used to assess students’ performance and perception, in order to determine the effectiveness of this technology over CLM [16,17,18,19]. However, the absence of educational guidelines remains a limitation for these assessments’ utility, which may impair the reliability and interpretation of results.

Considering this scenario, this systematic review compiled the published data regarding the use of DM for teaching of human pathology to medical and dental students, in order to investigate whether this technology is sufficient for teaching and learning as a stand-alone tool, and to determine the proper method to evaluate students’ performance using DM.

Materials and methods

This study was conducted according to the PRISMA (Preferred Reporting Items for Systematic reviews and Meta-Analyses) statement [20] and was registered in the PROSPERO database (protocol CRD42019132602). The review questions were as follows: “Is whole slide imaging reliable to be used as a single technique instead of the association with conventional light microscopy for human pathology teaching?” and “What is the appropriate method to evaluate the students' performance using digital microscope?”

Literature review

One author carried out a literature review to identify any existing systematic reviews (registered, in process, or published) with a scope similar to that of our study. Two similar reviews were identified [21, 22]; however, some limitations were highlighted. The study published in 2016 [21] reported a search timeline ending in 2014, which may have missed relevant articles published in the following 2 years, as well as those published between 2016 and 2019. Seven databases were reported in the search; however, Scopus (Elsevier, Amsterdam, the Netherlands) and MEDLINE, accessed through the PubMed platform (National Center for Biotechnology Information, US National Library of Medicine, Bethesda, Maryland), were not used. Although a meta-analysis was performed, the authors did not report which scale was used by each study to allow quantitative synthesis [21]. Limitations found in both reviews were the lack of clarity in describing the search strategy, the screening of articles published only in the English language, and the inclusion of studies that may have increased the heterogeneity of the results [21, 22]. Moreover, both reviews included articles involving both cytology and histology samples.

Based on these observations, we decided to proceed with this systematic review to assess more homogeneous and well-designed studies, reducing the risk of bias as much as possible, providing more consistent evidence regarding the use of digital microscopy as a proper teaching method for human pathology only, and the best methods that can be applied to evaluate the learner’s performance using this technology.

Eligibility criteria

Inclusion criteria comprised studies that aimed to assess the performance and/or the perception of students using DM to analyse its importance for educational purposes. For comparative studies, if the participants were distributed into two groups, these must be crossed-over. The participants should also have analysed the sample by both methods (DM and conventional light microscopy) at two separate times, not blending the technologies. The performance results should be indicated with a score, as well as the method that was used for measures of performance. In case of studies that assessed the student’s perception, it should be reported how it was obtained. Studies published in English, Portuguese, Spanish, or French languages were screened. Exclusion criteria considered literature reviews, letters to the editors, book chapters, and abstracts published in annals. Studies which included cytopathology/hematopathology, as well as those that examined animal histology/pathology, were also excluded. Moreover, retrieved publications that could not be found or accessed were excluded. Validation studies and studies which evaluated efficacy and accuracy were not included. The publications in which the modality of WSI was not clear or specified were excluded.

Search strategy

An electronic search was conducted on May 15, 2019, without timeline restriction, in the following databases: Scopus (Elsevier, Amsterdam, The Netherlands), MEDLINE, accessed through the PubMed platform (National Center for Biotechnology Information, US National Library of Medicine, Bethesda, Maryland), and Embase (Elsevier, Amsterdam, The Netherlands). To increase the number of records retrieved, we combined two different searches, each of which retrieved several original articles. This strategy was reproduced similarly in all three databases. The first search used the terms (ALL ( "digital microscopy" ) AND ALL (student*) ). The second search used the terms (ALL ( "virtual microscopy" ) AND ALL ( student* ) ). A manual search was also carried out to identify possible additional studies.
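The two-query strategy can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the tooling used in the review: the function names and the record format (dictionaries with a `title` key) are hypothetical, the query template is modelled on the strings quoted above, and title matching is a deliberately simple stand-in for proper duplicate detection.

```python
import re

# The two search terms reported in the text.
SEARCH_TERMS = ["digital microscopy", "virtual microscopy"]


def build_query(term: str) -> str:
    """Build a query string modelled on the ones quoted in the search strategy."""
    return f'(ALL ( "{term}" ) AND ALL ( student* ) )'


def normalize_title(title: str) -> str:
    """Lowercase a title and collapse punctuation so near-identical titles match."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def merge_records(*result_sets):
    """Combine several result sets, keeping the first copy of each distinct title.

    Each record is assumed (hypothetically) to be a dict with a "title" key.
    """
    seen = set()
    merged = []
    for records in result_sets:
        for record in records:
            key = normalize_title(record["title"])
            if key not in seen:
                seen.add(key)
                merged.append(record)
    return merged


queries = [build_query(term) for term in SEARCH_TERMS]
```

Running the same pair of queries in each database and passing all result sets to `merge_records` would give a single pool of candidate records for title and abstract screening.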

Article screening and eligibility evaluation

Two authors independently screened the titles and abstracts of all articles, and then excluded the ones that were not in accordance with the eligibility criteria. In sequence, these authors read the full texts to identify eligible articles. The reasons for exclusion were listed and specified in the flow chart. Divergences were solved initially by discussion and then by consulting a third author to assure that the appropriate publications were selected according to the eligibility criteria. Rayyan QCRI [23] was used as the reference manager to conduct the screening of the articles, as well as the exclusion of duplicates and registrations of a primary reason for exclusion.

Quality assessment

The risk of bias assessment was carried out using the Joanna Briggs Institute Critical Appraisal Checklist for Analytical Cross Sectional Studies (University of Adelaide, Australia) [24]. This tool provides different checklists of items for each category of study and is recommended by the Cochrane Methods [25]. For cross-sectional studies, the questions comprised, in general, study sample and participants, design and execution of the methodology, and tools for analysis of the results. In the “confounding factors” section, we considered contact with DM prior to the study, as well as students’ module retention, as potential biases. The available answers for each item were “yes”, “no”, and “not applicable”, and after finishing the questionnaire, an overall score was obtained for every article. We considered a cut-off of 50% of checklist answers to rate the publications as having a “high”, “moderate”, or “low” risk of bias. Two authors independently performed the quality assessment. Disagreements were initially solved by discussion, and later by conferring with a third author for settlement.
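One way such a checklist score could be turned into a risk category is sketched below. The text reports only a 50% cut-off, so the rest is an assumption made for illustration: the 70% boundary between “moderate” and “low”, the exclusion of “not applicable” items from the denominator, and the function name `risk_of_bias` are all hypothetical and may differ from the rule the authors applied.

```python
def risk_of_bias(answers, high_cutoff=0.50, low_cutoff=0.70):
    """Classify a study from its checklist answers.

    answers: iterable of "yes", "no", or "not applicable".
    high_cutoff: below this share of "yes" answers the risk is "high"
                 (the 50% cut-off reported in the text).
    low_cutoff:  at or above this share the risk is "low"
                 (ASSUMED value, not stated in the text).
    """
    # ASSUMPTION: "not applicable" items are dropped from the denominator.
    applicable = [a for a in answers if a != "not applicable"]
    if not applicable:
        raise ValueError("no applicable checklist items")

    yes_share = sum(a == "yes" for a in applicable) / len(applicable)
    if yes_share >= low_cutoff:
        return "low"
    if yes_share >= high_cutoff:
        return "moderate"
    return "high"
```

For example, a study answering “yes” to seven of eight applicable items would be rated “low” under these assumed thresholds, whereas three of eight would be rated “high”.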

Data extraction

Information available in the publications was independently extracted by one author and further reviewed by a second author. A specific extraction form was designed using Microsoft Excel® software, which was also used to organize and process the qualitative and quantitative data. For each elected study, the following information was extracted (when available): year and country of publication, which variable was analysed (performance, perception or both), number of participants, students’ educational level, type of equipment and software used to assess WSI, types of workstation, digital slides accessibility, equipment training, CLM availability and its specification, number and scope of used samples, and how the students’ performance and/or perception were assessed and their results.

Analysis

The qualitative and quantitative data were presented descriptively. Given the high heterogeneity of the information available in the studies, especially regarding the methods used to measure perception and/or performance, we were not able to perform a meta-analysis in this systematic review. A narrative synthesis of the findings of the included publications was performed.

Results

PRISMA flowchart

The search performed through all databases initially identified 873 publications, with a timeline from 1998 to 2019. After exclusion of duplicates, 563 were screened by reading their titles and abstracts, resulting in 60 publications for eligibility assessment. Following full text reading, 52 articles were excluded according to the eligibility criteria, and 8 were included in the qualitative synthesis. The article selection process is summarized in Fig. 1.

Fig. 1 Flowchart of study screening process adapted from PRISMA [20]
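The counts reported for the screening process can be checked with simple arithmetic, as in the following sketch. The function name and dictionary keys are hypothetical. The numbers of duplicates removed and of records excluded at title and abstract screening are derived here by subtraction and are not stated in the text.

```python
def prisma_flow(identified, screened, full_text, excluded_full_text):
    """Derive the intermediate counts of a PRISMA-style flow from reported totals."""
    if not (identified >= screened >= full_text >= excluded_full_text >= 0):
        raise ValueError("counts must not increase from one stage to the next")
    return {
        "duplicates_removed": identified - screened,
        "excluded_on_title_abstract": screened - full_text,
        "included": full_text - excluded_full_text,
    }


# Totals reported in the Results section.
flow = prisma_flow(identified=873, screened=563, full_text=60, excluded_full_text=52)
```

With the reported totals, the derived number of included studies agrees with the eight studies in the qualitative synthesis.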

Methodological features of the studies

The included articles were published from 2008 to 2019 and originated from six countries: Australia (1), Brazil (1), Germany (1), Grenada (1), Saudi Arabia (1), and USA (3). Six publications (75%) assessed students’ perception and performance [5, 14, 16, 26,27,28], whereas two studies (25%) only evaluated students’ perception of DM in comparison with CLM [3, 12]. The number of participants ranged from 35 to 192 students; three articles included medical students (37.5%), three included dental students (37.5%), from the second to fifth year, and two publications comprised medical residents (25%).

The main methodological features of the included articles are summarized in Tables 1 and 2. The scope of the samples used in the studies encompassed general and systemic pathology, dermatopathology, histopathology (general and advanced), oral histology, and oral pathology. Most commonly used WSI workstations included computers with specific software and/or a web-based interface for digital slides visualization (5 studies; 62.5%). Three studies (37.5%) provided additional data, such as clinical history, radiographs, laboratory exams, and specimen annotation [3, 14, 28], and remote access to slides was described in 5 studies (62.5%). Six publications reported the availability of CLM concomitantly with DM (75%), although 3 studies did not describe whether CLM was completely abolished after DM assessment and establishment (37.5%). Detailed information of the included studies is available in Supplementary Table 1.

Performance analysis

Two studies required establishment of diagnosis in their assessments, either through multiple-choice or open-ended questions [5, 16], although one study also considered a differential diagnosis as a possible correct answer [16]. Two publications provided an admixture of requests, comprising identification of diagnostic features, microscopic description of the specimen, and clinical features besides the differential and final diagnoses [14, 28]. One study did not specify the content of the questions in the multiple-choice exam [27], and another asked the participants to assess a tissue specimen and demonstrate their interpretation through paper illustrations [26]. The time taken to complete the performance test was described by two studies, with a mean of 66 min. Five of six studies (83.3%) did not provide any type of equipment training prior to the exam (Table 1).

One study did not describe the numeric results of performance assessment, stating that there were no statistical differences in academic achievement between students who had used a specific technology [14]. In contrast with three studies [16, 26, 28], two others reported a similar or a slightly higher performance using CLM [5, 27] (Table 3).

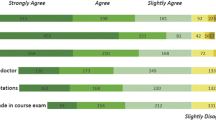

Perception analysis

All publications used question surveys to assess the students’ perceptions regarding DM and/or CLM, ranging from 5 to 30 questions. Five studies (62.5%) used a 5-point Likert scale for answers (e.g. 1 = strongly agree; 2 = agree; 3 = undecided; 4 = disagree; 5 = strongly disagree). Three studies also used open-ended questions, and two provided an additional section for students’ personal considerations (Table 1). As presented in Table 4, most of the studies asked the students about the ease of equipment use (6 studies; 75%), quality and magnification of images (6 studies; 75%), and preference for either DM or CLM (5 studies; 62.5%). Concerning the role of DM, seven studies reported that most of the students believed this technology to be an appropriate method for improved and efficient learning (87.5%). When the students were asked to choose one method, two studies described the students’ predilection for both methods (25%), whereas the others did not provide this option as an answer. Overall, the students preferred DM over CLM (Table 3).

Quality assessment (risk of bias)

Six publications achieved an overall low risk of bias (75%). One study was categorized as having a moderate risk overall, since the criteria for sample selection were not clearly defined, confounding factors and strategies to deal with them were not identified, and the statistical analysis used in the study was not described in a way that could be reviewed. The study that was classified as having a high risk of bias failed in the following items: clear sample inclusion criteria, description of criteria used for measurement, identification of confounding factors and their management, and detail of statistical analysis. Full quality assessment of the included articles is available in Supplementary Table 2 and summarized in Fig. 2.

Discussion

The learning curve of pathology encompasses the association between morphological changes and clinical aspects. Thus, an unimpaired visual representation supports solidifying concepts and principles and inserts a real-life component that cannot be recognized through theory alone [10, 14]. Classically, the use of glass slides and CLM has been the core of practical knowledge, which has gradually improved by the introduction of digital cameras connected to microscopes, generating static images and live videos. Although these devices enabled live examination and exhibition of slides to several participants at the same time, their control was limited to one person, simply serving as a teaching supplement [2, 8].

The Accreditation Council for Graduate Medical Education (ACGME) is an American organization that has highlighted six areas of competency, including patient care, medical knowledge, professionalism, communication skills, practice-based learning and improvement, and systems-based practice [29, 30]. Moreover, pathology courses have undergone modifications in their curricula, which have changed the dynamics of microscope laboratory sessions, such as time, physical space, and equipment availability and, consequently, facilitated the application of different teaching and learning methods including cooperative and distance learning as well as the association with new technologies [10, 31].

In this context, DM represents an important tool as it allows any computer to work as a CLM; instead of providing sets of glass slides, especially the ones with limited availability and variability, all users can access the same material collection, either in or outside the educational facilities at any time, minimizing the use of tissue and the number of glass slides required and ensuring standardization [9, 30, 31]. Several slides can also be simultaneously exhibited on the same screen, enabling interpretation and comparison between histochemical and immunohistochemical stains, different sections, and specimens [2, 8, 32]. Annotations, measurements, macroscopic pictures, imaging studies, and labels can be added within the images, facilitating interactive study and distant communication [6, 30, 33]. Moreover, WSI systems are more ergonomic for users in comparison with a CLM station, provide a larger field of vision, and permit a broader range of magnifications. The presence of a thumbnail indicating the area on the screen also promotes better orientation [9, 32].

Challenges of DM include its cost, with a large initial investment for WSI system implementation, including hardware, computers, and software, which need regular maintenance to guarantee proper functioning [2]. The high resolution of WSI images results in large files, demanding large capacity to store, back up, and allocate data whenever required, as well as a high-speed network [4, 34]. Currently, these systems still have individual designs, resulting in the absence of a universal virtual slide format, which limits the access and distribution of DM image files without relying on web-based collections, although this is negated to some extent by the availability of free WSI viewing software from most vendors [2, 4, 32], as demonstrated in Table 5. Other common complaints are related to image limitations, such as contrast and resolution [4, 5, 8].

Conversely, as observed in our results, there has been a great acceptance of DM by students from both medical and dental backgrounds, for technical and/or educational reasons [13, 15, 17, 18]. Since students are more familiar with computers, their preference for DM is not surprising as CLM use is gradually being reduced or removed from courses [2, 6]. Still, earlier studies have reported uncertainties about choosing one single method, especially in pathology residency programs, probably due to practical routine requirements, such as image zooming and speed of glass slide assessment, as well as the importance of learning how to operate a CLM. These concerns are being overcome with more sophisticated technologies, such as multiplane focusing, higher resolution, and faster, ubiquitous hardware and software [1, 14, 27, 48].

The performance outcomes of the included articles indicate that WSI can be considered as an effective learning tool, being equivalent to CLM, as previously reported [2, 17, 18, 48]. However, the remarkable heterogeneity of the methodologies used in the studies, i.e. different assessment methods, learners with different levels of education and experience, different samples for each technology testing, long time intervals between the use of DM and CLM for evaluation, and lack of prior instructions to manipulate these devices may have compromised the reliability of the results [5, 16, 27, 28, 49].

For diagnostic purposes, guidelines have been recommended for validation of WSI systems, such as the simulation of a real clinical environment for technology use, the participation of a WSI-trained pathologist, and the use of at least 60 cases, which might be presented during routine practice. Moreover, other guidelines include the need for both DM and CLM evaluation, a washout period of at least 2 weeks between viewing digital and glass slides, and assessment of the same material presented in both glass and digital slides [50].
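The quantitative recommendations listed above lend themselves to a simple design check, sketched below. The class and function names are hypothetical, and the thresholds are taken only from the figures quoted in this paragraph (at least 60 cases and a washout of at least 2 weeks). They refer to diagnostic validation [50], so applying them to educational studies is an assumption made here for illustration.

```python
from dataclasses import dataclass

MIN_CASES = 60          # "at least 60 cases"
MIN_WASHOUT_DAYS = 14   # "a washout period of at least 2 weeks"


@dataclass
class StudyDesign:
    """Hypothetical summary of a study comparing DM with CLM."""
    n_cases: int
    washout_days: int
    evaluates_dm_and_clm: bool
    same_material_in_both: bool


def unmet_recommendations(design: StudyDesign) -> list:
    """Return the recommendations from the text that the design does not meet."""
    unmet = []
    if design.n_cases < MIN_CASES:
        unmet.append(f"fewer than {MIN_CASES} cases")
    if design.washout_days < MIN_WASHOUT_DAYS:
        unmet.append(f"washout shorter than {MIN_WASHOUT_DAYS} days")
    if not design.evaluates_dm_and_clm:
        unmet.append("DM and CLM not both evaluated")
    if not design.same_material_in_both:
        unmet.append("different material used for glass and digital slides")
    return unmet
```

An empty list indicates that the design meets all four of the recommendations encoded here; it says nothing about the remaining recommendations, such as simulation of a real clinical environment or the participation of a WSI-trained pathologist.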

Based on these parameters [50] and the lack of testing standardization to assess learners’ performance and perceptions regarding the use of WSI systems in comparison with CLM, we attempted to provide guidelines for further validation studies, as demonstrated in Table 6.

In conclusion, DM and WSI can be considered reliable technologies for use in human pathology education, showing acceptance by users. Although we could not determine the most appropriate approach for assessing students’ performance, the data assembled in this study highlight the demand for education validation guidelines. Therefore, we expect that our recommendations might provide the platform for more homogeneous data and higher-level evidence for other systematic reviews and meta-analyses in the future.

References

Boyce BF (2015) Whole slide imaging: uses and limitations for surgical pathology and teaching. Biotech Histochem 90:321–330

Saco A, Bombi JA, Garcia A, Ramírez J, Ordi J (2016) Current status of whole-slide imaging in education. Pathobiology 83:79–88

Merk M, Knuechel R, Perez-Bouza A (2010) Web-based virtual microscopy at the RWTH Aachen University: didactic concept, methods and analysis of acceptance by the students. Ann Anat 192:383–387

Higgins C (2015) Applications and challenges of digital pathology and whole slide imaging. Biotech Histochem 90:341–347

Koch LH, Lampros JN, Delong LK, Chen SC, Woosley JT, Hood AF (2009) Randomized comparison of virtual microscopy and traditional glass microscopy in diagnostic accuracy among dermatology and pathology residents. Hum Pathol 40:662–667

McCready ZR, Jham BC (2013) Dental students’ perceptions of the use of digital microscopy as part of an oral pathology curriculum. J Dent Educ. 77:1624–1628

Ariana A, Amin M, Pakneshan S, Dolan-Evans E, Lam AK (2016) Integration of traditional and E-learning methods to improve learning outcomes for dental students in histopathology. J Dent Educ. 80:1140–1148

Al-Janabi S, Huisman A, Van Diest PJ (2012) Digital pathology: current status and future perspectives. Histopathology. 61:1–9

Araújo ALD, Amaral-Silva GK, Fonseca FP, Palmier NR, Lopes MA, Speight PM, de Almeida OP, Vargas PA, Santos-Silva AR (2018) Validation of digital microscopy in the histopathological diagnoses of oral diseases. Virchows Arch. 473:321–327

Fónyad L, Gerely L, Cserneky M, Molnár B, Matolcsy A (2010) Shifting gears higher – digital slides in graduate education – 4 years experience at Semmelweis University. Diagn Pathol. 5:73

Szymas J, Lundin M (2011) Five years of experience teaching pathology to dental students using the WebMicroscope. Diagn Pathol. 30:6

Alotaibi O, ALQahtani D (2016) Measuring dental students' preference: a comparison of light microscopy and virtual microscopy as teaching tools in oral histology and pathology. Saudi Dent J 28:169–173

Brierley DJ, Speight PM, Hunter KD, Farthing P (2017) Using virtual microscopy to deliver an integrated oral pathology course for undergraduate dental students. Br Dent J. 223:115–120

Braun MW, Kearns KD (2008) Improved learning efficiency and increased student collaboration through use of virtual microscopy in the teaching of human pathology. Anat Sci Educ 6:240–246

Fonseca FP, Santos-Silva AR, Lopes MA et al (2015) Transition from glass to digital slide microscopy in the teaching of oral pathology in a Brazilian dental school. Med Oral Patol Oral Cir Bucal. 20:e17–e22

Fernandes CIR, Bonan RF, Bonan PRF, Leonel ACLS, Carvalho EJA, de Castro JFL, Perez DEC (2018) Dental students' perceptions and performance in use of conventional and virtual microscopy in oral pathology. J Dent Educ. 82:883–890

Kumar RK, Velan GM, Korell SO, Kandara M, Dee FR, Wakefield D (2004) Virtual microscopy for learning and assessment in pathology. J Pathol. 204:613–618

Ordi O, Bombó JA, Martínez A et al (2015) Virtual microscopy in the undergraduate teaching of pathology. J Pathol Inform. 6:1

Walkowski S, Lundin M, Szymas J, Lundin J (2014) Students’ performance during practical examination on whole slide images using view path tracking. Diagn Pathol. 9:208

Moher D, Liberati A, Tetzlaff J et al (2009) Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Ann Intern Med. 151:264–269

Wilson AB, Taylor MA, Klein BA et al (2016) Meta-analysis and review of learner performance and preference: virtual versus optical microscopy. Med Educ 50:428–440

Kuo KH, Leo JM (2018) Optical versus virtual microscope for medical education: a systematic review. Anat Sci Educ. 12:678–685. https://doi.org/10.1002/ase.1844

Ouzzani M, Hammady H, Fedorowicz Z et al (2016) Rayyan—a web and mobile app for systematic reviews. Syst Rev. 5:210

Moola S, Munn Z, Tufanaru C, Aromataris E, Sears K, Sfetcu R, Currie M, Qureshi R, Mattis P, Lisy K, Mu P-F (2017) Chapter 7: Systematic reviews of etiology and risk. In: Aromataris E, Munn Z (eds) Joanna Briggs Institute Reviewer's Manual. The Joanna Briggs Institute. Available from https://reviewersmanual.joannabriggs.org/

Cochrane Effective Practice and Organisation of Care (EPOC) (2017) EPOC Resources for review authors. https://epoc.cochrane.org/resources/epoc-resources-review-authors. Accessed 6 April 2019

Farah CS, Maybury T (2009) Implementing digital technology to enhance student learning of pathology. Eur J Dent Educ 13:172–178

Brick KE, Sluzevich JC, Cappel MA, DiCaudo DJ, Comfere NI, Wieland CN (2013) Comparison of virtual microscopy and glass slide microscopy among dermatology residents during a simulated in-training examination. J Cutan Pathol 40:807–811

Nauhria S, Ramdass P (2019) Randomized cross-over study and a qualitative analysis comparing virtual microscopy and light microscopy for learning undergraduate histopathology. Indian J Pathol Microbiol. 62:84–90

Holmboe ES, Edgar L, Hamstra S, The Accreditation Council for Graduate Medical Education (ACGME) (2016) The Milestones Guidebook. https://www.acgme.org/Portals/0/MilestonesGuidebook.pdf. Accessed 10 Oct 2019

Hassel LA, Fung KM, Chaser B (2011) Digital slides and ACGME resident competencies in anatomic pathology: An altered paradigm for acquisition and assessment. J Pathol Inform. 2:27

Triola MM, Holloway WJ (2011) Enhanced virtual microscopy for collaborative education. BMC Med Educ. 11:4

Lee LMJ, Goldman HM, Hortsch M (2018) The virtual microscopy database-sharing digital microscope images for research and education. Anat Sci Educ. 11:510–515

Mione S, Valcke M, Cornelissen M (2013) Evaluation of virtual microscopy in medical histology teaching. Anat Sci Educ. 6:307–315

Volynskaya Z, Evans AJ, Asa SL (2017) Clinical applications of whole-slide imaging in anatomic pathology. Adv Anat Pathol. 24:215–221

Leica Biosystems (2020) Scan – Aperio Digital Pathology Slide Scanners. https://www.leicabiosystems.com/digital-pathology/scan/. Accessed 10 July 2020

Olympus Corporation (2019). VS200 Research Slide Scanner. https://www.lri.se/onewebmedia/VS200.pdf. Accessed 10 July 2020

3DHISTECH Ltd. (2020) PANNORAMIC Digital Slide Scanners. https://www.3dhistech.com/products-and-software/hardware/pannoramic-digital-slide-scanners/. Accessed 10 July 2020

Hamamatsu photonics (2020) NanoZoomer Series. https://nanozoomer.hamamatsu.com/resources/pdf/sys/SBIS0126E_NanoZoomer_Lineup.pdf. Accessed 10 July 2020

Koninklijke Philips NV (2020) Ultra Fast Scanner - Digital pathology slide scanner. https://www.usa.philips.com/healthcare/product/HCNOCTN442/ultra-fast-scanner-digital-pathology-slide-scanner. Accessed 10 July 2020

Zeiss Germany (2020) ZEISS Axio Scan.Z1 - Your Fast and Flexible Slide Scanner for Fluorescence and Brightfield. https://www.zeiss.com/microscopy/int/products/imaging-systems/axio-scan-z1.html. Accessed 10 July 2020

Leica Biosystems (2017) Aperio ImageScope User’s Guide. https://drp8p5tqcb2p5.cloudfront.net/fileadmin/img_uploads/digital_pathology/MAN-0001-Rev-Q.12.4_pdf.pdf. Accessed 10 July 2020

3DHISTECH Ltd. (2018) CaseViewer 2.2 User Guide. https://assets.thermofisher.com/TFS-Assets/APD/Product-Guides/US-Only-CaseViewer-2-2-Win-User-Guide-EN-Rev1.pdf. Accessed 10 July 2020

Hamamatsu photonics (2020) NDP.view2 Instruction manual. https://www.hamamatsu.com/sp/sys/en/manual/NDPview2_manual_en.pdf. Accessed 10 July 2020

Koninklijke Philips NV (2020) Image Management System viewer - Pathology case viewer. https://www.usa.philips.com/healthcare/product/HCNOCTN444/intellisite-pathology-suite. Accessed 10 July 2020

Zeiss Germany (2020) ZEISS ZEN lite - The Perfect Software Solution to Work with Microscope Images in CZI File Format. https://www.zeiss.com/microscopy/int/products/microscope-software/zen-lite.html. Accessed 10 July 2020

Bankhead P, Loughrey MB, Fernández JA, Dombrowski Y, McArt DG, Dunne PD et al (2017) QuPath: Open source software for digital pathology image analysis. Sci Rep 7(1)

Pathcore (2019) PathcoreSedeen™. https://pathcore.com/sedeen/. Accessed 10 July 2020

Dee FR (2009) Virtual microscopy in pathology education. Hum Pathol. 40:1112–1121

Mirham L, Naugler C, Hayes M et al (2016) Performance of residents using digital images versus glass slides on certification examination in anatomical pathology: a mixed methods pilot study. CMAJ Open. 25:E88–E94

Pantanowitz L, Sinard JH, Henricks WH, Fatheree LA, Carter AB, Contis L, Beckwith BA, Evans AJ, Lal A, Parwani AV, College of American Pathologists Pathology and Laboratory Quality Center (2013) Validating whole slide imaging for diagnostic purposes in pathology: guideline from the College of American Pathologists Pathology and Laboratory Quality Center. Arch Pathol Lab Med. 137:1710–1722

Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest.

Ethical responsibilities of author section

All authors contributed significantly to the conception, design, and execution of this study, as well as the retrieval, assessment, and interpretation of results. The draft and the final version of the manuscript were reviewed and approved by all authors. The authors also state that the study is original, has not been published elsewhere, and has been submitted only to Virchows Archiv.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article is part of the Topical Collection on Quality in Pathology

Electronic supplementary material

Supplementary Table 1.

Methodological features of the articles fully assessed in this study. (XLSX 14 kb)

ESM 1

(DOCX 16 kb)

Cite this article

Rodrigues-Fernandes, C.I., Speight, P.M., Khurram, S.A. et al. The use of digital microscopy as a teaching method for human pathology: a systematic review. Virchows Arch 477, 475–486 (2020). https://doi.org/10.1007/s00428-020-02908-3

DOI: https://doi.org/10.1007/s00428-020-02908-3