Abstract

We report a computational method based on deep learning (DL) to generate planar distributions of soot particles in turbulent flames from line-of-sight luminosity images. A conditional generative adversarial network (C-GAN) was trained using flame luminosity and planar laser-induced incandescence (LII) images simultaneously recorded in a turbulent sooting flame with an exit Reynolds number of 15,000. This training established the underlying relationship between the two types of images, i.e., a predictive model, which was then used to predict LII images from luminosity images; the accuracy was assessed using four different methods. Results show that the model is effective and capable of generating LII images with acceptable prediction accuracies of around 0.75. The model was also found to be applicable over a range of heights in the flames, as well as to flames with exit Reynolds numbers ranging from 8000 to 20,000. In addition, the probability density function (PDF) of LII signals in different flames can also be predicted using the model. This work, for the first time, demonstrates the feasibility of predicting planar signals from corresponding line-of-sight signals from turbulent flames, which potentially offers a much simpler optical arrangement for a modest trade-off in accuracy.

1 Introduction

Planar laser-based diagnostic methods are key experimental tools for understanding the complicated interactions between fluid dynamics and chemical reactions because of their unique advantages, including non-intrusiveness and high spatiotemporal resolution [1,2,3,4]. For most planar diagnostic methods, however, applications are still limited to laboratory-scale flames targeting fundamental research, owing to the harsh environment and difficult access encountered in industrial flames, as well as the sensitivity and cost of the laser setup. Another limitation is the low data collection rate of some laser-based planar diagnostic techniques, due either to the limited repetition frequency of the pulsed laser system or to a limitation of the technique itself, e.g., the intrusion of laser beams. High-speed laser-based diagnostic techniques for turbulent combustion have seen rapid development in recent years [5,6,7,8,9,10,11], but are still relatively complicated and expensive. Therefore, there is an ongoing need to develop more flexible techniques for planar diagnostics in combustion.

Spontaneous light emissions from flames can be used to reveal information about flame turbulent structures, including chemiluminescence from key species (e.g., CH and OH radicals) [12,13,14,15], incandescence from soot and even infrared thermal emission from H2O and CO2 [14, 16,17,18]. For example, in a turbulent sooting flame, information about the distribution of soot can be revealed from the flame luminosity, such as by tomographic reconstruction. However, resolution of the planar distribution of soot is more limited from this line-of-sight signal and the need of multiple detection views challenges practical application of the tomographic methods [19,20,21,22,23]. Therefore, planar laser-induced incandescence (LII) has been developed and widely used in experimental studies of turbulent sooting flames to measure the detailed structure of soot sheets. However, the technique is also limited in terms of high repetition rate because of the relatively long lifetime of LII signals.

Machine learning, and deep neural networks in particular, has been growing rapidly as an effective approach to computational imaging [24,25,26], not only for image interpretation and machine vision but also for image formation [27, 28]. As a prominent branch of deep neural networks, the generative adversarial network (GAN) has attracted tremendous attention in recent years because of its great potential for image generation based on the patterns extracted from reference images [29]. For example, Shi et al. proposed a GAN-based method to generate building footprints from optical satellite imagery, thus affording 3D reconstruction for urban models [30]. Merkle et al. explored the possibility of using GAN to generate accurate ground control points from SAR satellite images [31]. Osokin et al. trained a GAN to generate green-channel cell images, which contain 41 types of co-existing specific proteins that can only be identified in the green channel, from the detected red-channel signal [32]. These successful applications of GAN in interpreting images with complex feature structures motivate the use of GAN to reconstruct planar images from line-of-sight data, i.e., to predict the distribution of species in two dimensions; such an assessment has not yet been reported.

Therefore, the main aim of the present work is to evaluate the feasibility and accuracy of using deep learning to predict the planar turbulent structures of flames from direct imaging, in particular whether the flame luminosity can be used to predict the time-averaged planar distribution of soot. In this paper, an improved GAN is trained using simultaneous LII and flame luminosity images acquired from turbulent sooting flames, building up a correlation (model) between the two kinds of images, which is then employed to predict LII images from luminosity images. Four methods are used to assess the prediction accuracy under different flame conditions, specifically, to evaluate how accurately a model trained under one turbulent condition can be applied to flames with different turbulence intensities and to different combustion regions.

2 Methodology

2.1 Experiment

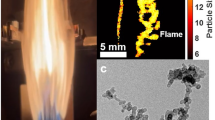

The experimental setup at the University of Adelaide is shown in the left part of Fig. 1. Turbulent bluff-body sooting flames were studied for this paper [36]. The diameter of the fuel jet is 4.6 mm, while that of the bluff body is 50 mm. The burner is mounted in a co-flow of air exiting a 190 mm diameter contraction at a velocity of 20 m/s. Synchronized flame luminosity images and LII images were collected from a series of ethylene-nitrogen (4:1 by volume) bluff-body turbulent flames with exit Reynolds numbers of 8,000, 15,000, 18,000 and 20,000 at a flame height spanning from 280 to 350 mm above the burner, corresponding to an x/d of 60.8 to 76.1. Moreover, for the case of Re = 15,000, the luminosity and LII image pairs were also collected at 5 different flame heights covering 280–630 mm (x/d from 60.8 to 136.9), with each height spanning 70 mm.

Fig. 1 Schematic of the experimental setup for the simultaneous LII and flame luminosity measurements, as well as the deep learning framework that takes experimental luminosity images as input, experimental LII as validation, and generates LII images as output, through a series of convolutional and deconvolutional layers

Flame luminosities were collected using an intensified CCD camera (ICCD, 1024 × 1024 pixels, PI-Max 4, Princeton Instruments) with an exposure time of 1 μs. A band pass filter centered at 700 nm with a transmission bandwidth of 20 nm (Andover 700FS20) was installed in front of the camera to reduce potential interference, e.g., that from C2. Shortly after luminosity collection (~ 2 µs), planar LII signals were collected. The fundamental output (1064 nm) of a pulsed (10 Hz) Nd:YAG laser (Q-Smart 850, Quantel) was used as the excitation light source for LII measurements. A laser sheet of approximately 500 µm in thickness was formed using three cylindrical lenses and directed into the flame with a laser fluence of approximately 0.50 J/cm² in the measurement volume. This value is higher than the threshold (around 0.20 J/cm²) of the plateau region in the LII response curve [22]. The use of such a high laser fluence ensures that the fluence remains in the plateau region when beam steering and attenuation occur in the turbulent sooting flames, thus mitigating the influence of both effects on LII signals. Prompt LII signals were collected within 50 ns from the start of the laser pulse using another PI-Max 4 ICCD camera, in front of which a band pass filter of 435 ± 20 nm (Semrock, FF02-435/40) was installed. The spatial resolutions are 0.1872 mm/pixel and 0.1859 mm/pixel for the LII images and luminosity images, respectively. For the turbulent sooting flames studied in the current work, the maximum magnitude of signal trapping is generally less than 10% according to the experimental assessment in our previous work [37]. Moreover, signal trapping attenuates both LII and luminosity signals by similar magnitudes, so the effects of signal trapping were neglected in this research.

It is worth noting that this method is based on the assumption that there is a strong correlation between the planar distribution of soot concentration measured by LII and the line-of-sight soot radiation detected as flame luminosity. This assumption is considered to be valid, even with the temperature variation of soot being accounted for. Firstly, the flames studied in the present work are non-smoking flames, hence the loading of ‘cold’ invisible soot, i.e., smoke, is expected to be very low and the contribution of cold soot to the difference between LII and flame luminosity signals is negligible. A potential uncertainty, however, is the presence of ‘hot’ soot particles at different temperatures. According to our previous work [3] and [4], soot is typically found at locations with gas temperatures between 1400 and 2200 K. A narrower range of 400 K was also reported for particle temperature in a turbulent flame with different fuels [5]. Such a difference in temperature can lead to a significant difference in soot radiation at the spectral channel of 700 nm. However, because the mean value of soot temperature appears consistently within a smaller temperature range of 1750–1900 K [4], the influence of temperature distribution on the time-averaged values or PDFs of soot concentration is expected to be minor.

2.2 The neural network model

The GAN method consists of two neural networks: a generator (G), which takes noise variables as input to generate a new data instance, and a discriminator (D), which decides whether each instance of data belongs to the actual training data set or not [29, 30]. However, as an unsupervised deep learning approach, its implementation was limited due to the lack of validation from supervised training data. To address this issue, conditional generative adversarial networks (C-GAN) were proposed by introducing a condition y to constrain both the generator and discriminator [38]. Its cross-entropy loss function can be described as follows:

\[ \min_{G} \max_{D} V(D,G) = E_{p_x}\left[ \log D\left( x|y \right) \right] + E_{p_z}\left[ \log \left( 1 - D\left( G\left( z|y \right) \right) \right) \right] \qquad (1) \]

where \(E_{p_x}\) and \(E_{p_z}\) are the empirical estimates of the expected value over training data x (luminosity image in this research) under distribution \(p_x\) and noise variable z (predicted LII) under distribution \(p_z\), respectively; \(G\left( z|y \right)\) is the generator output under condition y (experimental LII); \(D\left( x|y \right)\) is the discriminator output for data x under condition y. G and D were trained simultaneously, G to minimize the value of \(\log \left( 1 - D\left( G\left( z|y \right) \right) \right)\) and D to maximize that of \(\log \left( D\left( x|y \right) \right)\) [29, 30, 38].

It has been shown in an earlier work [39] that GAN can perform better by introducing an additional L1 loss term \(L\left( G \right) = \left| G\left( z|y \right) - y \right|\) in the loss function. The loss function of the resulting C-GAN can be written with an added weighting coefficient \(\lambda\) as follows:

\[ G^{*} = \arg \min_{G} \max_{D} V(D,G) + \lambda L\left( G \right) \qquad (2) \]

where \(V(D,G)\) denotes the cross-entropy loss described above.
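To make the roles of the adversarial and L1 terms concrete, the following is a minimal numerical sketch rather than the training code used in this work. It assumes that the discriminator returns probabilities in (0, 1), that the L1 term is taken as a mean absolute pixel difference, and that the weighting coefficient `lam` (a hypothetical name and default value, not given in the text) multiplies the L1 term.

```python
import numpy as np


def adversarial_value(d_real, d_fake, eps=1e-12):
    """V(D, G) = E[log D(x|y)] + E[log(1 - D(G(z|y)))], estimated by means."""
    d_real = np.clip(np.asarray(d_real, dtype=float), eps, 1.0 - eps)
    d_fake = np.clip(np.asarray(d_fake, dtype=float), eps, 1.0 - eps)
    return float(np.mean(np.log(d_real)) + np.mean(np.log(1.0 - d_fake)))


def l1_term(generated, target):
    """L(G) = |G(z|y) - y|, taken here as the mean absolute pixel difference."""
    diff = np.asarray(generated, dtype=float) - np.asarray(target, dtype=float)
    return float(np.mean(np.abs(diff)))


def generator_objective(d_fake, generated, target, lam=100.0, eps=1e-12):
    """Quantity the generator minimizes: log(1 - D(G)) plus the weighted L1 term.

    lam is a hypothetical weighting coefficient; its value is not given in the text.
    """
    d_fake = np.clip(np.asarray(d_fake, dtype=float), eps, 1.0 - eps)
    return float(np.mean(np.log(1.0 - d_fake))) + lam * l1_term(generated, target)
```

In this sketch the discriminator would be updated to increase `adversarial_value`, while the generator would be updated to decrease `generator_objective`; the L1 term penalizes pixel-wise departures of the generated image from the measured LII.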

In the present research, a U-net structure was adopted as the generator because its cross-layer connections preserve the high-frequency characteristics of images. As shown in Fig. 1, the collected gray-scale images, with a spatial resolution of 512 × 512, were converted into three-channel images (each channel being identical to the original gray-scale image) for model training, as this has been shown to result in higher accuracy in practice. The 3-channel 512 × 512 luminosity image is convolved in each encoder layer to extract higher-dimensional features. Subsequently, the decoder layers reconstruct an image by deconvolution from the features recovered from the last layer as well as from the cross-layer connections, until a 3-channel 512 × 512 image is recovered. We define the generated image as the predicted LII. Then, both the predicted LII and the target (measured) LII are sent into a 6-layer convolutional discriminator network to generate and supervise a 1 × 30 × 30 dimensional feature map, respectively. By training the generator and discriminator with pairs of measured flame luminosity (input of the generator) and LII (conditional target of the discriminator) images, the loss function (Eq. 2) was computed to quantify the difference between the generated and measured LII images, and the loss was subsequently back-propagated following the gradient descent algorithm to optimize the network [24].
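The 1 × 30 × 30 discriminator output can be checked with the standard convolution output-size formula. The sketch below is illustrative only: the text gives the number of layers (six) and the input and output sizes, while the kernel size (4), padding (1) and the split into four stride-2 layers followed by two stride-1 layers are assumptions borrowed from common patch-based discriminators, not details confirmed by the source.

```python
def conv_out(size, kernel=4, stride=2, padding=1):
    """Spatial output size of a convolution: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def discriminator_map_size(size=512, strides=(2, 2, 2, 2, 1, 1)):
    """Apply six assumed 4x4 convolutions and return the final map size."""
    for stride in strides:
        size = conv_out(size, stride=stride)
    return size


# 512 -> 256 -> 128 -> 64 -> 32 -> 31 -> 30
print(discriminator_map_size())
```

Under these assumed hyperparameters, a 512 × 512 input is reduced to a 30 × 30 map, so each element of the map judges a local patch of the image rather than the image as a whole.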

2.3 Training and testing procedures

The specifications of the training and testing data sets used here are shown in Table 1. The image pairs were first divided into two sets, namely the training set and the test set. For training and testing of the DL model across Reynolds numbers, the training set includes 2688 image pairs (Re = 15,000, x/d: 60.8–76.1), while each testing set (Re = 8000, 15,000, 18,000, 20,000, x/d: 60.8–76.1) contains 300 image pairs. For training and testing across different x/d, the training set contains 3500 image pairs at Re = 15,000 with x/d extending from 60.8 to 136.9, and each testing set (x/d: 121.7–136.9, 106.5–121.7, 91.3–106.5, 76.1–91.3, 60.8–76.1, Re = 15,000) contains 300 image pairs. The training and testing were undertaken on an NVIDIA V100 GPU and an Intel Xeon E5-2682 v4 CPU.

2.4 Index for quantitative evaluation

To quantitatively evaluate the accuracy of prediction at a pixel to pixel level, four indices were adopted, namely intersection over union (IoU), average relative error (ARE), structure similarity index (SSIM) and gradient magnitude similarity mean (GMSM).

The IoU method [40] was used to measure the difference between experimental and predicted LII images in spatial appearance. The binary images \(x_b\) and \(y_b\) were acquired by binarizing images x and y, respectively, using the mean image intensity as the binarization threshold. Thus, as shown in Fig. 2, a white region implies an intensity above the threshold, indicating the presence of LII signal, while a black region represents an intensity below the threshold and indicates no signal. By overlapping the two binary images, we obtained the gray region, which represents the signal difference between the measured and DL-predicted LII. The index of IoU is defined by the ratio of intersection over union of \(x_b\) and \(y_b\):

\[ \mathrm{IoU} = \frac{\left| x_b \cap y_b \right|}{\left| x_b \cup y_b \right|} \qquad (3) \]

where \(\left| \cdot \right|\) denotes the number of pixels in the region.
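The binarization and IoU steps described above can be sketched in a few lines. This is a minimal illustration assuming the images are 2D NumPy arrays; the function names are hypothetical, and returning 1.0 when neither image contains signal is a convention chosen here, not something specified in the text.

```python
import numpy as np


def binarize(img):
    """Threshold an image at its own mean intensity (True = signal present)."""
    img = np.asarray(img, dtype=float)
    return img > img.mean()


def iou(x, y):
    """Intersection over union of the binarized images x_b and y_b."""
    xb, yb = binarize(x), binarize(y)
    union = np.logical_or(xb, yb).sum()
    if union == 0:
        return 1.0  # assumed convention: two empty masks count as identical
    return float(np.logical_and(xb, yb).sum() / union)
```

For example, two 4 × 4 images whose signal regions are two-row bands offset by one row share one row out of three, giving an IoU of 1/3.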

The average relative error (ARE) is designed to evaluate the pixel-to-pixel bias of the region where signal is available in image x and y. It can be written as:

where \(p_{xi} ,p_{yi} \in (x \cup y)\) represent pixels in the signal-available region, and N represents the number of pixels in the region of \(x \cup y\).
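The expression for ARE does not appear above. One form that is consistent with the definitions of \(p_{xi}\), \(p_{yi}\) and N, and with the range of 0 to 2 stated later for this index, is the symmetric relative error \(\frac{1}{N}\sum_{i} \frac{2\left| p_{xi} - p_{yi} \right|}{p_{xi} + p_{yi}}\). The sketch below uses that form as an assumption, not as the authors' confirmed definition; it also assumes non-negative intensities and takes the signal-available region to be the union of the two mean-thresholded masks.

```python
import numpy as np


def average_relative_error(x, y):
    """Assumed symmetric relative error over the union of the two signal regions."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    # Signal-available region: a pixel is kept if either image exceeds its own mean
    region = (x > x.mean()) | (y > y.mean())
    if not region.any():
        return 0.0  # assumed convention when no signal is present in either image
    px, py = x[region], y[region]
    return float(np.mean(2.0 * np.abs(px - py) / (px + py)))
```

With this form, identical images give 0, and images whose signal regions do not overlap at all (one pixel zero wherever the other is non-zero) give the upper bound of 2.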

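Furthermore, the structural similarity index (SSIM) [41] is also introduced to quantify the regional structure accuracy of prediction. SSIM is described by the following equation:

\[ \mathrm{SSIM}(x,y) = \frac{\left( 2\mu_x \mu_y + C_1 \right)\left( 2\sigma_{xy} + C_2 \right)}{\left( \mu_x^2 + \mu_y^2 + C_1 \right)\left( \sigma_x^2 + \sigma_y^2 + C_2 \right)} \qquad (5) \]

where \(\mu_x\) and \(\mu_y\) are the means of image x and image y, \(\sigma_x^2\) and \(\sigma_y^2\) are their variances, \(\sigma_{xy}\) is their covariance, and \(C_1\) and \(C_2\) are small constants that stabilize the division [41]. Following a previous work [41], a 9 × 9 pixel (1.7 × 1.7 mm²) sub-window was adopted to calculate the local SSIM first, which was then averaged to get a global SSIM of the whole image.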
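The local-then-global SSIM calculation can be sketched as follows. This is a simplified illustration, not the implementation used in this work: it assumes a uniform (unweighted) 9 × 9 sliding window, images scaled to a known `data_range`, and the stabilizing constants \(C_1 = (0.01 L)^2\) and \(C_2 = (0.03 L)^2\) from the original SSIM paper [41], where L is the data range. It requires NumPy 1.20 or later for `sliding_window_view`.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def ssim(x, y, win=9, data_range=1.0, k1=0.01, k2=0.03):
    """Mean of local SSIM values over all win x win sliding windows."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2

    # Views of shape (H - win + 1, W - win + 1, win, win); no data is copied here
    wx = sliding_window_view(x, (win, win))
    wy = sliding_window_view(y, (win, win))

    mu_x = wx.mean(axis=(-2, -1))
    mu_y = wy.mean(axis=(-2, -1))
    var_x = wx.var(axis=(-2, -1))
    var_y = wy.var(axis=(-2, -1))
    cov_xy = (wx * wy).mean(axis=(-2, -1)) - mu_x * mu_y

    local = ((2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    )
    return float(local.mean())
```

The product `wx * wy` materializes a full array of windows, so for 512 × 512 images this sketch trades memory for brevity; a box filter over the image would give the same local statistics more economically.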

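Besides SSIM, the gradient magnitude similarity mean (GMSM) was also used to estimate the similarity of the spatial gradients of two images [42]. In this method, the gradient magnitudes of image x and y at position i can be denoted as:

\[ m_x(i) = \sqrt{x_h^2(i) + x_v^2(i)}, \qquad m_y(i) = \sqrt{y_h^2(i) + y_v^2(i)} \qquad (6) \]

where \(x_v\) and \(y_v\) represent the vertical gradient components of image x and y, while \(x_h\) and \(y_h\) represent the horizontal components. Then the gradient magnitude similarity (GMS) map is computed as:

\[ \mathrm{GMS}(i) = \frac{2 m_x(i) m_y(i) + c}{m_x^2(i) + m_y^2(i) + c} \qquad (7) \]

where c is a positive constant that ensures numerical stability [42]. Afterward, the GMSM can be calculated as:

\[ \mathrm{GMSM} = \frac{1}{N}\sum_{i = 1}^{N} \mathrm{GMS}(i) \qquad (8) \]

where N represents the total number of pixels in the image.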
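The GMSM calculation can be illustrated with a short sketch. Two choices here are assumptions made for brevity and are not taken from the source: the horizontal and vertical gradients are estimated with `np.gradient` (finite differences) instead of the filter kernels used in [42], and the stabilizing constant `c` is a hypothetical value suited to images scaled to the range 0 to 1.

```python
import numpy as np


def gradient_magnitude(img):
    """Gradient magnitude from vertical (axis 0) and horizontal (axis 1) differences."""
    grad_v, grad_h = np.gradient(np.asarray(img, dtype=float))
    return np.sqrt(grad_h ** 2 + grad_v ** 2)


def gmsm(x, y, c=1e-4):
    """Mean of the gradient magnitude similarity map between images x and y."""
    m_x = gradient_magnitude(x)
    m_y = gradient_magnitude(y)
    gms = (2.0 * m_x * m_y + c) / (m_x ** 2 + m_y ** 2 + c)
    return float(gms.mean())
```

Identical images give a GMSM of 1, while a flat image compared with a steadily ramping one gives a value close to 0, because the gradient magnitudes disagree at every pixel.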

With regard to the range of these four indices, ARE ranges from 0 to 2, with a lower ARE value representing a higher degree of similarity. Meanwhile, IoU, SSIM and GMSM all range from 0 to 1, where 0 denotes two images that are completely different, while 1 indicates that the two images are the same.

3 Results and discussion

Figure 2 shows representative images of measured luminosity and LII, together with the predicted LII, their binary counterparts and IoU for Re = 15,000 and x/d of 60.8–76.1. The images were selected such that their similarity indices are close to the arithmetic mean values. Typical highly intermittent and complex structures of soot sheets in a turbulent flame can be found in both measured and predicted images. It can also be seen from the IoU images that most of the effective signal region has been qualitatively well predicted, with IoU ranging from 0.67 to 0.84, as shown in Table 2. The values of the other three quantitative measures, i.e., ARE, SSIM and GMSM, are also shown in Table 2, which indicates that the structures and the signal intensities can be fairly well predicted in the snapshots. It is noteworthy that the index of ARE represents deviation between measurement and prediction, while the other indices indicate similarity. Therefore, an ARE of around 0.3 is consistent with values of around 0.7 for the other indices.

Figure 3 presents the results predicted for the flames with different Reynolds numbers (8000, 18,000 and 20,000) using the model trained with the data for Re = 15,000. All the results are for the same height with x/d spanning from 60.8 to 76.1. Compared with the experimental LII, the DL predicted results have similar structure, intensity level and spatial gradient distribution, even when the luminosity appears to be quite different from the LII images, e.g., at Re = 20,000. From the perspective of intersection over union, the model predicts most of the effective signal region with an average IoU being 0.72, 0.68 and 0.65 under Re = 8000, 18,000 and 20,000, respectively. However, it is also evident that the accuracy tends to decrease with an increase in Reynolds number. This can be attributed to the increased complexity in the flame structure with Re, so that the collected dataset is insufficient as a training set to cover all the transient states of the flames.

Fig. 3 Experimental and predicted LII images, their binary counterparts and the IoU of the binary images. The model was trained at Re = 15,000 and tested at different Reynolds numbers, i.e., a, b: Re = 8000; c, d: Re = 18,000; e, f: Re = 20,000. The indices of each case are shown in Table 5

Figure 4 presents time-averaged LII images (I), intermittency (II), and profiles of time-averaged LII signal intensity (III) and intermittency (IV) taken at HAB = 300 mm. These statistical results were calculated from 300 snapshots for (a) Re = 8000, (b) 15,000, (c) 18,000 and (d) 20,000. A smoothing window size of 40 × 40 pixels (7.5 × 7.5 mm²) was applied to these subfigures. It was found that at this HAB, the DL predictions agree with the experimental results to within 13.5% for the mean and 15.2% for the intermittency for Reynolds numbers ranging from 8000 to 20,000.

Fig. 4 The time-averaged LII and intermittency at a Re = 8000, b Re = 15,000, c Re = 18,000 and d Re = 20,000. Each case includes (I) time-averaged LII signals measured and predicted using DL; (II) the intermittencies of LII signals; (III) the profiles of time-averaged LII and (IV) those of intermittency taken at HAB = 300 mm

Figure 5 presents the probability density function (PDF) of the IoU, ARE, SSIM and GMSM indices calculated over 300 pairs for different Reynolds numbers and for x/d ranging from 60.8 to 76.1. The average values of the above-mentioned indices are shown in Table 3. The average values of the IoU, SSIM and GMSM indices range from 0.65 to 0.85, while ARE ranges from 0.33 to 0.38; this is again because ARE represents deviation, while the other indices measure similarity. However, the values of IoU, SSIM and GMSM decrease, while ARE increases, with an increase in Reynolds number, consistent with an increase in the variation and complexity of flames at higher Reynolds numbers. It is possible that this may be overcome by training the model over a wider range of Re, but this will need to be verified.

Figure 6 presents the probability density function of the pixel intensity in the predicted and measured LII images at different Reynolds numbers for x/d ranging from 60.8 to 76.1. The PDF curve in each subfigure was acquired from 300 gray-scale images, for both measured and predicted LII. It was found that the predictions agree well with the measurements, with maximum biases of 9.1, 12.3, 13.9 and 10.2% under the different Reynolds numbers (8000–20,000), respectively. The model accurately predicted the PDFs of LII signals in the flames with different turbulence strengths, which reveals the effect of exit Reynolds number on soot loading in turbulent flames [43].
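A pixel-intensity PDF comparison of this kind can be sketched as below. The bin count, the intensity range and the use of the largest absolute difference between the two PDFs as the deviation measure are all assumptions made for illustration; the text does not define how the quoted percentage bias is normalized, so this sketch does not attempt to reproduce those numbers.

```python
import numpy as np


def intensity_pdf(images, bins=64, value_range=(0.0, 1.0)):
    """Normalized histogram (PDF) of all pixel intensities in a stack of images."""
    values = np.asarray(images, dtype=float).ravel()
    pdf, edges = np.histogram(values, bins=bins, range=value_range, density=True)
    centers = 0.5 * (edges[:-1] + edges[1:])
    return centers, pdf


def max_pdf_deviation(measured, predicted, bins=64, value_range=(0.0, 1.0)):
    """Largest absolute difference between the measured and predicted PDFs."""
    _, pdf_measured = intensity_pdf(measured, bins, value_range)
    _, pdf_predicted = intensity_pdf(predicted, bins, value_range)
    return float(np.max(np.abs(pdf_measured - pdf_predicted)))
```

Here `measured` and `predicted` would be arrays of shape (number of images, height, width), for example 300 measured and 300 predicted LII images scaled to the same intensity range.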

Fig. 6 Probability density function of the predicted and measured LII signals for the cases of a Re = 8000; b Re = 15,000; c Re = 18,000; and d Re = 20,000 at HABs ranging from 280 to 350 mm (correspondingly, x/d from 60.8 to 76.1). The results were calculated over 300 images with a smoothing window of 40 × 40 pixels (7.5 × 7.5 mm²)

Figure 7 presents the performance of the DL model for instantaneous predictions at a range of flame heights for Re = 15,000. This is to test the potential of the DL in predicting the flame structures with an expanded data set. In this case, the training set consists of data with x/d ranging from 60.8 to 136.9 for Re = 15,000, and the trained model is used to predict the LII image at different axial distances. At lower HABs of flames, the precursor of soot, polycyclic aromatic hydrocarbon (PAH), starts to form from the pyrolyzed products. Then, with the particle nucleation, surface growth and particle coagulation, the particle sizes increase upon the collision of the growing soot particles and agglomerate into fractal clusters later, e.g., chain like structures. The process of formation, growth and oxidation that soot experiences is consistent with the feature of LII images along the axial axis. Due to the light soot accumulation around lower HAB, e.g., from 280 to 350 mm in Fig. 7, the pixel-level correlation between LII and luminosity images is lower, and their image features are relatively indistinct for the GAN model to capture and compare. As soot grows with HAB, soot volume fraction increases, which results in higher pixel intensity and more obvious feature regions on both LII and luminosity images, thus easier for the convolutional neural network to capture the correlation.

Fig. 7 Instantaneous realization of the measured luminosity and LII, together with the predicted LII, their binary counterparts and the IoU of the binary images. Image sets are predicted for a series of axial distances with deep learning algorithms trained for x/d ranging from 60.8 to 136.9 and for Re = 15,000. The indices for each case are shown in Table 6

The statistical measures of the indices are presented in Fig. 8 and Table 4 for various heights above the burner. The most probable values of IoU are typically 0.7, which is a useful level of accuracy. A low average ARE value indicates a low pixel-to-pixel error, while higher SSIM and GMSM at each flame height suggest a higher degree of similarity in the spatial distribution of flame structure and the spatial gradient of signal intensity. Furthermore, it is found that ARE tends to be lower, while SSIM and GMSM tend to be higher, at higher HAB, e.g., x/d from 91.3 to 136.9. The higher prediction accuracy at higher HAB is likely related to the structure of the two-dimensional soot sheets. According to our previous study [44], the width and length of soot sheets become less dispersed downstream in the flames because of the oxidation of soot along the flame propagation. The high prediction accuracy is also potentially due to the consistent optical properties of mature soot downstream in the flames, but this hypothesis is extremely challenging to validate experimentally. These factors, when recorded and quantified at pixel level, lead to concentrated pixel bunches and clear features. Because convolutional neural networks perform better at capturing and preserving highlighted features [24, 45,46,47], the LII and luminosity images can be correlated well by the GAN model, with relatively higher precision at higher HAB.

Figure 9 reports the probability density function of the pixel intensity in the predicted and measured LII images, in which sub-figures (a–e) are the results calculated from different axial distances with x/d ranging from 60.8 to 136.9 for Re = 15,000. Like the data presented in Fig. 6, the PDF curve in each sub figure was acquired from 300 Gray-scale images, for both measured and predicted LII. As shown in Fig. 9, the experimental LII and predicted results have very similar PDF profiles with the maximum calculated deviation being 13.2, 8.2, 10.8, 11.3, 9.5% for data from different HAB measurements. The prediction results in Fig. 9 consistently reveal the oxidation process of soot in the downstream region because of the entrainment of surrounding air.

Fig. 9 Probability density function of LII intensity at various HAB ranging from 280 to 630 mm (x/d from 60.8 to 136.9). a 560–630 mm (121.7–136.9); b 490–560 mm (106.5–121.7); c 420–490 mm (91.3–106.5); d 350–420 mm (76.1–91.3); e 280–350 mm (60.8–76.1). The results were calculated from 300 LII images with a smoothing window of 40 × 40 pixels (7.5 × 7.5 mm²)

Figure 10 shows that the prediction accuracy, e.g., SSIM, increases with the size of the training dataset, although the rate of improvement slows for larger datasets. Therefore, a general lesson from the present work is that a total of 2688 or 3500 image pairs is marginal for model training, and further increasing the size of the dataset would be effective in improving the accuracy of the trained model. However, the benefit may not be significant because of the natural frequency of flames.

With regard to the transferability of the model, as can be seen from the results above, the trained DL model is transferable from one Reynolds number to other Reynolds numbers, and from one flame height to other heights. The main reason is that the images used for model training already have very complex structures and high intermittency, which are similar to those at different heights or with different turbulence strengths. Therefore, the model is also expected to be transferable to flames of different fuels or different ambient pressures. The main challenge of this methodology may lie in the prediction of flames with significantly different structures or combustor configurations, for which new training data may have to be used.

4 Conclusions

A conditional generative adversarial neural network (C-GAN) was trained using simultaneous flame luminosity and LII images in a turbulent flame (Re = 15,000) to generate a prediction model that characterizes the relationship between the two kinds of signals. It was found that:

1. The model trained at Re = 15,000 can be used to predict the complex planar distributions of soot particles in the flame at the same Reynolds number and flame height with a fair accuracy level, with a typical degree of similarity between prediction and measurement being around 0.75.

2. The trained model is extendable to predict soot distributions at different heights in the same flame and those in the similar flames with different exit Reynolds numbers (Re = 8000, 18,000, and 20,000), also with the same accuracy level of approximately 0.75.

3. The time-averaged LII images predicted by the DL model agree with the experimental ones to within 13.5%, and an agreement of 15.2% was found for the intermittency, with Reynolds numbers ranging from 8000 to 20,000.

4. Probability density distribution of LII signals can also be well predicted using the model for flames of different heights and Reynolds numbers, with the maximum deviation between prediction and measurement being around 10%.

This work provides the impetus for a promising approach in which a deep neural network model is trained off-site using experimental luminosity and synchronously recorded LII images, and then applied to predict the LII signal directly from the luminosity on site, reducing the cost and limitations of the experimental setup in practical systems. The method also has the potential to be used for diagnosing non-sooting flames based on flame chemiluminescence images. Moreover, the methodology, namely using deep learning algorithms together with other references to predict or fit the target imaging, may also be suitable for overcoming the obstacles in extinction-based measurements of two-dimensional data in soot clouds [48] or other droplet/particle-laden flows [49].

References

K. Kohse-Höinghaus, R.S. Barlow, M. Aldén, J. Wolfrum, Proc. Combust. Inst 30(1), 89–123 (2005)

J.C. Oefelein, R.W. Schefer, R.S. Barlow, AIAA J. 44(3), 418–433 (2006)

M. Aldén, J. Bood, Z. Li, M. Richter, Proc. Combust. Inst 33(1), 69–97 (2011)

C.F. Kaminski, J. Hult, M. Aldén, Appl. Phys. B 68(4), 757–760 (1999)

A. Verdier, J. Marrero Santiago, A. Vandel, G. Godard, G. Cabot, B. Renou, Combust. Flame 193, 440–452 (2018)

Y. Gao, X. Yang, C. Fu, Y. Yang, Z. Li, H. Zhang, F. Qi, Appl. Opt. 58(10), C112–C120 (2019)

J. Köser, T. Li, N. Vorobiev, A. Dreizler, M. Schiemann, B. Böhm, Proc. Combust. Inst 37(3), 2893–2900 (2019)

S. Roy, P.S. Hsu, N. Jiang, M.N. Slipchenko, J.R. Gord, Opt. Lett. 40(21), 5125–5128 (2015)

S. Ren, K. He, R. Girshick, J. Sun, Faster R-CNN: towards real-time object detection with region proposal networks. (2015), p.

M. Köhler, I. Boxx, K.P. Geigle, W. Meier, Appl. Phys. B 103(2), 271 (2011)

V. Beyer, D.A. Greenhalgh, Appl. Phys. B 83(3), 455 (2006)

Z. Wang, P. Stamatoglou, B. Zhou, M. Aldén, X.-S. Bai, M. Richter, Fuel 234, 1528–1540 (2018)

H. Jiang, D. Sun, V. Jampani, M.-H. Yang, E. Learned-Miller, J. Kautz, Super SloMo: high quality estimation of multiple intermediate frames for video interpolation, in arXiv e-prints, (2017), p.

Z.S. Li, B. Li, Z.W. Sun, X.S. Bai, M. Aldén, Combust. Flame 157(6), 1087–1096 (2010)

B.J. Kirby, R.K. Hanson, Appl. Phys. B 69(5), 505–507 (1999)

D. Zeng, P. Chatterjee, Y. Wang, Proc. Combust. Inst 37(1), 825–832 (2019)

C.R. Shaddix, T.C. Williams, Proc. Combust. Inst 36(3), 4051–4059 (2017)

J. Yang, X. Dong, Q. Wu, M. Xu, Combust. Flame 188, 66–76 (2018)

G.J. Nathan, P.A.M. Kalt, Z.T. Alwahabi, B.B. Dally, P.R. Medwell, Q.N. Chan, Prog. Energy Combust. Sci. 38(1), 41–61 (2012)

Z.W. Sun, D.H. Gu, G.J. Nathan, Z.T. Alwahabi, B.B. Dally, Proc. Combust. Inst 35(3), 3673–3680 (2015)

B. Menkiel, A. Donkerbroek, R. Uitz, R. Cracknell, L. Ganippa, Combust. Flame 159(9), 2985–2998 (2012)

C. Schulz, B.F. Kock, M. Hofmann, H. Michelsen, S. Will, B. Bougie, R. Suntz, G. Smallwood, Appl. Phys. B 83(3), 333 (2006)

S. De Iuliis, F. Migliorini, F. Cignoli, G. Zizak, Appl. Phys. B 83(3), 397 (2006)

Y. LeCun, Y. Bengio, G. Hinton, Nature 521(7553), 436–444 (2015)

E.Z. Omar, Appl. Phys. B 126(4), 54 (2020)

L. Zhang, R. Xiong, J. Chen, D. Zhang, Appl. Phys. B 126(1), 18 (2019)

G. Barbastathis, A. Ozcan, G. Situ, Optica 6(8), (2019)

C. Dong, C.C. Loy, K. He, X. Tang, in Computer Vision—ECCV 2014, (2014)

I.J. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, Y. Bengio, Generative adversarial networks, in arXiv e-prints, (2014), p.

Y. Shi, Q. Li, X.X. Zhu, IEEE Geosci. Remote Sens. Lett. 16(4), 603–607 (2019)

N. Merkle, S. Auer, R. Muller, P. Reinartz, IEEE J Sel Top Appl Earth Obs Remote Sens 11(6), 1811–1820 (2018)

A. Osokin, A. Chessel, R. E. C. Salas, F. Vaggi, GANs for biological image synthesis, in 2017 IEEE International Conference on Computer Vision (ICCV), pp. 2252–2261 (2017)

D. Gu, Z. Sun, B.B. Dally, P.R. Medwell, Z.T. Alwahabi, G.J. Nathan, Combust. Flame 179, 33–50 (2017)

Z. Sun, B. Dally, Z. Alwahabi, G. Nathan, Proc. Combust. Inst (2020)

C.R. Shaddix, J. Zhang, in 8th US National Combustion Meeting 2013, May 19, 2013—May 22, 2013, (2013)

A. Rowhani, Z.W. Sun, P.R. Medwell, Z.T. Alwahabi, G.J. Nathan, B.B. Dally, Combust. Sci. Technol. 1–19 (2019)

Z.W. Sun, Z.T. Alwahabi, D.H. Gu, S.M. Mahmoud, G.J. Nathan, B.B. Dally, Appl. Phys. B 119(4), 731–743 (2015)

M. Mirza, S. Osindero, Conditional Generative Adversarial Nets, in arXiv e-prints, (2014), p.

P. Isola, J. Zhu, T. Zhou, A.A. Efros, in 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), (2017)

S. Nowozin, in 2014 IEEE Conference on Computer Vision and Pattern Recognition, (2014)

Z. Wang, A.C. Bovik, H.R. Sheikh, E.P. Simoncelli, IEEE Trans Image Process 13(4), 600–612 (2004)

W. Xue, L. Zhang, X. Mou, A.C. Bovik, IEEE Trans Image Process 23(2), 684–695 (2014)

S.M. Mahmoud, G.J. Nathan, Z.T. Alwahabi, Z.W. Sun, P.R. Medwell, B.B. Dally, Combust. Flame 187, 42–51 (2018)

N.H. Qamar, G.J. Nathan, Z.T. Alwahabi, Q.N. Chan, Combust. Flame 158(12), 2458–2464 (2011)

N. Samuel, T. Diskin, A. Wiesel, IEEE Trans. Signal Process. 67(10), 2554–2564 (2019)

K. Fukushima, S. Miyake, Pattern Recogn. 15(6), 455–469 (1982)

Y. Lecun, L. Bottou, Y. Bengio, P. Haffner, Proc. IEEE 86(11), 2278–2324 (1998)

J. Manin, L.M. Pickett, S.A. Skeen, Two-color diffused back-illumination imaging as a diagnostic for time-resolved soot measurements in reacting sprays, (SAE International, 2013)

M. Koegl, B. Hofbeck, S. Will, L. Zigan, Proc. Combust. Inst 37(4), 4965–4972 (2019)

Acknowledgements

This research was financially supported by the National Natural Science Foundation of China under Grant No. 52006137, Shanghai Sailing Program, under Grant No. 19YF1423400, as well as China Postdoctoral Science Funding under Grant No. 2016M600313. The experimental research was supported by The Australian Research Council (ARC).

Cite this article

Zhang, W., Dong, X., Liu, C. et al. Generating planar distributions of soot particles from luminosity images in turbulent flames using deep learning. Appl. Phys. B 127, 18 (2021). https://doi.org/10.1007/s00340-020-07571-9
