Abstract

Invisibility devices are steadily approaching practical application requirements, such as construction from readily available materials and the ability to hide dynamic objects. Here, using phase retrieval and computer-generated holography, we design and simulate an invisibility system that produces a phase-conjugation signal which changes with a dynamic object in order to hide it. The system is highly selective: it hides only the target object and has no effect on the others. This capability may give our invisibility system great potential in specialized fields, such as biological and military applications involving living and dynamic target recognition, selective camouflaging, and others.

1 Introduction

Owing to the development of transformation optics [1, 2], considerable progress has been made in designing and demonstrating invisibility devices. So far, a variety of devices, such as shell-type cloaks [3,4,5], complementary media-based distant cloaking devices [6], carpet-like cloaks [7,8,9,10,11,12], magnetic cloaks [13], and unidirectional invisibility devices [14], have been proposed and partly realized. Over the course of this development, the constitutive materials have changed from anisotropic or inhomogeneous artificial materials [4, 8] to dielectric [15, 16] or even natural materials [17, 18]. Meanwhile, the working waveband has extended from microwave [4, 19] to infrared [15] and even visible frequencies [17, 18]. Devices are thus gradually approaching practical application requirements. Recently, cloaks based on ray optics [20] have made it possible to hide macroscopic dynamic objects, even living creatures. However, shell-type cloaks (including those based on ray optics) and carpet-like cloaks must enclose or cover the objects to be hidden, and they hide the entire covered region without selectivity.

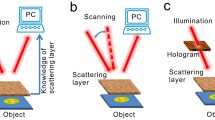

Physically, some invisibility devices, such as complementary media-based distant cloaks, can be seen as time-reversal devices, which produce phase-conjugation signals to hide objects [21]. There are many methods to obtain phase-conjugation signals, such as real-time holography [22], adaptive optics [23], the time-reversed ultrasonically encoded technique [24], and wavefront shaping [25]. These techniques are widely used for imaging or focusing through a scattering medium [22,23,24,25], focusing beyond the diffraction limit [26], etc. Here, based on the time-reversal principle, we design a long-distance invisibility system and simulate its working process. In this simulated system, we use phase retrieval and computer-generated holography to produce phase-conjugation signals that change with the object and compensate for the wavefront distortion and light absorption it causes. The system can therefore hide dynamic objects with complex phase and amplitude modulation. Compared with shell-type cloaks and carpet-like cloaks, the system is highly selective: it hides only the target object and has no effect on the others. Even when the target object covers other objects or background patterns, our system can reconstruct the image of the covered region so that the background patterns are unaffected by the hidden object. Moreover, our system can work at visible frequencies to hide a time-varying object at the centimeter scale. This capability may make our invisibility system more applicable in some specialized fields, such as biological and military applications involving living and dynamic target recognition, selective camouflaging, and others.

2 Theoretical analysis, optical path setup and phase retrieval algorithm

2.1 Theoretical analysis

According to Fourier optics [27], the wavefront distortions caused by an object can be canceled when the object is illuminated by a light beam that is phase-conjugated with the light the object transmits under plane-wave illumination [21]. Phase-conjugation signals can be produced through the time-reversal principle [28]. In earlier work, we used traditional holography to produce time-reversed light that compensates for the scattered light field of an object, making it invisible and, moreover, creating illusions at visible frequencies [29,30,31]. However, post-processing a traditional hologram takes too long, so the devices in those works could hide only static objects. Recently, computer-generated holography has replaced traditional holography in many fields, such as imaging and optical security [32]. In our previous work on hiding dynamic objects [33], we combined phase retrieval and computer-generated holography to produce time-reversed light that changes with the target object, concealing dynamic phase-modulated objects. In this paper, we extend that system to hide dynamic objects with complex phase and amplitude modulation. We use a spatial light modulator (SLM) to display a computer-generated hologram that contains both the phase-conjugated signal of the object and the reconstructed signal of the background patterns, so that the patterns covered by the target object are completed when it is hidden. In addition, by improving the phase retrieval algorithm, we reduce the system noise so that our system works for intricately detailed objects.
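The compensation idea can be illustrated with a short worked relation. This is a sketch under a thin-plate assumption, in which the object simply multiplies the incident field by a complex transmission; the symbols \(t\), \(a\), \(\varphi\), \(u\) and \(c\) are introduced here for illustration only. Writing the transmission as \(t(x,y) = a(x,y)\,e^{i\varphi(x,y)}\) with \(0 < a \le 1\), a unit-amplitude plane wave leaves the object as \(t(x,y)\). If the object is instead illuminated by

\[ u(x,y) = \frac{c}{a(x,y)}\,e^{-i\varphi(x,y)}, \qquad 0 < c \le \min_{x,y} a(x,y), \]

the field behind it becomes

\[ u(x,y)\,t(x,y) = c , \]

which is a uniform plane wave. The factor \(e^{-i\varphi}\) is the phase-conjugate term that cancels the wavefront distortion, the factor \(1/a\) compensates the absorption, and the bound on \(c\) keeps \(|u| \le 1\), as would be required of a passive modulator.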

2.2 Optical path setup

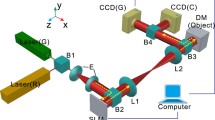

The optical system used in our simulation is sketched in Fig. 1. The object light beam W, which propagates through an SLM and an object in plane O, is divided into two parts: one beam (T1) propagates to the observation plane to show the working effect of our system; the other beam (T2) is detected and analyzed to give feedback information to control the SLM. The SLM plane and object plane O are the front and back focal planes of the 4f optical system, which consists of lenses L1 and L2; object plane O and imaging plane O’ are the focal planes of the 4f optical system consisting of lenses L3 and L4. Thus, O and O’ are one-to-one image planes of the SLM plane and plane O, respectively. D1, D2 and D3 are detecting planes that are 1.0, 1.2 and 1.4 m away from imaging plane O’, respectively.

Fig. 1 Sketch of the optical path in the simulation. A light beam with wavelength λ = 632.8 nm from a He–Ne laser is modulated by an SLM. Lenses L1 and L2 form a 4f optical system with focal length f = 10 cm. The SLM and the object are placed on the front and rear focal planes, respectively, so that the SLM plane is imaged one-to-one onto the object plane O. The diaphragm placed between L1 and L2 is used to block stray light. E is a beam splitter that divides W into two parts: beam T1 (observed to show the working effect of our system) and beam T2 (detected to retrieve the entire light field of the object). O’ is the one-to-one image plane of O through the 4f optical system formed by lenses L3 and L4 with focal length f = 10 cm. The distances between O’ and the three detecting planes D1, D2 and D3 are 1, 1.2 and 1.4 m, respectively. We establish a feedback system between the detecting planes and the SLM to modulate the wavefront of object light beam W. The details of the object with bubbles and with a random background are shown in the middle and on the bottom right, respectively
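The one-to-one imaging performed by each 4f system can be checked numerically with a minimal sketch. It assumes ideal, unapertured lenses of equal focal length, each modeled as a centered unitary discrete Fourier transform; the diaphragm is ignored, and the function names `lens_2f` and `relay_4f` and the grid size are hypothetical choices made for illustration. In this idealized model the relay reproduces the input field with unit magnification, inverted about the optical axis.

```python
import numpy as np

def lens_2f(field):
    """One lens, front focal plane to back focal plane, as a centered unitary 2D DFT."""
    return np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(field), norm="ortho"))

def relay_4f(field):
    """Two successive 2f stages: an ideal 4f relay with equal focal lengths."""
    return lens_2f(lens_2f(field))

rng = np.random.default_rng(0)
n = 65  # odd grid size so that the optical axis falls exactly on one pixel
slm_field = np.exp(1j * 2 * np.pi * rng.random((n, n)))  # arbitrary phase pattern

object_plane_field = relay_4f(slm_field)

# Two Fourier transforms in sequence return the input flipped about the axis.
inverted_input = slm_field[::-1, ::-1]
max_error = np.abs(object_plane_field - inverted_input).max()
print(f"max deviation from the inverted input: {max_error:.2e}")
```

The inversion matters in practice only as a bookkeeping detail: a pattern computed for the object plane would have to be flipped before being displayed on the SLM in this model.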

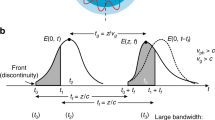

To obtain complete information about the transmitted light field of an object, we first input a uniform phase signal into the SLM. In this case, the detectors on planes D1–D3 record the intensity distributions of light beam T2, which carries information about the dynamic object only. The transmitted light field of an object cannot be recovered directly from the image detected in any single one of the three planes. However, by jointly analyzing the intensity distributions in the three detecting planes, we can retrieve the full light field on the back surface of the object (the surface facing beam splitter E) using an improved Gerchberg–Saxton (GS) iterative algorithm [34, 35]. The calculation process is detailed in the next section. To obtain a light beam that is time-reversed with respect to the transmitted light of the object, we input into the SLM a phase distribution computed from the retrieved transmitted light field. When illuminated by the light source, the SLM then produces phase-conjugated light that changes with the dynamic object. The absolute value of the amplitude of the light field on an observation plane 10 cm behind the object is simulated to check whether the selected object becomes invisible.
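The detection step described above can be sketched as follows. The propagation model is an assumption: the scalar angular-spectrum method is used here, and the text does not state which propagator the simulation relies on. The 128 × 128 grid, the disk-shaped test object and the function name `angular_spectrum` are likewise hypothetical; only the wavelength, the 2 cm object size and the three distances are taken from the text.

```python
import numpy as np

def angular_spectrum(field, wavelength, dx, z):
    """Propagate a sampled complex field over distance z (negative z back-propagates)."""
    n_y, n_x = field.shape
    fxx, fyy = np.meshgrid(np.fft.fftfreq(n_x, d=dx), np.fft.fftfreq(n_y, d=dx))
    arg = 1.0 / wavelength**2 - fxx**2 - fyy**2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    transfer = np.where(arg > 0, np.exp(1j * kz * z), 0.0)  # evanescent waves dropped
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

wavelength = 632.8e-9          # He-Ne wavelength from the text, in meters
n, side = 128, 0.02            # hypothetical sampling of the 2 cm x 2 cm object
dx = side / n
y, x = (np.indices((n, n)) - n / 2) * dx

# Hypothetical test object: an absorbing, phase-shifting disk on a clear background.
disk = (x**2 + y**2) < 0.004**2
object_field = np.where(disk, 0.6 * np.exp(1j * 1.0), 1.0 + 0j)

# Distances of D1, D2, D3 from the image plane O' (from the text), in meters.
distances = (1.0, 1.2, 1.4)

# The detectors record intensity only, so only the amplitude is kept at each plane.
amplitudes = [np.abs(angular_spectrum(object_field, wavelength, dx, z)) for z in distances]
```

Because the FFT-based propagator is periodic, light that diffracts past the edge of the window wraps around; a more careful simulation would zero-pad the field before propagating.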

2.3 Phase retrieval algorithm

We denote the amplitude distributions on planes D1–D3 by \({A_1}({x_1},{y_1})\), \({A_2}({x_2},{y_2})\), \({A_3}({x_3},{y_3})\) and the phase distributions by \({\varphi _1}({x_1},{y_1})\), \({\varphi _2}({x_2},{y_2})\), \({\varphi _3}({x_3},{y_3})\), respectively. The phase on the back surface of the object is determined using the following procedure:

Step A. Randomly choose an initial estimate of \({\varphi _1}({x_1},{y_1})\) and combine it with the detected amplitude \({A_1}({x_1},{y_1})\) to construct the complex light field on plane D1.

Step B. Calculate the amplitude and phase distributions on plane D2 from the complex light field on plane D1. Replace the calculated amplitude with the detected amplitude \({A_2}({x_2},{y_2})\) to construct the new complex light field on plane D2.

Step C. Calculate the amplitude and phase distributions on plane D3 from the complex light field on plane D2. Replace the calculated amplitude with the detected amplitude \({A_3}({x_3},{y_3})\) to construct the new complex light field on plane D3.

Step D. Calculate the amplitude and phase distributions on plane D1 from the complex light field on plane D3. Replace the calculated amplitude with the detected amplitude \({A_1}({x_1},{y_1})\) to construct the new complex light field on plane D1.

Step E. Repeat steps B, C, and D 100 times.

Step F. Use the calculated complex light field on plane D1 to deduce the amplitude and phase distributions on the back surface of the object.
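Steps A to F can be sketched in a few lines. This is a plain multi-plane amplitude-substitution loop that follows the listed steps literally; it is not the improved GS algorithm of [34, 35], whose modifications are not detailed here. The angular-spectrum propagator, the grid, the test object and all function names (`propagate`, `impose_amplitude`, `retrieve_field`) are assumptions made for illustration.

```python
import numpy as np

def propagate(field, wavelength, dx, z):
    """Angular-spectrum free-space propagation (assumed model; negative z goes backward)."""
    n_y, n_x = field.shape
    fxx, fyy = np.meshgrid(np.fft.fftfreq(n_x, d=dx), np.fft.fftfreq(n_y, d=dx))
    arg = 1.0 / wavelength**2 - fxx**2 - fyy**2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    transfer = np.where(arg > 0, np.exp(1j * kz * z), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

def impose_amplitude(field, amplitude):
    """Keep the calculated phase and replace the calculated amplitude by the detected one."""
    return amplitude * np.exp(1j * np.angle(field))

def retrieve_field(amps, zs, wavelength, dx, iterations=100, initial_phase=None, seed=0):
    """Estimate the complex field at the image plane O' from amplitudes on D1, D2, D3."""
    a1, a2, a3 = amps
    z1, z2, z3 = zs
    if initial_phase is None:
        initial_phase = 2 * np.pi * np.random.default_rng(seed).random(a1.shape)
    field = a1 * np.exp(1j * initial_phase)                                       # Step A
    for _ in range(iterations):                                                   # Step E
        field = impose_amplitude(propagate(field, wavelength, dx, z2 - z1), a2)   # Step B
        field = impose_amplitude(propagate(field, wavelength, dx, z3 - z2), a3)   # Step C
        field = impose_amplitude(propagate(field, wavelength, dx, z1 - z3), a1)   # Step D
    return propagate(field, wavelength, dx, -z1)                                  # Step F

# Hypothetical test case: an absorbing, phase-shifting disk sampled on a 128 x 128 grid.
wavelength, n, side = 632.8e-9, 128, 0.02
dx = side / n
y, x = (np.indices((n, n)) - n / 2) * dx
object_field = np.where(x**2 + y**2 < 0.004**2, 0.6 * np.exp(1j * 1.0), 1.0 + 0j)
distances = (1.0, 1.2, 1.4)
amps = [np.abs(propagate(object_field, wavelength, dx, z)) for z in distances]

retrieved = retrieve_field(amps, distances, wavelength, dx, iterations=100)

# Consistency check: relative amplitude mismatch of the retrieved field on plane D2.
predicted_a2 = np.abs(propagate(retrieved, wavelength, dx, distances[1]))
residual = np.linalg.norm(predicted_a2 - amps[1]) / np.linalg.norm(amps[1])
print(f"relative amplitude residual on D2: {residual:.3e}")
```

A retrieved field can differ from the true one by a constant phase offset, which no intensity measurement can detect; a comparison against a known object should therefore remove the mean phase difference first.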

3 Numerical simulation results

3.1 Simulation results of hiding a dynamic object from other objects

In our simulation, the dynamic object on plane O is a variable plate with complex phase and amplitude modulation that displays an animation of a running deer [36] with bubbles around it. We assume that the size of the object is 2 cm × 2 cm and that the playback speed of the animation is 3 frames per second. To explain the working process of our system in detail, we take the first frame of the animation as an example. The absolute value of the amplitude distribution of the object is shown in Fig. 2a, and the phase distribution in Fig. 2b. For a quantitative description, each panel also shows the three-dimensional (3D) profile of the planar graph (right-hand panel) and its line graph along the y-axis at x = 1 cm (inset). The deer can be clearly distinguished from the background in Fig. 2a. In addition, the phase in the deer region and at the edges of the bubbles stands out from the background in Fig. 2b. Note that the original object information is unknown throughout the entire process of hiding the object; we provide Fig. 2a, b only for comparison with the retrieved object information. Figure 2c presents the simulated absolute value of the amplitude of the light field on the observation plane when the object is directly illuminated by a laser beam at λ = 632.8 nm. The boundaries of the bubbles in the image on the left are due to diffraction. The right-hand panel and inset are the 3D intensity profile of the image and its line graph along the y-axis at x = 1 cm, respectively. The peaks in the line graph correspond to the boundaries of the deer and the bubbles, meaning that both the deer and the bubbles are visible when the object is illuminated directly by plane light. A movie illustrating the dynamic image on the observation plane under direct plane-light illumination is provided (see Visualization 1). Figure 2d shows the absolute value of the amplitude distributions of the light field detected in planes D1–D3. Using only Fig. 2d, we can retrieve all light field information on the back surface of the object via the improved GS iterative algorithm.

Fig. 2 Sketches of the first frame of the object used in the simulation. a Planar image (left-hand panel) of the absolute value of the amplitude distribution of the object and its 3D amplitude profile (right-hand panel). b Planar image (left-hand panel) of the phase distribution of the object and its 3D phase profile (right-hand panel). c Planar image (left-hand panel) of the absolute value of the amplitude distribution of the light field on the observation plane and its corresponding 3D intensity profile (right-hand panel) when the object is illuminated by plane light directly. d Absolute value of the amplitude distributions of the light field detected on planes D1, D2, and D3, respectively, when the object is illuminated by plane light directly. Insets in (a), (b), and (c) are the line graphs of the corresponding images along the y axis at x = 1 cm

Figure 3a, b show the calculated absolute value of the amplitude distribution and the phase distribution of the light field on the back surface of the object, respectively, after the iterative optimization. The difference between Figs. 3a and 2a is shown in Fig. 3c, in which the values fluctuate around 0 by less than \(10^{-3}\). Comparing Fig. 3b with Fig. 2b, we find that the difference between the actual and retrieved phase distributions is nearly the same at every pixel (Fig. 3d). This difference does not affect the results of our simulation because a uniform phase offset does not change the propagating wavefront of the light. Thus, both the amplitude distribution and the phase fluctuation of the transmitted light field of an unknown object can be retrieved accurately using only the absolute value of the amplitude distributions of the light field on planes D1–D3. From the retrieved object light field, we can determine the signal to be input into the SLM. To hide the selected deer without changing other objects, the SLM-modulated light contains both the information to hide the deer and the information to reconstruct the image of the bubbles in the deer region. Therefore, the light illuminating the object should carry both intensity and phase distributions. However, most commercial SLMs work in only one mode, i.e., phase mode or intensity mode. We therefore use a computer-generated holography encoding technique proposed by Chu [37, 38] to generate a kinoform to be displayed on the SLM (Fig. 3e). We take the phase-only SLM from BNS (USA) as an example. For this kind of SLM, the input signal is a gray image in which different gray levels correspond to different phase retardations. Thus, the planar graph in Fig. 3e is the actual image to be loaded onto the SLM directly.

After propagating through the spatial filter consisting of the 4f system and the diaphragm, the light modulated by the SLM contains both intensity variations and phase fluctuations to compensate for the absorbing object. The absolute value of the amplitude on the back surface of the object is the same everywhere (Fig. 3f), meaning that the light intensity difference is canceled by our system. The phase distribution on the back surface of the object (Fig. 3g) and the amplitude distribution on the observation plane (Fig. 3h) contain only the patterns of the bubbles without any information about the running deer, even in the region previously occupied by the deer. A movie illustrating the observed image when our invisibility system works on the dynamic object, changing at a speed of 3 frames per second, is provided (see Visualization 2). Comparing Visualization 1 with Visualization 2, we see that although the object has complex phase and amplitude modulation, only the floating bubbles remain when our system works, with no trace of the running deer, meaning that our system can hide dynamic objects selectively. If we want to make the deer visible again, we input 0 to every pixel of the SLM. The SLM then becomes a transparent medium layer without any phase retardation, and the image in Fig. 2c is again obtained on the observation plane.
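The selective compensation described above can be illustrated with a minimal sketch. It assumes a thin-plate model in which the object multiplies the incident field, and it assumes that the pattern to be restored in the target region (here `t_background`) is already available; how that pattern is reconstructed in the simulation, and the kinoform encoding of [37, 38], are not reproduced here. The masks, transmission values and the function name `compensation_field` are hypothetical.

```python
import numpy as np

n = 128
y, x = np.indices((n, n))

# Hypothetical geometry: a rectangular "target" and one "bubble" that overlaps it.
target_mask = (np.abs(x - 64) < 20) & (np.abs(y - 64) < 12)
bubble = ((x - 80) ** 2 + (y - 60) ** 2) < 15 ** 2

t_background = np.where(bubble, np.exp(1j * 0.8), 1.0 + 0j)          # should stay visible
t_target = np.where(target_mask, 0.5 * np.exp(1j * 1.5), 1.0 + 0j)   # absorbs and shifts phase
retrieved = t_background * t_target   # field behind the plate under plane-wave illumination

def compensation_field(retrieved, desired, mask, eps=1e-6):
    """Complex illumination that turns `retrieved` into `desired` inside `mask` only."""
    ratio = np.ones_like(retrieved)
    safe = mask & (np.abs(retrieved) > eps)       # avoid dividing by near-zero transmission
    ratio[safe] = desired[safe] / retrieved[safe]
    scale = 1.0 / np.abs(ratio).max()             # a passive modulator cannot amplify
    return scale * ratio, scale

illumination, scale = compensation_field(retrieved, t_background, target_mask)
behind_object = illumination * retrieved          # field on the back surface of the plate

# Outside the mask the illumination is uniform; inside it is brighter and phase-conjugated.
print("illumination amplitude range:", np.abs(illumination).min(), np.abs(illumination).max())
```

In this model the field behind the plate equals the background pattern everywhere, dimmed uniformly by `scale`, which is the price of compensating absorption with a passive modulator.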

Fig. 3 Simulation results of hiding a dynamic object at λ = 632.8 nm. a Retrieved absolute value of the amplitude distribution of the object. b Retrieved phase distribution of the object. c Difference between the retrieved and original absolute value of the amplitude distributions of the object. d Difference between the retrieved and original phase distributions of the object. e Phase distribution that is input into the SLM. f Absolute value of the amplitude distribution of the light field on the back surface of the object when our system works. g Phase distribution of the light field on the back surface of the object when our system works. h Amplitude distribution on the observation plane when our system works. i–k Amplitude distribution on the observation plane when adding noise and vibrations to the system

Fig. 4 Simulation results of hiding a running deer mixed with a randomly distributed background at λ = 532 nm. a Phase distribution of the object to be hidden. b Absolute value of the amplitude distribution on the observation plane when the object is illuminated by plane light directly. c Phase distribution of the light field on the back surface of the object when our system works. d Absolute value of the amplitude distribution on the observation plane when our system works. e Partial enlargements of the phase distribution in the white square frame of Fig. 4a (left) and Fig. 4c (right), respectively. f Partial enlargements of the image in the white square frame of Fig. 4b (left) and Fig. 4d (right), respectively

To approximate practical conditions more closely, we introduce noise and vibrations into the simulation. When we add random noise with a signal-to-noise ratio of 10:1 to the images detected on D1–D3 (Fig. 3i), displace D1–D3 by 1 mm along the z axis (Fig. 3j), or displace them by 0.08 mm along the x axis (Fig. 3k), the amplitude distribution on the observation plane still contains only the image of the bubbles. Thus, apart from some background noise on the observation plane, our system works well even with slight noise and vibrations. The line graphs in these three figures show that the noise in Fig. 3k is more pronounced than in the other two, meaning that our system is most sensitive to a position deviation of D1–D3 along the x axis.
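The perturbations described above could be applied to simulated detector images along the following lines. This is a sketch under stated assumptions: the signal-to-noise ratio is taken as the ratio of mean signal power to noise power with zero-mean Gaussian noise, which the text does not specify, and the lateral deviation is rounded to whole pixels on a hypothetical 256-pixel sampling of the 2 cm window. The function names are illustrative.

```python
import numpy as np

def add_noise(image, snr, rng):
    """Add zero-mean Gaussian noise so that mean signal power / noise power equals snr."""
    sigma = np.sqrt(np.mean(image ** 2) / snr)
    return image + rng.normal(0.0, sigma, image.shape)

def shift_pixels(image, shift_x):
    """Lateral detector misalignment along x by whole pixels (periodic wrap-around)."""
    return np.roll(image, shift_x, axis=1)

rng = np.random.default_rng(0)
clean = 1.0 + 0.2 * rng.random((256, 256))     # stand-in for a detected amplitude image

noisy = add_noise(clean, snr=10.0, rng=rng)
measured_snr = np.mean(clean ** 2) / np.mean((noisy - clean) ** 2)

pixel_pitch_mm = 20.0 / 256                    # assumed sampling of the 2 cm window
shift = round(0.08 / pixel_pitch_mm)           # 0.08 mm deviation expressed in pixels
shifted = shift_pixels(clean, shift)

print(f"measured SNR: {measured_snr:.2f}, lateral shift: {shift} pixel(s)")
```

An axial deviation of 1 mm would not need a separate helper: it amounts to retrieving the field with nominal distances while the amplitudes were generated at distances offset by 1 mm.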

3.2 Simulation results of hiding a dynamic object from randomly distributed background

Figure 4 shows that our system also works when the illumination wavelength is changed and the target object is mixed with a randomly distributed background. We use a phase-modulated object and take the first frame of the dynamic object as an example. When the object is illuminated by plane light at wavelength λ = 532 nm, the phase distribution of the light field on its back surface is as shown in Fig. 4a, in which a deer can be seen in front of a randomly distributed background. Without our system, the absolute value of the amplitude of the light on the observation plane contains the outline of the deer and the speckle image of the random background (Fig. 4b), meaning that the deer is visible. A movie illustrates the dynamic image on the observation plane when the object is illuminated directly by plane light (see Visualization 3). When our system works, the phase distribution on the back surface of the object contains only the signal of the random background (Fig. 4c). Moreover, only the speckle image of the random background, without any information about the deer, is seen on the observation plane (Fig. 4d), meaning that the deer is concealed. A movie illustrates the dynamic image on the observation plane when our system works (see Visualization 4). The running deer in Visualization 3 disappears in Visualization 4, while the dynamic random background remains. To illustrate that our system hides the deer without affecting the background, we show partial enlargements of the areas in the white square frames of Fig. 4a (left-hand panel in Fig. 4e), Fig. 4c (right-hand panel in Fig. 4e), Fig. 4b (left-hand panel in Fig. 4f) and Fig. 4d (right-hand panel in Fig. 4f), with the contrast adjusted to make the details of the background clear. Comparing the panels in Fig. 4e, f, we observe that the phase distribution on the back surface of the object and the absolute value of the amplitude distribution on the observation plane remain unchanged in the background region regardless of whether our system works, while the outline of the deer is concealed by our system and the corresponding region is reconstructed to match the background. Thus, our system conceals only the selected deer and has no effect on the randomly distributed background signals.

4 Discussions

When our system works, the time-reversed light used to compensate for the scattered field of the object changes according to the absolute value of the amplitude distribution of the light field on planes D1–D3. Thus, unlike traditional invisibility devices and time-reversal systems, which are fixed in structure, our system can adapt when external conditions change, because we establish a feedback system between the detectors on planes D1–D3 and the SLM. For every frame of the animation, we need 20 s to obtain all of the object’s information and the signal for input into the SLM, a figure limited by the computing power of our computer. This means that there is a delay of tens of seconds between seeing and hiding the object. A more advanced computing device could reduce the time between detecting the signals in D1–D3 and modulating the SLM. If the time spent in the feedback process is less than the time the detector on the observation plane needs to take one frame, the system can be extended to hide dynamic objects in real time. In the simulation, considering the computing power and convergence speed, we use three detecting planes D1–D3 in the phase retrieval algorithm and set the number of iterations to 100. If more planes are measured to provide amplitude distributions, or if the number of iterations is increased, the difference between the calculated and original phase distributions of the object will decrease. In our system, the object must be seen and chosen before being hidden. In some cases, such as biological imaging, observers can view the image of the sample and pick out the tissues that interfere with the observation of the target object before hiding those tissues. Furthermore, by combining our system with image recognition technology, it can automatically pick out a target object that has been designated for hiding beforehand, without human observers. This combination makes our system useful in areas such as military applications.
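As a rough worked estimate of the speed-up that real-time operation would require, assume for illustration only that the frame time of the observing detector equals the period of the 3 frames-per-second animation; the symbols \(t_{\mathrm{fb}}\) (feedback time) and \(t_{\mathrm{frame}}\) (frame time) are introduced here and are not part of the original analysis:

\[ t_{\mathrm{frame}} = \frac{1}{3\ \mathrm{s^{-1}}} \approx 0.33\ \mathrm{s}, \qquad \frac{t_{\mathrm{fb}}}{t_{\mathrm{frame}}} = \frac{20\ \mathrm{s}}{0.33\ \mathrm{s}} \approx 60 . \]

Under that assumption, the feedback loop would have to run roughly 60 times faster than in the present simulation to satisfy the real-time condition.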

Unlike cloaks that hide the entire space covered by the shell, our invisibility system hides only the running deer, which we select from the entire object, and preserves the other signals when the entire object is illuminated by SLM-modulated light. The high selectivity of our invisibility system makes it more practical, because in real situations a target object is usually mixed with other objects and background signals. The distance between the object and the other optical elements is determined by the focal lengths of the lenses that make up the 4f optical systems in the simulation. To simplify the calculation, we use lenses with focal length f = 10 cm in our simulation. In practical operation, one can use lenses with longer focal lengths to hide objects at greater distances and in different places. For simplicity, we simulate only the cases in which our system works at λ = 632.8 nm and λ = 532 nm. However, for many commercial SLMs working at visible frequencies, the operating wavelength range covers approximately 400–700 nm, meaning that the system can be used to hide objects when the illumination wavelength changes over a large range. Although we consider only planar objects in this paper, our system can in principle be extended to three-dimensional (3D) objects with complex shapes. Using 3D laser scanning technology, which can rapidly capture the surface geometry of an object, one can retrieve the scattered fields of a 3D object for different angles and calculate its phase-conjugated light field. Based on 3D computer-generated holography, the target object can then be hidden by modulating multiple SLMs in different directions to produce a light field containing the object’s phase-conjugated signal.

5 Conclusion

We have designed a long-distance invisibility system and simulated the working effect of the system. Unlike traditional invisibility devices, our system hides only the chosen object and has no effect on other surrounding objects or background signals, meaning that the system is highly selective. Even when the target object covers the background patterns, we can reconstruct the patterns to complete them without the influence of the hidden object. Our system works well in hiding dynamic objects. The system can conceal complex phase- and amplitude-modulated objects by producing a light field to compensate for the wavefront distortion and light absorption of the object. The object we used in the simulation is at centimeter scale and the system works at visible frequencies. Such functions provide our invisibility system with great potential in special fields, such as biology and military applications for living and dynamic target recognition and selective camouflaging, among others.

References

J. Ward, J.B. Pendry, J. Mod. Opt. 43, 773–793 (1996)

U. Leonhardt, Science. 312, 1777–1780 (2006)

J.B. Pendry, D. Schurig, D.R. Smith, Science. 312, 1780–1782 (2006)

D. Schurig, J.J. Mock, B.J. Justice, S.A. Cummer, J.B. Pendry, A.F. Starr, D.R. Smith, Science. 314, 977–980 (2006)

H.A. Zhang, R. Madni, X. Hao, X. Zhang, E. Liu, H. Li, Chen, Light-Sci. Appl. 5, e16177 (2016)

Y. Lai, H.Y. Chen, Z.-Q. Zhang, C.T. Chan, Phys. Rev. Lett. 102, 093901 (2009)

J. Li, J.B. Pendry, Phys. Rev. Lett. 101, 203901 (2008)

R. Liu, C. Ji, J.J. Mock, J.Y. Chin, T.J. Cui, D.R. Smith, Science. 323, 366–369 (2009)

N. Xingjie, W.Z. Jing, M. Michael, W. Yuan, Z. Xiang, Science. 349, 1310–1314 (2015)

X. Chu, C.J. Zhai, P. Lee, Y. Wang, D.P. Duan, B. Tsai, Y. Zhang, Luo, Laser Photonics Rev. 9, 399–404 (2015)

Y. Yang, H. Wang, F. Yu, Z. Xu, H. Chen, Sci. Rep. 6, 20219 (2016)

Y. Yang, L. Jing, B. Zheng, R. Hao, W. Yin, E. Li, C.M. Soukoulis, H. Chen, Adv. Mater. 28, 6866 (2016)

F. Gömöry, M. Solovyov, J. Souc, C. Navau, J.P. Camps, A. Sanchez, Science. 335, 1466 (2012)

Z. Lin, H. Ramezani, T. Eichelkraut, T. Kottos, H. Cao, D.N. Christodoulides, Phys. Rev. Lett. 106, 213901 (2011)

J. Valentine, J. Li, T. Zentgraf, G. Bartal, X. Zhang, Nature Mater. 8, 568–571 (2009)

R. Schittny, M. Kadic, T. Buckmann, M. Wegener, Science. 345, 427–429 (2014)

X. Chen, Y. Luo, J. Zhang, K. Jiang, J.B. Pendry, S. Zhang, Nature Commun. 2, 176 (2011)

B. Zhang, Y. Luo, X. Liu, G. Barbastathis, Phys. Rev. Lett. 106(3), 033901 (2011)

B. Edwards, A. Alù, M.G. Silveirinha, H. Engheta, Phys. Rev. Lett. 103, 153901 (2009)

H. Chen, B. Zheng, L. Shen, H. Wang, X. Zhang, N.I. Zheludev, B. Zhang, Nature Commun. 4, 2652 (2013)

K. Wu, Q. Cheng, G.P. Wang, J. Opt. Soc. Am. B. 28, 1467–1474 (2011)

Z. Yaqoob, D. Psaltis, M.S. Feld, C. Yang, Nat. Photon. 2, 110–115 (2008)

Finkbeiner, Nature. 517, 430–432 (2015)

X. Xu, H. Liu, L.V. Wang, Nat. Photon. 5, 154–157 (2011)

M. Vellekoop, Opt. Express. 23, 12189–12206 (2015)

G. van Putten, D. Akbulut, J. Bertolotti, W.L. Vos, A. Lagendijk, A.P. Mosk, Phys. Rev. Lett. 106, 193905 (2011)

J.W. Goodman, Introduction to Fourier Optics, 3rd edn. (McGraw-Hill, 2005)

J.B. Pendry, Science. 322, 71–73 (2008)

Q. Cheng, K. Wu, G.P. Wang, Opt. Express. 19, 23240–23248 (2011)

Q. Cheng, K. Wu, Y. Shi, H. Wang, G.P. Wang, Sci. Rep. 3, 1974 (2013)

Q. Cheng, K. Wu, Y. Shi, H. Wang, G.P. Wang, Sci. Rep. 5, 8581 (2014)

W. Chen, X. Chen, A. Stern, B. Javidi, IEEE Photonics J. 5, 6900113 (2013)

Q. Cheng, Z. Tan, H. Wang, G.P. Wang, Sci. Rep. 7, 10231 (2017)

R.W. Gerchberg, W.O. Saxton, Optik. 35, 237–250 (1972)

Q. Zeng, Y. Tan, G. Yan, Jin, Appl. Optics. 46, 6872–6878 (2007)

N. Ozawa, J. Zhang, CG World. 37, 82–93 (2005)

Z. Yu, G. Jin, Computer-Generated Hologram, 1st edn. (Tsinghua University Press, 1984)

D.C. Chu, J.R. Fienup, J.W. Goodman, Appl. Optics. 12, 1386–1388 (1973)

Acknowledgements

National Natural Science Foundation of China (NSFC) (11574218, 61605053, 11604112, 61505063); Fundamental Research Funds for the Central Universities (2662015QD023, 2662015QD042, 2662015BQ046, 2662016PY059); Hubei Provincial Natural Science Foundation of China (2015CFB479).

Electronic supplementary material

Below is the link to the electronic supplementary material.

Supplementary material 1 (MOV 313 KB)

Supplementary material 2 (MOV 281 KB)

Supplementary material 3 (MOV 260 KB)

Supplementary material 4 (MOV 255 KB)

Cite this article

Cheng, Q., Zhang, S., Ding, C. et al. A simulation system to hide dynamic objects selectively at visible wavelengths. Appl. Phys. B 124, 53 (2018). https://doi.org/10.1007/s00340-018-6922-0
