Abstract

We present a scheme to produce clock-synchronized photons from a single parametric downconversion source with a binary division strategy. The time difference between a clock and detections of the herald photons determines the amount of delay that must be imposed on a photon by actively switching different temporal segments, so that all photons emerge from the output with their wavepackets temporally synchronized with the temporal reference. The operation is performed using a binary division configuration which minimizes the passages through switches. Finally, we extend this scheme to the production of many synchronized photons and find expressions for the optimal number of correction stages as a function of the pair generation rate and the target coherence time. Our results show that, for the generation of this heralded single-photon-per-output state at an optimized input photon flux, the output rate of our scheme scales essentially with the reciprocal of the target output photon number. With current technology, rates of up to \(10^{4}\) synchronized pairs per second could be observed with only 7 correction stages.

1 Introduction

A deterministic photon source is of great interest for quantum information processing. While many features of such a source can be achieved with faint pulses or attenuated beams, the statistics of such light sources limit their applications and weaken some of the strengths of quantum information algorithms [1–3]. Efforts to engineer a practical triggered, single-photon source have led to several different approaches, including single atoms in cavities [4, 5], nonlinear cavities [6], quantum dots in microcavities [7] and nitrogen-vacancy centers in diamond [8]. Another approach is to use heralded photons produced through spontaneous parametric down-conversion (SPDC). Using this technology, several schemes have been proposed: spatial multiplexing of the outputs of several down-conversion crystals, recombined with a set of electro-optic switches, was devised by Migdall et al. [9]; temporal multiplexing of photons produced through SPDC and delayed in a storage loop was studied by Pittman et al. [10] using a CW source, and by Kwiat’s group with a pulsed source [11]; a pulsed scheme based on multiplexing the outputs of four down-conversion crystals pumped by the same pulsed laser to actively produce single photons was demonstrated by Zeilinger’s group [12].

2 Proposal

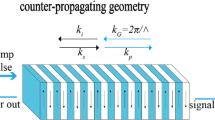

The proposal presented in this work is sketched in Fig. 1. It allows for photons to be produced with an arbitrarily high probability synchronized with an external clock. The setup works by using timing information in the detection of the herald photon to modify the signal’s path so that it exits the synchronizer at a clock tick. Recent technological developments make this scheme viable, mainly the increased pair generation rate and spectral width of photons produced by SPDC in a periodically poled nonlinear crystal (PPNLC). Other factors, such as detector jitter, timing circuits and fast fiber switches, also contribute to this scheme’s viability.

Fig. 1 General scheme for synchronizing photons. A pair of photons is generated at a random moment by SPDC. One of these photons is detected, and the time difference between this detection and a synchronization clock is measured. The path of the other photon is altered so that it arrives at the output at the precise clock time. Path modification is done by a series of fast fiber switches connected to a binary division fiber network. Also, Fabry–Perot filters limit bandwidth, ensuring coherence times long enough to account for time measurement limitations

Photon pairs are generated at a PPNLC pumped by a CW single-longitudinal-mode laser that generates collinear SPDC. Having in mind a source of synchronized photons at telecommunication wavelength, the down-conversion process can be either non-degenerate or degenerate. Degenerate pairs at 1,550 nm are obtained by pumping with a 775-nm laser diode. Non-degenerate pairs can, for example, be generated at 810 and 1,550 nm from a 532-nm Nd:YAG pump laser [13]. The latter option has the advantage of providing high detection efficiency for the herald photons at 810 nm with Si detectors. Photons coupled into fibers are spectrally filtered at each arm by a Fabry–Perot to reach coherence times larger than the detector jitter. Upon the detection of a herald photon, timing correction information is generated by comparing this time to an external clock. A fast digital circuit activates fiber-integrated optical switches which correct the signal’s path. This occurs while the signal photon is delayed by means of a fixed fiber delay line. After the passage through the programmed delay, the photon exits the fiber line at a clock tick.

The variable optical delay uses a binary division strategy. It is formed by a chain of fixed-delay single-mode fiber spools of increasing lengths connected via fast optical switches, in such a way that each of them can be bypassed through a fiber patchcord of negligible length. The shortest delay of this variable length chain (\({ \varDelta t_0}\)) should be a fiber patch of half the coherence length of the down-converted photons. All the other links are constructed by doubling the previous length, \({ \varDelta t_{m+1}=2 \varDelta t_m}\) (Fig. 1). In this way, the maximum delay that can be set with a chain of m such delays is the sum of all the links, \(\sum_{i=0}^{m-1}2^{i}\,\varDelta t_0=(2^m-1)\,\varDelta t_0\).

This binary division approach improves on previous methods [9–12] in that the number of passages through switches is reduced to a minimum and the total loss is almost the same for any time delay. Using m switches, a total of \(2^m-1\) temporal bins, or modes, can be multiplexed into one temporal output mode. This reduces losses significantly, because the switching stages are where the losses are highest. Also, an all-fiber system avoids the problems of mode matching and the need for Gaussian relay imaging as used by Pittman et al. [10], as well as the alignment of large, stable resonators [10, 11]. The whole setup takes advantage of the large coherence lengths that can be achieved with a CW SPDC source. There is therefore no need for any synchronization between the source and the timing clock.
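The binary selection of delay spools described above can be sketched in a few lines of Python. This is only an illustration, assuming the required delay has already been rounded to an integer number of steps of \(\varDelta t_0\); the function names `switch_settings` and `total_delay` are hypothetical and do not come from the original work.

```python
def switch_settings(delay_steps: int, m: int) -> list[bool]:
    """Choose which of the m spools to engage for a given delay.

    Spool i is assumed to have a delay of 2**i * dt0, so the binary
    digits of delay_steps directly give the switch states.
    """
    if not 0 <= delay_steps < 2 ** m:
        raise ValueError("delay outside the range covered by the chain")
    return [bool((delay_steps >> i) & 1) for i in range(m)]


def total_delay(settings: list[bool], dt0: float) -> float:
    """Sum the delays of the engaged spools (same units as dt0)."""
    return sum((2 ** i) * dt0 for i, engaged in enumerate(settings) if engaged)


settings = switch_settings(5, 3)   # 5 = 0b101
print(settings)                    # [True, False, True]
print(total_delay(settings, 2.0))  # 10.0 (ns, for dt0 = 2 ns)
```

With all m spools engaged, the delay is \((2^m-1)\,\varDelta t_0\), the longest value the chain can set.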

Alternatively, this photon synchronization can also be accomplished with a pulsed source. In this case, the spectral constraint imposed on the down-converted photon pairs by the detector jitter is transferred to a constraint on the repetition rate. Under such conditions, spectral filters may not be needed. However, the synchronization stages must be matched to within the pulse duration.

2.1 State-of-the-art optoelectronics

Currently available technology determines many of the working parameters at which such a scheme would work. In this technical section, we discuss different available options and how these relate to the coherence time of the photons, and therefore the shortest delay \(\varDelta t_0\), and the maximum repetition rate \({f_{\rm max}=1/{\mathcal{T}}}\) achievable.

Photons can only be synchronized as precisely as their detection time can be measured. The detector jitter \(\tau_j\) is mainly responsible for this limit, and it sets a minimum \(\varDelta t_0>\tau_{j}\). Typical detectors have a timing jitter of approximately 40–500 ps, depending on their active area and timing electronics. Some standard commercial devices are listed in [14]. Another limiting characteristic of detectors is their dead time, which is typically ≈30 ns for actively quenched circuits. This limits the repetition period to \({{\mathcal{T}}>30}\) ns, unless some kind of detection multiplexing is used.

Measuring the photon detection time and acting on the delay line in the picosecond domain is also challenging, but these steps do not require longer times than those set by single-photon avalanche photodiodes. There are three crucial steps: measurement, decision and response. One aims at a measurement with a precision of the order of the detector jitter time, so that the measurement time does not increase the minimum synchronization step \(\varDelta t_0\) any further. It would also be ideal to have an overall response that is faster than the total delay time \({{\mathcal{T}}}\), so that there is no dead time between synchronization rounds.

The delay line’s settling time is mainly determined by the temporal response of the fiber switches. Commercial switches offer switching times down to 1 ns [15]. Even faster interferometric Mach–Zehnder type switches can be built by using phase modulators, which are standard telecommunication devices with operating frequencies exceeding 40 GHz, that is, 1/(25 ps). In any case, the switches need to act only once every \({{\mathcal{T}}}\), which is an order of magnitude larger than these switching times.

For measurement and decision making, a couple of options are available, each with its own advantages and disadvantages. An FPGA-based system can measure times down to the nanosecond scale and reach propagation (decision) times of about 10 ns. Although such systems raise \(\varDelta t_0\) to at least an order of magnitude above the detector jitter limit, they are easy to set up and reconfigure. Another option is to use time-to-digital converters, which can achieve temporal resolutions down to 10 ps [16] but are slower (≈50 ns) in reporting the measured value. This alternative could be reasonable in the best detector jitter condition and if one were to need several (m ≈ 10) correction stages. Finally, the fastest option available is a dedicated circuit built with fast discrete logic. Measuring and acting on the circuit can be achieved with only a binary counter and a latch. Fast logic devices of this type can reach measurement times of 30 ps and overall times of 1 ns with CML technology [17].

2.2 SPDC source and spectral width

The choice of the minimum correction time \(\varDelta t_0\) also sets a minimum coherence length to which photons have to be filtered. From the above discussion and through the rest of the paper, we choose the correction time to match the coherence time, \(\varDelta t_0=2\) ns, as a conservative estimate to illustrate what follows. This choice implies the need for photons with a spectral bandwidth of \(\varDelta\lambda \approx4\) pm. Pairs of photons with such narrow bandwidths can be generated even at high count rates. Parametric down-conversion from PPNL-waveguide crystals produces photons with bandwidths of the order of a nanometer and count rates of about \(10^{9}\,\hbox{s}^{-1}\,\hbox{mW}^{-1}\) [18–20]. The initial coherence lengths can be further lengthened by spectral filtering on both beams. Using Fabry–Perot interferometers integrated with optical fibers and pump lasers of several tens of mW, spectral bandwidths can be pushed to the desired limit of 4–10 pm with count rates of \(10^{7}\,\hbox{s}^{-1}\) [21, 22]. A narrow-band emission spectrum of the pump will ensure high correlation between the filtered down-converted photons. Also, fiber-integrated Fabry–Perot filters allow for low loss, stability and easy setup [23]. More relevant than the pair production per second for an SPDC source is the pair emission flux per coherence time. This flux is independent of the filtered bandwidth, since narrower filtering decreases the number of photons per second but increases their coherence time by the same factor. Outputs of \(2\times10^{-2}\) pairs per coherence time have been reported with a PPNLC waveguide [22].
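As a quick consistency check of the numbers quoted above, the following sketch converts a coherence time into a spectral bandwidth. It assumes the order-of-magnitude relation \(\varDelta\nu\approx1/t_c\), so that \(\varDelta\lambda\approx\lambda^2/(c\,t_c)\); the exact prefactor depends on the filter line shape, and the function name is illustrative only.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def bandwidth_from_coherence_time(wavelength_m: float, t_c: float) -> float:
    """Estimate the spectral width (in m) for a given coherence time.

    Assumes delta_nu ~ 1 / t_c, i.e. delta_lambda ~ lambda**2 / (c * t_c).
    This is an order-of-magnitude relation, not a line-shape-specific one.
    """
    return wavelength_m ** 2 / (C * t_c)


delta_lambda = bandwidth_from_coherence_time(1550e-9, 2e-9)
print(f"bandwidth ~ {delta_lambda * 1e12:.1f} pm")  # close to 4 pm

# Pair flux per coherence time for a filtered rate of 1e7 pairs/s
pairs_per_coherence_time = 1e7 * 2e-9
print(f"pairs per coherence time ~ {pairs_per_coherence_time:.2g}")
```

For 1,550 nm and a 2-ns coherence time this gives a bandwidth close to 4 pm and a flux of about \(2\times10^{-2}\) pairs per coherence time, in line with the values used in the text.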

2.3 Timing

We now analyze the performance of the proposed system in synchronizing photons. The conclusion of this section is illustrated in Fig. 2. Under the experimental conditions described above, and with an appropriate number of stages, it is possible to synchronize photons with high probability. As a rule of thumb, this situation can be achieved when there is a high probability of detecting a photon during the total synchronization interval \({{\mathcal{T}}}\) and a low probability of multiple detections within the detection time \(\varDelta t_0\), as discussed for a specific case below. We now describe this concept in a formal manner.

Fig. 2 Time scheme for synchronizing photons and relative probabilities for a specific realistic case. The clock time \(\varDelta t_0\) is set to match the detector jitter time \(\tau_j\). These must be below the coherence time \(t_c\) of the generated photons. Probabilities are shown for \(\varDelta t_0=2\,\hbox{ns}\), a pair generation rate of \(10^{7}\,\hbox{s}^{-1}\) and m = 7 correction stages. The probability of finding two photons \(P(2, \varDelta t_0)\) in a clock interval is two orders of magnitude lower than the probability \(P(1, \varDelta t_0)\) of finding one photon in this same interval. During the whole synchronization round, there is, however, a high probability \({\mathcal{P}(1,{\mathcal{T}})}\) of obtaining at least one photon

To be able to synchronize a single photon, at least one photon must be generated by SPDC within the synchronization interval \({{\mathcal{T}}=2^m \varDelta t_0 }\) and at most one photon must be detected in each detection time \(\varDelta t_0\). The chance of occurrence of this situation at the output, \(\mathcal{P}(1,\mathcal{T})\), can be calculated using a modified version of the Mandel formula [24, 25] that calculates the probability at the input of having one or more photons during \({\mathcal{T}}\), \(P(\geq1,{\mathcal{T}})\), conditioned on the probability of having at most one photon at the input per minimum detection time, \(P(\leq 1, \varDelta t_0)\). This leads to the following expression:

This is the success probability for m binary synchronization stages, at an input count rate of n and a coherence time of \(\varDelta t_0\). The fraction of combinations accounts for the probability of finding k single photons distributed in \(2^m\) temporal windows. Figure 3 shows the dependence of the success probability \({\mathcal{P}(1,{\mathcal{T}})}\) on the product of the input photon rate n and the detection time \(\varDelta t_0\), for different numbers of stages in the synchronization network (m). As the total synchronization time is increased, the system shows better success probability, reaching more than 90 % for 8 stages and almost 99 % for 12 stages. Furthermore, this improved success rate is obtained at lower input photon rates (for a fixed detection time \(\varDelta t_0\)) for an increasing number of stages. Two limit conditions are worth mentioning: for m = 0, no correction is applied and the Poisson probability of a coherent source is recovered: \(\mathcal{P}(1, \varDelta t_0)\equiv P(1, \varDelta t_0) =n \varDelta t_0 \exp(-n \varDelta t_0)\). On the other hand, the dashed curve shows the probability of having at least one photon during the synchronization interval \({{\mathcal{T}}}\), \({P(\geq1,{\mathcal{T}})=1-\exp(-n \varDelta t_0 2^m)}\), for the particular case of m = 12. For low input photon numbers, both probabilities overlap, but as the photon number (or the duration of the detection time) is increased, multiple events during the detection time are more likely to happen; hence, \({\mathcal{P}(1,{\mathcal{T}})}\) decreases.
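The two limiting cases just mentioned can be explored numerically with a simplified model. The sketch below is an assumption made for illustration and is not claimed to be the combinatorial expression referred to in the text: it treats the \(2^m\) detection windows as independent Poisson bins and counts a round as successful when no bin holds more than one photon and at least one bin holds exactly one. The function names are hypothetical.

```python
import math


def p_at_least_one(x: float, m: int) -> float:
    """P(>=1, T) = 1 - exp(-x * 2**m), with x = n * dt0."""
    return 1.0 - math.exp(-x * 2 ** m)


def poisson_single(x: float) -> float:
    """Poisson probability of exactly one photon for mean number x."""
    return x * math.exp(-x)


def success_independent_bins(x: float, m: int) -> float:
    """Assumed model: 2**m independent Poisson bins with mean x each.

    Success means every bin holds at most one photon and the round is
    not empty: P(<=1 per bin)**N - P(0 per bin)**N.
    """
    n_bins = 2 ** m
    p_zero = math.exp(-x)
    p_at_most_one = (1.0 + x) * math.exp(-x)
    return p_at_most_one ** n_bins - p_zero ** n_bins


for m in (0, 8, 12):
    x = 0.02 if m < 12 else 0.0025
    print(m, success_independent_bins(x, m), p_at_least_one(x, m))
```

Under this assumption, m = 0 reduces exactly to the Poisson single-photon probability, and for small \(n\varDelta t_0\) the result approaches \(P(\geq1,{\mathcal{T}})\), which is the qualitative behavior described for Fig. 3.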

Fig. 3 Success probability of heralding a single photon during the whole synchronization interval \({{\mathcal{T}}}\), as a function of the input photon rate times the detection time \(\varDelta t_0\), for different amounts of synchronization stages (total synchronization time is \({{\mathcal{T}}= \varDelta t_0 2^m}\)). The dashed line shows the probability of detecting one or more events on \({{\mathcal{T}}}\), while the curve corresponding to non-temporal correction (m = 0) depicts the Poisson probability of detecting one photon on that temporal interval, which is the expected result for a coherent source

The peak in these curves indicates that the trade-off between a high input rate and single-photon detections is still present, but the time correction scheme makes it possible to increase the probability of detecting a single photon while keeping multiple-photon events rare relative to single-photon events. In order to see this feature more clearly, we can compute the signal-to-noise ratio of our source:

which quantifies the ratio of single events to multiple, unwanted events. For a coherent source, the maximum probability of detecting a single photon is \(e^{-1}\), obtained for \(n \varDelta t_0=1\). This condition gives an SNR of ≈1.39, which limits the use of such a source as a single-photon one. One can improve the quality of the non-vacuum states produced, at the expense of reducing the success probability. This is the idea behind faint light pulses as an approximation of a single-photon source: for a mean photon number \(n \varDelta t_0=0.1\), the probability of detecting a single photon decreases to 0.09, but now the SNR rises to 19.3.
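The coherent-source figures quoted above can be checked with a short calculation. The sketch assumes the SNR of a Poisson source is the ratio of the single-photon probability to the probability of two or more photons, which reproduces the quoted values; `poisson_snr` is an illustrative name.

```python
import math


def poisson_snr(mean_n: float) -> tuple[float, float]:
    """Return (P(1), SNR) for a Poisson source with mean photon number mean_n.

    SNR is taken here as P(1) / P(>=2), an assumption that matches the
    coherent-source values quoted in the text.
    """
    p_single = mean_n * math.exp(-mean_n)
    p_multiple = 1.0 - math.exp(-mean_n) * (1.0 + mean_n)
    return p_single, p_single / p_multiple


for mean_n in (1.0, 0.1):
    p_single, snr = poisson_snr(mean_n)
    print(f"mean={mean_n}: P(1)={p_single:.3f}, SNR={snr:.2f}")
```

For a mean photon number of 1, this gives a single-photon probability of about 0.37 and an SNR of about 1.39; for a mean of 0.1, the probability drops to about 0.09 while the SNR rises to about 19.3.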

Following the work of Mazzarella et al. [26], in order to compare the output quality of this scheme for different configurations, we calculate an SNR-optimized probability, that is, the maximum single-photon probability that can be obtained for a given threshold SNR, σ:

Figure 4 shows the optimized probability for different correction lengths, as a function of the minimum guaranteed SNR. Increasing the number of correction stages produces not only an increase in the success probability, but also allows this probability to be sustained for large SNR values. Again, m = 0 recovers the results for a faint coherent pulse source.

2.4 Scalability

A photon synchronizer as presented can be used to generate a state of single photons on multiple outputs: instead of having many synchronizers, one can time multiplex a single one using a multiple-output fiber switch and optical delay lines. Figure 5 shows a general scheme for the implementation of such multiphoton synchronization: to produce a state with μ photons, the switch alternates the output between μ output ports connected to fibers setting a delay \([0,1,\ldots,\mu] \varDelta t_02^m\). Naturally, the generation rate is also reduced by a factor 1/μ. It is worth noting that the output state is not a multiphoton Fock state with μ photons in a single mode, but rather a state where μ single, synchronized photons at μ outputs are obtained.

It is interesting to calculate the final number of synchronized photons for such a scheme. This will depend on the μth power of the single-photon synchronization probability derived before. To obtain a rate, it must also be multiplied by the highest synchronization frequency that can be reached, that is, \(f_{\rm max}=(\mu \varDelta t_0 2^m)^{-1}\). Also, to calculate real observable count rates, one must account for losses at each synchronizing stage \(\eta_{\rm stg}^{m}\), general losses \(\eta_{\rm gnl}\) and the efficiency of the herald photon detector \(\eta_{\rm det}\). The full expression for the number of synchronized photons then reads

The main source of losses on the synchronized side is the optical switches, which, in the best devices available, have attenuation factors of 1.2 dB, considering insertion losses and splicing. The distributed loss within the fiber is an order of magnitude lower, assuming 0.5 dB/km for a telecommunication fiber. Detector efficiency for InGaAs avalanche photon counting diodes reaches 25 % in the 1,550-nm range [27]. Higher detection efficiency can be obtained with superconducting edge single-photon counters, but their timing performance is not as good, so the above considerations should be rescaled. Also, very recently, a new type of superconducting single-photon detector based on a traveling-wave geometry that maximizes the photon-detector interaction length was developed; this allows for both high-speed and high-efficiency single-photon detection, with on-chip efficiencies reaching 90 % and timing jitter as low as 18 ps at telecommunication wavelengths [28]. Although these detectors may improve the overall predicted performance of the present proposal, and emerge as a major breakthrough for scalable quantum computation in general, we restrict the analysis to commercially available technology.

Figure 6 plots the output rate for synchronized 2-photon and 4-photon states (μ = 2 and μ = 4, respectively), both for the ideal and the lossy case. For the lossy case, we assume the efficiencies mentioned above. For a small number of stages, the synchronized pair production is low because the probability of having a photon pair in the interval \({{\mathcal{T}}}\) scales as \(\propto n \varDelta t_0\). Notably, for a given coherence time, the initial photon pair rate also imposes a limit on the number of correcting stages that can be piled up for optimum synchronization. Once this condition is reached, further addition of correcting stages only increases the whole cycle time and therefore lowers the throughput of the system by limiting the synchronization rate \(f_{\rm max}\). There is also a limit imposed by multiple event detections on the herald side: for high enough input rates, the rate of multiple detections per minimum detection time dominates the scenario, as shown in Fig. 3, and the scheme loses efficiency. However, as more correcting stages are piled up, the optimum input rate decreases; there is a trade-off between the initial photon rate and the number of correcting stages needed to maximize the synchronizer throughput, and indeed, a low-brightness source can perform better than a brighter one with the inclusion of more correcting stages.

Fig. 6 Synchronized multiple photons for m correcting stages and different pair generation rates, assuming a minimum correction time of \(\varDelta t_0=2\) ns. Top pane shows output rates for 2-photon states (μ = 2) and bottom pane rates for 4-photon states (μ = 4). The solid color curves are for the ideal zero-loss case, while the light colored curves with smaller symbols account for all losses as mentioned in the main text. Dots show values for different number of stages; lines connecting dots are present for clarity and visual aid

Surprisingly, the optimum number of stages shows strong dependence on the mean input photon rate, and the amount of correcting stages, but only increases slightly with the desired output μ-photon state, as can be seen by following the shift of the peak of the curves in Fig. 6. For example, for an input photon rate of \(10^{7}\,\hbox{s}^{-1}\) and \(\varDelta t_0=2\) ns, the optimum amount of stages is between 6 and 7 for all the studied cases (μ = 0 to μ = 14).
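The trade-off between success probability and cycle time can be illustrated with a zero-loss estimate. This sketch is an assumption-laden illustration rather than the expression used for Fig. 6: it takes the rate as the μth power of the success probability times \(f_{\rm max}=(\mu \varDelta t_0 2^m)^{-1}\), ignores all efficiencies, and models the success probability with independent Poisson bins. All function names are hypothetical.

```python
import math


def success_independent_bins(x: float, m: int) -> float:
    """Assumed success model: 2**m independent Poisson bins of mean x."""
    n_bins = 2 ** m
    return ((1.0 + x) * math.exp(-x)) ** n_bins - math.exp(-x * n_bins)


def ideal_rate(n: float, dt0: float, m: int, mu: int) -> float:
    """Zero-loss rate of mu synchronized photons, in events per second.

    rate = P**mu * f_max, with f_max = 1 / (mu * dt0 * 2**m).
    """
    p = success_independent_bins(n * dt0, m)
    return p ** mu / (mu * dt0 * 2 ** m)


def best_number_of_stages(n: float, dt0: float, mu: int, m_max: int = 12) -> int:
    """Scan m = 1..m_max and return the value that maximizes the ideal rate."""
    return max(range(1, m_max + 1), key=lambda m: ideal_rate(n, dt0, m, mu))


for mu in (2, 4):
    m_opt = best_number_of_stages(1e7, 2e-9, mu)
    print(mu, m_opt, f"{ideal_rate(1e7, 2e-9, m_opt, mu):.3g}")
```

With an input rate of \(10^{7}\,\hbox{s}^{-1}\) and \(\varDelta t_0=2\) ns, this simplified model places the optimum at 6 stages for μ = 2 and 7 stages for μ = 4, consistent with the range quoted above; the absolute rates are upper bounds, since losses are neglected.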

From the point of view of output repetition rate, it always seems convenient to have higher input brightness, although this is currently limited not by the sources but mainly by maximum detector counts. Current technology allows for counting at \(10^{7}\,\hbox{s}^{-1}\) with actively quenched avalanche photon counting diodes. Improvements in electronics and multiplexed detection technologies could increase this value by a couple of orders of magnitude. We see that in the present configuration, this difficulty can be overcome by adding more correcting stages. This would significantly benefit this scheme by increasing the output rate proportionally. As stated by Eq. (2), it has to be kept in mind that in order to avoid overlap between multiple photons, the photon rate per clock time \(\varDelta t_0\) has to be kept low, that is, ensuring that the multiple photon occurrence is at least an order of magnitude lower than a single detection.

The incidence of multiple pair emission in a CW SPDC, which ultimately limits the coherence visibility, is expected to be at least an order of magnitude lower than in a pulsed scheme, since the pair production probability is proportional to the instantaneous power of the pump source times the pulse duration/detection window [29]. The effect of higher photon number states on the visibility of two-photon interference was studied by Scarani et al. [30] and by others [31, 32], and the relevant parameter turns out to be the probability of creating a photon pair within the spectral range defined by the filters, per time resolution of the detector. Quoting reference [30]: “for a fixed detection rate we shall find a given visibility, no matter whether the rate was obtained by pumping weakly and putting no filters, or by pumping strongly and putting narrow filters”; spectral filtering does not degrade the visibility. For a probability of creating a pair during the detector resolution window within the filtered spectral range of \(2\times10^{-2}\) (see below), the expected visibility is of the order of 0.95 [30]. This effect can nevertheless be further reduced by implementing a number-resolving setup with two or more detectors at the herald side, as studied in [11].

We consider a specific case, using low-jitter detectors and matching the coherence length to this detector jitter as described above and illustrated in Fig. 2. In this way, we analyze the use of 4-pm spectral filters at 1,550 nm. The coherence time is of the order of 2 ns, which is comfortably above the maximum detector jitter and electronic timings, so the clock time can be closely matched to this coherence time. In such a situation and with a maximum input photon rate of \(10^{7}\,\hbox{s}^{-1}\), a mean number of \(2\times10^{-2}\) photons per clock time \(\varDelta t_0\) is detected. That gives a probability of \(2\times10^{-4}\) of having more than one photon within a single detection window. Similar experimental conditions have been reported by Halder et al. [22]. The total correction time \({{\mathcal{T}}}\) depends on the number of stacked binary stages, \({{\mathcal{T}}=2^m \varDelta t_0}\). For m = 7, the total correction time is \(128 \varDelta t_0\) and the probability of detecting at least one event during that time is 0.87 for the assumed input photon rate.
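The per-window figures of this specific case follow from Poisson statistics and can be reproduced with a few lines. The sketch assumes Poissonian detection statistics within each clock window; the variable names are illustrative.

```python
import math

n = 1e7      # input photon rate, 1/s
dt0 = 2e-9   # clock (detection) time, s
m = 7        # number of binary correction stages

# Mean number of photons per clock window
mean_per_window = n * dt0

# Poisson probability of more than one photon in a single window:
# 1 - P(0) - P(1) = 1 - exp(-x) * (1 + x), roughly x**2 / 2 for small x
p_multiple = 1.0 - math.exp(-mean_per_window) * (1.0 + mean_per_window)

# Total correction time for m stages
total_time = 2 ** m * dt0

print(f"mean per window : {mean_per_window:.2g}")
print(f"P(>1 per window): {p_multiple:.2g}")
print(f"total time      : {total_time * 1e9:.0f} ns")
```

This gives a mean of 0.02 photons per window, a multi-photon probability close to \(2\times10^{-4}\), and a total correction time of 256 ns for m = 7.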

In summary, a short temporal detection window with a continuous-wave source gives a low probability to detect more than one photon per clock time (like in a non-deterministic single-photon source). The chain of multiple correction stages leads to a high probability that a photon is temporally re-routed and time-corrected within the total temporal correction window. This sequence is repeated every \({{\mathcal{T}}=1/f}\).

3 Discussion

This temporally multiplexed setup makes it possible to approximate a deterministic single-photon source. For example, with 8 correcting stages and an input rate of \(2\times10^{6}\) pairs/s, there is a 92 % chance of obtaining a single photon every clock pulse, while the multiple photon occurrence probability is below 5 %. On the other hand, pushing the downconversion source to the detector count rate limit, \(10^{7}\) pairs/s, the optimum number of stages to obtain two photons turns out to be 6. In such a situation, the loss due to the switching stages and the detector efficiencies (now the whole set of detectors at the output has to be taken into account) leads to a rate of \(1.7\times10^{4}\) pairs/s. Multiphoton count rates for 4, 6 and 8 synchronized photons are 179, 3.7 and 0.09 \(\hbox{s}^{-1}\), respectively. The present proposal involves a mechanically simple and robust setup that uses a low-power pump laser, plus an almost 100 % fiber-coupled system. This multiple-output state of single, synchronized photons allows for a straightforward implementation of a multiple-qubit quantum register. The method we present here has a generation rate which (excluding detector efficiencies, which are present in any method) scales mainly inversely with the number of photons to be synchronized, while the contribution of the exponential dependence on the photon number in Eq. (5) is much weaker than in μ-photon down-conversion, which is the current method used to produce multiple photon states. This is a crucial issue in building scalable devices for quantum information processing.

References

[1] P. Grangier, B. Sanders, J. Vuckovic, Focus on single photons on demand. New J. Phys. 6 (2004). doi:10.1088/1367-2630/6/1/E04

[2] E. Knill, R. Laflamme, G.J. Milburn, A scheme for efficient quantum computation with linear optics. Nature 409(6816), 46–52 (2001)

[3] C. Bennett, G. Brassard, A. Ekert, Quantum cryptography. Sci. Am. 267, 50–57 (1992)

[4] J. Cirac, P. Zoller, H. Kimble, H. Mabuchi, Quantum state transfer and entanglement distribution among distant nodes in a quantum network. Phys. Rev. Lett. 78(16), 3221–3224 (1997)

[5] A. Parkins, P. Marte, P. Zoller, O. Carnal, H. Kimble, Quantum-state mapping between multilevel atoms and cavity light fields. Phys. Rev. A 51(2), 1578 (1995)

[6] A. Imamoğlu, H. Schmidt, G. Woods, M. Deutsch, Strongly interacting photons in a nonlinear cavity. Phys. Rev. Lett. 79(8), 1467–1470 (1997)

[7] C. Santori, M. Pelton, G. Solomon, Y. Dale, Y. Yamamoto, Triggered single photons from a quantum dot. Phys. Rev. Lett. 86(8), 1502–1505 (2001)

[8] T. Schröder, F. Gädeke, M. Banholzer, O. Benson, Ultrabright and efficient single-photon generation based on nitrogen-vacancy centres in nanodiamonds on a solid immersion lens. New J. Phys. 13(5), 055017 (2011)

[9] A. Migdall, D. Branning, S. Castelletto, Tailoring single-photon and multiphoton probabilities of a single-photon on-demand source. Phys. Rev. A 66(5), 053805 (2002)

[10] T. Pittman, B. Jacobs, J. Franson, Single photons on pseudodemand from stored parametric down-conversion. Phys. Rev. A 66(4), 042303 (2002)

[11] E. Jeffrey, N. Peters, P. Kwiat, Towards a periodic deterministic source of arbitrary single-photon states. New J. Phys. 6(1), 100 (2004)

[12] X. Ma, S. Zotter, J. Kofler, T. Jennewein, A. Zeilinger, Experimental generation of single photons via active multiplexing. Phys. Rev. A 83(4), 043814 (2011)

[13] F. Bussières, J. Slater, J. Jin, N. Godbout, W. Tittel, Testing nonlocality over 12.4 km of underground fiber with universal time-bin qubit analyzers. Phys. Rev. A 81(5), 052106 (2010)

[14] Standard photon counting modules AQRH by Excelitas have \(\tau_j = 500\) ps @ 808 nm; this timing resolution can be modified to produce \(\tau_j = 250\) ps [34]. IdQuantique reaches \(\tau_j = 40\) ps @ 808 nm with their ID100 model and \(\tau_j = 200\) ps @ 1550 nm with their ID200 model. http://www.excelitas.com/downloads/DTS_SPCM-AQRH.pdf. http://www.idquantique.com/images/stories/PDF/id201-single-photon-counter/id201-specs.pdf

[15] A 2 × 1 Lithium Niobate switch with response time below the nanosecond is available from JDSU. Insertion loss, however, is high due to the technology involved. Several manufacturers offer solid-state switches with response times on the order of hundreds of nanoseconds and typical total insertion loss of 0.6 dB. http://www.jdsu.com/ProductLiterature/2x2is_ds_cc_ae_050406.pdf

[16] For example, the time-to-digital converter TDC-GPX from ACAM can measure time differences between two channels with 10 ps resolution. http://www.acam.de/fileadmin/Download/pdf/English/DB_AMGPX_e.pdf

[17] Rise and fall times of 35 ps and 260 ps propagation delays can be obtained with a 10 GHz \(\div4\) clock divider with Current Mode Logic output structure, such as the NB7V33M, from ON Semiconductor. http://www.onsemi.com/pub_link/Collateral/NB7V33M-D.PDF

[18] M. Fiorentino, S. Spillane, R. Beausoleil, T. Roberts, P. Battle, M. Munro, Spontaneous parametric down-conversion in periodically poled KTP waveguides and bulk crystals. Opt. Express 15(12), 7479–7488 (2007)

[19] T. Zhong, F. Wong, T. Roberts, P. Battle, High performance photon-pair source based on a fiber-coupled periodically poled KTiOPO4 waveguide. Opt. Express 17(14), 12019–12030 (2009)

[20] H. Hübel, D. Hamel, A. Fedrizzi, S. Ramelow, K. Resch, T. Jennewein, Direct generation of photon triplets using cascaded photon-pair sources. Nature 466(7306), 601–603 (2010)

[21] A. Haase, N. Piro, J. Eschner, M. Mitchell, Tunable narrowband entangled photon pair source for resonant single-photon single-atom interaction. Opt. Lett. 34(1), 55–57 (2009)

[22] M. Halder, A. Beveratos, N. Gisin, V. Scarani, C. Simon, H. Zbinden, Entangling independent photons by time measurement. Nat. Phys. 3(10), 692–695 (2007)

[23] Micron Optics Inc. http://www.micronoptics.com/

[24] L. Mandel, Fluctuations of photon beams: the distribution of the photo-electrons. Proc. Phys. Soc. 74, 233–243 (1959)

[25] L. Mandel, E. Wolf, Optical Coherence and Quantum Optics (Cambridge University Press, Cambridge, 1995)

[26] L. Mazzarella, F. Ticozzi, A.V. Sergienko, G. Vallone, P. Villoresi, Asymmetric architecture for heralded single photon sources (2012). arXiv preprint arXiv:1210.6878

[27] ID Quantique SA. http://www.idquantique.com/scientific-instrumentation/id201-ingaas-apd-single-photon-detector.html

[28] W. Pernice, C. Schuck, O. Minaeva, M. Li, G. Goltsman, A. Sergienko, H. Tang, High-speed and high-efficiency travelling wave single-photon detectors embedded in nanophotonic circuits. Nat. Commun. 3, 1325 (2012)

[29] I. Marcikic, H. de Riedmatten, W. Tittel, V. Scarani, H. Zbinden, N. Gisin, Time-bin entangled qubits for quantum communication created by femtosecond pulses. Phys. Rev. A 66(6), 062308 (2002)

[30] V. Scarani, H. de Riedmatten, I. Marcikic, H. Zbinden, N. Gisin, Four-photon correction in two-photon Bell experiments. Eur. Phys. J. D At. Mol. Opt. Plasma Phys. 32(1), 129–138 (2005)

[31] H. Eisenberg, G. Khoury, G. Durkin, C. Simon, D. Bouwmeester, Quantum entanglement of a large number of photons. Phys. Rev. Lett. 93(19), 193901 (2004)

[32] S.A. Podoshvedov, J. Noh, K. Kim, Stimulated parametric down conversion and generation of four-path polarization-entangled states. Opt. Commun. 232(1), 357–369 (2004)

[33] R. Krischek, W. Wieczorek, A. Ozawa, N. Kiesel, P. Michelberger, T. Udem, H. Weinfurter, Ultraviolet enhancement cavity for ultrafast nonlinear optics and high-rate multiphoton entanglement experiments. Nat. Photonics 4(3), 170–173 (2010)

[34] I. Rech, I. Labanca, M. Ghioni, S. Cova, Modified single photon counting modules for optimal timing performance. Rev. Sci. Instrum. 77(3), 033104 (2006)

Acknowledgments

We acknowledge fruitful discussions with Juan Pablo Paz and Gabriel Larotonda. This work was supported by funds from the ANPCyT and MinDef projects. C.T.S. was funded by a CONICET scholarship.

Cite this article

Schmiegelow, C.T., Larotonda, M.A. Multiplexing photons with a binary division strategy. Appl. Phys. B 116, 447–454 (2014). https://doi.org/10.1007/s00340-013-5718-5
