Abstract

Because environments can vary over space and time in unpredictable ways, foragers must rely on estimates of resource availability and distribution to make decisions. Optimal foraging theory assumes that foraging behavior has evolved to maximize fitness and provides a conceptual framework in which environmental quality is often assumed to be fixed. Another, more mechanistic conceptual framework comes from the successive contrast effects (SCE) approach, in which the conditions that an individual has experienced in the recent past alter its response to current conditions. By regarding foragers’ estimation of resource patches as subjective future value assessments, SCE may be integrated into an optimal foraging framework to generate novel predictions. We released Allenby’s gerbils (Gerbillus andersoni allenbyi) into an enclosure containing rich patches with equal amounts of food and manipulated the quality of the environment over time by reducing the amount of food in most (but not all) food patches and then increasing it again. We found that, as predicted by optimal foraging models, gerbils increased their foraging activity in the rich patch when the environment became poor. However, when the environment became rich again, the gerbils significantly altered their behavior compared to the first, identical rich period. Specifically, in the second rich period, the gerbils spent more time foraging and harvested more food from the patches. Thus, seemingly identical environments can be treated as strikingly different by foragers as a function of their past experiences and future expectations.


Introduction

Natural selection should favor individuals that are the most efficient in exploiting resources (MacArthur and Pianka 1966). The idea that behavioral traits of animals are adaptive has led to the growth of foraging theory and its empirical tests (Stephens and Krebs 1986). One of the better known concepts in optimal foraging theory is Charnov’s marginal value theorem (MVT; Charnov 1976), which predicts that a forager should remain in a resource patch until its intake rate in the patch falls to the long-term average intake rate in the environment. Brown (1988) expanded Charnov’s theorem to include other fitness costs of foraging and introduced a new approach to measuring animals’ foraging decisions from patch characteristics using giving up densities (GUDs; the amount of food left in a resource patch after exploitation). According to the expanded theorem, an optimal forager should exploit a patch so long as its harvest rate (H) of resources from the patch exceeds its energetic (C), predation (P), and missed opportunity (MOC) costs of foraging in that patch, and it should leave when $H = C + P + MOC$. More usefully for our purposes, for a model in which fitness is given by p·F, where p is the probability of surviving to the next breeding season and F is the fitness gained by those that survive, the patch use equation can be rewritten as $H = C + \mu F/(\partial F/\partial e) + \lambda/(p\,\partial F/\partial e)$ (Brown 1992). Here, μ is the risk of predation, e is energy gain, ∂F/∂e is the marginal value of energy (the short-term contribution of energy to fitness), and λ is the marginal value of time, which is determined by the value of alternative activities and alternative patches; the second term on the right-hand side of the equation is P, and the third is MOC.
As GUD is directly related to the harvest rate of the forager at the time it abandoned the patch (Brown 1988; Kotler and Brown 1990), it can be used to measure the forager’s marginal benefit from the patch at that time (Kotler and Blaustein 1995; Berger-Tal et al. 2010). This principle has been verified many times, both theoretically and empirically (see reviews in Brown and Kotler 2004; Bedoya-Perez et al. 2013, and examples therein).
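To make the bookkeeping in Brown’s (1992) patch use equation concrete, the Python sketch below evaluates the quitting harvest rate $H = C + \mu F/(\partial F/\partial e) + \lambda/(p\,\partial F/\partial e)$ and reports its predation (P) and missed opportunity (MOC) components separately. All numerical inputs in the usage example are hypothetical placeholders chosen for illustration, not values estimated for gerbils.

```python
from dataclasses import dataclass


@dataclass
class PatchCosts:
    """Components of the quitting harvest rate H = C + P + MOC (Brown 1992 form)."""
    C: float    # energetic (metabolic) cost of foraging per unit time
    P: float    # predation cost: mu * F / (dF/de)
    MOC: float  # missed opportunity cost: lambda / (p * dF/de)

    @property
    def H(self) -> float:
        # The forager should leave the patch once its harvest rate falls to this value.
        return self.C + self.P + self.MOC


def quitting_harvest_rate(C: float, mu: float, F: float, dF_de: float,
                          lam: float, p: float) -> PatchCosts:
    """Evaluate H = C + mu*F/(dF/de) + lam/(p*dF/de).

    C     -- energetic cost of foraging per unit time
    mu    -- predation risk per unit time
    F     -- fitness gained by survivors
    dF_de -- marginal value of energy (must be > 0)
    lam   -- marginal value of time
    p     -- probability of surviving to the next breeding season (must be > 0)
    """
    if dF_de <= 0 or p <= 0:
        raise ValueError("dF_de and p must be positive")
    return PatchCosts(C=C, P=mu * F / dF_de, MOC=lam / (p * dF_de))


# Hypothetical values; mu = 0 mimics a predator-free enclosure.
rich = quitting_harvest_rate(C=0.1, mu=0.0, F=1.0, dF_de=0.5, lam=0.2, p=0.8)
# A poorer environment: energy is worth more (higher dF/de), so MOC shrinks.
poor = quitting_harvest_rate(C=0.1, mu=0.0, F=1.0, dF_de=1.0, lam=0.2, p=0.8)
print(rich.H, rich.MOC)
print(poor.H, poor.MOC)
```

Holding λ fixed and raising ∂F/∂e lowers MOC and hence the quitting harvest rate, which is the sense in which a poorer environment makes each patch worth exploiting to a lower GUD.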

Charnov’s MVT rests on the assumption that animals have perfect information regarding the benefits and costs of foraging and make their decisions accordingly. In reality, however, foragers’ information about their environment is never perfect. Foragers that possess the sensory and cognitive capabilities to assess patch quality, in an environment constant enough to permit dependable predictions (i.e., foragers with complete information), are called prescient foragers (Valone and Brown 1989). In the more likely cases in which resources are unpredictable, hidden, and/or difficult to assess precisely prior to exploitation, or in which a prescient strategy is too costly (Olsson and Brown 2010), foragers are expected to behave in an approximately Bayesian manner (Green 2006; McNamara et al. 2006). Instead of re-evaluating each patch separately, Bayesian foragers base their foraging decisions on a weighted average of a prior estimate of patch quality and sampling information from the current patch (Olsson and Brown 2006), where the prior estimate is derived either from past experience or from natural selection (McNamara et al. 2006; Berger-Tal and Avgar 2012).
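The weighted-average idea can be illustrated with the simplest conjugate case. The sketch below assumes, purely for illustration, that a forager’s uncertainty about patch quality (seed density, in grams) is Gaussian with a known sampling variance; this normal-normal update is a stand-in and not the specific Bayesian model of Olsson and Brown (2006) or McNamara et al. (2006). The prior and the sample values are hypothetical.

```python
from typing import Sequence, Tuple


def bayesian_patch_estimate(prior_mean: float, prior_var: float,
                            samples: Sequence[float],
                            sample_var: float) -> Tuple[float, float]:
    """Precision-weighted posterior for patch quality under a normal-normal model.

    prior_mean, prior_var -- the forager's prior belief about patch quality
    samples               -- quality information gathered while sampling the current patch
    sample_var            -- assumed (known) variance of a single sample
    Returns (posterior_mean, posterior_var).
    """
    if not samples:
        return prior_mean, prior_var          # no sampling yet: the prior is all there is
    n = len(samples)
    sample_mean = sum(samples) / n
    prior_precision = 1.0 / prior_var
    data_precision = n / sample_var
    post_var = 1.0 / (prior_precision + data_precision)
    # Weighted average of prior and data, with weights proportional to their precisions.
    post_mean = post_var * (prior_precision * prior_mean + data_precision * sample_mean)
    return post_mean, post_var


# Hypothetical: the prior comes from a period in which every patch held 4 g,
# but the forager is now sampling a patch that actually holds about 1 g.
mean, var = bayesian_patch_estimate(prior_mean=4.0, prior_var=1.0,
                                    samples=[1.0, 1.2, 0.8], sample_var=1.0)
print(mean, var)
```

With these made-up numbers the estimate stays well above the patch’s true content of about 1 g, which is the mechanism behind the initial overuse of poor patches expected of Bayesian foragers; additional samples pull the estimate toward the data.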

Another important assumption that underlies the vast majority of optimal foraging models, whether Bayesian or otherwise, is that a given level of environmental quality (usually measured by the quantity or quality of food resources) has a fixed value, yet animals may make relative judgments when assessing the quality of the environment, especially when environmental quality can change (Flaherty 1996). Successive contrast effects (SCE) describe cases in which the conditions an individual has experienced in the recent past alter its response to current conditions (Flaherty 1996; McNamara et al. 2013). SCE have been reported for a wide range of species, including humans (e.g., Kobre and Lipsitt 1972; Pecoraro et al. 1999; Bentosela et al. 2009; Freidin et al. 2009), but only recently have researchers started investigating the adaptive ecological value of these effects (Freidin et al. 2009; McNamara et al. 2013). For example, McNamara et al. (2013) demonstrated that contrast effects can result from an adaptive response to uncertainty in a changing, unpredictable environment and that a forager’s past conditions should shape its future expectations and thus influence its behavior. By adding subjective future value assessments to foragers’ estimation of resource patches, SCE may be incorporated into an optimal foraging framework to generate novel predictions. While optimal foraging is used to make predictions from an ultimate, adaptive perspective, SCE can provide proximate, or mechanistic, explanations for foragers’ decision-making. In a way, we can think of such effects as rules of thumb (Pyke 1982) for Bayesian updating, guiding Bayesian foragers in deciding when to leave resource patches. Such integration of the two complementary approaches can be achieved by investigating the missed opportunity cost of foraging (MOC).
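One way to picture a successive contrast effect as a rule of thumb is a reference point that tracks recent conditions. In the hypothetical sketch below, perceived quality equals current quality plus `k` times its deviation from an exponentially weighted memory of past quality. The memory rate `alpha`, the contrast weight `k`, and the use of mean seed density per tray as "quality" are all illustrative assumptions, not a model taken from Flaherty (1996) or McNamara et al. (2013).

```python
from typing import List, Sequence


def perceived_quality(quality: Sequence[float], alpha: float = 0.5,
                      k: float = 0.5) -> List[float]:
    """Reference-dependent valuation of a sequence of environmental qualities.

    quality -- objective quality on successive nights
    alpha   -- weight of the newest night in the memory (0 < alpha <= 1)
    k       -- strength of the contrast effect (k = 0 means no contrast)
    """
    if not quality:
        return []
    reference = quality[0]        # memory starts at the first experienced quality
    perceived = []
    for q in quality:
        # Positive contrast when q exceeds the remembered level, negative when below.
        perceived.append(q + k * (q - reference))
        # Update the memory after experiencing the night.
        reference = (1 - alpha) * reference + alpha * q
    return perceived


# Hypothetical nightly mean seed density per tray: rich = 4 g, poor = (4 + 1 + 1 + 1) / 4.
rich, poor = 4.0, 1.75
nights = [rich] * 6 + [poor] * 3 + [rich] * 3   # Training, Rich A, Poor, Rich B
values = perceived_quality(nights)
print(values)
```

With these settings the first night of the second rich period is valued above the identical first rich period, and the poor nights below their objective value; how quickly the effect fades depends entirely on the assumed `alpha`.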

MOC is the cost of not engaging in other activities while foraging in a particular patch (Brown 1988; Olsson and Molokwu 2007). These alternative activities may include mating, resting, and feeding in other patches. However, to assess the missed opportunity cost of not feeding in other patches, the forager must have some knowledge of the value of these patches. Thus, a forager’s MOC will be determined by its estimate of the environment’s quality (reflected in the marginal value of energy (∂F/∂e) in the short run, survival probability (p) in the long run, and the marginal value of time (λ) under MVT conditions), which makes MOC an ideal factor for evaluating differences in perceived quality between habitats (Olsson and Molokwu 2007; Vickery et al. 2011). The MOC of foragers has been measured to estimate habitat quality (e.g., Persson and Stenberg 2006) or to investigate behavioral routines at various temporal scales (Olsson et al. 2000; Molokwu et al. 2008). So far, however, MOC has received relatively little attention in the literature compared to other foraging costs (e.g., predation).

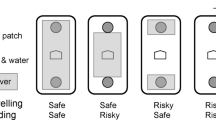

Theory predicts that when environmental quality decreases, the marginal value of energy will increase, decreasing MOC for all patches and lowering the GUD. Moreover, because harvest rates remain high in rich patches but become low in poor patches, the difference between initial patch quality and GUD should grow in rich patches (increased use) and shrink in poor patches (decreased use). This should cause prescient foragers to increase their foraging activity in the rich patches and decrease it in the poor patches. Alternatively, if the forager behaves in a Bayesian manner, it should base its decisions on the average quality of the environment and keep updating this estimate, which should, on average, result in an initial overuse of the poor patches and underuse of the rich patches (Valone and Brown 1989). In addition, if the reduction in the quality of resources causes negative contrast effects (i.e., if the fact that the environment used to be richer causes the forager, whether prescient or Bayesian, to evaluate the current environment as even less valuable than it is), then the forager should show reduced overall foraging (McNamara et al. 2013) and increased searching for alternative resources (Pecoraro et al. 1999). When the decrease in environmental quality is followed by an increase in quality back to its original level, both prescient and Bayesian foragers are predicted to return to their previous foraging levels, although the Bayesian forager may take longer because it requires additional sampling.
However, if the increase in environmental quality causes positive contrast effects (i.e., if the contrast between the past poor conditions and the current better conditions causes the forager to overestimate the objective quality of the resource patches), foragers are predicted to greatly increase their foraging effort beyond the level exhibited during the original, identical rich period. We tested these predictions by investigating the effects of temporal changes in environmental quality on the foraging behavior of Allenby’s gerbils (Gerbillus andersoni allenbyi), examining their behavioral responses to an environment that varies temporally in the quality of most (but not all) of its food patches.
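The prescient-forager predictions can be made concrete with a minimal sketch. It assumes, hypothetically, that a gerbil’s harvest rate in a patch is proportional to the seed density remaining, H(N) = aN, so that a forager quitting at harvest rate H* leaves a GUD of H*/a (or does not exploit the patch at all if it starts below that density). The coefficient `a` (per hour) and the quitting rates (g per hour) for the rich and poor environments are placeholders, not estimates.

```python
def prescient_gud(initial: float, quit_rate: float, a: float) -> float:
    """GUD for a prescient forager with harvest rate H(N) = a * N (hypothetical).

    The forager depletes the patch until a * N falls to quit_rate, i.e. N = quit_rate / a.
    If the patch starts below that density it is not worth exploiting, so GUD = initial.
    """
    return min(initial, quit_rate / a)


def patch_use(initial: float, quit_rate: float, a: float) -> float:
    """Seeds removed from the patch (initial density minus GUD), in grams."""
    return initial - prescient_gud(initial, quit_rate, a)


A = 1.0                                   # hypothetical harvest coefficient (per hour)
QUIT_RICH_ENV, QUIT_POOR_ENV = 1.5, 0.8   # hypothetical quitting rates; lower MOC when poor

rich_env = {"rich tray": patch_use(4.0, QUIT_RICH_ENV, A),
            "other trays": patch_use(4.0, QUIT_RICH_ENV, A)}
poor_env = {"rich tray": patch_use(4.0, QUIT_POOR_ENV, A),
            "other trays": patch_use(1.0, QUIT_POOR_ENV, A)}
print(rich_env)
print(poor_env)
```

Under these assumptions the rich tray is depleted further when the environment is poor (3.2 g rather than 2.5 g removed), while the trays that became poor yield far less; only the direction of the change is meaningful, not the numbers.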

Methods

Study species

Allenby’s gerbil is a small desert rodent that occupies sandy areas in the Western Negev Desert and the coastal dunes of Israel. It is mostly found in stabilized sand dunes with relatively dense vegetation cover (Abramsky et al. 1985). It is a solitary burrow dweller that forages nocturnally for seeds, which constitute the majority of its diet (Bar et al. 1984). The species has been studied extensively in the context of patch use theory (e.g., Kotler and Brown 1990; Kotler et al. 2010). Gerbils in this study were live-trapped from the Shivta dunes in the Western Negev Desert. When not participating in the experiment, the gerbils were kept individually in separate 20 × 30 cm cages with sandy substrate and were provided with shelter and ad libitum millet seeds and water.

Experimental setup

We conducted the experiment from May to October 2011 in two 6 × 6 m outdoor enclosures, each containing one or two small nest boxes, depending on the number of gerbils participating in the experiment. As moonlight strongly affects the foraging activity of gerbils (Kotler et al. 2010), we controlled for its effects by covering the enclosures on all sides with two layers of 90% shade cloth. In each trial session, we introduced either one or two male Allenby’s gerbils into the enclosure, as part of a larger experiment examining the effects of competition on the gerbils’ behavior. All gerbils were individually marked with passive integrated transponder (PIT) tags.

Every night, we provided four resource patches in the form of seed trays, placed in fixed positions in each enclosure and numbered from 1 to 4 (i.e., tray 1, tray 2, etc.). The trays were placed along a line running away from the nest boxes, with tray 2 and tray 3 staggered to the left and right of the line, respectively. The gerbils, however, did not always use the nest boxes; in some cases, they dug and used burrows that were closer to some of the trays. Seed trays were 28 × 38 × 8 cm plastic containers filled with 3 L of sifted sand into which we mixed millet seeds. Trials were carried out during the first 3 h of the night (approximately from 2000 hours to 2300 hours). Gerbils have been shown on numerous occasions to deplete patches gradually during the night (e.g., Kotler et al. 1993; Kotler et al. 2002). By limiting foraging time to 3 h, we ensured that gerbils did not run out of valuable activities (i.e., foraging in trays) and thus faced positive missed opportunity costs throughout the experiment (Brown 1992). In particular, time spent foraging in one tray took away from time available to exploit another. We positioned PIT tag readers and loggers (model SQID; Vantro Systems, Burnsville MN, USA) under each seed tray. The PIT tag readers and loggers electronically recorded every visit by a gerbil to a tray, the identity of the visitor, and the time and duration of the visit, at a resolution of 1 s.

Experimental protocol

An experimental session for each individual gerbil was composed of four consecutive three-night periods (a total of 12 nights per session). We considered the first three nights after the gerbils were introduced to the enclosure as the Training period. During this period, all four seed trays contained 4 g of millet seeds mixed into the sand. The main purpose of this period was to allow the gerbils to become familiar with the location of the resource patches and the hours in which they were available. The behavior of the gerbils during this period was not considered in our analyses. The Training period was followed by Rich period A. During this three-night period, all trays still contained 4 g of millet seeds. This period was followed by a three-night Poor period in which we reduced the amount of seeds in trays 2–4 to only 1 g, while tray 1 still contained 4 g of seeds. The last three-night period was Rich period B, in which, once again, all trays contained 4 g of millet seeds. We refer to tray 1, which maintained the initial seed density of 4 g throughout the experiment, as the rich tray. The positions of all trays were kept constant during the entire experiment. Between trials, we destroyed all existing burrows in the enclosures and made sure no cached seeds remained in them, to prevent any carry-over effects between trials. We therefore assume that resources available outside of the food patches were negligible and unchanging through time.
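The feeding schedule for one experimental session can be written down as a small table generator. This is only a restatement of the protocol above in Python (period names and seed densities come from the text; the data layout is our own choice), useful for checking that each night’s initial seed densities match the design.

```python
from typing import Dict, List

PERIODS = ["Training", "Rich A", "Poor", "Rich B"]   # consecutive three-night periods
TRAYS = (1, 2, 3, 4)
RICH_G, POOR_G = 4.0, 1.0                            # grams of millet per tray


def initial_seeds(period: str, tray: int) -> float:
    """Tray 1 is always rich; trays 2-4 drop to 1 g only in the Poor period."""
    if period == "Poor" and tray != 1:
        return POOR_G
    return RICH_G


def session_schedule(nights_per_period: int = 3) -> List[Dict]:
    """One row per night, with the period, night within period, and tray densities."""
    rows = []
    night = 1
    for period in PERIODS:
        for night_in_period in range(1, nights_per_period + 1):
            rows.append({
                "night": night,
                "period": period,
                "night_in_period": night_in_period,   # 1..3, nested within period
                "analyzed": period != "Training",     # training nights were excluded
                "seeds": {t: initial_seeds(period, t) for t in TRAYS},
            })
            night += 1
    return rows


schedule = session_schedule()
for row in schedule:
    print(row["night"], row["period"], sum(row["seeds"].values()), "g offered")
```

Summing across trays shows that the arena offered 16 g of seeds on rich nights and 7 g on poor nights, while tray 1 alone stayed at 4 g throughout.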

We repeated this experimental protocol eight times with single gerbils, each time using a different individual, and an additional seven times with different pairs of gerbils, for a total of 22 unique gerbils (8 + 7 × 2) that participated in the experiment.

Data analysis

Every night, at the end of the 3-h foraging trial, we sifted the remaining seeds from the sand in each of the trays and weighed them. The amount of seeds left in each tray is the giving up density (GUD) for that tray. As the GUD is directly related to the harvest rate of the forager at the time it quit the patch (Brown 1988; Kotler and Brown 1990), it measures the forager’s marginal benefit from the patch at the time it left (Brown 1988; Kotler and Blaustein 1995). Since optimal foragers should exploit a patch as long as their harvest rate of resources exceeds their perceived costs of foraging in the patch (Charnov 1976; Brown 1988), the GUD for a patch also indicates the marginal costs of foraging in it. We also calculated the amount of seeds harvested from each tray every night by subtracting the tray’s GUD from its initial seed density; summing these amounts over the four trays gave the total amount of food harvested by the gerbils on that night.
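The GUD bookkeeping amounts to a subtraction per tray and a sum per night. The sketch below applies it to hypothetical GUD values for one foraging unit on one night; these are not data from the experiment.

```python
from typing import Dict, Tuple


def nightly_harvest(initial: Dict[int, float],
                    gud: Dict[int, float]) -> Tuple[Dict[int, float], float]:
    """Seeds harvested per tray (initial density minus GUD) and the nightly total, in grams."""
    if initial.keys() != gud.keys():
        raise ValueError("initial and gud must cover the same trays")
    harvested = {tray: start - gud[tray] for tray, start in initial.items()}
    return harvested, sum(harvested.values())


# Hypothetical night in a rich period: every tray starts with 4 g of millet.
initial = {1: 4.0, 2: 4.0, 3: 4.0, 4: 4.0}
gud = {1: 2.5, 2: 3.0, 3: 3.5, 4: 4.0}          # grams left after the 3-h trial
per_tray, total = nightly_harvest(initial, gud)
print(per_tray, total)
```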

In addition to GUDs, we used the data obtained from the PIT tag readers and loggers to measure other foraging behaviors for each foraging unit. A foraging unit consists of either a single foraging gerbil or the combined results for a pair of gerbils; we used foraging units to avoid possible pseudoreplication arising from competitive interactions between foraging individuals. The behavioral measurements we took were: the amount of time spent in each tray, the number of visits paid to each tray, the number of switches made between trays (i.e., the number of times the gerbils moved from one tray to another), and the minimum distance traversed in a given night (calculated from the known distances between trays and the sequence of tray visits made by each foraging unit every night). This distance measure assumes that movement between trays was in a straight line and that gerbils did not engage in any other activities in between; it is therefore a minimum estimate. Because this estimate is likely to scale positively with the actual distance traveled, we used it as a proxy for overall activity during each night.
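The per-night behavioral measures can be derived from a chronological visit log. In the sketch below, both the log format `(tray, start_s, duration_s)` and the tray coordinates are hypothetical: the coordinates only mimic the described layout (a line away from the nest boxes, tray 2 to the left and tray 3 to the right), since actual distances are not reported here, and the log is not the logger’s native export format. The sketch treats the log as a single sequence; how sequences were combined for pairs is not specified beyond "combined results". The per-minute rates correspond to the switching and distance rates per minute of foraging reported in the Results.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

# Hypothetical tray coordinates in metres (not measured distances).
TRAY_XY: Dict[int, Tuple[float, float]] = {
    1: (0.0, 1.0), 2: (-0.5, 2.5), 3: (0.5, 3.5), 4: (0.0, 5.0)}

Visit = Tuple[int, int, int]   # (tray, start time in s, duration in s)


def nightly_metrics(visits: List[Visit],
                    tray_xy: Dict[int, Tuple[float, float]] = TRAY_XY) -> Dict:
    """Visits, time, switches and minimum distance for one foraging unit on one night."""
    visits = sorted(visits, key=lambda v: v[1])          # chronological order
    time_in_tray: Dict[int, int] = defaultdict(int)
    n_visits: Counter = Counter()
    for tray, _, duration in visits:
        time_in_tray[tray] += duration
        n_visits[tray] += 1

    switches, min_distance = 0, 0.0
    for (prev, _, _), (curr, _, _) in zip(visits, visits[1:]):
        if prev != curr:                                  # moved to a different tray
            switches += 1
            # Straight-line travel with no detours gives a minimum estimate.
            min_distance += math.dist(tray_xy[prev], tray_xy[curr])

    foraging_min = sum(time_in_tray.values()) / 60
    return {
        "time_in_tray": dict(time_in_tray),
        "visits": dict(n_visits),
        "switches": switches,
        "min_distance_m": min_distance,
        "switches_per_min": switches / foraging_min if foraging_min else 0.0,
        "distance_per_min": min_distance / foraging_min if foraging_min else 0.0,
    }


log = [(1, 0, 60), (1, 100, 60), (2, 200, 30), (1, 300, 30), (4, 400, 60)]
print(nightly_metrics(log))
```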

We analyzed the data using repeated measures ANOVA, with night, environmental quality treatment, and number of gerbils as categorical variables. The effects of night (ranging from 1 to 3, 1 being the first night of a given treatment and 3 being the last) were nested within the effects of treatment (Rich period A, Poor period, and Rich period B). When the assumption of sphericity was not met (analyses of switching between trays and of distance), we employed repeated measures MANOVA. We used a priori contrasts to compare specific treatments. When analyzing the GUDs for trays 2, 3, and 4, we compared only the two rich periods and did not include the poor period, because the initial seed densities differed between these treatments (4 vs. 1 g), making any observed differences trivial and uninformative. For tray 1 (for which the initial seed density was constant throughout the experiment), we compared the results for all three periods. For all analyses of trays 2, 3, and 4, which always had identical initial amounts of seeds and differed only in their location, we combined the probabilities from the three trays, which test the same null hypothesis, using a weighted Z test (Whitlock 2005). This analysis was conducted using the free MetaP software (Dongliang 2009).
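For the three interchangeable trays, the weighted Z method (Whitlock 2005) converts each one-sided p value to a standard normal deviate and combines them as $Z_w = \sum_i w_i Z_i / \sqrt{\sum_i w_i^2}$. The sketch below uses only Python’s standard library; the equal default weights are a placeholder (Whitlock recommends weights reflecting each test’s precision), the input p values are hypothetical, and it is not a reproduction of the MetaP tool’s code. SciPy offers a comparable calculation through `scipy.stats.combine_pvalues` with `method="stouffer"`.

```python
import math
from statistics import NormalDist
from typing import Optional, Sequence, Tuple


def weighted_z(p_values: Sequence[float],
               weights: Optional[Sequence[float]] = None) -> Tuple[float, float]:
    """Weighted Z-method for combining one-sided p values that test the same null.

    Z_i = Phi^-1(1 - p_i); Z_w = sum(w_i * Z_i) / sqrt(sum(w_i ** 2)).
    Returns (Z_w, combined one-sided p value).
    """
    if weights is None:
        weights = [1.0] * len(p_values)
    if not p_values or len(weights) != len(p_values):
        raise ValueError("need at least one p value and one weight per p value")
    std_normal = NormalDist()
    # -Phi^-1(p) equals Phi^-1(1 - p) but stays accurate for very small p.
    z = [-std_normal.inv_cdf(p) for p in p_values]
    z_w = sum(w * zi for w, zi in zip(weights, z)) / math.sqrt(sum(w * w for w in weights))
    return z_w, std_normal.cdf(-z_w)


# Hypothetical one-sided p values for trays 2, 3 and 4 (not the study's values).
z_w, p_combined = weighted_z([0.04, 0.10, 0.02])
print(z_w, p_combined)
```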

We used STATISTICA 10.0 software (StatSoft, Inc., Tulsa, OK, USA) for all other statistical analyses. Mean values throughout the text are presented as mean ± 1 SE.
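The mean ± 1 SE convention uses the standard error of the mean, SE = s/√n, where s is the sample standard deviation and n the number of observations. A minimal standard-library sketch, applied to hypothetical values:

```python
import math
from statistics import mean, stdev
from typing import Sequence, Tuple


def mean_se(values: Sequence[float]) -> Tuple[float, float]:
    """Mean and standard error of the mean (sample SD with n - 1, divided by sqrt(n))."""
    if len(values) < 2:
        raise ValueError("need at least two values for a standard error")
    return mean(values), stdev(values) / math.sqrt(len(values))


m, se = mean_se([2.0, 3.0, 2.5, 4.5])   # hypothetical nightly harvests in grams
print(f"{m:.2f} ± {se:.2f} g")
```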

Results

When checking for the effects of the number of gerbils participating in a trial on their foraging behavior, we found no interactions between the number of gerbils and any of the other factors—the gerbils responded in the same manner to the changes in the quality of the environment whether there was one gerbil present or two (p > 0.05 for all cases). Other effects of the number of gerbils on their foraging behavior within each treatment are reported elsewhere (Berger-Tal et al. 2014). Therefore, we concentrate here on the effects of the temporal changes to the environment on the foraging behavior of all gerbils.

One pair of gerbils (out of the 15 individuals and pairs tested) behaved very differently from the rest of the gerbils. This pair consumed much more food than any other gerbil in the first rich period and consequently created an outlier in all of our analyses (usually with values two to three times larger than the nearest data point). We therefore removed this pair from our analyses.

With the exception of one analysis (time spent in tray 1—the gerbils spent less time foraging in tray 1 on the first night of the Rich A and Poor periods compared to the second and third nights), there were no significant differences among the nights within the treatments in any of the results (p > 0.1 for all cases), and there was never an interaction between the night and any of the other factors (i.e., the order of the nights within each treatment had no effect on the gerbils’ behavior).

Food harvesting

The GUD (amount of food left in the food patch) in the rich tray (tray 1) significantly differed among the treatments (Rich A period, 3.23 ± 0.28 g; Poor period, 2.55 ± 0.44 g; Rich B period, 2.57 ± 0.38 g; F2,24 = 7.061; p = 0.004). While we found no difference between the GUDs during the Poor and Rich B periods (Fig. 1), a priori contrasts reveal that GUDs in the Rich A period were higher than those in both the Poor (t = 3.321, p = 0.006) and Rich B periods (t = 3.522, p = 0.004).

Fig. 1 The giving up densities (amount of food left in a resource patch), in grams, in the different trays. Dark gray bars represent the rich environment treatments; light gray bars represent the poor environment treatment. Tray 1’s initial seed density remained rich regardless of the treatment, while the initial seed density of trays 2, 3, and 4 in the poor treatment is represented by the dashed line. Error bars represent 1 SE

The GUDs in the trays that were poor during the Poor period (trays 2, 3, and 4) also decreased significantly between the two Rich treatments (i.e., the gerbils harvested more food in Rich period B), even though these trays all had initial seed densities of 4 g of millet during these periods (Stouffer’s z trend, p < 0.001). That is to say, even though environmental richness and initial food abundances in the trays were identical in Rich period A and Rich period B, gerbils depleted food patches to lower GUDs during Rich period B.

Overall, the amount of food the gerbils harvested from all trays each night significantly changed between the periods (Rich A, 2.46 ± 1.09 g; Poor, 1.99 ± 0.55 g; Rich B, 4.74 ± 1.26 g; F2,24 = 11.68, p < 0.001). A priori contrasts reveal that the reduction in food consumption between the Rich A period and the Poor period was not significant (t = 0.822, p = 0.427), while in Rich period B, the gerbils harvested significantly more seeds than in the Rich A period (t = 3.842, p = 0.002).

Foraging activity

The gerbils spent more time in tray 1 in the Poor and Rich B periods than in the Rich A period (F2,24 = 5.973, p = 0.008; a priori contrasts: Rich A-Poor: t = 2.993, p = 0.011; Rich A-Rich B: t = 3.070, p = 0.010; Fig. 2a). The same trend was also apparent in the number of visits to tray 1 (F2,24 = 5.868, p = 0.008; a priori contrasts: Rich A-Poor: t = 3.099, p = 0.009; Rich A-Rich B: t = 2.938, p = 0.012; Fig. 2b).

Fig. 2 The average amount of time (in seconds) the gerbils spent in a resource patch (a) and the average number of visits the gerbils made to resource patches (b) in the different treatments. The thin solid line represents time in and visits to tray 1 (the rich tray). The long dashed, short dashed, and dotted-dashed lines represent trays 2, 3, and 4, respectively. The thick solid line at the top of the graph represents the time spent in, and number of visits to, all of the trays combined. Error bars represent 1 SE

The amount of time the gerbils spent in trays 2, 3, and 4 was greater in the Rich B period than in the Poor and Rich A periods (Stouffer’s z trend, p < 0.001; Fig. 2a). This was also true for the average number of visits to these trays (Stouffer’s z trend, p < 0.001; Fig. 2b).

Overall, the gerbils spent more time foraging in trays in the second Rich period than in the other two periods (F2,24 = 5.884, p = 0.008; a priori contrasts: Rich A-Poor: t = 1.402, p = 0.186; Rich A-Rich B: t = 3.047, p = 0.010; Fig. 2a); they also visited the trays more often in Rich period B than in the other two periods (F2,24 = 8.769, p = 0.001; a priori contrasts: Rich A-Poor: t = 0.879, p = 0.400; Rich A-Rich B: t = 3.500, p = 0.004; Fig. 2b).

Lastly, the gerbils’ rate of switching between trays per minute of foraging was significantly higher in both Rich periods compared to the Poor period (F2,11 = 8.682, p = 0.005; a priori contrasts: Rich A-Poor: t = 2.177, p = 0.050; Rich B-Poor: t = 2.855, p = 0.014; Fig. 3a), as was the minimum distance the gerbils covered per minute of foraging (F2,11 = 6.525, p = 0.014; a priori contrasts: Rich A-Poor: t = 1.854, p = 0.088; Rich B-Poor: t = 2.719, p = 0.019; Fig. 3b).

Discussion

In agreement with optimal foraging theory, gerbils increased their foraging activity in the rich patch when the environment became poor. A poor environment decreases the MOC of the feeding patches, so foragers should stay in them longer and harvest more food from them. This effect should be most pronounced in the rich patch, where the initial seed density is highest. Our results therefore show that the gerbils responded to the decrease in environmental quality as optimal foragers. The behavioral response of the gerbils to the environment becoming rich again was even more pronounced. The gerbils’ overall foraging activity did not return to its previous level (despite the fact that conditions in the two rich periods were identical), but rather increased dramatically. This increase came about through greater foraging activity in the patches that used to be poor, while foraging activity in the always-rich patch remained at the high level exhibited during the poor period. This goes against the predictions for both prescient and Bayesian foraging, and therefore classic models of optimal foraging cannot explain these results.

There are at least three plausible explanations for the increase in foraging activity in the second rich period. First, the gerbils harvested less food in the poor period (although not significantly so), which may have worsened their energetic state (i.e., they became hungrier, and their marginal value of energy increased), causing them to increase food harvesting once food became abundant again. However, if the gerbils’ behavior were motivated only by state-dependent foraging, we would have expected their harvesting to decline over time once the environment became rich again, as the gerbils replenished their depleted reserves. This was not the case: the order of nights within the treatments had no effect on the gerbils’ foraging activity, which means there was no decreasing trend in foraging over the 3 nights of the second rich period. Moreover, during the Poor period, the gerbils’ seed harvest did not significantly differ from the amount needed to maintain a stable field metabolic rate (2.15 g of millet seeds; Degen 1997), whereas during the Rich B period they harvested considerably more seeds than they needed. Therefore, while the change in the foragers’ behavior is quite likely partly due to a state-dependent mechanism, our results indicate the involvement of additional behavioral mechanisms.

Experience improves performance (Rutz et al. 2006). Thus, a second explanation for the results is that as the gerbils became familiar with their surroundings, they became more accustomed to our experimental setup and more efficient at extracting resources from it over time, regardless of the feeding treatments. While we cannot completely rule out the effects of time on the gerbils’ performance (perhaps gerbils become more familiar with their surroundings at 3-day intervals), the absence of a significant effect of night within each treatment (i.e., no temporal trend in behavior within each period) does not support this explanation. Moreover, many studies show that a 3-day training period is long enough for rodents to become familiar with an experimental feeding setup and to stabilize their GUDs (e.g., Pastro and Banks 2006; Shaner et al. 2007). Still, to better disentangle the effects of learning and time on the gerbils’ behavior from the alternative explanations, an additional control group for which food does not vary throughout the experiment will be needed. We hope to address this issue in future experiments.

Most optimal foraging models consider the value of a given environmental quality as absolute, regardless of the level of knowledge the forager is assumed to have. However, research on a variety of species, ranging from bacteria to humans, clearly shows that organisms view their environment subjectively (e.g., Tversky and Simonson 1993; Shafir et al. 2002; Latty and Beekman 2011). Therefore, the third explanation for the results is that the gerbils experienced a positive contrast effect that induced context-dependent estimates of the quality of the environment. According to this explanation, once the foragers knew that the resource patches could become poor, they treated the patches in the rich period as more valuable. Unlike before, during the second Rich period, the gerbils possessed information that the environment can change, and that it can change for the worse. This should affect the future prospects of the foragers, which in turn should alter their foraging behavior (Brown 1988, 1992; Kotler et al. 1999; van der Merwe et al. 2014). Gerbils are food hoarders, caching food for future use, so their foraging decisions should reflect a balance between their present and future needs. Experiencing a poor environment greatly increased the future value of food, changing the marginal value of time (λ) so that foraging in the patches became a much more valuable activity (Kotler et al. 1999). In other words, to compensate for possible unforeseen future poor periods, positive contrast effects caused the foragers to treat each patch as more valuable (decreasing the MOC for the patch in the process) and to increase their foraging activity in it. Indeed, numerous theoretical and empirical studies show that animals should store more food in stochastic environments (Brodin and Clark 2007).

Despite the positive contrast effects exhibited in the second rich period, the gerbils did not seem to be affected by negative contrast effects in the poor period. As noted above, negative contrast is expected to decrease the foraging activity of the gerbils and increase their searching for alternative resources (Freidin et al. 2009). However, the gerbils did not reduce their foraging activity in the poor period (Figs. 1 and 2), and their inter-patch movement decreased, rather than increased, in the poor period (Fig. 3). Presumably, the low costs of foraging in our experimental arena (the gerbils were kept in a predator-free environment, and competition was experienced only by gerbils tested in pairs and was limited to a single competitor) reduced the gerbils’ motivation to decrease their foraging activity even in an environment perceived as low quality (i.e., there was less reason to stop foraging and wait for rich conditions to return when foraging is relatively cost-free). Future studies should examine contrast effects under different predation pressures to test this hypothesis.

To conclude, we found that animals’ estimate of the environment can be context-dependent and that foragers can treat environments that are seemingly identical as strikingly different, depending on their knowledge and experiences. This can have a direct effect on the missed opportunity cost of foraging in a patch, making past experience an important component of optimal foraging theory.

References

Abramsky Z, Brand S, Rosenzweig ML (1985) Geographical ecology of gerbilline rodents in sand dune habitats of Israel. J Biogeogr 12:363–372

Bar Y, Abramsky Z, Gutterman Y (1984) Diet of gerbilline rodents in the Israeli desert. J Arid Environ 7:371–376

Bedoya-Perez MA, Carthey AJR, Mella VSA, McArthur C, Banks PB (2013) A practical guide to avoid giving up on giving-up densities. Behav Ecol Sociobiol 67:1541–1553

Bentosela M, Jakovcevic A, Elgier AM, Mustaca AE (2009) Incentive contrast in domestic dogs (Canis familiaris). J Comp Psychol 123:125–130

Berger-Tal O, Avgar T (2012) The glass is half-full: overestimating the quality of a novel environment is advantageous. PLoS One 7:e34578

Berger-Tal O, Mukherjee S, Kotler BP, Brown JS (2010) Complex state-dependent games between owls and gerbils. Ecol Lett 13:302–310

Berger-Tal O, Embar K, Kotler BP, Saltz D (2014) Everybody loses: intraspecific competition induces tragedy of the commons in Allenby’s gerbils. Ecology. doi:10.1890/14-0130.1

Brodin A, Clark CW (2007) Energy storage and expenditure. In: Stephens DW, Brown JS, Ydenberg RC (eds) Foraging: behavior and ecology. University of Chicago, Chicago, pp 221–269

Brown JS (1988) Patch use as an indicator of habitat preference, predation risk, and competition. Behav Ecol Sociobiol 22:37–47

Brown JS (1992) Patch use under predation risk. I. Models and predictions. Ann Zool Fenn 29:301–309

Brown JS, Kotler BP (2004) Hazardous duty pay: studying the foraging cost of predation. Ecol Lett 7:999–1014

Charnov EL (1976) Optimal foraging, the marginal value theorem. Theor Popul Biol 9:129–136

Degen AA (1997) Ecophysiology of small desert mammals. Springer, Berlin

Dongliang G (2009) MetaP software, http://compute1.lsrc.duke.edu/softwares/MetaP/metap.php. Accessed 29 Dec 2012

Flaherty CF (1996) Incentive relativity. Cambridge University, Cambridge

Freidin E, Cuello MI, Kacelnik A (2009) Successive negative contrast in a bird: starlings’ behaviour after unpredictable negative changes in food quality. Anim Behav 77:857–865

Green RF (2006) A simpler, more general method of finding the optimal foraging strategy for Bayesian birds. Oikos 112:274–284

Kobre KR, Lipsitt LP (1972) A negative contrast effect in newborns. J Exp Child Psychol 14:81–91

Kotler BP, Blaustein L (1995) Titrating food and safety in a heterogeneous environment: when are the risky and safe patches of equal value? Oikos 74:251–258

Kotler BP, Brown JS (1990) Rates of seed harvest by two species of gerbilline rodents. J Mammal 71:591–596

Kotler BP, Brown JS, Slotow R, Goodfriend W, Strauss M (1993) The influence of snakes on the foraging behavior of gerbils. Oikos 67:307–318

Kotler BP, Brown JS, Hickie M (1999) Food storability and the foraging behavior of fox squirrels (Sciurus niger). Am Midl Nat 142:77–86

Kotler BP, Brown JS, Dall SRX, Gresser S, Ganey D, Bouskila A (2002) Foraging games between owls and gerbils: temporal dynamics of resource depletion and apprehension in gerbils. Evol Ecol Res 4:495–518

Kotler BP, Brown JS, Mukherjee S, Berger-Tal O, Bouskila A (2010) Moonlight avoidance in gerbils reveals a sophisticated interplay among time allocation, vigilance, and state dependent foraging. Proc R Soc Lond B 277:1469–1474

Latty T, Beekman M (2011) Irrational decision-making in an amoeboid organism: transitivity and context-dependent preferences. Proc R Soc Lond B 278:307–312

MacArthur RH, Pianka ER (1966) An optimal use of a patchy environment. Am Nat 100:603–609

McNamara JM, Green RF, Olsson O (2006) Bayes’ theorem and its applications in animal behaviour. Oikos 112:243–251

McNamara JM, Fawcett TW, Houston AI (2013) An adaptive response to uncertainty generates positive and negative contrast effects. Science 340:1084–1086

Molokwu MN, Olsson O, Nilsson J-A, Ottosson U (2008) Seasonal variation in patch use in a tropical African environment. Oikos 117:892–898

Olsson O, Brown JS (2006) The foraging benefits of information and the penalty of ignorance. Oikos 112:260–273

Olsson O, Brown JS (2010) Smart, smarter, smartest: foraging information states and coexistence. Oikos 119:292–303

Olsson O, Molokwu MN (2007) On the missed opportunity cost, GUD, and estimating environmental quality. Isr J Ecol Evol 53:263–278

Olsson O, Wiktander U, Nilsson SG (2000) Daily foraging routines and feeding effort of a small bird feeding on a predictable resource. Proc R Soc Lond B 267:1457–1461

Pastro LA, Banks PB (2006) Foraging responses of wild house mice to accumulations of conspecific odor as a predation risk. Behav Ecol Sociobiol 60:101–107

Pecoraro NC, Timberlake WD, Tinsley M (1999) Incentive downshifts evoke search repertoires in rats. J Exp Psychol Anim Behav Process 25:153–167

Persson A, Stenberg M (2006) Linking patch-use behavior, resource density, and growth expectations in fish. Ecology 87:1953–1959

Pyke G (1982) Optimal foraging in bumblebees: rule of departure from an inflorescence. Can J Zool 60:417–428

Rutz C, Whittingham MJ, Newton I (2006) Age-dependent diet choice in an avian top predator. Proc R Soc Lond B 273:579–586

Shafir S, Waite TA, Smith BH (2002) Context-dependent violations of rational choice in honeybees (Apis mellifera) and grey jays (Perisoreus canadensis). Behav Ecol Sociobiol 51:180–187

Shaner P-J, Bowers M, Macko S (2007) Giving-up density and dietary shifts in the white-footed mouse, Peromyscus leucopus. Ecology 88:87–95

Stephens DW, Krebs JR (1986) Foraging theory. Princeton University, Princeton

Tversky A, Simonson I (1993) Context-dependent preferences. Manag Sci 39:1179–1189

Valone TJ, Brown JS (1989) Measuring patch assessment abilities of desert granivores. Ecology 70:1800–1810

van der Merwe M, Brown JS, Kotler BP (2014) Quantifying the future value of cacheable food using fox squirrels (Sciurus niger). Isr J Ecol Evol. doi:10.1080/15659801.2014.907974

Vickery WL, Rieucau G, Doucet GJ (2011) Comparing habitat quality within and between environments using giving up densities: an example based on the winter habitat of white-tailed deer Odocoileus virginianus. Oikos 120:999–1004

Whitlock MC (2005) Combining probability from independent tests: the weighted Z-method is superior to Fisher’s approach. J Evol Biol 18:1368–1373

Acknowledgments

We wish to thank Ishai Hoffman for his invaluable assistance in the preparation of the experimental setup. O. B-T is supported by the Adams Fellowship Program of the Israel Academy of Sciences and Humanities. This study was funded by an ISF grant 1397/10. This is publication number 847 of the Mitrani Department of Desert Ecology.

Ethical standards

This research complies with all current laws of the state of Israel and meets all of its ethical standards. This research was carried out under permit 37697/2011 from the Israel Nature and Parks Authority.

Additional information

Communicated by P. B. Banks

About this article

Cite this article

Berger-Tal, O., Embar, K., Kotler, B.P. et al. Past experiences and future expectations generate context-dependent costs of foraging. Behav Ecol Sociobiol 68, 1769–1776 (2014). https://doi.org/10.1007/s00265-014-1785-9

DOI: https://doi.org/10.1007/s00265-014-1785-9