Abstract

Charles Darwin aided his private decision making by an explicit deliberation, famously deciding whether or not to marry by creating a list of points in a table with two columns: “Marry” and “Not Marry”. One hundred seventy-two years after Darwin’s wedding, we reconsider whether this process of choice, under which individuals assign values to their options and compare their relative merits at the time of choosing (the tug-of-war model), applies to our experimental animal, the European Starling, Sturnus vulgaris. We contrast this with the sequential choice model, which postulates that decision-makers make no comparison between options at the time of choice. According to the latter, behaviour in simultaneous choices reflects adaptations to contexts with sequential encounters, in which the choice is whether to take an opportunity or let it pass. We postulate that, in sequential encounters, the decision-maker assigns (by learning) a subjective value to each option, reflecting its payoff relative to background opportunities. This value is expressed as the latency and/or probability of accepting each opportunity rather than continuing to search. In simultaneous encounters, choice occurs through each option being processed independently, by a race between the mechanisms that generate option-specific latencies. We describe these alternative models and review data supporting the predictions of the sequential choice model.

Introduction

When Charles Darwin set out to decide whether or not to marry his cousin, Emma Wedgwood, he found himself facing a difficult decision. To help himself decide about the advantages of marrying (apparently regardless of the identity of the partner), he wrote his two possible actions (“Marry” and “Not Marry”) at the top of a split page and listed the pros and cons of each option under the corresponding heading. At the bottom of the page, he seems to have weighed the relative strength of the arguments, writing “Marry. Marry. Marry. Q.E.D.”, and a few months later, he did indeed marry (Darwin 1838). This method offers a starting point for the theoretical analysis of decision processes. In modern terminology, these columns might have been labelled “go” and “no go”. More than half a century earlier, Benjamin Franklin (1772) had described his own preferred method for dealing with hard decisions in a letter to Joseph Priestley. Franklin explained that, when faced with complex decisions involving a number of opposing factors, he would write the pros in one column and the cons in another. He would then simplify the dilemma by crossing out elements of equivalent weight in each column until only a few pros or cons remained, thus determining the right course of action. He called his method “Moral, or Prudential Algebra”. It is tempting to assume that something similar to these procedures might be embodied in the cognitive mechanisms underlying choice in humans and other species, but, as we will discuss here, this assumption deserves close scrutiny. An implicit property of any such process would be that choosing involves a number of cognitive operations and should thus take time: acting in the presence of a single alternative should be faster than acting when a choice between two or more is required. We contest this intuition both theoretically and empirically.

Motivated by a number of empirical observations (reviewed later), we propose instead that, when two options are simultaneously available, animals do not explicitly deliberate between two options, but instead rely on the same process as when facing independent, sequential opportunities. When an animal faces a single foraging opportunity, it can choose whether to chase it or skip it. Many factors can exert influence, including the payoff of the present option relative to other opportunities available in the environment. Let us assume the animal decides to chase the prey. Action, of course, is not synchronous with perception, and the time between perception and action is of interest here. We argue that the duration of this latency to act reflects a tendency to skip the prey in favour of continuing to forage. Latency is not the minimum processing time in the neural path between perception and action (the reaction time), but the sum of the minimal processing time and a motivational component that expresses (or, in behavioural terms, is) the willingness to chase that prey type. Across sequential encounters with various prey types, the animal builds up a library of subjective values, each corresponding to a prey type and generating a probability density function of latencies to chase that prey type in different encounters. The functional expectation, from an optimal foraging perspective, is that the central tendency and spread of the resulting latency distribution will be a decreasing function of the expected fitness gain from that prey type relative to the lost opportunity in the whole environment. For simplicity, we are treating the environment and the resulting library of prey types used by the decision-maker as static. This is valid for the laboratory tests we discuss presently, but, in natural situations, an additional complication may arise from how the organism tracks the temporal variations in its field of opportunities.

The core question for this article is what happens when two prey types are met simultaneously. This, we surmise, is easily and frequently arranged in the laboratory, but probably rare in natural hunting situations. Quantitative estimates of the relative frequency of simultaneous versus sequential encounters are hard to achieve because there will always be some difference, however minor, in the moment of perception and the proximity of the stimuli relevant to each item. Indeed, categorising an encounter as simultaneous may be a matter of setting a threshold for the temporal/spatial proximity between multiple stimuli. We assume, however, that encountering multiple stimuli close enough to qualify as simultaneous is rare enough not to have led to the development of special neuro-behavioural adaptations to deal with them optimally. Simultaneous choices are therefore dealt with using the same mechanisms that evolved for efficient handling of sequential encounters. When multiple stimuli overlap, these mechanisms operate in parallel: each option sets in motion a process that would normally generate a latency and then action, but the option that generates the shorter latency “censors” the alternative(s), so that only one of them is expressed behaviourally and only one latency is measured. In other words, we postulate that each simultaneous choice is treated as a set of independent, non-interfering sequential opportunities. Crucially, there is no comparative evaluation of the merits of each available option at the time of choice. Most alternative models of choice, however, assume some sort of comparison at the time of choice.

At the heart of conventional models of decision processes lies the assumption that there is a trade-off between the accuracy of choice and cost of evaluation and that this implies longer time costs in simultaneous than sequential encounters. This is a natural assumption whether the agent has incomplete knowledge of the alternatives’ parameters or is fully acquainted with them. When knowledge is incomplete (for instance, when facing stochastic options of unknown probabilities), it may be adaptive to allocate a number of initial responses to gain knowledge and optimise the balance between exploration and exploitation, so that a short-term exploratory cost is paid to decrease the long-term cost of errors (Krebs and Kacelnik 1978). The optimal amount of behaviour allocated to exploration increases with the number of alternatives, since exploration gives no gains when the agent only faces one option.

If the agent deals instead with fully known sources of reward, the same logic applies to the handling of previously encoded information. Facing two or more options can be expected to call for some evaluation of previous experience, and the information processing this entails should cause a greater latency to act (the time cost of the evaluation) than when no choice is faced. Under this scenario, delay to act is a time cost and should be minimal when only one option is present, as there is no point in paying any evaluation cost if there is nothing to choose.

This intuitive logic also applies to mechanistic (rather than functional) models of choice. The chain from perception of potential targets to action might be thought to be longer when facing more than one option, since competition for control of the behavioural final common path must involve more cognitive operations for a greater number of options. When only one option is present, action may not involve any competition and should thus be faster.

Triggered by some recent findings in the decision behaviour of starlings (Sturnus vulgaris), we examine the two previously mentioned alternative views of choice processes. We start with an informal description of the models, then discuss them from a functional perspective and offer some formalisation. Finally, we move on to contrast their predictions against empirical results. Although we present two basic approaches, we are aware that they are not the only possibilities, but hope that they are sufficiently broad to illustrate the main issues to which other theoretical constructs could be related. We call them, for reasons that will become clear, the tug-of-war model (ToW) and the sequential choice model (SCM). A detailed review of a set of models dealing with forced choices between two alternatives has been presented by Bogacz et al. (2006). These authors focused on an experimental paradigm (the two-alternative forced choice) in which a complex ambiguous stimulus is presented and subjects choose between two responses (left or right), only one of which is correct. We deal, instead, with subjects facing either one or two foraging alternatives that differ in their attractiveness and examine behaviour when either a single alternative is present or both are presented simultaneously. Our primary emphasis here is not on optimality in the process by which the subject judges the correctness of two contrasting hypotheses, but on narrowing down the plausible processes by which subjects may use stored information to reach action.

Models of choice mechanisms

We assume that, as an animal experiences a source of reward in a given context, it develops a certain valuation of it. This valuation is sensitive to three factors. The first is the absolute properties of each source, particularly the ratio of amount of reward to the delay between action and outcome (e.g. Bateson and Kacelnik 1996; Shapiro et al. 2008). The second is the state of the subject during learning about it: sources encountered under greater need are valued more highly as they yield greater effective utility (e.g. Marsh et al. 2004; Pompilio et al. 2006; Vasconcelos and Urcuioli 2008). The third is the available alternatives in the same context: a source is more highly valued the lower the average gains in the environment where it is typically found (e.g. Fantino and Abarca 1985; Pompilio and Kacelnik 2010). From a functional point of view, this scenario relates to the sequential choice situations that dominate optimal foraging theory models (e.g. Charnov 1976a, b; see also Houston 2010). One could assume that the dominant selective pressure shaping present-day mechanisms of choice was the balance between accuracy of choice among simultaneous opportunities and the time costs of evaluating each choice. We assume instead that the prevalent conflict was between taking a given opportunity and letting it pass to pursue what the background environment had to offer. Hence our explicit reference to sequential choices.

To model how valuation is transduced into action in such choice situations, both models make the same assumption about what happens when a decision-maker encounters a stimulus associated with a source of reward. A set of neurons (not necessarily contiguous) starts discharging at a given (stochastic) rate $R_i$, where $i$ indicates the option (see Fig. 1, top panel). The rate of discharge is assumed to be positively related to the subjective value of the option encountered, and, as explained earlier, this value is a function of learning. The output $R_i$ of this assemblage is then integrated to produce a quantity $S_i$ that expresses the instantaneous tendency of the subject to respond to the option. When, after responding, the stimulus disappears, $S_i$ is reset to zero. We refer to the time elapsed between the onset of the stimulus and action as the “latency” to respond. For a given option, this latency varies from one sequential encounter to the next because of the stochasticity in the neurons’ discharge rate. Consequently, across encounters, an observer can build up a specific distribution of latencies to accept each of the options available in the environment. The two models we contrast differ, however, in the way tendencies to act compete for behavioural expression when more than one option is simultaneously available, although in both of them preference is constructed at the time of choice (e.g. Slovic 1995).
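The single-option process just described can be sketched as a stochastic accumulator. This is a minimal sketch under assumptions of ours, not the authors’ implementation. We assume that discharges arrive as a Poisson process with mean rate `rate` (standing in for $R_i$), that $S_i$ is the running count of discharges, and that action occurs when $S_i$ first reaches a fixed `threshold`. The function name and all parameter values are hypothetical. Repeating the process over many encounters yields the latency distribution an observer would record for that option.

```python
import numpy as np


def sequential_latencies(rate, threshold=20, n_encounters=1000, dt=0.01,
                         max_time=20.0, seed=0):
    """Simulate latencies (s) to act when a single option is met alone.

    Hypothetical model: discharges arrive as a Poisson process with mean
    `rate` per second (standing in for R_i); their running count is the
    integrated signal S_i; action occurs when S_i first reaches `threshold`.
    Encounters that never reach threshold within `max_time` return NaN.
    """
    rng = np.random.default_rng(seed)
    n_steps = int(round(max_time / dt))
    # Discharges in each time step, one row per encounter (R_i, discretised).
    spikes = rng.poisson(rate * dt, size=(n_encounters, n_steps))
    s = np.cumsum(spikes, axis=1)          # integrated signal S_i over time
    reached = s >= threshold
    first_step = reached.argmax(axis=1)    # index of first threshold crossing
    latencies = (first_step + 1) * dt      # time at the end of that step
    latencies[~reached.any(axis=1)] = np.nan
    return latencies


# A more valuable option (higher discharge rate) yields shorter latencies.
rich = sequential_latencies(rate=20.0)
lean = sequential_latencies(rate=10.0, seed=1)
print(np.nanmedian(rich), np.nanmedian(lean))
```

With these placeholder values, the mean latency is close to `threshold / rate`, which is about 1 s for the richer option and 2 s for the leaner one. Under this sketch, latency therefore falls as the option’s assumed value (its discharge rate) rises.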

Top panel: Block diagram of a sequential choice. R rate of neuronal discharge, S integrated signal, t threshold. Bottom left panel: Block diagram of a simultaneous choice according to the ToW model. R rate, S integrated signal, t threshold; summation nodes denote a sum, where black indicates negative input. Bottom right panel: Block diagram of a simultaneous choice according to the SCM. R rate, S integrated signal, t threshold

Tug-of-war

In this model (see Fig. 1, bottom left panel), when the stimuli relevant to two or more potential targets are simultaneously present, their relative instantaneous tendencies to generate action are computed. In the figure, this is implemented as the difference between the $S_i$ values. We have no empirical access to the quantitative values of $R_i$ or $S_i$, however, and the precise comparison operation is not crucial for our argument. What is important is that, at this stage, the subject computes an index of the relative attractiveness of the options and somehow ranks them. In the figure’s example, when the difference in attractiveness exceeds a given threshold, the animal takes action, with the selected target depending on which of the values is larger (i.e. on the sign of the difference). The left panel of Fig. 2 illustrates the same idea in a different format. Here, the difference between $S_A$ and $S_B$ progresses irregularly in an imaginary trial (because of the stochasticity of $R_A$ and $R_B$) until it reaches a threshold, at which point action occurs. The probability distributions shown above and below the threshold lines indicate the latencies to act that an observer would see across trials when either option is chosen.

Left panel: progression of ΔS (see Fig. 1) in an imaginary trial. Right panel: progression of $S_A$ and $S_B$ in an imaginary trial. When one signal reaches the threshold, the other is censored. A and B represent frequency distributions of latencies observed when each option is chosen

Because the signals from the candidate options compete, they can be seen as tugging the input to the behavioural final common path in opposite directions. Take the extreme case, for the sake of illustration, in which two equally valuable targets are present and the process has no noise. Their difference might then never reach the action threshold, and the decision-maker would be caught in the dilemma of Buridan’s Ass, which died between two piles of hay because it could not choose between them (Zupko 2006). In a more plausible case, action towards each candidate should take longer in the presence of competitors than when the same option is encountered alone. In the context of Fig. 1 (bottom left panel), if, say, $S_B = 0$, then the difference is just $S_A$. This passes the threshold for action more easily than when $S_B$ detracts from the overall sum (i.e. when $S_B > 0$). Thus, the latency distributions observed when only one option is present would be shifted to the left with respect to those shown in the left panel of Fig. 2.

Different versions of this model could place the evaluation before the integration steps, use a proportional ratio [say $S_A/(S_A + S_B)$] rather than a difference for the comparison, or use different thresholds for each action. None of these variations is important for our present purposes, as will become clear below. The core of this model is that any process involving a competitive evaluation between attractors is likely to have a time cost, because an index of attractiveness must be computed and the options ranked before action takes place.
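For contrast, a tug-of-war version can be sketched with hypothetical Poisson accumulators of the same kind as in the single-option sketch. Here action occurs when the difference $\Delta S = S_A - S_B$ first reaches `+threshold` (choose A) or `-threshold` (choose B). This is only one of the variants mentioned above (a difference rather than a ratio, with one symmetric threshold), and all names and parameter values are our assumptions. Setting `rate_b=0` reproduces a single-option encounter with A. The sketch therefore shows directly the time cost of evaluation that the ToW model predicts.

```python
import numpy as np


def tug_of_war(rate_a, rate_b, threshold=20, n_trials=500, dt=0.01,
               max_time=30.0, seed=0):
    """Latencies (s) and choices under a hypothetical tug-of-war process.

    Returns (latencies, chose_a); undecided trials get a NaN latency and
    chose_a=False.
    """
    rng = np.random.default_rng(seed)
    n_steps = int(round(max_time / dt))
    s_a = np.cumsum(rng.poisson(rate_a * dt, size=(n_trials, n_steps)), axis=1)
    s_b = np.cumsum(rng.poisson(rate_b * dt, size=(n_trials, n_steps)), axis=1)
    delta = s_a - s_b                       # the "tug" between the two signals
    reached = np.abs(delta) >= threshold
    decided = reached.any(axis=1)
    first_step = reached.argmax(axis=1)
    latencies = np.where(decided, (first_step + 1) * dt, np.nan)
    # The sign of the difference at the crossing determines the chosen option.
    sign_at_crossing = delta[np.arange(n_trials), first_step]
    chose_a = decided & (sign_at_crossing > 0)
    return latencies, chose_a


alone, _ = tug_of_war(rate_a=20.0, rate_b=0.0)           # A met alone
choice, chose_a = tug_of_war(rate_a=20.0, rate_b=10.0, seed=1)
print(np.nanmean(alone), np.nanmean(choice), chose_a.mean())
```

In this sketch, the less attractive competitor slows the net drift of $\Delta S$, so latencies to choose A in simultaneous trials are longer than when A is met alone, even though A is chosen on nearly every trial.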

The intuitively expected increase in choice time with the number of options has empirical support in humans, where it is encapsulated in the Hick–Hyman law (Hick 1952; Hyman 1953), an empirical regularity that is popular in human–computer interaction studies. The Hick–Hyman law states that the time $T$ required to make a choice among $n$ items is $T = b \log_2(n + 1)$, where $b$ is a fitted parameter and $n$ is the number of alternatives in the set. Thus, time to choose should increase sub-linearly (logarithmically) with the number of options. The view that adding options makes choice more demanding and time-consuming is widely accepted and is frequently described as the “Paradox of Choice” (Schwartz 2004).
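As a worked illustration of the Hick–Hyman law, the sketch below evaluates $T = b \log_2(n + 1)$ for a few set sizes. The value of `b` is an arbitrary placeholder, not an estimate from any study. The comparison relevant here is between two options and one: $\log_2 3 / \log_2 2 \approx 1.58$. The law therefore implies that choosing between two options should take roughly 58% longer than responding to one, which is the kind of time cost the ToW model predicts.

```python
import math


def hick_hyman_time(n, b):
    """Choice time T = b * log2(n + 1) for n alternatives (b is fitted)."""
    return b * math.log2(n + 1)


b = 0.2  # hypothetical slope in seconds, for illustration only
for n in (1, 2, 4, 8):
    print(n, round(hick_hyman_time(n, b), 3))

# Relative cost of a two-option choice versus a single option.
print(round(hick_hyman_time(2, b) / hick_hyman_time(1, b), 3))
```

Because the ratio does not depend on `b`, the predicted proportional slowdown from one to two options is the same whatever value is fitted.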

Sequential choice model

This model differs from the previous one in that it does not include a comparative evaluation between the tendencies to respond to each option. Instead, cross-censorship occurs at the final stage (see Fig. 1, bottom right panel). Here, each $S_i$ is compared with a threshold for action independently. When one of them reaches the threshold, the agent responds, the stimuli for all options go away and all $S_i$ are reset to zero. This simple process has an interesting consequence for the empirical observation of latencies to act. When two options are present, each act of choice produces a latency observation for the candidate that reached the threshold first, but no information about the aborted process for the other options. Observed latencies thus show the result of cross-censorship between the underlying latency distributions for each candidate option. By “underlying” we mean the distribution of latencies to act observed when that option is encountered multiple times without competition. As a result of this cross-censorship, latencies to act recorded during simultaneous choices should be shorter than those for encounters with single options. This is because censorship suppresses the underlying distributions asymmetrically, censoring longer-than-average samples more severely than shorter-than-average ones. This is shown in the right panel of Fig. 2, where, in a given trial, both tendencies to act race towards the threshold. The winning tendency is expressed as a latency, while the losing one remains unobserved. Because of stochasticity, each option should have a higher chance of winning in trials in which its rate $R_i$ has been faster than average. More latencies will therefore be recorded from the left than from the right tail of each underlying distribution.

Notice that this prediction has the opposite sign to that of the ToW model: rather than a time cost of evaluation, we predict a shortening of the delay to act when two stimuli are present, relative to when an option is encountered alone. When two candidate options that differ in attractiveness are met, the more attractive option will rarely be censored. In most choice trials, therefore, its latency will be expressed and that of the alternative will not. This means that the latency distribution for the less preferred option will be more severely censored than that of the preferred option. In fact, the few values we do observe for the less preferred option are only those that out-compete the latencies of the preferred option, and so are drawn disproportionately from the left tail of its underlying latency distribution. This leads to another prediction: the shortening of latencies to act in choice relative to sequential encounters should be more pronounced for the option that is chosen less frequently.
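The cross-censorship argument can be checked numerically. For illustration only, the sketch below assumes that sequential-encounter latencies are lognormal. Option A’s median (1 s) is half that of option B (2 s), and both share a log-scale spread of 0.5; these distributions and numbers are hypothetical. In simulated simultaneous trials, each option draws an independent latency, the shorter one is expressed and the other is censored. Both options then show shorter latencies in choice than when met alone, and the shortening is proportionally larger for the less preferred option, B.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 200_000

# Hypothetical latency distributions observed in sequential encounters.
seq_a = rng.lognormal(mean=np.log(1.0), sigma=0.5, size=n)
seq_b = rng.lognormal(mean=np.log(2.0), sigma=0.5, size=n)

# Simultaneous trials: independent samples race, and the shorter one wins.
race_a = rng.lognormal(mean=np.log(1.0), sigma=0.5, size=n)
race_b = rng.lognormal(mean=np.log(2.0), sigma=0.5, size=n)
chose_a = race_a < race_b
choice_lat_a = race_a[chose_a]     # only A's winning latencies are observed
choice_lat_b = race_b[~chose_a]    # B is observed only when it beats A

p_a = chose_a.mean()
shortening_a = 1 - choice_lat_a.mean() / seq_a.mean()
shortening_b = 1 - choice_lat_b.mean() / seq_b.mean()
print(f"P(choose A) = {p_a:.3f}")
print(f"relative shortening: A {shortening_a:.2f}, B {shortening_b:.2f}")
```

No comparison step appears anywhere in this sketch. Preference for A (about 0.84 with these settings) and the asymmetric shortening both follow from the race alone.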

In summary, we assume that only the decision mechanisms involved in sequential encounters have been shaped by natural selection (i.e. there are no special adaptations for simultaneous choices) and that these are co-opted whenever two or more options are presented simultaneously. On these assumptions, we arrive at a model that challenges the conventional expectation of a time cost for choosing. Importantly, the model makes several experimentally testable predictions. In essence, the SCM implies that (a) the valuation process involves learning and reflects the attractiveness of the present option relative to the background of opportunities, (b) cross-censorship between the processes generating latencies to act leads to shorter latencies during simultaneous choices than during sequential encounters, particularly for the less preferred option and (c) partial preferences between options faced simultaneously should be predictable from behaviour (i.e. latencies) during sequential encounters.

The predictions of the SCM can be made more mathematically explicit, without loss of generality. Suppose that sequential encounters with options A and B generate latency distributions with densities $f_A$ and $f_B$ and cumulative distributions $\Phi_A$ and $\Phi_B$, respectively. When these two options are met simultaneously, the probability of choosing A is simply the probability $P_A$ that a sample from $f_A$ is shorter than an independent sample from $f_B$, and is given by

$$P_A = P(l_A < l_B) = \int_0^{\infty} f_A(x)\,\left[1 - \Phi_B(x)\right]dx \tag{1}$$

where $l_A$ and $l_B$ are random samples from the respective distributions and $x$ is a particular latency value.

Equation 1 can be used to predict the distribution of latencies for each option in choice trials. For A, this is given by the conditional probability that $l_A = x$ given that $l_A < l_B$ in that trial, for all $x$. Formally, we seek $F(R \mid S)$, where $R$ means $x < l_A \le x + dx$ and $S$ means $l_A < l_B$. Generally,

$$F(R \mid S) = \frac{F_1(R,S)}{F_2(S)} \tag{2}$$

where $F_1(R,S)$ gives the probability of joint occurrence of $R$ and $S$, and $F_2(S)$ gives the probability of $S$ independently of $R$. We can express $F_1$ as

$$F_1(R,S) = f_A(x)\,\left[1 - \Phi_B(x)\right]dx \tag{3}$$

Since $F_2(S)$ is given by Eq. 1, we can write Eq. 2 as

$$F(R \mid S) = \frac{f_A(x)\,\left[1 - \Phi_B(x)\right]}{\int_0^{\infty} f_A(y)\,\left[1 - \Phi_B(y)\right]dy}\,dx \tag{4}$$

Eq. 4 predicts the distribution of latencies in choice trials from the distributions of latencies in sequential encounters (i.e. latencies observed when only one option is present). Mean latencies during choice are obtained by integrating $x$, weighted by this function, over all latencies.
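Equations 1 and 4 can be evaluated numerically for any pair of sequential-encounter latency distributions. The sketch below is our own illustration, not code from the studies discussed. It integrates on a grid with the trapezoidal rule. As a check, it uses exponential latency distributions, chosen because the answers are known in closed form rather than because they are realistic latency models: $P_A = \lambda_A/(\lambda_A+\lambda_B)$, and the mean choice latency is $1/(\lambda_A+\lambda_B)$ for both options. Take the hypothetical rates $\lambda_A = 1$ and $\lambda_B = 0.5$ per second, which give sequential means of 1 s and 2 s. The SCM then predicts $P_A = 2/3$ and a mean choice latency of 2/3 s for both options, a 33% shortening for A but a 67% shortening for B.

```python
import numpy as np


def trapezoid(y, x):
    """Trapezoidal integral of samples y over grid x."""
    return float(np.sum((y[1:] + y[:-1]) * np.diff(x)) / 2.0)


def scm_predictions(pdf_a, cdf_a, pdf_b, cdf_b, x):
    """Predicted P_A and mean choice latencies of A and B under the SCM.

    pdf_*/cdf_* describe latencies in sequential encounters (f and Phi in
    the text); x is an integration grid starting at 0 and extending far
    enough into the right tails that the omitted mass is negligible.
    """
    f_a, f_b = pdf_a(x), pdf_b(x)
    surv_a, surv_b = 1.0 - cdf_a(x), 1.0 - cdf_b(x)
    p_a = trapezoid(f_a * surv_b, x)   # Eq. 1: P(l_A < l_B)
    p_b = trapezoid(f_b * surv_a, x)   # Eq. 1 with A and B swapped
    g_a = f_a * surv_b / p_a           # Eq. 4: density of A's choice latencies
    g_b = f_b * surv_a / p_b           # same for B
    return p_a, trapezoid(x * g_a, x), trapezoid(x * g_b, x)


lam_a, lam_b = 1.0, 0.5                # hypothetical rates (1/s)
x = np.linspace(0.0, 40.0, 40_001)
p_a, mean_a, mean_b = scm_predictions(
    lambda t: lam_a * np.exp(-lam_a * t), lambda t: 1.0 - np.exp(-lam_a * t),
    lambda t: lam_b * np.exp(-lam_b * t), lambda t: 1.0 - np.exp(-lam_b * t),
    x,
)
print(round(p_a, 4), round(mean_a, 4), round(mean_b, 4))
```

Empirical latency distributions could be plugged in the same way, for example through kernel density estimates and empirical cumulative distribution functions. This is a possible extension, not a procedure reported in the studies reviewed here.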

Tug-of-war versus sequential choice: empirical tests of the two models

Latencies as an expression of a valuation process

Thus far, all explicit tests of the SCM have been performed with European starlings working for food reinforcement in operant chambers. Previous research (e.g. Bateson and Kacelnik 1995; Reboreda and Kacelnik 1991) had already suggested that latencies in no-choice trials offer an orderly view of preferences in choice trials, particularly when options are risky. More recent studies have probed the relationship between latencies and preferences more directly. Shapiro et al. (2008) trained starlings in multiple environments, each defined by two possible options. Each option was signalled by a different symbol on a response key and offered a particular combination of food amount and delay to food (henceforth, we use the terms rate or profitability to refer to the ratio between amount and delay). Note that throughout we use “delay” to refer to the time elapsed between an animal’s action and its consequences. In computations of actual rates of return, the time between each outcome and the next choice opportunity should also be included (including such components as inter-trial intervals, search, travel and handling times). We assume that these times form part of the valuation of each option: the sparser the encounters with opportunities, the higher the valuation of all options in that context. However, since these times affect all options in the same direction, the learning mechanism that attributes responsibility for specific outcomes to particular stimuli downgrades their influence. Consequently, times other than delays between actions and outcomes play a small role in the choices we discuss (for an explicit discussion of this point, see Kacelnik 2003).

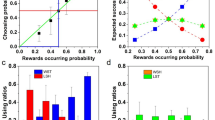

The main assumptions and predictions of the SCM can be tested independently. In terms of assumptions, the SCM depends crucially on a relationship between two quantities. One is the latency to respond to an opportunity encountered on its own; the other is the benefit that would result from taking that opportunity given the background alternatives. The top panel of Fig. 3 shows the median latencies to accept each option in sequential encounters (i.e. when only one option was present), as observed by Shapiro et al. (2008). These are plotted as a function of the option’s rate and the rate of the alternative option in that environment. The average of individuals’ median latencies to respond depends both on the rate of the option present in any particular sequential encounter and on the rate of the alternative in that treatment, even though this alternative is not present at the time. As predicted, latencies to accept each option decrease as a function of the option’s profitability and increase as a function of the profitability of the background alternative. Since Shapiro et al. tested this effect within individuals, it is clear that the effect depends on learning. Thus, the main assumption of the SCM is fulfilled.

Top panel: Median latencies, averaged across subjects, to accept each option during sequential (single-option) encounters as a function of the option’s rate (amount/delay) and the rate of the alternative option that can potentially be encountered in that environment. Statistical analyses confirmed a significant effect of the option’s rate, the rate of the alternative and their interaction (adapted from Shapiro et al. 2008). Bottom panel: dots represent the average proportion of choices (−SEM) of R (the “reject” key) in trials offering a choice between B (the leaner of the reinforced alternatives) and R; bars show median latencies (+SEM) in sequential encounters with option B, averaged across subjects (adapted from Freidin et al. 2009)

From a functional perspective, we have argued that latency variation reflects a tendency to skip opportunities in favour of the background. This is supported by Freidin et al. (2009). In this study, starlings were again trained with two options that led to food, A and B, each signalling a different delay to reinforcement. Option A always signalled a delay of 1 s, while option B signalled a delay of 4, 8, 12, 16.8 or 24 s, depending on condition. However, the birds also encountered a third option (R) that was never reinforced. R gave them the chance to terminate the current trial and advance to the next one after the usual inter-trial interval. As expected, during sequential encounters, latencies to accept the better option (A) were very short (average of individuals’ median latencies <1.0 s), with little variation across conditions. Latencies to accept B, in contrast, were not only longer than those for A in every condition but also increased significantly as B’s rate decreased (Fig. 3, bottom panel). This study also supports the functional rationale of the SCM, as R allowed the birds to actively reject options in favour of continuing to forage for alternatives. When A and R were presented together, birds chose R (i.e. rejected A) on less than 1% of the trials, independently of the profitability of B in that treatment. The pattern in B vs. R trials was quite different: birds increasingly rejected B as its signalled delay increased, up to a rejection level of 73%. This trend follows the predictions of Charnov’s (1976a) diet choice model. What is crucial for our present discussion, however, is that longer delays to food were associated with both longer latencies to accept the option and an increasing probability of rejecting it when given the opportunity.

These results suggest a strong correlation between latencies to accept an option and willingness to reject it (r = 0.99 in this particular study), supporting the logic of the SCM and the assumption that latencies observed during sequential encounters are an index of the option’s attractiveness relative to the background opportunities.

While these observations are a necessary condition for the SCM, they are also compatible with the alternative that we called the tug-of-war model. However, the two models make different predictions, which we now examine.

Latency predictions: shortening of latencies during simultaneous choices

As previously mentioned, the SCM makes the rather counterintuitive prediction that latencies observed during simultaneous choices should be shorter than those observed during sequential encounters, and that this effect should be stronger for the less preferred option. In contrast, the ToW model predicts that latencies in simultaneous encounters should be longer than in sequential ones, owing to the time taken to evaluate the options’ relative worth. Latencies observed by Shapiro et al. (2008) support the shortening predicted by the SCM. To compare latency distributions during sequential and simultaneous encounters, the authors summarised these distributions as cumulative frequency scores. They divided latencies into 70 bins and calculated the proportion of all latencies accounted for by each bin. The cumulative score was then given by the total area under the cumulative distribution plot. High scores capture shorter latencies to respond because, in such cases, most latencies fall in early bins (the maximum possible score of 70 occurs when all latencies fall in the first bin). Figure 4 plots the differences between the cumulative scores of the latency distributions observed during sequential and simultaneous encounters (sequential minus simultaneous). Negative values therefore indicate shorter latencies during simultaneous encounters than during sequential encounters. The figure neatly contrasts the predictions of the two models: the ToW model predicts bars to the right of the origin, while the SCM predicts them to the left. For the richer option (A; upper panel), there were no substantial differences between the latency distributions in simultaneous and sequential encounters, regardless of whether A’s rate was two or four times that of B (grey and black bars, respectively). However, for the less preferred option (B; lower panel), latencies during simultaneous encounters were significantly shorter than those observed during sequential encounters with B, whether the rate of A was two or four times that of B (grey and black bars, respectively).
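The cumulative frequency score can be sketched as follows. Measuring area in units of bin width, the area under the cumulative distribution is the sum of the cumulative proportions over the 70 bins. This gives the maximum of 70 when every latency falls in the first bin. The bin placement used by Shapiro et al. (2008) is not given here, so the common `edges` argument is our assumption, as are the function name and the example latencies. The same edges are shared by the sequential and choice distributions being compared and must span all latencies, because `np.histogram` ignores values outside them.

```python
import numpy as np


def cumulative_score(latencies, edges):
    """Area under the binned cumulative latency distribution (in bin widths)."""
    counts, _ = np.histogram(latencies, bins=edges)
    proportions = counts / counts.sum()
    return float(np.cumsum(proportions).sum())


edges = np.linspace(0.0, 7.0, 71)          # 70 bins of 0.1 s (hypothetical)
sequential = np.array([0.55, 1.05, 2.05])  # hypothetical latencies (s)
choice = np.array([0.15, 0.25, 0.35])

# Sequential minus choice: negative means shorter latencies in choice trials.
difference = cumulative_score(sequential, edges) - cumulative_score(choice, edges)
print(round(difference, 3))
```

Here the choice latencies sit in earlier bins, so the difference is negative, which is the direction the SCM predicts.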

Differences between latency distribution scores observed during sequential and simultaneous encounters (modified from Shapiro et al., 2008). To summarise shifts in the distributions of latencies, these authors generated cumulative frequency scores using the areas under the cumulative distributions, so that higher scores indicate shorter latency distributions. The category axis displays the difference between these scores, with negative values corresponding to shorter latencies in choice than in single-option trials. The vertical axis gives frequency as number of individuals. Latencies to options A and B are shown in the top and bottom panel, respectively. Grey and black bars identify treatments in which option A’s profitability was twice or four times that of option B, respectively. Vertical, dashed lines indicate no differences in cumulative scores between choice and single-option trials

Thus, the expected shift towards shorter latencies in simultaneous relative to sequential encounters is supported by the data, and, as expected, this shift is stronger for the least preferred (most censored) option. This prediction is hard to test in experiments in which the preference for one of the options approaches exclusivity, because the latency distribution of the preferred option is then hardly censored at all, and the sample of choice latencies for the least preferred option can be too small to assess. A floor effect is also expected, because latencies are likely to be composed of two elements: a minimum reaction time and a facultative, potentially variable “strategic” latency related to the value of each option relative to its context. When this value is very high, the strategic component is close to zero, and all we see in either choice or single-option trials is the reaction time, so the shift simply cannot occur.
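Both the censoring argument and the floor effect can be illustrated with a small simulation of a race between independent latencies. This is a sketch under stated assumptions, not a fit to starling data: each latency is taken to be a fixed reaction time plus an exponentially distributed “strategic” delay, and the reaction time and mean delays below are hypothetical values chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
REACTION_TIME = 0.5  # hypothetical fixed minimum reaction time (s): the "floor"


def sample_latencies(mean_strategic, n):
    """Latency = reaction time + exponential 'strategic' delay (an assumed form)."""
    return REACTION_TIME + rng.exponential(mean_strategic, size=n)


def simulate(mean_a, mean_b, n=100_000):
    """Median latency shift (choice minus single-option) for each option under a race."""
    # Single-option (sequential) trials: each option's latency is expressed in full.
    seq_a = sample_latencies(mean_a, n)
    seq_b = sample_latencies(mean_b, n)
    # Choice (simultaneous) trials: independent latencies race and the shorter one wins,
    # so each option's choice latencies are a censored subset of its own distribution.
    race_a = sample_latencies(mean_a, n)
    race_b = sample_latencies(mean_b, n)
    a_wins = race_a < race_b
    return {
        "p_choose_a": float(a_wins.mean()),
        "shift_a": float(np.median(race_a[a_wins]) - np.median(seq_a)),
        "shift_b": float(np.median(race_b[~a_wins]) - np.median(seq_b)),
    }


res = simulate(mean_a=1.0, mean_b=4.0)      # A preferred: shorter strategic delay
# Approximately: p_choose_a 0.8, shift_a -0.14 s, shift_b -2.2 s
floor = simulate(mean_a=0.01, mean_b=0.02)  # both options near the reaction-time floor
# Here both shifts are close to zero: only the reaction time is visible.
```

Negative shifts mean shorter latencies in choice trials. Under these assumptions the shortening is small for the preferred option A and large for the less preferred option B, and it nearly vanishes when the strategic component is negligible.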

Predicting simultaneous choice from sequential encounters

A further prediction, exclusive to the SCM, is that we should be able to predict choices made when facing more than one alternative simultaneously from the latencies expressed during sequential encounters with those same alternatives. Several studies suggest that such predictions are not only possible but also surprisingly accurate (Shapiro et al. 2008; Freidin et al. 2009; Aw 2008; Vasconcelos et al. 2010).

Shapiro et al. (2008) tested this prediction in the study discussed above. To this end, they trained starlings in several environments, each composed of two options, A and B, where A signalled a rate of reinforcement equal to, twice or four times that of B. They calculated the preference for A predicted by the model from latencies observed during sequential encounters with A and with B, and compared these predictions with the observed levels of preference in simultaneous choice trials. To avoid making assumptions regarding the shape of the latency distributions, the authors calculated the proportion of consecutive pairs of sequential encounters (one with A and the other with B) in which the latency for A was shorter than the latency for B, and used that proportion as their overall prediction of preference strength for A. The top panel of Fig. 5 shows the results of this analysis. The slope of the resulting regression was close to one and the intercept close to zero, with R² ranging from 0.93 to 0.94 depending on whether or not the intercept was constrained to zero. This implies a very high level of accuracy in the predictions.
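The distribution-free prediction just described can be sketched as below. The pairing rule is an assumption made for illustration: the time-ordered log of single-option trials is scanned, adjacent A/B encounters are paired without overlap, and ties count as half a win. The function name and the log format are hypothetical.

```python
def predicted_preference_for_a(trials):
    """Proportion of adjacent A/B sequential-encounter pairs in which A's latency is shorter.

    trials: time-ordered list of (option, latency) tuples from single-option trials.
    Assumptions: pairs do not overlap; ties count as half a win.
    """
    wins, pairs, i = 0.0, 0, 0
    while i < len(trials) - 1:
        (opt1, lat1), (opt2, lat2) = trials[i], trials[i + 1]
        if {opt1, opt2} == {"A", "B"}:
            lat_a, lat_b = (lat1, lat2) if opt1 == "A" else (lat2, lat1)
            if lat_a < lat_b:
                wins += 1.0
            elif lat_a == lat_b:
                wins += 0.5
            pairs += 1
            i += 2  # move past both members of the pair
        else:
            i += 1  # two encounters with the same option: slide forward by one
    return wins / pairs if pairs else float("nan")


log = [("A", 1.2), ("B", 3.0), ("B", 2.5), ("A", 0.9), ("A", 4.0), ("B", 1.0)]
prediction = predicted_preference_for_a(log)  # A shorter in 2 of 3 pairs -> 2/3
```

Each subject’s predicted proportion can then be regressed against its observed proportion of A choices in simultaneous trials, which is the comparison shown in the top panel of Fig. 5.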

Fig. 5 Top panel: scatter plot of the obtained (ordinate) versus SCM-predicted (abscissa) proportion of choices for the option with the higher rate. Each rate is represented by a different symbol. Two linear regression lines, fitted to the across-subject proportions, are shown together with their parameters and regression coefficients (computed from the averaged obtained results; from Shapiro et al. 2008). Bottom panel: proportion of choices (±SEM) accurately predicted by the SCM, averaged across subjects and binary-choice pairs, as a function of the number of preceding sequential encounters used to predict each individual choice. The weighted moving average was calculated as \( \frac{\sum_{i=1}^{n} l_{-i}\, i^{-1}}{\sum_{i=1}^{n} i^{-1}} \), where n = 1, 2, 4, 8, 16 or 32 and \( l_{-i} \) is the latency on the i-th most recent sequential encounter with the option (from Vasconcelos et al. 2010)

While the previous analysis shows that it is possible to predict overall preference strength in simultaneous choices from latencies expressed during sequential encounters, such aggregate predictions may fail to capture local fluctuations in motivation or preference. Latencies registered in sequential encounters should be expected to predict choices in temporally close trials better than choices in trials that are temporally distant from those in which the latencies were measured. Furthermore, while Shapiro et al. (2008) compared the predicted and observed proportions of choices of A aggregated across their data set, it should be possible to make a specific prediction for each choice based on the latencies of sequential encounters that occur in its temporal vicinity.

The performance of the model in terms of global and local predictions, although presumably correlated, can in fact be dissociated. Any model capable of predicting each individual choice is necessarily also accurate over aggregates of choices, but the reverse is not necessarily true: a model that performs well over aggregates of choices can fail to predict each particular choice (e.g. Prokasy and Gormezano 1979). Suppose that an animal encounters options A and B simultaneously ten times and chooses A in the first five encounters and B in the last five. A model could predict a choice of B in the first five and of A in the last five. Overall, the model’s predictions would match the animal’s preferences (the model would predict that A would be chosen 50% of the time, and the animal would have chosen A in exactly that proportion). However, for each particular simultaneous encounter, there would be a complete mismatch between prediction and observed behaviour (every time the model predicted a choice of A, the animal chose B, and vice versa). Such an extreme situation is admittedly unlikely, but the point remains that global (or “molar”, as they are sometimes called) predictions are not identical to local (or “molecular”) predictions, and models that can be tested at both levels should be examined at both.
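The ten-trial example can be checked directly. The short sketch below simply encodes the hypothetical sequences described in the text and computes the global (molar) match and the local (molecular) accuracy.

```python
# Hypothetical sequences from the text: the animal chooses A five times, then B five times,
# while the model predicts the reverse order.
observed = ["A"] * 5 + ["B"] * 5
predicted = ["B"] * 5 + ["A"] * 5

# Global (molar) level: proportion of A choices over the whole block.
global_observed = observed.count("A") / len(observed)    # 0.5
global_predicted = predicted.count("A") / len(predicted)  # 0.5

# Local (molecular) level: proportion of individual choices predicted correctly.
local_accuracy = sum(o == p for o, p in zip(observed, predicted)) / len(observed)  # 0.0
```

The two aggregate proportions agree exactly, yet not a single choice is predicted correctly.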

Vasconcelos et al. (2010) set out to test the predictions of the SCM at global and local levels, training starlings with four different options, each signalling a different delay to food reward (3, 6, 12 or 24 s). Starlings encountered these options either alone (sequential encounters) or in simultaneous presentations of two of the four options, which could form any of six possible pairs (simultaneous choices). This was, by design, a stricter test of the model than those conducted by Shapiro et al. (2008) and Freidin et al. (2009): starlings were trained not with two but with four options in the same context and faced not one type of simultaneous binary choice but six possible pairings. Again, instead of fitting theoretical functions to the distributions of latencies, Vasconcelos et al. (2010) used the empirical latencies recorded during sequential encounters to generate the local predictions. To do so, they used an algorithm developed by Aw (2008): for each simultaneous encounter, they took the latencies from the most recent sequential encounters with each of the two options present and predicted that the option with the shorter latency would be chosen. Using this rather simple procedure, the authors accurately predicted 84% of all individual choices.
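The most-recent-latency rule can be sketched as follows. The trial-log format, the option labels and the handling of edge cases are assumptions for illustration: choice trials for which either option has no earlier single-option latency are skipped, and exact ties are resolved in favour of the second option.

```python
def most_recent_rule_accuracy(trials):
    """Proportion of choice trials correctly predicted by the most recent single-option latencies.

    trials: time-ordered log of ("single", option, latency) and
            ("choice", (option_x, option_y), chosen_option) tuples (hypothetical format).
    """
    last_latency = {}  # option -> latency on its most recent single-option trial
    correct = scored = 0
    for trial in trials:
        if trial[0] == "single":
            _, option, latency = trial
            last_latency[option] = latency
        else:
            _, (x, y), chosen = trial
            if x in last_latency and y in last_latency:
                predicted = x if last_latency[x] < last_latency[y] else y
                correct += predicted == chosen
                scored += 1
    return correct / scored if scored else float("nan")


log = [
    ("single", "3s", 1.0),
    ("single", "12s", 4.0),
    ("choice", ("3s", "12s"), "3s"),  # predicted 3s: correct
    ("single", "6s", 2.0),
    ("choice", ("6s", "12s"), "6s"),  # predicted 6s: correct
    ("choice", ("3s", "6s"), "6s"),   # predicted 3s: wrong
    ("choice", ("6s", "24s"), "6s"),  # skipped: no latency yet for 24s
]
accuracy = most_recent_rule_accuracy(log)  # 2 of 3 scored choices
```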

If the success of this local approach is due to the algorithm’s sensitivity to local fluctuations in preference, it could be argued that such fluctuations would be better captured by a weighted average of latencies in the temporal vicinity of the choice. To test this possibility, Vasconcelos et al. (2010) generated predictions using a weighted average of recent sequential encounters, including 2, 4, 8, 16 or 32 sequential encounters per option. They used a hyperbolically decaying moving average to account for recency, with each latency’s weight inversely proportional to its distance (in number of trials) from the simultaneous choice being predicted. This may not be the optimal decay function for the temporal aggregation of latencies, but despite this shortcoming, the results were surprising: the model’s accuracy in predicting individual choices increased monotonically with the number of sequential encounters considered per option (Fig. 5, bottom panel). When the 32 most recent sequential encounters per option were considered, the model accurately predicted 93% of all choices.
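The hyperbolically weighted variant, with weights \( i^{-1} \) for the i-th most recent latency as in the Fig. 5 caption, can be sketched as below. It is an illustration only: the log format is the same hypothetical one as in a most-recent-latency sketch, and when fewer than n latencies are available for an option, the sketch averages whatever is available (an assumption). With n = 1 it reduces to the most-recent-latency rule.

```python
from collections import defaultdict, deque


def weighted_latency(history, n):
    """Hyperbolically weighted mean of up to n most recent latencies.

    history is ordered oldest -> newest; the i-th most recent latency gets weight 1/i.
    """
    recent = list(history)[-n:][::-1]  # most recent first
    weights = [1.0 / i for i in range(1, len(recent) + 1)]
    return sum(w * lat for w, lat in zip(weights, recent)) / sum(weights)


def weighted_rule_accuracy(trials, n):
    """trials: time-ordered ("single", option, latency) and ("choice", (x, y), chosen) tuples."""
    histories = defaultdict(lambda: deque(maxlen=n))  # keep only the last n latencies per option
    correct = scored = 0
    for trial in trials:
        if trial[0] == "single":
            _, option, latency = trial
            histories[option].append(latency)
        else:
            _, (x, y), chosen = trial
            if histories[x] and histories[y]:
                lx = weighted_latency(histories[x], n)
                ly = weighted_latency(histories[y], n)
                correct += (x if lx < ly else y) == chosen
                scored += 1
    return correct / scored if scored else float("nan")


log = [("single", "A", 1.0), ("single", "A", 3.5), ("single", "B", 3.0), ("choice", ("A", "B"), "A")]
```

In this toy log the single most recent latency of A (3.5) is atypically long, so n = 1 mispredicts the choice, whereas averaging over n = 2 recent encounters recovers it; this is the kind of local noise that longer weighted windows can smooth out.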

In summary, the SCM’s predictions appear to be fulfilled. Both Shapiro et al. (2008) and Vasconcelos et al. (2010) clearly show that latencies expressed during sequential encounters can be used to predict simultaneous choices. Most importantly, the model is able to generate both global and local predictions with considerable accuracy. The alternative model that we discussed, which involves a comparative evaluation at the time of simultaneous encounters, does not, in its present form, predict choices from behaviour in sequential encounters.

Concluding remarks

We have contrasted the predictions and assumptions of two models of the mechanisms of foraging choice, using starlings as a model organism. The main distinction between the two models is the putative temporal cost of the cognitive operations leading to a choice. We found empirically that the time starlings take to respond to an option met in the presence of a simultaneous alternative is shorter than the time they take to respond to the same option presented alone.

On the basis of these and other collateral pieces of evidence, we favour a model in which choices between simultaneously present opportunities result from each alternative being processed independently at the time of choice. Since foraging opportunities encountered individually are taken after a delay that is variable and inversely related to the context-dependent profitability of each option, the choice process resembles a horse race rather than a tug-of-war.

Although we framed our paper in a concrete, empirically based setting, we are aware that it raises broader issues in the sphere of quantitative approaches to behavioural research. These issues include (1) the extent to which it is possible to produce mathematical expressions that, with few parameters and acceptable generality, model cognitive mechanisms of choice; (2) the relation between functional (optimality) considerations and mechanistic modelling approaches; (3) the criteria that are appropriate for preferring one model or class of models over another; and (4) the role of parsimony in this last respect. We use these concluding remarks to explore some of the ways in which we feel our research relates to these questions.

The problem of model uniqueness is central to all of this. Because information-processing mechanisms are invisible, they must be inferred from behaviour (in humans, introspection contributes, albeit in a potentially misleading way, at least to the formulation of hypotheses). This implies that, for any given behavioural data set, there may be an infinite number of models capable of simulating the qualitative properties of the data, but it does not preclude rejecting classes of models. For the experimental protocols used here, we can discard models that lead to a time cost of choice. We cannot, however, discard all models that use some form of evaluation at the time of choice but differ from our preferred proposal of independent, parallel processing. As an example, imagine a model like our ToW (Fig. 1, bottom left) but in which the instantaneous attractiveness values (\( S_i \)) of the options are summed at the time of choice instead of their difference being computed. This model would both include an evaluation component and show shorter times to action in choices than in sequential encounters, because the simultaneously present options would act synergistically, rather than competitively, shortening the time required to reach the threshold for action.
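The contrast between difference-based, summation-based and independent (race) processing can be made concrete with a deliberately simplified accumulator. This sketch assumes noise-free, linear accumulation of a constant drive towards a hypothetical threshold `THETA`, so the time to act is the threshold divided by the drive; it is not the model of Fig. 1, only an illustration of how the three combination rules order the times to action.

```python
THETA = 1.0  # hypothetical action threshold


def time_to_threshold(drive, theta=THETA):
    """Time for a noise-free linear accumulator with constant drive to reach theta."""
    return float("inf") if drive <= 0 else theta / drive


def single(s):
    """One option presented alone (sequential encounter)."""
    return time_to_threshold(s)


def race(s_a, s_b):
    """Independent processing: each option accumulates on its own; the first to finish acts."""
    return min(time_to_threshold(s_a), time_to_threshold(s_b))


def tug_of_war(s_a, s_b):
    """Comparative evaluation: only the difference in attractiveness drives the response.

    Under this simplistic form, equal attractiveness gives no net drive (infinite time).
    """
    return time_to_threshold(abs(s_a - s_b))


def summation(s_a, s_b):
    """Synergistic evaluation: the attractiveness values of both options add up."""
    return time_to_threshold(s_a + s_b)


s_a, s_b = 2.0, 1.0  # hypothetical attractiveness values (A preferred)
times = {
    "single A": single(s_a),                # 0.5
    "race": race(s_a, s_b),                 # 0.5 (never longer than either single-option time)
    "tug-of-war": tug_of_war(s_a, s_b),     # 1.0 (a time cost of choice)
    "summation": summation(s_a, s_b),       # about 0.33 (faster than either alone)
}
```

In this deterministic version the race merely never slows responding. The shortening predicted by the SCM for the less preferred option arises once latencies are variable and choice latencies are censored by the competing option. The tug-of-war form predicts a time cost, whereas summation, despite involving evaluation, predicts faster responding, which is why the data cannot exclude it.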

Our leaning towards the parallel processing model instead of synergistic evaluation reflects a preference for parsimony in two respects. The first is a preference for simpler processes. According to the SCM, there is no dedicated mechanism evolved to optimise the control of simultaneous choices: the same system that drives action in sequential encounters results in choices when options happen to be met simultaneously. Heyes (1998) forcefully made the point that parsimony may be unjustified if it is based only on a given interpretation being simpler for us to deal with, rather than simpler for the brain to implement. We agree with her point and feel that our preference for the SCM is based on the latter rather than the former. A related, second argument can be derived from evolutionary considerations. It is reasonable to assume, although it has not yet been tested empirically, that in nature foraging animals encounter feeding opportunities sequentially more often than simultaneously (Stephens and Krebs 1986). This may be particularly so for secondary consumers, whose prey are likely to be sparser and more mobile. If two kinds of prey are encountered during search, each with an independent probability distribution of intervals, the probability of a simultaneous encounter will be the product of the two individual encounter probabilities. Admittedly, prey encounters may not be totally independent, since prey of different species may aggregate, but only very extreme and implausible aggregation parameters would make simultaneous encounters as frequent as sequential ones. As a result, natural selection has greater opportunity to optimise mechanisms that regulate behaviour in sequential than in simultaneous encounters. If mechanisms that are suitable for sequential encounters perform satisfactorily and account well for data in choice situations, it seems inappropriate to postulate the existence of dedicated mechanisms for choice.
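The product rule can be illustrated with a simple worked calculation. Assume, purely for illustration, that within some short time window the searcher meets a prey item of type A with probability `p_a` and one of type B with probability `p_b`, independently. The probability of meeting exactly one type (a sequential-type encounter) then greatly exceeds that of meeting both at once.

```python
def encounter_probabilities(p_a, p_b):
    """Per-window probabilities, assuming independent encounters with prey types A and B."""
    only_one = p_a * (1 - p_b) + p_b * (1 - p_a)  # sequential-type encounter: A or B alone
    both = p_a * p_b                               # simultaneous encounter: A and B together
    return only_one, both


one, both = encounter_probabilities(0.1, 0.1)  # about 0.18 versus 0.01
```

With these hypothetical values, single-option encounters are about 18 times as frequent as simultaneous ones, and the ratio grows as prey become sparser.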

Admittedly, animals face behavioural decisions other than prey choice, and some have proposed that such decisions may often involve simultaneous choice. If this were indeed the case, then mechanisms pruned by natural selection to deal efficiently with such simultaneous choices could be co-opted to deal with simultaneously available prey. Sexual selection offers a paradigmatic example here. It has been suggested, for example, that simultaneous choice is involved in female choice when males display in a common area, a lek (e.g. Bradbury and Gibson 1983), and when females use acoustic cues to choose (e.g. Catchpole et al. 1984; Ryan and Wilczynski 1988). But even these claims are, at least for the time being, controversial, as females probably approach displaying males sequentially (e.g. Ryan 1985; Gibson 1990). In most other female choice situations, it seems uncontroversial that females evaluate males sequentially (e.g. Janetos 1980; Wittenberger 1983; Parker 1983; Real 1990, 1991; Bakker and Milinski 1991; Gibson and Langen 1996).

At the risk of belabouring the point, a further methodological consideration is worth mentioning. We operationalised the contrast between sequential and simultaneous encounters using trials in which only one or two options were available. The fact that we can predict choices in two-option trials from behaviour in single-option trials raises the question of whether this is simply a correlation or implies a causal link. We favour the latter because there is a substantial asymmetry: while it is possible to predict choices in two-option trials from latencies in single-option trials, the reverse is not possible. The data from single-option trials contain more information than those from choice trials.
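The informational asymmetry can be illustrated analytically. Assume, for illustration only, that latencies to each option are independent and exponentially distributed; the probability that A’s latency is the shorter one is then its rate divided by the sum of the two rates. Latency distributions therefore fix the choice proportion, but very different latency distributions can produce the same choice proportion, so choices alone cannot recover the latencies. The function name and the mean latencies below are hypothetical.

```python
def p_a_first(mean_a, mean_b):
    """P(latency_A < latency_B) for independent exponential latencies (an assumed form)."""
    rate_a, rate_b = 1.0 / mean_a, 1.0 / mean_b
    return rate_a / (rate_a + rate_b)


fast = p_a_first(1.0, 2.0)   # mean latencies of 1 s and 2 s
slow = p_a_first(5.0, 10.0)  # mean latencies of 5 s and 10 s
# Both equal 2/3 (up to rounding): the choice proportion cannot tell these environments apart.
```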

One caveat relates to the broader application of our results and ideas. No set of experimental results can be used to completely exclude a model or class of models in situations or species different from those on which the ideas are tested. We have used a number of laboratory choice paradigms on a single species, and in all cases, we found that assuming independent, parallel processing of each input accommodates the results better than assuming a competitive interaction between them. At this stage, there is no way of telling whether some yet-unexplored experimental protocol or physical configuration might eventually reveal an evaluation mechanism at the time of choice, whether starlings’ choices in other dimensions or after further training might lead to different conclusions, or whether other species might use different choice processes. Confidence in our hypothesis grows inductively: the greater and more diverse the number of protocols in which the parallel approach wins, the greater our confidence in its correctness and its broader implications.

While fitting empirical results is a positive metric, other factors come into the picture. Different models differ in the conceptual bridges they establish with other fields. For instance, a strong tradition in animal decision-making research relates to the logic of optimal foraging theory, where decision rules (sometimes called rules of thumb or heuristics) are assumed to have evolved as a consequence of their efficiency in achieving maximal rates of return. The two most influential optimal foraging models are those proposed by Charnov to analyse rate-maximising diet choice (1976a) and rate-maximising patch exploitation (the marginal value theorem, 1976b). These models, and many later developments, incorporate the economic principle of contrasting the value of a given option against that of the background environment. This comparison may explain the presence of substantial latencies to respond in single-option trials: the latency to respond may reflect search effort for alternatives. Hence, when an option is the worst in its environment, an animal facing it might spend some time searching for alternatives. This was corroborated by a design in which a “reject” action was included (Freidin et al. 2009): the animals used the rejection key only when facing the worst option in the environment. This result was consistent with the predictions of the diet choice model and was predicted by the latencies to respond in encounters with the same option when the rejection key was not present, suggesting that the SCM efficiently implements the rate-maximising diet choice model.
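The comparison of an option against the background environment can be illustrated with the textbook rate-maximising prey (diet) model (Stephens and Krebs 1986). In this sketch, prey types are ranked by profitability e/h, and each type is added to the diet only if its profitability exceeds the long-term rate \( R = \sum \lambda_i e_i / (1 + \sum \lambda_i h_i) \) achieved with the higher-ranked types alone. The prey names and parameter values are hypothetical, and the sketch is offered only to show the logic of the rule, not the analyses of Freidin et al. (2009).

```python
def optimal_diet(prey):
    """Classic rate-maximising prey model.

    prey: list of (name, encounter_rate, energy, handling_time) tuples.
    Returns the types to accept and the resulting long-term rate of gain.
    """
    ranked = sorted(prey, key=lambda p: p[2] / p[3], reverse=True)  # by profitability e/h
    diet, gain, cost = [], 0.0, 1.0  # R = sum(lam * e) / (1 + sum(lam * h))
    for name, lam, e, h in ranked:
        if e / h <= gain / cost:  # not more profitable than the background rate: reject
            break
        diet.append(name)
        gain += lam * e
        cost += lam * h
    return diet, gain / cost


rich = optimal_diet([("big", 0.5, 10.0, 2.0), ("small", 0.5, 1.0, 1.0)])
# -> (["big"], 2.5): "small" (profitability 1) falls below the background rate and is rejected
poor = optimal_diet([("big", 0.01, 10.0, 2.0), ("small", 0.5, 1.0, 1.0)])
# When "big" is rare, the background rate drops and "small" is accepted as well.
```

In this framework the option that is rejected is always the one whose profitability falls below what the environment offers, which matches the pattern of rejection-key use described above.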

Our continuing aim is to further explore the performance of this model across a larger number of experimental protocols and animal species, but present empirical results give us reasons for optimism for the predictive power of the SCM.

References

Aw J (2008) Decisions under uncertainty: common processes in birds, fish and humans. University of Oxford, Dissertation

Bakker TCM, Milinski M (1991) Sequential female choice and the previous male effect in sticklebacks. Behav Ecol Sociobiol 29:205–210

Bateson M, Kacelnik A (1995) Preferences for fixed and variable food sources: variability in amount and delay. J Exp Anal Behav 63(3):313–329

Bateson M, Kacelnik A (1996) Rate currencies and the foraging starling: the fallacy of the averages revisited. Behav Ecol 7(3):341–352

Bogacz R, Brown E, Moehlis J, Holmes P, Cohen JD (2006) The physics of optimal decision making: a formal analysis of models of performance in two-alternative forced-choice tasks. Psychol Rev 113(4):700–765

Bradbury JW, Gibson RM (1983) Leks and mate choice. In: Bateson P (ed) Mate choice. Cambridge University Press, Cambridge, pp 109–138

Catchpole CK, Dittami J, Leisler B (1984) Differential responses to male song repertoires in female songbirds implanted with oestradiol. Nature 312:563–564

Charnov EL (1976a) Optimal foraging: attack strategy of a mantid. Am Nat 110:145–156

Charnov EL (1976b) Optimal foraging: the marginal value theorem. Theor Pop Biol 9:129–136

Darwin CR (1838) ‘This is the question Marry Not Marry’ [Memorandum on marriage]. CUL-DAR210.8.2 (Darwin Online, http://darwin-online.org.uk/)

Fantino E, Abarca N (1985) Choice, optimal foraging, and the delay-reduction hypothesis. Behav Brain Sci 8:315–329

Franklin B (1772) Letter to Joseph Priestley. In: Franklin B (1987) Writings. The Library of America, New York, pp 877–878

Freidin E, Aw J, Kacelnik A (2009) Sequential and simultaneous choices: testing the diet selection and sequential choice models. Behav Process 80:218–223

Gibson RM (1990) Relationship between blood parasites, mating success and phenotypic cues in male sage grouse Centrocercus urophasianus. Am Zool 30:271–278

Gibson RM, Langen TA (1996) How do animals choose their mates? Trends Ecol Evol 11:468–470

Heyes C (1998) Theory of mind in nonhuman primates. Behav Brain Sci 21:101–148

Hick WE (1952) On the rate of gain of information. Quart J Exp Psychol 4:11–26

Houston A (2010) Central-place foraging by humans: transport and processing. Behav Ecol Sociobiol. doi:10.1007/s00265-010-1119-5

Hyman R (1953) Stimulus information as a determinant of reaction time. J Exp Psychol 45:188–196

Janetos AC (1980) Strategies of female mate choice: a theoretical analysis. Behav Ecol Sociobiol 7:107–112

Kacelnik A (2003) The evolution of patience. In: Loewenstein G, Read D, Baumeister R (eds) Time and decision: economic and psychological perspectives on intertemporal choice. Russell Sage Foundation, New York, pp 115–138

Krebs JR, Kacelnik A, Taylor P (1978) Tests of optimal sampling by foraging great tits. Nature 275:27–31

Marsh B, Schuck-Paim C, Kacelnik A (2004) Energetic state during learning affects foraging choices in starlings. Behav Ecol 15(3):396–399

Marshall J, McNamara J, Houston A (2010) The state of Darwinian theory. Behav Ecol Sociobiol. doi:10.1007/s00265-010-1121-y

Parker GA (1983) Mate quality and mating decisions. In: Bateson P (ed) Mate choice. Cambridge University Press, Cambridge, pp 141–166

Pompilio L, Kacelnik A (2010) Context-dependent utility overrides absolute memory as a determinant of choice. PNAS 107(1):508–512

Pompilio L, Kacelnik A, Behmer ST (2006) State-dependent learned valuation drives choice in an invertebrate. Science 311:1613–1615

Prokasy WF, Gormezano I (1979) The effect of US omission in classical aversive and appetitive conditioning of rabbits. Anim Learn Behav 7(1):80–88

Real L (1990) Search theory and mate choice. I. Models of single-sex discrimination. Am Nat 136:376–405

Real L (1991) Search theory and mate choice. II. Mutual interaction, assortative mating, and equilibrium variation in male and female fitness. Am Nat 138:901–917

Reboreda JC, Kacelnik A (1991) Risk sensitivity in starlings: variability in food amount and food delay. Behav Ecol 2(4):301–308

Ryan MJ (1985) The Túngara frog, a study in sexual selection and communication. University of Chicago Press, Chicago

Ryan MJ, Wilczynski W (1988) Coevolution of sender and receiver: effect on local mate preference in cricket frogs. Science 240:1786–1788

Schwartz B (2004) The paradox of choice: why more is less. HarperCollins, New York

Shapiro MS, Siller S, Kacelnik A (2008) Simultaneous and sequential choice as a function of reward delay and magnitude: normative, descriptive and process-based models tested in the European starling (Sturnus vulgaris). J Exp Psychol Anim Behav Process 34:75–93

Slovic P (1995) The construction of preference. Am Psychol 50:364–371

Stephens DW, Krebs JR (1986) Foraging theory. Princeton University Press, Princeton

Vasconcelos M, Urcuioli P (2008) Deprivation level and choice in pigeons: a test of within-trial contrast. Learn Behav 36:12–18

Vasconcelos M, Monteiro T, Aw J, Kacelnik A (2010) Choice in multi-alternative environments: a trial-by-trial implementation of the sequential choice model. Behav Process 84:435–439

Wittenberger JF (1983) Tactics of mate choice. In: Bateson P (ed) Mate choice. Cambridge University Press, Cambridge, pp 435–447

Zupko J (2006) John Buridan. In: Stanford Encyclopedia of Philosophy. http://plato.stanford.edu/entries/buridan/. Accessed 18 February 2010

Author note

This work was supported by Biotechnology and Biological Sciences Research Council Grant BB/G007144/1 to AK. MV, TM, and JA were supported by an Intra-European Marie Curie Fellowship, a doctoral grant from the Portuguese Foundation for Science and Technology, and a Biotechnology and Biological Sciences Research Council Fellowship, respectively. We are grateful to Miguel Rodriguez-Gironés for discussions and advice.

Conflict of Interest

The authors declare that they have no conflict of interest.

Additional information

Communicated by Guest Editor A. Houston

This contribution is part of the Special Issue “Mathematical Models in Ecology and Evolution: Darwin 200” (see Marshall et al. 2010).

About this article

Cite this article

Kacelnik, A., Vasconcelos, M., Monteiro, T. et al. Darwin’s “tug-of-war” vs. starlings’ “horse-racing”: how adaptations for sequential encounters drive simultaneous choice. Behav Ecol Sociobiol 65, 547–558 (2011). https://doi.org/10.1007/s00265-010-1101-2

DOI: https://doi.org/10.1007/s00265-010-1101-2