Abstract

Gene duplication is a fundamental process that has the potential to drive phenotypic differences between populations and species. While evolutionarily neutral changes have the potential to affect phenotypes, detecting selection acting on gene duplicates can uncover cases of adaptive diversification. Existing methods to detect selection on duplicates work mostly inter-specifically and are based upon selection on coding sequence changes. Here we present a method to detect selection directly on a copy number variant segregating in a population. The method relies upon expected relationships between allele (new duplication) age and frequency in the population dependent upon the effective population size. Using both a haploid and a diploid population with a Moran Model under several population sizes, the neutral baseline for copy number variants is established. The ability of the method to reject neutrality for duplicates with known age (measured in pairwise dS value) and frequency in the population is established through mathematical analysis and through simulations. Power is particularly good in the diploid case and with larger effective population sizes, as expected. With extension of this method to larger population sizes, this is a tool to analyze selection on copy number variants in any natural or experimentally evolving population. We have made an R package available at https://github.com/peterbchi/CNVSelectR/ which implements the method introduced here.


Introduction

A major goal in computational genomics is to uncover the intra- and inter-specific changes that affect organismal phenotypes, including those driven by selective forces. An extensive suite of methods exists to characterize the fixation and divergence of point mutations (Anisimova and Liberles 2012), but the methods developed to date aimed at studying gene duplicates have been mostly inter-specific and based upon patterns of sequence divergence (Conant and Wagner 2003) or retention patterns over a phylogeny (Tofigh et al. 2010; Yohe et al. 2019; Arvestad et al. 2009). There is a need for new methods that characterize selection on segregating copy number variants.

Gene duplication affecting a single gene occurs through two predominant processes. Tandem duplication leads to duplicate copies that may encompass the entire length of the gene and are initially found adjacent to one another on a single chromosome (Katju and Lynch 2006). Transposition, including retrotransposition-mediated processes, is the other common mode of duplication, which leads to unlinked duplicate copies (Innan and Kondrashov 2010).

Evidence of gene duplication has been found in all three domains of life (Zhang 2003; Lynch and Force 2000a). Within gene families, divergent function has been identified, suggesting gene duplication is an important contributor to genome diversification (Innan and Kondrashov 2010). A dramatic case involves the convergent expansion of gene families through duplication in the devil worm and in oyster genomes in response to temperature stress (Guerin et al. 2019). Additionally, copy number variation (CNV) is known to be associated with disease (Zhang et al. 2013) and the ability to adapt to new or changing environments (Perry et al. 2007; Bornholdt et al. 2013). While formally copy number variation involves large scale duplication that need not respect the boundaries of genes, our interest here is in the subset of copy number variants that contain genes and are therefore more likely to experience selection. Despite the apparent importance of duplication in genomic evolution, the mechanisms by which gene duplicates are fixed and maintained in a population are not well understood, including the mutational state (the collection of mutations differentiating the duplicate copies from each other) at the point of fixation.

Gene duplication occurs in an individual and can be fixed or lost in the population. At the time of duplication, the frequency of the new locus will be 1/N in a haploid population and 1/2N in a diploid population. If the duplicated gene is selectively neutral, its probability of fixation is its frequency in the population. As redundant duplicates segregate, mutations can accumulate. In the absence of non-neutral forces, the probability of a single mutation going to fixation decreases as population size grows. The number of functions that a protein-encoding gene has, or whether there is an ensemble of structures that the protein can fold into, can affect the likelihood of individual genes losing function (pseudogenization), becoming subfunctionalized through partition of ancestral functions, or gaining a new function (neofunctionalization) (Hughes 1994; Force et al. 1999; Lynch et al. 2001; Siltberg-Liberles et al. 2011). Mutations that disrupt the function of non-redundant proteins will be selected against at a population genetic level (Ohno 1970). Gene duplication provides new material for drift or selection to act on, and therefore has been proposed as a major driving force for functional diversification (Hughes 1994; Ohno 1970). Identical duplicates with redundant function allow natural selective pressures to be relaxed on both copies while redundancy of the functions is maintained. Reduced selective pressures on redundant copies of a locus may allow otherwise prohibited mutations (i.e. mutations which, absent the redundancy introduced by duplication, would have extremely negative selective effects, effectively preventing the mutated individual from reproducing) to accumulate, potentially leading to novel functions (Hughes 1994).

Duplicates can be stably maintained in a population when they differ in some aspects of their function (Zhang 2003). There is a high probability that random genetic drift will cause loss of any given duplicated gene. Most mutations in gene duplicates will be deleterious to function, although potentially selectively neutral when there are redundant copies. Loss of one copy is likely to be through fixation of a null mutation at the duplicated locus, leading to pseudogenization (Lynch and Force 2000b). Persistence of gene duplicates in the population may be driven by fixation of rare, beneficial mutations (neofunctionalization) (Ohno 1970), subfunctionalization (Force et al. 1999) or dosage balance in cases where genes are duplicated together with interacting partners (Konrad et al. 2011).

The origin of novel function is an important outcome of gene duplication. Positive selection speeds up fixation of nearly neutral substitutions that create a new, but weakly active function (Zhang 2003). In larger populations, positive selection is likely to supersede nearly neutral mutations as a driver of fixed changes. In addition to selection on mutations in the duplicate copy, there can also be selection on the duplicate copy itself. Positive and negative fitness effects have been reported for gene duplicates in many different genes. Copy-number increase in human salivary amylase (AMY1) has enabled adaptation to a high-starch diet (Perry et al. 2007) and segmental duplications of the chemokine gene CCL3L1 are associated with decreased susceptibility to HIV infection (Gonzalez et al. 2005). Methods to characterize selection on point mutations within duplicate genes are well established (for example, dN/dS, McDonald-Kreitman tests, or population genetic outliers), whereas those that detect selection directly on copy number variants do not exist (Anisimova and Liberles 2012). Probabilistic models for inferring selection on gene duplicates have previously been described in an inter-specific context, but not intra-specifically (Stark et al. 2017; Konrad et al. 2011).

Allele age can be defined as the duration of time a mutant allele has been segregating in a population (De Sanctis et al. 2017). Directional selection, both positive and negative, can lead to a functional allele that is younger than expected given its frequency (Platt et al. 2019). If not lost from the population, an allele under directional selection will reach a given frequency faster than a neutral allele (Maruyama 1974).

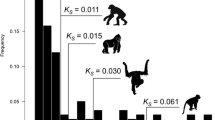

Methods to detect selection on individual SNPs that are segregating in a population (Platt et al. 2019) must establish allele age by examining tracts of identity by descent that have not been interrupted by recombination, which complicates the analysis. No such method exists for CNVs, but in principle, such methods can be much simpler because the coding sequence of the gene can accumulate synonymous mutations with time anywhere in its sequence. From this, a pairwise dS value is a natural measure of CNV age, with an assumption of the neutrality of synonymous mutations. This assumption is in some cases violated, but is reasonable, and dS is commonly used as a molecular clock (Anisimova and Liberles 2012). The approach makes a reasonable assumption that mutation is clock-like and consistent within a CNV.

Modelling in the biological sciences involves a balance between describing processes and mechanisms that are acting to generate biological patterns and mathematical tractability (Liberles et al. 2013; Steel 2005; Rodrigue and Philippe 2010). A key attribute is to describe patterns sufficiently while retaining as much simplicity as possible in generating an approach that extrapolates to data that has not been fit before. In describing duplicate gene segregation, such modelling trade-offs have been made in producing a new modelling framework. One common assumption in population genetic models that has been applied here is that of a constant population size. This assumption can easily be relaxed in generating a more complex model as would be appropriate.

With this in mind, a continuous time Moran model (Moran 1958) is proposed, to infer from duplicate age (measured in pairwise dS for the duplicate pair), the expected duplicate frequency in a population depending upon relevant population genetic and selective parameters. The Moran model is a stochastic model of mutational and selective processes that assumes a fixed population size (N) over generations and can be implemented in either a haploid or a diploid setting. At each instant when the state of the model may change, one gamete is chosen at random to die and is replaced by a new gamete with probabilities assigned to each genotype based on reproductive fitness and frequency in the population. Transitions in this Markov chain occur at the death of a single individual. Using this model, we test the null hypothesis of neutral evolution with the aim of building a method that enables detection of non-neutral processes acting on segregating duplicated genes based upon their age (measured in pairwise dS units) and their frequency in the population. This model has been implemented exactly in both haploid and diploid populations and is first described here. Future approximations will need to be introduced to enable extension to realistic population sizes to fit fungal, metazoan, plant, or other datasets.

Methods

We consider a locus which has only one allele at the time of duplication, so that the population starts with one individual having two unlinked copies of the gene, and \(N-1\) individuals having only a single copy. This initial frequency could easily be changed (for example if there is migration into an isolated population), but most relevant mutational processes will generate such an initial frequency at the point of origin. We present two population genetic models to model the subsequent evolution of such a population, one for the haploid case, and one for the diploid case. Both models are continuous time Markov chains similar to the classic Moran model (Moran 1958). We introduce a simple statistical test to detect selection based on this model with the underlying distribution derived from the population genetic model. The test compares the observed proportion of the population carrying the duplicate copy at a particular time to the distribution of proportions that would be attained by the model under neutrality, and can be extended to a time-series test to improve statistical power where such data would be available [for example from experimental evolution studies (Lauer et al. 2018)]. The test itself is identical for the haploid and diploid cases, except that the underlying distribution is derived from the corresponding model.

The two models share a common set of assumptions:

- We assume that pseudogenization occurs at Poisson rate \(\mu _{\text {p}}\) for each duplicate copy, and we model an individual with a pseudogenized copy as equivalent to an individual without the extra copy, e.g. if a haploid individual has two copies of the gene, one of which becomes pseudogenized, we then consider the individual to be equivalent to a ‘wild-type’ individual with a single copy.

- We assume that neofunctionalization occurs at a Poisson rate \(\mu _{\text {n}}\) for each duplicate copy, and always confers a fixed fitness benefit. We further assume that only one of the two loci (representing the original and duplicated copies) can become neofunctionalized, and we do not keep track of which is which.

- We assume that individuals in the population are replaced uniformly at random at Poisson rate 1, and that they are replaced by a new individual according to the relative fitness of the potential new individual and haploid/diploid breeding regime; the individual to be replaced can participate in breeding their own replacement.

Haploid Model

We model the haploid population as a continuous time Markov chain with state space

\[\{(i,j) : i, j \in \{0, 1, \ldots , N\},\ i + j \le N\},\]

where i tracks the number of individuals carrying an unmodified duplicate copy of the gene in question, and j tracks the number of individuals carrying a duplicate copy which has become neofunctionalized. The number of single-copy ‘wild-type’ individuals is given by \(N-i-j\).

We let \(f_{\text {d}}\) and \(f_{\text {n}}\) denote the fitness of an individual with an unmodified and a neofunctionalized duplicate copy, respectively, relative to the single-copy wild type. We allow \(f_{\text {d}}\) to take any value greater than 0, and we can interpret different values of \(f_{\text {d}}\) as corresponding to different biological processes.

- \(f_{\text {d}} < 1\) represents a situation in which there is a cost to maintaining the duplicate that might be expected based upon the biosynthetic cost of maintaining and synthesizing products from an extra gene (Wagner 2005).

- \(f_{\text {d}} > 1\) represents the situation where dosage effects confer a selective benefit for increasing gene dosage, for example as might occur when the product is limiting in its pathway. The extra copy can lead to increased expression, and in turn improve some physiological function.

- \(f_{\text {d}} = 1\) is the neutral case, where the effects of biosynthetic cost and dosage are negligible.

We will only consider cases here in which \(f_{\text {n}}\ge 1\), representing neofunctionalization, although allowing \(f_{\text {n}} < 1\) does not break any of our modelling assumptions. We will however consider \(1 \le f_{\text {n}} < f_{\text {d}}\), representing a situation where the neofunctionalized copy is less beneficial than the dosage effect of maintaining an extra copy of the original gene.

The process is characterized by its generator matrix

where the non-zero off-diagonals of \({{\varvec{Q}}}\) are given by,

In each case the first factor of the first term represents the death of an individual chosen uniformly from the population, while the second factor represents the birth of their replacement, chosen dependent on the relative fitness of the replacement allele to the overall fitness of the population. This factor is simply formulated as weighted sampling. The second term (where such exists) represents the effect of mutation. This generator matrix can be exponentiated to create a P(t) probability matrix for any t.
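As an illustration of the structure just described, the following minimal Python sketch assembles the non-zero off-diagonal rates out of a haploid state (i, j) as "uniform death × fitness-weighted birth, plus mutation". The function name `haploid_rates` is hypothetical. The mutation terms (\(\mu _{\text {p}} i\) and \(\mu _{\text {p}} j\) for pseudogenization, \(\mu _{\text {n}} i\) for neofunctionalization) are assumptions based on the per-copy Poisson rates stated above, so the exact multipliers may differ from those in the generator matrix.

```python
def haploid_rates(i, j, N, f_d, f_n, mu_p, mu_n):
    """Return {next_state: rate} for the haploid chain in state (i, j).

    i = carriers of an unmodified duplicate, j = carriers of a
    neofunctionalized duplicate, N - i - j = single-copy wild types.
    """
    w = N - i - j
    total = w + f_d * i + f_n * j            # total fitness weight (> 0)
    # Death: an individual chosen uniformly from the population.
    death = {"w": w / N, "d": i / N, "n": j / N}
    # Birth: weighted sampling by relative fitness.
    birth = {"w": w / total, "d": f_d * i / total, "n": f_n * j / total}

    candidates = {
        (i + 1, j): death["w"] * birth["d"],
        (i, j + 1): death["w"] * birth["n"],
        (i - 1, j): death["d"] * birth["w"] + mu_p * i,      # assumed mutation term
        (i - 1, j + 1): death["d"] * birth["n"] + mu_n * i,  # assumed mutation term
        (i, j - 1): death["n"] * birth["w"] + mu_p * j,      # assumed mutation term
        (i + 1, j - 1): death["n"] * birth["d"],
    }
    # Keep only transitions that can actually occur.
    return {state: rate for state, rate in candidates.items() if rate > 0}
```

In this sketch the state (0, 0) has no outgoing transitions, which matches the absorbing extinction state of the model.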

In the neutral case, where \(f_{\text {d}} = f_{\text {n}} = 1\) the model can be simplified to a simple birth-and-death process, as in this case ‘neofunctionalization’ is no longer meaningful, and we need not track the number of neofunctionalized copies. Doing so allows for much more efficient computation for the neutral case, which is of primary concern in our test for selection.

Diploid Model

The diploid model is similar to the haploid model, but there are six possible genotypes to consider: \(AA- -\), \(AAA-\) , AAAA, \(AAA'-\), \(AAAA'\), \(AAA'A'\), where A represents an unmodified copy, \(A'\) represents a neofunctionalized copy, and − represents the absence of a copy. The idea here is that we think of two sets of loci, the original and the duplicated locus, with each of the two chromosomes initially having a single copy of the gene (\(AA- -\)). The copies on both chromosomes are assumed to be duplicated by the initial duplication event (AAAA) consistent with an origin through retrotransposition, and the remaining combinations come about through subsequent mating, and neofunctionalization. The state space of this model is the set of 5-vectors with natural valued entries summing to \(\le N\),

In the interests of brevity we present the transition rates in a compact form as

where \({\varvec{s}}\) is the state of the process, \({\varvec{e}}_x\) is a unit vector with 1 in the x entry, \(p_{\text{b}}(x|{\varvec{s}})\) is the probability that an individual of type x is born when the process is in state \({\varvec{s}}\), \(p_{\text {d}}(y|{\varvec{s}})\) is the probability that an individual of type y dies when the process is in state \({\varvec{s}}\), and \(C_{\text{n}}(\cdot )\), \(C_{\text{p}}(\cdot )\) are functions counting the number of ways in which \({\varvec{s}} + {\varvec{e}}_x - {\varvec{e}}_y\) can be reached from \({\varvec{s}}\) by neofunctionalization and pseudogenization, respectively. The specifics of each function are easy to determine, but unwieldy when written out, so we omit them here.

In this case, we parameterize selection in terms of the selection coefficients \(s_{\text {d}}\) and \(s_{\text{n}}\), and we assume that

\[f_X = 1 + n_{\text {d}} s_{\text {d}} + n_{\text {n}} s_{\text {n}},\]

where X denotes a genotype, \(n_{\text {d}}\) is the total number of duplicate copies, including neofunctionalized, while \(n_{\text {n}}\) is the number of neofunctionalized copies. For example, \(f_{AAAA'} = 1+2s_{\text {d}} + s_{\text {n}}\). Effectively, we assume that any selective benefit conferred from a duplicate copy is also conferred to a neofunctionalized copy, on top of any benefit conferred from the neofunctionalization, and that the selective effects are additive. This parameterization allows us to consider all of the same underlying biology as discussed in the haploid case, but we keep the number of model parameters low by now explicitly assuming additive effects. Figure 1 shows a conceptual diagram of this rationale, where we think of some physiological function increasing with gene dosage towards an asymptote. The relationship between function and dosage can be thought of as having two modes, a ‘linear’ mode, where function is well approximated as linearly increasing in dosage, and a ‘saturation’ mode, in which increasing dosage results in little to no increase in function. This then leads to additive selection as parameterized here, where \(s_{\text {d}}>0\) corresponds to the linear mode, and \(s_{\text {d}} = 0\) corresponds to the saturation mode. To model the situation in which a neofunctionalized copy is less beneficial than an extra copy of the original gene, we allow for \(s_{\text {n}}-s_{\text {d}}< 0\), representing the difference in benefit conferred from neofunctionalization and dosage.
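The additive parameterization can be made concrete with a short Python sketch. The string encoding of genotypes is an assumption made purely for illustration: the first two characters are the original locus, the last two are the duplicated locus, and a lowercase `a` stands in for a neofunctionalized copy \(A'\). The function name `genotype_fitness` is likewise hypothetical.

```python
GENOTYPES = ["AA--", "AAA-", "AAAA", "AAa-", "AAAa", "AAaa"]

def genotype_fitness(genotype, s_d, s_n):
    """Additive fitness 1 + n_d * s_d + n_n * s_n for one diploid genotype."""
    duplicated_locus = genotype[2:]
    n_n = duplicated_locus.count("a")        # neofunctionalized copies
    n_d = n_n + duplicated_locus.count("A")  # all duplicate copies, incl. neofunctionalized
    return 1 + n_d * s_d + n_n * s_n

# Fitness of every genotype for one illustrative parameter choice.
fitness_table = {g: genotype_fitness(g, 0.01, 0.1) for g in GENOTYPES}
```

For the genotype written \(AAAA'\) in the text (`"AAAa"` here) this gives \(1 + 2s_{\text {d}} + s_{\text {n}}\), matching the worked example.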

Similarly to the haploid model, the diploid model can be reduced significantly in the neutral case. Discarding neofunctionalization there are only three genotypes to consider, \(AA- -\), \(AAA-\) and AAAA. This leads to a model with state space

where i tracks the number of double-duplicate (AAAA) individuals, and j tracks the number of single-duplicate individuals (\(AAA-\)). In this case, the non-zero off-diagonals of the generator \({{\varvec{Q}}}\) are given by

where \(p_{\text{b}}(X|(i,j))\) denotes the probability that when a birth occurs it is of an individual with genotype X given that the current state is (i, j). We assume that the population is monoecious (equivalent to a dioecious population without sex biased allele frequencies), that individuals cannot mate with themselves, and that the offspring receives a copy of the gene from each parent at each of the original and duplicated locus, including the possibility of inheriting the absence of any gene at that locus.
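The mating assumptions for the reduced neutral model can be sketched as follows. This is a minimal illustration rather than the implementation used for the analysis. It assumes that two distinct parents are drawn uniformly at random (no fitness weighting, as under neutrality) and that a parent heterozygous at the duplicated locus (\(AAA-\)) transmits the duplicate with probability 1/2. The function name `birth_probs` is hypothetical, and the sketch requires \(N \ge 2\).

```python
from itertools import product

def birth_probs(i, j, N):
    """Neutral birth probabilities p_b(X | (i, j)) for X in AAAA, AAA-, AA--.

    i = number of AAAA individuals, j = number of AAA- individuals.
    """
    counts = {"AAAA": i, "AAA-": j, "AA--": N - i - j}
    # Probability that a gamete carries a copy at the duplicated locus.
    carry = {"AAAA": 1.0, "AAA-": 0.5, "AA--": 0.0}
    out = {"AAAA": 0.0, "AAA-": 0.0, "AA--": 0.0}
    for x, y in product(counts, repeat=2):
        # Number of ordered pairs of distinct parents with genotypes (x, y).
        pairs = counts[x] * (counts[y] - (x == y))
        if pairs <= 0:
            continue
        p_pair = pairs / (N * (N - 1))
        gx, gy = carry[x], carry[y]
        out["AAAA"] += p_pair * gx * gy
        out["AAA-"] += p_pair * (gx * (1 - gy) + (1 - gx) * gy)
        out["AA--"] += p_pair * (1 - gx) * (1 - gy)
    return out
```

With a single \(AAAA\) individual in a population of 10, every mating involving that individual produces an \(AAA-\) offspring, so the sketch returns probability 0.2 for \(AAA-\) and 0.8 for \(AA- -\).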

Recall that under our model, replacement of individuals occurs at Poisson rate 1 and thus the time to replace N individuals is Erlang distributed, and has expectation N. We therefore say that the ‘generation time’ under the model is N, but note that this is the time expected to replace N individuals, not the expected time after some time t to replace all individuals who were present at t. Any particular individual is replaced at rate 1/N, so the expected time until that individual is replaced is N.

Note also that the models do not include new duplication events. Rather it is assumed that we start with a duplicate copy and track the subsequent evolution of the population assuming no further duplication events occur. A consequence of this is that the models have an absorbing structure, and permanent fixation of the duplicate is only possible if \(\mu _{\text {p}} = 0\). When \(\mu _{\text {p}} > 0\) the new duplicate must eventually go extinct since in this case only the state (0, 0) is absorbing. However, selection acts to increase (or decrease) the timescale over which a duplicate segregates in the population. In the case of strong selection, the time it takes for the duplicate to go extinct could be much larger than the timescales under consideration here.

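The time-to-extinction T follows a phase-type distribution (Latouche and Ramaswami 1999), and the expectation and variance are given by

\[{\mathbb {E}}[T] = -{\varvec{\alpha }}\,(\mathbf{Q}^*)^{-1}\,\mathbf{1}\]

and

\[\text {Var}(T) = 2\,{\varvec{\alpha }}\,(\mathbf{Q}^*)^{-2}\,\mathbf{1} - \left( {\varvec{\alpha }}\,(\mathbf{Q}^*)^{-1}\,\mathbf{1}\right) ^2,\]

respectively, where \(\mathbf{Q}^*\) is the subgenerator matrix of the process, being identical to \(\mathbf{Q}\) but with the first row and column (corresponding to the extinction state) removed, \({\varvec{\alpha }}\) is the row vector giving the initial distribution over the remaining states, and \(\mathbf{1}\) is a column vector of ones.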
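For the reduced neutral haploid model, the expected time-to-extinction can be obtained by solving a linear system in the subgenerator \(\mathbf{Q}^*\) rather than inverting it. The Python sketch below does this with a tridiagonal (Thomas) solve. It assumes the neutral chain is a birth-and-death process on the number of duplicates n, with birth rate \(n(N-n)/N^2\) and death rate \(n(N-n)/N^2 + \mu _{\text {p}} n\). These rates are an assumption derived from the replacement and pseudogenization rules above. The function name is hypothetical, and \(\mu _{\text {p}} > 0\) is required so that extinction is certain.

```python
def expected_extinction_time(N, mu_p):
    """Return m, where m[n - 1] = E[T | n duplicates at time 0], n = 1..N.

    Solves (-Q*) m = 1 for the neutral haploid birth-and-death chain.
    """
    up = [n * (N - n) / N**2 for n in range(1, N + 1)]
    down = [n * (N - n) / N**2 + mu_p * n for n in range(1, N + 1)]
    # Tridiagonal matrix -Q*: diagonal up + down, upper -up, lower -down.
    diag = [u + d for u, d in zip(up, down)]
    rhs = [1.0] * N
    # Forward elimination (Thomas algorithm).
    for k in range(1, N):
        factor = -down[k] / diag[k - 1]
        diag[k] -= factor * (-up[k - 1])
        rhs[k] -= factor * rhs[k - 1]
    # Back substitution.
    m = [0.0] * N
    m[-1] = rhs[-1] / diag[-1]
    for k in range(N - 2, -1, -1):
        m[k] = (rhs[k] + up[k] * m[k + 1]) / diag[k]
    return m
```

Starting from a single duplicate, the quantity of interest is the first entry, `expected_extinction_time(N, mu_p)[0]`.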

Testing for Selection

To test for selection, we evaluate the 95% prediction band for the proportion of haploids carrying a duplicate copy under the reduced (neutral) model, given that the duplicate copy has not yet gone extinct. If the observed proportion of duplicates in a population falls outside of this region, we can reject the hypothesis of neutrality at the 5% significance level, under the assumptions of our models. Further, we can calculate the prediction bands and expectation for non-neutral models to gauge the power of the test to detect selection. We can evaluate the probability of false negatives for given fitness (under the modelling assumptions) by calculating the probability that the proportion of haploids carrying a duplicate copy in the model with selection falls within the prediction bands of the neutral model. However, since the models with selection are less computationally tractable than the neutral model, this is only possible for small population sizes at present. We anticipate that approximations will be forthcoming that allow this to be extended to large populations.

To calculate the quantities of interest, first, we can find the distribution of the Markov chain at time t

\[\mathbf{P}(t) = e^{\mathbf{Q}t},\]

where the (i, j) entry of \(\mathbf{P}(t)\) gives the probability that the process is in state j given that it started in state i. Note that here the states i and j are stand-ins for the two-dimensional states of the haploid model (or five-dimensional states of the diploid model). We are interested specifically in the case where the process starts with exactly one individual genome in which the duplication is present, and moreover we need not distinguish between the neofunctionalized and non-neofunctionalized copies for our test of neutrality (since under neutrality everything has the same fitness). Thus, we define a vector \({\underline{p}}(t)\) such that the \(n{\text {th}}\) entry, \(p_n(t)\) gives the probability that there are n duplicates in the population of N genomes at time t. Clearly then for the haploid case we have

\[p_n(t) = \sum _{(i,j)\,:\,i+j=n} \left[ \mathbf{P}(t)\right] _{(1,0),(i,j)}.\]

That is, we sum up the probabilities of being in any state with n total duplicates, given we started with 1 non-neofunctionalized duplicate, to get the probability of having n duplicates. For the diploid case the summation is slightly more complex, but the procedure is the same. We can then get the probability of having n duplicates conditional on the duplicate still segregating by dividing by \(1 - p_0(t)\), the probability that the duplicate has not gone extinct; hence the average frequency of segregating duplicates at time t is calculated as

where N is replaced by 2N for the diploid case. The lower prediction band at time t is given by

while the upper prediction band at time t is given by

again with N replaced by 2N in the diploid case. That is, the lower prediction band is the largest n for which the probability of observing fewer than n duplicates at time t is less than or equal to 0.025, and the upper prediction band is the smallest n for which the probability of observing more than n duplicates at time t is less than or equal to 0.025, each divided by the population size N (or 2N) to get a frequency. To perform the test for selection, the prediction bands need only be calculated for a fixed t, in which case they are effectively a 95% confidence interval. Neutrality is rejected if the observed frequency of the duplicate in the population falls outside of this interval.
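The test can be sketched end to end for a small neutral haploid population. The Python example below is an illustration under stated assumptions, not the implementation used for the analysis. It computes the distribution at time t by uniformization instead of expokit, and it assumes the neutral chain is a birth-and-death process with birth rate \(n(N-n)/N^2\) and death rate \(n(N-n)/N^2 + \mu _{\text {p}} n\). It then conditions on non-extinction and reads off the band as the largest n with at most 2.5% probability below it and the smallest n with at most 2.5% probability above it. All function names are hypothetical, and time is in model units (one generation is N).

```python
import math

def neutral_distribution(N, mu_p, t, start=1):
    """p_n(t) for n = 0..N in the neutral haploid chain, via uniformization."""
    up = [n * (N - n) / N**2 for n in range(N + 1)]
    down = [n * (N - n) / N**2 + mu_p * n for n in range(N + 1)]
    lam = max(u + d for u, d in zip(up, down)) or 1.0  # uniformization rate
    a = lam * t
    v = [0.0] * (N + 1)
    v[start] = 1.0
    p = [0.0] * (N + 1)
    k_max = int(a + 10 * math.sqrt(a) + 50)  # generous Poisson truncation
    for k in range(k_max + 1):
        # Poisson(a) weight of k jumps, computed in log space.
        w = math.exp(-a + k * math.log(a) - math.lgamma(k + 1)) if a > 0 else float(k == 0)
        for n in range(N + 1):
            p[n] += w * v[n]
        nxt = [0.0] * (N + 1)  # one jump of the uniformized chain
        for n in range(N + 1):
            if v[n] == 0.0:
                continue
            nxt[n] += v[n] * (1 - (up[n] + down[n]) / lam)
            if n < N:
                nxt[n + 1] += v[n] * up[n] / lam
            if n > 0:
                nxt[n - 1] += v[n] * down[n] / lam
        v = nxt
    return p

def prediction_band(N, mu_p, t, level=0.95):
    """Lower and upper band for the duplicate frequency, given non-extinction."""
    p = neutral_distribution(N, mu_p, t)
    alive = 1 - p[0]
    cond = [0.0] + [x / alive for x in p[1:]]
    tail = (1 - level) / 2
    cum, lower = 0.0, 1
    for n in range(1, N + 1):      # cum holds P(X < n)
        if cum <= tail:
            lower = n
        cum += cond[n]
    cum, upper = 0.0, N
    for n in range(N, 0, -1):      # cum holds P(X > n)
        if cum <= tail:
            upper = n
        cum += cond[n]
    return lower / N, upper / N

def reject_neutrality(freq, N, mu_p, t):
    lower, upper = prediction_band(N, mu_p, t)
    return freq < lower or freq > upper
```

A call such as `reject_neutrality(0.6, 20, 1e-3, 5.0)` asks whether a duplicate at frequency 0.6 after a quarter of a generation is consistent with neutrality in a population of 20. For the diploid case, N would be replaced by 2N and the distribution taken from the diploid neutral model.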

One important consideration is the tuning of the parameter \(\mu _{\text {p}}\). The reduced model for the neutral case has only two parameters, N, and \(\mu _{\text {p}}\). The parameter N is likely to be known to reasonable accuracy. Treating N as fixed, \(\mu _{\text {p}}\) is solely responsible for tuning the model behaviour, and its value will be dependent on the organism and gene under study. A reasonable estimate of \(\mu _{\text {p}}\) can be obtained by considering the target size for pseudogenizing mutations and expressing \(\mu _{\text {p}}\) as a scalar multiple of the background synonymous substitution rate dS in the organism and loci under study. dS is defined as the number of synonymous substitutions per site where a synonymous substitution can occur and is frequently estimated by maximum likelihood. Compared to the mutational opportunity for synonymous substitutions per synonymous site (see for example, Nei and Gojobori (1986)), the number of mutations that would introduce an early stop codon leading to a nonfunctional truncated protein, cause a protein to not fold, hit a functional residue, or hit a core region of the promoter sequence (like the TATA box) that affects all expression domains could be quantified. This will depend upon the length of the gene as well. For the purposes of our analysis, we used a ratio of synonymous substitutions per synonymous site to pseudogenization events per gene of 35, and hence we assume that the relationship between dS and \(\mu _{\text {p}}\) is given by

The background substitution rate, dS, could be evaluated for example using PAML for any pair of duplicates (Yang 2007). It is also, in principle, possible to obtain an empirical rather than a theoretical estimate of the pseudogenization rate when full population (genomic and transcriptomic) sequencing exists by observing and counting the number of pseudogenized copies of the duplicate that are segregating.

An alternative approach to tuning parameters would be to use maximum likelihood parameter estimation on a dataset consisting of the observed proportions of duplicates across many loci (potentially also across closely related species). Suppose that you had a dataset \(D = [n_i,t_i]_{i=1, \ldots ,m}\) consisting of m loci, where the \(i{\text {th}}\) locus is observed in \(n_i\) haploid genomes (i.e. at frequency \(n_i/N\), or \(n_i/2N\) for the diploid case) at a time \(t_i\) after the initial duplication. Ideally, these loci would be known a priori to be neutral, and the neutral model could then be fit to the data to obtain an estimate of \(\mu _{\text {p}}\). The likelihood of observing data D under the neutral model is given by

We can maximise \(L(\cdot )\) by maximising its logarithm, which is usually more numerically stable, thus a maximum likelihood estimate \({\hat{\mu }}_{\text{p}}\) is given by

Note that the above procedure assumes, as in the test for selection itself, that the duplicate has not gone extinct in any of the observed loci. If extinct observations are included, the factor \(1/(1-p_0(t_i))\) and term \(-\log (1-p_0(t_i))\) in Eqs. (18) and (19), respectively, should be dropped. Also, caution should be taken when applying this procedure if it is not known that the observed loci are indeed neutral, as the presence of selective effects when fitting the neutral model will bias the results of any subsequent tests against detecting selection.
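A simple way to carry out this estimation is a grid search over candidate values of \(\mu _{\text {p}}\), sketched below in Python for the neutral haploid model. The sketch makes several assumptions: each locus contributes the log-probability of its observed count conditional on non-extinction, the neutral distribution comes from a small uniformization solver included so that the block is self-contained, and the birth and death rates are those implied by the replacement and pseudogenization rules. Function names, the example data and the grid are all hypothetical.

```python
import math

def neutral_distribution(N, mu_p, t, start=1):
    """p_n(t) for the neutral haploid birth-and-death chain (uniformization)."""
    up = [n * (N - n) / N**2 for n in range(N + 1)]
    down = [n * (N - n) / N**2 + mu_p * n for n in range(N + 1)]
    lam = max(u + d for u, d in zip(up, down)) or 1.0
    a = lam * t
    v = [0.0] * (N + 1)
    v[start] = 1.0
    p = [0.0] * (N + 1)
    for k in range(int(a + 10 * math.sqrt(a) + 50) + 1):
        w = math.exp(-a + k * math.log(a) - math.lgamma(k + 1)) if a > 0 else float(k == 0)
        p = [pn + w * vn for pn, vn in zip(p, v)]
        nxt = [0.0] * (N + 1)
        for n, vn in enumerate(v):
            if vn == 0.0:
                continue
            nxt[n] += vn * (1 - (up[n] + down[n]) / lam)
            if n < N:
                nxt[n + 1] += vn * up[n] / lam
            if n > 0:
                nxt[n - 1] += vn * down[n] / lam
        v = nxt
    return p

def log_likelihood(data, N, mu_p):
    """Sum over loci of log P(n_i duplicates at t_i | not extinct at t_i)."""
    total = 0.0
    for n_i, t_i in data:
        p = neutral_distribution(N, mu_p, t_i)
        total += math.log(p[n_i]) - math.log(1 - p[0])
    return total

def fit_mu_p(data, N, grid):
    """Grid-search maximum likelihood estimate of mu_p."""
    return max(grid, key=lambda mu: log_likelihood(data, N, mu))

# Hypothetical observations (n_i, t_i): counts of duplicate-carrying genomes.
example_data = [(1, 10.0), (2, 20.0), (1, 30.0)]
example_grid = [1e-4, 1e-3, 1e-2, 1e-1]
estimate = fit_mu_p(example_data, 10, example_grid)
```

A grid is used here only for transparency. A one-dimensional numerical optimizer over \(\log \mu _{\text {p}}\) would serve the same purpose.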

The models described here, particularly the diploid model with selection, have very large sparse generator matrices which require special computational consideration. The software package expokit (Sidje 1998) largely solves these problems for small N (on the order of 1000 in the diploid case), and we used a modified version of the MATLAB implementation of expokit for our analysis. Work is underway to find suitable approximations for large N, but the test for selection itself is tractable in the haploid case for \(N=100,000\) and in the diploid case for \(N=10,000\) thanks to the reduced neutral models discussed above and the use of expokit.

Results

A test for selection on copy number variants in a population has been devised. The neutral expectation and its confidence intervals have been generated for both haploid (N = 1000, 100,000) and diploid populations (N = 1000). Under a range of selective conditions (including selection on the duplicate copy itself and selection for a neofunctionalized duplicate that would arise from new mutation), both individual realizations and the expectation are presented for both types of population. We also explicitly calculate the probability of correctly rejecting the neutral hypothesis for samples of an example haploid population taken at different times since the duplication event.

Realizations in Haploid and Diploid Populations

We calculated prediction bands for a neutral haploid population with \(N=10^5\) individuals and with a moderately high rate of pseudogenization (\(\mu _{\text {p}} = 10^{-7}\)). We then simulated the evolution of populations undergoing selection under three different parameterizations. Panels (a–c) of Fig. 2 show the simulated sample paths overlaid over the prediction bands for the neutral case. Note that the prediction band is the same in each of panels (a–c), representing the 95% probability region of the neutral model; however, the axes scales differ to accommodate the simulated sample paths.

Fig. 2 Each panel shows the conditional expected proportion (dashed line) of haploid genomes in a population with a duplicated copy under neutrality overlaid with 10 simulated populations experiencing selection. The neutral prediction band is shaded. Panels (a–c) show haploid populations with \(N=10^5\) individuals, where the simulated populations are experiencing (a) mild dosage selection with a moderate rate of mildly beneficial neofunctionalization (\(\mu _{\text {n}}~=~10^{-8},f_{\text {d}} = 1.01, f_{\text {n}}~=~1.02\)), (b) moderate (positive) dosage selection with a moderate rate of highly beneficial neofunctionalization (\(\mu _{\text {n}}~=~10^{-8},f_{\text {d}} = 1.05, f_{\text {n}}~=~1.1\)), (c) moderate (negative) dosage selection with a moderate rate of highly beneficial neofunctionalization (\(\mu _{\text {n}}~=~10^{-8},f_{\text {d}} = 0.95, f_{\text {n}}~=~1.1\)). Panel (d) shows a diploid population with \(N=1000\) individuals, where the simulated populations are experiencing positive selection (\(\mu _{\text {n}}~=~10^{-6}, s_{\text {n}}~=~0.1\))

The first set of simulations (shown in panel (a)) had relatively small selective effects (\(f_{\text {d}}=1.01,f_{\text {n}}=1.02\)). The majority of these sample paths remain within the prediction bands of the neutral model (the shaded region), and our test would not detect selection in these cases. On the other hand, some of the sample paths spend some time outside of the prediction bands, and our test applied to these simulated populations at the times during which they are outside the prediction bands would indicate the presence of selection.
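Reading a sample path against the band in this way amounts to a pointwise comparison at each sampling time. A minimal Python sketch of that comparison follows; `rejection_times` and its arguments are hypothetical names, the band is assumed to be supplied on the same time grid as the path, and the numbers in the usage lines are placeholders rather than values read from Fig. 2.

```python
def rejection_times(path, lower, upper):
    """Indices of sampling times at which a path lies outside the neutral band.

    `path` holds the observed proportion of genomes carrying the duplicate;
    `lower` and `upper` hold the neutral band limits at the same times.
    Times at which the duplicate is extinct (proportion 0) are skipped,
    because the band is conditional on non-extinction.
    """
    if not (len(path) == len(lower) == len(upper)):
        raise ValueError("path and band must share one time grid")
    return [
        t for t, (x, lo, hi) in enumerate(zip(path, lower, upper))
        if x > 0 and not (lo <= x <= hi)
    ]

# Placeholder values for illustration only.
path = [0.01, 0.05, 0.30]
lower = [0.00, 0.00, 0.01]
upper = [0.02, 0.06, 0.20]
print(rejection_times(path, lower, upper))  # [2]
```

A population sampled at a time index returned by this function would be one for which the test rejects neutrality; at any other time the same population would not be flagged.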

The second set of simulations (shown in panel (b)) had relatively large selective effects, with both positive dosage effects (\(f_{\text {d}} = 1.05\)) and a substantial positive selective effect associated with neofunctionalization (\(f_{\text {n}} = 1.1\)). In this case, 9 of the 10 sample paths were well outside of the prediction bands by time \(dS = 0.025\), indicating that our test, if applied at this time, would reject neutrality in 9 of the 10 simulated populations.

The last set of simulations for a haploid population (shown in panel (c)) again had \(f_{\text {n}} = 1.1\), but with a negative dosage effect of the same magnitude as the positive effect from the previous example (\(f_{\text {d}} = 0.95\)). In this case, sample paths diverge from the neutral prediction bands much more rapidly, and selection would be detected in any of the 10 simulated populations at times greater than or equal to \({\text{d}}S = 0.025\).

We repeated the procedure for a diploid population of \(N=1000\) individuals (2000 haploid genomes) with a very low rate of pseudogenization (\(\mu _{\text {p}} = 10^{-10}\)), calculating the neutral prediction bands and simulating 10 sample paths of populations with a high rate of highly beneficial neofunctionalization (\(\mu _{\text {n}} = 10^{-6}, s_{\text {n}} = 0.1\)). The results are shown in panel (d) of Fig. 2. In this example the rate of neofunctionalization is fast compared to pseudogenization (and hence also compared to our timescale dS, which scales with \(\mu _{\text {p}}\)). The initial neofunctionalization happened quickly in each case, and the neofunctionalized copies quickly spread through the population. The low rate of pseudogenization also results in the prediction band for the neutral case becoming very wide very quickly (note the difference in scale to the previous examples), but the high rate of neofunctionalization provides a window during which selection is easily detected.

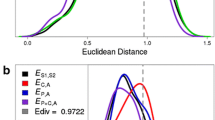

Power to Detect Selection

To better gauge the power of the test, we calculated conditional expectations and prediction bands for the model with selection under some different parameterizations. Figure 3 shows several examples. Interpreting this figure, we can say that in scenarios where the two sets of prediction bands (shaded regions) do not overlap, our test will reject neutrality at least 95% of the time. When the two sets of bands completely overlap, our test will fail to reject neutrality at least 95% of the time. When the overlap is only partial, the results of the test will fall somewhere in the middle of these two scenarios, with more failures to reject neutrality the greater the extent of the overlap. We can see from Fig. 3 that the test is unlikely to detect negative selection acting on copy number alone (where the presence of an extra copy is deleterious), as the two different prediction bands almost entirely overlap. However, negative selection on copy number increases the probability of detecting selection in the presence of beneficial neofunctionalization (where mutations in the new copy lead to a beneficial effect), as can be seen in the middle columns of Fig. 3. As selection on the copy number becomes positive, we see a significant difference in the two regions, indicating that our test is likely to be able to detect positive selection on copy number (where there is an increase in fitness). Increasing the selective benefit of neofunctionalization results in a similar picture, although notably when there is no dosage effect (\(f_{\text {d}} = 1\)), the prediction bands under selection partially overlap the neutral bands even at large times, indicating the potential for false negatives in this scenario. In the neutral scenario the test will of course correctly fail to reject neutrality 95% of the time by definition (and incorrectly reject neutrality 5% of the time).
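The overlap reasoning above can be made concrete with a short Python sketch. It assumes that each band is summarized at a single time point by a (lower, upper) pair of proportions; the function name `band_overlap` and the example numbers are hypothetical and are not taken from Fig. 3.

```python
def band_overlap(neutral, selected):
    """Fraction of the selected-model band that lies inside the neutral band.

    0.0 means the bands are disjoint (the test rejects neutrality at least
    95% of the time); 1.0 means the selected band sits entirely inside the
    neutral band (the test fails to reject at least 95% of the time).
    """
    (n_lo, n_hi), (s_lo, s_hi) = neutral, selected
    width = s_hi - s_lo
    if width <= 0:
        raise ValueError("selected band must have positive width")
    shared = max(0.0, min(n_hi, s_hi) - max(n_lo, s_lo))
    return shared / width

# Placeholder bands for illustration only.
print(band_overlap((0.0, 0.1), (0.2, 0.5)))  # 0.0, disjoint bands
print(band_overlap((0.0, 0.6), (0.2, 0.5)))  # 1.0, selected band fully inside
```

Intermediate values correspond to the partial-overlap case, where the rejection rate lies between the two extremes and is not determined by the overlap fraction alone.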

Comparison of the expected proportion of haploid genomes with a duplicate copy conditional on non-extinction of the duplicate copy in the population. Prediction bands are shown for the neutral (light shading) and non-neutral (dark shading) cases, where the non-neutral case has varying selective effects for each subplot (\(N=1000,\mu _{\text {p}}=10^{-5},\mu _{\text {n}}=10^{-6}\) in all cases). The magnitude of positive selection on the neofunctionalized copy (\(f_{\text {n}}\)) is increasing in the columns, while dosage selection is going from negative (\(f_{\text {d}}<1\)) to positive (\(f_{\text {d}}>1\)) in the rows

In terms of the biology underlying the parameterization, \(f_{\text {d}}<1\) reflects the biosynthetic cost of maintaining an extra copy in the genome (Wagner 2005) whereas \(f_{\text {d}}>1\) reflects a fitness advantage from the linear response region in Fig. 1, as was seen with extra copies of amylase in the human population associated with eating a high starch diet (Perry et al. 2007). \(f_{\text {n}}>1\) reflects an advantage to a new function based upon a new mutation arising. The case where \(f_{\text {d}}>f_{\text {n}}\) reflects the case where the new function associated with the new mutation provides less of an advantage than extra copies of the original gene, associated presumably with a greater concentration of the encoded protein in relevant cell types.

The power of the test to detect selection of a given magnitude at any point in time can also be calculated explicitly by finding the probability that a sample taken at that time will fall outside the prediction band of the neutral model. Figure 4 shows a graph of the probability that a sample taken from a haploid population with parameters \(N=1000,f_{\text {d}}=0.9,f_{\text {n}}=1.1,\mu _{\text {p}}=10^{-5},\mu _{\text {n}}=10^{-6}\) will result in the rejection of neutrality under our test. The shape of the curve is similar for other parameters, with the strength of selection (and population size) being the main determining factors for how long a duplicate must have been segregating before the test becomes reliable.
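This calculation can be restated in symbols. The notation here is introduced for illustration and is an assumption rather than part of the model definition: let \(X_t\) denote the number of haploid genomes carrying the duplicate at time \(t\), let \([\ell (t), u(t)]\) be the 95% neutral prediction band for the proportion \(X_t/N\), and let \(\Pr _{\theta }\) denote probability under a selective parameterization \(\theta = (f_{\text {d}}, f_{\text {n}}, \mu _{\text {p}}, \mu _{\text {n}})\). The power at time \(t\) is then

\[
\text {Power}_{\theta }(t) = 1 - \Pr _{\theta }\left( \ell (t) \le \frac{X_t}{N} \le u(t) \,\middle |\, X_t > 0 \right) .
\]

Evaluated under the neutral parameterization, the same expression gives the nominal false-positive rate of about 5%, which is the baseline against which a power curve such as the one in Fig. 4 is read.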

Discussion

A new approach for characterizing selection on segregating gene duplicates (copy number variants or CNVs) has been established based upon the expected relationship between an allele’s age and its frequency in a population when segregating neutrally. This approach characterizes selection on the duplicate copy itself rather than on mutations that occur within the duplicates while segregating. While many models for duplicate gene retention assume that fixation of the duplicate copy occurs before fate-determining mutations under selection begin to act (Innan and Kondrashov 2010), this assumption may be violated frequently for a number of reasons, most particularly when mutation rates and/or effective population sizes are large. These are the scenarios in which this method has particular power to reject neutrality, because selection is a stronger force in larger populations, where random drift accounts for a smaller fraction of fixed changes.

Similar approaches have recently been applied to characterize selection on SNPs segregating in a population (Platt et al. 2019). In those scenarios, characterizing the age of an allele depends upon characterizing tracts of identity by descent. Here, characterization of allele (duplicate) age is much simpler, relying only upon the pairwise dS value between the copies. More complex schemes to examine selection on CNVs have been presented (Itsara et al. 2010; Hsieh et al. 2019), but these use information orthogonal to that used in this method. Such methods either simulate under the coalescent or rely upon genome-wide frequencies of CNVs.

In this paper, in addition to presenting the neutral baseline and prediction bands about it, we have analyzed the statistical power of the test under a number of simple but realistic selective schemes and presented cases where one would expect to have the power to reject neutrality. The parameterization of test cases in this model is meant to capture costs of maintaining and expressing duplicate copies (Wagner 2005) as well as selective advantages ranging in effect from amylase in the human population (Perry et al. 2007) to duplicate copies that emerge during yeast experimental evolution (Lauer et al. 2018). The cases where one expects this method to have sufficient power include population sizes much smaller than would be expected for most species of interest to the population ecology/ecological genomics community. While the approach presented here is an exact solution that has not yet reached population sizes that are reflective of those for many eukaryotic populations of interest, approximations to the neutral baseline are currently under development that will enable generation of the test statistic to compare to population genomic data for any species of interest. For example, the null model under simple assumptions can be specified with a diffusion approximation that is under evaluation. Models for more complex regimes that are approximated with a state space based upon the allele number rather than the population structure are also under evaluation. The work here lays the basic science foundations for these future developments and future applications to biological data and problems. With these future developments and characterization of model performance, we envision the generation of a tool for empirical data analysis.

Data Availability

No original research data were presented in this paper. Code used to perform the analysis is available at https://github.com/TristanLStark/DetectingSelection. An R script to run the full analysis has been made available at https://github.com/peterbchi/CNVSelectR/blob/master/R/CNVSelect_test.R.

References

Anisimova M, Liberles D (2012) Detecting and understanding natural selection. In: Cannarozzi GM, Schneider A (eds) Codon evolution: mechanisms and models, vol 6. Oxford University Press, Oxford, pp 73–96

Arvestad L, Lagergren J, Sennblad B (2009) The gene evolution model and computing its associated probabilities. J ACM 56(2):1–100. https://doi.org/10.1145/1502793.1502796

Bornholdt D, Atkinson TP, Bouadjar B, Catteau B, Cox H, De Silva D, Grzeschik K (2013) Genotype-phenotype correlations emerging from the identification of missense mutations in MBTPS2. Hum Mutat 34(4):587–594. https://doi.org/10.1002/humu.22275

Conant GC, Wagner A (2003) Asymmetric sequence divergence of duplicate genes. Genome Res 13(9):2052–2058. https://doi.org/10.1101/gr.1252603

De Sanctis B, Krukov I, de Koning AJ (2017) Allele age under non-classical assumptions is clarified by an exact computational Markov chain approach. Sci Rep 7(1):1–11. https://doi.org/10.1038/s41598-017-12239-0

Force A, Lynch M, Pickett FB, Amores A, Yan Y-L, Postlethwait J (1999) Preservation of duplicate genes by complementary, degenerative mutations. Genetics 151(4):1531–1545

Gonzalez E, Kulkarni H, Bolivar H, Mangano A, Sanchez R, Catano G, Ahuja SK (2005) The influence of CCL3L1 gene-containing segmental duplications on HIV-1/AIDS susceptibility. Science 307(5714):1434–1440. https://doi.org/10.1126/science.1101160

Guerin MN, Weinstein DJ, Bracht JR (2019) Stress adapted Mollusca and Nematoda exhibit convergently expanded hsp70 and AIG1 gene families. J Mol Evol 87(9–10):289–297. https://doi.org/10.1007/s00239-019-09900-9

Hsieh P, Vollger MR, Dang V, Porubsky D, Baker C, Cantsilieris S, Sorensen M et al (2019) Adaptive archaic introgression of copy number variants and the discovery of previously unknown human genes. Science 366:6463

Hughes AL (1994) The evolution of functionally novel proteins after gene duplication. Proc R Soc Lond B 256(1346):119–124. https://doi.org/10.1098/rspb.1994.0058

Innan H, Kondrashov F (2010) The evolution of gene duplications: classifying and distinguishing between models. Nat Rev Genet 11(2):97–108. https://doi.org/10.1038/nrg2689

Itsara A, Wu H, Smith JD, Nickerson DA, Romieu I, London SJ, Eichler EE (2010) De novo rates and selection of large copy number variation. Genome Res 20(11):1469–1481. https://doi.org/10.1101/gr.107680.110

Katju V, Lynch M (2006) On the formation of novel genes by duplication in the Caenorhabditis elegans genome. Mol Biol Evol 23(5):1056–1067. https://doi.org/10.1093/molbev/msj114

Konrad A, Teufel AI, Grahnen JA, Liberles DA (2011) Toward a general model for the evolutionary dynamics of gene duplicates. Genome Biol Evol 3:1197–1209. https://doi.org/10.1093/gbe/evr093

Latouche G, Ramaswami V (1999) Introduction to matrix analytic methods in stochastic modeling. ASA-SIAM series on statistics and applied mathematics. Society for Industrial and Applied Mathematics, Philadelphia

Lauer S, Avecilla G, Spealman P, Sethia G, Brandt N, Levy SF, Gresham D (2018) Single-cell copy number variant detection reveals the dynamics and diversity of adaptation. PLoS Biol 16(12):e3000069

Liberles DA, Teufel AI, Liu L, Stadler T (2013) On the need for mechanistic models in computational genomics and metagenomics. Genome Biol Evol 5(10):2008–2018

Lynch M, Force A (2000a) The probability of duplicate gene preservation by subfunctionalization. Genetics 154(1):459–473

Lynch M, Force AG (2000b) The origin of interspecific genomic incompatibility via gene duplication. Am Nat 156(6):590–605. https://doi.org/10.1086/316992

Lynch M, O’Hely M, Walsh B, Force A (2001) The probability of preservation of a newly arisen gene duplicate. Genetics 159(4):1789–1804

Maruyama T (1974) The age of an allele in a finite population. Genet Res 23(2):137–143. https://doi.org/10.1017/S0016672300014750

Moran PAP (1958) Random processes in genetics. In: Mathematical Proceedings of the Cambridge Philosophical Society, vol 54. Cambridge University Press, Cambridge, pp 60–71. https://doi.org/10.1017/S0305004100033193

Nei M, Gojobori T (1986) Simple methods for estimating the numbers of synonymous and nonsynonymous nucleotide substitutions. Mol Biol Evol 3(5):418–426. https://doi.org/10.1093/oxfordjournals.molbev.a040410

Ohno S (1970) The enormous diversity in genome sizes of fish as a reflection of nature’s extensive experiments with gene duplication. Trans Am Fish Soc 99(1):120–130. https://doi.org/10.1577/1548-8659(1970)99<120:TEDIGS>2.0.CO;2

Perry GH, Dominy NJ, Claw KG, Lee AS, Fiegler H, Redon R, Stone AC (2007) Diet and the evolution of human amylase gene copy number variation. Nat Genet 39(10):1256–1260. https://doi.org/10.1038/ng2123

Platt A, Pivirotto A, Knoblauch J, Hey J (2019) An estimator of first coalescent time reveals selection on young variants and large heterogeneity in rare allele ages among human populations. PLoS Genet 15:8. https://doi.org/10.1371/journal.pgen.1008340

Rodrigue N, Philippe H (2010) Mechanistic revisions of phenomenological modeling strategies in molecular evolution. Trends Genet 26(6):248–252

Sidje RB (1998) Expokit: a software package for computing matrix exponentials. ACM Trans Math Softw 24(1):130–156. https://doi.org/10.1145/285861.285868

Siltberg-Liberles J, Grahnen JA, Liberles DA (2011) The evolution of protein structures and structural ensembles under functional constraint. Genes 2(4):748–762

Stark TL, Liberles DA, Holland BR, O’Reilly MM (2017) Analysis of a mechanistic Markov model for gene duplicates evolving under subfunctionalization. BMC Evol Biol 17(1):1–16. https://doi.org/10.1186/s12862-016-0848-0

Steel M (2005) Should phylogenetic models be trying to ‘fit an elephant’? Trends Genet 21(6):307–309

Tofigh A, Hallett M, Lagergren J (2010) Simultaneous identification of duplications and lateral gene transfers. IEEE/ACM Trans Comput Biol Bioinform 8(2):517–535. https://doi.org/10.1109/TCBB.2010.14

Wagner A (2005) Energy constraints on the evolution of gene expression. Mol Biol Evol 22(6):1365–1374. https://doi.org/10.1093/molbev/msi126

Yang Z (2007) PAML 4: phylogenetic analysis by maximum likelihood. Mol Biol Evol 24(8):1586–1591. https://doi.org/10.1093/molbev/msm088

Yohe LR, Liu L, Dávalos LM, Liberles DA (2019) Protocols for the molecular evolutionary analysis of membrane protein gene duplicates. In: Sikosek T (ed) Computational methods in protein evolution, vol 1851. Springer, New York, pp 49–62. https://doi.org/10.1007/978-1-4939-8736-8_3

Zhang C, Zhang C, Chen S, Yin X, Pan X, Lin G, Wang W (2013) A single cell level based method for copy number variation analysis by low coverage massively parallel sequencing. PLoS ONE 8:1. https://doi.org/10.1371/journal.pone.0054236

Zhang J (2003) Evolution by gene duplication: an update. Trends Ecol Evol 18(6):292–298. https://doi.org/10.1016/S0169-5347(03)00033-8

Acknowledgements

We would like to thank the Australian Research Council for partially funding this research through Discovery Project DP180100352. We would also like to thank Ryan Houser for careful reading of an early version of the manuscript and for helpful discussions, Gene Maltepes for computational support, and Catherine Browne for technical assistance in the preparation of the manuscript.

Author information

Contributions

This study was conceived by DAL and TLS. Modeling and theoretical results were generated by TLS and RSK. Computer code for simulations was written and run by TLS, RSK, MAM, and PBC. The manuscript was written by DAL, TLS, RSK, and MAM.

Additional information

Handling editor: Liang Liu.

About this article

Cite this article

Stark, T.L., Kaufman, R.S., Maltepes, M.A. et al. Detecting Selection on Segregating Gene Duplicates in a Population. J Mol Evol 89, 554–564 (2021). https://doi.org/10.1007/s00239-021-10024-2

DOI: https://doi.org/10.1007/s00239-021-10024-2