Abstract

We use large-scale mutagenesis data and computer simulations to quantify the mutational robustness of protein-coding genes by taking into account constraints arising from protein function and the genetic code. Analyses of the distribution of amino acid substitutions from 18 mutagenesis studies revealed an average of 45% of neutral variants; while mutagenesis data of 12 proteins artificially designed under no other constraints but stability, reach an average of 60%. Simulations using a lattice protein model allow us to contrast these estimates to the expected mutational robustness of protein families by generating unbiased samples of foldable sequences, which we find to have 30% of neutral variants. In agreement with mutagenesis data of designed proteins, the model shows that maximally robust protein families might access up to twice the amount of neutral variants observed in the unbiased samples (i.e. 60%). A biophysical model of protein-ligand binding suggests that constraints associated to molecular function have only a moderate impact on robustness of approximately 5 to 10% of neutral variants; and that the direction of this effect depends on the relation between functional performance and thermodynamic stability. Although the genetic code constraints the access of a gene’s nucleotide sequence to only 30% of the full distribution of amino acid mutations, it provides an extra 15 to 20% of neutral variants to the estimations above, such that the expected, observed, and maximal robustness of protein-coding genes are approximately 50, 65, and 75%, respectively. We discuss our results in the light of three main hypothesis put forward to explain the existence of mutationally robust genes.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Mutations propel the evolutionary process by introducing genetic variation into populations. There are multiple forms of mutations which from the standpoint of the genotype are usually classified according to their size from single-point and indels, to translocations and large genomic rearrangements (Nei 2013). The relative frequency of these types of mutations is expected to vary. In populations of E. coli, for instance, it was found that single-point mutations are the most common, reaching approximately 50% (Tenaillon et al. 2016).

From the standpoint of their effect on phenotype, mutations are broadly classified as deleterious, neutral or beneficial. The available fraction of neutral mutations, or mutational robustness, is of particular interest in genetics and evolutionary biology due to its role on the resistance of phenotypes to genetic insults, or on a population’s potential to sustain genetic variation. Our goal here is to quantify the mutational robustness of protein-coding genes as the fraction of neutral single-point mutations. For our purposes, we define the neutral variants of a gene as those that have a negligible impact on function.

Early mutagenesis studies of a handful of genes revealed a substantial fraction of neutral variants in proteins. For instance, two studies introduced 1500 and 4000 independent single-point mutations in the Lac repressor and showed that approximately 50% and 44% of mutations did not lead to phenotypic effects (Miller 1979; Suckow et al. 1996). A similar work studying bacteriophage T4 lysozyme showed that 55% of sites tolerated all single-point mutations tested (Rennell et al. 1991).

By the mid 90s two types of computational approaches were used to study the effect of mutations in proteins. The first was based on simplified short polymers folded on a two- or three-dimensional lattice (Lau and Dill 1989; Dill et al. 1995). This type of models sacrifice details of the complex molecular structure of proteins to recapitulate the overall statistics and thermodynamics of the sequence-structure map. Lattice models revealed that thermodynamic stability is a key aspect of a protein’s robustness to mutation. The model showed that conformations with a large number of interresidue contacts tend to accumulate stabilizing interactions, should be more prepared to resist the impact of mutations on phenotype, and therefore are associated to a larger number of sequences. However, it also became clear that robust folds are not only associated to a larger fraction of stabilizing interactions (Bornberg-Bauer 1997), but also are endowed with optimal geometries (Finkelstein et al. 1994), oftentimes simpler and more symmetrical than the average fold (Li et al. 1996; Hartling and Kim 2008). A second insight from lattice models was that mutational robustness is to some extent encoded in the sequence-stability map of proteins. By accumulating stabilizing interactions protein sequences approach a “consensus” sequence of high-stability that gives a funnel-like topology to the local sequence-stability map (Bornberg-Bauer and Chan 1999).

Insights from lattice models were echoed by the study of hundreds of crystal structures of proteins, and the development of energy functions for the prediction of changes in thermodynamic stability caused by mutations (Guerois et al. 2002). These studies suggested that destabilizing single-point mutations reach on average 65 to 70%, are 20 times more common than stabilizing mutations, and therefore, proteins are expected to access approximately 25 to 30% of neutral single-point mutations (Tokuriki et al. 2007; Tokuriki and Tawfik 2009). Thus, computational approaches predicted generally lower fraction of neutral variants than previous empirical studies, provided a mechanistic basis for the interpretation of compensatory mutations (DePristo et al. 2005), and supported the hypothesis that mutational robustness is an intrinsic property of molecular structure (Finkelstein et al. 1994; Li et al. 1996).

From an evolutionary perspective, however, instead of thermodynamic stability what is really subject to selective pressure is the gene’s function (Maynard-Smith 1970). Indeed, it has been estimated that as much as \(\approx\)25% of variants causing monogenic diseases can not be explained by their impact on thermodynamic stability alone (Yue et al. 2005; Redler et al. 2016). Importantly, most proteins have only marginal levels of thermodynamic stability, and when contrasted to functional performance might be subject to an evolutionary trade-off (Tokuriki et al. 2007), confounded by genetic drift (Goldstein 2011). In other words, protein-coding genes evolve just enough stability to be able to perform their functions, and in doing so they inevitably accumulate mutations that might readily impact their stability. Several models have been proposed to explain the dependence between stability and molecular function which is expected to vary from protein to protein, and from function to function (Echave and Wilke 2017).

Similar to the effect of function or stability on protein evolution, the cellular environment imposes a variety of constraints, such as aggregation propensity, the overlap of regulatory and coding sequences, or codon bias driven by gene expression (Zhang and Yang 2015). Therefore, the mutational robustness of the average protein-coding gene results from the joint effect of several forces, and is expected to differ from both the robustness of an unbiased sample of foldable sequences, and from the robustness that a given protein can potentially evolve. Indeed, theory and experiment suggests that under large mutation rates and/or effective population size (i.e. \(\mu N_e > 1\)), proteins can evolve increasing levels of mutational robustness (Van Nimwegen et al. 1999; Bloom et al. 2007; Bornberg-Bauer and Chan 1999). However, such conditions are rare for even unicellular organisms (Lynch and Conery 2003). Alternatively, mutational robustness might evolve by congruence, that is, as a byproduct of other mechanisms subject to selection, such as mistranslation (Bratulic et al. 2015), or robustness to recombination (Azevedo et al. 2006). An important step forward to gauge the contribution of these forces is to accurately quantify the expected mutational robustness of protein-coding genes.

The development of new techniques for large-scale mutagenesis has allowed the study of molecular functions at high resolution (Shendure and Fields 2016). These techniques can quantify the functional effect of all possible single-point mutations of a gene, or distribution of fitness effects (DFE) (Eyre-Walker and Keightley 2007). Analyses of the DFE of several genes have revealed non-trivial multimodal patterns, with significant gene-to-gene variations, and a dependence on environmental conditions (McLaughlin et al. 2012; Melamed et al. 2013; Roscoe et al. 2013; Starita et al. 2013; Melnikov et al. 2014; Olson et al. 2014; Kitzman et al. 2015; Stiffler et al. 2015; Sun et al. 2020). Similarly, advances in protein design used in combination with mutagenesis experiments, have made empirically feasible to chart regions of sequence space in an manner that is not biased by natural selection, as seen through the effect of functional constraints, or the adaptation to the cellular environment (Rocklin et al. 2017).

Here, our goal is to quantify the average mutational robustness of protein-coding genes by studying constraints arising from protein function and the genetic code. First, we estimate mutational robustness from large-scale mutagenesis data of a broad set of natural proteins. Then, we study designed proteins, which have been highly optimized for stability in the absence of other constraints, and therefore can provide information about the maximal robustness accessible to proteins. To put our estimations in context, we study unbiased samples of foldable sequences by implementing a computational lattice model of the sequence-structure map of proteins. This approach allows us to provide estimations for the maximal mutational robustness of proteins, as well as a rough estimation of the robustness cost of constraints associated to molecular function modeled as a ligand-binding interaction. Finally, we interpret our results from the perspective of the protein-coding gene by evaluating the impact of the genetic code on the accessibility to synonymous and non-synonymous mutations.

Methods

Large-Scale Mutagenesis Data

Mutagenesis data was obtained from the literature and from a recent database of large-scale mutagenesis studies (Esposito et al. 2019). We compiled data from 18 natural (Table 1), and 12 artificially designed (Table S1) proteins. Overall, selected studies report an average of 94% of the full set of expected variants per experiment, which in total reaches over 115,000 variants. Scoring strategies of mutagenesis data can be used as a proxy for the selection coefficient, s, of variants. Here we rely on the information reported by each study, which can be generally grouped into two scoring strategies. The first approach normalizes counts (N) of a particular variant (i), observed in the input (inp) and selection (sel) libraries, with respect to the counts of the wild type or reference sequence (\(N_{wt}\)), as:

Using this definition of \(s_i\), neutral variants are close to zero, and deleterious/beneficial mutations adopt values lower/higher than zero (McLaughlin et al. 2012; Roscoe et al. 2013; Olson et al. 2014; Kitzman et al. 2015; Stiffler et al. 2015; Mishra et al. 2016; Rocklin et al. 2017). An alternative scoring strategy is to normalize for both synonymous and non-sense (stop) codons.

where \(\phi _{i} = N_{i}^{{{\text{sel}}}} /N_{i}^{{{\text{inp}}}}\). This definition distinguishes deleterious from neutral variants, which are centered around zero and one, respectively (Weile et al. 2017; Jiang 2019; Sun et al. 2020).

Classification of Neutral Variants

To classify neutral variants we use two approaches. The first approach is based on a set of control variants, which can be either the synonymous variants of the reference (i.e. wild type) sequence, or all variants subject to a control condition (e.g. no selection, no substrate). We use these control variants to obtain \(n_r\) = 100 replicate samples of the same size as the number of mutagenized sites in the protein. To each of these samples we fit a normal distribution and use the mean (\(\mu_o\)) and standard deviation (\({\sigma }_o\)) to define neutral variants as those with selective coefficients that are at n standard deviations around the mean selection coefficient of control variants (i.e. \(\mu _o-n\sigma _o\le {s}\le \mu _o+n\sigma _o\)). Finally, we report the mean mutational robustness and 95% confidence intervals obtained from the \(n_r\) resampling iterations (Table S1–2).

As an alternative strategy, we classify variants by modeling a protein’s distribution of fitness effects (DFE) as a mixture of normal distributions. We use an expectation maximization (EM) algorithm to fit a normal mixture, defined as:

where \(\sum\limits_{{k=1}}^{n} {\lambda _{k} } = 1.0\). According to the model, we define a neutral component, \(\mathcal {N}(\mu _o, \sigma ^2_o)\), as the one with a mean closest to the selection coefficient of the reference sequence. Similarly, we define as deleterious (\(\mathcal {N}(\mu _{d}, \sigma ^2_{d})\)) and beneficial (\(\mathcal {N}(\mu _b, \sigma ^2_b)\)) components those directly at the left and right of the neutral component, respectively. In some cases a DFE might have multiple deleterious components or no beneficial components (Figure S1A). As in the first approach, we define neutral variants as those with selective coefficients at n standard deviations around the mean of the neutral component (\(\mu _o\)).

To decide which value of n would lead to an accurate classification of neutral variants, we modeled the false negative (FN) and false positive (FP) rates of variants based on the mean and standard deviations of the distributions of neutral, deleterious, and beneficial variants (Table S2). Using these parameters, we calculated the FN and FP rates as follows:

with \(erf(\cdot )\), the error function. Because the rate of true positives (TP) and true negatives (TN) can be estimated as: \({\text{TP}}~ = ~1 - {\text{FP}}\); and \({\text{TN}}~ = ~1 - {\text{FN}}\); the accuracy (acc) of correctly classifying neutral variants can be expressed as:

Normal mixture parameters were estimated from the DFE in the datasets of 18 natural proteins (Table S2). We found that the accuracy of classification does not increase significantly above n = 1.5 standard deviations (Figure S1B, S1C).

A Protein Lattice Model

Protein lattice models consist of three main parameters: sequence length (L); a monomer alphabet (\(\mathcal A\)) of size \(\alpha\); and an energy function (\(\mathbf{U}\)). A sequence s, is composed of L monomers drawn from \(\mathcal A\). A self-avoiding walk algorithm can be used to enumerate the set of all possible conformations (\(\mathcal P\)). The energy function, \(\mathbf{U}_{ij}\), specifies the interaction energy between monomers i and j along the sequence, and can be used to calculate the ground-state energy (E), of a sequence s folded onto c, as the total number of interresidue contacts, weighted by the energy function, as: \(E(s,c)~ = \sum\nolimits_{{i < j}}^{L} {U_{{ij}} } \Delta _{{ij}}^{c}\). The function \(\Delta _{{ij}}^{c}\), adopts the value of 1 if monomers at positions \({i}\) and \({j}\) are in contact, 0 otherwise. Similarly, the stability (\(\Delta G\)) of a sequence s folded onto conformation c, can be calculated as (Bornberg-Bauer and Chan 1999):

\(k_B\) is the Boltzmann constant and T, the absolute temperature. The degeneracy of sequence s, \(n\{E\}\), quantifies the number of conformations with a minimum energy E. A sequence s is said to be foldable (or viable), if it complies with two requirements. First, it must adopt a minimum ground energy (i.e. \(E_{N} = E(s,c)\)) folded onto a single conformation c, and therefore, \(n\{E_N\}\) = 1; in which case we called c its native conformation. The second requirement of foldability is \(\varDelta G(s)\le\)0.0.

Currently, the fraction of foldable sequences (\(f_v\)) of natural proteins is unknown and empirical analyses using libraries of random sequences have suggested values ranging between 5 and 20% (Rao et al. 1974; Doi et al. 2005). To account for this property in the model, we introduce a parameter \(\zeta\), which allows us to scale the fraction of viable sequences (\(f_v\)) using as a criterion the second requirement for foldability (i.e. \(\varDelta G \le\)0.0). We set \(f_v\) to 20% of viable sequences (\(\zeta\) = 0.07) (Figure S2).

In this study we use a two-dimensional lattice of sequence length 16 mer, with an amino acid alphabet of size 20; and the Miyazawa-Jernigan (MJ) energy function (Table VI from Miyazawa and Jernigan (1985)). The model allows us to simulate the entire space of unique and foldable conformations, which in lattice models of similar polymer length reaches \(\approx\)40% of all possible conformations (Figure S3A). In case of the model of L = 16, there are 15,048 foldable conformations, similar in distribution and quantity to the currently known protein families (El-Gebali et al. 2019) (Figure S3B).

Sequence Sampling

We obtain unbiased samples of sequences using a Monte Carlo algorithm (Jerrum and Sinclair 1996). We start with a sequence s that folds uniquely onto conformation c. We choose a position and substitute it with any of the other 19 amino acids, in both cases uniformly at random. We adopt the new sequence \(s^{\prime}\) if it folds onto a unique conformation \(c^{\prime}\) (whether or not it is equal to c), and \(p_f(s^{\prime})\ge p_f(s)\). Where \(p_f(s)\), is the folding probability of sequence s onto its native conformation (c), defined as:

If \(p_{f} (s^{\prime}) < p_{f} (s)\), then the mutation is accepted with probability \([p_f(s^{\prime})/p_f(s)]^\gamma\). The parameter \(\gamma\) controls the likelihood of selecting a new viable sequence and we set it to \(10^3\), which favors larger folding probabilities. To ensure independence we first carried out a burning-in simulation of 10,000 rounds, and single sequences are sampled every 350 rounds after that, which ensures an average of 20 mutations per site.

Simulation of Functional Constraints

To simulate functional constraints we construct a biophysical model of ligand-binding. We define a target as a protein sequence folded onto its native conformation. Functional performance (\(F_p\)) is defined as the probability that a target binds a compatible ligand, modeled as a short peptide of length l. \(F_p\) can be quantified by the conditional probability that a ligand binds a target given that the target sequence is folded onto its native conformation, or \(p_b\) = P(b|N); times the folding probability of sequence s, or \(p_f\) = P(N) (Eq. 8). The term \(p_b\), represents the fraction of the target bound to a ligand, and depends on both the ligand concentration ([L]), and the binding energy between the ligand at b positions in the target (\(\varDelta \epsilon\)) (Phillips et al. 2012). Similar to the lattice model, the energy of interaction between the ligand and the target is calculated using the MJ energy function: \(\Delta \epsilon = \sum\limits_{i}^{b} {\sum\limits_{j}^{L} {} } {\mathbf{U}}_{{ij}} \Delta _{{ij}}^{c}\); with i and j, the pairs of ligand and target residues in contact.

We use \(p_b\) to define two biophysical models for the effect of thermodynamic stability on functional performance (Echave and Wilke 2017). The soft threshold model assumes that a stronger interaction energy between ligand and target facilitates function, and therefore, it follows a sigmoidal function of the form: \(p_{b} = ~[L]/([L] + e^{{\eta \in \Delta }} )\). For simplicity, we set [L] to 1.0 and \(\eta\) to 10, such that \(\varDelta \epsilon\) corresponds approximately to one standard deviation around the mean interaction energy caused by a single mutation, which in the case of MJ pseudo-energy values is \(\approx\) 0.2 kcal/mol, and at which \(p_{b}\) = 0.9. The second model, called optimum stability, assumes that there is a \(\varDelta \epsilon\) at which functional performance is optimal, and is defined as a normal distribution, \(p_{b} = {\mathcal{N}}(\mu ,\sigma ^{2} )\). We parameterize the distribution such that for a \(\varDelta \epsilon\) of ± 0.2 kcal/mol, \(p_{b} = {\mathcal{N}}\)(0.0, 0.04) = 0.9.

Data Availability

Mutagenesis data is available through the supplementary material of references listed in Table 1, and several can be found in Esposito et al. (2019). R code to fit a normal mixture and classify neutral variants from the distributions of selection coefficients, as well as scripts and data to reproduce figures is provided as supplementary data (https://github.com/eferrada/QMutRobust).

Results

The Average Mutational Robustness of Natural Proteins

We quantify mutational robustness as the fraction of neutral variants (\(\lambda\)) available to a protein-coding gene. First, we consider \(\lambda\) as a result of constraints imposed by protein structure and function on the full distribution of amino acid substitutions in a protein, or \(\lambda _{prot}\). And second, we account for the combined effect of protein structure/function plus constraints arising from the genetic code on the nucleotide sequence, or \(\lambda _{nuc}\). We begin by estimating \(\lambda _{prot}\) using data from large-scale mutagenesis studies. We collected datasets of 18 natural proteins originally found in different organisms including bacteria and eukarya, range between 35 to 550 amino acids, and belong to diverse folds and families, which suggest that they are evolutionarily unrelated (Table 1). Similarly, the assays used in the experiments mimic the proteins’ natural functions, which are diverse, including peptide binding, RNA/DNA binding, and catalysis (Table 1). Overall, these studies have an average of 94% of all single mutations per protein, and encompass over 90,000 variants (Table 1).

Importantly, our definition of neutrality differs from a population genetics perspective. The reason is that estimations of the selection coefficient of mutations is simply a proxy which is highly dependent on the experimental conditions. For instance, selection assays used in these type of experiments oftentimes work at high, unrealistic gene expression levels (Boucher et al. 2016), or depend on the presence of a ligand (Stiffler et al. 2015). Thus, we restrict the use of the term neutral variant, to mean a mutation with a negligible effect on the gene’s function. We use two approaches to define neutral variants from data (Methods).

In the first approach, we define a set of control variants based on either synonymous mutations or variants under conditions of no selection. In the second approach control variants are defined as those part of the “neutral” component of a normal mixture fit to the DFE (Methods). The neutral component is defined as the one with a mean closest to the selection coefficient of the reference sequence (i.e. s = 0). In both approaches the set of control variants are described by a normal distribution: \(\mathcal {N}(\mu _o, \sigma ^2_o)\); and we use the mean (\(\mu _o\)) and standard deviation (\(\sigma _o\)) of this distribution to define neutral variants as those with a selection coefficient of at most 1.5 standard deviations away from the neutral mean (i.e. \(\mu _o-1.5\sigma _o\le {s}\le \mu _o+1.5\sigma _o\)). Our analyses of 11 studies of natural proteins to which we can apply both approaches, show a strong correlation (Pearson’s R = 0.89, p-value = 9.5e−06) (Table S2). Therefore, in the following, we use the second approach, which uses information from the full DFE, and can be applied to all datasets. Using this approach we found varying levels of robustness ranging from values close to 20% to over 60% of neutral variants, with an average \(\lambda _{prot}\) of 46.7% (95% CI: 45.5, 48.0) (Table 1).

Our estimations of \(\lambda _{prot}\) might be impacted by missing data. By just reporting the total fraction of neutral variants we have simply assumed that missing data distribute uniformly over the range of selection coefficients of a given protein. However, missing variants are most likely biased towards deleterious mutations, thus in some cases we might be overestimating \(\lambda _{prot}\).

To explore the effect of this potential bias on our conclusions, we repeated our analysis considering the hypothetical case in which all missing variants were deleterious. We found only a moderate effect on our previous estimation, with and average \(\lambda _{prot}\) of 43.6% (95% CI: 42.4, 44.9).

A second factor that might influence our results are the two different normalization strategies used to estimate selection coefficients (Eqs. 1, 2; Methods). Both normalization strategies are equally represented in our data, and a comparison of the two approaches revealed no significant differences between the estimations of \(\lambda\) (Wilcoxon Rank Sum test, p-value = 0.776). Moreover, we used raw data available for one of the studies (Cystathionine \(\beta\)-synthase, CBS) to obtain selection coefficients using both normalization methods, and observed indistinguishable results, with an average \(\lambda _{prot}\) of 44.1 ± 2.8% and 43.5 ± 2.1% ( ± 95% CI).

In summary, estimations of \(\lambda _{prot}\) from high-resolution mutagenesis data spanning a large set of non-redundant protein sequences, structures and functions, revealed an average \(\lambda _{prot}\approx\) 45%. This estimation does not seem biased by incomplete data or normalization strategies of the mutagenesis data.

A Computational Model of Proteins for the Large-Scale Simulation of Mutational Robustness

Is 45% of neutral variants, or the range of mutational robustness observed in mutagenesis data, expected or rather surprising? To address this question we implemented a computational lattice model that allows us to explore the degree of robustness of model conformations as well as factors that might impact the fraction of neutral variants. The protein model we implement here is based on sequences of length 16 mer, composed of the natural amino acid alphabet, and folded on a two-dimensional lattice. The model sacrifices details of the molecular structure of proteins to inform about the overall statistics of the sequence-structure map of proteins (Drummond and Wilke 2008; Drummond et al. 2005); and in particular, the relation between mutation and thermodynamic stability (Lau and Dill 1989; Lipman and Wilbur 1991; Bornberg-Bauer and Chan 1999; Xia and Levitt 2002).

Three features of the model justify its use in predicting the mutational robustness of proteins. First, the distribution of polar versus hydrophobic residues is one of the fundamental assumptions of the model (Lau and Dill 1989), that we further approximate using an empirically-derived energy function accounting for the full proteinaceous amino acid alphabet (Miyazawa and Jernigan 1985). Second, the model of L = 16 mer resembles the ratio between buried versus exposed residues in natural proteins, which is better recapitulated by two-dimensional than by the compact three-dimensional version of the model (Chan and Dill 1991; Dill et al. 1995). Third, the computational tractability of the model, as well as the use of the proteinaceous amino acid alphabet allows us to simulate both compact and non-compact conformations, and to obtain large and unbiased samples of sequences. By expanding the size of the amino acid alphabet, the model not only accounts for the globularity observed in most proteins (Chan and Dill 1996), but also for their large structural diversity, such as rare conformations of low stability and/or biased amino acid composition (Figure S4).

In this model, we define a protein family as a collection of viable sequences that share the same conformation or molecular function, and can be connected through paths of single-point mutations. Note that two sequences that adopt the same conformation do not necessarily belong to the same family. However, due to the ability of protein sequences to percolate in sequence space, we use these terms interchangeably.

The Mutational Robustness of Random Samples of Foldable Sequences

Similar to the empirical data, the model allows us to compute the mutational robustness (\(\lambda\)) of a sequence folded onto its native conformation. To do this we simulate all single-mutant variants of the sequence and compute \(\lambda\) as the fraction of those variants that preserve the same conformation as the original sequence. In contrast to empirical data, the model also allows us to estimate the average mutational robustness of a conformation c, or \(\lambda ^c\). We do this by simply taking the arithmetic average of the individual mutational robustness of a set of independently sampled sequences adopting the same conformation.

To gain insights about the expected mutational robustness of conformations arising from random, foldable sequences, we first obtain a large and unbiased sample of sequences using a Monte Carlo algorithm (Methods). The algorithm ensures that all sequences in the sample are foldable (i.e. adopt a single native conformation), are connected through single-point mutations, and are independent. Our initial sample comprised 2.2 × 10\(^6\) sequences, and included approximately 95% (14,313 out of 15,048) of the conformations in the model. Then, for each conformation in the sample, we sub-sampled up to 100 sequences and estimated \(\lambda ^c\).

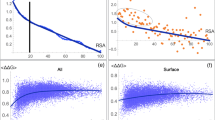

Average mutational robustness of conformations in the lattice model. Mutational robustness (\(\lambda _{prot}\)) of a sequence \(s_i\) folding on a conformation \(c_i\), was calculated as the fraction of single variants that preserves the conformation \(c_i\). We calculated \(\lambda ^c_{prot}\) as the arithmetic average of \(\lambda _{prot}\) of up to 100 sampled sequences per conformation

Our analyses of over 14,000 families revealed an average \(\lambda ^c_{prot}\) of 32 ± 9% (95% CI), with a distribution of slightly asymmetric shape that drops abruptly at \(\approx\) 40% (Fig. 1). There is a large variation in \(\lambda ^c_{prot}\). A small fraction of families (0.4%) shows averaged values of \(\lambda ^c_{prot}\) lower than 15%. This is expected by the large variation in the number of sequences per conformation (Figure S3B). To explore this further we classified families according to the number of sequences in our sample and study the average mutational robustness of the 1000 smallest and 1000 largest families. We found averaged fractions of neutral variants of 28 ± 6 and 41 ± 6% (± 95% CI), respectively.

Protein Families Can Evolve Twice the Robustness Seen in Random Samples of Foldable Sequences

Natural proteins are expected to differ from random samples of foldable sequences. The reason is that, to perform their functions protein-coding genes adapt to environmental conditions while subject to a variety of constraints. On the one hand, these constraints might substantially reduce \(\lambda\). On the other, under some circumstances proteins might evolve increasing mutational robustness (Van Nimwegen et al. 1999; Bloom et al. 2007). Next, we wanted to explore the impact of these two seemingly conflicting forces on \(\lambda\).

To study the maximal \(\lambda _{prot}\) available to proteins, we identified 300 representative families among the smallest and the largest protein families from our previous analyses. We sampled 10 sequences per family, and for each one of these sequences we simulated an adaptive walk towards larger \(\lambda _{prot}\) using the following algorithm: First, we calculate the mutational robustness (\(\lambda _i\)) of the reference sequence \(s_i\). Second, we introduce a single mutation randomly distributed along the sequence \(s_i\), producing sequence \(s_j\). Third, we calculate the mutational robustness of \(s_j\), or \(\lambda _j\). Fourth, if \(\lambda _j\ge \lambda _i\), we adopt \(s_j\) as our new reference sequence, otherwise we repeat steps 1 to 3 until no new sequences are found. We summarize the results of a simulation by calculating the average change in robustness per family, with respect to the initial sequence i, as: \(\Delta \lambda _{i} = \lambda _{{i,n}} - \lambda _{{i,1}}\). With n, the total number of adaptive steps. In using this approach we are not interested in the mechanisms for the evolution of mutational robustness, but rather on the availability of larger amounts of neutral mutations to sequences of a given protein family.

Our analyses of adaptive walks revealed that protein families can access a substantial extra fraction of neutral variants, and that this amount depends on family size. Figure 2A show examples of adaptive walks for two sequences with conformations of small and large family size. Overall, the smallest families experience on average \(\Delta \lambda\) of 26 ± 5%; while the largest families reach a \(\Delta \lambda\) of 38 ± 7% (± 95% CI). According to our previous analysis, unbiased samples of foldable sequences that belong to small and large families, have already average \(\lambda ^c_{prot}\) of 28 ± 6 and 41 ± 6%, respectively; therefore the same families might evolve approximately twice their initial \(\lambda ^c_{prot}\), reaching fractions of over 55 to 80% of neutral variants, respectively.

Functional Constraints Can Increase or Reduce Mutational Robustness

To test the effect of functional constraints on the mutational robustness of proteins we construct a biophysical model for the interaction of a target and a ligand. We define a target as a protein sequence folded onto its native conformation, and model a ligand as a short peptide of length l (Fig. 2b, c). The model assumes that functional performance (\(F_p\)) is proportional to the probability that the target interacts with the ligand, and therefore, it depends on two components. First, the folding probability that the target is in its native state (i.e. \(p_{f} = P(N)\), as defined by Eq. 2). The second component is the conditional probability of binding given that the target is in its native state (i.e. \(p_{b} = P(b|N)\)). \(p_b\) depends on the interaction energy between the target and the ligand (\(\Delta \epsilon\)), and therefore, on the amino acid composition of both the ligand and the sequence it binds on the target. We define \(p_{b}\) using two alternative models for the effect of thermodynamic stability on functional performance (Methods). The soft threshold model assumes the existence of a minimum amount of thermodynamic stability necessary for the function to be performed, and can be described by a sigmoidal curve (Fig. 2d). Alternatively, \(p_{b}\) can be defined by the optimum stability model, which assumes that functional performance is only optimized on a small range of relatively destabilizing interactions, and is therefore defined by a normal distribution centered at \(\Delta\epsilon = 0\) (Fig. 2d).

The range of mutational robustness of model proteins and the effect of functional constraints. (a). Examples of adaptive walks for two targets associated to a small family (bottom curves, conformation in panel b); and a large family (top curves, conformation in panel c). The algorithm used is described in the main text. At each step of the simulation, all single variants of the reference sequence are simulated and the mutational robustness (\(\lambda _{prot}\)) of a sequence is calculated. Adaptive walks are performed using no ligand (black thick curve), or with ligands according to the soft threshold (black thin curves) or the optimum stability models (light grey curves). Each walk is performed independently 10 times. Error bars show confidence intervals estimated using the bootstrap (n = 100). (b). Example of a small family conformation (black circles), with sequence CCCWECMWKLNVDKNC, and 5 interresidue contacts. Ligand (grey circles) with l = 9, b = 6. (c). Example of a conformation from a big family (black circles), with sequence: IRVNECKKDSPSYSEG, and 8 interresidue contacts. Ligand (grey circles) with l = 4, b = 4. (d). Models for the effect of thermodynamic stability on functional performance. Binding probability (\(p_b\)) as a function of the difference in binding energy (\(\varDelta \epsilon\)). Optimum stability (solid line) and soft threshold (dashed line) models. (e). Overall change in robustness per adaptive walk in the presence of ligands with different number of binding sites (b). Each point corresponds to the \(\varDelta \lambda ^c_{prot}\) over 150 adaptive walks of target’s conformations of small (bottom) and larger (top) family size, using the optimum stability (solid lines) or the soft threshold (dashed lines) models. Horizontal lines show the expected mutational robustness in the absence of a ligand. Error bars show confidence intervals estimated using the bootstrap (n = 150)

To explore the effect of functional constraints on the mutational robustness of a given target, we first use the target’s conformation to create a library of ligands with random conformations and sequences. Then, we select a ligand from this library, according to two conditions. First, the target-ligand interaction must comply with a previously defined value of b, or number of interacting sites, which can range between 2 to 8 sites, or \(\approx\)10 to 50% of the target’s length. Figure 2b and 2c present two examples, with b = 6 and b = 4, respectively. Notice that a single ligand site can bind none to multiple positions on the target, and therefore, b is not necessarily equal to the ligand size, l. The second condition is that the ligand’s sequence remains constant during the simulation and must have an initial binding probability \(p_b\) ranging between 0.90 and 0.95. Our choice of a high \(p_b\) ensures that the initial ligand is relatively well-adapted to its target, and justify our assumption of the binding of a single ligand per target. Finally, to explore the effect of functional constraints on \(\lambda _{prot}\), we simulated the same adaptive walk described above, but under the constraint of a functional performance of at least 0.5 (i.e. \(F_p\ge\)0.5).

Examples of adaptive walks for two targets suggest that the effect of functional constraints on \(\lambda _{prot}\) depends on both the number of binding sites and on the type of model for the relation between stability and functional performance (Fig. 2a–c). We see that regardless of the adaptive step along the walk, and on whether the family size of the target’s conformation is small or large, the optimum stability model reduces \(\lambda _{prot}\) in approximately 5 to 10% of neutral variants. The soft threshold model, on the other hand seems to have a less drastic effect on robustness, which in the case of the conformation of a small family size with b = 4, is reverted by the end of the adaptive walk (Fig. 2a, b).

One mechanism known to promote \(\lambda _{prot}\) is an increase in thermodynamic stability. In our model that can happen by either increasing the stability of interactions in the target (i.e. folding probability, \(p_f\)), or the interactions between the target and the ligand (i.e. binding probability, \(p_b\)). In the case of the soft threshold model, increasing binding stability (\(\varDelta \epsilon\)) is allowed to evolve with no detrimental effect on function. In contrast, in the optimum stability model the binding stability is constrained to low values. Therefore, in contrast to optimum stability, the soft threshold model might lead to the accumulation of additional thermodynamic stability and therefore larger \(\lambda _{prot}\). Because the binding stability is proportional to b, the larger the ligand, the strongest these effects are expected to be. This is precisely what we observe when simulating walks over a representative set of small and large families, with ligands of increasing sizes (Fig. 2e). Our conclusions are not impacted by changes to initial parameters in the simulation (Figure S5).

In summary the model shows that by evolving through single-point mutations, protein families can access up to twice the amount of robustness observed in unbiased samples of foldable sequences. We find that functional constraints can only moderately affect \(\lambda _{prot}\), in up to 10%, and depending on how functional performance responds to changes in thermodynamic stability, binding might even contribute to increase the fraction of neutral variants.

The Mutational Robustness of Artificially Designed Proteins

Our previous analyses suggest that protein families can potentially evolve substantial robustness and that functional constraints have only a moderate impact on robustness. Here, we ask whether the predictions of mutational robustness observed in the model are attainable by real proteins. To test this prediction we reason that in contrast to natural proteins, designed proteins are not the result of a long evolutionary process, and are often optimized for folding in the absence of functional constraints. Therefore, we contrast our previous findings to estimations of \(\lambda _{prot}\) from mutagenesis data of artificially designed proteins.

We use a recently reported dataset composed of 12 designed proteins ranging between 40 and 43 residues, spanning four different folds (one of which has not been observed in natural proteins), with a 100% of all single-point mutations per protein, and encompassing over 25,000 variants (Table S1) (Rocklin et al. 2017). These proteins were computationally designed to be thermodynamically stable, selected for stability using two protease assays (i.e. trypsin and chemotrypsin), and studied using large-scale mutagenesis. We found that designed proteins have an average \(\lambda _{prot}\) of 58.3 ± 2.1% (± 95% CI). This estimation is significantly different and approximately 15% larger than the \(\lambda _{prot}\) observed in the set of natural proteins (Table 1) (One-sided Mann-Whitney U-test, p-value = 0.0079). Notably, our observations are in line with the lattice model, which predicts an average maximal \(\lambda _{prot}\) \(\approx\) 60% of neutral variants.

Summary of the distribution of mutational robustness for proteins and protein-coding nucleotide sequences using mutagenesis data and simulations. Boxplots show estimations of \(\lambda\), expressed as percentage of neutral variants, from mutagenesis data of natural (white) and artificially designed (grey) proteins. Estimations of the mean \(\lambda\) from simulations in the lattice model is represented by segmented lines that connect the mean \(\lambda\) estimated from random samples of families (circles) or families evolved for larger \(\lambda\) (squares). In both cases estimations were carried out for families of small (white) and large (black) sizes (i.e. number of sequences per conformation)

Robustness from the Perspective of the Gene

So far we have studied the average fraction of neutral mutations in proteins using the full distribution of amino acid substitutions per site. However, the mutational robustness of a protein-coding gene is influenced by the mapping between codons and amino acids set by the genetic code, in two fundamental ways. First, a given protein-coding gene can only access \(\approx\)30% of the full set of amino acid substitutions. The reason is that of all possible single-point mutations in codons, 70% are non-synonymous, and these mutations can only lead to an average of 7.5 of the 19 (40%) other amino acids per site.

Second, if the map of codons to amino acids were fully random, our previous estimations of \(\lambda _{prot}\) for proteins would not be affected, since a sample of 30% of mutations would be drawn uniformly from the full distribution of amino acid substitutions. However, far from a random mapping between codons and amino acids, the universal genetic code is considerably adapted to reduce the impact of mutations on fitness (Freeland and Hurst 1998). Indeed, of the average 70% of non-synonymous mutations in codons, a large fraction leads to amino acid substitutions of similar physicochemical properties. Most importantly, 25% of single-point mutations in codons are expected to be synonymous, whereas 5% lead to truncated variants. Overall, influenced by codon usage, and sequence composition, the genetic code is expected to substantially increase the fraction of neutral variants of the average protein-coding gene.

To explore the average effect of the genetic code we calculated the expected mutational robustness of protein coding genes (i.e. \(\lambda _{nuc}\)) in the datasets of natural, artificial and unbiased samples of model proteins. For each protein sequence we generated \(n_r\) = 100 correponding mRNA sequences by randomly drawing matching codons from the universal genetic code. Then, for each RNA sequence we generated all single nucleotide variants and translated them into proteins. Finally, we estimated \(\lambda _{nuc}\) using the fitness values available from their respective DFE. As predicted, our results revealed the average \(\lambda _{nuc}\) of natural (66.3 ± 1.2%), artificial (70.5 ± 1.5%), and unbiased samples of sequences of both small (48.0 ± 2.7%) and large (58.1 ± 2.5%) families, to be on average 17.5% larger than the estimations based on the full set of protein variants, \(\lambda _{prot}\) (Fig. 3; Table 1, S1 and S2).

Discussion

We used large-scale mutagenesis data and computer simulations to quantify the mutational robustness of protein-coding genes. Analyses of distributions of amino acid substitutions from 18 natural proteins revealed that the observed mutational robustness of proteins ranges from 20 to over 60% of neutral variants, with an average of \(\approx\) 45%. In contrast, mutagenesis data of 12 artificial proteins, designed under stability constraints, showed average mutational robustness of approximately 60%. This difference is not surprising, since natural proteins are not expected to be optimized for mutational robustness; and suggests that natural proteins might be able to evolve, on average, an additional 15% of neutral variants.

As a result of their large evolutionary history, proteins have evolved to cope with a variety of constraints, including gene expression, mistranslation, and molecular function. Thus, the observed mutational robustness of proteins (i.e. \(\approx\) 45%) is expected to be larger than the intrinsic robustness of an unbiased sample of foldable sequences. We tested this hypothesis using a protein lattice model and our analyses provided three main insights.

First, we found that unbiased samples of protein families from the foldable fraction of sequence space are expected to access on average \(\approx\)30% of neutral variants. This intrinsic robustness predicted by the model might be explained by the distribution of hydrophobic and polar amino acids, as well as the ratio of exposed versus buried residues, that are fundamental properties of the foldability of proteins (Chothia 1976; Bowie et al. 1990), and that are well recapitulated by the model (Chan and Dill 1991; Dill et al. 1995). Evidence supporting an invariant degree of \(\lambda _{prot}\) comes from simulations of mutations in crystal structures showing that small size effects on stability are consistently observed in residues with surface accessibility of 40% or larger (Guerois et al. 2002; Tokuriki and Tawfik 2009; Ferrada 2019). Similarly, chain length might also impact mutational robustness. Theory predicts a linear dependence between length and main thermodynamic observables (Robertson and Murphy 1997). However, because of the competing effect between enthalpy and entropy, longer chains are predicted to have only a small impact on stability (Ghosh and Dill 2009). To test the impact that the dependence of stability on length might have on \(\lambda\), we explored their association in lattice models of varying lengths, as well as in the experimental data. A linear model revealed that the expected small dependence of stability on chain length, has also a small impact on \(\lambda\). Analysis of the empirical data shows that doubling the length of a protein/gene translates into a 10 to 15% increase in mean \(\lambda\) (Figure S6).

A second prediction of the lattice model is that protein families can access twice the amount of robustness observed in unbiased samples of foldable sequences, reaching up to approximately 60% of neutral variants. Notably, this value is very close to the estimations of \(\lambda _{prot}\) obtained from mutagenesis data of artificially designed proteins. Finally, the model suggested that functional constraints can only moderately affect the fraction of available neutral mutations in approximately 5 to 10%. This finding resonates with analyses of a large compilation of sequence profiles with annotated molecular function, which suggests that just the fraction of sites directly associate to function in single domain proteins is on average 3% (Sigrist et al. 2002). The model also revealed that the strength and direction of the effect of functional constraints depends on the relation between thermodynamic stability and functional performance, which means that under some circumstances functions involving binding might increase thermodynamic stability, favoring robustness, such as the stability gained by protein complexes (Guerois et al. 2002), or folding upon binding (Dyson and Wright 2002).

Large-scale mutagenesis studies for the characterization of molecular function have been criticized on the basis that the selection assay implemented by these studies might remove the gene’s function from its original cellular environment where gene expression, substrate or the presence of other genes might differ substantially (Boucher et al. 2016; Lind et al. 2016). As a consequence of these limitations, proxies for selection coefficient derived from these data might not necessarily provide information about the evolutionary history of a gene. To address this drawback, we have refrained from using a definition of neutrality based on population genetic principles, and simply defined neutral mutation as those with a negligible effect on protein function. Using this definition, and relying on a relatively large number of observations, as well as computer simulations, we aimed at estimating the overall average robustness to mutations. A mutagenesis study of \(\beta\)-lactamase (TEM-1) subject to several concentrations of the inhibitor Ampicillin supports our overall approach (Stiffler et al. 2015). The study showed that the DFE of the TEM-1, and therefore its fraction of neutral variants, depends on the concentration of the inhibitor. We used mutagenesis data reported by Stiffler et al. (2015) to estimate the fraction of TEM-1 variants that remain neutral at increasing concentrations of the inhibitor. Our estimations show substantial variation with values of robustness ranging between 29 and 77% of neutral variants (Table S2). Notably, these estimations corresponds closely with the variation predicted by our simulations and observed in other datasets, suggesting that our analyses succeed in providing a representative glimpse of the expected natural variation of protein robustness.

In summary, mutagenesis data and simulations provide support to the existence of intrinsic levels of mutational robustness, which should amount to approximately 30% of neutral variants. Similarly, the data suggests that the average protein access an additional 15% of neutral variants, which might result from the joint effect of evolutionary forces, such as adaptation to cellular conditions, including selection for function and the effect of genetic drift. Our analyses also suggest that proteins are not optimized for robustness, and could access an additional average of 15 to 20% of neutral variants, that might be reached in rare cases through mechanisms of direct selection for robustness, by congruently evolving thermodynamic stability, or in large populations driven simply by the topology of neutral networks in sequence space (Van Nimwegen et al. 1999; Bornberg-Bauer and Chan 1999; Bloom et al. 2007).

Our results show that the mutational robustness of protein-coding genes is even expected to increase when accounting for the effect of genetic code, such that the expected, observed, and maximal \(\lambda _{nuc}\) are approximately 50, 65, and 75%, respectively (Fig. 3). Overall, these findings provide support to the ability of protein-coding genes to evolve neutrally. In fact, theory predicts that provided enough mutational robustness (\(\lambda _{nuc}>\) 37%) (Reidys et al. 1997), genes can escape the confinement of a local rugged landscape, and percolate reaching largely divergent regions of sequence space (Reidys et al. 1997; Babajide et al. 1997, 2001). Thus, the substantial mutational robustness of protein-coding genes would explain the existence of largely divergent families of relatively remote but detectable homology, sharing similar structures and functions.

References

Azevedo RB, Lohaus R, Srinivasan S, Dang KK, Burch CL (2006) Sexual reproduction selects for robustness and negative epistasis in artificial gene networks. Nature 440(7080):87–90

Babajide A, Farber R, Hofacker IL, Inman J, Lapedes AS, Stadler PF (2001) Exploring protein sequence space using knowledge-based potentials. J Theor Biol 212(1):35–46

Babajide A, Hofacker IL, Sippl MJ, Stadler PF (1997) Neutral networks in protein space: a computational study based on knowledge-based potentials of mean force. Fold Des 2(5):261–269

Bloom JD, Lu Z, Chen D, Raval A, Venturelli OS, Arnold FH (2007) Evolution favors protein mutational robustness in sufficiently large populations. BMC Biol 5(1):29

Bornberg-Bauer E (1997) How are model protein structures distributed in sequence space? Biophys J 73(5):2393–2403

Bornberg-Bauer E, Chan HS (1999) Modeling evolutionary landscapes: mutational stability, topology, and superfunnels in sequence space. Proc Natl Acad Sci 96(19):10689–10694

Boucher JI, Bolon DN, Tawfik DS (2016) Quantifying and understanding the fitness effects of protein mutations: laboratory versus nature. Protein Sci 25(7):1219–1226

Bowie JU, Reidhaar-Olson JF, Lim WA, Sauer RT (1990) Deciphering the message in protein sequences: tolerance to amino acid substitutions. Science 247(4948):1306–1310

Bratulic S, Gerber F, Wagner A (2015) Mistranslation drives the evolution of robustness in tem-1 \(\beta\)-lactamase. Proc Natl Acad Sci 112(41):12758–12763

Chan HS, Dill KA (1991) “sequence space soup’’ of proteins and copolymers. J Chem Phys 95(5):3775–3787

Chan HS, Dill KA (1996) Comparing folding codes for proteins and polymers. Proteins-Struct Funct Genet 24(3):335–344

Chothia C (1976) The nature of the accessible and buried surfaces in proteins. J Mol Biol 105(1):1–12

DePristo MA, Weinreich DM, Hartl DL (2005) Missense meanderings in sequence space: a biophysical view of protein evolution. Nat Rev Genet 6(9):678–687

Dill KA, Bromberg S, Yue K, Chan HS, Ftebig KM, Yee DP, Thomas PD (1995) Principles of protein folding-a perspective from simple exact models. Protein Sci 4(4):561–602

Doi N, Kakukawa K, Oishi Y, Yanagawa H (2005) High solubility of random-sequence proteins consisting of five kinds of primitive amino acids. Protein Eng Des Sel 18(6):279–284

Drummond DA, Silberg JJ, Meyer MM, Wilke CO, Arnold FH (2005) On the conservative nature of intragenic recombination. Proc Natl Acad Sci USA 102(15):5380–5385

Drummond DA, Wilke CO (2008) Mistranslation-induced protein misfolding as a dominant constraint on coding-sequence evolution. Cell 134(2):341–352

Dyson HJ, Wright PE (2002) Coupling of folding and binding for unstructured proteins. Curr Opin Struct Biol 12(1):54–60

Echave J, Wilke CO (2017) Biophysical models of protein evolution: understanding the patterns of evolutionary sequence divergence. Annu Rev Biophys 46:85–103

El-Gebali S, Mistry J, Bateman A, Eddy SR, Luciani A, Potter SC, Qureshi M, Richardson LJ, Salazar GA, Smart A et al (2019) The pfam protein families database in 2019. Nucleic Acids Res 47(D1):D427–D432

Esposito D, Weile J, Shendure J, Starita LM, Papenfuss AT, Roth FP, Fowler DM, Rubin AF (2019) Mavedb: an open-source platform to distribute and interpret data from multiplexed assays of variant effect. Genome Biol 20(1):1–11

Eyre-Walker A, Keightley PD (2007) The distribution of fitness effects of new mutations. Nat Rev Genet 8(8):610

Ferrada E (2019) The site-specific amino acid preferences of homologous proteins depend on sequence divergence. Genome Biol Evol 11(1):121–135

Finkelstein A, Gutin A, Badretdinov A (1994) Boltzmann-like statistics of protein architectures. Origins and consequences. Sub-Cell Biochem 24:1–26

Freeland SJ, Hurst LD (1998) The genetic code is one in a million. J Mol Evol 47(3):238–248

Ghosh K, Dill KA (2009) Computing protein stabilities from their chain lengths. Proc Natl Acad Sci 106(26):10649–10654

Goldstein R.A (2011) The evolution and evolutionary consequences of marginal thermostability in proteins. Proteins 79(5):1396–1407

Guerois R, Nielsen JE, Serrano L (2002) Predicting changes in the stability of proteins and protein complexes: a study of more than 1000 mutations. J Mol Biol 320(2):369–387

Hartling J, Kim J (2008) Mutational robustness and geometrical form in protein structures. J Exp Zool B 310(3):216–226

Jerrum M, Sinclair A (1996) The markov chain monte carlo method: an approach to approximate counting and integration. PWS Publishing, Boston

Jiang RJ (2019) Exhaustive mapping of missense variation in coronary heart disease-related genes. Ph.D. thesis, University of Toronto, Canada

Kitzman JO, Starita LM, Lo RS, Fields S, Shendure J (2015) Massively parallel single-amino-acid mutagenesis. Nat Methods 12(3):203–206

Lau KF, Dill KA (1989) A lattice statistical mechanics model of the conformational and sequence spaces of proteins. Macromolecules 22(10):3986–3997

Li H, Helling R, Tang C, Wingreen N (1996) Emergence of preferred structures in a simple model of protein folding. Science 273(5275):666

Lind PA, Arvidsson L, Berg OG, Andersson DI (2016) Variation in mutational robustness between different proteins and the predictability of fitness effects. Mol Biol Evol 34(2):408–418

Lipman DJ, Wilbur WJ (1991) Modelling neutral and selective evolution of protein folding. Proc R Soc Lond B 245(1312):7–11

Lynch M, Conery JS (2003) The origins of genome complexity. Science 302(5649):1401–1404

Matreyek KA, Starita LM, Stephany JJ, Martin B, Chiasson MA, Gray VE, Kircher M, Khechaduri A, Dines JN, Hause RJ et al (2018) Multiplex assessment of protein variant abundance by massively parallel sequencing. Nat Genet 50(6):874–882

Maynard-Smith J (1970) Natural selection and the concept of a protein space. Nature 225(5232):563–564

McLaughlin RN, Poelwijk FJ, Raman A, Gosal WS, Ranganathan R (2012) The spatial architecture of protein function and adaptation. Nature 491(7422):138–142

Melamed D, Young DL, Gamble CE, Miller CR, Fields S (2013) Deep mutational scanning of an rrm domain of the saccharomyces cerevisiae poly (a)-binding protein. RNA 19(11):1537–1551

Melnikov A, Rogov P, Wang L, Gnirke A, Mikkelsen TS (2014) Comprehensive mutational scanning of a kinase in vivo reveals substrate-dependent fitness landscapes. Nucleic Acids Res 42(14):e112–e112

Miller J.H (1979) Genetic studies of the lac repressor: Xi. on aspects of lac repressor structure suggested by genetic experiments. J Mol Biol 131(2):249–258

Mishra P, Flynn JM, Starr TN, Bolon DN (2016) Systematic mutant analyses elucidate general and client-specific aspects of hsp90 function. Cell Rep 15(3):588–598

Miyazawa S, Jernigan RL (1985) Estimation of effective interresidue contact energies from protein crystal structures: quasi-chemical approximation. Macromolecules 18(3):534–552

Nei M (2013) Mutation-driven evolution. OUP, Oxford

Olson CA, Wu NC, Sun R (2014) A comprehensive biophysical description of pairwise epistasis throughout an entire protein domain. Curr Biol 24(22):2643–2651

Phillips R, Kondev J, Theriot J, Garcia H (2012) Physical biology of the cell. Garland Science, New York

Rao S.P, Carlstrom D.E, Miller W.G (1974) Collapsed structure polymers. Scattergun approach to amino acid copolymers. Biochemistry 13(5):943–952

Redler RL, Das J, Diaz JR, Dokholyan NV (2016) Protein destabilization as a common factor in diverse inherited disorders. J Mol Evol 82(1):11–16

Reidys C, Stadler PF, Schuster P (1997) Generic properties of combinatory maps: neutral networks of rna secondary structures. Bull Math Biol 59(2):339–397

Rennell D, Bouvier SE, Hardy LW, Poteete AR (1991) Systematic mutation of bacteriophage t4 lysozyme. J Mol Biol 222(1):67–88

Robertson AD, Murphy KP (1997) Protein structure and the energetics of protein stability. Chem Rev 97(5):1251–1268

Rocklin GJ, Chidyausiku TM, Goreshnik I, Ford A, Houliston S, Lemak A, Carter L, Ravichandran R, Mulligan VK, Chevalier A et al (2017) Global analysis of protein folding using massively parallel design, synthesis, and testing. Science 357(6347):168–175

Roscoe BP, Thayer KM, Zeldovich KB, Fushman D, Bolon DN (2013) Analyses of the effects of all ubiquitin point mutants on yeast growth rate. J Mol Biol 425(8):1363–1377

Shendure J, Fields S (2016) Massively parallel genetics. Genetics 203(2):617–619

Sigrist CJ, Cerutti L, Hulo N, Gattiker A, Falquet L, Pagni M, Bairoch A, Bucher P (2002) Prosite: a documented database using patterns and profiles as motif descriptors. Brief Bioinform 3(3):265–274

Starita LM, Pruneda JN, Lo RS, Fowler DM, Kim HJ, Hiatt JB, Shendure J, Brzovic PS, Fields S, Klevit RE (2013) Activity-enhancing mutations in an e3 ubiquitin ligase identified by high-throughput mutagenesis. Proc Natl Acad Sci 110(14):E1263–E1272

Stiffler MA, Hekstra DR, Ranganathan R (2015) Evolvability as a function of purifying selection in tem-1 \(\beta\)-lactamase. Cell 160(5):882–892

Suckow J, Markiewicz P, Kleina LG, Miller J, Kisters-Woike B, Müller-Hill B (1996) Genetic studies of the lac repressor xv: 4000 single amino acid substitutions and analysis of the resulting phenotypes on the basis of the protein structure. J Mol Biol 261(4):509–523

Sun S, Weile J, Verby M, Wu Y, Wang Y, Cote AG, Fotiadou I, Kitaygorodsky J, Vidal M, Rine J et al (2020) A proactive genotype-to-patient-phenotype map for cystathionine beta-synthase. Genome Med 12(1):1–18

Tenaillon O, Barrick JE, Ribeck N, Deatherage DE, Blanchard JL, Dasgupta A, Wu GC, Wielgoss S, Cruveiller S, Médigue C et al (2016) Tempo and mode of genome evolution in a 50,000-generation experiment. Nature 536(7615):165–170

Tokuriki N, Stricher F, Schymkowitz J, Serrano L, Tawfik DS (2007) The stability effects of protein mutations appear to be universally distributed. J Mol Biol 369(5):1318–1332

Tokuriki N, Tawfik DS (2009) Stability effects of mutations and protein evolvability. Curr Opin Struct Biol 19(5):596–604

Van Nimwegen E, Crutchfield JP, Huynen M (1999) Neutral evolution of mutational robustness. Proc Natl Acad Sci 96(17):9716–9720

Weile J, Sun S, Cote AG, Knapp J, Verby M, Mellor JC, Wu Y, Pons C, Wong C, van Lieshout N et al (2017) A framework for exhaustively mapping functional missense variants. Mol Syst Biol 13(12):957

Xia Y, Levitt M (2002) Roles of mutation and recombination in the evolution of protein thermodynamics. Proc Natl Acad Sci 99(16):10382–10387

Yue P, Li Z, Moult J (2005) Loss of protein structure stability as a major causative factor in monogenic disease. J Mol Biol 353(2):459–473

Zhang J, Yang JR (2015) Determinants of the rate of protein sequence evolution. Nat Rev Genet 16(7):409–420

Acknowledgements

The author thanks to Dr. Patricio Orio at the Centro Interdisciplinario de Neurociencias de Valparíso (CINV), for access to computational resources; and to the Chilean National Agency for Research and Development (ANID), for support through the project REDES190089.

Author information

Authors and Affiliations

Corresponding author

Additional information

Handling Editor: Erich Bornberg-Bauer.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

About this article

Cite this article

Ferrada, E. Quantifying the Mutational Robustness of Protein-Coding Genes. J Mol Evol 89, 357–369 (2021). https://doi.org/10.1007/s00239-021-10009-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00239-021-10009-1