Abstract

The growth of ancient DNA research has offered exceptional opportunities and raised great expectations, but has also presented some considerable challenges. One of the ongoing issues is the impact of post-mortem damage in DNA molecules. Nucleotide alterations and DNA strand breakages lead to a significant decrease in the quantity of DNA molecules of useful length in a sample and to errors in the final DNA sequences obtained. We present a model of age-dependent DNA damage and quantify the influence of that damage on subsequent steps in the sequencing process, including the polymerase chain reaction and cloning. Calculations using our model show that deposition conditions, rather than the age of a sample, have the greatest influence on the level of DNA damage. In turn, this affects the probability of interpreting an erroneous (possessing damage-derived mutations) sequence as being authentic. We also evaluated the effect of post-mortem damage on real data sets using a Bayesian phylogenetic approach. According to our study, damage-derived sequence alterations appear to have little impact on the final DNA sequences. This indicates the effectiveness of current methods for sequence authentication and validation.

Introduction

The development and improvement of molecular methods has enabled DNA to be recovered from organic remains up to hundreds of thousands of years old. The availability of such ancient DNA (aDNA) data significantly expands the opportunities for investigating recent evolutionary and archaeological questions. However, the post-mortem degradation of DNA creates significant challenges for such studies, particularly because of data loss and the heightened risks of sample contamination. In an attempt to improve the reliability and credibility of the results, various authors have proposed authentication methods and anti-contamination precautions (e.g., Cooper and Poinar 2000; Hofreiter et al. 2001b; Pääbo et al. 2004; Willerslev and Cooper 2005).

To overcome the difficulties associated with aDNA studies, it is essential to understand the sources and nature of DNA decay. Cellular and biomolecular decay starts at the moment of death of an organism, when the biochemical pathways cease and the phospholipid membranes of the cells break down. The cells then begin to be degraded by endogenous nucleases and proteases (Darzynkiewicz et al. 1997). The DNA is cleaved non-specifically into fragments. Subsequently, DNA is prone to digestion by bacteria and other organisms feeding on the dead organic matter (Eglinton and Logan 1991). Both of these DNA-damaging processes commence relatively soon after cell death, with the latter lasting until the resident microorganisms have exhausted the digestible organic matter (Vass 2001).

Moreover, biomolecules are continuously affected by chemical decomposition driven by mostly hydrolytic and oxidative reactions, though at a much lower rate than the enzymatic decomposition described above (Frederico et al. 1990; Lindahl 1993; Lindahl and Andersson 1972; Lindahl and Nyberg 1972). This spontaneous chemical decomposition of DNA has been examined the most extensively because it is possible to estimate its rates under known conditions (such as temperature or pH). In contrast, the rates of endogenous enzymatic and microbiological damage are extremely difficult to quantify owing to their complex dynamics.

For practical convenience, in this study we divide DNA damage into two categories (Fig. 1). The first is “shortening lesions,” which comprises all of the chemical changes that are not bypassable by DNA polymerase and thus prevent DNA replication during PCR. These are predominantly strand breaks, intermolecular crosslinks, and modified nucleotides that block the strand elongation (e.g., hydantoins). The second category of damage, “miscoding lesions,” includes all alterations that can be bypassed by polymerase but produce changes in nucleotides that result in a faulty sequence read.

Fig. 1 A scheme of DNA post-mortem damage and sequence analysis. DNA molecules undergo miscoding (red stars) and shortening damage. Shortening damage comprises blocking lesions, such as crosslinks or hydantoins (black squares), and strand breaks. Shortening lesions that occur within fragments targeted by PCR prevent DNA amplification. Miscoding lesions, if present in a sufficiently high fraction of the amplified fragments, lead to spurious polymorphisms in the DNA sequence.

The overwhelming majority of post-mortem miscoding lesions take the form of type 2 transitions (C→T and G→A) (Gilbert et al. 2003, 2007; Hansen et al. 2001; Stiller et al. 2006). Most authors point to cytosine deamination as a major source of those lesions (Briggs et al. 2007; Brotherton et al. 2007; Hofreiter et al. 2001a). Spontaneous hydrolysis with a release of an amine group results in the replacement of cytosine with uracil, which is subsequently read as thymine during PCR and results in an apparent C→T or G→A (on the opposite strand) transition in the final sequence. Although some have suggested that a proportion of the observed type 2 transitions derive from guanine modifications (Gilbert et al. 2007), Brotherton et al. (2007) showed that they were artifacts of the DNA library building process. Nevertheless, regardless of the actual origin of such miscoding lesions, a distinction between these two reactions is not drawn in standard PCR-based analysis.

Shortening lesions are more difficult to characterize in PCR-based studies because they simply manifest themselves as a lack of PCR product. Two main causes of shortening lesions have been discussed in the literature: (i) depurination, which typically causes strand breaks and therefore shortens the DNA fragments available for analysis (Lindahl and Andersson 1972); and (ii) crosslinks between DNA strands, DNA molecules, or DNA and proteins (Greenberg 2005; Poinar et al. 1998; Reynolds 1965), which stop polymerase from continuing DNA replication. Hansen et al. (2006) argued that, for permafrost-preserved samples, crosslinks are predominantly responsible for the decreased length of PCR-available DNA molecules. Heyn et al. (2010), however, found that levels of blocking lesions (crosslinks and all other shortening lesions that do not cause physical fragmentation of DNA molecules) vary widely across different samples, ranging from a barely detectable amount in mammoth DNA to 39% of affected molecules for a permafrost-preserved horse sample. They also found no significant correlation between the level of DNA fragmentation and the frequency of blocking lesions.

Some of the nucleotide modifications may constitute either a miscoding or shortening lesion, depending on the DNA polymerase used for amplification. This is the case especially with uracil residues emerging from cytosine deamination events. Whereas most DNA polymerases used for PCR (Taq polymerase family) simply treat uracil as thymine, some others, like Pfu polymerase, are not able to continue replication through uracil (Fogg et al. 2002). Therefore, depending on the chosen amplifying enzyme, the type 2 transitions will either stop strand elongation or introduce damage-derived sequence alterations.

Both shortening and miscoding lesions accumulate through time in DNA molecules, resulting in a reduction in molecular length and an increase in nitrogenous base modifications. As a consequence, when a PCR amplification is attempted, only a certain number of molecules of the targeted length are available. Furthermore, some fraction of these molecules carries miscoding lesions. Intuitively, the most significant factor dictating the level of DNA decay is the age of the material. However, environmental factors such as temperature, hydration, and pH can also have an important influence. These factors have the potential to obscure the relationship between the chronological age of a sample and the level of DNA damage (e.g., Poinar et al. 1996).

To capture the contribution of temperature to post-mortem DNA decay, Smith et al. (2001) introduced the concept of “thermal age,” which measures the “age” of a specimen standardized according to temperature. The standardization is made at 10°C, such that the thermal age of a sample is defined as the time after which, if it had been deposited at 10°C, the sample would exhibit an equivalent level of DNA degradation. The thermal age of a sample will depend on its chronological age as well as the long-term average temperature of deposition. It has been suggested that the amount of DNA damage in a sample is more likely to correlate with thermal age than with chronological (radiocarbon) age (Smith et al. 2001). However, the thermal age model considers only depurination-driven damage and disregards other forms of shortening damage (e.g., crosslinks) as well as miscoding damage.

Several procedures have been developed to reduce the impact of DNA damage on sequence analyses. These include molecular techniques that are applied prior to the sequencing process. The use of DNA polymerase that ceases elongation at uracil residues (Pfu polymerase; Fogg et al. 2002) will eliminate the miscoding lesions derived from cytosine deamination from the amplification product, turning them into shortening lesions. Also, uracil N-glycosylase treatment is widely used to remove uracil-derived miscoding lesions (Hofreiter et al. 2001a; Pääbo 1989). Intermolecular crosslinks (products of the Maillard reaction) are sometimes claimed to be removed by N-phenacylthiazolium bromide (Poinar et al. 1998; Vasan et al. 1996), but doubts have been cast on the benefits of this treatment (Rohland and Hofreiter 2007).

Statistical approaches can also be adopted to evaluate and mitigate the impact of post-mortem damage in DNA sequence data. Helgason et al. (2007) developed a test in which the damage-derived sequence alterations among clone sequences are used to determine the endogenous DNA sequence. Bower et al. (2005) estimated the number of clones needed to obtain a statistically supported final sequence. Dedicated bioinformatic tools are being introduced to detect miscoding damage (e.g., Ginolhac et al. 2011), with the potential to improve sequence authentication. The potential effect of DNA damage on phylogenetic and population-genetic analyses has been raised in the literature (Axelsson et al. 2008; Clark and Whittam 1992), prompting others to develop phylogenetic models of aDNA decay (e.g., Ho et al. 2007; Mateiu and Rannala 2008; Rambaut et al. 2009). These models allow the level of damage to be estimated and taken into account in evolutionary analysis, and treat the amount of damage as either age-dependent (Rambaut et al. 2009) or age-independent (Ho et al. 2007; Mateiu and Rannala 2008). Given the interactions of factors that can affect the level of DNA damage in a given sample, and given the range of methods that have been employed in different studies to obtain and authenticate ancient sequence data, it is not clear which of these two types of models is more appropriate for real data sets. In addition, for the age-dependent model, thermal age might represent a better indicator of the level of DNA damage than the chronological age of a sample.

To provide further insight into the nature of DNA decay and its impact on aDNA studies, we developed a model of DNA damage and sequence analysis. This allows us to investigate how the different factors affecting organic remains and the methods used during their examination influence the accuracy of the resulting aDNA sequence data. Our model shows the relative effect of different factors, thereby providing an indication of the critical points in aDNA analysis, including the experimental design. We also evaluate the age dependence of DNA damage and determine whether the impact of miscoding lesions is mitigated by the precautions taken during sequencing and analysis.

Materials and Methods

To analyze the influence of different sample-associated parameters on the level of DNA damage, we: (i) explored the contributions of sample age and deposition temperature to “thermal age,” which has been suggested to correlate well with DNA preservation; (ii) developed a theoretical model of post-mortem DNA damage and sequence analysis; and (iii) analyzed published aDNA data using Bayesian phylogenetic models of post-mortem damage to assess the prevalence of damage-derived sequence errors and the putative age dependence of the level of DNA damage.

Thermal Age

To assess the applicability of thermal age in predicting the level of DNA damage, we evaluated the relative effects of sample age and deposition temperature. Thermal ages for temperatures ranging from −30 to +30°C were calculated using the equation provided by Smith et al. (2003):

$$t_{10^{\circ}\mathrm{C}} = t_c \, \frac{k_T}{k_{10^{\circ}\mathrm{C}}} \qquad (1)$$

where $t$ is the age of the sample, either thermal ($t_{10^{\circ}\mathrm{C}}$) or chronological ($t_c$), and $k$ is the rate of reaction (at the sample deposition temperature $T$ or at 10°C). Reaction rates were calculated using the Arrhenius equation:

$$k = A \, e^{-E_a / (RT)} \qquad (2)$$

where $A$ is a pre-exponential constant ($A = 1.45 \times 10^{11}\ \mathrm{s^{-1}}$), $E_a$ is the activation energy ($E_a = 127\ \mathrm{kJ\,mol^{-1}}$), $R$ is the gas constant ($R = 8.314\ \mathrm{J\,mol^{-1}\,K^{-1}}$), and $T$ is the temperature (K). The values of $A$ and $E_a$ were estimated by Lindahl and Nyberg (1972), who obtained an in vitro estimate of the depurination reaction curve.

By combining Eqs. 1 and 2, the thermal age of a sample can be calculated using the following equation:

$$t_{10^{\circ}\mathrm{C}} = t_c \, \exp\!\left[\frac{E_a}{R}\left(\frac{1}{283.15\ \mathrm{K}} - \frac{1}{T}\right)\right] \qquad (3)$$

Although a study of permafrost-preserved mammoth samples suggested that thermal age and other available indirect methods are only able to provide poor predictions of the level of DNA damage (Schwarz et al. 2009), this might not necessarily be the case for all aDNA samples. We investigate the thermal age concept further to determine how the level of damage depends on age and depositional temperature.
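The thermal age calculation described in this subsection can be sketched in a few lines of Python. This is an illustrative sketch rather than the code used in the study: the function names are hypothetical, temperatures are passed in °C and converted to kelvin, and the constants are the depurination parameters quoted above (with the activation energy taken per mole).

```python
import math

R = 8.314          # gas constant, J mol^-1 K^-1
E_A = 127_000.0    # activation energy for depurination, J mol^-1 (127 kJ mol^-1)
A = 1.45e11        # pre-exponential constant, s^-1


def arrhenius_rate(temp_c):
    """Reaction rate (s^-1) from the Arrhenius equation at a temperature in deg C."""
    return A * math.exp(-E_A / (R * (temp_c + 273.15)))


def thermal_age(chronological_age, temp_c):
    """Age standardized to 10 deg C: chronological age scaled by k_T / k_10."""
    return chronological_age * arrhenius_rate(temp_c) / arrhenius_rate(10.0)


if __name__ == "__main__":
    # Ratio of thermal to chronological age at a few deposition temperatures.
    for temp in (-30, -1.6, 10, 20, 30):
        print(f"{temp:>6} deg C: thermal/chronological = {thermal_age(1.0, temp):.3g}")
```

Because the pre-exponential constant cancels in the ratio, only the activation energy and the two temperatures determine the scaling between chronological and thermal age.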

Model of DNA Damage and Sequence Analysis

Here, we describe a model of the post-mortem decay processes influencing DNA and of the subsequent procedure used to retrieve the sequence data. The process begins with the death of the organism, when 100% of the DNA molecules are present in the sample. The molecules are simultaneously subjected to two types of damage-inducing reactions: shortening damage reactions occurring at rate $k_s$ and reactions introducing miscoding lesions occurring at rate $k_m$. Since it has been argued that an overwhelming majority of miscoding lesions are type 2 transitions (regardless of whether they are C→U/T or G→A), it can be assumed that only C and G will undergo this type of damage. Therefore, in our model, $k_m$ applies only to sites with C and G.

Each of the two types of damage-inducing reaction is described by the Arrhenius equation and has its own values for the activation energy and pre-exponential factor. In the model, however, since any reliable estimation of these parameters for post-mortem decay seems unfeasible, we used fixed rates for both $k_s$ and $k_m$ ranging from $10^{-7}$ to $10^{-2}\ \mathrm{site^{-1}\,year^{-1}}$. This represents a wide range of possible rates of damage reaction, given that the depurination rates range from $10^{-9}$ to $10^{-4}\ \mathrm{site^{-1}\,year^{-1}}$ for a temperature range of −30 to 30°C (Lindahl and Nyberg 1972), and given that other types of damage are also assumed to be in operation.

Although there are in vitro estimates for rates of DNA depurination (Lindahl and Nyberg 1972) and deamination (Lindahl and Nyberg 1974), as well as an in vivo estimate for the rate of spontaneous deamination (Shen et al. 1994), they are likely to be highly distinct from DNA damage after cell death. Factors affecting post-mortem reaction rates are of an environmental nature and can include temperature, pH, level of hydration, and oxidative potential. Unfortunately, these factors are rarely known with any certainty, especially for the entire life of an ancient sample. As a consequence, it is difficult to quantify their impact on the level of post-mortem DNA damage in a sample. This necessitates the use of a retrospective approach in which the reaction rates are derived from the observed damage level (e.g., Gilbert et al. 2007).

For given reaction rates of damage, we calculated the probabilities of occurrence of at least one shortening or miscoding lesion on a fragment of a chosen (PCR-targeted) length of $l$ bp after time $t_c$. It was assumed that the probability of damage events, within and among damage reaction types, is identically and independently distributed among sites. Although Briggs et al. (2007) found evidence of increased levels of strand breakage in C→T transition sites, such lesions will be observed solely as shortening lesions in PCR-based studies and will be treated as such in this study. However, when comparing empirical results with those obtained using this model, one would need to consider the effects of this linkage.

The probabilities of damage per fragment were described as follows:
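A form consistent with the stated assumptions (independent, identically distributed damage events at constant per-site rates) and with the loss times reported in the Results is sketched below. The labels $P_s$ and $P_m$, denoting the per-fragment probabilities of at least one shortening or miscoding lesion, are assumed here for illustration, and the exponential form is a reconstruction rather than a verbatim copy of the published equations.

$$P_s = 1 - e^{-k_s \, l \, t_c} \qquad (4)$$

$$P_m = 1 - e^{-k_m \, l_{CG} \, t_c} \qquad (5)$$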

where $l$ is the length of the sequence and $l_{CG}$ is the number of C and G sites in the sequence. As a result of these damage processes, the DNA template molecules eventually fall into four classes:
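Under the independence assumption, and writing $P_s$ and $P_m$ (labels assumed here) for the per-fragment probabilities of at least one shortening and at least one miscoding lesion, the four class fractions can be sketched as simple products. The names of the two non-amplifiable classes are likewise illustrative.

$$P(\text{amplifiable, unaltered}) = (1 - P_s)(1 - P_m) \qquad (6)$$

$$P(\text{amplifiable, altered}) = (1 - P_s)\,P_m \qquad (7)$$

$$P(\text{non-amplifiable, unaltered}) = P_s\,(1 - P_m) \qquad (8)$$

$$P(\text{non-amplifiable, altered}) = P_s\,P_m \qquad (9)$$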

Only the two first classes, which represent the fraction of molecules amplifiable and unaltered and the fraction of molecules amplifiable and altered, respectively, participate in PCR. The molecules in the last two classes are shorter than the length targeted by the primers. Consequently, the PCR product is a mixture of DNA fragments of a targeted length, with a certain fraction carrying at least one alteration (miscoding lesion) in the sequence. Assuming that neither altered nor unaltered molecules are amplified preferentially in the PCR, the fraction of altered molecules in the PCR product, given by P(amplifiable, altered), should be similar to that of the original DNA extract. A sequence obtained using the Sanger method will be determined by the nucleotide constituting the majority at each nucleotide position in the PCR product (i.e., a majority-rule consensus).

It is also possible to estimate the absolute number of DNA molecules in the extract depending on the tissue type, DNA type (nuclear/organellar), and sample quantity. Smith et al. (2003) offered estimates for mitochondrial DNA from human bone tissue. However, to avoid introducing additional uncertainties and approximations to our analyses, we have chosen to present the results as fractions rather than as absolute values.

We plotted the fractions of correct and erroneous molecules against a range of values for the parameters of the model. This allowed us to analyze the influence of each factor on the success of the sequencing procedure.
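As a rough illustration of how such fractions can be tabulated, the following Python sketch computes the four template classes for one parameter combination. It assumes exponential accumulation of damage at constant per-site rates and independence between shortening and miscoding lesions; the function name, argument names, and dictionary keys are hypothetical.

```python
import math


def template_classes(k_s, k_m, length, n_cg, t_c):
    """Fractions of template molecules in the four damage classes.

    k_s, k_m : shortening and miscoding rates (site^-1 year^-1)
    length   : targeted fragment length in bp
    n_cg     : number of C and G sites in the fragment
    t_c      : chronological age in years
    """
    # Probability of at least one lesion of each type on the fragment
    # (assumed exponential form, constant per-site rates).
    p_short = 1.0 - math.exp(-k_s * length * t_c)
    p_miscode = 1.0 - math.exp(-k_m * n_cg * t_c)
    return {
        "amplifiable_unaltered": (1 - p_short) * (1 - p_miscode),
        "amplifiable_altered": (1 - p_short) * p_miscode,
        "nonamplifiable_unaltered": p_short * (1 - p_miscode),
        "nonamplifiable_altered": p_short * p_miscode,
    }


if __name__ == "__main__":
    classes = template_classes(k_s=1e-4, k_m=1e-5, length=100, n_cg=50, t_c=230)
    for name, fraction in classes.items():
        print(f"{name:>26}: {fraction:.4f}")
```

Sweeping `t_c` or the ratio `k_m / k_s` over a grid and plotting the first two entries gives curves of the kind described in the text.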

Sequence Authentication Methods

We evaluated the efficacy of chromatogram analysis, cloning, and high-throughput sequencing in eliminating damage-derived sequence errors. We estimated the fraction of altered molecules for each nucleotide position depending on the rate of miscoding damage ($k_m$) and the age of the sample ($t_c$). This was done by calculating the probability of at least one transition occurring after time $t_c$. The fraction of the molecules sharing an altered nucleotide at a particular site, $P_d$, is independent of $k_s$ and is given by:
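A form consistent with a constant per-site rate of miscoding damage, and with the times reported in the Results (for example, the altered fraction reaching 0.5 after 6,931 years at a rate of $10^{-4}\ \mathrm{site^{-1}\,year^{-1}}$), would be the following. It is offered as a reconstruction from the surrounding text.

$$P_d = 1 - e^{-k_m \, t_c} \qquad (10)$$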

The relation (10) only applies, however, to C and G sites because miscoding damage is assumed to affect only those nucleotides. All of the alterations in PCR products that are not type 2 transitions are believed to be PCR artefacts (Gilbert et al. 2007; Stiller et al. 2006). Therefore, their number would be independent of the age of the sample and affected instead by PCR conditions and the reagents used. Assuming that C→U deamination is the sole cause of miscoding damage, the use of uracil-sensitive DNA polymerase or treatment with uracil N-glycosylase ideally should reduce the amount of damage-derived miscoding lesions to 0 and leave only the PCR-derived alterations in DNA (Lindahl et al. 1977).

Base determination at each nucleotide position depends on the choice of base-calling algorithm and acceptance threshold. Three possible methods of determining bases are analyzed here: (i) PCR-product direct-sequencing chromatogram analysis; (ii) PCR-product cloning; and (iii) high-throughput sequencing, involving either direct sequencing of a DNA extract or of a PCR product. For each of these, sequence determination depends on the ratio of altered to unaltered molecules at each nucleotide position and on the threshold (the level of inequality in frequencies of different bases at which one accepts the most frequent base as an authentic one).

We model how different thresholds for all three base-calling methods affect the probability of interpreting a miscoding lesion as an authentic (endogenous) nucleotide. The ratios of frequencies of different bases at each site are observed as ratios of peak areas in chromatograms from direct sequencing, although the dependence is nonlinear because of the nature of the chromatography-tracing algorithms (e.g., Ewing et al. 1998). Any attempt to quantify the frequencies of the candidate bases at a nucleotide site requires additional analysis of the trace file.

For cloning and high-throughput sequencing, the proportion of DNA fragments carrying different bases at a particular site is represented by the proportion of all obtained sequences differing from each other at that site. The representation becomes more direct in high-throughput analyses, where the coverage can be high enough to reflect the distribution of variation among DNA fragments (e.g., targeted high-throughput sequencing; Stiller et al. 2009). Low-coverage pyrosequencing and cloning are more strongly affected by stochastic factors because of the smaller number of sequences (usually only a few to ~20 clones are sequenced; Bower et al. 2005). Therefore, we modeled cloning (we include low-coverage high-throughput sequencing in the “cloning” category to distinguish it from high-coverage analyses) as a Bernoulli process to calculate the probability ($P_{a/j}$) of obtaining a certain number of clones ($a$) altered at a chosen C or G site in the pool of all the sequenced clones ($j$), given a frequency $P_d$ of altered nucleotides at the site:
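For a series of independent Bernoulli trials, the probability of a given number of successes is the binomial probability mass function. Written in the notation of the text, and offered as a reconstruction, it reads:

$$P_{a/j} = \binom{j}{a} \, P_d^{\,a} \, (1 - P_d)^{\,j-a} \qquad (11)$$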

$P_{a/j}$ reflects the probability of accepting a damage-derived alteration as an endogenous nucleotide when the acceptance threshold is $a$ of the $j$ sequenced clones, where $a/j \geq 0.5$.

The criterion for deciding on the final sequence, when observing a mixture of different nucleotides at a given position (e.g., among cloned sequences), might not be consistent among published studies and has not been clearly defined by the authentication criteria proposed for aDNA. When heterozygosity is expected in the target locus (as is the case with nuDNA), distinguishing between damage-derived or PCR-introduced nucleotide changes and endogenous polymorphism is a serious challenge. Since this requires a number of additional assumptions about the expected levels and patterns of heterozygosity, we limit our evaluation of sequence authentication methods to non-polymorphic loci such as mtDNA (although heteroplasmy can occur in mtDNA; Li et al. 2010).

The simplest method of deciding on the final sequence is to choose the nucleotide that is more prevalent (i.e., an acceptance threshold of 0.5), but at high levels of DNA damage this approach can increase the error rate in the final sequences. The strictest approach is to reject each sample that produces polymorphic sequences, but this can lead to many samples being discarded. The influence of the scheme used to determine the final sequence was investigated here for various levels of miscoding damage.

One can also choose to bias acceptance toward C when observing a mixture of C and T and toward G when observing a mixture of G and A. However, this would increase the risk of accepting an erroneous sequence when PCR artefacts are prevalent or if an appreciable number of type 1 transitions (T→C and A→G) have occurred.

Bayesian Phylogenetic Analysis of Ancient DNA Data

To investigate the effects of post-mortem damage on real aDNA sequences, we analyzed a range of published data sets. In this approach, we assessed the amount of damage-derived sequence alterations that are present in the final sequences despite the authentication methods that were employed in their generation. We chose to analyze the data sets for which the sampling times of the sequences contain sufficient information for calibrating estimates of rates and timescales. To identify these data sets, we employed a date-randomization approach as used in previous studies (Firth et al. 2010; Ho et al. 2011). Following this preliminary investigation, our present study involves nine mitochondrial DNA alignments comprising dated sequences: Adélie penguin (96 sequences), aurochs (41), bison (158), boar (81), brown bear (36), cave lion (23), elephant seal (223), muskox (114), and tuatara (33). Modern sequences were excluded from all analyses.

The data sets were analyzed using the Bayesian phylogenetic software BEAST 1.6.1 (Drummond and Rambaut 2007). For each data set, the best-fitting model of nucleotide substitution was identified using the Bayesian information criterion. In each analysis, a coalescent prior was specified for the tree, with the demographic model chosen using Bayes factors (Suchard et al. 2001). We limited our model comparison to the constant-size, exponential-growth, and Bayesian skyride population models. Owing to the intraspecific nature of each data set, a strict molecular clock was assumed.

We compared two different phylogenetic models of post-mortem damage. Both models assume that damage occurs exclusively in the form of transitions. It has been suggested that including transversions in these models results in the overestimation of the level of damage, because some of the genetic variation among samples is erroneously treated as post-mortem damage (Ho et al. 2007). First, we used an age-dependent model in which the amount of sequence damage depends on the age of the sample (Rambaut et al. 2009). In this model, the probability that a site is undamaged decays exponentially with rate r. The probability that the nucleotide at the site, S, is j given the observed nucleotide is i is (Rambaut et al. 2009):

Second, we used an age-independent model in which all sequences are assumed to have the same amount of damage (Ho et al. 2007). This model includes a single parameter, δ, which reflects the mean amount of damage per site. All sequences in the data set are assumed to carry the same amount of damage, on average.

The two models of post-mortem damage were compared using Bayes factors. For further comparison, analyses were repeated without a model of post-mortem damage. Posterior distributions of parameters were estimated via Markov chain Monte Carlo (MCMC) sampling, with samples drawn every 5,000 steps over a total of 50,000,000 steps. The first 10% of samples were discarded as burn-in. Acceptable sampling and convergence to the stationary distribution were checked by inspection of samples from the posterior. For two data sets (Adélie penguin and elephant seal), the number of MCMC steps was increased several fold to achieve sufficient sampling. XML-formatted input files for all BEAST analyses are available as Online Resource.

Results

Thermal Age

We analyzed the dependence of thermal age of a sample on its chronological age and the temperature at which the sample was deposited (Fig. 2a). By definition, thermal age equals chronological age if the deposition temperature is 10°C. Thermal age grows exponentially with temperature and is 35-fold greater than the chronological age at 30°C. However, at temperatures below 10°C, the thermal age decreases and the dependence on the chronological age gets weaker (Fig. 2b). For −30°C, the thermal age falls to only $1.4 \times 10^{-4}$ of the chronological age.

Model of DNA Damage and Sequence Analysis

We performed several calculations using the model of DNA damage and sequence analysis (Fig. 3). As $k_m/k_s$ falls below 1, the fraction of unaltered molecules in the pool rises, reaching nearly 1.0 for $k_m/k_s = 0.01$. When $k_m/k_s = 10$, however, there is a rapid drop in the fraction of unaltered molecules.

Fig. 3 Fractions of amplifiable molecules in a sample after time $t_c$ (shaded area), and of unaltered amplifiable molecules for a range of rates of miscoding damage ($k_m$) (lines). Panels a–d show the effect of the rate of shortening damage ($k_s$, $10^{-4}$ and $10^{-5}\ \mathrm{site^{-1}\,year^{-1}}$), targeted PCR-product length (50 and 100 bp), and the ratio of the two types of damage reactions ($k_m/k_s$, 0.01–10) on the number of amplifiable molecules left after time $t_c$ and the proportion of them remaining unaltered by miscoding damage.

At $k_s = 10^{-4}\ \mathrm{site^{-1}\,year^{-1}}$, 90% of the amplifiable 100 and 50 bp molecules are lost after 230 and 460 years, respectively (Fig. 3). When the rate of shortening damage is 10-fold lower ($k_s = 10^{-5}\ \mathrm{site^{-1}\,year^{-1}}$), this loss takes approximately 10 times longer.

Authentication Methods

Although the success of the DNA amplification is highly dependent on $k_s$, it is $k_m$ that determines the quality of the amplified molecules and therefore their potential to yield a correct endogenous sequence (Fig. 4). The fraction of altered nucleotides at each originally C or G site approaches 1 over time, exceeding 0.5 after <7 years for $k_m = 10^{-1}\ \mathrm{site^{-1}\,year^{-1}}$ and after 6,931 years for $k_m = 10^{-4}\ \mathrm{site^{-1}\,year^{-1}}$. For $k_m = 10^{-6}$, which is the order of magnitude observed by Gilbert et al. (2007) in real aDNA samples (the value has been adjusted here because we are assuming that primarily G or C sites are affected), the fraction of the altered base exceeds that of the endogenous base after nearly 700 kyr. It takes over 50 kyr for 0.05 of the bases at an originally G or C site to be altered.
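The times quoted in this paragraph can be checked with a short Python sketch. It assumes that the altered fraction at a C or G site grows as one minus an exponential decay at the miscoding rate, a form that reproduces the reported values; the function names are hypothetical.

```python
import math


def altered_fraction(k_m, t_c):
    """Fraction of molecules altered at a C or G site after t_c years (assumed form)."""
    return 1.0 - math.exp(-k_m * t_c)


def time_to_fraction(k_m, fraction):
    """Years needed for the altered fraction at a site to reach `fraction`."""
    return -math.log(1.0 - fraction) / k_m


if __name__ == "__main__":
    for rate in (1e-1, 1e-4, 1e-6):
        years = time_to_fraction(rate, 0.5)
        print(f"k_m = {rate:g}: altered base becomes the majority after {years:,.0f} years")
    print(f"k_m = 1e-06: 5% altered after {time_to_fraction(1e-6, 0.05):,.0f} years")
```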

For chromatogram analyses, it is difficult to quantify the ratio of candidate nucleotides at each site, but a threshold of 0.5 will mean choosing the more frequent nucleotide (i.e., the higher peak) at each site. For high-throughput sequencing with sufficient coverage, the fraction of observed altered (i.e., varying) nucleotides at a chosen site equals the overall fraction of the molecules sharing an altered nucleotide per site ($P_d$). Therefore, we regard the altered nucleotide as endogenous if $P_d$ exceeds the chosen acceptance threshold.

Typical cloning, however, results in much lower coverage of the analyzed fragment. Consequently, base-calling is more susceptible to stochastic variation. When $P_d = 0.5$, which corresponds to a very high level of damage, and if a base occurring in the majority of sequenced clones is accepted as endogenous, the probability of a lesion-derived nucleotide being accepted is 0.38 for 10 sequenced clones and 0.41 for 20 clones (Fig. 5). The probability is slightly higher here when more clones are sequenced because of the properties of the binomial distribution and the fact that a majority requires ≥ 60% of the clones (i.e., six or more) when 10 clones are sequenced but only ≥ 55% (i.e., 11 or more) when 20 are sequenced. At a lower, but still very high, damage level of $P_d = 0.3$ these probabilities fall to 0.047 and 0.017 for 10 and 20 sequenced clones, respectively. Raising the acceptance threshold reduces the probability of accepting an erroneous sequence but also increases the overall number of bases that are treated as ambiguous (N).
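The acceptance probabilities quoted above can be reproduced with a small Python sketch based on the binomial distribution. It assumes that clones are independent draws and that the altered base is accepted only when it occurs in a strict majority of clones (more than the threshold fraction), which matches the "six or more of 10" rule described in the text; the function names are hypothetical.

```python
from math import comb


def prob_altered_clones(a, j, p_d):
    """Binomial probability of exactly `a` altered clones among `j` sequenced clones."""
    return comb(j, a) * p_d**a * (1.0 - p_d) ** (j - a)


def prob_accept_error(j, p_d, threshold=0.5):
    """Probability that the altered base exceeds `threshold` of the j clones."""
    return sum(
        prob_altered_clones(a, j, p_d)
        for a in range(j + 1)
        if a > threshold * j  # strict majority when threshold = 0.5
    )


if __name__ == "__main__":
    for p_d in (0.5, 0.3):
        for j in (10, 20):
            print(f"P_d = {p_d}, {j} clones: {prob_accept_error(j, p_d):.3f}")
```

Passing a higher `threshold` shows how a stricter acceptance rule lowers the chance of accepting a damage-derived base, at the cost of more sites being left ambiguous.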

Fig. 5 Probabilities of drawing $a$ altered clones among all $j$ (10 or 20) sequenced clones for different values of $P_d$ (fraction of the molecules sharing an altered nucleotide per site). When the acceptance threshold for base calling is lower than $a$, the damage-derived alteration is accepted as an endogenous nucleotide.

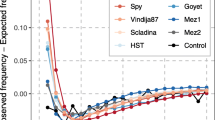

Bayesian Phylogenetic Analyses of aDNA Data

For six of the nine published aDNA data sets, we found no evidence of post-mortem damage present in the final sequences (Table 1). An age-dependent model of damage was supported for the bison and brown bear alignments, whereas an age-independent model of damage was supported for the elephant seal data. For these three data sets, the 95% credibility intervals of the estimates of damage rates excluded a value of zero (Table 2), reflecting consistency with the results of the Bayes factor analysis.

Discussion

In this study, we have developed a theoretical framework for analyzing the effects of post-mortem damage in aDNA sequences. We have found that the level of damage-derived errors in the final sequence data is only weakly related to the chronological age of the sample. This is because of the influence of temperature and other environmental factors, as well as the various measures taken to reduce the impact of post-mortem damage.

It has been suggested that temperature and the age of the sample are the factors with the strongest influence on the level of DNA damage (Smith et al. 2001). The combination of these two factors into “thermal age” seems to be a useful tool for comparing the level of shortening damage in samples of different ages and/or deposition temperatures, provided that other environmental conditions are similar. Smith et al. (2003) suggested that only thermally young material (i.e., <~25 kyr) is likely to yield amplifiable aDNA. The deposition temperature of a sample has a crucial impact on its thermal age, but this relationship declines in importance at very low temperatures. The thermal age of a sample is <10% of its chronological age when the deposition temperature is below ~−1.6°C. In contrast, for samples that have been stored at room temperature in museum collections (~20°C), thermal ages are more than six times the chronological ages. Therefore, at subzero temperatures, the level of depurination (and thus length-reducing damage) is generally comparable across samples regardless of their chronological age. At low temperatures, the predicted level of damage is low, so other environmental factors may contribute to variation in the level of DNA damage.
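The relationship between deposition temperature and thermal age can be illustrated with an Arrhenius-type calculation. The following is a minimal sketch, not the implementation used in this study. It assumes that thermal age is normalized to a reference temperature of 10°C and that depurination has an activation energy of about 127 kJ/mol; both values are assumptions introduced here for illustration, as are the constant and function names.

```python
from math import exp

R_GAS = 8.314462618   # molar gas constant, J mol^-1 K^-1
E_A = 127_000.0       # ASSUMED activation energy of depurination, J mol^-1
T_REF_C = 10.0        # ASSUMED reference temperature for thermal age, deg C


def thermal_age_ratio(temp_c: float) -> float:
    """Ratio of thermal age to chronological age at a constant temperature.

    Uses the Arrhenius relation: the rate at `temp_c` relative to the rate
    at the reference temperature is exp(E_a / R * (1 / T_ref - 1 / T)).
    """
    t_kelvin = temp_c + 273.15
    t_ref_kelvin = T_REF_C + 273.15
    return exp(E_A / R_GAS * (1.0 / t_ref_kelvin - 1.0 / t_kelvin))


if __name__ == "__main__":
    for temp_c in (-1.6, 10.0, 20.0):
        print(f"{temp_c:6.1f} deg C: thermal age / chronological age = "
              f"{thermal_age_ratio(temp_c):.3f}")
```

With these assumed constants, the ratio falls just below 0.1 at −1.6°C and is a little above 6 at 20°C, in line with the figures quoted in the text. A real deposition history would require integrating the rate over a varying temperature record rather than using a single constant value.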

It is also worth noting that the handling and relocation of samples after excavation often lead to (sometimes multiple) changes in temperature, humidity, and other factors influencing damage rates. It has been shown that the post-excavation history of the sample can have a profound impact on DNA preservation (Pruvost et al. 2007). This makes DNA damage predictions for ancient samples even more complex.

Further studies of DNA damage in samples from known deposition conditions will improve our understanding of the parameters governing DNA decay. It is hoped that this will allow the development of tools for predicting the level of DNA preservation in any organic material. Given the difficulty and complexity involved in assessing rates of post-mortem damage, predictions of the level of DNA preservation for any particular sample are currently likely to be unreliable and imprecise. Nevertheless, estimates of damage rates should improve with the publication of larger amounts of data (including deposition conditions). Characterization of the factors influencing both types of damage reaction is essential because it is their ratio that affects the accuracy of the final sequence.

Not surprisingly, we found that the targeted PCR-product size has a major impact on the likelihood of successful amplification. This has been reported in a number of empirical studies (Deagle et al. 2006; Ottoni et al. 2009; Poinar et al. 2003, 2006; Schwarz et al. 2009). In order to maximize the amount of genetic information relative to the cost of the analysis, it is desirable to target long DNA fragments, but this needs to be balanced against the level of DNA preservation. However, with the expanding availability and use of high-throughput direct sequencing and other length-independent methods of analysis (e.g., Brotherton et al. 2010), this issue is declining in importance.
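The dependence of amplification success on target length can be illustrated with a simple random-breakage calculation. This is a hedged sketch rather than the model used in this study: it assumes that strand breaks occur independently at each position with the same per-site probability, so that the fraction of templates spanning a target intact declines geometrically with target length. The function name and the per-site break probability used in the example are hypothetical.

```python
def intact_fraction(length_bp: int, break_prob: float) -> float:
    """Fraction of templates with no break across `length_bp` positions.

    Assumes independent breaks with the same per-site probability
    `break_prob` (an illustrative parameter, not an estimate from data).
    """
    return (1.0 - break_prob) ** length_bp


if __name__ == "__main__":
    break_prob = 0.01  # hypothetical per-site break probability
    for length_bp in (50, 100, 200, 400):
        frac = intact_fraction(length_bp, break_prob)
        print(f"{length_bp:4d} bp target: {frac:.4f} of templates intact")
```

Under this assumption, doubling the target length squares the intact fraction, which is why short amplicons are favoured for poorly preserved material.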

At high levels of damage, all of the authentication methods are likely to fail to identify miscoding lesions. For this purpose, cloning does not seem to be more effective than rigorous chromatogram analysis, although it remains useful for detecting contamination (e.g., Krings et al. 1997).

Sequence determination becomes more complicated once we allow the sequences to exhibit endogenous variability, for example, allelic variation at nuclear loci. Most of the published aDNA studies have involved mitochondrial sequences, so endogenous variation has not been a major issue (except for possible heteroplasmy events; Millar et al. 2008). However, sequences at nuclear loci can differ between the two chromosomes of a homologous pair, so a mixture of nucleotides at a given site would not necessarily indicate the presence of DNA damage. Variation within the studied organism, either in the form of allelic differences or heteroplasmy, can be very difficult to distinguish from damage-derived lesions (Briggs et al. 2010).

When levels of DNA damage are very high, one would observe inconsistencies (e.g., among clones) and/or low quality sequences (observed in direct-sequencing chromatograms). In such instances, it is likely that the data yielded by the sample would be regarded as unreliable and would be discarded from the analysis.

Repeated amplification and sequencing, which are considered to be standard aDNA authentication criteria, represent the fundamental steps for detecting miscoding lesions. Although the same sequencing artefacts can occur in each approach, sequencing both DNA strands seems to eliminate this issue (Brandstätter et al. 2005). A moderate level of miscoding lesions should be detected by any of the existing authentication methods. In addition, uracil N-glycosylase treatment, which has become a routine step in aDNA analyses (Hofreiter et al. 2001a), or the use of uracil-sensitive DNA polymerase (Rasmussen et al. 2010) significantly decreases the level of damage-derived sequence errors.

Our analyses of published aDNA data using statistical models of post-mortem damage yielded a mixture of results. For most data sets, the models were unable to detect the presence of post-mortem damage in the published sequences, suggesting that the methods employed to eliminate damage in those studies were effective. Damage was found to be age-independent for one data set, elephant seal, which is somewhat unexpected given that the samples were all collected from the Victoria Land coast of Antarctica. Two data sets, steppe bison and brown bear, yielded evidence of age-dependent DNA damage. The signal of age dependence was detected despite variation in the conditions of sample deposition and despite the fact that the material derives predominantly from museum collections. The results from this analysis are not easily explained by the range of sampling locations and putative deposition conditions, indicating the complexity of factors affecting patterns of DNA damage in published sequences. Overall, our results highlight the importance of comparing different models of post-mortem damage for aDNA data because accounting for DNA damage can play an important role in phylogenetic and population-genetic analyses. Our investigation also suggests that chronological age is not always the best predictor of the level of damage.

Conclusion

Long-term DNA decay is an exponential process during which molecules are both fragmented and chemically altered. The factors governing these two types of damage are very difficult to characterize, especially for the entire duration of deposition. Therefore, an accurate prediction of the level of DNA decay is not possible with the present state of knowledge. Further work is needed to determine the values of some of the parameters in the model presented here. Primary data from high-throughput sequencing projects might prove to be particularly informative in this regard, but deposition conditions would still need to be known in detail.

Our study indicates that low to moderate levels of post-mortem damage do not typically produce errors in final sequences. Authentication measures such as replication of sequencing results and elimination of uracil residues will also serve to mitigate the impact of post-mortem damage. However, when samples are highly degraded, the impact of post-mortem damage is not trivial and the analysis needs to be approached with considerable caution.

By using new techniques and continuing to adopt a cautious approach to sequence analysis, aDNA studies have excellent potential to answer many interesting questions about the recent past. As our understanding of DNA decay processes improves, we will be able to increase our chances of recovering reliable genetic data from ancient samples.

References

Axelsson E, Willerslev E, Gilbert MTP, Nielsen R (2008) The effect of ancient DNA damage on inferences of demographic histories. Mol Biol Evol 25:2181–2187

Barnes I, Matheus P, Shapiro B, Jensen D, Cooper A (2002) Dynamics of Pleistocene population extinctions in Beringian brown bears. Science 295:2267–2270

Barnett R, Shapiro B, Barnes I, Ho SYW, Burger J, Yamaguchi N, Higham TF, Wheeler HT, Rosendahl W, Sher AV, Sotnikova M, Kuznetsova T, Baryshnikov GF, Martin LD, Harington CR, Burns JA, Cooper A (2009) Phylogeography of lions (Panthera leo ssp.) reveals three distinct taxa and a late Pleistocene reduction in genetic diversity. Mol Ecol 18:1668–1677

Bower MA, Spencer M, Matsumura S, Nisbet RE, Howe CJ (2005) How many clones need to be sequenced from a single forensic or ancient DNA sample in order to determine a reliable consensus sequence? Nucleic Acids Res 33:2549–2556

Brandstätter A, Sänger T, Lutz-Bonengel S, Parson W, Béraud-Colomb E, Wen B, Kong QP, Bravi CM, Bandelt HJ (2005) Phantom mutation hotspots in human mitochondrial DNA. Electrophoresis 26:3414–3429

Briggs AW, Stenzel U, Johnson PL, Green RE, Kelso J, Prüfer K, Meyer M, Krause J, Ronan MT, Lachmann M, Pääbo S (2007) Patterns of damage in genomic DNA sequences from a Neandertal. Proc Natl Acad Sci USA 104:14616–14621

Briggs AW, Stenzel U, Meyer M, Krause J, Kircher M, Pääbo S (2010) Removal of deaminated cytosines and detection of in vivo methylation in ancient DNA. Nucleic Acids Res 38:e87

Brotherton P, Endicott P, Sanchez JJ, Beaumont M, Barnett R, Austin J, Cooper A (2007) Novel high-resolution characterization of ancient DNA reveals C > U-type base modification events as the sole cause of post mortem miscoding lesions. Nucleic Acids Res 35:5717–5728

Brotherton P, Sanchez JJ, Cooper A, Endicott P (2010) Preferential access to genetic information from endogenous hominin ancient DNA and accurate quantitative SNP-typing via SPEX. Nucleic Acids Res 38:e7

Campos PF, Willerslev E, Sher A, Orlando L, Axelsson E, Tikhonov A, Aaris-Sorensen K, Greenwood AD, Kahlke RD, Kosintsev P, Krakhmalnaya T, Kuznetsova T, Lemey P, MacPhee R, Norris CA, Shepherd K, Suchard MA, Zazula GD, Shapiro B, Gilbert MTP (2010) Ancient DNA analyses exclude humans as the driving force behind late Pleistocene musk ox (Ovibos moschatus) population dynamics. Proc Natl Acad Sci USA 107:5675–5680

Clark AG, Whittam TS (1992) Sequencing errors and molecular evolutionary analysis. Mol Biol Evol 9:744–752

Cooper A, Poinar HN (2000) Ancient DNA: do it right or not at all. Science 289:1139

Darzynkiewicz Z, Juan G, Li X, Gorczyca W, Murakami T, Traganos F (1997) Cytometry in cell necrobiology: analysis of apoptosis and accidental cell death (necrosis). Cytometry 27:1–20

de Bruyn M, Hall BL, Chauke LF, Baroni C, Koch PL, Hoelzel AR (2009) Rapid response of a marine mammal species to Holocene climate and habitat change. PLoS Genet 5:e1000554

Deagle BE, Eveson JP, Jarman SN (2006) Quantification of damage in DNA recovered from highly degraded samples—a case study on DNA in faeces. Front Zool 3:11

Drummond AJ, Rambaut A (2007) BEAST: Bayesian evolutionary analysis by sampling trees. BMC Evol Biol 7:214

Edwards CJ, Bollongino R, Scheu A, Chamberlain A, Tresset A, Vigne JD, Baird JF, Larson G, Ho SYW, Heupink TH, Shapiro B, Freeman AR, Thomas MG, Arbogast RM, Arndt B, Bartosiewicz L, Benecke N, Budja M, Chaix L, Choyke AM, Coqueugniot E, Dohle HJ, Goldner H, Hartz S, Helmer D, Herzig B, Hongo H, Mashkour M, Ozdogan M, Pucher E, Roth G, Schade-Lindig S, Schmolcke U, Schulting RJ, Stephan E, Uerpmann HP, Voros I, Voytek B, Bradley DG, Burger J (2007) Mitochondrial DNA analysis shows a Near Eastern Neolithic origin for domestic cattle and no indication of domestication of European aurochs. Proc Biol Sci 274:1377–1385

Eglinton G, Logan GA (1991) Molecular preservation. Philos Trans R Soc Lond B 333:315–327

Ewing B, Hillier L, Wendl MC, Green P (1998) Base-calling of automated sequencer traces using phred. I. Accuracy assessment. Genome Res 8:175–185

Firth C, Kitchen A, Shapiro B, Suchard MA, Holmes EC, Rambaut A (2010) Using time-structured data to estimate evolutionary rates of double-stranded DNA viruses. Mol Biol Evol 27:2038–2051

Fogg MJ, Pearl LH, Connolly BA (2002) Structural basis for uracil recognition by archaeal family B DNA polymerases. Nat Struct Biol 9:922–927

Frederico LA, Kunkel TA, Shaw BR (1990) A sensitive genetic assay for the detection of cytosine deamination: determination of rate constants and the activation energy. Biochemistry 29:2532–2537

Gilbert MTP, Hansen AJ, Willerslev E, Rudbeck L, Barnes I, Lynnerup N, Cooper A (2003) Characterization of genetic miscoding lesions caused by postmortem damage. Am J Hum Genet 72:48–61

Gilbert MTP, Binladen J, Miller W, Wiuf C, Willerslev E, Poinar HN, Carlson JE, Leebens-Mack JH, Schuster SC (2007) Recharacterization of ancient DNA miscoding lesions: insights in the era of sequencing-by-synthesis. Nucleic Acids Res 35:1–10

Ginolhac A, Rasmussen M, Gilbert MTP, Willerslev E, Orlando L (2011) mapDamage: testing for damage patterns in ancient DNA sequences. Bioinformatics 27:2153–2155

Greenberg MM (2005) DNA interstrand cross-links from modified nucleotides: mechanism and application. Nucleic Acids Symp Ser (Oxf):57–58

Hansen A, Willerslev E, Wiuf C, Mourier T, Arctander P (2001) Statistical evidence for miscoding lesions in ancient DNA templates. Mol Biol Evol 18:262–265

Hansen AJ, Mitchell DL, Wiuf C, Paniker L, Brand TB, Binladen J, Gilichinsky DA, Rønn R, Willerslev E (2006) Crosslinks rather than strand breaks determine access to ancient DNA sequences from frozen sediments. Genetics 173:1175–1179

Hay JM, Subramanian S, Millar CD, Mohandesan E, Lambert DM (2008) Rapid molecular evolution in a living fossil. Trends Genet 24:106–109

Helgason A, Palsson S, Lalueza-Fox C, Ghosh S, Sigurdardottir S, Baker A, Hrafnkelsson B, Arnadottir L, Thorsteinsdottir U, Stefansson K (2007) A statistical approach to identify ancient template DNA. J Mol Evol 65:92–102

Heyn P, Stenzel U, Briggs AW, Kircher M, Hofreiter M, Meyer M (2010) Road blocks on paleogenomes—polymerase extension profiling reveals the frequency of blocking lesions in ancient DNA. Nucleic Acids Res 38:e161

Ho SYW, Heupink TH, Rambaut A, Shapiro B (2007) Bayesian estimation of sequence damage in ancient DNA. Mol Biol Evol 24:1416–1422

Ho SYW, Lanfear R, Phillips MJ, Barnes I, Thomas JA, Kolokotronis SO, Shapiro B (2011) Bayesian estimation of substitution rates from ancient DNA sequences with low information content. Syst Biol 60:366–375

Hofreiter M, Jaenicke V, Serre D, von Haeseler A, Pääbo S (2001a) DNA sequences from multiple amplifications reveal artifacts induced by cytosine deamination in ancient DNA. Nucleic Acids Res 29:4793–4799

Hofreiter M, Serre D, Poinar HN, Kuch M, Pääbo S (2001b) Ancient DNA. Nat Rev Genet 2:353–359

Krings M, Stone A, Schmitz RW, Krainitzki H, Stoneking M, Pääbo S (1997) Neandertal DNA sequences and the origin of modern humans. Cell 90:19–30

Lambert DM, Ritchie PA, Millar CD, Holland B, Drummond AJ, Baroni C (2002) Rates of evolution in ancient DNA from Adelie penguins. Science 295:2270–2273

Li M, Schonberg A, Schaefer M, Schroeder R, Nasidze I, Stoneking M (2010) Detecting heteroplasmy from high-throughput sequencing of complete human mitochondrial DNA genomes. Am J Hum Genet 87:237–249

Lindahl T (1993) Instability and decay of the primary structure of DNA. Nature 362:709–715

Lindahl T, Andersson A (1972) Rate of chain breakage at apurinic sites in double-stranded deoxyribonucleic acid. Biochemistry 11:3618–3623

Lindahl T, Nyberg B (1972) Rate of depurination of native deoxyribonucleic acid. Biochemistry 11:3610–3618

Lindahl T, Nyberg B (1974) Heat-induced deamination of cytosine residues in deoxyribonucleic acid. Biochemistry 13:3405–3410

Lindahl T, Ljungquist S, Siegert W, Nyberg B, Sperens B (1977) DNA N-glycosidases: properties of uracil-DNA glycosidase from Escherichia coli. J Biol Chem 252:3286–3294

Mateiu LM, Rannala BH (2008) Bayesian inference of errors in ancient DNA caused by postmortem degradation. Mol Biol Evol 25:1503–1511

Millar CD, Dodd A, Anderson J, Gibb GC, Ritchie PA, Baroni C, Woodhams MD, Hendy MD, Lambert DM (2008) Mutation and evolutionary rates in Adélie penguins from the Antarctic. PLoS Genet 4:e1000209

Ottoni C, Koon HE, Collins MJ, Penkman KE, Rickards O, Craig OE (2009) Preservation of ancient DNA in thermally damaged archaeological bone. Naturwissenschaften 96:267–278

Pääbo S (1989) Ancient DNA: extraction, characterization, molecular cloning, and enzymatic amplification. Proc Natl Acad Sci USA 86:1939–1943

Pääbo S, Poinar HN, Serre D, Jaenicke-Despres V, Hebler J, Rohland N, Kuch M, Krause J, Vigilant L, Hofreiter M (2004) Genetic analyses from ancient DNA. Annu Rev Genet 38:645–679

Poinar HN, Hoss M, Bada JL, Pääbo S (1996) Amino acid racemization and the preservation of ancient DNA. Science 272:864–866

Poinar HN, Hofreiter M, Spaulding WG, Martin PS, Stankiewicz BA, Bland H, Evershed RP, Possnert G, Pääbo S (1998) Molecular coproscopy: dung and diet of the extinct ground sloth Nothrotheriops shastensis. Science 281:402–406

Poinar HN, Kuch M, McDonald G, Martin P, Pääbo S (2003) Nuclear gene sequences from a late Pleistocene sloth coprolite. Curr Biol 13:1150–1152

Poinar HN, Schwarz C, Qi J, Shapiro B, Macphee RD, Buigues B, Tikhonov A, Huson DH, Tomsho LP, Auch A, Rampp M, Miller W, Schuster SC (2006) Metagenomics to paleogenomics: large-scale sequencing of mammoth DNA. Science 311:392–394

Pruvost M, Schwarz R, Correia VB, Champlot S, Braguier S, Morel N, Fernandez-Jalvo Y, Grange T, Geigl EM (2007) Freshly excavated fossil bones are best for amplification of ancient DNA. Proc Natl Acad Sci USA 104:739–744

Rambaut A, Ho SYW, Drummond AJ, Shapiro B (2009) Accommodating the effect of ancient DNA damage on inferences of demographic histories. Mol Biol Evol 26:245–248

Rasmussen M, Li Y, Lindgreen S, Pedersen JS, Albrechtsen A, Moltke I, Metspalu M, Metspalu E, Kivisild T, Gupta R, Bertalan M, Nielsen K, Gilbert MTP, Wang Y, Raghavan M, Campos PF, Kamp HM, Wilson AS, Gledhill A, Tridico S, Bunce M, Lorenzen ED, Binladen J, Guo X, Zhao J, Zhang X, Zhang H, Li Z, Chen M, Orlando L, Kristiansen K, Bak M, Tommerup N, Bendixen C, Pierre TL, Grønnow B, Meldgaard M, Andreasen C, Fedorova SA, Osipova LP, Higham TF, Ramsey CB, Hansen TV, Nielsen FC, Crawford MH, Brunak S, Sicheritz-Pontén T, Villems R, Nielsen R, Krogh A, Wang J, Willerslev E (2010) Ancient human genome sequence of an extinct Palaeo-Eskimo. Nature 463:757–762

Reynolds TM (1965) Chemistry of nonenzymic browning. II. Adv Food Res 14:167–283

Rohland N, Hofreiter M (2007) Comparison and optimization of ancient DNA extraction. Biotechniques 42:343–352

Schwarz C, Debruyne R, Kuch M, McNally E, Schwarcz H, Aubrey AD, Bada J, Poinar HN (2009) New insights from old bones: DNA preservation and degradation in permafrost preserved mammoth remains. Nucleic Acids Res 37:3215–3229

Shapiro B, Drummond AJ, Rambaut A, Wilson MC, Matheus PE, Sher AV, Pybus OG, Gilbert MTP, Barnes I, Binladen J, Willerslev E, Hansen AJ, Baryshnikov GF, Burns JA, Davydov S, Driver JC, Froese DG, Harington CR, Keddie G, Kosintsev P, Kunz ML, Martin LD, Stephenson RO, Storer J, Tedford R, Zimov S, Cooper A (2004) Rise and fall of the Beringian steppe bison. Science 306:1561–1565

Shen JC, Rideout WM III, Jones PA (1994) The rate of hydrolytic deamination of 5-methylcytosine in double-stranded DNA. Nucleic Acids Res 22:972–976

Smith CI, Chamberlain AT, Riley MS, Cooper A, Stringer CB, Collins MJ (2001) Neanderthal DNA. Not just old but old and cold? Nature 410:771–772

Smith CI, Chamberlain AT, Riley MS, Stringer C, Collins MJ (2003) The thermal history of human fossils and the likelihood of successful DNA amplification. J Hum Evol 45:203–217

Stiller M, Green RE, Ronan M, Simons JF, Du L, He W, Egholm M, Rothberg JM, Keates SG, Ovodov ND, Antipina EE, Baryshnikov GF, Kuzmin YV, Vasilevski AA, Wuenschell GE, Termini J, Hofreiter M, Jaenicke-Despres V, Pääbo S (2006) Patterns of nucleotide misincorporations during enzymatic amplification and direct large-scale sequencing of ancient DNA. Proc Natl Acad Sci USA 103:13578–13584

Stiller M, Knapp M, Stenzel U, Hofreiter M, Meyer M (2009) Direct multiplex sequencing (DMPS)—a novel method for targeted high-throughput sequencing of ancient and highly degraded DNA. Genome Res 19:1843–1848

Suchard MA, Weiss RE, Sinsheimer JS (2001) Bayesian selection of continuous-time Markov chain evolutionary models. Mol Biol Evol 18S:1001–1013

Vasan S, Zhang X, Kapurniotu A, Bernhagen J, Teichberg S, Basgen J, Wagle D, Shih D, Terlecky I, Bucala R, Cerami A, Egan J, Ulrich P (1996) An agent cleaving glucose-derived protein crosslinks in vitro and in vivo. Nature 382:275–278

Vass AA (2001) Beyond the grave—understanding human decomposition. Microbiol Today 28:190–193

Watanobe T, Ishiguro N, Okumura N, Nakano M, Matsui A, Hongo H, Ushiro H (2001) Ancient mitochondrial DNA reveals the origin of Sus scrofa from Rebun Island, Japan. J Mol Evol 52:281–289

Willerslev E, Cooper A (2005) Ancient DNA. Proc Biol Sci 272:3–16

Acknowledgments

The authors thank anonymous reviewers for constructive comments that led to improvements in the manuscript. M.M. is funded by a University of Sydney International Research Scholarship. S.Y.W.H. is funded by the Australian Research Council and by a start-up grant from the University of Sydney.

Molak, M., Ho, S.Y.W. Evaluating the Impact of Post-Mortem Damage in Ancient DNA: A Theoretical Approach. J Mol Evol 73, 244–255 (2011). https://doi.org/10.1007/s00239-011-9474-z
