Abstract

The distribution of n-tuplet frequencies is shown to correlate strongly with functionality when a genomic sequence is examined in a reading-frame specific manner. The approach described herein applies a coarse-graining procedure, based on a simple summation of suitable triplet occurrence measures, which reveals aspects of triplet usage that are related to protein coding while remaining species independent. These measures are ratios of simple frequencies to suitable mononucleotide-frequency products promoting the incidence of the RNY motif, which is preferred in the most widely used codons. A significant distinction between coding and noncoding sequences is achieved.

Introduction

Data from completed and ongoing genome projects are rapidly accumulating. As a result, efficient tools for analyzing specific properties of the newly found sequences are becoming imperative. Methods formulated for this task fall mainly into two categories (Fickett 1996). “Signal” methods make use of nucleotide strings that are highly correlated with specific functions of the genomic material, such as splice junctions, consensus sequence elements of promoter regions, etc. “Content” methods, on the other hand, are based on statistical features that contrast the coding genetic text with the noncoding text, ranging from oligonucleotide frequencies up to higher-order statistical properties (Fickett and Tung 1992; Rogic et al. 2001).

A key aspect of the study of the genomic text is the length scale at which the sequences are examined. A DNA primary structure can be studied at several length scales, each providing a “filter” through which specific statistical attributes of the sequence are revealed. In this work we focus on the short scale, the one lying below 10 nucleotides, which is immediately affected by the grammar and syntax of the genetic code.

When examining this particular length scale, studies of the patterns of oligonucleotide (n-tuplet) occurrences are of critical importance. Deviations from randomness in the n-tuplet occurrence have been extensively studied using several methodological approaches (Burge et al. 1992; Gutierrez et al. 1993; Karlin and Ladunga 1994). The observed patterns have been directly visualized through approaches like the Chaos Game Representation (Jeffrey 1990) and “genomic portraits” (Hao 2000a, b). In general, the observed patterns have been found to be species or taxonomic group specific, allowing the derivation of evolutionary trees similar to those obtained using other approaches (Karlin et al. 1994; Karlin and Mrazek 1997). In contrast, in most cases the nucleotide n-tuplet occurrences are not clearly correlated with the functional role of the examined sequences (Burge et al. 1992; Karlin and Burge 1995; Nussinov 1981). Whenever statistical differences are found between coding and noncoding segments, they are too weak or are superimposed on coexisting species-specific patterns, and therefore cannot be used in tools for the detection of new protein-coding regions.

Three-nucleotide-long words are of particular interest because of the triplet nature of the genetic code. The use of triplets is essential in the case of coding sequences. Optimization of the frequencies of trinucleotides in order to achieve high expression fidelity and speed is a common strategy, used by a wide range of organisms, known as codon usage (Bulmer 1991; Sharp and Li 1986). In addition, codon usage patterns are widely used in phylogenetic studies, as well as in attempts to estimate the expression rate of a given gene. The nonrandom usage of codons is therefore considered a strong indication of the coding potential of a sequence, and in most cases it is also correlated with its expression rate. A number of studies have pointed out the need to account for background nucleotide composition when studying codon usage (Akashi and Eyre-Walker 1998; Akashi et al. 1998; Kliman and Eyre-Walker 1998; Marais et al. 2001; Novembre 2002; Urrutia and Hurst 2001). This is especially important in the case of higher eukaryotic genomes, where nucleotide composition is subject to large intragenomic fluctuations (Bernardi 1989; Bernardi 1993).

The approach described in the present work is based on a reading-frame specific counting of frequencies of triplet occurrences, which are then normalized over a suitable mononucleotide-frequency product promoting the incidence of the RNY motif (purine, any nucleotide, pyrimidine). This division ascribes a statistical weight to the value of each observed frequency of occurrence. The final quantity is then obtained by a simple summation of measures of n-tuplet frequencies, which constitutes a coarse-graining procedure. A suppression of species-specific features in the triplet distribution is achieved, thus revealing characteristics of the sequence related to its coding role. The resulting quantity is therefore expected to distinguish systematically between coding and noncoding sequences.
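As an illustration of the reading-frame specific counting step, the following minimal Python sketch computes the 64 triplet frequencies in one reading frame and divides each by a product of mononucleotide frequencies. The function names are hypothetical, and the plain observed/expected ratio used here is only a stand-in assumption: the specific mononucleotide-frequency products that promote the RNY motif are defined in the Method section and are not reproduced in this sketch.

```python
from collections import Counter
from itertools import product

BASES = "ACGT"
CODONS = ["".join(p) for p in product(BASES, repeat=3)]


def frame_triplet_frequencies(seq, frame):
    """Relative frequencies of the 64 triplets read in one reading frame (0, 1, or 2)."""
    seq = seq.upper()
    # Non-overlapping triplets starting at offset `frame`
    triplets = [seq[i:i + 3] for i in range(frame, len(seq) - 2, 3)]
    # Skip triplets containing ambiguous symbols such as N
    triplets = [t for t in triplets if set(t) <= set(BASES)]
    counts = Counter(triplets)
    total = sum(counts.values())
    return {c: (counts[c] / total if total else 0.0) for c in CODONS}


def mononucleotide_frequencies(seq):
    """Relative frequencies of A, C, G, and T over the whole sequence."""
    counts = Counter(b for b in seq.upper() if b in BASES)
    total = sum(counts.values())
    return {b: (counts[b] / total if total else 0.0) for b in BASES}


def observed_over_expected(seq, frame):
    """Assumed stand-in normalization: triplet frequency divided by the
    product of the overall frequencies of its three nucleotides."""
    f3 = frame_triplet_frequencies(seq, frame)
    f1 = mononucleotide_frequencies(seq)
    ratios = {}
    for c in CODONS:
        expected = f1[c[0]] * f1[c[1]] * f1[c[2]]
        ratios[c] = f3[c] / expected if expected > 0 else 0.0
    return ratios


if __name__ == "__main__":
    example = "ATGGCCATG"  # toy sequence, for illustration only
    for frame in range(3):
        ratios = observed_over_expected(example, frame)
        print(frame, {c: round(r, 2) for c, r in ratios.items() if r > 0})
```

Running the three frames separately mirrors the reading-frame specific context of the approach: the same sequence yields three different triplet distributions, one per frame.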

Sequences and Data Handling

Collections of sequences of known origin, functionality, and mean length were downloaded from the EMBL database using the SRS7 retrieval system in the following way.

Large species-specific collections, each including all sequences of a given origin and functionality and within a given length range, were initially formed. From each of those raw collections, 500 randomly chosen entries were retrieved, resulting in collections with minimized redundancy, which were the final objects of our analysis.

Collections of mixed origin were also formed using the EMBL database. In this case the interest focused on sequence representation from more general categories, namely, higher eukaryotes, viruses, and organelles. The eukaryotic collections consisting of coding sequences (CDS) and intronic sequences, with mean length ∼4000 nucleotides (∼4 knt), were completely cleaned for redundancy, thus constituting the most reliable reference set. Nevertheless, sequence collections of lower mean lengths, not checked for redundancy, behaved in all cases in a manner similar to that of the nonredundant set, a strong indication of the very slight impact that sequence redundancy has on the obtained results.

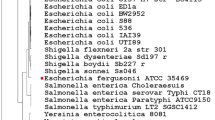

Prokaryotic and yeast coding and noncoding sequence collections were obtained from 30 complete representative eubacterial genomes and the 16 chromosomes of S. cerevisiae, respectively, as retrieved from GenBank.

To quantify how well algorithms based on quantities measuring “coding potential” separate sets of coding and noncoding sequences of known functionality, we proceed as follows.

With the two sets of “test” sequences represented by two distribution curves of the given quantity (Q), we first determine numerically an optimal threshold value Q_thr, which divides the Q-value space into two separate regions, one hosting mainly coding and the other mainly noncoding sequences. Accordingly, the “test” sequence sets are divided into four subpopulations: true and false coding and noncoding sequences (TC, FC, TN, and FN, respectively). We then define the “classification rate” as the ratio (TC+TN)/(TC+TN+FC+FN), expressed as a percentage. Note that the collections to be compared were always chosen to contain sequences of equal mean length and originating from the same species or species group.
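The classification rate can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the text does not specify how the optimal threshold is found numerically, so the hypothetical `best_threshold` function below simply scans midpoints between observed Q values and keeps the one that maximizes the classification rate, trying both orientations (coding sequences above or below the threshold). Because every sequence falls into exactly one of the four subpopulations, the denominator TC+TN+FC+FN equals the total number of test sequences.

```python
def classification_rate(coding_q, noncoding_q, threshold, coding_above=True):
    """Percentage (TC + TN) / (TC + TN + FC + FN) for a given threshold."""
    if coding_above:
        tc = sum(q > threshold for q in coding_q)        # true coding
        tn = sum(q <= threshold for q in noncoding_q)    # true noncoding
    else:
        tc = sum(q < threshold for q in coding_q)
        tn = sum(q >= threshold for q in noncoding_q)
    total = len(coding_q) + len(noncoding_q)             # TC + TN + FC + FN
    return 100.0 * (tc + tn) / total


def best_threshold(coding_q, noncoding_q):
    """Assumed search strategy: scan midpoints between sorted Q values and
    return (rate, threshold, coding_above) with the highest rate."""
    values = sorted(set(coding_q) | set(noncoding_q))
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])] or values
    best = None
    for thr in candidates:
        for above in (True, False):
            rate = classification_rate(coding_q, noncoding_q, thr, above)
            if best is None or rate > best[0]:
                best = (rate, thr, above)
    return best


if __name__ == "__main__":
    # Toy Q values, invented for illustration only
    coding = [0.8, 0.9, 1.1, 0.4]
    noncoding = [0.1, 0.2, 0.3, 0.85]
    rate, thr, above = best_threshold(coding, noncoding)
    print(f"rate={rate:.1f}%  Q_thr={thr:.3f}  coding_above={above}")
```

With real data, the two input lists would hold the Q values of the coding and noncoding test collections of equal mean length from the same species or species group.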

The Codon Occurrence Measure

Method

The algorithm used for the computation of Codon Occurrence Measure (COM) is described below.

(A) The whole sequence is read in a reading frame (RF)-specific context, calculating the 64 trinucleotide measures of occurrence in various ways, which are described later. Three different values of what we call the codon occurrence measure (COM) are obtained, by summing up the calculated measures of occurrence for each of the three reading frames individually:

$$ <