Abstract

Action affordances can be activated by non-target objects in the visual field as well as by word labels attached to target objects. These activations have been manifested in interference effects of distractors and words on actions. We examined whether affordances could be activated implicitly by words representing graspable objects that were either large (e.g., APPLE) or small (e.g., GRAPE) relative to the target. Subjects first read a word and then grasped a wooden block. Interference effects of the words arose in the early portions of the grasping movements. Specifically, early in the movement, reading a word representing a large object led to a larger grip aperture than reading a word representing a small object. This difference diminished as the hand approached the target, suggesting on-line correction of the semantic effect. The semantic effect and its on-line correction are discussed in the context of ecological theories of visual perception, the distinction between movement planning and control, and the proximity of language and motor planning systems in the human brain.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

In his ecological theory of visual perception, Gibson (1979) posited that a major purpose of visual perception was to categorize the affordances of objects comprising the visual scene (cf. Arbib 1997; Jeannerod 1994). According to this view, the visual perception of objects tends to activate motor tendencies relevant to their use. A prediction of this view is that action affordances provided by irrelevant, non-target objects will activate motor tendencies that could interfere with actions directed toward target objects.

To date, available evidence seems consistent with this idea (e.g., Castiello 1996, 1998; Gentilucci and Gangitano 1998; Gentilucci et al. 2000; Gentilucci 2002; Glover and Dixon 2002a). For example, the affordances offered by a distractor object placed several centimeters from a target object can affect the grasping movement directed to the target (Castiello 1996, 1998). Similarly, a word printed on an object can affect the movement directed towards that object. Thus, for Italian subjects, the word “GRANDE” (“large”) leads to a larger maximum grip aperture than the word “PICCOLO” (“small”) (Gentilucci and Gangitano 1998).

An interesting aspect of these interference effects is that they do not appear to have a noticeable impact on the ultimate success of the action. That is, even though non-target affordances interfere with the kinematics of movements made to target objects, subjects retain the ability to grasp objects successfully. This outcome suggests that a correction mechanism is brought into play during performance.

We suggest that the apparent discrepancies between interference effects and successful action execution can be explained by a distinction between the planning of actions and their on-line control (Glover 2002, in press, 2003; Glover and Dixon 2001a, 2002a). According to this “planning-control” distinction, the planning system uses a visual representation that is susceptible to interference from cognitive and perceptual variables, leading to large and systematic errors in action planning. Conversely, the control system uses a quickly updated representation of the target that is focused on the visuospatial properties of the target itself, independent of other cognitive and perceptual variables, and is able to correct for the influences of these variables in flight. In line with this reasoning, both visual illusions (Glover and Dixon 2001a, 2001b, 2002b) and words (Glover and Dixon 2002a) have been shown to have large effects on early phases of reaching and grasping movements. However, these effects have been found to decrease and approach a value of zero by the time movements end.

In the present study we built on this past research by using words that only implicitly relate to target size. As the implicit/explicit distinction has been an important one in studies of brain and behavior, for example in learning (e.g., Schendan et al. 2003), perception (e.g., Le et al. 2003), and language (Buchanan et al. 2003), our purposes here were twofold. First, we wished to observe whether words that only implicitly related to size (e.g., “APPLE”, a prototypically large object, versus “GRAPE”, a prototypically small object) nevertheless were able to activate motor tendencies related to that feature. Second, we wished to address the concern that the explicit words used in past studies (e.g., “LARGE” versus “SMALL”, “NEAR versus “FAR”; Gentilucci et al. 2000; Glover and Dixon 2002a) induced experimenter demand effects. That is, although the researchers’ interest in those studies was clearly in showing word effects that occurred outside of subjects’ awareness, it remained a possibility that subjects reacted explicitly to the words. We predicted that action affordances would be activated through implicitly suggested size, and we predicted, in line with the planning-control model, that these effects would be largest early in movement and smallest when movements ended.

Materials and methods

Participants

Twelve Pennsylvania State University undergraduates with normal or corrected-to-normal vision participated in Experiment 1 in return for course credit. All participants reported being physically fit, and all were right-handed as measured by a modified version of the Oldfield Inventory (Oldfield 1971). All were naive as to the exact purpose of the experiment.

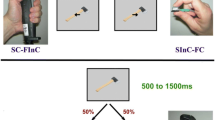

Apparatus

The study was conducted in a dimly-lit room. Subjects sat at the long side of a 91.4 cm by 182.8 cm rectangular table. A piece of 91.4 by 60.7 cm rectangular black construction paper was secured to the table with tape, centered with one of its narrow ends perpendicular to the subject. A 1 cm spherical knob was fastened onto the paper approximately 20 cm from the subject’s midline. This knob was marked the hand’s starting position. The wooden target blocks were rectangular, painted white, with 2 cm by 2 cm ends, and lengths of either 4, 5, or 6 cm. These were placed with their ends facing the subjects at a distance of 24 cm and an angle of 22.5 degrees to the right of midline. The positions of the blocks made it easy for subjects to grasp the blocks’ ends comfortably.

Participants sat facing a 61 cm television monitor at a distance of approximately 1 m. The monitor was used to present the word in the center of the monitor in size 108 Geneva font. The overall width of the words ranged from 6.2 cm to 17.4 cm depending on the length of individual words, and each letter was approximately 2.7 cm high. Ten words were used, five of which represented objects requiring small grip apertures (approximately 1 cm between thumb and forefinger) (“PEA”, “PILL”, “GRAPE”, “PENCIL”, “TWEEZERS”), and five of which represented objects requiring large grip apertures (approximately 10 cm) (“JAR”, “PEAR”, “APPLE”, “ORANGE”, and “BASEBALL”).

The workspace was monitored with an Optotrak (Northern Digital, Waterloo, Ontario) motion tracking system which recorded three infra-red emitting diodes (IREDs) at a sampling rate of 100 Hz. One IRED was located on the ulnar bone on the styloid process of the radium at the wrist. The other IREDs were placed on the inner dorsolateral surfaces of the thumb and finger (i.e., with the palm facing down, these two IREDS were near the bottom right of the thumbnail and the bottom left of the fingernail).

Experimental procedure

The experimental procedure used in this experiment was approved by the Institutional Review Board of the Pennsylvania State University.

Subjects sat at the table facing the monitor, holding the starting knob with the right thumb and forefinger. They began each trial with the eyes closed, during which time the experimenter set up a target block and then clicked a button on the computer to start the trial. This caused the computer to display a word, trigger the start of the kinematic recording, and send a series of two tones to the subject via headphones. The first tone signaled subjects to open their eyes and (silently) read the word. The second tone, which sounded 1 s later, signaled the subject to reach out and grasp the target block. Subjects were asked to lift the target block by the ends using only the thumb and forefinger, and to place it down again on the table approximately 10 cm in front of the starting position. Speed was not emphasized. After grasping and placing the block, the subject returned the hand to the start position, closed his or her eyes, and waited for the next trial.

The task consisted of 6 practice and 60 experimental trials. The experimental trials consisted of two repetitions of each object size (4, 5, and 6 cm) and each word (five small-object words and five large-object words), presented randomly. Prior to the beginning of the experimental trials, subjects were told that a memory test would be administered at the end of the session, and that they should try to remember as many of the test words as possible. However, at the end of the session subjects were not asked to recall the words, but rather were asked if they had noticed anything special about the words used.

Data analysis

The dependent variable was the grip aperture (distance between thumb and forefinger) throughout the reach. Data were first processed by passing them through a custom filter designed to exclude artifacts. Trials were excluded if more than three consecutive measures fell outside the normal range of movements (i.e., if the IREDs ‘dropped out’). The criterion velocity for the onset and offset of the movement was set at 0.025 m/s. Because the thumb tends to be more stable in grasping and more representative of the position of the hand than the wrist (Wing and Fraser 1983; Wing et al. 1986), we used the thumb rather than the wrist to determine the onset and offset criteria. Trials were excluded if either reaction time or movement times were longer than 1,500 ms, or if movement times were shorter than 300 ms.

On some occasions, subjects began to move slightly before the signal, despite instructions to the contrary. (Unfortunately, this lack of compliance with instructions could not be monitored on-line by the experimenter.) Because the time between presentation of the word and the signal to begin the reach was relatively long (1,000 ms), we did not exclude trials for having movement onsets that preceded the signal to move by less than 500 ms (i.e., in which subjects had at least 500 ms to read the word before moving). Overall, 95.7% of the total trials were included in the final analysis.

For each movement, the magnitude of the grip aperture was computed at 21 temporally equal intervals from onset to offset, inclusive. This meant that each interval corresponded to 5% of the actual movement. These data were then averaged for each subject by word group (i.e., “large object” versus “small object”) and target size. To assess the semantic effect, we first removed the overall effect of time for each subject. The residuals were then fitted with nonlinear models of the form:

where A is the grip aperture, S is the size of the target, w t is the semantic effect at time t, and b t is the slope of the relationship between grip aperture and target size. The models were fit using an iterative search procedure that minimized the squared error of prediction; approximate standard errors of the parameter estimates were computed from the derivatives of the fit with respect to the parameters. This approach to modeling the effect of semantics is necessary because the dependence of movement parameters on the actual physical characteristics of the targets develops gradually over time (e.g., Franz et al. 2000; Glover 2002; Glover and Dixon 2001a, 2002a, 2002b). For example, whereas a difference of 20 mm in target size is reflected by a difference of roughly 20 mm in grip aperture at the end of the movement (i.e., an aperture-size slope approaching 1), there is, by definition, no change in grip aperture dependent on target size at the beginning of the movement (i.e., the aperture-size slope is zero). Between these two times, the aperture-size slope generally increases. The model embodies the assumption that whatever effect the word manipulation has on grip aperture should similarly be proportional to the aperture-size slope. The parameter w t can thus be understood as the effect of semantics on the represented size of the target, and the parameter b t describes the degree to which the represented size is related to grip aperture. Estimates of w t can also be obtained by scaling the raw effect of the semantic manipulation by the aperture-size slope; for this reason, we refer to the estimates of w t as the scaled semantic effect. However, it is worth noting that the analyses we report are not, in fact, analysis of scaled effects, but rather are (nonlinear) analyses of the raw effects. The scaled effects are rather the output (i.e., estimated parameter values) of that analysis.

To examine the changes in semantic effects over time, we analyzed the results at each quarter of the duration of the movement. Two issues were of critical importance. First, we sought to determine whether there was an effect of the semantics on the grip aperture. Second, we sought to determine whether this effect changed over time. The statistical evidence related to these issues was assessed by comparing the fits of different, nested versions of the nonlinear model described above. In particular, evidence for an overall effect of semantics was assessed by comparing a model in which w t was assumed to be 0 to a model in which w t was constant but nonzero for all t. Evidence for an effect of semantics that changed over time was assessed by comparing these models to one in which w t was allowed to take on different positive values at each time, t. The relative quality of the two fits in each comparison was evaluated using the maximum likelihood ratio (λ). This ratio represents the likelihood of the data based on one model divided by the likelihood of the data based on the alternative model and provides a simple description of the evidence for one model over the other.

A likelihood ratio of 10 is classified by Goodman and Royall (1988) as “moderate to strong” evidence for one model relative to the other (cf. Dixon 1998; Dixon and O’Reilly 1999).

Results

Figure 1 shows the effect of object size on grip aperture. Figure 1a shows the relationship between grip aperture and object size over time. It can be seen that the grip aperture rose over the course of the movement to a peak at roughly 80–85% of movement duration, then decreased during the final approach to the target. These data are typical of grasping movements using the thumb and forefinger (e.g., Glover and Dixon 2002a, 2002b; Jakobson and Goodale 1991; Jeannerod 1984). Figure 1b shows the change in the aperture-size slope over time. Here again there is a typical rise of the slope over time (Glover and Dixon 2002a, 2002b; Jeannerod 1984).

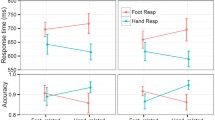

Figure 2a and b show the raw (Fig. 2a) and scaled (Fig. 2b) effects of semantics on grip aperture at each 25% of movement time. A model incorporating a constant effect of words fit the data only slightly better than the null model, in which it was assumed that words had no effect at all, λ = 2.4. However, a model incorporating a time-varying effect of words fit substantially better than either the null model (λ = 50.1) or the constant model (λ = 21.0). These results provide clear evidence for an effect of words that decreased to 0 as the hand approached the target.

a Raw effects of semantics on grasping at each 25% of the movement. These effects are expressed as the mean grip aperture for grasps preceded by words belonging to the ‘large’ objects class minus the mean grip aperture for grasps preceded by words belonging to the ‘small’ objects class. b Scaled effects of semantics on grasping at each 25% of the movement. These effects are expressed as the raw effect divided by the aperture-size slope at each point in time. c Scaled and raw effects of semantics on grasping during the final 25% of the movement. Data are shown at 5% intervals between 75% and 100% of movement time, inclusive

All three of the models provide overall good fits to the data (accounting for more than 97% of the variation in means in all cases) because most of the variation in grip aperture is determined by the size of the bar, and all three models include bar size as a variable. However, a more telling assessment of the fit of the models is to examine how well they capture the obtained semantic effect (i.e., the difference in grip aperture for small and large words). The null model predicts that the semantic effect should be identically 0. The constant model predicts an overall semantic effect, but across the 12 possible combinations of time and bar size the predictions of the constant model are inaccurate and correlate negatively with the observed values, r = −.26. The time-varying model predicts the size of the semantic effect moderately well, r = .61.

Although the model \( A = b_{t} {\left[ {S + w_{t} } \right]} \) is inherently nonlinear, a comparable demonstration of these semantic effects can obtained using linear approximations. For this analysis, we assume that the increase in slope (b t ) over time and the decrease in the semantic effect (w t ) over time are linear. Figures 1b and 2b suggest that these linear approximations are not unreasonable. Using this assumption, a null model predicts that the effect of words should be zero at all times; a model incorporating a constant value of w predicts that the effect of words should increase linearly over time along with the slope; and a model incorporating a decreasing value of w predicts that the effect of words should have both a linear and quadratic component. In particular, the effect of words should be large during the middle of the reach (when both w and b have intermediate values), small near the onset of the reach (when b is near 0) and small near the end of the reach (when w is near 0). The raw effect of semantics shown in Fig. 2a conforms to this latter pattern. Not surprisingly then, when models incorporating these linear and quadratic trends are evaluated, the constant model fits only slightly better than the null model (λ = 4.5), and the time-varying model does better than both (λ = 129.4 and λ = 28.9). These analyses indicate that the raw effect shown in Fig. 2a is more akin to an inverted U than to either a flat function with a value of 0 (the null model) or a linear increasing function (the constant effect model).

A number of concerns may be raised against the interpretation that the words had a large effect early in the reaches that dissipated as the movements progressed. For one, it might be posited that the effects at 100% of movement time were constrained to be near zero by the hand being in contact with or at least very near to the target. To address this possibility, we conducted an analysis of four points in time at 5% of movement time prior to the four in the original analysis (i.e., at 20%, 45%, 70%, and 95% MT), which excluded 100% MT. As in the original analysis, a model incorporating a constant effect of words fit the data only slightly better than the null model, λ = 3.7; however, a model incorporating a time-varying effect of words fit substantially better than either the null model (λ = 51.9) or the constant model (λ = 14.1). Further, an inspection of the data from 75–100% of MT shows a consistent decline over time for both the raw and scaled data (Fig. 2c). This would not be expected if the small effect at 100% MT were simply a consequence of the hand having contacted the target; in that case, one might expect a sudden drop in the effect of the words at 100% MT.

Another potential concern relates to the lengths of words used (from three to eight letters). Although word length was matched across “small” and “large” objects, the possibility remained that word length had an effect on grip aperture, independent of the size of the objects represented by the words. To evaluate this possibility, we carried out a correlation analysis between word length (in letters) and grip aperture at each 25% of movement duration. In none of these analyses was there substantial evidence for an effect of word length on grip aperture (all R 2 <.19, all λ <3.1).

Α third potential problem relates to the time taken to initiate and complete the movements. It might be argued that the words had greater effects early in the movement than later not because of any distinction between the information used for planning versus on-line control, but rather because the effects of the words simply faded with time. According to this hypothesis, when the words initially appeared, the priming effects on grasping were strong, but as time passed the effects of the words diminished.

To address this possibility, we conducted two sets of analyses. First, we tested for the effects of word class on reaction times and movement times. Mean reaction times were 295 ms for “small” object words and 302 ms for “large” object words. Mean movement times were 661 ms for both the “small” and “large” object words (range of mean MT across subjects was 550–772 ms; none of the movements took longer than 1,200 ms). There was no evidence that either reaction times or movement times differed depending on word class (both λs <1.2).

Second and more critically, we analyzed the correlations between total elapsed time after word presentation and the effect of the word on grip aperture. If the effects of the words faded with time after the word was presented, one would expect that as more time elapsed, the effects of the words on grip aperture would decrease. The correlation between elapsed time and word effect on grip aperture was small (R 2 = −.21) and the likelihood ratio comparing the model including an effect of elapsed time to a null model (λ = 3.4) was much smaller than the likelihood ratio reported above comparing an effect of normalized time to a null model at each 25% of MT (λ = 50.1). Taken together, these results support the notion that it was movement time from the beginning of the reach, and not total elapsed time from the presentation of the word, that was the critical factor in the reduction of the word effect as the movements unfolded.

The final noteworthy aspect of the results pertains to subjects’ end-of-session comments. If subjects became aware that the words they were reading represented “large” and “small” objects, this may have induced an experimenter demand effect. The potential for such a confound existed in past studies using more explicit words such as “LARGE” and “SMALL” (e.g., Gentilucci and Gangitano 1998; Glover and Dixon 2002a). When subjects in the present study were asked what they noticed about the words during the experimental session, most replied that the words tended to represent food objects, and in particular fruit. Only one subject noticed the classification of the words into “large” objects and “small” objects. As this subject’s scaled effects fell within one standard deviation of the group means at each quarter of the movement, her data were retained in the analyses reported above.

Discussion

The present experiment yielded two results that were of central importance. First, subjects had larger grip apertures early in the reach after reading words representing relatively large objects (e.g., “APPLE”) than after reading words representing relatively small objects (e.g., “GRAPE”). This was consistent with the hypothesis that the words activated motor tendencies that interfered with the grasping of targets. Second, there was evidence that an on-line correction of these semantic effects occurred as the hand approached the target. These results were consistent with a planning-control model of action (Glover 2002, in press, 2003; Glover and Dixon 2001a, 2002a), in which large effects of cognitive and perceptual variables early in a movement are corrected in flight. Although one may question why the corrections were gradual and not immediate, there are several reasons for the motor system to favor smooth and gradual corrections over fast, jerky corrections. For example, where there is ample time for corrections to be enacted before the movement is completed, smooth corrections result in the same ultimately accurate action as would fast and jerky corrections; yet only the former satisfy the constraint of minimizing the jerk in the system (see, e.g., Glover, in press; Rosenbaum 1991; Wolpert and Ghahramani 2000). The present study represents the first demonstration of implicit priming of grip scaling using words only indirectly related to the target feature of interest.

As mentioned in the “Introduction,” a possible mechanism for the interference of semantics with motor planning may relate to the Gibsonian notion of affordances (Gibson 1979). A possible synthesis of this idea with the results of the present study is that the reading of a word activates affordances in a similar manner to seeing the physical object the word represents. This would necessarily extend Gibson’s notion of motor tendencies being activated by visual object perception to the more general notion of tendencies being activated by semantic classifications. The present study suggests that not only physical objects and words, but a broad range of object associations (e.g., pictures, sounds, smells, etc.) could potentially activate affordances.

An interesting neurological correlate of the Gibsonian notion of visual perception is the phenomenon of “utilization behavior” that often follows damage to the frontal lobes (e.g., Humphreys and Riddoch 2000; Lhermitte 1983). In this syndrome, there is a lack of inhibition of motor programs directed towards objects such that the patient feels compelled to act on objects in the visual scene. For example, patients exhibiting this syndrome will often grasp and use an object placed in front of them even when given no instruction to do so (Lhermitte 1983). This phenomenon relates to the present study in that, even though normal subjects were able to inhibit the motor tendency to respond to an object (in this case represented by a word), some of this tendency was still noticeable in the motor program ultimately selected.

Another point of interest is the proximity of language and motor planning centers in the human brain. Generally, both language and action appear to be strongly lateralized to the left hemisphere (Kolb and Whishaw 1995). Further, it has been argued that Broca’s area, active during both word reading (Petersen et al. 1988; Price et al. 1994) and action planning (Deiber et al. 1996; Grafton et al. 1998), evolved out of a premotor region in the monkey brain (Rizzolatti and Arbib 1998). It has also been argued that language evolved from the adaptation of brain structures specialized for motor functions (Kimura 1979; Rizzolatti and Arbib 1998), a point supported by the observation that motor planning (e.g., Buccino et al. 2001; Rushworth et al. 2001) and language (Damasio and Damasio 1989) centers also overlap in the human inferior parietal lobe.

In view of these points, it is reasonable that the present study revealed a semantic effect of word reading on the planning of grasping. When subjects read a word representing a relatively large object, they initially opened their thumb-finger aperture wider than when they read a word representing a relatively small object. These semantic effects decreased over the course of the movement, however, allowing subjects to grasp the blocks without difficulty. These results were consistent with the notion of affordance activation by words as well as physical objects. The results also provided support for the idea that the planning and on-line control of actions operate using distinct arrays of inputs. Whereas planning appears susceptible to interference from many perceptual and cognitive variables, on-line control, in contrast, appears relatively immune to these same variables.

References

Arbib MA (1997) From visual affordances in monkey parietal cortex to hippocampo-parietal interactions underlying rat navigation. Philos Trans R Soc Lond B Biol Sci 29:1429–1436

Buccino G, Binkofski F, Fink GR, Fadiga L, Fogassi L, Gallese V, Seitz RJ, Zilles K, Rizzolatti G, Freund H-J (2001) Action observation activates premotor and parietal areas in a somatotopic manner: an fMRI study. Eur J Neurosci 13:400–404

Buchanan L, McEwen S, Westbury C, Libben G (2003) Semantics and semantic errors: implicit access to semantic information from words and nonwords in deep dyslexia. Brain Lang 84:65–83

Castiello U (1996) Grasping a fruit: selection for action. J Exp Psychol Hum Percept 22:582–603

Castiello U (1998) Attentional coding for three-dimensional objects and two-dimensional shapes. Differential interference effects. Exp Brain Res 123:289–297

Damasio H, Damasio A (1989) Lesion analysis in neuropsychology. Oxford University Press, Oxford

Deiber M-P, Ibanez V, Sadato N, Hallett M (1996) Cerebral structures participating in motor preparation in humans: a positron emission tomography study. J Neurophys 75:233–247

Dixon P (1998) Why scientists value p values. Psychonom Bull Rev 5:390–396

Dixon P, O’Reilly T (1999) Scientific versus statistical inference. Can J Exp Psychol 53:133–149

Franz VH, Gegenfurtner KR, Bulthoff HH, Fahle M (2000) Grasping visual illusions: no evidence for a dissociation between perception and action. Psychol Sci 11:20–25

Gentilucci M (2002) Object motor representation and language. Neuropsychologia 40:1139–1153

Gentilucci M, Gangitano M (1998) Influence of automatic word reading on motor control. Eur J Neurosci 10:752–756

Gentilucci M, Benuzzi F, Bertolani L, Daprati E, Gangitano M (2000) Language and motor control. Exp Brain Res 133:468–490

Gibson JJ (1979) The ecological approach to visual perception. Houghton-Mifflin, Boston, MA

Glover S (2002) Visual illusions affect planning but not control. Trends Cogn Sci 6:288–292

Glover S (in press) Separate visual representations in the planning and control of actions. Behav Brain Sci

Glover S (2003) Optic ataxia as a deficit specific to the on-line control of actions. Neurosci Biobehav Rev 27:447-456

Glover S, Dixon P (2001a) Dynamic illusion effects in a reaching task: evidence for separate visual representations in the planning and control of reaching. J Exp Psychol Hum Percept 27:560–572

Glover S, Dixon P (2001b) Motor adaptation to an optical illusion. Exp Brain Res 137:254–258

Glover S, Dixon P (2002a) Semantics affect the planning but not control of grasping. Exp Brain Res 146:383–387

Glover S, Dixon P (2002b) Dynamic effects of the Ebbinghaus illusion in grasping: support for a planning/control model of action. Percept Psychophys 64:266–278

Goodman SN, Royall R (1988) Evidence and scientific research. Am J Public Health 78:1568–1574

Grafton ST, Fagg A, Arbib MA (1998) Dorsal premotor cortex and conditional movement selection: a PET functional mapping study. J Neurophys 79:1092–1097

Humphreys GW, Riddoch MJ (2000) One more cup of coffee for the road: object-action assemblies, response blocking and response capture after frontal lobe damage. Exp Brain Res 133:81–93

Jakobson LS, Goodale MA (1991) Factors affecting higher-order movement planning: a kinematic analysis of human prehension. Exp Brain Res 86:199–208

Jeannerod M (1984) The timing of natural prehension movements. J Mot Behav 16:235–254

Jeannerod M (1994) The representing brain: neural correlates of motor intention and imagery. Behav Brain Sci 17:187–245

Kimura D (1979) Neuromotor mechanisms in the evolution of human communication. In: Steklis HD, Raleigh M (eds) Neurobiology of social communication in primates: an evolutionary perspective. Academic Press, New York

Kolb B, Whishaw IQ (1995) Fundamentals of human neuropsychology. Freeman, New York

Le S, Raufaste E, Roussel S, Puel M, Demonet JF (2003) Implicit face perception in a patient with visual agnosia? Evidence from behavioral and eye-tracking analyses. Neuropsychologia 41:702–712

Lhermitte F (1983) Utilization behaviour and its relation to lesions of the frontal lobes. Brain 106:237–255

Oldfield RC (1971) The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 9:97–113

Petersen SE, Fox P, Posner M, Mintun M, Raichle M (1988) Positron emission tomography studies of the cortical anatomy of single word processing. Nature 331:585–589

Price CJ, Wise R, Watson J, Patterson K, Howard D, Frackowiak R (1994) Brain activity during reading: the effects of exposure duration and task. Brain 117:1255–1269

Rizzolatti G, Arbib MA (1998) Language within our grasp. Trends Neurosci 21:188–194

Rosenbaum DA (1991) Human motor control. Academic Press, San Diego

Rushworth MF, Ellison A, Walsh V (2001) Complementary localization and lateralization of orienting and motor attention. Nature Neurosci 4:643–662

Schendan HE, Searl MM, Melrose RJ, Stern CE (2003) An fMRI study of the role of the medial temporal lobe in implicit and explicit sequence learning. Neuron 27:1013–1025

Wing AM, Fraser C (1983) The contribution of the thumb to reaching movements. Q J Exp Psychol 35A:297–309

Wing AM, Turton A, Fraser C (1986) Grasp size and accuracy of approach in reaching. J Motor Behav 18:245–260

Wolpert DM, Ghahramani Z (2000) Computational principles of movement neuroscience. Nat Neurosci Suppl 3:1212–1217

Acknowledgements

This work was supported by the Natural Sciences and Engineering Research Council, through a fellowship to SG and a grant to PD, as well as by NIH grant 1 R15 NS41887-01 to Jonathan Vaughan, Hamilton College, Clinton, New York, for which the second author was a consultant.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Glover, S., Rosenbaum, D.A., Graham, J. et al. Grasping the meaning of words. Exp Brain Res 154, 103–108 (2004). https://doi.org/10.1007/s00221-003-1659-2

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00221-003-1659-2