Abstract

We consider a class of dispersive and dissipative perturbations of the inviscid Burgers equation, which includes the fractional KdV (fKdV) equation of order \(\alpha \), the fractal Burgers equation of order \(\beta \), where \(\alpha , \beta \in [0,1)\), and the Whitham equation. For all \(\alpha , \beta \in [0,1)\), we construct solutions whose gradient blows up at a point and whose amplitude stays bounded, and which therefore display a “shock-like” singularity. Moreover, we provide an asymptotic description of the blow-up. To the best of our knowledge, this constitutes the first proof of gradient blow-up for the fKdV equation in the range \(\alpha \in [2/3, 1)\), as well as the first description of explicit blow-up dynamics for the fractal Burgers equation in the range \(\beta \in [2/3, 1)\). Our construction is based on modulation theory, where the well-known smooth self-similar solutions to the inviscid Burgers equation are used as profiles. A somewhat amusing point is that the profiles that are less stable under initial data perturbations (in that the number of unstable directions is larger) are more stable under perturbations of the equation (in that higher order dispersive and/or dissipative terms are allowed) due to their slower rates of concentration. Another innovation of this article, which may be of independent interest, is the development of a streamlined weighted \(L^{2}\)-based approach (in lieu of the characteristic method) for establishing the sharp spatial behavior of the solution in self-similar variables, which leads to the sharp Hölder regularity of the solution up to the blow-up time.



1 Introduction

In this article, we construct and describe the dynamics of solutions with smooth decaying initial data that exhibit gradient blow-up (while the solution itself remains bounded) for a wide class of perturbations of the inviscid Burgers equation

$$\begin{aligned} \partial _{t} u + u \partial _{x} u = 0. \qquad \text{(Burgers)} \end{aligned}$$

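Examples covered by our result include the fractional KdV equations

$$\begin{aligned} \partial _{t} u + u \partial _{x} u = \vert {D_{x}}\vert ^{\alpha -1} \partial _{x} u, \qquad \text{(fKdV)} \end{aligned}$$
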
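Whitham’s equation,

$$\begin{aligned} \partial _{t} u + u \partial _{x} u + \Big (\frac{\tanh \vert {D_{x}}\vert }{\vert {D_{x}}\vert }\Big )^{\frac{1}{2}} \partial _{x} u = 0, \qquad \text{(Whitham)} \end{aligned}$$
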
and the fractal Burgers equations

$$\begin{aligned} \partial _{t} u + u \partial _{x} u + \vert {D_{x}}\vert ^{\beta } u = 0, \qquad \text{(fBurgers)} \end{aligned}$$

where \(\vert {D_{x}}\vert ^{\alpha } = (-\Delta )^{\frac{\alpha }{2}}\). These equations arise as model problems in the theory of water waves [30], which makes the question of singularity formation a natural one.

Gradient blow-up (also referred to as shock formation or wave breaking in the contexts of hyperbolic conservation laws or water waves, respectively [38]) for (fKdV) in the range \( 0 \le \alpha < 1\) was conjectured on the basis of numerical experiments in [22] and later in [18]. While the existence of gradient-blow-up solutions was known for (fKdV) in the range \(0 \le \alpha < \frac{2}{3}\) and for (Whitham) (see for instance [17, 18, 32, 39]), our result seems to be the first construction of such blow-up solutions in the range \(\frac{2}{3} \le \alpha < 1\). We furthermore give a quantitative description of the blow-up dynamics in a stable blow-up regime in the case \(0< \alpha < \frac{2}{3}\), which does not seem to have appeared in the literature (note that in the case \(\alpha =0\), the Burgers–Hilbert equation, a precise description of the same blow-up dynamics has already appeared in the recent work of Yang [39], which is discussed further below). In all cases, we also observe that there exist smooth compactly supported initial data of either sign (everywhere nonnegative or everywhere nonpositive) that give rise to the same blow-up behavior. This disproves (yet suggests a refinement of) a conjecture made by Klein–Saut [22, Conjecture 3] for (Whitham); see Remark 1.3 below.

Concerning (fBurgers), gradient blow-up was shown in the papers [1, 14, 20] in the full range \(0< \beta < 1\). Moreover, in a recent work of Chickering–Moreno-Vasquez–Pandya [7], which we learned of while preparing our article, a quantitative description of stable blow-up dynamics analogous to [39] in the Burgers–Hilbert case was given for \(0< \beta < \frac{2}{3}\). Our work provides an alternative, independent description of the same stable blow-up regime, as well as a precise description of some examples of gradient-blow-up solutions in the case \(\frac{2}{3} \le \beta < 1\), which seems new.

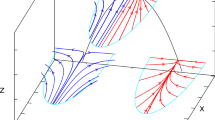

Our proof is based on a systematic study of the stability of self-similar blow-up solutions for (Burgers) under perturbations of the equation. As is well known, (Burgers) admits a two-parameter family of scaling symmetries (corresponding to separate rescalings of time and space), which results in a one-parameter family of self-similar changes of variables

$$\begin{aligned} s = - \log (-t), \quad y = \frac{x}{(-t)^{b}}, \quad u(t, x) = (-t)^{b-1} U(s, y), \end{aligned}$$

parametrized by \(b > 0\). Among these b’s, there exist countably many choices that lead to smooth self-similar solutions (i.e., s-independent solutions in the self-similar variables), namely \(b = \frac{2k+1}{2k}\) for \(k= 1, 2, \ldots \) [15]. In what follows, we will refer to the self-similar solutions in the case \(k = 1\) as ground states, and to those in the case \(k \ge 2\) as excited states.
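These smooth self-similar solutions can be made concrete. The following is a short verification sketch. It assumes the normalization \(s = -\log(-t)\), \(y = x(-t)^{-b}\), \(u = (-t)^{b-1}U(s,y)\), with blow-up at \(t = 0\). It also assumes one explicit representative of the k-th profile, defined implicitly by \(y = -\bar U - \bar U^{2k+1}\) and normalized by \(\bar U(0) = 0\), \(\bar U'(0) = -1\). Both choices are ours; the normalization in the body of the article may differ by constants.

```latex
\begin{aligned}
&\partial_t u + u\,\partial_x u
  = (-t)^{b-2}\bigl[\partial_s U - (b-1)U + (U + b y)\,\partial_y U\bigr],\\
&\text{so $s$-independent profiles solve } -(b-1)\bar U + (\bar U + b y)\,\bar U' = 0.\\[4pt]
&\text{Take } b = \tfrac{2k+1}{2k} \text{ and define } \bar U \text{ by } y = -\bar U - \bar U^{2k+1}. \text{ Then}\\
&\bar U' = -\frac{1}{1 + (2k+1)\bar U^{2k}}, \qquad
 \bar U + b y = (1-b)\bar U - b\,\bar U^{2k+1}
 = -\frac{\bar U}{2k}\bigl(1 + (2k+1)\bar U^{2k}\bigr),\\
&\text{hence } (\bar U + b y)\,\bar U' = \frac{\bar U}{2k} = (b-1)\bar U .
\end{aligned}
```

For large \(|y|\), the implicit relation gives \(|\bar U| \approx |y|^{\frac{1}{2k+1}}\). This is the growth rate that reappears in the weighted estimates later in the article.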

A key predecessor of this article is the recent work of Yang [39], which, based on the modulation-theoretic approach of Buckmaster–Shkoller–Vicol [4], constructed an open set of initial data giving rise to gradient-blow-up solutions to the Burgers–Hilbert equation (i.e., (fKdV) with \(\alpha = 0\)), with ground state self-similar solutions to (Burgers) as blow-up profiles (see also the very recent work [7] for (fBurgers) with \(0< \beta < \frac{2}{3}\)). In this article, we extend [39] (and [7]) to more general perturbations of the Burgers equation, while simultaneously allowing for the use of excited states as blow-up profiles.

In fact, these two extensions go hand in hand. A somewhat amusing point, made precise in this article, is that higher excited self-similar profiles are less stable under perturbations of the initial data: they are stable only under initial data perturbations within a set of higher codimension. Yet they are more stable under perturbations of the equation, in that higher order dispersive and/or dissipative terms are allowed. An explanation of this phenomenon is as follows. The key factor that determines the stability of a self-similar profile under perturbations of the equation turns out to be its rate of concentration (i.e., the exponent \(b = \frac{2k+1}{2k}\)), and the slower rates of the excited states lead to larger classes of admissible perturbations of the equation. To see this point heuristically, one may simply compare the “strength” of each term in the equation on the characteristic time and length scales of the k-th Burgers self-similar profile, which are \(\sim (-t)\) and \(\sim (-t)^{\frac{2k+1}{2k}}\), respectively. For instance, for (fKdV), compare

$$\begin{aligned} \partial _{t} u \sim (-t)^{-1} u, \qquad u \partial _{x} u \sim (-t)^{-1} u, \qquad \vert {D_{x}}\vert ^{\alpha -1} \partial _{x} u \sim (-t)^{-\frac{2k+1}{2k} \alpha } u. \end{aligned}$$

(In self-similar variables for (Burgers), the “strength” of \(u \partial _{x} u\) is the same as that of \(\partial _{t} u\).) Since \(-t \rightarrow 0^{+}\), the “strength” of the perturbation \(\vert {D_{x}}\vert ^{\alpha -1} \partial _{x} u\) is weaker than that of \(\partial _{t} u\) when \(- \frac{2k+1}{2k} \alpha > -1\), or equivalently, \(\alpha < \frac{2k}{2k+1}\); note that this range improves as k increases. Our main theorem demonstrates that under this condition, the Burgers self-similar profile with \(b = \frac{2k+1}{2k}\) is stable under passage to (fKdV), leading to gradient-blow-up solutions to (fKdV) that asymptote to the same Burgers self-similar profile near the singularity. On the other hand, it will become apparent that the instability of the self-similar profile under initial data perturbations does not affect its stability under perturbations of the equation.
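The threshold in this heuristic is easy to tabulate. The sketch below uses exact rational arithmetic; its function names are ours and purely illustrative. It returns the critical order \(2k/(2k+1) = 1/b\) for the k-th profile, and the smallest k that accommodates a given order. The same threshold applies to \(\beta \) in Theorem 1.1.

```python
from fractions import Fraction


def concentration_exponent(k: int) -> Fraction:
    """b = (2k+1)/(2k): the k-th smooth Burgers profile lives on length scales (-t)^b."""
    if k < 1:
        raise ValueError("k must be a positive integer")
    return Fraction(2 * k + 1, 2 * k)


def is_subcritical(alpha, k: int) -> bool:
    """Perturbation ~ (-t)^(-b*alpha) is weaker than d_t u ~ (-t)^(-1) iff b*alpha < 1."""
    return concentration_exponent(k) * Fraction(alpha) < 1


def critical_order(k: int) -> Fraction:
    """Threshold 1/b = 2k/(2k+1); the order alpha must lie strictly below it."""
    return 1 / concentration_exponent(k)


def minimal_profile_index(alpha) -> int:
    """Smallest k >= 1 with alpha < 2k/(2k+1), for alpha in [0, 1) (exact arithmetic)."""
    alpha = Fraction(alpha)
    if not 0 <= alpha < 1:
        raise ValueError("alpha must lie in [0, 1)")
    k = 1
    while not is_subcritical(alpha, k):
        k += 1
    return k


if __name__ == "__main__":
    print([str(critical_order(k)) for k in range(1, 5)])  # ['2/3', '4/5', '6/7', '8/9']
    print(minimal_profile_index(Fraction(2, 3)))          # 2
```

Exact fractions are used deliberately. A float such as `2/3` is slightly smaller than the rational number 2/3, which would wrongly place \(\alpha = 2/3\) in the ground-state range.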

We remark that the preceding points are in parallel with the recent remarkable works of Merle–Raphaël–Rodnianski–Szeftel on singularity formation for the compressible Euler equations, the compressible Navier–Stokes equations and defocusing nonlinear Schrödinger equations (NLS). In [27], smooth self-similar profiles for the polytropic compressible Euler equations with characteristic length scales \((-t)^{\frac{1}{r}}\) are constructed for discrete values of r. Then these profiles are used to demonstrate singularity formation for second-order dissipative (i.e., compressible Navier–Stokes [28]) and dispersive (i.e., energy-supercritical defocusing NLS after Madelung transform [26]) perturbations of the Euler equations, where the admissible values of r with respect to each perturbation may be determined with similar heuristics as above.

Another innovation in this article is the introduction of a robust yet sharp weighted \(L^{2}\)-based method to establish the optimal spatial growth of the solution in the appropriate self-similar variables, in lieu of the method of characteristics employed in [4] and subsequent works. Such information is necessary to establish the sharp Hölder regularity, and in general even the boundedness (due to the lack of the maximum principle), of the solution up to the blow-up time. We refer the reader to Sect. 1.3 below for a short description of this method.
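Here "optimal spatial growth" refers to the behavior \(|\bar U(y)| \sim |y|^{\frac{1}{2k+1}}\) of the exact profiles. The numerical sketch below makes several assumptions of our own: the self-similar variables \(s = -\log(-t)\), \(y = x(-t)^{-b}\), \(u = (-t)^{b-1}U\), and the explicit profile defined implicitly by \(y = -\bar U - \bar U^{2k+1}\). The article's profiles may differ from this one by rescaling. The sketch solves for \(\bar U\) by bisection, checks the profile equation \(-(b-1)\bar U + (\bar U + by)\bar U' = 0\), and checks the growth rate. All function names are hypothetical.

```python
def burgers_profile(y: float, k: int = 1) -> float:
    """Solve y = -U - U**(2k+1) for U by bisection (the right-hand side is decreasing in U)."""
    n = 2 * k + 1
    lo, hi = -abs(y) - 1.0, abs(y) + 1.0  # |U| <= |y| always, so the root is bracketed
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if -mid - mid**n - y > 0:  # root lies to the right of mid
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def profile_slope(u: float, k: int) -> float:
    """dU/dy obtained by implicitly differentiating y = -U - U**(2k+1)."""
    return -1.0 / (1.0 + (2 * k + 1) * u ** (2 * k))


def self_similar_residual(y: float, k: int) -> float:
    """Residual of -(b-1) U + (U + b y) U' = 0 with b = (2k+1)/(2k); should vanish."""
    b = (2 * k + 1) / (2 * k)
    u = burgers_profile(y, k)
    return -(b - 1.0) * u + (u + b * y) * profile_slope(u, k)


if __name__ == "__main__":
    for k in (1, 2, 3):
        y = 1e9
        # |U(y)| / |y|^(1/(2k+1)) should be close to 1 for large |y|
        print(k, abs(burgers_profile(y, k)) / y ** (1.0 / (2 * k + 1)))
```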

1.1 First Statement of the Main Result and Discussion

We now precisely state the class of equations studied in this article. For \(u: {\mathbb {R}}_{t} \times {\mathbb {R}}_{x} \rightarrow {\mathbb {R}}\), consider

$$\begin{aligned} \partial _{t} u + u \partial _{x} u = \Gamma \partial _{x} u - \Upsilon u. \qquad (1) \end{aligned}$$

Here, \(\Gamma \) and \(\Upsilon \) are Fourier multipliers with symbols \(\Gamma (\xi )\) and \(\Upsilon (\xi )\) satisfying the following properties:

1. \(\Gamma (\xi ), \Upsilon (\xi ) \in C^{\infty }({\mathbb {R}}\setminus \{0\})\) are real-valued and even;

2. \(\Gamma (\xi ) \xi \) and \(\Upsilon (\xi )\) are symbols of order \(\alpha \) and \(\beta \), respectively, with \(0 \le \alpha , \beta < 1\), in the sense that for every multi-index I there exist constants \(C_{\Gamma , |I|}, C_{\Upsilon , |I|} > 0\) such that, for every \(\vert {\xi }\vert \ge 1\),

$$\begin{aligned} \vert {\partial _{\xi }^{I} (\Gamma (\xi ) \xi )}\vert \le C_{\Gamma , |I|} \vert {\xi }\vert ^{\alpha -\vert {I}\vert }, \quad \vert {\partial _{\xi }^{I} \Upsilon (\xi )}\vert \le C_{\Upsilon , |I|} \vert {\xi }\vert ^{\beta -\vert {I}\vert }. \end{aligned}$$

On the other hand, we assume that \(\Gamma (\xi ) \xi \) and \(\Upsilon (\xi )\) are bounded on \(\{\xi \in {\mathbb {R}}: \vert {\xi }\vert \le 1\}\).

3. \(\Upsilon (\xi ) \ge 0\) (i.e., \(\Upsilon \) is elliptic).

Clearly, (fKdV) and (Whitham) are examples of (1) with \(\Upsilon = 0\), and (fBurgers) is an example of (1) with \(\Gamma = 0\). For (Whitham), the symbol \(\Gamma (\xi ) \xi \) has order \(\alpha = \frac{1}{2}\).
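The order \(\frac{1}{2}\) for (Whitham) can be checked numerically at the level of leading behavior. The sketch below assumes the standard Whitham multiplier \(\Gamma (\xi ) = -(\tanh |\xi |/|\xi |)^{1/2}\); the sign and the omitted normalization constants are our assumption. It estimates the order of \(\Gamma (\xi )\xi \) from a log-log slope and compares the result with the (fKdV) symbol \(|\xi |^{\alpha -1}\xi \). Only the leading growth and the boundedness near \(\xi = 0\) are tested, not the full derivative estimates in property 2.

```python
import math


def whitham_gamma_xi(xi: float) -> float:
    """Gamma(xi) * xi for the assumed Whitham multiplier Gamma(xi) = -(tanh|xi| / |xi|)**(1/2)."""
    a = abs(xi)
    if a == 0.0:
        return 0.0  # limit of Gamma(xi) * xi as xi -> 0
    return -math.sqrt(math.tanh(a) / a) * xi


def fkdv_gamma_xi(xi: float, alpha: float) -> float:
    """Gamma(xi) * xi = |xi|**(alpha - 1) * xi for (fKdV), xi != 0."""
    return abs(xi) ** (alpha - 1) * xi


def numerical_order(symbol, xi1: float = 1e3, xi2: float = 1e6) -> float:
    """Log-log slope of |symbol| between xi1 and xi2: a crude estimate of its order."""
    return (math.log(abs(symbol(xi2))) - math.log(abs(symbol(xi1)))) / (math.log(xi2) - math.log(xi1))


if __name__ == "__main__":
    print(numerical_order(whitham_gamma_xi))                     # close to 0.5
    print(numerical_order(lambda x: fkdv_gamma_xi(x, 0.7)))      # close to 0.7
```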

By the standard energy method, it can be readily seen that the initial value problem for (1) is locally well-posed in \(H^{s}\) for any \(s > \frac{3}{2}\). Our main theorem concerns the formation of singularity for (1) starting from smooth and well-localized initial data. In simple terms, the statement of our main theorem is as follows.

Theorem 1.1

Let k be a positive integer such that \(\alpha , \beta < \frac{2k}{2k+1}\). Then there exist smooth initial data \(u_0\) for (1) such that the resulting solution of (1) blows up in finite time in \({\mathcal {C}}^{\sigma }\) for every \(\sigma > \frac{1}{2k+1}\), while its \({\mathcal {C}}^{\frac{1}{2k+1}}\) norm stays bounded until the blow-up time. In the case \(k=1\), and for \(\alpha < \frac{2}{3}\) and \(\beta < \frac{2}{3}\), the blow-up behavior is stable in \(H^{5}\). In the case \(k > 1\), these initial data form a “codimension \(2k-2\) subset” of \(H^{2k+3}\).

For more precise statements regarding the description of the initial data and blow-up dynamics, we refer to Theorem 3.1, Lemma 3.4 and the ensuing discussion. Note moreover that it would be possible, by a more refined analysis, to show that the “codimension \(2k-2\) subset” of \(H^{2k+3}\) in the above statement constitutes in reality a suitably regular submanifold of initial data. However, this is not carried out in the present work.

Remark 1.2

(Stable blow-up regime) Note that Theorem 1.1 applies with \(k = 1\) to (fKdV) for \(0 \le \alpha < \frac{2}{3}\), to (Whitham), and to (fBurgers) for \(0 \le \beta < \frac{2}{3}\). As a result, we obtain a blow-up behavior that is stable under initial data perturbations for these equations. On the other hand, in the range \(\frac{2}{3} \le \alpha < 1\), the term \(\vert {D_{x}}\vert ^{\alpha -1} \partial _{x}\) cannot merely be treated as a small perturbation for \(k = 1\), and we must perturb off of an excited Burgers self-similar profile. A description of a stable (under initial data perturbations) blow-up for (fKdV) with \(\frac{2}{3} \le \alpha < 1\) remains an open problem.

Remark 1.3

(The sign of the initial data) In [22, Conjecture 3], based on numerical investigation, the following interesting conjecture concerning the blow-up dynamics for (Whitham) and the sign of the initial data was made:

Solutions to the Whitham equation [...] for negative initial data \(u_{0}\) of sufficiently large mass will develop a cusp at \(t^{*} > t_{c}\) of the form \(\vert {x-x^{*}}\vert ^{1/3}\) [...]

Solutions to the Whitham equation [...] for positive initial data \(u_{0}\) of sufficiently large mass will develop a cusp at \(t^{*} < t_{c}\) of the form \(\vert {x-x^{*}}\vert ^{1/2}\) [...]

Our construction provides an open set of initial data of each sign whose corresponding solutions all have the same blow-up behavior (i.e., \({\mathcal {C}}^{\frac{1}{3}}\) remains bounded while \({\mathcal {C}}^{\sigma }\) for any \(\sigma > \frac{1}{3}\) blows up), thereby providing a counterexample to this conjecture as stated; see Remark 3.3 below. Nevertheless, it is possible that the blow-up observed in [22] for positive initial data is another stable blow-up regime, whose blow-up profile must have a positive sign. Verification of this revised picture remains an interesting open problem.

1.2 Prior Works

The models considered in the present paper have been studied many times in the literature. We give a (non-exhaustive) list of previous results here, divided into four main areas.

-

Water waves. Some of the above equations, such as (fKdV) and (Whitham), arise as approximate models in the theory of water waves. In his 1967 paper, Whitham [37] introduced the equation bearing his name as a nonlinear approximation for surface water waves, in which the dispersive term satisfies the appropriate dispersion relation. For further discussion of the connections of (fKdV) with the theory of water waves, we refer the reader to the work of Klein–Linares–Pilod–Saut [21]. Since the work of Whitham, many authors have focused on the issue of singularity formation for such models. Wave breaking for (Whitham) was first shown, only formally, by Seliger [34], followed by Naumkin–Shishmarev [30], who were able to extend Seliger’s argument to (fKdV) in the case \(0 \le \alpha < \frac{2}{3}\). However, their arguments do not appear to be completely rigorous. In follow-up work, A. Constantin–Escher [11] made these arguments fully rigorous for a model problem very similar to (Whitham). Their result, however, requires boundedness of the kernel, so it does not cover (Whitham) itself. In Castro–Cordoba–Gancedo [6], the authors proved blow-up for (fKdV) in the full range \(0< \alpha < 1\): their result shows that the solution blows up in \({\mathcal {C}}^{1,\sigma }\), but it does not imply gradient blow-up in this case. In Klein–Saut [22], the authors performed numerical experiments on (fKdV) in the full range \(0< \alpha < 1\), which led them to conjecture that wave breaking happens in the full range. Hur–Tao [18] were then able to show wave breaking for (fKdV) in the case \(0< \alpha < \frac{1}{2}\) and for (Whitham). In later work, Hur extended the blow-up construction to the range \(0< \alpha < \frac{2}{3}\); see Hur [17].
More recently, Yang [39] extended the shock formation construction to the case \(\alpha = 0\) by means of a modulation-theoretic analysis in self-similar variables similar to [4] (discussed below), which gives a precise description of singularity formation. Saut–Wang [32] have also proved gradient blow-up for (fKdV) in the case \(0 \le \alpha < \frac{3}{5}\), as well as for (Whitham). Concerning model problems, let us mention the work of Klein–Saut–Wang [23], where the authors consider the modified fKdV equation (which features a cubic nonlinearity) in the range \(\alpha \in (0,2)\). In the weakly dispersive range (\(\alpha \in (0,1)\)), they show the existence of wave breaking solutions. Note also that, by the work of Saut–Wang [33], modified fKdV admits global solutions for small data when \(\alpha \) is in the full range (0, 2), \(\alpha \ne 1\). Let us also briefly mention the case \(\alpha \ge 1\), where the situation seems to be delicate. Conjecturally, when \(\alpha \in (1, 3/2)\), the picture of “shock formation” is not expected to hold for the fKdV equation (see Klein–Saut [22]). In a recent work, Rimah established a precise version of this statement for a paralinearized version of the fKdV equation, thereby excluding wave breaking in the case \(\alpha \in (1,2)\) for a paralinearized model problem [31]. For modified fKdV, Klein–Saut–Wang (again in [23]) conjecture that, in the focusing case with \(\alpha \in (1,2)\), the \(L^\infty \) norm of the solution blows up (and no wave breaking occurs). Finally, it is expected that, for \(\alpha > 3/2\), no blow-up occurs for fKdV. In this direction, we cite the work of Linares–Pilod–Saut [24], in which the authors show local well-posedness for fKdV with initial data in \(H^s(\mathbb {R})\), where \(s > \frac{3}{2}-\frac{3\beta }{8}\) and \(0< \beta < \alpha - 1\).
Molinet–Pilod–Vento [29] subsequently extended the previous result to \(s > \frac{3}{2} -\frac{5\beta }{4}\). Together with conservation of energy, this implies global well-posedness when \(\alpha > 1+6/7\). Showing global well-posedness all the way to \(\alpha > 3/2\) remains an outstanding open problem.

-

Weak dissipation. Weakly dissipative models have also attracted significant attention from the fluid dynamics community. For (fBurgers) in the case \(0 \le \beta < 1\), Kiselev–Nazarov–Shterenberg [20] and independently Alibaud–Droniou–Vovelle [1] as well as Dong–Du–Li [14] were able to show gradient blow-up. Note that the approaches in [1, 14, 20] rely heavily on monotonicity properties of the fractional Laplacian (a form of the maximum principle). Unfortunately, in the dispersive case (in particular, for fKdV with \(\alpha \in (2/3,1)\)), these monotonicity properties are lost and this approach breaks down. Very recently, Chickering–Moreno-Vasquez–Pandya [7] used an approach similar to Buckmaster et al. [4] and Yang [39] to give a precise description of stable blow-up dynamics in the case \(0 \le \beta < \frac{2}{3}\). We note that the blow-up solutions to (fBurgers) constructed in this article sharply complement known regularity criteria for (fBurgers). More precisely, regularity results for linear advection–fractional dissipation equations [12, 35, 36] (see also [19] for time-integrated criteria) imply the following: if u is a solution to (fBurgers) such that \(u \in L^{\infty }_{t}([0, \tau _{+}); {\mathcal {C}}^{1-\beta })\), then \(\partial _{x} u\) is Hölder continuous up to time \(\tau _{+}\), and therefore the solution extends past \([0, \tau _{+})\). On the other hand, for each \(k \ge 1\), Theorem 1.1 demonstrates the existence of a blow-up solution u to (fBurgers) with \(u \in L^{\infty }_{t} ([0, \tau _{+}); {\mathcal {C}}^{\frac{1}{2k+1}})\) for any \(\beta < \frac{2k}{2k+1}\) (or, more instructively, \(\frac{1}{2k+1} < 1-\beta \)).

-

Self-similar constructions in fluids. Our blow-up construction is based on the method of modulation theory in self-similar variables, using smooth self-similar solutions to the Burgers equation as blow-up profiles. This method was first applied in the context of the Burgers equation in a seminal work by Collot–Ghoul–Masmoudi [10]. There, the authors construct gradient blow-up for a two-dimensional Burgers equation with transverse viscosity, which is a simplified model for Prandtl’s boundary layer equation. In particular, similarly to the present article, [10] employs weighted \(L^{2}\)-bounds and makes use of all excited states as blow-up profiles via a topological argument. The above method was applied more recently to compressible fluid dynamics with great success in a series of works [3,4,5] by Buckmaster–Shkoller–Vicol. In [3, 4], the authors use a self-similar method to show shock formation for polytropic compressible Euler in two and three space dimensions, giving a precise asymptotic description of shock formation at the point of first singularity, even in the presence of vorticity. They moreover extended their treatment to the non-isentropic case in [5], showing for the first time generation of vorticity at the shock. We also mention the work of Buckmaster–Iyer [2], in which the authors show formation of unstable shocks for two-dimensional polytropic compressible Euler by using (first) excited states as blow-up profiles. To control the unstable directions, they use a different argument (Newton iteration) from the one used in this article (a topological argument). Concerning self-similar solutions in fluids, we finally mention the groundbreaking recent work of Elgindi on the blow-up of the 3D incompressible Euler equations in the \({\mathcal {C}}^{1,\alpha }\) regularity class [16].

-

Geometric blow-up constructions. Finally, we also mention the geometric blow-up constructions pioneered by Christodoulou in [8], where shock formation for the compressible irrotational Euler equations is shown. The work of Christodoulou relies on powerful energy estimates, which allow one to construct not only the point of first singularity, but also the maximal development of the solution. These ideas later enabled Christodoulou to address the restricted shock development problem [9]. Moreover, Luk–Speck used geometric ideas to show stability of planar shocks under perturbations with nonzero vorticity [25]. In the context of the present work, it would be very interesting to extend this type of reasoning to include weakly dispersive and dissipative effects.

1.3 Strategy of the Proof

In this section, we outline the strategy of the proof. For the purposes of this section, let us restrict to the case of (fKdV). Our argument is based on the underlying analysis of stable (and unstable) blow-up for the Burgers equation. It is well known (see, for instance, [15]) that, for any given \(k \in \mathbb {N}\), \(k \ge 1\), the Burgers equation admits self-similar solutions exhibiting blow-up in \({\mathcal {C}}^{0, \frac{1}{2k+1}+}\), which are each associated to a self-similar blow-up profile and self-similar coordinates.

We start from equation (fKdV), which is written in the variables (t, x, u), and we rewrite it in the appropriate self-similar variables arising from Burgers, which we call (s, y, U). For the precise definition of these variables, see Sect. 2.2. We expect the unstable behavior to be encoded by the derivatives of U up to and including order 2k at \(y = 0\). In view of this observation, we keep track of the values of \(\partial _y^j U(s,0)\), \(j = 0, \ldots , 2k\), throughout the evolution.

Three of the unstable modes can be controlled naturally by modulation parameters adapted to the symmetries of the equation: time translation, space translation and Galilean transformation. The modulation conditions will therefore be imposed on U(s, 0), \(\partial _y U(s,0)\) and \(\partial _y^{2k}U(s,0)\), and the modulation parameters are going to be called \((\tau , \xi , \kappa )\). In the case \(k=1\), there are only three unstable directions, which allows us to show stable blow-up.

In the case \(k > 1\), the remaining \(2k - 2\) unstable directions will have to be controlled by selecting the initial data appropriately. For the precise definition of the modulation parameters and to see how they arise from the symmetries of the equation, see Sect. 2.2.

With this setup, in the self-similar variables (defined precisely below in (5)), (fKdV) then becomes:

Here, we have defined \(b = \frac{2k + 1}{2k}\).

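For ease of exposition, we now set all the modulation parameters to zero, and obtain the following equation:

$$\begin{aligned} \partial _s U - (b-1) U + (U+by)\partial _y U = e^{-s(1- b\alpha )}\partial _y |D_y|^{\alpha -1} U. \qquad (2) \end{aligned}$$
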
The key observation is that, as long as \(1 - b \alpha > 0\), we are able to treat the term on the RHS in a perturbative way due to the exponentially decaying prefactor. Since \(b \rightarrow 1\) as \(k \rightarrow \infty \), we are able to treat values of \(\alpha \) arbitrarily close to 1 by choosing k appropriately.
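The exponentially decaying prefactor comes from a scaling computation, sketched below. The sketch assumes the self-similar normalization \(s = -\log (-t)\), \(y = x(-t)^{-b}\), \(u(t,x) = (-t)^{b-1}U(s,y)\), with the modulation parameters set to zero. Under this normalization, frequencies rescale as \(\xi = (-t)^{-b}\eta \), so \(|D_x|^{\alpha -1} = (-t)^{-b(\alpha -1)}|D_y|^{\alpha -1}\) and \(\partial _x = (-t)^{-b}\partial _y\).

```latex
\begin{aligned}
\partial_t u + u\,\partial_x u
  &= (-t)^{b-2}\bigl[\partial_s U - (b-1)U + (U + by)\,\partial_y U\bigr],\\
|D_x|^{\alpha-1}\partial_x u
  &= (-t)^{b-1}\,(-t)^{-b(\alpha-1)}\,(-t)^{-b}\,|D_y|^{\alpha-1}\partial_y U
   = (-t)^{b-2}\,(-t)^{1-b\alpha}\,|D_y|^{\alpha-1}\partial_y U,\\
\text{hence}\quad
\partial_s U - (b-1)U + (U + by)\,\partial_y U
  &= (-t)^{1-b\alpha}\,\partial_y|D_y|^{\alpha-1}U
   = e^{-s(1-b\alpha)}\,\partial_y|D_y|^{\alpha-1}U .
\end{aligned}
```

Since \(-t = e^{-s}\), the prefactor decays exponentially in s exactly when \(1 - b\alpha > 0\). This is the same condition \(\alpha < \frac{2k}{2k+1}\) that appears in Theorem 1.1.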

We will set up a bootstrap argument and our goal will be to show that Eq. (2) admits a global (in self-similar time s) solution. The starting point is that \(\partial _y U\) can be treated almost independently by a Lagrangian analysis, which yields a uniform bound for \(\partial _y U\) in \(L^\infty \). Through the intermediate step of showing a uniform \(L^2\) bound for \(\partial _y^2 U\), we finally propagate appropriate weighted (in y) bounds for top-order derivatives. The weights are adapted to the Lagrangian flow of the equation, and their purpose is to show that the solution displays the correct asymptotic behavior at the time of blow-up. This is carried out in a weighted \(L^2\) framework, which has a twofold advantage. First, we show blow-up without the need to consider the difference with the exact self-similar blow-up profile, which is an amusing aspect by itself. Second, this part of the argument is entirely \(L^2\) based, which avoids derivative loss at the top order.
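The Lagrangian starting point can be illustrated on the model ODE \(\partial _s {\tilde{U}}' = -{\tilde{U}}'({\tilde{U}}' + 1)\), obtained by neglecting the nonlocal term. This ODE has a closed-form solution. The sketch below (function names are ours) checks numerically that trajectories starting in \((-1, 1)\) stay there, never grow in absolute value, and decay to 0. It illustrates only this model ODE and is not the argument of Lemma 5.2, which must also handle the nonlocal term.

```python
import math


def riccati_exact(v0: float, s: float) -> float:
    """Exact solution of dv/ds = -v (v + 1), v(0) = v0, for v0 > -1 and s >= 0.

    Substituting w = 1/v turns the ODE into dw/ds = w + 1, which gives
    v(s) = v0 / ((1 + v0) e^s - v0).
    """
    if v0 <= -1.0:
        raise ValueError("this sketch only treats v0 > -1")
    return v0 / ((1.0 + v0) * math.exp(s) - v0)


def riccati_rk4(v0: float, s_end: float, steps: int = 2000) -> float:
    """Classical fourth-order Runge-Kutta integration of the same ODE, as a cross-check."""
    f = lambda v: -v * (v + 1.0)
    h = s_end / steps
    v = v0
    for _ in range(steps):
        k1 = f(v)
        k2 = f(v + 0.5 * h * k1)
        k3 = f(v + 0.5 * h * k2)
        k4 = f(v + h * k3)
        v += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6.0
    return v


if __name__ == "__main__":
    # Trajectories starting in (-1, 1) never leave it and decay towards 0.
    for v0 in (-0.99, -0.5, 0.0, 0.5, 0.99):
        print(v0, [round(riccati_exact(v0, s), 6) for s in (0.0, 1.0, 5.0, 20.0)])
```

For \(v_0 \in (-1,1)\) and \(s \ge 0\), the denominator \((1+v_0)e^{s} - v_0\) is at least 1. This is why \(|v(s)| \le |v_0|\).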

The final part of the argument is then devoted to addressing the “unstable” part, i.e. the ODE analysis for the modulation parameters and for the unstable derivatives of U at \(y = 0\). We introduce a “trapping condition” for the unstable coefficients (i.e., a decay condition on derivatives of U at \(y = 0\)) and show, by way of a shooting argument, that initial data can be selected such that the trapping condition holds for all times.

We are now going to describe the strategy in more detail, again focusing on the case of fKdV.

1. Control of \(\Vert \partial _y U \Vert _{L^\infty }\). We differentiate Eq. (2) with respect to y and obtain:

$$\begin{aligned} \partial _s U' +U' +(U')^2 +(U+by)\partial _y U' =e^{-s(1- b\alpha )}\partial _y |D_y|^{\alpha -1} U'. \qquad (3) \end{aligned}$$

Let us for a moment neglect the nonlocal RHS. We rewrite \(U'\) in Lagrangian coordinates, i.e., along the characteristics \(\frac{\textrm{d}y}{\textrm{d}s} = U + by\) (we let \({\tilde{U}}'\) be \(U'\) written in Lagrangian coordinates), and we obtain the following equation for \({\tilde{U}}'\):

$$\begin{aligned} \partial _s {\tilde{U}}' = -{\tilde{U}}' ({\tilde{U}}' +1). \end{aligned}$$

The right-hand side vanishes at \({\tilde{U}}' = -1\) and \({\tilde{U}}' = 0\), is positive for \(-1< {\tilde{U}}' < 0\), and is negative for \({\tilde{U}}' > 0\). Hence the inequality \(-1< {\tilde{U}}' < 1\) is preserved by the above Lagrangian ODE, and moreover this bound carries over to the original Eq. (3). This control is going to be the starting point of our analysis (see Lemma 5.2).

Building upon this inequality, we then show that, depending on the region considered, \(U'(s,y)\) either satisfies a coarse polynomial bound in terms of |y|, or it decays exponentially in self-similar time (see Lemma 5.2, part 4). We will use this, later in the course of the argument, to show dissipativity of the equation in a region where y is large.

2. Control of \(\Vert \partial ^{2k+2}_y U \Vert _{L^2(\mathbb {R})}\) and \(\Vert \partial ^{2k+3}_y U \Vert _{L^2(\mathbb {R})}\). These terms are “top order” in terms of derivatives. In this part, we first accomplish the intermediate task of controlling \(\Vert \partial ^2_y U \Vert _{L^2(\mathbb {R})}\). To this end, we show, by a Lagrangian argument, that \(U''\) satisfies a uniform \(L^\infty \) bound in the “close” region \(|y|\le \frac{1}{4}\). We emphasize that, in this case, it is extremely important that the bound, as well as its region of validity, be independent of the bootstrap parameters. The reason is that we then perform a weighted \(L^2\) estimate in the region \(R:= \{ y \in \mathbb {R}: \frac{1}{4}\le |y| \le y_2\}\), and we wish to control the expression

$$\begin{aligned} \left\| {\exp \left( - \frac{\lambda }{2} y\right) U''}\right\| _{L^2(R)}, \end{aligned}$$

where \(\lambda \) is a positive real parameter, and \(y_2\) is a positive number which we regard as large. We thus require the parameter \(\lambda \) to depend on the lower bound for |y| when \(y \in R\), and it is therefore crucial that this lower bound be independent of the bootstrap parameters. Finally, we need to show a bound in the “far away” region, where \(|y| \ge y_2\). We use the smallness of \(U'\) to show that the equation for \(U''\) has a dissipative character for \(|y| \ge y_2\). Combining the three regions, we obtain a uniform \(L^2\) bound for \(U''\). This is the content of Lemma 5.3.

Turning now to the proof of the bounds for \(\Vert \partial ^{2k+2}_y U \Vert _{L^2(\mathbb {R})}\) and \(\Vert \partial ^{2k+3}_y U \Vert _{L^2(\mathbb {R})}\), we recall the familiar observation that, taking \(2k+2\) derivatives of Eq. (2), the linear term on the LHS becomes dissipative everywhere on \(\mathbb {R}\). Combining this fact with interpolation and the control of \(\partial ^2_y U\) in \(L^2\), which was obtained as an intermediate step, allows us to deduce a uniform bound for \(\Vert \partial ^{2k+2}_y U \Vert _{L^2(\mathbb {R})}\). Using this control, it is then straightforward to derive a bound for \(\Vert \partial ^{2k+3}_y U \Vert _{L^2(\mathbb {R})}\) (we require control up to this order due to a technical point: we will need to bound \(\partial _y^{2k+2}U\) at \(y = 0\) by Sobolev embedding, in order to control the evolution of \(\partial _y^{2k+1}U\) at \(y = 0\) in the “unstable” part of the argument). The high order bounds are obtained in Lemma 5.4.

3. Control of weighted \(L^2\) norms. Recall that the exact self-similar profile \({\bar{U}}\) for the Burgers equation satisfies, for large |y|,

$$\begin{aligned} |y|^{\frac{1}{2k+1} - j}\lesssim |\partial _y^j {\bar{U}}| \lesssim |y|^{\frac{1}{2k+1} - j}, \qquad (4) \end{aligned}$$

where \(j \ge 0\), \(j \in \mathbb {N}\).

In this part of the argument, we wish to propagate an appropriate \(L^2\) version of the above polynomial decay bound, using a weighted \(L^2\) space, for the top-order number of derivatives (i.e., when \(j = 2k+3\)). This information is needed to show that the blow-up solution is in the correct Hölder regularity class up to the blow-up time.

The weights are constructed such that, in a region of bounded x (i.e., a region that corresponds to the image under the Lagrangian flow of a bounded y-interval centered at 0), one obtains the corresponding decay in y. Outside this region, the weight is “tapered”: it is independent of y, and grows exponentially in self-similar time at the correct rate.

More precisely, given \(n \in \mathbb {N}\) and \(L > 0\), we define the semi-norm

$$\begin{aligned} \Vert {V}\Vert _{\dot{{\mathcal {H}}}^{n}_{< L}} = & \sup _{j \in {\mathbb {Z}}, \, 2^{j}< L} \left( \int _{2^{j-1}< \vert {y}\vert < 2^{j}} (\vert {y}\vert ^{n-\frac{1}{2k+1}} \partial _{y}^{n} V)^{2} \frac{\textrm{d}y}{\vert {y}\vert }\right) ^{\frac{1}{2}} \\ & + L^{n-\frac{1}{2k+1}-\frac{1}{2}} \left( \int _{\vert {y}\vert >\frac{L}{2}} (\partial _{y}^{n} V)^{2} \textrm{d}y\right) ^{\frac{1}{2}}. \end{aligned}$$

Note that it consists of two terms: each expression in the first summand scales according to (4), and the second summand is obtained by choosing the weight to be the matching constant outside the region \(|y| \le \frac{L}{2}\). In practice, since the Lagrangian flow away from \(y = 0\) is well approximated by \(y = C e^{bs}\), we are going to set \(L = e^{bs}\).

Our goal will then be to show that \(\Vert {U}\Vert _{\dot{{\mathcal {H}}}^{2k+3}_{< e^{bs}}}\) is uniformly bounded in s. As a first step, we show a uniform bound on \(\Vert {U}\Vert _{\dot{{\mathcal {H}}}^{1}_{< e^{bs}}}\). To obtain it, we multiply the equation for \(U'\) by a weight approximately adapted to the Lagrangian flow in a region of large y. The growth rate of the weight is also chosen appropriately.

Using this information, the bound for \(\Vert {U}\Vert _{\dot{{\mathcal {H}}}^{2k+3}_{< e^{bs}}}\) is obtained in a similar fashion. In this case, however, one needs to be careful about a potential loss of derivative, as the nonlocal term does not commute with the weight. To deal with this issue, we show a commutator estimate (see Lemma 4.3). The weighted bounds are proved in Lemma 5.5.

Remark 1.4

Note that, if we set \(L = \infty \), the above semi-norm is scale invariant.
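As a rough numerical illustration of the first summand of this semi-norm and of Remark 1.4, the Python sketch below (our own illustration, not part of the argument) evaluates the dyadic shell integrals by the midpoint rule with the measure \(\textrm{d}y/\vert y\vert \), truncating the supremum over j to a finite range. The test function \(V(y) = e^{-y^2}\), the choice \(n = k = 1\), and all function names are assumptions made for the example. Under the rescaling \(V_\lambda (y) = \lambda ^{\frac{1}{2k+1}} V(y/\lambda )\) suggested by the self-similar scaling, a dyadic factor \(\lambda = 2\) simply permutes the shells, so the two values agree up to rounding; for general \(\lambda > 0\) one only expects comparability up to constants.

```python
import math

def shell_term(dnV, j, n, k, m=400):
    """Midpoint-rule value of
    ( int_{2^(j-1) < |y| < 2^j} (|y|^(n - 1/(2k+1)) * dnV(y))^2 dy/|y| )^(1/2),
    with m nodes on each of the two half-shells (y > 0 and y < 0)."""
    a = 2.0 ** (j - 1)
    h = a / m                      # each half-shell has length 2^j - 2^(j-1) = 2^(j-1)
    p = n - 1.0 / (2 * k + 1)      # power of the weight |y|^(n - 1/(2k+1))
    total = 0.0
    for sign in (1.0, -1.0):
        for i in range(m):
            y = sign * (a + (i + 0.5) * h)
            total += (abs(y) ** p * dnV(y)) ** 2 / abs(y) * h
    return math.sqrt(total)

def dyadic_seminorm(dnV, n, k, jmin=-30, jmax=30):
    """First summand of the semi-norm with L = infinity; the sup over all
    integers j is truncated to jmin <= j <= jmax (enough for decaying data)."""
    return max(shell_term(dnV, j, n, k) for j in range(jmin, jmax + 1))

# Hypothetical test data: V(y) = exp(-y^2), n = k = 1, and the rescaled
# V_lam(y) = lam^(1/(2k+1)) V(y / lam), whose n-th derivative is
# lam^(1/(2k+1) - n) V^(n)(y / lam).
k, n, lam = 1, 1, 2.0
dV = lambda y: -2.0 * y * math.exp(-y * y)
dV_lam = lambda y: lam ** (1.0 / (2 * k + 1) - n) * dV(y / lam)

N1 = dyadic_seminorm(dV, n, k)
N2 = dyadic_seminorm(dV_lam, n, k)
print(N1, N2)  # agree up to rounding: lam = 2 maps shell j - 1 onto shell j
```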

4. Topological argument. Finally, in Sect. 6, we employ a topological procedure, relying on the instability of the ODE system satisfied by the Taylor coefficients of U at \(y = 0\), to close the argument. In the unstable case, this procedure moreover selects the appropriate initial data. This type of construction is well known in the dispersive community: see, for instance, the paper by Côte, Martel, and Merle [13].

Recall the trapping condition, i.e., a decay condition for the “unstable” Taylor coefficients of U at \(y = 0\). We want to show that, upon appropriately choosing the initial data, the solution can be arranged to remain trapped globally in time.

First, in Lemma 6.1 we show that, under the bootstrap assumptions and assuming the trapping condition, the evolution of the modulation parameters is controlled.

Finally, in Lemma 6.3, we show that the ODE system satisfied by the first \(2k+1\) Taylor coefficients of U at \(y=0\) displays an unstable character, and we use this fact, combined with a Brouwer-type argument, to show that we can select initial data such that the corresponding solution is trapped for all time. This concludes the argument.

Remark 1.5

Note that parts (1) and (2) of the above outline rely on showing \(L^\infty \) estimates, which are proved here by means of Lagrangian analysis. This Lagrangian approach seems to be the most efficient way (in terms of degree of technicality) to analyze directly the unknown U (which is what we do in this paper), rather than the difference between U and the exact self-similar profile. However, we believe that, if instead one were to analyze the difference between U and the corresponding self-similar profile, one would be able to carry out the argument without the need for Lagrangian analysis. This approach would make the argument completely \(L^2\) based.

1.4 Organization of the Paper

In Sect. 2, we introduce the relevant equations, the self-similar coordinates, the modulation parameters, and the unstable ODE system for Taylor coefficients at \(y=0\). In Sect. 3, we give a precise statement of the main theorem (Theorem 3.1), and reduce its proof to establishing two key lemmas, Lemma 3.6 (main bootstrap lemma) and Lemma 3.9 (shooting lemma for unstable coefficients when \(k > 1\)). After collecting some useful lemmas for the Fourier multipliers arising in our problem in Sect. 4, we devote the following two sections to the proof of the two key lemmas. In Sect. 5, we close the bootstrap assumptions on the solution U in appropriate self-similar variables. In Sect. 6, we estimate the ODEs for the modulation parameters and stable coefficients, thereby completing the proof of Lemma 3.6. Moreover, in case \(k > 1\), we analyze the ODEs for the unstable coefficients and establish Lemma 3.9.

2 Preliminaries

2.1 Notation and Conventions

As usual, we use \(C > 0\) to denote a positive constant that may change from line to line, and we indicate its dependencies by subscripts. Moreover, we use the standard notation \(A \lesssim B\) for \(\vert {A}\vert \le C B\), and \(A \simeq B\) for \(A \lesssim B\) and \(B \lesssim A\); dependencies of the implicit constant C are again indicated by subscripts.

Given a symbol \(\Gamma (\xi )\), we denote by \(\Gamma (D_{x})\) its quantization in x, i.e., \(\Gamma (D_{x}) V = {\mathcal {F}}_{x}^{-1}[\Gamma (\xi ){\mathcal {F}}_{x}[V](\xi )]\), where \({\mathcal {F}}_{x}\) denotes the Fourier transform in the variable x. For each \(k \in {\mathbb {Z}}\), we define the Littlewood–Paley projection \(P_{\le k}\) to be the Fourier multiplier operator with symbol \(P_{\le k}(\xi )\) defined by \(P_{\le k}(\xi ) = P_{\le 0}(2^{-k} \xi )\), where \(P_{\le 0}\) is a nonnegative smooth function supported in \([-2, 2]\) and equals 1 on \([-1, 1]\). We also introduce the symbols \(P_{k}(\xi ) = P_{\le k}(\xi ) - P_{\le k-1}(\xi )\) and \(P_{> k}(\xi ) = 1 - P_{\le k}(\xi )\), as well as the corresponding Fourier multipliers (which are also called Littlewood–Paley projections).
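The Littlewood–Paley symbols can be sketched as follows. The specific bump \(P_{\le 0}(\xi ) = S(2 - \vert \xi \vert )\), built from the standard \(C^{\infty }\) step S based on \(e^{-1/t}\), is only one admissible choice made for illustration, and the helper names are hypothetical. For this (radially nonincreasing) choice, \(P_{k}\) is nonnegative and vanishes unless \(2^{k-1} < \vert \xi \vert < 2^{k+1}\), and the \(P_{k}\) telescope to a partition of unity away from \(\xi = 0\).

```python
import math

def _psi(t):
    # C-infinity function: exp(-1/t) for t > 0, and 0 for t <= 0.
    return math.exp(-1.0 / t) if t > 0 else 0.0

def _step(t):
    # C-infinity and nondecreasing, equal to 0 for t <= 0 and to 1 for t >= 1.
    return _psi(t) / (_psi(t) + _psi(1.0 - t))

def P_le0(xi):
    # One admissible choice: nonnegative, smooth, = 1 on [-1, 1], supported in [-2, 2].
    return _step(2.0 - abs(xi))

def P_le(k, xi):
    # Symbol of P_{<= k}: P_{<= 0}(2^{-k} xi).
    return P_le0(2.0 ** (-k) * xi)

def P(k, xi):
    # Symbol of P_k = P_{<= k} - P_{<= k-1}; vanishes unless 2^(k-1) < |xi| < 2^(k+1).
    return P_le(k, xi) - P_le(k - 1, xi)

def P_gt(k, xi):
    # Symbol of P_{> k} = 1 - P_{<= k}.
    return 1.0 - P_le(k, xi)

print([round(P(k, 3.7), 6) for k in range(-1, 4)])
```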

2.2 Derivation of the Equations in Self-Similar Variables

Given parameters \(\tau , \xi , \kappa \in {\mathbb {R}}\) and \(\lambda > 0\) (called modulation parameters), consider the change of variables \((t, x, u) \mapsto (s, y, U)\),

Note that varying the modulation parameters \(\tau \), \(\xi \), \(\kappa \) and \(\lambda \) corresponds to applying time translation, space translation, Galilean boost (\(u \mapsto u(t, x+\kappa t) - \kappa \)) and spatial scaling to the solution, which are exact symmetries of the (inviscid) Burgers equation. Hence, (s, y, U) are nothing but the rescaled variables for the Burgers equation centered at \((t, x, u) = (\tau , \xi , \kappa )\) at spatial scale \(\lambda \).
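To illustrate these symmetries, the following Python sketch checks numerically that time translation, the Galilean boost \(u \mapsto u(t, x + \kappa t) - \kappa \), and the scaling \(u \mapsto \frac{a}{c} u(a t, c x)\) map solutions of the inviscid Burgers equation \(u_t + u u_x = 0\) to solutions. The smooth test solution comes from the characteristic relation \(u = u_0(x - t u)\) with the hypothetical datum \(u_0 = -\tanh \), valid for \(t < 1\); residuals are computed by central differences, so they vanish only up to discretization error. Flipping the sign of the boost (\(u(t, x - \kappa t) - \kappa \)) produces the residual \(-2\kappa u_x\), which does not vanish.

```python
import math

def u0(z):
    return -math.tanh(z)  # hypothetical smooth datum: |u0| < 1 and u0' >= -1

def burgers(t, x):
    """Smooth solution of u_t + u u_x = 0 for 0 <= t < 1, from u = u0(x - t*u).
    g(u) = u - u0(x - t*u) is increasing since 1 + t*u0' >= 1 - t > 0."""
    lo, hi = -1.0, 1.0
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if mid - u0(x - t * mid) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def residual(v, t, x, h=1e-4):
    """Central-difference approximation of v_t + v v_x at (t, x)."""
    vt = (v(t + h, x) - v(t - h, x)) / (2 * h)
    vx = (v(t, x + h) - v(t, x - h)) / (2 * h)
    return vt + v(t, x) * vx

kappa, t0, a, c = 0.7, 0.1, 0.5, 2.0
boost = lambda t, x: burgers(t, x + kappa * t) - kappa   # Galilean boost
wrong = lambda t, x: burgers(t, x - kappa * t) - kappa   # sign error: not a symmetry
shift = lambda t, x: burgers(t + t0, x)                  # time translation
scale = lambda t, x: (a / c) * burgers(a * t, c * x)     # scaling

for name, v in [("u", burgers), ("boost", boost), ("shift", shift),
                ("scale", scale), ("wrong", wrong)]:
    print(name, residual(v, 0.3, 0.2))
```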

We let the modulation parameters depend dynamically on t, i.e., \(\tau = \tau (t)\), \(\xi = \xi (t)\), \(\kappa = \kappa (t)\) and \(\lambda = \lambda (t)\), and consider the same change of variables (5). Note that

so that

Thus, (1) becomes

In what follows, we shall assume the following self-similarity ansatz for \(\lambda \): given \(k \in \mathbb {N}\), set \(b := \frac{2k + 1}{2k}\) and

Then \(\frac{\lambda _{s}}{\lambda } = - b\), and we arrive at

If \(\Gamma \) and \(\Upsilon \) were zero, and \(\tau \), \(\xi \), \(\kappa \) were constant, then (7) would be precisely the self-similar Burgers equation with scales (6). As is well known, the values \(b = \frac{2k+1}{2k}\) with \(k = 1, 2, \ldots \) are distinguished by the property that they admit smooth steady profiles of the self-similar Burgers equation [15, Sect. 11.2]; see also Sect. 2.3 below.

Our intention is to view the linear terms \(e^{-s}\Gamma (e^{bs} D_{y}) e^{bs} \partial _{y} U\) and \(e^{-s}\Upsilon (e^{bs} D_{y}) U\) as perturbations. To motivate the way we will decompose these terms, consider the model cases \(\Gamma (\xi ) = c_{\Gamma } \vert {\xi }\vert ^{\alpha -1}\) and \(\Upsilon (\xi ) = c_{\Upsilon } \vert {\xi }\vert ^{\beta }\) (\(c_{\Gamma }, c_{\Upsilon } \in {\mathbb {R}}\)). Then \(\Gamma (e^{bs} D_{y}) e^{b s} \partial _{y} = c_{\Gamma } e^{b \alpha s} \vert {D_{y}}\vert ^{\alpha -1} \partial _{y}\) and \(\Upsilon (e^{bs} D_{y}) = c_{\Upsilon } e^{b \beta s} \vert {D_{y}}\vert ^{\beta }\), so that

In the regime in which we perform our construction, \(\vert {D_{y}}\vert ^{\alpha -1} \partial _{y} U\) and \(\vert {D_{y}}\vert ^{\beta }U\) will morally remain bounded in time (Footnote 5). Therefore, we may regard these terms as perturbative when \(b \alpha < 1\) and \(b \beta < 1\), in which case the factors \(e^{-s} e^{b \alpha s} = e^{-(1- b \alpha ) s} \) and \(e^{-s} e^{b \beta s} = e^{-(1- b \beta ) s} \) decay exponentially.
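The perturbative conditions \(b\alpha < 1\) and \(b\beta < 1\) are equivalent to \(\alpha , \beta < \frac{2k}{2k+1}\), the hypothesis of Theorem 3.1. The short Python sketch below (an illustration; the function names are hypothetical) computes with exact rational arithmetic the smallest admissible k for given \(\alpha , \beta \), together with the decay rate \(\mu = \min \{1-b\alpha , 1-b\beta , 1\}\) recalled in Sect. 3.3. For instance, \(\alpha \in [2/3, 1)\) is never admissible for \(k = 1\), since then \(b = 3/2\) and \(b\alpha \ge 1\).

```python
from fractions import Fraction

def smallest_admissible_k(alpha, beta):
    """Smallest positive integer k with max(alpha, beta) < 2k/(2k+1), i.e.
    b*alpha < 1 and b*beta < 1 for b = (2k+1)/(2k). Pass Fractions for exact
    comparisons at the thresholds (e.g. Fraction(2, 3))."""
    a = max(Fraction(alpha), Fraction(beta))
    if a >= 1:
        raise ValueError("no admissible k: need alpha, beta < 1")
    k = 1
    while not a < Fraction(2 * k, 2 * k + 1):
        k += 1
    return k

def decay_rate(alpha, beta, k):
    """mu = min{1 - b*alpha, 1 - b*beta, 1} with b = (2k+1)/(2k)."""
    b = Fraction(2 * k + 1, 2 * k)
    return min(1 - b * Fraction(alpha), 1 - b * Fraction(beta), Fraction(1))

k = smallest_admissible_k(Fraction(2, 3), 0)
print(k, decay_rate(Fraction(2, 3), 0, k))   # 2 1/6
```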

In view of the above discussion, in what follows, we are going to denote

Note that, under our assumptions, \(\mu > 0\). To simplify our notation, we will now rewrite the operator on the RHS as follows:

where

Note that \({\mathcal {H}}\left( U + e^{(b-1)s} \kappa \right) = {\mathcal {H}}(U)\), since the frequency cutoff \(\chi _{\ge 1}(e^{bs} D_{y})\) annihilates constants. Putting everything together, we finally have

2.3 Definition of the Profile

We now solve the steady profile equation for the Burgers problem (i.e., \(\Gamma \), \(\Upsilon \) are zero and \(\tau \), \(\xi \) and \(\kappa \) are fixed):

We define \(\mathring{U}\) to be a solution to the above equation (an exact self-similar profile) such that \(\mathring{U}(0) = 0\), \(\partial _{y} \mathring{U}(0) = -1\), \(\partial _{y}^{2k+1} \mathring{U}(0) = (2k)!\) and \(\partial _y^j \mathring{U} (0) = 0\) for \(j = 2, \ldots , 2k\). We can ensure the last condition by simply noticing that the self-similar profile equation is equivalent to

where \(h_1 > 0\) is a free parameter. From this implicit definition, we see that the first three non-vanishing Taylor coefficients at \(y=0\) are \(\partial _{y} \mathring{U}(0)\), \(\partial _{y}^{2k+1} \mathring{U}(0)\) and \(\partial _{y}^{4k+1} \mathring{U}(0)\). We fix \(h_1\) so that \(\mathring{U}\) satisfies \(\partial _{y}^{2k+1} \mathring{U}(0) = (2k)!\), and a calculation shows that \(h_1 = 1/(2k+1)\); in what follows, we will suppress the dependence of constants on \(h_{1}\).

By construction, \(\mathring{U}\) has about \(y = 0\) the Taylor expansion

and, about \(y = \pm \infty \), the expansion
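The implicit description of \(\mathring{U}\) can be checked numerically. Since the relevant equations are not displayed here, the following sketch rests on our own reconstruction: we assume that the steady profile equation reads \((1-b)U + (by + U)U' = 0\), which is what the scaling \(u - \kappa = e^{(1-b)s} U\), \(x - \xi = e^{-bs} y\) gives for \(u_t + uu_x = 0\), and that the implicit relation is \(y = -U - h_1 U^{2k+1}\) with \(h_1 = \frac{1}{2k+1}\). The sketch solves this relation by bisection, checks the ODE residual using \(U' = -(1 + U^{2k})^{-1}\), and confirms the expansion \(\mathring{U}(y) = -y + \frac{y^{2k+1}}{2k+1} + O(y^{4k+1})\) near 0 and the growth \(\vert \mathring{U}(y)\vert \sim ((2k+1)\vert y\vert )^{\frac{1}{2k+1}}\) at infinity.

```python
def profile(y, k):
    """Solve y = -U - h*U**(2k+1), h = 1/(2k+1), for U by bisection."""
    n, h = 2 * k + 1, 1.0 / (2 * k + 1)
    # f(U) = U + h*U**n + y is strictly increasing (n odd, h > 0) and |U| <= |y|,
    # so f changes sign on [-|y| - 1, |y| + 1].
    lo, hi = -abs(y) - 1.0, abs(y) + 1.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if mid + h * mid ** n + y < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def profile_residual(y, k):
    """Residual of (1 - b) U + (b y + U) U' = 0, using U' = -1/(1 + U**(2k)),
    obtained by differentiating y = -U - h*U**(2k+1) (note (2k+1) h = 1)."""
    b = (2 * k + 1) / (2 * k)
    U = profile(y, k)
    dU = -1.0 / (1.0 + U ** (2 * k))
    return (1 - b) * U + (b * y + U) * dU

print(profile_residual(0.7, 1), (profile(1e-2, 1) + 1e-2) / 1e-6)  # ~0 and ~1/3
```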

We now define our choice of the profile. Consider a function \(\bar{\chi }: \mathbb {R}\rightarrow \mathbb {R}\) which is nonnegative, equal to 1 on the interval \([-1,1]\), equal to 0 outside of the interval \([-8,8]\), and such that \({\bar{\chi }}' \ge - \frac{1}{4}\). We then define the cut-off function \(\chi \) to be transported by the linearized flow generated by \(\mathring{U}\):

Some basic properties of the cut-off function \(\chi \) are as follows:

Lemma 2.1

(Support property of \(\chi (s,y)\)) We have \({{\,\textrm{supp}\,}}\chi \subseteq [-C e^{b s}, C e^{b s}]\).

Proof of Lemma 2.1

Define the Lagrangian trajectories \(Y_{\pm }(s)\) as

so that \(\chi (y) = 0\) for all y with \(|y| \ge Y(0)\). The conclusion of the lemma will follow if we can show that \(|Y_{\pm }| \le C e^{bs}\). By the form of \(\mathring{U}\), we have that \(|\mathring{U}(Y_{\pm }(s))| \le 1 + |Y_{\pm }(s)|\), and the lemma follows by integrating (12) in s. \(\square \)
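The growth bound of Lemma 2.1 can be illustrated numerically. Since (12) is not displayed here, the sketch assumes that the Lagrangian trajectories solve \(\frac{\textrm{d}Y}{\textrm{d}s} = bY + \mathring{U}(Y)\), i.e., that \(\chi \) is transported by \(\partial _{s} + (by + \mathring{U})\partial _{y}\), and it reuses the implicit profile \(y = -U - \frac{1}{2k+1} U^{2k+1}\). Since \(\mathring{U}(y)\) has the sign of \(-y\) and \(\vert \mathring{U}(y)\vert < \vert y\vert \) for \(y \ne 0\), the velocity lies between \((b-1)Y\) and \(bY\) for \(Y > 0\). A fourth-order Runge–Kutta integration starting from the edge \(Y = 8\) of \({{\,\textrm{supp}\,}}\bar{\chi }\) stays within these exponential envelopes, and \(Y e^{-bs}\) decreases, consistent with \(\vert Y\vert \le C e^{bs}\).

```python
import math

def profile(y, k):
    """Solve y = -U - h*U**(2k+1), h = 1/(2k+1), for U by bisection."""
    n, h = 2 * k + 1, 1.0 / (2 * k + 1)
    lo, hi = -abs(y) - 1.0, abs(y) + 1.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if mid + h * mid ** n + y < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def trajectory(y0, k, s_max, ds=0.01):
    """RK4 for dY/ds = b*Y + U(Y) (assumed form of the characteristic ODE),
    starting at Y(0) = y0; returns the list of (s, Y(s))."""
    b = (2 * k + 1) / (2 * k)
    vel = lambda y: b * y + profile(y, k)
    y, s, path = y0, 0.0, [(0.0, y0)]
    for _ in range(int(round(s_max / ds))):
        v1 = vel(y)
        v2 = vel(y + 0.5 * ds * v1)
        v3 = vel(y + 0.5 * ds * v2)
        v4 = vel(y + ds * v3)
        y += ds * (v1 + 2 * v2 + 2 * v3 + v4) / 6
        s += ds
        path.append((s, y))
    return path

path = trajectory(8.0, 1, 4.0)
s_end, y_end = path[-1]
print(y_end * math.exp(-1.5 * s_end))   # bounded: Y(s) <= 8 e^{bs}
```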

By Lemma 2.1 and (11), we have

We finally define the profile

This is no longer a time-independent profile. Moreover, the modified profile satisfies the equation

2.4 Equation for Iterated Derivatives of U

We let \(U^{(j)} = \partial _y^{(j)} U\), with \(j \ge 1\). We derive the equation satisfied by \(U^{(j)}\). For \(j = 1\),

and for \(j \ge 2\),

Here, \(M^{(j)} = \partial _y^{(j)} (U U') - U U^{(j+1)} - ( j+1) U' U^{(j)}\) for \(j \ge 2\).

2.5 Perturbation Equation and Commutation

We now define the perturbation \(W = U - {\bar{U}}\), and we obtain the following equation for W:

Here, \(E_{\chi } = \mathring{U} (1 - \chi ) \partial _{y} \bar{U}\).

Remark 2.2

Note that the error term \(E_{\chi }\) arising from the cutoff is identically zero near \(y =0\).

Suppose now that \(j \ge 1\). We now commute the above Eq. (16) with \(\partial _y^j\), and obtain:

Here,

2.6 Derivation of the Modulation Equations and Unstable ODE System at \(y = 0\)

We now derive the equations satisfied by the derivatives of W at the origin. For each \(j \ge 0\), we let

For \(j \ge 0\), we have

where \(\delta _{0j}\) is the Kronecker delta symbol, which equals 1 when \(j = 0\) and vanishes otherwise. We also used the following properties of the profile: \({\bar{U}}(s,0) = \partial ^{j}_y {\bar{U}}(s,0) = 0\) for \(2\le j \le 2k\), and \(\partial _y {\bar{U}}(s,0) = -1\).

We first consider the cases \(j = 0, 1\) or 2k:

Observe that the coefficients in front of the s-derivatives of the modulation parameters in these three equations are always non-zero. Indeed, in (18), \(\xi _s\) is multiplied by \(e^{bs}\), \(\kappa _s\) is multiplied by \(e^{(b-1)s}\), and \(\tau _s\) is multiplied by \(e^s\) (similarly for (19) and (20)).

For this reason, we shall use these equations to determine the dynamic evolution equations for \(\kappa \), \(\tau \) and \(\xi \) by imposing the conditions

which leads to the following equations:

Remark 2.3

Note also that, in case \(k =1\), the last term in Eq. (23) vanishes.

Conversely, if \(\kappa _{s}\), \(\tau _{s}\) and \(\xi _{s}\) are fixed so that (22)–(23) are satisfied (Footnote 6), and \(w_{0}\), \(w_{1}\) and \(w_{2k}\) are initially zero, then (21) holds by (17) with \(j = 0\), 1 and 2k.

When \(k > 1\), the conditions in (21) do not fix all values of \(w_{j}\) for \(j=0, \ldots , 2k\). In such a case, we use the above equation to determine the evolution of \(w_{j}\). More precisely, for the remaining indices \(j = 2, \ldots , 2k-1\), the ODE for \(w_{j}\) is

Here, we used the properties of \(\overline{U}^{(j)}(0)\) and \(w_{0} = w_{1} = w_{2k} = 0\).

We now rewrite the above equations as a single system of ODEs. Introduce the vector \(\vec {w}(s) = (w_2(s), \ldots , w_{2k-1}(s))\). Then \(\vec {w}(s)\) satisfies the following system:

Here, D and M are \((2k-2)\times (2k-2)\) matrices given by

where I is the identity matrix and N is the nilpotent matrix such that \(N_{j (j+1)} = 1\) and \(N_{j j'} = 0\) otherwise.

Since \(b = \frac{2k+1}{2k}\), each eigenvalue \(\lambda _{j}\) of D is strictly positive, so the main linear part \((\partial _{s} - D) \vec {w}(s)\) defines an unstable system of ODEs. In addition, \({\mathcal {N}}(\vec {w}(s))\) is a vector with quadratic entries as functions of the entries of \(\vec {w}\), and \(\vec {f}\) is the vector \(((1+e^{s} \tau _{s}) F^{(2)}(s,0), \ldots , (1+e^{s} \tau _{s}) F^{(2k-1)}(s, 0))\).
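Since D and M are not reproduced here, the following is only a heuristic check under an assumption: we suppose that D is diagonal, with the linear rates obtained by differentiating the self-similar Burgers equation \(\partial _{s} U + (1-b) U + (by + U)\partial _{y} U = 0\) j times at \(y = 0\), where \(U(0) = 0\) and \(U'(0) = -1\). For \(j \ge 2\), the transport term contributes \(bj\, U^{(j)}\) and the nonlinearity contributes \((j+1) U' U^{(j)}\) plus quadratic terms, giving \(\partial _{s} w_{j} = (b - (b-1)j) w_{j} + (\text {quadratic terms})\), i.e., rates \(\frac{2k+1-j}{2k}\). The sketch (function name hypothetical) confirms with exact arithmetic that these rates are strictly positive for \(2 \le j \le 2k-1\) and vanish at \(j = 2k+1\).

```python
from fractions import Fraction

def linear_rates(k):
    """Assumed diagonal of D: rate b - (b - 1) j = (2k + 1 - j)/(2k) for the
    j-th derivative at y = 0, j = 2, ..., 2k + 1 (heuristic linearization)."""
    b = Fraction(2 * k + 1, 2 * k)
    return {j: b - (b - 1) * j for j in range(2, 2 * k + 2)}

for k in (1, 2, 3):
    r = linear_rates(k)
    print(k, [str(r[j]) for j in range(2, 2 * k)], "rate at j = 2k+1:", r[2 * k + 1])
```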

3 Precise Formulation of the Main Theorem and Reduction to the Main Bootstrap Lemma

3.1 Initial Data in the Original Variables and the Main Theorem

The purpose of this subsection is to give a precise formulation of the main theorem of this paper (Theorem 3.1). We begin by specifying the set of initial data.

To this end, we introduce the following co-dimension \(2k+1\) subspace of \(H^{2k+3}\):

We parametrize the initial data in \(H^{2k+3}\) that will lead to the desired gradient blow-up solutions with the help of the map \(\varvec{\Phi }: (0, \infty ) \times {\mathbb {R}}\times {\mathbb {R}}\times {\mathbb {R}}^{2k-2} \times H^{2k+3}_{(2k)} \rightarrow H^{2k+3}\), which is defined by the formula

where \(b = \frac{2k+1}{2k}\), \(\mathring{U}\) is the k-th smooth self-similar profile for the Burgers equation and \(\chi (s, \cdot )\) and \(\bar{\chi }(\cdot )\) are as in Subsection 2.3. When \(k = 1\), the term \( {\bar{\chi }}(y) \sum _{j=2}^{2k-1} \frac{w_{j, 0}}{j!} y^{j}\) is omitted.

Note that (26) maps the point \((\tau _{0}, \xi _{0}, \kappa _{0}, w_{2, 0}, \ldots , w_{2k-1, 0}, W_{0}) = (\tau _{0}, \xi _{0}, \kappa _{0}, 0, \ldots , 0)\) to the translated and rescaled self-similar Burgers profile whose gradient at \(x = \xi _{0}\) is negative and of size \(\tau _{0}^{-1}\), i.e.,

When \(k > 1\), \(w_{j,0}\) equals the j-th Taylor coefficient of U(y) at \(y =0\) in the self-similar variables for \(j=2, \ldots , 2k-1\).

Given \(\tau _{0}, \epsilon _{0} > 0\), we consider the following open subset of \(H^{2k+3}_{(2k)}\):

When \(k > 1\), for \(\vec {v}_{0} \in {\mathbb {R}}^{2k-2}\) and \(r > 0\), we also introduce the notation

We are now ready to formulate the main theorem in precise terms.

Theorem 3.1

(Precise formulation of the main result) Let k be a positive integer such that \(\alpha , \beta < \frac{2k}{2k+1}\) and set \(b = \frac{2k+1}{2k}\). Then there exist \(\gamma > 0\) and positive decreasing functions \(\tau _{*}(\cdot )\), \(\epsilon _{*}(\cdot )\) such that the following holds. Let \(\xi _{0} \in {\mathbb {R}}\), \(\kappa _{0} \in {\mathbb {R}}\), \(\tau _{0} < \tau _{*}(\vert {\kappa _{0}}\vert )\), \(\epsilon _{0} < \epsilon _{*}(\vert {\kappa _{0}}\vert )\) and \(W_{0} \in {\mathcal {O}}_{\tau _{0}, \epsilon _{0}}\). When \(k = 1\), the initial data \(u_{0}(x)\) given by (26) gives rise to a (well-posed)Footnote 7 solution to (1) with initial conditions \(u(0, x) = u_{0}(x)\) that blows up in finite time. When \(k \ge 2\), there exists \(\vec {w}_{0} \in B_{0}(\tau _{0}^{\gamma }) \subseteq {\mathbb {R}}^{2k-2}\) such that the initial data \(u_{0}(x)\) given by (26) gives rise to a (well-posed) solution to (1) with initial conditions \(u(0, x) = u_{0}(x)\) that blows up in finite time. In both cases, the following statements hold:

1. The blow-up time \(\tau _{+}\) obeys the bound \(\vert {\tau _{+} - \tau _{0}}\vert < C \tau _{0}^{1+\gamma }\).

2. There exist \(\xi _{+}\), \(\kappa _{+}\) such that

$$\begin{aligned} \vert {\kappa _{+} - \kappa _{0}}\vert \le C \tau _{0}^{b-1+\gamma }, \quad \vert {\xi _{+} - (\xi _{0} + \tau _{+} \kappa _{0})}\vert \le C \tau _{0}^{b+\gamma }, \end{aligned}$$

and such that

$$\begin{aligned} \sup _{0 \le t < \tau _{+}} \Vert {u(t, \cdot )}\Vert _{L^{\infty }} + [u(t, \cdot )]_{{\mathcal {C}}^{\frac{1}{2k+1}}} \le C, \end{aligned}$$

while for every \(\sigma \in (\frac{1}{2k+1}, 1)\),

$$\begin{aligned} C_{\sigma }^{-1} \vert {t - \tau _{+}}\vert ^{-\frac{2k+1}{2k}(\sigma -\frac{1}{2k+1})} \le [u(t, \cdot )]_{{\mathcal {C}}^{\sigma }}&\le C_{\sigma } \vert {t - \tau _{+}}\vert ^{-\frac{2k+1}{2k}(\sigma -\frac{1}{2k+1})} \end{aligned}$$

as \(t \rightarrow \tau _{+}\).

Remark 3.2

For the blow-up solutions in Theorem 3.1, we expect \(\mathring{U}\) to be the blow-up profile, in the sense that U(s, y) in appropriate self-similar variables converges to \(\mathring{U}\) as \(s \rightarrow \infty \) on compact sets of y. Such a statement would follow from estimates for \(W = U - \chi \mathring{U}\) on top of those proved in this paper, but we have not carried out the details. We refer to Yang [39] for the proof of this statement in the case of Burgers–Hilbert (i.e., (fKdV) with \(\alpha = 0\)).

Remark 3.3

(Sign of the initial data) There exist smooth compactly supported initial data of either sign (i.e., everywhere nonnegative or everywhere nonpositive) that satisfy the hypothesis of Theorem 3.1. Indeed, in (26), note that \(\vert {\mathring{U}}\vert \le C_{0} \tau _{0}^{1-b}\) on the support of \(\chi (-\log \tau _{0}, \cdot )\) (see Lemma 2.1) for some constant \(C_{0} > 0\) independent of \(\tau _{0}\). Therefore, if we choose, say, \(\vert {\kappa _{0}}\vert > 2 C_{0}\), then the initial profile \(\chi (-\log \tau _{0}) ( \tau _{0}^{b-1} \mathring{U}(\tau _{0}^{-b}(x - \xi _{0})) + \kappa _{0} )\) has a definite sign independent of \(\tau _{0} > 0\). Moreover, observe that \(W_{0} \in {\mathcal {O}}_{\tau _{0}, \epsilon _{0}}\) satisfies the pointwise bound \(\vert {W_{0}}\vert \lesssim \tau _{0}^{1-b} \epsilon _{0}\) by the Sobolev embedding. As a consequence, when \(k = 1\), the image of (26) with the above choice of \(\kappa _{0}\) and \(\epsilon _{0} > 0\) sufficiently small contains an open subset of signed initial data in \(H^{5}\) that lead to the blow-up behavior described in Theorem 3.1, as alluded to in Remark 1.3 above. When \(k \ge 2\), by taking \(\epsilon _{0}\) and \(\tau _{0} > 0\) sufficiently small, we may ensure that the initial data constructed by Theorem 3.1 have a definite sign.

All statements in Theorem 1.1 can be read off from Theorem 3.1, with the exception of the stability and the co-dimensionality statements. To formulate these statements, we show that the map (26) is a local homeomorphism.

Lemma 3.4

For each \(\Theta = (\tau _{0}, \xi _{0}, \kappa _{0}, w_{2, 0}, \ldots , w_{2k-1, 0}, W_{0})\) satisfying the hypothesis of Theorem 3.1, the map \(\varvec{\Phi }\) defined by (26) is a homeomorphism from an open neighborhood of \(\Theta \) onto an open neighborhood of \(\varvec{\Phi }(\Theta )\) in \(H^{2k+3}\).

Note that \(\varvec{\Phi }\) does not possess any further regularity in, for instance, \(\tau _{0}\), as it acts as a scaling parameter.

Proof

Continuity of the map \(\varvec{\Phi }\) is evident. For every \(\mathring{\Theta } \in {\tilde{{\mathcal {O}}}}_{\mathring{\xi }, \mathring{\kappa }, \epsilon _{0}}\), we may directly construct the continuous inverse in a small neighborhood of \(\mathring{u} = \varvec{\Phi }(\mathring{\Theta })\) as follows. Let u be sufficiently close to \(\mathring{u}\) in the \(H^{2k+3}\) topology. Since \(\partial _{x}^{2k+1} \mathring{u}(\mathring{\xi }) = (2k)! \tau _{0}^{-2k-2}\) and \(\partial _{x}^{2k} \mathring{u}(\mathring{\xi }) = 0\), we may ensure that \(\partial _{x}^{2k+1} u(\mathring{\xi })\) is nonzero and \(\partial _{x}^{2k} u(\mathring{\xi })\) is small. Hence, we can find a unique point \(\xi _{0}\) near \(\mathring{\xi }\) such that \(\partial _{x}^{2k} u(\xi _{0}) = 0\). Next, we choose \(\tau _{0} = - (\partial _{x} u(\xi _{0}))^{-1}\), \(\kappa _{0} = u(\xi _{0})\) and \(w_{j, 0} = \tau _{0}^{b(j-1)+1} \partial _{x}^{j} u(\xi _{0})\), so that \(w_{j, 0}\) is the j-th derivative at \(y = 0\) of U in the self-similar variables. Finally, define \(W_{0}\) from u and the parameters using (26). \(\square \)
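The construction of the inverse can be illustrated in the stable case \(k = 1\) (\(b = 3/2\)). The sketch below is a simplification that omits the cutoff \(\chi \) and the remainder \(W_{0}\) (assumptions made for the example); its function names are hypothetical. It builds \(u(x) = \tau _{0}^{b-1} \mathring{U}(\tau _{0}^{-b}(x - \xi _{0})) + \kappa _{0}\) from the implicit profile \(y = -U - \frac{1}{3}U^{3}\), locates \(\xi _{0}\) as the zero of \(\partial _{x}^{2} u\) by bisection, and then sets \(\tau _{0} = -(\partial _{x} u(\xi _{0}))^{-1}\) and \(\kappa _{0} = u(\xi _{0})\). The derivatives use \(\partial _{x} u = \tau _{0}^{-1} \mathring{U}'\) and \(\partial _{x}^{2} u = \tau _{0}^{-b-1} \mathring{U}''\), with \(\mathring{U}' = -(1+\mathring{U}^{2})^{-1}\) and \(\mathring{U}'' = 2\mathring{U} (\mathring{U}')^{3}\).

```python
def profile(y):
    """k = 1 profile: solve y = -U - U**3/3 for U by bisection."""
    lo, hi = -abs(y) - 1.0, abs(y) + 1.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if mid + mid ** 3 / 3.0 + y < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

B = 1.5  # b = (2k+1)/(2k) for k = 1

def make_data(tau0, xi0, kappa0):
    """Return x -> (u, u_x, u_xx) for u(x) = tau0^(b-1) U(tau0^(-b)(x - xi0)) + kappa0
    (cutoff and W_0 omitted in this sketch)."""
    def parts(x):
        U = profile((x - xi0) / tau0 ** B)
        dU = -1.0 / (1.0 + U * U)        # U'  for k = 1
        d2U = 2.0 * U * dU ** 3          # U'' for k = 1
        return (tau0 ** (B - 1) * U + kappa0,
                dU / tau0,                # tau0^(b-1) * tau0^(-b)
                d2U / tau0 ** (B + 1))    # tau0^(b-1) * tau0^(-2b)
    return parts

def recover(parts, xi_guess, delta):
    """Recover (xi0, tau0, kappa0) from u, following the steps of the proof (k = 1)."""
    lo, hi = xi_guess - delta, xi_guess + delta   # u_xx < 0 left of xi0, > 0 right of it
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if parts(mid)[2] < 0:
            lo = mid
        else:
            hi = mid
    xi = 0.5 * (lo + hi)
    u, du, _ = parts(xi)
    return xi, -1.0 / du, u      # tau0 = -1/u_x(xi0), kappa0 = u(xi0)

xi, tau, kappa = recover(make_data(0.25, 0.3, 2.0), xi_guess=0.35, delta=0.2)
print(xi, tau, kappa)  # close to 0.3, 0.25, 2.0
```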

Lemma 3.4 shows that the set of initial data for which Theorem 3.1 applies in the case \(k = 1\) is an open subset of \(H^{5}\), which is the precise sense in which the blow-up dynamics described in Theorem 3.1 is stable. In the case \(k \ge 2\), it establishes the precise sense in which the initial data given by prescribing \(\xi _{0} \in {\mathbb {R}}\), \(\kappa _{0} \in {\mathbb {R}}\), \(\tau _{0} < \tau _{*}(\vert {\kappa _{0}}\vert )\), \(\epsilon _{0} < \epsilon _{*}(\vert {\kappa _{0}}\vert )\) and \(W_{0} \in {\mathcal {O}}_{\tau _{0}, \epsilon _{0}}\) but not specifying \(\vec {w}_{0} \in B_{0}(\tau _{0}^{\gamma }) \subseteq {\mathbb {R}}^{2k-2}\) is “co-dimension \(2k-2\)” in \(H^{2k+3}\), as alluded to in Theorem 1.1.

Remark 3.5

An interesting question, which is not pursued in this article, is the regularity of the co-dimension \(2k-2\) set of initial data in \(H^{2k+3}\) given by Theorem 3.1 and Lemma 3.4 (e.g., does it form a \(C^{1}\) submanifold of \(H^{2k+3}\) modelled by \(H^{2k+3}\)?). Such a result seems to require a careful analysis of the difference of blow-up solutions.

3.2 Initial Data in Self-Similar Variables

In this short subsection, we rephrase our ansatz for the initial data in the self-similar variables (5), in which most of our analysis will take place.

We prescribe the initial data at \(s = \sigma _{0}\), where conditions on \(\sigma _{0}\) will be specified later. In the self-similar variables (s, y, U) given by (5) with \(\tau (\sigma _{0}) = \tau _{0}\), \(\xi (\sigma _{0}) = \xi _{0}\) and \(\kappa (\sigma _{0}) = \kappa _{0}\), the initial data for U is of the form

where the assumptions on \(W_{0}\) are as follows:

When \(k > 1\), the following smallness conditions are assumed for the unstable coefficients:

3.3 Main Bootstrap and Shooting Lemmas

In this section, we state two central ingredients of our proof, namely, the main bootstrap lemma in self-similar coordinates (Lemma 3.6) and a shooting lemma for handling the unstable modes when \(k \ge 2\) (Lemma 3.9).

Recall that \(\mu = \min \{1-b\alpha , 1-b \beta , 1\}\). Let \(\mu _{0}\) be given by

Footnote 8 Fix also a number \(\gamma \) satisfying

To formulate our bootstrap assumptions, we introduce a semi-norm \(\dot{{\mathcal {H}}}^{n}_{< L}\) (n is a nonnegative integer and \(L > 0\)) defined by the formula

A notable feature of this semi-norm is that, in the limit \(L =\infty \), it is invariant under the self-similar transformation \(x = \lambda y\), \(u(x) = \lambda ^{1-\frac{1}{b}} U(y)\) with \(b = \frac{2k+1}{2k}\) for any \(\lambda > 0\).

Lemma 3.6

(Main bootstrap lemma) There exist increasing functions \(\epsilon _{*}^{-1}(\cdot )\), \(A(\cdot ), y_{0}^{-1}(\cdot )\) and \(\sigma _{*}(\cdot )\) on \([0, \infty )\), all of which are bounded from below by 1, such that the following holds. Let \(\kappa _{0} \in {\mathbb {R}}\), \(\sigma _{0} \ge \sigma _{*}(\vert {\kappa _{0}}\vert )\) and assume that the initial data conditions (D1)–(D4) are satisfied at \(s = \sigma _{0}\) with \(\epsilon _{0} \le \epsilon _{*}(\vert {\kappa _{0}}\vert )\). Suppose that, for some \(\sigma _{1} > \sigma _{0}\), \(A = A(\vert {\kappa _{0}}\vert )\) and \(y_{0} = y_{0}(\vert {\kappa _{0}}\vert )\), the following estimates are satisfied for \(s \in [\sigma _{0}, \sigma _{1}]\):

Assume also that \(U(s, 0) = U'(s, 0)+1 = U^{(2k)}(s, 0) = 0\) for all \(s \in [\sigma _0, \sigma _1]\). In case \(k > 1\), assume furthermore that \(\vec {w}\) satisfies the trapping condition

Then, stronger estimates actually hold on the interval \(s \in [\sigma _{0}, \sigma _{1}]\), as follows:

Remark 3.7

(On dependencies) We would like to clarify the order in which the above functions \(\epsilon _*\), A, \(y_0\), and \(\sigma _*\) are chosen. We start from \(\epsilon _{*}\), which is essentially the size of the initial data. Then we choose A, which is the bootstrap parameter (we will eventually choose it to be very large), and, in order to be able to Taylor expand at \(y = 0\), we choose \(y_0\) to be very small based on A. This then forces us to choose \(\sigma _{*}\) very large depending on \(y_0\) and A.

When \(k = 1\), Lemma 3.6 is already sufficient to set up a bootstrap argument showing the global existence of U(s, y) for all \(s \ge \sigma _{0}\), which is the key step in the proof of Theorem 3.1 (see the proof of Theorem 3.1 below).

When \(k > 1\), the trapping condition (T) for \(\vec {w}\) is not improved in general, so we need an extra argument to find a global-in-s solution. For this purpose, we introduce the notion of a trapped solution as follows:

Definition 3.8

Let \(k > 1\). For \(\kappa _{0} \in {\mathbb {R}}\), \(\xi _{0} \in {\mathbb {R}}\) and \(W_{0}\) satisfying the initial data conditions (D2)–(D3), let A, \(y_{0}\) and \(\sigma _{0}\) be determined from Lemma 3.6. We say that a solution U(s, y) with the initial data (D1) induced by \(\sigma _{0}\), \(\kappa _{0}\), \(\xi _{0}\), \(W_{0}\) and \(\vert {\vec {w}_{0}}\vert \le e^{-\gamma \sigma _{0}}\) is trapped on an interval \([\sigma _{0}, \sigma _{1}]\) if it satisfies (B1)–(B7) and (T) on \([\sigma _{0}, \sigma _{1}]\).

By Lemma 3.6, it follows that the only way a trapped solution U(s, y) on \([\sigma _{0}, \sigma _{1}]\) can fail to be trapped for \(s > \sigma _{1}\) is if (T) is saturated at \(s = \sigma _{1}\), i.e., \(\vert {\vec {w}(\sigma _{1})}\vert = e^{-\gamma \sigma _{1}}\). Combining this property with a topological fact (namely, the nonexistence of a continuous retraction of a closed ball onto its boundary), we shall prove the existence of a globally trapped solution:

Lemma 3.9

(Shooting lemma) Let \(W_0\), \(\kappa _{0}\) and \(\xi _{0}\) be fixed so that the conditions (D2)–(D3) hold, and let A, \(y_{0}\), and \(\sigma _{0}\) be as in Lemma 3.6. Then there is a vector \(\vec {w}_{0}\) with \(\vert {\vec {w}_{0}}\vert < e^{-\gamma \sigma _{0}}\) such that the corresponding solution U(s, y), with initial data at \(\sigma _{0}\) induced by \(\vec {w}_0\) and \(W_0\), remains trapped for all \(s \ge \sigma _{0}\).

We are going to prove Lemmas 3.6 and 3.9 in Sects. 5 and 6 by breaking the proof into several parts. In the remainder of this section, we show how to establish Theorem 3.1 assuming Lemmas 3.6 and 3.9.

In addition to Lemmas 3.6 and 3.9, we need three more ingredients, which will be useful in the rest of the paper. The first ingredient is the following simple pointwise bound from the weighted \(L^{2}\)-Sobolev norm \(\dot{{\mathcal {H}}}_{<L}^{n}\):

Lemma 3.10

For any \(1 \le \ell \le 2k+2\), we have

Proof

This lemma follows easily from the Sobolev embedding on the unit interval and scaling; we omit the details. \(\square \)

The second ingredient is the observation that Eq. (1) admits an \(L^2\) bound for u(t, x), which readily translates into an \(L^2\) bound for U(s, y) itself. We record this fact in the following lemma.

Lemma 3.11

Assume that the initial data conditions (D1)–(D4) are satisfied at \(s = \sigma _0\), and u(t, x), U(s, y) are as above. Then, there is \(C>0\) such that the following bound holds for \(s \in [\sigma _{0}, \sigma _{1}]\):

Proof

We first express the initial data for u in terms of the initial data for U. Due to (D1), we have

where we remind the reader that \(x = e^{-b \sigma _{0}} y + \xi _{0}\). To bound the first (and dominant) term in the above expression, recall from (13) that \(\vert {\mathring{U}(y)}\vert \lesssim e^{\frac{1}{2k+1} b \sigma _{0}} = e^{(b-1) \sigma _{0}}\) on the support of \(\chi (\sigma _{0}, \cdot )\). Therefore,

where we used Lemma 2.1 again in the last inequality. The contribution of the last term is bounded precisely by (D4), while the contribution of the second term decays as \(\sigma _{0} \rightarrow \infty \) by our assumptions on the initial data. We eventually obtain

We now use the fact that Eq. (1) satisfies an a priori \(L^{2}\) bound, since \(\Gamma (D_{x}) \partial _{x}\) is anti-symmetric (dispersive), \(\Upsilon (D_{x})\) is nonnegative (dissipative), and the Burgers nonlinearity does not contribute (as \(\int u^{2} \partial _{x} u \, \textrm{d}x = 0\)). We then calculate, using the fact that \(u =e^{(1-b)s} (U + e^{(b-1) s} \kappa )\),

This readily implies
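The two structural properties behind this \(L^{2}\) bound can be checked in a discrete periodic model. The sketch below is our own illustration; the model symbols \(\Gamma (\xi ) = \vert \xi \vert ^{\alpha -1}\) and \(\Upsilon (\xi ) = \vert \xi \vert ^{\beta }\), the grid size, and the naive DFT are assumptions. It applies \(\Gamma (D_{x})\partial _{x}\), whose symbol \(i\,\textrm{sgn}(\xi )\vert \xi \vert ^{\alpha }\) is odd and purely imaginary, and \(\Upsilon (D_{x})\), whose symbol is real and nonnegative, to random real data: the first pairing vanishes up to rounding (anti-symmetry) and the second is nonnegative.

```python
import cmath
import math
import random

def apply_multiplier(v, symbol):
    """Apply the Fourier multiplier symbol(xi) to samples v of a real 2*pi-periodic
    function (naive O(N^2) DFT; len(v) odd, so there is no unpaired Nyquist mode)."""
    N = len(v)
    freqs = [m if m <= N // 2 else m - N for m in range(N)]
    vhat = [sum(v[n] * cmath.exp(-2j * math.pi * m * n / N) for n in range(N))
            for m in range(N)]
    what = [symbol(freqs[m]) * vhat[m] for m in range(N)]
    return [(sum(what[m] * cmath.exp(2j * math.pi * m * n / N) for m in range(N)) / N).real
            for n in range(N)]

def inner(a, b):
    return sum(x * y for x, y in zip(a, b))

alpha, beta = 0.5, 0.7
# Gamma(D) d/dx with Gamma(xi) = |xi|^(alpha-1): symbol i * sgn(xi) * |xi|^alpha (0 at xi = 0).
disp = lambda xi: 1j * math.copysign(abs(xi) ** alpha, xi) if xi != 0 else 0.0
# Upsilon(D) with Upsilon(xi) = |xi|^beta >= 0.
diss = lambda xi: abs(xi) ** beta

random.seed(0)
v = [random.uniform(-1.0, 1.0) for _ in range(63)]
print(inner(apply_multiplier(v, disp), v))   # ~0: anti-symmetric
print(inner(apply_multiplier(v, diss), v))   # > 0: nonnegative
```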

Finally, the third ingredient concerns some specific bounds for the initial data which follow from the requirements in Sect. 3.2. We record these bounds in the next subsection.

3.4 Consequences of the Initial Data Bounds

We record here some consequences of the initial data bounds from Sect. 3.2 which will be used in the proof of Theorem 3.1. By (D3) and interpolation, we have

and by the Gagliardo–Nirenberg inequality,

On the other hand, (D3) also implies

Noting that \(\Vert {\bar{U}}\Vert _{\dot{{\mathcal {H}}}_{<e^{b \sigma _{0}}}^{n}} \le C_{n}\) for any \(n = 0, 1, \ldots \), we have

By the definition of \(\bar{U}\), (30) and Lemma 3.10, we also obtain the pointwise bound

3.5 Proof of the Main Theorem

We are now ready to give a proof of Theorem 3.1.

Proof of Theorem 3.1 assuming Lemmas 3.6 and 3.9

Let \(\tau _{*}(\cdot ) = e^{-\sigma _{*}(\cdot )}\) and define \(\sigma _{0}\) by \(\tau _{0} = e^{-\sigma _{0}}\). In case \(k = 1\), by a standard bootstrap argument using Lemma 3.6, there exist \(C^{1}\) functions \(\tau (\cdot )\), \(\kappa (\cdot )\), and \(\xi (\cdot )\) on \([\sigma _{0}, \infty )\) such that in the self-similar variables (s, y, U) given by (5) with \(\tau (\cdot )\), \(\kappa (\cdot )\) and \(\xi (\cdot )\), U(s, y) is a globally trapped solution on \([\sigma _{0}, \infty )\) and \(\tau \), \(\kappa \), and \(\xi \) solve (22)–(23) with \(\tau (\sigma _{0}) = \tau _{0}\) (so that \(s = \sigma _{0}\) corresponds to \(t = 0\)), \(\kappa (\sigma _{0}) = \kappa _{0}\) and \(\xi (\sigma _{0}) = \xi _{0}\). In case \(k \ge 2\), by Lemmas 3.6 and 3.9, there exists \(\vec {w}_{0} \in B_{0}(e^{- \gamma \sigma _{0}})\) such that the above conclusion holds.

By integrating the ODEs for \(\tau _{s}\), \(\kappa _{s}\) and \(\xi _{s}\) in (IB6), it follows that \((\tau (s), \kappa (s), \xi (s)) \rightarrow (\tau _{+}, \kappa _{+}, \xi _{+})\) as \(s \rightarrow \infty \), where

In particular, by (IB6) and \(\vert {\tau _{+} - \tau }\vert \lesssim e^{-(1+\gamma )s}\), it follows that the change of variables \(s \rightarrow t\) is a well-defined strictly increasing map from \([\sigma _{0}, \infty )\) onto \([0, \tau _{+})\). Since \(\partial _{y} U(s, 0) = -1\) for all s, it follows that \(\partial _{x} u(t, \xi (s(t))) = - (\tau (s(t)) - t)^{-1} \rightarrow -\infty \) as \(t \rightarrow \tau _{+}\), which implies that u indeed blows up as \(t \nearrow \tau _{+}\). The desired bounds on \(\tau _{+}\), \(\kappa _{+}\) and \(\xi _{+}\) also follow from (33).

To complete the proof, it remains to establish the regularity and blow-up properties of u, which we derive from properties of U and the change of variables (5). To begin with, note that, by (IB4)–(IB5) and Lemma 3.10, we have

On the other hand, \(\vert {U'(s, y)}\vert \le 2\) for \(\vert {y}\vert \le 1\) by (IB1)–(IB2). Using \(U(s, 0) = 0\) and by integration, we arrive at

For \(\vert {y}\vert > e^{b s}\), we may eliminate the linear growth \(\vert {y}\vert e^{-s}\) by using the Sobolev inequality based on the \(L^{2}\) bound (28) and

As a consequence, we obtain

which is an improvement over (35). In particular, it follows that

which implies via (5) that \(\Vert {u}\Vert _{L^{\infty }}\) is uniformly bounded up to the blow-up time \(\tau _{+}\).

To prove the upper bounds on the Hölder semi-norms, first observe the simple gradient bound \(\vert {U'}\vert \le C A (\vert {y}\vert ^{-\frac{2k}{2k+1}} + e^{-s})\) from (IB1)–(IB2) and (34). Recalling (5), \(\lambda = e^{-bs}\) and \(b = \frac{2k+1}{2k}\), we have, for each \(t < \tau _{+}\)

Interpolating this with the trivial upper bound \(\Vert {\partial _{x} u}\Vert _{L^{\infty }} = (\tau (s(t)) - t)^{-1} \Vert {\partial _{y} U}\Vert _{L^{\infty }} \lesssim (\tau _{+} - t)^{-1}\), we obtain the upper bounds in the range \(\frac{1}{2k+1}< \sigma < 1\).
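It may help to record how Hölder semi-norms transform under the change of variables. As a sketch, under the same assumed form of (5) (\(u = \kappa + (\tau - t)^{b-1} U\), \(x - \xi = (\tau - t)^{b} y\)), for \(0 < \sigma \le 1\) one has

```latex
[u(t)]_{\dot{C}^{\sigma}_{x}}
  = \sup_{x \neq x'} \frac{\vert u(t, x) - u(t, x') \vert}{\vert x - x' \vert^{\sigma}}
  = (\tau - t)^{b - 1 - b\sigma}\, [U(s)]_{\dot{C}^{\sigma}_{y}},
\qquad
b - 1 - b\sigma = \frac{1 - (2k+1)\sigma}{2k}.
```

The exponent vanishes at \(\sigma = \frac{1}{2k+1}\) and equals \(-1\) at \(\sigma = 1\), matching the gradient rate \((\tau _{+} - t)^{-1}\) once \(\tau - t \simeq \tau _{+} - t\). This only shows how the norms rescale; the bounds on \([U(s)]_{\dot{C}^{\sigma}_{y}}\) themselves come from the argument above.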

Finally, to establish the lower bounds on the Hölder semi-norms, note first that \(\inf _{s \ge \sigma _{0}, \, \vert {y}\vert \le c_{0}} \vert {U'(s, y)}\vert > 0\) for some \(c_{0} > 0\) by Taylor expansion. By the mean value theorem,

and then by (5), the desired lower bound follows. \(\square \)
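The properties of U used in this proof (\(U(s, 0) = 0\), \(\partial _{y} U(s, 0) = -1\), a uniform lower bound on \(\vert U'\vert \) near \(y = 0\), and the decay \(\vert U'\vert \lesssim \vert y\vert ^{-\frac{2k}{2k+1}}\)) are those of the smooth self-similar Burgers profiles. The Python sketch below checks them numerically for one profile, assuming the normalization \(y = -U - U^{2k+1}\) (an assumption: one standard form of these profiles, not a formula restated in this section). It solves for U by bisection and checks the steady self-similar Burgers equation \(-(b-1)U + (by + U)U' = 0\) with \(b = \frac{2k+1}{2k}\). The function names are hypothetical.

```python
def profile(y: float, k: int = 1) -> float:
    """Hypothetical profile U(y): the unique real root of y = -U - U**(2k+1).

    g(u) = -u - u**(2k+1) - y is strictly decreasing and |U| <= |y|, so the
    interval [-|y| - 1, |y| + 1] brackets the root and bisection converges.
    """
    p = 2 * k + 1
    lo, hi = -abs(y) - 1.0, abs(y) + 1.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if -mid - mid ** p - y > 0:  # g(mid) > 0: the root lies to the right of mid
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def profile_derivative(y: float, k: int = 1) -> float:
    """U'(y) from implicit differentiation of y = -U - U**(2k+1)."""
    u = profile(y, k)
    return -1.0 / (1.0 + (2 * k + 1) * u ** (2 * k))


def ode_residual(y: float, k: int = 1) -> float:
    """Residual of the steady self-similar Burgers equation -(b-1)U + (b*y + U)U' = 0."""
    b = (2 * k + 1) / (2 * k)
    u, du = profile(y, k), profile_derivative(y, k)
    return -(b - 1) * u + (b * y + u) * du


if __name__ == "__main__":
    for k in (1, 2, 3):
        p = 2 * k + 1
        print(f"k={k}: U(0)={profile(0.0, k):.1e}, U'(0)={profile_derivative(0.0, k):.6f}")
        for y in (1e2, 1e6, 1e12):
            # |U(y)| / |y|^(1/(2k+1)) tends to 1: U grows like |y|^(1/(2k+1)).
            ratio = abs(profile(y, k)) / y ** (1 / p)
            # |U'(y)| * |y|^(2k/(2k+1)) stays bounded: the gradient decay rate.
            decay = abs(profile_derivative(y, k)) * y ** (2 * k / p)
            print(f"  y={y:.0e}: growth ratio={ratio:.6f}, gradient decay={decay:.6f}")
```

The checks mirror the proof: \(\vert U'\vert \le 1\) everywhere, \(\vert U'\vert \) bounded below on \(\vert y\vert \le c_{0}\) (here because \(\vert U\vert \le \vert y\vert \)), and growth of order \(\vert y\vert ^{\frac{1}{2k+1}}\), which after rescaling is the \(C^{\frac{1}{2k+1}}\) regularity.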

4 Lemmas on Fourier Multipliers

In this section, we establish key analytic lemmas concerning the operators \({\mathcal {H}}\) and \({\mathcal {L}}\), whose definitions are recalled here for convenience:

Observe that the assumptions on \(\Gamma \) and \(\Upsilon \) remain true under any increase of \(\alpha \) or \(\beta \). In the proofs in this section, we will often assume, without loss of generality, that \(\alpha = \beta \) and \(\alpha \ge 0\), so that \(\max \{\alpha , \beta , 0\} = \alpha \).

We begin with simple \(L^{2}\) and \(L^{\infty }\) estimates for \({\mathcal {H}}\) and \({\mathcal {L}}\).

Lemma 4.1

For any \(\ell \ge 0\), we have

For \(\max \{\alpha , \beta \} < 1\), we have

Proof

The \(L^{2}\) bound (36) for \({\mathcal {L}}\) is simply a consequence of the fact that, thanks to the frequency projection \(P_{\le 0}(e^{b s} D_{y})\) and the assumptions on \(\Gamma \), \(\Upsilon \), \({\mathcal {L}}\) is a Fourier multiplier with bounded symbol. The case \(\ell \ge 1\) then follows, thanks again to the frequency projection \(P_{\le 0}(e^{b s} D_{y})\). Moreover, (37) follows from Bernstein’s inequality.

To prove (38), it suffices to prove that, for all \(k \in {\mathbb {Z}}\),

To see this (in particular, the \(L^{\infty }\) bound), note that

Indeed, by the assumptions on \(\Gamma \), the kernel of \(P_{k'}(D_{x}) \Gamma (D_{x}) \partial _{x}\) is of the form \(2^{\alpha k'} K_{k'}(x)\), where \(\int \vert {K_{k'}(x)}\vert \, \textrm{d}x \lesssim 1\) uniformly in \(k'\). By rescaling \(x = e^{-b s} y\), we see that the kernel of \(P_{k}(D_{y}) e^{-b \alpha s} \Gamma (e^{b s} D_{y}) e^{b s} \partial _{y} \) is of the form \(2^{\alpha k} e^{-bs} K_{k+(\log 2)^{-1} b s}(e^{-bs} y)\), where the y-integral of \(e^{-bs} \vert {K_{k+(\log 2)^{-1} b s}(e^{-bs} y)}\vert \) is uniformly bounded in k. The desired bounds for the contribution of \(\Gamma \) in \({\mathcal {H}}\) now follow from Young’s inequality. A similar bound holds for \(\Upsilon \). \(\square \)
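The uniform-in-k bound is nothing more than the invariance of the \(L^{1}\) norm under \(L^{1}\)-normalized dilations, combined with Young’s inequality. Writing \(k'\) for the shifted frequency index produced by the rescaling, and using only \(\int \vert K_{k'}(x)\vert \, \textrm{d}x \lesssim 1\), a sketch reads:

```latex
\int_{\mathbb{R}} e^{-bs} \big\vert K_{k'}(e^{-bs} y) \big\vert \, \mathrm{d}y
  = \int_{\mathbb{R}} \vert K_{k'}(x) \vert \, \mathrm{d}x \lesssim 1
  \quad (x = e^{-bs} y),
\qquad
\big\Vert 2^{\alpha k} e^{-bs} K_{k'}(e^{-bs} \cdot) * f \big\Vert_{L^{p}}
  \le 2^{\alpha k} \Vert K_{k'} \Vert_{L^{1}} \Vert f \Vert_{L^{p}}
  \lesssim 2^{\alpha k} \Vert f \Vert_{L^{p}},
\quad 1 \le p \le \infty.
```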

Next, we prove a sharp upper bound on the kernel of the operator \({\mathcal {H}}\).

Lemma 4.2

For each s, there exists a function \(K_{s} \in C^{\infty }({\mathbb {R}}{\setminus } \{0\})\) such that

where

Proof

Without loss of generality, assume \(\alpha = \beta \ge 0\). By the Fourier inversion formula, we have

where

The desired statement now follows by applying the rescaling \(x = e^{-b s} y\). \(\square \)

Finally, we formulate and prove a key commutator estimate for \({\mathcal {H}}\) in the weighted \(L^{2}\)-Sobolev space \(\dot{{\mathcal {H}}}_{<L}^{n}\) introduced earlier. For this purpose, it is convenient to generalize the weight in the semi-norm and introduce

Lemma 4.3

Let \(-\frac{1}{2}< \nu < \frac{1}{2}\), \(\ell \in \{0, 1, \ldots \}\) and \(L > 1\). Let \(\varpi \) be a smooth function satisfying one of the following assumptions:

- Case 1. \({{\,\textrm{supp}\,}}\varpi \subseteq \{2^{j_{0}-1-c_{0}}< \vert {y}\vert < 2^{j_{0}+c_{0}}\}\) and \(0 \le \varpi \le C_{0} 2^{(\nu + \ell ) j_{0}}\) for some \(c_{0}, C_{0} > 0\) and \(j_{0} \in {\mathbb {Z}}\) such that \(2^{j_{0}} < L\), or
- Case 2. \({{\,\textrm{supp}\,}}\varpi \subseteq \{\vert {y}\vert > 2^{j_{0}-1-c_{0}}\}\) and \(0 \le \varpi \le C_{0} 2^{(\nu + \ell ) j_{0}}\) for some \(c_{0}, C_{0} > 0\) and \(j_{0} = \lfloor \log _{2} L \rfloor \).

Then for any \(s \in {\mathbb {R}}\) and \(V \in {\mathcal {H}}_{< L}^{\ell +1, \nu }\), we have

where the implicit constant is independent of s and L.

Suppose, in addition, that \(\vert {\varpi '}\vert \le C_{0} 2^{(\nu + \ell - 1)j_{0}}\) and \(\ell \ge 1\). Then for any \(s \in {\mathbb {R}}\) and \(V \in {\mathcal {H}}_{< L}^{\ell , \nu }\), we have

where the implicit constant is independent of s and L.

The range \(-\frac{1}{2}< \nu < \frac{1}{2}\) is sharp (at least when \(\ell = 0\) and \(\alpha = 0\)), as can be seen by taking \({\mathcal {H}}= P_{>0}(e^{bs} D_{y}) \textrm{H}\), where \(\textrm{H}\) is the Hilbert transform, and considering V localized in a dyadic annulus \(A_{j}\) (defined in the proof below) with \(\vert {j-j_0}\vert \) large. In the proof of Lemma 5.5 below, this lemma will be applied with \(V = U'\) and \(\nu = \frac{1}{2} - \frac{1}{2k+1}\); indeed, observe that \(\Vert {U}\Vert _{\dot{{\mathcal {H}}}_{<L}^{n}} = \Vert {U'}\Vert _{\dot{{\mathcal {H}}}_{<L}^{n-1, \frac{1}{2} - \frac{1}{2k+1}}}\) for \(n \ge 1\).
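A heuristic version of this sharpness computation can be carried out with the far-field behavior of \(\textrm{H} f(y) = \frac{1}{\pi }\, \mathrm {p.v.}\int \frac{f(y')}{y - y'}\, \textrm{d}y'\). The sketch below rests on several simplifying assumptions made only for this illustration: it ignores the frequency projection \(P_{>0}(e^{bs} D_{y})\), takes \(\ell = 0\) and \(\varpi \simeq 2^{\nu j_{0}}\) on \(A_{j_{0}}\), models the weighted norm by \(\Vert \vert y\vert ^{\nu } V\Vert _{L^{2}}\), and takes the \(\eta _{j}\) to be dyadic dilates of a single bump. Evaluating on \(A_{j_{0}}\):

```latex
\begin{aligned}
  &\text{(i) } j_{0} - j \gg 1,\ V = \eta_{j}: \quad
   \vert \mathrm{H} V(y) \vert \approx \frac{1}{\pi \vert y \vert} \int \eta_{j} \simeq 2^{j - j_{0}},
   \quad
   \frac{\Vert \varpi\, \mathrm{H} V \Vert_{L^{2}}}{\Vert \vert y \vert^{\nu} V \Vert_{L^{2}}}
   \simeq \frac{2^{\nu j_{0}}\, 2^{j - j_{0}}\, 2^{\frac{1}{2} j_{0}}}{2^{\nu j}\, 2^{\frac{1}{2} j}}
   = 2^{(\nu - \frac{1}{2})(j_{0} - j)}, \\
  &\text{(ii) } j - j_{0} \gg 1,\ V = \eta_{j} \mathbf{1}_{(0, \infty)}: \quad
   \vert \mathrm{H} V(y) \vert \approx \frac{1}{\pi} \int_{0}^{\infty} \frac{\eta_{j}(y')}{y'} \, \mathrm{d}y' \simeq 1,
   \quad
   \frac{\Vert \varpi\, \mathrm{H} V \Vert_{L^{2}}}{\Vert \vert y \vert^{\nu} V \Vert_{L^{2}}}
   \simeq \frac{2^{\nu j_{0}}\, 2^{\frac{1}{2} j_{0}}}{2^{\nu j}\, 2^{\frac{1}{2} j}}
   = 2^{(\nu + \frac{1}{2})(j_{0} - j)}.
\end{aligned}
```

Ratio (i) stays bounded as \(j_{0} - j \to \infty \) only if \(\nu \le \frac{1}{2}\), and ratio (ii) stays bounded as \(j - j_{0} \to \infty \) only if \(\nu \ge -\frac{1}{2}\). Excluding the endpoints themselves would require summing over many dyadic pieces, which this sketch does not do.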

Remark 4.4

We note that while (40) and (41) are sharp in terms of the spatial weights, they are not sharp in terms of regularity, as we only work with integer regularity indices. Indeed, the orders of the operators \(\varpi {\mathcal {H}}\partial _{y}^{\ell }\) and \([\varpi , {\mathcal {H}}] \partial _{y}^{\ell }\) are \(\alpha + \ell \) and \(\alpha + \ell - 1\), respectively, while we are using \(\ell +1\) and \(\ell \) derivatives on the RHS, respectively (recall that \(0 \le \alpha < 1\)). A crucial point, however, is that the RHS of the commutator estimate, (41), involves at most \(\ell \) derivatives, which is important for avoiding any loss of derivatives in Lemma 5.5.

Another important point is that the implicit constants in (40) and (41) are independent of s and L. This is possible because the only s-independent information we have on the symbol of \({\mathcal {H}}\) consists of the scale-invariant bounds

which are essentially all we use about \({\mathcal {H}}\).

Proof

Without loss of generality, assume \(\alpha = \beta \ge 0\). In what follows, we suppress the dependence of implicit constants on \(\alpha \), \(\nu \), \(c_{0}\) and \(C_{0}\), and we simply write \(P_{k} = P_{k}(D_{y})\) (\(k \in {\mathbb {Z}}\)).

To lighten notation, we adopt the following schematic convention: we denote by \(\tilde{P}_{k}\) (resp. \(\tilde{K}_{k}\)) any operator (resp. function), which may vary from expression to expression, that obeys the same support properties and bounds as \(P_{k}\) at the level of the symbol (resp. as \(K_{k}\)), i.e., \({{\,\textrm{supp}\,}}\tilde{P}_{k} \subseteq \{\xi \in {\mathbb {R}}: 2^{k-5}< \vert {\xi }\vert < 2^{k+5}\}\) and \(\vert {(\xi \partial _{\xi })^{n} \tilde{P}_{k}(\xi )}\vert \lesssim _{n} 1\) (resp. \(\vert {\partial _{y}^{n} \tilde{K}_{k}(y)}\vert \lesssim _{N, n} \frac{2^{(1+n)k}}{\langle {2^{k} y}\rangle ^{n+N}}\) for all \(N, n \ge 0\)).

With the above conventions, we have the schematic identities \(P_{k} = \tilde{P}_{k}\) and

where an important point in the last identity is that the implicit bounds for \(\tilde{P}_{k}\) are independent of s. Note also that any operator of the form \(\tilde{P}_{k}\) acts by convolution with a kernel of the form \(\tilde{K}_{k}\), i.e., \(\tilde{P}_{k} V = \tilde{K}_{k} *V\).

Next, we introduce a nonnegative smooth partition of unity \(\{\eta _{j}\}_{j \in {\mathbb {Z}}}\) on \({\mathbb {R}}{\setminus } \{0\}\) subordinate to the open cover \(\{A_{j} = \{y \in {\mathbb {R}}: 2^{j-3}< \vert {y}\vert < 2^{j+2}\}\}_{j \in {\mathbb {Z}}}\). We shall write \(\eta _{\ge j} = \sum _{j' \ge j} \eta _{j'}\). We also introduce the shorthands

As the notation suggests, \(\breve{\eta }_{j_{0}}\) and \(\breve{\eta }_{\ge j_{0}}\) have support and upper bound properties similar to those of \(\eta _{j_{0}}\) and \(\eta _{\ge j_{0}}\), respectively, thanks to the hypothesis on \(\varpi \). Note, however, that we control at most one derivative of \(\breve{\eta }_{j_{0}}\) and \(\breve{\eta }_{\ge j_{0}}\), since we only assume that \(|\varpi '| \le C_0 2^{(\nu + \ell -1)j_0}\) and make no assumptions on higher derivatives.
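The text does not specify how the partition \(\{\eta _{j}\}\) is built. One standard construction (an assumption here, with hypothetical names) telescopes a smooth nonincreasing cutoff \(\chi \), equal to 1 on \([0, 1]\) and to 0 on \([2, \infty )\): \(\eta _{j}(y) = \chi (2^{-j}\vert y\vert ) - \chi (2^{1-j}\vert y\vert )\). Each \(\eta _{j}\) is then supported in \(2^{j-1} < \vert y\vert < 2^{j+1}\), inside \(A_{j}\), and the tail sum collapses to \(\eta _{\ge j}(y) = 1 - \chi (2^{1-j}\vert y\vert )\) for \(y \ne 0\). A Python sketch:

```python
import math


def _f(t: float) -> float:
    """Smooth function on R: exp(-1/t) for t > 0 and 0 for t <= 0."""
    return math.exp(-1.0 / t) if t > 0 else 0.0


def chi(r: float) -> float:
    """Smooth nonincreasing cutoff: chi = 1 for r <= 1 and chi = 0 for r >= 2."""
    return _f(2.0 - r) / (_f(2.0 - r) + _f(r - 1.0))


def eta(j: int, y: float) -> float:
    """Hypothetical eta_j(y) = chi(2^-j |y|) - chi(2^(1-j) |y|); support in 2^(j-1) < |y| < 2^(j+1)."""
    r = abs(y)
    return chi(r / 2.0 ** j) - chi(2.0 * r / 2.0 ** j)


def eta_geq(j: int, y: float) -> float:
    """eta_{>= j}(y) = sum over j' >= j of eta_{j'}(y) = 1 - chi(2^(1-j) |y|) for y != 0 (telescoping)."""
    return 1.0 - chi(2.0 * abs(y) / 2.0 ** j)


if __name__ == "__main__":
    for y in (0.01, -0.37, 1.0, 5.5, -123.4):
        # The full sum over j is 1 away from the origin; eta_geq(3, .) vanishes for |y| <= 4.
        print(y, sum(eta(j, y) for j in range(-20, 21)), eta_geq(3, y))
```

Because \(\chi \) is nonincreasing, each \(\eta _{j}\) is nonnegative, and any finite sum \(\sum _{j=J_{1}}^{J_{2}} \eta _{j}\) equals 1 on \(2^{J_{1}} \le \vert y\vert \le 2^{J_{2}}\).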

Case 1, Step 1. We will use the following three bounds to treat the “non-local”, “low frequency” and “far-away input” cases, respectively: there exists a positive constant c independent of \(j, j_0\) and k such that, for \(\vert {j - j_{0}}\vert > c_{0}+5\) and \(k \ge - j_{0} - 5\),

and for \(k < - j_{0} - 5\),

and

We defer their proofs for a moment and prove (40) and (41) assuming (42)–(44).

Case 1, Step 1.(a). To prove (40), we begin by expanding

The term \(\textrm{I}_{far}\) can be treated using (44), so it only remains to estimate \(\textrm{I}_{near}\). Unless \(\vert {j - j_{0}}\vert \le c_{0} + 5\) and \(k \ge - j_{0} - 5\), we can apply (42) and (43) to obtain an acceptable bound for the summand. When \(\vert {j - j_{0}}\vert \le c_{0} + 5\) and \(k \ge - j_{0} - 5\), we use the schematic identity \(\tilde{P}_{k} = 2^{-(\ell +1) k} \tilde{P}_{k} \partial _{y}^{\ell +1}\) to obtain the simple bound

which can be summed over \(k \ge -j_{0} - 5\).
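The schematic identity \(\tilde{P}_{k} = 2^{-(\ell +1) k} \tilde{P}_{k} \partial _{y}^{\ell +1}\) can be justified directly at the level of symbols. As a sketch, with the convention that \(\partial _{y}\) has symbol \(i\xi \) (other normalizations only change harmless constants), given a symbol \(\tilde{P}_{k}(\xi )\) supported in \(2^{k-5}< \vert \xi \vert < 2^{k+5}\), set

```latex
m_{k}(\xi) := \tilde{P}_{k}(\xi) \Big( \frac{2^{k}}{i \xi} \Big)^{\ell+1},
\qquad
(\xi \partial_{\xi})^{n} \Big( \frac{2^{k}}{i \xi} \Big)^{\ell+1}
  = \big( -(\ell+1) \big)^{n} \Big( \frac{2^{k}}{i \xi} \Big)^{\ell+1},
\qquad
\tilde{P}_{k}(\xi) = 2^{-(\ell+1) k}\, m_{k}(\xi)\, (i \xi)^{\ell+1}.
```

Since \(\vert \xi \vert \simeq 2^{k}\) on the support and \(\xi \partial _{\xi }\) obeys the Leibniz rule, \(m_{k}\) satisfies the same support and \((\xi \partial _{\xi })^{n}\) bounds as \(\tilde{P}_{k}\), i.e., it is itself of the form \(\tilde{P}_{k}\).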

Case 1, Step 1.(b). Now we prove the commutator estimate (41). We begin by making the following decomposition:

We treat each term in (45) as follows. For \(\textrm{I}\), we start by writing

To treat the first sum on the RHS of (46), we make use of the commutator structure. We write

where \(\tilde{V} = \partial _{y}^{\ell } (\eta _{j} V)\). Then, using the bound for \(\breve{\eta }_{j_{0}}'\) (which comes from that for \(\varpi '\)) and the localization of \(\tilde{K}_{k}\) to the spatial scale \(O(2^{-k})\), the kernel on the RHS can be written as \(2^{-k-j_{0}} \breve{K}(y, y')\), where \(\sup _{y} \Vert {\breve{K}(y, \cdot )}\Vert _{L^{1}}\) and \(\sup _{y'} \Vert {\breve{K}(\cdot , y')}\Vert _{L^{1}}\) are bounded by an absolute constant. Hence, by Schur’s test,

which is summable in the range \(\{(j, k): \vert {j - j_{0}}\vert \le c_{0} + 5,\, k \ge -j_{0}-5\}\).
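The Schur's test step can be illustrated on a discretized toy model. This is a hypothetical example, not the operator of the lemma: \(a(y) = \sin y\) stands in for \(\breve{\eta }_{j_{0}}\) (so \(\vert a'\vert \le 1\), the normalization \(j_{0} = 0\)), and the Gaussian \(K_{k}(z) = 2^{k} e^{-(2^{k} z)^{2}}\) stands in for \(\tilde{K}_{k}\). Because \(\vert a(y) - a(y')\vert \le \vert y - y'\vert \) and \(\int \vert z\vert K_{k}(z)\, \textrm{d}z = 2^{-k}\), every row and column sum of the commutator kernel is at most about \(2^{-k}\), which is the \(2^{-k}\) gain used above. The sketch builds the matrix, evaluates the Schur bound, and compares it with a power-iteration lower estimate of the operator norm.

```python
import math


def gaussian_kernel(z: float, k: int) -> float:
    """Toy kernel K_k(z) = 2^k exp(-(2^k z)^2): unit L^1 mass, localized at scale 2^-k."""
    return 2.0 ** k * math.exp(-((2.0 ** k) * z) ** 2)


def commutator_matrix(k: int, n: int = 161, half_width: float = 4.0):
    """Discretize the kernel (a(y) - a(y')) K_k(y - y') dy' of the commutator [a, K_k *], a = sin."""
    h = 2.0 * half_width / (n - 1)
    ys = [-half_width + i * h for i in range(n)]
    return [[(math.sin(yi) - math.sin(yj)) * gaussian_kernel(yi - yj, k) * h for yj in ys]
            for yi in ys]


def schur_bound(t):
    """Schur's test: ||T||_{2->2} <= sqrt(max row l^1 sum * max column l^1 sum)."""
    row = max(sum(abs(x) for x in r) for r in t)
    col = max(sum(abs(r[j]) for r in t) for j in range(len(t[0])))
    return math.sqrt(row * col)


def norm_lower_estimate(t, iters: int = 20) -> float:
    """Power iteration on T^T T; returns ||T v|| for a unit vector v, so never exceeds ||T||."""
    n, m = len(t), len(t[0])
    v = [1.0 + 0.1 * j / m for j in range(m)]  # deterministic, non-symmetric start
    for _ in range(iters):
        s = math.sqrt(sum(x * x for x in v))
        v = [x / s for x in v]
        w = [sum(t[i][j] * v[j] for j in range(m)) for i in range(n)]  # w = T v
        v = [sum(t[i][j] * w[i] for i in range(n)) for j in range(m)]  # v = T^T w
    s = math.sqrt(sum(x * x for x in v))
    v = [x / s for x in v]
    w = [sum(t[i][j] * v[j] for j in range(m)) for i in range(n)]
    return math.sqrt(sum(x * x for x in w))


if __name__ == "__main__":
    for k in (0, 1, 2):
        t = commutator_matrix(k)
        # Both quantities should decay roughly like 2^-k (times sup |a'| = 1).
        print(k, norm_lower_estimate(t), schur_bound(t), 2.0 ** -k)
```

Schur's test holds exactly for matrices, so the power-iteration estimate can never exceed the Schur bound; the point of the toy is that both shrink by about a factor of 2 per unit increase in k.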

For the second sum on the RHS of (46), we simply note that \([\breve{\eta }_{j_{0}}, \tilde{P}_{k}] \partial _{y}^{\ell } (\eta _{j} V) = \breve{\eta }_{j_{0}} \tilde{P}_{k} \partial _{y}^{\ell } (\eta _{j} V)\), since \(\breve{\eta }_{j_{0}} \partial _{y}^{\ell } (\eta _{j} V) = 0\) by the support properties of \(\breve{\eta }_{j_{0}}\) and \(\eta _{j}\). Hence, we may apply (42), which is acceptable in the range \(\{(j, k): \vert {j - j_{0}}\vert > c_{0} + 5,\, k \ge -j_{0}-5\}\).

Next, the term \(\textrm{II}\) in (45) is directly bounded using (43).

Finally, we turn to the term \(\textrm{III}\) in (45). We write

By the support properties of \(\breve{\eta }_{j_{0}}\) and \(\eta _{j}\), the summand vanishes unless \(\vert {j_{0} - j}\vert \le c_{0} + 5\). In that case, we have

where on the first line we used the \(L^{1} \hookrightarrow L^{2}\) Bernstein inequality and \(\Vert {\breve{\eta }_{j_{0}}}\Vert _{L^{2}} \lesssim 2^{\frac{1}{2} j_{0}}\). The above bound is acceptable in the range \(\{(j, k): \vert {j - j_{0}}\vert \le c_{0} + 5,\, k < -j_{0}-5\}\).
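Both ingredients follow from the kernel bounds in the schematic notation. As a sketch, using \(\vert \tilde{K}_{k}(y)\vert \lesssim 2^{k} \langle 2^{k} y\rangle ^{-1}\) (the case \(n = 0\), \(N = 1\)) and Minkowski’s integral inequality:

```latex
\Vert \tilde{P}_{k} f \Vert_{L^{2}}
  = \Vert \tilde{K}_{k} * f \Vert_{L^{2}}
  \le \Vert \tilde{K}_{k} \Vert_{L^{2}} \Vert f \Vert_{L^{1}},
\qquad
\Vert \tilde{K}_{k} \Vert_{L^{2}}^{2}
  \lesssim \int_{\mathbb{R}} \frac{2^{2k}}{\langle 2^{k} y \rangle^{2}} \, \mathrm{d}y
  = 2^{k} \int_{\mathbb{R}} \frac{\mathrm{d}z}{\langle z \rangle^{2}}
  \lesssim 2^{k},
```

so \(\Vert \tilde{P}_{k} f\Vert _{L^{2}} \lesssim 2^{\frac{1}{2} k} \Vert f\Vert _{L^{1}}\). Similarly, \(\Vert \breve{\eta }_{j_{0}}\Vert _{L^{2}} \lesssim 2^{\frac{1}{2} j_{0}}\) because \(\breve{\eta }_{j_{0}}\) is bounded (like \(\eta _{j_{0}}\)) and supported in a set of measure \(O(2^{j_{0}})\).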

Case 1, Step 2. It remains to prove (42), (43), and (44). We start with the following bound for the kernel \(\tilde{K}_{k}\) of \(\tilde{P}_{k}\): for \(\vert {j - j_{0}}\vert > c_{0} + 5\) and any \(N \ge 0\),

Indeed, (47) follows from the bound for \(\tilde{K}_{k}\) and the simple fact that \(\vert {y - y'}\vert \simeq 2^{\max \{j, j_{0}\}}\) if \(\vert {y}\vert \simeq 2^{j_{0}}\), \(\vert {y'}\vert \simeq 2^{j}\) and \(\vert {j - j_{0}}\vert > c_{0} + 5\).
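For completeness, here is the elementary separation estimate behind (47), written as a sketch with the support conventions of Case 1 (\(2^{j_{0}-1-c_{0}}< \vert y\vert < 2^{j_{0}+c_{0}}\) on \({{\,\textrm{supp}\,}}\breve{\eta }_{j_{0}}\) and \(2^{j-3}< \vert y'\vert < 2^{j+2}\) on \({{\,\textrm{supp}\,}}\eta _{j}\)) and the \(n = 0\) kernel bound, with constants depending on \(c_{0}\) suppressed:

```latex
\begin{aligned}
  j > j_{0} + c_{0} + 5 &\implies \vert y - y' \vert \ge \vert y' \vert - \vert y \vert > 2^{j-3} - 2^{j-5} \gtrsim 2^{j}, \\
  j < j_{0} - c_{0} - 5 &\implies \vert y - y' \vert \ge \vert y \vert - \vert y' \vert > 2^{j_{0}-1-c_{0}} - 2^{j_{0}-3-c_{0}} \gtrsim 2^{j_{0}}, \\
  \vert \tilde{K}_{k}(y - y') \vert &\lesssim_{N} \frac{2^{k}}{\langle 2^{k} (y - y') \rangle^{N}}
    \le \frac{2^{k}}{(2^{k} \vert y - y' \vert)^{N}}
    \lesssim_{N} 2^{k}\, 2^{-N (\max\{j, j_{0}\} + k)}.
\end{aligned}
```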

As a result, we have \(\Vert {\breve{\eta }_{j_{0}} \tilde{P}_{k} (\eta _{j} V)}\Vert _{L^{\infty }} \lesssim _{N} 2^{k} 2^{-N(\max \{j, j_{0}\} + k)} \Vert {\eta _{j} V}\Vert _{L^{1}}\). By two applications of Hölder’s inequality, as well as \(\Vert {\eta _{j} V}\Vert _{L^{2}} \lesssim 2^{-\nu j} \Vert {V}\Vert _{\dot{{\mathcal {H}}}_{<L}^{0, \nu }}\), we have

By choosing N sufficiently large, (42) and (43) in the case \(j > - k + c_{0}\) follow (note that in the latter case, \(j_{0} < - k - 5\), so \(j > j_{0} + c_{0} + 5\)). To treat the remaining case in (43), namely \(k < - j_{0} -5\) and \(j \le -k + c_{0}\), we simply use the Hölder and Bernstein inequalities to estimate

Finally, to prove (44), we first split

Observe that the contribution of the first term can be treated using (42) and (43). For the remaining piece, thanks to the spatial separation between the supports of \(\breve{\eta }_{j_{0}}\) and \(\eta _{\ge \log _{2} L + c_{0} + 10}\), (47) implies \(\Vert {\breve{\eta }_{j_{0}} \tilde{P}_{k} (\eta _{\ge \log _{2} L + c_{0} + 10} V)}\Vert _{L^{\infty }} \lesssim _{N} 2^{\frac{1}{2} k} 2^{-\frac{N}{2} (\log _{2} L + k)} \Vert {\eta _{\ge \log _{2} L + c_{0} + 10} V}\Vert _{L^{2}}\). Hence, by Hölder’s inequality,

By choosing N appropriately, (44) follows.

Case 2, Step 1. In this case, \(L \simeq _{c_{0}} 2^{j_{0}}\). We will use the following bound to treat the “nearby input” case: for \(j < \log _{2} L\),

We defer its proof for a moment and prove (40) and (41) assuming (48).

Case 2, Step 1.(a). As before, to prove (40), we expand

This time, the term \(\textrm{I}_{near}\) can be treated using (48), so it only remains to estimate \(\textrm{I}_{far}\). By almost orthogonality (in case \(\alpha + \ell = 0\)) or interpolation (in case \(\alpha + \ell > 0\)), it is straightforward to prove

which is acceptable.

Case 2, Step 1.(b). To prove (41), we expand

For \(\textrm{I}'\), we argue as for the term \(\textrm{I}\) in Case 1, Step 1.(b), using the commutator structure, and bound

For \(\textrm{II}'\), we expand the commutator expression and write

We simply bound \(2^{\alpha k} \lesssim 2^{- \alpha j_{0}}\), then use almost orthogonality to estimate

For \(\textrm{III}'\), we simply observe that, by the support properties of \(\breve{\eta }_{\ge j_{0}}\) and \(\eta _{j}\),

which can be treated using (48).

Case 2, Step 2. It remains to prove (48). Recall that \(L \simeq _{c_{0}} 2^{j_{0}}\) and \(j < \log _{2} L\). We first split