Abstract

In this paper we compare ways of computing stationarity tests. We show that, although some of the recommended procedures render the tests inconsistent, it is still possible to compute a test with good finite-sample properties in terms of empirical size and power. The guidance suggested in the paper is illustrated by testing the purchasing power parity hypothesis for some developed countries.

Introduction

The literature on unit root and stationarity tests has advanced considerably in recent years. On the one hand, unit root testing has reached a higher degree of development with the tests in Ng and Perron (2001). These authors define a set of modified test statistics that show the best performance in finite samples in terms of empirical size and power. On the other hand, many authors have focused on stationarity tests. In contrast to unit root tests, these tests specify the null hypothesis of stationarity against the alternative of non-stationarity, so they can be seen as the complement of unit root tests. The relevant proposals in this field are Nabeya and Tanaka (1988), Kwiatkowski et al. (1992) (hereafter, the KPSS test) and Leybourne and McCabe (1994, 1999) (henceforth, the LMC test). Some authors have advocated the joint application of both kinds of statistics as a way to obtain robust conclusions about the stochastic properties of time series; see Amano and Van Norden (1992), Cheung and Chinn (1997), Charemza and Syczewska (1998), Maddala and Kim (1998), Carrion-i-Silvestre et al. (2001), and Gabriel (2003) for a discussion of confirmatory analysis.

However, the main drawback of stationarity tests is the difficulty of estimating the long-run variance involved in their computation. While the KPSS test uses a non-parametric estimator of the long-run variance, the LMC test estimates it parametrically. Both procedures require the definition of a lag-length parameter, which has been shown to strongly influence the finite sample properties of the tests; see Lee (1996) and Chen (2002) for the KPSS test. This feature has recently been pointed out in Caner and Kilian (2001), who indicate that the KPSS and LMC tests show size distortions when the stochastic process is close to non-stationarity. Besides, Engel (2000) warns about the use of the KPSS test because of its lack of power. Chen (2002) also points out that the power of the test is affected when the lag length is not appropriate.

We argue that the pitfalls can be mitigated once the long-run variance is properly estimated. However, this is not easy. In most situations the bandwidth of the spectral window that the non-parametric approach requires is chosen by automatic methods, i.e. the Andrews (1991) or Newey and West (1994) procedures, although this renders the test inconsistent; see Choi and Ahn (1995, 1999) and Kurozumi (2002). This feature is of special interest for applied economists, because some of the most popular econometric programs do not take this inconsistency problem into account. For instance, the latest versions of Eviews and Stata offer the possibility of computing the KPSS test using an automatic bandwidth selection method that causes the inconsistency. Alternatively, we could follow the parametric approach. Therefore, a comparison of the two approaches is relevant from an empirical point of view.

In this paper we assess the performance of a set of alternatives that can be used when computing the stationarity tests. In this regard, we consider the suggestions in Choi and Ahn (1995, 1999), Kurozumi (2002) and Sul et al. (2005) when implementing an automatic procedure to estimate the long-run variance from a non-parametric point of view. For the LMC test, we can apply an information criterion or the testing procedure defined in Leybourne and McCabe (1999).

Here we provide a guide to the estimation of the long-run variance when testing the null hypothesis of stationarity. The guide is illustrated with an example based on the purchasing power parity (PPP) hypothesis. When reviewing the literature we noticed that stationarity tests are widely applied in the PPP framework, but little attention has been paid to the estimation of the long-run variance. Here we focus on two databases that differ in the frequency of the time series. First, we examine the annual data set in Taylor (2002), which allows us to test the PPP for 19 countries, covering a century. Second, we analyse the PPP hypothesis using monthly time series from the International Financial Statistics database of the IMF. As expected, the properties of the statistics improve as the number of observations increases. Thus, it is interesting to assess the robustness of the analysis using time series that allow us to focus on features such as the long run and the span of the information.

The outline of the paper is as follows. In Section 2 we describe the stationarity tests and the different approaches to the estimation of the long-run variance. In Section 3 we conduct Monte Carlo simulations in order to assess the finite sample properties of each procedure. Section 4 illustrates the PPP hypothesis. Finally, Section 5 concludes.

Stationarity tests

Let \(\{y_t\}\) be the stochastic process with the Data Generation Process (DGP) given by:

\(t=1,\ldots,T\), where \(\{u_t\}\) and \(\{v_t\}\) are assumed to be two independent stochastic processes that satisfy the strong-mixing conditions in Phillips (1987) and Phillips and Perron (1988). In this framework the null hypothesis of stationarity implies that \(\sigma_v^2=0\), since in this case the non-stationary component \(\alpha_t\) collapses to a constant \(\alpha_0\) and, hence, the time series is generated by stationary components. Consequently, the alternative hypothesis of non-stationarity is given by \(\sigma_v^2>0\). As mentioned above, we deal with two different statistics to analyse the stochastic properties of the time series, i.e. the KPSS and the LMC statistics. In what follows we briefly present the main features of these statistics.
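As a reading aid, the components model referred to as Eqs. 1–2 can be written out explicitly. The form below is a sketch that assumes the standard KPSS local-level specification with a linear trend, which is consistent with the symbols used in the text (\(\alpha_t\), \(\beta\), \(u_t\), \(v_t\), \(\sigma_v^2\)); the layout is a reconstruction under that assumption rather than a quotation.

```latex
\begin{align}
y_t      &= \alpha_t + \beta t + u_t, \tag{1}\\
\alpha_t &= \alpha_{t-1} + v_t, \qquad \operatorname{Var}(v_t) = \sigma_v^2. \tag{2}
\end{align}
```

Under this form, setting \(\sigma_v^2=0\) gives \(\alpha_t=\alpha_0\) for all \(t\), so that \(y_t\) reduces to a stationary process around the deterministic component.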

The KPSS test is given by:

\(i=0,1,2\), where \(S_t = \sum_{j=1}^{t} \widehat{u}_j\) denotes the partial sum process and \(\{\widehat{u}_t\}\) are the OLS residuals obtained when \(y_t\) is regressed on the deterministic function \(f(t)\). Specifically, the subscript \(i\) in Eq. 3 denotes the deterministic function. Thus, we use \(i=0\) for the model that does not include any deterministic term, i.e. \(f(t)=0\). The model that includes a constant term, \(f(t)=\mu\), is denoted by \(i=1\), while \(i=2\) is for the model with a time trend, \(f(t)=\mu+\beta t\). The long-run variance is estimated using the non-parametric estimator:

where \(w(s, l)\) denotes the spectral window. While the choice of the kernel depends on the preference of practitioners (Kwiatkowski et al. (1992) use the Bartlett kernel, but Hobijn et al. (1998) suggest the Quadratic spectral window), caution should be taken when estimating the spectral bandwidth, since some of the suggestions in the literature can lead to erroneous conclusions. For instance, Lee (1996) uses the Andrews (1991) method, while Hobijn et al. (1998) suggest applying the automatic methods in Newey and West (1994) to estimate the bandwidth; henceforth, we denote the bandwidth parameter selected using these methods as \(\widehat{l}_{auto}\). Unfortunately, Choi and Ahn (1995, 1999) and Kurozumi (2002) warn that the use of these data-based selection methods renders the test inconsistent. Nevertheless, some bounds to control the estimated bandwidth can be imposed to avoid such inconsistency. Below we highlight three alternatives.
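Before turning to the bandwidth rules, the computation of the statistic for a fixed bandwidth can be sketched as follows. The sketch assumes the standard forms \(\widehat{\eta}_i = T^{-2}\widehat{\sigma}^{-2}\sum_{t=1}^{T} S_t^2\) and \(\widehat{\sigma}^2 = T^{-1}\sum_{t=1}^{T}\widehat{u}_t^2 + 2T^{-1}\sum_{s=1}^{l} w(s,l)\sum_{t=s+1}^{T}\widehat{u}_t\widehat{u}_{t-s}\) with Bartlett weights \(w(s,l)=1-s/(l+1)\). The function names are illustrative and are not taken from the GAUSS code used in the paper.

```python
def ols_residuals(y, i):
    """Residuals from regressing y on f(t): i=0 none, i=1 constant, i=2 constant and trend."""
    T = len(y)
    if i == 0:
        return list(y)
    y_bar = sum(y) / T
    if i == 1:
        return [v - y_bar for v in y]
    t_bar = (T + 1) / 2  # mean of t = 1, ..., T
    num = sum((s - t_bar) * (v - y_bar) for s, v in enumerate(y, start=1))
    den = sum((s - t_bar) ** 2 for s in range(1, T + 1))
    beta = num / den
    return [v - y_bar - beta * (s - t_bar) for s, v in enumerate(y, start=1)]


def bartlett_lrv(u, l):
    """Long-run variance estimate with Bartlett weights w(s, l) = 1 - s / (l + 1)."""
    T = len(u)
    lrv = sum(v * v for v in u) / T
    for s in range(1, l + 1):
        gamma_s = sum(u[t] * u[t - s] for t in range(s, T)) / T
        lrv += 2 * (1 - s / (l + 1)) * gamma_s
    return lrv


def kpss_stat(y, i, l):
    """KPSS statistic for deterministic specification i and fixed bandwidth l."""
    u = ols_residuals(y, i)
    T = len(u)
    partial_sum, sum_sq = 0.0, 0.0
    for v in u:
        partial_sum += v          # S_t
        sum_sq += partial_sum ** 2
    return sum_sq / (T ** 2 * bartlett_lrv(u, l))
```

The bandwidth `l` is passed in explicitly on purpose: how it is chosen is exactly the issue discussed in the rest of this section.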

First, the rule in Choi and Ahn (1995, 1999) establishes that the bandwidth that is estimated using Andrews' method should be constrained to \(\widehat{l} = 2\) if \(\widehat{l}_{auto} \geqslant T^{\delta}\), where \(\delta=0.7\) for 'raw' series, i.e. for the deterministic specification given by \(i=0\), and \(\delta=0.65\) for detrended time series, i.e. for the deterministic specifications given by \(i=1,2\). Second, Kurozumi (2002) proposes that the bandwidth for the Bartlett kernel should be estimated as:

where \(\widehat{a}\) is the estimate of the autoregressive parameter given by Andrews' method. Evidence drawn from simulation experiments leads us to specify \(k=0.7\) or \(k=0.8\) to establish a compromise between the empirical size and power of the test. Third, Sul et al. (2005) propose a prewhitened Heteroskedasticity and Autocorrelation Consistent (HAC) estimator for the long-run variance. In the first stage an AR model for the residuals \(\{\widehat{u}_t\}\) is estimated:

After Eq. 4 has been estimated, the long-run variance of its residuals, denoted by \(\widetilde{\sigma}_{\psi}^{2}\), can be obtained through the application of an HAC estimator (for instance, with the Bartlett or Quadratic Spectral window) to allow for the presence of heteroskedasticity. In the second stage the estimated long-run variance is recolored:

where \(\widetilde{\vartheta}(1)\) denotes the autoregressive polynomial \(\widetilde{\vartheta}(L) = 1 - \widetilde{\vartheta}_{1} L - \ldots - \widetilde{\vartheta}_{p} L^{p}\) evaluated at \(L=1\). In order to avoid the inconsistency of the test statistic, Sul et al. (2005) suggest using the following boundary condition rule to obtain the long-run variance estimate:

The application of this rule ensures that the estimated long-run variance is bounded above by \(T\widetilde{\sigma}_{\psi}^{2}\). As a result, the individual KPSS test statistic is of order \(O_p(T)\) under the alternative hypothesis and diverges, so that the test is consistent. In the simulations we consider an AR(1) process for Eq. 4, and \(\widetilde{\sigma}_{\psi}^{2}\) is obtained using the Quadratic spectral window as described in Andrews (1991), Andrews and Monahan (1992) and Sul et al. (2005).
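The three bounding rules can be sketched in code. Several parts are assumptions rather than quotations: the Kurozumi bound is taken to be Andrews' AR(1) plug-in bandwidth for the Bartlett kernel (constant 1.1447) capped at the value it takes when the autoregressive estimate equals \(k\); the recolored variance is taken to be \(\widetilde{\sigma}_{\psi}^{2}/\widetilde{\vartheta}(1)^{2}\); and the boundary rule is taken to be the minimum of that quantity and \(T\widetilde{\sigma}_{\psi}^{2}\). The placeholder `sample_variance` stands in for the Quadratic spectral HAC estimator used in the paper, and all function names are hypothetical.

```python
def choi_ahn_bandwidth(l_auto, T, detrended):
    """Rule as stated in the text: use 2 when l_auto >= T**delta."""
    delta = 0.65 if detrended else 0.7
    return 2 if l_auto >= T ** delta else l_auto


def kurozumi_bandwidth(a_hat, T, k=0.7):
    """ASSUMED form: Andrews' Bartlett plug-in for an AR(1), capped at a = k (0 < k < 1)."""
    def plug_in(a):
        return 1.1447 * (4 * a * a * T / ((1 + a) ** 2 * (1 - a) ** 2)) ** (1 / 3)
    # plug_in is increasing in |a|, so capping |a_hat| at k equals taking the minimum.
    return plug_in(k) if abs(a_hat) >= k else plug_in(a_hat)


def sample_variance(e):
    """Placeholder HAC estimator (bandwidth zero); an assumption made for brevity."""
    return sum(v * v for v in e) / len(e)


def spc_long_run_variance(u, hac=sample_variance):
    """Prewhitened long-run variance with an AR(1) filter and the boundary rule."""
    T = len(u)
    # Stage 1: AR(1) prewhitening by OLS without intercept.
    rho = sum(u[t] * u[t - 1] for t in range(1, T)) / sum(v * v for v in u[:-1])
    psi = [u[t] - rho * u[t - 1] for t in range(1, T)]
    s2_psi = hac(psi)
    # Stage 2: recolour with 1 / (1 - rho)^2, bounded above by T * s2_psi.
    if rho == 1:
        return T * s2_psi
    return min(T * s2_psi, s2_psi / (1 - rho) ** 2)
```

The returned Kurozumi bandwidth is real-valued; whether it is truncated or rounded before use is left to the caller.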

Regarding the parametric approach, Leybourne and McCabe (1994) specify the dynamics of \(\{y_t\}\) in Eq. 1 using an AR(p) representation. Thus, the DGP of \(\{y_t\}\) is given by:

and Eq. 2, where the roots of \(\phi_p(L)\) lie outside the unit circle. The LMC test is given by:

\(i=0,1,2\), where \(\{\widehat{u}_t\}\) are the OLS residuals obtained when the filtered variable \(y_t^*\) is regressed on \(f(t)\), and \(\widehat{\sigma}_u^2 = T^{-1}\sum_{t=1}^{T} \widehat{u}_t^2\). We denote by \(y_t^*\) the variable once the AR(p) component has been filtered out, that is, \(y_t^* = y_t - \sum_{j=1}^{p} \widehat{\phi}_j y_{t-j}\), where \(\widehat{\phi}_j\) are the OLS estimated coefficients in the following over-differenced model, which is equivalent in second-order moments:

where \(g(t)=\Delta f(t)\) and \(\zeta_t \sim iid\,(0, \sigma_\zeta^2)\) with \(\sigma_\zeta^2=\sigma_u^2\theta^{-1}\). Under the null hypothesis \(\theta=1\), while the alternative hypothesis implies \(0<\theta<1\). As stated in Caner and Kilian (2001), Leybourne and McCabe (1994, 1999) estimate the model with the GAUSS-ARIMA Library, defining a grid for the starting value of \(\theta\). Specifically, the grid starts at \(\theta=0\) and ends at \(\theta=1\) with steps of 0.05. The starting values for the autoregressive parameters are set equal to \(\phi_j=\theta+0.1\), \(j=1,\ldots,p\). They select the set of parameter estimates that corresponds to the highest log-likelihood. McCabe and Leybourne (1998) showed that the ML estimator of \(\theta\) in Eq. 7 is T-consistent and that the AR parameter estimates are asymptotically equivalent to those obtained from the OLS estimation of Eq. 5 in levels.
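For reference, the three relations referred to as Eqs. 5–7 can be written out under the assumptions implied by the surrounding definitions: an AR(p) filter applied to the components model, a statistic with the same partial-sum structure as the KPSS test but scaled by \(\widehat{\sigma}_u^2\), and an ARIMA(p,1,1) reduced form with moving average parameter \(\theta\). The symbol \(\widehat{\eta}_i\) for the statistic and the linear-trend form of Eq. 5 are assumptions made here for illustration.

```latex
\begin{align}
\phi_p(L)\, y_t &= \alpha_t + \beta t + u_t, \tag{5}\\
\widehat{\eta}_i &= T^{-2}\, \widehat{\sigma}_u^{-2} \sum_{t=1}^{T} S_t^2,
  \qquad S_t = \sum_{j=1}^{t} \widehat{u}_j, \tag{6}\\
\Delta y_t &= g(t) + \sum_{j=1}^{p} \phi_j\, \Delta y_{t-j} + \zeta_t - \theta\, \zeta_{t-1}. \tag{7}
\end{align}
```

Differencing Eq. 5 and substituting Eq. 2 gives \(\phi_p(L)\Delta y_t = \beta + v_t + \Delta u_t\), whose right-hand side has an MA(1) representation; this is why \(\theta=1\) (an over-differenced stationary series) corresponds to the null hypothesis.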

The order of the autoregressive model in Eq. 7 can be chosen using two different procedures. Once a maximum number of lags (\(p_{\max}\)) has been specified, the first procedure uses an information criterion to select \(p\). The Akaike (AIC) and Schwarz Bayesian (SC) criteria are the most widely used. In the simulation experiments we have also considered the modified Schwarz criterion proposed in Liu et al. (1997) (LWZ). The second approach, which is based on the general-to-specific principle, relies on the testing procedure described in Leybourne and McCabe (1999). In brief, let \(y_t\) be a stochastic process with the DGP given in Eq. 7. Then, under the null hypothesis \(H_0: \phi_p=0\), the test statistic satisfies \(Z(p) = T^{1/2}\,\widehat{\phi}_p\,\widehat{\theta} \to_d N(0,1)\), where \(\widehat{\phi}_p\) and \(\widehat{\theta}\) are estimated from Eq. 7.
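The general-to-specific procedure can be sketched as a short loop. The sketch assumes a two-sided test, so the 10% level corresponds to a standard normal critical value of 1.645, and it assumes a user-supplied callable `fit(p)` that estimates Eq. 7 with `p` lags and returns the pair \((\widehat{\phi}_p, \widehat{\theta})\). Both `fit` and `select_ar_order` are hypothetical names; no estimation routine is provided here.

```python
import math


def select_ar_order(fit, T, p_max=3, critical_value=1.645):
    """Return the largest p <= p_max whose last AR coefficient is significant.

    fit(p) -> (phi_p_hat, theta_hat) is a hypothetical estimator of Eq. 7.
    """
    for p in range(p_max, 0, -1):
        phi_p_hat, theta_hat = fit(p)
        z = math.sqrt(T) * phi_p_hat * theta_hat  # Z(p), N(0,1) under H0: phi_p = 0
        if abs(z) > critical_value:
            return p
    return 0  # no lag is significant
```

Starting from \(p_{\max}\) and stopping at the first rejection keeps every lag below the selected order in the model, which is what the general-to-specific principle requires.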

Both the KPSS and LMC tests have the same limiting distributions, which are shown to belong to the family of the Cramér-von Mises distributions—see Harvey (2001). The asymptotic critical values can be found in Kwiatkowski et al. (1992) for the models i=1, 2 and Hobijn et al. (1998) for the model i=0, and finite sample critical values are available in Sephton (1995) for the models i=1, 2.

Monte Carlo evidence

In this section we conduct Monte Carlo simulations to assess how the stationarity tests described above behave in practice. The DGP that is used to analyse the empirical size is given by:

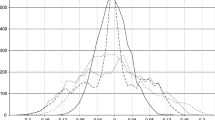

with \(\varepsilon_t \sim iid\; N(0,1)\). The set of autoregressive parameters that has been specified is \(\phi_1=\{0, 0.5, 0.7, 0.8, 0.9, 0.95, 0.96, 0.97, 0.98, 0.99, 1\}\) and \(\phi_2=\{0, 0.5, -0.5\}\). Note that these values allow us to analyse both the empirical size and power of the test, since we allow one of the roots to be unity. The sample size has been set equal to \(T=\{100, 300, 600\}\) and \(n=2{,}000\) replications are conducted for each experiment. The nominal size is set at the 5% level and the finite sample critical values are drawn from the response surfaces in Sephton (1995). The GAUSS codes that have been used in the simulations are available from the authors upon request.
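The structure of such an experiment can be sketched as follows. The sketch assumes the factored AR(2) form \((1-\phi_1 L)(1-\phi_2 L)\,y_t = \varepsilon_t\), which is consistent with the remark that \(\phi_1=1\) makes one of the roots unity; the zero initial values and the burn-in period are further assumptions. The argument `statistic` is any callable that maps a series to a test statistic, and `critical_value` must be supplied by the user (for instance from the response surfaces mentioned above); neither is implemented here.

```python
import random


def simulate_ar2(T, phi1, phi2, rng, burn_in=50):
    """Draw T observations from (1 - phi1 L)(1 - phi2 L) y_t = eps_t, eps_t ~ N(0, 1)."""
    y_lag1 = y_lag2 = 0.0
    path = []
    for _ in range(T + burn_in):
        # Expanded form: y_t = (phi1 + phi2) y_{t-1} - phi1 phi2 y_{t-2} + eps_t
        y = (phi1 + phi2) * y_lag1 - phi1 * phi2 * y_lag2 + rng.gauss(0.0, 1.0)
        path.append(y)
        y_lag2, y_lag1 = y_lag1, y
    return path[burn_in:]


def rejection_frequency(statistic, critical_value, T, phi1, phi2, n_rep=2000, seed=0):
    """Share of replications in which the statistic exceeds the critical value."""
    rng = random.Random(seed)
    rejections = sum(
        statistic(simulate_ar2(T, phi1, phi2, rng)) > critical_value
        for _ in range(n_rep)
    )
    return rejections / n_rep
```

With \(\phi_1<1\) the returned frequency estimates the empirical size, and with \(\phi_1=1\) it estimates the power against a unit root.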

The results of the simulations are reported in Tables 1 and 2 for an AR(1) process. The labels in the tables are as follows: K denotes the rule in Kurozumi (2002) with \(k=0.7\); CA the rule in Choi and Ahn (1995, 1999); SPC the rule in Sul et al. (2005); Z(p) the procedure in Leybourne and McCabe (1999), with a maximum order for the parametric correction of \(p_{\max}=3\) and the 10% significance level for the lags to be included in the auxiliary regression (7); AIC the Akaike information criterion, with a maximum order for the parametric correction of \(p_{\max}=3\); and known the case where we assume that the order of the autoregressive model is \(p=0\) for \(\phi=0\) and \(p=1\) elsewhere (see the Notes section).

Looking at the results for the specification that includes a constant term in the regression, Table 1 shows that the SPC method is correctly sized except when the autoregressive parameter is very close to one. The use of the AIC criterion together with this procedure results in a size that is almost identical to the one where the order of the autoregressive model is known. All other tests are oversized with respect to the SPC method, especially the CA and LMC methods. Note that the value of ϕ=1 gives us a first measure of the power of the tests. In this case the lower power of the SPC test can be explained by the size distortions of the others. Similar conclusions are reached irrespective of the deterministic specification (Table 2). Therefore, in order to save space, we continue the analysis of empirical size and power focusing on the methods with correct size, i.e. the KPSS test implementing the SPC method to estimate the long-run variance: KPSS (SPC).

Table 3 reports the rejection frequencies of the KPSS (SPC) test for AR(2) processes. Once again, the test has the correct size except when one of the roots is very close to one. Note that the test has virtually no power when a trend is included in the auxiliary regression, there is a negative root and the sample is not large enough (say T=100). Nevertheless, as the sample size grows the power increases.

Concerning the power of the test, in the previous tables we saw that KPSS (SPC) has reasonable power, especially in large samples, for pure random walks and I(1) processes with positively autocorrelated shocks. Another DGP commonly used to study the power of stationarity tests is given by the structural Eqs. 1–2 with \(\sigma_v^2>0\); see Kwiatkowski et al. (1992), Leybourne and McCabe (1994), and Lee (1996) among others. In the experiments we set \(\beta=0\) and \(u_t=\varepsilon_t \sim iid\; N(0, \sigma_\varepsilon^2)\). Note that in this case the reduced form of the DGP can be written as:

where \(\eta_t\) is a white noise process, \(\tau = 1 + \frac{1}{2}r - \frac{1}{2}\sqrt{(r+4)r}\) and \(r=\sigma_v^2/\sigma_\varepsilon^2\) is the signal-to-noise ratio. Therefore, \(y_t\) is an IMA(1,1) process where the MA parameter depends on the signal-to-noise ratio. Table 4 shows the empirical power of the KPSS (SPC) test for several values of \(r\). For low values of \(r\) the corresponding MA parameter \(\tau\) is very close to one, so there is quasi-cancellation of the roots and the process looks similar to a white noise process in finite samples. For these values, the power of the KPSS (SPC) test does not increase monotonically. When the signal-to-noise ratio is greater than 0.05, the power rises monotonically and can be considered adequate.
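The mapping from the signal-to-noise ratio to the MA parameter can be checked numerically. With \(\beta=0\), first differences satisfy \(\Delta y_t = v_t + \Delta\varepsilon_t\), whose first-order autocorrelation is \(-1/(r+2)\); matching it to the MA(1) value \(-\tau/(1+\tau^2)\) gives \(\tau^2-(r+2)\tau+1=0\), and the invertible root is the expression for \(\tau\) stated above. The following sketch evaluates that root; the function names are illustrative.

```python
import math


def ma_parameter(r):
    """Invertible MA(1) root tau implied by the signal-to-noise ratio r >= 0."""
    return 1 + r / 2 - math.sqrt((r + 4) * r) / 2


def first_autocorrelation(tau):
    """First-order autocorrelation of eta_t - tau * eta_{t-1}."""
    return -tau / (1 + tau ** 2)


if __name__ == "__main__":
    for r in (0.0001, 0.001, 0.01, 0.05, 0.1, 1.0):
        print(f"r = {r:<7} tau = {ma_parameter(r):.4f}")
```

For instance, \(r=0.05\) gives \(\tau=0.8\), whereas \(r=0\) gives \(\tau=1\), the case in which the MA root cancels the unit root exactly.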

Additional evidence concerning the purchasing power parity hypothesis

Empirical economic research has devoted increasing interest to testing the PPP hypothesis. Some relevant references in the field are Meese and Rogoff (1988), Abuaf and Jorion (1990), Froot and Rogoff (1995), Rogoff (1996), Oh (1996) and Papell (1997), to mention a few. All these contributions use unit root or cointegration techniques, in a time series or panel data framework, in order to assess whether PPP holds. In this paper we contribute to the evidence available for this topic using the stationarity tests described above.

Stationarity tests have been extensively applied in PPP hypothesis testing, mainly because PPP appears as the null hypothesis of these tests (see, for instance, Corbae and Ouliaris (1991)). Taylor (2001) indicates that the PPP hypothesis should be tested using a procedure that specifies the null hypothesis of stationarity, because this hypothesis is well established in economists' priors. Some authors adopt this idea and rely on stationarity statistics to test the PPP hypothesis. For instance, Culver and Papell (1999) used the KPSS test and found evidence in favour of the PPP in the post-Bretton Woods era with quarterly data. Engel (2000) uses the KPSS test when testing for the PPP, although he warns of the low power found in the simulations. Finally, Caner and Kilian (2001) also apply the KPSS and LMC tests to the PPP, while warning of size distortion problems.

We have shown that the application of stationarity tests is not exempt from problems, since size distortions can lead to erroneous conclusions. Fortunately, these pitfalls can be mitigated if the long-run variance is properly estimated. In this section we follow the recommendations given in the previous section and apply the KPSS test to two different sets of time series using the procedure developed in Sul et al. (2005) to estimate the long-run variance. The first data set refers to the 19 countries considered in Taylor (2002). These data allow us to compute annual real exchange rates for Argentina (1884), Australia (1870), Belgium (1880), Brazil (1889), Canada (1870), Denmark (1880), Finland (1881), France (1880), Germany (1880), Italy (1880), Japan (1885), Mexico (1886), Netherlands (1870), Norway (1870), Portugal (1890), Spain (1880), Sweden (1880), Switzerland (1892) and the United Kingdom (1870), using the United States as the numeraire; see Taylor (2002) for additional details on the data sources. The starting date of the time series is irregular (the first year of each series is given in parentheses above), but all of them end in 1996. Therefore, the data cover a century. The second data set is given by the post-Bretton Woods era monthly time series drawn from the International Financial Statistics database of the IMF, which covers the period from January 1973 to December 1998. This data set covers Australia, Austria, Belgium, Canada, Denmark, Finland, France, Germany, Greece, Ireland, Italy, Japan, Netherlands, New Zealand, Norway, Portugal, Spain, Sweden, Switzerland and the United Kingdom.

Table 5 reports the results for the ADF-GLS test, the modified tests in Ng and Perron (2001), namely the modified Sargan–Bhargava (MSB) and modified Zt (MZt) tests, and the KPSS (SPC) statistic. Although the model that includes the constant term is the deterministic specification that is consistent with the PPP hypothesis, we have also computed the tests allowing for a time trend. As pointed out in Taylor (2002), this follows the spirit of the Balassa–Samuelson effect. The order of the autoregressive correction is selected using the modified Akaike information criterion (MAIC) proposed in Ng and Perron (2001). These results indicate that the null hypothesis of a unit root cannot be rejected by any of the unit root tests for Canada, Denmark, France, Japan, Norway and Portugal. On the other hand, we find evidence in favour of the PPP for 13 countries, although for Brazil, Mexico and Switzerland we require the specification of a time trend. There are some discrepancies between Taylor's results and ours, since he concludes that the PPP has held in the long run over the twentieth century for his sample of 20 countries. These contradictions might be due to the use of the MAIC instead of the Lagrange multiplier as the lag-length selection procedure. The results for Canada and France, where the order of the autoregressive correction selected by the MAIC criterion is large, are of particular interest.

When looking at the KPSS (SPC) statistic where the specification is given by a constant term, the PPP hypothesis cannot be rejected at the 5% level for 12 countries. If the deterministic component is given by a time trend, the KPSS statistic finds evidence in favour of the PPP at the 5% level for 18 countries. Taking into account both the results of the unit root and stationarity tests, we have found evidence in favour of the PPP hypothesis for Argentina, Finland, Germany, Italy, Netherlands, Spain and UK when the deterministic specification is given by a constant term. When allowing for a time trend, we conclude that the PPP holds for Argentina, Belgium, Brazil, Finland, Germany, Italy, Mexico, Sweden and Switzerland. In all, evidence in favour of the PPP hypothesis has been found for 12 countries. The real exchange rates for France, Japan, and Switzerland are characterised as non-stationary for the unit root and stationarity tests when using the constant term as the deterministic component. France is still classified as non-stationary when the time trend is allowed. For the other countries, the application of the tests results in inconclusive situations.
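The way the two kinds of test are combined in this confirmatory analysis can be summarised as a simple decision rule. The sketch below is only an illustration of that logic: the label strings and the function name are hypothetical, and the two remaining combinations (both nulls rejected, or neither rejected) are grouped under a single "inconclusive" label.

```python
def classify(unit_root_rejected, stationarity_rejected):
    """Combine a unit root test and a stationarity test into one conclusion."""
    if unit_root_rejected and not stationarity_rejected:
        return "stationary"        # evidence in favour of the PPP
    if stationarity_rejected and not unit_root_rejected:
        return "non-stationary"    # evidence against the PPP
    return "inconclusive"          # the two tests do not agree on a classification


if __name__ == "__main__":
    for ur in (True, False):
        for st in (True, False):
            print(ur, st, classify(ur, st))
```

Each boolean is the outcome of the corresponding test at the chosen significance level and for one deterministic specification, so a country can be classified differently under the constant and the time trend models.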

Therefore, our results contradict those in Taylor (2002): while he found evidence in favour of the PPP hypothesis, using either the constant or the time trend, we can conclude that the PPP holds for only 12 of the 19 countries studied.

The picture changes when analysing the monthly data set (see Table 6). In this case we find evidence in favour of the PPP hypothesis for Ireland and (mild evidence for) Italy when using the deterministic specification given by a constant term, and for New Zealand when using the time trend. Evidence of non-stationarity is found for Australia, Austria, Belgium, Canada, Denmark, Japan, Portugal, Spain, Sweden and Switzerland when specifying a constant term. The evidence against the PPP hypothesis increases when using the time trend, since non-stationary behaviour is found for Austria, Belgium, Canada, Denmark, Finland, France, Germany, Greece, Italy, Norway, Portugal, Spain and Sweden. For the other cases contradictory conclusions are found.

To sum up, the evidence in favour of the PPP hypothesis depends on the sample period. For the large data set covering a century we found strong evidence in favour of this hypothesis (both types of test coincide in classifying the series as stationary) for: Argentina, Finland, Germany, Italy, Netherlands, Spain and UK and, if a trend is included, for: Belgium, Sweden and Switzerland. Moreover, for this span there is strong evidence against the PPP for Brazil, France, Japan and Switzerland when no trend is allowed but only for France when one is. For the monthly data set covering the last quarter of the century, there is strong evidence favouring the hypothesis only for two countries (Italy and Ireland) for the specification without time trend and New Zealand if this term is included. Strong evidence against the PPP is found for (no time trend in the auxiliary regression): Australia, Austria, Belgium, Canada, Denmark, Japan, Portugal, Spain, Sweden and Switzerland, and, if there is a trend, for Austria, Belgium, Canada, Denmark, Finland, France, Germany, Greece, Italy, Norway, Portugal, Spain and Sweden. Finally, it should be mentioned that contradictory results for some countries for these two data sets and periods could be due to a structural change—see Cheung and Chinn (1997). In this respect our results indicate that those studies aiming to analyse the PPP hypothesis allowing for structural breaks using, for instance, the approach in Kurozumi (2002), should pay special attention to the estimation of the long-run variance in order to control size distortions.

Conclusion

The paper has assessed the performance of the different choices that an analyst can make when computing stationarity tests. Although the scope of the paper has not covered all the stationarity tests that are available in the econometric literature, we have focused on the two most widely used, i.e. the KPSS and the LMC tests. The aim of the paper is to offer advice on the computation of these tests, assessing the consequences of the different choices for the selection of the spectral bandwidth or the lag length. We conclude that the KPSS test that estimates the long-run variance using the proposal in Sul et al. (2005) is the best choice, since this combination shows less size distortion than the LMC test and has reasonable power. Therefore, the inference on the stochastic properties of the time series should be based on the non-parametric approach when computing stationarity tests.

Finally, our findings extend to other fields of application of stationarity tests. For instance, our suggestions can be taken into account when computing the KPSS test in the seasonal framework (see Lyhagen (2000) and Phillips and Jin (2002)), when dealing with fractional integration (see Lee and Schmidt (1996) and Amsler (1999)), when testing for stationarity allowing for structural breaks (see Carrion-i-Silvestre and Sansó (2004), Lee and Strazicich (2001), Busetti and Harvey (2001) and Kurozumi (2002)), or when testing for cointegration (see Harris and Inder (1994), Shin (1994), Bartley et al. (2001) and Carrion-i-Silvestre and Sansó (2005)).

Notes

Similar results were obtained with the BIC and LWZ information criteria.

References

Abuaf N, Jorion P (1990) Purchasing power parity in the long run. J Finance 45:157–174

Amano R, Van Norden S (1992) Unit-root tests and the burden of proof. Discussion paper, Bank of Canada

Amsler C (1999) Size and power: lower tail KPSS tests and anti persistent alternatives. Appl Econ Lett 6:693–695

Andrews DWK (1991) Heteroskedasticity and autocorrelation consistent covariance matrix estimation. Econometrica 59:817–858

Andrews DWK, Monahan JC (1992) An improved heteroskedasticity and autocorrelation consistent covariance matrix estimator. Econometrica 60:953–966

Bartley WA, Lee J, Strazicich MC (2001) Testing the null of cointegration in the presence of a structural break. Econ Lett 73:315–323

Busetti F, Harvey A (2001) Testing for the presence of a random walk in series with structural breaks. J Time Ser Anal 22:127–150

Caner M, Kilian L (2001) Size distortions of tests of the null hypothesis of stationarity: evidence and implications for the PPP debate. J Int Money Financ 20:639–657

Carrion-i-Silvestre JL, Sansó A (2004) The KPSS test with two structural breaks. Discussion paper, Department of Econometrics, Statistics and Spanish Economy. University of Barcelona

Carrion-i-Silvestre JL, Sansó A (2005) Testing the null of cointegration with structural breaks. Oxford Bulletin of Economics and Statistics Forthcoming.

Carrion-i-Silvestre JL, Sansó A, Artís M (2001) Unit root and stationarity tests' wedding. Econ Lett 70:1–8

Charemza WW, Syczewska EM (1998) Joint application of the Dickey–Fuller and KPSS tests. Econ Lett 61:17–21

Chen MY (2002) Testing stationarity against unit roots and structural changes. Appl Econ Lett 9:459–464

Cheung YW, Chinn MD (1997) Further investigation of the uncertain unit root in GNP. J Bus Econ Stat 15:68–73

Choi I, Ahn BC (1995) Testing for cointegration in a system of equations. Econom Theory 11:952–983

Choi I, Ahn BC (1999) Testing the null of stationarity for multiple time series. J Econom 88:41–77

Corbae D, Ouliaris S (1991) A test of long-run purchasing power parity allowing for structural breaks. Econ Rec 67:26–33

Culver SE, Papell DH (1999) Long-run purchasing power parity with short-run data: evidence with a null hypothesis of stationarity. J Int Money Financ 18:751–768

Engel C (2000) Long-run PPP may not hold after all. J Int Econ 51:243–273

Froot KA, Rogoff K (1995) Perspectives on PPP and long-run real exchange rates. In: Grossman G, Rogoff K (eds) Handbook of international economics, vol III. Elsevier, North-Holland, pp 1647–1688

Gabriel VJ (2003) Cointegration and the joint confirmation hypothesis. Econ Lett 78:17–25

Harris D, Inder B (1994) A test of the null hypothesis of cointegration. In: Hargreaves CP (ed) Nonstationary time series analysis and cointegration, Chap. 5:133–152. Oxford University Press

Harvey AC (2001) A unified approach to testing for stationarity and unit roots. Discussion paper, Faculty of Economics and Politics. University of Cambridge

Hobijn B, Franses PHB, Ooms M (1998) Generalizations of the KPSS-test for stationarity. Discussion Paper 9802, Econometric Institute. Erasmus University Rotterdam

Kurozumi E (2002) Testing for stationarity with a break. J Econom 108:63–99

Kwiatkowski D, Phillips PCB, Schmidt PJ, Shin Y (1992) Testing the null hypothesis of stationarity against the alternative of a unit root: how sure are we that economic time series have a unit root. J Econom 54:159–178

Lee J (1996) On the power of stationarity tests using optimal bandwidth estimates. Econ Lett 51:131–137

Lee J, Schmidt P (1996) On the power of the KPSS test of stationarity against fractionally-integrated alternatives. J Econom 73:285–302

Lee J, Strazicich M (2001) Testing the null of stationarity in the presence of one structural break. Appl Econ Lett 8:377–382

Leybourne SJ, McCabe BPM (1994) A consistent test for a unit root. J Bus Econ Stat 12:157–166

Leybourne SJ, McCabe BPM (1999) Modified stationarity tests with data-dependent model selection rules. J Bus Econ Stat 17:264–270

Liu J, Wu S, Zidek JV (1997) On segmented multivariate regressions. Stat Sin 7:497–525

Lyhagen J (2000) The seasonal KPSS statistic. Discussion paper, Department of Economic Statistics. Stockholm School of Economics

Maddala GS, Kim IM (1998) Unit roots, cointegration and structural change. Cambridge

McCabe BPM, Leybourne SJ (1998) On estimating an ARMA model with a MA unit root. Econom Theory 14:326–338

Meese R, Rogoff K (1988) What is real? The exchange rate interest differential relation over the modern floating rate period. J Finance 43:933–948

Nabeya S, Tanaka K (1988) Asymptotic theory of a test for the constancy of regression coefficients against the random walk alternative. Ann Stat 16:218–235

Newey WK, West KD (1994) Automatic lag selection in covariance matrix estimation. Rev Econ Stud 61:631–653

Ng S, Perron P (2001) Lag length selection and the construction of unit root tests with good size and power. Econometrica 69:1519–1554

Oh KY (1996) Purchasing power parity and unit root tests using panel data. J Int Money Financ 15:405–418

Papell D (1997) Searching for stationarity: purchasing power parity under the current float. J Int Econ 43:313–332

Phillips PCB (1987) Time series regression with a unit root. Econometrica 55:277–301

Phillips PCB, Jin S (2002) The KPSS test with seasonal dummies. Econ Lett 77:239–243

Phillips PCB, Perron P (1988) Testing for a unit root in time series regression. Biometrika 75:335–346

Rogoff K (1996) The purchasing power parity puzzle. J Econ Lit 34:647–668

Sephton PS (1995) Response surface estimates of the KPSS stationarity test. Econ Lett 47:255–261

Shin Y (1994) A residual-based test of the null of cointegration against the alternative of no cointegration. Econom Theory 10:91–115

Sul D, Phillips PCB, Choi CY (2005) Prewhitening Bias in HAC Estimation. Oxf Bull Econ Stat 67:517–546

Taylor AM (2001) Potential pitfalls for the purchasing-power-parity puzzle? Sampling and specification biases in mean-reversion tests of the law of one price. Econometrica 69:473–498

Taylor AM (2002) A century of purchasing-power parity. Rev Econ Stat 84:139–150

Acknowledgements

We thank A. M. Taylor for providing us with the data, and the Editor, B. H. Baltagi, and two anonymous referees for helpful comments. Financial support is acknowledged from the Ministerio de Ciencia y Tecnología under grant SEJ2005-08646/ECON and SEJ2005-07781/ECON and Conselleria d'Economia, Hisenda i Innovació del Govern Balear under PRIB-2004-10095, respectively.

Carrion-i-Silvestre, J.L., Sansó, A. A guide to the computation of stationarity tests. Empirical Economics 31, 433–448 (2006). https://doi.org/10.1007/s00181-005-0023-8

DOI: https://doi.org/10.1007/s00181-005-0023-8