Abstract

This paper is concerned with the topological optimization of elastic structures, with the goal of minimizing the compliance and/or mass of the structure, subject to a stress constraint. It is well known that, depending upon the geometry and the loading conditions, the stress field can exhibit singularities; if these singularities are not adequately resolved, the topological optimization process will be ineffective. For computational efficiency, adaptive mesh refinement is required to resolve the stress field adequately. This poses a challenge for the traditional Solid Isotropic Material with Penalization (SIMP) method, which employs a one-to-one correspondence between the finite element mesh and the optimization design variables: as the mesh is refined, the optimization process must somehow be restarted with a new set of design variables. The proposed solution is a dual mesh approach: one finite element mesh defines the material distribution, and a second finite element mesh is used to compute the displacement and stress fields. This allows for stress-based adaptive mesh refinement without modifying the definition of the optimization design variables. A second benefit of this dual mesh approach is that there is no need to apply a filter to the design variables to enforce a length scale; instead, the length scale is determined by the design mesh. This reduces the number of design variables and allows the designer to apply a spatially varying length scale if desired. The efficacy of this dual mesh approach is established via several stress-constrained topology optimization problems.


1 Introduction

We are concerned with the topology optimization of linear elastic structures with stress constraints. The goal is to determine the material layout that minimizes the compliance and/or mass, subject to a constraint on the stress of the structure. This topological optimization problem is a high dimensional nonlinear programming problem.

There are a variety of formulations for the structural topology optimization problem. Our starting point is the density method (Bendsoe 1989, 2003; Rozvany 2001). In this approach, the optimization design variables are the material volume fraction for every element in the finite element analysis. These variables are then filtered to impose a minimum length scale on the design. A penalty function is used to coerce the continuous volume fractions toward a binary state. In this article, a method using element-based density, with a penalty, and a spatial filter, is referred to as SIMP (Solid Isotropic Material with Penalization). This is a popular method because it is quite general, and many interesting designs have been created with this method.

One problem that plagued early incarnations of density-based topology optimization was a checkerboard instability (Sigmund and Petersson 1998; Bourdin 2001). It was observed that the computed volume fractions would take on an alternating black (100%) and white (0%) checkerboard pattern. This checkerboard pattern is an indication that the density-based topology optimization problem is ill-posed, in the sense that the solution does not converge as the computational mesh is refined. Even setting aside the lack of convergence, checkerboard results are not useful, because the finite element method computes an unrealistically small compliance for such a structure and, in addition, the resulting checkerboard geometry cannot be manufactured.

Modern density-based implementations employ a filter to eliminate checkerboard instabilities (Bruns and Tortorelli 2001, 2003). The filter is typically a convolution of the volume fraction field with a small kernel that is constant, linear (the “hat” or “cone” filter), or Gaussian. The filter radius is specified independently of the mesh resolution, and as such allows the user to impose a minimal feature size, or length scale, upon the problem. This allows the computational mesh to be refined, yielding accurate compliance estimates. Other means of achieving the same effect are possible; for example, since the Green’s function of the (modified) Helmholtz PDE is a smooth, rapidly decaying kernel, the finite element discretization of the Helmholtz equation can be used as a filter (Lazarov and Sigmund 2011). It should be noted that perimeter and slope constraints can also be used to regularize the optimization problem (Haber et al. 1996; Ambrosio and Buttazzo 1993; Niordson 1983). A disadvantage of filtering is that the filtered density is spatially correlated, i.e., its information content is reduced, resulting in an inefficient representation of the geometry and a non-linear programming problem that is larger than necessary.
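As a hedged illustration of the convolution filter described above, the following Python sketch applies a normalized cone (“hat”) filter to element volume fractions on a uniform 2D grid. The function name `cone_filter`, the grid layout, and the parameters are illustrative assumptions, not the implementation used in this work; production codes typically precompute a sparse weight matrix rather than looping over elements.

```python
import numpy as np

def cone_filter(x, radius, h=1.0):
    """Normalized cone ("hat") filter of an element-wise density field.

    x      : 2D array of element volume fractions on a uniform grid
    radius : filter radius in physical units (chosen independently of h)
    h      : element size, so the stencil half-width is about radius / h elements
    """
    ny, nx = x.shape
    r_el = int(np.ceil(radius / h))
    out = np.zeros_like(x, dtype=float)
    for j in range(ny):
        for i in range(nx):
            num, den = 0.0, 0.0
            for dj in range(-r_el, r_el + 1):
                for di in range(-r_el, r_el + 1):
                    jj, ii = j + dj, i + di
                    if 0 <= jj < ny and 0 <= ii < nx:
                        # weight decreases linearly with distance and vanishes at the radius
                        w = max(0.0, radius - h * np.hypot(di, dj))
                        num += w * x[jj, ii]
                        den += w
            out[j, i] = num / den   # normalization keeps a constant field unchanged
    return out
```

Applied to a checkerboard with `radius=1.5` (in units of h), the interior values collapse toward 0.5, which is why such a filter suppresses the instability.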

While the majority of research on topology optimization has focused on the compliance minimization problem, it can be argued that in real-world engineering applications the maximum local stress is an essential quantity of interest to be considered in the optimization. It is possible to consider the von Mises stress in every element of the mesh, as was done in Duysinx and Bendsoe (1998), but this yields a very large optimization problem, which becomes prohibitively large if a highly refined mesh is required to resolve the stress. It is therefore a practical necessity to reduce the stress field to a single scalar quantity. The standard p-norm and the KS norm were investigated for stress agglomeration in Yang and Chen (1996); these functions approximate the maximum value of the stress in a differentiable manner. In Yang and Chen (1996), the agglomerated stress measure was combined with the compliance into a single objective function. A ramp-like function was used for stress agglomeration in Amstutz and Novotny (2010); this particular function does not have units of stress, but it can be used as an objective to be minimized. Both the p-norm and the KS norm overestimate the stress, and an ad hoc calibration procedure was discussed in Le et al. (2010). Also in Le et al. (2010), two SIMP penalties are employed: \(v^{3}\) is used when computing the effective material properties of an element, and \(v^{1/2}\) is used when computing the resulting stress of an element. The purpose of these penalty functions is to steer the density towards a binary solid-void distribution. A cone filter was used in Le et al. (2010) to eliminate the checkerboard instability.

There is no requirement that the optimization design variables be element-centered relative densities as in the standard SIMP method. Level set methods have been combined with stress optimization (Suresh and Takalloozadeh 2013; Guo et al. 2011; Picelli et al. 2018; Guo et al. 2014b). A Moving Morphable Void (MMV) approach has also been combined with stress optimization (Zhang et al. 2018). Continuous “nodal” density values have been used as the design variables in density-based stress optimization (Jensen 2016). These approaches do not require a filtering step.

It is well known that solutions to elasticity problems (and related elliptic partial differential equations, e.g., electrostatics) can exhibit \(r^{-\lambda}\) behavior in the vicinity of a corner, where λ is a function of the angle of the corner. This has long been referred to as a singularity (Williams 1952; Karp and Karal 1962; Yosibash 2012). In this article, \(r^{-\lambda}\) behavior will be referred to as stress concentration. It has been observed that stress-constrained topology optimization problems can suffer from uniqueness and convergence issues, and in the topology optimization literature this has been referred to as the singularity problem (Cheng and Jiang 1992). This singularity problem must be addressed if stress-constrained topology optimization is considered. Several methods have been introduced to resolve it: the ε-relaxed approach (Cheng and Guo 1997), the qp-relaxed method (Bruggi 2008), and the relaxed stress indicator. In this paper, we use the relaxed stress indicator of Le et al. (2010) to resolve the singularity problem.

Adaptive mesh refinement (AMR) can be used to refine the computational mesh in the vicinity of stress concentrations. AMR was combined with a SIMP method and stress constraints in Salazar de Troya and Tortorelli (2018). Previously, AMR was used for standard compliance optimization with the goal of refining material interfaces and reducing computational cost (Maute and Ramm 1995; Bruggi and Verani 2011; Nana et al. 2016). Adaptive mesh refinement is clearly superior to uniform mesh refinement with regard to the accuracy versus cost of the finite element analysis. A problem with the methods proposed in Salazar de Troya and Tortorelli (2018), Maute and Ramm (1995), Bruggi and Verani (2011), and Nana et al. (2016) is that, as the mesh is refined, the design variables are completely redefined and the non-linear programming algorithm must be restarted. One approach to solve this problem is to decouple the design variables from the finite element analysis, and this is the approach proposed here.

The goal of isolating the geometric representation from the computational domain has been previously investigated in topology optimization. In Guest and Genut (2010), a separate background mesh is superimposed over the computational domain to develop an adaptive topology optimization scheme. In this approach, the nodes of the background mesh are assigned density values, and circles (spheres) at these nodes are projected onto the computational domain to define the structure. This is akin to the use of a cylindrical filter in the usual density methods if the nodes of the background mesh were located at the element centroids of the computational mesh. Similar approaches that employ scattered points (mesh-free) for material variation are described in Kang and Wang (2011), Luo et al. (2013), and Wang et al. (2013, 2014). More recently, Guo et al. (2014a), Norato et al. (2015), and Zhang et al. (2016) developed topology optimization schemes whereby prescribed objects, e.g., bars and plates, are used as geometric primitives to define the structure. Methods employing two distinct meshes for design and analysis have also been investigated (Panesar et al. 2017). Several papers adopt dual mesh approaches to achieve multiresolution topology optimization (MTO) (Nguyen et al. 2010, 2017; Lieu and Lee 2017; Gupta et al. 2018; Liu et al. 2018). They use a coarser discretization for the analysis mesh than for the design mesh because their goal is to obtain high-resolution designs at relatively low computational cost. The MTO approach works best when higher-order elements are used for the analysis mesh to capture the high resolution of the design mesh (Lieu and Lee 2017; Nguyen et al. 2017). However, QR patterns can appear in the MTO approach (Gupta et al. 2018) because the design mesh is finer than the analysis mesh. Our method uses a design mesh that is coarser than the analysis mesh; therefore, our approach does not exhibit the QR pattern.
Additionally, we do not require a higher-order element for the analysis. It is also important to note that this previous research on geometry representation, dual mesh, and AMR was not concerned with resolving stress, which is the emphasis of the work here.

The method proposed here is based on dual meshes: a design mesh for representation of the geometry and an analysis mesh for computation of displacement, strain, and stress. One advantage of this method is that the analysis mesh can be adaptively refined to resolve stress singularities without disturbing the definition of the design variables. A second advantage is that no additional filtering step is required to eliminate checkerboard instabilities or enforce a length scale. This is similar to Fourier (White and Stowell 2018) and NURBS methods (Chen and Shapiro 2008; Qian 2013). In the proposed method, the length scale, or design complexity control, is enforced by the design mesh, and this length scale can be non-uniform, permitting small features in some regions and prohibiting them in others. This is somewhat analogous to recent work (Zhang et al. 2017) that used skeletons to enforce spatially varying design resolution. A novel aspect of the proposed method is that the geometry is defined using higher-order Bernstein polynomials on the design mesh. Several researchers have proposed using continuous polynomials to represent geometry (Matsui and Terada 2004; Rahmatalla and Swan 2004; Kang and Wang 2012). In Matsui and Terada (2004), it is claimed that quadratic shape functions cannot be used since they may allow the density to become negative. But this is a property of Lagrange polynomials, not of all polynomials. In the proposed method, higher-order Bernstein polynomials are used to represent the density on the design mesh. These polynomials are non-negative on the element and form a partition of unity; hence, no special constraints or penalties are required to enforce physical bounds on the relative density.

Below is a summary of the main contributions of our paper:

A novel dual mesh method for stress-constrained topology optimization is introduced.

The elasticity PDE is solved on an analysis mesh, with adaptive mesh refinement to resolve stress singularities, without affecting the design variables.

The material distribution, and hence optimization design variables, are defined on a design mesh.

The design mesh uses higher-order continuous Bernstein polynomials.

No filtering of design variables is required, and spatially varying length scale is easily supported.

No higher-order finite element is required for the physics analysis.

In Section 2, the structural topology optimization problem and density formulation are reviewed. In Section 3, the proposed dual adaptive mesh method is outlined. In Section 4, we present a variety of results, including the standard cantilever beam and L-bracket benchmark problems, as well as more complicated problems involving irregular domains.

2 The structural topological optimization problem

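The starting point in computational design is the analysis model. For the compliance problem, this is the Navier equation of static elasticity for linear isotropic media in a force-free volume Ω

\[ -\nabla \cdot \bar{\sigma} = 0 \ \text{in } {\varOmega}, \qquad \bar{\sigma} = \mathbb{C} : \nabla \mathbf{u}, \qquad \mathbf{u} = 0 \ \text{on } {\varGamma}_{\text{essential}}, \qquad \bar{\sigma} \cdot \mathbf{n} = \mathbf{t} \ \text{on } {\varGamma}_{\text{load}} \tag{1} \]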

where u is the displacement, t is the applied traction load, \(\bar {\sigma }\) is the stress, n is the surface normal, Γessential is the essential boundary condition surface, Γload is the traction loaded surface, and \(\mathbb {C}\) is the local stiffness tensor of the material. The domain Ω will be discretized via a finite element mesh. The material density is given by \(\rho = \rho_{0} v\), where \(\rho_{0}\) is the density of solid material and v is the volume fraction (or relative density) that satisfies 0 ≤ v ≤ 1. The constitutive tensor is also a function of the volume fraction,

\[ \mathbb{C}(v) = \mathbb{C}_{0} + v^{p} \left( \mathbb{C}_{1} - \mathbb{C}_{0} \right) \]

where p is a penalty parameter. This is a version of the standard Solid Isotropic Material with Penalization (SIMP) method (Bendsoe and Sigmund 2003). In our case, \(\mathbb {C}_{1}\) is the stiffness of solid material, and \(\mathbb {C}_{0}\) is the stiffness of the void material. Typically, \(\mathbb {C}_{0}\) is chosen to be \(10^{-6}\) times the corresponding solid value.
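A minimal Python sketch of this interpolation, assuming the common modified SIMP form \(E(v) = E_0 + v^p (E_1 - E_0)\) and using a scalar Young's modulus as a stand-in for the full stiffness tensor, is given below; the function and parameter names are hypothetical.

```python
def simp_modulus(v, E1=1.0, p=3.0, void_ratio=1e-6):
    """Modified SIMP interpolation of a scalar Young's modulus.

    E(v) = E0 + v**p * (E1 - E0), where the void modulus is E0 = void_ratio * E1,
    so the stiffness never vanishes and the stiffness matrix stays non-singular.
    """
    if not 0.0 <= v <= 1.0:
        raise ValueError("volume fraction v must lie in [0, 1]")
    E0 = void_ratio * E1
    return E0 + v ** p * (E1 - E0)
```

With p = 3, half-dense material carries only about 12.5% of the solid stiffness, which is what makes intermediate densities uneconomical for the optimizer.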

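The variational form of (1) is: find \(\mathbf{u} \in V\) such that

\[ \int_{\varOmega} \nabla \mathbf{V} : \mathbb{C} : \nabla \mathbf{u} \, d{\varOmega} = \int_{{\varGamma}_{\text{load}}} \mathbf{V} \cdot \mathbf{t} \, d{\varGamma} \qquad \forall \, \mathbf{V} \in V \]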

where the notation \({H^{d}_{1}}\left ({\varOmega } \right )\) denotes the Hilbert space of vector functions of dimension d, each component of \(\textbf {f} \in {H^{d}_{1}}\left ({\varOmega } \right ) \) satisfying \(f_{i} \in H_{1}\left ({\varOmega } \right )\). The subspace V is given by \(V=\left \{\mathbf{V} \in {H^{d}_{1}}\left ({\varOmega } \right ) : \mathbf{V} = 0 \text{ on } {\varGamma }_{\text {essential}} \right \}\), i.e., the subspace that satisfies the essential boundary conditions. This equation is discretized by replacing V with \(V_{h} = \operatorname{span}\left \{ \boldsymbol {\psi }_{1}, \boldsymbol {\psi }_{2},\ldots,\boldsymbol {\psi }_{N} \right \}\), where the functions \(\boldsymbol{\psi}_i\) are vector functions whose components are Lagrange interpolatory basis functions defined on a finite element mesh. This results in a sparse symmetric positive definite system of equations Ku = f, where u is the vector of degrees of freedom (DOF) of the basis function expansion of u,

\[ \mathbf{u}(\mathbf{x}) \approx \sum_{i=1}^{N} u_{i} \, \boldsymbol{\psi}_{i}(\mathbf{x}) \]

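the load vector f is given by

\[ f_{i} = \int_{{\varGamma}_{\text{load}}} \boldsymbol{\psi}_{i} \cdot \mathbf{t} \, d{\varGamma} \]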

and K is the stiffness matrix with

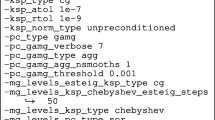

The essential boundary condition is enforced simply by constraining \(u_{i} = 0\) for all \(\boldsymbol{\psi}_{i}\) that are non-zero on \({\varGamma}_{\text{essential}}\) during the linear system solution process. This linear system of equations is solved using the preconditioned conjugate gradient method with algebraic multigrid preconditioning. The modular finite element method (MFEM) library (MFEM 2019) is used for discretization, assembly, and solution of the finite element equations.
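The dense-matrix Python sketch below mirrors these steps on a deliberately tiny problem: a 1D bar of linear elements clamped at node 0, the essential condition \(u_0 = 0\) imposed by removing the constrained DOF, and a preconditioned conjugate gradient solve. For self-containment it assumes a simple Jacobi (diagonal) preconditioner in place of the algebraic multigrid preconditioner and sparse MFEM data structures used in this work; all function names are hypothetical.

```python
import numpy as np

def assemble_bar(E, h):
    """Global stiffness of a 1D bar; E holds per-element (SIMP-interpolated) moduli."""
    n_el = len(E)
    K = np.zeros((n_el + 1, n_el + 1))
    for e, Ee in enumerate(E):
        K[e:e + 2, e:e + 2] += Ee / h * np.array([[1.0, -1.0], [-1.0, 1.0]])
    return K

def pcg(K, f, tol=1e-12, max_iter=1000):
    """Conjugate gradient with a Jacobi (diagonal) preconditioner."""
    Minv = 1.0 / np.diag(K)
    u = np.zeros_like(f)
    r = f - K @ u
    z = Minv * r
    p = z.copy()
    rz = r @ z
    for _ in range(max_iter):
        Kp = K @ p
        alpha = rz / (p @ Kp)
        u += alpha * p
        r -= alpha * Kp
        if np.linalg.norm(r) < tol * np.linalg.norm(f):
            break
        z = Minv * r
        rz_new = r @ z
        p = z + (rz_new / rz) * p
        rz = rz_new
    return u

def solve_clamped_bar(E, h, tip_load):
    """Solve Ku = f with u_0 = 0 by eliminating the constrained DOF."""
    K = assemble_bar(E, h)
    f = np.zeros(K.shape[0])
    f[-1] = tip_load
    free = np.arange(1, K.shape[0])          # every DOF except the clamped node
    u = np.zeros_like(f)
    u[free] = pcg(K[np.ix_(free, free)], f[free])
    return u, f
```

For a uniform bar with unit modulus, unit length, and unit tip load, the computed tip displacement and the compliance \(\mathbf{f}^T\mathbf{u}\) are both 1, matching the exact solution.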

The optimization problems of interest will involve the quantities total mass, total compliance, and a stress measure. The mass, M, is given by

\[ M = \int_{\varOmega} \rho_{0} \, v \, d{\varOmega} \]

while the compliance, Φ, is given by

\[ \varPhi = \mathbf{f}^{T} \mathbf{u} \]

The stress measure is a function of the von Mises stress, σVM, given by

\[ \sigma_{VM} = \sqrt{\tfrac{3}{2} \, \bar{\sigma}_{dev} : \bar{\sigma}_{dev}} \]

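where \(\bar {\sigma }_{dev}\) is the deviatoric component of the stress tensor

\[ \bar{\sigma}_{dev} = \bar{\sigma} - \tfrac{1}{3} \operatorname{tr}(\bar{\sigma}) \, \mathbf{I} \]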

We use the following relaxed stress, as in Le et al. (2010), to alleviate the stress singularity:

\[ \sigma_{VM}^{r} = \eta(\rho) \, \sigma_{VM} \]

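where \(\eta(\rho) := \rho^{p}\) is a monotonically increasing function of ρ with 0 < p < 1. Example stress measures include the p-norm

\[ \varPsi = \left( \sum_{i} \left( \sigma_{VM}^{r}(i) \right)^{p} \right)^{1/p} \tag{15} \]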

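and the log-exp function

\[ \varPsi = q + \frac{1}{p} \ln \sum_{i} \exp\left( p \left( \sigma_{VM}^{r}(i) - q \right) \right) \tag{16} \]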

In (15) and (16), the sum is over elements, with \(\sigma _{VM}^{{{r}}} \left (i \right )\) being the element center value, and the parameter p is typically between 8 and 12. To prevent possible numerical overflow, q is subtracted in the exponent of (16). These stress measures are often referred to as soft maximum functions, as they approximate the maximum in the limit \(p \rightarrow \infty \), but in practice it is best to consider these functions as conservative upper bounds on the maximum stress. In Amstutz and Novotny (2010) and Salazar de Troya and Tortorelli (2018), an integrated ramp function is used to measure stress,

and this function was multiplied by an appropriate scale factor and added to the mass to form a composite objective function.
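The stress quantities of this section can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the von Mises stress is computed from a full 3×3 stress tensor, the relaxation is \(\eta(\rho) = \rho^{a}\) with a hypothetical exponent name `a` (kept separate from the aggregation exponent p), the stresses passed to the aggregators are assumed positive and normalized (e.g., by the yield stress) so the exponentials stay well scaled, and the shift q in the log-exp measure is taken to be the largest element value.

```python
import numpy as np

def von_mises(sigma):
    """Von Mises stress sqrt(3/2 s:s) of a 3x3 stress tensor, s = deviatoric part."""
    sigma = np.asarray(sigma, dtype=float)
    s = sigma - np.trace(sigma) / 3.0 * np.eye(3)
    return np.sqrt(1.5 * np.tensordot(s, s))   # tensordot(s, s) = sum_ij s_ij * s_ij

def relaxed_stress(sigma_vm, rho, a=0.5):
    """Relaxed stress eta(rho) * sigma_vm with eta(rho) = rho**a, 0 < a < 1."""
    return np.asarray(rho, dtype=float) ** a * np.asarray(sigma_vm, dtype=float)

def p_norm(s, p=12.0):
    """p-norm of positive element stresses; the max is factored out to avoid overflow."""
    s = np.asarray(s, dtype=float)
    smax = s.max()
    return smax * np.sum((s / smax) ** p) ** (1.0 / p)

def log_exp(s, p=12.0):
    """Shifted log-sum-exp measure; with q = max(s) every exponent is <= 0."""
    s = np.asarray(s, dtype=float)
    q = s.max()
    return q + np.log(np.sum(np.exp(p * (s - q)))) / p
```

Both aggregates bound the true maximum from above: for N elements, the log-exp value lies within \(\ln N / p\) of the maximum and the p-norm within a factor \(N^{1/p}\), which is why they behave as conservative upper bounds at practical values of p.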

The volume fraction v has yet to be defined. In the standard SIMP method, v is a piecewise constant function associated with the mesh elements, and these volume fractions are themselves the optimization design variables. As mentioned in Section 1, this poses problems when AMR is used to refine the analysis mesh. A more general approach is to assume that v is some linear function of the design variables ω,

\[ v(\mathbf{x}) = \sum_{i} \omega_{i} \, \eta_{i}(\mathbf{x}) \tag{18} \]

where \(\eta_{i}\) are basis functions (2D or 3D functions of the spatial coordinate) that are unrelated to the finite element analysis mesh. Examples of smooth basis functions for material representation in topology optimization include B-splines (Chen and Shapiro 2008; Qian 2013), distance functions (Biswas et al. 2004), and Fourier modes (White and Stowell 2018). In Section 3.1, (18) will be defined using Bernstein polynomials on a design mesh that is distinct from the analysis mesh.

The constrained non-linear optimization problem is then

where \(\omega_{i}\) denotes a design variable. The chosen objective function is a combination of mass and compliance with a weighting parameter β. The weighting parameter β is not a requirement of the proposed dual mesh method; in fact, β = 0 may be used if desired. The weighting parameter β simply provides a way to incorporate both mass and compliance into the design process. For a given stress constraint, it is possible to compute many different designs by varying β. Also, using a small but non-zero value for β prevents the optimization process from converging to an empty or disconnected design. The chosen constraint is an inequality constraint involving the stress measure Ψ with yield stress \(\sigma_{Y}\). This non-linear programming problem (19) is solved using a primal-dual interior point method with line search, specifically the IPOPT software library (Wächter and Biegler 2006). In our implementation, we provide IPOPT with the gradients of the objective and the constraint.

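Clearly, the compliance function Φ is not a simple explicit function of ω. Written out explicitly,

\[ \varPhi(\omega) = \varPhi\left( \mathbf{u}\left( K\left( \xi\left( v(\omega) \right) \right) \right) \right) \]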

In the above equation, ω is the design variable. The volume fraction \(v \left (\omega \right )\) is a linear function of ω given by (18). The function \(\xi \left (v \right )\) represents the variation of the local material properties with respect to volume fraction, this is the penalty function in the SIMP method. The function \(K \left (\xi \right )\) simply states that the finite element stiffness matrix depends upon the material properties. The displacement u is clearly a function of K given by Ku = f, and finally, the compliance Φ is a function of u.
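Because this dependency chain is easy to get wrong in code, a common check is to compare the self-adjoint compliance gradient \(d\varPhi/d\omega_e = -\mathbf{u}^{T} (dK/d\omega_e)\, \mathbf{u}\) against finite differences. The sketch below does this for a hypothetical clamped 1D bar with a unit tip load, taking the element volume fractions themselves as the variables (so \(\partial v / \partial \omega\) is the identity, as in standard SIMP); in the dual mesh method each element derivative would additionally be multiplied by \(\partial v / \partial \omega\). The model and names are illustrative assumptions.

```python
import numpy as np

def bar_stiffness(v, h=0.25, p=3.0, Emin=1e-6):
    """Reduced stiffness matrix of a 1D bar clamped at node 0.

    Element e has modulus Emin + v[e]**p * (1 - Emin) and length h; the row and
    column of the clamped node are removed, so K is len(v) x len(v).
    """
    n = len(v)
    K = np.zeros((n + 1, n + 1))
    for e in range(n):
        Ee = Emin + v[e] ** p * (1.0 - Emin)
        K[e:e + 2, e:e + 2] += Ee / h * np.array([[1.0, -1.0], [-1.0, 1.0]])
    return K[1:, 1:]

def compliance_and_gradient(v, h=0.25, p=3.0, Emin=1e-6):
    """Compliance f^T u and its gradient -u^T (dK/dv_e) u for a unit tip load."""
    n = len(v)
    K = bar_stiffness(v, h, p, Emin)
    f = np.zeros(n)
    f[-1] = 1.0
    u = np.linalg.solve(K, f)
    phi = f @ u
    u_full = np.concatenate(([0.0], u))          # re-insert the clamped DOF
    grad = np.empty(n)
    for e in range(n):
        dE = p * v[e] ** (p - 1) * (1.0 - Emin)  # d(modulus)/dv_e
        du = u_full[e + 1] - u_full[e]           # element elongation
        grad[e] = -dE / h * du ** 2              # -u_e^T (dk_e/dv_e) u_e
    return phi, grad
```

No second linear solve is needed: the displacement u doubles as the adjoint solution, which is the practical meaning of compliance being self-adjoint.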

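Due to the linear and self-adjoint nature of compliance, the gradient can be computed via the chain rule without an additional adjoint solve. A key step in the derivation is to notice that differentiating Ku = f with respect to ω (the load f does not depend on ω) gives

\[ \frac{dK}{d\omega} \mathbf{u} + K \frac{d\mathbf{u}}{d\omega} = 0 \quad \Rightarrow \quad \frac{d\mathbf{u}}{d\omega} = -K^{-1} \frac{dK}{d\omega} \mathbf{u} \]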

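The gradient of \(\varPhi = \mathbf{f}^{T}\mathbf{u}\) is then

\[ \frac{d\varPhi}{d\omega} = \mathbf{f}^{T} \frac{d\mathbf{u}}{d\omega} = -\mathbf{f}^{T} K^{-1} \frac{dK}{d\omega} \mathbf{u} = -\mathbf{u}^{T} \frac{dK}{d\omega} \mathbf{u} \]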

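where \(K^{T} = K\) was used. The term dK/dω can be computed element-by-element via the chain rule,

\[ \frac{dK}{d\omega} = \sum_{e} \frac{\partial K_{e}}{\partial \xi} \frac{\partial \xi}{\partial v} \frac{\partial v}{\partial \omega} \]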

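The stress function is also a complicated function of the design variables. Written out explicitly,

\[ \varPsi(\omega) = \varPsi\left( \sigma_{VM}^{r}\left( \mathbf{u}\left( K\left( \xi\left( v(\omega) \right) \right) \right), \, v(\omega) \right) \right) \]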

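The adjoint method is used to compute this gradient:

\[ K \boldsymbol{\lambda} = \left( \frac{\partial \varPsi}{\partial \mathbf{u}} \right)^{T}, \qquad \frac{d\varPsi}{d\omega} = \frac{\partial \varPsi}{\partial \omega} - \boldsymbol{\lambda}^{T} \frac{dK}{d\omega} \mathbf{u} \]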

where λ is the adjoint solution, and again \(K^{T} = K\) was used. The key difference between the proposed Bernstein polynomial dual mesh method and the standard SIMP method is in the term \(\frac {\partial v}{\partial \omega }\), discussed below.
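The adjoint recipe (one extra solve \(K\boldsymbol{\lambda} = (\partial \varPsi / \partial \mathbf{u})^T\), then \(-\boldsymbol{\lambda}^T (dK/d\omega)\,\mathbf{u}\) element by element) can be verified on a toy problem. The hedged sketch below uses a hypothetical clamped 1D bar with a unit tip load and, as a stand-in for the stress measure, the P-norm of element strains computed with a unit solid modulus; it has no explicit dependence on the design variables, so \(\partial \varPsi / \partial \omega = 0\) here. The model and all names are illustrative assumptions, not the stress measure used in this work.

```python
import numpy as np

def bar_stiffness(v, h, p=3.0, Emin=1e-6):
    """Reduced stiffness of a 1D bar clamped at node 0 (SIMP element moduli)."""
    n = len(v)
    K = np.zeros((n + 1, n + 1))
    for e in range(n):
        Ee = Emin + v[e] ** p * (1.0 - Emin)
        K[e:e + 2, e:e + 2] += Ee / h * np.array([[1.0, -1.0], [-1.0, 1.0]])
    return K[1:, 1:]

def strain_operator(n, h):
    """Matrix B with (B @ u)[e] = (u_{e+1} - u_e) / h, where u holds the free DOFs."""
    B = np.zeros((n, n))
    for e in range(n):
        B[e, e] = 1.0 / h
        if e > 0:
            B[e, e - 1] = -1.0 / h
    return B

def measure_and_gradient(v, h=0.25, P=8.0, p=3.0, Emin=1e-6):
    """P-norm of element strains (a stand-in stress measure) and its adjoint gradient."""
    n = len(v)
    K = bar_stiffness(v, h, p, Emin)
    f = np.zeros(n)
    f[-1] = 1.0
    u = np.linalg.solve(K, f)
    B = strain_operator(n, h)
    eps = B @ u
    s = np.abs(eps)
    S = np.sum(s ** P)
    J = S ** (1.0 / P)
    dJ_ds = S ** (1.0 / P - 1.0) * s ** (P - 1.0)
    dJ_du = B.T @ (dJ_ds * np.sign(eps))
    lam = np.linalg.solve(K, dJ_du)              # adjoint solve; K is symmetric
    u_full = np.concatenate(([0.0], u))
    lam_full = np.concatenate(([0.0], lam))
    grad = np.empty(n)
    for e in range(n):
        dE = p * v[e] ** (p - 1) * (1.0 - Emin)
        # -lambda_e^T (dk_e/dv_e) u_e for the two DOFs of element e
        grad[e] = -dE / h * (lam_full[e + 1] - lam_full[e]) * (u_full[e + 1] - u_full[e])
    return J, grad
```

In the dual mesh setting, the element derivatives computed in the loop would be combined with \(\partial v / \partial \omega\) at the quadrature points to obtain the gradient with respect to the design mesh variables.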

3 Dual mesh with adaptivity

3.1 Bernstein polynomials

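The Lagrange interpolatory polynomials of order n are given by

\[ L_{i}^{n}(x) = \prod_{k=0,\, k \neq i}^{n} \frac{x - x_{k}}{x_{i} - x_{k}}, \qquad i = 0, \ldots, n \]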

where xk are the nodes, and by construction the polynomials \({L_{i}^{n}}\) interpolate at the nodes,

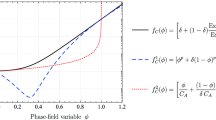

where δij is the Kronecker delta. It is common to define the Lagrange polynomials on the unit interval \(x \in \left [0,1\right ]\) with the xk uniformly spaced in \(\left [0,1\right ]\). Lagrange polynomials for n = 2 are shown in Fig. 1.

A smooth function \(f\left (x \right )\) can be approximated on the interval \(x \in \left [0,1\right ]\) via the basis function expansion

\[ \tilde{f}(x) = \sum_{k=0}^{n} \alpha_{k} \, L_{k}^{n}(x) \tag{34} \]

Due to the interpolation properties of the Lagrange basis functions, the choice \(\alpha_{k} = f(x_{k})\), where \(x_{k}\) are the aforementioned nodes, gives standard polynomial interpolation. Other choices for α are possible; for example, if it is desired to minimize the error

\[ \min_{\alpha} \int_{0}^{1} \left( f(x) - \sum_{k=0}^{n} \alpha_{k} L_{k}^{n}(x) \right)^{2} dx, \]

this results in a linear system of equations that must be solved for the \(\alpha_{k}\). In other words, while the Lagrange polynomials are interpolatory, they are not orthogonal.
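The two properties just stated, interpolation at the nodes and the need for a (non-diagonal) Gram system in a least-squares fit, can be checked numerically. The following Python sketch assumes uniformly spaced nodes on [0, 1] and Gauss-Legendre quadrature for the integrals; the function names are hypothetical.

```python
import numpy as np

def lagrange_basis(n, x):
    """Values L[i, j] = L_i^n(x_j) of the Lagrange polynomials on uniform nodes in [0, 1]."""
    nodes = np.linspace(0.0, 1.0, n + 1)
    x = np.atleast_1d(np.asarray(x, dtype=float))
    L = np.ones((n + 1, x.size))
    for i in range(n + 1):
        for k in range(n + 1):
            if k != i:
                L[i] *= (x - nodes[k]) / (nodes[i] - nodes[k])
    return L

def l2_coefficients(f, n, n_quad=10):
    """Coefficients minimizing the L2 error of sum_k alpha_k L_k - f on [0, 1].

    Because the basis is not orthogonal, this requires solving G alpha = b with
    the Gram matrix G_ij = int L_i L_j dx, which has non-zero off-diagonal entries.
    """
    t, w = np.polynomial.legendre.leggauss(n_quad)
    x, w = 0.5 * (t + 1.0), 0.5 * w            # map the Gauss rule from [-1, 1] to [0, 1]
    L = lagrange_basis(n, x)
    G = (L * w) @ L.T
    b = (L * w) @ f(x)
    return np.linalg.solve(G, b), G
```

Note also that \(L_0^2(0.75) = -0.125\): quadratic Lagrange functions take negative values inside the element, which is the behavior that motivates the Bernstein basis below.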

Bernstein polynomials of order n on the interval \(\left [0,1\right ]\) are given by

\[ B_{i}^{n}(x) = \binom{n}{i} x^{i} (1-x)^{n-i}, \qquad i = 0, \ldots, n \]

with the binomial coefficient

\[ \binom{n}{i} = \frac{n!}{i! \, (n-i)!} \]

Bernstein polynomials for n = 2 are shown in Fig. 2. For the same order n, the Lagrange polynomials and the Bernstein polynomials are simply different bases for the same polynomial space Pn. Bernstein polynomials can be used in basis function expansions of the form (34), but since they are not interpolatory, computing the coefficients α requires solving a system of equations. Nevertheless, Bernstein polynomials have some important properties, namely positivity

\[ B_{i}^{n}(x) \geq 0 \qquad \text{for } x \in [0,1] \]

and normalization (partition of unity)

\[ \sum_{i=0}^{n} B_{i}^{n}(x) = 1 \]

Given a basis function expansion (34) using Bernstein polynomials, when the coefficients satisfy \(0 < \alpha_{i} < 1\), the expansion is likewise bounded by \(0 < \tilde {f}\left (x \right ) < 1\), since by positivity and the partition of unity \(\tilde{f}(x)\) is a convex combination of the coefficients. This is not true of Lagrange polynomial interpolation. This has important consequences in the numerical solution of certain partial differential equations where a field such as relative density (or species concentration, etc.) must lie between 0 and 1 to remain physical.
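A short Python sketch, assuming nothing beyond the definition of the Bernstein polynomials, confirms positivity, the partition of unity, and the resulting convex-combination bound; `bernstein_basis` is a hypothetical helper name.

```python
import numpy as np
from math import comb

def bernstein_basis(n, x):
    """Array B[i, j] = B_i^n(x_j) of Bernstein polynomials on [0, 1]."""
    x = np.atleast_1d(np.asarray(x, dtype=float))
    return np.array([comb(n, i) * x ** i * (1.0 - x) ** (n - i) for i in range(n + 1)])
```

For example, with coefficients \(\alpha = (0.1, 0.9, 0.2)\) the quadratic expansion `alpha @ bernstein_basis(2, x)` stays within [0.1, 0.9] on the whole interval and matches \(\alpha_0\) and \(\alpha_2\) at the end points, illustrating that the basis is interpolatory only at x = 0 and x = 1.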

Higher-dimensional polynomials can be constructed via tensor product (here, P denotes either Lagrange or Bernstein polynomials); for example, in 3D we have

\[ P_{ijk}^{n}(x, y, z) = P_{i}^{n}(x) \, P_{j}^{n}(y) \, P_{k}^{n}(z) \]

for \((x,y,z) \in \left [0,1\right ]^{3}\). For convenience of notation, we define \({P_{m}^{n}}\left (\mathbf {x} \right ) = P_{ijk}^{n}\left (x, y, z\right )\) for a point \(\mathbf{x} \in \left [0,1\right ]^{3}\), with the triple index ijk reduced to a single index, e.g., \(m = \left (n+1\right )^{2} k + \left (n+1\right ) j + i\). Note that higher-order polynomials can also be defined for simplices (triangles in 2D, tetrahedra in 3D) as well as for other simple shapes, e.g., prisms and wedges, but these are not used in this article. Since the 1D Bernstein polynomials are interpolatory at the boundaries x = 0 and x = 1, the basis functions on 2D square elements and 3D cubical elements are interpolatory on edges and faces, respectively.
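The tensor-product construction and the single-index convention \(m = (n+1)^2 k + (n+1) j + i\) can be sketched as follows; the helper names are hypothetical, and the explicit triple loop is written for clarity rather than speed.

```python
import numpy as np
from math import comb

def bernstein_1d(n, t):
    """Values of the n + 1 one-dimensional Bernstein polynomials at a scalar t."""
    return np.array([comb(n, i) * t ** i * (1.0 - t) ** (n - i) for i in range(n + 1)])

def bernstein_3d(n, x, y, z):
    """All (n+1)^3 tensor-product values at one point, flattened with
    m = (n+1)^2 k + (n+1) j + i, where i, j, k index the x, y, z factors."""
    bx, by, bz = bernstein_1d(n, x), bernstein_1d(n, y), bernstein_1d(n, z)
    vals = np.empty((n + 1) ** 3)
    for k in range(n + 1):
        for j in range(n + 1):
            for i in range(n + 1):
                vals[(n + 1) ** 2 * k + (n + 1) * j + i] = bx[i] * by[j] * bz[k]
    return vals
```

This ordering coincides with a C-order (row-major) flattening of an array indexed as [k, j, i], and because each 1D factor is a partition of unity, the 27 quadratic values at any point also sum to one.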

3.2 Elements

In the finite element method, the term element is often used to denote both a geometric region of integration and a basis function used in a basis function expansion of a field defined within the region of integration. Here, we restrict the term element to denote the geometric region of integration, and use the term shape function to denote the basis functions used in a basis function expansion of a field defined within the element.

Let \(\tilde {\textbf {x}}\) denote a point in the reference element \({\varOmega }_{r} = \left [0,1 \right ]^{d}\), and let x denote a point in the actual element Ωe, as shown in Fig. 3. The actual element is implicitly defined using the above Lagrange interpolatory polynomials

\[ \mathbf{x}(\tilde{\mathbf{x}}) = \sum_{i=1}^{m} \mathbf{X}_{i} \, L_{i}(\tilde{\mathbf{x}}) \tag{41} \]

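where \(\mathbf{X}_{i}\) is the coordinate of node i and m is the total number of nodes. The actual element Ωe is the image of Ωr under the mapping \(\mathbf {x} \left (\tilde {\textbf {x}} \right )\). This is illustrated in Fig. 3 for the case of a 2D quadratic element. If it is desired to integrate some function \(f\left (\mathbf {x} \right )\) over an actual element Ωe, this can be achieved via

\[ \int_{{\varOmega}_{e}} f(\mathbf{x}) \, d\mathbf{x} = \int_{{\varOmega}_{r}} f\left( \mathbf{x}(\tilde{\mathbf{x}}) \right) \left| J(\tilde{\mathbf{x}}) \right| d\tilde{\mathbf{x}} \]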

where J is the Jacobian matrix

\[ J_{ij} = \frac{\partial x_{i}}{\partial \tilde{x}_{j}} \]

and \(\left | \cdot \right |\) denotes determinant. This is particularly convenient for numerical quadrature since the quadrature rule can be tabulated for the reference element.
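As a hedged illustration of the pull-back to the reference element, the Python sketch below integrates over a bilinear (first-order Lagrange) quadrilateral on the reference square [0, 1]² using a tensor Gauss rule. The node ordering and function names are assumptions made for this example; the quadratic elements of Fig. 3 work the same way with more shape functions.

```python
import numpy as np

def shape_q1(xi, eta):
    """Bilinear Lagrange functions on [0,1]^2, nodes at (0,0), (1,0), (1,1), (0,1).

    Returns N (4,) and dN (4, 2), where dN[a] = (dN_a/dxi, dN_a/deta).
    """
    N = np.array([(1 - xi) * (1 - eta), xi * (1 - eta), xi * eta, (1 - xi) * eta])
    dN = np.array([[-(1 - eta), -(1 - xi)],
                   [1 - eta, -xi],
                   [eta, xi],
                   [-eta, 1 - xi]])
    return N, dN

def integrate(f, X, n_gauss=2):
    """Integrate f(x) over the element with nodal coordinates X (4 x 2) as
    sum_q w_q f(x(xi_q)) |J(xi_q)| on the reference square."""
    t, w = np.polynomial.legendre.leggauss(n_gauss)
    t, w = 0.5 * (t + 1.0), 0.5 * w                 # Gauss rule on [0, 1]
    total = 0.0
    for a in range(n_gauss):
        for b in range(n_gauss):
            N, dN = shape_q1(t[a], t[b])
            x = N @ X                               # physical point x(xi)
            J = X.T @ dN                            # J[i, j] = dx_i / dxi_j
            detJ = np.linalg.det(J)
            if detJ <= 0:
                raise ValueError("element is inverted or twisted (|J| <= 0)")
            total += w[a] * w[b] * f(x) * detJ
    return total
```

Integrating the constant function 1 returns the element area, e.g., 2 for the parallelogram with nodes (0,0), (2,0), (3,1), (1,1); the quadrature points and weights are tabulated once on the reference element, as noted above.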

3.3 Shape functions

Consider now the situation where a field \(\tilde {f}\left (\mathbf {x} \right )\) is represented by a polynomial basis function approximation, using a generic basis P, on an actual element Ωe

\[ \tilde{f}(\mathbf{x}) = \sum_{i} \alpha_{i} \, P_{i}^{n}(\mathbf{x}) \]

The functions \({P_{i}^{n}}\left (\mathbf {x} \right )\) are the shape functions, and it is important to note that these may be different from the Lagrange interpolatory polynomials that define the element Ωe. In some applications, the shape functions are identical to the Lagrange interpolatory polynomials that define the element; this is referred to as iso-parametric. If the shape functions are Lagrange interpolatory polynomials of higher order than those defining the element, this is referred to as sub-parametric, and the converse is referred to as super-parametric. Iso-parametric Lagrange shape functions of order 1 or 2 are used for the unknown displacement field in the finite element solution of the elasticity equations; these shape functions are defined on the analysis mesh. Bernstein polynomial shape functions are used for the representation of the density field; these shape functions are defined on the design mesh. Since the shape functions are actually defined on the reference element, the mapping (41) must be inverted to yield

\[ \tilde{\mathbf{x}} = \mathbf{x}^{-1}(\mathbf{x}), \qquad \tilde{f}(\mathbf{x}) = \sum_{i} \alpha_{i} \, P_{i}^{n}\left( \tilde{\mathbf{x}}(\mathbf{x}) \right) \tag{45} \]

This is always possible provided \(\left | J \right | > 0\), i.e., the element is not “twisted” or “bowtied.” The MFEM library (MFEM 2019) supports arbitrarily high-order shape functions, but here the Bernstein polynomials will be of order 1 or 2.
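The inversion of the element mapping generally has no closed form for curved or non-affine elements, so it is typically done iteratively. The sketch below assumes Newton's method on a bilinear quadrilateral with the same hypothetical node ordering as a standard [0, 1]² reference square; it is an illustration of the idea, not the routine used by MFEM.

```python
import numpy as np

def shape_q1(xi, eta):
    """Bilinear Lagrange functions on [0,1]^2 and their reference derivatives."""
    N = np.array([(1 - xi) * (1 - eta), xi * (1 - eta), xi * eta, (1 - xi) * eta])
    dN = np.array([[-(1 - eta), -(1 - xi)],
                   [1 - eta, -xi],
                   [eta, xi],
                   [-eta, 1 - xi]])
    return N, dN

def inverse_map(x, X, tol=1e-12, max_iter=50):
    """Find the reference point xi with x(xi) = x by Newton's method.

    X is the 4 x 2 array of nodal coordinates; |J| > 0 is assumed, so every
    Newton step solves a non-singular 2 x 2 system.
    """
    xi = np.array([0.5, 0.5])                       # start at the element center
    for _ in range(max_iter):
        N, dN = shape_q1(xi[0], xi[1])
        r = N @ X - x                               # residual x(xi) - x
        if np.linalg.norm(r) < tol:
            return xi
        xi = xi - np.linalg.solve(X.T @ dN, r)      # Newton step with the Jacobian
    raise RuntimeError("Newton iteration for the inverse map did not converge")
```

For a parallelogram the map is affine and a single Newton step is exact; for a general quadrilateral a few iterations usually suffice when the element is well shaped.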

3.4 Finite element mesh

As mentioned in Section 1, the proposed method employs two meshes: an analysis mesh for the finite element solution of the elasticity partial differential equation, and a design mesh for the definition of the material distribution and the associated optimization design variables. The analysis mesh utilizes either first- or second-order Lagrange interpolatory shape functions (second order is used for curved geometry; see Section 4.3 below), whereas the design mesh utilizes second-order Bernstein shape functions. The Bernstein shape functions are not completely interpolatory, but they are interpolatory on element boundaries; hence, continuity can be enforced using the above-described procedure of defining some boundary DOFs as slaves and others as masters, and performing a change of variables that eliminates the slave DOFs. The proposed algorithm requires that the analysis mesh be finer (more highly resolved) than the design mesh; otherwise, the analysis does not resolve the design and is of little value. While not an algorithm requirement, the proposed software implementation derives both the analysis mesh and the design mesh from a common base mesh. This common base mesh is constructed by a designer using a mesh generation tool, and it must capture the proposed design domain. This is illustrated in Fig. 4.

Computing a local finite element stiffness matrix requires knowledge of the local material density at a quadrature point in an element of the analysis mesh, but this density is defined on the design mesh. The process is to

1. Convert a local quadrature point \(\tilde {x}_{\text {analysis}}\) in element \({\varOmega }^{\text {analysis}}_{e}\) to a global coordinate \(\mathbf{x}_{\text{global}}\) via (41).

2. Determine the appropriate design element \({\varOmega }^{\text {design}}_{e}\) corresponding to the given analysis element \({\varOmega }^{\text {analysis}}_{e}\).

3. Determine the corresponding point \(\tilde {x}_{\text {design}}\) in the reference coordinate system of \({\varOmega }^{\text {design}}_{e}\) using (45).

It is step 2 that is facilitated by hierarchical mesh refinement, meaning that \({\varOmega }^{\text {design}}_{e}\) is a parent (grandparent, great-grandparent, etc.) of \({\varOmega }^{\text {analysis}}_{e}\). In addition, it is important to note that in a parallel computing environment, this type of mesh hierarchy does not require any communication to map from \(\tilde {x}_{\text {analysis}}\) to \(\tilde {x}_{\text {design}}\).
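To show why a refinement hierarchy makes steps 2 and 3 purely local, the sketch below maps a reference point of a refined element directly to the reference coordinates of an ancestor, with no geometric search. It assumes 2D quadrilaterals refined isotropically into four children whose reference squares are the four half-size sub-squares of the parent's (as happens when children are defined through the parent's mapping); the function name and the (ci, cj) child-index convention are hypothetical and do not reflect MFEM's internal data structures.

```python
import numpy as np

def to_ancestor_reference(xi, path):
    """Map a reference point of a refined (analysis) element to the reference
    coordinates of an ancestor (design) element.

    xi   : reference point in [0,1]^2 of the leaf element
    path : list of (ci, cj) child indices, ordered from the ancestor downwards;
           each quadrisection splits [0,1]^2 into four half-size squares, and
           (ci, cj) selects the sub-square [ci/2, (ci+1)/2] x [cj/2, (cj+1)/2].
    """
    xi = np.asarray(xi, dtype=float)
    for ci, cj in reversed(path):           # climb from the leaf up to the ancestor
        xi = (np.array([ci, cj]) + xi) / 2.0
    return xi
```

Each level contributes only an affine scaling and shift, so the map needs nothing beyond the element's own refinement history, which is consistent with the observation above that no communication is required in parallel.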

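The last remaining step is computing \(\frac {\partial v}{\partial \omega }\) at quadrature point \(\tilde {x}_{\text {analysis}}\), where the density v is given by

\[ v(\tilde{x}_{\text{analysis}}) = \sum_{i} \omega_{i} \, B_{i}^{n}\left( \tilde{x}_{\text{design}} \right) \]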

Clearly, we have

\[ \frac{\partial v}{\partial \omega_{i}} = B_{i}^{n}\left( \tilde{x}_{\text{design}} \right) \]

Given these derivatives of the density at the quadrature points, all required partial derivatives have been defined and the chain rule can be applied to compute \(\frac {d \varPhi }{d \omega }\) and \(\frac {d \varPsi }{d \omega }\) and used with the optimization algorithm.
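Because v is linear in the design variables, the density and its derivative at a quadrature point come from a single evaluation of the Bernstein basis at \(\tilde{x}_{\text{design}}\). The following Python sketch assumes a 2D design element with quadratic tensor-product Bernstein shape functions and a local ordering \(m = (n+1) j + i\) of its nine design variables; the names are hypothetical, and assembling local blocks into the global gradient is omitted.

```python
import numpy as np
from math import comb

def bernstein_1d(n, t):
    """Values of the n + 1 one-dimensional Bernstein polynomials at a scalar t."""
    return np.array([comb(n, i) * t ** i * (1.0 - t) ** (n - i) for i in range(n + 1)])

def density_and_gradient(omega, xi_design, n=2):
    """Density v and dv/domega at a point of a 2D design element.

    omega     : ((n+1)^2,) local design variables, ordered m = (n+1) j + i
    xi_design : (xi, eta) reference coordinates inside the design element
    """
    B = np.outer(bernstein_1d(n, xi_design[1]), bernstein_1d(n, xi_design[0])).ravel()
    return B @ omega, B          # v is linear in omega, so dv/domega = B
```

If every local design variable lies in [0, 1], the returned density also lies in [0, 1], since the basis values are non-negative and sum to one.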

4 Results

In this section, the proposed method is applied to canonical structural optimization problems. First, the method is applied to the standard cantilever beam compliance minimization problem in order to demonstrate that higher-order Bernstein polynomials are an effective method for representing density, free from checkerboard instabilities and without any additional filtering. Second, the method is applied to stress-constrained optimization, with the length scale defined by the design mesh and the stress field resolved by an adaptively refined analysis mesh.

4.1 2D cantilever beam

The cantilever beam is defined in the x − y plane, with length \(x\in \left [0,{{1.5}}\right ]\) and height \(y \in \left [0,1\right ]\). The x = 0 end is fixed (zero displacement constraint), and a load is applied at point \(\left (1.0, 1.5\right )\) in the y direction. The design mesh was a uniform Cartesian mesh with mesh spacing h = 0.1, and the analysis mesh was a uniform Cartesian mesh with spacing h = 0.0125, i.e., 8 times finer. The mass was constrained to M = 0.4, and the objective was to minimize the compliance. The result is shown in Fig. 6, and the corresponding single-mesh SIMP result is shown for comparison in Fig. 5. The SIMP solution employed a Helmholtz filter with radius r = 0.05. The same design is achieved by both methods. Because the two problems are parameterized differently, there are subtle differences in the material boundary: the Bernstein result has smoother boundaries, whereas the SIMP result has a characteristic jagged, stair-stepped boundary (Fig. 6). This can be seen clearly in close-up views of both solutions in Figs. 7 and 8. Since the analysis mesh is the same for both designs, the finite element analysis is of equal accuracy and equal cost. However, the Bernstein design mesh has significantly fewer design variables than the standard SIMP mesh: 2501 DOFs for the Bernstein design mesh versus 38,400 DOFs for the SIMP solution.

A different design can be achieved by reducing the length scale. For the dual mesh method, this is achieved by uniform refinement of the design mesh. For the standard SIMP method, this is achieved by reducing the Helmholtz filter radius to r = 0.025. The two designs are shown in Figs. 9 and 10. Clearly, with the dual mesh approach, the length scale of the design can be controlled via the resolution of the design mesh.
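
For comparison, the Helmholtz filter used by the SIMP runs can be sketched in one dimension. This assumes the PDE-filter form −r²ρ̃″ + ρ̃ = ρ with homogeneous Neumann boundary conditions (Lazarov and Sigmund 2011), discretized with cell-centred finite differences. Whether the paper's r enters as this coefficient or through a rescaled radius is not stated, so the numbers are illustrative. A checkerboard-like input is smoothed less as r decreases, which is how SIMP shortens its length scale; the dual mesh method instead refines the design mesh.

```python
import numpy as np


def helmholtz_filter_1d(rho, h, r):
    """Solve -r**2 * u'' + u = rho on a uniform grid of cell size h.

    Cell-centred finite differences with homogeneous Neumann boundary
    conditions; this discretisation preserves sum(rho).
    """
    n = rho.size
    lap = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)  # interior stencil [-1, 2, -1]
    lap[0, 0] = lap[-1, -1] = 1.0  # Neumann ends: stencil [1, -1]
    a = np.eye(n) + (r / h) ** 2 * lap
    return np.linalg.solve(a, rho)


h = 0.0125  # cell size (same spacing as the cantilever analysis mesh)
rho = np.tile([0.0, 1.0], 60)  # 120 cells over [0, 1.5], alternating 0/1 pattern
coarse = helmholtz_filter_1d(rho, h, r=0.05)
fine = helmholtz_filter_1d(rho, h, r=0.025)
print(coarse.std(), fine.std())  # the smaller radius leaves more variation
```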

4.2 The L-bracket design problem

It is well known that sharp corners can induce large localized stresses. The L-bracket design problem is a good example of this phenomenon. The L-bracket problem consists of an L-shaped domain in the x − y plane, as shown in Fig. 11. The length is L = 1, the height is H = 1, and the corner is located at (x,y) = (0.4,0.4). The top edge is fixed (zero displacement constraint), and the load is applied at point \(\left (1,0.4\right )\) in the − y direction. The material properties are E = 2.0e11 and ν = 0.3. The load is a ball load with radius r = 0.05 and pressure p = 5e7. The objective is a combination of mass and compliance as in (19), with β = 1.0e−4, and the constraint is on the stress, with value σy = 2.5e8. The p-norm with p = 12 was used for the stress aggregation. To be clear, the emphasis here is not to evaluate various stress norms and penalties, but rather to highlight the advantages of the dual mesh approach. The standard SIMP result, using a single highly refined mesh with h = 0.00125 and 409,600 elements, is shown in Fig. 11. The corresponding result for the proposed dual mesh method, with a second-order Bernstein polynomial design mesh and an adaptively refined analysis mesh, is shown in Fig. 12. The design mesh consisted of only 1600 elements. The adaptive analysis mesh is shown in Figs. 13 and 14 for refinement levels 1 and 2, respectively. The refinement criterion is simply the local stress magnitude; thus, this is a goal-oriented refinement criterion (technically constraint-oriented, since stress is an inequality constraint). Specifically, the elements with stress values in the top 50% of all stress values were refined at level one, and those in the top 25% at level two. Other indicators, e.g., all elements with stress values exceeding some predefined value, can also be employed.
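
Two ingredients of this example, the p-norm stress aggregation and the top-fraction refinement marking, can be sketched as follows. Assumptions: element stresses are positive and given as a flat array, the p-norm is the unweighted discrete form (the paper's norm may include volume weights), and "top 50%" is read as a fraction of the elements ranked by stress rather than a fraction of the stress range. The helper names are hypothetical.

```python
import numpy as np


def p_norm(sigma, p=12):
    """Unweighted discrete p-norm (sum_e sigma_e**p)**(1/p) of positive stresses.

    Scaled by max(sigma) before exponentiation to avoid overflow.
    """
    s_max = np.max(sigma)
    return s_max * np.sum((sigma / s_max) ** p) ** (1.0 / p)


def mark_top_fraction(sigma, fraction):
    """Indices of the elements whose stress lies in the top `fraction` of all element stresses."""
    n_mark = int(np.ceil(fraction * sigma.size))
    order = np.argsort(sigma)[::-1]  # element indices by descending stress
    return np.sort(order[:n_mark])


sigma = np.array([1.0, 5.0, 3.0, 2.0])
print(p_norm(sigma))  # slightly above max(sigma) = 5
print(mark_top_fraction(sigma, 0.50))  # level one, top 50% -> [1 2]
print(mark_top_fraction(sigma, 0.25))  # level two, top 25% -> [1]
```

The unweighted p-norm of n values lies between the maximum and n^{1/p} times the maximum, so a larger p tracks the maximum stress more closely at the price of a more strongly nonlinear constraint.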
This design is comparable to that of Salazar de Troya and Tortorelli (2018), which also uses AMR to resolve the stress singularities. Recall that the method proposed in Salazar de Troya and Tortorelli (2018) requires the nonlinear optimization to be restarted after every mesh refinement with a new set of design variables, whereas the dual mesh approach has no such complication. The total number of analysis elements was 112,000 for AMR and 409,600 for uniform refinement. The total number of design variables was 409,600 for uniform SIMP and only 6001 for the second-order Bernstein representation, a reduction of 98% (Table 1).
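
The quoted reductions follow directly from these counts:

```latex
\begin{aligned}
1 - \frac{6001}{409{,}600} &\approx 1 - 0.0147 = 0.985 \quad (\text{design variables, about } 98\%\ \text{fewer}),\\
1 - \frac{112{,}000}{409{,}600} &\approx 1 - 0.273 = 0.727 \quad (\text{analysis elements, about } 73\%\ \text{fewer}).
\end{aligned}
```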

4.3 The eyebar design

The eyebar domain is the rectangular region of dimension 8 × 16 from which a circular hole of radius r = 1.5, centered at (x,y) = (0,0), is removed. The essential boundary condition (zero displacement constraint) is applied on the right edge, over a width w = 2. The load, representing the load due to a bolt, is applied to the left edge of the hole in the − x direction, with magnitude \(f=\left (1.5^{2} - y^{2}\right )\). The computational mesh is an unstructured quadrilateral mesh. The SIMP result used a uniformly refined mesh with 921,600 elements. The dual mesh result employed a design mesh with 14,400 elements and an adaptively refined analysis mesh. Two levels of adaptive mesh refinement were performed using the local stress as the refinement criterion, with the elements in the top 10% of stress values marked for refinement at each pass. The finest mesh has a total of 43,264 elements. As in the L-bracket problem, the stress measure is the p-norm of the local stress. The standard SIMP result is shown in Fig. 15 and the dual mesh result in Fig. 16. The analysis mesh for the dual mesh result is shown in Fig. 17. The SIMP result employed a Helmholtz filter with radius r = 0.05, roughly corresponding to the element size of the Bernstein design mesh. These results compare closely with those in Amstutz and Novotny (2010) and Salazar de Troya and Tortorelli (2018).
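
The bolt load is a parabolic traction that vanishes at the top and bottom of the hole, y = ±1.5. Assuming it is integrated over the projected height of the hole (the section does not say whether it is measured per unit arc length or per unit height), its resultant is \(\int_{-1.5}^{1.5} (1.5^{2} - y^{2})\,dy = \tfrac{4}{3}(1.5)^{3} = 4.5\). A short check with Simpson's rule, which is exact for polynomials up to degree three:

```python
def bolt_load(y, r=1.5):
    """Traction magnitude f(y) = r**2 - y**2 on the loaded edge of the hole."""
    return r * r - y * y


def simpson(f, a, b, n=2):
    """Composite Simpson rule with an even number n of sub-intervals (exact for cubics)."""
    if n % 2:
        raise ValueError("n must be even")
    h = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(a + k * h)
    return total * h / 3.0


resultant = simpson(bolt_load, -1.5, 1.5)
print(resultant)  # 4.5
```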

The SIMP and dual mesh results, as well as the results in Amstutz and Novotny (2010) and Salazar de Troya and Tortorelli (2018), all show an interesting small circular feature, which the authors refer to as “ears.” This feature is not investigated in detail in Amstutz and Novotny (2010) or Salazar de Troya and Tortorelli (2018). To better understand the purpose of the “ears,” a second optimization was performed using a locally refined design mesh that is 4× finer around the hole. The analysis mesh is, by definition, also 4× finer in this region. The stress constraint was increased by a factor of 2, and the parameter β was set to \(10^{-5}\), yielding a slightly thinner design. The result is shown in Fig. 18; clearly, the optimization produces a series of small holes. It may naively be assumed that these holes are located at the maximum stress, i.e., that the optimizer satisfies the stress constraint simply by removing material where the stress is largest. But this is not the case. The baseline stress, shown in Fig. 19, is the stress obtained via unconstrained optimization, i.e., compliance minimization with no stress constraint. Applying the stress constraint described above yields the stress distributions shown in Figs. 20 and 21, corresponding to the designs in Figs. 16 and 18, respectively. Clearly, the “ears” do not coincide with the maximum stress. The stress constraints and maximum stress values for the eyebar are summarized in Table 2.

Fig. 20 Stress distribution corresponding to the design shown in Fig. 16

Fig. 21 Stress distribution corresponding to the design shown in Fig. 18

This eyebar design problem highlights another important issue in stress-constrained topology optimization. Figures 22 and 23 compare stress fields on two different meshes, one using linear elements and the other using quadratic elements. Note that the linear element case exhibits artificially high stress values due to the faceted approximation of the curved surface. While this phenomenon is well known in the finite element community, it is often ignored in topology optimization because topology optimization is often considered a preliminary design step. However, there is little point in using stress as an objective or a constraint if the computed stress is artificial; therefore, all of the above eyebar results use quadratic elements for the analysis mesh, and corresponding quadratic shape functions for the displacement field.
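
The geometric side of this effect is easy to quantify. The sketch below assumes elements whose nodes are equally spaced in angle on the exact circle (a simplification of the unstructured mesh used here) and measures how far straight (linear) and curved (quadratic, isoparametric) element edges deviate from a circle of radius 1.5. For n linear elements the maximum deviation is R(1 − cos(π/n)), and the boundary has a kink at every node; quadratic edges follow the circle far more closely. The sketch measures geometry only, not the resulting stress, and the function name is hypothetical.

```python
import numpy as np


def max_radial_error(radius, n_elems, order, n_samples=201):
    """Largest |norm(x) - radius| over a circle discretised by n_elems isoparametric elements."""
    t = np.linspace(0.0, 1.0, n_samples)  # local element coordinate
    if order == 1:
        shape = np.stack([1.0 - t, t], axis=1)
    elif order == 2:
        shape = np.stack([2 * (t - 0.5) * (t - 1), -4 * t * (t - 1), 2 * t * (t - 0.5)], axis=1)
    else:
        raise ValueError("order must be 1 or 2")
    dtheta = 2.0 * np.pi / n_elems
    err = 0.0
    for e in range(n_elems):
        # element nodes equally spaced in angle, lying exactly on the circle
        theta = e * dtheta + np.linspace(0.0, dtheta, order + 1)
        nodes = radius * np.stack([np.cos(theta), np.sin(theta)], axis=1)
        pts = shape @ nodes  # interpolated boundary points, shape (n_samples, 2)
        err = max(err, np.max(np.abs(np.linalg.norm(pts, axis=1) - radius)))
    return err


for n in (8, 16, 32):
    print(n, max_radial_error(1.5, n, 1), max_radial_error(1.5, n, 2))
```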

5 Conclusions

Due in large part to advances in additive manufacturing techniques, topological optimization is becoming a critical computational tool for design engineers. While compliance minimization is common, the application of stress constraints in topology optimization is still an active area of research. The stress field can exhibit singularities, and adaptive mesh refinement is required to accurately resolve them. This poses a challenge for traditional density-based topology optimization algorithms that employ a one-to-one correspondence between mesh elements and optimization design variables. The proposed solution is to use two different meshes: a design mesh that supports local higher-order Bernstein polynomials for representing the material distribution, and an analysis mesh that uses adaptive mesh refinement in the finite element solution of the elasticity equations. The use of Bernstein polynomials allows for straightforward implementation of box constraints on the density. Stress measures are used as the criteria for adaptive refinement of the analysis mesh. With this approach, there is no need to restart the nonlinear optimization with a new set of design variables when the analysis mesh is refined. An additional benefit of this approach is that no filter is required to enforce a length scale upon the design. Instead, the length scale is simply given by the resolution of the design mesh, and this resolution can be spatially varying to meet specific design needs. Results for canonical topology optimization problems are presented, and the proposed method is demonstrated to achieve both accuracy and efficiency for these problems.

6 Replication of results

The software used to generate the results shown in Section 4 is property of the US Department of Energy and has not yet been approved for public release, and therefore is not currently openly distributed. The computational meshes used to generate the results can be obtained by contacting the corresponding author. The parameters that define the design problems such as loads, boundary conditions, constraints, and objectives have been defined in Section 4.

Notes

We introduce a lower bound 𝜖 > 0 on the Bernstein coefficients to avoid singularity in the analysis. However, this may miss a globally optimal design, as discussed in Cheng and Jiang (1992), Cheng and Guo (1997), Bruggi (2008), and Le et al. (2010). To alleviate this issue, we apply the relaxed stress indicator method of Le et al. (2010).

References

Ambrosio L, Buttazzo G (1993) An optimal design problem with perimeter penalization. Calc Var 1(1):55–69

Amstutz S, Novotny A (2010) Topological optimization of structures subject to von Mises stress constraints. Struct Multidisc Optim 41(3):407–420

Bendsoe M (1989) Optimal shape design as a material distribution problem. Struct Optim 1:193–202

Bendsoe M, Sigmund O (2003) Topology optimization: theory, methods, and applications. Springer, Berlin

Biswas A, Shapiro V, Tsukanov I (2004) Heterogeneous material modeling with distance fields. Comput Aided Geom Des 21:215–232

Bourdin B (2001) Filters in topology optimization. Int J Numer Methods Eng 50:2143–2158

Bruggi M (2008) On an alternative approach to stress constraints relaxation in topology optimization. Struct Multidisc Optim 36(2):125–141

Bruggi M, Verani M (2011) A fully adaptive topology optimization algorithm with goal-oriented error control. Comput Struct 89:1481–1493

Bruns T, Tortorelli D (2001) Topology optimization of nonlinear elastic structures and compliant mechanisms. Comput Methods Appl Mech Eng 190(26–27):3443–3459

Bruns T, Tortorelli D (2003) An element removal and reintroduction strategy for the topology optimization of structures and compliant mechanisms. Int J Numer Methods Eng 57(10):1413–1430

Chen J, Shapiro V (2008) Optimization of continuous heterogeneous models. Heterogen Object Model Appl Lect Notes Comput Sci 4889:193–213

Cheng G, Jiang Z (1992) Study on topology optimization with stress constraints. Eng Optim 20(2):129–148

Cheng G, Guo X (1997) ε-relaxed approach in structural topology optimization. Struct Optim 13(4):258–266

Duysinx P, Bendsoe M (1998) Topology optimization of continuum structures with local stress constraints. Int J Numer Methods Eng 43:1453–1478

Frigo M, Johnson SG (2005) The design and implementation of FFTW3. Proc IEEE 93(2):216–231

Gomes A, Suleman A (2006) Application of spectral level set methodology in topology optimization. Struct Multidisc Optim 31:430–443

Guest JK, Prévost JH, Belytschko T (2004) Achieving minimum length scale in topology optimization using nodal design variables and projection functions. Int J Numer Methods Eng 61(2):238–254

Guest JK, Smith Genut LC (2010) Reducing dimensionality in topology optimization using adaptive design variable fields. Int J Numer Methods Eng 81(8):1019–1045

Guo X, Zhang W, Wang MY, Wei P (2011) Stress-related topology optimization via level set approach. Comput Methods Appl Mech Eng 200(47-48):3439–3452

Guo X, Zhang W, Zhong W (2014a) Doing topology optimization explicitly and geometrically: a new moving morphable components based framework. J Appl Mech 81(8):081009

Guo X, Zhang W, Zhong W (2014b) Stress-related topology optimization of continuum structures involving multi-phase materials. Comput Methods Appl Mech Eng 268:632–655

Gupta DK, Langelaar M, van Keulen F (2018) QR-patterns: artefacts in multiresolution topology optimization. Struct Multidisc Optim 58(4):1335–1350

Haber R, Jog C, Bendsoe M (1996) A new approach to variable-topology shape design using a constraint on perimeter. Struct Optim 11(1):1–12

Jensen KE (2016) Solving stress and compliance constrained volume minimization using anisotropic mesh adaptation, the method of moving asymptotes, and a global p-norm. Struct Multidisc Optim 54:831–841

Kang Z, Wang Y (2011) Structural topology optimization based on non-local Shepard interpolation of density field. Comput Methods Appl Mech Eng 200(49–52):3515–3525

Kang Z, Wang YQ (2012) A nodal variable method of structural optimization based on Shepard interpolant. Int J Numer Methods Eng 90:329–342

Karp SN, Karal FC (1962) The elastic-field behavior in the neighborhood of a crack of arbitrary angle. Comm Pure Appl Math XV:413–421

Kim Y, Yoon G (2000) Multi-resolution multi-scale topology optimization: a new paradigm. Int J Solids Struct 37(39):5529–5559

Körner TW (1988) Fourier analysis. Cambridge University Press, Cambridge

Lazarov B, Sigmund O (2011) Filters in topology optimization based on Helmholtz-type differential equations. Int J Numer Methods Eng 86:765–781

Le C, Norato J, Bruns T, Ha C, Tortorelli D (2010) Stress-based topology optimization for continua. Struct Multidisc Optim 41:605–620

Lieu QX, Lee J (2017) Multiresolution topology optimization using isogeometric analysis. Int J Numer Methods Eng 112(13):2025–2047

Liu C, Zhu Y, Sun Z, Li D, Du Z, Zhang W, Guo X (2018) An efficient moving morphable component (MMC)-based approach for multi-resolution topology optimization. Struct Multidisc Optim 58(6):2455–2479

Luo Z, Zhang N, Wang Y, Gao W (2013) Topology optimization of structures using meshless density variable approximants. Int J Numer Methods Eng 93(4):443–464

Matsui K, Terada K (2004) Continuous approximation of material distribution for topology optimization. Int J Numer Methods Eng 59:1925–1944

Maute K, Ramm E (1995) Adaptive topology optimization. Struct Optim 10:100–112

MFEM (2019) Modular finite element methods, mfem.org

Nana A, Cuilliere JC, Francois V (2016) Towards adaptive topology optimization. Adv Eng Softw 100:290–307

Nguyen TH, Paulino GH, Song J, Le CH (2010) A computational paradigm for multiresolution topology optimization (MTOP). Struct Multidisc Optim 41(4):525–539

Nguyen TH, Le CH, Hajjar JF (2017) Topology optimization using the p-version of the finite element method. Struct Multidisc Optim 56(3):571–586

Niordson F (1983) Optimal design of elastic plates with a constraint on the slope of the thickness function. Int J Solids Struct 19(2):141–151

Norato JA, Bell BK, Tortorelli DA (2015) A geometry projection method for continuum-based topology optimization with discrete elements. Comput Methods Appl Mech Eng 293:306–327

Panesar A, Brackett D, Ashcroft I, Wildman R, Hague R (2017) Hierarchical remeshing strategies with mesh mapping for topology optimization. Int J Numer Methods Eng 111:676–700

Picelli R, Townsend S, Brampton C, Norato J, Kim H (2018) Stress-based shape and topology optimization with the level set method. Comput Methods Appl Mech Eng 329:1–23

Poulsen T (2002) Topology optimization in wavelet space. Int J Numer Methods Eng 53:567–582

Qian X (2013) Topology optimization in B-spline space. Comput Methods Appl Mech Eng 265:15–35

Rahmatalla S, Swan C (2004) A Q4/Q4 continuum structural topology implementation. Struct Multidisc Optim 27:130–135

Rozvany G (2001) Aims, scope, methods, history, and unified terminology of computer aided optimization in structural mechanics. Struct Multidisc Optim 21(2):90–108

Sigmund O, Petersson J (1998) Numerical instabilities in topology optimization: a survey on procedures dealing with checkerboards, mesh-dependencies and local minima. Struct Optim 16(1):68–75

Salazar de Troya MA, Tortorelli D (2018) Adaptive mesh refinement in stress-constrained topology optimization. Struct Multidisc Optim 58:2369–2386

Sayood K (2012) Introduction to data compression. Morgan Kaufmann

Suresh K, Takalloozadeh M (2013) Stress-constrained topology optimization: a topological level-set approach. Struct Multidisc Optim 48:295–309

Wächter A, Biegler LT (2006) On the implementation of a primal-dual interior point filter line search algorithm for large-scale nonlinear programming. Math Program 106(1):5–57

Wang Y, Kang Z, He Q (2013) An adaptive refinement approach for topology optimization based on separated density field description. Comput Struct 117:10–22

Wang Y, Kang Z, He Q (2014) Adaptive topology optimization with independent error control for separated displacement and density fields. Comput Struct 135:50–61

Williams ML (1952) Stress singularities resulting from various boundary conditions in angular corners of plates in extension. J Appl Mech 19(4):526–528

White DA, Stowell MS (2018) Topological optimization of structures using Fourier representations. Struct Multidisc Optim 58:1205–1220

Yang R, Chen C (1996) Stress-based topology optimization. Struct Optim 12:98–105

Yosibash Z (2012) Singularities in elliptic boundary value problems and elasticity and their connection with failure initiation. Springer, Berlin

Zhang S, Norato JA, Gain AL, Lyu N (2016) A geometry projection method for the topology optimization of plate structures. Struct Multidisc Optim 54(5):1173–1190

Zhang W, Liu Y, Weng P, Zhu Y, Guo X (2017) Explicit control of structural complexity in topology optimization. Comput Methods Appl Mech Eng 324:149–169

Zhang W, Li D, Zhou J, Du Z, Li B, Guo X (2018) A moving morphable void (MMV)-based explicit approach for topology optimization considering stress constraints. Comput Methods Appl Mech Eng 334:381–413

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Responsible Editor: Xu Guo

Prepared by LLNL under Contract DE-AC52-07NA27344

Cite this article

White, D.A., Choi, Y. & Kudo, J. A dual mesh method with adaptivity for stress-constrained topology optimization. Struct Multidisc Optim 61, 749–762 (2020). https://doi.org/10.1007/s00158-019-02393-6
