Abstract

Objectives

To assess the performance of the Pediatric Index of Mortality (PIM) 2 score in Italian pediatric intensive care units (PICUs).

Design

Prospective, observational, multicenter, 1-year study.

Setting

Eighteen medical–surgical PICUs.

Patients

Consecutive patients (3266) aged 0–16 years admitted between 1 March 2004 and 28 February 2005.

Interventions

None.

Measurements and main results

To assess the performance of the PIM2 score, discrimination and calibration measures were applied to all children admitted to the 18 PICUs, both in the entire population and in subgroups defined by deciles of risk, age and admission diagnosis. Discrimination was good, with an area under the receiver operating characteristic (ROC) curve of 0.89 (95% CI 0.86–0.91), as was calibration: for the whole population, the differences between observed and predicted deaths across deciles of risk were not significant (χ² 9.86; 8 df, p = 0.26).

Conclusions

The PIM2 score performed well in this sample of the Italian pediatric intensive care population. It may need to be reassessed in the injury and postoperative groups in larger studies.

Introduction

Since the 1990s, the mortality rate of children in pediatric intensive care units (PICUs) has been about 5% in North America [1, 2] and 7% in Europe [3], and a 5-year Australian study (1997–2001) reported a rate of 4.2% [4]; these rates are significantly lower than those for adults. In a previous Italian study conducted in 1994 in both pediatric and adult ICUs, the pediatric mortality rate was 11.4% [5].

Because of large differences in case-mix, comparisons of mortality among different units and countries must be corrected for the severity of illness on admission to the ICU, and severity-scoring systems have proved valuable for quality assurance and research in intensive care medicine [4]. These risk-adjusted measures use logistic regression models to obtain an equation that describes the relationship between the predictor variables and the probability of death. Data are collected to obtain two groups of patients: a learning sample, which serves to build the model, and a validation sample, used to test the accuracy of the resulting prediction rule.
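As an illustration of how such a model turns admission variables into a risk, the minimal sketch below (not the published PIM2 equation) computes each patient's probability of death from a logistic linear predictor and sums the individual probabilities to obtain a cohort's expected deaths. The coefficient values and predictor names are hypothetical, and the random split into learning and validation samples is an assumption made only for illustration.

```python
import math
import random
from typing import List, Mapping, Sequence, Tuple, TypeVar

T = TypeVar("T")

# Hypothetical coefficients for illustration only; NOT the published PIM2 values.
HYPOTHETICAL_INTERCEPT = -4.0
HYPOTHETICAL_COEFFICIENTS = {"mechanical_ventilation": 1.0, "elective_admission": -0.9}


def probability_of_death(predictors: Mapping[str, float],
                         intercept: float = HYPOTHETICAL_INTERCEPT,
                         coefficients: Mapping[str, float] = HYPOTHETICAL_COEFFICIENTS) -> float:
    """Logistic model: logit = intercept + sum(b_i * x_i), p = 1 / (1 + exp(-logit))."""
    logit = intercept + sum(b * predictors.get(name, 0.0) for name, b in coefficients.items())
    return 1.0 / (1.0 + math.exp(-logit))


def expected_deaths(risks: Sequence[float]) -> float:
    """Deaths predicted for a cohort = sum of the individual probabilities of death."""
    return math.fsum(risks)


def split_learning_validation(records: Sequence[T], learning_fraction: float = 0.5,
                              seed: int = 0) -> Tuple[List[T], List[T]]:
    """Assumed random split: the learning sample fits the model, the validation sample tests it."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * learning_fraction)
    return shuffled[:cut], shuffled[cut:]
```

For instance, `probability_of_death({"mechanical_ventilation": 1})` evaluates the logistic function at a linear predictor of −3.0 under these made-up coefficients, giving a risk of about 0.047.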

Two mortality scores specific to pediatric patients have been available since the mid-1980s: the Pediatric Risk of Mortality (PRISM) score [6], with subsequent updates [7], and the Pediatric Index of Mortality (PIM) [8], recently updated as PIM2 [9]. These scores were developed in specific countries (the USA and Australia, respectively) but can be applied virtually worldwide, although it is essential to test their performance in a large cohort from any country that intends to adopt them. Each score implicitly reflects the health care system, PICU organization and case-mix of the population used to create it. Thus, poor calibration in other settings, with over- or under-prediction of mortality, could result from substantial differences in health care organization. Some authors suggest calculating new coefficients specific to each population in order to obtain better performance [10], but this undermines the goal of comparing different countries.

The above-mentioned previous Italian study, which adopted the PRISM score, showed good sensitivity and specificity in distinguishing the children who died from those who survived (discrimination), but the number of observed deaths was significantly higher than predicted by the score [5]. This might be due to poor performance of the Italian ICUs included in that survey, since the study found higher overall mortality than in other countries.

Since 1994 no other multicenter national study has been conducted in Italy on children, neonates and premature babies admitted to ICUs. The aim of this observational, prospective, multicenter study was to assess the validity of the PIM2 score in Italian PICUs.

Methods

All 23 existing Italian PICUs were invited to participate. The study was conducted over 1 year, from 1 March 2004 to 28 February 2005. All consecutive patients admitted, from newborns (including premature babies over 32 weeks of gestational age and weighing ≥ 1500 g) to 16-year-old children, were enrolled. For each patient we recorded age, sex, reason for admission (medical, elective surgery, emergency surgery, cardiac surgery, neurosurgery, injury), diagnosis on admission (respiratory failure, heart disease, neurological disease, sepsis, metabolic disease, post-surgical observation, other), presence and nature of chronic disease (evaluated only for medical patients), immunodeficiency (classified by cause: chemotherapy, HIV infection, congenital, steroid therapy, transplant), length of stay (LOS), diagnosis on discharge, and outcome (survival or death). The PIM2 [9] was applied at admission as the severity score.
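The per-admission record described above can be sketched as a small data structure. This is a hypothetical schema (the field and class names are ours, not those of the study's Access database), and the eligibility check encodes our reading of the inclusion criteria; in particular, treating "16-year-old children" as an age below 17 years is an assumption.

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet, Optional


class Reason(Enum):
    MEDICAL = "medical"
    ELECTIVE_SURGERY = "elective surgery"
    EMERGENCY_SURGERY = "emergency surgery"
    CARDIAC_SURGERY = "cardiac surgery"
    NEUROSURGERY = "neurosurgery"
    INJURY = "injury"


class Immunodeficiency(Enum):
    CHEMOTHERAPY = "chemotherapy"
    HIV_INFECTION = "HIV infection"
    CONGENITAL = "congenital"
    STEROID_THERAPY = "steroid therapy"
    TRANSPLANT = "transplant"


@dataclass(frozen=True)
class Admission:
    center: int                     # patients are identified only by center...
    admission_number: int           # ...and admission number (anonymized)
    age_years: float
    sex: str
    reason: Reason
    admission_diagnosis: str        # e.g. "respiratory failure", "sepsis"
    discharge_diagnosis: str
    los_days: float
    died: bool
    pim2_risk: float                # PIM2 probability of death, computed at admission
    chronic_disease: Optional[str] = None
    immunodeficiency: FrozenSet[Immunodeficiency] = frozenset()

    def __post_init__(self) -> None:
        if self.chronic_disease is not None and self.reason is not Reason.MEDICAL:
            raise ValueError("chronic disease is evaluated only for medical patients")
        if not 0.0 < self.pim2_risk < 1.0:
            raise ValueError("pim2_risk must be a probability strictly between 0 and 1")


def is_eligible(age_years: float, premature: bool = False,
                gestational_age_weeks: Optional[float] = None,
                birth_weight_g: Optional[float] = None) -> bool:
    """Inclusion criteria as read from the Methods (the age cut-off is an assumption)."""
    if not 0.0 <= age_years < 17.0:
        return False
    if premature:
        # Premature babies only if over 32 weeks of gestational age and >= 1500 g.
        return (gestational_age_weeks is not None and gestational_age_weeks > 32
                and birth_weight_g is not None and birth_weight_g >= 1500)
    return True
```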

Children re-admitted to an ICU were enrolled as new patients only if the new admission occurred at least 48 h after the previous discharge; children re-admitted within 48 h were considered part of the same admission. The re-admission rate was reported for each center. No limits were imposed on PICU stay. Patients still in the PICU at the end of the study were considered alive.
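The 48-hour rule can be expressed as a merge over one child's PICU stays. The sketch below is our own illustration (the function and variable names are hypothetical); it assumes that the 48 h are measured from the most recent discharge of the episode being built, and that a re-admission exactly 48 h after discharge counts as a new admission, since the text says "at least 48 h".

```python
from datetime import datetime, timedelta
from typing import List, Sequence, Tuple

Stay = Tuple[datetime, datetime]  # (admission time, discharge time)

READMISSION_WINDOW = timedelta(hours=48)


def merge_into_episodes(stays: Sequence[Stay]) -> List[Stay]:
    """Group one child's stays into study admissions.

    A stay beginning >= 48 h after the previous discharge opens a new study
    admission; a stay beginning sooner is folded into the current one.
    """
    episodes: List[Stay] = []
    for admitted, discharged in sorted(stays):
        if episodes and admitted - episodes[-1][1] < READMISSION_WINDOW:
            start, end = episodes[-1]
            episodes[-1] = (start, max(end, discharged))
        else:
            episodes.append((admitted, discharged))
    return episodes
```

Each returned episode then counts as one enrolled patient, so a child with two episodes contributes one re-admission enrolled as a new patient.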

Data were collected by each PICU through a dedicated electronic database (Access®, Microsoft Corp.) and sent to the coordinating center (Children's Hospital Vittore Buzzi, Milan). For privacy reasons, patients' data were kept anonymous, and the children were identified by the center of origin and their admission number. Before starting, the study instruments and procedures (software function, appropriate data entry, correct mailing) were tested on site for 1 month in each center.

In order to standardize the application of the PIM2 score, the clinical files of five patients from the coordinating center were sent to each hospital. Two physicians in each center were asked to calculate the score. All disagreements were discussed and resolved at a specific site meeting with one of the authors (A.W.). As the reliability of all scores depends on the quality of data collection [11], only the two specifically trained physicians retrieved PIM2 data in each center. Throughout the study, one of the authors (A.W.) monitored data consistency and, at the end of the study, visited each center to discuss and resolve any controversial and/or inconsistent data. To verify the reliability of data collection, data on 90 randomly selected admissions (five per center) were collected twice. The bias in the probability of death between the two extractions, estimated by the Bland–Altman technique [12] and expressed as a ratio (95% confidence interval, CI), was 0.93 (0.90–1.05): the original data predicted 12.85 deaths and the re-extracted data 12.09 deaths.
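A ratio-scale Bland–Altman bias of this kind is commonly obtained by log-transforming the paired values, averaging the differences and back-transforming. The sketch below assumes that approach (the paper does not spell out the computation), takes the ratio as re-extracted over original probability of death, and uses a normal quantile of 1.96 rather than a t quantile; with 90 pairs the two differ only slightly.

```python
import math
import statistics
from typing import Sequence, Tuple


def ratio_bias(original: Sequence[float], reextracted: Sequence[float],
               z: float = 1.96) -> Tuple[float, float, float]:
    """Bias between two data extractions of the probability of death, as a ratio.

    Returns (geometric-mean ratio, lower 95% CI, upper 95% CI) for
    reextracted / original, computed on the log scale and back-transformed.
    """
    if len(original) != len(reextracted) or len(original) < 2:
        raise ValueError("need at least two paired measurements")
    if min(original) <= 0 or min(reextracted) <= 0:
        raise ValueError("probabilities must be positive to take logarithms")
    log_ratios = [math.log(b / a) for a, b in zip(original, reextracted)]
    mean_log = statistics.fmean(log_ratios)
    se = statistics.stdev(log_ratios) / math.sqrt(len(log_ratios))
    return math.exp(mean_log), math.exp(mean_log - z * se), math.exp(mean_log + z * se)
```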

Microsoft Excel (Excel®, Microsoft Corp.) and the Statistical Package for the Social Sciences (SPSS version 13.0, Chicago, IL) were used for data management and analysis.

We used discrimination and calibration measures to assess the performance of the PIM2 score in our population. Discrimination, the ability of the score to distinguish between patients who survive and those who die, was assessed by calculating the area under the receiver operating characteristic (ROC) curve. Calibration, the ability of the score to match the actual number of deaths in specific subgroups, was assessed with the Hosmer–Lemeshow goodness-of-fit test across deciles of risk [13].
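Discrimination can be computed directly from each patient's predicted risk and outcome. The following sketch estimates the area under the ROC curve as the Mann–Whitney probability that a randomly chosen non-survivor has a higher predicted risk than a randomly chosen survivor (ties count one half). For the confidence interval it uses the Hanley–McNeil standard error, which is one common choice and an assumption here, since the paper does not state how its CI was obtained.

```python
import math
from typing import Sequence, Tuple


def roc_auc(risks: Sequence[float], died: Sequence[bool]) -> float:
    """Area under the ROC curve via the Mann-Whitney statistic (ties count 1/2)."""
    deaths = [r for r, d in zip(risks, died) if d]
    survivors = [r for r, d in zip(risks, died) if not d]
    if not deaths or not survivors:
        raise ValueError("need at least one death and one survivor")
    concordant = 0.0
    for rd in deaths:
        for rs in survivors:
            if rd > rs:
                concordant += 1.0
            elif rd == rs:
                concordant += 0.5
    return concordant / (len(deaths) * len(survivors))


def hanley_mcneil_ci(auc: float, n_deaths: int, n_survivors: int,
                     z: float = 1.96) -> Tuple[float, float]:
    """Approximate 95% CI for the AUC using the Hanley-McNeil standard error."""
    q1 = auc / (2.0 - auc)
    q2 = 2.0 * auc ** 2 / (1.0 + auc)
    variance = (auc * (1.0 - auc)
                + (n_deaths - 1) * (q1 - auc ** 2)
                + (n_survivors - 1) * (q2 - auc ** 2)) / (n_deaths * n_survivors)
    se = math.sqrt(variance)
    return max(0.0, auc - z * se), min(1.0, auc + z * se)
```

The pairwise loop costs deaths × survivors comparisons, which is adequate for a cohort of a few thousand admissions; a rank-based formula gives the same value faster.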

We stratified patients by age and diagnostic group in order to assess the validity of the score in these subgroups. Age was classified into seven groups. Six of these were recently defined by the International Sepsis Forum (ISF) [14]: newborn, neonate, infant, pre-school, school, adolescent. Premature babies over 32 weeks of gestational age and weighing ≥ 1500 g who were admitted on the day of birth formed a seventh group, separate from newborns. Reason for and diagnosis on admission were combined into six groups: injury, cardiac (including cardiac surgery), neurological, respiratory, miscellaneous, and postoperative (except cardiac surgery), according to the PIM2 study [9]. In each stratum we calculated the area under the ROC curve and the ratio of the number of observed deaths to the number predicted by the PIM2 score (standardized mortality ratio, SMR). The 95% CIs of the SMRs were calculated with the test-based method [15], and p-values were derived from the chi-squared test with one degree of freedom, χ² = (observed deaths − expected deaths)²/expected deaths.
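The SMR, its test-based confidence interval and the one-degree-of-freedom chi-squared p-value can be sketched as follows. We assume the usual form of Miettinen's test-based limits, SMR^(1 ± z/√χ²), with χ² = (O − E)²/E as defined above; the limits are undefined when no deaths are observed or when O equals E, and the sketch returns None in those cases. The per-stratum helper and its record layout are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, Hashable, Iterable, Optional, Tuple


def smr_with_test_based_ci(observed: int, expected: float, z: float = 1.96
                           ) -> Tuple[float, Optional[Tuple[float, float]], float]:
    """Return (SMR, test-based 95% CI or None, p-value of the 1-df chi-squared test)."""
    if expected <= 0:
        raise ValueError("expected deaths must be positive")
    smr = observed / expected
    chi2 = (observed - expected) ** 2 / expected
    # Upper tail of chi-squared with 1 df: P(Z^2 > x) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2.0))
    if observed == 0 or chi2 == 0.0:
        return smr, None, p_value  # test-based limits are undefined here
    exponent = z / math.sqrt(chi2)
    lower, upper = sorted((smr ** (1.0 - exponent), smr ** (1.0 + exponent)))
    return smr, (lower, upper), p_value


def observed_and_expected_by_stratum(
        records: Iterable[Tuple[Hashable, bool, float]]) -> Dict[Hashable, Tuple[int, float]]:
    """records: (stratum, died, PIM2 risk) -> {stratum: (observed deaths, expected deaths)}."""
    observed: Dict[Hashable, int] = defaultdict(int)
    expected: Dict[Hashable, float] = defaultdict(float)
    for stratum, died, risk in records:
        observed[stratum] += int(died)
        expected[stratum] += risk
    return {s: (observed[s], expected[s]) for s in expected}
```

Sorting the two limits keeps the interval ordered whether the SMR is below or above 1, because raising a number below 1 to a larger power makes it smaller.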

The Human Research Ethics Committee of the Children's Hospital Vittore Buzzi, Milan, performed the primary review and approved the study.

Results

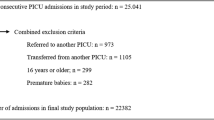

Five of the 23 units had to be excluded because of lack of data collection and/or transmission. Table 1 presents a summary description of the PICUs in the study. There were ten in the north of Italy (centers 1–10), five in the central area of the country (centers 11–15) and three in the south (centers 16–18). Ten units were in children's hospitals, the other eight in pediatric departments of general hospitals. The average number of beds was six, and only three units had ten or more. Most of the units accepted medical and surgical patients, from newborns to 16 years old; eight units admitted premature babies routinely.

In all, 3429 consecutive children were enrolled. For administrative reasons, eight centers conducted the survey for only 6 months instead of the whole year. A total of 54 children were excluded because they were outside the age limits, and 109 because of incomplete data. Table 2 sets out the demographic and clinical characteristics of the 3266 children analyzed. In the whole population, 153 children were re-admitted after 48 h, and all survived; no specific statistical analysis was conducted in this group. Among medical patients, the most frequent diagnosis on admission was respiratory failure. Surgical patients accounted for 46.4% of the entire population and trauma patients for 6.8%. Median LOS in the PICU was 2 days (mean 5.8, range 0–365 days); 4.5% had an LOS shorter than 24 h, and 31% were discharged after 1 day.

The PIM2 score predicted 192.0 deaths (5.9%), while 171 deaths (5.2%) were observed. In 40% (69) of these children, death occurred within the first 24 h after admission. Among medical patients, 28.9% had chronic disease, with an absolute mortality rate of 15.6%. The difference between observed and predicted deaths was not significant: the ratio of observed to expected deaths (O/E ratio), i.e. the SMR, for the entire sample was 0.89 (CI 0.71–1.09). The ROC curve for the overall study population is shown in Fig. 1; the area under the curve is 0.89 (95% CI 0.86–0.91). Observed and expected mortality across deciles of mortality risk, as used in the Hosmer–Lemeshow test, are set out in Table 3. The results showed good calibration: the differences between observed and expected deaths across the deciles of risk were not significant, either for the entire sample (χ² 9.87; 8 df, p = 0.26) or for any single decile of predicted mortality risk.
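The decile-based Hosmer–Lemeshow statistic behind Table 3 can be sketched as follows: patients are sorted by predicted risk and split into ten groups of (nearly) equal size, and each group contributes (O − E)²/(E(1 − E/n)), which equals the sum of the chi-squared terms for deaths and survivors; the total is referred to a chi-squared distribution with groups − 2 degrees of freedom (8 df for ten groups). The equal-size grouping, the handling of tied risks at group boundaries, and the closed-form tail probability (valid for even degrees of freedom only) are simplifications of ours.

```python
import math
from typing import List, Sequence, Tuple


def chi2_sf_even_df(x: float, df: int) -> float:
    """Upper-tail chi-squared probability, closed form valid for even df only."""
    if df <= 0 or df % 2:
        raise ValueError("closed form requires a positive even df")
    half = x / 2.0
    term, total = 1.0, 1.0
    for k in range(1, df // 2):
        term *= half / k
        total += term
    return math.exp(-half) * total


def hosmer_lemeshow(risks: Sequence[float], died: Sequence[bool], groups: int = 10
                    ) -> Tuple[float, int, float, List[Tuple[int, int, float]]]:
    """Return (chi2, df, p, [(group size, observed deaths, expected deaths), ...])."""
    pairs = sorted(zip(risks, died), key=lambda pair: pair[0])
    n = len(pairs)
    if n < groups:
        raise ValueError("fewer patients than groups")
    chi2 = 0.0
    table: List[Tuple[int, int, float]] = []
    for g in range(groups):
        chunk = pairs[g * n // groups:(g + 1) * n // groups]
        size = len(chunk)
        obs = sum(1 for _, d in chunk if d)
        exp_deaths = math.fsum(r for r, _ in chunk)
        if not 0.0 < exp_deaths < size:
            raise ValueError("each group needs expected deaths strictly between 0 and its size")
        chi2 += (obs - exp_deaths) ** 2 / (exp_deaths * (1.0 - exp_deaths / size))
        table.append((size, obs, exp_deaths))
    df = groups - 2
    return chi2, df, chi2_sf_even_df(chi2, df), table
```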

Further analyses considered the differences between observed and score-predicted mortality according to age and diagnostic group (Tables 4 and 5, respectively). The PIM2 score predicted mortality correctly in all age groups except premature babies, in whom no deaths were observed despite a prediction of 6.4 deaths; this difference was significant (p = 0.009). Across the six diagnostic groups, the only relevant, although non-significant, exceptions were the injury and postoperative groups, where the score predicted more deaths than were actually observed, with an SMR of 0.65 in each case (CI 0.30–1.33 and 0.35–1.20, respectively).

Discussion

This study evaluated the ability of the PIM2 score to predict mortality in Italian PICUs. A previous study in the early 1990s did not validate the PRISM score [5]. For the present study we chose the PIM2 score because it is the most recent severity score published for children, it has a free algorithm to calculate mortality risk while other scores require a license, and the small number of variables (10) makes it very simple to collect. In addition, the Australian and New Zealand Pediatric Intensive Care (ANZPIC) network found the PIM2 score performed better than other rating systems [4].

Our results showed good discrimination and good calibration of the score. The area under the ROC curve (0.89) was very close to the 0.90 reported in the original article (95% CI 0.89–0.91) [9]. In the overall population, the PIM2 score predicted 192.0 deaths while we actually observed 171 deaths, giving an O/E ratio (SMR) of 0.89 (CI 0.71–1.09), not significantly different from unity. The score maintained its validity across levels of severity when the population was stratified into ten progressive categories (deciles) of PIM2 risk. The observed deaths did not differ significantly from the prediction in any of the ten strata, nor in the summary measure obtained with the Hosmer–Lemeshow test.

Analysis of the overall study population showed an overprediction of 21 deaths (192.0 predicted vs. 171 observed). The analyses by age group and diagnostic category help to show where this difference arose. In all the age brackets introduced by the ISF, the numbers of deaths predicted by PIM2 were similar to those observed, except in the pre-school category, where more deaths were predicted than observed (50.9 vs. 38, p = 0.06) and the SMR was 0.74 (CI 0.47–1.13) despite a good area under the ROC curve (0.92). In-depth analysis of this group did not reveal any diagnostic group with a significant difference.

In Australia and New Zealand the PIM2 score was also applied to premature babies, although the original article did not validate the score in this category [9]. Only one of the 14 centers in the PIM2 paper admitted premature infants routinely, compared with eight in this study. We included only mildly premature babies (over 32 weeks of gestational age and weighing ≥ 1500 g). The score predicted higher mortality than was actually observed (an overprediction of six deaths). Because the premature group in our study was small, PIM2 needs to be reassessed in larger populations of premature babies.

The validity of the PIM2 score was further evaluated according to diagnostic groups, which vary widely in different PICUs and settings. In all six diagnostic categories the PIM2 showed good discrimination, with areas under the ROC curves higher than 0.80 and a good O/E ratio. The number of deaths observed was lower than expected for postoperative and injured children but the difference was not significant.

The study that used the PRISM score in Italian ICUs in 1994 reported an observed mortality significantly higher than that predicted, although the discrimination power was satisfactory [5]. Pediatric absolute mortality in the Italian PRISM study was much higher (11.4%) than in the present study (5.2%). The two Italian studies' cohorts were similar for age, type of admission, co-morbidities, and geographic distribution of units, and the only striking difference was in the features of the ICUs. Ten of the 21 units enrolled in the PRISM study (47.6%) were adult units, with a mean of 21 children admitted per year for combined adult–pediatric units and 91 for pediatric units. In our study, all units were pediatric and the mean number of children per year per unit was 181.

In all the subgroup analyses in the PRISM study the lack of fit of the model was due to higher than expected mortality in the low-risk categories (which amounted to 89% of the cohort). For these reasons we presume that differences between observed and expected numbers of deaths were only partially related to the validity or otherwise of the PRISM score. Insufficient experience and skill in treating pediatric patients, probably because of the small number of cases managed per year, seems to be the main reason. As reported in the literature, pediatric patients need to be cared for in a dedicated unit in a children's hospital or pediatric department [16–18]. Competence and skill in treating pediatric patients increase with centralization of pediatric critical care and the numbers of patients observed [19, 20].

One of the reasons why critically ill children are admitted to adult units is that Italian PICUs are not homogeneously distributed through the country: there seem to be enough in the north but not nearly enough in the south, and in the past 10 years this situation has not changed. From 13% to 22% of critically ill children from the south of Italy are treated in hospitals in the north [21], while many children treated in the south are admitted to adult ICUs.

The validity of the PIM2 score might be explained by some intrinsic characteristics that make it less affected by the setting and by the demographic and clinical features of the population admitted to the PICU. One of the main characteristics of this score is that all the variables have to be recorded within 1 h of admission. As 40% of deaths occurred in the first 24 h, PIM2 seems, in this respect, better than other scores that require data collection over the first 12–24 h. A recent study showed that the PIM score can be used within the short time available to pediatric teams retrieving children from other hospitals [22].

The need to validate a severity score separately in each country appears unquestionable [9], given the considerable diversity in structure, organization, staffing, and management among European PICUs [23], and between these and US and Australian units. Nevertheless, only two other studies using the PIM2 score have been published: one in 2005 reported the experience of a single PICU in Croatia [24], and one in 2006 presented an assessment and optimization of mortality prediction tools in the UK [10]. The latter was a large, multicenter, nationwide study and showed good discriminatory power but poor calibration for the PIM, PIM2 and PRISM scores. The authors proposed new UK-specific coefficients to obtain satisfactory calibration.

In our study both the discrimination and calibration through deciles of risk were appropriate, though in some patient categories the predictions were not sufficiently precise.

Assessing the performance of the PIM2 score is an important step for Italian pediatric critical care medicine. The need to work together for epidemiological and clinical studies is pressing, because most units are small (four to six beds) and have no more than 200–400 admissions per year. PIM2 provides a valid mortality index for multicenter national studies and may help improve child healthcare policies throughout the country.

References

1. Tilford JM, Roberson PK, Lensing S, Fiser DH (1998) Differences in pediatric ICU mortality risk over time. Crit Care Med 26:1737–1743

2. Pollack MM, Cuerdon TC, Getson PR (1993) Pediatric intensive care units: results of a national survey. Crit Care Med 21:607–613

3. Gemke RJBJ, Bonsel GJ (1995) Comparative assessment of pediatric intensive care: a national multicenter study. Crit Care Med 23:238–245

4. Slater A, Shann F, for the ANZICS Paediatric Study Group (2004) The suitability of the Pediatric Index of Mortality (PIM), PIM2, the Pediatric Risk of Mortality (PRISM), and PRISM III for monitoring the quality of pediatric intensive care in Australia and New Zealand. Pediatr Crit Care Med 5:447–454

5. Bertolini G, Ripamonti D, Cattaneo A, Apolone G (1998) Pediatric Risk of Mortality: an assessment of its performance in a sample of 26 Italian intensive care units. Crit Care Med 26:1427–1432

6. Pollack MM, Ruttimann UE, Getson PR (1988) Pediatric risk of mortality (PRISM) score. Crit Care Med 16:1110–1116

7. Pollack MM, Patel KM, Ruttimann UE (1996) PRISM III: an updated Pediatric Risk of Mortality score. Crit Care Med 24:743–752

8. Shann F, Pearson G, Slater A, Wilkinson K (1997) Paediatric index of mortality (PIM): a mortality prediction model for children in intensive care. Intensive Care Med 23:201–207

9. Slater A, Shann F, Pearson G, for the PIM Study Group (2003) PIM2: a revised version of the Paediatric Index of Mortality. Intensive Care Med 29:278–285

10. Brady AR, Harrison D, Black S, Jones S, Rowan K, Pearson G, Ratcliffe J, Parry GJ, on behalf of the UK PICOS Study Group (2006) Assessment and optimization of a mortality prediction tool for admission to pediatric intensive care in the United Kingdom. Pediatrics 117:e733–e742

11. van Keulen JG, Gemke RJBJ, Polderman KH (2005) Effect of training and strict guidelines on the reliability of risk adjustment systems in paediatric intensive care. Intensive Care Med 31:1229–1234

12. Bland JM, Altman DG (1986) Statistical methods for assessing agreement between two methods of clinical measurement. Lancet 1:307–310

13. Hosmer DW, Lemeshow S (2000) Applied logistic regression. Wiley, New York

14. Goldstein B, Giroir B, Randolph A, and the Members of the International Consensus Conference on Pediatric Sepsis (2005) International pediatric sepsis consensus conference: definitions for sepsis and organ dysfunction in pediatrics. Pediatr Crit Care Med 6:2–8

15. Miettinen OS (1976) Estimability and estimation in case-referent studies. Am J Epidemiol 103:226–235

16. Frey B, Argent A (2004) Safe paediatric intensive care. 1. Does more medical care lead to improved outcome? Intensive Care Med 30:1041–1046

17. Frey B, Argent A (2004) Safe paediatric intensive care. 2. Workplace organisation, critical incident monitoring and guidelines. Intensive Care Med 30:1292–1297

18. Rosenberg DJ, Moss MM (2004) American College of Critical Care Medicine of the Society of Critical Care Medicine. Guidelines and levels of care for pediatric intensive care units. Crit Care Med 32:2117–2127

19. Kanter RK (2002) Regional variation in child mortality at hospitals lacking a pediatric intensive care unit. Crit Care Med 30:94–99

20. Pearson G, Shann F, Barry P, Vyas J, Thomas D, Powell C, Field D (1997) Should paediatric intensive care be centralised? Trent versus Victoria. Lancet 349:1213–1217

21. Bonati M, Campi R (2005) What can we do to improve child health in southern Italy? PLoS Med 2:e250

22. Tibby SM, Taylor D, Festa M, Hanna S, Hatherill M, Jones G, Habibi P, Durward A, Murdoch IA (2002) A comparison of three scoring systems for mortality risk among retrieved intensive care patients. Arch Dis Child 87:421–425

23. Nipshagen MD, Polderman KH, De Victor D, Gemke RJ (2002) Pediatric intensive care: result of a European survey. Intensive Care Med 28:1797–1803

24. Mestrovic J, Kardum G, Polic B, Omazic A, Stricevic L, Sustic A (2005) Applicability of the Australian and New Zealand Paediatric Intensive Care Registry diagnostic codes and Paediatric Index of Mortality 2 scoring system in a Croatian paediatric intensive care unit. Eur J Pediatr 164:783–784

Additional information

The members of the SISPe group are listed in the appendix.

Members of the Italian Pediatric Sepsis Study (SISPe) Group

P. Vitale, A. Conio (Ospedale Regina Margherita, Turin); G. Ottonello, A. Arena (Ospedale Gaslini, Genoa); C. Gallini, R. Gilodi (Ospedale Sant'Antonio e Biagio e Cesare Arrigo, Alessandria); R. Osello, F. Ferrero (Ospedale Maggiore della Carità, Novara); E. Zoia, A. Mandelli (Ospedale dei Bambini V. Buzzi, Milan); L. Napolitano, S. Leoncino (Fondazione Ospedale Maggiore Policlinico Mangiagalli Regina Elena, Milan); E. Galassini (Ospedale Fatebenefratelli, Milan); D. Codazzi (Ospedali Riuniti, Bergamo); A. Baraldi, S. Molinaro (Spedali Civili, Brescia); P. Santuz (Ospedale Civile Borgo Trento, Verona); P. Cogo, A. Pettenazzo (Ospedale Civile T.I. Pediatrica, Padua); L. Meneghini, F. Giusti (Ospedale Civile Anestesia, Padua); A. Sarti (Ospedale Burlo, Trieste); E. Iannella, S. Baroncini (Ospedale Sant'Orsola Malpighi, Bologna); M. Calamandrei, A. Messeri (Ospedale Meyer, Florence); M. Marano, C. Tomasello (Ospedale Bambino Gesù DEA, Rome); A. Onofri, M. Ferrari (Ospedale Bambino Gesù Anestesia, Rome); M. Piastra, E. Caresta (Ospedale Gemelli, Rome); A. Dolcini (Ospedale Santo Bono, Naples); C. Rovella, A.M. Guddo (Ospedale G.A. Di Cristina, Palermo); M. Astuto, N. Disma (Ospedale Policlinico, Catania); D. Salvo, D. Buono (Ospedale San Vincenzo, Taormina)

Cite this article

Wolfler, A., Silvani, P., Musicco, M. et al. Pediatric Index of Mortality 2 score in Italy: a multicenter, prospective, observational study. Intensive Care Med 33, 1407–1413 (2007). https://doi.org/10.1007/s00134-007-0694-z
