Abstract

Let \({\mathcal {R}}\) denote the generalized Radon transform, which integrates over a family of N-dimensional smooth submanifolds \({\mathcal {S}}_{{{\tilde{y}}}}\subset {\mathcal {U}}\), \(1\le N\le n-1\), where an open set \({\mathcal {U}}\subset {\mathbb {R}}^n\) is the image domain. The submanifolds are parametrized by points \({{\tilde{y}}}\in {{\tilde{{\mathcal {V}}}}}\), where an open set \({{\tilde{{\mathcal {V}}}}}\subset {\mathbb {R}}^n\) is the data domain. We assume that the canonical relation \({{\tilde{C}}}\) from \(T^*{\mathcal {U}}\) to \(T^*{{\tilde{{\mathcal {V}}}}}\) of \({\mathcal {R}}\) is a local canonical graph (when \({\mathcal {R}}\) is viewed as a Fourier Integral Operator). The continuous data are denoted by g, and the reconstruction is \({\check{f}}={\mathcal {R}}^*{\mathcal {B}}g\). Here \({\mathcal {R}}^*\) is a weighted adjoint of \({\mathcal {R}}\), \({\mathcal {B}}\) is a pseudo-differential operator, and g is a conormal distribution. Discrete data consist of the values of g on a regular lattice with step size \(O(\epsilon )\). Let \({\mathcal {S}}\) denote the singular support of \({\check{f}}\), and \({\check{f}}_\epsilon ={\mathcal {R}}^*{\mathcal {B}}g_\epsilon \) be the reconstruction from interpolated discrete data \(g_\epsilon ({{\tilde{y}}})\). Pick a point \(x_0\in {\mathcal {S}}\), i.e. the singularity of \({\check{f}}\) at \(x_0\) is visible from the data. The main result of the paper is the computation of the limit

$$\begin{aligned} \text {DTB}({\check{x}}):=\lim _{\epsilon \rightarrow 0}\epsilon ^\kappa {\check{f}}_\epsilon (x_0+\epsilon {\check{x}}). \end{aligned}$$

Here \(\kappa \ge 0\) is selected based on the strength of the reconstructed singularity, and \({\check{x}}\) is confined to a bounded set. The limiting function \(\text {DTB}({\check{x}})\), which we call the discrete transition behavior, contains full information about the resolution of reconstruction.

1 Introduction

Analysis of resolution of tomographic reconstruction from discrete Radon transform data is a practically important problem. In many applications one needs to know how accurately and with what resolution singularities of the object f (e.g., a jump discontinuity across a smooth surface \({\mathcal {S}}=\text {singsupp}(f)\)) are reconstructed. Let \({\check{f}}\) denote the reconstruction from continuous data, and \({\check{f}}_\epsilon \) denote the reconstruction from discrete data, where \(\epsilon \) represents the data sampling rate. In the latter case, interpolated discrete data are substituted into the “continuous” inversion formula. In [17,18,19,20] the author initiated an analysis of reconstruction, which is focused on the behavior of \({\check{f}}_\epsilon \) near \({\mathcal {S}}\). One of the main results of these papers is the computation of the limit

$$\begin{aligned} \text {DTB}({\check{x}}):=\lim _{\epsilon \rightarrow 0}\epsilon ^\kappa {\check{f}}_\epsilon (x_0+\epsilon {\check{x}}). \end{aligned}$$(1.1)

Here \(x_0\in {\mathcal {S}}\) (\(x_0\) is selected subject to some constraints, see Definition 3.4 below), \(\kappa \ge 0\) is a unique number selected based on the strength of the singularity of \({\check{f}}\) at \(x_0\) (see (4.19)), and \({\check{x}}\) is confined to a bounded set. Let \(f_0\) be the leading singularity of \({\check{f}}\) in a neighborhood of \(x_0\) (see Definition 5.2). For example, if \({\check{f}}\) is a conormal distribution with a homogeneous top symbol, then \(f_0\) is the distribution determined by the top symbol. If \(f_0\) is a homogeneous distribution of degree \(-\kappa \), i.e., \(f_0(t\check{x})=t^{-\kappa }f_0({\check{x}})\) for all \(t>0\), then this value of \(\kappa \) is used in (1.1).

It is important to emphasize that both the size of the neighborhood around \(x_0\) and the data sampling rate go to zero simultaneously in (1.1). The limiting function \(\text {DTB}({\check{x}})\), which we call the discrete transition behavior (or DTB for short), contains complete information about the resolution of reconstruction. The limit in (1.1) is computed for a fixed \(x_0\), so the dependence of the DTB, \(f_0\), and \(\kappa \) on \(x_0\) is omitted for simplicity.

The DTB in (1.1) is a complete description of the reconstruction from discrete data in a neighborhood of a singularity. To put it simply, DTB is an accurate estimate of the reconstruction itself, which is the most one can ever obtain in resolution analysis. Conventional measures of resolution such as Full Width at Half Maximum (FWHM), line pairs per unit length, characteristic scale, etc., are a single number each. Once the full DTB function is computed, getting any desired resolution measurement from it (i.e., converting the DTB into a single number) is trivial. See Remark 4.10 for an example.
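
As a rough illustration of how a full transition profile can be reduced to a single resolution number, the following sketch computes the FWHM of a sampled, single-peaked profile (for instance, the derivative of an edge response). The function name `fwhm` and the Gaussian test profile are assumptions made here for illustration only; this is not the example of Remark 4.10.

```python
import numpy as np

def fwhm(x, profile):
    """Full width at half maximum of a sampled, single-peaked profile.

    x       : increasing sample locations
    profile : nonnegative profile values at x (hypothetical input, e.g. a
              line-spread function derived from a computed DTB)
    """
    x = np.asarray(x, dtype=float)
    p = np.asarray(profile, dtype=float)
    half = p.max() / 2.0
    above = np.where(p >= half)[0]
    i0, i1 = above[0], above[-1]

    def crossing(i_below, i_above):
        # Linear interpolation of the half-maximum crossing between two samples.
        return x[i_below] + (half - p[i_below]) * (x[i_above] - x[i_below]) / (
            p[i_above] - p[i_below]
        )

    left = x[i0] if i0 == 0 else crossing(i0 - 1, i0)
    right = x[i1] if i1 == len(x) - 1 else crossing(i1 + 1, i1)
    return right - left

# Usage: a unit-variance Gaussian has FWHM 2*sqrt(2*ln 2).
x = np.linspace(-5.0, 5.0, 2001)
width = fwhm(x, np.exp(-x**2 / 2.0))
```

Other single-number measures can be extracted from a sampled profile in the same spirit.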

The results obtained to date can be summarized as follows. Even though we study reconstruction from discrete data, the classification of the cases is based on their continuous analogues. In [17] we find \(\text {DTB}({\check{x}})\) for the Radon transform in \({\mathbb {R}}^2\) in two cases: f is static and f changes during the scan (dynamic tomography). In the static case the reconstruction formula is exact (i.e., \({\check{f}}=f\)), and in the dynamic case the reconstruction formula is quasi-exact (i.e., \({\check{f}}-f\) is smoother than f). In [18] we find \(\text {DTB}({\check{x}})\) for the classical Radon transform (CRT) in \({\mathbb {R}}^3\) assuming the reconstruction is exact and f has jumps. In [20] we consider a setting similar to that of [18] (f has jumps), but the reconstruction is quasi-exact and the data come from a wide family of generalized Radon transforms (GRT) in \({\mathbb {R}}^3\). Finally, in [19], the data still come from the classical Radon transform, but the setting is \({\mathbb {R}}^n\), the reconstruction operators are more general, and f may have singularities other than jumps. See Table 1 for a summary of the cases.

Let \({\mathcal {R}}\) denote the GRT, which integrates over a family of N-dimensional smooth submanifolds \({\mathcal {S}}_{{{\tilde{y}}}}\subset {\mathcal {U}}\subset {\mathbb {R}}^n\), \(1\le N\le n-1\). When integration is performed over affine subspaces and \(N<n-1\), the GRT is known as the N-plane transform. If \(N=1\), the GRT is called the ray (or X-ray) transform. The open set \({\mathcal {U}}\) represents the image domain. The submanifolds \({\mathcal {S}}_{{{\tilde{y}}}}\) are parametrized by points \({{\tilde{y}}}\in {{\tilde{{\mathcal {V}}}}}\), where an open set \({{\tilde{{\mathcal {V}}}}}\subset {\mathbb {R}}^n\) is the data domain. Our only other condition on \({\mathcal {R}}\) (besides that \({\mathcal {S}}_{{{\tilde{y}}}}\) be embedded manifolds, see Assumption 3.1(G1)) is that the canonical relation \({{\tilde{C}}}\) from \(T^*{\mathcal {U}}\) to \(T^*{{\tilde{{\mathcal {V}}}}}\) of \({\mathcal {R}}\) be a local canonical graph (see Assumption 3.1(G2) and Sect. 5.1). Here we view \({\mathcal {R}}\) as a Fourier Integral Operator (FIO). Assumption 3.1(G2) implies also that all the singularities of f microlocally near \((x_0,\xi _0)\in T^*{\mathcal {U}}\), where \(\xi _0\) is conormal to \({\mathcal {S}}\) at \(x_0\), are visible in the GRT data \({\mathcal {R}}f({{\tilde{y}}})\), \({{\tilde{y}}}\in {{\tilde{{\mathcal {V}}}}}\) (see Remark 3.2).

Reconstruction from continuous data \(g={\mathcal {R}}f\) is achieved by \(\check{f}={\mathcal {R}}^*{\mathcal {B}}g\). Here \({\mathcal {R}}^*\) is a weighted adjoint of \({\mathcal {R}}\), which integrates over submanifolds \({{\tilde{{\mathcal {T}}}}}_x:=\{{{\tilde{y}}}\in {{\tilde{{\mathcal {V}}}}}:x\in {\mathcal {S}}_{{{\tilde{y}}}}\}\), and \({\mathcal {B}}\) is a fairly general pseudo-differential operator (\(\Psi \)DO). In fact, g does not even have to be the GRT of some f. All we need is that g be a sufficiently regular conormal distribution associated with a smooth hypersurface \({{\tilde{\Gamma }}}\subset {{\tilde{{\mathcal {V}}}}}\) [13, Sect. 18.2]. The data g are sampled on a regular lattice \({{\tilde{y}}}^j=\epsilon D j\), \(j\in {\mathbb {Z}}^n\), covering \({{\tilde{{\mathcal {V}}}}}\), where D is a sampling matrix.

To illustrate the effect of \({\mathcal {B}}\) on reconstruction, suppose \(g={\mathcal {R}}f\), where f is a sufficiently regular conormal distribution associated with a smooth hypersurface \({\mathcal {S}}\subset {\mathcal {U}}\). The choice of \({\mathcal {B}}\) determines whether the reconstruction is quasi-exact (i.e., \({\check{f}}-f\) is smoother than f), preserves the order of singularities of f (\({\check{f}}\) and f are in the same Sobolev space), or is singularity-enhancing (\({\check{f}}\) is more singular than f). A common example of the latter is Lambda (also known as local) tomography [8, 27].

The setting considered in this paper includes all the cases considered previously [17,18,19,20], and is substantially more general than before. In particular, in the previous work we always had \(N=n-1\). Now, N can be any integer \(1\le N\le n-1\). This includes the practically most important case of cone beam CT: \(n=3\) and \(N=1\), on which the overwhelming majority of all medical, industrial, and security CT scans are based (see e.g., [15, 24] and references therein).

The main result of this paper is the derivation of the DTB (1.1) under these general conditions (see Theorem 4.7). Our result shows that even though g is sampled on a regular lattice, due to the geometric properties of the GRT, the resolution is both location- and direction-dependent (see Remark 4.8). We also show that the DTB equals the convolution of the continuous transition behavior (CTB) with the suitably scaled classical Radon transform of the interpolation kernel (see Theorem 5.4). Loosely speaking, the CTB is the continuous analogue of the DTB (see Definition 5.3):

$$\begin{aligned} \text {CTB}({\check{x}}):=\lim _{\epsilon \rightarrow 0}\epsilon ^\kappa {\check{f}}(x_0+\epsilon {\check{x}}). \end{aligned}$$(1.2)

To put it differently, \(\text {CTB}(\check{x})\) is the leading singularity of the reconstruction \({\check{f}}\) at \(x_0\) (the same as \(f_0\) mentioned above). Since the reconstruction is not always intended to compute f or its singularities exactly (e.g., for singularity-enhancing reconstructions), it is important to know what the CTB looks like in these more general situations.
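
The statement that the DTB is a convolution of the CTB with a kernel-dependent function can be visualized with a one-dimensional caricature. The sketch below is only an analogy and does not implement the formula of Theorem 5.4: it assumes a jump-type CTB (a Heaviside step, corresponding to \(\kappa =0\)) and uses a triangle function as a stand-in for the scaled Radon transform of the interpolation kernel. All variable names are hypothetical.

```python
import numpy as np

# Signed distance (in units of epsilon) across the singularity; step 0.005.
h = np.linspace(-4.0, 4.0, 1601)
dh = h[1] - h[0]

# Assumed continuous transition behavior: an ideal unit jump.
ctb = (h >= 0.0).astype(float)

# Stand-in smoothing kernel (triangle of half-width 1), normalized to unit mass.
kernel = np.clip(1.0 - np.abs(h), 0.0, None)
kernel /= kernel.sum() * dh

# Discrete approximation of the convolution (ctb * kernel)(h).
# mode="same" keeps the output aligned with the grid h; values within one
# kernel half-width of the right end of the grid are affected by zero padding.
dtb = np.convolve(ctb, kernel, mode="same") * dh
```

In this toy setting the sharp jump is replaced by a smooth monotone transition of width comparable to the kernel support, which is the qualitative effect of discretization that the DTB quantifies.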

The operator \({\mathcal {R}}^*\) (and, of course, \({\mathcal {R}}\) as well) can be viewed as an FIO, which is associated to a phase function linear in the frequency variables (see [12, Sect. 2.4] and [10, Sect. 1.3]):

Here \(w\in C_0^{\infty }({\mathcal {U}}\times {{\tilde{{\mathcal {V}}}}})\), and \(\Psi \in C^\infty ({\mathcal {U}}\times {{\tilde{{\mathcal {V}}}}})\) is any \({\mathbb {R}}^{n-N}\) valued function that satisfies some nondegeneracy conditions (so that \({{\tilde{C}}}\) is a local canonical graph). Any such \(\Psi \) determines a pair \({\mathcal {R}}\), \({\mathcal {R}}^*\) by setting \({\mathcal {S}}_{{{\tilde{y}}}}=\{x\in {\mathcal {U}}:\,{\Psi (x,{{\tilde{y}}})=0}\}\), \({{\tilde{{\mathcal {T}}}}}_x=\{{{\tilde{y}}}\in {{\tilde{{\mathcal {V}}}}}:\,{\Psi (x,{{\tilde{y}}})=0}\}\), and selecting integration weights to ensure that \({\mathcal {R}}\) and \({\mathcal {R}}^*\) are properly supported FIOs. As is easily seen, any properly supported FIO \(F:{\mathcal {E}}^\prime ({{\tilde{{\mathcal {V}}}}})\rightarrow {\mathcal {D}}^\prime ({\mathcal {U}})\) with the same phase can be represented in the form \(F={\mathcal {R}}^*{\mathcal {B}}\) modulo a regularizing operator for some \({\mathcal {B}}\) (at least, microlocally where \({\mathcal {R}}^*\) is elliptic). Indeed, we can just take \({\mathcal {B}}=({\mathcal {R}}^*)^{-1}F\), where \(({\mathcal {R}}^*)^{-1}\) is a (left and right) parametrix for \({\mathcal {R}}^*\) (see [6, Proposition 5.1.2]). We assume here that an appropriate cut-off is introduced in \(({\mathcal {R}}^*)^{-1}\), so the composition is well-defined. Also, we do not worry about global conditions on \({\mathcal {R}}\), because \({\mathcal {U}}\) and \({{\tilde{{\mathcal {V}}}}}\) are sufficiently small. Then \({\mathcal {B}}\) is a \(\Psi \)DO (its canonical relation \({{\tilde{C}}}\circ {{\tilde{C}}}^t\) is the diagonal from \(T^*{{\tilde{{\mathcal {V}}}}}\) to \(T^*{{\tilde{{\mathcal {V}}}}}\)), and \(F-{\mathcal {R}}^*{\mathcal {B}}\) is regularizing. Thus, the reconstruction algorithm is the application of an FIO F, whose phase function is linear in the frequency variables, to discrete data \(g({{\tilde{y}}}^j)\).

We emphasize \({\mathcal {R}}^*\) when discussing parallels between \({\mathcal {R}}^*\) and FIOs, because in this paper we investigate the resolution of computing \({\mathcal {R}}^*{\mathcal {B}}\) from discrete data (and not of \({\mathcal {R}}\)).

Various methods for applying FIOs to discrete data have been proposed, see e.g., [3,4,5, 35] and references therein. This appears to be the first analysis of resolution of the reconstructed image Fg for fairly general classes of FIOs F and (conormal) distributions g. Some results in this direction are in [20]. Here \(g={\mathcal {I}}f\), where \({\mathcal {I}}\) is an imaging operator (frequently an FIO), and f is the unknown original object. Analyses of this sort are especially important, because they apply not only when an exact inversion formula for \({\mathcal {I}}\) is known (e.g., when \({\mathcal {I}}\) is the classical Radon transform), but even when no such formula exists (e.g., when \({\mathcal {I}}\) is a weighted GRT integrating over nonplanar submanifolds). In the latter cases a common approach is to use a parametrix for \({\mathcal {I}}\) as the reconstruction operator F, so Fg accurately recovers only the singularities of f. Reconstruction of the smooth part of f with this approach is usually not accurate even if the data are ideal (i.e., known exactly everywhere). See, for example, Remark 1 in [29]. Our approach, which we call local resolution analysis, is well suited to the analysis of such linear reconstruction algorithms because the analysis is localized to an immediate neighborhood of the singularities of f.

Let g be a conormal distribution associated with a smooth hypersurface \({{\tilde{\Gamma }}}\), i.e., \(\text {WF}(g)\subset N^*{{\tilde{\Gamma }}}\), where \(N^*{{\tilde{\Gamma }}}\) is the conormal bundle of \({{\tilde{\Gamma }}}\). Even if g is not in the range of \({\mathcal {R}}\), our assumptions ensure that there is a smooth hypersurface \({\mathcal {S}}\subset {\mathcal {U}}\) such that (1) \(N^*{{\tilde{\Gamma }}}={{\tilde{C}}}\circ N^*{\mathcal {S}}\), and (2) \(\text {WF}({\check{f}})\subset N^*{\mathcal {S}}\). Let \({{\tilde{{\mathcal {T}}}}}_{\mathcal {S}}\) be the set of all \({{\tilde{y}}}\in {{\tilde{{\mathcal {V}}}}}\) such that \({\mathcal {S}}_{{{\tilde{y}}}}\) is tangent to \({\mathcal {S}}\). As is well known, \({{\tilde{\Gamma }}}={{\tilde{{\mathcal {T}}}}}_{\mathcal {S}}\).

A common thread through our work is that a well-behaved DTB (i.e., the limit in (1.1)) is guaranteed to exist only if a pair \((x_0,{{\tilde{y}}}_0)\in {\mathcal {U}}\times {{\tilde{{\mathcal {V}}}}}\) is generic. Here \(x_0\in {\mathcal {S}}\), and \({{\tilde{y}}}_0\in {{\tilde{\Gamma }}}\) is the data point from which the singularity of \({\check{f}}\) at \(x_0\) is visible, i.e. \({\mathcal {S}}_{{{\tilde{y}}}_0}\) is tangent to \({\mathcal {S}}\) at \(x_0\). Roughly, the pair is generic if in a small neighborhood of \({{\tilde{y}}}_0\) the sampling lattice \({{\tilde{y}}}^j\) is in general position relative to a local patch of \({{\tilde{\Gamma }}}\) containing \({{\tilde{y}}}_0\) (see Definition 3.4 for a precise statement). The property of a pair to be generic is closely related to uniform distribution theory [21].

If \((x_0,{{\tilde{y}}}_0)\) is not generic, the DTB may be different from the generic one predicted by our theory, and certain non-local artifacts that depend on the shape of \({\mathcal {S}}\) can appear as well (see e.g. [19]) even if \({\mathcal {R}}^*{\mathcal {R}}\) is a \(\Psi \)DO. This shows also that the case of discrete data is more complicated than when the data are continuous, because in the latter case \(\text {WF}({\check{f}})\subset \text {WF}(f)\) whenever \({\mathcal {R}}^*{\mathcal {R}}\) is a \(\Psi \)DO.

Alternative approaches to the study of resolution are in the framework of sampling theory. The key assumption in these approaches is that f be essentially bandlimited in the classical sense [7, 23, 25] or in the semiclassical sense [22, 31, 32]. However, the methodologies of these approaches are quite different from ours, and the results obtained are different as well. The latter include the sampling rate required to resolve details of a given size, and analysis of aliasing artifacts if the sampling requirements are violated.

The paper is organized as follows. In Sect. 2 we introduce the GRT \({\mathcal {R}}\) and its adjoint \({\mathcal {R}}^*\), the sampling matrix D, the sampling lattice \({{\tilde{y}}}^j=\epsilon Dj\), \(j\in {\mathbb {Z}}^n\), and fix a pair \((x_0,{{\tilde{y}}}_0)\in {\mathcal {U}}\times {{\tilde{{\mathcal {V}}}}}\) such that \({\mathcal {S}}_{{{\tilde{y}}}_0}\) is tangent to \({\mathcal {S}}\) at \(x_0\). In Sect. 3 we select convenient coordinates both in the data and image domains, state the main geometric assumptions about \({\mathcal {R}}\) and the shape of \({\mathcal {S}}\), and define a generic pair \((x_0,{{\tilde{y}}}_0)\). In Sect. 4 we formulate the main assumptions about the operator \({\mathcal {B}}\), interpolation kernel \(\varphi \), and data function g. Essentially, \({\mathcal {B}}\) is a \(\Psi \)DO with a homogeneous top symbol. Likewise, g is a conormal distribution with a homogeneous top symbol associated with a smooth hypersurface \({{\tilde{\Gamma }}}\). The top symbol decays sufficiently fast, so g is a continuous function. We do not require that g be in the range of \({\mathcal {R}}\). We also give a formula for \(\kappa \) in terms of N and the orders of \({\mathcal {B}}\) and g (see (4.19)). Then we state our main result as Theorem 4.7, where explicit formulas for the DTB are provided.

In Sect. 5 we look at \({\mathcal {R}}\) as an FIO, and discuss some of our assumptions from the FIO perspective. We also state a theorem about the relationship between the DTB and CTB (Theorem 5.4), and provide some intuition behind our results.

The proof of Theorem 4.7 is spread over Sects. 6–11. Preliminary results are in Sects. 6 and 7. In Sect. 6 we show that \({\mathcal {T}}_{\mathcal {S}}\) and \({\mathcal {T}}_{x_0}\) are tangent at \(y_0\) and investigate the contact. All calculations are done in the new y-coordinates, so we drop the tildes in \({{\tilde{y}}}\), \({{\tilde{\Gamma }}}\), \({{\tilde{{\mathcal {V}}}}}\), etc. Let \(g_\epsilon \) be the interpolated data (see (4.6)). In Sect. 7 we obtain various bounds on g, \(g_\epsilon \), \(g-g_\epsilon \), and their derivatives. The core of the proof is in Sects. 8–11. To help the reader, Sect. 8.1 describes the main ideas of the proof and outlines what is done in each of the Sects. 8–11.

Theorem 5.4 is proven in Sect. 12. In Appendix B we show that our assumptions about g are reasonable. For example, they are satisfied when f is a conormal distribution associated with a smooth hypersurface \({\mathcal {S}}\). The fact that \(g={\mathcal {R}}f\) is conormal follows from the calculus of FIOs, see e.g. [33, Sect. VIII.5]. We present the necessary calculations here, because they are short, make the paper self-contained, and these calculations are used elsewhere in the paper. Finally, proofs of various auxiliary lemmas are collected in the other appendices.

2 Preliminaries

Let \({{\tilde{\Phi }}}(t,{{\tilde{y}}})\in C^\infty ({\mathbb {R}}^N\times {{\tilde{{\mathcal {V}}}}})\) be a defining function for the GRT \({\mathcal {R}}\). Here \(t\in {\mathbb {R}}^N\) is an auxiliary variable that parametrizes smooth manifolds \({\mathcal {S}}_{{{\tilde{y}}}}:=\{x\in {\mathcal {U}}:x={{\tilde{\Phi }}}(t,{{\tilde{y}}}),t\in {\mathbb {R}}^N\}\) over which \({\mathcal {R}}\) integrates, an open set \({\mathcal {U}}\subset {\mathbb {R}}^n\) is the image domain, \({{\tilde{y}}}\in {{\tilde{{\mathcal {V}}}}}\) is the data domain variable, and an open set \({{\tilde{{\mathcal {V}}}}}\subset {\mathbb {R}}^n\) is the data domain. Both \({\mathcal {U}}\) and \({{\tilde{{\mathcal {V}}}}}\) are endowed with the usual Euclidean metric. The corresponding GRT is given by

$$\begin{aligned} ({\mathcal {R}}f)({{\tilde{y}}})=\int _{{\mathcal {S}}_{{{\tilde{y}}}}}f(x)b(x,{{\tilde{y}}})\text {d}x,\ {{\tilde{y}}}\in {{\tilde{{\mathcal {V}}}}}, \end{aligned}$$(2.1)

where \(b\in C_0^{\infty }({\mathcal {U}}\times {{\tilde{{\mathcal {V}}}}})\), \(\text {d}x=(\det G^{{\mathcal {S}}}(t,{{\tilde{y}}}))^{1/2}\text {d}t\) is the volume form on \({\mathcal {S}}_{{{\tilde{y}}}}\) induced by the embedding \({\mathcal {S}}_{{{\tilde{y}}}}\hookrightarrow {\mathcal {U}}\), and \(G^{{\mathcal {S}}}\) is the Gram matrix

$$\begin{aligned} G^{{\mathcal {S}}}_{jk}(t,{{\tilde{y}}})=\frac{\partial {{\tilde{\Phi }}}(t,{{\tilde{y}}})}{\partial t_j}\cdot \frac{\partial {{\tilde{\Phi }}}(t,{{\tilde{y}}})}{\partial t_k},\ 1\le j,k\le N. \end{aligned}$$(2.2)

Therefore, more explicitly,

$$\begin{aligned} ({\mathcal {R}}f)({{\tilde{y}}})=\int _{{\mathbb {R}}^N}f({{\tilde{\Phi }}}(t,{{\tilde{y}}}))b({{\tilde{\Phi }}}(t,{{\tilde{y}}}),{{\tilde{y}}})(\det G^{{\mathcal {S}}}(t,{{\tilde{y}}}))^{1/2}\text {d}t. \end{aligned}$$(2.3)

We assume that f is compactly supported, \(\text {supp}(f)\subset {\mathcal {U}}\), and f is sufficiently smooth, so that \({\mathcal {R}}f({{\tilde{y}}})\) is a continuous function. Reconstruction from exact (i.e., continuous) data is computed by

$$\begin{aligned} {\check{f}}(x)=({\mathcal {R}}^*{\mathcal {B}}g)(x)=\int _{{{\tilde{{\mathcal {T}}}}}_x}({\mathcal {B}}g)({{\tilde{y}}})w(x,{{\tilde{y}}})\text {d}{{\tilde{y}}},\ g={\mathcal {R}}f, \end{aligned}$$(2.4)

where \(w\in C_0^{\infty }({\mathcal {U}}\times {{\tilde{{\mathcal {V}}}}})\), \(\text {d}{{\tilde{y}}}\) is the volume form on \({{\tilde{{\mathcal {T}}}}}_x:=\{{{\tilde{y}}}\in {{\tilde{{\mathcal {V}}}}}:x\in {\mathcal {S}}_{{{\tilde{y}}}}\}\) (which is induced by the embedding \({{\tilde{{\mathcal {T}}}}}_x\hookrightarrow {{\tilde{{\mathcal {V}}}}}\), see the paragraph following (4.1) below), \({\mathcal {R}}^*\) is a weighted adjoint of \({\mathcal {R}}\), and \({\mathcal {B}}\) is a fairly arbitrary pseudo-differential operator (\(\Psi \)DO). Lemma 3.7 below asserts that \({{\tilde{{\mathcal {T}}}}}_x\subset {{\tilde{{\mathcal {V}}}}}\) is a smooth, embedded submanifold. The reconstruction formula in (2.4) is of the Filtered-Backprojection type. Application of \({\mathcal {B}}\) is the filtering step, and integration with respect to \({{\tilde{y}}}\) (i.e., the application of \({\mathcal {R}}^*\)) is the backprojection step. By reconstruction here we mean any function (or, distribution) \({\check{f}}\) that is reconstructed from the data using (2.4). The reconstruction is intended to recover the visible wave-front set of f, but the strength of the singularities of \({\check{f}}\) and f need not match.
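
The definition of the GRT with the volume element \((\det G^{{\mathcal {S}}})^{1/2}\text {d}t\) can be checked numerically on a simple example. The sketch below is an illustration under assumptions that are not part of the paper: \(n=2\), \(N=1\), the curves \({\mathcal {S}}_{{{\tilde{y}}}}\) are circles with center \(({{\tilde{y}}}_1,0)\) and radius \({{\tilde{y}}}_2\), the Gram matrix is approximated by central finite differences, and the helper names `gram_det_sqrt`, `grt`, and `circle` are hypothetical.

```python
import numpy as np

def gram_det_sqrt(phi, t, y, h=1e-6):
    """sqrt(det G) with G = Phi_t^T Phi_t, Phi_t by central differences."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    cols = []
    for k in range(t.size):
        e = np.zeros_like(t)
        e[k] = h
        cols.append((phi(t + e, y) - phi(t - e, y)) / (2.0 * h))
    jac = np.stack(cols, axis=1)          # n x N matrix of dPhi/dt
    return np.sqrt(np.linalg.det(jac.T @ jac))

def grt(f, b, phi, y, t_nodes, t_weights):
    """Quadrature approximation of the weighted integral of f over S_y."""
    total = 0.0
    for t, w in zip(t_nodes, t_weights):
        x = phi(t, y)
        total += w * f(x) * b(x, y) * gram_det_sqrt(phi, t, y)
    return total

def circle(t, y):
    """Assumed defining function: circle with center (y[0], 0), radius y[1]."""
    return np.array([y[0] + y[1] * np.cos(t[0]), y[1] * np.sin(t[0])])

# Periodic trapezoidal rule in t on [0, 2*pi).
M = 200
t_nodes = np.linspace(0.0, 2.0 * np.pi, M, endpoint=False).reshape(M, 1)
t_weights = np.full(M, 2.0 * np.pi / M)

y = np.array([0.3, 0.5])
length = grt(lambda x: 1.0, lambda x, yy: 1.0, circle, y, t_nodes, t_weights)
```

With \(f\equiv 1\) and \(b\equiv 1\) the result approximates the circumference \(2\pi {{\tilde{y}}}_2\), which confirms that the Gram factor supplies the correct arc-length element.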

Let D be a data sampling matrix, \(\det D=1\). Discrete data \(g({{\tilde{y}}}^j)\) are given on the lattice

$$\begin{aligned} {{\tilde{y}}}^j=\epsilon Dj,\ j\in {\mathbb {Z}}^n. \end{aligned}$$(2.5)

Reconstruction from discrete data is given by the same formula (2.4), where we replace g with its interpolated version \(g_\epsilon \) (see (4.6)).
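
For concreteness, the sampling lattice \({{\tilde{y}}}^j=\epsilon Dj\) can be enumerated as follows. This is a minimal sketch: the function name `lattice_points`, the choice of a ball as the data domain, and the sample matrix are assumptions made for illustration. The index range uses the bound \(|j|\le |{{\tilde{y}}}^j|/(\epsilon \sigma _{\min })\), where \(\sigma _{\min }\) is the smallest singular value of D.

```python
import itertools
import numpy as np

def lattice_points(D, eps, radius):
    """All points eps * D @ j, j in Z^n, with Euclidean norm <= radius."""
    D = np.asarray(D, dtype=float)
    n = D.shape[0]
    sigma_min = np.linalg.svd(D, compute_uv=False).min()
    # |j| = |D^{-1} y| / eps <= |y| / (eps * sigma_min), so each |j_k| <= j_max.
    j_max = int(np.floor(radius / (eps * sigma_min) + 1e-9))
    points = []
    for j in itertools.product(range(-j_max, j_max + 1), repeat=n):
        p = eps * D @ np.array(j, dtype=float)
        if np.linalg.norm(p) <= radius:
            points.append(p)
    return np.array(points)

# Usage: a sheared lattice in R^2 with det D = 1.
D = np.array([[1.0, 0.5],
              [0.0, 1.0]])
pts = lattice_points(D, eps=0.1, radius=0.35)
```

Since \(\det D=1\), the number of lattice points in a region of volume V is approximately \(V/\epsilon ^n\).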

We assume that \({\mathcal {S}}:=\text {singsupp}(f)\) is a smooth hypersurface. Pick some \(x_0\in {\mathcal {S}}\). This point is fixed throughout the paper. Our goal is to study the function reconstructed from discrete data in a neighborhood of \(x_0\). All our results are local, so we assume that \({\mathcal {U}}\) is a sufficiently small neighborhood of \(x_0\). Let \({{\tilde{y}}}_0\in {{\tilde{{\mathcal {V}}}}}\) be such that \({\mathcal {S}}_{{{\tilde{y}}}_0}\) is tangent to \({\mathcal {S}}\) at \(x_0\). Only a small neighborhood of \({{\tilde{y}}}_0\) is relevant for the recovery of the singularity of f at \(x_0\). Hence we assume that \({{\tilde{{\mathcal {V}}}}}\) is a sufficiently small neighborhood of \({{\tilde{y}}}_0\).

3 Selecting Coordinates, Geometric Assumptions

Let \(\Psi (x)=0\) be an equation of \({\mathcal {S}}\), and \(\text {d}\Psi (x)\not =0\), \(x\in {\mathcal {U}}\). Introduce the matrix

which is the Jacobian matrix for the equations

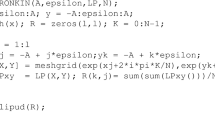

where \((t,{{\tilde{y}}})\in {\mathbb {R}}^N\times {{\tilde{{\mathcal {V}}}}}\) are the unknowns. See Fig. 1 for an illustration of \({\mathcal {S}}\) and \(\xi _0\). For convenience, in (3.1) and in the rest of the paper we frequently drop the arguments of \(\Psi \), \({{\tilde{\Phi }}}\), and similar functions whenever they are \(x_0\) and \((t_0,{{\tilde{y}}}_0)\), as appropriate. Here \(t_0\) is the unique point such that \(x_0={{\tilde{\Phi }}}(t_0,{{\tilde{y}}}_0)\). Our convention is that a variable in the subscript of a function denotes the partial derivative of the function with respect to the variable, e.g.,

Assumption 3.1 (Geometry of the GRT)

- G1. rank \({{\tilde{\Phi }}}_t=N\);
- G2. \(\det {{\tilde{M}}}\not =0\).

Remark 3.2

Assumption G1 implies that \({\mathcal {S}}_{{{\tilde{y}}}}\subset {\mathcal {U}}\) is a smooth N-dimensional embedded submanifold for any \({{\tilde{y}}}\in {{\tilde{{\mathcal {V}}}}}\) provided that \({\mathcal {U}}\ni x_0\) and \({{\tilde{{\mathcal {V}}}}}\ni {{\tilde{y}}}_0\) are sufficiently small neighborhoods. Assumption G2 guarantees that any singularity of f microlocally near \((x_0,\xi _0)\) is visible from the GRT data \({\mathcal {R}}f({{\tilde{y}}})\), \({{\tilde{y}}}\in {{\tilde{{\mathcal {V}}}}}\). In addition, Assumption G2 ensures that \({{\tilde{{\mathcal {T}}}}}_x=\{{{\tilde{y}}}\in {{\tilde{{\mathcal {V}}}}}:x\in {\mathcal {S}}_{{{\tilde{y}}}}\}\) is a codimension \(n-N\) embedded manifold for any \(x\in {\mathcal {U}}\) (see (3.14) and Lemma 3.7).

Example 3.3

Excluding some exceptional cases, Assumptions 3.1 are satisfied by the X-ray transform in \({\mathbb {R}}^3\), where \({\mathcal {S}}_{{{\tilde{y}}}}\) are lines intersecting a smooth curve \({\mathcal {C}}\). Since \({\mathcal {S}}_{{{\tilde{y}}}}\) are lines, it is trivial that one can find \({{\tilde{\Phi }}}\) so that G1 holds. It is well-known that G2 holds for some \({{\tilde{\Phi }}}\) if the plane \(\Pi _0:=\{x\in {\mathcal {U}}:\xi _0\cdot (x-x_0)=0\}\) intersects \({\mathcal {C}}\) transversely. By (3.1) and (3.2), this is, essentially, the Tuy condition [34]. Assumption 3.1(G2) fails to hold if \(\Pi _0\) is either tangent to \({\mathcal {C}}\) or does not intersect it. In the latter case the singularity at \((x_0,\xi _0)\) is invisible.
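
The transversality requirement on \(\Pi _0\) and \({\mathcal {C}}\) can be tested numerically for a given source curve. In the sketch below the helix, its radius and pitch, and the function names are hypothetical choices made for illustration. The code looks for parameters s at which \(\xi _0\cdot ({\mathcal {C}}(s)-x_0)\) changes sign and reports \(\xi _0\cdot {\mathcal {C}}'(s)\) there; a nonzero value means that the intersection is transversal.

```python
import numpy as np

R, PITCH = 1.0, 0.5  # hypothetical helix radius and pitch

def source(s):
    """Assumed source curve C(s): a helix around the x3-axis."""
    return np.array([R * np.cos(s), R * np.sin(s), PITCH * s / (2.0 * np.pi)])

def source_tangent(s):
    """Derivative C'(s) of the helix."""
    return np.array([-R * np.sin(s), R * np.cos(s), PITCH / (2.0 * np.pi)])

def plane_crossings(x0, xi0, s_grid, iters=60):
    """Find s where the plane xi0.(x - x0) = 0 crosses the curve.

    Returns a list of pairs (s, xi0 . C'(s)). Only sign changes on s_grid are
    detected, so tangential contacts without a sign change are missed.
    """
    F = lambda s: float(xi0 @ (source(s) - x0))
    found = []
    for a, b in zip(s_grid[:-1], s_grid[1:]):
        fa, fb = F(a), F(b)
        if fa * fb >= 0.0:
            continue
        for _ in range(iters):           # bisection on [a, b]
            m = 0.5 * (a + b)
            fm = F(m)
            if fa * fm <= 0.0:
                b = m
            else:
                a, fa = m, fm
        s = 0.5 * (a + b)
        found.append((s, float(xi0 @ source_tangent(s))))
    return found

# Usage: the plane x1 = 0 through x0 meets one helix turn at s = pi/2, 3*pi/2.
x0 = np.array([0.0, 0.0, 0.1])
xi0 = np.array([1.0, 0.0, 0.0])
crossings = plane_crossings(x0, xi0, np.linspace(0.0, 2.0 * np.pi, 300))
```

An empty list indicates that no crossing was detected on the grid, and a crossing with \(\xi _0\cdot {\mathcal {C}}'(s)\) close to zero indicates a nearly tangential intersection.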

Definition 3.4

The pair \((x_0,{{\tilde{y}}}_0)\), such that \({\mathcal {S}}_{{{\tilde{y}}}_0}\) is tangent to \({\mathcal {S}}\) at \(x_0\), is generic for the sampling matrix D if

- (1) There is no vector \(m\in {\mathbb {Z}}^n\), \(m\not =0\), such that the 1-form

  $$\begin{aligned} {{\tilde{\omega }}}=(D^{-T}m)_1\text {d}{{\tilde{y}}}_1+\dots +(D^{-T}m)_n\text {d}{{\tilde{y}}}_n\in T_{{{\tilde{y}}}_0}^*{{\widetilde{{\mathcal {T}}}}}_{x_0} \end{aligned}$$(3.4)

  vanishes identically on \(T_{{{\tilde{y}}}_0}{{\widetilde{{\mathcal {T}}}}}_{x_0}\), i.e. \({{\tilde{\omega }}}\not \in N^*_{{{\tilde{y}}}_0}{{\widetilde{{\mathcal {T}}}}}_{x_0}\), and

- (2) The matrix \((\Psi \circ {{\tilde{\Phi }}})_{tt}\) is either positive definite or negative definite.

In simple terms, condition (1) says that there is no nonzero vector \(m\in {\mathbb {Z}}^n\) such that \(D^{-T}m\) is orthogonal to \({{\tilde{{\mathcal {T}}}}}_{x_0}\) at \({{\tilde{y}}}_0\). For more information about this condition see Remark 5.1.
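
Condition (1) can be probed numerically by a finite search. The sketch below assumes that a basis of the tangent space \(T_{{{\tilde{y}}}_0}{{\tilde{{\mathcal {T}}}}}_{x_0}\) is available as the columns of an \(n\times N\) matrix; the function name `resonant_vectors` and the search bound are hypothetical. A finite search can only exhibit violations of the condition and cannot prove that a pair is generic.

```python
import itertools
import numpy as np

def resonant_vectors(D, tangent_basis, m_max, tol=1e-10):
    """Nonzero integer m with |m_k| <= m_max and D^{-T} m orthogonal to the tangent space.

    tangent_basis : n x N matrix whose columns span the tangent space
                    (assumed to be supplied by the user).
    """
    D = np.asarray(D, dtype=float)
    T = np.asarray(tangent_basis, dtype=float)
    D_inv_T = np.linalg.inv(D).T
    n = D.shape[0]
    hits = []
    for m in itertools.product(range(-m_max, m_max + 1), repeat=n):
        if not any(m):
            continue                      # skip m = 0
        v = D_inv_T @ np.array(m, dtype=float)
        # v is orthogonal to the tangent space iff T^T v = 0.
        if np.linalg.norm(T.T @ v) <= tol * max(1.0, np.linalg.norm(v)):
            hits.append(m)
    return hits

# Usage with n = 2, N = 1 and D = I.
I2 = np.eye(2)
aligned = resonant_vectors(I2, [[1.0], [0.0]], m_max=3)              # tangent along a lattice axis
irrational = resonant_vectors(I2, [[1.0], [np.sqrt(2.0)]], m_max=20)  # irrational slope
```

In the first case the tangent line is aligned with a lattice direction and the search returns violating vectors such as \(m=(0,1)\); in the second case the slope is irrational and no violation is found within the search range.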

In the rest of the paper, we assume that the pair \((x_0,{{\tilde{y}}}_0)\) is generic in the sense of Definition 3.4, and \((\Psi \circ {{\tilde{\Phi }}})_{tt}\) is negative definite. The latter assumption is not restrictive, because the positive and negative definite cases can be converted into each other by a change of the x coordinates. To illustrate the notation convention described above, \((\Psi \circ {{\tilde{\Phi }}})_{tt}\) stands for the matrix of the second derivatives of the function \((\Psi \circ {{\tilde{\Phi }}})(t,{{\tilde{y}}})\) with respect to t evaluated at \((t_0,{{\tilde{y}}}_0)\).
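
The definiteness of \((\Psi \circ {{\tilde{\Phi }}})_{tt}\) is easy to check numerically. The sketch below is an illustration under assumptions not taken from the paper: \({\mathcal {S}}\) is the unit sphere in \({\mathbb {R}}^3\) with \(\Psi (x)=1-|x|^2\), \({\mathcal {S}}_{{{\tilde{y}}}_0}\) is the plane tangent to it at \(x_0=(1,0,0)\), and the Hessian is approximated by central finite differences. The helper names are hypothetical.

```python
import numpy as np

def hessian(fun, t0, h=1e-4):
    """Central finite-difference Hessian of a scalar function at t0."""
    t0 = np.asarray(t0, dtype=float)
    N = t0.size
    E = np.eye(N) * h
    H = np.zeros((N, N))
    for i in range(N):
        for j in range(N):
            H[i, j] = (fun(t0 + E[i] + E[j]) - fun(t0 + E[i] - E[j])
                       - fun(t0 - E[i] + E[j]) + fun(t0 - E[i] - E[j])) / (4.0 * h * h)
    return H

def definiteness(H, tol=1e-6):
    """Classify a symmetric matrix by the signs of its eigenvalues."""
    ev = np.linalg.eigvalsh(0.5 * (H + H.T))
    if np.all(ev > tol):
        return "positive definite"
    if np.all(ev < -tol):
        return "negative definite"
    return "indefinite or degenerate"

# Assumed example: unit sphere and its tangent plane at x0 = (1, 0, 0).
psi = lambda x: 1.0 - x @ x
phi = lambda t: np.array([1.0, t[0], t[1]])   # parametrization of the tangent plane
H = hessian(lambda t: psi(phi(t)), [0.0, 0.0])
kind = definiteness(H)
```

In this example \((\Psi \circ {{\tilde{\Phi }}})(t)=-|t|^2\), so the Hessian equals \(-2I_2\) and the matrix is negative definite.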

Using Assumption 3.1(G1), select x coordinates so that

We also denote \(x^\perp :=(x_2,\dots ,x_n)^T\). See Fig. 1 for an illustration of the coordinates \(x_1\) and \(x^\perp \) (the plane \(\{x:x_1=0,x^\perp \in {\mathbb {R}}^{n-1}\}\) is shown as a shaded oval). The notation \({{\tilde{\Phi }}}_{*}^{(j)}\), \(j=1,2\), stands for the derivative of the j-th group of coordinates of \(x={{\tilde{\Phi }}}(t,{{\tilde{y}}})\) (either along \(x^{(1)}\) or along \(x^{(2)}\)) with respect to \(*=t\) or \({{\tilde{y}}}\). By the last inequality in (3.5), we can select \(x^{(2)}\) as the t variables. With this choice we have (with some other defining function \({{\tilde{\Phi }}}\)):

where \(I_N\) is the \(N\times N\) identity matrix. This definition of \({{\tilde{\Phi }}}\) is assumed in what follows.

We also need a convenient y coordinate system. Since the data points in (2.5) are given in the original coordinates, we have to keep track of both the original and new coordinates. Points in the original and new y coordinates are denoted \({{\tilde{y}}}\) and y, respectively. Data domains in the original and new y coordinates are denoted \({{\tilde{{\mathcal {V}}}}}\) and \({\mathcal {V}}\), respectively. Suppose that \({{\tilde{y}}}=Uy+{{\tilde{y}}}_0\) and \({{\tilde{{\mathcal {V}}}}}=U{\mathcal {V}}+{{\tilde{y}}}_0\), where U is some orthogonal matrix \(U:{\mathbb {R}}^n\rightarrow {\mathbb {R}}^n\).

In what follows we will be using mostly the new y coordinates, so we further modify the defining function:

Since the x variable remains the same, the function \(\Phi (x^{(2)},y)\) satisfies (3.6) (with the derivative computed at \((x^{(2)}_0,y_0)=(0,0)\)):

For the same reason, condition (2) in Definition 3.4 implies that the matrix \((\Psi \circ \Phi )_{x^{(2)}x^{(2)}}\) is either positive definite or negative definite. The following lemma is proven in Appendix A.

Lemma 3.5

Suppose \(x_0\in {\mathcal {S}}\), \({\mathcal {S}}_{{{\tilde{y}}}_0}\) is tangent to \({\mathcal {S}}\) at \(x_0\), \(\det (\Psi \circ {{\tilde{\Phi }}})_{tt}\not =0\), and Assumptions 3.1 hold. The orthogonal matrix U and the function \(\Psi \), which satisfies (3.5), can be selected so that the new y coordinates and the new function \(\Phi \) satisfy

and

Remark 3.6

The representations \(y=(y_1,y^\perp )^T\) and \(y=(y^{(1)},y^{(2)})^T\) are two different ways to split the y coordinates, which are convenient in different contexts. See Fig. 1 for an illustration of the coordinates \(y_1\) and \(y^\perp \) (the plane \(\{y:y_1=0,y^\perp \in {\mathbb {R}}^{n-1}\}\) is shown as a shaded oval).

Similarly to (3.1), we compute the matrix M by replacing \({{\tilde{y}}}\) and \({{\tilde{\Phi }}}\) with y and \(\Phi \), respectively. In block form

In the selected x- and y-coordinates (see (3.5), (3.10)), the matrix M becomes

By (3.12), Assumption 3.1(G2) is equivalent to

Let us introduce two important sets:

Here and in what follows, with some mild abuse of notation, \({\mathcal {S}}_y\) denotes \({\mathcal {S}}_{{{\tilde{y}}}(y)}\). See Fig. 1 for an illustration of \({\mathcal {S}}_{y_0}\), \({\mathcal {T}}_{\mathcal {S}}\), and \({\mathcal {T}}_{x_0}\).

Lemma 3.7

Suppose \(x_0\in {\mathcal {S}}\), \({\mathcal {S}}_{y_0}\) is tangent to \({\mathcal {S}}\) at \(x_0\), \(\det (\Psi \circ \Phi )_{x^{(2)}x^{(2)}}\not =0\), and Assumptions 3.1 hold. One can find sufficiently small neighborhoods \({\mathcal {U}}\ni x_0\) and \({\mathcal {V}}\ni y_0\) so that

- (1) \({\mathcal {T}}_{\mathcal {S}}\subset {\mathcal {V}}\) is a smooth, codimension one embedded manifold; and
- (2) \({\mathcal {T}}_x\subset {\mathcal {V}}\) is a smooth, codimension \(n-N\) embedded manifold for any \(x\in {\mathcal {U}}\).

Proof

To find \({\mathcal {T}}_{\mathcal {S}}\), we solve the equations

for \(x^{(2)}\) and \(y_1\) in terms of \(y^\perp \). The Jacobian matrix is

By condition (2) in Definition 3.4 (with \(t=x^{(2)}\)), \(\det (\Psi \circ \Phi )_{x^{(2)}x^{(2)}}\not =0\). Moreover, \((\Psi \circ \Phi )_{x^{(2)}}=0\) and \((\Psi \circ \Phi )_{y_1}\not =0\), and the Jacobian is non-degenerate. Therefore, solving (3.15) determines \(x^{(2)}(y^\perp )\) and \(y_1(y^\perp )\) as smooth functions of \(y^\perp \) in a small neighborhood of \(y^\perp =0\). In particular, \(y_1(y^\perp )\) is a local equation of the smooth, codimension 1 embedded submanifold \({\mathcal {T}}_{\mathcal {S}}\subset {\mathcal {V}}\). The point of tangency \(x_*=\Phi (x^{(2)}(y^\perp ),(y_1(y^\perp ),y^\perp ))\) also depends smoothly on \(y^\perp \).

To prove assertion (2), solve \(x^{(1)}=\Phi ^{(1)}(x^{(2)},y)\) for \(y^{(1)}\). This gives an equation for \({\mathcal {T}}_x\) in the form \(y=Y(y^{(2)},x)\) (where \(y^{(2)}\equiv Y^{(2)}(y^{(2)},x)\)). The property \(\det \Phi _{y^{(1)}}^{(1)}\not =0\) (cf. (3.10)) implies that \({\mathcal {T}}_x\subset {\mathcal {V}}\) is a smooth, codimension \(n-N\) embedded submanifold for any \(x\in {\mathcal {U}}\) provided that both \({\mathcal {U}}\) and \({\mathcal {V}}\) are sufficiently small. Since \(\Phi ^{(1)}_{y^{(2)}}=0\), we also get

\(\square \)

In what follows, we use

Thus \(\Theta _0\in T_{y_0}^*{\mathcal {V}}\) is the pull-back of \(\xi _0\in T_{x_0}^*{\mathcal {U}}\) by \(\Phi (0,\cdot )\). By (3.9), \(\Theta _0=\text {d}y_1\) (see Fig. 1).

4 Main Assumptions and Main Result

To simplify notation, in the rest of the paper we set \(b(x,y):=b(x,{{\tilde{y}}}(y))\), \(w(x,y):=w(x,{{\tilde{y}}}(y))\), and \(g(y):=g({{\tilde{y}}}(y))\). The original versions of these functions are used only in Sect. 2. Thus the reconstruction is computed by

where \(\text {d}y=(\det G^{{\mathcal {T}}}(y^{(2)},x))^{1/2}\text {d}y^{(2)}\) is the volume form on \({\mathcal {T}}_x\), \(G^{{\mathcal {T}}}\) is the Gram matrix:

\({\mathcal {B}}\) is a \(\Psi \)DO

and \({\mathcal {F}}\) is the Fourier transform in \({\mathbb {R}}^n\). To clarify the use of indices in (4.2), if \(y^{(2)}\) is viewed as part of y, then \(y^{(2)}_j=y_{n-N+j}\), \(1\le j\le N\). Using (2.5) and that \({{\tilde{y}}}=Uy+{{\tilde{y}}}_0\), the discrete data \(g({{\hat{y}}}^j)\) are known at the points

Reconstruction from discrete data is given by

where \(g_\epsilon (y)\) is the interpolated data:

\(\varphi \) is an interpolation kernel, \(\vartheta =\sigma _{\min }^{-1}\sup _{{{\tilde{y}}}\in {{\tilde{{\mathcal {V}}}}}}|{{\tilde{y}}}|\), and \(\sigma _{\min }\) is the smallest singular value of the sampling matrix D. The value of \(\vartheta \) is selected in such a way that \({{\hat{y}}}^j\in \text {supp}(g)\) implies \(|j|\le \vartheta /\epsilon \). In what follows we call (4.1) reconstruction from continuous data (as opposed to reconstruction from discrete data (4.5)). Denote \({\mathbb {N}}=\{1,2,\dots \}\) and \({\mathbb {N}}_0=\{0\}\cup {\mathbb {N}}\).
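As an illustration of how interpolated data behave near a singularity, here is a minimal one-dimensional sketch. It assumes a lattice \(\epsilon {\mathbb {Z}}\) with no offset, a linear B-spline (tent) kernel, and an interpolation rule of the form \(g_\epsilon (y)=\sum _j\varphi (y/\epsilon -j)g(\epsilon j)\); the names `tent` and `interpolate` are hypothetical and are not taken from the paper.

```python
import math


def tent(t: float) -> float:
    """Linear B-spline (tent) kernel, supported on [-1, 1]. Illustrative choice."""
    return max(0.0, 1.0 - abs(t))


def interpolate(g, eps: float, y: float, kernel=tent, radius: int = 1) -> float:
    """Evaluate sum_j kernel(y/eps - j) * g(eps*j) on the lattice eps*Z (assumed form)."""
    j0 = math.floor(y / eps)
    total = 0.0
    # Only lattice points within the kernel support can contribute.
    for j in range(j0 - radius, j0 + radius + 2):
        total += kernel(y / eps - j) * g(eps * j)
    return total


def step(y: float) -> float:
    """Data with a jump at y = 0."""
    return 1.0 if y >= 0.0 else 0.0


eps = 0.1
far_left = interpolate(step, eps, -0.2)    # away from the jump
midway = interpolate(step, eps, -0.05)     # half a lattice step from the jump
far_right = interpolate(step, eps, 0.2)    # away from the jump
```

In this toy setting the jump of the data is replaced by a ramp whose width is one lattice step, which is the one-dimensional analogue of the \(O(\epsilon )\) smoothing studied below.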

Definition 4.1

Given an open set \(V\subset {\mathbb {R}}^n\), \(r\in {\mathbb {R}}\), and \(N\in {\mathbb {N}}\), \(S^r(V\times {\mathbb {R}}^N)\) denotes the space of \(C^\infty (V\times ({\mathbb {R}}^N\setminus \{0\}))\) functions, \({{\tilde{B}}}(y,\eta )\), having the following properties

for some constants \(c_m,c_{m_1,m_2}>0\) and \(a>0\).

Now we state all the assumptions about \({\mathcal {B}}\), \(\varphi \), and g.

Assumption 4.2

(Properties of \({\mathcal {B}}\))

\({\mathcal {B}}1\). \({{\tilde{B}}}(y,\eta )\equiv 0\) outside a small conic neighborhood of \((y_0,\Theta _0)\); and

\({\mathcal {B}}2\). The amplitude of \({\mathcal {B}}\) satisfies

$$\begin{aligned} \begin{aligned}&{{\tilde{B}}}\in S^{\beta _0}({\mathcal {V}}\times {\mathbb {R}}^n);\ {{\tilde{B}}}-{{\tilde{B}}}_0\in S^{\beta _1}({\mathcal {V}}\times {\mathbb {R}}^n);\\&{{\tilde{B}}}_0(y,\lambda \eta )=\lambda ^{\beta _0}{{\tilde{B}}}_0(y,\eta );\ \beta _0>\beta _1; \end{aligned} \end{aligned}$$ (4.8)

for some \({{\tilde{B}}}_0\), \(\beta _0\), and \(\beta _1\), and for all \(y\in {\mathcal {V}}\), \(\lambda >0\).

Recall that \(\lfloor r\rfloor \), \(r\in {\mathbb {R}}\), denotes the largest integer not exceeding r. Similarly, \(\lceil r\rceil \) denotes the smallest integer greater than or equal to r. We also introduce:

Assumption 4.3

(Properties of the interpolation kernel \(\varphi \))

IK1. \(\varphi \in C_0^{\lceil \beta _0^+\rceil }({\mathbb {R}}^n)\), i.e., \(\varphi \) is compactly supported, and all of its derivatives up to order \(\lceil \beta _0^+\rceil \) are \(L^\infty \);

IK2. \(\varphi \) is exact up to order \(\lceil \beta _0\rceil \) for the sampling lattice determined by \(D_1:=U^TD\), i.e.,

$$\begin{aligned} \sum _{j\in {\mathbb {Z}}^n} {(D_1 j)}^m\varphi (u-{D_1}j)\equiv u^m,\ |m|\le \lceil \beta _0\rceil ,\ m\in {\mathbb {N}}_0^n,\ u\in {\mathbb {R}}^n, \end{aligned}$$ (4.10)

for all indicated m and u.
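The exactness condition (4.10) can be checked numerically for a given kernel. The following minimal sketch does this in one dimension with \(D_1=1\) and a tent kernel, both of which are illustrative assumptions; the helper names `moment_sum` and `max_exactness_error` are hypothetical.

```python
import math


def tent(t: float) -> float:
    """Linear B-spline (tent) kernel, supported on [-1, 1]. Illustrative choice."""
    return max(0.0, 1.0 - abs(t))


def moment_sum(kernel, u: float, m: int, radius: int = 2) -> float:
    """Left-hand side of the exactness condition in 1D with D_1 = 1:
    sum_j j^m * kernel(u - j), restricted to lattice points near u."""
    j0 = math.floor(u)
    return sum((j ** m) * kernel(u - j) for j in range(j0 - radius, j0 + radius + 1))


def max_exactness_error(kernel, m: int, samples: int = 101) -> float:
    """Largest deviation |sum_j j^m kernel(u - j) - u^m| over a grid of u in [-1, 1]."""
    grid = (k / 50.0 - 1.0 for k in range(samples))
    return max(abs(moment_sum(kernel, u, m) - u ** m) for u in grid)


# Errors for polynomial orders m = 0, 1, 2.
errors = [max_exactness_error(tent, m) for m in range(3)]
```

In this sketch the tent kernel reproduces constants and linear functions up to rounding but not quadratics, so it is exact up to order 1 only. Under (4.10) such a kernel would be admissible only when \(\lceil \beta _0\rceil \le 1\).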

Assumption IK2 with \(m=0\) implies that \(\varphi \) is normalized. By assumption, \(|\det D_1|=1\). Then

Assume that g is given by

for some \(P\in C^\infty ({\mathcal {V}})\) with \(\text {d}P(y)\not =0\) on \({\mathcal {V}}\). We need the smooth hypersurface determined by P:

Even if g is not in the range of \({\mathcal {R}}\), Assumptions 3.1 and Definition 3.4 imply that there exists a smooth surface \({\mathcal {S}}\subset {\mathcal {U}}\) such that \(\Gamma ={\mathcal {T}}_{\mathcal {S}}\) (see Sect. 5.1 for additional information). Let \({\hat{{\mathcal {S}}}}_{y_0}\) be the projection of \({\mathcal {S}}_{y_0}\) onto \({\mathcal {S}}\) along the first coordinate \(x_1\) (see Fig. 1).

Definition 4.4

Set

where \(\text {II}_{{\mathcal {S}}_{y_0}}\) is the matrix of the second fundamental form of \({\mathcal {S}}_{y_0}\) at \(x_0\) written in the coordinates \((x_1,x^{(2)})^T\), and similarly for \({\hat{{\mathcal {S}}}}_{y_0}\).

Assumption 4.5

(Properties of the data function g)

g1. \({{\tilde{\upsilon }}}\in S^{-(s_0+1)}({\mathcal {V}}\times {\mathbb {R}})\), and there exists a compact \(K\subset {\mathcal {V}}\) such that \({{\tilde{\upsilon }}}(y,\lambda )\equiv 0\) if \(y\in {\mathcal {V}}\setminus K\);

g2. \({{\tilde{\upsilon }}}\) satisfies

$$\begin{aligned} \begin{aligned}&{{\tilde{\upsilon }}}(y,\lambda )= {{\tilde{\upsilon }}}^+(y)\lambda _+^{-(s_0+1)} +{{\tilde{\upsilon }}}^-(y)\lambda _-^{-(s_0+1)}+{{\tilde{R}}}(y,\lambda ),\ \forall y\in {\mathcal {V}},|\lambda |\ge 1,\\&{{\tilde{\upsilon }}}^\pm \in C_0^{\infty }({\mathcal {V}}),\ {{\tilde{R}}}\in S^{-(s_1+1)}({\mathcal {V}}\times {\mathbb {R}}),\ 0< s_0<s_1,\ s_1\not \in {\mathbb {N}}; \end{aligned} \end{aligned}$$ (4.15)

for some \({{\tilde{\upsilon }}}^\pm \), \({{\tilde{R}}}\), \(s_0\), and \(s_1\);

g3. \(P\in C^\infty ({\mathcal {V}})\) is given by

$$\begin{aligned} P(y)=y_1-\psi (y^\perp ), \end{aligned}$$ (4.16)

where \(\psi \) is smooth, \(\psi (0)=0\), and \(\text {d}\psi (0)=0\);

g4. If \(s_0\in {\mathbb {N}}\), one has

$$\begin{aligned} {{\tilde{\upsilon }}}^+(y)=(-1)^{s_0+1}{{\tilde{\upsilon }}}^-(y)\quad \text {for any}\quad y\in \Gamma . \end{aligned}$$ (4.17)

g5. The matrix \(\Delta \text {II}_{{\mathcal {S}}}\) is negative definite.

We use superscripts \('\pm '\) to distinguish between two different functions as opposed to the positive and negative parts of a number. The latter are denoted by the subscripts \('\pm '\): \(\lambda _\pm :=\max (\pm \lambda ,0)\).

Assumptions g1, g2 imply that g is sufficiently regular. The assumption \(s_1\not \in {\mathbb {N}}\) is not restrictive. It is made to simplify some of the proofs. Assumption g3 is not restrictive either. An equivalent assumption is \(\text {d}P_1(y_0)=\Theta _0\) for some other smooth \(P_1\) (see also (B.5) and (B.9) below). Indeed, by shrinking \({\mathcal {V}}\), if necessary, we can find \(\psi (y^\perp )\) with the required properties such that the function \(u(y):=P_1(y)/P(y)\) (where P is as in (4.16)) satisfies \(u\in C^\infty ({\mathcal {V}})\) and \(c_1\le |u(y)|,|\text {d}u(y)|\le c_2\) for some \(c_{1,2}>0\) and all \(y\in {\mathcal {V}}\). Substituting \(P_1(y)=u(y)P(y)\) into (4.12) and changing variables \(\lambda _1=\lambda u(y)\), we see that the new amplitude \({{\tilde{\upsilon }}}_1(y,\lambda _1):={{\tilde{\upsilon }}}(y,\lambda _1/u(y))/u(y)\) satisfies g1, g2 (with the same \(s_0\) and \(s_1\)), and g4. See Remark B.1 about the meaning of assumption g4. Define

Assumption 4.6

(Joint properties of \({\mathcal {B}}\) and g)

C1. The constants \(\beta _0\) and \(s_0\), defined in (4.8) and (4.15), respectively, satisfy

$$\begin{aligned} \kappa :=\beta _0-s_0-(N/2)\ge 0; \end{aligned}$$ (4.19)

C2. The functions \({{\tilde{B}}}_0\) and \({{\tilde{\upsilon }}}^\pm \), defined in (4.8) and (4.15), respectively, satisfy

$$\begin{aligned} {{\tilde{B}}}_0(y,\text {d}_yP(y)){{\tilde{\upsilon }}}^+(y)=-e(2(\beta _0-s_0)){{\tilde{B}}}_0(y,-\text {d}_yP(y)){{\tilde{\upsilon }}}^-(y) \text { if }\kappa =0 \ \forall y\in \Gamma . \end{aligned}$$ (4.20)

The role of conditions (4.17) and (4.20) is that they prevent the appearance of logarithmic terms in g and \({\check{f}}={\mathcal {R}}^*{\mathcal {B}}g\) in a neighborhood of \(\Gamma \) and \({\mathcal {S}}\), respectively, see (C.6) and (12.7).

Set \(x_\epsilon :=x_0+\epsilon {\check{x}}\). Adopt the convention that the interior side of \({\mathcal {S}}\) is the one toward which the \(x_1\) axis points, and define

Let \({{\hat{\varphi }}}\) denote the classical Radon transform of \(\varphi \) (see (8.8)). Recall that \(D_1=U^TD\). Now we can state our main result.

Theorem 4.7

Suppose \({\mathcal {U}}\) and \({\mathcal {V}}\) are sufficiently small neighborhoods of \(x_0\) and \(y_0\), respectively, and

(1) \({\mathcal {S}}_{y_0}\) is tangent to \({\mathcal {S}}\) at \(x_0\);

(2)

(3) The pair \((x_0,y_0)\) is generic for the sampling matrix \(D_1\).

Then one has

and

See [9, Chapter I, Sect. 3.6] for the definition and properties of the distributions \((p\pm i0)^a\).

Remark 4.8

Since \({\check{x}}_1\) is a rescaled coordinate, (4.22) and (4.23) imply that in the original coordinates x the resolution of reconstruction is \(\sim \epsilon r(\partial _{y_1}\Phi _1)(x^{(2)}_0,y_0)\), where \(r>0\) is (loosely speaking) a measure of the spread of \({{\hat{\varphi }}}(\Theta _0,p)\) as a function of p. This shows that the resolution is not only location-dependent (via the \(x^{(2)}\) dependence of \(\Phi \)), but also direction-dependent (via the y dependence of \(\Phi \)).

Even though the formulas (4.22), (4.23) do not contain the sampling matrix, the dependence on \(D_1\) is still there. It is implicit, and manifests itself via the kernel \(\varphi \), which is required to be exact on the lattice determined by \(D_1\) (see Assumption 4.3(IK2)).

Remark 4.9

The limits in (4.22) and (4.23) are functions of the scalar argument \(h={\check{x}}_1/\partial _{y_1}\Phi _1\). Hence, it is more appropriate to view the DTBs as functions on \({\mathbb {R}}\) rather than on \({\mathbb {R}}^n\). With this convention, the expressions in (4.22) and (4.23) can be written as \(\text {DTB}({\check{x}}_1/\partial _{y_1}\Phi _1)\). The same convention applies to the CTB as well. This convention will be used in the rest of the paper.

Remark 4.10

Set \(x=x_0+(h/|\xi _0|)\xi _0\), i.e. h is the physical (not rescaled) signed distance from x to \(x_0\). Equation (4.23) implies that the derivative of the edge response function of the reconstruction (in \({\mathbb {R}}^2\), this derivative is known as the line spread function) is \(E^\prime (h):={\hat{\varphi }}\left( \Theta _0,h/(\epsilon \partial _{y_1} \Phi _1)\right) \). Thus, to compute, for example, the full width at half maximum (FWHM), we perform the following steps: (1) Find the maximum \(M:=\max _h E^\prime (h)\). Frequently, \(M={{\hat{\varphi }}}(\Theta _0,0)\); and (2) Find the length FWHM\(=|\{h\in {\mathbb {R}}:E^\prime (h)\ge M/2\}|\).
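The two steps above can be sketched numerically as follows. This is only an illustration under stated assumptions: the kernel is the separable tent kernel with \(D_1=I\), so that its classical Radon transform in the direction \(\Theta _0=\text {d}y_1\) is again a tent function, and the values of \(\epsilon \) and \(\partial _{y_1}\Phi _1\) are made up. The names `fwhm` and `line_spread` are hypothetical.

```python
def tent(p: float) -> float:
    """Assumed kernel projection: a tent profile, supported on [-1, 1]."""
    return max(0.0, 1.0 - abs(p))


def fwhm(profile, h_min: float, h_max: float, samples: int = 20001) -> float:
    """Approximate |{h : profile(h) >= M/2}| on a uniform grid, M = max of profile."""
    step = (h_max - h_min) / (samples - 1)
    values = [profile(h_min + k * step) for k in range(samples)]
    peak = max(values)                                    # step (1): the maximum M
    count = sum(1 for v in values if v >= 0.5 * peak)     # step (2): size of the set
    return count * step


eps = 0.01      # assumed sampling step
dphi = 2.0      # assumed value of the derivative of Phi_1 with respect to y_1


def line_spread(h: float) -> float:
    """E'(h) for the assumed kernel projection."""
    return tent(h / (eps * dphi))


width = fwhm(line_spread, -0.05, 0.05)
```

For a tent profile the half-maximum set is an interval of length \(\epsilon \partial _{y_1}\Phi _1\), so `width` should be close to 0.02 here, up to the grid resolution.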

The proof of the theorem is broken into several sections. The contact between \({\mathcal {T}}_{\mathcal {S}}\) and \({\mathcal {T}}_{x_0}\) in a neighborhood of \(y_0\) is investigated in Sect. 6. Properties of the continuous data function g and its interpolated version are investigated in Sect. 7. These two sections prepare the groundwork for the remainder of the proof in Sects. 8–11. A high-level overview of the remainder of the proof is at the end of Sect. 8.1.

5 Additional Results: Discussion

5.1 FIO Point of View

Introduce the function

Clearly, \(\phi \) is the phase function of the FIO \({\mathcal {R}}\) (cf. (2.3)):

with the canonical relation from \(T^*{\mathcal {U}}\) to \(T^*{\mathcal {V}}\):

In (5.2), \(G^{\mathcal {S}}\) is computed similarly to (2.2), but with \({{\tilde{y}}}\) and \({{\tilde{\Phi }}}\) replaced by y and \(\Phi \), respectively.

Set \(\lambda _0:=(1,0,\dots ,0)^T\). Clearly, \(\xi _0=|\text {d}\Psi |\text {d}_x \phi \not =0\) and \(\Theta _0=-|\text {d}\Psi |\text {d}_y \phi \not =0\). This follows easily from (3.5), (3.18), and (5.1). This also implies that the differentials \(\text {d}_{x,\lambda }\phi \) and \(\text {d}_{y,\lambda }\phi \) do not vanish anywhere in a conic neighborhood of \((x_0,y_0,\lambda _0)\) (see [33, Definition 2.1, Sect. VI.2]). As a reminder about our convention, the differentials are evaluated at \((x_0,y_0,\lambda _0)\). Clearly \(\partial ^2\phi /\partial x^{(1)}\partial \lambda =I_{n-N}\), so the differentials \(\text {d}_{x,y,\lambda }(\partial \phi /\partial \lambda _j)\), \(1\le j\le n-N\), are linearly independent, and \(\phi \) is nondegenerate [33, Definition 1.1, Sect. VIII.1].

Setting \(\lambda =\lambda _0\) gives

Here we have used that \(\Phi ^{(1)}_{x^{(2)}}=0\) and \(\Phi ^{(1)}_{y^{(2)}}=0\) (see (3.5) and (3.10)). In fact, the determinant in (5.4) is non-zero if and only if \(\det M\not =0\) (see (3.11)–(3.13)). Hence Assumption 3.1(G2) implies that C is a local canonical graph (see the discussion following Eq. (4.23) in [33, Sect. VI] and Definition 6.1 in [33, Sect. VIII]). In particular, there is a unique, smooth hypersurface \({\mathcal {S}}\subset {\mathcal {U}}\) such that \((N^*\Gamma \setminus {{\textbf{0}}})=C\circ (N^*{\mathcal {S}}\setminus {{\textbf{0}}})\). Here \(N^*\Gamma \) and \(N^*{\mathcal {S}}\) are the conormal bundles of \(\Gamma \) and \({\mathcal {S}}\), respectively, and \({{\textbf{0}}}\) is the zero section.

From (2.2) and (3.6), \(\det G^{{\mathcal {S}}}=1\). Clearly, \({\mathcal {R}}\) is an elliptic FIO in a neighborhood of \(((x_0,\xi _0),(y_0,\Theta _0))\in (T^*{\mathcal {U}}\setminus {{\textbf{0}}})\times (T^*{\mathcal {V}}\setminus {{\textbf{0}}})\) if \(b(x_0,y_0)\not =0\) and \({\mathcal {U}}\), \({\mathcal {V}}\) are sufficiently small.

The fact that the GRT and its adjoint can be viewed as FIOs has been known for a long time (see e.g., [11, 26]). The material in this section is well-known, and is presented for the convenience of the reader to make the paper self-contained.

Remark 5.1

We can now discuss condition (1) in Definition 3.4 in more detail. Here it is convenient to argue in the original \({{\tilde{y}}}\) coordinates. The preceding discussion shows that for every \(x\in {\mathcal {S}}\) there is \({{\tilde{y}}}(x)\in {{\tilde{\Gamma }}}\), which depends smoothly on x, such that \({\mathcal {S}}_{{{\tilde{y}}}(x)}\) is tangent to \({\mathcal {S}}\) at x. Consider the N-dimensional tangent space to \({{\tilde{{\mathcal {T}}}}}_{x}\) at \({{\tilde{y}}}(x)\). By (3.6) it is determined by (1) solving \(x^{(1)}={{\tilde{\Phi }}}^{(1)}(x^{(2)},({{\tilde{y}}}^{(1)},{{\tilde{y}}}^{(2)}))\) for \({{\tilde{y}}}^{(1)}\) in terms of x and \({{\tilde{y}}}^{(2)}\), and (2) computing the partial derivatives \(\partial {{\tilde{y}}}^{(1)}/\partial {{\tilde{y}}}_j^{(2)}\), \(1\le j\le N\), at \((x,{{\tilde{y}}}^{(2)}(x))\). Consider the \(n\times N\) matrix:

Condition (1) in Definition 3.4 is violated for an exceptional \(x\in {\mathcal {S}}\) if \((D^{-T}m) \Xi (x)=0\) for some nonzero \(m\in \mathbb Z^n\).
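A finite search cannot prove genericity, but it can detect low-order violations of the condition above. The following minimal sketch treats the case \(n=2\), \(N=1\), in which \(\Xi \) is a single column and \(D^{-T}m\) is read as a row vector multiplying it. The function names, the search bound, and the sample values of \(\Xi \) are hypothetical.

```python
import itertools
import math


def inverse_transpose_2x2(d):
    """Return D^{-T} for a 2x2 matrix given as nested lists or tuples."""
    (a, b), (c, e) = d
    det = a * e - b * c
    inv = [[e / det, -b / det], [-c / det, a / det]]
    return [[inv[0][0], inv[1][0]], [inv[0][1], inv[1][1]]]


def smallest_resonance(d, xi, bound: int = 10):
    """Minimum of |(D^{-T} m) . xi| over nonzero integer m with |m_i| <= bound,
    together with one minimizing m. A value of zero signals a violation."""
    dit = inverse_transpose_2x2(d)
    best_value, best_m = math.inf, None
    for m in itertools.product(range(-bound, bound + 1), repeat=2):
        if m == (0, 0):
            continue
        v0 = dit[0][0] * m[0] + dit[0][1] * m[1]
        v1 = dit[1][0] * m[0] + dit[1][1] * m[1]
        value = abs(v0 * xi[0] + v1 * xi[1])
        if value < best_value:
            best_value, best_m = value, m
    return best_value, best_m


identity = [[1.0, 0.0], [0.0, 1.0]]
rational_case = smallest_resonance(identity, (1.0, 0.5))             # e.g. m = (1, -2) resonates
irrational_case = smallest_resonance(identity, (1.0, math.sqrt(2.0)))  # no small resonance
```

With the identity sampling matrix the condition reduces to a rational dependence between the entries of \(\Xi \), which is why the first example is flagged and the second is not within the search bound.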

5.2 CTB and Its Relationship with DTB

When describing the leading singularity of a distribution at a point, the following definition (which is a slight modification of the one in [16]) is convenient.

Definition 5.2

[16] Given a distribution \(f\in {{\mathcal {D}}}'({\mathbb {R}}^n)\) and a point \(x_0\in {\mathbb {R}}^n\), suppose there exists a distribution \(f_0\in {\mathcal D}'({\mathbb {R}}^n)\) so that for some \(a\in {\mathbb {R}}\) the following equality holds

for any \(\omega \in C_0^{\infty }({\mathbb {R}}^n)\). Then we call \(f_0\) the leading order singularity of f at \(x_0\).

Definition 5.3

CTB is defined as the leading order singularity of the reconstruction from continuous data \({\mathcal {R}}^*{\mathcal {B}}g\) at \(x_0\).

Similarly to the DTB, the following theorem shows that the CTB can be viewed as a function of a scalar argument.

Theorem 5.4

Under the assumptions of Theorem 4.7, one has

where

and \(C_1,c_1\), and \(c_1^\pm \) are the same as in Theorem 4.7. Thus, the DTB is the convolution of the CTB with the scaled classical Radon transform of the interpolating kernel:

5.3 Discussion

Recall that \({\check{f}}(x):={\mathcal {R}}^*{\mathcal {B}}g(x)\) denotes the reconstruction from continuous data. Suppose \(\kappa =0\), i.e. \({\check{f}}\) has a jump across \({\mathcal {S}}\). The second term on the right in (4.23) equals zero for all \({\check{x}}_1>c\). Because \(\varphi \) is normalized, i.e., \(\int {{\hat{\varphi }}}(\Theta _0,p)dp=1\), the second term equals \(C_1c_1\) for all \({\check{x}}_1<-c\). Here \(c>0\) is sufficiently large, and we used that \(\partial _{y_1} \Phi _1>0\) (cf. (3.9)). By (12.7), the product \(C_1c_1\) is precisely the jump of \({\check{f}}(x)\) across \({\mathcal {S}}\) at \(x_0\): \(-C_1c_1={\check{f}}(x_0^{\text {int}})-\check{f}(x_0^{\text {ext}})\), see (10.2) and (4.21). Thus, the right-hand side of (4.23) is equal to \(\check{f}(x_0^{\text {int}})\) if \({\check{x}}_1>c\), and to \(\check{f}(x_0^{\text {ext}})\) if \({\check{x}}_1<-c\). This shows that (4.23) describes a smooth transition of the discrete reconstruction \({\check{f}}_\epsilon (x_\epsilon )\) from the value \(\check{f}(x_0^{\text {int}})\) on the interior side of \({\mathcal {S}}\) to the value \({\check{f}}(x_0^{\text {ext}})\) on the exterior side of \({\mathcal {S}}\). Loosely speaking, the transition happens over a region of size \(O(\epsilon )\):

Then the DTB is a “stretched” version of the abrupt jump of \({\check{f}}\) across \({\mathcal {S}}\) in the continuous case. This is most apparent from the last equations in (5.8), (5.9). See also Section 6 of [20] for a similar discussion in the setting of quasi-exact inversion of the GRT in \({\mathbb {R}}^3\).
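The "stretched jump" picture can be illustrated with a small numerical sketch. It assumes that, in the case \(\kappa =0\), the transition profile is the running integral of a normalized kernel projection, scaled to connect the exterior and interior values. This form is an assumption chosen to match the limiting values described above, not a restatement of (4.23), and the tent projection and all names are hypothetical.

```python
def tent(p: float) -> float:
    """Assumed normalized kernel projection, supported on [-1, 1]."""
    return max(0.0, 1.0 - abs(p))


def running_integral(kernel, h: float, lower: float = -1.0, samples: int = 2000) -> float:
    """Midpoint-rule approximation of the integral of kernel over [lower, h].
    The kernel is assumed to vanish to the left of `lower`."""
    if h <= lower:
        return 0.0
    step = (h - lower) / samples
    return step * sum(kernel(lower + (k + 0.5) * step) for k in range(samples))


def edge_response(h: float, f_ext: float, f_int: float, kernel=tent) -> float:
    """Assumed transition profile in the rescaled variable h."""
    return f_ext + (f_int - f_ext) * running_integral(kernel, h)


outside = edge_response(-2.0, 0.0, 1.0)   # well on the exterior side
middle = edge_response(0.0, 0.0, 1.0)     # on the surface itself
inside = edge_response(2.0, 0.0, 1.0)     # well on the interior side
```

Because the rescaled variable is the physical distance divided by a quantity of order \(\epsilon \), the whole transition between `outside` and `inside` occupies a layer of physical width \(O(\epsilon )\) in this model.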

6 Beginning of the Proof of Theorem 4.7. Tangency of \({\mathcal {T}}_{\mathcal {S}}\) and \({\mathcal {T}}_{x_0}\)

In this section we show that \({\mathcal {T}}_{\mathcal {S}}\) and \({\mathcal {T}}_{x_0}\) are tangent at \(y_0\), and investigate their properties near the point of tangency.

Lemma 6.1

Suppose the assumptions of Lemma 3.7 are satisfied. The submanifolds \({\mathcal {T}}_{\mathcal {S}}\) and \({\mathcal {T}}_{x_0}\) are tangent at \(y_0=0\), and \(\Theta _0=\text {d}_y(\Psi \circ \Phi )\) is conormal to both of them at \(y_0=0\).

Proof

Begin with \({\mathcal {T}}_{\mathcal {S}}\). Following the proof of the first assertion of Lemma 3.7, all we need to do is compute \(\partial _{y^\perp } y_1\). Viewing \(x^{(2)}\) and \(y_1\) as functions of \(y^\perp \) and differentiating the equation \((\Psi \circ \Phi )(x^{(2)},y)=0\) (cf. (3.15)) gives:

which implies \(\partial _{y^\perp } y_1=0\). Here we have used that (cf. (3.5), (3.9))

Therefore, in the selected y coordinates, the equation of the tangent space \(T_{y_0}{\mathcal {T}}_{\mathcal {S}}\) (viewed as a subspace of \({\mathbb {R}}^n\)) is \(y_1=0\). See the shaded ellipse on the left in Fig. 1. Since only the first component of \(\Theta _0\) is not zero (see the line following (3.18)), it follows that \(\Theta _0\) is conormal to \({\mathcal {T}}_{\mathcal {S}}\) at \(y_0\).

Consider next \({\mathcal {T}}_{x_0}\). Differentiating \(x_0^{{(1)}}=\Phi ^{(1)}(x^{(2)}_0,y)\), where \(y^{(1)}=Y_0^{(1)}(y^{(2)})\) (see (3.18)), and using (3.10) gives \(\partial y^{(1)}/\partial y^{(2)}=0\). Hence the equation of the tangent space \(T_{y_0}{\mathcal {T}}_{x_0}\) is \(y^{(1)}=0\), i.e. \(T_{y_0}{\mathcal {T}}_{x_0}\) is a subspace of \(T_{y_0}{\mathcal {T}}_{\mathcal {S}}\). \(\square \)

Next we look more closely at the contact between \({\mathcal {T}}_{x_0}\) and \({\mathcal {T}}_{\mathcal {S}}\).

Lemma 6.2

Suppose the assumptions of Lemma 3.7 are satisfied. Let \(y=Y_0(y^{(2)})\) be the equation of \({\mathcal {T}}_{x_0}\) defined in (3.18). Let \(y=Z(y^{(2)})\) be the equation of the projection of \({\mathcal {T}}_{x_0}\) onto \({\mathcal {T}}_{\mathcal {S}}\) along the first coordinate, i.e., \(Z(y^{(2)})\in {\mathcal {T}}_{\mathcal {S}}\) and \(Y_0(y^{(2)})-Z(y^{(2)})=(h(y^{(2)}),0,\dots ,0)^T\) for a scalar function \(h(y^{(2)})\). Then, with \(M_{22}\) as in (3.11), and \(\Theta _0\) as in (3.18), we have

Proof

We solve separately two sets of equations (recall that \(x_0=0\)):

Since \((x^{(2)},y)=(0,0)\) solves (6.4), we have to first order in \(y^{(2)}\)

The solution to the second system (i.e., related to \({\mathcal {T}}_{\mathcal {S}}\)) is denoted with a check. Because \({\mathcal {T}}_{x_0}\) and \({\mathcal {T}}_{\mathcal {S}}\) are tangent at \(y_0\), \(y={\check{y}}+O(|y^{(2)}|^2)\). Recall that we search not for the general solution \(y\in {\mathcal {T}}_{\mathcal {S}}\), but for the points \(y=Z(y^{(2)})\) obtained by projecting \({\mathcal {T}}_{x_0}\) onto \({\mathcal {T}}_{\mathcal {S}}\) along \(y_1\). Therefore, \({\check{y}}^{(2)}\equiv y^{(2)}\). By (3.10) and (6.5), \(y^{(1)}=O(|y^{(2)}|^2)\). By (6.7) and the assumption \(\det (\Psi \circ \Phi )_{x^{(2)}x^{(2)}}\not =0\), \(x^{(2)}=O(|y^{(2)}|)\).

Since \(\Phi ^{(2)}_y\equiv 0\), (6.5) implies \(\Phi _y y,\Phi _y {\check{y}}=O(|y^{(2)}|^2)\). Let \(\Delta x^{(2)}\) and \(\Delta y=\begin{pmatrix} \Delta y^{(1)}\\ 0\end{pmatrix}\) denote second order perturbations, i.e. \(y^{(1)}=\Delta y^{(1)}+O(|y^{(2)}|^3)\) (and analogously for \(x^{(2)}\) and \(\check{y}^{(1)}\)). Since \(y^{(2)}\) is an independent variable, its perturbation is not considered. Then

Only (6.6) was used to derive (6.9). Using (6.7) and that \(y^{(1)}=O(|y^{(2)}|^2)\), \(x^{(2)}=O(|y^{(2)}|)\), \((\Psi \circ \Phi )_y=(1,0,\dots ,0)\), \((\Psi \circ \Phi )_{x^{(2)}}=0\), and \(\Phi _y{\check{y}}=O(|y^{(2)}|^2)\) yields

Subtracting the two equations gives (recall that \(\xi _0=\text {d}\Psi \)):

Solving (6.7) for \({\check{x}}^{(2)}\) and substituting into (6.11) we get the formula for Q in (6.3). That Q is non-degenerate follows from (3.13). \(\square \)

Remark 6.3

In this remark we discuss the meaning of condition (2) in Definition 3.4 from two perspectives. First, consider the image domain perspective. An equation for \({\hat{{\mathcal {S}}}}_{y_0}\) is \(\Psi (x_1,\Phi ^\perp (x^{(2)},y_0))=0\), where \(x_1\) is viewed as a function of \(x^{(2)}\). Hence

Here we have used that \(\Psi _{x^\perp }=0\) implies \(\partial _{x^{(2)}}x_1=0\). By (3.5) and (3.8),

Using (3.5) and (3.8) again and then (4.14) gives

This shows that if Assumptions 3.1 hold, condition (2) is equivalent to the requirement that \(\Delta \text {II}_{{\mathcal {S}}}\) be either positive definite or negative definite.

To understand condition (2) from the data domain perspective, look at the surfaces \({\mathcal {T}}_{x_0}\) and \({\mathcal {T}}_{\mathcal {S}}\). Define similarly to (4.14):

where \({\hat{{\mathcal {T}}}}_{x_0}\) is the projection of \({\mathcal {T}}_{x_0}\) onto \({\mathcal {T}}_{\mathcal {S}}\) along the first coordinate \(y_1\), and \(\text {II}_{{\mathcal {T}}_{x_0}}\), \(\text {II}_{{\hat{{\mathcal {T}}}}_{x_0}}\) are the matrices of the second fundamental form of \({\mathcal {T}}_{x_0}\), \({\hat{{\mathcal {T}}}}_{x_0}\), respectively, at \(y_0\) written in the coordinates \((y_1,y^{(2)})^T\). By construction, \(y=Z(y^{(2)})\) is the equation of \({\hat{{\mathcal {T}}}}_{x_0}\). By Lemma 6.2, \(\Delta \text {II}_{{\mathcal {T}}}=-Q\). Hence, if Assumptions 3.1 hold, condition (2) in Definition 3.4 is equivalent to the requirement that \(\Delta \text {II}_{{\mathcal {T}}}\) be either positive definite or negative definite.

Let \(y=Y(y^{(2)},x_\epsilon )\) be the equation for \({\mathcal {T}}_{x_\epsilon }\) (see the proof of Lemma 3.7). This equation is obtained by solving \(\epsilon {\check{x}}^{(1)}=\Phi ^{(1)}(\epsilon {\check{x}}^{(2)},y)\) for \(y^{(1)}\) and setting \(y^{(2)}\equiv Y^{(2)}(y^{(2)},x_\epsilon )\). Suppose \(|{\check{x}}|=O(1)\) and \(|y^{(2)}|=O(\epsilon ^{1/2})\). The term \(\epsilon {\check{x}}\) is of a lower order than \(y^{(2)}\), so the equation for \({\mathcal {T}}_{x_0}\) in (6.5) is accurate on \({\mathcal {T}}_{x_\epsilon }\) to the order \(\epsilon ^{1/2}\). Due to \(\Phi ^{(1)}_{x^{(2)}}=0\), the updated version of (6.8) becomes

The terms \((1/2)\Phi ^{(1)}_{y^{(2)}y^{(2)}}y^{(2)}y^{(2)}\) in (6.8) and in (6.16) are the same. Also, \(\Delta y^{(1)}\) in (6.8) is the analogue of \(y^{(1)}\) in (6.16). Therefore, to order \(\epsilon \), introduction of the term \(\epsilon {\check{x}}\) requires only a linear correction compared with \(Y_0(y^{(2)})=Y(y^{(2)},x_0)\), and we have

Hence

7 On Some Properties of the Continuous Data g and Its Interpolated Version \(g_\epsilon \)

By [14, Proposition 25.1.3], g is a conormal distribution with respect to \(\Gamma (={\mathcal {T}}_{\mathcal {S}})\). The wave front set of g is contained in the conormal bundle of \(\Gamma \):

See also Sect. 18.2 and Definition 18.2.6 in [13] for a formal definition and in-depth discussion of conormal distributions. A discussion of closely related Lagrangian distributions is in Sect. 25.1 of [14].

In this paper we use two types of spaces of continuous functions. First, \(C_b^k({\mathbb {R}}^n)\), \(k\in {\mathbb {N}}_0\), is the Banach space of functions with bounded derivatives up to order k. The norm in \(C_b^k({\mathbb {R}}^n)\) is given by

The subscript ‘0’ in \(C_0^k\) means that we consider the subspace of compactly supported functions, \(C_0^k({\mathbb {R}}^n)\subset C_b^k({\mathbb {R}}^n)\).

The second type is the Hölder-Zygmund spaces \(C_*^r({\mathbb {R}}^n)\), \(r>0\). Pick any \(\mu _0\in C_0^{\infty }({\mathbb {R}}^n)\) such that \(\mu _0(\eta )=1\) for \(|\eta |\le 1\), \(\mu _0(\eta )=0\) for \(|\eta |\ge 2\), and define \(\mu _j(\eta ):=\mu _0(2^{-j}\eta )-\mu _0(2^{-j+1}\eta )\), \(j\in \mathbb N\) [1, Sect. 5.4]. Then

where \({{\tilde{h}}}={\mathcal {F}}h\). If \(r\not \in {\mathbb {Z}}\), i.e. \(r=k+\gamma \), \(k\in {\mathbb {N}}_0\), \(0<\gamma <1\), then \(C_*^r({\mathbb {R}}^n)\) consists of \(C_b^k({\mathbb {R}}^n)\) functions, which have Hölder continuous k-th order derivatives (see [30, Definition 2.4 and Example 2.3]):

As is easily seen, \(C_b^k\subset C_*^k\) if \(k\in {\mathbb {N}}\). The Hölder-Zygmund spaces are a particular case of the Besov spaces: \(C_*^r({\mathbb {R}}^n)=B^r_{p,q}({\mathbb {R}}^n)\), where \(p,q=\infty \) [1, item 2 in Remark 6.4].
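The dyadic functions \(\mu _j\) introduced above telescope: \(\mu _0(\eta )+\sum _{j=1}^J\mu _j(\eta )=\mu _0(2^{-J}\eta )\), which equals 1 for \(|\eta |\le 2^J\). The following minimal sketch checks this in one dimension for one concrete choice of \(\mu _0\), built from the standard \(e^{-1/t}\) smooth step. That construction and the function names are assumptions made for illustration.

```python
import math


def smooth_step(t: float) -> float:
    """C-infinity step: 0 for t <= 0, 1 for t >= 1."""
    def s(x: float) -> float:
        return math.exp(-1.0 / x) if x > 0.0 else 0.0
    return s(t) / (s(t) + s(1.0 - t))


def mu0(eta: float) -> float:
    """Cutoff equal to 1 for |eta| <= 1 and 0 for |eta| >= 2."""
    return smooth_step(2.0 - abs(eta))


def mu(j: int, eta: float) -> float:
    """Dyadic piece mu_j(eta) = mu_0(2^{-j} eta) - mu_0(2^{-j+1} eta), j >= 1."""
    if j == 0:
        return mu0(eta)
    return mu0(eta / 2.0 ** j) - mu0(eta / 2.0 ** (j - 1))


def partial_sum(eta: float, levels: int) -> float:
    """mu_0(eta) + mu_1(eta) + ... + mu_levels(eta)."""
    return sum(mu(j, eta) for j in range(levels + 1))


# With 8 levels the partial sum should equal 1 for every |eta| <= 2**8.
checks = [partial_sum(eta, 8) for eta in (0.3, 1.5, 7.0, 100.0)]
```

Each \(\mu _j\) with \(j\ge 1\) vanishes outside the shell \(2^{j-1}\le |\eta |\le 2^{j+1}\), so at any given frequency only a few terms of the sum are nonzero.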

The following two lemmas are proven in Appendix C.

Lemma 7.1

Suppose g satisfies Assumption 4.5. There exist \(c_m>0\) such that

Additionally,

If the leading term in \({{\tilde{\upsilon }}}\) is missing, i.e., \({{\tilde{\upsilon }}}^\pm \equiv 0\), then \(g\in C_*^{s_1}({\mathcal {V}})\), and (7.5) holds with \(s_0\) replaced by \(s_1\).

Lemma 7.2

Suppose \({\mathcal {B}}\) and g satisfy Assumptions 4.2, 4.5, and 4.6. There exists \(c_\beta >0\) such that

If \(\kappa =0\), we additionally have with some \(c>0\)

Define

The following two lemmas are proven in Appendix C.

Lemma 7.3

Suppose \(\varphi \) and g satisfy Assumptions 4.3 and 4.5, respectively. There exists \(\varkappa _1>0\) such that

for some \(c>0\).

If the top order term in \({{\tilde{\upsilon }}}\) is missing, i.e., \({{\tilde{\upsilon }}}^\pm \equiv 0\), then (7.10) holds with \(s_0\) replaced by \(s_1\) as long as \( l\le \lceil \beta _0^+ \rceil \).

Lemma 7.4

Suppose \(\varphi \) and g satisfy Assumptions 4.3 and 4.5, respectively. Let \(\varkappa _1\) be the same as in Lemma 7.3. One has

for some \(c>0\).

If the top order term in \({{\tilde{\upsilon }}}\) is missing, i.e. \({{\tilde{\upsilon }}}^\pm \equiv 0\), then (7.11), (7.12) hold with \(s_0\) replaced by \(s_1\) as long as \(l\le \lceil \beta _0^+\rceil \).

8 Computing the First Part of the Leading Term

8.1 Splitting the Reconstruction into Two Parts: \(f_\epsilon =f_\epsilon ^{(1)}+f_\epsilon ^{(2)}\)

By (4.5),

Pick some large \(A\gg 1\) and introduce two sets

Let \(f_\epsilon ^{(l)}(x_\epsilon )\) denote the reconstruction obtained using (8.1), where the y integration is restricted to the part of \({\mathcal {T}}_{x_\epsilon }\) corresponding to \(\Omega _l\), \(l=1,2\), respectively. The main ideas behind the split are that (1) The contribution of \(f_\epsilon ^{(2)}(x_\epsilon )\) to the DTB goes to zero when \(A\rightarrow \infty \); (2) For each fixed \(A>0\), the fact that \(|y^{(2)}|=O(\epsilon ^{1/2})\) greatly simplifies estimation of \(f_\epsilon ^{(1)}(x_\epsilon )\); and (3) The double limit \(\lim _{A\rightarrow \infty }\lim _{\epsilon \rightarrow 0}f_\epsilon ^{(1)}(x_\epsilon )\) exists and gives the DTB.

The remainder of the proof consists of four parts: (1) Show that the leading singular part of \(f_\epsilon ^{(1)}(x_\epsilon )\) (i.e., when only the top order terms are retained in \({\mathcal {B}}\) and g) gives the main contribution to the DTB. This is done in the rest of this section; (2) Show that the remaining, less singular part of \(f_\epsilon ^{(1)}(x_\epsilon )\) does not contribute to the DTB (Sect. 9); (3) Show that the contribution of \(f_\epsilon ^{(2)}(x_\epsilon )\) to the DTB can be made as small as one likes by selecting \(A>0\) sufficiently large (Sect. 10); and (4) Compute the DTB (Sect. 11).

8.2 Estimation of the Leading Term of \(f_\epsilon ^{(1)}(x_\epsilon )\)

Throughout this section we assume that \({\mathcal {B}}\) in (4.3) satisfies \({{\tilde{B}}}(y,\eta )\equiv {{\tilde{B}}}_0(y,\eta )\), i.e., we assume that the symbol of \({\mathcal {B}}\) contains only the top order term. Let \({\mathcal {B}}_0\) denote the \(\Psi \)DO of the form (4.3), where \(\tilde{B}(y,\eta )\equiv {{\tilde{B}}}_0(y_0,\eta )\). Likewise, we assume that the symbol of g coincides with its top order term (i.e., \(\tilde{R}\equiv 0\) in (4.15)). It then follows from (C.1) to (C.7) (see also (11.3)) that g is given by

where \(a^\pm (y)\) are linear combinations of \({{\tilde{\upsilon }}}^\pm (y)\). Substitute (8.3) into (8.1)

Introduce the operator

where g is sufficiently smooth and decays sufficiently fast, and \({\mathcal {F}}_{1d}\) denotes the 1D Fourier transform. Introduce an auxiliary function:

where \({{\tilde{\varphi }}}={\mathcal {F}}\varphi \), \({{\tilde{{\mathcal {A}}}}}={\mathcal {F}}_{1d}{\mathcal {A}}\),

\({\mathcal {B}}_{1d}\) acts with respect to the affine variable, and the hat denotes the classical Radon transform that integrates over hyperplanes:

Neither \({{\tilde{b}}}(\lambda )\) nor \({{\tilde{{\mathcal {A}}}}}(\lambda )\) is smooth at \(\lambda =0\), so the product \(\tilde{b}(\lambda ){{\tilde{{\mathcal {A}}}}}(\lambda )\) needs to be computed carefully; see the discussion between (11.4) and (11.6).

The main result in this section is the following lemma.

Lemma 8.1

Suppose the symbols of \({\mathcal {B}}\) and g contain only the top order terms as described above. Under the assumptions of Theorem 4.7 one has

8.3 Proof of Lemma 8.1

We begin by investigating the sum in (8.4). The key result is the following lemma (see Appendix D for the proof).

Lemma 8.2

Suppose \(y,z\in {\mathcal {V}}\) satisfy

for some \(c>0\), and \(g_\epsilon \) is obtained by interpolating g in (8.3) (cf. (4.6)). One has

where the series on the right converges absolutely, and the big-O term is uniform with respect to z, y satisfying (8.10). Moreover, the left-hand side of (8.11) remains bounded as \(\epsilon \rightarrow 0\) uniformly with respect to z, y satisfying (8.10).

The next step is to use (8.11) in (8.4):

Here \(Z(y^{(2)})\) is obtained by projecting \(Y_0(y^{(2)})\) onto \(\Gamma \) along \(y_1\), see Lemma 6.2. From (3.17) and \(Y^{(2)}(y^{(2)},x)\equiv y^{(2)}\) we have \(G^{{\mathcal {T}}}=I_N\) and \(\det G^{{\mathcal {T}}}=1\), where \(G^{{\mathcal {T}}}\) is the Gram matrix (4.2) evaluated at \(y^{(2)}=0,x=x_0\). This property was used to obtain (8.12). By (6.17) and Lemma 6.2,

so the conditions in (8.10) hold, and (8.11) applies.

In view of (8.12), define

Recall that \(D_1=U^TD\) (cf. Assumption 4.3(IK2)). As is easily checked,

where

Using that \({\check{x}}=O(1)\) and \(|y^{(2)}|=O(\epsilon ^{1/2})\), (6.17) and (6.18) imply

By Lemma 6.2,

Therefore,

where \(y^{(2)}=\epsilon ^{1/2}{\check{y}}^{(2)}\).

The following result is proven in Appendix E.

Lemma 8.3

Pick any c, \(0<c<\infty \). One has

and the two big-O terms are uniform in v and p confined to the indicated sets.

Introduce an auxiliary function

By Lemma 8.3, the integrand in (8.21) can be written in the form:

where \(a=\min (1-\{\beta _0\},s_0,1)>0\).

Consider now \(u_\epsilon \). The assumption \(|y^{(2)}|=O(\epsilon ^{1/2})\) implies

where we have used that \(Y_0(0)=0\). Here \(Y_0^\prime (0)=\partial _{y^{(2)}}Y_0(y^{(2)})|_{y^{(2)}=0}\) and \(Y_0^{\prime \prime }(0)=\partial _{y^{(2)}}^2Y_0(y^{(2)})|_{y^{(2)}=0}\). For completeness, note that \(Y_0^\prime (0)=(0,I_N)^T\) (see (3.17)), but this is not used in what follows.

From (8.15), \(\phi _1(q;u+m)=\phi _1(q;u)\) for any \(m\in {\mathbb {Z}}^n\). Using Lemma 8.3 again and (8.25), (8.24) becomes

Thus, we need to compute the limit of the following integral as \(\epsilon \rightarrow 0\):

Represent \(\phi _1\) in terms of its Fourier series:

The N columns of \(Y_0^\prime (0)\) form a basis for the tangent space to \({\mathcal {T}}_{x_0}\) at \(y_0=0\) written in the new y coordinates. The columns of \(UY_0^\prime (0)\) span the tangent space to \(\tilde{\mathcal {T}}_{x_0}\) at \({{\tilde{y}}}_0\) written in the original \({{\tilde{y}}}\) coordinates. By assumption, \({{\tilde{{\mathcal {T}}}}}_{x_0}\) is generic at \({{\tilde{y}}}_0\) with respect to D (cf. Definition 3.4), so there is no \(m\in {\mathbb {Z}}^n\) such that \(m\not =0\) and \(m D_1^{-1}Y_0^\prime (0)=0\). The same argument as in (5.8)–(5.14) in [20] implies

Here is an outline of the argument. Break up the integral with respect to \({\check{y}}^{(2)}\) in (8.27) into a sum of integrals over a finite, pairwise disjoint covering of the domain of integration by subdomains \(B_k\) with diameter \(0<\delta \ll 1\). Then approximate each of these integrals by assuming that \({\check{y}}^{(2)}\) is constant everywhere except in the first term of u outside of brackets in (8.27). This is done by choosing \({\check{y}}^{(2)}_k\in B_k\) in an arbitrary fashion:

Thus, the variable of integration \({\check{y}}^{(2)}\) is present only in the rapidly changing term with \(\epsilon ^{1/2}\) in the denominator. The magnitude of the error term \(O(\delta ^{a})\) follows from Lemma 8.3. Represent each \(\phi _1\) in (8.30) in terms of its Fourier series (8.28). Since there is no \(m\in {\mathbb {Z}}^n\) such that \(m\not =0\) and \(m D_1^{-1}Y_0'(0)=0\), we obtain

The first term in \(u_k\) (cf. (8.30)) is the only one that contains \({\check{y}}^{(2)}\) and changes rapidly as \(\epsilon \rightarrow 0\). In turn, (8.31) implies

Since \(\delta >0\) can be made arbitrarily small, this finishes the proof of (8.29).
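The averaging mechanism behind this outline can be illustrated numerically on a toy problem. The sketch below is not the computation in (8.27)–(8.32); it assumes \(n=2\), \(N=1\), a hypothetical \({\mathbb {Z}}^2\)-periodic function `phi` standing in for \(\phi _1\), a hypothetical vector `direction` standing in for \(D_1^{-1}Y_0^\prime (0)\), and `h` standing in for \(\epsilon ^{1/2}\). When no nonzero integer vector m is orthogonal to `direction`, the average of `phi` along the rapidly winding line approaches the mean of `phi` over the unit square; when such an m exists, it need not.

```python
import math


def line_average(phi, direction, h, samples=200_000):
    """Midpoint-rule average of phi(direction * t / h) over t in [0, 1]."""
    total = 0.0
    for j in range(samples):
        t = (j + 0.5) / samples
        total += phi(direction[0] * t / h, direction[1] * t / h)
    return total / samples


def phi(u1, u2):
    # Hypothetical Z^2-periodic test function. Its mean over the unit square
    # is 1; its only nonzero Fourier modes are m = (1, -1) and m = (-1, 1).
    return 1.0 + math.cos(2.0 * math.pi * (u1 - u2))


h = 0.01  # plays the role of epsilon^(1/2)

# Generic direction: m . direction = 1 - sqrt(2) != 0 for m = (1, -1).
generic = (1.0, math.sqrt(2.0))
# Resonant direction: m . direction = 0 for m = (1, -1).
resonant = (1.0, 1.0)

avg_generic = line_average(phi, generic, h)
avg_resonant = line_average(phi, resonant, h)

print("generic direction :", avg_generic)   # close to the torus mean 1
print("resonant direction:", avg_resonant)  # stays at 2, not the mean
```

In the generic case the oscillating Fourier mode integrates to a quantity of size O(h), which is the toy analogue of the vanishing of the terms with \(m\not =0\). In the resonant case the mode is constant along the line and survives, which is why the genericity assumption on \({{\tilde{{\mathcal {T}}}}}_{x_0}\) is needed.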

Combining (8.17), (8.21), (8.23), (8.26), (8.27), (8.29) gives

Since \(\partial Y_1/\partial x^\perp =0\) (cf. (11.8)) and \(\Theta _0=\text {d}y_1\), from the definition of \(v_0\) in (8.19)

9 Estimation of the Remaining Parts of \(f_\epsilon ^{(1)}(x_\epsilon )\)

In this section we prove that the lower order terms of \(f_\epsilon ^{(1)}(x_\epsilon )\) do not contribute to the DTB. Throughout the section, c denotes various positive constants that may have different values in different places. From (4.16), (6.3), (6.17), and (8.2) it follows that there exists \(c_1>0\) such that

Let \(\beta \) and s denote the remaining highest order exponents in (4.8) and (4.15), respectively. By construction, \(\beta _0-s_0>\beta -s\). This means that either \(s=s_0\) if the first term in \({\mathcal {B}}\) is missing (i.e., \(\beta =\beta _1<\beta _0\)), or \(\beta =\beta _0\) if the first term in \({{\tilde{\upsilon }}}\) is missing (i.e., \(s=s_1>s_0\)).

Suppose initially that \(\beta > \lfloor s^-\rfloor \). Set \(k:=\lceil \beta ^+\rceil \), \(\nu :=k-\beta \). Thus, \(0<\nu \le 1\), \(\nu =1\) if \(\beta \in {\mathbb {N}}_0\), and \(s_0\le s<k\le \lceil \beta _0^+\rceil \). Clearly,

for some \({\mathcal {W}}_1\in S^{-\nu }({\mathcal {V}}\times {\mathbb {R}}^n)\) and \({\mathcal {W}}_2\in S^{-\infty }({\mathcal {V}}\times {\mathbb {R}}^n)\). Here we use a cut-off near \(\eta =0\) and the fact that the amplitude of \({\mathcal {B}}\) is supported in a small conic neighborhood of \((y_0,\Theta _0=\text {d}y_1)\). Then

where K(y, w) is the Schwartz kernel of \({\mathcal {W}}_1\), and O(1) represents \({\mathcal {W}}_2 g_\epsilon (y)\). The latter statement follows because \(g_\epsilon (y)\) is compactly supported and uniformly bounded as \(\epsilon \rightarrow 0\) for all \(y\in {\mathcal {V}}\) (cf. (4.6) and (7.6)). By the estimate [1, Eq. (5.13)],

Combining (9.2), (7.9), the two top cases in (7.10) with \(l=k\) and \(s_0\) replaced by s, (9.3), and (9.4) with \(l=0\), gives

where \(\varkappa _1\) is the same as in Lemma 7.3. Consider \(J_1\):

where we denoted \(P:=P(y)\) and changed variables \(w_1\rightarrow p=w_1-\psi (w^\perp )\). There exists \(0<c'<1\) so that

By construction, \(\partial _{y^\perp }\psi (0)=0\). Assume \({\mathcal {V}}\) is sufficiently small, so that \(|\psi (y^\perp )-\psi (w^\perp )|\le c''|y^\perp -w^\perp |\), \(y,w\in {\mathcal {V}}\), for some \(0<c''<1\). Then any \(c'\) such that \(0<c'<1-c''\) works. This implies

Here we have used that \(P=O(\epsilon )\).

The term \(J_2\) can be estimated analogously, and we get an estimate similar to (9.8), where the bound is \(O(\epsilon ^{s-\beta })\) in all three cases.

Suppose \(\beta >s\). By (9.5), \(({\mathcal {B}}g_\epsilon )(y)=O(\epsilon ^{s-\beta })\). Estimate the integral in (8.4):

In a similar fashion,

Since \(\beta _0-s_0\ge N/2\ge 1/2\), \(\epsilon ^\kappa f_\epsilon ^{(1)}(x_\epsilon )\rightarrow 0\) in all three cases.

Suppose now \(0<\beta \le \lfloor s^- \rfloor \). Similarly to (9.2), \({\mathcal {B}}={\mathcal {W}}_1 \partial _{y_1}^k+{\mathcal {W}}_2\), where \(k=\lceil \beta \rceil \ge 1\), \(\nu =k-\beta \ge 0\), \(s>k\), \({\mathcal {W}}_1\in S^{-\nu }({\mathcal {V}}\times {\mathbb {R}}^n)\), and \({\mathcal {W}}_2\in S^{-\infty }({\mathcal {V}}\times {\mathbb {R}}^n)\). The kernel of \({\mathcal {W}}_1\) is an \(L^1\) function (see e.g. Theorem 5.15 in [1]) and \(\sup _{y\in {\mathcal {V}}}|\Delta g_\epsilon ^{(k)}(y)|=O(\epsilon ^{s-k})\) (cf. (7.12) with \(s_0\) replaced by s). This implies that \(\sup _{y\in {\mathcal {V}}}|({\mathcal {B}}g_\epsilon )(y)-{\mathcal {B}}g(y)|=O(\epsilon ^{s-k})\). From Lemma 7.1, \({\mathcal {B}}g\in C_*^{s-\beta }({\mathcal {V}})\), and \(s>\beta \). Thus, \(({\mathcal {B}}g_\epsilon )(y)=O(1)\), and the desired result follows similarly to the case \(\beta <s\) in (9.10). The case \(\beta \le 0\) is proven using the same argument with \(l=0\) in (7.12) and without splitting \({\mathcal {B}}\) into two parts.
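The bookkeeping of the two splittings \({\mathcal {B}}={\mathcal {W}}_1 \partial _{y_1}^k+{\mathcal {W}}_2\) used in this section can be checked on sample values. The sketch below assumes that \(\lceil \beta ^+\rceil \) means \(\lfloor \beta \rfloor +1\), which is consistent with the stated properties \(0<\nu \le 1\) and \(\nu =1\) for integer \(\beta \); the function names are hypothetical and serve only as an illustration.

```python
import math


def split_order_plus(beta):
    """Case beta > floor(s^-): k = ceil(beta^+), nu = k - beta.

    Assumption: ceil(beta^+) is interpreted as floor(beta) + 1.
    """
    k = math.floor(beta) + 1
    return k, k - beta


def split_order(beta):
    """Case 0 < beta <= floor(s^-): k = ceil(beta), nu = k - beta."""
    k = math.ceil(beta)
    return k, k - beta


# Non-integer beta: both conventions give the same k and 0 < nu < 1.
print(split_order_plus(1.5))  # (2, 0.5)
print(split_order(1.5))       # (2, 0.5)

# Integer beta: the "+" convention moves to the next integer, so nu = 1,
# while the plain ceiling gives nu = 0.
print(split_order_plus(2.0))  # (3, 1.0)
print(split_order(2.0))       # (2, 0.0)
```

In both cases \({\mathcal {W}}_1\) has order \(-\nu \), so the order \(\beta \) of \({\mathcal {B}}\) is recovered as \(k-\nu \).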

10 Estimation of \(f_\epsilon ^{(2)}(x_\epsilon )\)

10.1 Statement of Results

In this section we prove the following two lemmas.

Lemma 10.1

Under the assumptions of Theorem 4.7 one has

Lemma 10.2

Suppose \(\kappa =0\). Under the assumptions of Theorem 4.7 one has

Recall that \(x_0^{\text {int}}\) is defined in (4.21). Lemma 10.1 is proven by considering the last remaining term \(f_\epsilon ^{(2)}(x_\epsilon )\):

where both \({\mathcal {B}}\) and g are given by their full expressions. The continuous counterpart of (10.3) is

10.2 Proof of Lemma 10.1

The following lemma is proven in Appendix F.

Lemma 10.3

Suppose \({\mathcal {B}}\), g, and \(\varphi \) satisfy Assumptions 4.2, 4.3, 4.5, and 4.6. There exist \(c,\varkappa _2>0\) such that for all \(\epsilon >0\) sufficiently small one has

whenever \(|P(y)|>\varkappa _2\epsilon \).

Return now to (10.3). Pick any \(y\in {\mathcal {T}}_{x_\epsilon }\). Recall that \(y=Y(y^{(2)},x)\) is obtained by solving \(x^{(1)}=\Phi ^{(1)}(x^{(2)},y)\) for \(y^{(1)}\), and that \(y^{(2)}\equiv Y^{{(2)}}(y^{(2)},x)\) (see the proof of Lemma 3.7). Hence \(|Y(y^{(2)},x_\epsilon )-Y_0(y^{(2)})|=O(\epsilon )\). (Strictly speaking, we cannot invoke (6.17) here, because (6.17) assumes \(|y^{(2)}|=O(\epsilon ^{1/2})\).) Therefore,

Recall that \(Z(y^{(2)})\) is the projection of \(Y_0(y^{(2)})\) onto \(\Gamma \), cf. Lemma 6.2. Using (6.3), (8.2), and that Q is negative definite, by shrinking \({\mathcal {V}}\), if necessary, and taking \(A\gg 1\) large enough, we can make sure that (a) \(P(y)\ge c|y^{(2)}|^2\) for some \(c>0\) and (b) inequality (10.5) applies (i.e. \(P(y)>\varkappa _2\epsilon \)) if \(y\in {\mathcal {T}}_{x_\epsilon }\) and \(y^{(2)}\in \Omega _2\) for all \(\epsilon >0\) small enough.

Suppose first that \(\kappa >0\). Using (10.3), (10.5), and (7.7) gives an estimate

If \(\kappa =0\), we get from (10.4), (10.5), and (7.8)

As \(A\gg 1\) can be arbitrarily large, combining (10.7) and (10.8) with (8.9), (8.35) proves (10.1). Here we use that the integral on the right in (10.1) is absolutely convergent (see Sect. 11).

10.3 Proof of Lemma 10.2

Recall that \(\kappa =0\). By (6.3) and (10.6), for any \(c_1>0\) we can find \(A_0\gg 1\) sufficiently large so that \(P(y)>c|y^{(2)}|^2\) for all \(A\ge A_0\), \(\epsilon >0\) sufficiently small, \(|{\check{x}}|\le c_1\), and \(y\in {\mathcal {T}}_{x_\epsilon }\) as long as \(y^{(2)}\in \Omega _2\). It follows from (7.8) that the integral in (10.4) admits a uniform (i.e., independent of \(\epsilon >0\) sufficiently small, \(A\gg 1\) sufficiently large, and \(\check{x}\) confined to a bounded set) integrable bound:

In (10.9), the constant c in the exponent is the same as the one in (7.8). Therefore, we can compute the limit of \(f^{(2)}(x_\epsilon )\) as \(\epsilon \rightarrow 0\) by taking the pointwise limit of the integrand in (10.4). This limit is independent of \(A\gg 1\). Shrinking \({\mathcal {V}}\) if necessary, by (6.3) we can ensure that \(P(y)>0\) for any \(y\in {\mathcal {T}}_{x_0}\), \(y\not =0\). Hence

Thus, the limit is independent of \({\check{x}}\).

A slightly more general argument holds as well. Let \(x=(x_1,0,\dots ,0)^T\in {\mathcal {U}}\) be a point with \(x_1>0\) sufficiently small, and let \(y\in {\mathcal {T}}_x\) be arbitrary. It follows from (6.3) and \(\partial Y_1/\partial x_1>0\) (see (11.8)) that \(P(Y(y^{(2)},x))\ge c(x_1+|y^{(2)}|^2)\) for some \(c>0\). This is easy to understand geometrically. If \(x_1>0\), i.e. x is on the interior side of \({\mathcal {S}}\), no curve \({\mathcal {S}}_y\), \(y\in {\mathcal {V}}\), is tangent to \({\mathcal {S}}\). Hence the curve \({\mathcal {T}}_x\) does not intersect \(\Gamma ={\mathcal {T}}_{\mathcal {S}}\), and \(P(Y(y^{(2)},x))\) is bounded away from zero. For any such x, we still have the same lower bound \(P(Y(y^{(2)},x))\ge c|y^{(2)}|^2\). In view of (4.21) and (10.9), we can use dominated convergence to conclude

11 Computation of the DTB: End of the Proof of Theorem 4.7

In this section we evaluate the right side of (10.1) and show that it equals (4.22) if \(\kappa >0\) and (4.23) if \(\kappa =0\).

The right side of (10.1) simplifies to the expression

where \(|S^{N -1}|\) is the area of the unit sphere in \({\mathbb {R}}^N\). Set (see (C.3))

As is shown in (C.1)–(C.7), the leading singular term of g (cf. (4.15)):

is indeed of the form (8.3) (where \(a^\pm (y)\) are linear combinations of \({{\tilde{\upsilon }}}^\pm (y)\)). Hence \({{\tilde{{\mathcal {A}}}}}(\lambda )={{\tilde{\upsilon }}}^+(y)\lambda _+^{-(s_0+1)}+{{\tilde{\upsilon }}}^-(y)\lambda _-^{-(s_0+1)}\), and (8.6), (11.2) yield

The function J(h) is identical to the one introduced in [19, Eq. (4.6)] if we replace n in the latter with \(N+1\). See [19, Sect. 4.5] for additional information about this function. In particular, \(\Upsilon (p)=O(|p|^{-(\beta _0-s_0)})\), \(p\rightarrow \infty \) (this follows from (8.6)), hence the integral in (11.2) is absolutely convergent if \(\kappa >0\). Also, \(\mu (\lambda )\) is the product of three distributions \({{\tilde{b}}}(\lambda ){{\tilde{{\mathcal {A}}}}}(\lambda )(\lambda -i0)^{-N/2}\), which is well-defined as a locally integrable function if \(\kappa >0\).
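The role of \(\kappa \) in the last statement can be seen from a homogeneity count. This is only a sketch, under two assumptions that are not restated in this section: that \({{\tilde{b}}}(\lambda )\) is positively homogeneous of degree \(\beta _0\), and that \(\kappa =\beta _0-s_0-N/2\). Since \({{\tilde{{\mathcal {A}}}}}(\lambda )\) is homogeneous of degree \(-(s_0+1)\) and \((\lambda -i0)^{-N/2}\) is homogeneous of degree \(-N/2\), away from \(\lambda =0\) the product is homogeneous of degree

\[ \beta _0-(s_0+1)-\frac{N}{2}=\kappa -1. \]

A nonzero function of one variable that is homogeneous of degree \(\kappa -1\) is integrable near \(\lambda =0\) if and only if \(\kappa -1>-1\), i.e. \(\kappa >0\). At \(\kappa =0\) the degree is exactly \(-1\), the borderline case in which the product has to be defined by other means.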

Combine with the factor \(w(x_0,y_0)\) in (10.1) and the factor in front of the integral in (11.1) and compute the inverse Fourier transform (cf. (C.3))

If \(\kappa =0\), a more careful analysis of J(h) is required (see Sect. 4.6 of [19]). Similarly to (C.16), (C.17), condition (4.20) implies that \(\Upsilon (p)\equiv 0\), \(p>c\), for some \(c>0\), hence the integral in (11.2) is still absolutely convergent. Straightforward multiplication of the distributions to obtain \(\mu (\lambda )\) no longer works, because \(\mu (\lambda )\) is not a locally integrable function if \(\kappa =0\). Fortunately, in this case \(\mu (\lambda )\) is computed in [19, Eqs. (4.45)–(4.47)]. Observe that condition (4.20) in this paper is equivalent to [19, condition (4.6)]. At first glance the two conditions differ by a sign, but \(s_0\) in [19] corresponds to \(s_0+1\) here, which eliminates the discrepancy. Then \(\mu (\lambda )=\tilde{B}_0(y_0,\Theta _0){{\tilde{\upsilon }}}^+(y_0)e(-N/2)(\lambda -i0)^{-1}\), and (11.5) becomes

where \(C_1\) is the same as in (11.5).

Let us now compute \(|\det Q|^{1/2}\). The following lemma is proven in Appendix 1.

Lemma 11.1

One has

and

where \(y=Y(y^{(2)},x)\) is the function constructed in the proof of Lemma 3.7.

Substituting (11.7) into (11.5) and comparing (11.5), (11.6) with (4.22), (4.23), respectively, we finish the proof of Theorem 4.7.

12 Proof of Theorem 5.4

From (C.13) in the proof of Lemma 7.2 and (4.1) it follows that

By construction, \(P(Y_0(y^{(2)}))=\Theta _0\cdot (Y_0(y^{(2)})-Z(y^{(2)}))\). By (6.3),

Therefore the stationary point \(y^{(2)}_*(x)\) of the phase \(P(Y(y^{(2)},x))\) is a smooth function of x in a neighborhood of \(x=x_0\). Set \(x=x_\epsilon =x_0+\epsilon {\check{x}}\).