Abstract

Hölder regularity, isotropic wavelet bases and Hausdorff dimension are not optimal for analyzing directional or anisotropic features of signals on \({\mathbb {R}}^d\) with \(d \ge 2\). This paper aims to overcome this drawback using rectangular pointwise regularity, hyperbolic wavelets and dimension prints. Rectangular pointwise regularity \({\mathfrak {L}}^{(\alpha _1, \ldots , \alpha _d)}(x_1, \ldots ,x_d)\) is defined through local oscillations over rectangular parallelepipeds. It extends Jaffard’s alternative definition of Hölder regularity by means of isotropic local oscillations. It also replaces the rectangular pointwise Lipschitz regularity introduced by Ayache et al. and Kamont for \((\alpha _1, \ldots , \alpha _d) \in (0,1)^d\), in order to cover any \((\alpha _1, \ldots , \alpha _d) \in (0,\infty )^d\). It is also a pointwise version of Kamont’s anisotropic Besov spaces on the unit cube. We prove characterizations in terms of hyperbolic wavelets. We deduce new information on the multifractal analysis of rectangular pointwise behaviors, relating the dimension prints of level sets of rectangular pointwise regularity to average quantities expressed in terms of hyperbolic wavelet leaders. We apply our results to some anisotropic selfsimilar cascade wavelet series.

1 Introduction

The multifractal formalism is a formula used to derive the spectrum of singularities of a signal f from average quantities extracted from f. It has proved important in mathematics, physics and signal analysis (for example, see [32, 39] and references therein). Usually, the spectrum consists of the Hausdorff dimensions of the sets of points where the regularity of f takes a given value. It allows one to understand the fractal geometry of the fluctuations of pointwise regularity. The multifractal formalism was first introduced in the context of the statistical study of fully developed turbulence in the mid-1980s by Frisch and Parisi [26], following the pioneering works [41,42,43,44] of Mandelbrot, who had associated fractals to measures (or functions) by introducing multiplicative cascades for the dissipation of energy in turbulent flows. The scope of the mathematical validity of the multifractal formalism has become an important issue (see Ref. [32] and references therein). The most commonly used pointwise regularity for functions is Hölder regularity.

Definition 1

Let \(\alpha >0\), \(\alpha \notin {\mathbb {N}}_0\), \({\mathbf {x}}=(x_1, \ldots ,x_d) \in {\mathbb {R}}^d\) and \(f: {\mathbb {R}}^d \rightarrow {\mathbb {R}}\). We say that \(f \in C^\alpha ({\mathbf {x}})\) if there exists \(C>0\), a neighborhood \(V({\mathbf {x}})\) of \({\mathbf {x}}\), and a polynomial \(P({\mathbf {y}}-{\mathbf {x}})= \displaystyle \sum _{I=(i_1, \ldots , i_d) \in {\mathbb {N}}_0^d} a_{I}({\mathbf {y}}-{\mathbf {x}})^{I} = \displaystyle \sum _{I=(i_1, \ldots , i_d)\in {\mathbb {N}}_0^d} a_{I} (y_1-x_1)^{i_1} \cdots (y_d-x_d)^{i_d}\) of degree \(\ d^o P < \alpha \), such that

$$\begin{aligned} \forall \; {\mathbf {y}} \in V({\mathbf {x}}) \qquad |f({\mathbf {y}})-P({\mathbf {y}}-{\mathbf {x}})| \le C |{\mathbf {y}}-{\mathbf {x}}|^{\alpha }. \end{aligned}$$ (1)

We say that \(f \in C_{log}^\alpha ({\mathbf {x}})\) if (1) is replaced by

We say that f is uniformly Hölder if there exists \(\alpha >0\) such that \(f \in C^\alpha ({\mathbb {R}}^d)\) in the sense that (1) holds for all \({\mathbf {x}}, {\mathbf {y}}\in {\mathbb {R}}^d\) with C a uniform constant.

Wavelet analysis plays a key role since it characterizes Hölder regularity and many isotropic function spaces. Standard orthonormal wavelet bases allow decompositions on tensor products of 1-D wavelets with the same dilation factor \(2^j\) at scale j in all coordinate axes. Nonetheless, they are not optimal for analyzing directional or anisotropic features like edges. Many signals on \({\mathbb {R}}^d\), \(d \ge 2\), present anisotropies quantified through regularity characteristics and features that strongly differ when measured in different directions [4, 15, 16, 18, 20, 33, 36, 48,49,50]. Such signals belong to classes of functions that have different degrees of smoothness along different directions (see [1,2,3, 5, 7, 9, 10, 18, 20, 33, 50, 53,54,55,56] and the references therein; see also the recent book [23] for some historical comments on the classical mixed smoothness theory, without pointwise issues). These directional behaviors are important for edge detection, efficient image compression, texture classification, etc. Extensions of wavelet bases elongated in particular directions were considered (see [17, 22, 29, 33, 37, 40, 47, 54]). Useful decompositions of anisotropic function spaces into simple anisotropic or hyperbolic building blocks (atoms, quarks, splines, wavelets, Littlewood-Paley, ...) were also obtained (see [8, 10, 11, 13, 14, 21, 25, 27, 28, 30, 31, 35, 55, 57, 58]), depending on the precise definition of those spaces.

The pointwise Hölder regularity \(C^\alpha ({\mathbf {x}})\) doesn’t take into account possible directional regularity behaviors in coordinate axes. For example, if \(\varvec{\alpha }= (\alpha _1, \ldots ,\alpha _d) \in (0,1)^d\), functions \(f(y_1, \ldots ,y_d) = \displaystyle \sum _{i=1}^d |y_i-x_i|^{\alpha _i}\) and \(g(y_1, \ldots ,y_d) =\displaystyle \prod _{i=1}^d |y_i-x_i|^{\alpha _i} \) have respectively Hölder regularities \(\displaystyle \min \{ \alpha _i \}\) and \(\displaystyle \sum _{i=1}^d \alpha _i\) at point \({\mathbf {x}}=(x_1, \ldots ,x_d) \in {\mathbb {R}}^d\). There exist various ways to measure the directional regularity of a function around a given point. In order to capture directional behavior of the form \( \displaystyle \sum _{i=1}^d |y_i-x_i|^{\alpha _i}\), Jaffard [33] has defined the following d-parameter directional regularity \(C_J^{(\alpha _1, \ldots , \alpha _d)}({\mathbf {x}})\) for \(\alpha _1>0, \ldots , \alpha _d>0\).
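The two model functions above can be compared numerically. The following is a minimal sketch, not part of the original argument: it takes \(d=2\), \({\mathbf {x}}={\mathbf {0}}\) and the arbitrarily chosen exponents \((0.3, 0.8)\), and it only measures how fast the supremum of each function over a small box decays (a sup-norm box is used instead of a Euclidean ball, which does not change the exponent). The helper names `sup_on_box` and `decay_exponent` are illustrative.

```python
import math

def f_sum(h, alphas):
    # f(y) = sum_i |y_i - x_i|^alpha_i, written in terms of h = y - x
    return sum(abs(hi) ** a for hi, a in zip(h, alphas))

def g_prod(h, alphas):
    # g(y) = prod_i |y_i - x_i|^alpha_i, written in terms of h = y - x
    return math.prod(abs(hi) ** a for hi, a in zip(h, alphas))

def sup_on_box(func, alphas, eps, grid=9):
    """Maximum of func over a grid on the box [-eps, eps]^2 (corners included)."""
    ticks = [eps * (2 * i / (grid - 1) - 1) for i in range(grid)]
    return max(func((h1, h2), alphas) for h1 in ticks for h2 in ticks)

def decay_exponent(func, alphas, eps_hi=1e-4, eps_lo=1e-8):
    """Slope of log(sup over box) against log(eps) between two small scales."""
    top = sup_on_box(func, alphas, eps_hi)
    bottom = sup_on_box(func, alphas, eps_lo)
    return (math.log(top) - math.log(bottom)) / (math.log(eps_hi) - math.log(eps_lo))

alphas = (0.3, 0.8)                       # assumed exponents, for illustration only
slope_f = decay_exponent(f_sum, alphas)   # close to min(alphas)
slope_g = decay_exponent(g_prod, alphas)  # close to sum(alphas)
```

The slope for the sum is only approximately \(\min \{\alpha _i\}\) at finite scales, because the term with the larger exponent still contributes a small correction.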

Definition 2

Let \(f: {\mathbb {R}}^d \rightarrow {\mathbb {R}}\). Let \(\varvec{\alpha } = (\alpha _1, \ldots , \alpha _d) \in (0,\infty )^d\) (we will write \(\varvec{\alpha } > {\mathbf {0}}\) where \({\mathbf {0}}=(0, \ldots ,0)\) in \({\mathbb {R}}^d\)). We say that \(f \in C_J^{\varvec{\alpha }}({\mathbf {x}})\) if there exist a constant \(C>0\), a neighborhood \(V({\mathbf {x}})\) of \({\mathbf {x}}\), and a polynomial \(P({\mathbf {y}}-{\mathbf {x}}) = \displaystyle \sum _{I=(i_1, \ldots , i_d) \in {\mathbb {N}}_0^d} a_{I}({\mathbf {y}}-{\mathbf {x}})^{I} \) of degree less than \(\varvec{\alpha }\) in the sense that

such that

Actually, Definition 2 is an extension of the notion of anisotropic regularity which was already introduced in [10]; let \({\mathbf {u}}=(u_1, \ldots , u_d)\) satisfy

For \(I=(i_1,\cdots ,i_d) \in {\mathbb {N}}_0^d\), we set \(d_{{\mathbf {u}}}(I) = \displaystyle \sum _{n=1}^d \;u_n\; i_n\). If \(P=\displaystyle \sum _{I\in {\mathbb {N}}_0^d} a_{I} {\mathbf {y}}^{I}\) is a polynomial we define its \({\mathbf {u}}\)-homogeneous degree by

Definition 3

Let \(f: {\mathbb {R}}^d \rightarrow {\mathbb {R}}\). Let \(s >0\). We say that \(f \in C_{{\mathbf {u}}}^{s}({\mathbf {x}})\) if there exist a constant \(C>0\), a neighborhood \(V({\mathbf {x}})\) of \({\mathbf {x}}\) and a polynomial P of \({\mathbf {u}}\)-homogeneous degree less than s such that

In order to capture directional behavior of the form \(\displaystyle \prod _{i=1}^d |y_i-x_i|^{\alpha _i}\), Ayache et al. [6] and Kamont [35] have defined the following pointwise rectangular Lipschitz regularity \(Lip^{(\alpha _1, \ldots , \alpha _d)}({\mathbf {x}})\) for \(0< \alpha _1, \ldots , \alpha _d <1\). Denote by \({\mathcal {D}}\) the set \(\{1, \cdots ,d\}\). For \(i \in {\mathcal {D}}\), let \(e_i= ( \delta _{1,i}, \ldots , \delta _{d,i})\) denote the i-th coordinate vector in \({\mathbb {R}}^d\). Let \({\mathbf {n}}=(n_{1},\ldots ,n_{d})\in {\mathbb {N}}_0^{d}\). For \(f: {\mathbb {R}}^d \rightarrow {\mathbb {R}}\), \({\mathbf {x}}=(x_{1}, \cdots ,x_{d})\in {\mathbb {R}}^{d}\) and \({\mathbf {h}}=(h_{1}, \ldots ,h_{d})\in {\mathbb {R}}^{d}\), the anisotropic differences of order \( {\mathbf {n}}\) were first defined by Kamont [34] as

where for \(n\in {\mathbb {N}}_0\),

Definition 4

Let \(\varvec{\alpha } = (\alpha _1, \ldots , \alpha _d) \in (0,1)^d\). Put \({\mathbf {1}}= (1, \ldots ,1) \in {\mathbb {R}}^d\). We say that \(f \in Lip^{\varvec{\alpha }}({\mathbf {x}})\), if there exists \(C>0\) such that

Actually, the rectangular Lipschitz regularity was introduced in a global sense for any \({\mathbf {x}}\) and \({\mathbf {x}}+ {\mathbf {h}}\) in a cube \({\mathcal {Q}}\) in order to prove that realizations of fractional Brownian sheets on \({\mathcal {Q}}\) are uniform rectangular Lipschitz.

If \(\varvec{\alpha }> {\mathbf {0}}\), define the average regularity \({\tilde{\alpha }}\) by the harmonic mean of the \(\alpha _n\), i.e.,

Then the anisotropy indices \({\mathbf {v}}=(v_1, \ldots , v_d)\) defined by

satisfy

In Ref. [10], it is proved that

An almost characterization of \(C_{{\mathbf {v}}}^{{\tilde{\alpha }}}({\mathbf {x}})\) in terms of the \({\mathbf {v}}\)-anisotropic Triebel wavelet basis was also obtained in Ref. [10]. The functions of the latter basis, and more generally anisotropic blocks, are tensor products of 1-D functions that allow dilation factors of about \(2^{j v_1}, \ldots , 2^{j v_d}\) in the coordinate axes. Note that for several 2-D signals, the study was restricted to parabolic anisotropy, i.e., a contraction by \(\lambda \) in the \(x_1\) axis and by \(\lambda ^2\) in the \(x_2\) axis (see [37, 47, 54] and references therein).
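As a small worked illustration of the average regularity and the anisotropy indices, here is a sketch under stated assumptions: the average regularity is taken as the harmonic mean \({\tilde{\alpha }} = d / \sum _n \alpha _n^{-1}\), as said in the text, and the indices are assumed to be \(v_n = {\tilde{\alpha }}/\alpha _n\), a common convention that should be checked against the displayed definitions. The function names are hypothetical.

```python
from fractions import Fraction

def average_regularity(alphas):
    """Harmonic mean of the directional exponents alpha_1, ..., alpha_d."""
    d = len(alphas)
    return d / sum(1 / a for a in alphas)

def anisotropy_indices(alphas):
    """Assumed convention: v_n = tilde_alpha / alpha_n (not taken from the source)."""
    tilde = average_regularity(alphas)
    return [tilde / a for a in alphas]

alphas = [Fraction(1, 2), Fraction(3, 2)]   # illustrative exponents
tilde_alpha = average_regularity(alphas)
v = anisotropy_indices(alphas)

# Dilation factors 2^{j v_n} of an anisotropic block at scale j = 4
dilations = [2.0 ** (4 * float(vn)) for vn in v]
```

With this convention the indices sum to d and \(v_n \alpha _n = {\tilde{\alpha }}\) in every direction, so the smoother direction (larger \(\alpha _n\)) receives the smaller dilation factor.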

However, for signals where no a priori anisotropy is prescribed, hyperbolic blocks are tensor products of 1-D wavelets that allow different dilation factors \(2^{j_1}, \ldots , 2^{j_d}\) in the coordinate axes (see [1, 21, 52, 59, 60]). The latter have the advantage of containing all possible anisotropies. Recently, in [12], we provided a characterization of \(Lip^{\varvec{\alpha }}({\mathbf {x}})\) in terms of the hyperbolic Schauder (spline) system. We also proved that fractional Brownian sheets are pointwise rectangular monofractal. In contrast, we constructed a class of Sierpinski selfsimilar functions that are pointwise rectangular multifractal.

In this paper, we first propose a substitute for the rectangular pointwise Lipschitz regularity \(Lip^{\varvec{\alpha }}({\mathbf {x}})\) that has the advantage of covering any \(\varvec{\alpha }> {\mathbf {0}}\). We define rectangular pointwise regularity \({\mathfrak {L}}^{\varvec{\alpha }}({\mathbf {x}})\) through local oscillations of the function over rectangular parallelepipeds. Our definition is reminiscent of Kamont’s anisotropic Besov spaces \(B^{\varvec{\alpha }}_{\infty , \infty }(I^d)\) on the unit cube (see [34]).

For \(\varvec{\epsilon }=(\epsilon _{1}, \ldots , \epsilon _{d}) > {\mathbf {0}}\), we denote by \(B({\mathbf {x}},\varvec{\epsilon })\) the rectangular parallelepiped

For \({\mathbf {n}} \in {\mathbb {N}}_0^{d}\), the anisotropic (or hyperbolic) \({\mathbf {n}}\)-oscillation of f in \(B({\mathbf {x}},\varvec{\epsilon })\) is defined by

where

For \({\mathbf {x}}=(x_{1}, \ldots ,x_{d})\in {\mathbb {R}}^{d}\) and \(\mathbf {x'}=(x'_{1}, \cdots ,x'_{d})\in {\mathbb {R}}^{d}\), we will write \({\mathbf {x}}\le \mathbf {x'}\) if \(x_{i}\le x'_{i} \; \forall \; i \in {\mathcal {D}}\), \({\mathbf {x}} < \mathbf {x'}\) if \(x_{i} < x'_{i} \; \forall \; i \in {\mathcal {D}}\), \({\mathbf {x}}\ge \mathbf {x'}\) if \(x_{i}\ge x'_{i} \; \forall \; i \in {\mathcal {D}}\), and \({\mathbf {x}} > \mathbf {x'}\) if \(x_{i} > x'_{i} \; \forall \; i \in {\mathcal {D}}\). Symbols \(\nleq , \nless , \ngeq \) and \( \ngtr \) are the negations.

Definition 5

Let \(\varvec{\alpha }=(\alpha _{1}, \ldots , \alpha _{d}) > {\mathbf {0}}\) and \({\mathbf {x}}=(x_{1}, \ldots ,x_{d})\in {\mathbb {R}}^{d}\). We say that f is rectangular pointwise regular \(\varvec{\alpha }\) at point \({\mathbf {x}}\), and we write \(f \in {\mathfrak {L}}^{\varvec{\alpha }}({\mathbf {x}})\), if

Remark 1

Definition 5 is an extension of the following anisotropic rectangular regularity.

Definition 6

Let \(f: {\mathbb {R}}^d \rightarrow {\mathbb {R}}\). Let \(s >0\) and \({\mathbf {u}}\) be as in (5). We say that \(f \in R^s_{\mathbf {u}}({\mathbf {x}})\) if

If \(\varvec{\alpha }=(\alpha _{1}, \ldots , \alpha _{d}) > {\mathbf {0}}\) and \({\tilde{\alpha }}\) and \({\mathbf {v}}\) are as in (10) and (11) then

Another starting point which led us to Definition 5 is the almost characterization of Hölder regularity by means of isotropic local oscillations (see [32] and Remark 2 below); if \(n \in {\mathbb {N}}_0\), define the difference of order n of f by

Define the oscillation of order n of f on the Euclidean ball \(B({\mathbf {x}}, \varepsilon )\) of center \({\mathbf {x}}\) and radius \(\varepsilon \) by

where

In Ref. [32, Proposition 6], it is proved that if \(\alpha \in (0,\infty )\) and \(f \in C^\alpha ({\mathbf {x}})\) then

and conversely, if f is uniformly Hölder and (23) holds then \(f \in C_{log}^{\alpha }({\mathbf {x}})\).
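The role of the order n in these oscillations can be seen on a tiny example. The sketch below assumes the standard forward difference \({\varDelta }_h^n f(x) = \sum _{k=0}^n (-1)^{n-k} \binom{n}{k} f(x+kh)\) in one variable (the displayed definition is the reference; this formula is an assumption here). It shows that a difference of order n annihilates polynomials of degree less than n, which is why no polynomial needs to be subtracted in the oscillation.

```python
from math import comb

def difference(f, n, h, x):
    """Forward difference of order n: sum_k (-1)^(n-k) C(n, k) f(x + k h)."""
    return sum((-1) ** (n - k) * comb(n, k) * f(x + k * h) for k in range(n + 1))

def quadratic(t):
    return 3 * t * t - t + 5

def cubic(t):
    return t ** 3

# Order-3 difference of a degree-2 polynomial vanishes identically
third_diff_of_quadratic = difference(quadratic, 3, 2, 7)

# Order-3 difference of t^3 equals 3! * h^3, independently of x
third_diff_of_cubic = difference(cubic, 3, 2, 7)
```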

Remark 2

Clearly, if \(u_1, \cdots , u_d\) are functions on \({\mathbb {R}}\) and f is a product of separable variable functions \(u_i(x_i)\), i.e., \(f({\mathbf {x}})=\prod _{i=1}^d u_i(x_{i})\) then

It follows that

If \(u_i \in C^{\alpha _i}(x_i)\) for all i then by applying result (23) for \(u_i\) instead of f, we deduce that \(f \in {\mathfrak {L}}^{\varvec{\alpha }}({\mathbf {x}})\).

On the other hand, although Hausdorff dimension provides information on the fullness of a set when viewed at fine scales, two sets of the same dimension may have very different appearances. If C is the Cantor ‘middle half’ set, which has Hausdorff dimension equal to 1/2, then the product set \(C \times C \subset {\mathbb {R}}^2\) has the same Hausdorff dimension as a line segment in \({\mathbb {R}}^2\). Various quantities, such as lacunarity and porosity, have been introduced to complement dimension (see [45]). In 1988, Rogers [51] proposed dimension prints, based on measures of Hausdorff type, to provide more information about the local affine structure of subsets of \({\mathbb {R}}^d\), for \(d \ge 2\). The idea is to define a d-parameter family of measures \({\mathcal {H}}^{(t_1, \ldots ,t_d)}\) on subsets of \({\mathbb {R}}^d\) similar to the Hausdorff measures \({\mathcal {H}}^{s}\). Let \({\mathcal {B}}\) be the set of rectangular parallelepipeds in \({\mathbb {R}}^d\). For \(B \in {\mathcal {B}}\), we denote by \(l_1(B), \ldots , l_d(B)\) the edge-lengths of B, written in the order \(l_d(B) \le \cdots \le l_1(B)\). For a subset A of \({\mathbb {R}}^d\) and \(\delta >0\), we say that \((B_n)_{n \in {\mathbb {N}}} \subset {\mathcal {B}} \) is a \(\delta \)-cover of A and we write \((B_n)_{n \in {\mathbb {N}}} \in {\mathcal {C}}_\delta (A)\) if \(\forall \; n \;\; l_1(B_n) \le \delta \) and \( A \subset \bigcup _{n} B_n\).

Define for all \(\delta >0\) and \({\mathbf {t}}= (t_1, \ldots ,t_d) \ge {\mathbf {0}}\) the quantity

Then the map

is a measure of Hausdorff type. The dimension print of A is not a number but the set defined as

As each d-parameter measure weights the side lengths of the rectangular parallelepipeds differently, it is possible to distinguish between sets that are easily covered by long thin rectangular parallelepipeds, such as a line segment L, and sets which are not, such as the product of two regular Cantor sets in \({\mathbb {R}}\) (see [51], Example 2, p. 3 and [24], pp. 50–52).
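This difference between a segment and a product of Cantor sets can be made concrete by counting covering boxes. The following sketch is only an illustration, not Rogers' construction: it works with the level-n approximation of the middle-half Cantor set C, samples an axis-parallel unit segment on the same grid, and counts grid cells hit by each set, first for squares of side \(4^{-n}\) and then for long thin rectangles of size \(1 \times 4^{-n}\). All names are hypothetical.

```python
from fractions import Fraction

def cantor_left_endpoints(n):
    """Left endpoints of the 2**n intervals of length 4**-n at level n of the middle-half Cantor set."""
    pts = [Fraction(0)]
    for level in range(1, n + 1):
        step = Fraction(3, 4 ** level)   # the right child starts 3/4 of the way along the parent
        pts = [p for q in pts for p in (q, q + step)]
    return pts

def count_cells(points, width, height):
    """Number of grid cells of size width x height hit by the sample points."""
    return len({(x // width, y // height) for x, y in points})

n = 3
side = Fraction(1, 4 ** n)
cantor = cantor_left_endpoints(n)
product_set = [(x, y) for x in cantor for y in cantor]       # samples of C x C
segment = [(i * side, Fraction(0)) for i in range(4 ** n)]   # samples of [0,1) x {0}

squares_product = count_cells(product_set, side, side)
squares_segment = count_cells(segment, side, side)
thin_product = count_cells(product_set, Fraction(1), side)
thin_segment = count_cells(segment, Fraction(1), side)
```

Both sets need \(4^n\) squares of side \(4^{-n}\), which reflects their common dimension 1, whereas a single thin rectangle covers the segment and \(2^n\) of them are needed for the level-n approximation of \(C \times C\).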

In the next section, we characterize both \({\mathfrak {L}}^{\varvec{\alpha }}({\mathbf {x}})\) and \(Lip^{\varvec{\alpha }}({\mathbf {x}})\) in terms of hyperbolic wavelet coefficients (see Theorems 1, 2). We deduce that \({\mathfrak {L}}^{\varvec{\alpha }}({\mathbf {x}})\) is a good substitute for \( Lip^{\varvec{\alpha }}({\mathbf {x}})\). We also give the characterization of \({\mathfrak {L}}^{\varvec{\alpha }}({\mathbf {x}})\) in terms of hyperbolic wavelet leaders (see Theorem 3). Using Remark 1, we also deduce that hyperbolic wavelets are able to analyze all anisotropies. This confirms that the universality of hyperbolic wavelets, which is true for global regularity (see [1, 55]), is also true for pointwise regularity.

Section 3 aims to obtain a numerical procedure for extracting information on the dimension prints of level sets of rectangular pointwise regularity, expressed in terms of hyperbolic wavelet leaders (see Theorem 4, Corollary 1).

Finally, in Sect. 4, we apply our results to some selfsimilar cascade wavelet series, written as the superposition of similar anisotropic structures at different scales, reminiscent of possible models of turbulence or of cascade models.

2 Characterization in Terms of Hyperbolic Wavelets

2.1 Characterization with Hyperbolic Wavelet Coefficients

We will characterize both \({\mathfrak {L}}^{\varvec{\alpha }}({\mathbf {x}})\) and \(Lip^{\varvec{\alpha }}({\mathbf {x}})\) in terms of hyperbolic wavelet coefficients. We will then deduce that \({\mathfrak {L}}^{\varvec{\alpha }}({\mathbf {x}})\) is a good substitute for \( Lip^{\varvec{\alpha }}({\mathbf {x}})\) that has the advantage of covering any \( \varvec{\alpha } > {\mathbf {0}}\). We will also give the characterization of \({\mathfrak {L}}^{\varvec{\alpha }}({\mathbf {x}})\) in terms of hyperbolic wavelet leaders.

Let \(\psi _{-1}\) and \(\psi _{1}\) be the Daubechies [19] r-smooth father and mother wavelets (resp. \(\infty \)-smooth Lemarié-Meyer [38, 46]), compactly supported and r times continuously differentiable for some large value of r (resp. in the Schwartz class). It is known that \(\displaystyle \int _{{\mathbb {R}}}\psi _{-1}(t) \;dt=1\) and \(\displaystyle \int _{{\mathbb {R}}} t^n \psi _{1}(t) \;dt =0\) for \(0 \le n < r\) (resp. for all \(n \in {\mathbb {N}}_0\)).

Put

Put \({\mathbb {N}}_{-1} = {\mathbb {N}}_0 \cup \{-1\}\). For \(j \in {\mathbb {N}}_{-1}\) and \(k \in {\mathbb {Z}}\), put

Then the collection \(\Big (2^{[j]/2}\psi _{j,k}(t)\Big )_{j\in {\mathbb {N}}_{-1}, k\in {\mathbb {Z}}}\) is an orthonormal basis of \(L^2({\mathbb {R}})\).

For \( {\mathbf {j}}=(j_1, \ldots , j_d) \in {\mathbb {N}}_{-1}^d\), put \( [{\mathbf {j}}] =([j_1], \ldots , [j_d]) \) and \(|{\mathbf {j}}| = \displaystyle \sum _{i=1}^d j_i\).

For \( {\mathbf {k}}=(k_1, \ldots , k_d) \in {\mathbb {Z}}^d\) and \({\mathbf {x}}=(x_1,\ldots ,x_d)\in {\mathbb {R}}^d\), put

Then the collection \(\{2^{|[{\mathbf {j}}]|/2}\; {\varPsi }_{{\mathbf {j}},{\mathbf {k}}}\;:\;{\mathbf {j}}\in {\mathbb {N}}_{-1}^d, {\mathbf {k}}\in {\mathbb {Z}}^d\}\) is an orthonormal basis of \(L^2({\mathbb {R}}^d)\) called hyperbolic wavelet basis [1, 21, 52, 59, 60]. Thus any function \(f\in L^2({\mathbb {R}}^d)\) can be written as

with

Remark 3

Since we focus on the local behavior \(f \in {\mathfrak {L}}^{\varvec{\alpha }}({\mathbf {x}})\) at \({\mathbf {x}}\in {\mathbb {R}}^d\), what happens far from \({\mathbf {x}}\) should not interfere with our questions. This is why we can assume that \({\mathbf {x}} \in (0,1)^d\) and f is supported on the unit cube \([0, 1]^d\), and since we deal with wavelets, we can assume that f is a wavelet series like

Moreover, it is very easy to analyse \({\mathfrak {L}}^{\varvec{\alpha }}({\mathbf {x}})\) for low frequency terms; indeed, without any loss of generality, for \(d=2\)

using Remark 2, the first term belongs to \({\mathfrak {L}}^{\varvec{\alpha }}({\mathbf {x}})\) for all \(\varvec{\alpha } < (r,r)\) (recall that r is the regularity of \(\psi _{-1}\)). The second and third terms are products of separable variable functions so their rectangular pointwise regularities can be deduced from Remark 2. For these reasons, it suffices to deal with the fourth term series, which from now on we call F.
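To make the tensor structure of the hyperbolic basis concrete, here is a minimal discrete sketch. It uses the orthonormal Haar system as a stand-in, which is an assumption made only for brevity: Haar wavelets are not r-smooth, and the normalization is the discrete \(\ell ^2\) one rather than the one used for \(C_{{\mathbf {j}},{\mathbf {k}}}\). The point is that a full 1-D transform is applied along each axis, so every pair of scales \((j_1, j_2)\) appears, and a product of separable functions has coefficients that are products of 1-D coefficients.

```python
import math

def haar_1d(values):
    """Full orthonormal Haar transform of a list whose length is a power of two.

    Output layout: [coarsest average, coarsest detail, ..., finest details].
    """
    out = list(values)
    n = len(out)
    while n > 1:
        half = n // 2
        avg = [(out[2 * i] + out[2 * i + 1]) / math.sqrt(2) for i in range(half)]
        det = [(out[2 * i] - out[2 * i + 1]) / math.sqrt(2) for i in range(half)]
        out[:n] = avg + det
        n = half
    return out

def hyperbolic_haar_2d(image):
    """Apply the full 1-D transform to every row, then to every column."""
    rows = [haar_1d(row) for row in image]
    cols = [haar_1d(col) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]

u = [1.0, 2.0, 0.5, -1.0]                        # samples of u_1(x_1), illustrative values
v = [0.0, 3.0, 1.0, 1.0]                         # samples of u_2(x_2), illustrative values
image = [[a * b for b in v] for a in u]          # separable product f = u_1 * u_2
coeffs = hyperbolic_haar_2d(image)
hu, hv = haar_1d(u), haar_1d(v)
```

Here `coeffs[i][j]` agrees with `hu[i] * hv[j]` up to rounding, which is the discrete counterpart of the factorization used for the low frequency terms.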

The following theorems almost characterize the rectangular pointwise regularity of the wavelet series

They also show that \({\mathfrak {L}}^{\varvec{\alpha }}({\mathbf {x}})\) is a substitute for \( Lip^{\varvec{\alpha }}({\mathbf {x}})\).

Theorem 1

Let \(\varvec{\alpha }=(\alpha _{1}, \ldots , \alpha _{d}) > {\mathbf {0}}\) and \({\mathbf {x}}=(x_{1}, \ldots ,x_{d})\in {\mathbb {R}}^{d}\).

1. If \(F \in {\mathfrak {L}}^{\varvec{\alpha }}({\mathbf {x}})\) then there exists \(C>0\) such that

$$\begin{aligned}&\forall \; {\mathbf {j}}=(j_{1},\ldots , j_{d}) \in {\mathbb {N}}_{0}^d\; \forall \; {\mathbf {k}}=(k_{1}, \ldots ,k_{d}) \in {\mathbb {Z}}^{d} \quad \nonumber \\&\quad \left| C_{{\mathbf {j}},{\mathbf {k}}} \right| \le C \prod _{i=1}^d (2^{-j_{i}}+\left| k_{i}2^{-j_{i}}-x_i\right| )^{\alpha _{i}}. \end{aligned}$$ (34)

2. Conversely, if F is uniformly Hölder and if (34) holds then \(F \in {\mathfrak {L}}^{\varvec{\alpha '}}({\mathbf {x}})\) for all \(\varvec{\alpha '} < \varvec{\alpha }\).
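Condition (34) is easy to test on a finite table of coefficients. The sketch below is only an illustration: the storage format (a dictionary mapping pairs of tuples \(({\mathbf {j}},{\mathbf {k}})\) to values), the function names and the choice of the constant C are assumptions, not part of the theorem.

```python
def envelope(j, k, x, alphas, C=1.0):
    """Right-hand side of (34): C * prod_i (2^-j_i + |k_i 2^-j_i - x_i|)^alpha_i."""
    bound = C
    for ji, ki, xi, ai in zip(j, k, x, alphas):
        bound *= (2.0 ** -ji + abs(ki * 2.0 ** -ji - xi)) ** ai
    return bound

def satisfies_34(coeffs, x, alphas, C=1.0):
    """True if every stored coefficient obeys |C_{j,k}| <= envelope(j, k)."""
    return all(abs(c) <= envelope(j, k, x, alphas, C) for (j, k), c in coeffs.items())

x = (0.25, 0.5)        # point where the regularity is tested (illustrative)
alphas = (0.5, 1.0)    # candidate exponents (illustrative)

# For j = (2, 3), k = (1, 4) the wavelet sits exactly at x, so the bound is 2^{-(0.5*2 + 1*3)}
bound_at_x = envelope((2, 3), (1, 4), x, alphas)

small = {((2, 3), (1, 4)): 0.05}
large = {((2, 3), (1, 4)): 0.07}
```

When \(k_i 2^{-j_i} = x_i\) in every direction, the envelope reduces to \(C\, 2^{-\sum _i \alpha _i j_i}\), the decay rate that reappears in the wavelet leader condition.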

Theorem 2

Let \({\mathbf {0}}< \varvec{\alpha } < {\mathbf {1}}\) and \({\mathbf {x}}\in {\mathbb {R}}^{d}\).

1. If \(F \in Lip^{\varvec{\alpha }}({\mathbf {x}})\) then there exists \(C>0\) such that

$$\begin{aligned} \forall \; {\mathbf {j}} \in {\mathbb {N}}_{0}^d \;\; \forall \; {\mathbf {k}}\in {\mathbb {Z}}^{d} \quad \left| C_{{\mathbf {j}},{\mathbf {k}}}\right| \le C \prod _{i=1}^d \left( 2^{-j_{i}}+\left| k_{i}2^{-j_{i}}-x_{i}\right| \right) ^{\alpha _i}. \end{aligned}$$ (35)

2. If F is uniformly Hölder and (35) is satisfied, then

$$\begin{aligned} \forall \; \varvec{\alpha '} < \varvec{\alpha } \qquad F \in Lip^{\varvec{\alpha '}}({\mathbf {x}}). \end{aligned}$$ (36)

Remark 4

The converse parts in Theorems 1 and 2 remain valid if (34) is satisfied when \({\mathbf {k}}\) belongs to some well-chosen subset of \({\mathbb {Z}}^d\) depending on \({\mathbf {j}}\). In fact, without any loss of generality, assume that F is supported on the unit cube.

- Assume that \(\psi _{-1}\) is compactly supported in \([-A,A]\). If there exists \(i \in {\mathcal {D}}\) such that \(|k_i| > A + 2^{j_i}\) then \(C_{{\mathbf {j}},{\mathbf {k}}} = 0\).

- Assume that \(\psi _{-1}\) belongs to the Schwartz class. Let \(i \in {\mathcal {D}}\). For all \(N \in {\mathbb {N}}\) there exists \(C>0\) such that

$$\begin{aligned} \forall y_i \quad |\psi _{j_i,k_i}(y_i)| \le \frac{C}{(1+|2^{j_i}y_i-k_i|)^N}. \end{aligned}$$

If \(|k_i| > 2^{j_i+1}\) and \(0 \le y_i \le 1\) then \( |\psi _{j_i,k_i}(y_i)| \le C 2^{-Nj_i}\). It follows that \(|C_{{\mathbf {j}},{\mathbf {k}}}| \le C \prod _{i=1}^d 2^{-N\theta _ij_i}\) for all \({\mathbf {k}}> (2^{j_1+1}, \ldots , 2^{j_d+1})\) and all \((\theta _1, \ldots , \theta _d) \in (0,1)^{\mathcal {D}}\) with \(\theta _1+ \cdots + \theta _d=1\).

Proof of Theorem 1

Since only the wavelet \(\psi _{1}\) is involved, we will write \(\psi \).

1. The proof of this part is reminiscent of that of the converse part of Proposition 6 in [32]. If \(\psi \) is r-smooth and \(r>n\), there exists a function \(\theta _{n}\) with fast decay if \(r = \infty \) (resp. compactly supported) such that \( \psi ={\varDelta }_{\frac{1}{2}}^{n}\theta _{n}\) (see Lemma 2 in [32]).

If u and v belong to \(L^2({\mathbb {R}})\) then using (20)

$$\begin{aligned} \int u(t)\; {\varDelta }_{h}^{n} v(t) \; d t=(-1)^{n} \int {\varDelta }_{-h}^{n} u (t)\; v(t) \;dt. \end{aligned}$$ (37)

For \({\mathbf {j}}=(j_{1}, \ldots ,j_{d})\in {\mathbb {N}}_0^{d}\) and \({\mathbf {k}}=(k_{1}, \ldots ,k_{d})\in {\mathbb {Z}}^{d}\)

$$\begin{aligned} C_{{\mathbf {j}},{\mathbf {k}}}&=2^{|{\mathbf {j}}|} \int _{{\mathbb {R}}^{d}} F\left( {\mathbf {y}}\right) \prod _{i=1}^d \psi \left( 2^{j_{i}} y_{i}-k_{i}\right) \mathrm {d}{\mathbf {y}}\\&=\int _{{\mathbb {R}}^{d}}{\widetilde{F}}({\mathbf {y}}) \prod _{i=1}^d \psi (y_{i})\mathrm {d}{\mathbf {y}}, \end{aligned}$$

where \( \displaystyle {\widetilde{F}}({\mathbf {y}})=F\left( \frac{y_{1}+k_{1}}{2^{j_{1}}},\ldots ,\frac{y_{d}+k_{d}}{2^{j_{d}}}\right) \). Using property (37)

$$\begin{aligned} C_{{\mathbf {j}},{\mathbf {k}}}&=\int _{{\mathbb {R}}^{d}}{\widetilde{F}}({\mathbf {y}}) \prod _{i=1}^d {\varDelta }_{\frac{1}{2}}^{n_{i}}\theta _{n_{i}}(y_{i}) \mathrm {d}{\mathbf {y}}\\&= (-1)^{n_1+\cdots +n_d} \int _{{\mathbb {R}}^{d}} {\varDelta }_{-\frac{1}{2},1}^{n_{1}} \circ \cdots \circ {\varDelta }_{-\frac{1}{2},d}^{n_{d}}{\widetilde{F}}({\mathbf {y}}) \prod _{i=1}^d \theta _{n_{i}}(y_{i}) \mathrm {d}{\mathbf {y}}\\&= (-1)^{n_1+\cdots +n_d}\sum _{(l_{1}, \cdots , l_d) \in {\mathbb {N}}_0^d} \int _{\prod _{i=1}^d K_{l_{i}}} {\varDelta }_{-\frac{1}{2},1}^{n_{1}}\circ \cdots \circ {\varDelta }_{-\frac{1}{2},d}^{n_{d}}{\widetilde{F}}({\mathbf {y}}) \prod _{i=1}^d \theta _{n_{i}}(y_{i}) \mathrm {d}{\mathbf {y}}, \end{aligned}$$

where \(K_{0}=\left\{ t \in {\mathbb {R}}\;:\; |t|<1\right\} \) and for \(l\ge 1\), \(K_{l}=\left\{ t\in {\mathbb {R}}\;:\; 2^{l-1}\le |t|<2^{l}\right\} \).

If \({\mathbf {y}} \in \prod _{i=1}^d K_{l_{i}}\) then

$$\begin{aligned} \left| \frac{y_{i}+k_{i}}{2^{j_{i}}}-\frac{n_{i}}{2^{(j_{i}+1)}}- x_i\right| \le \left| \frac{k_{i}}{2^{j_{i}}}-x_i\right| +n_{i}2^{l_{i}-j_{i}}. \end{aligned}$$

For \(i \in {\mathcal {D}}\), put \((\varvec{\epsilon }_{l_{1}, \cdots ,l_{d}})_i= \left| \frac{k_{i}}{2^{j_{i}}}-x_i\right| +n_{i}2^{l_{i}-j_{i}}\).

Set \(\varvec{\epsilon }_{l_{1},\cdots ,l_{d}}=((\varvec{\epsilon }_{l_{1},\ldots ,l_{d}})_1, \cdots , (\varvec{\epsilon }_{l_{1},\ldots ,l_{d}})_d)\). Therefore

$$\begin{aligned} \left| C_{{\mathbf {j}},{\mathbf {k}}}\right|&\le \sum _{(l_{1},\ldots ,l_{d}) \in {\mathbb {N}}_0^d} \int _{\prod _{i=1}^d K_{l_{i}}} \omega _{F}({\mathbf {n}},\varvec{\epsilon }_{l_{1},\ldots ,l_{d}})({\mathbf {x}}) \prod _{i=1}^d |\theta _{n_{i}}(y_{i})| \; \mathrm {d}{\mathbf {y}}\\&\le C \sum _{(l_{1},\cdots ,l_{d}) \in {\mathbb {N}}_0^d} \; \int _{\prod _{i=1}^d K_{l_{i}}} \prod _{i=1}^d \frac{\left( \left| \frac{k_{i}}{2^{j_{i}}}-x_i\right| +n_{i}2^{l_{i}-j_{i}}\right) ^{\alpha _{i}}}{(1+|y_{i}|)^{\alpha _{i}+2}} \; \mathrm {d}{\mathbf {y}}\\&\le C \prod _{i=1}^d \left( \sum _{l_{i}=0}^{\infty }\int _{K_{l_{i}}}\frac{\left( \left| \frac{k_{i}}{2^{j_{i}}}-x_i\right| +n_{i}2^{l_{i}-j_{i}}\right) ^{\alpha _{i}}}{(1+|y_{i}|)^{\alpha _{i}+2}} \mathrm {d}y_{i}\right) . \end{aligned}$$

But for \(y_i \in K_{l_i}\), \(\frac{\left( \left| \frac{k_i}{2^{j_i}}-x_i\right| +n_i2^{l_i-j_i}\right) ^{\alpha _i}}{(1+|y_i|)^{\alpha _i+2}}\) is bounded by \(C \frac{\left| \frac{k_i}{2^{j_i}}-x_i\right| ^{\alpha _i}}{2^{l_i(\alpha _i+1)}}\) if \(n_i 2^{l_i-j_i}\le \left| \frac{k_i}{2^{j_i}}-x_i\right| \), and otherwise by \(C \frac{2^{-\alpha _i j_i}}{2^{l_i}}\). Thus, summing up over \((l_{1}, \ldots ,l_{d})\), we get (34).

2. Let us prove the converse part. Since there exists \(\delta >0\) such that \(F \in C^\delta ({\mathbb {R}}^d)\), for all \({\mathbf {j}} \in {\mathbb {N}}_0^{d}\) and all \({\mathbf {k}}\in {\mathbb {Z}}^{d}\)

$$\begin{aligned} |C_{{\mathbf {j}},{\mathbf {k}}}|= & {} 2^{|{\mathbf {j}}|} |\int (F({\mathbf {y}})-F(k_{1}2^{-j_{1}}, y_2, \ldots ,y_d)) {\varPsi }_{{\mathbf {j}},{\mathbf {k}}}({\mathbf {y}}) \;d{\mathbf {y}}| \\\le & {} C 2^{|{\mathbf {j}}|} \int |y_1-k_{1}2^{-j_{1}}|^\delta |{\varPsi }_{{\mathbf {j}},{\mathbf {k}}}({\mathbf {y}})| \;d{\mathbf {y}}\\\le & {} C 2^{j_1} \int |y_1-k_{1}2^{-j_{1}}|^\delta |\psi _{j_1,k_1}(y_1)| \;dy_1 \;\; \prod _{i=2}^{d} 2^{j_i} \int |\psi _{j_i,k_i}(y_i)| \;dy_i\;. \end{aligned}$$

Using the localization of the wavelet \(\psi \)

Analogously, for all \(i \in \{2,\ldots ,d\}\)

Relations (38) and (39) imply that

Put \(\delta ' = \delta /d\). Relations (34) and (40) yield

Let \(\varvec{\epsilon }=(\epsilon _{1},\ldots ,\epsilon _{d}) > {\mathbf {0}}\). Let \({\mathbf {y}}\) and \({\mathbf {h}}=(h_{1}, \cdots ,h_{d})\in {\mathbb {R}}^{d}\) be such that \([{\mathbf {y}},{\mathbf {y}}+{\mathbf {n}}{\mathbf {h}}]\subset B({\mathbf {x}},\varvec{\epsilon })\).

It follows that

where for \(x,h,y \in {\mathbb {R}}\)

Let us show that there exists \(C>0\) such that

Let J be the unique integer such that \(2^{-J}\le \left| h\right| < 2^{-J+1}\). Split \(R_{\alpha ,n}[x](h,y)\) as

Let us first bound (44). Since \(\psi \) is r-smooth then for N and r large enough

For \(i\in \left\{ 0,\ldots ,n\right\} \)

It follows that

Summing up over \(j\ge J+1\), (44) is bounded by \(C(|y-x|^{\sigma \alpha }+|h|^{\sigma \alpha })\).

Let us now bound (43). Since \({\varDelta }_{h}^{n}\psi _{j,k}(y)={\varDelta }_{2^{j}h}^{n}\psi (2^{j}y-k)\) then using property 5.1 of [34]

- If \(k\notin [2^{j}y,2^{j}(y+nh)]\) and \(u\in [2^{j}y-k,2^{j}(y+nh)-k]\) then \(\left| u\right| \ge \min \left\{ \left| 2^{j} y-k\right| ,\left| 2^{j}(y+nh)-k\right| \right\} \). Since \(\psi \) is r-smooth, for N and r large enough

$$\begin{aligned}&\sum _{k\notin [2^{j}y,2^{j}(y+nh)]}(2^{-j}+\left| k2^{-j}-x\right| )^{\sigma \alpha }\left| {\varDelta }^n_{h}\psi _{j,k}(y)\right| \\&\qquad \le C 2^{nj}|h|^{n} \sum _{k\notin [2^{j}y,2^{j}(y+nh)]} \left( \frac{(2^{-\sigma \alpha j}+\left| k2^{-j}-x\right| ^{\sigma \alpha })}{\left( 1+\left| 2^{j} y-k\right| \right) ^{N}}+\frac{(2^{-\sigma \alpha j}+\left| k2^{-j}-x\right| ^{\sigma \alpha })}{\left( 1+\left| 2^{j} (y+nh)-k\right| \right) ^{N}}\right) \\&\qquad \le C 2^{nj}|h|^{n} \sum _{k\in {\mathbb {Z}}} \left( \frac{(2^{-\sigma \alpha j}+\left| k2^{-j}-x\right| ^{\sigma \alpha })}{\left( 1+\left| 2^{j} y-k\right| \right) ^{N}} +\frac{(2^{-\sigma \alpha j}+\left| k2^{-j}-x\right| ^{\sigma \alpha })}{\left( 1+\left| 2^{j} (y+nh)-k\right| \right) ^{N}}\right) \\&\qquad \le C 2^{nj}|h|^{n}(2^{-\sigma \alpha j}+|y-x|^{\sigma \alpha }+|h|^{\sigma \alpha }) \quad \text{(as in (45) and (46))}. \end{aligned}$$

Since \(j\le J\) and \(2^{-J } \le |h| < 2^{-J+1}\), then

$$\begin{aligned}&\sum _{k\notin [2^{j}y,2^{j}(y+nh)]}(2^{-j}+\left| k2^{-j}-x\right| )^{\sigma \alpha }\left| {\varDelta }^n_{h}\psi _{j,k}(y)\right| \\&\quad \le C 2^{nj}|h|^{n}(2^{-\sigma \alpha j}+|y-x|^{\sigma \alpha }). \end{aligned}$$

- If \(k\in [2^{j}y,2^{j}(y+nh)]\) then \(\left| k2^{-j}-x\right| \le \left| k2^{-j}-y\right| + \left| y-x\right| \le n|h| + \left| y-x\right| \). It follows that

$$\begin{aligned}&\sum _{k\in [2^{j}y,2^{j}(y+nh)]}(2^{-j}+\left| k2^{-j}-x\right| )^{\sigma \alpha }\left| {\varDelta }_{h}^{n}\psi _{j,k}(y)\right| \\&\qquad \le C \sum _{k\in [2^{j}y,2^{j}(y+nh)]}(2^{-j\sigma \alpha }+(n|h|)^{\sigma \alpha }+\left| y-x\right| ^{\sigma \alpha })2^{nj}|h|^{n}. \end{aligned}$$

But there are about \(n2^{j}\left| h\right| \) integers k in \([2^{j}y,2^{j}(y+nh)]\). Since \(j\le J\) and \(2^{-J } \le |h| < 2^{-J+1}\), then

$$\begin{aligned}&\sum _{k\in [2^{j}y,2^{j}(y+nh)]}(2^{-j}+\left| k2^{-j}-x\right| )^{\sigma \alpha }\left| {\varDelta }_{h}^{n}\psi _{j,k}(y)\right| \\&\quad \le C \left( 2^{-j\sigma \alpha }+\left| y-x\right| ^{\sigma \alpha }\right) 2^{nj}|h|^{n}. \end{aligned}$$

Thus

The two sums (43) and (44) are bounded by \(C(|y-x|^{\sigma \alpha }+|h|^{\sigma \alpha })\). This yields (42).

By (41)

Consequently

and \(F \in {\mathfrak {L}}^{\varvec{\alpha '}}({\mathbf {x}})\) for all \(\varvec{\alpha '} < \varvec{\alpha }\). \(\square \)

Proof of Theorem 2

For \(d=2\)

For \(d=3\)

By induction, for any \(d \ge 2\), the quantity \({\varDelta }_{{\mathbf {y}}-{\mathbf {x}}}^{{\mathbf {1}}}F({\mathbf {x}}) - F({\mathbf {y}})\) is a linear combination of the values of F at some points of \({\mathbb {R}}^d\), where at least one component of each such point coincides with that of \({\mathbf {x}}\). Since \(\int _{\mathbb {R}}\psi (t) dt =0\), it follows that

Since \(F \in Lip^{\varvec{\alpha }}({\mathbf {x}})\) then

The localization of the wavelet \(\psi _{1}\) yields (35).

The proof of the converse part of Theorem 2 is contained in that of the converse part of Theorem 1. \(\square \)

2.2 Characterization with Hyperbolic Wavelet Leaders

We will now see that the \({\mathfrak {L}}^{\varvec{\alpha }}({\mathbf {x}})\) regularity of the function F is closely related to the rate of decay of its hyperbolic wavelet leaders; for any \({\mathbf {j}},{\mathbf {k}}\), let \(\varvec{\lambda }({\mathbf {j}},{\mathbf {k}})\) denote the dyadic rectangular parallelepiped

For \({\mathbf {j}}\in {\mathbb {N}}_0^d\), set

The hyperbolic wavelet leader \(d_{\varvec{\lambda }}\) associated with \(\varvec{\lambda }\) is defined as

where \(\varvec{\lambda }' \in \varvec{{\varLambda }}_{{\mathbf {j}}'}\) with \({\mathbf {j}}' \ge {\mathbf {j}}\).

Set

If \({\mathbf {x}}\in {\mathbb {R}}^d\), denote by \(\varvec{\lambda }_{{\mathbf {j}}}({\mathbf {x}})\) the unique dyadic rectangular parallelepiped in \(\varvec{{\varLambda }}_{\mathbf {j}}\) that contains \({\mathbf {x}}\).
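To make the dyadic objects above concrete, here is a minimal sketch under stated assumptions. It takes \(\varvec{\lambda }({\mathbf {j}},{\mathbf {k}})\) to be the product of the intervals \([k_i2^{-j_i},(k_i+1)2^{-j_i})\), and it assumes the usual leader convention, namely the supremum of \(|C_{\varvec{\lambda }'}|\) over \(\varvec{\lambda }' \subset \varvec{\lambda }\) with \({\mathbf {j}}' \ge {\mathbf {j}}\); the displayed definitions are not reproduced in this excerpt, so both are assumptions. The function names are hypothetical, and the supremum runs over a finite dictionary of coefficients only.

```python
import math
from typing import Dict, Sequence, Tuple

Index = Tuple[Tuple[int, ...], Tuple[int, ...]]  # (j, k)


def dyadic_index(x: Sequence[float], j: Sequence[int]) -> Tuple[int, ...]:
    """Return k such that x_i lies in [k_i 2^-j_i, (k_i + 1) 2^-j_i) for every i."""
    return tuple(math.floor(xi * 2**ji) for xi, ji in zip(x, j))


def contains(j, k, jp, kp) -> bool:
    """True if the dyadic rectangle (jp, kp) is included in (j, k), with jp >= j componentwise."""
    return all(
        jpi >= ji and (kpi >> (jpi - ji)) == ki
        for ji, ki, jpi, kpi in zip(j, k, jp, kp)
    )


def leader(coeffs: Dict[Index, float], j, k) -> float:
    """Assumed leader: sup of |C| over the stored rectangles included in (j, k)."""
    return max(
        (abs(c) for (jp, kp), c in coeffs.items() if contains(j, k, jp, kp)),
        default=0.0,
    )


if __name__ == "__main__":
    coeffs = {((1, 1), (0, 1)): 0.5, ((2, 3), (1, 6)): -2.0, ((2, 3), (3, 6)): 4.0}
    print(dyadic_index((0.3, 0.8), (2, 3)))
    print(leader(coeffs, (1, 1), (0, 1)))
```

The right shift implements the fact that a finer dyadic interval sits inside a coarser one exactly when the coarser index is obtained by dropping the extra binary digits.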

Set

The following theorem gives an almost-characterization of the \({\mathfrak {L}}^{\varvec{\alpha }}({\mathbf {x}})\) regularity of the function F by decay conditions on its hyperbolic wavelet leaders.

Theorem 3

Let \(\varvec{\alpha }=(\alpha _{1}, \ldots , \alpha _{d}) > {\mathbf {0}}\) and \({\mathbf {x}}=(x_{1}, \ldots ,x_{d})\in {\mathbb {R}}^{d}\).

1. If \(F \in {\mathfrak {L}}^{\varvec{\alpha }}({\mathbf {x}})\) then there exists \(C>0\) such that

$$\begin{aligned} \forall \; {\mathbf {j}}=(j_{1},\ldots , j_{d}) \in {\mathbb {N}}_0^d \qquad d_{{\mathbf {j}}}({\mathbf {x}}) \le C 2^{-\displaystyle \sum _{i=1}^d \alpha _i j_{i}}. \end{aligned}$$ (53)

2. Conversely, if F is uniformly Hölder and if (53) holds, then \(F \in {\mathfrak {L}}^{\varvec{\alpha '}}({\mathbf {x}})\) for all \(\varvec{\alpha '} < \varvec{\alpha }\).

Proof of Theorem 3

The proof follows the same steps and arguments as the one in [12].

1. Let \(F \in {\mathfrak {L}}^{\varvec{\alpha }}({\mathbf {x}})\) and let \({\mathbf {j}}\ge {\mathbf {1}}\). If \(\varvec{\lambda }' \subset 3 \varvec{\lambda }_{{\mathbf {j}}}({\mathbf {x}})\) then \({\mathbf {j}}' \ge {\mathbf {j}}-{\mathbf {1}}\) and \(\left| k_i'2^{-j_i'}-x_{i}\right| \le C 2^{-j_i}\) for all \(i \in \{1,\ldots ,d\}\). Hence (34) yields (53).

2. Conversely, assume that F is uniformly Hölder and (53) holds. Let \(j_i' \ge 0\) be given. If \(\lambda '_i=[k_i'2^{-j_i'}, (k_i'+1)2^{-j_i'})\), denote by \(\lambda _i=[k_i 2^{-j_i}, (k_i+1)2^{-j_i})\) the dyadic interval defined as follows:

  - if \(\lambda _i' \subset 3 \lambda _{j_i'}(x_i)\), then \(\lambda _i=\lambda _{j_i'}(x_i)\) and \(j_i=j_i'\);

  - otherwise, set \(j_i = \sup \{ l : \lambda _i' \subset 3 \lambda _{l}(x_i)\}\) and \(\lambda _i = \lambda _{j_i}(x_i)\); it follows that there exists \(C>0\) such that \(\displaystyle \frac{1}{C} 2^{-j_i} \le |k_i'2^{-j_i'}-x_i| \le C 2^{-j_i}\).

If \({\mathbf {j}}' \ge {\mathbf {0}}\), relation (53) implies that

$$\begin{aligned} |C_{{\mathbf {j}}',{\mathbf {k}}'}| \le C \prod _{i=1}^d \left( 2^{-j'_{i} \alpha _i}+|k'_i2^{-j'_i}-x_i|^{\alpha _i}\right) . \end{aligned}$$

\(\square \)

3 Dimension Prints of Sets of Level Rectangular Pointwise Regularities

We will obtain a numerical procedure that yields information on the dimension print of sets of level rectangular pointwise regularities, expressed in terms of hyperbolic wavelet leaders.

Definition 7

Let F be as in (33). Let \(p<0\) and \(\varvec{\beta }=(\beta _1, \ldots , \beta _d) \in {\mathbb {R}}^d\). We say that F belongs to the anisotropic oscillation space \(O_p^{\varvec{\beta }}\) if \((C_\lambda )\) is bounded and

The presence of \(\varepsilon \) is mandatory for functions which have very small \(d_{\varvec{\lambda }}\)’s. Note that the zero function does not belong to \(O_p^{\varvec{\beta }}\) (since \(d_{\varvec{\lambda }}^{p} = \infty \)).

For \( \varvec{\alpha }> {\mathbf {0}}\), the set of \(\varvec{\alpha }\) level rectangular pointwise regularity of F is defined as

Theorem 4

Let F be as in (33). Let \(p<0\) and \(\varvec{\beta }=(\beta _1, \ldots , \beta _d) \in {\mathbb {R}}^d\). Assume that F belongs to the anisotropic oscillation space \(O_p^{\varvec{\beta }}\). Let \(\varvec{\alpha }> {\mathbf {0}}\). Then \(print \; {\mathcal {B}}_{\varvec{\alpha }}\) is included in the set \(G_{\varvec{\alpha },\varvec{\beta },p}\) of \({\mathbf {t}}\ge {\mathbf {0}}\) such that either \(t_d \le \displaystyle \max _{n \in {\mathcal {D}}} \xi _{n}\), or \( t_{d-1} + t_d \le \displaystyle \max _{n_1\ne n_2} (\xi _{n_1} + \xi _{n_2}), \ldots \), or \( t_2 + \cdots + t_{d} \le \displaystyle \max _{n_1\ne \cdots \ne n_{d-1}} (\xi _{n_1} + \cdots + \xi _{n_{d-1}})\) or \( t_1 + \cdots + t_{d} \le \xi _{1} + \cdots + \xi _{d}\), where \(\xi _i= (\alpha _i-\beta _i)p+1\).

Proof

Let \(\varvec{\alpha }> {\mathbf {0}}\). If \(F \in {\mathfrak {L}}^{\varvec{\alpha }}({\mathbf {x}})\) then

Let \(p<0\). Then

Let \({\varOmega }_{p, C,\varvec{\alpha }}\) be the set of points \({\mathbf {x}}\) satisfying (56). Then

where the union can be taken to be countable. Thanks to the countable stability of the print dimension

Clearly

with

For \({\mathbf {j}}\ge {\mathbf {0}}\), let \( N_{p, C,{\mathbf {j}}, \varvec{\alpha }}\) be the cardinality of \({\varOmega }_{p, C, {\mathbf {j}},\varvec{\alpha }}\).

Since \(F \in O_p^{\varvec{\beta }}\) then (54) implies that

Let \(\varepsilon >0\). Let \(0<\delta <1\) with \( \delta < 2^{- J+1}\). Let \( j_0 \ge J\) be the unique integer such that \(2^{-j_0} \le \delta < 2^{-j_0+1}\). Choose a \(\delta \)-cover \({\mathcal {C}}_\delta \) of \({\varOmega }_{p, C, \varvec{\alpha }}\) by rectangular parallelepipeds \(\varvec{\lambda } \in {\varOmega }_{p, C, {\mathbf {j}},\varvec{\alpha }}\) with \({\mathbf {j}}\ge j_0 {\mathbf {1}}\).

Let \({\mathbf {t}}=(t_1, \ldots ,t_d) \ge {\mathbf {0}}\). Clearly

Clearly the last series is the sum over all arrangements of the form \(j_{i_d} \ge j_{i_{d-1}} \ge \cdots \ge j_{i_2} \ge j_{i_1}\). For such a fixed arrangement, \(l_n = 2^{-j_{i_n}}\) for all \(n \in {\mathcal {D}}\) and by (58)

whenever \(t_d> \xi _{i_d}, t_{d-1} + t_d> \xi _{i_{d-1}} + \xi _{i_d}, \ldots , t_2 + \cdots + t_{d} > \xi _{i_2} + \cdots + \xi _{i_{d}}\) and \( t_1 + \cdots +t_d > \xi _{1} + \cdots + \xi _{d}\).

Therefore by (59)

whenever \(t_d> \displaystyle \max _{n \in {\mathcal {D}}} \xi _{n}, t_{d-1} + t_d> \displaystyle \max _{n_1\ne n_2} (\xi _{n_1} + \xi _{n_2}), \cdots , t_2 + \cdots + t_{d} > \displaystyle \max _{n_1\ne \cdots \ne n_{d-1}} (\xi _{n_1} + \cdots + \xi _{n_{d-1}})\) and \( t_1 + \cdots + t_{d} > \xi _{1} + \cdots + \xi _{d}\).

The information we have obtained about \(print \; {\varOmega }_{p, C,\varvec{\alpha }}\) does not depend on C. Hence (57) completes the proof. \(\square \)
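The membership conditions defining the set \(G_{\varvec{\alpha },\varvec{\beta },p}\) in Theorem 4 can be sketched in code. This is an illustrative reading only: it interprets \(\max _{n_1\ne \cdots \ne n_m}\) as a maximum over pairwise distinct indices, so that the bound on \(t_{d-m+1}+\cdots +t_d\) is the sum of the m largest \(\xi _i\). The function names `xi_from` and `in_G` are hypothetical.

```python
from typing import List, Sequence


def xi_from(alpha: Sequence[float], beta: Sequence[float], p: float) -> List[float]:
    """Return xi_i = (alpha_i - beta_i) * p + 1 as in Theorem 4."""
    return [(a - b) * p + 1 for a, b in zip(alpha, beta)]


def in_G(t: Sequence[float], xi: Sequence[float]) -> bool:
    """Test whether t >= 0 satisfies at least one of the d inequalities of Theorem 4."""
    d = len(t)
    if any(ti < 0 for ti in t):
        return False
    xi_sorted = sorted(xi, reverse=True)
    for m in range(1, d + 1):
        tail = sum(t[d - m:])        # t_{d-m+1} + ... + t_d
        bound = sum(xi_sorted[:m])   # largest sum of m distinct xi's
        if tail <= bound:
            return True
    return False


if __name__ == "__main__":
    print(in_G((0.0, 1.0), (1.0, 0.5)))
    print(in_G((0.0, 0.0), (-1.0, -1.0)))
```

When every \(\xi _i\) is negative, no \({\mathbf {t}}\ge {\mathbf {0}}\) passes the test, which matches the way the emptiness of \(G(\varvec{\xi })\) is used later for \(\varvec{\xi }< {\mathbf {0}}\).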

For \(p<0\), denote by \(HD_p\) the p-hyperbolic domain of F, that is

Corollary 1

4 Examples of Anisotropic Selfsimilar Cascade Wavelet Series

We will apply Theorem 4 and Corollary 1 to some examples of anisotropic selfsimilar cascade wavelet series, written as the superposition of similar anisotropic structures at different scales, reminiscent of turbulence or cascade models. Here the anisotropy corresponds to a Sierpinski carpet K. In Ref. [8], it was proved that the classical multifractal formalism may fail: contrary to the Besov smoothness, the Hölder spectrum depends on the geometric disposition of the selected boxes \((\mathfrak {R}_\omega )_{\omega \in A}\) in the construction of K (see below). To avoid this drawback, we will prove that both the p-hyperbolic domain \(HD_p\) of F and the dimension prints of sets of level rectangular pointwise regularities depend on the geometric disposition of the selected boxes \((\mathfrak {R}_\omega )_{\omega \in A}\).

4.1 Construction of Anisotropic Selfsimilar Cascade Wavelet Series

Let \(S_1\) and \(S_2\) be two positive integers such that \( S_1<S_2\). Put \(s_1=2^{S_1}\) and \(s_2=2^{S_2}\). We divide the unit square \({\mathfrak {R}}=[0,1]^2\) into a uniform grid of rectangles of sides \(1/s_1\) and \(1/s_2\). Let A be a subset of \( \{0,1,\ldots ,s_1-1\} \times \{0,1,\ldots ,s_2-1\}\) that contains at least two elements. For \(\omega =(a,b) \in A\), the contraction \(\displaystyle D_{\omega }(x_{1},x_{2})= \left( \frac{x_1+a}{s_1}, \frac{x_2+b}{s_2}\right) \) maps the unit square \({\mathfrak {R}}\) into the rectangle

A Sierpinski carpet K (see [8, 36, 48] and references therein) is the unique non-empty compact set (see [24]) that satisfies

Let \(L \in {\mathbb {N}}\) and \(\varvec{\omega }= (\omega _{1},\ldots ,\omega _{L}) \in A^{L}\), with \(\omega _{r}=(a_{r},b_{r})\). Put

Then

where

with

The Sierpinski carpet is given by

Let \((\gamma _\omega )_{\omega \in A}\) be scalars with \(0<|\gamma _\omega | <1\). For \({\mathbf {x}}=(x_1,x_2) \in {\mathbb {R}}^2\), put \({\varPsi }({\mathbf {x}})=\psi (x_1) \psi (x_2)\) where \(\psi =\psi _1\) is r-smooth. The Sierpinski cascade function adapted to the subdivision A satisfies the selfsimilar equation

Define

For \(L \in {\mathbb {N}}\) and \(\varvec{\omega }= (\omega _{1},\ldots ,\omega _{L}) \in A^{L}\), put

The following result can be deduced from Proposition 1 in [8].

Proposition 1

The series

is the unique solution in \(L^{1}({\mathfrak {R}})\) for Eq. (68).

If furthermore \( s_2^{-r}< |\gamma |_{\max } \) then F is uniformly Hölder.
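The geometry of the construction can be illustrated with a short sketch. It uses only the contraction \(D_{\omega }\) written above; the function names are hypothetical and the digit set A in the example is an arbitrary choice made for illustration. The set of level-L boxes is produced by applying every \(D_\omega \), \(\omega \in A\), to every box of the previous level, so the result does not depend on how the words \(\varvec{\omega }\) are ordered.

```python
from fractions import Fraction
from typing import Iterable, Set, Tuple

Rect = Tuple[Fraction, Fraction, Fraction, Fraction]  # (x_lo, x_hi, y_lo, y_hi)


def contract(rect: Rect, omega: Tuple[int, int], s1: int, s2: int) -> Rect:
    """Image of a rectangle under D_omega(x1, x2) = ((x1 + a)/s1, (x2 + b)/s2)."""
    a, b = omega
    x0, x1, y0, y1 = rect
    return ((x0 + a) / s1, (x1 + a) / s1, (y0 + b) / s2, (y1 + b) / s2)


def level_boxes(A: Iterable[Tuple[int, int]], s1: int, s2: int, L: int) -> Set[Rect]:
    """All boxes obtained from the unit square after L rounds of contractions."""
    digits = list(A)
    boxes: Set[Rect] = {(Fraction(0), Fraction(1), Fraction(0), Fraction(1))}
    for _ in range(L):
        boxes = {contract(r, w, s1, s2) for r in boxes for w in digits}
    return boxes


if __name__ == "__main__":
    # Example with S_1 = 1, S_2 = 2, hence s_1 = 2 and s_2 = 4.
    for box in sorted(level_boxes([(0, 0), (1, 3)], 2, 4, 2)):
        print(box)
```

Exact rational arithmetic keeps the box endpoints on the dyadic grid, which is convenient when comparing them with dyadic rectangles.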

Clearly if \(\omega _l=(a_l,b_l)\) and \(\varvec{\omega } \in A^{L}\) then

Consider the separated open set condition “SOSC”

If \( {\mathbf {x}}\notin K \) then there exists a neighborhood \(\vartheta ({\mathbf {x}})\) of \({\mathbf {x}}\) and \(L \in {\mathbb {N}}\) such that

It follows that \(F \in {\mathfrak {L}}^{\varvec{\alpha }}({\mathbf {x}})\) for all \( \varvec{\alpha } < r {\mathbf {1}}\).

On the other hand, from the “SOSC” (72), any \({\mathbf {x}}\in K\) has a unique expansion

4.2 Estimation of Hyperbolic Wavelet Leaders

For \(j \in {\mathbb {N}}\) and \(k\in \left\{ 0,\ldots ,2^{j}-1\right\} \), put \(\lambda _{j,k}=\displaystyle \left[ \frac{k}{2^{j}},\frac{k+1}{2^{j}}\right) \). Denote by \({\varLambda }_{j}\) the set of dyadic intervals \(\lambda _{j,k}\) with \(k\in \left\{ 0,\ldots ,2^{j}-1\right\} \). For \(\lambda =\lambda _{j,k}\in {\varLambda }_{j}\) there exists a unique \((\epsilon _{i}(\lambda ))_{1\le i\le j}\) in \(\left\{ 0,1\right\} ^j\) such that

Let \(S \in {\mathbb {N}}\) and \(s =2^S\). For \(j \in {\mathbb {N}}\) put

where [.] denotes the integer part. By regrouping the terms in (75), there exists a unique \((\alpha _{r}(\lambda ,S))_{1\le r\le m}\) in \(\left\{ 0,\ldots ,s-1\right\} ^{m}\) such that

where

For \(a\in \left\{ 0,\ldots ,s-1\right\} \) and \(r\ge 1\), denote by \((\epsilon _{i}(a,r,S))_{ i\in \left\{ (r-1)S+1,\ldots ,rS\right\} } \in \left\{ 0,1\right\} ^S\) the sequence satisfying

and for \((r-1)S+1\le j\le rS\), put

Let \(\lambda \in {\varLambda }_{j}\) and \(\lambda '\in {\varLambda }_{j'}\) with \(j\le j'\). Clearly

Then, from above, the following result holds.

Lemma 1

Let \(\lambda \in {\varLambda }_{j}\) and \(m=\displaystyle [\frac{j}{ S} ]\). Then \(\lambda ' \subset \lambda \) if and only if

Let \({\mathbf {j}}=(j_1,j_2) \in {\mathbb {N}}^2\). Let \(\varvec{\lambda }=\lambda _{1}\times \lambda _{2}\in \varvec{{\varLambda }}_{{\mathbf {j}}}\). Let \(\varvec{\omega }= (\omega _{1},\dots ,\omega _{L}) \in A^{L}\), \(\omega _{l}=(a_{l},b_{l})\). For \(i \in \{1,2\}\), let \(m_{i}=\displaystyle [\frac{j_i}{ S_i} ]\). Then Lemma 1 yields the following result.

Lemma 2

Put

Definition 8

We say that \(\varvec{\lambda }\) is \(A^{*}-\)admissible if there exists \(j\ge 1\) and \(\varvec{\omega } \in A^{j}\) such that \({\mathfrak {R}}_{\varvec{\omega }}\subset \varvec{\lambda }\).

Lemma 2 yields the following result.

Proposition 2

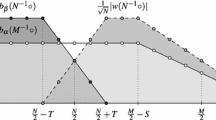

Let \(\varvec{\lambda } \in {\varLambda }_{j_{1}}\times {\varLambda }_{j_{2}}\). Put \(m=\inf \left\{ m_{1},m_{2}\right\} \). Suppose that \(\varvec{\lambda }\) is \(A^{*}-\)admissible. Put

Let j be the smallest integer satisfying \(j\ge \max \left\{ \frac{j_{1}}{S_1},\frac{j_{2}}{S_2}\right\} \). Then

where \(M \approx N\) means that there exists a constant \(C \ge 1\) such that \(N/C \le M \le CN\).
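The digit bookkeeping of Sect. 4.2 can be sketched as follows. This is a hedged illustration: since the displayed expansions are not reproduced here, it assumes the natural convention \(k2^{-j}=\sum _{i=1}^{j}\epsilon _i2^{-i}\) for the binary digits, and it groups the first mS binary digits into m digits in base \(s=2^S\), with \(m=[j/S]\). The function names are hypothetical.

```python
from typing import List


def binary_digits(k: int, j: int) -> List[int]:
    """Digits (eps_1, ..., eps_j) with k / 2**j = sum_i eps_i * 2**(-i)."""
    return [(k >> (j - i)) & 1 for i in range(1, j + 1)]


def base_s_digits(k: int, j: int, S: int) -> List[int]:
    """First m = j // S digits of k / 2**j in base s = 2**S."""
    m = j // S
    top = k >> (j - m * S)  # integer formed by the first m*S binary digits
    s = 1 << S
    return [(top >> (S * (m - r))) & (s - 1) for r in range(1, m + 1)]


def is_subinterval(jp: int, kp: int, j: int, k: int) -> bool:
    """True if [kp 2^-jp, (kp+1) 2^-jp) is included in [k 2^-j, (k+1) 2^-j)."""
    return jp >= j and (kp >> (jp - j)) == k


if __name__ == "__main__":
    print(binary_digits(13, 4))     # 13/16 = 1/2 + 1/4 + 1/16
    print(base_s_digits(13, 4, 2))  # 13/16 = 3/4 + 1/16
    print(is_subinterval(4, 13, 2, 3))
```

Inclusion of dyadic intervals then amounts to the coarser interval's digits being a prefix of the finer one's, which is the kind of criterion the lemmas above rely on.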

4.3 Two Geometric Cases

Let \(A_{1}=\left\{ a \;:\; \exists b \;;\; (a,b)\in A\right\} \) and \(B_{1}=\left\{ b \;:\; \exists a \;\;;\; (a,b)\in A\right\} \).

If \(\frac{j_{1}}{S_1}\ge \frac{j_{2}}{S_2}\) then using Proposition 2

If \(\frac{j_{1}}{S_1}\le \frac{j_{2}}{S_2}\) then using Proposition 2

We will prove that the p-hyperbolic domain \(HD_p\) of F and the dimension prints of sets of level rectangular pointwise regularities depend on the geometric disposition of boxes \(\mathfrak {R}_\omega \) with \(\omega \in A\). To this end, it suffices to consider two geometric configurations. In the first, each row and each column of the grid contains at most one box \(\mathfrak {R}_\omega \) with \(\omega \in A\). In the second, a single column contains all boxes \(\mathfrak {R}_\omega \) with \(\omega \in A\).

4.4 Case 1: Each Column and Each Row of the Grid Contains at Most One Box \(\mathfrak {R}_\omega \) with \(\omega \in A\)

4.4.1 p-Hyperbolic Domain

For \(q \in {\mathbb {R}}\), define \(\tau (q)\) by \( \displaystyle \sum _{\omega \in A} |\gamma _{\omega }|^{q} =s_1^{-\tau (q)}\). Let \(\sigma = S_1/S_2\).
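The scaling function \(\tau \) is explicit once the weights are known. The sketch below evaluates \(\tau (q)=-\log _{s_1}\sum _{\omega \in A}|\gamma _\omega |^{q}\); the function name `tau` and the sample weights are hypothetical choices made for illustration.

```python
import math
from typing import Sequence


def tau(q: float, gammas: Sequence[float], s1: int) -> float:
    """Solve sum_omega |gamma_omega|**q = s1**(-tau(q)) for tau(q)."""
    return -math.log(sum(abs(g) ** q for g in gammas)) / math.log(s1)


if __name__ == "__main__":
    gammas, s1 = [0.5, 0.25], 2  # two selected boxes, s_1 = 2
    for q in (-2.0, -1.0, 0.0, 1.0):
        print(q, tau(q, gammas, s1))
```

Since \(0<|\gamma _\omega |<1\), the sum decreases as q grows, so \(\tau \) is increasing, and \(\tau (0)=-\log _{s_1}\#A\) is negative because A has at least two elements.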

Proposition 3

Assume that each column and each row of the grid contains at most one box \(\mathfrak {R}_\omega \) with \(\omega \in A\). Let \(p<0\). Then \(\varvec{\beta }=(\beta _1,\beta _2)\) belongs to the p-hyperbolic domain \(HD_p\) of F iff

and

Proof

Let \({\mathbf {j}}= (j_1,j_2) \ge {\mathbf {0}}\). For \(i \in \{1,2\}\), let \(m_{i}=\displaystyle [\frac{j_i}{ S_i} ]\).

- Case \(\frac{j_{1}}{S_1}\le \frac{j_{2}}{S_2}\). Then by (84)

$$\begin{aligned} \sum _{\varvec{\lambda }\in {\varLambda }_{j_{1}}\times {\varLambda }_{j_{2}}} d_{\varvec{\lambda }}^{p} \approx s_1^{-\tau (p) m_2}, \end{aligned}$$

and

$$\begin{aligned} 2^{- |{\mathbf {j}}| + p \displaystyle \sum _{i=1}^2 \beta _i j_i } \sum _{\varvec{\lambda }\in {\varLambda }_{j_{1}}\times {\varLambda }_{j_{2}}} d_{\varvec{\lambda }}^{p}\approx 2^{-S_1m_1-S_2m_2+S_1m_1\beta _1p+S_2m_2 \beta _2p-S_1\tau (p) m_2}. \end{aligned}$$

We have

$$\begin{aligned} \forall \; m_1 \le m_2 \quad 2^{-S_1m_1-S_2m_2+S_1m_1\beta _1p+S_2m_2 \beta _2p-S_1\tau (p) m_2} \le C, \end{aligned}$$

if

$$\begin{aligned} \forall \; m_1 \le m_2 \quad -S_1m_1-S_2m_2+S_1m_1\beta _1p+S_2m_2 \beta _2p-S_1\tau (p) m_2 \le \log C. \end{aligned}$$

Fix \(m_1\). Letting \(m_2\) grow, the coefficient of \(m_2\) must satisfy

$$\begin{aligned} -S_2+S_2 \beta _2p-S_1\tau (p) \le 0. \end{aligned}$$

Hence (86) holds.

In that case

$$\begin{aligned}&\sup _{m_2 \ge m_1} -S_1m_1-S_2m_2+S_1m_1\beta _1p+S_2m_2 \beta _2p-S_1\tau (p) m_2 \\&\qquad = -S_1m_1-S_2m_1+S_1m_1\beta _1p+S_2m_1 \beta _2p-S_1\tau (p) m_1. \end{aligned}$$

So the coefficient of \(m_1\) must satisfy

$$\begin{aligned} -S_1-S_2+S_1\beta _1p+S_2\beta _2p-S_1\tau (p) \le 0 \;\; \forall \; p<0. \end{aligned}$$

- Case \(\frac{j_{1}}{S_1}\ge \frac{j_{2}}{S_2}\). Then by (83)

$$\begin{aligned} \sum _{\varvec{\lambda }\in {\varLambda }_{j_{1}}\times {\varLambda }_{j_{2}}} d_{\varvec{\lambda }}^{p} \approx s_1^{-\tau (p) m_1}, \end{aligned}$$

and

$$\begin{aligned} 2^{- |{\mathbf {j}}| + p \displaystyle \sum _{i=1}^2 \beta _i j_i } \sum _{\varvec{\lambda }\in {\varLambda }_{j_{1}}\times {\varLambda }_{j_{2}}} d_{\varvec{\lambda }}^{p} \approx 2^{-S_1m_1-S_2m_2+S_1m_1\beta _1p+S_2m_2 \beta _2p -S_1\tau (p) m_1}. \end{aligned}$$

We have

$$\begin{aligned} \forall \; m_1 \ge m_2 \quad 2^{-S_1m_1-S_2m_2+S_1m_1\beta _1p+S_2m_2 \beta _2p -S_1\tau (p) m_1} \le C, \end{aligned}$$

if

$$\begin{aligned} \forall \; m_1 \ge m_2 \quad -S_1m_1-S_2m_2+S_1m_1\beta _1p+S_2m_2 \beta _2p -S_1\tau (p) m_1 \le \log C. \end{aligned}$$

Fix \(m_2\). Letting \(m_1\) grow, the coefficient of \(m_1\) must satisfy

$$\begin{aligned} -S_1+S_1\beta _1p-S_1\tau (p) \le 0. \end{aligned}$$

Hence (85) holds.

In that case

$$\begin{aligned}&\sup _{m_1 \ge m_2} -S_1m_1-S_2m_2+S_1m_1\beta _1p+S_2m_2 \beta _2p -S_1\tau (p) m_1 \\&\quad = -S_1m_2-S_2m_2+S_1m_2\beta _1p+S_2m_2 \beta _2p -S_1\tau (p) m_2. \end{aligned}$$

So the coefficient of \(m_2\) must satisfy

$$\begin{aligned} -S_1-S_2+S_1\beta _1p+S_2 \beta _2p -S_1\tau (p) \le 0 \;\; \forall \; p<0. \end{aligned}$$

\(\square \)
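As a reading aid, the two single-index coefficient conditions obtained in the proof can be normalised by dividing by \(S_1\) and \(S_2\) respectively and using \(\sigma =S_1/S_2\). This is a sketch derived only from the inequalities displayed in the proof; it is not claimed to reproduce the exact form in which (85) and (86) are stated.

$$\begin{aligned} -S_1+S_1\beta _1p-S_1\tau (p) \le 0 \;&\Leftrightarrow \; \beta _1 p \le 1+\tau (p),\\ -S_2+S_2\beta _2p-S_1\tau (p) \le 0 \;&\Leftrightarrow \; \beta _2 p \le 1+\sigma \tau (p). \end{aligned}$$

With \(\xi _i=(\alpha _i-\beta _i)p+1\) as in Theorem 4, these two inequalities read \(\xi _1 \ge \alpha _1p-\tau (p)\) and \(\xi _2 \ge \alpha _2p-\sigma \tau (p)\), which are the two quantities that appear repeatedly in the discussion of \(print \; {\mathcal {B}}_{\varvec{\alpha }}\).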

4.4.2 \(Print \; {\mathcal {B}}_{\varvec{\alpha }}\)

Let \(\xi _{1},\xi _{2}\in {\mathbb {R}}\). Then, by Proposition 3, there exist \(\varvec{\beta }=(\beta _1,\beta _2)\) in the p-hyperbolic domain \(HD_p\) of F, for \(p<0\), such that \(\xi _{i}=(\alpha _i-\beta _i)p+1\), \(i=1,2\) if

For \(p< 0\), denote by

Write \(G(\varvec{\xi })\) instead of \(G_{\varvec{\alpha },\varvec{\beta },p}\) in Theorem 4, i.e.,

Then thanks to Corollary 1

Clearly, if \(\xi _1 < 0\) and \(\xi _2 < 0\) then

if \(\xi _1 \le 0\) then

and if \(\xi _2 \le 0\) then

Recall that \(\tau \) is concave and strictly increasing. The following lemma is straightforward.

Lemma 3

The function \(k:p\mapsto \frac{\tau (p)}{p}\) is strictly increasing on \((-\infty ,0)\). Its range on \((-\infty ,0)\) is \(\left( \displaystyle \frac{H_{\max }}{\sigma }, \infty \right) \).

Corollary 2

If \(\alpha _{1} > \displaystyle \frac{H_{\max }}{\sigma }\) and \(\alpha _{2} > H_{\max }\) then \(print \; {\mathcal {B}}_{\varvec{\alpha }}=\emptyset \).

Proof

If \(\alpha _{1} > \displaystyle \frac{H_{\max }}{\sigma }\) and \(\alpha _{2} > H_{\max }\) then Lemma 3 implies that \(\alpha _{1}p-\tau (p)<0\) and \(\alpha _{2}p-\sigma \tau (p)<0\) for some \(p<0\). Thus there exist \(p<0\) and \(\varvec{\xi }\in D_{\varvec{\alpha },p}\) such that \(\varvec{\xi }< {\mathbf {0}}\). So Theorem 4 yields \(G(\varvec{\xi })=\emptyset \). Thus \(print \; {\mathcal {B}}_{\varvec{\alpha }}=\emptyset \). \(\square \)

Assume now that \(\varvec{\alpha }\ngtr \left( \displaystyle \frac{H_{\max }}{\sigma }, H_{\max }\right) \). Unfortunately, it is not easy to identify \(\displaystyle \bigcap _{p< 0\;,\;\varvec{\xi }\in D_{\varvec{\alpha },p}}G(\varvec{\xi })\).

- Assume that \(\alpha _{1} > \displaystyle \frac{H_{\max }}{\sigma }\) and \(\alpha _{2} \le H_{\max }\). Using Lemma 3, write \(\alpha _{1}= k ({\widetilde{p}}_{1})\), with \({\widetilde{p}}_{1} <0\). Then

$$\begin{aligned} \alpha _1 p - \tau (p) \le 0 \; \Leftrightarrow \; p \le {\widetilde{p}}_{1}. \end{aligned}$$

Thus for any \(p \le {\widetilde{p}}_{1}\)

$$\begin{aligned} \bigcap _{\varvec{\xi }\in D_{\varvec{\alpha },p},\; \xi _{1}\le 0}G(0,\xi _{2})=G\left( 0,\alpha _{2}p-\sigma \tau (p)\right) . \end{aligned}$$

Thus

$$\begin{aligned} \bigcap _{p< 0\;,\;\varvec{\xi }\in D_{\varvec{\alpha },p}}G(\varvec{\xi })&\subset \bigcap _{p \le {\widetilde{p}}_{1}}G\left( 0,\alpha _{2}p-\sigma \tau (p)\right) \\&=\left\{ {\mathbf {t}} \ge {\mathbf {0}}\;;\; t_{2}\le \displaystyle \inf _{ p \le {\widetilde{p}}_{1} } \left( \alpha _{2}p-\sigma \tau (p)\right) \right\} . \end{aligned}$$

- Assume that \(\alpha _{1} \le \displaystyle \frac{H_{\max }}{\sigma }\) and \(\alpha _{2} > H_{\max }\). Using Lemma 3, write \(\alpha _{2} = \sigma k ({\widetilde{p}}_{2})\), with \({\widetilde{p}}_{2} <0\). Then

$$\begin{aligned} \alpha _2 p - \sigma \tau (p) \le 0 \; \Leftrightarrow \; p \le {\widetilde{p}}_{2}. \end{aligned}$$

Thus for any \(p \le {\widetilde{p}}_{2}\)

$$\begin{aligned} \bigcap _{\varvec{\xi }\in D_{\varvec{\alpha },p},\; \xi _{2}\le 0}G(\xi _{1},0)=G\left( \alpha _{1}p-\tau (p),0\right) . \end{aligned}$$

It follows that

$$\begin{aligned} \bigcap _{p< 0\;,\;\varvec{\xi }\in D_{\varvec{\alpha },p}}G(\varvec{\xi })&\subset \bigcap _{p \le {\widetilde{p}}_{2}}G\left( \alpha _{1}p-\tau (p),0\right) \\&=\left\{ {\mathbf {t}} \ge {\mathbf {0}}\;;\; t_{2}\le \displaystyle \inf _{p \le {\widetilde{p}}_{2}} (\alpha _{1}p-\tau (p))\right\} . \end{aligned}$$

- If \(\alpha _{1} \le \displaystyle \frac{H_{\max }}{\sigma }\) and \(\alpha _{2} \le H_{\max }\) then \( \alpha _1 p - \tau (p) > 0\) and \( \alpha _2 p - \sigma \tau (p) > 0\) for all \(p<0\). We propose to give information on \(\displaystyle \bigcap _{p< 0\;,\;\varvec{\xi }\in D_{\varvec{\alpha },p}}G(\varvec{\xi })\) using \(G({\widetilde{\varvec{\xi }}})\), where \(\widetilde{\varvec{\xi }}= ({\tilde{\xi }}_1, {\tilde{\xi }}_2) \in D_{\varvec{\alpha },{\tilde{p}}}\), with \({\tilde{p}} \le 0\), solves the minimization problem

$$\begin{aligned} {\tilde{\xi }}_1+ {\tilde{\xi }}_2=\inf _{p< 0\;,\;\varvec{\xi }\in D_{\varvec{\alpha },p} } (\xi _{1}+\xi _{2}). \end{aligned}$$ (88)

Two steps are needed to solve the previous optimisation problem.

  - We first fix \(p <0\) and minimize

$$\begin{aligned} {\tilde{\xi }}_1(p)+ {\tilde{\xi }}_2(p)=\inf _{\varvec{\xi }\in D_{\varvec{\alpha },p} } (\xi _{1}+\xi _{2}). \end{aligned}$$ (89)

Since the map \((\xi _1,\xi _2) \mapsto \xi _{1}+\xi _{2}\) is affine, the previous infimum is reached on the boundary of \(D_{\varvec{\alpha },p} \).

  - Next, we minimize

$$\begin{aligned} {\tilde{\xi }}_1+ {\tilde{\xi }}_2=\inf _{p <0}{\tilde{\xi }}_1(p)+ {\tilde{\xi }}_2(p). \end{aligned}$$ (90)

We can easily check that \(({\tilde{\xi }}_1(p), {\tilde{\xi }}_2(p)) =(\alpha _{1}p-\tau (p),\alpha _{2}p-\sigma \tau (p))\) and \(\widetilde{\varvec{\xi }}=(\alpha _{1}p_3-\tau (p_3),\alpha _{2}p_3-\sigma \tau (p_3))\) where \(p_{3}\) satisfies

$$\begin{aligned} \alpha _{1}p_{3}+\alpha _{2}p_{3}-(1+\sigma )\tau (p_{3})=\displaystyle \inf _{p<0} (\alpha _{1}p+\alpha _{2}p-(1+\sigma )\tau (p)). \end{aligned}$$

Since \(\alpha _{1}+\alpha _{2}\le (1+\sigma )\frac{H_{\max }}{\sigma }\) and \(\frac{H_{\max }}{\sigma }=\displaystyle \lim _{p\rightarrow -\infty }\tau '(p)=\displaystyle \sup _{p<0}\tau '(p)\), only two cases arise:

  - If \(\alpha _{1}+\alpha _{2}\le (1+\sigma )\tau '(0)\) then \(p_{3}=0\).

  - If \(\alpha _{1}+\alpha _{2}> (1+\sigma )\tau '(0)\) then \(p_{3}<0\) and \(\alpha _{1}+\alpha _{2}=(1+\sigma )\tau '(p_3)\).
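The two cases for \(p_3\) can be explored numerically. The following is a minimal sketch, assuming the definition \(\sum _{\omega \in A}|\gamma _\omega |^{q}=s_1^{-\tau (q)}\) from Sect. 4.4.1; the function names, the bisection strategy and the cut-off `p_min` are choices made here for illustration, not part of the paper. Since \(\tau \) is concave, \(p\mapsto \alpha _1+\alpha _2-(1+\sigma )\tau '(p)\) is nondecreasing, so a sign change on \((-\infty ,0)\) can be located by bisection.

```python
import math
from typing import Optional, Sequence


def tau_prime(p: float, gammas: Sequence[float], s1: int) -> float:
    """Derivative of tau(p) = -log_{s1}(sum |gamma|**p), computed in a numerically stable way."""
    logs = [math.log(abs(g)) for g in gammas]
    shift = max(p * l for l in logs)  # avoids overflow for very negative p
    weights = [math.exp(p * l - shift) for l in logs]
    return -sum(w * l for w, l in zip(weights, logs)) / (sum(weights) * math.log(s1))


def find_p3(alpha1: float, alpha2: float, sigma: float, gammas: Sequence[float],
            s1: int, p_min: float = -1024.0, tol: float = 1e-12) -> Optional[float]:
    """Return p3 = 0 in the first case, the root of alpha1 + alpha2 = (1 + sigma) tau'(p) otherwise.

    Returns None if no sign change is found above p_min (a hypothetical cut-off).
    """
    total = alpha1 + alpha2

    def h(p: float) -> float:
        return total - (1.0 + sigma) * tau_prime(p, gammas, s1)

    if h(0.0) <= 0.0:
        return 0.0
    lo, hi = -1.0, 0.0
    while h(lo) > 0.0:  # push the left end until h changes sign
        lo *= 2.0
        if lo < p_min:
            return None
    while hi - lo > tol:  # plain bisection on the monotone function h
        mid = 0.5 * (lo + hi)
        if h(mid) > 0.0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    gammas, s1, sigma = [0.5, 0.25], 2, 0.5
    print(find_p3(1.0, 1.0, sigma, gammas, s1))
    print(find_p3(1.8, 0.9, sigma, gammas, s1))
```

For these sample weights \(\tau '(0)=3/2\) and \(\sup _{p<0}\tau '(p)=2\), so the first call falls in the case \(p_3=0\) and the second in the case \(p_3<0\).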

4.5 Case 2: Only One Column Contains All Boxes \(\mathfrak {R}_\omega \) with \(\omega \in A\)

4.5.1 p-Hyperbolic Domain

Proposition 4

Assume that only one column contains all boxes \(\mathfrak {R}_\omega \) with \(\omega \in A\). Let \(p<0\). Then \(\varvec{\beta }=(\beta _1,\beta _2)\) belongs to the p-hyperbolic domain \(HD_p\) of F iff

and

4.5.2 \(print \; {\mathcal {B}}_{\varvec{\alpha }}\)

Let \(\xi _{1},\xi _{2}\in {\mathbb {R}}\). Then there exist \(\varvec{\beta }=(\beta _1,\beta _2)\) in the p-hyperbolic domain \(HD_p\) of F, for \(p<0\), such that \(\xi _{i}=(\alpha _i-\beta _i)p+1\), \(i=1,2\) iff the two following conditions are fulfilled

and

For \(p< 0\), denote by

We have

Corollary 3

If \(\alpha _{1}>\frac{H_{\min } }{\sigma }\) and \(\alpha _{2}>H_{\max }\) then \(print \; {\mathcal {B}}_{\varvec{\alpha }}=\emptyset \).

Proof

If \(\alpha _{1}>\frac{H_{\min } }{\sigma }\) and \(\alpha _{2}>H_{\max }\) then for some \(p<0\), we have \(\alpha _{1}p-\frac{H_{\min } }{\sigma }p<0\) and \(\alpha _{2}p-\sigma \tau (p)<0\). Thus there exists \(\varvec{\xi }\in D_{\varvec{\alpha },p}\) such that \(\varvec{\xi }< {\mathbf {0}}\), so that \(G(\varvec{\xi })=\emptyset \). Thus \(print \; {\mathcal {B}}_{\varvec{\alpha }}=\emptyset \). \(\square \)

Assume now that \(\varvec{\alpha }\ngtr ( \displaystyle \frac{H_{\min }}{\sigma }, H_{\max })\).

- If \(\alpha _{1}>\frac{H_{\min } }{\sigma }\) and \(\alpha _{2}\le H_{\max }\) then, for all \(p<0\), \(\alpha _{1}p-\frac{H_{\min } }{\sigma }p<0\) and

$$\begin{aligned} \bigcap _{\varvec{\xi }\in D_{\varvec{\alpha },p},\; \xi _{1}\le 0}G(0,\xi _{2})=G(0,\alpha _{2}p-\sigma \tau (p)). \end{aligned}$$

It follows that

$$\begin{aligned} \bigcap _{p< 0\;,\;\varvec{\xi }\in D_{\varvec{\alpha },p}}G(\varvec{\xi })&\subset \bigcap _{p<0 }G(0,\alpha _{2}p-\sigma \tau (p))\\&=\left\{ {\mathbf {t}}\ge {\mathbf {0}}\;;\; t_{2}\le \displaystyle \inf _{p<0} (\alpha _{2}p-\sigma \tau (p)) \right\} . \end{aligned}$$

- Assume that \(\alpha _{1}\le \frac{H_{\min } }{\sigma }\) and \(\alpha _{2}>H_{\max }\). Using Lemma 3, write \(\alpha _{2}=\sigma k ({\widetilde{p}}_{2})\), with \({\widetilde{p}}_{2} <0\). Then

$$\begin{aligned} \alpha _2 p - \sigma \tau (p) \le 0 \; \Leftrightarrow \; p \le {\widetilde{p}}_{2}. \end{aligned}$$

Thus for any \(p \le {\widetilde{p}}_{2}\)

$$\begin{aligned} \bigcap _{\varvec{\xi }\in D_{\varvec{\alpha },p},\; \xi _{2}\le 0}G(\xi _{1},0)=G(\alpha _{1}p-\frac{H_{\min } }{\sigma }p,0). \end{aligned}$$

Therefore

$$\begin{aligned} \bigcap _{p< 0\;,\;\varvec{\xi }\in D_{\varvec{\alpha },p}}G(\varvec{\xi })&\subset \bigcap _{p \le {\widetilde{p}}_{2}}G(\alpha _{1}p-\frac{H_{\min } }{\sigma }p,0)\\&=\left\{ {\mathbf {t}}\ge {\mathbf {0}}\;;\; t_{2}\le \displaystyle \inf _{p \le {\widetilde{p}}_{2}} (\alpha _{1}p-\frac{H_{\min } }{\sigma }p) \right\} . \end{aligned}$$

- If \(\alpha _{1}\le \frac{H_{\min } }{\sigma }\) and \(\alpha _{2}\le H_{\max }\) then \( \alpha _1 p - \frac{H_{\min } }{\sigma }p \ge 0\) and \( \alpha _2 p - \sigma \tau (p) > 0\) for all \(p<0\). We proceed as in (88) with steps (89) and (90). We can easily check that \(({\tilde{\xi }}_1(p), {\tilde{\xi }}_2(p)) =\left( \alpha _{1}p-\frac{H_{\min } }{\sigma }p,\alpha _{2}p-\sigma \tau (p)\right) \) and \(\widetilde{\varvec{\xi }}=(\alpha _{1}p_{3}-\frac{H_{\min } }{\sigma }p_{3},\alpha _{2}p_3-\sigma \tau (p_3))\) where \(p_{3}\) satisfies

$$\begin{aligned} \alpha _{1}p_{3}+\alpha _{2}p_{3}-\frac{H_{\min } }{\sigma }p_{3}-\sigma \tau (p_{3})=\displaystyle \inf _{p<0} \left( \alpha _{1}p+\alpha _{2}p-\frac{H_{\min } }{\sigma }p-\sigma \tau (p)\right) . \end{aligned}$$

Since \(\alpha _{1}+\alpha _{2}\le \frac{H_{\min } }{\sigma }+H_{\max }\), only two cases arise:

  - If \(\alpha _{1}+\alpha _{2}\le \frac{H_{\min } }{\sigma }+\sigma \tau '(0)\), then \(p_{3}=0\).

  - If \(\alpha _{1}+\alpha _{2}> \frac{H_{\min } }{\sigma }+\sigma \tau '(0)\), then \(p_{3}<0\) and \(\alpha _{1}+\alpha _{2}= \frac{H_{\min } }{\sigma }+\sigma \tau '(p_{3})\).

References

Abry, P., Clausel, M., Jaffard, S., Roux, S.G., Vedel, B.: Hyperbolic wavelet transform: an efficient tool for multifractal analysis of anisotropic textures. Rev. Mat. Iberoam. 31, 313–348 (2015)

Abry, P., Roux, S.G., Wendt, H., Messier, P., Klein, A.G., Tremblay, N., Borgnat, P., Jaffard, S., Vedel, B., Coddington, C., Daffner, L.: Multiscale anisotropic texture analysis and classification of photographic prints: art scholarship meets image processing algorithms. IEEE Signal Process. Mag. 32(4), 18–27 (2015)

Aimar, H., Gomez, I.: Parabolic Besov regularity for the heat equation. Constr. Approx. 36, 145–159 (2012)

Arneodo, A., Audit, B., Decoster, N., Muzy, J.-F., Vaillant, C.: Wavelet-based multifractal formalism: applications to DNA sequences, satellite images of the cloud structure and stock market data. In: Bunde, A., Kropp, J., Schellnhuber, H.J. (eds.) The Science of Disasters, pp. 27–102. Springer, Berlin (2002)

Aubry, J.M., Maman, D., Seuret, S.: Local behavior of traces of Besov functions: prevalent results. J. Funct. Anal. 264, 631–660 (2013)

Ayache, A., Léger, S., Pontier, M.: Drap Brownien fractionnaire. Potential Anal. 17, 31–43 (2002)

Ben Braiek, H., Ben Slimane, M.: Directional regularity criteria. C. R. Acad. Sci. Paris Sér. I Math. 324, 981–986 (2011)

Ben Slimane, M.: Multifractal formalism and anisotropic self similar functions. Math. Proc. Camb. Philos. Soc. 124, 329–363 (1998)

Ben Slimane, M.: Wavelet characterizations of multi-directional regularity. Fractals 20, 245–256 (2012)

Ben Slimane, M., Ben Braiek, H.: Directional and anisotropic regularity and irregularity criteria in Triebel wavelet bases. J. Fourier Anal. Appl. 18, 893–914 (2012)

Ben Slimane, M., Ben Braiek, H.: Baire generic results for the anisotropic multifractal formalism. Rev. Mat. Complut. 29, 127–167 (2016)

Ben Slimane, M., Ben Abid, M., Ben Omrane, I., Turkawi, M.M.: Pointwise rectangular Lipschitz regularities for fractional Brownian sheets and some Sierpinski self similar functions. Mathematics 8, 1179 (2020)

Berkolaiko, M.Z., Novikov, I.Y.: Wavelet bases in spaces of differentiable functions of anisotropic smoothness. Dokl. Akad. Nauk. 324, 615–618 (1992)

Berkolaiko, M.Z., Novikov, I.Y.: Unconditional bases in spaces of functions of anisotropic smoothness. Trudy Mat. Inst. Steklov. Issled. Teor. Differ. Funktsii Mnogikh Peremen. Prilozh. 204, 35–51 (1993)

Biermé, H.M., Meerschaert, M., Scheffler, H.P.: Operator scaling stable random fields. Stoch. Proc. Appl. 9(3), 312–332 (2007)

Bonami, A., Estrade, A.: Anisotropic analysis of some Gaussian models. J. Fourier Anal. Appl. 9, 215–236 (2003)

Candès, E., Donoho, D.: Ridgelets: a key to higher-dimensional intermittency? Philos. Trans. R. Soc. Lond. Ser. A Math. Phys. Eng. Sci. 357(1760), 2495–2509 (1999)

Clausel, M., Vedel, B.: Explicit constructions of operator scaling Gaussian fields. Fractals 19, 101–111 (2011)

Daubechies, I.: Orthonormal bases of compactly supported wavelets. Commun. Pure Appl. Math. 41, 909–996 (1988)

Davies, S., Hall, P.: Fractal analysis of surface roughness by using spatial data. J. R. Stat. Soc. Ser. B Stat. Methodol. 61, 3–37 (1999)

DeVore, R.-A., Konyagin, S.-V., Temlyakov, V.-N.: Hyperbolic wavelet approximation. Constr. Approx. 14, 1–26 (1998)

Donoho, D.: Wedgelets: nearly minimax estimation of edges. Ann. Stat. 27, 353–382 (1999)

Dũng, D., Temlyakov, V.N., Ullrich, T.: Hyperbolic Cross Approximation. Advanced Courses in Mathematics. CRM Barcelona. Birkhäuser/Springer, Berlin (2019)

Falconer, K.J.: Fractal Geometry: Mathematical Foundations and Applications. Wiley, Toronto (1990)

Farkas, W.: Atomic and subatomic decompositions in anisotropic function spaces. Math. Nachr. 209, 83–113 (2000)

Frisch, U., Parisi, G.: Fully developed turbulence and intermittency. In: Fermi, E. (ed.) Proceedings of the International Summer School in Physics, pp. 84–88. North Holland, Amsterdam (1985)

Garrigós, G., Tabacco, A.: Wavelet decompositions of anisotropic Besov spaces. Math. Nachr. 239, 80–102 (2002)

Garrigós, G., Hochmuth, R., Tabacco, A.: Wavelet characterizations for anisotropic Besov spaces with $0<p<1$. Proc. Edinb. Math. Soc. 47, 573–595 (2004)

Guo, K., Labate, D.: Analysis and detection of surface discontinuities using the 3D continuous shearlet transform. Appl. Comput. Harm. Anal. 30, 231–242 (2011)

Haroske, D., Tamàsi, E.: Wavelet frames for distributions in anisotropic Besov spaces. Georg. Math. J. 12(4), 637–658 (2005)

Hochmuth, R.: Wavelet characterizations for anisotropic Besov spaces. Appl. Comput. Harmon. Anal. 12, 179–208 (2002)

Jaffard, S.: Wavelet techniques in multifractal analysis. In: Fractal Geometry and Applications: A Jubilee of Benoit Mandelbrot. Proc. Symp. Pure Math., AMS, Providence (2004)

Jaffard, S.: Pointwise and directional regularity of nonharmonic Fourier series. Appl. Comput. Harmon. Anal. 28, 251–266 (2010)

Kamont, A.: Isomorphism of some anisotropic Besov and sequence spaces. Studia Math. 110(2), 169–189 (1994)

Kamont, A.: On the fractional anisotropic Wiener field. Probab. Math. Stat. 16, 85–98 (1996)

King, J.: The singularity spectrum for general Sierpinski carpets. Adv. Math. 116, 1–11 (1995)

Lakhonchai, P., Sampo, J., Sumetkijakan, S.: Shearlet transforms and Hölder regularities. Int. J. Wavelets Multiresolut. Inf. Process. 8(5), 743–771 (2010)

Lemarié, P.-G., Meyer, Y.: Ondelettes et bases hilbertiennes. Rev. Mat. Iberoam. 1, 1–8 (1986)

Lévy Véhel, J., Riedi, R.: Fractional Brownian Motion and Data Traffic Modeling: The Other End of the Spectrum. Fractals in Engineering. Springer, New York (1997)

Mallat, S.: Applied mathematics meets signal processing. In: Chen, L.H.Y., et al. (eds.) Challenges for the 21st Century. Papers from the International Conference on Fundamental Sciences: Mathematics and Theoretical Physics (ICFS 2000), Singapore, March 13–17, 2000, pp. 138–161. World Scientific, Singapore (2001)

Mandelbrot, B.: Intermittent turbulence in selfsimilar cascades: divergence of high moments and dimension of the carrier. J. Fluid Mech. 62, 331–358 (1974)

Mandelbrot, B.: Les Objets Fractals: Forme, Hasard et Dimension. Flammarion, Paris (1975)

Mandelbrot, B.: The Fractal Geometry of Nature. W. H. Freeman, New York (1982)

Mandelbrot, B.: Multifractals and $1/f$ Noise. Springer, New York (1999)

Mattila, P.: Geometry of Sets and Measures in Euclidean Space. Cambridge University Press, Cambridge (1995)

Meyer, Y.: Ondelettes et Opérateurs. Hermann, Paris (1990)

Nualtong, K., Sumetkijakan, S.: Analysis of Hölder regularities by wavelet-like transforms with parabolic scaling. Thai J. Math. 3, 275–283 (2005)

Olsen, L.: Self-affine multifractal Sierpinski sponges in $R^d$. Pac. J. Math. 183, 143–199 (1998)

Pesquet-Popescu, B., Lévy-Véhel, J.: Stochastic fractal models for image processing. IEEE Signal Process. Mag. 19, 48–62 (2002)

Ponson, L., Bonamy, D., Auradou, H., Mourot, G., Morel, S., Bouchaud, E., Guillot, C., Hulin, J.P.: Anisotropic self-affine properties of experimental fracture surfaces. Int. J. Fracture 140, 27–37 (2006)

Rogers, C.A.: Dimension prints. Mathematika 35, 1–27 (1988)

Rosiene, C.-P., Nguyen, T.-Q.: Tensor-product wavelet vs. Mallat decomposition: a comparative analysis. In: Proceedings of the 1999 IEEE International Symposium on Circuits and Systems VLSI (1999)

Roux, S.G., Clausel, M., Vedel, B., Jaffard, S., Abry, P.: Self-similar anisotropic texture analysis: the hyperbolic wavelet transform contribution. IEEE Trans. Image Process. 22(11), 4353–4363 (2013)

Sampo, J., Sumetkijakan, S.: Estimations of Hölder regularities and direction of singularity by Hart Smith and curvelet transforms. J. Fourier Anal. Appl. 15, 58–79 (2009)

Schäfer, M., Ullrich, T., Vedel, B.: Hyperbolic wavelet analysis of classical isotropic and anisotropic Besov-Sobolev spaces. J. Fourier Anal. Appl. 27, 51 (2021)

Triebel, H.: Interpolation Theory, Function Spaces, Differential Operators. North-Holland, Amsterdam (1978)

Triebel, H.: Wavelet bases in anisotropic function spaces. In: Proceedings of the Conference “Function Spaces, Differential Operators and Nonlinear Analysis”, Milovy, 2004, pp. 370–387. Math. Inst. Acad. Sci. Czech Republic, Prague (2005)

Triebel, H.: Theory of Function Spaces III. Monographs in Mathematics, vol. 78. Birkhäuser, Basel (2006)

Yu, T.-P., Stoschek, A., Donoho, D.-L.: Translation and direction invariant denoising of 2D and 3D images: experience and algorithms. Proc. SPIE 2825, 608–619 (1996)

Zavadsky, V.: Image approximation by rectangular wavelet transform. J. Math. Imaging Vis. 27, 129–138 (2007)

Acknowledgements

Mourad Ben Slimane and Maamoun Turkawi extend their appreciation to the Deanship of Scientific Research at King Saud University for funding this work through research group No. (RG-1435-063). The authors would like to thank the referees for their comments and remarks that greatly helped to improve the presentation of the paper.

Additional information

Communicated by Hartmut Führ.

Ben Abid, M., Ben Slimane, M., Ben Omrane, I. et al. Multifractal Analysis of Rectangular Pointwise Regularity with Hyperbolic Wavelet Bases. J Fourier Anal Appl 27, 90 (2021). https://doi.org/10.1007/s00041-021-09890-7

DOI: https://doi.org/10.1007/s00041-021-09890-7

Keywords

- Pointwise regularity

- Hausdorff dimension

- Dimension print

- Wavelet analysis

- Anisotropic selfsimilar cascade wavelet series