Abstract

This paper analyzes the convergence of the singular value thresholding algorithm for solving matrix completion and affine rank minimization problems arising from compressive sensing, signal processing, machine learning, and related topics. A necessary and sufficient condition for the convergence of the algorithm with respect to the Bregman distance is given in terms of the step size sequence \(\{\delta _k\}_{k\in \mathbb {N}}\) as \(\sum _{k=1}^{\infty }\delta _k=\infty \). Concrete convergence rates in terms of Bregman distances and Frobenius norms of matrices are presented. Our novel analysis rests on two ingredients. The first is an identity expressing the Bregman distance as the excess objective value of gradient descent on the dual problem. The second is an error decomposition obtained by viewing the algorithm as a mirror descent algorithm with a non-differentiable mirror map.

1 Introduction

Matrix completion and affine rank minimization are important research problems arising from numerous applications in various fields including compressive sensing, signal processing, machine learning, computer vision and control [6, 7, 18]. A simple and efficient first-order method for solving these problems is the singular value thresholding (SVT) algorithm introduced in [5].

Let \(\mathcal {A}\) be a linear transformation mapping \(n_1\times n_2\) matrices to \(\mathbb {R}^m\) and \(b\in \mathbb {R}^m\). SVT aims to find a low-rank solution to the linear system \(\mathcal {A}(X)=b\) by iteratively producing a sequence of matrix pairs \(\{(X^k,Y^k)\}_{k\in \mathbb {N}}\) as

$$\begin{aligned} Y^{k+1}&=Y^k+\delta _k\mathcal {A}^*\big (b-\mathcal {A}(X^k)\big ),\\ X^{k+1}&=\mathcal {D}_\tau (Y^{k+1}), \end{aligned}\tag{1}$$

where \(\mathcal {A}^*\) denotes the adjoint of \(\mathcal {A}\), \(X^1=Y^1\) is the zero matrix in \(\mathbb {R}^{n_1\times n_2}\) and \(\{\delta _k\}_{k\in \mathbb {N}} \) is a sequence of positive step sizes. Here \(\mathcal {D}_\tau \) is a soft-thresholding operator at level \(\tau >0\), to be defined in (4) below. It acts on the matrix \(Y^{k+1}\) to produce a low-rank approximation \(X^{k+1} = \mathcal {D}_\tau (Y^{k+1})\). Because the soft-thresholding operator produces low-rank iterates, SVT was shown to be extremely efficient for problems with low-rank optimal solutions such as recommender systems [5]. It was shown in [5] that SVT is equivalent to the gradient descent algorithm applied to the dual problem of

$$\begin{aligned} \min _{X\in \mathbb {R}^{n_1\times n_2}}\ \tau \Vert X\Vert _*+\frac{1}{2}\Vert X\Vert _F^2 \quad \text {subject to} \quad \mathcal {A}(X)=b, \end{aligned}\tag{2}$$

where \(\Vert X\Vert _*=\Vert \sigma (X)\Vert _1\) and \(\Vert X\Vert _F=\Vert \sigma (X)\Vert _2\) are the nuclear norm and Frobenius norm of X, respectively. Here \(\sigma (X)\) denotes the vector of all singular values of X in nonincreasing order and \(\Vert x\Vert _p=[\sum _{i=1}^d|x_i|^p]^{\frac{1}{p}}\) denotes the \(\ell _p\)-norm of \(x=(x_i)^d_{i=1}\in \mathbb {R}^d\). Based on this interpretation, it was further shown that the sequence \(\{X^k\}\) converges to the unique solution \(X^\star \) of the optimization problem (2), with the error satisfying \(\sum _{k=1}^{\infty }\Vert X^k-X^\star \Vert _F^2<\infty \). This requires two conditions. First, the linear system \(\mathcal {A}(X)=b\) must be consistent. Second, the step sizes must be bounded away from 0 and from above as \(0<\inf _k\delta _k\le \sup _k\delta _k<\frac{2}{\Vert \mathcal {A}\Vert ^2}\), where \(\Vert \mathcal {A}\Vert \) is the operator norm of \(\mathcal {A}\) defined by \(\Vert \mathcal {A}\Vert =\sup \limits _{X\in \mathbb {R}^{n_1\times n_2}:\Vert X\Vert _F\le 1}\Vert \mathcal {A}(X)\Vert _2.\)
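To make iteration (1) concrete, here is a minimal NumPy sketch. It assumes that \(\mathcal {A}\) is stored as an explicit \(m\times n_1n_2\) array acting on the row-major vectorization of X, so that the transposed array represents \(\mathcal {A}^*\). The names `shrink` and `svt` are ours and are not taken from [5]. For matrix completion, the rows of this array would be the rows of the identity matrix indexed by the observed entries.

```python
import numpy as np


def shrink(Y, tau):
    """Singular value shrinkage D_tau(Y): soft-threshold the singular values of Y at tau."""
    U, s, Vt = np.linalg.svd(Y, full_matrices=False)
    return (U * np.maximum(s - tau, 0.0)) @ Vt


def svt(A, b, shape, tau, deltas):
    """Iteration (1) started from X^1 = Y^1 = 0.

    A is an m x (n1*n2) array representing X -> A @ X.ravel(); A.T then represents
    the adjoint A^*. deltas lists the step sizes delta_1, delta_2, ...
    """
    X = np.zeros(shape)
    Y = np.zeros(shape)
    for delta in deltas:
        residual = b - A @ X.ravel()                      # b - A(X^k)
        Y = Y + delta * (A.T @ residual).reshape(shape)   # Y^{k+1}
        X = shrink(Y, tau)                                # X^{k+1} = D_tau(Y^{k+1})
    return X, Y


# Fully observed toy case: A is the identity, so the unique solution of (2) is M itself.
M = np.array([[3.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
X, Y = svt(np.eye(M.size), M.ravel(), M.shape, tau=1.0, deltas=[1.0] * 20)
print(np.allclose(X, M))  # True
```

In this toy example, \(\delta _k=1<2/\Vert \mathcal {A}\Vert ^2\), and the iterates reach M after two updates. Forming \(\mathcal {A}\) as a dense array only makes sense for small illustrations.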

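In this paper, we refine the existing convergence analysis of SVT in terms of both convergence conditions and convergence rates. We shall show that \(\{X^k\}\) converges to the unique solution \(X^\star \) of the optimization problem

$$\begin{aligned} \min _{X\in \mathbb {R}^{n_1\times n_2}}\ \Psi (X):=\tau \Vert X\Vert _*+\frac{1}{2}\Vert X\Vert _F^2 \quad \text {subject to} \quad \mathcal {A}(X)=b_0 \end{aligned}\tag{3}$$
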
with respect to the Bregman distance if and only if the step size sequence \(\{\delta _k\}_{k\in \mathbb {N}}\) satisfies \(\sum _{k=1}^{\infty }\delta _k=\infty \), under the mild assumption that the orthogonal projection \(b_0\) of b onto the range of \(\mathcal {A}\) is nonzero. This gives a precise characterization of the convergence of SVT, whereas the literature has considered only sufficient conditions for its convergence. We then establish a convergence rate \(\Vert X^{T+1}-X^\star \Vert _F^2=O(\frac{1}{\sum _{k=1}^{T}\delta _k})\), which gives the order \(O(\frac{1}{T})\) in the general case \(0<\inf _k\delta _k\le \sup _k\delta _k<\frac{2}{\Vert \mathcal {A}\Vert ^2}\). This improves the previous convergence result \(\sum _{k=1}^{\infty }\Vert X^k-X^{\star }\Vert _F^2<\infty \), obtained under the same condition but with no explicit convergence rates [5]. Our convergence rate discussion is based on a key identity relating the Bregman distance between \(X^T\) and \(X^\star \) to the excess objective value, at step T of gradient descent, of the dual problem of (3). Our derivation of the necessary condition \(\sum _{k=1}^{\infty }\delta _k=\infty \) is based on a novel error decomposition for the excess Bregman distance, obtained after interpreting SVT as a specific mirror descent algorithm with a non-differentiable mirror map. The basic idea of this error decomposition is to bound the Bregman distance between \(X^k\) and \(X^\star \) from below by making full use of the smoothness of the objective function. The new interpretation of SVT also opens the door to studying SVT in the mirror descent framework [2, 12]. Note that the above definition of \(b_0\) also allows us to remove the assumption, made in the literature, that the linear system \(\mathcal {A}(X)=b\) is consistent.

2 Main Results

Before stating our main results, we define the operator \(\mathcal {D}_\tau \). Let \(Y=U\Sigma V^*\) be a singular value decomposition of a matrix \(Y\in \mathbb {R}^{n_1\times n_2}\) of rank r. Here U and V are \(n_1\times r\) and \(n_2\times r\) matrices with orthonormal columns, respectively. \(\Sigma =\text {diag}(\{\sigma _1,\ldots ,\sigma _r\})\) is the \(r\times r\) diagonal matrix whose main diagonal entries \(\sigma _1\ge \sigma _2\ge \cdots \ge \sigma _r>0\) are the positive singular values of Y. The singular value shrinkage operator \(\mathcal {D}_\tau \) at level \(\tau \) is defined [5] by

$$\begin{aligned} \mathcal {D}_\tau (Y)=U\mathcal {D}_\tau (\Sigma )V^*, \end{aligned}\tag{4}$$

where

$$\begin{aligned} \mathcal {D}_\tau (\Sigma )=\text {diag}\big (\{(\sigma _1-\tau )_+,\ldots ,(\sigma _r-\tau )_+\}\big ), \end{aligned}$$

and \((t)_+=\max (0,t)\).
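Definition (4) translates directly into code. The NumPy sketch below (function names are ours) computes \(\mathcal {D}_\tau \) from a thin SVD and numerically checks two properties used later. First, the rank of \(\mathcal {D}_\tau (Y)\) equals the number of singular values of Y exceeding \(\tau \). Second, \(\mathcal {D}_\tau (Y)\) minimizes \(\frac{1}{2}\Vert X-Y\Vert _F^2+\tau \Vert X\Vert _*\), as shown in [5]. The random test matrix and the perturbation size are arbitrary choices.

```python
import numpy as np


def shrink(Y, tau):
    """D_tau(Y) = U diag((sigma_i - tau)_+) V^*, computed from a thin SVD of Y."""
    U, s, Vt = np.linalg.svd(Y, full_matrices=False)
    return (U * np.maximum(s - tau, 0.0)) @ Vt


def prox_objective(X, Y, tau):
    """0.5*||X - Y||_F^2 + tau*||X||_*, which D_tau(Y) minimizes over X [5]."""
    return 0.5 * np.linalg.norm(X - Y, "fro") ** 2 + tau * np.linalg.norm(X, "nuc")


rng = np.random.default_rng(0)
tau = 1.0
Y = rng.standard_normal((5, 4))
X = shrink(Y, tau)

# The rank of D_tau(Y) equals the number of singular values of Y that exceed tau.
rank_X = np.linalg.matrix_rank(X)
rank_expected = int(np.sum(np.linalg.svd(Y, compute_uv=False) > tau))

# Random perturbations of D_tau(Y) never lower the proximal objective.
base = prox_objective(X, Y, tau)
worst = min(prox_objective(X + 1e-3 * rng.standard_normal(X.shape), Y, tau) - base
            for _ in range(200))
print(rank_X == rank_expected, worst >= 0.0)
```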

Observe from the definition (3) of \(X^\star \) that \(X^\star =0\) is equivalent to \(b_0=0\). Since \(b_0\) is the projection of b onto the range of \(\mathcal {A}\), the vector \(b-b_0\) is orthogonal to the range of \(\mathcal {A}\), and thereby \(\mathcal {A}^*(b -b_0)=0\). Hence, when \(b_0=0\), we have \(\mathcal {A}^*(b)=0\). The definition (1) of SVT then shows that \(X^k=0\) and \(Y^k =0\) for all \(k\in \mathbb {N}\), for any choice of the step size sequence, so the convergence holds trivially.
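The projection \(b_0\) and the identity \(\mathcal {A}^*(b-b_0)=0\) can be checked numerically. This sketch assumes that \(\mathcal {A}\) is given as an explicit \(m\times n_1n_2\) array with \(m>n_1n_2\), so that \(\mathcal {A}(X)=b\) is generically inconsistent. It computes \(b_0\) with a least-squares solve. The sizes and the seed are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(1)
n1, n2, m = 3, 3, 12                # m > n1*n2, so range(A) is a proper subspace of R^m
A = rng.standard_normal((m, n1 * n2))
b = rng.standard_normal(m)          # generically outside range(A): A(X) = b is inconsistent

# b_0 = orthogonal projection of b onto range(A), computed from a least-squares solve.
coef, *_ = np.linalg.lstsq(A, b, rcond=None)
b0 = A @ coef

# b - b_0 is orthogonal to range(A), so A^*(b - b_0) = 0 and A^*(b) = A^*(b_0).
print(np.allclose(A.T @ (b - b0), 0.0), np.allclose(A.T @ b, A.T @ b0))

# The right-hand side b - b_0 has zero projection onto range(A), i.e. it is the
# trivial case b_0 = 0, for which SVT never leaves the zero matrix.
b_perp = b - b0
coef_perp, *_ = np.linalg.lstsq(A, b_perp, rcond=None)
print(np.allclose(A @ coef_perp, 0.0))
```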

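Our first main result provides a necessary and sufficient condition for the convergence of \(\{X^k\}\) to \(X^\star \) with respect to the Bregman distance when the trivial case \(b_0 =0\) is excluded. We denote by \(\left\langle X,Y\right\rangle = \sum _{i=1}^{n_1}\sum _{j=1}^{n_2}X_{ij}Y_{ij}\) the standard inner product between the matrices \(X=(X_{ij}) \in \mathbb {R}^{n_1\times n_2}\) and \(Y=(Y_{ij}) \in \mathbb {R}^{n_1\times n_2}\). We denote by \(\partial f(X)\) the subdifferential of a function \(f:\mathbb {R}^{n_1\times n_2}\rightarrow \mathbb {R}\) at \(X \in \mathbb {R}^{n_1\times n_2}\), defined as

$$\begin{aligned} \partial f(X)=\big \{Y\in \mathbb {R}^{n_1\times n_2}: f(\widetilde{X})\ge f(X)+\left\langle \widetilde{X}-X,Y\right\rangle \ \ \forall \widetilde{X}\in \mathbb {R}^{n_1\times n_2}\big \}. \end{aligned}$$

If f is convex, the Bregman distance between X and \(\widetilde{X}\) under f and \(\widetilde{Y}\in \partial f(\widetilde{X})\) is defined as

$$\begin{aligned} D_f^{\widetilde{Y}}(X,\widetilde{X})=f(X)-f(\widetilde{X})-\left\langle X-\widetilde{X},\widetilde{Y}\right\rangle . \end{aligned}$$
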
If f is convex and differentiable, then \(\partial f(X)\) consists of the single element \(\nabla f(X)\), the gradient of f at X.

Now we can state our first main result as follows.

Theorem 1

Let \(\{(X^k,Y^k)\}_{k\in \mathbb {N}}\) be produced by (1) and \(b_0 \not =0\). Then the following statements hold.

- (a)

If \(\sup _k\delta _k<\frac{1}{2\Vert \mathcal {A}\Vert ^2}\), then

$$\begin{aligned} \lim _{T\rightarrow \infty } D_\Psi ^{Y^{T}}(X^\star ,X^{T}) =0 \text { if and only if } \sum _{k=1}^{\infty }\delta _k =\infty . \end{aligned}$$

- (b)

If \(\sup _k\delta _k<\frac{2}{\Vert \mathcal {A}\Vert ^2}\), then

$$\begin{aligned} \left\| X^{T+1}- X^\star \right\| _F^2 \le \widetilde{C} \Big [\sum _{k=1}^{T}\delta _k\Big ]^{-1}, \quad \forall T\in \mathbb {N}, \end{aligned}$$

where \(\widetilde{C}\) is a constant independent of T.

The necessity part of (a) of Theorem 1 will be proved in Proposition 5 in Sect. 3. The sufficiency part of (a) and part (b) follow from Proposition 9 in Sect. 4. We see from Theorem 1 that when \(0<\inf _k\delta _k\le \sup _k\delta _k<\frac{2}{\Vert \mathcal {A}\Vert ^2}\), there holds \(\left\| X^{T+1}- X^\star \right\| _F^2 =O(1/T)\). Theorem 1 also applies to the linearized Bregman iteration for compressive sensing [4, 22].

Our second main result, to be proved in Sect. 3, is a monotonicity property of the sequence \(\{X^k\}\) in terms of the least squares error F(X). This error is often used in learning theory and is defined for \(X\in \mathbb {R}^{n_1\times n_2}\) by \(F(X)=\frac{1}{2}\Vert \mathcal {A}(X)-b\Vert _2^2\).

Theorem 2

Let \(\{(X^k,Y^k)\}_{k\in \mathbb {N}}\) be produced by (1) with the step-size sequence \(\{\delta _k\}_{k\in \mathbb {N}}\) satisfying \(0<\delta _k\le \frac{1}{\Vert \mathcal {A}\Vert ^{2}}\) for every \(k\in \mathbb {N}\). Then the following statements hold.

- (a)

\(F(X^{k+1})\le F(X^k)\) for \(k\in \mathbb {N}\).

- (b)

\(X^\star \) is a minimizer of F over \(\mathbb {R}^{n_1 \times n_2}\).

- (c)

The following inequality holds for all \(T\in \mathbb {N}\)

$$\begin{aligned} F(X^{T+1})-F(X^\star ) = \frac{1}{2} \Vert \mathcal {A}(X^{T+1}-X^\star )\Vert _2^2 \le \Psi (X^\star )\Big [\sum _{k=1}^{T}\delta _k\Big ]^{-1}. \end{aligned}\tag{5}$$
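Part (a) of Theorem 2 can be observed numerically. The following sketch uses NumPy with a random Gaussian \(\mathcal {A}\) stored as an explicit array; all sizes, \(\tau \) and the seed are arbitrary. It runs (1) with the constant step \(\delta _k=1/\Vert \mathcal {A}\Vert ^2\), computing \(\Vert \mathcal {A}\Vert \) as the spectral norm of the array, and records \(F(X^k)\). It illustrates the statement and does not replace its proof.

```python
import numpy as np


def shrink(Y, tau):
    """Singular value shrinkage D_tau(Y)."""
    U, s, Vt = np.linalg.svd(Y, full_matrices=False)
    return (U * np.maximum(s - tau, 0.0)) @ Vt


rng = np.random.default_rng(2)
n1, n2, m, tau = 6, 5, 20, 0.5
A = rng.standard_normal((m, n1 * n2))
b = rng.standard_normal(m)
delta = 1.0 / np.linalg.norm(A, 2) ** 2   # largest constant step allowed in Theorem 2


def F(X):
    """Least squares error F(X) = 0.5 * ||A(X) - b||_2^2."""
    r = A @ X.ravel() - b
    return 0.5 * r @ r


X = np.zeros((n1, n2))
Y = np.zeros((n1, n2))
values = [F(X)]
for _ in range(300):
    Y = Y + delta * (A.T @ (b - A @ X.ravel())).reshape(n1, n2)
    X = shrink(Y, tau)
    values.append(F(X))

# Theorem 2(a): F(X^{k+1}) <= F(X^k); allow for floating-point round-off.
print(np.max(np.diff(values)) <= 1e-10)
```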

Some of our ideas in the above results can be used to analyze some other thresholding algorithms such as those derived from spectral algorithms [1, 8, 9]. It would be interesting to establish learning theory analysis [14, 15, 20, 21] for SVT algorithms in a noisy setting.

3 Necessity of Convergence

Our proof of the necessity part of (a) of Theorem 1 is based on interpreting SVT as a specific instantiation of mirror descent algorithms, a class of algorithms performing gradient descent in the dual space mapped from the primal space by the subgradient of the mirror map [2, 16]. This interpretation enables us to use arguments for mirror descent algorithms to analyze the convergence of SVT. However, standard analysis for mirror descent algorithms requires the mirror map to be differentiable, which is not the case for SVT having the non-differentiable mirror map \(\Psi \). We use Bregman distances to overcome the difficulty. Our analysis can be extended to study SVT in the online setting [11, 13].

Our analysis needs some basic facts about convex functions. A function \(f:\mathbb {R}^{n_1\times n_2}\rightarrow \mathbb {R}\) is said to be \(\sigma \)-strongly convex with \(\sigma >0\) if \(D_f^{\widetilde{Y}}(X,\widetilde{X})\ge \frac{\sigma }{2}\Vert X-\widetilde{X}\Vert _F^2\) for any \(X,\widetilde{X} \in \mathbb {R}^{n_1\times n_2}\) and \(\widetilde{Y}\in \partial f(\widetilde{X})\). It is said to be L-strongly smooth if it is differentiable and \(D_f^{\nabla f(\widetilde{X})}(X,\widetilde{X})\le \frac{L}{2}\Vert X-\widetilde{X}\Vert _F^2\) for any \(X,\widetilde{X}\). We denote by \(f^*(Y)=\sup \limits _{X\in \mathbb {R}^{n_1\times n_2}}\big [\left\langle X,Y\right\rangle -f(X)\big ]\) the Fenchel (convex) conjugate of f.

Lemma 3

For a convex function \(f:\mathbb {R}^{n_1\times n_2}\rightarrow \mathbb {R}\), the following statements hold.

- (a)

\(f^{**}=f\) and

$$\begin{aligned} \partial f^*(Y) = \{X:Y\in \partial f(X)\}, \quad \forall Y\in \mathbb {R}^{n_1\times n_2}. \end{aligned}$$

- (b)

For \(\beta >0\), the function f is \(\beta \)-strongly convex if and only if \(f^*\) is \(\frac{1}{\beta }\)-strongly smooth.

- (c)

If there exists a constant \(L>0\) such that

$$\begin{aligned} \Vert \nabla f(X)-\nabla f(\widetilde{X})\Vert _F\le L\Vert X-\widetilde{X}\Vert _F \end{aligned}\tag{6}$$

for all \(X, \widetilde{X}\in \mathbb {R}^{n_1\times n_2}\), then we have

$$\begin{aligned} f(X)\le f(\widetilde{X})+\left\langle X-\widetilde{X},\nabla f(\widetilde{X})\right\rangle +\frac{L}{2}\Vert X-\widetilde{X}\Vert _F^2. \end{aligned}$$

Part (a) of Lemma 3, on the duality between f and its Fenchel conjugate \(f^*\), can be found in [3]. Part (b), on the duality between strong convexity and strong smoothness, can be found in [10]. Part (c) is a standard result relating the Lipschitz continuity of \(\nabla f\) to the strong smoothness of f (see, e.g., [17, 23]).

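The idea of applying Bregman distances to SVT has been introduced in the literature. For example, it can be found in [5] that

$$\begin{aligned} D_\Psi ^{\widetilde{Y}}(X,\widetilde{X})\ge \frac{1}{2}\Vert X-\widetilde{X}\Vert _F^2 \end{aligned}\tag{7}$$

for all \(X,\widetilde{X}\in \mathbb {R}^{n_1\times n_2}\) and \(\widetilde{Y}\in \partial \Psi (\widetilde{X})\).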
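Inequality (7) can be checked numerically for random data. The NumPy sketch below (function names are ours) generates pairs \((\widetilde{X},\widetilde{Y})\) with \(\widetilde{Y}\in \partial \Psi (\widetilde{X})\) by taking \(\widetilde{X}=\mathcal {D}_\tau (\widetilde{Y})\). This choice is justified by the relation \(Y^k\in \partial \Psi (X^k)\) established in Lemma 4 below. The recorded gap is \(D_\Psi ^{\widetilde{Y}}(X,\widetilde{X})-\frac{1}{2}\Vert X-\widetilde{X}\Vert _F^2\).

```python
import numpy as np


def shrink(Y, tau):
    """Singular value shrinkage D_tau(Y)."""
    U, s, Vt = np.linalg.svd(Y, full_matrices=False)
    return (U * np.maximum(s - tau, 0.0)) @ Vt


def psi(X, tau):
    """Psi(X) = tau*||X||_* + 0.5*||X||_F^2."""
    return tau * np.linalg.norm(X, "nuc") + 0.5 * np.linalg.norm(X, "fro") ** 2


def bregman_psi(X, X_tilde, Y_tilde, tau):
    """D_Psi^{Y_tilde}(X, X_tilde) = Psi(X) - Psi(X_tilde) - <X - X_tilde, Y_tilde>."""
    return psi(X, tau) - psi(X_tilde, tau) - np.sum((X - X_tilde) * Y_tilde)


rng = np.random.default_rng(3)
tau = 1.0
gaps = []
for _ in range(100):
    Y_tilde = 2.0 * rng.standard_normal((4, 6))
    X_tilde = shrink(Y_tilde, tau)          # Y_tilde is then a subgradient of Psi at X_tilde
    X = rng.standard_normal((4, 6))
    lower = 0.5 * np.linalg.norm(X - X_tilde, "fro") ** 2
    gaps.append(bregman_psi(X, X_tilde, Y_tilde, tau) - lower)

# (7): every gap should be nonnegative up to round-off.
print(min(gaps) >= -1e-10)
```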

We observe the relation \(X^{k}=\nabla \Psi ^*(Y^{k})\) for SVT, which is a novelty of our analysis.

Lemma 4

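The sequence \(\{(X^k,Y^k)\}_{k}\) produced by (1) satisfies \(Y^{k}\in \partial \Psi (X^{k})\) and \(X^{k}=\nabla \Psi ^*(Y^{k})\), and \(\Psi ^*\) is differentiable. Hence, from \(\nabla F(X)=\mathcal {A}^*(\mathcal {A}(X)-b)\), we have

$$\begin{aligned} Y^{k+1}=Y^k-\delta _k\nabla F(X^k)=Y^k-\delta _k\mathcal {A}^*\big (\mathcal {A}(\nabla \Psi ^*(Y^k))-b\big ), \quad \forall k\in \mathbb {N}. \end{aligned}\tag{8}$$
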
Proof

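The gradient of F reads directly as \(\nabla F(X)=\mathcal {A}^*(\mathcal {A}(X)-b)\). It was shown in [5] that for each \(\tau >0\) and \(Y\in \mathbb {R}^{n_1\times n_2}\), the singular value shrinkage operator obeys \(\mathcal {D}_\tau (Y)=\arg \min _X\frac{1}{2}\Vert X-Y\Vert _F^2+\tau \Vert X\Vert _*\). The second equation in (1), together with \(X^1=\mathcal {D}_\tau (Y^1)=0\), gives \(X^k=\mathcal {D}_\tau (Y^k)\) for all \(k\in \mathbb {N}\). This is equivalent to

$$\begin{aligned} X^{k}=\arg \min _{X\in \mathbb {R}^{n_1\times n_2}}\Big \{\frac{1}{2}\Vert X-Y^{k}\Vert _F^2+\tau \Vert X\Vert _*\Big \}. \end{aligned}$$
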
The first-order optimality condition for this minimization gives \(0\in X^{k}-Y^{k}+\tau \partial \Vert X^{k}\Vert _*\). Since \(\partial \Psi (X)=X+\tau \partial \Vert X\Vert _*\), this means \(Y^{k}\in \partial \Psi (X^{k})\). By Part (a) of Lemma 3, this implies \(X^{k}\in \partial \Psi ^*(Y^{k})\). But (7) shows that \(\Psi \) is 1-strongly convex, which implies that \(\Psi ^*\) is differentiable according to Part (b) of Lemma 3. Therefore, \(X^{k}=\nabla \Psi ^*(Y^{k})\). Substituting this relation and the expression for \(\nabla F\) into the first equation of (1) gives (8). This proves the desired statement. \(\square \)
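Lemma 4 identifies \(\nabla \Psi ^*\) with \(\mathcal {D}_\tau \). For this particular \(\Psi \), a short computation (ours, not stated in the text) gives the closed form \(\Psi ^*(Y)=\frac{1}{2}\sum _i(\sigma _i(Y)-\tau )_+^2\). The sketch below compares central finite differences of this \(\Psi ^*\) along random directions E with \(\left\langle \mathcal {D}_\tau (Y),E\right\rangle \). It also checks the Fenchel-Young equality \(\Psi (X)+\Psi ^*(Y)=\left\langle X,Y\right\rangle \) for \(X=\mathcal {D}_\tau (Y)\). The step `eps` and the tolerances are our choices for double precision.

```python
import numpy as np


def shrink(Y, tau):
    """Singular value shrinkage D_tau(Y)."""
    U, s, Vt = np.linalg.svd(Y, full_matrices=False)
    return (U * np.maximum(s - tau, 0.0)) @ Vt


def psi_star(Y, tau):
    """Closed form of the conjugate of Psi: 0.5 * sum_i (sigma_i(Y) - tau)_+^2."""
    s = np.linalg.svd(Y, compute_uv=False)
    return 0.5 * np.sum(np.maximum(s - tau, 0.0) ** 2)


rng = np.random.default_rng(4)
tau, eps = 0.5, 1e-6
Y = rng.standard_normal((5, 4))
X = shrink(Y, tau)                    # candidate for grad Psi^*(Y)

errors = []
for _ in range(10):
    E = rng.standard_normal(Y.shape)  # random direction
    fd = (psi_star(Y + eps * E, tau) - psi_star(Y - eps * E, tau)) / (2 * eps)
    errors.append(abs(fd - np.sum(X * E)))

# Fenchel-Young equality Psi(X) + Psi^*(Y) = <X, Y> for X = D_tau(Y).
psi_X = tau * np.linalg.norm(X, "nuc") + 0.5 * np.linalg.norm(X, "fro") ** 2
fy_gap = abs(psi_X + psi_star(Y, tau) - np.sum(X * Y))
print(max(errors) < 1e-4, fy_gap < 1e-10)
```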

We can now carry out the novel analysis stated in the following proposition, which proves the necessity part of (a) of Theorem 1.

Proposition 5

Let \(\{(X^k,Y^k)\}_{k\in \mathbb {N}}\) be produced by (1). If \(b_0 \not =0\) and for some \(\kappa >0\), the step-size sequence \(\{\delta _k\}_{k\in \mathbb {N}}\) satisfies \(0<\delta _k\le \frac{1}{(2+\kappa )\Vert \mathcal {A}\Vert ^2}\) for every \(k\in \mathbb {N}\), then \(D_\Psi ^{Y^T}(X^\star ,X^T)>0\) for \(T\in \mathbb {N}\) and

In particular, \(\lim _{T\rightarrow \infty } D_\Psi ^{Y^{T}}(X^\star ,X^{T}) =0\) implies \(\sum _{k=1}^{\infty }\delta _k =\infty \).

Proof

Let us first analyze how the Bregman distance is reduced in one iteration of SVT.

Let \(k\in \mathbb {N}\). By Lemma 4 and the definition of the Bregman distance, for \(X\in \mathbb {R}^{n_1\times n_2}\), we have

Notice that

Hence, by Lemma 4

Setting \(X= X^\star \), we have

To estimate the inner product in (11), we apply Part (c) of Lemma 3 to the function F whose gradient satisfies the Lipschitz condition as

Setting \(X=X^\star \), \(\widetilde{X}=X^{k}\) yields

while the choice of \(X=X^{k}\), \(\widetilde{X}=X^\star \) gives

Recall that \(\mathcal {A}^*(b -b_0)=0\). It follows that

and

Combining this with (11) and (13) tells us that

But \(\Vert X^k-X^\star \Vert _F^2 \le 2 D_\Psi ^{Y^k}(X^\star ,X^k)\) according to (7). Then we have

Now we need the restriction \(0<\delta _k\le \frac{1}{(2+\kappa )\Vert \mathcal {A}\Vert ^2}\) with \(\kappa >0\) on the step size sequence. Denote \(a=\frac{2+\kappa }{2}\log \frac{2+\kappa }{\kappa }\) and apply the elementary inequality

Then we see from (15) that

Applying this inequality iteratively for \(k=1,\ldots ,T\) yields

Since \(Y^1=X^1=0\), we have \(D_\Psi ^{Y^1}(X^\star ,X^1)= \Psi (X^\star )>0\) by our assumption of \(b_0\not =0\). So \(D_\Psi ^{Y^{T+1}}(X^\star ,X^{T+1})>0\) and

This verifies the desired lower bound on \(\sum _{k=1}^{T}\delta _k\). The proof is complete. \(\square \)
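The elementary inequality used in this proof is missing from this copy, so we do not restate it. What can be said is that \(a=\frac{2+\kappa }{2}\log \frac{2+\kappa }{\kappa }\) is the smallest constant for which \(1-x\ge e^{-ax}\) holds on \([0,\frac{2}{2+\kappa }]\). Equality holds at both endpoints, and \(\log (1-x)+ax\) is concave. The sketch below only checks this inequality on a grid for a few values of \(\kappa \). That this is the inequality applied in the proof is our assumption.

```python
import numpy as np


def check(kappa, num=10001):
    """Grid check of 1 - x >= exp(-a x) on [0, 2/(2+kappa)], a = (2+kappa)/2 * log((2+kappa)/kappa)."""
    a = (2 + kappa) / 2 * np.log((2 + kappa) / kappa)
    x = np.linspace(0.0, 2 / (2 + kappa), num)
    gap = (1 - x) - np.exp(-a * x)
    holds = gap.min() >= -1e-12          # inequality on the whole grid (up to round-off)
    tight = abs(gap[-1]) < 1e-12         # equality at the right endpoint
    return bool(holds), bool(tight)


for kappa in [0.1, 1.0, 10.0]:
    print(kappa, check(kappa))
```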

We are in a position to prove our second main result.

Proof of Theorem 2

We follow (10), but decompose \(\Delta _k (X)\) in a different way by means of \(D_\Psi ^{Y^k}(X^{k+1},X^k) =\Psi (X^{k+1}) - \Psi (X^k) - \left\langle X^{k+1}-X^k,Y^k\right\rangle \) to get

By (8), \(Y^{k+1}-Y^k=-\delta _k\nabla F(X^k)\). To be consistent with the gradient at \(X^k\), we separate \(X-X^{k+1}\) into \(X-X^{k} + X^{k} -X^{k+1}\) and decompose \(\Delta _k (X)\) as

The inner product in the above last term can be estimated by applying Part (c) of Lemma 3 to the function F satisfying (12) as

But

according to (7). Putting these lower bounds into the last term of (16) and applying the bound \(\left\langle X-X^{k},\nabla F(X^k)\right\rangle \le F(X) - F(X^k)\) derived from the convexity of F, we find

By the assumption on the step size, \(\delta _k\Vert \mathcal {A}\Vert ^2 \le 1\). Therefore, the following inequality holds for all \(X\in \mathbb {R}^{n_1\times n_2}\)

Then the property \(F(X^{k+1})\le F(X^k)\) stated in Part (a) follows by setting \(X=X^k\) in (17) because \(D_\Psi ^{Y^{k}}(X^k,X^k)=0\) and \(D_\Psi ^{Y^{k+1}}(X^k,X^{k+1}) \ge 0\).

The statement in Part (b) follows immediately from (14). Indeed, \(b-b_0\) is orthogonal to the range of \(\mathcal {A}\) and \(\mathcal {A}(X^\star ) =b_0\). From these two facts we obtain the following well-known relation in learning theory

To prove the statement in Part (c), we apply the monotonicity \(F(X^{k+1})\le F(X^k)\) derived in Part (a) and find

Taking the summation of (17) from \(k=1\) to T gives

But \(-D_\Psi ^{Y^{T+1}}(X,X^{T+1})\le 0\) and \(D_\Psi ^{Y^1}(X,X^1) = \Psi (X)\) since \(X^1=Y^1=0\). Hence

In particular, taking \(X=X^\star \) and applying (18), we get (5). This completes the proof of Theorem 2. \(\square \)

4 Sufficiency of Convergence

This section presents the proof for the sufficiency part of (a) and (b) of Theorem 1. Our analysis is based on the observation that SVT can be viewed as a gradient descent algorithm applied to the dual problem of (3), hence results on gradient descent algorithms can be applied. Here we apply the following standard estimates for the convergence of the gradient descent method applied to smooth optimization problems. The proof is given in the appendix for completeness.

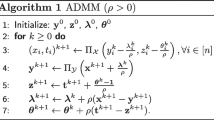

Lemma 6

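Suppose \(f:\mathbb {R}^{m}\rightarrow \mathbb {R}\) is convex and L-strongly smooth with \(\lambda ^\star \) being a minimizer. Let \(\{\lambda ^k\}_{k\in \mathbb {N}}\) be the following sequence produced by the gradient descent algorithm

$$\begin{aligned} \lambda ^1=0, \qquad \lambda ^{k+1}=\lambda ^k-\delta _k\nabla f(\lambda ^k), \quad k\in \mathbb {N}, \end{aligned}\tag{19}$$
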
with a step size sequence \(\{\delta _k>0\}_{k\in \mathbb {N}}\). Then the following statements hold.

- (a)

If \(\sup _k\delta _k<2/L\), then there exists a constant \(\widetilde{C}\) such that

$$\begin{aligned} f(\lambda ^{T+1})-f(\lambda ^\star )\le \widetilde{C}\Big [\sum _{k=1}^{T}\delta _k\Big ]^{-1}. \end{aligned}\tag{20}$$

- (b)

If \(\sup _k\delta _k\le 1/L\), then (20) holds with \(\widetilde{C}=\Vert \lambda ^\star \Vert _2^2/2\).
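Part (b) of Lemma 6 can be illustrated on a least-squares objective \(f(\lambda )=\frac{1}{2}\Vert B\lambda -c\Vert _2^2\), which is convex and \(\Vert B\Vert ^2\)-strongly smooth. The matrix B, the vector c and the horizon are arbitrary choices of ours. The sketch runs (19) with \(\delta _k=1/L\) and records the ratio of \(f(\lambda ^{T+1})-f(\lambda ^\star )\) to the bound \(\Vert \lambda ^\star \Vert _2^2/(2\sum _{k=1}^T\delta _k)\). This ratio should never exceed one.

```python
import numpy as np

rng = np.random.default_rng(5)
B = rng.standard_normal((8, 4))
c = rng.standard_normal(8)
L = np.linalg.norm(B, 2) ** 2          # f below is L-strongly smooth with L = ||B||^2


def f(lam):
    """Test objective f(lam) = 0.5 * ||B lam - c||_2^2 (convex, L-smooth)."""
    r = B @ lam - c
    return 0.5 * r @ r


def grad_f(lam):
    return B.T @ (B @ lam - c)


lam_star, *_ = np.linalg.lstsq(B, c, rcond=None)   # a minimizer of f
delta = 1.0 / L                                    # constant step with sup_k delta_k <= 1/L
lam = np.zeros(4)                                  # lambda^1 = 0, as in (19)
step_sum = 0.0
ratios = []
for _ in range(200):
    lam = lam - delta * grad_f(lam)                # lambda^{T+1}
    step_sum += delta                              # sum_{k=1}^T delta_k
    bound = lam_star @ lam_star / (2.0 * step_sum)
    ratios.append((f(lam) - f(lam_star)) / bound)

print(max(ratios) <= 1.0 + 1e-9)
```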

The following lemma shows how SVT can be viewed as a gradient descent algorithm applied to the dual of (3). Part (a) establishes the dual problem of the optimization problem (3). Part (b) shows that the sequence \(\{Y^k\}\) coincides with \(\{\mathcal {A}^*(\lambda ^k)\}_{k\in \mathbb {N}}\), where \(\{\lambda ^k\}\) is produced by applying the gradient descent algorithm (19) to the function G given in Part (a). This lemma was presented in [5] for the case where \(\mathcal {A}\) is an orthogonal projector and the system \(\mathcal {A}(X)=b\) is consistent. It is extended here to general linear transformations \(\mathcal {A}\), allowing for inconsistent systems, with b replaced by its orthogonal projection onto the range of \(\mathcal {A}\).

Lemma 7

- (a)

The Lagrangian dual problem of (3) is

$$\begin{aligned} \min _{\lambda \in \mathbb {R}^m}G(\lambda ), \ \hbox {where} \ G(\lambda ):=\Psi ^*(\mathcal {A}^*(\lambda ))-\left\langle \lambda ,b_0\right\rangle . \end{aligned}\tag{21}$$

- (b)

If \(\{(X^k,Y^k)\}_{k\in \mathbb {N}}\) is produced by (1), and \(\{\lambda ^k\}_{k\in \mathbb {N}}\) is produced by applying the gradient descent algorithm (19) to the function G, then we have \(Y^k=\mathcal {A}^*(\lambda ^k)\) for \(k\in \mathbb {N}\).

Proof

The Lagrangian dual problem of (3) is

where in the second identity we have used the definition of Fenchel conjugate. This proves (21).

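When the gradient descent algorithm (19) is applied to the function G defined in (21), we see by the chain rule that the gradient equals

$$\begin{aligned} \nabla G(\lambda )=\mathcal {A}\big (\nabla \Psi ^*(\mathcal {A}^*(\lambda ))\big )-b_0. \end{aligned}\tag{22}$$

So the sequence \(\{\lambda ^k\}_{k\in \mathbb {N}}\) produced by (19) translates to

$$\begin{aligned} \lambda ^{k+1}=\lambda ^k-\delta _k\Big [\mathcal {A}\big (\nabla \Psi ^*(\mathcal {A}^*(\lambda ^k))\big )-b_0\Big ]. \end{aligned}$$

Applying the transformation \(\mathcal {A}^*\) to both sides and noticing \(\mathcal {A}^* b_0 = \mathcal {A}^* b\) yield the following identity for all \(k\in \mathbb {N}\)

$$\begin{aligned} \mathcal {A}^*(\lambda ^{k+1})=\mathcal {A}^*(\lambda ^k)-\delta _k\mathcal {A}^*\Big (\mathcal {A}\big (\nabla \Psi ^*(\mathcal {A}^*(\lambda ^k))\big )-b\Big ). \end{aligned}$$
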
This iteration relation for the sequence \(\{\mathcal {A}^*(\lambda ^k)\}_{k\in \mathbb {N}}\) is exactly the same as (8) in Lemma 4 for the sequence \(\{Y^k\}_{k\in \mathbb {N}}\). This together with the initial conditions \(Y^1=0, \mathcal {A}^*(\lambda ^{1})=0\) tells us that \(Y^k=\mathcal {A}^*(\lambda ^k)\) for \(k\in \mathbb {N}\). The proof of the lemma is complete. \(\square \)
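Part (b) of Lemma 7 can be observed directly. We run SVT (1) and the dual gradient descent (19) on G side by side, with the same step sizes and zero initializations. This should produce \(Y^k=\mathcal {A}^*(\lambda ^k)\) up to round-off. The sketch uses an explicit random \(\mathcal {A}\) with \(m>n_1n_2\), so that the system is generically inconsistent and \(b_0\ne b\). Inside (22) it uses \(\nabla \Psi ^*=\mathcal {D}_\tau \) from Lemma 4. The sizes, \(\tau \) and the seed are arbitrary.

```python
import numpy as np


def shrink(Y, tau):
    """Singular value shrinkage D_tau(Y), i.e. grad Psi^*(Y) by Lemma 4."""
    U, s, Vt = np.linalg.svd(Y, full_matrices=False)
    return (U * np.maximum(s - tau, 0.0)) @ Vt


rng = np.random.default_rng(6)
n1, n2, m, tau = 4, 3, 15, 0.3
A = rng.standard_normal((m, n1 * n2))    # m > n1*n2: A(X) = b is generically inconsistent
b = rng.standard_normal(m)
coef, *_ = np.linalg.lstsq(A, b, rcond=None)
b0 = A @ coef                            # projection of b onto range(A)
delta = 1.0 / np.linalg.norm(A, 2) ** 2


def adjoint(lam):
    """A^*(lam) as an n1 x n2 matrix."""
    return (A.T @ lam).reshape(n1, n2)


def grad_G(lam):
    """Gradient (22) of G: A(grad Psi^*(A^* lam)) - b_0."""
    return A @ shrink(adjoint(lam), tau).ravel() - b0


X = np.zeros((n1, n2))
Y = np.zeros((n1, n2))                   # SVT (1) from X^1 = Y^1 = 0
lam = np.zeros(m)                        # gradient descent (19) on G from lambda^1 = 0
max_gap = 0.0
for _ in range(100):
    Y = Y + delta * (A.T @ (b - A @ X.ravel())).reshape(n1, n2)
    X = shrink(Y, tau)
    lam = lam - delta * grad_G(lam)      # uses the previous lambda, i.e. X^k = D_tau(A^* lambda^k)
    max_gap = max(max_gap, np.abs(Y - adjoint(lam)).max())

print(max_gap < 1e-8)  # Y^k = A^*(lambda^k) up to round-off
```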

Combining Lemmas 6 and 7 enables us to bound the excess dual objective value \(G(\lambda ^{T+1})-G(\lambda ^\star )\) in terms of \(\sum _{k=1}^T \delta _k\). To estimate \(D_{\Psi }^{Y^{T+1}}(X^\star ,X^{T+1})\), and thereby prove the sufficiency part of Theorem 1, it remains to relate \(G(\lambda ^{T+1})-G(\lambda ^\star )\) to \(D_{\Psi }^{Y^{T+1}}(X^\star ,X^{T+1})\). This relation is given by the following key identity. It provides an elegant way to transfer decay rates of excess dual objective values to the Bregman distance between primal variables.

Lemma 8

If \(\{(X^k,Y^k)\}_{k\in \mathbb {N}}\) is produced by (1), and \(\{\lambda ^k\}_{k\in \mathbb {N}}\) is produced by applying the gradient descent algorithm (19) to the function G, then there exists some \(\lambda ^\star \in \mathbb {R}^m\) such that \(\mathcal {A}^*(\lambda ^\star )\in \partial \Psi (X^\star )\) and

$$\begin{aligned} D_\Psi ^{Y^k}(X^\star ,X^k)=G(\lambda ^k)-G(\lambda ^\star ), \quad \forall k\in \mathbb {N}. \end{aligned}$$

Proof

Since \(X^\star \) is an optimal point of the problem (3) with only linear constraints, the existence of Lagrange multipliers (e.g., Corollary 28.2.2 in [19]) and the first-order optimality condition imply the existence of \(\lambda ^\star \in \mathbb {R}^m\) satisfying

Together with Part (a) of Lemmas 3 and 4, this implies that

Since \(\Psi \) is convex, we know (see, e.g., Proposition 3.3.4 in [3]) that for any \(X\in \mathbb {R}^{n_1 \times n_2}\),

Applying this implication to the pairs \((X^\star , Y^\star )\) in (24) and \((X^{k}, Y^{k})\) in Lemma 4 satisfying \(Y^{k}\in \partial \Psi (X^{k})\), we know that

where we have used (25) in the last equality. But \(Y^k=\mathcal {A}^*(\lambda ^k)\) according to Part (b) of Lemma 7. Then we see from the definition of the function G that \(D_{\Psi }^{Y^k}(X^\star ,X^k)\) equals

This together with the identities (25) and \(\mathcal {A}(X^\star )=b_0\) implies

The proof of the lemma is complete. \(\square \)
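The mechanism behind Lemma 8 is the Fenchel-Young equality. Whenever \(Y\in \partial \Psi (X)\) and \(Y^\star \in \partial \Psi (X^\star )\), we have \(D_\Psi ^{Y}(X^\star ,X)=\Psi ^*(Y)-\Psi ^*(Y^\star )-\left\langle X^\star ,Y-Y^\star \right\rangle \). Now take \(Y=\mathcal {A}^*(\lambda ^k)\) and \(Y^\star =\mathcal {A}^*(\lambda ^\star )\), and use \(\mathcal {A}(X^\star )=b_0\). The inner product becomes \(\left\langle b_0,\lambda ^k-\lambda ^\star \right\rangle \), and the right-hand side is \(G(\lambda ^k)-G(\lambda ^\star )\). The sketch below checks the first identity for random \(Y,Y^\star \), pairing each with \(X=\mathcal {D}_\tau (Y)\) as in Lemma 4. It uses the closed form \(\Psi ^*(Y)=\frac{1}{2}\sum _i(\sigma _i(Y)-\tau )_+^2\), which is our own computation.

```python
import numpy as np


def shrink(Y, tau):
    """Singular value shrinkage D_tau(Y) = grad Psi^*(Y)."""
    U, s, Vt = np.linalg.svd(Y, full_matrices=False)
    return (U * np.maximum(s - tau, 0.0)) @ Vt


def psi(X, tau):
    """Psi(X) = tau*||X||_* + 0.5*||X||_F^2."""
    return tau * np.linalg.norm(X, "nuc") + 0.5 * np.linalg.norm(X, "fro") ** 2


def psi_star(Y, tau):
    """Conjugate of Psi: 0.5 * sum_i (sigma_i(Y) - tau)_+^2."""
    s = np.linalg.svd(Y, compute_uv=False)
    return 0.5 * np.sum(np.maximum(s - tau, 0.0) ** 2)


rng = np.random.default_rng(7)
tau = 0.8
worst = 0.0
for _ in range(50):
    Y_k, Y_star = 2 * rng.standard_normal((2, 5, 4))     # two random dual points
    X_k, X_star = shrink(Y_k, tau), shrink(Y_star, tau)  # X = grad Psi^*(Y)
    lhs = psi(X_star, tau) - psi(X_k, tau) - np.sum((X_star - X_k) * Y_k)  # D_Psi^{Y_k}(X_star, X_k)
    rhs = psi_star(Y_k, tau) - psi_star(Y_star, tau) - np.sum(X_star * (Y_k - Y_star))
    worst = max(worst, abs(lhs - rhs))

print(worst < 1e-9)
```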

Now we can prove the sufficiency part of (a) and (b) of Theorem 1 by presenting the following more general estimate.

Proposition 9

Let \(\{(X^k,Y^k)\}_{k\in \mathbb {N}}\) be produced by (1) with a positive step-size sequence \(\{\delta _k\}\) satisfying \(\sup _k\delta _k<\frac{2}{\Vert \mathcal {A}\Vert ^2}\). Then we have

where \(\widetilde{C}\) is a constant independent of T. Furthermore, if \(\sup _k\delta _k\le \frac{1}{\Vert \mathcal {A}\Vert ^2}\), then (26) holds with \(\widetilde{C}=\Vert \lambda ^\star \Vert _2^2/2\), where \(\lambda ^\star \) is an element in \(\mathbb {R}^m\) satisfying \(\mathcal {A}^*(\lambda ^\star )\in \partial \Psi (X^\star )\).

Proof

Recall the expression (22) for the gradient of G. Take the vector \(\lambda ^\star \) given in Lemma 8. The identity (25) implies

and therefore \(\lambda ^\star \) minimizes G.

By (7), \(\Psi \) is 1-strongly convex. So its Fenchel conjugate \(\Psi ^*\) is 1-strongly smooth according to Part (b) of Lemma 3. It follows that for \(\lambda , \tilde{\lambda } \in \mathbb {R}^m\),

where in the last step we have used (22) and the definition of the operator norm. This shows that the function \(G(\lambda )\) is \(\Vert \mathcal {A}\Vert ^2\)-strongly smooth. So we apply Lemmas 6(a) and 8 and know that when \(\{\delta _k\}_k\) satisfies \(\sup _k\delta _k < \frac{2}{\Vert \mathcal {A}\Vert ^2}\), the following inequality holds with a constant \(\widetilde{C}\) independent of T

According to Lemmas 6(b) and 8, the constant \(\widetilde{C}\) can be chosen to be \(\Vert \lambda ^\star \Vert _2^2/2\) if \(\delta _k\le \frac{1}{\Vert \mathcal {A}\Vert ^2}\). The proof is complete. \(\square \)
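The smoothness constant \(\Vert \mathcal {A}\Vert ^2\) of G can also be probed numerically. For random pairs we compare \(\Vert \nabla G(\lambda )-\nabla G(\widetilde{\lambda })\Vert _2\) with \(\Vert \mathcal {A}\Vert ^2\Vert \lambda -\widetilde{\lambda }\Vert _2\). In this sketch, \(\mathcal {A}\) is again an explicit random array, and \(b_0\) is an arbitrary vector in its range (it cancels in the difference). \(\nabla \Psi ^*=\mathcal {D}_\tau \) is taken from Lemma 4.

```python
import numpy as np


def shrink(Y, tau):
    """Singular value shrinkage D_tau(Y) = grad Psi^*(Y)."""
    U, s, Vt = np.linalg.svd(Y, full_matrices=False)
    return (U * np.maximum(s - tau, 0.0)) @ Vt


rng = np.random.default_rng(8)
n1, n2, m, tau = 5, 4, 12, 0.5
A = rng.standard_normal((m, n1 * n2))
b0 = A @ rng.standard_normal(n1 * n2)     # an arbitrary vector in the range of A
norm_A_sq = np.linalg.norm(A, 2) ** 2     # ||A||^2, the claimed smoothness constant of G


def grad_G(lam):
    """Gradient (22): A(D_tau(A^* lam)) - b_0."""
    return A @ shrink((A.T @ lam).reshape(n1, n2), tau).ravel() - b0


ratios = []
for _ in range(200):
    lam, lam_t = rng.standard_normal((2, m))
    num = np.linalg.norm(grad_G(lam) - grad_G(lam_t))
    ratios.append(num / (norm_A_sq * np.linalg.norm(lam - lam_t)))

print(max(ratios) <= 1.0 + 1e-9)
```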

References

Bauer, F., Pereverzev, S., Rosasco, L.: On regularization algorithms in learning theory. J. Complex. 23(1), 52–72 (2007)

Beck, A., Teboulle, M.: Mirror descent and nonlinear projected subgradient methods for convex optimization. Oper. Res. Lett. 31(3), 167–175 (2003)

Borwein, J.M., Lewis, A.S.: Convex Analysis and Nonlinear Optimization: Theory and Examples. Springer, Berlin (2010)

Cai, J.-F., Osher, S., Shen, Z.: Convergence of the linearized Bregman iteration for \(\ell _1\)-norm minimization. Math. Comput. 78(268), 2127–2136 (2009)

Cai, J.-F., Candès, E.J., Shen, Z.: A singular value thresholding algorithm for matrix completion. SIAM J. Optim. 20(4), 1956–1982 (2010)

Candès, E.J., Recht, B.: Exact matrix completion via convex optimization. Found. Comput. Math. 9(6), 717–772 (2009)

Candès, E.J., Tao, T.: The power of convex relaxation: near-optimal matrix completion. IEEE Trans. Inf. Theory 56(5), 2053–2080 (2010)

Gerfo, L.L., Rosasco, L., Odone, F., Vito, E.D., Verri, A.: Spectral algorithms for supervised learning. Neural Comput. 20(7), 1873–1897 (2008)

Guo, Z.-C., Xiang, D.-H., Guo, X., Zhou, D.-X.: Thresholded spectral algorithms for sparse approximations. Anal. Appl. 15(03), 433–455 (2017)

Kakade, S.M., Shalev-Shwartz, S., Tewari, A.: Regularization techniques for learning with matrices. J. Mach. Learn. Res. 13, 1865–1890 (2012)

Lei, Y., Zhou, D.-X.: Analysis of online composite mirror descent algorithm. Neural Comput. 29(3), 825–860 (2017)

Lei, Y., Zhou, D.-X.: Convergence of online mirror descent. Appl. Comput. Harmon. Anal. (2018). https://doi.org/10.1016/j.acha.2018.05.005

Lei, Y., Zhou, D.-X.: Learning theory of randomized sparse Kaczmarz method. SIAM J. Imaging Sci. 11(1), 547–574 (2018)

Lin, J., Zhou, D.-X.: Learning theory of randomized Kaczmarz algorithm. J. Mach. Learn. Res. 16, 3341–3365 (2015)

Minh, H.Q.: Infinite-dimensional log-determinant divergences between positive definite trace class operators. Linear Algebra Appl. 528, 331–383 (2017)

Nemirovsky, A.-S., Yudin, D.-B.: Problem Complexity and Method Efficiency in Optimization. Wiley, New York (1983)

Nesterov, Y.: Introductory Lectures on Convex Optimization: A Basic Course. Springer, New York (2013)

Recht, B., Fazel, M., Parrilo, P.A.: Guaranteed minimum-rank solutions of linear matrix equations via nuclear norm minimization. SIAM Rev. 52(3), 471–501 (2010)

Rockafellar, R.T.: Convex Analysis. Princeton University Press, Princeton (1970)

Steinwart, I., Christmann, A.: Support Vector Machines. Springer, Berlin (2008)

Strohmer, T., Vershynin, R.: A randomized Kaczmarz algorithm with exponential convergence. J. Fourier Anal. Appl. 15(2), 262 (2009)

Yin, W., Osher, S., Goldfarb, D., Darbon, J.: Bregman iterative algorithms for \(\ell _1\)-minimization with applications to compressed sensing. SIAM J. Imaging Sci. 1(1), 143–168 (2008)

Ying, Y., Zhou, D.-X.: Unregularized online learning algorithms with general loss functions. Appl. Comput. Harmon. Anal. 42(2), 224–244 (2017)

Additional information

Communicated by Roman Vershynin.

The work described in this paper is partially supported by the National Natural Science Foundation of China (Grant No. 61806091), and the NSFC/RGC Joint Research Scheme [RGC Project No. 11338616 and NSFC Project No. 11461161006].

Appendix: Proof of Lemma 6

We first prove part (a). Since \(\sup _k\delta _k<2/L\), there exists a \(\gamma \in (0,2)\) such that \(\delta _k\le (2-\gamma )/L\) for all \(k\in \mathbb {N}\). According to the iteration (19), we know

Since f is convex and L-strongly smooth, the co-coercivity of \(\nabla f\) implies (see, e.g., Theorem 2.1.5 in [17])

Plugging this inequality back into (27) and using \(\nabla f(\lambda ^\star )=0\), we derive

where we have used the Jensen inequality and \(\delta _k<2/L\) in the second inequality and \(\delta _k\le (2-\gamma )/L\) in the last inequality. It then follows that

Furthermore, it follows from Lemma 3(c) and the iteration (19) that

The assumption \(\delta _k<2/L\) then implies \(f(\lambda ^{k+1})\le f(\lambda ^k)\) for all \(k\in \mathbb {N}\). This monotonicity together with (28) then shows (20) with \(\widetilde{C}=\Vert \lambda ^\star \Vert _2^2/\gamma \).

We now prove part (b) under the assumption \(\sup _k\delta _k\le 1/L\). An application of the Jensen inequality in (29) then implies

where we have used \(\delta _k\le 1/L\) in the second inequality and (27) in the last identity. It then follows that

which together with the monotonicity of \(f(\lambda ^k)\) implies the stated inequality (20) with \(\widetilde{C}=\Vert \lambda ^\star \Vert _2^2/2\). The proof of Lemma 6 is complete. \(\square \)

Cite this article

Lei, Y., Zhou, DX. Analysis of Singular Value Thresholding Algorithm for Matrix Completion. J Fourier Anal Appl 25, 2957–2972 (2019). https://doi.org/10.1007/s00041-019-09688-8

DOI: https://doi.org/10.1007/s00041-019-09688-8