Abstract

Spectral collocation and reconstruction methods have been widely studied for periodic functions using Fourier expansions. We investigate the use of cosine series for the approximation and collocation of multivariate non-periodic functions whose frequency support is mainly determined by hyperbolic crosses. We seek methods that work for an arbitrary number of dimensions. We show that applying the tent-transformation to rank-1 lattice points makes them suitable as collocation/sampling points for the approximation of non-periodic functions, with perfect numerical stability. Moreover, we show that the approximation degree of the tent-transformed lattice point set with respect to cosine series (in the sense of approximating inner products of basis functions up to a certain degree exactly) is the same as the approximation degree of the original lattice point set with respect to Fourier series, although the error can still be reduced in the case of cosine series. A component-by-component algorithm is studied to construct such a point set. We are then able to reconstruct a non-periodic function from its samples and to approximate the solutions of certain PDEs subject to Neumann and Dirichlet boundary conditions. Finally, we present some numerical results.


1 Introduction

Cosine series are used for the expansion of non-periodic functions on the \(d\)-dimensional unit cube. They are the eigenfunctions of the Laplace differential operator with homogeneous Neumann boundary conditions. Cosine series are also sometimes referred to as modified Fourier series, see [1, 10]. The half-period cosine functions form an orthonormal basis of \(L_2([0,1])\) and are given by

\[ \phi_0(x) = 1, \qquad \phi_k(x) = \sqrt{2}\,\cos(\pi k x), \quad k \in \mathbb{N}. \tag{1} \]

Here \(\mathbb {N}:\,= \{1,2,\ldots \}\) is the set of natural numbers. We will further write \(\mathbb {Z}\) for the set of integers, \(\mathbb {Z}_+ :\,= \{0,1,\ldots \}\) for the set of non-negative integers and \(\mathbb {Z}_N :\,= \{0,1,\ldots ,N-1\}\) for the set of integers reduced modulo \(N\).
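As a quick numerical sanity check of the orthonormality of (1), the following minimal Python/NumPy sketch approximates the Gram matrix \(\int_0^1 \phi_j(x)\,\phi_k(x)\,\mathrm{d}x\) with Gauss-Legendre quadrature. The function names are our own, and the number of quadrature nodes is an assumption chosen to be ample for small \(k\).

```python
import numpy as np

def cosine_basis(k: int, x: np.ndarray) -> np.ndarray:
    """Half-period cosine basis on [0, 1]: phi_0 = 1, phi_k = sqrt(2) cos(pi k x)."""
    if k == 0:
        return np.ones_like(x)
    return np.sqrt(2.0) * np.cos(np.pi * k * x)

def gram_matrix(K: int, n_quad: int = 64) -> np.ndarray:
    """G[j, k] ~ int_0^1 phi_j(x) phi_k(x) dx for 0 <= j, k <= K (Gauss-Legendre)."""
    nodes, weights = np.polynomial.legendre.leggauss(n_quad)
    x = 0.5 * (nodes + 1.0)      # map nodes from [-1, 1] to [0, 1]
    w = 0.5 * weights            # rescale weights for the shorter interval
    Phi = np.array([cosine_basis(k, x) for k in range(K + 1)])
    return (Phi * w) @ Phi.T
```

For example, `gram_matrix(8)` should be numerically equal to the \(9 \times 9\) identity matrix.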

Cosine series have been widely studied in the context of non-periodic functions, where traditional Fourier series suffer from the well-known Gibbs phenomenon due to the lack of periodicity. Cosine series and spectral methods using them have been studied in depth in [1, 10] and their successors. Multivariate cosine expansions have been studied alongside hyperbolic cross approximations in [1] and [2]; in [1], cosine series and spectral Galerkin methods have also been used to solve boundary value problems. Cosine series expansions have also been used in financial mathematics, e.g., to solve stochastic control problems in [20].

Here, we focus on multivariate pseudospectral methods, and specifically on collocation methods where lattice points are used as sampling nodes. Our motivation comes from recent developments showing that rank-1 lattice points are well suited as collocation points in a periodic setting. Li and Hickernell [16] introduced a spectral collocation method with a Fourier basis in which samples of the input function on a rank-1 lattice point set are used to approximate the solution of PDEs such as the Poisson equation in \(d\) dimensions. Kämmerer [13] presented an approach for reconstructing multivariate (periodic) trigonometric polynomials supported on a hyperbolic cross by sampling them on rank-1 lattices; this approach is shown to achieve a perfectly stable reconstruction. Finally, [8] shows that the worst-case error for cubature in the cosine series space using tent-transformed rank-1 lattice rules can be related to that of rank-1 lattice rules in a certain periodic function space.

The above research leads us to use tent-transformed rank-1 lattice points (see Definition 6) as collocation/sampling nodes in the approximation of multivariate non-periodic functions by cosine series. We study two problems in the approximation of non-periodic functions: the stable reconstruction of a function from its samples, and a spectral collocation method for finding the approximate solution of a PDE. We show that the samples of a function on tent-transformed rank-1 lattice points can be used to obtain a stable reconstruction of the function. We reconstruct the function from these samples by computing its cosine coefficients on a weighted hyperbolic cross (see Definition 3), which helps reduce the number of degrees of freedom. We also show that these coefficients can be evaluated efficiently using a one-dimensional FFT. Finally, we show that the same concepts extend to a spectral collocation method in which tent-transformed rank-1 lattice points are used as collocation points.

2 Background and Notation

In this section we introduce the concepts and notation required for the paper.

2.1 Series Expansion

The basis functions (1) of the cosine series can also be defined in the following form on the alternative domain \(t \in [-1,1]\), see [1, 10],

With the simple transformation \(t = 2x -1\), we arrive at the equivalent cosine series in our domain for \(x \in [0,1]\). The latter choice is convenient when working with rank-1 lattice points, which are the building blocks for our sampling points. Note that the \(\psi \)’s correspond to the even indices in (1) and \(\eta \)’s correspond to the odd indices in (1).

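For the \(d\)-dimensional case, we use the tensor product of the one-dimensional basis functions:

\[ \phi_{\varvec{k}}(\varvec{x}) = \prod_{j=1}^{d} \phi_{k_j}(x_j) = \left(\sqrt{2}\right)^{|\varvec{k}|_0} \prod_{j=1}^{d} \cos(\pi k_j x_j), \qquad \varvec{k}\in \mathbb{Z}_+^d, \]

where we denote by \({|\varvec{k}|_0}\) the number of non-zero elements in \(\varvec{k}\).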

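The cosine series expansion of a \(d\)-variate function \(f \in L_2([0,1]^d)\) converges to \(f\) in the \(L_2\) norm; if, additionally, \(f\) is continuously differentiable, we have uniform pointwise convergence and \(f\) can be expressed as the cosine series expansion, see [1, 10]:

\[ f(\varvec{x}) = \sum_{\varvec{k}\in \mathbb{Z}_+^d} \hat{f}(\varvec{k})\, \phi_{\varvec{k}}(\varvec{x}), \tag{2} \]

where \(\hat{f}\) is the cosine transform of \(f\), given by

\[ \hat{f}(\varvec{k}) = \int_{[0,1]^d} f(\varvec{x})\, \phi_{\varvec{k}}(\varvec{x}) \,\mathrm{d}\varvec{x}. \tag{3} \]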
2.2 Function Space Setting

Hyperbolic crosses have been widely used to decrease the number of degrees of freedom by taking into account the decay properties of the Fourier coefficients of multivariate functions in cubature and approximation problems, see, e.g., [6] and [13]. Weights were introduced along with hyperbolic crosses for truncated series representations in [15] and [6], for approximation and integration respectively, and this helped overcome the exponential growth in the number of degrees of freedom. For a detailed discussion on weights, as they appear in weighted norms, i.e., in terms of weighted function spaces, see [7, 22].

We will introduce two types of weights: \(\varvec{\beta }\) weights to control the basis functions appearing in the truncated series and \(\varvec{\gamma }\) weights to control the contribution of the different basis functions (dimension-wise) in the norm of the space. This setup of weights was introduced in [6].

We first define a function space for which the cosine coefficients decay sufficiently fast at an algebraic rate, controlled by a smoothness parameter \(\alpha > 1/2\). Next we define the Korobov space which is a function space of periodic functions with a similar condition on the Fourier coefficients. All our function spaces are reproducing kernel Hilbert spaces. Since we will introduce similar symbols for the periodic and non-periodic cases, the periodic case symbols will be marked with a tilde on top. First we define

where the weights \(\varvec{\gamma }\) can also be replaced by the weights \(\varvec{\beta }\) when needed. The half-period cosine space, or cosine space for short (studied in [8]), is then defined as follows.

Definition 1

For \(\alpha > 1/2\) the (half-period) cosine space is defined by

with weights \(\varvec{\gamma }\) for which we assume \(1 \ge \gamma _1 \ge \gamma _2 \ge \cdots > 0\).

We remark that for \(\alpha = 1\), we recover the unanchored Sobolev space of (dominating mixed) smoothness 1, see [8]; the norm in this case is given by

Similarly, we define the Korobov space of periodic functions which makes use of the Fourier coefficients of \(f\) for \(\varvec{h}\in \mathbb {Z}^d\):

Note that the cosine coefficients are marked with a hat and the Fourier coefficients are marked with a tilde.

Definition 2

For \(\alpha > 1/2\) the Korobov space is defined by

with weights \(\varvec{\gamma }\) for which we assume \(1 \ge \gamma _1 \ge \gamma _2 \ge \cdots > 0\).

To approximate a function \(f \in C_{d,\alpha ,\varvec{\gamma }}\), we will look at an \(n\)-term approximation, where \(n\) is the number of basis functions with indices \(\varvec{k}\in \mathbb {Z}_+^d\) inside a certain weighted hyperbolic cross \(H_T^{d,\varvec{\beta }} \subset \mathbb {Z}_+^d\) of degree \(T \ge 0\).

Definition 3

The weighted hyperbolic cross \(H_T^{d,\varvec{\beta }}\) (on the positive hyperoctant) is defined by

with \(T \in \mathbb {R}\), \(T \ge 0\), being the degree of the hyperbolic cross, and weights \(1 \ge \beta _1 \ge \beta _2 \ge \cdots > 0\).

The degree \(T\) controls the number of terms in the approximation. An \(n\)-term approximation to \(f \in C_{d,\alpha ,\varvec{\gamma }}\) up to degree \(T\), where \(n=n(T)=|H_T^{d,\varvec{\beta }}|\), can then be written as

Likewise, for the periodic case we have the following definition:

Definition 4

The weighted hyperbolic cross \(\widetilde{H}_T^{d,\varvec{\beta }}\) for the periodic case is given by

with \(T \in \mathbb {R}\), \(T \ge 0\), being the degree of the hyperbolic cross, and weights \(1 \ge \beta _1 \ge \beta _2 \ge \cdots > 0\).

2.3 Spectral Methods

Consider a problem of the form \( \mathcal {L}u(\varvec{x}) = f(\varvec{x})\) where \(\mathcal {L}\) is a bounded linear operator. We want to find an approximation to the solution \(u(\varvec{x})\), given samples of the input function \(f(\varvec{x})\) and assuming \(u(\varvec{x}) \in C_{d,\alpha ,\varvec{\gamma }}\) has an \(n\)-term approximation

and for which \(\lim _{n \rightarrow \infty } u_n = u\). Pseudospectral or collocation methods make the so-called residual function \(R(\varvec{x}) :\,= \mathcal {L}u_n(\varvec{x}) - f(\varvec{x})\) vanish at a set of selected points called the collocation points.

Spectral methods such as the Galerkin method calculate the coefficients \(\hat{u}_{\varvec{k}}\) such that the residual is orthogonal to the approximation space,

If the cubature points used to approximate the integrals in the Galerkin method are suitably chosen, the resulting approximation is identical to that of the collocation method using the same points as collocation points. The reconstruction methods we propose belong to this category, so we seek sampling points that integrate as many inner products of basis functions exactly as possible.

Note that, for functions with decaying spectral coefficients, if we were to evaluate the coefficients exactly, \(u_n(\varvec{x})\) would be the best \(n\)-term approximation to \(u\) in the \(L_2\) norm. In practice, however, we incur both truncation and cubature errors. The truncation error can be controlled by adjusting \(T\), while the cubature error is controlled by applying cubature rules that integrate the inner products of basis functions from the hyperbolic cross exactly.

2.4 Rank-1 Lattice Point Sets and the Tent-Transformation

Rank-1 lattice rules have been widely studied in the context of multivariate cubature, see [5, 18, 21] and in function approximation, see [15–17]. A rank-1 lattice point set is defined as follows:

Definition 5

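For a given \(N \in \mathbb {N}\) and \(\varvec{z}\in \mathbb {Z}_N^d\), a rank-1 lattice point set \(\Lambda (\varvec{z}, N)\) is given by

\[ \Lambda(\varvec{z}, N) :\,= \left\{ \frac{n\,\varvec{z} \bmod N}{N} : n \in \mathbb{Z}_N \right\}, \]

and \(\varvec{z}\) is called the generating vector.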

The tent-transformation, \(\varphi : [0,1] \rightarrow [0,1]\), is given by

\[ \varphi(x) = 1 - |2x - 1|, \tag{6} \]

and is applied to each coordinate separately when applied to a vector: \(\varphi (\varvec{x}) = (\varphi (x_1), \ldots , \varphi (x_d))\). It is easy to check that \(\cos (\pi k \varphi (x)) = \cos (2\pi k x)\) for \(k \in \mathbb {Z}\). Loosely speaking, we apply the tent-transformation to obtain sampling points that are symmetric around the midpoint, while still benefiting from the properties of lattice points when used with traditional Fourier series. Applying the tent-transform to the points is a convenient way of creating an even extension of the function by putting the symmetry in the point set, and it offers an easy pathway to analysing such point sets in the cosine space. The benefits of using this transform for integrating non-periodic functions can be found in [8, 9, 18].
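The identity \(\cos(\pi k \varphi(x)) = \cos(2\pi k x)\) and the symmetry \(\varphi(x) = \varphi(1-x)\) can be checked numerically. The following is a minimal Python/NumPy sketch; `tent` and `check_tent_identity` are illustrative names of our own.

```python
import numpy as np

def tent(x):
    """Tent transformation phi(x) = 1 - |2x - 1|, applied elementwise (also to vectors)."""
    return 1.0 - np.abs(2.0 * np.asarray(x, dtype=float) - 1.0)

def check_tent_identity(k_max: int = 6, n: int = 1001) -> bool:
    """Check cos(pi k phi(x)) == cos(2 pi k x) and phi(x) == phi(1 - x) on a grid."""
    x = np.linspace(0.0, 1.0, n)
    same_cos = all(np.allclose(np.cos(np.pi * k * tent(x)), np.cos(2 * np.pi * k * x))
                   for k in range(k_max + 1))
    symmetric = np.allclose(tent(x), tent(1.0 - x))
    return bool(same_cos and symmetric)
```

The identity follows from \(\cos(\pi k(1-|2x-1|)) = (-1)^k \cos(\pi k (2x-1)) = \cos(2\pi k x)\), which is what the grid check confirms.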

Definition 6

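For a given \(N \in \mathbb {N}\) and \(\varvec{z}\in \mathbb {Z}_N^d\), the tent-transformed rank-1 lattice point multiset \(\Lambda _{\varphi }(\varvec{z}, N)\) is given by

\[ \Lambda_{\varphi}(\varvec{z}, N) :\,= \left\{ \varphi(\varvec{x}) : \varvec{x}\in \Lambda(\varvec{z}, N) \right\}. \]
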
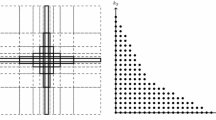

Figure 1 shows that the number of distinct spatial points is reduced by applying the tent-transformation. The next proposition shows that the number of distinct points is essentially halved.

Proposition 1

For a given \(N \in \mathbb {N}\) and \(\varvec{z}\in \mathbb {Z}_N^d\) such that \(\gcd (N,z_1,\ldots ,z_d) = 1\), and the corresponding \(N\)-point rank-1 lattice point set \(\Lambda (\varvec{z}, N)\), the number of distinct points in the multiset \(\Lambda _{\varphi }(\varvec{z}, N)\) obtained by applying the tent-transformation to \(\Lambda (\varvec{z}, N)\) is \(\left\lfloor N/2+1 \right\rfloor \).

Proof

Since \(\gcd (N,z_j) = 1\) for at least one \(j\), with \(1 \le j \le d\), we have \(N\) distinct points in \(\Lambda (\varvec{z}, N)\). We will write \(\{x\} :\,= x \mod 1\) to concisely denote the fractional part of \(x\). We also have \(1 - \{ N^{-1} n z_j \} = \{ N^{-1} (N-n) z_j \}\). Now consider the 1-dimensional projection of the points in the lattice point set \(\Lambda (\varvec{z}, N)\) to a dimension \(j\) with \(\gcd (N, z_j) = 1\). For odd \(N\), these are given by:

and for even \(N\) we have

In dimension \(j\), every \(x \ne 0\), \(x\ne 1/2\) occurs together with \(1-x\), the two lying in different halves of the interval. By the symmetry \(\varphi(x) = \varphi(1-x)\) of (6), the tent-transform maps these halves to \((N-1)/2\) points with multiplicity exactly two for odd \(N\), and to \((N-2)/2\) such points for even \(N\). This leads to \((N-1)/2+1\) distinct points for odd \(N\) and \((N-2)/2+2\) distinct points for even \(N\) in dimension \(j\); both equal \(\left\lfloor N/2+1 \right\rfloor \). This multiplicity is present in all dimensions \(1 \le j \le d\) simultaneously for the pairs \(x_j^{(n)} = \{ N^{-1} n z_j \}\) and \(x_j^{(N-n)} = \{ N^{-1} (N-n) z_j \} = 1 - x_j^{(n)}\) at the same indices \(n\), and thus the result follows. For \(j\) with \(g_j = \gcd (N, z_j) > 1\), the multiplicity in that dimension is multiplied by \(g_j\), but this does not change the result. \(\square \)
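Proposition 1 can be checked for small cases by brute force. The minimal Python/NumPy sketch below works with the exact integers \(N\varphi(\{n z_j/N\}) = N - |2(n z_j \bmod N) - N|\), so that coinciding points are detected without floating-point ties. The function names and the generating vectors used in the usage example are hypothetical choices of our own (with \(z_1 = 1\), so the gcd condition always holds).

```python
import numpy as np

def tent_lattice_numerators(z, N):
    """Exact integers N * phi({n z / N}) for n = 0..N-1 (one row per lattice point)."""
    m = (np.arange(N)[:, None] * np.asarray(z)[None, :]) % N   # N * {n z / N}
    return N - np.abs(2 * m - N)                                # N * (1 - |2x - 1|)

def count_distinct_tent_points(z, N) -> int:
    """Number of distinct points in the multiset Lambda_phi(z, N)."""
    return len({tuple(row) for row in tent_lattice_numerators(z, N).tolist()})
```

For instance, `count_distinct_tent_points((1, 3, 5), N)` should equal `N // 2 + 1`, i.e., \(\lfloor N/2+1 \rfloor\), for every \(N \ge 2\).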

To approximate integrals (and inner products) we will consider \(N\)-point cubature rules \(Q_N\), which are linear combinations of function values:

\[ Q_N(f) = \sum_{n=0}^{N-1} w_n\, f(\varvec{x}^{(n)}), \]

where \(\varvec{x}^{(0)},\ldots ,\varvec{x}^{(N-1)}\) are the sampling points and \(w_0,\ldots ,w_{N-1}\) are the weights. A lattice rule is then a cubature rule that uses points from a lattice \(\Lambda (\varvec{z}, N)\) with equal weights of \(1/N\) and we will denote its application to a function \(f\) by \(Q_N(f;\varvec{z})\). Likewise, a tent-transformed lattice rule uses points from the tent-transformed point multiset \(\Lambda _{\varphi }(\varvec{z}, N)\), conveniently denoted as \(Q_N(f \circ \varphi ;\varvec{z})\), again with equal weights \(1/N\), or, using the result of Proposition 1, with \(\left\lfloor N/2+1 \right\rfloor \) points and adjusted weights.
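The reduced form of a tent-transformed lattice rule, with \(\lfloor N/2+1 \rfloor\) distinct points and weights equal to multiplicity divided by \(N\), can be sketched as follows. This is a minimal Python/NumPy illustration under our own naming (`tent_lattice_rule`, `apply_rule` are hypothetical); by construction it gives the same value as the equal-weight rule over all \(N\) points of \(\Lambda_\varphi(\varvec{z}, N)\).

```python
from collections import Counter
import numpy as np

def tent_lattice_rule(z, N):
    """Distinct points of Lambda_phi(z, N) with weights multiplicity / N."""
    m = (np.arange(N)[:, None] * np.asarray(z)[None, :]) % N
    num = N - np.abs(2 * m - N)                          # N * phi({n z / N}), exact
    counts = Counter(tuple(row) for row in num.tolist())
    points = np.array(list(counts.keys()), dtype=float) / N
    weights = np.array(list(counts.values()), dtype=float) / N
    return points, weights

def apply_rule(f, points, weights) -> float:
    """Q_N(f o phi; z) = sum_i w_i f(y_i); f maps an (M, d) array to M values."""
    return float(np.sum(weights * f(points)))
```

Only about half as many function evaluations are needed, which matters when samples are expensive.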

The quality of a cubature rule is often described by the largest degree (of, e.g., the hyperbolic cross) for which it integrates all the basis functions of that degree or less exactly. Similarly, we define an approximation degree based on the exact approximation of the inner products of the basis functions, and this will be useful in the next section. In what follows, we will write \(H_{\star }^{d,\varvec{\beta }}\) to denote \(H_{T}^{d,\varvec{\beta }}\) for arbitrary \(T\).

Definition 7

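The approximation degree \(\mathcal {T}(Q_N) = \mathcal {T}(Q_N, H_{\star }^{d,\varvec{\beta }},\{\phi _{\varvec{k}}\}_{\varvec{k}})\) of a cubature rule \(Q_N\) with respect to the weighted hyperbolic cross \(H_T^{d,\varvec{\beta }}\) and the set of basis functions \(\{\phi _{\varvec{k}}\}_{\varvec{k}}\) is the largest \(T \ge 0\) for which

\[ Q_N(\phi_{\varvec{k}}\, \phi_{\varvec{\ell}}) = \int_{[0,1]^d} \phi_{\varvec{k}}(\varvec{x})\, \phi_{\varvec{\ell}}(\varvec{x}) \,\mathrm{d}\varvec{x} = \delta_{\varvec{k},\varvec{\ell}} \quad \text{for all } \varvec{k}, \varvec{\ell}\in H_T^{d,\varvec{\beta}}. \tag{8} \]
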
Note that this definition applies with the obvious changes to the periodic case, using \(\widetilde{H}_{T}^{d,\varvec{\beta }}\) and the basis functions \(\{ \exp (2 \pi \mathrm {i}\,\varvec{h}\cdot \varvec{x}) \}_{\varvec{h}}\), as well as to other arbitrary index sets and bases. We denote the degree in the periodic setting by \(\widetilde{\mathcal {T}}(Q_N) = \mathcal {T}(Q_N, \widetilde{H}_{\star }^{d,\varvec{\beta }},\{\exp (2 \pi \mathrm {i}\,\varvec{h}\cdot \varvec{x})\}_{\varvec{h}})\). In [8], embeddings between the cosine space, the Korobov space and the unanchored Sobolev space are studied. Building on this, we are interested in which properties of lattice rules carry over from the periodic case to the non-periodic case. It is now easy to show that the approximation degree of a tent-transformed lattice rule is at least the approximation degree of the corresponding lattice rule in the periodic case. This is stated in the next lemma. In Theorem 1 we will show that they actually coincide.

Lemma 1

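For a dimension \(d \ge 1\) and a set of weights \(\varvec{\beta }\), we have

\[ \mathcal{T}\big(Q_N(\cdot \circ \varphi;\varvec{z})\big) \ge \widetilde{\mathcal{T}}\big(Q_N(\cdot\,;\varvec{z})\big). \]
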
Proof

Starting with Definition 7 we can immediately write

where \(\phi _{\varvec{k}}(\varphi (\varvec{x}))\) and \(\phi _{\varvec{\ell }}(\varphi (\varvec{x}))\) are both in the span of \(\{\exp (2 \pi \mathrm {i}\,\varvec{h}\cdot \varvec{x})\}\) for \(\varvec{h}\in \widetilde{H}_T^{d,\varvec{\beta }}\) whenever \(\varvec{k}, \varvec{\ell }\in H_T^{d,\varvec{\beta }}\) since \(\cos (\pi k \varphi (x)) = \cos (2\pi k x)\) for \(k \in \mathbb {Z}\). This proves the claim. \(\square \)

3 Function Reconstruction and Approximation

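The reconstruction and approximation of a function \(f\) involves calculating its series coefficients from samples of the function at a specific point set. Denoting by \(\hat{f}_a\) the approximation of the cosine coefficients of \(f \in C_{d,\alpha ,\varvec{\gamma }}\), we can define an approximation \(f_a\) by

\[ f_a(\varvec{x}) = \sum_{\varvec{k}\in H_T^{d,\varvec{\beta}}} \hat{f}_a(\varvec{k})\, \phi_{\varvec{k}}(\varvec{x}), \tag{9} \]

where the cosine coefficients are approximated by a cubature rule

\[ \hat{f}_a(\varvec{k}) = Q_N(f\, \phi_{\varvec{k}}) \approx \hat{f}(\varvec{k}). \]
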
Note that, expanding \(f\) back in terms of its cosine series (2) in (3), gives us

where the last integral is unity for \(\varvec{k}= \varvec{\ell }\), and vanishes otherwise, due to the orthonormality of the basis. On the other hand we have for the approximation of the coefficients,

where the last equality is valid when \(Q_N\) has an approximation degree of at least \(T\) with respect to \(H_\star ^{d,\varvec{\beta }}\) and \(\{\phi _{\varvec{k}}\}_{\varvec{k}}\). Comparing (11) with (10), we seek a cubature rule which satisfies the discrete orthogonality condition \(Q_N( \phi _{\varvec{\ell }} \, \phi _{\varvec{k}} ) = \delta _{\varvec{\ell },\varvec{k}}\) as far as possible (\(\delta \) being the Kronecker delta); this is the motivation for the definition of approximation degree in Definition 7. From (12) we note that \(\hat{f}_a(\varvec{k})\) will be contaminated by other coefficients; this is called aliasing. For a function with support restricted to \(H_T^{d,\varvec{\beta }}\), the condition that \(Q_N\) has approximation degree \(T\) guarantees that there is no aliasing. For functions with wider support, we are guaranteed that any aliasing error comes from indices outside \(H_T^{d,\varvec{\beta }}\). Under the assumption that the spectral coefficients decay sufficiently fast and the series expansion converges, this error is small and can be controlled by the choice of \(H_T^{d,\varvec{\beta }}\). As mentioned earlier, in the absence of cubature error, (9) would be the best \(n\)-term approximation (\(n = |H_T^{d,\varvec{\beta }}|\)) possible with respect to the \(L_2\) norm; by choosing cubature rules that result in low aliasing, we control the additional error due to cubature.

3.1 The Difference Set

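We use as building blocks the concepts described in [13], where trigonometric polynomials with support on a weighted hyperbolic cross \(\widetilde{H}_T^{d,\varvec{\beta }}\) are reconstructed using rank-1 lattice points as sampling nodes. There, too, a discrete inner product condition similar to (8) appears, which requires the following criterion to be met for exact reconstruction using a lattice rule: for all \(\varvec{k},\varvec{\ell }\in \widetilde{H}_T^{d,\varvec{\beta }}\)

\[ \frac{1}{N}\sum_{n=0}^{N-1} \mathrm{e}^{2\pi\mathrm{i}\, n\,(\varvec{k}-\varvec{\ell})\cdot\varvec{z}/N} = \delta_{\varvec{k},\varvec{\ell}}, \quad\text{i.e.,}\quad (\varvec{k}-\varvec{\ell})\cdot\varvec{z}\not\equiv 0 \pmod{N} \ \text{ for } \varvec{k}\ne\varvec{\ell}. \tag{13} \]

A difference set \(\widetilde{\mathcal {D}}_T^{d,\varvec{\beta }}\) is then defined by

\[ \widetilde{\mathcal{D}}_T^{d,\varvec{\beta}} :\,= \left\{ \varvec{k}-\varvec{\ell} : \varvec{k}, \varvec{\ell}\in \widetilde{H}_T^{d,\varvec{\beta}} \right\}, \tag{14} \]
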
and it follows that if a lattice rule using the point set \(\Lambda (\varvec{z}, N)\) integrates all the frequencies in the set \(\widetilde{\mathcal {D}}_T^{d,\varvec{\beta }}\) exactly, it can be used to evaluate the coefficients for all \(\varvec{k}\in \widetilde{H}_T^{d,\varvec{\beta }}\) exactly (with no aliasing from other frequencies). The following lemmas are used to build a similar difference set in our case.
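The difference-set criterion is easy to check by brute force for small index sets: a lattice rule integrates \(\exp(2\pi\mathrm{i}\,\varvec{h}\cdot\varvec{x})\) exactly for \(\varvec{h}\ne\varvec{0}\) precisely when \(\varvec{h}\cdot\varvec{z}\not\equiv 0 \pmod N\). The minimal Python sketch below is ours; the index set \(\{-1,0,1\}^2\) in the usage example is a hypothetical full grid, not a hyperbolic cross.

```python
import itertools

def difference_set(H_tilde):
    """D = {k - l : k, l in H_tilde} for a finite (signed) index set H_tilde."""
    H = [tuple(h) for h in H_tilde]
    return {tuple(a - b for a, b in zip(k, l)) for k in H for l in H}

def lattice_integrates(D, z, N) -> bool:
    """True if h . z is nonzero mod N for every nonzero h in D, i.e. the N-point
    lattice rule with generating vector z integrates exp(2 pi i h . x) exactly."""
    return all(sum(hj * zj for hj, zj in zip(h, z)) % N != 0 for h in D if any(h))

# Hypothetical example: H_tilde = {-1, 0, 1}^2, so D = {-2, ..., 2}^2
D = difference_set(itertools.product(range(-1, 2), repeat=2))
```

Here \(\varvec{z} = (1,5)\) with \(N = 13\) passes, whereas \(\varvec{z} = (1,1)\) fails because \((1,-1)\cdot(1,1) = 0\).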

Lemma 2

Let \(\varphi (x)\) be the tent-transform function as in (6). Then for any \(\varvec{k}, \varvec{\ell }\in \mathbb {Z}_+^d\), we have

where \(\varvec{\sigma }\) and \(\varvec{\sigma }'\) are the sets of all possible sign combinations on the indices of \(\varvec{\ell }\) and \(\varvec{k}\) respectively.

Proof

Expanding the cosine functions in terms of exponentials we have,

\(\square \)

Condition (8) and Lemma 2 then lead to the following lemma:

Lemma 3

Let \(\Lambda _{\varphi }(\varvec{z}, N)\) be the point multiset obtained by applying the tent-transform (6) to a rank-1 lattice point set \(\Lambda (\varvec{z}, N)\), and \(H_T^{d,\varvec{\beta }}\) a \(d\)-dimensional hyperbolic cross on the positive hyperoctant as in (4). If \(\Lambda (\varvec{z}, N)\) is such that \(\forall \varvec{k},\varvec{\ell }\in H_T^{d,\varvec{\beta }}\)

then \(\Lambda _{\varphi }(\varvec{z}, N)\) satisfies (8).

Proof

Consider a cubature rule using \(\Lambda _{\varphi }(\varvec{z}, N)\) to approximate the inner products in (11). Using Lemma 2, we get:

\(\square \)

Lemma 3 leads us to defining the following difference set:

From (15) it follows that if a lattice rule using the point set \(\Lambda (\varvec{z}, N)\) integrates all the frequencies in \(\mathcal {D}_T^{d,\varvec{\beta }}\) exactly, \(\Lambda _{\varphi }(\varvec{z}, N)\) will satisfy the orthogonality condition in (8).

Lemma 4

The difference sets \(\widetilde{\mathcal {D}}_T^{d,\varvec{\beta }}\) and \(\mathcal {D}_{T}^{d,\varvec{\beta }}\) as defined in (14) and (16), respectively, are identical.

Proof

We compare the difference sets in the two cases: since \(\widetilde{H}_T^{d,\varvec{\beta }}\) is symmetric with respect to each of the \(d\) coordinate axes, and \(H_T^{d,\varvec{\beta }}\) has support only in the positive hyperoctant, the following holds

On the other hand we can also write \(\widetilde{H}_T^{d,\varvec{\beta }} = \{\varvec{\sigma }(\varvec{k}) : \varvec{k}\in H_T^{d,\varvec{\beta }}, \varvec{\sigma }\in \{\pm 1\}^d \}\). We thus have

\(\square \)
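Lemma 4 can be illustrated numerically for a small index set. The Python sketch below builds \(\widetilde{\mathcal{D}}\) from the signed set \(\{\varvec{\sigma}(\varvec{k})\}\) and, separately, the set of all \(\varvec{\sigma}(\varvec{k}) - \varvec{\sigma}'(\varvec{\ell})\); we assume here that (16) has this form, as suggested by Lemma 2. The set `H` in the usage example is a hypothetical hyperbolic-cross-shaped set in the positive quadrant.

```python
import itertools

def signed_set(H):
    """H_tilde = {sigma(k) : k in H, sigma in {+1, -1}^d} (componentwise sign flips)."""
    d = len(next(iter(H)))
    return {tuple(s * kj for s, kj in zip(sig, k))
            for k in H for sig in itertools.product((1, -1), repeat=d)}

def difference_set_periodic(H):
    """Differences of elements of the signed set, cf. (14)."""
    Ht = signed_set(H)
    return {tuple(a - b for a, b in zip(k, l)) for k in Ht for l in Ht}

def difference_set_cosine(H):
    """sigma(k) - sigma'(l) over k, l in H and all sign patterns (assumed form of (16))."""
    d = len(next(iter(H)))
    signs = list(itertools.product((1, -1), repeat=d))
    return {tuple(s * a - t * b for s, t, a, b in zip(sig, tau, k, l))
            for k in H for l in H for sig in signs for tau in signs}

# Hypothetical index set on the positive quadrant
H = {(0, 0), (1, 0), (2, 0), (3, 0), (0, 1), (0, 2), (1, 1)}
```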

Lemma 4 is illustrated in Fig. 2 in 2 dimensions. We hence arrive at the following theorem.

Theorem 1

Let \(\Lambda _{\varphi }(\varvec{z}, N)\) be obtained by applying the tent-transformation to the rank-1 lattice point set \(\Lambda (\varvec{z}, N)\), where \(\varvec{z}\) and \(N\) are as before. The approximation degree of the tent-transformed lattice rule using \(\Lambda _{\varphi }(\varvec{z}, N)\) with respect to the cosine series is equal to the approximation degree of the lattice rule using \(\Lambda (\varvec{z}, N)\) with respect to the Fourier series, i.e.,

\[ \mathcal{T}\big(Q_N(\cdot \circ \varphi;\varvec{z})\big) = \widetilde{\mathcal{T}}\big(Q_N(\cdot\,;\varvec{z})\big). \]

Proof

The proof follows directly from Lemma 4. \(\square \)

Remark 1

Note that although the approximation degree of the lattice point set with respect to the Fourier series equals that of the tent-transformed point set with respect to the cosine series, the error can still be improved in the cosine space. In the Korobov space, the Fourier coefficients of frequencies for which (13) does not hold alias each other completely (i.e., the cubature error of the inner products of basis functions is either 0 or 1). In the cosine space, by contrast, when (15) does not hold, the aliasing is scaled (by \(\le \)1), as the cubature error depends on the number of sign combinations for which the discrete orthogonality condition does not hold. This error can be reduced by choosing a point set that minimizes the quantity

where \(\Lambda ^{\perp }\) is the so-called dual of the lattice point set (i.e., the frequencies which are not integrated exactly in Fourier space and therefore have error 1).

3.2 Construction of the Sampling Points

In the previous section we have seen that the problem of constructing a tent-transformed point multiset for the reconstruction of a non-periodic function can be reduced to that of constructing a rank-1 lattice point set for the reconstruction of a trigonometric polynomial, as in [13]. Kämmerer [13] proposes a component-by-component (CBC) algorithm to construct a generating vector \(\varvec{z}\), for a predetermined number of points \(N\), such that the lattice rule integrates all trigonometric monomials with frequencies in \(\widetilde{\mathcal {D}}_T^{d,\varvec{\beta }}\) exactly. One can then immediately apply the tent-transformation to obtain the required sampling points for the non-periodic problem. In this section we make use of some of the key results from [13].

Theorem 2

Let \(d\in \mathbb {N}\), \(d \ge 2\), \(T \in \mathbb {R}\), weights \(\varvec{\beta }\) and \(N \in \mathbb {N}\) prime satisfying

and assume there exists a rank-1 lattice \(\Lambda (\varvec{z}^*, N)\), \(\varvec{z}^* \in \mathbb {Z}^{d-1}\), with \(\varvec{h}\cdot \varvec{z}^* \not \equiv 0 \pmod {N}\) for all \(\varvec{h}\in \widetilde{\mathcal {D}}_T^{d-1,\varvec{\beta }}\backslash \{\varvec{0}\}\). Then there exists a \(z_d \in \{1,\ldots ,N-1\}\) such that for all \(\varvec{h}\in \widetilde{\mathcal {D}}_T^{d,\varvec{\beta }}\backslash \{\varvec{0}\}\)

\[ \varvec{h}\cdot(\varvec{z}^*, z_d) \not\equiv 0 \pmod{N}. \]

Proof

We refer the reader to [13, Theorem 3.2] for the proof, which is based on the result in [6]. \(\square \)

The steps to find the entries of the generating vector \(\varvec{z}\) component by component follow directly from the above theorem and are outlined in Algorithm 1. The algorithm can be modified in a straightforward way to take (17) into account, albeit at an exponential cost.
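Algorithm 1 itself is not reproduced in this text, so the following is only a minimal brute-force sketch of a greedy CBC search in the spirit of Theorem 2: at step \(s\), it picks the smallest \(z_s \in \{1,\ldots,N-1\}\) such that \(\varvec{h}\cdot(z_1,\ldots,z_s)\not\equiv 0 \pmod N\) for all nonzero projections \(\varvec{h}\) of \(\widetilde{\mathcal{D}}\) onto the first \(s\) coordinates. The function name, the exhaustive candidate order and the use of projections at intermediate steps are our assumptions; the cost is roughly \(O(d\,N\,|\widetilde{\mathcal{D}}|)\).

```python
import itertools

def cbc_generating_vector(H, N):
    """Greedy component-by-component search for z (sketch, not Algorithm 1 verbatim).

    H : iterable of index tuples in Z_+^d (e.g. a hyperbolic cross)
    N : number of lattice points (a prime in Theorem 2)
    Returns z as a list, or None if some component cannot be chosen.
    """
    H = [tuple(k) for k in H]
    d = len(H[0])
    # signed index set H_tilde and its difference set D_tilde, cf. (14)
    Ht = {tuple(s * kj for s, kj in zip(sig, k))
          for k in H for sig in itertools.product((1, -1), repeat=d)}
    D = {tuple(a - b for a, b in zip(k, l)) for k in Ht for l in Ht}
    z = []
    for s in range(1, d + 1):
        Ds = {h[:s] for h in D if any(h[:s])}   # nonzero projections onto first s coords
        for cand in range(1, N):
            zs = z + [cand]
            if all(sum(hj * zj for hj, zj in zip(h, zs)) % N != 0 for h in Ds):
                z = zs
                break
        else:                                    # no admissible candidate found
            return None
    return z
```

For the index set \(\{0,1\}^2\) and \(N = 13\), this search returns \(\varvec{z} = (1,3)\); with \(N = 3\) it returns `None`, since \(N\) is too small.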

In addition to the CBC algorithm, Theorem 2 also prescribes the number of points needed for the construction to succeed. The quantity \(N_{s,\varvec{\beta },T}^{\mathrm {low}}\) is defined as

and \(N\) should then be a prime chosen such that \(N \ge \max _{s =1,\ldots ,d}N_{s,\varvec{\beta },T}^{\mathrm {low}}\), so that condition (18) is met at all steps of the CBC algorithm. Further, [13, Corollary 3.4] states that there exists a prime \(N^*\) such that \(N^* < 2\max _{s =1,\ldots ,d}N_{s,\varvec{\beta },T}^{\mathrm {low}}\). An upper bound for \(N^*\) is also derived in [13]:

It is noted in [6] that the lower bound might be a severe overestimate, and we can use [13, Algorithm 4] to possibly decrease the number of points further. As the upper bound on the number of degrees of freedom in the frequency domain, i.e., on the size of the (unweighted) hyperbolic cross, is only of order \(T \log (T)^{d-1}\), this method leads to oversampling in the spatial domain, but it provides a unique and stable reconstruction, see Theorem 3 below. In this context, sparse grids provide a more natural spatial discretization corresponding to hyperbolic crosses, see [4]. These methods do not oversample in the spatial domain, but fall short in numerical stability: the Fourier matrices obtained from sampling trigonometric polynomials on sparse grids have large condition numbers, which grow with the number of points as well as with the number of dimensions; see [11] for more details and, in particular, [11, Table 1.1] for an illustration of this growth.

Remark 2

We note that, for an \(N\)-point lattice rule, one can always find a set of exactly \(N\) Fourier modes which do not alias each other. Such a set is called a non-aliasing index set, see, e.g., [16, 17]. There exist several choices for such non-aliasing index sets and their exact shape depends on the particular choice of lattice point set. When considering such a set, the number of degrees of freedom in the spatial domain agrees perfectly with the number of degrees of freedom in the frequency domain. E.g., instead of considering a (weighted) hyperbolic cross as the region of interesting frequencies and basis functions, we could switch to an \(\ell _1\)-based metric and consider (weighted) cross-polytopes of a certain degree, see, e.g., [6, 17]. This corresponds to the trigonometric degree for cubature rules, and it is known that lattice rules perform extremely well in this case, see, e.g., [5].

3.3 Fast Reconstruction

The evaluation of the cosine coefficients of a \(d\)-variate function can be reduced to computing a one-dimensional FFT. In [17] and [13], a 1D FFT is used to evaluate the Fourier coefficients of multivariate periodic functions; we extend this technique to non-periodic functions. Note that, in general, the Fourier coefficients obtained from the 1D FFT are aliased in the following way

where \(\tilde{f}_{a}(\varvec{k})\) denotes the aliased Fourier coefficients. Algorithm 2 gives the steps to compute the cosine coefficients efficiently.
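Algorithm 2 is not reproduced in this text; the following minimal Python/NumPy sketch shows one way the FFT-based reconstruction can be organized. It uses \(\phi_{\varvec{k}}(\varphi(\varvec{x})) = 2^{-|\varvec{k}|_0/2}\sum_{\varvec{\sigma}} \exp(2\pi\mathrm{i}\,\varvec{\sigma}(\varvec{k})\cdot\varvec{x})\), where \(\varvec{\sigma}\) runs over the sign flips of the nonzero entries of \(\varvec{k}\), together with \(\exp(2\pi\mathrm{i}\,\varvec{h}\cdot\varvec{x}^{(n)}) = \exp(2\pi\mathrm{i}\, n\,\varvec{h}\cdot\varvec{z}/N)\). One length-\(N\) inverse FFT of the samples then gives every needed value. The function names are hypothetical, and NumPy's `ifft` convention (factor \(1/N\), positive exponent) is what makes \(G[m]\) equal a lattice-rule average.

```python
import itertools
import numpy as np

def sample_on_tent_lattice(f, z, N):
    """g_n = f(phi({n z / N})) for n = 0..N-1 (all N points, with multiplicity)."""
    x = (np.arange(N)[:, None] * np.asarray(z)[None, :] % N) / N
    return f(1.0 - np.abs(2.0 * x - 1.0))

def fast_cosine_coefficients(g, z, N, H):
    """Approximate cosine coefficients hat f_a(k) = Q_N(f phi_k), k in H, via one 1D FFT."""
    G = np.fft.ifft(g)                  # G[m] = (1/N) sum_n g_n exp(2 pi i n m / N)
    coeffs = {}
    for k in H:
        nz = [j for j, kj in enumerate(k) if kj != 0]
        total = 0.0
        # sum over sign flips sigma of the nonzero entries of k
        for signs in itertools.product((1, -1), repeat=len(nz)):
            h = list(k)
            for j, s in zip(nz, signs):
                h[j] = s * k[j]
            total += G[int(np.dot(h, z)) % N]
        coeffs[tuple(k)] = float(np.real(total)) * 2.0 ** (-len(nz) / 2)
    return coeffs
```

If \((\varvec{z}, N)\) integrates the difference set of \(H\) exactly and \(f\) is supported on \(H\), the returned coefficients are exact; otherwise they carry the aliasing described in (12).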

3.4 Numerical Stability

As mentioned before, the reconstruction method we propose is numerically stable, i.e., the condition number of the matrix obtained by our sampling scheme grows neither with the number of points nor with the number of dimensions. We briefly recall the stability concepts from [13] and analyze the above algorithms in our problem setting.

Theorem 3

When the point set \(\Lambda _{\varphi }(\varvec{z}, N)\) is chosen as in Algorithm 1, the resulting reconstruction is unique and numerically stable.

Proof

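We present an outline of the proof as given in [12, 13]. Although those proofs are in the context of traditional Fourier series, they can easily be adapted to the cosine series case. Consider the cosine matrix \(A\), with rows indexed by the points of \(\Lambda _{\varphi }(\varvec{z}, N)\) (counted with multiplicity) and columns indexed by \(\varvec{k}\in H_T^{d,\varvec{\beta }}\), with entries

\[ A(\varvec{x}, \varvec{k}) = \phi_{\varvec{k}}(\varvec{x}), \qquad \varvec{x}\in \Lambda_{\varphi}(\varvec{z}, N),\ \varvec{k}\in H_T^{d,\varvec{\beta}}, \]
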
and the vectors \(\varvec{f}= \{f(\varvec{x}) : \varvec{x}\in \Lambda _{\varphi }(\varvec{z}, N)\}\) and \(\hat{\varvec{f}} = \{\hat{f}(\varvec{k}) : \varvec{k}\in {H}_T^{d,\varvec{\beta }}\}\). To reconstruct a function from its samples, we need to find the vector \(\hat{\varvec{f}}\) such that \(A\hat{\varvec{f}} = \varvec{f}\), i.e., the coefficients \(\hat{\varvec{f}}\) for which the approximation interpolates \(f(\varvec{x})\) at the points of \(\Lambda _{\varphi }(\varvec{z}, N)\). Since \(A\) is in general not square, we premultiply this system by \(A^T\) to obtain \(A^TA\hat{\varvec{f}} = A^T\varvec{f}\), which is referred to as the normal equation of the first kind (note that \(A^TA\) is a square matrix). The uniqueness of the reconstruction depends on the invertibility of the matrix \(A^TA\). With \(\varvec{k}_p\) denoting the \(p\)-th index of \(H_T^{d,\varvec{\beta }}\), an entry of \(A^TA\) is given by, for \(1 \le p,q \le |H_T^{d,\varvec{\beta }}|\),

\[ [A^TA](p,q) = \sum_{\varvec{x}\in \Lambda_{\varphi}(\varvec{z}, N)} \phi_{\varvec{k}_p}(\varvec{x})\, \phi_{\varvec{k}_q}(\varvec{x}) = N\, Q_N\big((\phi_{\varvec{k}_p}\phi_{\varvec{k}_q}) \circ \varphi;\varvec{z}\big). \]

Equation (15) together with Algorithm 1 ensures that we can find an \(N \ge 1\) and a generating vector \(\varvec{z}\) such that \([A^TA](p,q) = N\delta _{p,q}\). This makes \(A^TA\) a diagonal matrix with diagonal entries \(N\), so that \(A/\sqrt{N}\) has orthonormal columns and condition number one, and the evaluation of \(\hat{\varvec{f}}\) simplifies to

\[ \hat{\varvec{f}} = \frac{1}{N}\, A^T \varvec{f}. \]

\(\square \)
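The stability argument can be checked numerically: when \((\varvec{z}, N)\) satisfies the difference-set condition for the index set, \(A^TA = N I\) and \(A\) has condition number one. The minimal Python/NumPy sketch below builds \(A\) over all \(N\) tent-transformed points. The names are ours, and the usage example assumes the full grid \(\{0,1,2\}^2\) with \(\varvec{z} = (1,9)\), \(N = 41\), which satisfies the condition because \(|h_1 + 9h_2| \le 40 < 41\) for \(\varvec{h}\in\{-4,\ldots,4\}^2\).

```python
import numpy as np

def cosine_matrix(z, N, H):
    """A[n, p] = phi_{k_p}(phi(x^(n))) over all N tent-transformed lattice points."""
    x = (np.arange(N)[:, None] * np.asarray(z)[None, :] % N) / N
    y = 1.0 - np.abs(2.0 * x - 1.0)                      # tent transform
    K = np.asarray(H)                                    # |H| x d integer indices
    # phi_k(y) = sqrt(2)^{|k|_0} * prod_j cos(pi k_j y_j)
    C = np.cos(np.pi * y[:, None, :] * K[None, :, :]).prod(axis=2)
    return C * (np.sqrt(2.0) ** np.count_nonzero(K, axis=1))[None, :]

def reconstruct(A, samples, N):
    """hat f = A^T f / N, valid when A^T A = N I."""
    return A.T @ samples / N
```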

3.5 Fast Evaluation

The dual of the reconstruction problem is that of evaluation, where given the coefficients \(\hat{f}(\varvec{k})\) for an appropriate index set, we need a fast way of evaluating \(f(\varvec{x})\) for all points in \(\Lambda _{\varphi }(\varvec{z}, N)\).

Theorem 4

The evaluation of a \(d\)-dimensional function at a set of tent-transformed lattice points, given the cosine coefficients \(\hat{f}(\varvec{k})\) on the hyperbolic cross \(H_T^{d,\varvec{\beta }}\), reduces to a one-dimensional inverse fast Fourier transform and can be carried out in \(C(N\log (N) + d|\widetilde{H}_T^{d,\varvec{\beta }}|)\) floating-point operations, where \(C\) is independent of the number of dimensions.

Proof

Let \(\varvec{x}^{(n)}, n = 0,\ldots ,N-1\) be the points in the rank-1 lattice point set \(\Lambda (\varvec{z}, N)\). Then,

We have used the following relation when moving from the index set \(H_T^{d,\varvec{\beta }}\) to \(\widetilde{H}_T^{d,\varvec{\beta }}\): For any function \(A(\varvec{k})\),

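We can therefore evaluate \(f\) at all tent-transformed nodes (roughly \(N/2\) distinct points, since the lattice points \(\varvec{x}^{(n)}\) and \(\varvec{x}^{(N-n)}\) are mapped to the same node) by precomputing, for every \(\ell \in \mathbb {Z}_N\), the sums \(\sum _{\varvec{k}\in \widetilde{H}_T^{d,\varvec{\beta }},\ \varvec{k}\cdot \varvec{z}\equiv \ell \pmod {N}}\frac{\hat{f}(|\varvec{k}|)}{\left( \sqrt{2}\right) ^{|\varvec{k}|_0 }}\) and then performing a one-dimensional inverse FFT. \(\square \)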

The steps for finding the function evaluations using Theorem 4 are listed in Algorithm 3.
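The identity behind Theorem 4 is \(\cos (\pi k(1-|2t-1|)) = \cos (2\pi k t)\): on the tent-transformed lattice, each cosine basis function becomes a sum of \(2^{|\varvec{k}|_0}\) exponentials over the mirrored frequencies, each with weight \((\sqrt{2})^{-|\varvec{k}|_0}\). The Python sketch below assumes the normalized cosine basis \((\sqrt{2})^{|\varvec{k}|_0}\prod _j\cos (\pi k_j x_j)\); `mirrored` and `fast_evaluate` are hypothetical helpers, not the paper's Algorithm 3, and the direct evaluation is included only as a check. The values of \(\varvec{z}\), \(N\) and the coefficients are illustrative.

```python
import itertools
import numpy as np


def mirrored(K):
    """All h in Z^d with |h| = k (componentwise) for k in K, i.e. the mirrored set."""
    out = []
    for k in K:
        choices = [(0,) if kj == 0 else (kj, -kj) for kj in k]
        out.extend(itertools.product(*choices))
    return np.array(out, dtype=int)


def fast_evaluate(coef, z, N):
    """Evaluate sum_k coef[k] phi_k at the N tent-transformed lattice points.

    coef maps nonnegative frequency tuples k to cosine coefficients."""
    H = mirrored(list(coef))
    w = np.array([coef[tuple(int(v) for v in np.abs(h))] / np.sqrt(2.0) ** np.count_nonzero(h)
                  for h in H])
    ell = (H @ np.asarray(z)) % N    # residues h . z mod N, O(d |H|) work
    a = np.zeros(N)
    np.add.at(a, ell, w)             # accumulate weights that share a residue
    # f(x_n) = sum_l a_l exp(2 pi i l n / N); numpy's ifft carries a 1/N factor.
    return (N * np.fft.ifft(a)).real


# Usage with illustrative coefficients and generating vector.
z, N = (1, 13), 173
coef = {(0, 0): 1.0, (1, 0): 0.5, (0, 2): -0.25, (1, 1): 0.3, (3, 1): 0.1}
fast = fast_evaluate(coef, z, N)

# Direct evaluation of the cosine sum at the same points, for comparison.
t = (np.arange(N)[:, None] * np.asarray(z) % N) / N
x = 1.0 - np.abs(2.0 * t - 1.0)  # tent-transformed points
direct = sum(c * np.sqrt(2.0) ** np.count_nonzero(k)
             * np.prod(np.cos(np.pi * np.asarray(k) * x), axis=1)
             for k, c in coef.items())
```

The output satisfies `fast[n] == fast[N - n]` up to rounding, reflecting that lattice points \(n\) and \(N-n\) land on the same tent-transformed node. Unlike reconstruction, this identity holds for any generating vector.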

4 Collocation

In addition to reconstruction, another natural application of the sampling method described in Sect. 3 is the problem of collocation. Spectral collocation methods with Fourier series and rank-1 lattice points have appeared earlier, see [14, 16, 17]. Also, hyperbolic cross approximations have been used within this context in [14] and [17].

As a model example, consider the Poisson partial differential equation

where \(\nabla ^2 = \sum _{j=1}^{d}\frac{\partial ^2}{\partial x_j^2}\) is the Laplace operator,

with either Neumann or Dirichlet boundary conditions.

Collocation methods are often employed to find the solution of the above differential equation. The goal here is to use the point sets obtained in the previous section as collocation points, i.e., to find the cosine coefficients of the solution such that the governing equations are satisfied at the chosen set of points.

4.1 Neumann Boundary Conditions

Neumann boundary conditions specify the behaviour of the derivatives on the boundaries of the domain:

where \(\partial \Omega \) is the boundary of the domain and \(\varvec{n}\) denotes the normal to the boundary.

Theorem 5

Consider the following Poisson problem with Neumann boundary conditions as in (20) and let \(f(\varvec{x}) \in C_{d,\alpha ,\varvec{\gamma }}\) as in Definition 1,

Then, \(u(\varvec{x})\) has the following series expansion:

where \(B(\varvec{x}) \in C_{d,\alpha ,\varvec{\gamma }}\), called the shift, is an arbitrary function chosen to satisfy the inhomogeneous boundary conditions and \(g(\varvec{x}) = f(\varvec{x}) - \nabla ^2 B(\varvec{x})\).

Proof

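We first consider the case of homogeneous boundary conditions. Expanding \(u(\varvec{x})\) and \(f(\varvec{x})\) in cosine series and using that each cosine basis function with frequency \(\varvec{k}\) is an eigenfunction of \(\nabla ^2\) with eigenvalue \(-\pi ^2\Vert \varvec{k}\Vert _2^2\), we obtain the following relation between their coefficients: for all \(\varvec{k}\ne \varvec{0}\) we have \(\hat{u}(\varvec{k}) = -\frac{\hat{f}(\varvec{k})}{\pi ^2\Vert \varvec{k}\Vert _2^2}\).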

The solution of the PDE can then be written as

Inhomogeneous boundary conditions are handled by the basis recombination approach for linear differential operators as described in [3]. We set \(u(\varvec{x}) = v(\varvec{x}) + B(\varvec{x})\)

and choose \(B(\varvec{x})\) to be an arbitrary function that satisfies all the boundary conditions. With this choice, what remains to be solved is \(\nabla ^2 v(\varvec{x}) = f(\varvec{x}) - \nabla ^2 B(\varvec{x}) = g(\varvec{x})\). The residue \(v(\varvec{x})\), being expanded in a cosine series, naturally satisfies homogeneous Neumann boundary conditions. Using (21) and (22) we have

\(\square \)

Using the above result, one can find an approximation to the solution, denoted by \(u_a(\varvec{x})\), by first truncating the summation to a hyperbolic cross \(H_T^{d,\varvec{\beta }}\) and then approximating the coefficients \(\hat{f}(\varvec{k})\) or \(\hat{g}(\varvec{k})\) from the samples of the input function on the tent-transformed rank-1 lattice points using Algorithm 2. In this context, the sampling points are the collocation points. It is observed from (21) that when \(f(\varvec{x})\) has support limited to the hyperbolic cross, so does \(u(\varvec{x})\). When \(f(\varvec{x})\) has support beyond the hyperbolic cross, with spectral coefficients that decay sufficiently fast, the coefficients of \(u(\varvec{x})\) decay faster still, owing to the factor \(\Vert \varvec{k}\Vert _2^{-2}\) in (21); in this case we truncate the summation to a hyperbolic cross and aim to reduce the \(L_2\) error of approximation. For inhomogeneous boundary conditions, the decay of the spectral coefficients of the solution \(u(\varvec{x})\) additionally depends on that of the coefficients of \(B(\varvec{x})\), and the same argument applies: the behaviour of \(u(\varvec{x})\) is dominated by the function with the wider spectral support and the slower decay of coefficients. We assume, however, that the boundary conditions are prescribed such that \(B(\varvec{x})\) has well-behaved coefficients.
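For homogeneous Neumann conditions, the collocation step reduces to a coefficient-wise division. The Python sketch below assumes the convention \(\nabla ^2 u = f\) used in the proof of Theorem 5, the normalized cosine basis \((\sqrt{2})^{|\varvec{k}|_0}\prod _j\cos (\pi k_j x_j)\) (an eigenfunction of \(\nabla ^2\) with eigenvalue \(-\pi ^2\Vert \varvec{k}\Vert _2^2\)) and the illustrative point set \(\varvec{z}=(1,13)\), \(N=173\). It recovers \(\hat{f}\) by \(A^T\varvec{f}/N\) rather than with Algorithm 2, and `neumann_poisson_coefficients` is a hypothetical name. A manufactured solution with \(\hat{u}(\varvec{0}) = 0\) ensures the compatibility condition \(\hat{f}(\varvec{0}) = 0\).

```python
import itertools
import numpy as np


def tent_lattice(z, N):
    """phi(frac(n z / N)), n = 0..N-1, with phi(t) = 1 - |2t - 1| componentwise."""
    t = (np.arange(N)[:, None] * np.asarray(z)[None, :] % N) / N
    return 1.0 - np.abs(2.0 * t - 1.0)


def cosine_matrix(X, K):
    """A[n, p] = sqrt(2)^{|k_p|_0} prod_j cos(pi k_{p,j} x_{n,j})."""
    K = np.asarray(K)
    scale = np.sqrt(2.0) ** np.count_nonzero(K, axis=1)
    return np.cos(np.pi * X[:, None, :] * K[None, :, :]).prod(axis=2) * scale


def neumann_poisson_coefficients(f_samples, X, K):
    """Cosine coefficients of u for laplacian(u) = f with homogeneous Neumann BCs.

    Assumes the point set satisfies A^T A = N I on K, so f_hat = A^T f / N."""
    K = np.asarray(K)
    f_hat = cosine_matrix(X, K).T @ f_samples / len(X)
    lam = -np.pi ** 2 * np.sum(K ** 2, axis=1)   # Laplacian eigenvalue of phi_k
    u_hat = np.zeros_like(f_hat)
    nonzero = lam != 0
    u_hat[nonzero] = f_hat[nonzero] / lam[nonzero]
    return u_hat                                  # u_hat(0) is free; set to 0 here


# Manufactured test: pick u on a small cross, form f = laplacian(u), recover u.
z, N = (1, 13), 173
K = np.array([k for k in itertools.product(range(4), repeat=2)
              if max(1, k[0]) * max(1, k[1]) <= 3])
X = tent_lattice(z, N)
lam = -np.pi ** 2 * np.sum(K ** 2, axis=1)
u_true = np.where(lam != 0, np.random.default_rng(1).standard_normal(len(K)), 0.0)
f_samples = cosine_matrix(X, K) @ (lam * u_true)  # samples of f at collocation points
u_hat = neumann_poisson_coefficients(f_samples, X, K)
```

With inhomogeneous conditions, the same division would be applied to the samples of \(g = f - \nabla ^2 B\), with the shift \(B\) added back afterwards.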

4.2 Dirichlet Boundary Conditions

Dirichlet boundary conditions prescribe the value of the solution at the boundaries of the domain:

where \(\partial \Omega \) is the boundary of the domain. Dirichlet boundary conditions require a different approach, since cosine series do not satisfy homogeneous Dirichlet boundary conditions. Consider the Poisson problem (19) with Dirichlet boundary conditions.

We assume that the boundary conditions are continuous at the corners. A standard approach here is to use the eigenfunctions of the Laplace operator with homogeneous Dirichlet boundary conditions [1]. In one dimension, they form an orthonormal basis of \(L_2(\Omega )\), \(\Omega = (0,1)\), and are given by \(\sqrt{2}\sin (\pi k x)\), \(k\in \mathbb {N}\).

As before, for the \(d\)-dimensional case we consider the tensor product of the basis functions above,

The basis recombination approach can now be applied, where \(B(\varvec{x})\) satisfies the corresponding inhomogeneous boundary conditions and \(v(\varvec{x})\) satisfies homogeneous boundary conditions owing to the sine series. When applied naively to inhomogeneous Dirichlet boundary conditions, sine series suffer from the same disadvantage as Fourier series: they exhibit the Gibbs phenomenon near the end points. This can be avoided by using the basis recombination approach to subtract the boundary conditions. Function approximation using multivariate sine series is, however, challenging, as the odd frequencies of the half-period sine functions cannot be integrated exactly with the cubature rules described above. We propose an approach to overcome this, making use of the expansion of a function in terms of the sine series

where \(\hat{f}_s(\varvec{k})\) are now the sine coefficients, given by

Additionally, we assume that the mixed derivative of the forcing function in all variables, \(f'(\varvec{x})=\frac{\partial ^d f(\varvec{x})}{\partial x_1\cdots \partial x_d}\), is available to us for sampling.

Theorem 6

Consider the following Poisson problem with Dirichlet boundary conditions as in (23)

and let \(f(\varvec{x})\) have an absolutely convergent sine series expansion, with additional smoothness such that the coefficients satisfy

Then, \(u(\varvec{x})\) can be expressed in terms of the following series expansion:

where \(B(\varvec{x})\) is an arbitrary shift function chosen to satisfy the inhomogeneous boundary conditions, \(H_T^{d,\varvec{\beta }}\subset \mathbb {N}^d\) is a hyperbolic cross and \(g(\varvec{x}) = f(\varvec{x}) - \nabla ^2 B(\varvec{x})\).

Proof

Starting with the series expansion of \(f(\varvec{x})\) as in (25), differentiate in all variables to get

If \(f(\varvec{x})\) complies with the additional smoothness constraint in (26), the sum in (27) converges absolutely. Since the derivative of \(\sin (\pi k x)\) is \(\pi k\cos (\pi k x)\), the derivative \(f'(\varvec{x})\) is then given by an expansion in terms of the cosine series. Now take \(u(\varvec{x}) = v(\varvec{x}) + B(\varvec{x})\) and denote by \(u'(\varvec{x})\), \(v'(\varvec{x})\) and \(B'(\varvec{x})\) their mixed derivatives. Then

We define \(g'(\varvec{x}) :\,= f'(\varvec{x}) - \nabla ^2 B'(\varvec{x}) \left( =\nabla ^2 v'(\varvec{x})\right) \). The steps described in the previous section are then applied to get

and

We then recover \(u(\varvec{x})\) using (25) and (27) as

\(\square \)

This solution is unique as there is no constant term present in the sine series expansion. As before, the solution can be approximated by truncating the above expansion to a hyperbolic cross \(H_T^{d,\varvec{\beta }}\) and calculating the coefficients \(\hat{f'}(\varvec{k})\) and \(\hat{g'}(\varvec{k})\) as mentioned in the previous section. We note that for sine series one can also approximate the solution using traditional Fourier series by considering the odd extension of the function.
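The key step in the proof of Theorem 6 is that the mixed derivative \(\partial ^d/\partial x_1\cdots \partial x_d\) maps the half-period sine product \(\prod _j\sqrt{2}\sin (\pi k_j x_j)\) to \(\pi ^d\prod _j k_j\) times the corresponding cosine basis function, so the cosine coefficients of \(f'\) yield the sine coefficients of \(f\) by division. The Python sketch below covers only homogeneous Dirichlet conditions (\(B = 0\)), assumes normalized sine and cosine bases and the illustrative point set \(\varvec{z}=(1,13)\), \(N=173\), and uses manufactured samples of \(f'\); `dirichlet_poisson_coefficients` is a hypothetical name.

```python
import itertools
import numpy as np


def tent_lattice(z, N):
    """phi(frac(n z / N)), n = 0..N-1, with phi(t) = 1 - |2t - 1| componentwise."""
    t = (np.arange(N)[:, None] * np.asarray(z)[None, :] % N) / N
    return 1.0 - np.abs(2.0 * t - 1.0)


def cosine_matrix(X, K):
    """A[n, p] = sqrt(2)^{|k_p|_0} prod_j cos(pi k_{p,j} x_{n,j})."""
    K = np.asarray(K)
    scale = np.sqrt(2.0) ** np.count_nonzero(K, axis=1)
    return np.cos(np.pi * X[:, None, :] * K[None, :, :]).prod(axis=2) * scale


def dirichlet_poisson_coefficients(fprime_samples, X, K):
    """Sine coefficients of f and u for laplacian(u) = f with u = 0 on the boundary.

    fprime_samples are samples of the mixed derivative f' = d^d f / dx_1...dx_d,
    and K is a subset of N^d (no zero components)."""
    K = np.asarray(K)
    d = K.shape[1]
    fprime_hat = cosine_matrix(X, K).T @ fprime_samples / len(X)  # cosine coeffs of f'
    f_s = fprime_hat / (np.pi ** d * K.prod(axis=1))               # sine coeffs of f
    u_s = -f_s / (np.pi ** 2 * np.sum(K ** 2, axis=1))             # sine coeffs of u
    return f_s, u_s


z, N = (1, 13), 173
K = np.array([k for k in itertools.product(range(1, 4), repeat=2) if k[0] * k[1] <= 3])
X = tent_lattice(z, N)
f_s_true = np.random.default_rng(2).standard_normal(len(K))
# Manufactured samples of f', using the derivative identity stated above.
fprime_samples = cosine_matrix(X, K) @ (np.pi ** K.shape[1] * K.prod(axis=1) * f_s_true)
f_s, u_s = dirichlet_poisson_coefficients(fprime_samples, X, K)
```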

5 Numerical Results

In this section we provide numerical results to demonstrate the use of cosine series with tent-transformed lattice points. Algorithms 1 and 2 were used for finding the rank-1 lattice point sets and evaluating the cosine coefficients, respectively. Experiments were carried out both for functions with support limited to hyperbolic crosses and for functions with wider spectral supports. The former could be reconstructed to machine precision; the latter are of more interest and are presented here.

Example 1

First, we solve the Poisson problem (19) for different numbers of dimensions. As an example, we consider the following problem with homogeneous Neumann boundary conditions

where \(\gamma _j >0 \) for all \(j\). The solution for the above problem is known and is

When \(\gamma _j = 1\) for all \(j\), we have

and

This falls into the class of functions with convergent and decaying cosine coefficients, not restricted to a hyperbolic cross. Clearly, the \(\gamma \)’s determine the importance of each dimension and control the difficulty of the problem. With larger values of \(\gamma \)’s (or slower decays), the problem becomes more difficult and larger \(\beta \)’s are required to solve the problem. For more details on the decay of these weights and their impact on tractability, see [6].

As described in the previous sections we approximate \(u\) as follows

where we denote by \(\hat{u}_a(\varvec{k})\) the coefficients approximated by the collocation method. The total mean square error is then given by

In the above expression, the first term is the truncation error, which comes from truncating the expansion to the hyperbolic cross. The second term is the approximation error intrinsic to the approximation method used; in our case it is the aliasing error from collocation. To obtain the relative errors, we divide through by \(\Vert u\Vert _{L_2}^2\). We assume that the zeroth cosine coefficient is given.
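Because the cosine basis is orthonormal, Parseval's identity splits \(\Vert u-u_a\Vert _{L_2}^2\) into a sum of \(|\hat{u}(\varvec{k})|^2\) over frequencies outside the hyperbolic cross (truncation) and a sum of \(|\hat{u}(\varvec{k})-\hat{u}_a(\varvec{k})|^2\) over the cross (approximation). A minimal Python sketch, assuming real coefficients, \(\hat{u}_a(\varvec{k}) = 0\) outside the cross, and a finite frequency list large enough to stand in for the full expansion; the function name and the toy numbers are hypothetical.

```python
import numpy as np


def relative_error_split(u_exact, u_approx, in_cross):
    """Relative truncation and approximation errors via Parseval's identity.

    u_exact, u_approx: coefficients over a common finite frequency list that is
    assumed large enough to stand in for the full expansion of u.
    in_cross: boolean mask marking frequencies in the hyperbolic cross."""
    u_exact = np.asarray(u_exact, dtype=float)
    u_approx = np.asarray(u_approx, dtype=float)
    in_cross = np.asarray(in_cross, dtype=bool)
    norm2 = np.sum(u_exact ** 2)                              # ||u||^2
    truncation = np.sum(u_exact[~in_cross] ** 2) / norm2      # outside the cross
    approximation = np.sum((u_exact[in_cross] - u_approx[in_cross]) ** 2) / norm2
    return truncation, approximation


# Toy coefficients (not data from the experiments).
trunc, approx = relative_error_split([1.0, 0.5, 0.25, 0.125],
                                     [1.0, 0.4, 0.0, 0.0],
                                     [True, True, False, False])
```

The relative mean square error is the sum of the two returned terms.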

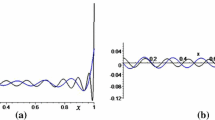

Figure 3 shows the decay of the truncation error and the root-mean-square approximation error as the number of collocation points increases. The experiment was set up as follows:

-

The number of collocation points and the generating vectors were searched for over increasing hyperbolic cross indices (with small increments). For each degree, generating vectors were found for a range of prime numbers using Algorithm 1; in each case the number of points was then reduced using [13, Algorithm 4]. Thereafter, for every degree, the point set with the fewest points was chosen.

-

The \(\beta \)’s and \(\gamma \)’s are chosen based on [6, Sect. 5] and such that the truncation and approximation errors are of the same order. Note that if the truncation error is large, reducing the approximation error yields no gain in overall accuracy, and vice versa.
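Algorithm 1 itself is not reproduced here. As a rough illustration of what such a search has to guarantee, the following Python sketch performs a simplified component-by-component search in the spirit of the reconstruction property used for Fourier series in [13]: for each component it accepts the first \(z_j\) for which \(\varvec{h}\mapsto \varvec{h}\cdot \varvec{z} \bmod N\) is injective on the projection of the mirrored cross. Injectivity on the mirrored set suffices for \(A^TA = NI\) in the cosine setting, because each entry of \(A^TA\) expands into exponential sums \(\sum _n e^{2\pi i n(\varvec{a}-\varvec{b})\cdot \varvec{z}/N}\) over mirrored frequencies \(\varvec{a}, \varvec{b}\). The function `cbc_search`, the unweighted cross and \(N = 173\) are assumptions for illustration; this is not the paper's Algorithm 1 and makes no attempt to minimize \(N\).

```python
import itertools
import numpy as np


def mirrored(K):
    """All h in Z^d whose componentwise absolute value lies in K."""
    out = []
    for k in K:
        out.extend(itertools.product(*[(0,) if kj == 0 else (kj, -kj) for kj in k]))
    return np.array(out, dtype=int)


def cbc_search(K, N):
    """Simplified CBC search: choose z_1, z_2, ... in turn so that h -> h.z mod N
    is injective on the projection of the mirrored set onto the first j components."""
    H = mirrored(K)
    z = []
    for j in range(H.shape[1]):
        P = np.unique(H[:, : j + 1], axis=0)      # projected mirrored set
        for cand in range(1, N):
            keys = (P @ np.array(z + [cand])) % N
            if len(np.unique(keys)) == len(P):     # all residues distinct
                z.append(cand)
                break
        else:
            return None                            # no admissible component found
    return tuple(z)


K = [k for k in itertools.product(range(4), repeat=2) if max(1, k[0]) * max(1, k[1]) <= 3]
N = 173
z = cbc_search(K, N)

# Check the Gram matrix of the cosine basis on the resulting point set.
t = (np.arange(N)[:, None] * np.array(z) % N) / N
X = 1.0 - np.abs(2.0 * t - 1.0)
Ka = np.array(K)
A = np.cos(np.pi * X[:, None, :] * Ka[None, :, :]).prod(axis=2) * np.sqrt(2.0) ** np.count_nonzero(Ka, axis=1)
gram = A.T @ A
```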

The rate of convergence of the approximation error is approximately \(N^{-1.28}\) in 3 dimensions and \(N^{-1.07}\) in 5 dimensions. The error decreases with increasing \(N\) because a larger point set supports a higher hyperbolic cross degree. This, combined with the decay of the cosine coefficients, reduces both the truncation and the aliasing errors (the cosine coefficients \(\hat{f}(k)\) of the function \(x^2(1-x)^2\) decay as \(k^{-4}\)). It is known that if the decay parameter \(\alpha \) (as given in Definition 1) is 1, the worst-case error of quadrature decays as \(N^{-1}\), see [8], and we could expect a similar order of convergence here; however, the relation between the decay of the cosine coefficients and the rates of convergence of the truncation and approximation errors is a topic for further research.
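Rates such as \(N^{-1.28}\) correspond to slopes on a log-log plot of error against \(N\). The text does not state how these rates were estimated; a common choice, sketched here in Python as an assumption, is a least-squares line through \((\log N, \log e)\). The example data are synthetic, generated from an exact power law, and are not taken from the experiments.

```python
import numpy as np


def fitted_rate(N, err):
    """Slope p of a least-squares fit log(err) ~ log(C) + p log(N)."""
    slope, _intercept = np.polyfit(np.log(np.asarray(N, dtype=float)),
                                   np.log(np.asarray(err, dtype=float)), 1)
    return slope


# Synthetic errors following an exact power law (not measured data).
N_pts = np.array([101, 211, 401, 809, 1601])
errors = 3.0 * N_pts ** -1.28
rate = fitted_rate(N_pts, errors)
```

On real data the fitted slope depends on which range of \(N\) is included, so reported rates are best read as approximate.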

It is worth mentioning that the collocation method using cosine series addresses a range of problems for which a similar method using Fourier series will fail. To start with, Fourier series do not satisfy homogeneous Neumann boundary conditions. Moreover, the spectral method used here imposes some implicit conditions. First, the input functions and the solutions should have an exact representation in terms of the series expansions, i.e., the series expansion should converge to the function over the entire domain. For a function that does not satisfy periodic boundary conditions, the Fourier series expansion always has an \(O(1)\) error at the boundaries of the domain, violating the first condition. Second, the spectral coefficients of the solution \(u(\varvec{x})\) should decay faster than those of the input function \(f(\varvec{x})\). Consider a one-dimensional problem where \(u(x)\) and \(f(x)\) are both non-periodic, so that the Fourier coefficients of both \(u(x)\) and \(f(x)\) decay no faster than \(O(k^{-1})\). If one employs the collocation method with Fourier series, dividing the coefficients of \(f\) by \(-4\pi ^2k^2\) yields a function whose coefficients decay as \(O(k^{-3})\), so the method converges to a function that differs from the solution.

Example 2

Next, we compare the reconstruction method using cosine and Fourier expansions for non-periodic functions. We consider two test functions belonging to different smoothness classes:

and

We measure the total error \(e^2_{\text {total}}\) in both cases as follows: denote the reconstructed function by \(u_{H}\), where \(H\) is \(H_T^{d,\varvec{\beta }}\) for the cosine series and \(\widetilde{H}_T^{d,\varvec{\beta }}\) for the Fourier series, and let \(\phi _{\varvec{k}}(\varvec{x})\) denote either the cosine or the Fourier basis functions. Then,

In the case of cosine series, \(\hat{u}^*_a = \hat{u}_a\), \(\hat{u}^* = \hat{u}\) and \(\phi ^*_{\varvec{k}}(\varvec{x}) = \phi _{\varvec{k}}(\varvec{x})\). Figure 4 (for \(u_1(\varvec{x})\)) and Fig. 5 (for \(u_2(\varvec{x})\)) show the errors plotted against the number of sampling points in 3 and 4 dimensions, for Fourier and cosine series. Algorithm 1 was used to find the point sets for various hyperbolic cross indices (note that the same algorithm works for both Fourier and cosine series), and the number of points in each case was then reduced using [13, Algorithm 4].

For \(u_1(\varvec{x})\), we achieve convergence rates of \(O(N^{-1.79})\) and \(O(N^{-1.54})\) in 3 and 4 dimensions with cosine series. In contrast, with Fourier series the convergence rates are \(O(N^{-0.26})\) and \(O(N^{-0.22})\). The cosine series have a higher order of convergence because the spectral coefficients of the given test function decay at the faster rate of \(O(k^{-4})\) in the cosine space. This \(O(k^{-4})\) decay is due to the fact that the first (partial) derivatives of the function vanish on the boundary, i.e., the function satisfies homogeneous Neumann boundary conditions. In general, for each successive odd-order (partial) derivative of the function that vanishes on the boundary, the decay of the cosine coefficients increases by an order of 2, see [1, 19]. This allows even higher orders of convergence, which are not attainable for non-periodic functions with Fourier series.
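The link between boundary behaviour and coefficient decay can be checked in one dimension. The Python sketch below computes normalized cosine coefficients \(\sqrt{2}\int _0^1 g(x)\cos (\pi k x)\,\mathrm {d}x\) by Gauss-Legendre quadrature for \(g(x) = x^2(1-x)^2\) from Example 1, whose first derivative vanishes at both end points, and for the non-periodic function \(g(x) = x\) (an illustrative choice, not one of the test functions of Example 2), together with the Fourier coefficients of \(x\). Integration by parts gives the closed forms used as checks: \(-12\sqrt{2}(1+(-1)^k)/(\pi k)^4\) for \(x^2(1-x)^2\), \(\sqrt{2}((-1)^k-1)/(\pi k)^2\) for \(x\), and \(i/(2\pi k)\) for the Fourier coefficient of \(x\).

```python
import numpy as np

# Gauss-Legendre nodes and weights mapped from [-1, 1] to [0, 1].
nodes, weights = np.polynomial.legendre.leggauss(200)
x = 0.5 * (nodes + 1.0)
w = 0.5 * weights

k = np.arange(1, 17)
cosk = np.sqrt(2.0) * np.cos(np.pi * np.outer(k, x))  # normalized cosine basis
expk = np.exp(-2j * np.pi * np.outer(k, x))           # conjugated Fourier basis

g_smooth = x ** 2 * (1.0 - x) ** 2  # g'(0) = g'(1) = 0
g_linear = x                        # non-periodic, g'(0) = g'(1) = 1

cos_smooth = cosk @ (w * g_smooth)  # decays like k^-4 (nonzero for even k only)
cos_linear = cosk @ (w * g_linear)  # decays like k^-2 (nonzero for odd k only)
fou_linear = expk @ (w * g_linear)  # decays like k^-1

# Closed forms from integration by parts, used as a check.
sign = (-1.0) ** k
exact_cos_smooth = -12.0 * np.sqrt(2.0) * (1.0 + sign) / (np.pi * k) ** 4
exact_cos_linear = np.sqrt(2.0) * (sign - 1.0) / (np.pi * k) ** 2
exact_fou_linear = 1j / (2.0 * np.pi * k)
```

For \(g(x) = x\), the cosine coefficients gain one order of decay over the Fourier coefficients, and for \(x^2(1-x)^2\) the vanishing first derivative at the end points adds two further orders.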

For \(u_2(\varvec{x})\), with cosine series, we achieve convergence rates of \(O(N^{-1.61})\) and \(O(N^{-1.34})\) in 3 and 4 dimensions, respectively. With Fourier series, the convergence rates are \(O(N^{-0.25})\) and \(O(N^{-0.22})\). The same reasoning as before holds: the cosine coefficients decay as \(O(k^{-2})\), leading to higher orders of convergence in the cosine space. We observe that although \(u_2(\varvec{x})\) is less smooth than \(u_1(\varvec{x})\), the order of convergence with cosine series remains much higher than with Fourier series.

6 Conclusions

We have shown that non-periodic functions expanded in terms of cosine series can be reconstructed from the samples of the input function at tent-transformed lattice points using extensions of the methods that exist for periodic functions. These extensions also apply to function evaluation. We have shown that the same sampling points are also suitable as collocation nodes when solving a Poisson-type PDE with Neumann or Dirichlet boundary conditions by a spectral collocation method. The method could also be extended to other operators and to mixed boundary conditions.

Analysis of the full \(L_2\) error in terms of the smoothness of the cosine space, similar to the analysis for the Korobov space in [15], is left for future research. Algorithm 1 could then be adjusted to simultaneously minimize (17).

References

Adcock, B.: Multivariate modified Fourier series and application to boundary value problems. Numerische Mathematik 115, 511–552 (2010)

Adcock, B., Huybrechs, D.: Multivariate modified Fourier expansions. In: Proceedings of the International Conference on Spectral and High Order Methods, vol. 76, pp. 85–92 (2011)

Boyd, J.P.: Chebyshev and Fourier Spectral Methods. Courier Dover Publications, Mineola (2001)

Bungartz, H.-J., Griebel, M.: Sparse grids. Acta Numer. 13, 1–123 (2004)

Cools, R., Kuo, F.Y., Nuyens, D.: Constructing lattice rules based on weighted degree of exactness and worst case error. Computing 87, 63–89 (2010)

Cools, R., Nuyens, D.: A Belgian view on lattice rules. In: Keller, A., Heinrich, S., Niederreiter, H. (eds.) Monte Carlo and Quasi-Monte Carlo Methods 2006, pp. 3–21. Springer, Berlin (2008)

Dick, J., Kuo, F.Y., Sloan, I.H.: High-dimensional integration: the quasi-Monte Carlo way. Acta Numer. 22, 133–288 (2013)

Dick, J., Nuyens, D., Pillichshammer, F.: Lattice rules for nonperiodic smooth integrands. Numerische Mathematik 126, 259–291 (2013)

Hickernell, F.J.: Obtaining O\((n^{-2+\epsilon })\) convergence for lattice quadrature rules. In: Fang, K.T., Hickernell, F.J., Niederreiter, H. (eds.) Monte Carlo and Quasi-Monte Carlo Methods 2000, pp. 274–289 (2002)

Iserles, A., Nørsett, S.P.: Modified Fourier expansions: from high oscillation to rapid approximation I. IMA J. Numer. Anal. 28, 862–887 (2008)

Kämmerer, L., Kunis, S.: On the stability of the hyperbolic cross discrete Fourier transform. Numerische Mathematik 117, 581–600 (2011)

Kämmerer, L., Kunis, S., Potts, D.: Interpolation lattices for hyperbolic cross trigonometric polynomials. J. Complex. 28, 76–92 (2012)

Kämmerer, L.: Reconstructing hyperbolic cross trigonometric polynomials by sampling along rank-1 lattices. SIAM J. Numer. Anal. 51(5), 2773–2796 (2013)

Keng, H.L., Yuan, W.: Applications of Number Theory to Numerical Analysis. Springer, New York (1981)

Kuo, F.Y., Sloan, I.H., Woźniakowski, H.: Lattice rules for multivariate approximation in the worst case setting. In: Niederreiter, H., Talay, D. (eds.) Monte Carlo and Quasi-Monte Carlo Methods 2004, pp. 289–330 (2006)

Li, D., Hickernell, F.J.: Trigonometric spectral collocation methods on lattices. In: Cheng, S.Y., Shu, C.-W., Tang, T. (eds.) Recent Advances in Scientific Computing and Partial Differential Equations. AMS Series in Contemporary Mathematics, vol. 330, pp. 121–132. American Mathematical Society, Providence (2003)

Munthe-Kaas, H., Sørevik, T.: Multidimensional pseudo-spectral methods on lattice grids. Appl. Numer. Math. 62(3), 155–165 (2012)

Nuyens, D.: The construction of good lattice rules and polynomial lattice rules. In: Kritzer, P., Niederreiter, H., Pillichshammer, F., Winterhof, A. (eds.) Uniform Distribution and Quasi-Monte Carlo Methods: Discrepancy, Integration and Applications. Radon Series on Computational and Applied Mathematics, vol. 15, pp. 223–256. De Gruyter, Berlin (2014)

Olver, S.: On the convergence rate of a modified Fourier series. Math. Comput. 78, 1629–1645 (2009)

Ruijter, M.J., Oosterlee, C.W., Aalbers, R.F.T.: On the Fourier cosine series expansion (COS) method for stochastic control problems. Numer. Linear Algebra Appl. 20(4), 598–625 (2013)

Sloan, I.H., Joe, S.: Lattice Methods for Multiple Integration. Oxford University Press, Oxford (1994)

Sloan, I.H., Woźniakowski, H.: When are quasi-Monte Carlo algorithms efficient for high dimensional integrals? J. Complex. 14, 1–33 (1998)

Acknowledgments

We thank Daan Huybrechs for his comments on initial drafts of the paper. We thank Dirk Abbeloos for some valuable tips on PDEs. We also thank our anonymous referees for their constructive review comments. We thank the ICERM organization at Brown University for their kind hospitality during the semester program on High-dimensional Approximation; many ideas to improve this manuscript came while working in the vast collaborative spaces they provided.

Additional information

Communicated by Arieh Iserles.

About this article

Cite this article

Suryanarayana, G., Nuyens, D. & Cools, R. Reconstruction and Collocation of a Class of Non-periodic Functions by Sampling Along Tent-Transformed Rank-1 Lattices. J Fourier Anal Appl 22, 187–214 (2016). https://doi.org/10.1007/s00041-015-9412-3

DOI: https://doi.org/10.1007/s00041-015-9412-3

Keywords

- Quasi-Monte Carlo methods

- Cosine series

- Function approximation

- Hyperbolic crosses

- Rank-1 lattice rules

- Spectral methods

- Component-by-component construction