Abstract

We address the problem of recovering an n-vector from m linear measurements lacking sign or phase information. We show that lifting and semidefinite relaxation suffice by themselves for stable recovery in the setting of m=O(nlogn) random sensing vectors, with high probability. The recovery method is optimizationless in the sense that trace minimization in the PhaseLift procedure is unnecessary. That is, PhaseLift reduces to a feasibility problem. The optimizationless perspective allows for a Douglas-Rachford numerical algorithm that is unavailable for PhaseLift. This method exhibits linear convergence with a favorable convergence rate and without any parameter tuning.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

We study the recovery of a vector \(\mathbf {x}_{0} \in \mathbb {R}^{n}\) or \(\mathbb {C}^{n}\) from the set of phaseless linear measurements

where \(\mathbf {z}_{i} \in \mathbb {R}^{n}\) or \(\mathbb {C}^{n}\) are known random sensing vectors. Such amplitude-only measurements arise in a variety of imaging applications, such as X-ray crystallography [5, 15, 17], optics [23], and microscopy [16]. We seek stable and efficient methods for finding x 0 using as few measurements as possible.

This recovery problem is difficult because the set of real or complex numbers with a given magnitude is nonconvex. In the real case, there are 2m possible assignments of sign to the m phaseless measurements. Hence, exhaustive searching is infeasible. In the complex case, the situation is even worse, as there are a continuum of phase assignments to consider. A method based of alternated projections avoids an exhaustive search but does not always converge toward a solution [11, 12, 14].

In [5, 7, 8], the authors convexify the problem by lifting it to the space of n×n matrices, where xx ∗ is a proxy for the vector x. A key motivation for this lifting is that the nonconvex measurements on vectors become linear measurements on matrices [1]. A rank-1 constraint is then relaxed to a trace minimization over the cone of positive semi-definite matrices, as is now standard in matrix completion [19]. This convex program is called PhaseLift in [7], where it is shown that x 0 can be found robustly in the case of random z i , if m=O(nlogn). The matrix minimizer is unique, which in turn determines x 0 up to a global phase.

The contribution of the present paper is to show that trace minimization is unnecessary in this lifting framework for the phaseless recovery problem. The vector x 0 can be recovered robustly by an optimizationless convex problem: one of finding a positive semi-definite matrix that is consistent with linear measurements. We prove there is only one such matrix, provided that there are O(nlogn) measurements. In other words, the phase recovery problem can be solved by intersecting two convex sets, without minimizing an objective. We show empirically that two algorithms converge linearly (exponentially fast) toward the solution. We remark that these methods are simpler than methods for PhaseLift because they require less or no parameter tuning. A result subsequent to the posting of this paper has improved the number of required measurements to O(n) by considering an alternative construction of the dual certificate that allows tighter probabilistic bounds [6].

In [2], the authors show that the complex phaseless recovery problem from random measurements is determined if m≥4n−2 (with probability one). This means that the x satisfying |〈x,z i 〉|=|〈x 0,z i 〉| is unique and equal to x 0, regardless of the method used to find it. A corollary of the analysis in [7], and of the present paper, is that this property is stable under perturbations of the data, provided m=O(nlogn). This determinacy is in contrast to compressed sensing and matrix completion, where a prior (sparsity, low-rank) is used to select a solution of an otherwise underdetermined system of equations. The relaxation of this prior (ℓ 1 norm, nuclear norm) is then typically shown to determine the same solution. No such prior is needed here; the semi-definite relaxation helps find the solution, not determine it.

The determinacy of the recovery problem over n×n matrices may be unexpected because there are n 2 unknowns and only O(nlogn) measurements. What compensates for the apparent lack of data is the fact that the matrix we seek has rank one and is thus on the edge of the cone of positive semi-definite matrices. Most perturbed matrices that are consistent with the measurements cease to remain positive semi-definite. In other words, the positive semi-definite cone X⪰0 is “spiky” around a rank-1 matrix X 0. That is, with high probability, particular random hyperplanes that contain X 0 and have large enough codimension will have no other intersection with the cone.

The present paper does not advocate for fully abandoning trace minimization in the context of phase retrieval. The structure of the sensing matrices appears to affect the number of measurements required for recovery. Consider measurements of the form \(\mathbf {x}_{0}^{*} \varPhi \mathbf {x}_{0}\), for some Φ. Numerical simulations (not shown) suggest that O(n 2) measurements are needed if Φ is a matrix with Gaussian i.i.d. entries. On the other hand, it was shown in [19] that minimization of the nuclear norm constrained by Tr\((\mathbf {X}\varPhi) = \mathbf {x}_{0}^{*} \varPhi \mathbf {x}_{0}\) recovers \(\mathbf {x}_{0} \mathbf {x}_{0}^{*}\) with high probability as soon as m=O(nlogn). Other numerical observations (not shown) suggest that it is the symmetric, positive semi-definite character of Φ that allows for optimizationless recovery.

The present paper owes much to [7], as our analysis is very similar to theirs. We wish to also reference the papers [20, 22], where phase recovery is cast as synchronization problem and solved via a semi-definite relaxation of max-cut type over the complex torus (i.e., the magnitude information is first factored out.) The idea of lifting and semi-definite relaxation was introduced very successfully for the max-cut problem in [13]. The paper [20] also introduces a fast and efficient method based on eigenvectors of the graph connection Laplacian for solving the angular synchronization problem. The performance of this latter method was further studied in [3].

1.1 Problem Statement and Main Result

Let \(\mathbf{x}_{0} \in \mathbb {R}^{n}\) or \(\mathbb {C}^{n}\) be a vector for which we have the m measurements \(| \langle \mathbf {x}_{0}, \mathbf {z}_{i} \rangle | = \sqrt{b_{i}}\), for independent sensing vectors z i distributed uniformly on the unit sphere. We write the phaseless recovery problem for x 0 as

where \(A:\mathbb {R}^{n} \to \mathbb {R}^{m}\) is given by A(x) i =|〈x,z i 〉|2, and A(x 0)=b.

Problem (1) can be convexified by lifting it to a matrix recovery problem. Let \(\mathcal {A}\) and its adjoint be the linear operators

where \(\mathcal{H}^{n\times n}\) is the space of n×n Hermitian matrices. Observe that \(\mathcal {A}( \mathbf {x}\mathbf {x}^{*}) = A(\mathbf {x}) \) for all vectors x. Letting \(\mathbf {X}_{0} = \mathbf {x}_{0} \mathbf {x}_{0}^{*}\), we note that \(\mathcal {A}(\mathbf {X}_{0}) = b\). We emphasize that \(\mathcal {A}\) is linear in X whereas A is nonlinear in x.

The matrix recovery problem we consider is

Without the positivity constraint, there would be multiple solutions whenever \(m < \frac{(n+1)n}{2}\). We include the constraint in order to allow for recovery in this classically underdetermined regime.

Our main result is that the matrix recovery problem (2) has a unique solution when there are O(nlogn) measurements.

Theorem 1

Let \(\mathbf {x}_{0} \in \mathbb {R}^{n}\) or \(\mathbb {C}^{n}\) and \(\mathbf {X}_{0} = \mathbf {x}_{0} \mathbf {x}_{0}^{*}\). Let m≥cnlogn for a sufficiently large c. With high probability, X=X 0 is the unique solution to X⪰0 and \(\mathcal {A}(\mathbf {X}) = b\). This probability is at least \(1 - e^{-\gamma\frac{m}{n}}\), for some γ>0.

As a result, the phaseless recovery problem has a unique solution, up to a global phase, with O(nlogn) measurements. In the real-valued case, the problem is determined up to a minus sign.

Corollary 2

Let \(\mathbf {x}_{0} \in \mathbb {R}^{n}\) or \(\mathbb {C}^{n}\). Let m≥cnlogn for a sufficiently large c. With high probability, {e iϕ x 0} are the only solutions to A(x)=b. This probability is at least \(1 - e^{-\gamma\frac{m}{n}}\), for some γ>0.

Theorem 1 suggests ways of recovering x 0. If an \(\mathbf {X}\in\{\mathbf {X}\succeq 0\} \cap\{\mathbf {X}\mid \mathcal {A}( \mathbf {X}) = \mathbf {b}\}\) can be found, x 0 is given by the leading eigenvector of X. See Sect. 6 for more details on how to find X.

1.2 Stability Result

In practical applications, measurements are contaminated by noise. To show stability of optimizationless recovery, we consider the model

where ν corresponds to a noise term with bounded ℓ 2 norm, ∥ν∥2≤ε. The corresponding noisy variant of (1) is

We note that all three terms in (3) scale quadratically in x or x 0.

Problem (3) can be convexified by lifting it to the space of matrices. The noisy matrix recovery problem is

We show that all feasible X are within an O(ε) ball of X 0 provided there are O(nlogn) measurements.

Theorem 3

Let \(\mathbf {x}_{0} \in \mathbb {R}^{n}\) or \(\mathbb {C}^{n}\) and \(\mathbf {X}_{0} = \mathbf {x}_{0} \mathbf {x}_{0}^{*}\). Let m≥cnlogn for a sufficiently large c. With high probability,

for some C>0. This probability is at least \(1 - e^{-\gamma\frac {m}{n}}\), for some γ>0.

As a result, the phaseless recovery problem is stable with O(nlogn) measurements.

Corollary 4

Let \(\mathbf {x}_{0} \in \mathbb {R}^{n}\) or \(\mathbb {C}^{n}\). Let m≥cnlogn for a sufficiently large c. With high probability,

for some ϕ∈[0,2π), and for some C>0. This probability is at least \(1 - e^{-\gamma\frac{m}{n}}\), for some γ>0.

Theorem 3 ensures that numerical methods can be used to find X. See Sect. 6 for ways of finding \(\mathbf {X}\in\{\mathbf {X}\succeq 0\} \cap\{\mathcal {A}(\mathbf {X}) \approx \mathbf {b}\}\). As the recovered matrix may have large rank, we approximate x 0 with the leading eigenvector of X.

1.3 Organization of this Paper

In Sect. 2, we prove a lemma containing the central argument for the proof of Theorem 1. Its assumptions involve ℓ 1-isometry properties and the existence of an inexact dual certificate. Section 2.3 provides the proof of Theorem 1 in the real-valued case. It cites [7] for the ℓ 1-isometry properties and Sect. 3 for existence of an inexact dual certificate. In Sect. 3 we construct an inexact dual certificate and show that it satisfies the required properties in the real-valued case. In Sect. 4 we prove Theorem 3 on stability in the real-valued case. In Sect. 5, we discuss the modifications in the complex-valued case. In Sect. 6, we present computational methods for the optimizationless problem with comparisons to PhaseLift. We also simulate them to establish stability empirically.

1.4 Notation

We use boldface for variables representing vectors or matrices. We use normal typeface for scalar quantities. Let z i,k denote the kth entry of the vector z i . For two matrices, let 〈X,Y〉=Tr(Y ∗ X) be the Hilbert-Schmidt inner product. Let σ i be the singular values of the matrix X. We define the norms

In particular, we write the Frobenius norm of X as ∥X∥2. We write the spectral norm of X as ∥X∥.

An n-vector x generates a decomposition of \(\mathbb {R}^{n}\) or \(\mathbb {C}^{n}\) into two subspaces. These subspaces are the span of x and the span of all vectors orthogonal to x. Abusing notation, we write these subspaces as x and x ⊥. The space of n-by-n matrices is correspondingly partitioned into the four subspaces x ⊗ x, x ⊗ x ⊥, x ⊥ ⊗ x, and x ⊥ ⊗ x ⊥, where ⊗ denotes the outer product. We write T x for the set of symmetric matrices which lie in the direct sum of the first three subspaces, namely \(T_{\mathbf {x}}= \{ \mathbf {x}\mathbf {y}^{*} + \mathbf {y}\mathbf {x}^{*} \mid \mathbf {y}\in \mathbb {R}^{n} \text { or } \mathbb {C}^{n}\}\). Correspondingly, we write \(T^{\perp}_{\mathbf {x}}\) for the set of symmetric matrices in the fourth subspace. We note that \(T^{\perp}_{\mathbf {x}}\) is the orthogonal complement of T x with respect to the Hilbert-Schmidt inner product. Let e 1 be the first coordinate vector. For short, let \(T = T_{\mathbf {e}_{1}}\) and \(T^{\bot}= T^{\bot}_{\mathbf {e}_{1}}\). We denote the projection of X onto T as either \(\mathcal{P}_{T} \mathbf {X}\) or X T . We denote projections onto T ⊥ similarly.

We let I be the n×n identity matrix. We denote the range of \(\mathcal {A}^{*}\) by \(\mathcal{R}( \mathcal {A}^{*})\).

2 Proof of Main Result

Because of scaling and the property that the measurement vectors z i come from a rotationally invariant distribution, we take x 0=e 1 without loss of generality. Because all measurements scale with the length ∥z i ∥2, it is equivalent to establish the result for independent unit normal sensing vectors z i . To prove Theorem 1, we use an argument based on inexact dual certificates and ℓ 1-isometry properties of \(\mathcal {A}\). This argument parallels that of [7]. We directly use the ℓ 1-isometry properties they establish, but we require different properties on the inexact dual certificate.

2.1 About Dual Certificates

As motivation for the introduction of an inexact dual certificate in the next section, observe that if \(\mathcal {A}\) is injective on T, and if there exists a (exact) dual certificate \(\mathbf {Y}\in\mathcal{R}(\mathcal {A}^{*})\) such that

then X 0 is the only solution to \(\mathcal {A}(\mathbf {X}) = \mathbf {b}\). This is because

where the first equality is because \(\mathbf {Y}\in\mathcal{R}(\mathcal {A}^{*})\) and \(\mathcal {A}(\mathbf {X}) = \mathcal {A}(\mathbf {X}_{0})\). The last implication follows from injectivity on T.

Conceptually, Y arises as a Lagrange multiplier, dual to the constraint X⪰0 in the feasibility problem

Dual feasibility requires Y⪰0. As visualized in Fig. 1a, Y acts as a vector normal to a codimension-1 hyperplane that separates the lower-dimensional space of solutions \(\{\mathcal {A}(\mathbf {X}) = b\}\) from the positive matrices not in T. The condition \(\mathbf {Y}_{T^{\perp}} \succ 0\) is further needed to ensure that this hyperplane only intersects the cone along T, ensuring uniqueness of the solution.

The graphical interpretation of the exact and inexact dual certificates. The positive axes represent the cone of positive matrices. The thick gray line represents the solutions to \(\mathcal {A}(\mathbf {X}) = \mathbf {b}\). The exact dual certificate Y is a normal vector to a hyperplane that separates the space of solutions from positive matrices. When the dual certificate is inexact, we use the fact that ℓ 1-isometry properties imply X is restricted to the cone (10). The inexact dual certificate \(\bar {\mathbf {Y}}\) is normal to a hyperplane that separates X 0 from the rest of this restricted cone. As shown, the hyperplane normal to \(\bar {\mathbf {Y}}\) does not separate X 0 from positive matrices

The nullspace condition Y T =0 is what makes the certificate exact. As \(\mathbf {Y}\in\mathcal{R}(\mathcal {A}^{*})\), Y must be of the form \(\sum_{i} \lambda _{i} \mathbf {z}_{i} \mathbf {z}_{i}^{*}\). The strict requirement that Y T =0 would force the λ i to be complicated (at best algebraic) functions of all the z j , j=1,…,m. We follow [7] in constructing instead an inexact dual certificate, such that Y T is close to but not equal to 0, and for which the λ i are more tractable (quadratic) polynomials in the z i . A careful inspection of the injectivity properties of \(\mathcal {A}\), in the form of the RIP-like condition in [7], is what allows the relaxation of the nullspace condition on Y.

2.2 Central Lemma on Inexact Dual Certificates

With further information about feasible X, we can relax the property that Y T is exactly zero. In [7], the authors show that all feasible X lie in a cone that is approximately \(\{ \|\mathbf {X}_{T^{\perp}}\|_{1} \geq\|\mathbf {X}_{T}- \mathbf {X}_{0}\|\}\), provided there are O(n) measurements. As visualized in Fig. 1b, \(\bar {\mathbf {Y}}\) acts as a vector normal to a hyperplane that separates X 0 from the rest of this cone. The proof of Theorem 1 hinges on the existence of such an inexact dual certificate, along with ℓ 1-isometry properties that establish X is in this cone with high probability.

Lemma 1

Suppose that \(\mathcal {A}\) satisfies

for some δ≤1/9. Suppose that there exists \(\bar {\mathbf {Y}}\in \mathcal{R} (\mathcal {A}^{*})\) satisfying

Then, X 0 is the unique solution to (2).

Proof of Lemma 1

Let X solve (2), and let H=X−X 0. We start by showing, as in [7], that the ℓ 1-isometry conditions (7)–(8) guarantee solutions lie on the cone

This is because

where the equality comes from \(0 = \mathcal {A}(\mathbf {H}) = \mathcal {A}(\mathbf {H}_{T}) + \mathcal {A}(\mathbf {H}_{T^{\perp}})\), and the two inequalities come from the ℓ 1-isometry properties (7)–(8) and the fact that \(\mathbf {H}_{T^{\perp}} \succeq 0\).

Because \(\mathcal {A}(\mathbf {H}) = 0\) and \(\bar {\mathbf {Y}}\in\mathcal{R}(\mathcal {A}^{*})\),

where (11) and (12) follow from (9) and (10), respectively. Because the constant in (12) is positive, we conclude H T =0. Then, (11) establishes \(\mathbf {H}_{T^{\perp}}= 0\). □

2.3 Proof of Theorem 1 and Corollary 2

We use Lemma 1 to prove Theorem 1 for real-valued signals.

Proof of Theorem 1

We need to show that (7)–(9) hold with high probability if m>cnlogn for some c. Lemmas 3.1 and 3.2 in [7] show that (7) and (8) both hold with probability of at least \(1 - 3 e^{-\gamma_{1} m}\) provided m>c 1 n for some c 1. In section 3, we construct \(\bar {\mathbf {Y}}\in\mathcal{R}({\mathcal {A}^{*}})\). As per Lemma 2, \(\| \bar {\mathbf {Y}}_{T}\|_{1} \leq1/2\) with probability at least \(1 - e^{-\gamma_{2} m / n}\) if m>c 2 n. As per Lemma 3, \(\| \bar {\mathbf {Y}}_{T^{\perp}}- 2 \mathbf {I}_{T^{\perp}}\| \leq1\) with probability at least \(1 - 2 e^{-\gamma_{2} m / \log n}\) if m>c 3 nlogn. Hence, \(\bar {\mathbf {Y}}_{T^{\perp}} \succeq \mathbf {I}_{T^{\perp}}\) with at least the same probability. Hence, all of the conditions of Lemma 1 hold with probability at least 1−e −γm/n if m>cnlogn for some c and γ. □

The proof of Corollary 2 is immediate because, with high probability, Theorem 1 implies

3 Existence of Inexact Dual Certificate

To use Lemma 1 in the proof of Theorem 1, we need to show that there exists an inexact dual certificate satisfying (9) with high probability. Our inexact dual certificate vector is different from that in [7], but we use identical tools for its construction and analysis. We also adopt similar notation.

We note that \(\mathcal {A}^{*} \mathcal {A}( \mathbf {X}) = \sum_{i} \langle \mathbf {X}, \mathbf {z}_{i} \mathbf {z}_{i}^{*} \rangle \mathbf {z}_{i} \mathbf {z}_{i}^{*}\), which can alternatively be written as

We let \(\mathcal {S}= \mathbb {E}[\mathbf {z}_{i} \mathbf {z}_{i}^{*} \otimes \mathbf {z}_{i} \mathbf {z}_{i}^{*}]\). The operator \(\mathcal {S}\) is invertible. It and its inverse are given by

We define the inexact dual certificate

where

Alternatively, we can write the inexact dual certificate vector as

where \((\mathbf {1}_{E})_{i} = 1_{E_{i}}\) and ∘ is the elementwise product of vectors. In our notation, truncated quantities have overbars. We subsequently omit the subscript i in z i when it is implied by context.

3.1 Motivation for the Dual Certificate

For ease of understanding, we first consider a candidate dual certificate given by

The motivation for this candidate is twofold: \(\widetilde{\mathbf {Y}} \in \mathcal{R}(\mathcal {A}^{*})\), and \(\widetilde{\mathbf {Y}} \approx2(\mathbf {I}- \mathbf {e}_{1} \mathbf {e}_{1}^{*})\) as m→∞ because \(\mathbb {E}[ \mathcal {A}^{*} \mathcal {A}] = m \mathcal{S}\). In this limit, \(\widetilde{Y}\) becomes an exact dual certificate. For finite m, it should be close but inexact. We can write

where Y i is an independent sample of the random matrix

where \(\mathbf {z}\sim\mathcal{N}(0, \mathbf {I})\). Because the vector Bernstein inequality requires bounded vectors, we truncate the dual certificate in the same manner as [7]. That is, we consider \(1_{E_{i}} \mathbf {Y}_{i}\), completing the derivation of (14).

3.2 Bounds on \(\bar {\mathbf {Y}}\)

We define \(\pi(\beta) = \mathbb {P}(E^{c})\), where E is the event given by

where \(\mathbf {z}\sim\mathcal{N}(0, \mathbf {I})\). In [7], the authors provide the bound \(\pi(\beta) \leq \mathbb {P}( |z_{1}| > \sqrt{2 \beta\log n}) + \mathbb {P}(\|\mathbf {z}\|_{2}^{2} > 3n) \leq n^{-\beta} + e^{-n/3}\), which holds if 2βlogn≥1.

We now present two lemmas that establish that \(\bar {\mathbf {Y}}\) is approximately \(2(\mathbf {I}- \mathbf {e}_{1} \mathbf {e}_{1}^{*})\), and is thus an inexact dual certificate satisfying (9).

Lemma 2

Let \(\bar {\mathbf {Y}}\) be given by (14). There exists positive γ and c such that for sufficiently large n

if m≥cn.

Lemma 3

Let \(\bar {\mathbf {Y}}\) be given by (14). There exists positive γ and c such that for sufficiently large n

if m≥cnlogn.

3.3 Proof of Lemma 2: \(\bar {\mathbf {Y}}\) on T

We prove Lemma 2 in a way that parallels the corresponding proof in [7]. Observe that

where the first inequality follows because \(\bar {\mathbf {Y}}_{T}\) has rank at most 2, and the second inequality follows because \(\bar {\mathbf {Y}}_{T}\) can be nonzero only in its first row and column. We can write

where \(\bar {\mathbf {y}}_{i} = \mathbf {y}_{i} 1_{E_{i}}\), and y i are independent samples of

To bound the ℓ 2 norm of \(\bar {\mathbf {Y}}_{T}\mathbf {e}_{1}\), we use the Vector Bernstein inequality on \(\bar {\mathbf {y}}_{i}\).

Theorem 5

(Vector Bernstein inequality)

Let x i be a sequence of independent random vectors and set \(V \geq\sum_{i} \mathbb {E}\|\mathbf {x}_{i}\|_{2}^{2}\). Then for all t≤V/max∥x i ∥2, we have

In order to apply this inequality, we need to compute \(\max \| \bar {\mathbf {y}}\|_{2}\), \(\mathbb {E}\bar {\mathbf {y}}\), and \(\mathbb {E}\| \bar {\mathbf {y}}\|_{2}\), where \(\bar {\mathbf {y}}= \mathbf {y}1_{E}\).

First, we compute \(\max\| \bar {\mathbf {y}}\|_{2}\). On the event E, \(|z_{1}| \leq \sqrt{2 \beta\log n}\) and \(\|\mathbf {z}\|_{2} \leq\sqrt{3 n}\). If n is large enough that 2βlogn≥9, then |ξ|≤2βlogn. Thus,

for sufficiently large n.

Second, we find an upper bound for \(\mathbb {E}\bar {\mathbf {y}}\). Note that \(\mathbb {E}y_{1} = 0\) because

By symmetry, every entry of \(\bar {\mathbf {y}}\) has zero mean except the first. Hence,

Computing,

we find

where we have used

Thus,

Third, we find an upper bound for \(\mathbb {E}\| \bar {\mathbf {y}}\|_{2}^{2}\). Because \(\| \bar {\mathbf {y}}\|_{2}^{2} \leq\| \mathbf {y}\|_{2}^{2}\), we write out

Hence,

where we have used (20), (21), and

Applying the vector Bernstein inequality with V=m(8n+16), we have that for all \(t \leq(8n+16) / [ \sqrt{24n}(\beta\log n)^{3/2}]\),

Using the triangle inequality and (22), we get

Lemma 2 follows by choosing t,β, and m≥cn where n and c are large enough that

3.4 Proof of Lemma 3: \(\bar {\mathbf {Y}}\) on T ⊥

We prove Lemma 3 in a way that parallels the corresponding proof in [7]. We write

where W i are independent samples of

We decompose W into the three terms

Letting \(\bar {\mathbf {W}}^{(k)}_{i} = \mathbf {W}^{(k)}_{i} 1_{E_{i}}\), it suffices to show that with high probability

3.4.1 Bound on \(\mathbf {I}_{T^{\perp}}1_{E_{i}^{c}}\)

We show that \(m^{-1} \| \sum_{i} \mathbf {I}_{T^{\perp}}1_{E_{i}^{c}}\| = m^{-1} \sum_{i} 1_{E_{i}^{c}} \) is small with probability at least 1−2e −γm for some constant γ>0. To do this, we use the scalar Bernstein inequality.

Theorem 6

(Bernstein inequality)

Let {X i } be a finite sequence of independent random variables. Suppose that there exists V and c such that for all X i and all k≥3,

Then for all t≥0,

Observing that \(\mathbb {E}| 1_{E_{i}^{c}}|^{k} = \mathbb {E}1_{E_{i}^{c}} = \pi(\beta)\), we apply the Bernstein inequality with V=π(β)m and c 0=1/3. Thus,

Using the triangle inequality and taking t and β such that π(β)+t≤1/8 for sufficiently large n, we get

for a γ>0.

3.4.2 Bound on \(\bar {\mathbf {W}}^{(0)}\)

We show \(m^{-1} \|\sum_{i} \bar {\mathbf {X}}^{(0)}\|\) is small with probability at least 1−2exp(−γ/logn). We write this norm as a supremum over all unit vector perpendicular to e 1:

To control the supremum, we follow the same reasoning as in [7]. We bound \(\sum_{i} \langle \mathbf {u}, \bar {\mathbf {W}}^{(0)}_{i} \mathbf {u}\rangle \) for fixed u and apply a covering argument over the sphere of u’s. We write

where η i are independent samples of

To apply the scalar Bernstein inequality, we compute \(\mathbb {E}| \eta1_{E} |^{k}\). Because u⊥e 1, z 1 and 〈z,u〉 are independent. Hence,

Bounding the first factor, we get

Observing that 〈z,u〉 is a chi-squared variable with one degree of freedom, we have

Applying the scalar Bernstein inequality with V=16m and c 0=4βlogn, we get

Because \(\mathbb {E}\eta_{i} = 0\), we get

where we have used \(\mathbb {E}(1-z_{1}^{2})^{2} = 2\), and \(\mathbb {E}|\langle \mathbf {z}, \mathbf {u}\rangle |^{4} = 3\). Hence,

Taking t,β,m≥c 1 n with n large enough so that \(t + 2 \sqrt{\pi(\beta)} \leq1/8\), we have

for some γ′>0. To complete the bound on (31), we use Lemma 4 in [21]:

where \(\mathcal{N}_{1/4}\) is a 1/4-net of the unit sphere of vectors u⊥e 1. As \(| \mathcal{N}_{1/4}| \leq9^{n}\), a union bound gives

Hence,

for some γ>0.

3.4.3 Bounds on \(\bar {\mathbf {W}}^{(1)}\) and \(\bar {\mathbf {W}}^{(2)}\)

The bound for the \(\|\sum_{i} \bar {\mathbf {W}}^{(1)}\|\) term is similar. We write

where η i are independent samples of

We can bound \(\mathbb {E}| \eta_{i} 1_{E} |^{k} \leq12^{k} k!\) because \(\|\mathbf {z}\|_{2}^{2} \leq3n\) on E. Applying the scalar Bernstein inequality with c 0=12 and V=288m gives

The rest of the bound is similar to that of \(\|\sum_{i} \bar {\mathbf {X}}^{(0)}\|\) above.

Finally, we also bound \(\|\sum_{i} \bar {\mathbf {W}}^{(2)}\|\) similarly. We write

where η i are independent samples of

Observing that

we apply the scalar Bernstein inequality with c 0=4 and V=32m, giving

The rest of the bound is as above.

4 Stability

We now prove Theorem 3, establishing the stability of the matrix recovery problem (4). We also prove Corollary 4, establishing the stability of the vector recovery problem (3). As in the exact case, the proof of Theorem 3 hinges on the ℓ 1-isometry properties (7)–(8) and the existence of an inexact dual certificate satisfying (9). For stability, we use the additional property that \(\mathbf {Y}= \mathcal {A}^{*} \lambda\) for a λ controlled in ℓ 2. It suffices to establish an analogue of Lemma 1 along with a bound on ∥λ∥2.

Lemma 4

Suppose that \(\mathcal {A}\) satisfies (7)–(8) and there exists \(\mathbf {Y}= \mathcal {A}^{*} \lambda\) satisfying (9) and ∥λ∥1≤5. Then,

for some C>0.

Proof of Lemma 4

As before, we take x 0=e 1 and \(\mathbf {X}_{0} = \mathbf {e}_{1} \mathbf {e}_{1}^{*}\) without loss of generality. Consider any X⪰0 such that \(\|\mathcal {A}(\mathbf {X}) - \mathbf {b}\|_{2}\leq \varepsilon \), and let H=X−X 0. Whereas \(\mathcal {A}(\mathbf {H}) = 0\) in the noiseless case, it is now of order ε because

Similarly, |〈H,Y〉| is also of order ε because

Analogous to the proof of Lemma 1, we use (9) to compute that

Using the ℓ 1-isometry properties (7)–(8), we have

Thus (33) becomes

which, along with (34), implies

for some C 0,C 1>0. Recalling that H T has rank at most 2,

□

4.1 Dual Certificate Property

It remains to show ∥λ∥1≤5 for \(\bar {\mathbf {Y}}= \mathcal {A}^{*} \lambda\). From (17), we identify \(\lambda = m^{-1} (\mathbf {1}_{E} \circ \mathcal {A}\mathcal {S}^{-1}2(\mathbf {I}- \mathbf {e}_{1} \mathbf {e}_{1}^{*}))\). Computing,

where (37) follows from (13), and (38) follows from the triangle inequality and the ℓ 1-isometry property (7). Hence ∥λ∥1≤5.

4.2 Proof of Corollary 4

Now we prove Corollary 4, showing that stability of the lifted problem (4) implies stability of the unlifted problem (3). As before, we take x 0=e 1 without loss of generality. Hence ∥X 0∥2=1. Lemma 4 establishes that ∥X−X 0∥≤C 0 ε. Recall that \(\mathbf {X}_{0} = \mathbf {x}_{0} \mathbf {x}_{0}^{*}\). Decompose \(X = \sum_{j} \lambda_{j} \mathbf {v}_{j} \mathbf {v}_{j}^{t}\) with unit-normalized eigenvectors v j sorted by decreasing eigenvalue. By Weyl’s perturbation theorem,

Writing

we use the triangle inequality to form the spectral bound

Noting that

we conclude

5 Complex Case

The proof of Theorems 1 and 3 are analogous to the complex-valued cases. There are a few minor differences, as outlined and proved in [7]. The sensing vectors are assumed to be of the form \(\Re \mathbf {z}_{i} \sim\mathcal {N}(0, \mathbf {I})\) and \(\Im \mathbf {z}_{i} \sim\mathcal{N}(0, \mathbf {I})\). The ℓ 1-isometry conditions for complex \(\mathcal {A}\) have weaker constants. Lemma 1 becomes

Lemma 5

Suppose that \(\mathcal {A}\) satisfies

for some δ≤3/13. Suppose that there exists \(\bar {\mathbf {Y}}\in \mathcal{R} (\mathcal {A}^{*})\) satisfying

Then, X 0 is the unique solution to (2).

The proof of this lemma is identical to the real-valued case. The conditions of the lemma are satisfied with high probability, as before.

The construction of the inexact dual certificate is slightly different because \(\mathcal{S}(\mathbf {X}) = \mathbf {X}+ \text {Tr}(\mathbf {X}) \mathbf {I}\) and \(\mathcal {S}^{-1}(\mathbf {X}) = \mathbf {X}- \frac {1}{n+1} \text {Tr}(\mathbf {X}) \mathbf {I}\). As a result

The remaining modifications are identical to those in [7], and we refer interested readers there for details.

6 Numerical Simulations

In this section, we show that the optimizationless perspective allows for additional numerical algorithms that are unavailable for PhaseLift directly. These methods give rise to simpler algorithms with less or no parameter tuning. We demonstrate successful recovery under Douglas-Rachford and Nesterov algorithms, and we empirically show that the convergence of these algorithms is linear.

6.1 Optimization Framework

From the perspective of nonsmooth optimization, PhaseLift and the optimizationless feasibility problem can be viewed as a two-term minimization problem

See, for example, the introduction to [18]. Numerical methods based on this splitting include Forward-Backward, ISTA, FISTA, and Douglas-Rachford [4, 9, 10, 18]. If F is smooth, it enables a forward step based on a gradient descent. Nonsmooth terms admit backward steps involving proximal operators. We recall that the proximal operator for a function G is given by

and we note that the proximal operator for a convex indicator function is the projector onto the indicated set.

PhaseLift can be put in this two-term form by softly enforcing the data fit. That gives the minimization problem

where ι X⪰0 is the indicator function that is zero on the positive semidefinite cone and infinite otherwise, and where λ is small and positive. If λ=0, (43) reduces to the optimizationless feasibility problem. The smoothness of F enables methods that are forward on F and backward on G. As a representative of this class of methods, we will consider a Nesterov iteration for our simulations below.

The optimizationless view suggests the splitting

where the data fit term is enforced in a hard manner by the indicator function \(\iota _{\mathcal {A}(\mathbf {X})= \mathbf {b}}\). Because of the lack of smoothness, we can only use the proximal operators for F and G. These operators are projectors on to the affine space \(\mathcal {A}(\mathbf {X}) = \mathbf {b}\) and X⪰0, which we denote by \(\mathcal {P}_{\mathcal {A}(\mathbf {X}) = \mathbf {b}}\) and \(\mathcal {P}_{\text{psd}}\), respectively.

The simplest method for (44) is Projection onto Convex Sets (POCS), which is given by the backward-backward iteration \(\mathbf {X}_{n+1} = \mathcal {P}_{\text{psd}} \mathcal {P}_{\mathcal {A}(\mathbf {X}) = \mathbf {b}}\mathbf {X}_{n}\). Douglas-Rachford iteration often gives superior performance than POCS, so we consider it as a representative of this class of backward-backward methods.

A strength of the optimizationless perspective is that it does not require as much parameter tuning as PhaseLift. For example, formulation (43) requires a numerical choice for λ. Nonzero λ will generally change the minimizer. It is possible to consider a sequence of problems with varying λ, or perhaps to create a schedule of λ within a problem, but these considerations are unnecessary because the optimizationless perspective says we can take λ=0. In particular, formulation (44) has the further strength of requiring no parameters at all.

We note that PhaseLift could alternatively give rise to the two-term splitting

where the data fit term is enforced in a hard manner. An iterative approach with this splitting would have an inner loop which approximates the proximal operator of G. This inner iteration is equivalent to solving the optimizationless problem.

6.2 Numerical Results

First, we present a Douglas-Rachford [9] approach for finding \(\mathbf {X}\in\{\mathbf {X}\succeq 0\} \cap\{ \mathcal {A}(\mathbf {X}) \approx \mathbf {b}\}\) by the splitting (44). It is given by the iteration

where \(\mathcal {P}_{\text{psd}}\) is the projector onto the positive semi-definite cone of matrices, and \(\mathcal {P}_{\mathcal {A}(\mathbf {X}) = \mathbf {b}}\) is the projector onto the affine space of solutions to \(\mathcal {A}(\mathbf {X}) = \mathbf {b}\). In the classically underdetermined case, \(m < \frac{(n+1)n}{2}\), we can write

In the case that \(m \geq\frac{(n+1)n}{2}\), we interpret \(\mathcal {P}_{\mathcal {A}(\mathbf {X}) = \mathbf {b}}\) as the least squares solution to \(\mathcal {A}(\mathbf {X}) = b\).

Second, we present a Nesterov gradient-based method for solving the problem (43). Letting \({g(\mathbf {X}) = \frac{1}{2} \| \mathcal {A}(\mathbf {X}) - \mathbf {b}\|^{2} + \lambda\ \text {tr} (\mathbf {X})}\), we consider the following Nesterov iteration [5] with constant step size α:

For our simulations, we consider \(\mathbf {x}_{0} \in \mathbb {R}^{n}\) sampled uniformly at random from the unit sphere. We take independent, real-valued \(\mathbf {z}_{i} \sim\mathcal{N}(0,\mathbf {I})\), and let the measurements b be subject to additive Gaussian noise corresponding to ε=1/10. We let n vary from 5 to 50 and let m vary from 10 to 250. We define the recovery error as ∥X−X 0∥2/∥X 0∥2.

Figure 2 shows the average recovery error for the optimizationless problem under the Douglas-Rachford method and the Nesterov method over a range of values of n and m. For the Nesterov method, we consider the optimizationless case of λ=0, and we let the step size parameter α=2×10−4. Each pair of values was independently sampled 10 times, and both methods were run for 1000 iterations. The plot shows that the number of measurements needed for recovery is approximately linear in n, significantly lower than the amount for which there are an equal number of measurements as unknowns. The artifacts around the curve \(m = \frac {n(n+1)}{2}\) appear because the problem is critically determined, and the only solution to the noisy \(\mathcal {A}(\mathbf {X}) = \mathbf {b}\) is not necessarily positive in that case.

Recovery error for the Douglas-Rachford (DR) and Nesterov methods for the noisy optimizationless matrix recovery problem (4) as a function of n and m. In these plots, ε=10−1. For the Nesterov method, λ=0. Black represents an average recovery error of 100 %. White represents zero average recovery error. Each block corresponds to the average of 10 independent samples. The solid curve depicts when there are the same number of measurements as degrees of freedom. The number of measurements required for recovery appears to be roughly linear, as opposed to quadratic, in n. The DR algorithm has large recovery errors near the curve where the number of measurements equals the number of degrees of freedom

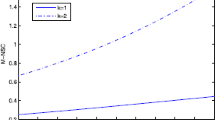

Figure 3 shows recovery error versus iteration number under the Douglas-Rachford method, the Nesterov method for λ=0 and the Nesterov method for λ=10−5. For the Nesterov methods, we let the step size parameter be α=10−4. For noisy data, convergence is initially linear until it tapers off around the noise level. For noiseless data, convergence for feasibility problem is linear under both the Douglas-Rachford and Nesterov methods. The Nesterov implementation of PhaseLift shows initial linear convergence until it tapers off. Because any nonzero λ allows for some data misfit in exchange for a smaller trace, the computed minimum is not X 0 and the procedure converges to some nearby matrix. The convergence rates of the Nesterov method could probably be improved by tuning the step-sizes in a more complicated way. Nonetheless, we observe that the Douglas-Rachford method exhibits a favorable convergence rate while requiring no parameter tuning.

The relative error versus iteration number for the noiseless and noisy matrix recovery problems, (2) and (4), under the Douglas-Rachford and Nesterov methods. The left panel corresponds to the iteration (46)–(48). The middle panel corresponds to the iteration (49)–(53) in the optimizationless case, where λ=0. The right panel corresponds to the iteration (49)–(53) for λ=10−5. As expected, convergence is linear until it saturates due to noise. In the Nesterov implementation of PhaseLift, convergence is linear until it saturates because the nonzero λ makes the solution to the problem different than X 0

We would like to remark that work subsequent to this paper shows that the number of measurements needed by the optimizationless feasibility problem is about the same as the number needed by PhaseLift [22]. That is, the phase transition in Fig. 2 occurs in about the same place for both problems.

References

Balan, R., Bodmann, B., Casazza, P.G., Edidin, D.: Painless reconstruction from magnitudes of frame vectors. J. Fourier Anal. Appl. 15(4), 488–501 (2009)

Balan, R., Casazza, P., Edidin, D.: On signal reconstruction without phase. Appl. Comput. Harmon. Anal. 20(3), 345–356 (2006)

Bandeira, A.S., Singer, A., Spielman, D.A.: A Cheeger inequality for the graph connection Laplacian. To appear in SIAM J. Matrix Anal. Appl.

Beck, A., Teboulle, M.: A fast iterative Shrinkage-Thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2(1), 183–202 (2009)

Candes, E.J., Eldar, Y.C., Strohmer, T., Voroninski, V.: Phase retrieval via matrix completion. SIAM J. Imaging Sci. 6(1), 199–225 (2011)

Candes, E.J., Li, X.: Solving quadratic equations via phaselift when there are about as many equations as unknowns. To appear in Found. Comput. Math. (2012)

Candes, E.J., Strohmer, T., Voroninski, V.: PhaseLift: exact and stable signal recovery from magnitude measurements via convex programming. Commun. Pure Appl. Math. 66(8), 1241–1274 (2013)

Chai, A., Moscoso, M., Papanicolaou, G.: Array imaging using intensity-only measurements. Inverse Probl. 27(1), 015005 (2011)

Combettes, P., Pesquet, J.: Proximal splitting methods in signal processing. In: Fixed-Point Algorithms for Inverse Problems in Science and Engineering. Springer, New York (2010)

Douglas, J., Rachford, H.H.: On the numerical solution of heat conduction problems in two and three space variables. Trans. Am. Math. Soc. 82(2), 421–439 (1956)

Fienup, J.R.: Phase retrieval algorithms: a comparison. Appl. Opt. 21(15), 2758–2769 (1982)

Gerchberg, R., Saxton, W.: A practical algorithm for the determination of phase from image and diffraction plane pictures. Optik 35, 237–246 (1972)

Goemans, M.X., Williamson, D.P.: Improved approximation algorithms for maximum cut and satisfiability problems using semi-definite programming. J. ACM 42, 1115–1145 (1995)

Griffin, D., Lim, J.: Signal estimation from modified short-time Fourier transform. IEEE Trans. Acoust. Speech Signal Process. 32(2), 236–243 (1984)

Harrison, R.W.: Phase problem in crystallography. J. Opt. Soc. Am. A 10(5), 1045–1055 (1993)

Miao, J., Ishikawa, T., Shen, Q., Earnest, T.: Extending X-ray crystallography to allow the imaging of non-crystalline materials, cells and single protein complexes. Annu. Rev. Phys. Chem. 59, 387–410 (2008)

Millane, R.P.: Phase retrieval in crystallography and optics. J. Opt. Soc. Am. A 7, 394–411 (1990)

Raguet, H., Fadili, J.M., Peyre, G.: A generalized forward-backward splitting. SIAM J. Imaging Sci. 6(3), 1199–1226 (2013)

Recht, B., Fazel, M., Parrilo, P.: Guaranteed minimum-rank solutions of linear matrix equations via nuclear norm minimization. SIAM Rev. 52(3), 471–501 (2010)

Singer, A.: Angular synchronization by eigenvectors and semidefinite programming. Appl. Comput. Harmon. Anal. 30(1), 20–36 (2011)

Vershynin, R.: Introduction to the non-asymptotic analysis of random matrices. In: Eldar, Y.C., Kutyniok, G. (eds.) Compressed Sensing: Theory and Applications. Camb. Univ Press, Cambridge (2010)

Waldspurger, I., d’Aspremont, A., Mallat, S.: Phase recovery, Maxcut, and complex semi-definite programming. Preprint (2012). arXiv:1206.0102

Walther, A.: The question of phase retrieval in optics. Opt. Acta 10, 41–49 (1963)

Acknowledgements

The authors acknowledge generous funding from the National Science Foundation, the Alfred P. Sloan Foundation, TOTAL S.A., and the Air Force Office of Scientific Research. The authors would also like to thank Xiangxiong Zhang for helpful discussions.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Peter Casazza.

Rights and permissions

About this article

Cite this article

Demanet, L., Hand, P. Stable Optimizationless Recovery from Phaseless Linear Measurements. J Fourier Anal Appl 20, 199–221 (2014). https://doi.org/10.1007/s00041-013-9305-2

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00041-013-9305-2