Abstract

Cooperative behavior represents a situation in which individuals sometimes act in a way that produces a gain to another at a cost to themselves. This may be explained by a history of repeated interactions with others in which such behavior has resulted in reciprocal cooperation from others. Sometimes, even with reciprocal cooperation, gains and costs are unbalanced between partners. In this case, there is evidence that people may present an aversion to both disadvantageous and advantageous distributions of gains. In other words, they may act in such a way as to ensure an equal outcome among all group members. Aversion to inequity that benefits oneself (advantageous inequity (AI) aversion) may be more dependent on social and cultural cues than aversion to inequity that benefits others (disadvantageous inequity (DI) aversion). Using both between-subjects (Experiment 1) and within-subjects (Experiment 2) manipulations, the influence of recent experience with AI on participants’ willingness to produce DI was explored within the context of a two-player card game. In initial game phases, the percentage of trials in which the participant experienced AI was manipulated. In subsequent game phases, participants had the opportunity to produce DI to themselves. The results from both experiments suggest that aversion to DI is reduced by recent experience with AI. This procedure allows social influences on DI to be tested, which may be important for providing a psychological explanation of cultural differences in aversion to DI.

Introduction

Cooperation can be broadly defined as two or more individuals working together to achieve a common goal (Schmitt, 1986). In a strict sense, cooperative behavior represents a situation in which individuals sometimes act in a way that produces a gain to another at a cost to themselves (Nowak, 2006). Cooperative behavior between organisms within a group is often studied from evolutionary, economic, organizational, psychological, and cultural perspectives (e.g., Boyd & Richerson, 1985; Cropanzano & Mitchell, 2005; Hayes & Sanford, 2014; Molm et al., 2000; Nowak, 2006). In this paper, we review some of the empirical work in this area and suggest that general understanding of this type of social behavior can be enhanced by considering the role of the individual’s learning history when another person’s behavior has served as a context or controlling antecedent (e.g., Hayes et al., 2021; Schmitt, 1986; Skinner, 1981). In other words, within a particular cultural context, a given individual’s cooperative behavior may be jointly controlled by the cultural level contingencies in place as well as by his/her own specific history involving others who either did, or did not, engage in cooperative behavior. The current study explores this idea in two complementary experiments in which the participant’s recent history is directly manipulated in a between-subjects (Experiment 1) or within-subjects (Experiment 2) design.

From an evolutionary perspective, cooperative behavior among related individuals is expected because the behavior increases the likelihood that the individuals’ genes are replicated in the next generation (Hamilton, 1964). A more interesting situation is when cooperative behavior occurs between unrelated individuals who are competing for resources. In some instances, it may be necessary for an individual group member to behave in a way that reduces personal gains but increases the total gain for the group. In one sense, it seems that actions that benefit an unrelated individual at a cost to oneself should not be selected, but this is contrary to organisms’ (human and animal) actions in a variety of situations (Ahmed & Karlapalem, 2014; Brosnan & de Waal, 2014; Rachlin & Jones, 2008).

Some models explain cooperative behavior among unrelated individuals in terms of reciprocity (Axelrod & Hamilton, 1981): Putting oneself at a disadvantage now may increase the likelihood that the other group members will put themselves at a disadvantage in such a way that benefits the individual in the future (Ahmed & Karlapalem, 2014; Baker & Rachlin, 2001; Blake, Rand, et al., 2015; Nowak, 2006; Molm et al., 2000). These models assume that the possibility of future, repeated interactions with the other group members will increase cooperative behavior, and this has been supported by experimental work (Blake, Rand, et al., 2015; Dal Bó, 2005; Rand & Nowak, 2013). For example, reciprocity has been investigated in experimental work using the prisoner’s dilemma game (e.g., Baker & Rachlin, 2001; Locey et al., 2013; Rand & Nowak, 2013). In this game, if both players cooperate, there is a better payoff than if both defect, but if one player cooperates, the other player can gain an advantage by defecting. For instance, if both players choose to cooperate, each might earn five points but if each chooses to defect, they might earn two points. However, if one player cooperates and the other defects, the cooperator earns only one point whereas the defector earns six (cf. Baker & Rachlin, 2001). When only one round of the game is played, participants typically defect, but cooperative responses tend to occur in repeated-round games in which there is the potential for reciprocity. For example, Blake, Rand, et al. (2015) compared cooperative behavior in middle-school-aged children in a prisoner’s dilemma game that involved either one round or repeated rounds with the same partner and found greater instances of cooperative behavior in the repeated-round version. In Baker and Rachlin, participants played against a computer and the percentage of reciprocal plays by the computer was manipulated. 
In other words, after the participant made a choice on a given trial, the computer made the same choice on the next trial (i.e., “tit-for-tat”). The results indicated, in general, that when the probability of tit-for-tat was 100%, participants cooperated and when it was 50%, they defected. However, when the probability was 75%, they cooperated more when the computer’s choice was clearly signaled relative to when it was not.
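The payoff structure and the probabilistic tit-for-tat manipulation described above can be sketched in a few lines of Python. This is a minimal illustration, not the software used by Baker and Rachlin (2001): the function names, the computer's first-trial choice, and the rule that the computer plays the opposite of the participant's previous choice on non-reciprocal trials are all assumptions made for the example. The point values are the illustrative ones given in the text.

```python
import random

# Example payoffs from the text: (participant points, computer points).
PAYOFFS = {
    ("C", "C"): (5, 5),  # both cooperate
    ("D", "D"): (2, 2),  # both defect
    ("C", "D"): (1, 6),  # participant cooperates, computer defects
    ("D", "C"): (6, 1),  # participant defects, computer cooperates
}


def computer_choice(previous_participant_choice, p_tit_for_tat, rng):
    """Copy the participant's previous choice with probability p_tit_for_tat.

    Assumption: on the remaining trials the computer plays the opposite choice.
    """
    if rng.random() < p_tit_for_tat:
        return previous_participant_choice
    return "D" if previous_participant_choice == "C" else "C"


def play(participant_choices, p_tit_for_tat, seed=0, first_computer_choice="C"):
    """Return (participant_total, computer_total) for a sequence of choices."""
    rng = random.Random(seed)
    participant_total = 0
    computer_total = 0
    computer = first_computer_choice  # assumption: no history on trial 1
    for i, choice in enumerate(participant_choices):
        if i > 0:
            computer = computer_choice(participant_choices[i - 1], p_tit_for_tat, rng)
        p_points, c_points = PAYOFFS[(choice, computer)]
        participant_total += p_points
        computer_total += c_points
    return participant_total, computer_total


if __name__ == "__main__":
    for p in (1.0, 0.75, 0.5):
        print(p, play(["C"] * 20, p), play(["D"] * 20, p))
```

With a tit-for-tat probability of 1.0, sustained cooperation out-earns sustained defection in this sketch, which is the contingency under which participants in the study tended to cooperate.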

Cooperation between unrelated individuals may also be related to an aversion to inequity, and this aversion could either be a behavioral predisposition or transmitted culturally (Blake, McAuliffe, et al., 2015; Brosnan & de Waal, 2014; Shaw et al., 2016; Spiga et al., 1992). Aversion to inequity can be defined as a preference for fairness when there are unequal outcomes. Behaviorally, it can be observed as strong emotional reactions or a refusal of gains. In this conceptualization, “fair” does not necessarily mean that all group members get the same outcome; it could mean that the quality of an outcome is related to the overall effort made by the individual (i.e., it is inherently unfair for group members to receive unequal outcomes for equal amounts of effort; Fehr et al., 1999). From this perspective, whether a given individual cooperates in a task (i.e., behaves in a way to benefit another at his/her expense) is determined by aversion to inequity and a preference for a fair outcome (e.g., Shimoff & Matthews, 1975; Spiga et al., 1992). For example, in Spiga et al. (1992), participants completed a task with a fictitious partner in which the responses by both players determined the outcome (game points) for each. The participant responded on a panel that contained two buttons. Responding on button A resulted in points only for the participant, whereas responding on button B resulted in points for both players (counters showing the accumulated points for both players were visible to the participant). However, button B only became available if the partner made it available. Across sessions, the number of points available on button A was manipulated such that all participants experienced a disadvantage relative to the other player, and the results indicated a decrease in cooperative responding (i.e., responses to button B) in the inequity conditions relative to equity conditions.
These results suggest that differences in reinforcement distributed across group members are aversive and that participants will work (i.e., respond cooperatively or not) to reduce those differences.

Inequity aversion takes two forms: aversion to advantageous inequity and aversion to disadvantageous inequity. Advantageous inequity (AI) refers to a situation in which an individual receives more than other members of the group, and disadvantageous inequity (DI) refers to a situation in which an individual receives less than other members of the group. Some researchers have suggested that evidence for each type emerges at a different developmental point in humans (Blake, McAuliffe, et al., 2015; McAuliffe et al., 2014). Evidence for DI aversion has been noted in children as young as four years of age (Blake, McAuliffe, et al., 2015), but evidence for AI aversion has only been observed in older children (e.g., 8 years of age) and adults (e.g., Shimoff & Matthews, 1975; Spiga et al., 1992), in children in Western societies (Blake, McAuliffe, et al., 2015), or following a consistent experimental history of collaboration (Corbit et al., 2017). These latter results have led researchers to entertain the idea that DI aversion may have different evolutionary and developmental roots than AI aversion, with DI aversion being more general across species and AI aversion being more dependent on social and cultural contexts (see also House et al., 2013).

Shaw et al. (2016) have suggested that DI may also be sensitive to social cues. They assert that aversion to inequity depends both on the type of inequity and the way in which it was produced. For example, they have suggested that self-imposed DI is more acceptable than other types of inequity. In other words, if someone chooses an outcome that gives them a disadvantage, the inequity is less aversive than if the inequity is determined by another person. Shaw et al. have also suggested that as children mature, both tolerance for self-imposed DI and aversion to other types of inequity grow stronger. They further propose that the underlying mechanisms are the development of the desire to appear generous and to avoid appearing partial. They tested these ideas in younger (4- to 6-year-old) and older (7- to 8-year-old) children who were approached at a museum and told that they had behaved well enough that day to win a prize (a small toy). The children were also told that another child (who was not present) had also won a prize, and they were shown three similar prizes. Children were then asked what should be done with the third prize. In the DI condition, the choice was between giving it to the other child or throwing it away (and the latter choice resulted in the toy being placed in a trash can), and in the AI condition, the choice was between giving it to themselves or throwing it away. If children are averse to all unequal outcomes, they should choose to throw away the third prize. Aversion to AI would be indicated by a higher percentage of throwing away the prize relative to the DI condition, and aversion to DI would be indicated by a higher percentage of throwing away the prize relative to the AI condition. The results indicated that older children were more likely to create DI than the younger children. 
In a second experiment, the same options were presented to the participants, but the researcher made the choice and then asked the participants how they felt about it. The results indicated that both the younger and older participants reported similar levels of aversion to DI and AI.

Shaw et al.’s results illustrate that although DI aversion may be universally observed in young children (Blake, McAuliffe, et al., 2015), it also can be influenced by cultural factors, such as AI. DI is also observed frequently in nonhumans, but there is substantial variability across studies and within species (Brosnan & Bshary, 2016), suggesting that it may be sensitive to several variables. We suggest that one such variable may be a person’s immediate learning history. In other words, cooperative behavior (i.e., accepting or allowing an outcome that places one at a current disadvantage) may be explained in terms of a person having a history of repeated interactions with others in which cooperating at a cost to oneself has resulted in reciprocal cooperation from the other person. From our perspective, a person’s willingness to engage in reciprocal cooperation is mediated by the different outcomes experienced with other people throughout their learning history in addition to cultural factors and biological predispositions. This idea can complement the idea that reciprocal cooperation is based on one’s prediction of what the other person is likely to do in the future (Blake, Rand, et al., 2015; Molm et al., 2000). Thus, the overarching purpose of the current study was to test the idea that recent experience will influence participants’ willingness to produce DI.

The role of learning on inequity aversion can be evaluated by directly manipulating the level of inequity experienced by the participant as a result of a partner’s behavior (e.g., Benvenuti et al., 2020; Marwell & Schmitt, 1975; Schmitt, 1998; Spiga et al., 1992; Suarez et al., 2021). For example, Benvenuti et al. (2020) created an experimental situation to test if recent, repeated interactions with another person who allowed AI can reduce aversion to DI, measured by the participants’ willingness to produce it. Participants played a card game with a research confederate in which each trial could potentially result in an equal or unequal outcome (i.e., points awarded on the trial). In Phase 1, one group of participants experienced AI on 100% of the trials (i.e., the confederate let the participant win every time). A second group of participants experienced equal outcomes on every trial (i.e., the confederate prevented the participant from winning on every trial), and a third group did not experience Phase 1. In Phase 2, the game rules changed such that the trial outcomes could be equal or unequal favoring the confederate. The dependent variable was the participants’ behavior in Phase 2, measured as the percentage of trials in which the participants allowed the confederate to win (i.e., the percentage of trials on which the participant produced DI). The results indicated that participants who experienced AI in Phase 1 were significantly more likely to produce DI than the participants in the other two groups.

Data showing the role of learning in reciprocal cooperation with different payoffs (disadvantageous or advantageous gains for the participant) are important for several reasons. First, they help increase understanding of the interplay between inequity aversion and cooperation (e.g., Brosnan, 2011; Fehr & Schmidt, 1999) and how cooperation may develop with participants receiving different payoffs (e.g., Hauser et al., 2019). Additionally, they have theoretical implications for understanding reciprocity. Many models based on reciprocity are “future oriented” in that they assume people decide to cooperate in the present time based on how they predict the other group members will behave in the future. For example, social exchange theory views social behavior as an exchange process in which each person works to maximize benefits and in which interactions with others result in future obligations (Cropanzano & Mitchell, 2005; Molm et al., 2000). In a negotiated exchange, the expectations for each group member are predetermined and the terms are known in advance by each group member. Thus, there is no uncertainty in these sorts of exchanges. By contrast, in a reciprocal exchange, a group member behaves in such a way as to benefit others without prior negotiation of terms or knowledge of whether the other person will return the act. Often, this choice occurs under conditions in which there is an incentive to exploit the other person. Reciprocal exchanges are inherently uncertain because when the individual makes the decision to act toward the benefit of the other person, he/she does not know whether that behavior will be repaid in kind later. This theory further suggests that over time and with repeated interactions, reciprocal exchanges (but not negotiated exchanges) result in mutual commitments and the development of trust among group members (Molm et al., 2000).

The primary purpose of the current set of experiments was to further explore the role of recent learning on participants’ willingness to produce DI. In Benvenuti et al. (2020), participants experienced AI on either 100% or 0% of the trials, which did not allow a determination of how the amount of AI experienced influences production of DI. Experiment 1 was a replication of Benvenuti et al. in which the degree of experience with AI was more systematically varied: Participants experienced AI on 0%, 25%, 50%, 75%, or 100% of trials in Phase 1 and production of DI was measured in Phase 2 using a between-subjects design. To further explore the generality of the effect, Experiment 2 manipulated AI in Phase 1 in the same general manner using a within-subjects design and a computerized (rather than tabletop) version of the game. Based on Benvenuti et al., we expected participants in both experiments to be more likely to produce DI after experiencing high levels (i.e., 100%) of AI in Phase 1 relative to no recent experience with AI. Moreover, we expected to see systematic decreases in DI production as the level of AI experienced in Phase 1 decreased. A second purpose of the current study was to begin a preliminary investigation of the interaction between recent learning history and cultural influences on production of DI by recruiting participants from two different countries: the United States (Experiment 1) and Brazil (Experiment 2). In both experiments, we did not “force” participants to accept AI in Phase 1. This allowed for exploration of a potential difference in aversion to AI in participants from the two different countries.

Experiment 1

Method

Participants

The participants were one minor (17 years) and 49 adult (18–46 years) students at a private, liberal arts university. Twenty-eight were undergraduate students (representing the following majors: biology, business, education, graphic design, health sciences, human services, marketing, and psychology); the remaining participants were graduate students (representing the following programs: business administration, clinical counseling psychology, and school counseling). In terms of gender and race/ethnicity, the sample was homogeneous: There were only seven male participants, and only four participants self-identified as Asian, African American, or Hispanic (the remaining participants self-identified as Caucasian).

Research sessions took place both during the summer term and during the regular academic year. Sessions lasted approximately 20 min. During the summer, participants were compensated for their time with a $5.00 gift card. During the regular academic year, participants received course credit for participation (undergraduate students) or a gift card (graduate students). All participants signed a consent form that had been approved by the university’s institutional review board (IRB); separate parental consent was also obtained for the minor participant.

Materials and setting

The experiment took place in a research room located on campus that contained two student desks, a tri-part cardboard room divider, and a whiteboard. Figure 1 depicts an overhead view of the set-up. The experimenter stood next to the whiteboard and was able to see whoever was seated at both desks. The tri-part room divider was positioned between the two desks such that whoever was seated at the desks could not see the other desk, but they could see the experimenter and the whiteboard. Additional materials included blue and green “cards”; these were laminated 2-in. × 3-in. cutouts from cardstock. There was a space marked on each table indicating where a card should be placed on each trial. Sessions were recorded using an iPad. When seated at the desks, the participants could see the card the other person played on each trial.

Procedure

The participants were randomly assigned to one of five experimental groups: 100%, 75%, 50%, 25%, and 0%. There were ten participants in each group. Group size was determined by completing a power analysis using pilot data from groups 100% and 0% in Benvenuti et al. (2020) using the program G*Power 3 (Faul et al., 2007). This analysis indicated a total sample size of 25 for a medium effect size. Thus, ten participants per group was more than adequate. Participants completed two experimental phases. In Phase 1, the level of AI experienced by participants was manipulated via confederate behavior that varied across groups. In Phase 2, participants’ production of DI was measured. Participants also completed a pre-experimental phase with four trials so that they experienced the general rules about choosing cards, combinations of cards and outcomes, and the matrix of point values for different card combinations. These four trials allowed participants to experience all potential game-trial outcomes once.

On each trial, the number of points earned by the participant and confederate was determined by the combined choices of blue or green cards. When the participant and confederate both played the blue card, there was an unequal outcome. When one or both played a green card, there was an equal outcome. For equal outcomes, both received two points, and for unequal outcomes, one person received five points and the other received two. Which player earned more points with unequal outcomes was determined by the game phase. The confederate played cards on each trial according to a pre-determined sequence so that the percentage of trials with AI to the participant appropriate for each condition in Phase 1 could be produced. The pre-determined card-play sequence was printed on a prepared data sheet which was placed behind the divider and out of the participant’s view. On each trial, the confederate played the card indicated and recorded the card played by the participant. All sessions were recorded, and 74% of the sessions were subsequently reviewed by a researcher who did not participate in the session for procedural integrity and inter-observer reliability, which were 100% and 99.5%, respectively.

When the participant arrived at the research room, the confederate was already sitting at one of the desks, behind the screen. The experimenter gave the consent form to the participant and asked him/her to read it. Then, any questions were answered, and the participant signed the form. If, after reading the consent form, a participant had chosen not to participate, he/she would still have been given the gift card/class credit, but this situation did not occur. Once the participant was seated at the desk, written instructions were simultaneously given to both players, and the experimenter asked that the instructions be read silently. The instructions were:

This study is not about intelligence, and it is not about assessing your intellectual abilities. When you have finished the task, you will receive a detailed explanation. You will be working with a partner, and both of you will have an identical task to perform. You and your partner will receive two cards (one blue and one green). When the experimenter says, “Attention,” you will make a choice by placing your hand on the blue card or the green card. After your choice, the experimenter will say, “Now!” At this point, put the chosen card in the place indicated on your desk so that you and your partner can see each other’s choices. On each trial, you will receive a certain number of points. The number of points you will receive depends on your choice and the choice of your partner. The experimenter will notify you when the session is finished. Please remain seated and do not talk with your partner or the experimenter during the session. All instructions are contained on this sheet. When you’re ready to begin, raise your right hand.

After returning the paper with the general instructions to the experimenter, the participants received the following instructions, also printed on paper: “If you choose the blue card and the other player also chooses the blue card (combination: blue-blue), you will earn five points and the other player will earn two points. If you or the other player choose the green card (combinations: blue-green, green-blue, or green-green), you both will earn two points.” This instruction sheet remained on the participant’s desk throughout the game phase.

Next, the participants completed a pre-training phase consisting of four trials so they could practice how to play the game and so they were exposed to all possible outcomes (i.e., receiving five or two points for the various card combinations). In this phase, the participant and confederate were told which card combinations to play, and the same outcome contingencies for played card combinations described above were in effect.

In Phase 1, the behavior of the confederate varied depending on the participants’ experimental group. In the 100% group, the confederate played the blue card on every trial, thus allowing the participant the opportunity to earn five points instead of both players receiving two points on every trial. In other words, the confederate allowed AI to the participant on all the trials. In the 75%, 50%, and 25% groups, the confederate played the blue card on 75%, 50%, and 25% of the trials, respectively. Thus, for these three groups, the confederate allowed AI to the participant on a varying percentage of trials. The percentage was programmed for each quarter of the session such that the level of AI programmed was consistent throughout the session. In the 0% group, the confederate never played the blue card, which resulted in equal points between the two players on all trials. Thus, the confederate never allowed AI to the participant. There were 16 Phase 1 trials. On each trial after the participant and confederate played a card, the experimenter verbally stated the number of points each player had earned and tallied the points on the whiteboard. Thus, the number of points earned by both players was clearly visible throughout the phase. The next trial began immediately after points delivery. Upon completion of Phase 1, the experimenter stated the total number of points earned by each player and stated which player “won” the phase.
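One way to build a confederate card sequence that holds the programmed percentage constant within each quarter of the 16-trial phase is sketched below. This is an illustration only: the function name `confederate_schedule` is hypothetical, and the random ordering of cards within each quarter is an assumption, because the text does not state how the order within a quarter was chosen.

```python
import random


def confederate_schedule(percent_blue, n_trials=16, n_blocks=4, seed=None):
    """Return a list of 'blue'/'green' plays with percent_blue held per block.

    percent_blue is an integer percentage (0, 25, 50, 75, or 100 in the study).
    """
    if n_trials % n_blocks:
        raise ValueError("n_trials must divide evenly into n_blocks")
    block_len = n_trials // n_blocks
    blue_per_block, remainder = divmod(percent_blue * block_len, 100)
    if remainder:
        raise ValueError("percentage does not give a whole number of blue cards per block")

    rng = random.Random(seed)
    schedule = []
    for _ in range(n_blocks):
        block = ["blue"] * blue_per_block + ["green"] * (block_len - blue_per_block)
        rng.shuffle(block)  # assumption: order within a quarter is random
        schedule.extend(block)
    return schedule


if __name__ == "__main__":
    for percent in (100, 75, 50, 25, 0):
        print(percent, confederate_schedule(percent, seed=1))
```

Because each quarter contains the same number of blue cards, a participant's experience of the confederate's behavior does not drift across the phase, which matches the stated aim of keeping the programmed level of AI consistent throughout the session.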

At the beginning of Phase 2, the experimenter provided additional written instructions indicating that the earnings matrix was reversed: Now, blue-blue outcomes resulted in the confederate earning five points and the participant earning two (all other card-combination outcomes resulted in both players earning two points). In other words, in this phase, the participant had the opportunity to produce DI (i.e., allow the confederate to win a greater number of points). On every trial in this phase, the confederate chose the blue card in all five groups. There were 32 trials in Phase 2. The number of trials in this phase was doubled relative to the previous phase because early in the phase, we expected participants’ choices to be a function of Phase 1 experience. However, it is possible for the participants’ behavior to also be influenced by the confederate’s choices in Phase 2, which could potentially be seen as a decrease in the blue-card choices in the second half of the phase relative to the first half. As in Phase 1, at the end of each trial, the experimenter verbally stated the number of points that each player had earned and tallied the points on the whiteboard. At the end of the phase, the experimenter stated the total number of points earned by each player and indicated who had “won” the round.
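The earnings matrices for the two phases can be summarized in a short Python sketch. The point values and the reversal between phases come from the instructions described above; the function names `trial_points` and `phase_totals` are hypothetical and serve only to make the contingencies explicit.

```python
CARDS = ("blue", "green")


def trial_points(participant_card, confederate_card, phase):
    """Return (participant_points, confederate_points) for one trial."""
    if participant_card not in CARDS or confederate_card not in CARDS:
        raise ValueError("cards must be 'blue' or 'green'")
    if phase not in (1, 2):
        raise ValueError("phase must be 1 or 2")
    if participant_card == "blue" and confederate_card == "blue":
        # Only blue-blue is unequal: Phase 1 favors the participant (AI),
        # Phase 2 favors the confederate (DI for the participant).
        return (5, 2) if phase == 1 else (2, 5)
    return (2, 2)  # any combination with a green card is an equal outcome


def phase_totals(participant_cards, confederate_cards, phase):
    """Sum points over a phase, as tallied on the whiteboard."""
    participant_total = 0
    confederate_total = 0
    for p_card, c_card in zip(participant_cards, confederate_cards):
        p_points, c_points = trial_points(p_card, c_card, phase)
        participant_total += p_points
        confederate_total += c_points
    return participant_total, confederate_total


if __name__ == "__main__":
    # Phase 2: the confederate always plays blue; a participant who also
    # always plays blue produces DI on all 32 trials.
    print(phase_totals(["blue"] * 32, ["blue"] * 32, phase=2))
```

Note that the participant earns two points on every Phase 2 trial regardless of the card played, so choosing blue costs the participant nothing in absolute terms and only changes the relative outcome.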

Upon completing Phase 2, the participants completed a questionnaire assessing the social validity of the task (described with the results) and some demographic variables. Next, the participants were debriefed. During the debriefing, the participants were told that the experiment investigated the role of immediate experiences with a helpful or unhelpful partner on willingness to behave cooperatively in a game. In addition, the participants were told that the confederate was not actually another participant but one of the researchers, and that it was necessary to use a confederate so that we could control the relative helpfulness of the other player during the first part of the game. Any questions that the participants had were answered, and, as described above, the participants were either granted course credit or offered a gift card.

Results

The primary dependent variable was the percent of trials on which the participant chose the blue card, and this is depicted for individual participants in each phase in Table 1. In Phase 1, it was to the participants’ advantage to always choose the blue card (i.e., blue-blue card outcomes resulted in more points to the participant than to the confederate), so percent blue-card choices can be one index of AI aversion. The mean value of blue-card responses across all groups was 59.3% (range: 50.0–64.38% across the five groups). These data indicate that across groups, participants’ blue-card choices were at or slightly above chance. However, the individual participant data indicate substantial variability across participants, such that blue-card responses ranged from 0% to 100%, and only 18% of the participants chose the blue card on at least 80% of the trials in Phase 1. There was no consistent pattern across groups.

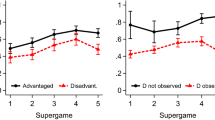

Figure 2 depicts the mean blue-card responses in Phases 1 and 2 for each of the five groups. In Phase 2, the mean percent of blue-card responses was 24.1% (range: 8.13–48.75%), and participants in each group tended to make fewer blue-card choices relative to Phase 1. The individual participant data (Table 1) indicate variability within each group in Phase 2. Notably, however, 30% of participants in group 100% chose the blue card on at least 80% of trials; this can be contrasted with the remaining four groups, in which no participant chose the blue card on more than 78% of trials. There was little or no difference in the percent of blue-card choices between the first and second halves of Phase 2 for groups 100% (48.75% and 48.75%), 75% (30.63% and 30.31%), and 0% (8.75% and 8.13%). Groups 50% and 25% showed a slight decrease in blue-card choices in the second half of the phase relative to the first half (16.35% and 14.38%, and 22.5% and 18.71%, respectively).

To determine the effect of programmed level of AI in Phase 1 on the likelihood of participants subsequently producing DI in Phase 2, the data were analyzed with a generalized linear mixed model (GLMM) using the GENLINMIXED procedure in SPSS version 27 (cf. Suarez et al., 2021). The dichotomous dependent variable was the participants’ card choice in Phase 2, either blue or green, with green as the reference category. We used the binomial distribution and the logit link function, resulting in a logistic regression model. Trial in Phase 2 was specified as a repeated measure. Participant number was included as a random effect, and the categorical variable of group (0%, 25%, 50%, 75%, and 100%) was a fixed effect. Significance level was .05. Each group contained 10 participants, and each participant was exposed to 32 trials in Phase 2, resulting in 1,600 trials in the analysis.
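For readers who prefer notation, the model described above can be written approximately as follows. This is a simplified sketch that assumes a participant-level random intercept only; the covariance structure used for the repeated trials is not stated in the text and is omitted here. The symbols are introduced for illustration: y_ij is the card choice of participant i on Phase 2 trial j, g(i) is that participant's group, and one group coefficient is fixed at zero as the reference level (which group served as the reference is not stated).

```latex
\operatorname{logit}\,\Pr(y_{ij} = \text{blue})
  = \beta_0 + \beta_{g(i)} + u_i,
\qquad u_i \sim \mathcal{N}(0, \sigma_u^2),
\qquad i = 1, \dots, 50, \quad j = 1, \dots, 32
```

With 50 participants and 32 trials each, the model is fit to 50 × 32 = 1,600 binary observations, which matches the trial count reported above.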

Table 2 depicts the analysis of variance for the logistic regression with participant number as a random effect. The fixed effect of group was statistically significant (p = .007), indicating that the percentage of AI experienced in Phase 1 directly influenced participants’ choice of the blue card in Phase 2. Table 3 depicts the estimated marginal means of the participants’ blue-card choices in Phase 2, with standard errors and 95% confidence intervals, as a function of group. Using pairwise contrasts, the differences between groups 100% and 0% and between groups 100% and 25% were significant (least significant difference adjusted p = .025 and .041, respectively). These results indicate that participants in group 100% played the blue card more often than those in groups 0% and 25%.

The independent variable in this experiment was the percent of trials in which the confederate played the blue card in Phase 1, and the purpose was to manipulate the percent of trials in which the participants experienced AI in that phase. Thus, we also examined whether the amount of AI produced by each group in Phase 1 approached what was programmed. If the actual amount of AI for each group equaled what was programmed, groups 100%, 75%, 50%, 25%, and 0% should have approximately 16, 12, 8, 4, and 0 blue-blue outcomes, respectively. Separate t-tests were used to determine whether the actual number of blue-blue outcomes in Phase 1 differed from that programmed, and the results are in Table 4. Groups 100%, 75%, and 50% produced significantly fewer blue-blue outcomes in Phase 1 than programmed, which may be a factor in the amount of within-group variability observed in Phase 2.
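The manipulation check above can be sketched as follows, assuming 16 Phase 1 trials per participant and a one-sample t-test against the programmed value (the text says only that separate t-tests were used). The function names are hypothetical, and the participant counts are invented for illustration; they were chosen only so that their mean matches the 10.1 reported for group 100% and are not the study data.

```python
import math
import statistics

N_TRIALS_PHASE_1 = 16  # Phase 1 trials per participant, as implied by the text

def programmed_blue_blue(percent_blue: int, n_trials: int = N_TRIALS_PHASE_1) -> float:
    """Blue-blue outcomes expected if the participant played blue on every trial."""
    return n_trials * percent_blue / 100

def one_sample_t(sample: list[float], mu: float) -> float:
    """t statistic for the null hypothesis that the population mean equals mu."""
    n = len(sample)
    mean = statistics.mean(sample)
    sd = statistics.stdev(sample)  # sample SD (n - 1 denominator)
    return (mean - mu) / (sd / math.sqrt(n))

programmed = {g: programmed_blue_blue(g) for g in (100, 75, 50, 25, 0)}
print(programmed)

# HYPOTHETICAL blue-blue counts for ten participants in group 100%
# (illustration only; not taken from Table 1 or Table 4)
hypothetical_counts = [16, 12, 9, 8, 14, 6, 10, 11, 7, 8]
t_stat = one_sample_t(hypothetical_counts, programmed[100])
print(f"t({len(hypothetical_counts) - 1}) = {t_stat:.2f}")
```

A negative t statistic here corresponds to fewer blue-blue outcomes than programmed, which is the direction reported for groups 100%, 75%, and 50%.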

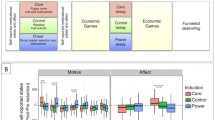

Table 5 indicates the mean response to each social validity question for each group. Participants were asked to make a rating on a scale ranging from 1 (low) to 5 (high). Although no between-group differences are apparent, these data indicate that the participants thought that cooperation and fairness were important aspects of daily life (mean scores of 4.54 and 3.98, respectively) and that the experimental task did not elicit strong, adverse emotional reactions (which is sometimes observed in situations with unequal outcomes): Ratings for level of comfort were relatively high (mean = 4.04) and ratings for levels of anxiety and anger were relatively low (means = 1.6 and 1.26, respectively). Perhaps somewhat surprising is that there were no differences across groups on ratings of how friendly (mean = 3.30) or empathetic (mean = 3.24) the confederate was; this issue is explored in the Discussion section.

Discussion

The purpose of Experiment 1 was to explore the influence of immediate experience with AI in the context of a repeated interaction with another person on subsequent willingness to produce DI. In general, the results indicated that experiencing a relatively high level of AI in Phase 1 increased participants’ willingness to subsequently produce DI: Participants in group 100% were more likely than those in groups 0% and 25% to produce DI in Phase 2. This might indicate that willingness to produce DI depends on a recent experience with a partner who allowed the person to experience AI on a relatively high number of opportunities, but this conclusion is tempered by the high degree of variability in the individual participant data. The results of this experiment complement the findings of Shaw et al. (2016), who showed that child participants were more accepting of DI in situations in which they had the choice to produce it relative to situations in which someone else made the decision. We extend those findings to show that adults can be more accepting of DI after interacting with a partner who previously allowed them an advantageous unequal outcome.

We manipulated the percentage of trials on which the confederate allowed AI to the participant, and we hypothesized that participants would allow DI on a similar percent of trials. One reason this effect was not observed may be related to what participants were told at the end of Phase 1. If the participant had more points than the confederate at the end of Phase 1, they were told that they “won” the game. This was done to increase the likelihood that the participant attended to inequity in points between the two players. Although the participants were not given any additional information about the value of points or winning the phase, being told they won might have functioned as an establishing operation (cf. Edwards et al., 2019) that increased the value of winning and thereby decreased production of DI in the subsequent phase.

Another reason that levels of DI in Phase 2 did not correspond to the level of AI in Phase 1 may be that our manipulation was only partly effective: The number of trials on which the participants actually received AI in Phase 1 significantly differed from what was programmed. For example, for group 100%, the confederate played the blue card on every trial, but the mean number of blue-blue outcomes experienced by this group was 10.1 (out of 16 trials). Similar decreases between what was programmed and what was actually experienced by the participants occurred for groups 75% and 50% (see Table 4); these decreases are a result of the participants playing the blue card on only approximately half the trials in Phase 1.

It is somewhat surprising that the participants did not play the blue card on a majority of Phase 1 trials because doing so would have allowed them to win more points in the game. A similar result was observed in the corresponding condition in Benvenuti et al. (2020), who used a similar procedure: Participants who experienced equal outcomes in an AI phase chose the blue card on approximately 70% of trials. A lower-than-expected percentage of blue-card responses may indicate an aversion to inequity, even when that inequity is advantageous, and is consistent with the results from other tasks investigating aversion to inequity (e.g., Blake, McAuliffe, et al., 2015; Shaw et al., 2016). For example, in a group task that sometimes required participants to distribute points equally and at other times required participants to distribute points unequally, Vichi et al. (2009) showed that participants were more likely to distribute points equally even when the contingencies required unequal distributions. Alternatively, the high degree of within-group variability may suggest that not all the participants fully understood the contingencies or that participants may have needed more than four practice trials to learn how the game works. One way to reduce within-group variability is to use a within-subjects manipulation, which is what was done in Experiment 2.

Experiment 2

Experiment 1 provides evidence of how cooperative behavior, even in situations of inequity, can change due to recent experience. Thus, an important contribution to the literature on cooperative behavior is the consideration of a person’s learning history and the effect that history can have on current cooperative behavior. Inspection of the individual participant data from Experiment 1 indicates substantial within-group variability, which may indicate that several variables influence willingness to produce DI, such as pre-experimental experiences. The purpose of Experiment 2 was to investigate the role of learning history on participants’ willingness to produce DI within-subjects, which can better control for pre-experimental experiences.

In Experiment 2, we programmed a situation in which the participants’ partner in the game chose the blue card on a different percentage of trials in AI phases, as in Experiment 1. Unlike in Experiment 1, however, the participants first experienced an equity phase of the game (i.e., a baseline phase in which the participant and the other player received the same number of points on every trial; cf. Suarez et al., 2021). The purpose of this phase was to attempt to minimize pre-experimental influences on willingness to produce DI by giving all participants a similar, recent history. This was followed by exposure to five pairs of AI-DI phases in which the level of AI was manipulated as in Experiment 1. Exposure to the conditions was counterbalanced such that half the participants experienced increasing levels of AI across the AI-DI pairs (i.e., 0%, 25%, 50%, 75%, and 100%) and the remaining half experienced decreasing levels.

Another change relative to Experiment 1 was that the participant played the game on a computer (rather than on the tabletop) that was set up to appear like he/she was playing with another person (similar to the confederate in Experiment 1 except that the confederate was actually the computer; cf. Suarez et al., 2021). This change was made in order to automate data collection (and thus remove the potential for human error). Previous studies have used “computer confederates” (e.g., Baker & Rachlin, 2001) and some have not shown differences in participant behavior compared to when the confederate was a person (e.g., Fantino & Kennelly, 2009). Additional changes to the procedure, such as increased pre-session training to ensure the participants understood the game and points matrix, are described in the Method section.

As in Experiment 1, our experimental question was whether participants’ production of DI would be influenced by systematic differences in the amount of AI just experienced. Based on the results of Experiment 1, we expected participants to be more willing to produce DI after having just experienced higher versus lower levels of AI.

Method

Participants

Twenty college students, ranging in age from 18 to 25 years, were recruited from a university campus: six were female and 14 were male. All participants signed a consent form that had been approved by the university’s IRB. Participants were graduate students majoring in civil engineering or electrical engineering enrolled in the same university course. Although participants were asked not to talk about the experiment and the experimental task, this was not controlled. Before starting the experiment, participants were informed that any points earned would be exchanged for money at the end of the experiment. Each participant received R$10,00 (the equivalent of US$2.71, which was enough to purchase a sandwich and drink in the university cafeteria).

Participants were told that they would form dyads with another participant to perform the experimental task (i.e., a computerized version of the card game described in Experiment 1). In fact, the participants played the card game against the computer. However, another experimenter (i.e., a confederate) remained in the experimental room throughout data collection. The confederate did not actively participate in the experiment, but he/she simulated participation by producing sounds before data collection began, such as dragging the chair and writing on paper when necessary.

Setting and materials

The setting and materials were similar to those described in Experiment 1 and by Suarez et al. (2021). Unlike in Experiment 1, we used a computer system for interdependent response and consequence programming (ProgRCI) to display the task to the participant, record the data, and simulate the confederate’s responses. The experiment took place in a quiet room on campus. Figure 3 depicts the arrangement of equipment and furniture in the data collection room. The room contained four desks, three chairs, a screen, two computer notebooks (Samsung FR511, Intel i7-2670QM, Windows 7 Professional 64 Bit operating system), a mouse, and a headset. The desks and chairs for the participant and confederate were arranged side-by-side with the screen between them, making eye contact impossible between the participant and the confederate. During the experimental session, white noise was played through the participant’s headset to mask external sounds.

An overhead depiction of the setting in Experiment 2. Note. This figure was originally published in Suarez, C. J., Benvenuti, M. F., Couto, K. C., Siqueira, J. O., Abreu-Rodrigues, J., Lionello-DeNolf, K. M., & Sandaker, I. (2021). Reciprocity with unequal payoffs: Cooperative and uncooperative interactions affect disadvantageous inequity aversion. Frontiers in Psychology, 12, 628425. https://doi.org/10.3389/fpsyg.2021.628425. It is reprinted here under the CC BY 4.0 Creative Commons license (https://creativecommons.org/licenses/by/4.0/).

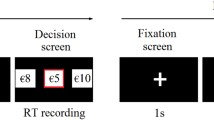

The experimental task was the same as in Experiment 1, but the “cards” were presented side-by-side on the computer screen and the participant had to click on a card with the mouse to make a choice on each trial. Figure 4 illustrates the screen that appeared to the participant during the task. Presented on the computer screen were the participant’s playing cards, the cards representing the other player (represented as Participant 2), each player’s earned points, the matrix of points depending on card combinations, and the choices made on each trial by each player.

Procedure

Participants completed the experiment in one session. When the participant entered the room with the experimenter, the confederate was already there. Throughout the experiment, the participant remained sitting in a chair (see Fig. 3). By sitting in this chair, the participant had visual access to both his/her notebook (N) and instructional notebook (NFI). The NFI was used to expose the participant, before the beginning of the experiment, to a simulation that described the task and the matrix of points. First, the experimenter told the participant that he/she would be Participant 1 throughout the experiment and that the “other participant” would be Participant 2. Then, the experimenter explained the task and the matrix of points, indicating that the number of points they would earn depended on the color combination produced by their choice and that of the other player on each trial.

Using the NFI, four trials were simulated in which card choices alternated, such that all four color combinations were performed using the same payoff matrix as the baseline phase (described below). At the end of each simulated trial, the experimenter asked the participant and the confederate to write, on a sheet of paper given to them, how many points they would receive in each color combination had that been an actual trial. For example, in the first simulation trial, the experimenter said: “Let’s assume that Participant 1 chose the blue card and Participant 2 chose the green card. With this color combination, how many points would each of you receive? Please do not speak out loud. Write your answer on the paper and I’ll go to your desk to check it out.” If the participant’s answer was incorrect in any of the simulation trials, the experimenter would explain the matrix of points again.

After simulating four trials, the experimenter gave the participant and the confederate written general instructions that were read silently. The instructions were (as translated from Portuguese):

Hello, Participant. Thank you for participating in this research project! This study is not about intelligence or emotions. You will be working with a partner and you will have an identical task to perform during the experiment. You and your partner must choose between two cards (one blue and one green). In each trial, you will receive a certain number of points. The number of points you will receive depends on your choice and on your partner’s choice. The matrix of points that will appear to you is the same as that for the other participant. Please remain seated and do not talk to your partner or the experimenter during the session. If you have any questions, raise one hand and the experimenter will come to you. Once the experiment is over, the following message will appear: Thank you very much for participating! Please call the experimenter! When this message appears, raise one of your hands so that the experimenter will come to you. Put on the headset lying on your desk and get started on the task. Remember that the points will be exchanged for money at the end of the experiment.

Each participant first experienced a baseline phase in which equality was programmed (i.e., both players received the same number of points on each trial), followed by a series of five conditions in which an AI phase was paired with a subsequent DI phase (ten phases in total). Before the start of each phase, the payoff matrix that would be in effect during that phase appeared on the right, upper left of the computer screen. The payoff matrix was visible throughout the phase. To start the phase, the participant needed to click a yellow button at the bottom of the screen. Across conditions, the level of AI (i.e., the percentage of trials in which the computer “confederate” made blue-card choices) varied. In the DI phases, the computer always chose the blue card. Each phase had 16 trials. On each trial, the participant could select one of the two available cards by clicking and dragging it to the “choices” box centered in the game play area of the screen. The computer’s choice was programmed to occur at varying times in the trial to simulate a live person. When the computer made a choice, the participant could see the card move to the choices box. In addition, the participant would see both players’ counters update based on the card combination (see Fig. 4, upper left). As trials continued, a depiction of each player’s choices on each trial remained on the screen. After the points were added to the counter, a 1-s inter-trial interval occurred, and the screen turned white. With each new trial, the mouse pointer returned to the middle of the computer screen. Participants were able to see a running total of their points and those of the computer throughout the phase.

The baseline condition was used to assess the participant’s choices in a situation of equity. During the baseline condition, blue-blue outcomes resulted in both players (i.e., the participant and the computer) receiving 100 points on the counter. With all other outcome combinations (blue-green, green-blue, and green-green), both players received 20 points. In this phase, the computer was programmed to choose the blue card on 12 random trials. The criterion to advance to the next phase was at least 12 blue-card responses; all the participants chose the blue card at least 12 times in baseline, so all participants advanced to the next phase.

In the subsequent conditions, when both the participant and the computer chose the blue card, there was an unequal distribution of points across the two players (i.e., an unequal outcome). When one or both players chose a green card, there was an equal distribution of points (i.e., an equal outcome). For equal outcomes, both players received 20 points, and for unequal outcomes, the participant or the computer received 100 points and the other player received 20 points, depending on the phase. In experimental conditions, an AI phase was followed by a DI phase (i.e., AI-DI pair). Each participant experienced AI-DI pairs in which AI was programmed on 100%, 75%, 50%, 25%, and 0% of the trials (as in Experiment 1 except as a within-subjects manipulation). Participants were exposed to AI-DI pairs in either an ascending (0–100%; ten participants) or descending (100–0%; ten participants) sequence. In all DI phases, the computer chose the blue card on every trial.
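The payoff rules for the baseline, AI, and DI phases can be summarized in a minimal sketch. The function and variable names are hypothetical and this is not the ProgRCI implementation. The schedule function assumes that the computer's blue-card choices fall on an exact number of randomly placed trials in every phase; the text states this only for baseline (12 random trials).

```python
import random

HIGH, LOW = 100, 20  # point values stated in the text

def payoff(phase: str, participant_card: str, computer_card: str) -> tuple[int, int]:
    """Return (participant_points, computer_points) for one trial."""
    if phase not in ("baseline", "AI", "DI"):
        raise ValueError(f"unknown phase: {phase}")
    if participant_card == "blue" and computer_card == "blue":
        if phase == "baseline":
            return HIGH, HIGH   # equal outcome, both receive 100
        if phase == "AI":
            return HIGH, LOW    # inequity favors the participant
        return LOW, HIGH        # DI: inequity favors the computer
    return LOW, LOW             # any green card yields an equal outcome

def computer_schedule(percent_blue: int, n_trials: int = 16, rng=None) -> list[str]:
    """ASSUMPTION: exact number of blue choices, placed on random trials."""
    rng = rng or random.Random()
    n_blue = round(n_trials * percent_blue / 100)
    blue_trials = set(rng.sample(range(n_trials), n_blue))
    return ["blue" if t in blue_trials else "green" for t in range(n_trials)]

# A participant who always plays blue in a 75% AI phase
schedule = computer_schedule(75, rng=random.Random(1))
totals = [0, 0]
for computer_card in schedule:
    p_points, c_points = payoff("AI", "blue", computer_card)
    totals[0] += p_points
    totals[1] += c_points
print(totals)
```

Under these assumptions, a participant who always plays blue in the 75% AI phase obtains 12 blue-blue outcomes and 4 equal outcomes, for 12 × 100 + 4 × 20 = 1,280 points against 16 × 20 = 320 points for the computer.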

Results

The percentages of blue-card choices in baseline, each AI condition, and each DI phase for all participants are presented in Table 6, separated for participants in the descending (top portion) and ascending (bottom portion) sequences. In baseline, the participant and computer confederate received the same number of points for all the card combinations, but blue-blue outcomes resulted in more points (100) than the other three outcomes (20 points). In this phase, the participants chose the blue card on 94.1% of trials (range: 75–100%), and 11 of the 20 participants did so on 100% of trials.

Across all participants and AI conditions, the mean percent of blue-card choices was 92.4% (range: 6.3–100%), which was generally comparable to the mean blue-card choices in baseline. However, compared to baseline, there was more variability in the AI conditions. Fourteen of the 20 participants chose the blue card on at least 80% of the trials in each AI condition. Of the remaining six participants, three (P2, P6, and P19) chose the blue card on at least 80% of trials in all but one AI condition, and the AI condition with a low percent of blue-card choices was different for each. The remaining three participants (P4, P7, and P18) chose the blue card on an average of 65.4% of trials (range: 18.7–81.2%), with no systematic differences across conditions.

Figure 5 shows the mean percent of participants’ blue-card choices in both the AI and DI phases as a function of AI condition. In general, the mean percent of blue-card responses across all DI phases was less than the overall percent of blue-card responses across all AI conditions; the mean blue-card choices across all participants and DI phases was 58.8% (range: 0–100%). Notably, the percent of blue-card responses decreased systematically with decreases in the programmed level of AI in Phase 1: The mean percent blue-card responses in the DI phases were 88.1%, 74.1%, 63.8%, 42.2%, and 30.9% for the AI 100%, 75%, 50%, 25%, and 0% conditions, respectively.

Individual participant data from the DI phases are presented in Table 6. For a majority of participants, the same general pattern as the averaged data was observed: 13 of the 20 participants chose the blue card the most frequently in the DI phase that followed the 100% AI condition, and the percentage of blue-card choices decreased systematically in the remaining DI phases. Exceptions include P1, P2, P11, and P12 who chose the blue card on 100% of trials in all DI phases, P3 who chose the blue card on 37.5% of trials in the DI phase following the 100% AI condition and on 100% of trials in all the other DI phases, and P7 and P8 whose blue-card choices were variable.

As in Experiment 1, to determine the effect of programmed level of AI in Phase 1 on the likelihood of participants subsequently producing DI in Phase 2, the data were analyzed using a GLMM with GENLINMIXED as a method. The dichotomous dependent variable was the participants’ card choice in Phase 2, either blue or green, with green as the reference variable. We used the binomial distribution and the logit link function, resulting in a logistic regression model. Participant number was included as a random effect. Condition and trial in Phase 2 were specified as repeated measures, and Phase 2 condition (0%, 25%, 50%, 75%, and 100%) was a fixed effect. Significance level was .05. There were 20 participants, and each was exposed to 16 trials per condition in Phase 2, resulting in 1,600 trials in the analysis.

Table 7 depicts the analysis of variance of logistic regression with participant number as a random effect. The fixed effect of condition was statistically significant (p < .001), indicating that the percentage of AI experienced in Phase 1 directly influenced participants’ choice of the blue card in Phase 2. Table 8 depicts the estimated marginal means of the participants’ blue-card choices in Phase 2, standard error, and average blue-card choices over a 95% confidence interval in Phase 2 as a function of condition. Using pairwise contrasts, the differences between conditions 100% and 0%, 75% and 0%, 50% and 0%, and 50% and 25% were significant (least significant difference adjusted p < .001, p = .001, p = .013, and p = .022, respectively). These results generally indicate that participants played the blue card more often in conditions 100%, 75%, and 50% than in condition 0%.

Table 9 displays the mean number of trials with a blue-blue outcome for each AI condition across all participants. Different from Experiment 1, the actual number of blue-blue outcomes experienced approached the number programmed for all conditions. In no instance was there a difference between programmed and actual outcomes that was greater than two trials.

Discussion

In general, the results of this experiment indicate that when participants experienced relatively high (50–100%) levels of AI in Phase 1, they were more willing to produce DI in a subsequent phase relative to a condition in which they did not experience AI in Phase 1. Whereas participants did not differ in production of DI in the 100%, 75%, and 50% AI conditions, they were significantly less likely to produce DI in the 25% and 0% AI conditions. These results indicate that reciprocal behavior by the participant was a function of the percent of reciprocal behavior recently experienced with the same partner (in the case of the current experiment, the “partner” was a computer whose responses had been programmed, but the participants were not aware of that during the game). These data replicate the results of Experiment 1 and extend them to a within-subjects manipulation presented in a considerably different format (computer versus tabletop game).

In this experiment, participants were exposed to either an ascending or a descending series of the five different AI conditions. In other words, a full counterbalancing of all possible AI-condition orders was not used. Although there were no significant differences between participants in the two sequences, these results do not rule out the possibility that experiencing the AI conditions in a more random (i.e., non-linear) manner would result in a different likelihood of producing DI in the DI phases. Therefore, future work should replicate this study using additional counterbalancing methods.
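To make the counterbalancing limitation concrete, the following sketch enumerates all possible orders of the five AI conditions and contrasts them with the two orders used. The cyclic Latin square is shown only as one common partial-counterbalancing scheme; it is an illustrative assumption, not a design the authors propose, and the names are hypothetical.

```python
from itertools import permutations

AI_LEVELS = (0, 25, 50, 75, 100)  # percent of AI trials per condition

# Full counterbalancing would use every possible order of the five conditions
all_orders = list(permutations(AI_LEVELS))

# The two orders used in Experiment 2: ascending and descending
used_orders = [AI_LEVELS, AI_LEVELS[::-1]]

def latin_square(levels):
    """Cyclic Latin square: each level appears once in each ordinal position."""
    n = len(levels)
    return [tuple(levels[(row + col) % n] for col in range(n)) for row in range(n)]

print(len(all_orders))  # 5! = 120 possible orders
print(len(used_orders))
for order in latin_square(AI_LEVELS):
    print(order)
```

A cyclic square of this kind balances the ordinal position of each condition with only five orders, although it does not balance which condition immediately precedes which.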

The results of the present experiment are similar to the findings obtained by Ribes-Iñesta et al. (2010) and Avalos et al. (2015), who showed that the participants’ reciprocal behavior in a puzzle completion task was a function of responding initiated and induced by a peer. The percentage of trials on which the confederate played on the participants’ puzzles was systematically manipulated in a manner similar to the current experiments (100%, 75%, 50%, 25%, 0%) within-subjects using both ascending and descending sequences. In both Ribes-Iñesta et al. and Avalos et al., when confederates placed puzzle pieces on the participants’ puzzles, participants reciprocated by placing a piece on the confederate’s puzzle. In contrast, when confederates placed pieces on their own puzzles, participants also tended to respond to their own. However, reciprocal behavior resulted in both people (i.e., participant and confederate) receiving the same number of points. The current study extends these results to a situation in which reciprocal behavior in the DI phase would create an unequal outcome, and the results were similar. More importantly, the present results replicate those obtained by Benvenuti et al. (2020) and Suarez et al. (2021) with the AI conditions of 100% and 0% and extend them to an analysis of AI at various points between the two extremes. In this sense, the current study replicates the previous results and adds the complexity of social interactions highlighted by Ribes-Iñesta et al. (2010) and Avalos et al. (2015).

General discussion

The results of Experiments 1 and 2 demonstrate how recent learning history with a partner may affect production of DI, and we were able to illustrate this using both a between- and a within-subjects design. The primary contribution of these data is showing that aversion to DI can be modulated by a personal history of learning about the other person’s behavior in a situation in which repeated interactions with that person are experienced. Moreover, this effect was shown despite many differences across the two experiments (e.g., participants from two distinct cultures (the USA and Brazil), table-top vs. computerized task, no verification versus verification that participants understood the points matrix, presence vs. absence of an equity baseline). Previous research has suggested that AI is sensitive to cultural norms (e.g., Blake, McAuliffe, et al., 2015; Corbit et al., 2017), but that DI is more common across cultures and species (Brosnan & de Waal, 2014). Shaw et al. (2016) have recently provided evidence in children that DI may also be sensitive to social norms under certain circumstances (e.g., those in which the individual has the choice to produce it). Thus, the current study extends these results to suggest that DI may also be sensitive to one’s recent, personal learning history and represents an initial step toward an analysis of learned patterns that can emerge as a result of a history with a particular partner in cooperative situations. Variables related to cooperation (e.g., a remote history of cooperation or lack of cooperation with multiple other people, particular cultural practices) are likely to influence one’s aversion to inequity in general and one’s likelihood of accepting DI in particular.

One difference in the results of Experiments 1 and 2 is the observed level of acceptance of AI, measured as the percent of blue-card choices in Phase 1 of the card game. In Experiment 1 (US participants), the percentage of blue-card choices in AI phases was at chance levels (59%), but it was 92% in Experiment 2 (Brazil participants). This may represent different levels of aversion to advantageous inequity across the two cultures (cf. Blake, McAuliffe, et al., 2015). However, it is also possible that procedural differences across the two experiments are responsible for this observed difference. Benvenuti et al. (2020) used procedures similar to those of Experiment 1 with participants in Brazil and found that the percent of blue-card responses in the AI phases was approximately 70% and tended to be more variable relative to the current Experiment 2, supporting the idea that procedural, rather than cultural, factors may be responsible for the differences across the current experiments. For example, although participants in both experiments earned points during the game, only those in Experiment 2 were told they would be able to exchange the points earned for money. This instruction may have increased the value of the game points for participants in Experiment 2 relative to those in Experiment 1, who were told simply that at the end of the study they would receive either course credit or a $5 gift card. However, Suarez et al. (2021) directly compared participants who were told that points could be exchanged for money to participants who were not given this instruction and found similar levels of AI and DI. Thus, Suarez et al.’s findings reduce the likelihood that being told points could be exchanged for money influenced Phase 1 AI rates in the current experiment.

Another procedural difference that may have influenced AI rates in Phase 1 is that Experiment 2 included an equity baseline but Experiment 1 and Benvenuti et al. did not. The purpose of the equity phase was to attempt to reduce the influence of pre-experimental history on willingness to produce DI and to give all participants a comparable recent history with equal outcomes in the context of the game task. It is possible that initially experiencing equity in the task increased participants’ willingness to produce AI, but it is also possible that this initial phase allowed participants to become more familiar with the task itself in general. Future research should systematically explore the effect of an equity baseline on participants’ willingness to produce both AI and DI.

Another potentially important difference between Experiments 1 and 2 that may have affected Phase 1 responding is how the participants were recruited. In Experiment 1, the participants were recruited from a department-wide participant pool comprising students across multiple sections of undergraduate introductory psychology, statistics, and research methods classes and from a variety of graduate classes, whereas in Experiment 2 they were recruited from a single class. In both experiments, participants were asked to refrain from talking about the experiment to others so that the results would not be influenced (i.e., participants were asked to not reveal to naïve participants that procedures involved a confederate). Because the participants in Experiment 2 interacted with each other regularly outside of the experimental situation, there is a higher likelihood, relative to Experiment 1, of them discussing the experiment amongst themselves, which may have affected the results. Future research should better control for this possibility, such as by using anonymous participant recruiting software.

In the current experiments, all the participants were exposed to AI conditions prior to assessing their production of DI. Thus, we were unable to get an estimated measure of participants’ willingness to produce DI outside of a context that had previously benefited them. Benvenuti et al. (2020) included a group in their experiment that was only exposed to a DI phase (i.e., this group never experienced equity or AI in the context of the game) and found that fewer than 50% of the participants produced DI. Future work could further investigate this issue by first giving participants the opportunity to produce DI without any prior interaction with the other game player (i.e., a direct comparison between AI-DI and DI-AI phase orders).

Experiment 1 included a social validity survey in which we assessed participants’ opinions on the level of importance of cooperation, their levels of anxiety and anger during the game, and their opinion about how empathetic their partner in the game was. Most responses were in the form of a rating scale, but the final question was open-ended and asked participants to say what they thought the experiment was about. On the rating scale questions, there were generally no differences across participants exposed to the different AI conditions. One reason may be that the questions were too general. Future iterations of the social validity survey should ask more targeted questions that isolate reactions to particular phases of the game rather than the overall game. Most of the participants chose not to answer the open-ended question, and those who did typically responded with some version of the title of the study used in participant recruitment. A similar survey was not used in Experiment 2 because doing so was not the norm for research at the university where the work was conducted. Thus, future surveys should ask more probing open-ended questions and should be conducted with all participants.

The present results may have implications both for the analysis of social behavior and for cultural analysis inspired by the idea that interlocking behaviors may produce aggregated products that may be affected by an external selective environment (Glenn et al., 2016). Almost all the basic data on learning principles come from situations in which individual behavior was measured as a function of individual outcomes (or stimulus control directly or indirectly based on reinforcement history). The idea of inequity aversion may sound counterintuitive in this sense. However, much human behavior is controlled by social consequences (Skinner, 1953), and cultures vary in their overall acceptance of inequality across group members. Many researchers have highlighted the need to include a behavioral/selectionist perspective in analyses of cultural practices (e.g., da Hora & Sampaio, 2019; Guimarães et al., 2019; Mattaini, 2019) as well as the need to take into account both the biological and behavioral processes in the study of behavior (Baum, 2005; Rachlin, 2014). The current study highlights the importance of the study of stimulus control by another person’s behavior within a social interaction as another influence on social behavior, in the same sense discussed by Schmitt (1998). With respect to cooperative situations in which reciprocity is uncertain, this kind of investigation suggests flexibility of behavior that is often considered to be culturally determined: Social interactions may constrain one’s tendency to maximize personal gains in favor of another person’s benefit in some circumstances. Understanding these kinds of circumstances is something that we can achieve using experimental strategies similar to the ones we described here.

Data Availability

The data reported in these experiments are available on request to the first author. Neither of the experiments was preregistered.

References

Ahmed, A., & Karlapalem, K. (2014). Inequity aversion and the evolution of cooperation. Physical Review E, 89, 022802. https://doi.org/10.1103/PhysRevE.89.022802

Avalos, L. P., Ribes-Iñesta, E., Ortiz, I. L., & Serna, B. L. (2015). Interacciones altruístas totales como función de la inducción de reciprocidad. Revista Mexicana de Análisis de la Conducta, 41, 32–52. https://doi.org/10.5514/rmac.v41.i1.63688

Axelrod, R., & Hamilton, W. D. (1981). The evolution of cooperation. Science, 211, 1390–96.

Baker, F., & Rachlin, H. (2001). Probability of reciprocation in repeated prisoner’s dilemma games. Journal of Behavioral Decision Making, 14(1), 51–67. https://doi.org/10.1002/1099-0771(200101)14:1%3C51::AID-BDM365%3E3.0.CO;2-K

Baum, W. M. (2005). Understanding behaviorism: Behavior, culture, and evolution. Blackwell.

Benvenuti, M., Siqueira, J. O., Suarez, C. J., Nascimento, C. A. V. S., Lionello-DeNolf, K. M., & Abreu-Rodrigues, J. (2020). A preliminary two-phase test of how inequity aversion is modulated by previous dyadic interactions. Experimental Analysis of Human Behavior Bulletin, 32, 13–21.

Blake, P. R., McAuliffe, K., Corbit, J., Callaghan, T. C., Barry, O., Bowie, A., . . . Warneken, F. (2015). The ontogeny of fairness in seven societies. Nature, 528, 258–262. https://doi.org/10.1038/nature15703

Blake, P. R., Rand, D. G., Tingley, D., & Warneken, F. (2015). The shadow of the future promotes cooperation in a repeated prisoner’s dilemma for children. Scientific Reports, 5, 14559. https://doi.org/10.1038/srep14559

Boyd, R., & Richerson, P. J. (1985). Culture and the evolutionary process. University of Chicago Press.

Brosnan, S. F. (2011). A hypothesis of the co-evolution of cooperation and responses to inequity. Frontiers in Neuroscience, 5, 43. https://doi.org/10.3389/fnins.2011.00043

Brosnan, S. F., & Bshary, R. (2016). On potential links between inequity aversion and the structure of interactions for the evolution of cooperation. Behaviour, 153(9–11), 1267–1292. https://doi.org/10.1163/1568539X-00003355

Brosnan, S. F., & de Waal, F. B. M. (2014). Evolution of responses to (un)fairness. Science, 346(6207). https://doi.org/10.1126/science.1251776

Corbit, J., McAuliffe, K., Callaghan, T. C., Blake, P. R., & Warneken, F. (2017). Children’s collaboration induces fairness rather than generosity. Cognition, 168, 344–356. https://doi.org/10.1016/j.cognition.2017.07.006

Cropanzano, R., & Mitchell, M. S. (2005). Social exchange theory: An interdisciplinary review. Journal of Management, 31(6), 874–900. https://doi.org/10.1177/0149206305279602

Dal Bó, P. (2005). Cooperation under the shadow of the future: Experimental evidence from infinitely repeated games. American Economic Review, 1591–1604. https://doi.org/10.1257/000282805775014434

Edwards, T. L., Lotfizadeh, A. D., & Poling, A. (2019). Motivating operations and stimulus control. Journal of the Experimental Analysis of Behavior, 112(1), 1–9. https://doi.org/10.1002/jeab.516

Fantino, E., & Kennelly, A. (2009). Sharing the wealth: Factors influencing resource allocation in the sharing game. Journal of the Experimental Analysis of Behavior, 91(3), 337–354. https://doi.org/10.1901/jeab.2009.91-337

Faul, F., Erdfelder, E., Lang, A.-G., & Buchner, A. (2007). G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behavior Research Methods, 39, 175–191. https://doi.org/10.3758/bf03193146

Fehr, E., & Schmidt, K. M. (1999). A theory of fairness, competition, and cooperation. Quarterly Journal of Economics, 114, 817–868. https://doi.org/10.1162/003355399556151

Glenn, S. S., Malott, M. E., Andery, M. A. P. A., Benvenuti, M., Houmanfar, R. A., Sandaker, I., Todorov, J. C., Tourinho, E. Z., & Vasconcelos, L. A. (2016). Toward consistent terminology in a behaviorist approach to cultural analysis. Behavior and Social Issues, 25, 11–27. https://doi.org/10.5210/bsi.v25i0.6634

Guimarães, T. M. M., Picanço, C. R. F., & Tourinho, E. Z. (2019). Effects of negative punishment on culturants in a situation of concurrence between operant contingencies and metacontingencies. Perspectives on Behavior Science, 42, 733–750. https://doi.org/10.1007/s40614-019-00224-z

Hamilton, W. D. (1964). The genetical evolution of social behavior. Journal of Theoretical Biology, 7, 1–16. https://doi.org/10.1016/0022-5193(64)90038-4

Hauser, O., Hilbe, C., Chatterjee, K., & Nowak, M. (2019). Social dilemmas among unequals. Nature, 572, 524–527. https://doi.org/10.1038/s41586-019-1488-5

Hayes, S. C., Atkins, P., & Wilson, D. S. (2021). Prosocial: Using an evolutionary approach to modify cooperation in small groups. In R. Houmanfar, M. Fryling, & M. P. Alavosius (Eds.), Applied behavior science in organizations: Consilience and emerging trends in organizational behavior management (pp. 197–224). Routledge.

Hayes, S. C., & Sanford, B. T. (2014). Cooperation came first: Evolution and human cognition. Journal of the Experimental Analysis of Behavior, 101(1), 112–129. https://doi.org/10.1002/jeab.64

da Hora, K. L., & Sampaio, A. A. S. (2019). Units of analysis for corruption experiments: Operant, culturobehavioral lineage, culturant, and macrobehavior. Perspectives on Behavior Science, 42, 751–771. https://doi.org/10.1007/s40614-019-00225-y

House, B. R., Silk, J. B., Henrich, J., Barrett, H. C., Scelza, B. A., Boyette, A. H., Hewlett, B. S., McElreath, R., & Laurence, S. (2013). Ontogeny of prosocial behavior across diverse societies. Proceedings of the National Academy of Sciences, 110(36), 14586–14591. https://doi.org/10.1073/pnas.1221217110

Locey, M. L., Safin, V., & Rachlin, H. (2013). Social discounting and the prisoner’s dilemma game. Journal of the Experimental Analysis of Behavior, 99, 85–97. https://doi.org/10.1002/jeab.3

Marwell, G., & Schmitt, D. R. (1975). Cooperation: An experimental analysis. Academic Press.

Mattaini, M. A. (2019). Out of the lab: Shaping an ecological and constructional cultural systems science. Perspectives on Behavior Science, 42, 713–731. https://doi.org/10.1007/s40614-019-00208-z

McAuliffe, K., Warneken, F., & Blake, P. (2014). Children's sense of fairness: Respect isn't everything. Trends in Cognitive Sciences, 23(9), 715–716. https://doi.org/10.1016/j.tics.2019.05.005

Molm, L. D., Takahashi, N., & Peterson, G. (2000). Risk and trust in social exchange: An experimental test of a classical proposition. American Journal of Sociology, 105(5), 1396–1427. http://www.jstor.org/stable/3003770

Nowak, M. A. (2006). Five rules for the evolution of cooperation. Science, 314, 1560–63. https://doi.org/10.1126/science.1133755

Rachlin, H. (2014). The escape of the mind. Oxford University Press.

Rachlin, H., & Jones, B. A. (2008). Social discounting and delay discounting. Journal of Behavioral Decision Making, 21(1), 29–43. https://doi.org/10.1002/bdm.567

Rand, D. G., & Nowak, M. A. (2013). Human cooperation. Trends in Cognitive Sciences, 17, 413–25. https://doi.org/10.1016/j.tics.2013.06.003

Ribes-Iñesta, E., Rangel, N., Pulido, L., Valdez, U., Ramírez, E., Jiménez, C., & Hernández, M. (2010). Reciprocity of responding as a determinant of partial-altruistic behavior in humans. European Journal of Behavior Analysis, 11(2), 105–114.

Schmitt, D. R. (1986). Competition: Some behavioral issues. The Behavior Analyst, 9(1), 27–34. https://doi.org/10.1007/BF03391927

Schmitt, D. R. (1998). Social behavior. In K. Lattal & M. Perone (Eds.), Handbook of research methods in human operant behavior. Plenum Press.

Shaw, A., Choshen-Hillel, S., & Caruso, E. M. (2016). The development of inequity aversion: Understanding when (and why) people give others the bigger piece of the pie. Psychological Science, 27, 1352–1359. https://doi.org/10.1177/0956797616660548

Shimoff, E., & Matthews, B. A. (1975). Unequal reinforcer magnitudes and relative preference for cooperation in the dyad. Journal of the Experimental Analysis of Behavior, 24(1), 1–16. https://doi.org/10.1901/jeab.1975.24-1

Skinner, B. F. (1953). Science and human behavior. Macmillan.

Skinner, B. F. (1981). Selection by consequences. Science, 213(4507), 501–504. https://doi.org/10.1126/science.7244649

Spiga, R., Cherek, D. R., Grabowski, J., & Bennett, R. H. (1992). Effects of inequity on human free-operant cooperative responding: A validation study. The Psychological Record, 42(1), 29–40. https://doi.org/10.1007/BF03399585

Suarez, C. J., Benvenuti, M. F., Couto, K. C., Siqueira, J. O., Abreu-Rodrigues, J., Lionello-DeNolf, K. M., & Sandaker, I. (2021). Reciprocity with unequal payoffs: Cooperative and uncooperative interactions affect disadvantageous inequity aversion. Frontiers in Psychology, 12, 628425. https://doi.org/10.3389/fpsyg.2021.628425

Vichi, C., Andery, M. A. P. A., & Glenn, S. (2009). A metacontingency experiment: Effects of contingent consequences on patterns of interlocking contingencies of reinforcement. Behavior and Social Issues, 18, 41–57. https://doi.org/10.5210/bsi.v18i1.2292

Acknowledgements

This study was reviewed and approved by the Assumption University Institutional Review Board (#2017-12) and by the Psychological Institute Institutional Ethical Review Board from the University of São Paulo (Plataforma Brasil protocol: CAAE: 64336716.4.0000.5561). We thank Anthea Oikonomou-Savvidis, Adriana Savage, Alana Kearney, Katie Kalenoski, Joe Malmborg, Lisa Mazzola, and Lily Elicier (Experiment 1) and Cesar do Nascimento (Experiment 2) for their assistance with data collection.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Lionello-DeNolf, K.M., Benvenuti, M.F. & Suarez, C.J. Willingness to produce disadvantageous outcomes in cooperative tasks is modulated by recent experience. Learn Behav 51, 135–152 (2023). https://doi.org/10.3758/s13420-021-00508-y
