Abstract

This paper is concerned with the generalized finite-time stability, boundedness and stabilization of fractional-order memristive neural networks (FMNNs) with fractional order 0 < α < 1. Within the framework of fractional-order Filippov differential inclusions, FMNNs are modelled as a fractional-order differential equation with a discontinuous right-hand side. Based on properties of the topological degree, the existence of an equilibrium point of FMNNs is proved. By means of the generalized Gronwall inequality, the Laplace transform and a Lyapunov functional candidate, conditions guaranteeing the generalized finite-time stability and boundedness of FMNNs are derived in terms of linear matrix inequalities (LMIs). In addition, by using an appropriate feedback controller, a generalized finite-time stabilization condition is also given in the form of LMIs. Finally, two examples are given to demonstrate the validity of the theoretical results.

1 INTRODUCTION

In recent years, neural networks (NNs) have been widely applied in fields such as secure quantum communication, optimization, signal and image processing, and automatic control [1–4]. Many results on the dynamics of integer-order NNs have been obtained (see [5–8] and references therein).

Compared with integer-order calculus, fractional-order calculus is better suited to describing the memory and hereditary properties of various processes. It has been found that fractional calculus can precisely describe physical systems, biological systems, colored noise, finance and so on [9–11]. In view of these facts, it is worthwhile to analyze the dynamical behavior of NNs by means of fractional-order calculus. In [12], Arena et al. first proposed a fractional-order cellular neural network model. In [13], the author presented a fractional-order three-network that exhibits limit cycles and stable orbits for different parameter values. It is worth noting that some excellent results on dynamical behaviors, such as stability, stabilization and synchronization, have been developed for fractional-order neural networks (see [14–20]).

The memristor, the fourth basic passive circuit element along with the resistor, inductor and capacitor (see Fig. 1), was first proposed by Chua [21] and was realized by a research team at the Hewlett-Packard Lab in 2008 [22]. The memristor is a two-terminal passive device whose value, called the memristance, depends on the magnitude and polarity of the voltage; it shares many properties of the resistor and has the same unit of measurement (ohm). From its current–voltage characteristic (see Fig. 2), it is easy to see that the memristor exhibits pinched hysteresis, a feature similar to the behavior of neurons in the human brain. Therefore, the memristor has great potential in applications.

Recently, some researchers have begun to construct memristive neural networks by replacing the resistor with the memristor [23, 24]. The hybrid complementary metal-oxide-semiconductor is an important component of memristor-based neural networks, so memristive neural networks could find much wider application in bioinspired engineering [25–28].

Meanwhile, more and more scholars have paid attention to the dynamic behavior of memristor-based neural networks and have obtained many significant results (see [29–40]). In [29], Bao and Zeng discussed global Mittag–Leffler multistability for periodic delayed recurrent neural networks with memristors, and the global exponential stability of switched memristive neural networks with time-varying delays was considered in [30]. In [31], the authors studied the global exponential stability of memristive neural networks with impulse time window and time-varying delays, and some stability conditions were proposed. Zhang and Shen proposed some new algebraic criteria for synchronization stability of chaotic memristive neural networks with time-varying delays in [32]. In [33], weak, modified and function projective synchronization of chaotic memristive neural networks with time delays was studied by Wu, Li et al. Besides, Wu, Zhang et al. also investigated the adaptive anti-synchronization and \(H_{\infty}\) anti-synchronization of memristive neural networks with mixed time delays and reaction-diffusion terms in [34]. In [35], the authors derived some sufficient conditions and explored the global anti-synchronization of a class of chaotic memristive neural networks with time-varying delays. For memristive neural networks with mixed time-varying delays, Wu, Han et al. considered exponential passivity in [36]. In [37] and [38], Chen et al. discussed the global Mittag–Leffler stability and synchronization of memristor-based fractional-order neural networks with or without delays. In [39], the authors investigated the global finite-time synchronization of fractional-order memristor-based neural networks with time delays. In [40], Bao and Cao considered the global projective synchronization of fractional-order memristor-based neural networks. A reliable stabilization condition was proposed for memristor-based recurrent neural networks with time-varying delays by Mathiyalagan et al. in [41].

It should be mentioned that, in practical applications, attention is mainly paid to the dynamics of systems over a finite time interval (see [16, 18–20, 39]). In [16], the authors studied global synchronization in finite time for fractional-order neural networks with discontinuous activations and time delays. Subsequently, in [18] and [19], Peng and Wu considered the global non-fragile synchronization and the global projective synchronization in finite time for fractional-order discontinuous neural networks with nonlinear growth activations based on a sliding mode control strategy. In [20], non-fragile robust finite-time stabilization and \(H_{\infty}\) performance analysis were proposed for fractional-order delayed neural networks with discontinuous activations under asynchronous switching. In [42], Song et al. discussed the global robust stability in finite time for fractional-order neural networks. Finite-time stability analysis of fractional-order time-delay systems with Gronwall's approach was performed in [43]. It should be pointed out that very little attention has been paid to the generalized finite-time stability and stabilization of FMNNs.

Motivated by the preceding discussion, our aim is to explore the generalized finite-time stability, boundedness and stabilization of FMNNs. The main novelty of our contribution lies in four aspects:

(1) The existence of equilibrium point is proved by applying the topological degree theory;

(2) The conditions to guarantee the generalized finite-time stability and boundedness are given in terms of LMIs;

(3) A new feedback controller is designed;

(4) The generalized finite-time stabilization condition is presented in forms of LMIs.

The rest of this paper is organized as follows. In Section 2, some preliminaries and the model formulation are introduced. Sufficient criteria for the generalized finite-time stability, boundedness and stabilization of FMNNs are derived in Section 3. Two numerical examples are given in Section 4. Finally, conclusions are drawn in Section 5.

2 PRELIMINARIES AND MODEL DESCRIPTION

2.1 Preliminaries

In this section, we first recall some definitions and lemmas that will be useful in deriving the main results.

Definition 2.1 [9]. The fractional integral of order \(\alpha\) for a function \(g(t)\) is defined as

\[{}_{t}I_{0}^{\alpha}g(t) = \frac{1}{\Gamma(\alpha)}\int_0^t (t - \tau)^{\alpha - 1} g(\tau)\,d\tau,\]

where \(t \geqslant 0\) and \(\alpha > 0\), and \(\Gamma(\cdot)\) is the gamma function, that is, \(\Gamma(\alpha) = \int_0^\infty t^{\alpha - 1} e^{-t}\,dt\).
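As a numerical illustration of the fractional integral, the following sketch approximates \(\frac{1}{\Gamma(\alpha)}\int_0^t (t-\tau)^{\alpha-1}g(\tau)\,d\tau\) with a product-rectangle rule: \(g\) is frozen at the left end of each subinterval and the kernel is integrated exactly. The function name `fractional_integral` and the step count are illustrative choices, not part of the paper.

```python
import math


def fractional_integral(g, t, alpha, n_steps=1000):
    """Approximate the order-alpha fractional integral of g over [0, t].

    Product-rectangle rule: g is evaluated at the left end of each
    subinterval, while (t - tau)^(alpha - 1) is integrated exactly.
    """
    if t == 0.0:
        return 0.0
    h = t / n_steps
    total = 0.0
    for j in range(n_steps):
        m = n_steps - j  # number of steps between t_j and t
        # Exact kernel integral over [t_j, t_{j+1}] equals
        # h^alpha * (m^alpha - (m - 1)^alpha) / alpha.
        total += (m ** alpha - (m - 1) ** alpha) * g(j * h)
    # alpha * Gamma(alpha) = Gamma(alpha + 1)
    return h ** alpha * total / math.gamma(alpha + 1.0)
```

For a constant function the rule is exact up to rounding, which reproduces the known closed form \(t^{\alpha}/\Gamma(\alpha + 1)\) for \(g \equiv 1\).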

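Definition 2.2 [9]. Caputo's derivative of fractional order \(\alpha\) for a function \(g(t) \in C^{n}([0, +\infty), R)\) is defined as

\[{}_{t}D_{0}^{\alpha}g(t) = \frac{1}{\Gamma(n - \alpha)}\int_0^t (t - \tau)^{n - \alpha - 1} g^{(n)}(\tau)\,d\tau,\]

where \(t \geqslant 0\) and n is a positive integer such that \(n - 1 < \alpha < n\). Moreover, when 0 < α < 1, \({}_{t}D_{0}^{\alpha}g(t) = \frac{1}{\Gamma(1 - \alpha)}\int_0^t (t - \tau)^{-\alpha} g'(\tau)\,d\tau\).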
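To make the Caputo derivative for 0 < α < 1 concrete, here is a minimal sketch of the widely used L1 discretization, which replaces \(g'\) by a piecewise-constant difference quotient and integrates the kernel \((t-\tau)^{-\alpha}\) exactly. The name `caputo_l1` and the default step count are assumptions made for illustration; the paper itself does not prescribe a numerical scheme.

```python
import math


def caputo_l1(g, t, alpha, n_steps=1000):
    """L1 approximation of the Caputo derivative of order alpha in (0, 1) at t > 0."""
    h = t / n_steps
    total = 0.0
    for j in range(n_steps):
        m = n_steps - j  # number of steps between t_j and t
        # Exact integral of (t - tau)^(-alpha) over [t_j, t_{j+1}],
        # up to the common factor h^(1 - alpha) / (1 - alpha).
        weight = m ** (1.0 - alpha) - (m - 1) ** (1.0 - alpha)
        total += weight * (g((j + 1) * h) - g(j * h))
    # (1 - alpha) * Gamma(1 - alpha) = Gamma(2 - alpha)
    return total / (h ** alpha * math.gamma(2.0 - alpha))
```

The scheme is exact for linear functions, so `caputo_l1(lambda s: s, t, alpha)` matches the closed form \(t^{1-\alpha}/\Gamma(2-\alpha)\) up to rounding.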

A function frequently used in the solutions of fractional-order systems is the Mittag–Leffler function

\[E_{\alpha}(z) = \sum_{k = 0}^{\infty} \frac{z^{k}}{\Gamma(k\alpha + 1)},\]

where \(\alpha > 0\). The Mittag–Leffler function with two parameters appears most frequently and has the following form:

\[E_{\alpha,\beta}(z) = \sum_{k = 0}^{\infty} \frac{z^{k}}{\Gamma(k\alpha + \beta)},\]

where \(\alpha > 0\), \(\beta > 0\), and z is a complex number. Obviously, for \(\beta = 1\), we have \(E_{\alpha}(z) = E_{\alpha,1}(z)\); moreover, \(E_{0,1}(z) = \frac{1}{1 - z}\) for \(|z| < 1\), and \(E_{1,1}(z) = e^{z}\).
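The special cases just mentioned can be checked numerically. The sketch below sums the standard power series \(\sum_k z^k/\Gamma(k\alpha+\beta)\) for real z of moderate size; the function name `mittag_leffler`, the tolerance and the term limit are illustrative assumptions, and the plain series is not a robust method for large \(|z|\).

```python
import math


def mittag_leffler(z, alpha, beta=1.0, tol=1e-16, max_terms=500):
    """Truncated series for E_{alpha,beta}(z), real z of moderate size.

    Each term is formed in log space (math.lgamma) so that large gamma
    values do not overflow.
    """
    if z == 0.0:
        return 1.0 / math.gamma(beta)
    log_abs_z = math.log(abs(z))
    total = 0.0
    for k in range(max_terms):
        magnitude = math.exp(k * log_abs_z - math.lgamma(alpha * k + beta))
        sign = -1.0 if (z < 0.0 and k % 2 == 1) else 1.0
        total += sign * magnitude
        # Stop once the terms are negligible relative to the partial sum.
        if k > 0 and magnitude < tol * abs(total):
            break
    return total
```

Besides \(E_{1,1}(z) = e^{z}\), a convenient cross-check for α = 1/2 is the known identity \(E_{1/2}(z) = e^{z^{2}}\operatorname{erfc}(-z)\), which can be evaluated with `math.erfc`.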

The Laplace transform \(\mathfrak{L}\{\cdot\}\) of \(E_{\alpha,\beta}(\cdot)\) is given by

\[\mathfrak{L}\left\{t^{\beta - 1}E_{\alpha,\beta}(-\nu t^{\alpha})\right\} = \frac{s^{\alpha - \beta}}{s^{\alpha} + \nu},\]

where t and s denote the variables in the time domain and the Laplace domain, respectively, ν is a real number, and the real part \(\operatorname{Re}(s)\) of s satisfies \(\operatorname{Re}(s) > |\nu|^{\frac{1}{\alpha}}\).

Consider the fractional-order differential equation with a discontinuous right-hand side

\[{}_{t}D_{0}^{\alpha}x(t) = f(x(t)), \quad (1)\]

where f(x) is a discontinuous function. Define the set-valued map of f(x) as

\[F(x) = \bigcap_{\delta > 0}\,\bigcap_{\mu(N) = 0} \overline{co}\left[f\left(B(x,\delta)\backslash N\right)\right],\]

where \(B(x,\delta) = \{y : \|y - x\| \leqslant \delta\}\) is the ball with center x and radius \(\delta\), the intersection is taken over all sets N of measure zero and over all \(\delta > 0\), and \(\mu(N)\) is the Lebesgue measure of the set N.

Definition 2.3 [44]. A Filippov solution x(t) of the system (1) with initial condition x(0) = x0 is an absolutely continuous function on any compact subinterval [t1, t2] of [0, T], which satisfies x(0) = x0 and the differential inclusion \({}_{t}D_{0}^{\alpha}x(t) \in F(x(t))\) for almost all \(t \in [0,T]\).

Let \(x^{*} \in R^{n}\) be a constant vector. If \(0 \in F(x^{*})\), then \(x^{*}\) is said to be an equilibrium point of the fractional-order system (1).

Definition 2.4. The equilibrium point \(x{\text{*}}\) of the system (1) is said to be generalized finite-time stable with respect to (b1, b2, T, R) with positive scalars b1, b2, T, b2 > b1 and matrix \(R > 0\), if

then

Moreover, consider the fractional-order differential equation system

where \(w \in {{R}^{m}}\) is the disturbance input, and satisfies \({{w}^{T}}\left( t \right)w\left( t \right) \leqslant S\), \(S \geqslant 0\) is a constant, \(D \in {{R}^{{n \times m}}}\).

Definition 2.5. The equilibrium point \(x{\text{*}}\) of the system (1) is said to be generalized finite-time bounded with respect to (b1, b2, T, R, S) with positive scalars b1, b2, T, b2 > b1 and matrix R > 0, if

then

Lemma 2.1 [42]. Let \(x(t) \in R^{n}\) be a continuous and differentiable function and let \(P\) be a positive definite matrix. Then, for \(\alpha \in (0,1)\), the following inequality holds: \({}_{t}D_{0}^{\alpha}\left(x^{T}(t)Px(t)\right) \leqslant 2x^{T}(t)P\,{}_{t}D_{0}^{\alpha}x(t)\).
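This lemma is usually stated as \({}_{t}D_{0}^{\alpha}(x^{T}Px) \leqslant 2x^{T}P\,{}_{t}D_{0}^{\alpha}x\). As a sanity check under that assumed form, the sketch below evaluates both sides in closed form for the scalar test function x(t) = t with a positive weight p, using the standard formula for the Caputo derivative of a power, \(\Gamma(q+1)t^{q-\alpha}/\Gamma(q+1-\alpha)\) for \(q \geqslant 1\). The helper names are hypothetical.

```python
import math


def caputo_of_power(q, t, alpha):
    """Closed-form Caputo derivative of t**q (q >= 1, 0 < alpha < 1) at t > 0."""
    return math.gamma(q + 1.0) / math.gamma(q + 1.0 - alpha) * t ** (q - alpha)


def quadratic_lemma_gap(t, alpha, p=1.0):
    """Right side minus left side of the inequality for x(t) = t and weight p > 0."""
    lhs = p * caputo_of_power(2.0, t, alpha)            # derivative of p * x(t)^2
    rhs = 2.0 * t * p * caputo_of_power(1.0, t, alpha)  # 2 * x(t) * p * derivative of x
    return rhs - lhs
```

For this test function the gap equals \(2p\,t^{2-\alpha}\left[1/\Gamma(2-\alpha) - 1/\Gamma(3-\alpha)\right]\), which is nonnegative because \(\Gamma(3-\alpha) = (2-\alpha)\Gamma(2-\alpha)\).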

Lemma 2.2 [43]. Suppose that \(x(t)\) and \(a(t)\) are nonnegative and locally integrable on [0, T], \(T \leqslant +\infty\), and that \(f(t)\) is a nonnegative, nondecreasing and continuous function defined on \(t \in [0,T]\) with \(f(t) \leqslant M\) (a constant), \(\alpha > 0\), satisfying

\[x(t) \leqslant a(t) + f(t)\int_0^t (t - \tau)^{\alpha - 1}x(\tau)\,d\tau.\]

If \(a(t)\) is a nondecreasing function on [0, T], then

\[x(t) \leqslant a(t)E_{\alpha}\left(f(t)\Gamma(\alpha)t^{\alpha}\right),\]

where \(E_{\alpha}\) is the Mittag–Leffler function.

Lemma 2.3. Let \(\varepsilon > 0\). For any \(x,y \in R^{n}\) and any matrix A of compatible dimensions, \(2x^{T}Ay \leqslant \varepsilon^{-1}x^{T}AA^{T}x + \varepsilon y^{T}y\).

Suppose that \(\Omega\) is a nonempty, bounded and open subset of \(R^{n}\). The closure of \(\Omega\) is denoted by \(\bar{\Omega}\), and the boundary of \(\Omega\) is denoted by \(\partial\Omega\). The following two lemmas give two properties of the topological degree.

Lemma 2.4 [42]. Let \(H\left( {\mu ,x} \right):\left[ {0,1} \right] \times \bar {\Omega } \to {{R}^{n}}\) be a continuous homotopy mapping. If \(H\left( {\mu ,x} \right) = z\) has no solutions in \(\partial {{\Omega }}\) for \({{\mu }} \in \left[ {0,1} \right]\) and \(z \in {{R}^{n}}\backslash H\left( {\mu ,\partial \Omega } \right)\), then the topological degree \(\deg \left( {H\left( {\mu ,x} \right),\Omega ,z} \right)\) of \(H\left( {\mu ,x} \right)\) is a constant which is independent of \({{\mu }}.\) In this case, \(\deg \left( {H\left( {0,x} \right),\Omega ,z} \right) = \deg \left( {H\left( {1,x} \right),\Omega ,z} \right)\).

Lemma 2.5 [42]. Let \(H(x):\bar{\Omega} \to R^{n}\) be a continuous mapping. If \(\deg(H(x),\Omega,z) \ne 0\), then there exists at least one solution of H(x) = z in Ω.

2.2 Model Description

In this paper, we consider FMNNs described by

\[{}_{t}D_{0}^{\alpha}x_{i}(t) = -c_{i}x_{i}(t) + \sum_{j = 1}^{n} a_{ij}(x_{j}(t))f_{j}(x_{j}(t)) + I_{i}, \quad (2)\]

where 0 < α < 1, \(i = 1,2,\ldots,n\), \(n\) is the number of units in the neural network, \(x_{i}(t)\) denotes the state variable associated with the ith neuron; ci > 0 is a constant; \(I_{i}\) represents the external input; \(f_{i}(\cdot)\) stands for the neural activation function; and \(a_{ij}(\cdot)\) is the memristive connection weight, which is defined as

where the switching jump \(\chi_{j} > 0\), the weights \(\acute{a}_{ij}\) and \(\grave{a}_{ij}\) are constants, and \(\tilde{a}_{ij} = \max\{|\acute{a}_{ij}|, |\grave{a}_{ij}|\}\).
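The switching weight can be sketched in code. The version below assumes the common two-level convention in which \(a_{ij}\) equals \(\acute{a}_{ij}\) when \(|x_j| < \chi_j\) and \(\grave{a}_{ij}\) otherwise; that threshold convention, and all function names, are assumptions for illustration. At the switching jump \(|x_j| = \chi_j\) the Filippov framework works with the closed convex hull of the two values, which for scalars is an interval.

```python
def memristive_weight(x_j, chi_j, a_acute, a_grave):
    """Two-level memristive weight (assumed convention, see lead-in).

    The value returned exactly at |x_j| == chi_j is only a convention;
    the Filippov solution uses weight_hull there.
    """
    return a_acute if abs(x_j) < chi_j else a_grave


def weight_hull(x_j, chi_j, a_acute, a_grave):
    """Closed convex hull of the weight at x_j, returned as (low, high)."""
    if abs(x_j) == chi_j:
        return (min(a_acute, a_grave), max(a_acute, a_grave))
    w = memristive_weight(x_j, chi_j, a_acute, a_grave)
    return (w, w)


def weight_bound(a_acute, a_grave):
    """The bound max{|a_acute|, |a_grave|} on the magnitude of the weight."""
    return max(abs(a_acute), abs(a_grave))
```

Away from the switching jump the hull collapses to a single point, so the inclusion reduces to an ordinary equation there.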

Set \(x(t) = (x_{1}(t),x_{2}(t),\ldots,x_{n}(t))^{T}\), \(|x(t)| = (|x_{1}(t)|,|x_{2}(t)|,\ldots,|x_{n}(t)|)^{T}\), \(\acute{A} = (\acute{a}_{ij})_{n \times n}\), \(\grave{A} = (\grave{a}_{ij})_{n \times n}\), \(\chi = (\chi_{1},\chi_{2},\ldots,\chi_{n})^{T}\). The system (2) can be rewritten in the following vector form: \({}_{t}D_{0}^{\alpha}x(t) = -Cx(t) + A(x(t))f(x(t)) + I\), (3)

where C = diag(c1, c2, …, cn), \(A\left( {x\left( t \right)} \right) = {{\left( {{{a}_{{ij}}}\left( {{{x}_{j}}\left( t \right)} \right)} \right)}_{{n \times n}}}\), \(f\left( {x\left( t \right)} \right) = {{\left( {{{f}_{1}}\left( {{{x}_{1}}\left( t \right)} \right),{{f}_{2}}\left( {{{x}_{2}}\left( t \right)} \right), \ldots ,{{f}_{n}}\left( {{{x}_{n}}\left( t \right)} \right)} \right)}^{T}}\), I = \({{\left( {{{I}_{1}},{{I}_{2}}, \ldots ,{{I}_{n}}} \right)}^{T}}\) , and

Since system (3) is a fractional-order differential equation with a discontinuous right-hand side, its solution is considered in Filippov's sense. Based on Definition 2.3, \(x(t)\) is a solution of FMNNs (3) if \(x(t)\) is an absolutely continuous function on any compact subinterval [t1, t2] of \([0,T]\), and satisfies x(0) = x0 and the differential inclusion \({}_{t}D_{0}^{\alpha}x(t) \in -Cx(t) + \overline{co}\left[A(x(t))\right]f(x(t)) + I\) for almost all \(t \in [0,T]\).

In order to ensure the existence and uniqueness of the solutions of FMNNs (3), the following assumption is made for the activation function \(f\left( x \right)\) : \(\left( {{{H}_{1}}} \right)\) : \(\left( i \right)f\left( x \right)\) is Lipschitz continuous, i.e. for any given \(u,{v} \in R\), there exists L = diag(l1, l2, …, ln), such that \(\left| {\left| {f\left( u \right) - f\left( {v} \right)} \right|} \right| \leqslant \left| {\left| {L\left( {u - {v}} \right)} \right|} \right|\); \(\left( {ii} \right){{f}_{j}}\left( { \pm {{\chi }_{j}}} \right) = 0,j = 1,2, \ldots ,n\).

Lemma 2.6. Under the assumption \(\left( {{{H}_{1}}} \right)\), the following inequality holds

Proof. For any \(i,j = 1,2,\ldots,n\), we distinguish four cases to establish the inequality in the above lemma. Case 1: If \(|x_{i}(t)| < \chi_{i}\), \(|y_{i}(t)| < \chi_{i}\), one has

Case 2: If \(\left| {{{x}_{i}}\left( t \right)} \right| > {{\chi }_{i}},\left| {{{y}_{i}}\left( t \right)} \right| > {{\chi }_{i}}\) then

Case 3: Let \(\left| {{{x}_{i}}\left( t \right)} \right| \leqslant {{\chi }_{i}}\), \(\left| {{{y}_{i}}\left( t \right)} \right| \geqslant {{\chi }_{i}}\), in this case, we have \({{y}_{i}}\left( t \right) \leqslant - {{\chi }_{i}}\) or \({{y}_{i}}\left( t \right) \geqslant {{\chi }_{i}}\). If \({{y}_{i}}\left( t \right) \leqslant - {{\chi }_{i}}\), then

And another case, if \({{y}_{i}}\left( t \right) \geqslant {{\chi }_{i}}\), then

Case 4: This case \(\left| {{{y}_{i}}\left( t \right)} \right| \leqslant {{\chi }_{i}} \leqslant \left| {{{x}_{i}}\left( t \right)} \right|\) is similar to Case 3, so we can also get

3 MAIN RESULTS

In this section, we address some sufficient conditions of the existence of equilibrium point, the generalized finite-time stability, boundedness and stabilization for FMNNs (3).

Theorem 3.1. Under the assumption \(\left( {{{H}_{1}}} \right)\), if there exists a scalar \({{\varepsilon }} > 0\), and a positive definite matrix P, such that

then FMNNs (3) has at least one equilibrium point.

Proof. Set

where \(\tilde {U} = A\left( x \right)f\left( 0 \right) + I\). It’s obvious that \(x{\text{*}} \in {{R}^{n}}\) is an equilibrium point of the FMNNs (3), if and only if \(U\left( {x{\text{*}}} \right) = 0\).

Let \(H(x,\mu) = -Cx + \mu A(x)h(x) + \mu\tilde{U}\), \(\mu \in [0,1]\), where \(h(x) = f(x) - f(0)\). It is easy to see that \(H(x,\mu)\) is a continuous homotopy mapping.

Now, we construct a convex region \({{\Omega }}\), such that

(i) \(0 \in {\text{int}{\Omega }}\),

(ii) \(H\left( {x,\mu } \right) \ne 0,\,\,x \in \partial \Omega ,\,\,\mu \in \left[ {0,1} \right]\).

By Lemma 2.3, we have

Let \(\pi_{i} = 2\lambda_{\min}^{-1}(-\psi)\left|(P\tilde{U})_{i}\right|\) and \(\Omega = \{x \in R^{n} : |x_{i}| < \pi_{i} + 1, i = 1,2,\ldots,n\}\). Obviously, \(\Omega\) is an open, convex and bounded set independent of the parameter \(\mu\). If \(x \in \partial\Omega\), then combining with (5), it follows that \(x^{T}PH(x,\mu) < 0\), which implies that \(H(x,\mu) \ne 0\) for any \(x \in \partial\Omega\), \(\mu \in [0,1]\). According to Lemma 2.4, it follows that \(\deg(H(1,x),\Omega,0) = \deg(H(0,x),\Omega,0)\), i.e., \(\deg(U(x),\Omega,0) = \deg(-Cx,\Omega,0) = \operatorname{sgn}|-C| \ne 0\), where \(|-C|\) is the determinant of \(-C\). By Lemma 2.5, U(x) = 0 has at least one solution in \(\Omega\). This indicates that FMNNs (3) has at least one equilibrium point in \(\Omega\).

Theorem 3.2. Suppose that the condition of Theorem 3.1 holds, and there exists a scalar \({{\delta }}\), a matrix \(Q > 0\), \(Q \in {{R}^{{n \times n}}}\), such that

where P = \({{R}^{{\frac{1}{2}}}}Q{{R}^{{\frac{1}{2}}}},{\text{cond}}\left( Q \right) = \frac{{{{\lambda }_{{\max }}}\left( Q \right)}}{{{{\lambda }_{{\min }}}\left( Q \right)}}\) denotes the condition number of Q, \({{\lambda }_{{\max }}}\left( Q \right)\) and \({{\lambda }_{{\min }}}\left( Q \right)\) indicate the maximum and minimum eigenvalue of the matrix, respectively, then, FMNNs (3) is generalized finite-time stable with respect to (b1, b2, T, R).

Proof. Let \(x{\text{*}}\) be the equilibrium point of system (3), and shift it to the origin by using \(y\left( t \right) = x\left( t \right) - x{\text{*}}\). We have

It is easy to check that \(F(x) = -Cx(t) + \overline{co}\left[A(x(t))\right]f(x(t)) + I\) is an upper semicontinuous set-valued map with nonempty, compact and convex values. Hence, \(F(x)\) is measurable. By the measurable selection theorem, there exists a measurable function \(\gamma(y(t)) \in \overline{co}\left[A(y(t) + x^{*})\right]f(y(t) + x^{*}) - \overline{co}\left[A(x^{*})\right]f(x^{*})\), such that

From Lemma 2.6, it follows that

Consider the Lyapunov functional candidate

Calculating Caputo’s derivative with order \({{\alpha }}\) for \(V\left( {y\left( t \right)} \right)\) along the trajectory of (8), by using Lemma 2.1 we have

Let \(\delta = \lambda_{\max}\left(P^{-\frac{1}{2}}\psi P^{-\frac{1}{2}}\right)\); then we get \({}_{t}D_{0}^{\alpha}V(y(t)) \leqslant \delta V(y(t))\). Correspondingly, there exists a nonnegative function \(M(t)\), such that

Applying Laplace transform to (9), it results that

The above equality is equivalent to

Taking the inverse Laplace transform of (10) gives

Since both \((t - \tau)^{\alpha - 1}\) and \(E_{\alpha,\alpha}\left(\delta(t - \tau)^{\alpha}\right)\) are nonnegative functions, the above equation can be converted into

From the definition of Lyapunov function \(V\left( {y\left( t \right)} \right)\), the aforementioned inequality can be written as

Noting that \(P = {{R}^{{\frac{1}{2}}}}Q{{R}^{{\frac{1}{2}}}}\), we can obtain that

It implies that

Combining \({{y}^{T}}\left( 0 \right)Ry\left( 0 \right) \leqslant {{b}_{1}}\) with (6) yields

This shows that FMNNs (3) is generalized finite-time stable.

Consider the following FMNNs

The following theorem introduces the generalized finite-time boundedness of FMNNs (11).

Theorem 3.3. Under the assumption \(\left( {{{H}_{1}}} \right)\), if there exists a scalar \({{\beta }} > 0\), a matrix Q1 > 0, \({{Q}_{1}} \in {{R}^{{n \times n}}}\), and a nonsingular matrix \({{Q}_{2}} \in {{R}^{{m \times m}}}\), such that

where \(P = {{R}^{{ - \frac{1}{2}}}}{{Q}_{1}}{{R}^{{ - \frac{1}{2}}}}\), then, FMNNs (11) is generalized finite-time bounded with respect to (b1, b2, T, R, S).

Proof. Consider the Lyapunov function:

In the light of Lemma 2.1, computing the Caputo’s derivative along the trajectory of (11), we have

where \(\Delta = - {{P}^{{ - 1}}}C - {{C}^{T}}{{P}^{{ - 1}}} + {{\varepsilon }^{{ - 1}}}\left( {{{P}^{{ - 1}}}\tilde {A}} \right){{({{P}^{{ - 1}}}\tilde {A})}^{T}} + \varepsilon {{L}^{T}}L\). Under condition (12), pre- and post-multiplying (12) by the symmetric positive definite matrix \(\left( {\begin{array}{*{20}{c}} {{{P}^{{ - 1}}}}&0 \\ 0&{Q_{2}^{{ - 1}}} \end{array}} \right)\), it derives that:

where \(\Theta = - {{P}^{{ - 1}}}C - {{C}^{T}}{{P}^{{ - 1}}} + \frac{1}{\varepsilon }\left( {{{P}^{{ - 1}}}\tilde {A}} \right){{({{P}^{{ - 1}}}\tilde {A})}^{T}} + \varepsilon {{L}^{T}}L - \beta {{P}^{{ - 1}}}.\) By (14) and (15), we can obtain that

Applying \(w^{T}(t)Q_{2}^{-1}w(t) \leqslant \lambda_{\max}(Q_{2}^{-1})w^{T}(t)w(t) \leqslant \frac{S}{\lambda_{\min}(Q_{2})}\), (16) can be rewritten as

Integrating with order \({{\alpha }}\) on both sides of (17) from 0 to t, where \(t \leqslant T\), we can obtain that

By means of Lemma 2.2, it follows that

Noting \(P = {{R}^{{ - \frac{1}{2}}}}{{Q}_{1}}{{R}^{{ - \frac{1}{2}}}}\), we have

Substituting the above two inequalities into (18) yields

Comparing (19) with (13), it follows that \(y^{T}(t)Ry(t) < b_{2}\), \(\forall t \in [0,T]\). This completes the proof.

In this subsection, we design the feedback controller u(t) = Ky(t) to solve the finite-time boundedness problem of the following fractional-order system

Theorem 3.4. Suppose that \(\left( {{{H}_{1}}} \right)\) holds. If there exist a scalar \({{\beta }} > 0\), a matrix Q1 > 0, \({{Q}_{1}} \in {{R}^{{n \times n}}}\), a nonsingular matrix \({{Q}_{2}} \in {{R}^{{m \times m}}}\), and a matrix \(N \in {{R}^{{l \times n}}}\), such that (13) and

hold, where \(\Phi = - PC - {{C}^{T}}P + BN + {{N}^{T}}{{B}^{T}} + {{\varepsilon }^{{ - 1}}}\tilde {A}{{\tilde {A}}^{T}} + \varepsilon P{{L}^{T}}LP - \beta P\), and \(P = {{R}^{{ - \frac{1}{2}}}}{{Q}_{1}}{{R}^{{ - \frac{1}{2}}}}\), then, FMNNs (20) is generalized finite-time stabilized with respect to (b1, b2, T, R, S) under the feedback controller \(u\left( t \right) = Ky\left( t \right) = N{{P}^{{ - 1}}}y\left( t \right)\).

The proof is similar to that of Theorem 3.3 and is therefore omitted.

4 NUMERICAL EXAMPLES

In this section, we present two examples to illustrate the validity and effectiveness of the theoretical results proposed in this paper.

Example 1. Consider the following FMNNs:

where \(\alpha = 0.5,f\left( x \right) = \tanh \left( x \right),L = {\text{diag}}\left( {1,1} \right),C = {\text{diag}}\left( {1,1} \right)\), and \(I = {{(0,0)}^{T}}\),

The initial conditions of (22) are determined as \(x\left( 0 \right) = {{(1,0)}^{T}}\). Choose parameters b1 = 1, b2 = 2, \({{\varepsilon }} = 1\), \(T = 6\), \(R = E\), where E is taken as the identity matrix. By using Matlab’s LMI control toolbox to calculate (4), we can get the feasible solution

Accordingly, we obtain \(\delta = -0.024\), which satisfies the condition (6). By Theorem 3.2, FMNNs (22) is generalized finite-time stable with respect to \((1,2,6,E)\).

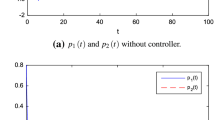

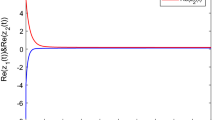

The state trajectory in \(0 \sim 6\,\,{\text{s}}\) with the initial value \(x\left( 0 \right)\) is shown in Figs. 3 and 4. Figure 4 denotes the trajectory of \({{x}^{T}}\left( t \right)Rx\left( t \right)\) of system (22).
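A trajectory of this kind can be produced with a simple explicit scheme. The sketch below is only an illustration under stated assumptions: it uses the fractional forward Euler (product-rectangle) method on the equivalent Volterra integral equation, the activation tanh from the example, and a two-level weight that switches at \(|x_j| = \chi_j\). The weight matrices `A_ACUTE`, `A_GRAVE` and the thresholds `CHI` are hypothetical placeholders, not the values of system (22), and the optional disturbance enters with an identity input matrix.

```python
import math

# Hypothetical weights and switching jumps, chosen only for illustration.
A_ACUTE = [[0.1, -0.1], [0.1, 0.1]]
A_GRAVE = [[-0.1, 0.1], [0.1, -0.1]]
CHI = [1.0, 1.0]


def simulate_fmnn(x0, alpha, c, a_acute, a_grave, chi, t_end, h, disturbance=None):
    """Fractional forward Euler for D^alpha x = -C x + A(x) tanh(x) + w(t).

    Returns the list of states at t = 0, h, 2h, ..., t_end.
    """
    n = len(x0)
    steps = int(round(t_end / h))
    scale = h ** alpha / math.gamma(alpha + 1.0)
    # Kernel weights b[m] = (m + 1)^alpha - m^alpha.
    b = [(m + 1) ** alpha - m ** alpha for m in range(steps)]
    xs = [list(x0)]
    rhs_hist = []
    for k in range(steps):
        x = xs[-1]
        act = [math.tanh(v) for v in x]
        w = disturbance(k * h) if disturbance else [0.0] * n
        rhs = []
        for i in range(n):
            s = -c[i] * x[i] + w[i]
            for j in range(n):
                a_ij = a_acute[i][j] if abs(x[j]) < chi[j] else a_grave[i][j]
                s += a_ij * act[j]
            rhs.append(s)
        rhs_hist.append(rhs)
        # x_{k+1} = x_0 + scale * sum_{j=0}^{k} b[k-j] * rhs_j (full memory).
        xs.append([
            x0[i] + scale * sum(b[k - j] * rhs_hist[j][i] for j in range(k + 1))
            for i in range(n)
        ])
    return xs
```

With `simulate_fmnn([1.0, 0.0], 0.5, [1.0, 1.0], A_ACUTE, A_GRAVE, CHI, 6.0, 0.01)` one obtains states from which \(x^{T}(t)Rx(t)\) with R = E can be computed as `sum(v * v for v in x)`; passing `disturbance=lambda t: [math.sin(t), math.cos(t)]` gives a disturbed run in the spirit of the boundedness setting. The scheme stores the whole history, so the cost grows quadratically with the number of steps.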

Example 2. Consider the finite-time boundedness problem about the following FMNNs:

where \(\alpha = 0.5,f\left( x \right) = \tanh \left( x \right),L = {\text{diag}}\left( {1,1} \right)\), and \(I = {{(0,0)}^{T}}\), \(C = \left( {\begin{array}{*{20}{c}} 1&0 \\ 0&1 \end{array}} \right),\,\,D = \left( {\begin{array}{*{20}{c}} 1&0 \\ 0&1 \end{array}} \right)\).

The corresponding parameters are chosen as: \(w(t) = (\sin t,\cos t)^{T}\), \(b_{1} = 0.05\), \(b_{2} = 3.8\), \(S = 1\), \(T = 10\), \(R = E\). A numerical simulation has been carried out for system (23) with the initial value \(x(0) = (0.2,0.1)^{T}\), which satisfies the condition \(x^{T}(0)Rx(0) \leqslant b_{1}\). Letting \(\beta = 1\), we obtain the feasible solution:

By Theorem 3.3, FMNNs (23) is finite-time bounded. The state trajectory in \(0 \sim 10\,\,{\text{s}}\) is shown in Figs. 5 and 6. From Fig. 6, we conclude that FMNNs (23) is generalized finite-time bounded with respect to \(\left( {0.05,3.8,10,E,1} \right)\).

5 CONCLUSIONS

In this paper, we have investigated the generalized finite-time stability, boundedness and stabilization for a class of FMNNs. The existence of equilibrium point for the considered FMNNs has been proved. The conditions with respect to the generalized finite-time stability and boundedness have been derived in terms of LMIs. Under designed feedback controller, the generalized finite-time stabilization condition has been also achieved in the forms of LMIs.

It would be interesting to extend the results of this paper to FMNNs with delays. In addition, how to solve for the equilibrium and the uniform charge distribution of FMNNs remains open. These will be challenging issues for future research.

REFERENCES

Naseri, M., Raji, M.A., Hantehzadeh, M.R., Farouk, A., Boochani, A., and Solaymani, S., A scheme for secure quantum communication network with authentication using GHZ-like states and cluster states controlled teleportation, Quantum Inf. Process., 2015, vol. 14, pp. 4279–4295.

Farouk, A., Batle, J., Elhoseny, M., Naseri, M., Lone, M., Fedorov, A., Alkhambashi, M., Ahmed, S.H., and Abdel-Aty, M., Robust general N user authentication scheme in a centralized quantum communication network via generalized GHZ states, Front. Phys., 2018, vol. 13, p. 130306.

Farouk, A., Zakaria, M., Megahed, A., and Omara, F.A., A generalized architecture of quantum secure direct communication for N disjointed users with authentication, Sci. Rep., 2015, vol. 5, p. 16080.

Batle, J., Naseri, M., Ghoranneviss, M., Farouk, A., Alkhambashi, M., and Elhoseny, M., Shareability of correlations in multiqubit states: Optimization of nonlocal monogamy inequalities, Phys. Rev. A, 2017, vol. 95, p. 032123.

Liu, M. and Wu, H., Stochastic finite-time synchronization for discontinuous semi-Markovian switching neural networks with time delays and noise disturbance, Neurocomputing, 2018, vol. 310, pp. 246–264.

Zhao, W. and Wu, H., Fixed-time synchronization of semi-Markovian jumping neural networks with time-varying delays, Adv. Differ. Equations, 2018, vol. 213. https://doi.org/10.1186/s13662-018-1666-z

Wang, Z. and Wu, H., Global synchronization in fixed time for semi-Markovian switching complex dynamical networks with hybrid couplings and time-varying delays, Nonlinear Dyn., 2019, vol. 95, pp. 2031–2062.

Peng, X., Wu, H., Song, K., and Shi, J., Non-fragile chaotic synchronization for discontinuous neural networks with time-varying delays and random feedback gain uncertainties, Neurocomputing, 2018, vol. 273, pp. 89–100.

Butzer, P.L. and Westphal, U., An Introduction to Fractional Calculus, Singapore: World Sci., 2000.

Hilfer, R., Applications of Fractional Calculus in Physics, Hackensack, NJ: World Sci., 2001.

Kilbas, A.A., Srivastava, H.M., and Trujillo, J.J., Theory and Application of Fractional Differential Equations, Amsterdam: Elsevier, 2006.

Arena, P., Caponetto, R., Fortuna, L., and Porto, D., Bifurcation and chaos in noninteger order cellular neural networks, Int. J. Bifurcation Chaos, 1998, vol. 8, pp. 1527–1539.

Petras, I., A note on the fractional-order cellular neural networks, in 2006 Int. Joint Conf. on Neural Networks, Canada, BC, Vancouver, Sheraton Vancouver Wall Centre Hotel, 16–21 July, 2006, pp. 1021–1024.

Wu, H., Zhang, X., Xue, S., Wang, L., and Wang, Y., LMI conditions to global Mittag–Leffler stability of fractional-order neural networks with impulses, Neurocomputing, 2016, vol. 193, pp. 148–154.

Zhang, S., Yu, Y., and Wang, Q., Stability analysis of fractional-order Hopfield neural networks with discontinuous activation functions, Neurocomputing, 2016, vol. 171, pp. 1075–1084.

Peng, X., Wu, H., Song, K., and Shi, J., Global synchronization in finite time for fractional-order neural networks with discontinuous activations and time delays, Neural Networks, 2017, vol. 94, pp. 46–54.

Peng, X. and Wu, H., Robust Mittag–Leffler synchronization for uncertain fractional-order discontinuous neural networks via non-fragile control strategy, Neural Process. Lett., 2018, vol. 48, pp. 1521–1542.

Peng, X., Wu, H., and Cao, J., Global non-fragile synchronization in finite time for fractional-order discontinuous neural networks with nonlinear growth activations, IEEE Trans. Neural Networks Learning Syst., 2019, vol. 30, pp. 2123–2137.

Wu, H., Wang, L., and Niu, P., Global projective synchronization in finite time of nonidentical fractional-order neural networks based on sliding mode control strategy, Neurocomputing, 2017, vol. 235, pp. 264–273.

Peng, X. and Wu, H., Non-fragile robust finite-time stabilization and performance analysis for fractional-order delayed neural networks with discontinuous activations under the asynchronous switching, Neural Comput. Appl., 2018. https://doi.org/10.1007/s00521-018-3682-z

Chua, L.O., Memristor-the missing circuit element, IEEE Trans. Circuit Theory, 1971, vol. 18, pp. 507–519.

Tour, J. and He, T., The fourth element, Nature, 2008, vol. 453, pp. 42–43.

Itoh, M. and Chua, L.O., Memristor cellular automata and memristor discrete-time cellular neural networks, Int. J. Bifurcation Chaos, 2009, vol. 19, pp. 3605–3656.

Pershin, Y.V. and Di Ventra, M., Experimental demonstration of associative memory with memristive neural networks, Neural Networks, 2010, vol. 23, pp. 881–886.

Zhao, Y., Jiang, Y., Feng, J., and Wu, L., Modeling of memristor-based chaotic systems using nonlinear Wiener adaptive filters based on backslash operator, Chaos, Solitons Fractals, 2016, vol. 87, pp. 12–16.

Cantley, K.D., Subramaniam, A., Stiegler, H.J., Chapman, R.A., and Vogel, E.M., Neural learning circuits utilizing nanocrystalline silicon transistors and memristors, IEEE Trans. Neural Networks Learning Syst., 2012, vol. 23, pp. 565–573.

Kim, H., Sah, M.P., Yang, C., Roska, T., and Chua, L.O., Neural synaptic weighting with a pulse-based memristor circuit, IEEE Trans. Circuits Syst., I: Regular Papers, 2012, vol. 59, pp. 148–158.

Sharifi, M.J. and Banadaki, Y.M., General SPICE models for memristor and application to circuit simulation of memristor-based synapses and memory cells, J. Circuits, Syst. Comput., 2010, vol. 19, pp. 407–424.

Bao, G. and Zeng, Z., Multistability of periodic delayed recurrent neural network with memristors, Neural Comput. Appl., 2013, vol. 23, pp. 1963–1967.

Xin, Y., Li, Y., Cheng, Z., and Huang, X., Global exponential stability for switched memristive neural networks with time-varying delays, Neural Networks, 2016, vol. 80, pp. 34–42.

Yang, D., Qiu, G., and Li, C., Global exponential stability of memristive neural networks with impulse time window and time-varying delays, Neurocomputing, 2016, vol. 171, pp. 1021–1026.

Zhang, G. and Shen, Y., New algebraic criteria for synchronization stability of chaotic memristive neural networks with time-varying delays, IEEE Trans. Neural Networks Learning Syst., 2013, vol. 24, pp. 1701–1707.

Wu, H., Li, X., and Yao, R., Weak, modified and function projective synchronization of chaotic memristive neural networks with time delays, Neurocomputing, 2015, vol. 149, pp. 667–676.

Wu, H., Zhang, X., and Li, R., Adaptive anti-synchronization and H∞ anti-synchronization for memristive neural networks with mixed time delays and reaction-diffusion terms, Neurocomputing, 2015, vol. 168, pp. 726–740.

Zhang, G., Shen, Y., and Wang, L., Global anti-synchronization of a class of chaotic memristive neural networks with time-varying delays, Neural Networks, 2013, vol. 46, pp. 1–8.

Wu, H., Han, X., and Wang, L., Exponential passivity of memristive neural networks with mixed time-varying delays, J. Franklin Inst., 2016, vol. 353, pp. 688–712.

Chen, J., Zeng, Z., and Jiang, P., Global Mittag–Leffler stability and synchronization of memristor-based fractional-order neural networks, Neural Networks, 2014, vol. 51, pp. 1–8.

Chen, J., Wu, R., Cao, J., and Liu, J., Stability and synchronization of memristor-based fractional-order delayed neural networks, Neural Networks, 2015, vol. 71, pp. 37–44.

Velmurugan, G., Rakkiyappan, R., and Cao, J., Finite-time synchronization of fractional-order memristor-based neural networks with time delays, Neural Networks, 2016, vol. 73, pp. 36–46.

Bao, H. and Cao, J., Projective synchronization of fractional-order memristor-based neural networks, Neural Networks, 2015, vol. 63, pp. 1–9.

Mathiyalagan, K., Anbuvithya, R., Sakthivel, R., Park, J.H., and Prakash, P., Reliable stabilization for memristor-based recurrent neural networks with time-varying delays, Neurocomputing, 2015, vol. 153, pp. 140–147.

Song, K., Wu, H., and Wang, L., Lur’e–Postnikov Lyapunov function approach to global robust Mittag–Leffler stability of fractional-order neural networks, Adv. Differ. Equations, 2017, vol. 2017, no. 232. https://doi.org/10.1186/s13662-017-1298-8

Lazarevic, M.P. and Spasic, A.M., Finite-time stability analysis of fractional-order time-delay systems: Gronwall’s approach, Math. Comput. Modell., 2009, vol. 49, pp. 475–481.

Filippov, A.F., Differential equations with discontinuous right hand side, Mat. Sb., 1960, vol. 93, pp. 99–128.

Gao, W., Guirao, J.L.G., Abdel-Aty, M., and Xi, W., An independent set degree condition for fractional critical deleted graphs, Discrete Contin. Dyn. Syst., Ser. S, 2019, vol. 12, pp. 877–886.

Batle, J., Ciftja, O., Naseri, M., Ghoranneviss, M., Farouk, A., and Elhoseny, M., Equilibrium and uniform charge distribution of a classical two-dimensional system of point charges with hard-wall confinement, Phys. Scr., 2017, vol. 92, p. 055801.

Funding

The authors would like to thank the Editors and the Reviewers for their insightful comments, which helped to enrich the content and improve the presentation of this paper.

This work was supported by the Natural Science Foundation of Hebei Province of China (A2018203288).

Ethics declarations

The authors declare that they have no conflicts of interest.

Cite this article

Lirui Zhao, Huaiqin Wu. Generalized Finite-Time Stability and Stabilization for Fractional-Order Memristive Neural Networks. Opt. Mem. Neural Networks 30, 11–25 (2021). https://doi.org/10.3103/S1060992X21010070
