Abstract

Owing to a favorable balance of computational cost and accuracy, real-time time-dependent density-functional theory has emerged as a promising first-principles framework for describing real-time electron dynamics. Here we discuss recent implementations of this approach, in particular in the context of complex, extended systems. Results include an analysis of the computational cost associated with numerical time propagation and of the savings enabled by absorbing boundary conditions. We extensively explore the shortcomings in describing electron–electron scattering in real time and compare to many-body perturbation theory. Modern improvements of the description of exchange and correlation are reviewed. In this work, we specifically focus on the Qb@ll code, which we have mainly used for these types of simulations in recent years, and we conclude by pointing to further progress needed going forward.

Introduction

Real-time time-dependent density-functional theory (RT-TDDFT) has attracted tremendous and sustained attention as a tool for the accurate theoretical characterization of materials. It is arguably one of the most promising approaches to simulate the real-time quantum dynamics of electrons as well as their coupling to ion dynamics. In particular, its promising balance between accuracy and computational cost makes this technique increasingly applicable to the development, design, and discovery of materials, including for electronic, optical, and electrochemical applications, among others.[1] Recent applications include laser excitation of materials,[2] interaction of materials with energetic ions,[3] and non-linear excitation dynamics.[4] The framework is implemented in many software packages and is readily usable on a large variety of computational resources, including graphics processing units (GPUs). This makes the technique applicable to many diverse materials, from just a few atoms to complex extended structures consisting of hundreds of atoms.

In this work we provide examples for recent developments and applications that we accomplished and use these to illustrate the need for future improvements. This includes discussing the underlying approximations and the path toward a computationally more feasible and widely applicable implementation of this approach for complex and extended systems. Simulations of complex, extended materials can benefit from less mainstream approaches, such as orbital-free TDDFT,[5,6] subsystem TDDFT,[7] or time-dependent density-functional tight binding techniques.[8] However, in what follows, we focus on plane-wave RT-TDDFT and discuss our own work of using and extending the Qb@ll code.[9,10,11,12]

First, the time stepping that is used in RT-TDDFT critically determines the computational cost. Second, we also give a specific example for how absorbing boundary conditions can mitigate high computational cost when studying two-dimensional materials. Next, the physics of charged projectile ions or electrons interacting with the electronic system of the target is briefly discussed and the computational cost of using an electron wave packet instead of a classical Coulomb potential in a plane-wave framework is assessed. Subsequently, we analyze in detail the RT-TDDFT description of electron dynamics and find shortcomings in capturing the time scale of electron–electron scattering-mediated thermalization. These results are compared to the literature and discussed relative to GW simulations within many-body perturbation theory. Finally, we discuss recent progress in describing the electron–electron interaction via exchange and correlation in RT-TDDFT and the associated computational cost. All RT-TDDFT simulations presented here were performed with the Qb@ll code and extensions thereof,[9,10,11,12] and we conclude our discussion with a brief outlook on future directions of this software, hoping to stimulate exciting developments in the field of RT-TDDFT for years to come, including for computational materials discovery and development, as is the goal of this focus issue.

Real-time propagation of time-dependent Kohn–Sham equations

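Excited electron dynamics can be modeled from first principles with real-time time-dependent density-functional theory (TDDFT).[13,14] In this approach, the electron density \(n({\varvec {r}},t)\) evolves over time according to the time-dependent Kohn–Sham (TDKS) equations:

\[ i\frac{\partial }{\partial t}\psi _{\text{j}}({\varvec {r}},t)={\hat{H}}[n](t)\,\psi _{\text{j}}({\varvec {r}},t),\qquad n({\varvec {r}},t)=\sum _{\text{j}} f_{\text{j}}\left| \psi _{\text{j}}({\varvec {r}},t)\right| ^2. \tag{1} \]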

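Here, \(\psi _{\text{j}}\) are single-particle Kohn–Sham orbitals with occupations \(f_{\text{j}}\). The single-particle Hamiltonian,

\[ {\hat{H}}[n](t)={\hat{T}}+{\hat{V}}_{\mathrm {ext}}(t)+{\hat{V}}_{\mathrm {Har}}[n]+{\hat{V}}_{\mathrm {XC}}[n], \tag{2} \]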

contains the kinetic energy operator \({\hat{T}}\), the external potential \({\hat{V}}_{\mathrm {ext}}(t)\) due to nuclei and any external fields, the Hartree electron–electron potential \({\hat{V}}_{\mathrm {Har}}[n]\), and the exchange–correlation potential \({\hat{V}}_{\mathrm {XC}}[n]\). The electronic system may be coupled to nuclear motion through Ehrenfest dynamics.[15]

Explicit time dependence may arise within \({\hat{V}}_{\mathrm {ext}}(t)\) from an external perturbation such as a moving projectile ion or a dynamic electromagnetic field. Depending on the gauge choice, an external vector potential \({\varvec {A}}_{\mathrm {ext}}({\varvec {r}},t)\) may enter into the kinetic energy as \({\hat{T}}=\frac{1}{2}\left( -i\nabla + {\varvec {A}}_{\mathrm {ext}}({\varvec {r}},t)\right) ^2\). To apply a uniform external electric field to an infinite periodic system, it is often convenient to work in the velocity gauge, where the electric field is generated by the vector potential[16,17,18,19]

Alternatively, the length gauge, which instead involves the scalar potential \({\varvec {E}}_{\mathrm {ext}}(t)\cdot {\varvec {r}}\), can be appropriate for finite systems[20] or with the use of maximally localized Wannier functions.[21] Both capabilities have been implemented in the plane-wave TDDFT code Qbox/Qb@ll,[9,10,11] with options for static fields, delta kicks, and dynamic laser pulses.[19,21]

In the vector potential formulation, the vector potential is chosen such that its time derivative gives the proper electric field according to Eq. (3). For example, the delta kick is implemented by a step function in the vector potential. In practice this means the propagation is done with a constant vector potential whose amplitude is given by a desired intensity of the kick (as the initial condition is the ground state calculated without a vector potential). A laser field is simply simulated by an oscillatory electric field with constant or time-dependent amplitude. Since the dipole is not properly defined for extended systems, the polarization is obtained from the macroscopic current.[16] We use the usual definition of the quantum mechanical current
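The relation between the applied field and the vector potential can be illustrated with a minimal sketch. It assumes atomic units and the sign convention \({\varvec {E}}_{\mathrm {ext}}=-\partial {\varvec {A}}_{\mathrm {ext}}/\partial t\), which is consistent with the kinetic operator written above but is an assumption here; the function names, pulse shape, and numerical values are hypothetical and do not reflect the Qb@ll input interface.

```python
import numpy as np

def vector_potential_from_field(e_field, dt):
    """Return A(t) = -integral_0^t E(t') dt' using the trapezoidal rule.

    Assumes atomic units and the sign convention E = -dA/dt.
    """
    e_field = np.asarray(e_field, dtype=float)
    increments = 0.5 * (e_field[1:] + e_field[:-1]) * dt
    return -np.concatenate(([0.0], np.cumsum(increments)))

def delta_kick_potential(n_steps, kick_strength):
    """Delta kick in E(t) at t = 0: A(t) is a step function, constant for t > 0."""
    a = np.full(n_steps, -kick_strength, dtype=float)
    a[0] = 0.0  # the ground state is prepared without a vector potential
    return a

# Illustrative laser pulse: sin^2 envelope times a carrier (all values hypothetical).
dt = 0.05                       # time step in at. u.
t = np.arange(2000) * dt
e_laser = 0.01 * np.sin(np.pi * t / t[-1]) ** 2 * np.sin(0.057 * t)
a_laser = vector_potential_from_field(e_laser, dt)

# Consistency check: differentiating A(t) recovers the field away from the end points.
e_recovered = -np.gradient(a_laser, dt)
print(np.max(np.abs(e_recovered[1:-1] - e_laser[1:-1])))
```

The step-function form of the delta kick makes explicit why the propagation after \(t=0\) proceeds with a constant vector potential.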

which is not strictly correct when using non-local pseudopotentials.[18] However, the correction term is small for electric perturbations.[22]

Both the computational cost and accuracy of real-time TDDFT simulations are in large part governed by the numerical algorithm used to integrate the TDKS equations, Eq. (1). While a simple explicit integration scheme such as fourth-order Runge–Kutta (RK4) is suitable for modest size systems,[9] very large supercells and long-time propagation require higher accuracy[23] offered by time-reversible schemes, such as the enforced time-reversal symmetry (ETRS) method.[11,24] We specifically showed this for systems containing vacuum.[25] More efficient algorithms which reduce time to solution without sacrificing accuracy would accelerate the study of excited electron dynamics in materials and enable consideration of larger systems of practical interest over longer simulation time scales, including defect systems, material surfaces, and 2D heterostructures.

Below we briefly present our recent efforts toward a systematic assessment of numerous explicit time steppers and several variants of the ETRS approach. Interfacing Qbox/Qb@ll[9,11] with the PETSc numerical library[26] provided us with seamless access to a wide range of Runge–Kutta (RK)[27] and strong stability preserving (SSP) RK[28] methods. Each algorithm’s performance was assessed for a sodium dimer test system over a range of time step sizes \(\Delta t=0.01\) – 0.5 atomic units (at. u.), and computational cost was measured as the average wall time per unit of simulated time. After perturbing the initially ground-state system by slightly displacing the atoms away from their equilibrium positions, the electronic response was evolved for 100 time steps on a single processor. For the most promising methods, additional tests on a 112-atom graphene supercell confirmed the qualitative trends observed for the smaller test system.

Since exact time evolution should conserve both energy and charge, we compute an error metric given by the product of average errors in total energy E and net charge Q per simulation time:

where brackets denote time averages,

and \(t_{\text{f}}\) is the total time. This form was chosen to give a reasonable error measure for both linear and oscillatory error accumulation models. In particular, using this definition \(\delta Q\) has a reasonable long-time limit both when \(Q(t)-Q(0)\) can be modeled as \(\propto t\) and when \(Q(t)-Q(0)\) can be modeled as \(\propto \sin (\omega t)\).[29] The tolerable error level for a particular application depends on the system studied and the observable of interest. For example, electronic stopping power calculations in bulk materials[30,31,32,33,34,35,36,37,38,39] extract total energy differences typically about 5–50 Ha over the course of a \(\sim\)1-fs simulation, so \(\delta E \ll 0.1\) Ha/at. u. suffices and \(\delta Q\) is not important beyond its correlation with \(\delta E\). In contrast, simulations of ion-irradiated 2D materials[25,40,41,42,43,44,45] involve smaller energy transfers around 0.2–5 Ha and may additionally examine sensitive charge-transfer processes such as emission of 0.1–10 electrons into vacuum. Thus, these calculations require \(\delta Q\, \delta E \ll 10^{-5}\) e Ha/at. u.\(^2\)

Figure 1. Performance of 4th-order ETRS (black stars) compared to (a) all Runge–Kutta time steppers available in PETSc, (b) various strong stability preserving Runge–Kutta time steppers available in PETSc, and (c) other variants of ETRS and a naive application of PETSc’s Crank–Nicolson (CN). RKN[X] denotes an Nth-order Runge–Kutta scheme, where X is an additional PETSc identifier, typically the initials of the original developers. SSP(M, N) denotes an M-stage, Nth-order SSPRK method, and ETRSN denotes ETRS using Nth-order Taylor expansions to approximate exponentials.

From our data in Fig. 1 we find that ETRS generally outperforms all explicit time steppers tested: it achieves lower computational cost at an acceptable error level. The only competitive Runge–Kutta scheme is the fifth-order Bogacki–Shampine algorithm (RK5BS),[46] which is even more accurate than ETRS for small step sizes [see Fig. 1(a)]. However, while RK5BS becomes unstable for \(\Delta t\gtrsim 0.1\) at. u. in our sodium dimer simulations, ETRS maintains tolerable error rates for step sizes twice as large, allowing lower computational cost. Among the SSP methods tested, the 4th-order schemes are most successful but do not improve over ETRS’s accuracy, stability, or speed [see Fig. 1(b)]. Lower-order SSP schemes involving many (\(\ge 16\)) stages do allow larger step sizes than ETRS, but the expense associated with a large number of stages outweighs the increased stability. Overall, we find that ETRS achieves lowest time to solution. Recent work[47] also tested the Adams–Bashforth and Adams–Bashforth–Moulton classes of explicit time steppers, finding that these methods can outperform RK under certain conditions, but their performance has not yet been compared to ETRS.

Several possible schemes exist to approximate the exponentials of the Hamiltonian involved in ETRS.[24] Here, we use Taylor expansions for their simplicity and compare different orders in Fig. 1(c). Consistent with assertions made in Ref. 24 we find that 4th- or 5th-order Taylor expansions are optimal. A 6th-order expansion is less stable, while a 3rd-order expansion sacrifices accuracy without significantly reducing computational cost.
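The structure of an ETRS step with Taylor-expanded exponentials can be sketched on a toy model. The following is a minimal illustration, not the Qb@ll implementation: the tight-binding matrix, the density-dependent term standing in for \({\hat{H}}[n]\), and all parameter values are hypothetical, and the predictor used to estimate the Hamiltonian at \(t+\Delta t\) is one common choice among several.

```python
import numpy as np

def apply_taylor_exponential(h, psi, dt, order=4):
    """Approximate exp(-i*dt*h) @ psi with a Taylor series truncated at `order`."""
    result = psi.astype(complex)
    term = psi.astype(complex)
    for n in range(1, order + 1):
        term = (-1j * dt / n) * (h @ term)   # builds (-i*dt*h)^n / n! applied to psi
        result = result + term
    return result

def toy_hamiltonian(h0, psi, coupling):
    """Hypothetical density-dependent Hamiltonian standing in for H[n]."""
    return h0 + coupling * np.diag(np.abs(psi) ** 2)

def etrs_step(h0, psi, dt, coupling, order=4):
    h_now = toy_hamiltonian(h0, psi, coupling)
    # Predictor: full step with H(t) to estimate the density at t + dt.
    psi_pred = apply_taylor_exponential(h_now, psi, dt, order)
    h_next = toy_hamiltonian(h0, psi_pred, coupling)
    # Time-symmetric step: half step with H(t), then half step with H(t + dt).
    psi_half = apply_taylor_exponential(h_now, psi, 0.5 * dt, order)
    return apply_taylor_exponential(h_next, psi_half, 0.5 * dt, order)

# Eight-site chain with nearest-neighbor hopping; electron starts on the first site.
n_sites = 8
h0 = -(np.eye(n_sites, k=1) + np.eye(n_sites, k=-1))
psi = np.zeros(n_sites, dtype=complex)
psi[0] = 1.0

for _ in range(200):
    psi = etrs_step(h0, psi, dt=0.05, coupling=0.5)

norm_drift = abs(np.vdot(psi, psi).real - 1.0)
print(f"norm drift after 200 steps: {norm_drift:.2e}")
```

Because the truncated Taylor series is not exactly unitary, the norm drift grows with the step size, which is one way to see why the expansion order and \(\Delta t\) must be balanced.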

Other implicit methods may yet prove more efficient than ETRS. One promising option is Crank–Nicolson (CN), which some other TDDFT implementations successfully employ.[20,31,48,49,50,51,52] We find that CN is generally more accurate than ETRS [see Fig. 1(c)], perhaps thanks to the unitarity of the Padé form of the CN propagator in contrast to the truncated Taylor expansion used in the ETRS implementation. Although CN can maintain accuracy even for large time steps, i.e., stability restrictions do not limit this method, it involves a costly non-linear solve. The large number of \({\hat{H}}\phi\) evaluations performed by PETSc’s algorithm for this non-linear solve made CN prohibitively expensive in this work [see Fig. 1(c)]. However, further optimization, efficient preconditioners, or the use of predictor–corrector methods that obviate the non-linear solve[48,53] could alleviate this issue. Implicit schemes such as CN could be particularly advantageous for ultrasoft pseudopotentials or the projector-augmented wave method, where the left-hand side of the TDKS equations involves an overlap matrix acting on the time derivative of the pseudized orbitals.[51] Since explicit time-stepping schemes require the application of the inverse of this matrix at each time step, this complication narrows the prospective efficiency gap between explicit and implicit schemes. However, this work used norm-conserving pseudopotentials[54] and thus did not benefit from CN.
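The unitarity argument can be made concrete with a small sketch that compares the Padé (Crank–Nicolson) form with a 4th-order Taylor step. It assumes a fixed, Hermitian toy Hamiltonian, so the solve is linear; the density dependence of the TDKS Hamiltonian, which makes the solve non-linear in practice, is deliberately left out. The matrix and the step size are hypothetical.

```python
import numpy as np

def crank_nicolson_step(h, psi, dt):
    """Solve (1 + i*dt/2*h) psi_new = (1 - i*dt/2*h) psi for a fixed Hermitian h."""
    identity = np.eye(h.shape[0])
    return np.linalg.solve(identity + 0.5j * dt * h,
                           (identity - 0.5j * dt * h) @ psi)

def taylor4_step(h, psi, dt):
    """Apply the 4th-order truncated Taylor expansion of exp(-i*dt*h) to psi."""
    result, term = psi.copy(), psi.copy()
    for n in range(1, 5):
        term = (-1j * dt / n) * (h @ term)
        result = result + term
    return result

n_sites = 8
h = -(np.eye(n_sites, k=1) + np.eye(n_sites, k=-1))
psi_cn = np.zeros(n_sites, dtype=complex)
psi_cn[0] = 1.0
psi_taylor = psi_cn.copy()

dt = 2.0  # deliberately large step to probe stability
for _ in range(50):
    psi_cn = crank_nicolson_step(h, psi_cn, dt)
    psi_taylor = taylor4_step(h, psi_taylor, dt)

cn_norm = np.linalg.norm(psi_cn)
taylor_norm = np.linalg.norm(psi_taylor)
print(f"CN norm: {cn_norm:.12f}, Taylor norm: {taylor_norm:.3e}")
```

In this toy setting the Crank–Nicolson norm stays at unity for any step size, whereas the truncated Taylor step diverges once \(\Delta t\) times the largest eigenvalue leaves its stability region.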

Explicit RK methods cannot conserve energy, and some of the least expensive implicit RK methods, such as CN, do not do so in general either. A direct way to reduce errors in invariant quantities represented by inner product norms is to control the time step: one can reduce the time step adaptively if the energy error exceeds a certain level. Moreover, a promising strategy that also applies to explicit methods is to use a time step adaptation that adjusts the step length such that the energy is conserved exactly in finite precision. These methods are referred to as relaxation RK and rely on modifying the prescribed time step (typically reducing it by a small fraction) so that the solution at each of these modified steps preserves energy.[55,56] Explicit methods are conditionally stable; nevertheless, their stability regions can be optimized for a specific eigenvalue portrait, which is a promising strategy to improve their performance. Furthermore, new machine learning developments in neural ODEs may provide new ways to accelerate the time-stepping process.[57]

Finally, the parallel transport gauge approach[58] applies a unitary transformation to the Kohn–Sham orbitals to instead solve for slower varying orbitals that reproduce the same electron density but introduce an additional term in the TDKS equations. This promising method can be combined with an efficient time stepper to produce speedups of 5–50 over standard RK4 for molecules,[58] solids containing up to 1024 atoms,[58] and mixed states in model systems.[59]

Complex absorbing potential for secondary electron emission

After examining the computational cost associated with real-time propagation in the previous section, we now explore the need for a large vacuum region as part of the simulation cell when studying electron emission, e.g., from surfaces or two-dimensional (2D) materials. When using periodic boundary conditions, vacuum lengths of \(150~a_0\) or more are necessary to prevent the unphysical interaction of the electrons emitted from both sides of the 2D material across the boundary of the simulation cell, resulting in a high computational demand.[43] To address this problem, absorbing boundaries[60] are frequently employed to emulate open boundary conditions. Absorbing boundaries based on a complex absorbing potential (CAP)[61] alter the Hamiltonian, Eq. (2), by adding an artificial imaginary potential in a defined region of the simulation cell, resulting in a non-Hermitian Hamiltonian and a non-unitary time evolution operator. This approach has been successfully used in simulating the real-time dynamics of wavefunctions of 2D materials, including secondary electron emission due to electron irradiation[62] and angle-resolved photoemission spectra.[60]

We implemented an absorbing potential into the Qb@ll[9,11] code that follows the form

where W defines the maximum of the CAP and \(z_{\text{s}}\) and \(d_{\text{z}}\) are the position of the front boundary and the half width of the CAP.

Figure 2. Total number of electrons emitted into vacuum after a channeling proton[43] with a velocity of 1.79 at. u. impacts graphene. When using a CAP, smaller vacuum sizes suffice for convergence.

Here, we compare to our previous work on secondary electron emission from graphene under proton irradiation[43] and demonstrate that a CAP can significantly reduce finite size effects, leading to an acceleration of the simulation by reducing the vacuum size. We use the same simulation cell and computational parameters as described in Ref. 43. The target graphene is placed at the center of the simulation cell, at \(z=0\) on the \(x{- }y\) plane. Emitted electrons in vacuum are determined by integrating the electron density over a region farther than \(10.5\,{a_0}\) from the graphene. We assess finite size effects for different vacuum sizes along the direction of proton travel for a channeling proton with 1.79 at. u. of velocity. Following Ref. 43 we treat the maximum of the emitted electron curves in Fig. 2 as the total number of emitted electrons.

Comparing the resulting number of total emitted electrons for periodic boundary conditions, the data in Fig. 2 show a difference of 3% between 150 \({a_0}\) and 250 \({a_0}\) of vacuum, whereas the difference is 8.22% between 100 \({a_0}\) and 250 \({a_0}\) of vacuum. This shows that a large vacuum size is needed to obtain converged results. For comparison, a CAP of the form of Eq. (7) is placed at the boundary of the simulation cell. We set \(W=15\,\mathrm {Ha}\), \(z_{\text{s}}=40\,{a_0}\), and \(d_{\text{z}}=10\,{a_0}\) for 100 \({a_0}\) of vacuum and \(W=20\,\mathrm {Ha}\), \(z_{\text{s}}=63.75\,{a_0}\), and \(d_{\text{z}}=11.25\,{a_0}\) for 150 \({a_0}\) of vacuum. With these parameters for the CAP, the difference between emitted electrons for 100 \({a_0}\) and 150 \({a_0}\) of vacuum is 1.14%. The reduced finite size error with a CAP allows using smaller vacuum regions of 100 \({a_0}\) or less, instead of 150 \({a_0}\), reducing the cost per iteration from 61.44 core hours to 40.96 core hours when running on ALCF Theta, i.e., a 33% reduction in cost or a 1.5-fold speedup. In general, depending on the targeted problem, a careful convergence test of the vacuum size is required for 2D systems.
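To visualize how the three CAP parameters act, the sketch below evaluates an absorbing profile on a grid. The \(\sin ^2\) ramp is an assumption: it is a common choice for such potentials and may differ from the form implemented in Qb@ll. With this assumed shape, the potential rises from zero at \(z_{\text{s}}\) to its maximum \(W\) at \(z_{\text{s}}+d_{\text{z}}\), which for the parameters quoted above is 50 \({a_0}\) and 75 \({a_0}\), respectively. The function name is hypothetical.

```python
import numpy as np

def cap_profile(z, w_max, z_start, half_width):
    """Purely imaginary absorbing potential with an assumed sin^2 ramp.

    The potential is nonzero on [z_start, z_start + 2*half_width] and reaches
    -i*w_max at z_start + half_width. The functional form is an assumption.
    """
    z = np.asarray(z, dtype=float)
    inside = (z >= z_start) & (z <= z_start + 2.0 * half_width)
    phase = 0.5 * np.pi * (z - z_start) / half_width
    return np.where(inside, -1j * w_max * np.sin(phase) ** 2, 0.0 + 0.0j)

# Parameters quoted in the text for 100 a0 of vacuum (W in Ha, lengths in a0).
z = np.linspace(0.0, 50.0, 501)
cap = cap_profile(z, w_max=15.0, z_start=40.0, half_width=10.0)

print("potential at z = 30 a0:", cap[300])
print("potential at z = 50 a0:", cap[-1])
```

A negative imaginary potential removes norm from orbitals that enter the absorbing region, which is what suppresses the spurious interaction of emitted electrons across the periodic boundary.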

Quantum mechanical projectile: electron wave packet

In the previous section and in most of the literature on electronic stopping, the excitation mechanism is described using a classical projectile, i.e., a time-dependent Coulomb potential moving at constant velocity. It is currently unclear to what extent this approximation becomes unreliable for light projectiles, such as protons or electrons. Electrons are much lighter than protons or heavy-ion projectiles, and the electronic wavelength can reach the scale of inter-atomic distances. Hence, the error incurred by using a classical Coulomb potential to describe electron projectiles is expected to be more severe. The breakdown of this approximation has not yet been studied thoroughly and systematically.

Treating the incident electron fully quantum mechanically is, hence, a promising alternative. Following the work by Tsubonoya et al.[63] the initial incident electron can be modeled as a Gaussian-shaped wave packet at the start of the simulation,

where d, \({\varvec {b}}\), and \({\varvec {k}}\) are the parameters defining the spread, the center location, and the wave vector of the wave packet, respectively. The wave vector \({\varvec {k}}\) sets the group velocity of the incident electron and is the single parameter that controls its kinetic energy. The time evolution of this wave packet is described by the time-dependent Kohn–Sham equations, Eq. (1), on the same footing as the rest of the system. Thus, the time-dependent Kohn–Sham orbitals include all electrons in the target material and the incident electron of the wave packet. The electron density is then the sum of the electron density of the target material and the electron density of the wave packet,

\[ n({\varvec {r}},t)=\sum _{\text{j}} f_{\text{j}}\left| \psi _{\text{j}}({\varvec {r}},t)\right| ^2+\left| \psi _{\mathrm {wp}}({\varvec {r}},t)\right| ^2, \tag{9} \]

where the sum runs over the orbitals of the target material and \(\psi _{\mathrm {wp}}\) denotes the time-evolved wave-packet orbital.

In the following we characterize the convergence behavior of the Gaussian wave packet with respect to plane-wave cutoff energy (see Fig. 3). We simulate Gaussian wave packets with different velocities and find that high cutoff values are necessary to converge fast wave packets, possibly leading to a limitation of these simulations. We also note that the wave packet itself spreads over time, rendering comparison to the classical electron approximation challenging. Finally, the computation of electronic stopping power S is complicated by the fact that the projectile, if treated quantum mechanically, is part of the electronic system and the approach of computing the stopping power from the increase dE/dx of the electronic total energy is no longer applicable. Solving this problem remains an open question for future work.
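The link between wave-packet velocity and required cutoff can be illustrated with a one-dimensional sketch. It assumes a Gaussian envelope \(\exp (-x^2/2d^2)\) multiplied by a plane wave, which may differ in normalization from the form used in the simulations, and it measures which fraction of the packet's norm lies below a kinetic energy cutoff \(G^2/2\) (in Ha). The function name and all values are hypothetical.

```python
import numpy as np

def captured_norm(k0, d, e_cut_ha, length=200.0, n_grid=4096):
    """Fraction of a 1D Gaussian wave packet's norm carried by plane waves
    with kinetic energy G^2/2 <= e_cut_ha (atomic units; 1 Ha = 2 Ry)."""
    x = np.linspace(-length / 2, length / 2, n_grid, endpoint=False)
    psi = np.exp(-x**2 / (2.0 * d**2)) * np.exp(1j * k0 * x)
    g = 2.0 * np.pi * np.fft.fftfreq(n_grid, d=length / n_grid)
    weights = np.abs(np.fft.fft(psi)) ** 2
    weights /= weights.sum()
    return weights[0.5 * g**2 <= e_cut_ha].sum()

# A slow packet (k0 = 1) is well represented at a 2 Ha cutoff ...
print(captured_norm(k0=1.0, d=2.0, e_cut_ha=2.0))
# ... whereas a fast packet (k0 = 3, kinetic energy 4.5 Ha) is not,
print(captured_norm(k0=3.0, d=2.0, e_cut_ha=2.0))
# and needs a cutoff well above its kinetic energy to capture the spread in G.
print(captured_norm(k0=3.0, d=2.0, e_cut_ha=8.0))
```

The momentum distribution is centered at \(k\) with a width set by \(1/d\), so the cutoff must cover both the mean kinetic energy and this spread; narrower packets therefore raise the requirement further.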

Real-time electron dynamics in aluminum

Figure 4. Electron dynamics computed for Al with fixed ions using real-time TDDFT. (a) Occupation numbers of the different Kohn–Sham states, averaged over 315 fs of simulation time, as a function of their Kohn–Sham energy. “000 fs” shows the average taken from t = 0 fs to t = 315 fs. (b) Single snapshots at the indicated simulation times for high-energy eigenstates. Semi-transparent gray arrows guide the eye to how occupation numbers evolve over time.

In the following, we explore using real-time TDDFT to simulate electronic thermalization in metals, which is generally assumed to be fast, on the order of 10–100 fs. Previous studies applying the GW method to compute the self-energy for Al support this assumption: the lifetimes mediated by electron–electron scattering are found to be a few tens of fs at energies further away from the Fermi energy and on the order of 100 fs near the Fermi energy.[64,65] Given these short time scales, real-time TDDFT can in principle be used to perform statistical ensemble sampling of an electronic system in internal thermodynamic equilibrium and to calculate expectation values of an observable under different conditions.[66] This is similar to Mermin DFT,[67] but such a real-time approach can potentially capture additional dynamic effects using the same exchange–correlation functional.

To this end, Modine et al.[66] previously explored the idea of performing statistical mechanics on electronic systems, in analogy to simulations of statistical thermodynamics using classical molecular dynamics. As a first step toward this idea, they initiated a 100-fs RT-TDDFT simulation using adiabatic LDA for an excited electronic system of Al with fixed ions. They showed that although the distribution of the time-averaged occupation numbers is Fermi like, it seems to decrease more sharply near the Fermi energy and takes longer to reach asymptotic values.[66] To further understand this behavior, we performed significantly longer RT-TDDFT simulations (> 1 ps) for the same Al system. We used the same plane-wave cutoff energy of 20 Ry, \(\Gamma\)-only Brillouin zone sampling, and the same 32-atom cell. In our simulations, this 32-atom cell is either an ideal crystal or a snapshot of a molecular dynamics simulation with a temperature of 7900 K.

In Fig. 4(a) we show the resulting long-term electron dynamics in Al with fixed ions, simulated with real-time TDDFT up to \(\sim 6\) ps. Following the approach by Modine et al.[66] the initial wavefunction was prepared in such a way that the distribution of its occupation numbers is close to the Fermi distribution at a given temperature. For Fig. 4 we used 7900 K and at \(t=0\), we can see that the dark blue dots loosely follow the Fermi distribution of the same temperature. This is, by construction, expected for initial states that are thermal states.[66] We would then expect the occupation numbers to fluctuate around an average that corresponds to this Fermi distribution.
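For orientation, the target occupations of such a thermal state follow from the Fermi distribution, which can be evaluated with a few lines. This is a minimal sketch: the spin degeneracy of 2 matches occupation numbers between 0 and 2, and the chemical potential is set to zero purely for illustration.

```python
import math

K_B_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K

def fermi_occupation(energy_ev, mu_ev, temperature_k, spin_degeneracy=2.0):
    """Fermi-Dirac occupation of a spin-degenerate Kohn-Sham state."""
    kt = K_B_EV_PER_K * temperature_k
    return spin_degeneracy / (math.exp((energy_ev - mu_ev) / kt) + 1.0)

temperature = 7900.0
print(f"k_B T = {K_B_EV_PER_K * temperature:.4f} eV")
for energy in (-2.0, -1.0, 0.0, 1.0, 2.0):  # energies relative to mu, in eV
    print(energy, round(fermi_occupation(energy, 0.0, temperature), 4))
```

At 7900 K the thermal energy is roughly 0.68 eV, so states within a few eV of the chemical potential carry fractional occupations.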

In contrast to this expectation, Fig. 4(a) clearly shows that the distribution deviates more and more from the initial Fermi distribution as time progresses, as indicated by the semi-transparent gray arrows. If we focus on the dynamics near the Fermi energy, the drop in occupations at the Fermi surface becomes steeper and steeper, which is usually associated with a lower electronic temperature. However, the total energy of the system is conserved. To analyze this further, we also investigate high-energy eigenstates in Fig. 4(b), showing that their occupation numbers grow over time. This indicates that electrons are promoted to higher energy states, which provides a mechanism for energy conservation.

Since scattering of electrons into higher energy states during electronic thermalization is counter-intuitive, we first thoroughly examine the effect of the initial wavefunction and several numerical parameters. We ensured that over the simulation time of about 6.3 ps, the total energy of the system remains conserved within acceptable numerical error of \(<0.1\) meV/atom, suggesting that the numerical time integrator remains stable for the whole simulation. We also tested that this behavior is independent of the cell size by comparing the dynamics of occupation numbers of high-energy eigenstates in the 32-atom cell to a 108-atom cell, finding again a high-energy tail emerging over time. Furthermore, we excluded the symmetry of the lattice as a factor by comparing the dynamics for relaxed (\(T=0\) K) atomic positions vs. a \(T=7900\) K molecular dynamics snapshot. In addition, we excluded an influence of the particular real-time TDDFT implementation by comparing the Qb@ll and Socorro[68] codes.

The occupation number of eigenstate i at simulation time t, \(f_{i}(t)\), is defined as

where the reference states \(\phi _{i}\) can be either the DFT ground state or instantaneous adiabatic eigenstates of the time-dependent KS Hamiltonian. An influence of the reference states used to compute the occupation number was excluded by comparing the adiabatic ground state and the eigenstates of the instantaneous TDKS Hamiltonian for projection. Finally, we also compared different approaches of creating the initial electronic excitation (i) using the above-described thermal state,[66] (ii) promoting one electron from valence to conduction band by changing the Kohn–Sham occupation number, and (iii) imposing a vigorous time-dependent displacement of randomly selected atoms. In all cases we observed the same behavior shown in Fig. 4.
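The projection onto reference states can be sketched as follows, assuming the common definition \(f_{i}(t)=\sum _{j} f_{j}\left| \langle \phi _{i}|\psi _{j}(t)\rangle \right| ^2\) with orthonormal states stored as matrix columns. This form and the function name are assumptions made for illustration; in a plane-wave code the overlaps would be computed from the plane-wave coefficients.

```python
import numpy as np

def projected_occupations(reference, orbitals, occupations):
    """Project occupied time-dependent orbitals onto reference states.

    reference:   (n_basis, n_ref) array, columns are orthonormal reference states
    orbitals:    (n_basis, n_orb) array, columns are time-dependent orbitals
    occupations: (n_orb,) array with the fixed occupations f_j
    """
    overlaps = reference.conj().T @ orbitals      # <phi_i | psi_j>
    return (np.abs(overlaps) ** 2) @ occupations  # sum_j f_j |<phi_i|psi_j>|^2

# Toy example: two orbitals rotated by 30 degrees within the first two reference states.
theta = np.pi / 6
reference = np.eye(4, dtype=complex)
orbitals = np.zeros((4, 2), dtype=complex)
orbitals[:, 0] = [np.cos(theta), np.sin(theta), 0.0, 0.0]
orbitals[:, 1] = [-np.sin(theta), np.cos(theta), 0.0, 0.0]
occupations = np.array([2.0, 0.0])

f = projected_occupations(reference, orbitals, occupations)
print(f, f.sum())
```

When the reference set is complete, the projected occupations sum to the total number of electrons, which provides a simple sanity check.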

Figure 5. Occupation number as a function of simulation time for selected eigenstates. These otherwise randomly chosen eigenstates have initial occupation numbers of roughly 0.0, 0.5, 1.0, 1.5, and 2.0. Red-dashed curves show the fit to the exponential \(a+b\cdot \exp (-t/c)\) used to extract the characteristic time scale. Gray-dashed horizontal lines indicate the expected occupation number for a given eigenstate under the Fermi distribution. Text labels give the band index (BI) and the energy difference from the Fermi energy at \(T=7900\) K. We found no clear connection between the occupation number dynamics and the energy of the state.

Next, we extract a characteristic relaxation time by fitting this data to an exponential decay. We randomly select a few eigenstates across the energy spectrum with initial occupations of about 0.0, 0.5, 1.0, 1.5, and 2.0 and show their dynamics in Fig. 5. For the following discussion, we refer to them by their band index (BI = 0, 39, 47, 48, 53, and 95). Figure 5 shows that BI = 48, which is above the Fermi energy, couples with BI = 47, which is below the Fermi energy, since their dynamics show the same oscillation frequency but are in antiphase. The frequency of these oscillations is about 8–10 THz, which corresponds to half the energy difference between these states. We conclude that the observed oscillations are associated with TDDFT electron–hole excitation energies. The expectation that temperature-induced excitations are most dominant near the Fermi energy is consistent with our observation of such oscillations only between electrons and holes near the Fermi energy.

Next, we notice that all states evolve away from the occupation number expected based on a Fermi distribution of \(T=7900\) K (gray-dashed horizontal lines in Fig. 5). We also notice that the dynamics of the BI = 95 state is not monotonic: the occupation number changes in opposite directions before and after a turning point at around 700 fs. In addition, fitting the data before 700 fs leads to a characteristic time much shorter than the fit to the data after 700 fs. Such non-monotonic behavior is not limited to eigenstates with large BI but is commonly observed for other eigenstates. For these, we only extract the characteristic time for the second part of the dynamics (see the red-dashed curve for the BI = 95 example in Fig. 5). From the extracted characteristic times we found that BI = 95, which is far from the Fermi energy, relaxes more slowly than BI = 48, which is near the Fermi energy. This behavior is different from Fermi liquid theory, which predicts that the lifetime of an eigenstate is longer when its energy is close to the Fermi energy.[69] For this reason, and because the excited Al system evolves away from a Fermi distribution, the applicability of this relation between Fermi level and lifetime remains unclear.
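Extracting the characteristic time from \(a+b\cdot \exp (-t/c)\) can be sketched with a simple variable-projection fit: for each trial \(c\) the parameters \(a\) and \(b\) follow from linear least squares, and the \(c\) with the smallest residual is kept. This is one possible procedure, not necessarily the one used for Fig. 5, and the data below are synthetic. For non-monotonic states, only the samples after the turning point would be passed in.

```python
import numpy as np

def fit_exponential(t, y, c_grid):
    """Fit y(t) = a + b*exp(-t/c) by scanning c and solving for (a, b) linearly."""
    best = None
    for c in c_grid:
        design = np.column_stack([np.ones_like(t), np.exp(-t / c)])
        coeffs, *_ = np.linalg.lstsq(design, y, rcond=None)
        residual = np.sum((design @ coeffs - y) ** 2)
        if best is None or residual < best[0]:
            best = (residual, coeffs[0], coeffs[1], c)
    return float(best[1]), float(best[2]), float(best[3])

# Synthetic occupation-number trace (times in fs, values hypothetical).
t = np.linspace(0.0, 3000.0, 301)
y = 0.8 + 0.4 * np.exp(-t / 250.0)

a, b, c = fit_exponential(t, y, c_grid=np.arange(50.0, 1001.0, 10.0))
print(f"a = {a:.3f}, b = {b:.3f}, characteristic time c = {c:.0f} fs")
```

Scanning \(c\) on a grid avoids the sensitivity of non-linear optimizers to the initial guess, at the price of a resolution limited by the grid spacing.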

Figure 6. Time dynamics of the \(\sigma _{xx}\) component of the stress tensor, after starting from a thermal state generated with a Fermi temperature of 7900 K. Stresses are sampled sparsely across the whole simulation and, at each sampled time point, the stress values of the subsequent 10 fs are collected to compute the average (solid circles) and standard deviation (error bars). The red curve shows a fit to \(\sigma _{xx}=a+b\cdot \exp (-t/c)\) used to extract the characteristic time scale.

This is an important, albeit negative, result that points to the inability of a theory such as TDDFT (at least in its current form) to thermalize electrons. One potential shortcoming of this analysis is that, from a fundamental point of view, the Kohn–Sham occupation number is not an observable in TDDFT, although that would in itself be an illuminating explanation for the apparent lack of thermalization.

To address this concern, we also analyze stress, which is a functional of the time-dependent electron charge density. In Fig. 6, we show the real-time dynamics of the stress on the simulation cell after excitation for the \(\sigma _{xx}\) component of the stress tensor. Fitting to this data yields a characteristic time of 268 fs. The \(\sigma _{yy}\) and \(\sigma _{zz}\) components have significantly different characteristic times of 889 and 691 fs, respectively, but their dynamics are also essentially monotonic. Since a set of independent complex numbers with random phases and magnitudes is drawn from a distribution to construct the initial thermal state,[66] the stress and its dynamics are not expected to be isotropic for any given thermal state, but the anisotropy would average out over many thermal states at the same temperature. We note that these time scales are in the same range as those of the dynamics of the eigenstates with monotonic behavior. Hence, based on the dynamics of occupation numbers and stress, we conclude that equilibrium is reached over a time scale of about 1 ps. For comparison, at energies \(E-E_{\text{F}}\) of about 1.0 eV above the Fermi level, experiments report electron lifetimes around 15 fs.[70] Computational results include around 30 fs in Ref. 69, 20 fs from the \(GW+T\) method,[65] and 70 fs in Ref. 64. There is an unresolved discrepancy between Refs. 64 and 69, but the literature agrees that Al qualitatively follows Fermi liquid theory, with band structure effects only giving rise to small quantitative differences. Not only is the relaxation time we obtain from RT-TDDFT significantly longer than these results, but we also find that the system evolves into an unknown distribution with a lower Fermi temperature near the Fermi level and with high-energy tails, compared to the initial Fermi distribution.

In order to address these discrepancies, it is necessary to incorporate electron–electron and electron–phonon decay channels into the RT-TDDFT Ehrenfest molecular dynamics framework. Electron–electron scattering mechanisms should be treated on the level of the exchange–correlation functional and we view hybrid functionals and overcoming the adiabatic approximation typically used in TDDFT as possible paths forward. In addition, efforts to better describe the energy decay channels from excited electrons into the system of ions are appearing in the literature.[71] We note that these difficulties in modeling relaxation times within RT-TDDFT are exacerbated in strongly correlated systems, requiring adequate approximations to exchange and correlation.

Convergence of \(G_0W_0\) calculations with increasing \({\varvec {k}}\)-point sampling. The inset shows the results of the 10 \(\times\) 10 \(\times\) 10 \({\varvec {k}}\)-point calculations with three different \(\eta\) values. The energy range of the inset is the same energy range used for the electron–electron lifetime fit (see text).

Next, we pursue an alternative route to compute the electron–electron scattering lifetime from first principles, based on equating the scattering term to the imaginary part of the electronic self-energy, \(\Gamma _{n{{\textbf {k}}}}=-2 {\text {Im}}\left\{ \Sigma (\varepsilon _{n{{\textbf {k}}}})\right\} /\hbar\).[72] Computing the imaginary part of the self-energy within the GW framework provides lifetimes, using a procedure described by Ladstädter et al.[64] Here we use a computationally more efficient approach by fitting \(-2{\text {Im}} \left\{ \Sigma (\varepsilon _{n{{\textbf {k}}}})\right\}\) to a scattering rate of the form \(\alpha (\varepsilon _{n{{\textbf {k}}}}-E_{\text{F}})^2\), predicted by Landau’s theory of the Fermi liquid.[72] We compute the imaginary part of the self-energy by performing a \(G_0W_0\) calculation where the complex shift \(\eta\) of the Kramers–Kronig transformation is set to a value much smaller than what is used in typical GW band structure calculations. This allows us to accurately resolve the imaginary part of the self-energy near the Fermi energy (see inset of Fig. 7). Figure 7 also illustrates the \({\varvec {k}}\)-point grid convergence tests of our \(G_0W_0\) calculations.
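
The one-parameter fit described above can be sketched as follows. For a model \(y=\alpha x\) with \(x=(\varepsilon -E_{\text{F}})^2\) and \(y=-2{\text {Im}}\,\Sigma\), the least-squares solution is \(\alpha =\sum x y/\sum x^2\). The function name `fit_fermi_liquid_alpha` and the input data are hypothetical; the data are generated synthetically from the quadratic form rather than taken from a \(G_0W_0\) calculation, and the code is only a sketch of the fitting step, not the implementation used by the authors.

```python
def fit_fermi_liquid_alpha(energies_ev, rates_ev, fermi_energy_ev=0.0):
    """Least-squares alpha for rate = alpha * (E - E_F)^2.

    energies_ev : quasiparticle energies in eV
    rates_ev    : corresponding values of -2 Im Sigma in eV
    Returns alpha in 1/eV.
    """
    numerator = 0.0
    denominator = 0.0
    for energy, rate in zip(energies_ev, rates_ev):
        x = (energy - fermi_energy_ev) ** 2  # regressor of the linear model
        numerator += x * rate
        denominator += x * x
    if denominator == 0.0:
        raise ValueError("all energies coincide with the Fermi energy")
    return numerator / denominator


# Synthetic input on a 0.5 eV grid up to 18 eV (hypothetical, noise-free).
alpha_reference = 0.0116  # 1/eV, the average value quoted in the text
energies = [0.5 * i for i in range(1, 37)]
rates = [alpha_reference * e ** 2 for e in energies]

alpha_fit = fit_fermi_liquid_alpha(energies, rates)
print(f"fitted alpha = {alpha_fit:.4f} 1/eV")
```

Because the model is linear in \(\alpha\), no iterative optimizer is needed; the quadratic weighting means that high-energy points dominate the fit, which is one reason the choice of energy window matters.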

Electron–electron lifetimes obtained from the fit to Landau’s theory of the Fermi liquid for the first conduction band at the \(\Gamma\) point, computed using a 10 \(\times\) 10 \(\times\) 10 \({\varvec {k}}\)-point grid and \(\eta =0.005\) eV. Data points were calculated by Ladstädter et al.[64] The error bars show the standard deviation for relaxation times from different \({\varvec {k}}\)-point grids and \(\eta\) values.

Next, we fit the \(-2{\text {Im}} \left\{ \Sigma (\varepsilon _{n{{\textbf {k}}}})\right\}\) values for the first conduction band at the \(\Gamma\) point, computed using a 10 \(\times\) 10 \(\times\) 10 \({\varvec {k}}\)-point grid and the smallest value of \(\eta =0.005\) eV, over an energy range between 0 and 18 eV to the form from Landau’s theory of the Fermi liquid. The value of \(\alpha\) from this fit gives the hot electron lifetimes as

\[\tau _{n{{\textbf {k}}}}=\frac{1}{\Gamma _{n{{\textbf {k}}}}}=\frac{\hbar }{\alpha (\varepsilon _{n{{\textbf {k}}}}-E_{\text{F}})^2},\]

which are plotted in Fig. 8. We include standard deviation error bars at integer and half-integer energy values, which compare the lifetimes of the 10 \(\times\) 10 \(\times\) 10 and \(\eta =0.005\) eV case to the lifetimes computed from 8 \(\times\) 8 \(\times\) 8 \({\varvec {k}}\)-point grids with \(\eta\) values of 0.005, 0.01, and 0.04 eV and to lifetimes from 10 \(\times\) 10 \(\times\) 10 \({\varvec {k}}\)-point grids with \(\eta\) values of 0.01 and 0.04 eV. The average of the \(\alpha\) values from this set of calculations is 0.0116 (eV)\(^{-1}\). We consider the 10 \(\times\) 10 \(\times\) 10 \({\varvec {k}}\)-point grid with \(\eta =0.005\) eV sufficiently converged, because the error bars are small and the relative error of \(\alpha\) is 3.4\(\%\) with respect to the average \(\alpha\) value. Figure 8 shows that our calculated electron–electron lifetimes from the Fermi liquid fit match the lifetimes from the full GW method[64] well, justifying the future use of this method. In particular, we note that this approach reduces the computational cost compared to full GW simulations, possibly extending its range of applicability into the high-excitation or warm dense matter regime. Our calculation predicts that electrons close to the Fermi energy have lifetimes on the order of a few hundred femtoseconds and larger, whereas electrons at energies further away from the Fermi energy have lifetimes on the order of tens of femtoseconds and smaller.
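
To make these orders of magnitude concrete, the short sketch below evaluates the lifetime that follows from inverting the scattering rate \(\Gamma =\alpha (\varepsilon -E_{\text{F}})^2/\hbar\), using the average \(\alpha =0.0116\) (eV)\(^{-1}\) quoted above. The function name `hot_electron_lifetime_fs` and the chosen sample energies are ours; this is an illustration of the arithmetic, not a reproduction of the data in Fig. 8.

```python
import math

HBAR_EV_FS = 0.6582119569  # reduced Planck constant in eV*fs


def hot_electron_lifetime_fs(energy_above_fermi_ev, alpha_per_ev):
    """Lifetime tau = hbar / (alpha * (E - E_F)^2) in femtoseconds.

    The rate vanishes at the Fermi energy, so the lifetime diverges there.
    """
    rate_ev = alpha_per_ev * energy_above_fermi_ev ** 2
    if rate_ev == 0.0:
        return math.inf
    return HBAR_EV_FS / rate_ev


alpha_average = 0.0116  # 1/eV, average value quoted in the text

# Sample energies (eV above the Fermi level) chosen for illustration only.
for delta_e in (0.5, 1.0, 2.0, 4.0):
    tau = hot_electron_lifetime_fs(delta_e, alpha_average)
    print(f"E - E_F = {delta_e:3.1f} eV -> tau = {tau:7.2f} fs")
```

The inverse-square dependence means that doubling the excitation energy shortens the lifetime by a factor of four, which is why lifetimes span from hundreds of femtoseconds near the Fermi level to a few femtoseconds at several eV.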

The relaxation times from the GW electronic self-energy are about one order of magnitude smaller than our results from TDDFT. Since we have excluded numerical convergence parameters and finite size effects as possible reasons, we tentatively attribute the unexpected behavior observed in our TDDFT simulations to the limitations of ALDA, which is local in time and space. The limitations of ALDA for electron–electron scattering were studied before for 1D model systems,[73] and ALDA is expected to underestimate the scattering probability. In addition, even the “exact” adiabatic functional lacks the “peak and valley” features observed in truly exact exchange–correlation potentials and gives rise to spurious oscillations in charge density.[73] More generally, an explanation for the lack of electron–electron thermalization could be related to the lack of explicit static correlation in the theory, similar to the problem of electron–ion thermalization.[74] One could imagine that the promotion of electrons into higher energy states in a 3D metal might be analogous to the charge oscillations observed in the 1D model. However, the actual limit of adiabatic semi-local functionals like ALDA remains unclear for condensed systems. Future investigations using XC functionals that address self-interaction errors (see e.g., Sec. “Exchange and correlation”) or non-adiabatic memory effects (e.g., the Vignale–Kohn functional[75,76]) are needed. However, such computationally intensive simulations remain impractical at the time of writing. We also note that other considerations such as the choice of pseudopotentials or convergence with respect to Brillouin zone sampling could potentially affect the results to a minor extent.

Exchange and correlation

Local or semi-local approximations of exchange and correlation (XC) are most prevalent in applications of TDDFT to the dynamics of interacting electrons. This typically means using the adiabatic local-density approximation (ALDA) or its generalized gradient approximation (GGA) extension, but more recent works[77,78] also employ modern meta-GGA approximations such as the strongly constrained and appropriately normed (SCAN) functional[79,80] within RT-TDDFT. More accurate and computationally tractable functionals are always desirable; specifically, the influence of long-range corrections, self-interaction errors, and the adiabatic approximation remains unexplored, e.g., for electron capture and emission processes. First-principles simulations are particularly likely to provide urgently needed insight when applied to complex or heterogeneous systems such as molecules adsorbed at semiconductor surfaces. For these, it becomes important to examine and advance the extent to which XC functionals can correctly model long-range charge transfer and exciton formation/dissociation in RT-TDDFT.

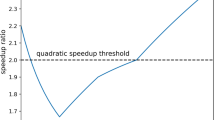

As a practical approach to move forward, recent progress includes using hybrid XC functionals within RT-TDDFT.[81] However, a plane-wave implementation typically carries a computational cost about two orders of magnitude higher than that of semi-local functionals,[77] rendering applications to complex, extended systems challenging. The dominant cost of these calculations is the evaluation of exchange integrals. To alleviate this problem, some of us pursued the propagation of maximally localized Wannier functions (MLWFs)[82] in RT-TDDFT, significantly reducing the computational cost of evaluating exact exchange integrals.[77] MLWFs are propagated by[21]

where the maximal localization operator \({\hat{A}}^{ML}\) is an exponential of a unitary matrix that minimizes the spread of the propagating Wannier functions, \({\text {min}}\left\{ \sum _{n}^{N}\left[ \left\langle w_{n}\left| \hat{{\varvec {r}}}^{2}\right| w_{n}\right\rangle -\left\langle w_{n}|\hat{{\varvec {r}}}| w_{n}\right\rangle ^{2}\right] \right\} _{U}\), and the expectation value of the position operator is \(\langle \hat{{\varvec {r}}}\rangle =\frac{L}{2 \pi } {\text {Im}}\left\{ \ln \langle \psi | e^{\frac{i 2 \pi }{L} \hat{{\varvec {r}}}}|\psi \rangle \right\}\). For insulating systems with a finite energy gap, the near-sightedness principle of electrons[83] allows high spatial localization of time-dependent MLWF orbitals. This can then be exploited for efficiently implementing hybrid exchange–correlation functionals. In particular, the spatially localized nature makes it possible to reduce the number of exchange integrals

that need to be evaluated. While time-dependent Kohn–Sham states are generally itinerant, only minimal spatial overlap is expected for distant time-dependent MLWFs. Neglecting exchange integrals based on the geometric centers and spreads of the time-dependent MLWFs in the integrand therefore significantly reduces computational cost.[77] Table I illustrates this reduction of computational cost for a system of 512 crystalline silicon atoms (2048 electrons), when using a cutoff distance for evaluating the exchange integrals needed for the PBE0 hybrid XC approximation.[77] For this test system, the computational cost is reduced by an order of magnitude when using a cutoff distance of 25 \(a_0\). We note that due to the near-sightedness principle, this required cutoff distance does not scale with system size. Consequently, the MLWF approach becomes increasingly appealing for simulations of large systems, because a larger fraction of the exchange integrals can be removed while preserving accuracy. For ground-state calculations, such efforts exist,[84,85] and we expect the MLWF approach to be crucial to making hybrid XC functionals applicable also in the context of RT-TDDFT for studying complex systems in the near future.
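
The distance-based screening described above can be sketched in a few lines. The example below keeps only those orbital pairs whose centers lie within a cutoff distance of each other, using the minimum-image convention in a cubic periodic cell. It is a simplified illustration under our own assumptions: the function names `minimum_image_distance` and `screened_pairs` are hypothetical, the cell is taken to be cubic, and only the centers are used, whereas the approach discussed in the text also accounts for the spreads of the MLWFs. Diagonal terms (each orbital with itself) are always retained and are therefore not listed.

```python
import math
from itertools import combinations


def minimum_image_distance(a, b, box_length):
    """Distance between two points in a cubic periodic cell of side box_length."""
    total = 0.0
    for xa, xb in zip(a, b):
        d = (xa - xb) % box_length   # wrap the separation into [0, box_length)
        d = min(d, box_length - d)   # choose the nearest periodic image
        total += d * d
    return math.sqrt(total)


def screened_pairs(centers, box_length, cutoff):
    """Return index pairs (i, j), i < j, whose centers are within the cutoff."""
    return [
        (i, j)
        for i, j in combinations(range(len(centers)), 2)
        if minimum_image_distance(centers[i], centers[j], box_length) <= cutoff
    ]


# Hypothetical centers in a cubic cell of side 10 (arbitrary length units).
centers = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (9.0, 0.0, 0.0), (5.0, 5.0, 5.0)]
kept = screened_pairs(centers, box_length=10.0, cutoff=1.5)

total_pairs = len(centers) * (len(centers) - 1) // 2
print(f"kept {len(kept)} of {total_pairs} off-diagonal pairs: {kept}")
```

Because the cutoff is fixed while the number of orbital pairs grows quadratically with system size, the retained fraction shrinks for larger cells; a production implementation would replace the all-pairs loop with a neighbor list to avoid the quadratic search itself.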

As an alternative hardware-based paradigm, the high computational cost of hybrid XC functionals for plane-wave (RT-TD)DFT codes can be alleviated by adopting GPU architectures. This is also driven by the growing prevalence of hybrid CPU/GPU architectures for high-performance computing, aiming to achieve exascale supercomputers. Such an approach has been successful for ground-state DFT calculations[86,87] and RT-TDDFT simulations using the parallel transport gauge.[88] Andrade et al. developed a new plane-wave (TD)DFT code, INQ,[89] based on GPU architectures. Computationally intensive methods like hybrid XC functionals are supported in INQ but the speedup remains to be explored in the future.

In terms of how hybrid XC approximations can advance (RT-TD)DFT methodologies, screened range-separated[90] and dielectric-dependent hybrid approximations[91] have emerged as interesting paradigms in recent years. Such advanced hybrid XC approximations could provide an alternative to the computationally expensive many-body perturbation theory framework and potentially enable an accurate description of exciton dynamics in large and complex systems within RT-TDDFT. Screened range-separated hybrid functionals have been used in linear response TDDFT to successfully model excitonic features in the absorption spectrum. These effects, as well as an accurate description of long-range charge-transfer excitations, typically go beyond standard semi-local approximations for exchange and correlation. Range-separated hybrid XC approximations are expected to enable a description of charge-transfer dynamics in heterogeneous systems[92] such as molecule-semiconductor interfaces within RT-TDDFT in combination with the MLWF approach.

While the above-discussed approaches render hybrid XC functionals more attractive, the computational cost still remains significantly higher than for local and semi-local approximations. Alternatively, we recently demonstrated[19] the use of a long-range corrected (LRC) kernel in the context of RT-TDDFT. The resulting vector potential accounts for the long-range screened electron-hole interaction and is capable of describing excitonic effects in optical spectra. At the same time, this RT-TDDFT implementation exhibits computational benefits using massively parallel computing and retains a description of non-linear effects that are not accessible within the linear response approximation. We also note that this enables more general future developments around real-time TD current DFT.

Finally, we note that recent work on the temperature dependence of exchange–correlation models is instructive to consider in working toward a dynamical treatment of thermalization based on TDDFT. Numerous results have established formal foundations for incorporating electronic temperature in DFT[93] and TDDFT[94,95] beyond the standard Mermin approach.[67] Building on these foundations, high-quality reference calculations for the uniform electron gas at non-zero temperature[96,97] have been used to create exchange–correlation functionals[98] and applied to materials in extreme but equilibrated conditions.[99] However, these results concern electrons that are equilibrated at a fixed temperature, not electrons that are in the process of equilibrating. Because the thermal contribution to exchange–correlation is typically relatively small, it is reasonable to assume that thermalization through electron–ion scattering can be captured by existing adiabatic functionals. However, thermalization through electron–electron scattering will require accounting for physics beyond the adiabatic approximation, which is notoriously challenging. We note one potentially promising direction from plasma physics, in which a correction accounting for electron–electron scattering beyond a mean-field treatment was proposed as a mechanism to improve agreement with quantum kinetic theory[100] for the thermal conductivity of non-degenerate hydrogen plasmas. Investigations of discrepancies in TDDFT or GW for comparably simple systems might yield insights into deficiencies in these approaches, although extrapolating to degenerate systems would likely be a challenge.

Summary and future directions

We discussed several lines of recent development in the context of using real-time time-dependent density-functional theory for simulations of electron dynamics on femto- to picosecond time scales. While our efforts have not yet revealed an integrator that outperforms the enforced time-reversal symmetry method, optimization of the stability region of explicit methods or incorporation of machine learning techniques may prove promising. Complex absorbing boundary conditions straightforwardly reduce computational cost, in particular for finite systems. Treating the projectile particle quantum mechanically is within reach, albeit expensive, but difficulties around the vanishing distinction between projectile electrons and those of the host material require further development efforts. Based on our detailed simulation results, we conclude that reconciling electron–electron scattering from real-time propagation with many-body perturbation theory will require advances in the description of exchange and correlation. Finally, such advances seem possible, involving maximally localized Wannier functions or a long-range corrected approach to exchange and correlation.

All of these future developments will undoubtedly be impactful for materials discovery and development and can facilitate the tight integration of electronic excitations and ion dynamics. Efforts in such directions, including those involving machine learning, are currently underway in many groups worldwide. Going beyond the scope of the present work are interesting and necessary developments that couple electrons and ions, e.g., within Ehrenfest dynamics, or even treat ions quantum mechanically. At the same time, such developments in most cases will lead to moderately or significantly increased computational cost. Taking ongoing developments of modern supercomputing architectures into account, this will require simulation codes which can efficiently benefit from graphics processing units, such as the INQ code,[89] the successor to Qb@ll.

Data availability

The data that support the findings of this study are available from the corresponding author, A.S., upon reasonable request.

References

J. Lloyd-Hughes, P.M. Oppeneer, T.P. Dos Santos, A. Schleife, S. Meng, M.A. Sentef, M. Ruggenthaler, A. Rubio, I. Radu, M. Murnane, X. Shi, H. Kapteyn, B. Stadtmüller, K.M. Dani, F. da Jornada, E. Prinz, M. Aeschlimann, R. Milot, M. Burdanova, J. Boland, T.L. Cocker, F.A. Hegmann, J. Phys. Condens. Matter 33, 353001 (2021). https://doi.org/10.1088/1361-648X/abfe21

Y. Miyamoto, Sci. Rep. 11, 14626 (2021). https://doi.org/10.1038/s41598-021-94036-4

A.A. Correa, Comput. Mater. Sci. 150, 291 (2018). https://doi.org/10.1016/j.commatsci.2018.03.064

M. Uemoto, Y. Kuwabara, S.A. Sato, K. Yabana, J. Chem. Phys. 150, 094101 (2019). https://doi.org/10.1063/1.5068711

K. Jiang, M. Pavanello, Phys. Rev. B 103, 245102 (2021). https://doi.org/10.1103/PhysRevB.103.245102

C. Covington, J. Malave, K. Varga, Phys. Rev. B 103, 075119 (2021). https://doi.org/10.1103/PhysRevB.103.075119

G. Giannone, S. Śmiga, S. D’Agostino, E. Fabiano, F. Della Sala, J. Phys. Chem. A 125, 7246 (2021). https://doi.org/10.1021/acs.jpca.1c05384

F.P. Bonafé, B. Aradi, B. Hourahine, C.R. Medrano, F.J. Hernández, T. Frauenheim, J. Chem. Theor. Comput. 16, 4454 (2020). https://doi.org/10.1021/acs.jctc.9b01217

A. Schleife, E.W. Draeger, Y. Kanai, A.A. Correa, J. Chem. Phys. 137, 22A546 (2012). https://doi.org/10.1063/1.4758792

A. Schleife, E.W. Draeger, V.M. Anisimov, A.A. Correa, Y. Kanai, Comput. Sci. Eng. 16, 54 (2014). https://doi.org/10.1109/MCSE.2014.55

E.W. Draeger, X. Andrade, J.A. Gunnels, A. Bhatele, A. Schleife, A.A. Correa, J. Parallel Distrib. Comput. 106, 205 (2017). https://doi.org/10.1016/j.jpdc.2017.02.005

F. Gygi, IBM J. Res. Dev. 52, 137 (2008). https://doi.org/10.1147/rd.521.0137

E. Runge, E.K.U. Gross, Phys. Rev. Lett. 52, 997 (1984). https://doi.org/10.1103/PhysRevLett.52.997

C.A. Ullrich, Time-dependent density-functional theory: concepts and applications (Oxford University Press, Oxford, 2012). https://doi.org/10.1093/acprof:oso/9780199563029.001.0001

X. Andrade, A. Castro, D. Zueco, J. Alonso, P. Echenique, F. Falceto, A. Rubio, J. Chem. Theor. Comput. 5, 728 (2009). https://doi.org/10.1021/ct800518j

G.F. Bertsch, J.-I. Iwata, A. Rubio, K. Yabana, Phys. Rev. B 62, 7998 (2000)

K. Yabana, T. Nakatsukasa, J.-I. Iwata, G.F. Bertsch, Phys. Status Solidi (b) 243, 1121 (2006). https://doi.org/10.1002/pssb.200642005

X. Andrade, S. Hamel, A.A. Correa, Eur. Phys. J. B 91, 229 (2018). https://doi.org/10.1140/epjb/e2018-90291-5

J. Sun, C.-W. Lee, A. Kononov, A. Schleife, C.A. Ullrich, Phys. Rev. Lett. 127, 077401 (2021). https://doi.org/10.1103/PhysRevLett.127.077401

A. Tsolakidis, D. Sánchez-Portal, R.M. Martin, Phys. Rev. B 66, 235416 (2002). https://doi.org/10.1103/PhysRevB.66.235416

D.C. Yost, Y. Yao, Y. Kanai, J. Chem. Phys. 150, 194113 (2019). https://doi.org/10.1063/1.5095631

E. Luppi, H.-C. Weissker, S. Bottaro, F. Sottile, V. Veniard, L. Reining, G. Onida, Phys. Rev. B 78, 245124 (2008)

K. Kang, A. Kononov, C.-W. Lee, J.A. Leveillee, E.P. Shapera, X. Zhang, A. Schleife, Comput. Mater. Sci. 160, 207 (2019). https://doi.org/10.1016/j.commatsci.2019.01.004

A. Castro, M.A.L. Marques, A. Rubio, J. Chem. Phys. 121, 3425 (2004). https://doi.org/10.1063/1.1774980

A. Kononov, A. Schleife, Phys. Rev. B 102, 165401 (2020). https://doi.org/10.1103/PhysRevB.102.165401

S. Balay, S. Abhyankar, M.F. Adams, S. Benson, J. Brown, P. Brune, K. Buschelman, E. Constantinescu, L. Dalcin, A. Dener, V. Eijkhout, W.D. Gropp, V. Hapla, T. Isaac, P. Jolivet, D. Karpeev, D. Kaushik, M.G. Knepley, F. Kong, S. Kruger, D.A. May, L.C. McInnes, R.T. Mills, L. Mitchell, T. Munson, J.E. Roman, K. Rupp, P. Sanan, J. Sarich, B.F. Smith, S. Zampini, H. Zhang, H. Zhang, J. Zhang, PETSc/TAO users manual (Argonne National Laboratory, 2020)

J. Butcher, Appl. Numer. Math. 20, 247 (1996). https://doi.org/10.1016/0168-9274(95)00108-5

S. Gottlieb, D.I. Ketcheson, C.-W. Shu, Strong stability preserving Runge-Kutta and multistep time discretizations (World Scientific, Singapore, 2011)

A. Kononov, Energy and charge dynamics in ion-irradiated surfaces and 2D materials from first principles, Ph.D. thesis, University of Illinois at Urbana-Champaign (2020)

A. Schleife, Y. Kanai, A.A. Correa, Phys. Rev. B 91, 014306 (2015). https://doi.org/10.1103/PhysRevB.91.014306

R.J. Magyar, L. Shulenburger, A.D. Baczewski, Contrib. Plasm. Phys. 56, 459 (2016). https://doi.org/10.1002/ctpp.201500143

A. Lim, W.M.C. Foulkes, A.P. Horsfield, D.R. Mason, A. Schleife, E.W. Draeger, A.A. Correa, Phys. Rev. Lett. 116, 043201 (2016). https://doi.org/10.1103/PhysRevLett.116.043201

D.C. Yost, Y. Kanai, Phys. Rev. B 94, 115107 (2016). https://doi.org/10.1103/PhysRevB.94.115107

D.C. Yost, Y. Yao, Y. Kanai, Phys. Rev. B 96, 115134 (2017). https://doi.org/10.1103/PhysRevB.96.115134

C.-W. Lee, A. Schleife, Eur. Phys. J. B 91, 222 (2018). https://doi.org/10.1140/epjb/e2018-90204-8

R. Ullah, E. Artacho, A.A. Correa, Phys. Rev. Lett. 121, 116401 (2018). https://doi.org/10.1103/PhysRevLett.121.116401

C.-W. Lee, A. Schleife, Nano Lett. 19, 3939 (2019). https://doi.org/10.1021/acs.nanolett.9b01214

C.-W. Lee, J.A. Stewart, R. Dingreville, S.M. Foiles, A. Schleife, Phys. Rev. B 102, 024107 (2020). https://doi.org/10.1103/PhysRevB.102.024107

X. Qi, F. Bruneval, I. Maliyov, Phys. Rev. Lett. 128, 043401 (2022). https://doi.org/10.1103/PhysRevLett.128.043401

H. Zhang, Y. Miyamoto, A. Rubio, Phys. Rev. Lett. 109, 265505 (2012). https://doi.org/10.1103/PhysRevLett.109.265505

A. Ojanperä, A.V. Krasheninnikov, M. Puska, Phys. Rev. B 89, 035120 (2014). https://doi.org/10.1103/PhysRevB.89.035120

S. Zhao, W. Kang, J. Xue, X. Zhang, P. Zhang, J. Phys. Condens. Matter 27, 025401 (2015). https://doi.org/10.1088/0953-8984/27/2/025401

A. Kononov, A. Schleife, Nano Lett. 21, 4816 (2021). https://doi.org/10.1021/acs.nanolett.1c01416

H. Vázquez, A. Kononov, A. Kyritsakis, N. Medvedev, A. Schleife, F. Djurabekova, Phys. Rev. B 103, 224306 (2021). https://doi.org/10.1103/PhysRevB.103.224306

A. Kononov, A. Olmstead, A.D. Baczewski, A. Schleife, 2D Mater. 9, 4 (2022). https://doi.org/10.1088/2053-1583/ac8e7e

P. Bogacki, L.F. Shampine, Comput. Math. Appl. 32, 15 (1996). https://doi.org/10.1016/0898-1221(96)00141-1

D.A. Rehn, Y. Shen, M.E. Buchholz, M. Dubey, R. Namburu, E.J. Reed, J. Chem. Phys. 150, 014101 (2019). https://doi.org/10.1063/1.5056258

X. Qian, J. Li, X. Lin, S. Yip, Phys. Rev. B 73, 035408 (2006). https://doi.org/10.1103/PhysRevB.73.035408

S. Meng, E. Kaxiras, J. Chem. Phys. 129, 054110 (2008). https://doi.org/10.1063/1.2960628

A. Ojanperä, V. Havu, L. Lehtovaara, M. Puska, J. Chem. Phys. 136, 144103 (2012). https://doi.org/10.1063/1.3700800

Numerical implementation of time-dependent density functional theory for extended systems in extreme environments, SAND2014-0597 (Sandia National Laboratories)

A.D. Baczewski, L. Shulenburger, M. Desjarlais, S. Hansen, R. Magyar, Phys. Rev. Lett. 116, 115004 (2016). https://doi.org/10.1103/PhysRevLett.116.115004

M. Walter, H. Häkkinen, L. Lehtovaara, M. Puska, J. Enkovaara, C. Rostgaard, J.J. Mortensen, J. Chem. Phys. 128, 244101 (2008)

N. Troullier, J.L. Martins, Phys. Rev. B 43, 1993 (1991). https://doi.org/10.1103/PhysRevB.43.1993

S. Kang, E.M. Constantinescu, J. Sci. Comput. 93, 23 (2022). https://doi.org/10.1007/s10915-022-01982-w

D.I. Ketcheson, SIAM J. Numer. Anal. 57, 2850 (2019). https://doi.org/10.1137/19M1263662

S. Liang, Z. Huang, H. Zhang, in International conference on learning representations (2022). https://openreview.net/forum?id=uVXEKeqJbNa

W. Jia, D. An, L.-W. Wang, L. Lin, J. Chem. Theory Comput. 14, 5645 (2018). https://doi.org/10.1021/acs.jctc.8b00580

D. An, D. Fang, L. Lin, J. Comput. Phys. 451, 110850 (2022). https://doi.org/10.1016/j.jcp.2021.110850

P. Wopperer, U. De Giovannini, A. Rubio, Eur. Phys. J. B 90, 51 (2017). https://doi.org/10.1140/epjb/e2017-70548-3

J. Muga, J. Palao, B. Navarro, I. Egusquiza, Phys. Rep. 395, 357 (2004). https://doi.org/10.1016/j.physrep.2004.03.002

Y. Ueda, Y. Suzuki, K. Watanabe, Phys. Rev. B 97, 075406 (2018). https://doi.org/10.1103/PhysRevB.97.075406

K. Tsubonoya, C. Hu, K. Watanabe, Phys. Rev. B 90, 035416 (2014). https://doi.org/10.1103/PhysRevB.90.035416

F. Ladstädter, U. Hohenester, P. Puschnig, C. Ambrosch-Draxl, Phys. Rev. B 70, 235125 (2004). https://doi.org/10.1103/physrevb.70.235125

V.P. Zhukov, E.V. Chulkov, P.M. Echenique, Phys. Rev. B 72, 155109 (2005). https://doi.org/10.1103/PhysRevB.72.155109

N.A. Modine, R.M. Hatcher, J. Chem. Phys. 142, 204111 (2015). https://doi.org/10.1063/1.4921690

N.D. Mermin, Phys. Rev. 137, A1441 (1965). https://doi.org/10.1103/PhysRev.137.A1441

Socorro. http://dft.sandia.gov/socorro

I. Campillo, J.M. Pitarke, A. Rubio, E. Zarate, P.M. Echenique, Phys. Rev. Lett. 83, 2230 (1999). https://doi.org/10.1103/PhysRevLett.83.2230

M. Bauer, A. Marienfeld, M. Aeschlimann, Progress Surf. Sci. 90, 319 (2015). https://doi.org/10.1016/j.progsurf.2015.05.001

A. Tamm, M. Caro, A. Caro, G. Samolyuk, M. Klintenberg, A.A. Correa, Phys. Rev. Lett. 120, 185501 (2018). https://doi.org/10.1103/PhysRevLett.120.185501

P. Kratzer, M. Zahedifar, New J. Phys. 21, 123023 (2019). https://doi.org/10.1088/1367-2630/ab5c76

L. Lacombe, Y. Suzuki, K. Watanabe, N.T. Maitra, Eur. Phys. J. B 91, 96 (2018). https://doi.org/10.1140/epjb/e2018-90101-2

V. Rizzi, T.N. Todorov, J.J. Kohanoff, A.A. Correa, Phys. Rev. B 93, 024306 (2016). https://doi.org/10.1103/PhysRevB.93.024306

G. Vignale, W. Kohn, Phys. Rev. Lett. 77, 2037 (1996). https://doi.org/10.1103/PhysRevLett.77.2037

H.O. Wijewardane, C.A. Ullrich, Phys. Rev. Lett. 95, 086401 (2005). https://doi.org/10.1103/PhysRevLett.95.086401

C. Shepard, R. Zhou, D.C. Yost, Y. Yao, Y. Kanai, J. Chem. Phys. 155, 100901 (2021). https://doi.org/10.1063/5.0057587

Y. Yao, D.C. Yost, Y. Kanai, Phys. Rev. Lett. 123, 066401 (2019). https://doi.org/10.1103/PhysRevLett.123.066401

J. Sun, A. Ruzsinszky, J.P. Perdew, Phys. Rev. Lett. 115, 036402 (2015). https://doi.org/10.1103/PhysRevLett.115.036402

Y. Yao, Y. Kanai, J. Chem. Phys. 146, 224105 (2017). https://doi.org/10.1063/1.4984939

C.D. Pemmaraju, F.D. Vila, J.J. Kas, S.A. Sato, J.J. Rehr, K. Yabana, Comput. Phys. Commun. 226, 30 (2018). https://doi.org/10.1016/j.cpc.2018.01.013

N. Marzari, A.A. Mostofi, J.R. Yates, I. Souza, D. Vanderbilt, Rev. Mod. Phys. 84, 1419 (2012). https://doi.org/10.1103/RevModPhys.84.1419

E. Prodan, W. Kohn, Proc. Natl. Acad. Sci. 102, 11635 (2005). https://doi.org/10.1073/pnas.0505436102

P. Giannozzi, O. Baseggio, P. Bonfà, D. Brunato, R. Car, I. Carnimeo, C. Cavazzoni, S. de Gironcoli, P. Delugas, F. Ferrari Ruffino, A. Ferretti, N. Marzari, I. Timrov, A. Urru, S. Baroni, J. Chem. Phys. 152, 154105 (2020). https://doi.org/10.1063/5.0005082

H.-Y. Ko, J. Jia, B. Santra, X. Wu, R. Car, R.A. DiStasio Jr., J. Chem. Theor. Comput. 16, 3757 (2020). https://doi.org/10.1021/acs.jctc.9b01167

M. Hutchinson, M. Widom, Comput. Phys. Commun. 183, 1422 (2012). https://doi.org/10.1016/j.cpc.2012.02.017

X. Andrade, A. Aspuru-Guzik, J. Chem. Theor. Comput. 9, 4360 (2013)

W. Jia, L.-W. Wang, L. Lin, in Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis, SC ’19 (Association for Computing Machinery, New York, NY, USA, 2019). https://doi.org/10.1145/3295500.3356144

X. Andrade, C.D. Pemmaraju, A. Kartsev, J. Xiao, A. Lindenberg, S. Rajpurohit, L.Z. Tan, T. Ogitsu, A.A. Correa, J. Chem. Theor. Comput. 17, 7447 (2021). https://doi.org/10.1021/acs.jctc.1c00562

D. Wing, J.B. Haber, R. Noff, B. Barker, D.A. Egger, A. Ramasubramaniam, S.G. Louie, J.B. Neaton, L. Kronik, Phys. Rev. Mater. 3, 064603 (2019). https://doi.org/10.1103/PhysRevMaterials.3.064603

H. Zheng, M. Govoni, G. Galli, Phys. Rev. Mater. 3, 073803 (2019). https://doi.org/10.1103/PhysRevMaterials.3.073803

T. Stein, L. Kronik, R. Baer, J. Chem. Phys. 131, 244119 (2009). https://doi.org/10.1063/1.3269029

A. Pribram-Jones, K. Burke, Phys. Rev. B 93, 205140 (2016). https://doi.org/10.1103/PhysRevB.93.205140

K. Burke, J.C. Smith, P.E. Grabowski, A. Pribram-Jones, Phys. Rev. B 93, 195132 (2016). https://doi.org/10.1103/PhysRevB.93.195132

A. Pribram-Jones, P.E. Grabowski, K. Burke, Phys. Rev. Lett. 116, 233001 (2016). https://doi.org/10.1103/PhysRevLett.116.233001

E.W. Brown, B.K. Clark, J.L. DuBois, D.M. Ceperley, Phys. Rev. Lett. 110, 146405 (2013). https://doi.org/10.1103/PhysRevLett.110.146405

T. Dornheim, S. Groth, M. Bonitz, Phys. Rep. 744, 1 (2018). https://doi.org/10.1016/j.physrep.2018.04.001

V.V. Karasiev, J.W. Dufty, S. Trickey, Phys. Rev. Lett. 120, 076401 (2018). https://doi.org/10.1103/PhysRevLett.120.076401

V. Karasiev, S. Hu, M. Zaghoo, T. Boehly, Phys. Rev. B 99, 214110 (2019). https://doi.org/10.1103/PhysRevB.99.214110

M.P. Desjarlais, C.R. Scullard, L.X. Benedict, H.D. Whitley, R. Redmer, Phys. Rev. E 95, 033203 (2017). https://doi.org/10.1103/PhysRevE.95.033203

Acknowledgments

A. S. acknowledges fruitful discussions with Peter Kratzer. This material is based upon work supported by the Office of Naval Research (Grant No. N00014-18-1-2605) and the National Science Foundation (Grant No. OAC-1740219). A. K., B. R., and A. D. B. were supported by Sandia’s Laboratory Directed Research and Development (LDRD) Project No. 218456 and the US Department of Energy’s Science Campaign 1. This article has been co-authored by employees of National Technology & Engineering Solutions of Sandia, LLC under Contract No. DE-NA0003525 with the U.S. Department of Energy (DOE). The authors own all right, title and interest in and to the article and are responsible for its contents. The United States Government retains and the publisher, by accepting the article for publication, acknowledges that the United States Government retains a non-exclusive, paid-up, irrevocable, world-wide license to publish or reproduce the published form of this article or allow others to do so, for United States Government purposes. The DOE will provide public access to these results of federally sponsored research in accordance with the DOE Public Access Plan https://www.energy.gov/downloads/doe-public-access-plan. X. A. and A. A. C. work were supported by the Center for Non-Perturbative Studies of Functional Materials Under Non-Equilibrium Conditions (NPNEQ) funded by the Materials Sciences and Engineering Division, Computational Materials Sciences Program of the U.S. Department of Energy, Office of Science, Basic Energy Sciences and performed under the auspices of the US Department of Energy by Lawrence Livermore National Laboratory under Contract DE-AC52-07NA27344. Y. Y. and Y. K. were supported by the National Science Foundation under Award Nos. CHE-1954894 and OAC-17402204. Support from the IAEA F11020 CRP “Ion Beam Induced Spatio-temporal Structural Evolution of Materials: Accelerators for a New Technology Era” is gratefully acknowledged. 
This research is partially supported by the NCSA-Inria-ANL-BSC-JSC-Riken-UTK Joint-Laboratory for Extreme Scale Computing (JLESC, https://jlesc.github.io/). A. S. acknowledges support as Mercator Fellow within SFB 1242 at the University Duisburg-Essen. E. C. was supported by DOE Office of Advanced Scientific Computing Research under Contract DE-AC02-06CH11357. This work was performed, in part, at the Center for Integrated Nanotechnologies, an Office of Science User Facility operated for the U.S. Department of Energy (DOE) Office of Science by Los Alamos National Laboratory (Contract 89233218CNA000001) and Sandia National Laboratories (Contract DE-NA-0003525). This research is part of the Blue Waters sustained petascale computing project, which is supported by the National Science Foundation (awards OCI-0725070 and ACI-1238993) and the state of Illinois. Blue Waters is a joint effort of the University of Illinois at Urbana-Champaign and its National Center for Supercomputing Applications. This work made use of the Illinois Campus Cluster, a computing resource that is operated by the Illinois Campus Cluster Program (ICCP) in conjunction with the National Center for Supercomputing Applications (NCSA) and which is supported by funds from the University of Illinois at Urbana-Champaign. An award of computer time was provided by the Innovative and Novel Computational Impact on Theory and Experiment (INCITE) program. This research used resources of the Argonne Leadership Computing Facility, which is a DOE Office of Science User Facility supported under Contract DE-AC02-06CH11357.

Funding

Funding sources are acknowledged in the acknowledgement section.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

About this article

Cite this article

Kononov, A., Lee, CW., dos Santos, T.P. et al. Electron dynamics in extended systems within real-time time-dependent density-functional theory. MRS Communications 12, 1002–1014 (2022). https://doi.org/10.1557/s43579-022-00273-7

DOI: https://doi.org/10.1557/s43579-022-00273-7