Abstract

In this paper, we consider the stability problem for a class of stochastic quaternion-valued neural networks with time-varying delays. Without decomposing the quaternion-valued systems into equivalent real-valued systems, and by using a Lyapunov functional and stochastic analysis techniques, we obtain sufficient conditions for the mean-square exponential input-to-state stability of the quaternion-valued stochastic neural networks. To the best of our knowledge, these results are new. Finally, a numerical example is given to illustrate the feasibility of our results.

1 Introduction

As is well known, research on the dynamics of neural network models has achieved fruitful results, with applications in pattern recognition, automatic control, signal processing and artificial intelligence. However, most neural network models proposed and discussed in the literature are deterministic, which makes them simple and easy to analyze. In any actual system there is always a variety of random factors: in real nervous systems and in the implementation of artificial neural networks, noise is unavoidable [1, 2] and should be taken into consideration in modeling. Therefore, it is of practical importance to study stochastic neural networks. The authors of [3] studied the stability of stochastic neural networks in 1996, and a considerable amount of further work followed [4–7]. Due to the finite switching speed of neurons and amplifiers, time delays inevitably exist in biological and artificial neural network models. In recent years, the stability of delayed stochastic neural networks has become a hot topic among researchers [8–15]. It is well known that external inputs can influence the dynamic behavior of neural networks in practical applications. Therefore, it is significant to study the input-to-state stability problem for stochastic neural networks [16–19].

Besides, quaternion-valued neural networks have become a popular research topic, owing to their storage capacity advantage over real-valued and complex-valued neural networks. They can be applied in robotics, attitude control of satellites, computer graphics, ensemble control, color night vision and image compression [20, 21]. The skew field of quaternions is defined by

$$\mathbb{Q}:= \bigl\{ q=q^{R}+iq^{I}+jq^{J}+kq^{K} \bigr\} ,$$

where \(q^{R}\), \(q^{I}\), \(q^{J}\), \(q^{K}\) are real numbers, and the three imaginary units i, j and k obey Hamilton’s multiplication rules:

$$ij=-ji=k,\qquad jk=-kj=i,\qquad ki=-ik=j,\qquad i^{2}=j^{2}=k^{2}=-1.$$
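The multiplication rules can be checked numerically. The following is a minimal sketch, assuming a quaternion is stored as a NumPy array `[R, I, J, K]`; the helper name `qmul` and this storage convention are illustrative choices, not part of the paper.

```python
import numpy as np

def qmul(p, q):
    """Hamilton product of two quaternions stored as arrays [R, I, J, K]."""
    pr, pi, pj, pk = p
    qr, qi, qj, qk = q
    return np.array([
        pr * qr - pi * qi - pj * qj - pk * qk,   # real part
        pr * qi + pi * qr + pj * qk - pk * qj,   # i part
        pr * qj - pi * qk + pj * qr + pk * qi,   # j part
        pr * qk + pi * qj - pj * qi + pk * qr,   # k part
    ])

ONE = np.array([1.0, 0.0, 0.0, 0.0])
I = np.array([0.0, 1.0, 0.0, 0.0])
J = np.array([0.0, 0.0, 1.0, 0.0])
K = np.array([0.0, 0.0, 0.0, 1.0])

# ij = k but ji = -k: the product is not commutative.
print(qmul(I, J), qmul(J, I))
# i^2 = j^2 = k^2 = -1.
print(qmul(I, I), qmul(J, J), qmul(K, K))
```

The non-commutativity visible in `qmul(I, J)` versus `qmul(J, I)` is the reason the order of factors matters in every product of quaternion-valued weights and states.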

Since quaternion-valued neural networks were proposed, they have received much attention, and results have been obtained on stability [22–27], dissipativity [28, 29], input-to-state stability [30] and anti-periodic solutions [31]. Very recently, the robust stability of stochastic complex-valued neural networks was considered in [32, 33], and the stability of stochastic quaternion-valued neural networks in [34, 35]. However, to the best of our knowledge, there is still no result on the mean-square exponential input-to-state stability of stochastic quaternion-valued neural networks obtained by a direct method. This is therefore a challenging and important problem in both theory and applications.

Inspired by the previous research, and in order to fill this gap in the field of quaternion-valued stochastic neural networks, this paper has two main motivations. (1) The stability notion considered is mean-square exponential input-to-state stability, which is more general than the traditional mean-square exponential stability. In the past decade, many authors have studied input-to-state stability for classes of stochastic delayed neural networks [16–19]. (2) Only a few papers [34, 35] have studied the mean-square stability of quaternion-valued stochastic neural networks; thus it is worth studying the mean-square exponential input-to-state stability of quaternion-valued stochastic neural networks by a direct method.

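Motivated by the above, in this paper we consider the following stochastic quaternion-valued neural network:

$$\begin{aligned} \mathrm{d}z_{l}(t) =& \Biggl[-a_{l}(t)z_{l}(t)+\sum_{k=1}^{n}b_{lk}(t)f_{k} \bigl(z_{k}(t) \bigr) +\sum_{k=1}^{n}c_{lk}(t)g_{k} \bigl(z_{k} \bigl(t-\theta _{lk}(t) \bigr) \bigr)+U_{l}(t) \Biggr]\,\mathrm{d}t \\ &{}+\sum_{k=1}^{n}\sigma _{lk} \bigl(z_{k} \bigl(t-\eta _{lk}(t) \bigr) \bigr)\,\mathrm{d}B_{k}(t), \end{aligned}\tag{1.1}$$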

where \(l\in \{1,2,\ldots ,n\}=:\mathcal{N}\), n is the number of neurons in the network; \(z_{l}(t)\in \mathbb{Q}\) is the state of the lth neuron at time t; \(a_{l}(t)>0\) is the self-feedback connection weight; \(b_{lk}(t)\) and \(c_{lk}(t)\in \mathbb{Q}\) are, respectively, the connection weight and the delayed connection weight from neuron k to neuron l; \(\theta _{lk}(t)\) and \(\eta _{lk}(t)\) are the transmission delays; \(f_{k}, g_{k}:\mathbb{Q}\rightarrow \mathbb{Q}\) are the activation functions; \(U(t)=(U_{1}(t),U_{2}(t),\ldots ,U_{n}(t))\) belongs to \(\ell _{\infty }\), where \(\ell _{\infty }\) denotes the class of essentially bounded functions U from \(\mathbb{R}^{+}\) to \(\mathbb{Q}^{n}\) with \(\|U\|_{\infty }=\operatorname{esssup}_{t\geq 0}\|U(t)\|_{\mathbb{Q}}< \infty \); \(B(t)= (B_{1}(t),B_{2}(t),\ldots ,B_{n}(t) )^{T}\) is an n-dimensional Brownian motion defined on a complete probability space; \(\sigma _{lk}:\mathbb{Q}\rightarrow \mathbb{Q}\) is a Borel measurable function; \(\sigma =(\sigma _{lk})_{n\times n}\) is the diffusion coefficient matrix.

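For every \(z\in \mathbb{Q}\), the conjugate of z is defined as \(z^{\ast }=z^{R}-iz^{I}-jz^{J}-kz^{K}\), and the norm of z is defined as

$$\Vert z \Vert _{\mathbb{Q}}=\sqrt{z^{\ast }z}=\sqrt{ \bigl(z^{R} \bigr)^{2}+ \bigl(z^{I} \bigr)^{2}+ \bigl(z^{J} \bigr)^{2}+ \bigl(z^{K} \bigr)^{2}}.$$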

For every \(z=(z_{1},z_{2},\ldots ,z_{n})^{T}\in \mathbb{Q}^{n}\), we define \(\|z\|_{\mathbb{Q}^{n}}=\max_{l\in \mathcal{N}}\{\|z_{l}\|_{ \mathbb{Q}}\}\).
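As a small illustration of these definitions, the sketch below computes the conjugate, the quaternion norm and the vector norm on \(\mathbb{Q}^{n}\). It assumes the usual Euclidean norm on the four real components and stores each quaternion as an array `[R, I, J, K]`; the function names are hypothetical. The sample values are the initial values quoted in Example 4.1.

```python
import numpy as np

def qconj(z):
    """Conjugate z* = z^R - i z^I - j z^J - k z^K for z stored as [R, I, J, K]."""
    return z * np.array([1.0, -1.0, -1.0, -1.0])

def qnorm(z):
    """Quaternion norm: Euclidean norm of the four real components."""
    return float(np.sqrt(np.sum(z ** 2)))

def vec_norm(zs):
    """Norm on Q^n: the largest quaternion norm among the entries."""
    return max(qnorm(z) for z in zs)

# Initial values z_1(0), z_2(0), z_3(0) quoted in Example 4.1.
z1 = np.array([0.3, -0.3, 0.5, -0.3])
z2 = np.array([-0.2, 0.4, -0.4, -0.45])
z3 = np.array([0.1, -0.1, 0.2, 0.35])

print([qnorm(z) for z in (z1, z2, z3)])
print(vec_norm([z1, z2, z3]))
```

Conjugation leaves the norm unchanged, so `qnorm(qconj(z))` equals `qnorm(z)` for every `z`.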

For convenience, we will adopt the following notation:

The initial conditions of system (1.1) are of the form

$$z_{l}(s)=\phi _{l}(s),\quad s\in [-\tau ,0],$$

where \(\phi _{l}\in BC_{\mathcal{F}_{0}}([-\tau ,0],\mathbb{Q})\), \(l\in \mathcal{N}\).

Compared with previous results, this paper has the following advantages. Firstly, to the best of our knowledge, this is the first study of this problem, and it fills a gap in the field of stochastic quaternion-valued neural networks. Secondly, the quaternion-valued stochastic neural network (1.1) contains real-valued and complex-valued stochastic neural networks as special cases, so the main results of this paper are new and more general than those for the existing models in the literature. Thirdly, unlike some previous studies of quaternion-valued stochastic neural networks, we do not decompose the systems under consideration into real-valued systems, but study the quaternion-valued stochastic systems directly. Finally, the method of this paper can be used to study the mean-square exponential input-to-state stability of other types of quaternion-valued stochastic neural networks.

This paper is organized as follows: In Sect. 2, we introduce some definitions and state some preliminary results which are needed in later sections. In Sect. 3, we establish some sufficient conditions for the mean-square exponential input-to-state stability of system (1.1). In Sect. 4, we give an example to demonstrate the feasibility of our results. Finally, we draw a conclusion in Sect. 5.

2 Preliminaries and basic knowledge

In this section, we introduce the quaternion version of the Itô formula and the definition of mean-square exponential input-to-state stability.

Let \((\Omega ,\mathcal{F},\{\mathcal{F}_{t}\}_{t\geq 0},\mathrm{P})\) be a complete probability space with a natural filtration \(\{\mathcal{F}_{t}\}_{t\geq 0}\) satisfying the usual conditions (i.e., it is right continuous, and \(\mathcal{F}_{0}\) contains all P-null sets). Denote by \(BC_{\mathcal{F}_{0}}([-\tau ,0],\mathbb{Q}^{n})\) the family of all bounded, \(\mathcal{F}_{0}\)-measurable, \(C([-\tau ,0],\mathbb{Q}^{n})\)-valued random variables. Denote by \(\mathcal{L}_{\mathcal{F}_{0}}^{2}([-\tau ,0],\mathbb{Q}^{n})\) the family of all \(\mathcal{F}_{0}\)-measurable, \(C([-\tau ,0],\mathbb{Q}^{n})\)-valued random variables ϕ, satisfying \(\sup_{s\in [-\tau ,0]}\mathrm{E}\|\phi (s)\|_{\mathbb{Q}}^{2}< \infty \), where E denotes the expectation of the stochastic process.

Definition 2.1

Consider an n-dimensional quaternion-valued stochastic differential equation:

where \(z(t)=(z_{1}(t),z_{2}(t),\ldots ,z_{n}(t))^{T}\in \mathbb{Q}^{n}\). For \(V(t,z):\mathbb{R}^{+}\times \mathbb{Q}^{n}\rightarrow \mathbb{R}^{+}\) (in fact, we can write \(V(t,z)=V(t,z^{R},z^{I},z^{J},z^{K})\)), define the \(\mathbb{R}\)-derivative of V as

where const represents constant. Let \(C^{1,2}(\mathbb{R}^{+}\times \mathbb{Q}^{n},\mathbb{R}^{+})\) denote the family of all nonnegative functions \(V(t,z)\) on \(\mathbb{R}^{+}\times \mathbb{Q}^{n}\), which are once continuously differentiable in t and twice differentiable in \(z^{R}\), \(z^{I}\), \(z^{J}\) and \(z^{K}\). Then, for \(V\in C^{1,2}(\mathbb{R}^{+}\times \mathbb{Q}^{n},\mathbb{R}^{+})\), the quaternion version of the Itô formula takes the following form:

where \(f(t)=f(t,z(t),z(t-\theta (t)))\), \(g(t)=g(t,z(t),z(t-\eta (t)))\), and operator \(\mathcal{L}V(t,z)\) is defined as

Definition 2.2

The trivial solution of system (1.1) is mean-square exponentially input-to-state stable, if there exist constants \(\lambda >0\), \(\mathcal{M}_{1}>0\) and \(\mathcal{M}_{2}>0\) such that

for \(\phi \in \mathcal{L}_{\mathcal{F}_{0}}^{2}([-\tau ,0],\mathbb{Q}^{n})\) and \(U\in \ell _{\infty }\), where

Lemma 2.1

([31])

For all \(a,b\in \mathbb{Q}\), \(a^{\ast }b+b^{\ast }a\leq a^{\ast }a+b^{\ast }b\).
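One way to see why this inequality holds (a short sketch, not necessarily the argument given in [31]) is to expand the squared norm of \(a-b\), using the standard identity \(\|z\|_{\mathbb{Q}}^{2}=z^{\ast }z\) and \((a-b)^{\ast }=a^{\ast }-b^{\ast }\):

$$\begin{aligned} 0 \leq & \Vert a-b \Vert _{\mathbb{Q}}^{2} = (a-b)^{\ast }(a-b) \\ =& a^{\ast }a-a^{\ast }b-b^{\ast }a+b^{\ast }b. \end{aligned}$$

Here \(a^{\ast }b+b^{\ast }a\) is real, because it equals its own conjugate, so the terms can be rearranged to give \(a^{\ast }b+b^{\ast }a\leq a^{\ast }a+b^{\ast }b\).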

Throughout the rest of the paper, we assume that:

- \((H_{1})\): There exist positive constants \(L_{k}^{f}\), \(L_{k}^{g}\), \(L_{lk}^{\sigma }\) such that for any \(x,y\in \mathbb{Q}\),

$$\begin{aligned}& \bigl\Vert f_{k}(x)-f_{k}(y) \bigr\Vert _{\mathbb{Q}} \leq L_{k}^{f} \Vert x-y \Vert _{\mathbb{Q}},\qquad \bigl\Vert g_{k}(x)-g_{k}(y) \bigr\Vert _{\mathbb{Q}} \leq L_{k}^{g} \Vert x-y \Vert _{\mathbb{Q}}, \\& \bigl\Vert \sigma _{lk}(x)-\sigma _{lk}(y) \bigr\Vert _{\mathbb{Q}} \leq L_{lk}^{ \sigma } \Vert x-y \Vert _{\mathbb{Q}},\qquad f_{k}(\mathbf{0})=g_{k}( \mathbf{0})= \sigma _{lk}(\mathbf{0})=\mathbf{0},\quad l,k\in \mathcal{N}. \end{aligned}$$

- \((H_{2})\): For \(l\in \mathcal{N}\), there exist positive constants λ and \(\xi _{k}\) such that

$$\begin{aligned} 2\xi _{l}a_{l}^{-} >& \lambda \xi _{l}+2\xi _{l} +\sum_{k=1}^{n} \xi _{k} \bigl(b_{kl}^{+} \bigr)^{2} \bigl(L_{k}^{f} \bigr)^{2} +\sum _{k=1}^{n}\xi _{k} \bigl(c_{kl}^{+} \bigr)^{2} \bigl(L_{k}^{g} \bigr)^{2} \frac{e^{\lambda \theta ^{+}}}{1-\gamma } \\ &{}+\sum_{k=1}^{n}\xi _{k} \bigl(L_{kl}^{\sigma } \bigr)^{2} \frac{e^{\lambda \eta ^{+}}}{1-\beta }, \end{aligned}$$

where \(\gamma =\sup_{t\in \mathbb{R}}\{\theta _{lk}'(t)\}\), \(\beta =\sup_{t\in \mathbb{R}}\{\eta _{lk}'(t)\}\).
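Condition \((H_{2})\) is a finite set of scalar inequalities, one per neuron l, so it can be checked mechanically once the bounds are known. The sketch below evaluates both sides exactly as written in \((H_{2})\). The function name `check_h2` and all argument names are hypothetical; `a_minus[l]` stands for \(a_{l}^{-}\), `b_plus[k, l]` for \(b_{kl}^{+}\), and so on, and these are assumed to be bounds of the corresponding coefficients. The values of λ and \(\xi _{k}\) are those chosen in Example 4.1, while every other number is made up purely for illustration.

```python
import numpy as np

def check_h2(a_minus, b_plus, c_plus, Lf, Lg, Lsig, xi, lam,
             theta_plus, eta_plus, gamma, beta):
    """Evaluate (H2) for every neuron l; return (holds, margin = lhs - rhs)."""
    n = len(a_minus)
    lhs = 2.0 * xi * a_minus
    rhs = np.empty(n)
    for l in range(n):
        s_b = sum(xi[k] * b_plus[k, l] ** 2 * Lf[k] ** 2 for k in range(n))
        s_c = sum(xi[k] * c_plus[k, l] ** 2 * Lg[k] ** 2 for k in range(n))
        s_s = sum(xi[k] * Lsig[k, l] ** 2 for k in range(n))
        rhs[l] = (lam * xi[l] + 2.0 * xi[l] + s_b
                  + s_c * np.exp(lam * theta_plus) / (1.0 - gamma)
                  + s_s * np.exp(lam * eta_plus) / (1.0 - beta))
    margin = lhs - rhs
    return bool(np.all(margin > 0)), margin

# lambda and xi as chosen in Example 4.1; everything else is hypothetical.
common = dict(
    b_plus=np.full((3, 3), 0.2), c_plus=np.full((3, 3), 0.2),
    Lf=np.full(3, 0.5), Lg=np.full(3, 0.5), Lsig=np.full((3, 3), 0.1),
    xi=np.array([0.3, 0.4, 0.5]), lam=0.1,
    theta_plus=1.0, eta_plus=1.0, gamma=0.5, beta=0.5,
)

ok_strong, margin_strong = check_h2(a_minus=np.full(3, 5.0), **common)
ok_weak, margin_weak = check_h2(a_minus=np.full(3, 1.0), **common)
print(ok_strong, margin_strong)
print(ok_weak, margin_weak)
```

Since the right-hand side of \((H_{2})\) always contains \(\lambda \xi _{l}+2\xi _{l}\), the condition can only hold when \(a_{l}^{-}>1+\lambda /2\), which is why the second call with `a_minus = 1` fails.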

3 Mean-square exponential input-to-state stability

In this section, we will consider the mean-square exponential input-to-state stability of system (1.1).

Theorem 3.1

Suppose that Assumptions \((H_{1})\)–\((H_{2})\) are satisfied. Then, for any initial value, the trivial solution of system (1.1) is mean-square exponentially input-to-state stable.

Proof

Let \(\sigma (t)=(\sigma _{lk}(t))_{n\times n}\), where \(\sigma _{lk}(t)=\sigma _{lk}(z_{k}(t-\eta _{lk}(t)))\). We consider the Lyapunov function as follows:

where

Then, by the Itô formula, we have the following stochastic differential:

where \(V_{z}(t,z(t))= (\frac{\partial V(t,z(t))}{\partial z_{1}},\ldots , \frac{\partial V(t,z(t))}{\partial z_{n}} )\), and \(\mathcal{L}\) is the weak infinitesimal operator such that

From \((H_{2})\), we easily derive

Now, similar to the previous literature, we define the stopping time (or Markov time) \(\varrho _{k}:=\inf \{s\geq 0: |z(s)|\geq k\}\), and by the Dynkin formula, we have

Letting \(k\rightarrow \infty \) on both sides of (3.3), from the monotone convergence theorem, we obtain

On the other hand, it follows from the definition of \(V(t,z(t))\) that

Combining (3.4) and (3.5), the following holds:

where

which together with Definition 2.2 shows that the trivial solution of system (1.1) is mean-square exponentially input-to-state stable. The proof is complete. □

Remark 3.1

In the calculation process of Theorem 3.1, by using stochastic analysis theory and the Itô formula, we obtain the mean-square exponential input-to-state stability of system (1.1).

Remark 3.2

Theorem 3.1 yields stability criteria for the considered stochastic network models by a non-decomposing method, that is, without splitting the quaternion-valued system into real-valued subsystems.

4 Illustrative example

In this section, we give an example to illustrate the feasibility and effectiveness of our results obtained in Sect. 3.

Example 4.1

Let \(n=3\). Consider the following quaternion-valued stochastic neural network:

where \(l=1,2,3\), and the coefficients are as follows:

Through simple calculations, we have

Take \(\lambda =0.1\), \(\xi _{1}=0.3\), \(\xi _{2}=0.4\), \(\xi _{3}=0.5\), then we have

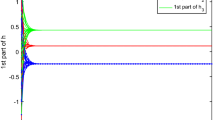

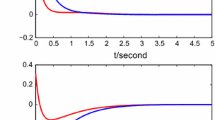

We can check that the other conditions of Theorem 3.1 are satisfied. So, the trivial solution of system (4.1) is mean-square exponentially input-to-state stable (see Figs. 1–4). The system (4.1) has the initial values \(z_{1}(0)=0.3-0.3i+0.5j-0.3k\), \(z_{2}(0)=-0.2+0.4i-0.4j-0.45k\) and \(z_{3}(0)=0.1-0.1i+0.2j+0.35k\). The numerical simulations of this example were produced with the Simulink toolbox of MATLAB.
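For readers without Simulink, a comparable experiment can be sketched with an Euler-Maruyama scheme. The code below is not the authors' model. It assumes a network whose drift consists of a self-feedback term, an instantaneous and a delayed connection term and an input, and whose diffusion acts on the delayed state. It further simplifies to constant delays, componentwise `tanh` activations and a linear diffusion with real gains. All coefficient values are hypothetical; only the initial values are taken from the example.

```python
import numpy as np

def qmul(p, q):
    """Hamilton product; the last axis of p and q holds [R, I, J, K]."""
    pr, pi, pj, pk = np.moveaxis(p, -1, 0)
    qr, qi, qj, qk = np.moveaxis(q, -1, 0)
    return np.stack([
        pr * qr - pi * qi - pj * qj - pk * qk,
        pr * qi + pi * qr + pj * qk - pk * qj,
        pr * qj - pi * qk + pj * qr + pk * qi,
        pr * qk + pi * qj - pj * qi + pk * qr,
    ], axis=-1)

def simulate(z0, a, b, c, sig, u, delay_steps, dt, steps, rng):
    """Euler-Maruyama path; returns an array of shape (steps + 1, n, 4)."""
    n = z0.shape[0]
    path = np.empty((delay_steps + steps + 1, n, 4))
    path[:delay_steps + 1] = z0              # constant history on [-tau, 0]
    for m in range(delay_steps, delay_steps + steps):
        z, zd = path[m], path[m - delay_steps]
        fz, gz = np.tanh(z), np.tanh(zd)     # Lipschitz activations, zero at 0
        drift = (-a[:, None] * z
                 + qmul(b, fz[None, :, :]).sum(axis=1)    # sum_k b_lk f_k(z_k(t))
                 + qmul(c, gz[None, :, :]).sum(axis=1)    # sum_k c_lk g_k(delayed z_k)
                 + u)
        dB = rng.normal(0.0, np.sqrt(dt), size=n)         # Brownian increments
        diffusion = (sig[:, :, None] * zd[None, :, :] * dB[None, :, None]).sum(axis=1)
        path[m + 1] = z + drift * dt + diffusion
    return path[delay_steps:]

# Hypothetical coefficients, chosen only for illustration.
n = 3
z0 = np.array([[0.3, -0.3, 0.5, -0.3],
               [-0.2, 0.4, -0.4, -0.45],
               [0.1, -0.1, 0.2, 0.35]])      # initial values quoted in the example
a = np.full(n, 5.0)
b = np.full((n, n, 4), 0.1)
c = np.full((n, n, 4), 0.1)
sig = np.full((n, n), 0.1)
dt, steps, delay_steps = 0.01, 2000, 100     # horizon 20, constant delay 1

rng = np.random.default_rng(0)
traj_zero = simulate(z0, a, b, c, sig, np.zeros((n, 4)), delay_steps, dt, steps, rng)
traj_input = simulate(z0, a, b, c, sig, np.full((n, 4), 0.5), delay_steps, dt, steps, rng)

print("final max |component|, U = 0  :", np.abs(traj_zero[-1]).max())
print("final max |component|, U = 0.5:", np.abs(traj_input[-1]).max())
```

With zero input the sample path decays toward the origin, while a bounded nonzero input leaves the state bounded but not vanishing, which is the qualitative behavior that input-to-state stability describes. A single path is only an illustration; a mean-square statement would require averaging over many independent paths.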

Fig. 1 State trajectories of variables \(z_{l}^{R}(t)\) of system (4.1) with \(U_{l}^{R}(t)\neq 0\), \(l=1,2,3\)

Fig. 2 State trajectories of variables \(z_{l}^{R}(t)\) of system (4.1) with \(U_{l}^{R}(t)=0\), \(l=1,2,3\)

Fig. 3 State trajectories of variables \(z_{l}^{I}(t)\) of system (4.1) with \(U_{l}^{I}(t)\neq 0\), \(l=1,2,3\)

Fig. 4 State trajectories of variables \(z_{l}^{I}(t)\) of system (4.1) with \(U_{l}^{I}(t)=0\), \(l=1,2,3\)

Fig. 5 State trajectories of variables \(z_{l}^{J}(t)\) of system (4.1) with \(U_{l}^{J}(t)\neq 0\), \(l=1,2,3\)

Fig. 6 State trajectories of variables \(z_{l}^{J}(t)\) of system (4.1) with \(U_{l}^{J}(t)=0\), \(l=1,2,3\)

Fig. 7 State trajectories of variables \(z_{l}^{K}(t)\) of system (4.1) with \(U_{l}^{K}(t)\neq 0\), \(l=1,2,3\)

Remark 4.1

Figs. 1–8, produced with the Simulink toolbox in MATLAB, show the time evolution of the four components of \(z_{1}\), \(z_{2}\) and \(z_{3}\), respectively. When \(U_{l}(t)=0\), our results reduce to the traditional mean-square exponential stability of the considered stochastic neural networks.
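To make the reduction concrete, suppose the estimate in Definition 2.2 has the shape commonly used for this notion, for instance in [16]. This shape is an assumption here, and the norm of the initial function is left schematic:

$$\mathrm{E} \bigl\Vert z(t) \bigr\Vert ^{2} \leq \mathcal{M}_{1}e^{-\lambda t} \Vert \phi \Vert ^{2}+\mathcal{M}_{2} \Vert U \Vert _{\infty }^{2}.$$

Setting \(U\equiv 0\) removes the second term and leaves

$$\mathrm{E} \bigl\Vert z(t) \bigr\Vert ^{2} \leq \mathcal{M}_{1}e^{-\lambda t} \Vert \phi \Vert ^{2},$$

which is an estimate of mean-square exponential stability type with decay rate λ.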

Fig. 8 State trajectories of variables \(z_{l}^{K}(t)\) of system (4.1) with \(U_{l}^{K}(t)=0\), \(l=1,2,3\)

Remark 4.2

Quaternion-valued stochastic systems include real-valued stochastic systems as special cases. In fact, in Example 4.1, if all the coefficients are functions from \(\mathbb{R}\) to \(\mathbb{R}\), and all the activation functions are functions from \(\mathbb{R}\) to \(\mathbb{R}\), then the state \(z_{l}(t) \equiv z_{l}^{R}(t)\in \mathbb{R}\), and system (4.1) is a real-valued stochastic system. Then, by an argument similar to the proof of Theorem 3.1 under the corresponding conditions, one can show that a result similar to Theorem 3.1 still holds (see [16–19]).

5 Conclusion

In this paper, we consider the problem of mean-square exponential input-to-state stability for quaternion-valued stochastic neural networks by a direct method. By constructing an appropriate Lyapunov functional and using stochastic analysis theory and the Itô formula, a novel sufficient condition is derived to ensure the mean-square exponential input-to-state stability of the considered stochastic neural networks. A numerical example is given to demonstrate the usefulness of the presented results. This paper improves and extends the earlier results in [34, 35], and provides a framework for studying the mean-square exponential input-to-state stability of quaternion-valued stochastic neural networks with time-varying delays. We expect to extend this work to other types of stochastic neural networks.

Availability of data and materials

Not applicable.

References

Wong, E.: Stochastic neural networks. Algorithmica 6, 466–478 (1991)

Haykin, S.: Neural Networks. Prentice Hall, New York (1994)

Liao, X.X., Mao, X.R.: Exponential stability and instability of stochastic neural networks. Stoch. Anal. Appl. 14(2), 165–185 (1996)

Blythe, S., Mao, X.R., Liao, X.X.: Stability of stochastic delay neural networks. J. Franklin Inst. 338(4), 481–495 (2001)

Zhao, H.Y., Ding, N., Chen, L.: Almost sure exponential stability of stochastic fuzzy cellular neural networks with delays. Chaos Solitons Fractals 40(4), 1653–1659 (2009)

Zhu, Q.X., Li, X.D.: Exponential and almost sure exponential stability of stochastic fuzzy delayed Cohen–Grossberg neural networks. Fuzzy Sets Syst. 203(2), 74–94 (2012)

Li, X.F., Ding, D.: Mean square exponential stability of stochastic Hopfield neural networks with mixed delays. Stat. Probab. Lett. 126, 88–96 (2017)

Wang, Z.D., Liu, Y.R., Fraser, K., Liu, X.H.: Stochastic stability of uncertain Hopfield neural networks with discrete and distributed delays. Phys. Lett. A 354(4), 288–297 (2006)

Wang, Z.D., Fang, J.A., Liu, X.H.: Global stability of stochastic high-order neural networks with discrete and distributed delays. Chaos Solitons Fractals 36(2), 388–396 (2008)

Yang, R.N., Zhang, Z.X., Shi, P.: Exponential stability on stochastic neural networks with discrete interval and distributed delays. IEEE Trans. Neural Netw. 21(1), 169–175 (2010)

Chen, G.L., Li, D.S., Shi, L., Gaans, O.V., Lunel, S.V.: Stability results for stochastic delayed recurrent neural networks with discrete and distributed delays. J. Differ. Equ. 264(6), 3864–3898 (2018)

Rajchakit, G.: Switching design for the robust stability of nonlinear uncertain stochastic switched discrete-time systems with interval time-varying delay. J. Comput. Anal. Appl. 16(1), 10–19 (2014)

Rajchakit, G., Sriraman, R., Kaewmesri, P., Chanthorn, P., Lim, C.P., Samidurai, R.: An extended analysis on robust dissipativity of uncertain stochastic generalized neural networks with Markovian jumping parameters. Symmetry 12(6), 1035 (2020)

Rajchakit, G., Chanthorn, P., Niezabitowski, M., Raja, R., Baleanu, D., Pratap, A.: Impulsive effects on stability and passivity analysis of memristor-based fractional-order competitive neural networks. Neurocomputing 417, 290–301 (2020)

Rajchakit, G., Sriraman, R., Samidurai, R.: Dissipativity analysis of delayed stochastic generalized neural networks with Markovian jump parameters. Int. J. Nonlinear Sci. Numer. Simul. (2021). https://doi.org/10.1515/ijnsns-2019-0244

Zhu, Q.X., Cao, J.D.: Mean-square exponential input-to-state stability of stochastic delayed neural networks. Neurocomputing 131, 157–163 (2014)

Zhu, Q.X., Cao, J.D., Rakkiyappan, R.: Exponential input-to-state stability of stochastic Cohen-Grossberg neural networks with mixed delays. Nonlinear Dyn. 79(2), 1085–1098 (2015)

Zhou, W.S., Teng, L.Y., Xu, D.Y.: Mean-square exponentially input-to-state stability of stochastic Cohen–Grossberg neural networks with time-varying delays. Neurocomputing 153, 54–61 (2015)

Liu, D., Zhu, S., Chang, W.T.: Mean square exponential input-to-state stability of stochastic memristive complex-valued neural networks with time varying delay. Int. J. Syst. Sci. 48(9), 1966–1977 (2017)

Isokawa, T., Kusakabe, T., Matsui, N., Peper, F.: Quaternion Neural Network and Its Application. Springer, Berlin (2003)

Luo, L.C., Feng, H., Ding, L.J.: Color image compression based on quaternion neural network principal component analysis. In: Proceedings of the 2010 International Conference on Multimedia Technology, ICMT 2010, China (2010)

Shu, H.Q., Song, Q.K., Liu, Y.R., Zhao, Z.J., Alsaadi, F.E.: Global μ-stability of quaternion-valued neural networks with non-differentiable time-varying delays. Neurocomputing 247, 202–212 (2017)

Liu, Y., Zhang, D.D., Lu, J.Q.: Global exponential stability for quaternion-valued recurrent neural networks with time-varying delays. Nonlinear Dyn. 87(1), 553–565 (2017)

Chen, X.F., Li, Z.S., Song, Q.K., Hua, J., Tan, Y.S.: Robust stability analysis of quaternion-valued neural networks with time delays and parameter uncertainties. Neural Netw. 91, 55–65 (2017)

Rajchakit, G., Sriraman, R.: Robust passivity and stability analysis of uncertain complex-valued impulsive neural networks with time-varying delays. Neural Process. Lett. 53(1), 581–606 (2021)

Rajchakit, G., Kaewmesri, P., Chanthorn, P., Sriraman, R., Samidurai, R., Lim, C.P.: Global stability analysis of fractional-order quaternion-valued bidirectional associative memory neural networks. Mathematics 8(5), 801 (2020)

Rajchakit, G., Chanthorn, P., Kaewmesri, P., Sriraman, R., Lim, C.P.: Global Mittag-Leffler stability and stabilization analysis of fractional-order quaternion-valued memristive neural networks. Mathematics 8(3), 422 (2020)

Tu, Z.W., Cao, J.D., Alsaedi, A., Hayat, T.: Global dissipativity analysis for delayed quaternion-valued neural networks. Neural Netw. 89, 97–104 (2017)

Liu, J., Jian, J.G.: Global dissipativity of a class of quaternion-valued BAM neural networks with time delay. Neurocomputing 349, 123–132 (2019)

Qi, X.N., Bao, H.B., Cao, J.D.: Exponential input-to-state stability of quaternion-valued neural networks with time delay. Appl. Math. Comput. 358, 382–393 (2019)

Huo, N.N., Li, B., Li, Y.K.: Existence and exponential stability of anti-periodic solutions for inertial quaternion-valued high-order Hopfield neural networks with state-dependent delays. IEEE Access 7, 60010–60019 (2019)

Chanthorn, P., Rajchakit, G., Kaewmesri, P., Sriraman, R., Lim, C.P.: A delay-dividing approach to robust stability of uncertain stochastic complex-valued Hopfield delayed neural networks. Symmetry 12(5), 683 (2020)

Chanthorn, P., Rajchakit, G., Thipcha, J., Emharuethai, C., Sriraman, R., Lim, C.P., Ramachandran, R.: Robust stability of complex-valued stochastic neural networks with time-varying delays and parameter uncertainties. Mathematics 8(5), 742 (2020)

Sriraman, R., Rajchakit, G., Lim, C.P., Chanthorn, P., Samidurai, R.: Discrete-time stochastic quaternion-valued neural networks with time delays: an asymptotic stability analysis. Symmetry 12(6), 936 (2020)

Humphries, U., Rajchakit, G., Kaewmesri, P., Chanthorn, P., Sriraman, R., Samidurai, R., Lim, C.P.: Stochastic memristive quaternion-valued neural networks with time delays: an analysis on mean square exponential input-to-state stability. Mathematics 8(5), 815 (2020)

Acknowledgements

Not applicable.

Funding

This work is supported by the Science Research Fund of Education Department of Yunnan Province of China under Grant 2018JS517.

Contributions

The authors contributed equally to the writing of this paper. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dai, L., Hou, Y. Mean-square exponential input-to-state stability of stochastic quaternion-valued neural networks with time-varying delays. Adv Differ Equ 2021, 362 (2021). https://doi.org/10.1186/s13662-021-03509-3
