Abstract

The Nagel–Schreckenberg vehicular traffic model is extended to analyse a system of vehicles moving on a scale-free network to study extreme events in them. The dependence of the free-flow and congestion states on the density of vehicles is determined. In particular, a free-flow state at low vehicular density is observed and it gradually transitions to a congested state at higher density. Using detrended fluctuation analysis, it is shown that the flux of vehicles are long range correlated in a regime of low density. At higher densities, the flux becomes uncorrelated. We study the recurrence interval distribution for extreme events and the probability for its occurrence at low and high vehicular density regimes. It is shown that the occurrence probability for extreme events is independent of the degree of the node or the threshold used for defining extreme events

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The vehicular traffic flow in a network of roads is generally identified as an example of a complex system and it has remained an active area of research since the last four decades [1,2,3,4,5]. As a complex system, both interacting random walk type models and realistic ab initio traffic models have shown many symptoms of complexity such as the occurrence of phase transitions upon variation of some control parameter [6,7,8]. Most well-studied phenomenon is that of jamming transition, in which case, the free-flow state changes to a jammed state. It occurs in several models of traffic flow though there have been debates around the question if the models display a smooth or an abrupt transition. Most often, the control parameter is the density of vehicles, a measure of number of vehicles in a given area. For low-density values, free flow is observed and beyond a critical value of density a dynamical phase transition occurs to a jammed state. While many models capture this ingredient, in the last two decades experiments have also revealed the existence of phase transitions in observed traffic flow [9,10,11,12], including time-organisation of traffic [13]. Recently, it was observed in real traffic that the occurrence of these transitions and the time interval between their occurrences is essentially a random process [14]. However, recent trends towards automated driving have inspired adaptive cruise control models for understanding the features of traffic flow in a regime in which both automated driving and human drivers co-exist [15].

Traffic flow is a practical example of an interacting many-particle system that is typically out of equilibrium. Apart from the inherent academic interest in statistical physics of phase transitions in real traffic flows, modelling traffic flows is an important tool in designing new transport facilities, urban planning, prediction and prevention of accidents, improving efficient mobility and in reducing congestion. The traffic models are capable of displaying non-trivial emergent phenomena such as congestion or extreme events. They also provide an algorithm to capture such complex and nonlinear dynamics of traffic systems that might not be easily evident from field research or purely analytical methods.

Congestion is one among the many emergent phenomena in the case of dynamics of many agents (vehicles) on complex networks [6]. Congestion leads to huge delays and even cascading effects on road transportation networks. Typically, congestion emerges in an interacting system when a parameter controlling some coarse-grained measure such as the density of vehicles is varied. At a critical value of the parameter, flux of vehicles through the network decays to zero and effectively all the vehicles are stranded. A typical scenario could be as follows: a large network is defined on which random walkers execute dynamics. Rules of walking algorithm could, in general, include birth and death probabilities of walkers at every node, transition probability from one node to another that could depend on existing traffic at the destination node. Under such conditions, congestion transition appears as one possible outcome. In this scenario, if density of vehicles \(\rho \) is tuned, then the system displays free flow of vehicles for \(\rho < \rho _c\), but transitions to congestion (nearly all vehicles cease to move) for \(\rho > \rho _c\), where \(\rho _c\) is the critical parameter. This is often called the congestion transition and could be thought of as a grid failure in which network effectively loses its primary functionality. As pointed out before, the congestion aspect of traffic systems has been widely investigated both in terms of models and, less frequently, using field data.

On the other hand, a most commonly encountered situation is that of localized traffic snarls that does not necessarily lead to grid or network failure in its entirety. In a recent work, the recovery from such traffic snarls on scale-free networks has been studied using real traffic data showing the presence of three timescales in it [16]. In this work, the focus is on such localized snarls called extreme events, due to the fact that such events are typically associated with the tails of appropriate probability distribution functions. Extreme events have been studied before using several variants of random walkers as agents on complex networks [17]. In Refs. [17, 18], extreme events are defined as events whose magnitude crosses a threshold. The threshold itself is defined in terms of typical flux through a node of the network. It turned out that, in such a setting of random walkers on scale-free networks, probabilities for the occurrence of extreme events are higher on small degree nodes compared to hubs [17]. This counter-intuitive result appears to be generic for uncorrelated random walkers and is robust against changes to routing algorithms and threshold used to classify extreme events. However, an equivalent result for correlated walkers or agents is not known yet. One of the motivations for this work is to examine the extreme event properties for the dynamics of interacting and correlated particles. It must be pointed out that recently large deviation theory was applied to study the extreme values of traffic flow in the NS model and corresponding rate function was obtained [19].

In the case of interacting particles, another question of interest is the distribution of return intervals or recurrence intervals \(\tau \) between successive occurrences of extreme events. If the successive intervals are uncorrelated, then the recurrence distribution is exponential, \(\exp (-\tau /\langle \tau \rangle )\). However, for long range correlated time series with auto-correlation exponent \(\gamma \), the recurrence distribution is a product of a power-law term and a stretched exponential term [20]. Many naturally occurring systems exhibit the property of long-term correlations, as observed in several of hydrological, climate and meteorological data, physiological and IP packet transmission data [21,22,23]. Such correlations have been argued to represent a natural mechanism for the clustering of extreme events [24]. These statistical techniques constitute a powerful set of tools to analyse long-memory time series and understand patterns in the occurrence of accidents, collisions and overloading of infrastructure such as bridges. In time series for which direct estimation of autocorrelation exponent is ambiguous, probing extreme events is an indirect means of inferring long range correlations. In this work, we apply detrended fluctuation analysis to quantify long range correlation exponent \(\alpha \), which is related to the auto-correlation exponent \(\gamma \) (defined below in Eq. 2). It is of interest to understand how recurrence distributions depend on the coarse-grained parameters such as the vehicular density \(\rho \) that control the dynamical behaviour of the entire traffic system.

To understand the properties of extreme events of correlated events on complex networks, we consider a widely studied cellular automata based microscopic model, namely, the Nagel–Schreckenberg (NS) model [25] for vehicular traffic on roads. Along with the behavior of vehicles, infrastructure such as interconnecting roads and junctions play an important role in modelling realistic traffic and congestion patterns. Over the last 2 decades, complex networks [26] has become the unifying framework to understand the underlying network induced effects on the dynamics of traffic flow. Hence, in this work, NS model is implemented on a scale-free network and the resultant dynamics of multiple agents is simulated to study the properties of extreme events, namely, the recurrence distribution and occurrence probability of extreme events.

In Sect. 2, the basic NS model structure and its implementation on a complex network is discussed, In Sects. 3 and 4, simulation results for the flux-density plot, time series of flux flowing through a node, its autocorrelations and DFA exponents are presented. The results for extreme event recurrence distribution and probability for the occurrence of extreme events are discussed in Sect. 5. Finally, a summary is presented in Sect. 6.

2 Nagel–Schreckenberg model

The Nagel–Schreckenberg model is a well-known cellular automaton model for simulating freeway traffic [25]. It is a discrete-space, discrete-time stochastic model and defines a set of rules for vehicle movement on a one-dimensional array of L sites with periodic boundary conditions. Each site contains either one or no vehicle on it. The model is initialized with a fixed density of vehicles, \(\rho =M/L\), where M is the total number of vehicles. Both M and \(\rho \) remain constant throughout the simulation. Of the M vehicles on the lattice, we frame rules for the dynamics of n-th vehicle as follows: it moves with integer velocity \(v_n\), such that \(0 \le v_n < v_{max}\), and it can occupy integer valued position \(x_n\) such that \( 1 \le x_n \le L\). As time changes \(t \xrightarrow {} t+1\), the position of M vehicles on the lattice is updated in parallel according to the following rules :

Acceleration—\(v_n \xrightarrow {} v_n + 1\) if \(v_n < v_{max}\), i.e., vehicle accelerates by one velocity unit until it reaches maximum velocity \(v_{max}\).

Deceleration—Let \(d_n\) be the distance between the nth vehicle and the vehicle in front of it. If \(d_n \le v_n\), the vehicle reduces its speed to \(d_n - 1\) to prevent collision.

Random braking—If \(v_n > 0\), the nth vehicle decreases its velocity by one, i.e., \(v_n \xrightarrow {} v_n - 1\) with probability p. This accounts for random fluctuations due to human behaviour or external conditions, and introduces stochasticity in the model.

Vehicle movement—Each vehicle moves forward according to the \(v_n\) calculated using the above steps, i.e., \(x_n \xrightarrow {} x_n + v_n\).

For the simulations presented in this paper, we use \(v_{max} = 5\), \(p=0.3\), and each time step corresponds to 1 second of real time [25]. The Nagel–Schreckenberg model replicates the main features of real traffic flow at a global level [27], including the formation of spontaneous traffic jams due to the random braking step which also makes the model stochastic.

2.1 NS model on a network

In reality, the topology of transportation systems is much more complex than a regular lattice, and in general includes multiple elements such as interconnected roads, traffic lights and multiple lanes. Detailed models have been developed to mimic traffic on more realistic topologies such as two-dimensional lattices [28] along with added features such as traffic lights and lane changing [5]. Most methods to model traffic dynamics on complex networks primarily employ a hopping mechanism, by which particles (vehicles) jump from one node to any of its connected neighbour according to a set of rules but do not have any dynamics on the edges. In this work, we aim to combine the closer to real-life representation of traffic networks on a complex network topology with the ability of the Nagel–Schreckenberg model to replicate characteristics of traffic flow using simple rules. Hence, we consider a complex network with E edges representing roads and N nodes representing junctions that connect these roads. The network is a directed multi-graph (a graph with possibility of multiple edges connecting the same pair of nodes) where each edge \(e_i\) consists of \(l_i\) lanes in each of the two possible directions, i.e., a total of \(2l_i\) lanes are present on edge \(e_i\). Each lane, representing the basic unit of NS model, corresponds to a one-dimensional lattice of L sites. Figure 1 shows a snapshot of a small region of the network at an arbitrary time. The red circles in this figure indicates vehicle in that site.

The figure shows a small section consisting of 4 nodes of the network at an arbitrary time. Nodes are connected with a bi-directional edge consisting of one lane in each direction. Each lane is a 1-D lattice of L sites (for representational purposes, here \(L=18\)). Red circles on a site indicates the presence of a vehicle at that site

3 Simulations

The simulation of NS model is performed as follows. The network is initialized with \(M =\rho L \sum _{i=1}^{E} 2l_i\) vehicles positioned randomly on the lanes, where the parameter \(\rho \) is the density of vehicles on the network. The total number of vehicles M remains constant for all times. Each vehicle is initialized with \(v_i = 0\) and a destination node. The vehicle follows a random walk until it reaches its destination. For every time step \(t \xrightarrow {} t + 1\), the arrangement of vehicles on each of these lanes is updated independently using the update rules in 2. If a vehicle reaches a junction, it turns onto a new randomly selected lane. If the new lane cannot accommodate it, the vehicle waits at the junction until it can turn. If the junction is its destination node, the vehicle is removed from the network and a new vehicle is initialized at a random location.

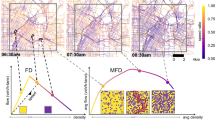

a The fraction of vehicles \(f_w\) waiting on all nodes averaged over all T time steps plotted as a function of \(\rho \), b Flux J, i.e., the number of vehicles passing through nodes per unit time, averaged over all N nodes plotted as a function of \(\rho \). Data are obtained from simulations with \(T = 50000\), \(N = 200\)

The Nagel–Schreckenberg model is simulated on a scale-free network with N nodes for T discrete time steps. A scale-free network is one whose degree distribution follows a power law of the form \(P(k) \sim k^{-\beta }\), where k is the degree and \(\beta \) is the exponent that might typically, but not always, lie in the range \(2 \le \beta \le 3\). Each edge is assumed to be equal in ’length’ and is taken to have \(L = 30\) lattice points in all the simulations.

A vehicle at any time step t can be in either of the two states—moving or waiting. A waiting vehicle is stuck at a junction since the lane it should turn on to next cannot accommodate it. As a result, the vehicles lined up behind this vehicle also enter the waiting state. Fraction of waiting vehicles can determine if the traffic is in free flow or congestion (jammed state).

In Fig. 2a, the fraction of waiting vehicles summed over all the nodes, \(f_w = \sum _{i=1}^{N} f_{i}\), is shown as a function of density \(\rho \) of vehicles. In this, \(f_{i}\) for node i is obtained by averaging the fraction of waiting vehicles over all time steps. If \(f_w \sim 1\), then it suggests a jammed state where most of the cars are not moving on the network, and \(f_w \sim 0\) suggests free-flow state on the network. As observed in Fig. 2a, upon increasing the density of vehicles, the fraction of waiting vehicles increases depicting a transition from free-flow to congestion state.

Another quantifier of traffic is the flux rate J, i.e., the number of vehicles passing through junctions (nodes) per unit time. Figure 2b shows the flux of vehicles transiting through the nodes per unit time, averaged over all the N nodes, varying with density \(\rho \). The average flux peaks at \(\rho = 0.4\) indicating the optimal density at which the flux of vehicles is maximum. However, any inference about the free flow of traffic must be drawn by considering not only the average flux, but also the fraction of cars in waiting state. A higher density would implicitly suggest more flux due to the larger number of vehicles on the move. However, a large fraction of waiting vehicles suggests an increasingly congestive state. Thus, taken together, Fig. 2a, b present a global view of the NS model dynamics on a network. It is relevant to point out that mean flux as shown in Fig. 2b has some support from the empirical measurements of traffic as well [29,30,31].

4 Long range correlations and DFA

In the last ten years, there is an increasing interest in the long range dependence in traffic flow [32,33,34,35,36]. The number of vehicles flowing through junctions (nodes) is an important quantity of interest in road traffic analysis. A surge in the flux of vehicles through junctions can point to an upcoming traffic jam. In this section, the time series of the flux of vehicles flowing through nodes is analysed. It is interesting to question whether successive values of flux are positively or negatively correlated or uncorrelated. A positive correlation would imply that large flux tends to be followed by larger values of flux (increasing trend) or small values followed by even smaller values (decreasing trend). For a sample time series data \(x_t, t = 1,\ldots ,T\), the auto-correlation is defined by,

where \({\bar{x}}\) is the mean of the time series, \(\sigma _x\) is the standard deviation and \(\langle ... \rangle \) represents the average taken over time steps \(t = 1,\ldots , T-s\) as \(T \rightarrow \infty \). For a time series with long term correlation, the auto-correlation function generally follows a power law decay of the form

where \(\gamma \) is the auto-correlation exponent. The correlation integral diverges in this range of \(\gamma \) leading to diverging moments. In contrast to the power-law of Eq. 2, for short range correlated time series the auto-correlation function is well approximated by an exponential decay of the form \(\exp (-s/\langle s \rangle )\).

In the original Nagel–Schreckenberg model, one time step corresponds to one unit of time [25]. In this work, sampling flux values at every time step leads to sparse auto-correlation values and hence, to analyse the auto-correlations, the value of flux is integrated over ten time steps, i.e., the values of flux at any instant of time t represents the sum of flux in the previous ten time steps. Furthermore, summing up the values every 10 time steps mimics a sampling rate closer to real-world traffic measurements. Direct estimation of \(\gamma \) using Eq. 2 can be ambiguous and unreliable due to non-stationarities in the data. Hence, the Detrended Fluctuation Analysis (DFA) [37, 38] is used to reliably estimate the DFA exponent \(\alpha \), and \(\gamma \) is obtained using the relation \(\gamma = 2 - 2\alpha \) [38]. Note that the regime of long range correlation with \(0< \gamma < 1\) maps to \(0.5< \alpha < 1\). DFA has been extensively studied and reported in the literature [38, 39], and hence, we recall only its basic steps here.

Time series of flux (J) through the node with highest degree, and sampled for, a 200 time steps (equivalent to 2000 time steps originally, integrated over intervals of 10 time steps), and b 2000 time steps (equivalent to 20,000 time steps originally, integrated over intervals of 10 time steps). Data taken from simulations with \(T = 50,000\) time steps on a scale-free network with \(N = 200\) nodes, averaged over 30 realisations

Given a time series x(t), to perform DFA, the first step is to create its cumulative sum given by

where \(\langle . \rangle \) is the mean. The profile y(t) is divided into windows of length L. In each window, a polynomial function \(y_{L,d}\) of order d is used for removing the trend. The case of \(d=1\) corresponds to DFA(1). Then, the fluctuation function F(L) is defined as,

where \(<.>\) represents averaging taken with respect to windows of identical length L. For long range correlated time series, it is expected that \(F(L) \sim L^{\alpha }\), where \(\alpha \) is the DFA exponent. If \(\alpha = 0.5\), then it implies absence of correlations in the data. For \(\alpha < 0.5\), the time series is said to display anti-persistence or anti-correlation. When \(0.5< \alpha < 1\), then it indicates presence of memory effect, often termed persistence or long range correlation. In the present problem, DFA is performed on the time series data of the number of vehicles passing through the node with highest degree. We focus on five values of density \(\rho \) for our analysis: \(\rho = 0.06, 0.2, 0.4, 0.6\) and 0.8. This choice corresponds to free-flow state at \(\rho =0.06\), while \(\rho =0.2, 0.4, 0.6\) and 0.8 correspond to increasing proportions of waiting vehicles. At \(\rho =0.4\), only about 40% of the vehicles are in free-flow state. As \(\rho \rightarrow 1\), the system is dominated by a large fraction of waiting vehicles and for \(\rho \sim 1\) extreme event analysis may not yield any useful results due to negligible flux of vehicles.

In Fig. 3a, a short time series of the flux passing through the highest degree node is shown for three different density values. To get a broader picture, a longer time series is displayed in Fig. 3b. To determine the autocorrelation exponent, we apply DFA technique to this time series. Figure 4 shows the DFA fluctuation function on log–log plot with a linear fit for the same set of density values as in Fig. 3a, along with \(\rho = 0.6\) and 0.8. It is observed that the flux corresponding to densities \(\rho =0.06\) and 0.2 displays long range correlation, while that for \(\rho =0.4\) displays nearly uncorrelated behaviour. Note that \(\rho =0.4\) is the density at which flux is maximum, as seen in Fig. 2b. Thus, using the relation between \(\alpha \) and \(\gamma \), we get \(\gamma = 0.66\) (\(\rho = 0.06\)), \(\gamma = 0.82\) (\(\rho = 0.2\)), \(\gamma =1.04\) (\(\rho = 0.4\)), \(\gamma =0.99\) (\(\rho =0.6\)) and \(\gamma =1.02\) (\(\rho =0.8\)). Presence of long-term correlation for \(\rho < 0.4\) indicates that the process exhibits long memory, i.e., the number of vehicles passing through a node are correlated in time. For positive correlations, surges in flux will most likely be followed by surges in flux, and declines in flux will likely be followed declines in flux. Essentially, highs and lows in the flux are clustered together in time, and are not random. For the uncorrelated case at \(\rho \gtrsim 0.4\), we can see that the surges in flux will be uncorrelated as well.

Detrended fluctuation function F(L) of the time series data of flux \(x_t\) through the node with highest degree. The fluctuation function is plotted against the window size L on a log–log scale. The red straight lines (with slopes annotated) are obtained from regression. Data are from simulations with \(T = 50,000\) time steps on a scale-free network with \(N = 200\) nodes, averaged over 30 simulations

5 Extreme events on nodes

5.1 Return interval distributions

A surge in the number of vehicles passing through a junction might be an indicator of impending traffic jams and even potential accidents. In this section, we examine the occurrence of extreme events in the flux of vehicles passing through nodes, specifically the return time interval distribution of extreme events. Given that for small values density, \(\rho < 0.4\), long range correlation was observed in the flux through a node (see Fig. 4), it can be anticipated that the recurrence interval distribution will show an enhanced weight at returns intervals \(r \approx 0\), when compared with the case when the flux is uncorrelated.

For time series of flux denoted by \(x_t\), an event at time \(t=\tau \) is called an extreme event (EE) if

where \(\mu \ge 0\) determines the threshold \(\phi \) to identify extreme events. In this, \({\bar{x}}\) is the mean flux and \(\sigma _x\) is the standard deviation in the flux. The return interval r is defined as the time interval between successive occurrences of extreme events. It is often convenient to change to scaled return interval defined as \(R = r/\langle r \rangle \), where \(\langle r \rangle \) is the mean return interval and will depend on \(\mu \). However, for the present purposes, we suppress the dependence on \(\mu \). It is known that for a long range correlated time series with autocorrelation exponent \(\gamma \), the recurrence distribution in the limit of \(R>> 1\) is a stretched exponential distribution given by [40],

where A and B are constants. In contrast to this, for an uncorrelated time series, the recurrence distribution is an exponential distribution given by

In essence, for long-term correlated time series, the probability P(R) behaves differently from the case of uncorrelated time series, especially as \(R \rightarrow 0\).

Probability distribution of return intervals for threshold \(\mu = 1\) on the log–log scale, fit to Eq. (6) (red curve) with parameters \(A,B, \gamma \), and Eq. (7) (blue curve) with parameters C, D. For (a) \(A=0.18, B=0.58, \gamma =0.70, C=0.09, D=1.13\), (b) \(A=0.3, B=0.58, \gamma =0.79, C=0.19, D=0.91\), (c) \(A=0.15, B=1.2, \gamma =1.24, C=0.20, D=0.91\), (d) \(A=0.13, B=1.43, \gamma =1.58, C=0.22, D=0.89\). Data taken from simulations with \(T = 50,000\) time steps on a scale-free network with \(N = 200\) nodes, averaged over 30 realisations

The return interval distributions obtained from simulations are compared with the analytical predictions given by Eqs. 6 and 7, for which the parameters A, B and \(\gamma \) are obtained through a regression procedure. For \(\rho =0.06\) in Fig. 5a, the stretched exponential fits the probability of return intervals better than the pure exponential, and the estimated value of exponent is \(\gamma =0.70\). This value of autocorrelation exponent agrees with \(\gamma = 2 - 2 \alpha =0.66\) found through the DFA technique. A similar agreement is observed for \(\rho =0.2\) in Fig. 5b with \(\gamma \) estimated through Eq. 6 is \(\gamma = 0.79\) and from DFA technique is \(\gamma = 0.82\). However, for \(\rho =0.4\) and \(\rho =0.6\) displayed in Fig. 5c, the simulation data are consistent with an exponential distribution rather than with stretched exponential distribution. The same was observed for \(\rho =0.8\).

A stretched exponential probability distribution for the return time intervals suggests that very short and very long return intervals are more frequent than they would be in uncorrelated data (in which case, the probability distribution would be purely exponential). Hence, it is evident that there is a bunching effect of extreme events for lower densities of vehicles. Remarkably, for \(\rho \gtrsim 0.4\), the extreme events in flux become uncorrelated. In Fig. 2b, a specific value of flux \(f_0\) corresponds to two different values of density, say, \(\rho =\rho _1\) and \(\rho =\rho _2\) such that \(\rho _2 > \rho _1\). Though the flux is identical at \(\rho _1\) and \(\rho _2\), the fraction of waiting cars is higher at \(\rho _2\) than at \(\rho _1\) (see Fig. 2a). Thus, any correlation built up by the vehicular flux is nullified by large fraction of vehicles that are not flowing. Hence, as the fraction of waiting vehicles increases, the temporal ordering of the correlated flux is disturbed leading to effectively uncorrelated behaviour as \(\rho \rightarrow 1\).

5.2 Probability for occurrence of extreme events

In this section, extreme events in time series data of number vehicles flowing through a node are analysed, with the definition of an extreme event given in Eq. 5. The probability of EE occurrence is easily computed as the ratio of total number of extreme events on a given node to the number of discrete time steps. As in previous sections, we focus on densities \(\rho = 0.06, 0.2, 0.4\) and 0.6 to calculate the probability of an extreme event \(g_k\) on a node with degree k as the average of the probability of extreme events on all nodes with degree k.

Figures 6, 7 and 8 show the probability for the occurrence of EE (for several values of extreme event thresholds) against the degree of nodes for \(\rho = 0.06, 0.2\) and 0.6, respectively. In all cases, the occurrence probability for EE is approximately uniform over the nodes, and no significant trend can be observed. Though not shown here, the occurrence probability for \(\rho =0.6\) also shows a similar behaviour. These are in contrast to the case of extreme events on a scale-free network with uncorrelated random walkers [17], in which case the extreme event probability has a strong dependence on the degree of the nodes. Hence, the property of uniform occurrence probability over all the nodes can be thought of as one possible signature of interactions among the vehicles. This would also imply that small degree nodes and hubs on the network have the same likelihood of encountering extreme events. It is also understandable that as threshold \(\mu \) increases, the EE probability is lower but not zero. The EE probability, for a fixed threshold \(\phi \), does not display pronounced dependence on density \(\rho \) as well.

All these observations lead us to the following broad result—that for NS model of traffic flow on a scale-free network, the extreme events on nodes, defined with respect to a specific threshold, are approximately equiprobable irrespective of the nodal degree. However, even if equiprobable on nodes, the return interval distribution reveals that for \(\rho < 0.4\), extreme events can be clustered together while for \(\rho > 0.4\) they are uncorrelated. Hence, this can be thought of as a transition from correlated to uncorrelated dynamics as density of vehicles increases.

6 Summary and conclusions

In summary, the celluar automata based rules of the Nagel–Schreckenberg model of traffic flow were extended to model traffic flow on a scale-free network. The observables such as the vehicular flux and the number of waiting cars were analysed to detect the extent of congestion or free flow in the traffic. On the network, we observe that a plot of flux against density (fundamental diagram) is qualitatively similar to the case of traffic flow on a simpler linear lattice. The vehicular flow is maximum at a special value of density, \(\rho =\rho _s=0.4\) in the case reported here. In this work, the focus is on the extreme events in this interacting many-particle system. The interactions arise due to finite capacity of the nodes (road junctions) to service the vehicles. Hence, a queue gets built up impeding free flow of traffic.

First, using the detrended fluctuation analysis technique, we have shown that the time series of flux for \(\rho < \rho _s\) is long range correlated, and at \(\rho >\rho _s\) is uncorrelated. Hence, at the density at which flux is maximum on the network, the flux is temporally uncorrelated. Indeed, for \(\rho > \rho _s\), flux continues to remain uncorrelated. Furthermore, we define extreme events on any specific node as an exceedence over the typical size of flux passing through that node. Based on this criteria, it is shown that the return interval distribution for extreme events agree with analytical results obtained earlier for long range correlated time series in the regime when \(\rho < \rho _s\). As anticipated based on DFA exponents, at \(\rho > \rho _s\), the return interval distribution reduces to pure exponential form corresponding to an uncorrelated process. Finally, the probabilities for the occurrence of extreme events on nodes are computed. Remarkably, it is observed that the occurrence probability is independent of the density of vehicles \(\rho \) and also the threshold used to determine extreme events. The extreme event probability is approximately uniform over the nodes of the network and does not depend on their degree. In this work, we have used a fixed value of the probability of random braking in the NS model, i.e., \(p=0.3\). While auto-correlations can change quantitatively as a function of p, we do not expect any qualitative change in the results due to changes in p except close to deterministic limits of \(p=0\) and \(p=1\). This can be explored in an extensive work on this problem.

It would be interesting to extend this work in several directions, in particular, examining the role of network topology in developing correlations among extreme events, and also if extreme events can disable the network functionality as a whole through appropriate modelling efforts. This work has provided initial pointers to the properties of extreme events in a traffic model. This line of study must be taken up using more refined Nagel–Schreckenberg class of models to examine the properties of extreme events in greater detail. Finally, results coming out of such extreme events study must also be compared with real traffic data.

References

F. van Wageningen-Kessels, H. van Lint, K. Vuik, S. Hoogendoorn, EURO J. Transport. Log. 4, 445 (2015)

I. Prigogine, R. Herman, Kinetic Theory of Vehicular Traffic (American Elsevier, New York, 1971)

S. Maerivoet, B. De Moor, Phys. Rep. 419, 1 (2005)

D. Helbing, Rev. Mod. Phys. 73, 1067 (2001)

D. Chowdhury, L. Santen, A. Schadschneider, Phys. Rep. 329, 199 (2000)

D. De Martino, L. DallAsta, G. Bianconi, M. Marsili, Phys. Rev. E 79, 015101(R) (2009)

S. Manfredi, E. Di Tucci, V. Latora, Phys. Rev. Lett. 120, 068301 (2018)

C. Dabrowski, Comput. Sci. Rev. 18, 10 (2015)

Shin-ichi Tadaki et al., New J. Phys. 15, 103034 (2013)

Y. Sugiyama et al., New J. Phys. 10, 033001 (2008)

A. Nakayama et al., New J. Phys. 11, 083025 (2009)

B.S. Kerner, Phys. Rev. Lett. 81, 3797 (1998)

G. Zeng et al., PNAS 116, 23 (2019)

Y. Dülgar et al., J. Intell. Trans. Sys. 24, 539 (2019)

B.S. Kerner, Phys. Rev. E 97, 042303 (2018)

L. Zhang et al., PNAS 116, 8673 (2019)

M.S. Vimal Kishore, A. Santhanam, R.E. Amritkar, Phys. Rev. Lett. 106, 188701 (2011)

M.S. Vimal Kishore, A. Santhanam, R.E. Amritkar, Phys. Rev. E 85, 056120 (2012)

W. Staffeldt, A.K. Hartmann, Phys. Rev. E 100, 062301 (2019)

M.S. Santhanam, A. Holger Kantz, Phys. Rev. E 78, 051113 (2008)

Jan. Beran, Statistics for Long-Memory Processes (Vol. 61), (Chapman and Hall/CRC, 1994)

Wilfredo Palma, Long-Memory Time Series: Theory and Methods (John Wiley & Sons, New York, 2007).

Vladas Pipiras and Murad S. Taqqu, Long Range Dependence and Self-Similarity (Vol. 45), (Cambridge University Press, 2017)

A. Bunde et al., Phys. Rev. Lett. 94, 048701 (2005)

K. Nagel, M. Schreckenberg, J. Phys. I 2, 2221 (1992)

Réka. Albert, Albert-László. Barabsi, Rev. Mod. Phys. 74, 47 (2002)

W. Knospe, L. Santen, A. Schadschneider, M. Schreckenberg, Phys. Rev. E 70, 016115 (2004)

O. Biham, A. Middleton, D. Levine, Phys. Rev. A 46(10), R6124 (1992)

N. Geroliminis, C.F. Daganzo, Transport. Res. Part B Methodol. 42, 759 (2008)

B. Piccoli, A. Tosin, Encycl. Complex. Syst. Sci. 22, 9727 (2009)

B. Seibold et al., Netw. Heterog. Media 8, 745 (2013)

Y.D. Chen et al., Mod. Phys. Lett. B 22, 101 (2008)

M.R. Perati et al., J. Transp. Eng. 138, 1233 (2012)

P. Shang, Y. Lu, S. Kama, Phys. A 370, 769 (2006)

S. Feng et al., Phys. A 492, 639 (2018)

S. Chand, G. Aouad, V.V. Dixit, Transp. Res. Rec. 2616, 49 (2017)

R. Hardstone et al., Front. Physiol. 3, 450 (2012)

J.W. Kantelhardt et al., Phys. A 295, 441 (2001)

C.-K. Peng, S. Havlin, H.E. Stanley, A.E. Goldberg, Chaos 5, 82 (1995)

A. Bunde et al., Phys. A 330, 1 (2003)

Author information

Authors and Affiliations

Contributions

MSS introduced the problem, KG worked out the details and performed the simulations. Both KG and MSS wrote the manuscript.

Corresponding author

Rights and permissions

About this article

Cite this article

Gupta, K., Santhanam, M.S. Extreme events in Nagel–Schreckenberg model of traffic flow on complex networks. Eur. Phys. J. Spec. Top. 230, 3201–3209 (2021). https://doi.org/10.1140/epjs/s11734-021-00016-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1140/epjs/s11734-021-00016-0