Abstract

In this paper, we study Korovkin-type approximation for double sequences of positive linear operators defined on the space of all real-valued \(B\)-continuous functions via statistical convergence in the sense of power series methods instead of Pringsheim convergence. We present an application to which our new approximation theorem applies, although the previously studied theorems do not. In addition, we derive the rate of convergence in the proposed approximation theorem. Finally, we give a concluding remark on periodic functions.


1 INTRODUCTION AND PRELIMINARIES

The subject of Korovkin-type theory was initiated by Korovkin in 1960 in his pioneering work [24], and it has been widely studied since. Korovkin-type theory concerns the approximation of continuous functions by means of positive linear operators (see also [1, 24]). Many researchers have studied it (see, for example, [15, 18, 28, 29]). Moreover, the theory has been extended in various directions, such as relaxing the continuity of the functions or relaxing the notion of convergence. Aiming to improve the classical Korovkin theory, Badea et al. used the space of Bögel-type continuous (simply, \(B\)-continuous) functions in place of ordinary continuity [3, 4, 6]. From a different perspective, Gadjiev and Orhan [23] used the notion of statistical convergence to prove a Korovkin-type approximation theorem. Afterwards, this convergence and its variants were studied by several authors (see [14, 16, 17, 21, 26, 30, 35]).

First, let us recall the notion of Pringsheim convergence.

As usual, \(\mathbb{N}\) denotes the set of all natural numbers. A double sequence \(x=\{x_{m,n}\}\) is said to be Pringsheim convergent to \(L\) if, for every \(\varepsilon>0,\) there exists \(M=M(\varepsilon)\in\mathbb{N}\) such that \(\left|x_{m,n}-L\right|<\varepsilon\) whenever \(m,n>M.\) Here, \(L\) is called the Pringsheim limit of \(x\), and this is denoted by \(P-\lim_{m,n}x_{m,n}=L\) (see [27]). If there exists a positive number \(N\) such that \(\left|x_{m,n}\right|\leq N\) for all \((m,n)\in\mathbb{N}^{2}=\mathbb{N}\times\mathbb{N},\) then the double sequence \(x\) is called bounded. Notice that, unlike a convergent single sequence, a Pringsheim convergent double sequence need not be bounded.

Steinhaus [32] and Fast [22] independently introduced the notion of statistical convergence of sequences of real numbers. There are many variants of statistical convergence in the literature. Recently, Ünver and Orhan introduced statistical convergence with respect to power series methods in [33]. More recently, Yıldız, Demirci and Dirik [34] extended this notion of convergence to double sequences. Before turning to these statistical types of convergence, let us first recall the notions of natural density and statistical convergence.

Let \(K\) be a subset of \(\mathbb{N}_{0}.\) The natural density of \(K,\) denoted by \(\delta\left(K\right)\), is given by

\[\delta\left(K\right):=\lim_{n\rightarrow\infty}\frac{1}{n+1}\#\left\{k\leq n:k\in K\right\}\]

whenever the limit exists, where \(\#\left\{\cdot\right\}\) denotes the cardinality of a set. A sequence \(x=\left\{x_{n}\right\}\) is said to be statistically convergent to \(L\) provided that for every \(\varepsilon>0\) it holds

\[\lim_{n\rightarrow\infty}\frac{1}{n+1}\#\left\{k\leq n:\left|x_{k}-L\right|\geq\varepsilon\right\}=0.\]

This is denoted by \(st-\lim_{n}x_{n}=L.\) It is evident from the definition that every convergent sequence (in the usual sense) is statistically convergent to the same limit, while a statistically convergent sequence need not be convergent.
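The gap between ordinary and statistical convergence can be seen numerically. The following is a minimal sketch, not part of the original paper: the helper names (`density_estimate`, `exceptional_fraction`) and the test sequence are illustrative assumptions. It uses the indicator sequence of the perfect squares, which does not converge in the usual sense, and estimates the proportion of indices \(k\leq n\) at which the sequence stays at distance at least \(\varepsilon\) from \(0\).

```python
from math import isqrt


def density_estimate(indicator, n):
    """Return #{k <= n : indicator(k)} / (n + 1), a finite-n proxy for the natural density."""
    return sum(1 for k in range(n + 1) if indicator(k)) / (n + 1)


def is_square(k):
    """True when k is a perfect square (0, 1, 4, 9, ...)."""
    r = isqrt(k)
    return r * r == k


def x(k):
    """Illustrative sequence: 1 on perfect squares, 0 elsewhere (not convergent)."""
    return 1.0 if is_square(k) else 0.0


def exceptional_fraction(seq, limit, eps, n):
    """Proportion of indices k <= n with |seq(k) - limit| >= eps."""
    return density_estimate(lambda k: abs(seq(k) - limit) >= eps, n)


if __name__ == "__main__":
    # The proportion shrinks as n grows, which is consistent with st-lim x_k = 0.
    for n in (10**2, 10**3, 10**4):
        print(n, exceptional_fraction(x, 0.0, 0.5, n))
```

The printed proportions only approximate the limit in the definition, so they suggest rather than prove that the sequence is statistically convergent to \(0\).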

Let us turn our attention to the notion of statistical convergence for double sequences.

If \(E\subset\mathbb{N}_{0}^{2}=\mathbb{N}_{0}\times\mathbb{N}_{0},\) then \(E_{j,k}:=\) \(\left\{\left(m,n\right)\in E:m\leq j,n\leq k\right\}.\) The double natural density of \(E,\) denoted by \(\delta_{2}(E)\), is given by

\[\delta_{2}(E):=P-\lim_{j,k}\frac{1}{(j+1)(k+1)}\#E_{j,k}\]

whenever the limit exists ([25]). Let \(x=\left\{x_{m,n}\right\}\) be a double sequence of numbers. It is statistically convergent to \(L\) if, for every \(\varepsilon>0,\) the set

\[\left\{\left(m,n\right)\in\mathbb{N}_{0}^{2}:\left|x_{m,n}-L\right|\geq\varepsilon\right\}\]

has zero double natural density, in which case we write \(st_{2}-\lim_{m,n}x_{m,n}=L\) ([25]).

It follows from the definition that a Pringsheim convergent double sequence is statistically convergent to the same value, while a statistically convergent double sequence need not be Pringsheim convergent. Notice also that a statistically convergent double sequence need not be bounded.

Now we recall statistical convergence with respect to power series methods. First, let us turn our attention to the power series method itself.

In what follows, \(\left\{p_{m,n}\right\}\) will be a given non-negative real double sequence such that \(p_{00}>0\) and the corresponding power series

\[p(t,s):=\sum_{m,n=0}^{\infty}p_{m,n}t^{m}s^{n}\]

has a radius of convergence \(R\) with \(R\in\left(0,\infty\right]\) and \(t,s\in\left(0,R\right).\) If for all \(t,s\in\left(0,R\right),\) the limit

\[\lim_{t,s\rightarrow R^{-}}\frac{1}{p(t,s)}\sum_{m,n=0}^{\infty}p_{m,n}t^{m}s^{n}x_{m,n}=L\]

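exists, then \(x\) is said to be convergent in the sense of the power series method, and this is denoted by \(P_{p}^{2}-\lim x_{m,n}=L\) ([7]). It is worth pointing out that the method is regular if and only if

\[\lim_{t,s\rightarrow R^{-}}\frac{\sum_{m=0}^{\infty}p_{m,\nu}t^{m}}{p(t,s)}=0\quad\text{and}\quad\lim_{t,s\rightarrow R^{-}}\frac{\sum_{n=0}^{\infty}p_{\mu,n}s^{n}}{p(t,s)}=0\quad\text{for any }\mu,\nu\]

hold (see, e.g., [7]).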

Remark 1. Note first that, in the case \(R=1,\) the power series method coincides with the Abel summability method when \(p_{mn}=1\) and with the logarithmic summability method when \(p_{mn}=\frac{1}{\left(m+1\right)\left(n+1\right)}.\) In the case \(R=\infty,\) the power series method coincides with the Borel summability method when \(p_{mn}=\frac{1}{m!n!}.\)

In this article, the power series method is always assumed to be regular.
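To make the power series method concrete, the following minimal sketch evaluates the weighted mean \(\frac{1}{p(t,s)}\sum_{m,n}p_{m,n}t^{m}s^{n}x_{m,n}\) by truncating both series. The function names, the truncation length `terms`, and the test sequence \(x_{m,n}=(-1)^{m+n}\) are illustrative assumptions, not taken from the paper. For Abel weights \(p_{m,n}=1\) this mean equals \(\frac{(1-t)(1-s)}{(1+t)(1+s)}\), which tends to \(0\) as \(t,s\rightarrow 1^{-}\), although the sequence itself is not Pringsheim convergent.

```python
def power_series_mean(x, p, t, s, terms=400):
    """Truncated value of sum p(m,n) t^m s^n x(m,n) / sum p(m,n) t^m s^n.

    The truncation is only accurate when t**terms and s**terms are negligible.
    """
    num = 0.0
    den = 0.0
    tm = 1.0  # running value of t**m
    for m in range(terms):
        sn = 1.0  # running value of s**n
        for n in range(terms):
            w = p(m, n) * tm * sn
            num += w * x(m, n)
            den += w
            sn *= s
        tm *= t
    return num / den


def abel_weight(m, n):
    """Abel method: p_{m,n} = 1 (radius of convergence R = 1)."""
    return 1.0


def log_weight(m, n):
    """Logarithmic method: p_{m,n} = 1 / ((m + 1)(n + 1)) (R = 1)."""
    return 1.0 / ((m + 1) * (n + 1))


def alternating(m, n):
    """Illustrative bounded double sequence with no Pringsheim limit."""
    return 1.0 if (m + n) % 2 == 0 else -1.0


if __name__ == "__main__":
    for t in (0.5, 0.9, 0.95):
        print(t, power_series_mean(alternating, abel_weight, t, t, terms=600))
```

Values of \(t,s\) closer to \(1\) need a larger `terms`, since the neglected tail is of order \(t^{\text{terms}}\).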

Before giving the next definition, it is worth pointing out that Ünver and Orhan [33] recently introduced the \(P_{p}\)-density of \(E\subset\mathbb{N}_{0}\) and the notion of \(P_{p}\)-statistical convergence for single sequences. They showed that statistical convergence and statistical convergence in the sense of power series methods are incompatible. In view of their work, Yıldız, Demirci and Dirik [34] more recently introduced the definitions of the \(P_{p}^{2}\)-density of \(F\subset\mathbb{N}_{0}^{2}=\mathbb{N}_{0}\times\mathbb{N}_{0}\) and of \(P_{p}^{2}\)-statistical convergence for double sequences:

Definition 1 [34]. Let \(F\subset\mathbb{N}_{0}^{2}.\) If the limit

\[\delta_{P_{p}}^{2}\left(F\right):=\lim_{t,s\rightarrow R^{-}}\frac{1}{p(t,s)}\sum_{\left(m,n\right)\in F}p_{m,n}t^{m}s^{n}\]

exists, then \(\delta_{P_{p}}^{2}\left(F\right)\) is called the \(P_{p}^{2}\)-density of \(F.\) Notice that it is not difficult to see from the definitions of a power series method and of the \(P_{p}^{2}\)-density that \(0\leq\delta_{P_{p}}^{2}\left(F\right)\leq 1\) whenever it exists.

Definition 2 [34]. Let \(x=\left\{x_{m,n}\right\}\) be a double sequence. Then, \(x\) is said to be statistically convergent to \(L\) in the sense of the power series method (\(P_{p}^{2}\)-statistically convergent) if for any \(\varepsilon>0\)

\[\lim_{t,s\rightarrow R^{-}}\frac{1}{p(t,s)}\sum_{\left(m,n\right)\in F_{\varepsilon}}p_{m,n}t^{m}s^{n}=0,\]

where \(F_{\varepsilon}=\left\{\left(m,n\right)\in\mathbb{N}_{0}^{2}:\left|x_{m,n}-L\right|\geq\varepsilon\right\},\) that is, \(\delta_{P_{p}}^{2}\left(F_{\varepsilon}\right)=0\) for any \(\varepsilon>0.\) This is denoted by \(st_{P_{p}}^{2}\)-\(\lim x_{m,n}=L.\)
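As a rough numerical illustration of the \(P_{p}^{2}\)-density, the sketch below evaluates the ratio \(\frac{1}{p(t,s)}\sum_{(m,n)\in F}p_{m,n}t^{m}s^{n}\) at fixed \(t,s\) for Abel weights \(p_{m,n}=1\). The helper names, the truncation length `terms`, and the two example sets are assumptions made for illustration only. For the set of pairs with both coordinates even, the ratio equals \(\frac{1}{(1+t)(1+s)}\), so its limit as \(t,s\rightarrow 1^{-}\) is \(1/4\); for the set of pairs of perfect squares the ratio decreases towards \(0\).

```python
from math import isqrt


def pp2_density_at(indicator, p, t, s, terms=400):
    """Truncated ratio sum_{(m,n) in F} p(m,n) t^m s^n / sum_{all (m,n)} p(m,n) t^m s^n.

    `indicator(m, n)` returns True exactly for the pairs (m, n) in the set F.
    """
    num = 0.0
    den = 0.0
    tm = 1.0  # running value of t**m
    for m in range(terms):
        sn = 1.0  # running value of s**n
        for n in range(terms):
            w = p(m, n) * tm * sn
            den += w
            if indicator(m, n):
                num += w
            sn *= s
        tm *= t
    return num / den


def abel_weight(m, n):
    """Abel weights p_{m,n} = 1 (an illustrative choice of regular method)."""
    return 1.0


def both_even(m, n):
    return m % 2 == 0 and n % 2 == 0


def both_squares(m, n):
    return isqrt(m) ** 2 == m and isqrt(n) ** 2 == n


if __name__ == "__main__":
    for t in (0.8, 0.9, 0.95):
        print(t,
              pp2_density_at(both_even, abel_weight, t, t, terms=500),
              pp2_density_at(both_squares, abel_weight, t, t, terms=500))
```

The density itself is the limit of these ratios, so a single evaluation at fixed \(t,s\) is only indicative.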

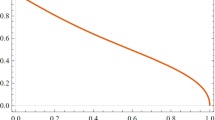

Example 1. Let \(\left\{p_{m,n}\right\}\) be defined as follows

and take the sequence \(\left\{s_{m,n}\right\}\) defined by

We calculate that, since for any \(\varepsilon>0,\)

\(\left\{s_{m,n}\right\}\) is \(P_{p}^{2}\)-statistically convergent to \(1.\) However, the sequence \(\left\{s_{m,n}\right\}\) is not statistically convergent to \(1.\)

We now pause to collect some basic notions and notations including \(B\)-continuity.

Bögel [10–12] first introduced the definition of \(B\)-continuity as follows.

Let \(X_{1}\) and \(X_{2}\) be compact subsets of the real numbers, and let \(D=X_{1}\times X_{2}.\) Then, a function \(g:D\rightarrow\) \(\mathbb{R}\) is called \(B\)-continuous at a point \(\left(x,y\right)\in D\) provided that for every \(\varepsilon>0,\) there exists a positive number \(\delta=\delta(\varepsilon)\) such that \(\left|\Delta_{x,y}\left[g\left(u,v\right)\right]\right|<\varepsilon\) for any \(\left(u,v\right)\in D\) with \(\left|u-x\right|<\delta\) and \(\left|v-y\right|<\delta.\) The symbol \(\Delta_{x,y}\left[g\left(u,v\right)\right]\) stands for the mixed difference of \(g\) defined by

\[\Delta_{x,y}\left[g\left(u,v\right)\right]=g\left(u,v\right)-g\left(u,y\right)-g\left(x,v\right)+g\left(x,y\right).\]

As usual, the symbol \(C_{b}(D)\) stands for the space of all \(B\)-continuous functions on \(D\), while \(C(D)\) and \(B(D)\) denote the space of all continuous (in the usual sense) functions and the space of all bounded functions on \(D\), respectively. The supremum norm on the space \(B(D)\) is given by

\[\left|\left|g\right|\right|:=\sup_{(x,y)\in D}\left|g(x,y)\right|\quad(g\in B(D)).\]

Then, it can be easily seen that \(C(D)\subset C_{b}(D).\) Moreover, for any function of the type \(g(u,v)=g_{1}(u)+g_{2}(v),\) even an unbounded one, we have \(\Delta_{x,y}\left[g\left(u,v\right)\right]=0\) for all \((x,y),(u,v)\in D,\) so such a function is \(B\)-continuous.
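The mixed difference is easy to experiment with. The sketch below assumes the standard definition \(\Delta_{x,y}\left[g\left(u,v\right)\right]=g(u,v)-g(u,y)-g(x,v)+g(x,y)\); the two sample functions are illustrative choices and do not come from the paper. It checks that an additively separable function, here an unbounded one on \([0,1]\times[0,1]\), has vanishing mixed difference, while for \(g(u,v)=uv\) the mixed difference equals \((u-x)(v-y)\).

```python
def mixed_difference(g, x, y, u, v):
    """Mixed difference of g between the points (x, y) and (u, v)."""
    return g(u, v) - g(u, y) - g(x, v) + g(x, y)


def additive(u, v):
    """g(u, v) = g1(u) + g2(v) with g1(u) = 1/u for u > 0 and g1(0) = 0.

    g1 is unbounded on [0, 1], so g is unbounded on the unit square.
    """
    g1 = 1.0 / u if u > 0 else 0.0
    return g1 + v * v


def product(u, v):
    """g(u, v) = u * v, whose mixed difference is (u - x) * (v - y)."""
    return u * v


if __name__ == "__main__":
    # Up to rounding, the first value is 0 even though g is huge near u = 0.
    print(mixed_difference(additive, 0.25, 0.5, 0.001, 0.75))
    print(mixed_difference(product, 0.25, 0.5, 0.75, 1.0))
```

This is why \(B\)-continuity does not force boundedness: the mixed difference never sees the univariate parts \(g_{1}\) and \(g_{2}\).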

We recall that the following lemma for \(B\)-continuous functions was first proved by Badea et al. [4].

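Lemma 1 ([4]). If \(g\in C_{b}(D),\) then, for every \(\varepsilon>0,\) there are positive numbers \(A_{1}(\varepsilon)=A_{1}(\varepsilon,g)\) and \(A_{2}(\varepsilon)=A_{2}(\varepsilon,g)\) such that the inequality

\[\left|\Delta_{x,y}\left[g\left(u,v\right)\right]\right|\leq\frac{\varepsilon}{3}+A_{1}(\varepsilon)\left(u-x\right)^{2}+A_{2}(\varepsilon)\left(v-y\right)^{2}\]

holds for all \((x,y),\) \((u,v)\in D.\)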

The paper is organized as follows. In the next section, we study Korovkin-type approximation for double sequences of positive linear operators defined on the space of all real-valued \(B\)-continuous functions via statistical convergence in the sense of power series methods instead of Pringsheim convergence. Then, we present an application to which our new approximation theorem applies, although the previously studied theorems do not. In Section 3, we compute the rate of convergence in our approximation theorem. Finally, we give a concluding remark on periodic functions in Section 4.

2 A KOROVKIN-TYPE APPROXIMATION THEOREM

Let \(T\) be a linear operator from \(C_{b}\left(D\right)\) into \(B\left(D\right).\) As usual, we say that \(T\) is a positive linear operator if \(g\geq 0\) implies \(T\left(g\right)\geq 0.\) The value of \(T\left(g\right)\) at a point \(\left(x,y\right)\in D\) is denoted by \(T(g(u,v);x,y)\) or, briefly, \(T(g;x,y).\)

Here and throughout the paper, for fixed \((x,y)\in D\) and \(g\in C_{b}(D),\) the function \(G_{x,y}\) is defined as follows:

\[G_{x,y}(u,v)=g(u,y)+g(x,v)-g(u,v)\quad\text{for }(u,v)\in D.\tag{3}\]

It is easy to verify that the \(B\)-continuity of \(g\) implies the \(B\)-continuity of \(G_{x,y}\) for every fixed \((x,y)\in D\), since

\[\Delta_{x,y}\left[G_{x,y}\left(u,v\right)\right]=-\Delta_{x,y}\left[g\left(u,v\right)\right]\]

holds for all \((x,y),\) \((u,v)\in D\). The following test functions are also used throughout the paper:

\[e_{0}(u,v)=1,\quad e_{1}(u,v)=u,\quad e_{2}(u,v)=v,\quad e_{3}(u,v)=u^{2}+v^{2}.\]

Badea et al. [4] gave the following Korovkin-type approximation theorem via \(B\)-continuity.

Theorem 1 [4]. Let \(\{T_{m,n}\}\) be a sequence of positive linear operators acting from \(C_{b}\left(D\right)\) into \(B\left(D\right).\) Assume that the following conditions hold:

\((i)\) \(T_{m,n}(e_{0};x,y)=1\) for all \((x,y)\in D\) and \((m,n)\in\mathbb{N}^{2},\)

\((ii)\) \(T_{m,n}(e_{1};x,y)=e_{1}(x,y)+u_{m,n}(x,y),\)

\((iii)\) \(T_{m,n}(e_{2};x,y)=e_{2}(x,y)+v_{m,n}(x,y),\)

\((iv)\) \(T_{m,n}(e_{3};x,y)=e_{3}(x,y)+w_{m,n}(x,y),\)

where \(\{u_{m,n}(x,y)\},\) \(\{v_{m,n}(x,y)\}\) and \(\{w_{m,n}(x,y)\}\) converge to zero uniformly on \(D\) as \(m,n\rightarrow\infty\) (in any manner). Then, the sequence \(\{T_{m,n}\left(G_{x,y};x,y\right)\}\) converges uniformly to \(g(x,y)\) with respect to \((x,y)\in D,\) where \(G_{x,y}\) is given by (3).

It is worth noting that if the condition \((i)\) is replaced by

\((i^{\prime})\) \(T_{m,n}(e_{0};x,y)=1+\alpha_{m,n}(x,y)\)

where \(\{\alpha_{m,n}(x,y)\}\) converges to zero uniformly on \(D\) as \(m,n\rightarrow\infty\) (in any manner), then one obtains the pointwise, but not uniform, convergence of the sequence \(\{T_{m,n}\left(G_{x,y}\right)\}\) to \(g\) for any \(g\in C_{b}(D).\)
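The role of \(G_{x,y}\) in Theorem 1 can be illustrated numerically. The following sketch assumes the standard definition \(G_{x,y}(u,v)=g(u,y)+g(x,v)-g(u,v)\) and uses the classical bivariate Bernstein operator on \([0,1]\times[0,1]\) as a stand-in for \(T_{m,n}\); this operator is an illustrative choice and is not the Bernstein–Stancu-type operator considered later in the paper. All function names are hypothetical. The sketch estimates the error \(\sup\left|T_{m,n}(G_{x,y};x,y)-g(x,y)\right|\) on a grid, and it also shows that an unbounded function of the form \(g_{1}(u)+g_{2}(v)\) is reproduced up to rounding, since in that case \(G_{x,y}\) is the constant \(g(x,y)\).

```python
from math import comb, cos, sin


def bernstein_weights(m, x):
    """Bernstein basis values C(m, i) x^i (1 - x)^(m - i) for i = 0..m (they sum to 1)."""
    return [comb(m, i) * x**i * (1 - x) ** (m - i) for i in range(m + 1)]


def bernstein_of_G(g, m, n, x, y):
    """Apply the bivariate Bernstein operator to G_{x,y} and evaluate at (x, y)."""
    wx = bernstein_weights(m, x)
    wy = bernstein_weights(n, y)
    total = 0.0
    for i, a in enumerate(wx):
        u = i / m
        for j, b in enumerate(wy):
            v = j / n
            # G_{x,y}(u, v) = g(u, y) + g(x, v) - g(u, v)  (assumed definition)
            total += a * b * (g(u, y) + g(x, v) - g(u, v))
    return total


def sup_error(g, m, n, grid=11):
    """Maximum of |T(G_{x,y}; x, y) - g(x, y)| over a uniform grid of [0, 1]^2."""
    pts = [k / (grid - 1) for k in range(grid)]
    return max(abs(bernstein_of_G(g, m, n, x, y) - g(x, y)) for x in pts for y in pts)


def smooth(u, v):
    return sin(3 * u) * cos(2 * v)


def unbounded_additive(u, v):
    """g1(u) + g2(v) with g1(u) = 1/u for u > 0, g1(0) = 0: unbounded but B-continuous."""
    g1 = 1.0 / u if u > 0 else 0.0
    return g1 + v


if __name__ == "__main__":
    for k in (5, 10, 20, 40):
        print(k, sup_error(smooth, k, k))
    print(bernstein_of_G(unbounded_additive, 10, 10, 0.3, 0.7), unbounded_additive(0.3, 0.7))
```

The grid maximum is only a lower estimate of the true supremum norm, so the printed errors indicate the trend rather than exact values.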

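Now we can give the main result of the present paper.

Theorem 2. Let \(\{T_{m,n}\}\) be a sequence of positive linear operators acting from \(C_{b}\left(D\right)\) into \(B\left(D\right).\) Assume that the following conditions hold:

\[\delta_{P_{p}}^{2}\left(\left\{\left(m,n\right):T_{m,n}(e_{0};x,y)=e_{0}\left(x,y\right)\ \text{for all }(x,y)\in D\right\}\right)=1\tag{4}\]

and

\[st_{P_{p}}^{2}-\lim\left|\left|T_{m,n}(e_{i})-e_{i}\right|\right|=0,\quad i=1,2,3.\tag{5}\]

Then, for all \(g\in C_{b}(D),\) we have

\[st_{P_{p}}^{2}-\lim\left|\left|T_{m,n}(G_{x,y})-g\right|\right|=0,\tag{6}\]

where \(G_{x,y}\) is given by (3).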

Proof. Let \((x,y)\in D\) and \(g\in C_{b}\left(D\right)\) be fixed. Putting

thanks to (4), we get that

Using the \(B\)-continuity of the function \(G_{x,y}\) given by (3), Lemma 1 implies that, for every \(\varepsilon>0,\) there exist two positive numbers \(A_{1}(\varepsilon)\) and \(A_{2}(\varepsilon)\) such that

holds for every \((u,v)\in D.\) Also, thanks to (4), we can easily see that

holds for all \(\left(m,n\right)\in S.\) Since \(T_{m,n}\) is linear and positive, for all \(\left(m,n\right)\in S,\) it follows from (9) and (10) that

where \(A(\varepsilon)=\max\{A_{1}(\varepsilon),A_{2}(\varepsilon)\}\), and hence,

holds for all \(\left(m,n\right)\in S.\) Now, taking the supremum over \((x,y)\in D\) on both sides of inequality (11), we have for all \(\left(m,n\right)\in S\)

For a given \(\varepsilon^{\prime}>0,\) choose \(\varepsilon>0\) such that \(\varepsilon<3\varepsilon^{\prime}\) and define

Thanks to (11), we get \(K\cap S\subseteq\bigcup_{i=1}^{3}(K_{i}\cap S),\) since

and, from hypotheses (5), we get \(\delta_{P_{p}}^{2}\left(K_{i}\right)=0,\) \(i=1,2,3,\) yielding

Moreover,

Thanks to (8) and (13), we can easily see that

which means

\(\Box\)

It is known that, for some \(g\in C_{b}(D),\) the function \(g\) may be unbounded on the compact set \(D.\) However, thanks to the conditions (4), (9) and (10), we can say that the number

in Theorem 2 is finite for each \(\left(m,n\right)\in S,\) where \(S\) is given by (7).

Now, we give an example showing that our result in Theorem 2 is stronger than its classical version, Theorem 1. We also see that the statistical Korovkin-type theorem given in [20] does not work for our newly defined operators.

Example 2. Consider the following Bernstein–Stancu-type operators [1]

where \(G_{x,y}\) is given by (3), and \((x,y)\in D=[0,1]\times[0,1],\) \(\alpha\), \(\beta\), \(\gamma\), \(\delta\) are fixed real numbers; \(g\in C_{b}(D).\) Then, thanks to Theorem 1, it is known that for any \(g\in C_{b}(D)\)

Now, we define the following positive linear operators on \(C_{b}(D)\):

where \(\left\{s_{m,n}\right\}\) is given by (2). Observe that the sequence of positive linear operators \(\{T_{m,n}\}\) defined in (16) satisfies all the hypotheses of Theorem 2. So, by (15) and (2), we have

Since \(\left\{s_{m,n}\right\}\) is not \(P\)-convergent, the sequence \(\{T_{m,n}(g;x,y)\}\) given by (16) does not converge uniformly to the function \(g\in C_{b}(D).\) Thus, we get that Theorem 1 does not work for our new operators in (16). However, our Theorem 2 still works. Since \(\left\{s_{m,n}\right\}\) is not statistically convergent, the sequence \(\{T_{m,n}(g;x,y)\}\) given by (16) is not statistically uniformly convergent. Hence, the statistical Korovkin-type theorem given by Dirik, Duman and Demirci [20] does not work.

3 RATES OF \(P_{p}^{2}\)-STATISTICAL CONVERGENCE

In the present section, we calculate the rates of \(P_{p}^{2}\)-statistical convergence of a double sequence of positive linear operators by means of the modulus of continuity. We begin with the following definitions.

Definition 3. Let \(\left\{\alpha_{m,n}\right\}\) be a positive non-increasing double sequence. A double sequence \(x=\left\{x_{m,n}\right\}\) is said to be \(P_{p}^{2}\)-statistically convergent to a number \(L\) with the rate of \(o(\alpha_{m,n})\) provided that for every \(\varepsilon>0,\)

where \(M(\varepsilon):=\) \(\left\{\left(m,n\right):\left|x_{m,n}-L\right|\geq\varepsilon\alpha_{m,n}\right\};\) this is denoted by \(x_{m,n}-L=st_{P_{p}}^{2}-o(\alpha_{m,n}).\)

Definition 4. Let \(\left\{\alpha_{m,n}\right\}\) be the same as in Definition 3. A double sequence \(x=\left\{x_{m,n}\right\}\) is said to be \(P_{p}^{2}\)-statistically bounded with the rate of \(O(\alpha_{m,n})\) provided that for every \(\varepsilon>0,\)

where \(N(\varepsilon):=\left\{\left(m,n\right):\left|x_{m,n}\right|\geq\varepsilon\alpha_{m,n}\right\};\) this is denoted by \(x_{m,n}=st_{P_{p}}^{2}-O(\alpha_{m,n}).\)

Thanks to these definitions, it is possible to get the following auxiliary result.

Lemma 2. Let \(\left\{x_{m,n}\right\}\) and \(\left\{y_{m,n}\right\}\) be double sequences. Assume that \(\left\{\alpha_{m,n}\right\}\) and \(\left\{\beta_{m,n}\right\}\) are positive non-increasing sequences. If \(x_{m,n}-L_{1}=st_{P_{p}}^{2}-o(\alpha_{m,n})\) and \(y_{m,n}-L_{2}=st_{P_{p}}^{2}-o(\beta_{m,n}),\) then we have

\((i)\) \((x_{m,n}-L_{1})\mp(y_{m,n}-L_{2})=st_{P_{p}}^{2}-o(\gamma_{m,n})\), where \(\gamma_{m,n}:=\max\left\{\alpha_{m,n},\beta_{m,n}\right\}\) for each \(\left(m,n\right)\in\mathbb{N}_{0}^{2},\)

\((ii)\) \(\lambda(x_{m,n}-L_{1})=st_{P_{p}}^{2}-o(\alpha_{m,n})\) for any real number \(\lambda.\)

It is worth noting that, if we replace the symbol ‘‘\(o\)’’ with ‘‘\(O\)’’, then we get a similar result.

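Now we recall the concept of the mixed modulus of smoothness. Let \(g\in C_{b}\left(D\right).\) The mixed modulus of smoothness of \(g,\) denoted by \(\omega_{mixed}\left(g;\delta_{1},\delta_{2}\right)\), is given by

\[\omega_{mixed}\left(g;\delta_{1},\delta_{2}\right):=\sup\left\{\left|\Delta_{x,y}\left[g\left(u,v\right)\right]\right|:(x,y),(u,v)\in D,\ \left|u-x\right|\leq\delta_{1},\ \left|v-y\right|\leq\delta_{2}\right\}\]

for \(\delta_{1},\delta_{2}>0.\) In order to get our main result of this section, we will use the following inequality

\[\omega_{mixed}\left(g;\lambda_{1}\delta_{1},\lambda_{2}\delta_{2}\right)\leq\left(1+\lambda_{1}\right)\left(1+\lambda_{2}\right)\omega_{mixed}\left(g;\delta_{1},\delta_{2}\right)\]

for \(\lambda_{1},\lambda_{2}>0.\) Several authors used the modulus \(\omega_{mixed}\) in the framework of ‘‘Boolean sum type’’ approximation (see, for example, [13]). Elementary properties of \(\omega_{mixed}\) can be found in [31] (see also [2]), and in particular for the case of \(B\)-continuous functions in [3].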
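A grid-based estimate gives some feeling for the mixed modulus of smoothness. The sketch below assumes the usual definition, namely the supremum of \(\left|\Delta_{x,y}\left[g\left(u,v\right)\right]\right|\) over points of \(D=[0,1]\times[0,1]\) with \(\left|u-x\right|\leq\delta_{1}\) and \(\left|v-y\right|\leq\delta_{2}\); the function names, the grid size and the sample function \(g(u,v)=uv\) are illustrative assumptions. For this \(g\) the modulus equals \(\delta_{1}\delta_{2}\) when \(\delta_{1},\delta_{2}\leq 1\), and the scaling inequality with the factor \((1+\lambda_{1})(1+\lambda_{2})\) can be checked directly.

```python
def mixed_difference(g, x, y, u, v):
    """Mixed difference g(u, v) - g(u, y) - g(x, v) + g(x, y)."""
    return g(u, v) - g(u, y) - g(x, v) + g(x, y)


def omega_mixed_grid(g, d1, d2, n=20):
    """Estimate the mixed modulus on the grid {0, 1/n, ..., 1}^2.

    Only grid points are sampled, so the result is a lower estimate of the
    true supremum; it is exact when the supremum is attained on the grid.
    """
    k1 = int(d1 * n + 1e-9)  # largest index offset with |u - x| <= d1
    k2 = int(d2 * n + 1e-9)  # largest index offset with |v - y| <= d2
    best = 0.0
    for i in range(n + 1):
        for j in range(n + 1):
            for a in range(max(0, i - k1), min(n, i + k1) + 1):
                for b in range(max(0, j - k2), min(n, j + k2) + 1):
                    value = abs(mixed_difference(g, i / n, j / n, a / n, b / n))
                    best = max(best, value)
    return best


def product(u, v):
    return u * v


if __name__ == "__main__":
    w = omega_mixed_grid(product, 0.2, 0.1)
    w_scaled = omega_mixed_grid(product, 0.4, 0.2)  # lambda_1 = lambda_2 = 2
    print(w, w_scaled, (1 + 2) * (1 + 2) * w)
```

For functions whose extreme mixed differences fall between grid points, a finer grid (larger `n`) would be needed for a reliable estimate.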

We can now give the main result of this section, which gives the rate of \(P_{p}^{2}\)-statistical convergence.

Theorem 3. Let \(\{T_{m,n}\}\) be a sequence of positive linear operators from \(C_{b}\left(D\right)\) into \(B\left(D\right),\) and let \(\left\{\alpha_{m,n}\right\}\) be a positive non-increasing sequence. Assume that the following condition holds:

where \(S=\left\{\left(m,n\right):T_{m,n}(e_{0};x,y)=e_{0}\left(x,y\right)\ \text{for all }(x,y)\in D\right\};\) and

where \(\gamma_{m,n}:=\sqrt{\left|\left|T_{m,n}(\varphi)\right|\right|}\) and \(\delta_{m,n}:=\sqrt{\left|\left|T_{m,n}(\Psi)\right|\right|}\) with \(\varphi(u,v)=\left(u-x\right)^{2},\) \(\Psi(u,v)=\left(v-y\right)^{2}.\) Then we get, for all \(g\in C_{b}\left(D\right),\)

where \(G_{x,y}\) is given by (3). We note that if we replace the symbol ‘‘ \(o\) ’’ with ‘‘ \(O\) ’’, then we get similar results.

Proof. Let \((x,y)\in D\) and \(g\in C_{b}\left(D\right)\) be fixed. Thanks to (17), we have

Also, because of

we observe that

holds for all \(\left(m,n\right)\in S.\) Then, using the properties of \(\omega_{mixed},\) we obtain

Hence, from the monotonicity and the linearity of the operators \(T_{m,n},\) for all \(\left(m,n\right)\in S,\) thanks to (20), we get

Then, using the Cauchy–Schwarz inequality, we get that

for all \(\left(m,n\right)\in S\), and taking the supremum over \((x,y)\in D\) in inequality (21), we obtain for all \(\left(m,n\right)\in S\) that

where \(\delta_{1}:=\gamma_{m,n}:=\sqrt{\left|\left|T_{m,n}(\varphi)\right|\right|}\) and \(\delta_{2}:=\delta_{m,n}:=\sqrt{\left|\left|T_{m,n}(\Psi)\right|\right|}.\) For a given \(\varepsilon>0,\) let us define the following sets:

Hence, thanks to (22), we have \(U\cap S\subseteq U^{1}\cap S.\) We can easily see that

Letting \(t,s\rightarrow R^{-}\) and in view of (18), we conclude that

Furthermore, if we use the inequality

we get that

Letting \(t,s\rightarrow R^{-}\) in (24), and by (23) and (19), we conclude that

which gives the desired result. \(\Box\)

4 CONCLUSION

The paper contains a Korovkin-type approximation theorem and the rate of convergence for real-valued \(B\)-continuous functions via statistical convergence in the sense of power series methods. It is worth noting that, by following these results, similar theorems can be proved for a sequence \(\{T_{m,n}\}\) of positive linear operators mapping \(B_{2\pi}\) into \(B\left(\mathbb{R}^{2}\right),\) where \(B_{2\pi}\) stands for the space of all real-valued \(B\)-continuous and \(B\)-\(2\pi\)-periodic functions on \(\mathbb{R}^{2}\) (see also [5, 19]).

REFERENCES

[1] F. Altomare and M. Campiti, Korovkin-Type Approximation Theory and its Applications, Vol. 17 of de Gruyter Stud. Math. (Walter de Gruyter, Berlin, 1994).

[2] G. A. Anastassiou and S. G. Gal, Approximation Theory: Moduli of Continuity and Global Smoothness Preservation (Birkhäuser, Boston, 2000).

[3] I. Badea, ‘‘Modulus of continuity in Bögel sense and some applications for approximation by a Bernstein-type operator,’’ Studia Univ. Babeş-Bolyai, Ser.: Math.-Mech. 18, 69–78 (1973).

[4] C. Badea, I. Badea, and H. H. Gonska, ‘‘A test function and approximation by pseudopolynomials,’’ Bull. Austral. Math. Soc. 34, 53–64 (1986).

[5] C. Badea, I. Badea, and C. Cottin, ‘‘A Korovkin-type theorem for generalizations of Boolean sum operators and approximation by trigonometric pseudopolynomials,’’ Anal. Numer. Theory Approx. 17, 7–17 (1988).

[6] C. Badea and C. Cottin, ‘‘Korovkin-type theorems for generalized Boolean sum operators,’’ in Approximation Theory, Colloq. Math. Soc. János Bolyai 58, 51–68 (1991).

[7] S. Baron and U. Stadtmüller, ‘‘Tauberian theorems for power series methods applied to double sequences,’’ J. Math. Anal. Appl. 211, 574–589 (1997).

[8] J. Boos, T. Leiger, and K. Zeller, ‘‘Consistency theory for SM-methods,’’ Acta Math. Hungar. 76, 83–116 (1997).

[9] J. Boos, Classical and Modern Methods in Summability (Oxford Univ. Press, New York, 2000).

[10] K. Bögel, ‘‘Mehrdimensionale differentiation von funktionen mehrerer veränderlicher,’’ J. Reine Angew. Math. 170, 197–217 (1934).

[11] K. Bögel, ‘‘Über mehrdimensionale differentiation, integration und beschränkte variation,’’ J. Reine Angew. Math. 173, 5–29 (1935).

[12] K. Bögel, ‘‘Über die mehrdimensionale differentiation,’’ Jahresber. Deutsch. Mat. Verein. 65, 45–71 (1962).

[13] C. Cottin, ‘‘Approximation by bounded pseudo-polynomials,’’ in Function Spaces, Proceedings of the International Conference, Poznań, August 25–30, 1986, Ed. by J. Musielak et al., Vol. 120 of Teubner-Texte zur Mathematik (1991), pp. 152–160.

[14] S. Çınar and S. Yıldız, ‘‘\(P\)-statistical summation process of sequences of convolution operators,’’ Indian J. Pure Appl. Math. (2021, in press). https://doi.org/10.1007/s13226-021-00156-y

[15] K. Demirci, A. Boccuto, S. Yıldız, and F. Dirik, ‘‘Relative uniform convergence of a sequence of functions at a point and Korovkin-type approximation theorems,’’ Positivity 24, 1–11 (2020).

[16] K. Demirci and F. Dirik, ‘‘Four-dimensional matrix transformation and rate of \(A\)-statistical convergence of periodic functions,’’ Math. Comput. Model. 52, 1858–1866 (2010).

[17] K. Demirci, F. Dirik, and S. Yıldız, ‘‘Approximation via equi-statistical convergence in the sense of power series method,’’ Rev. R. Acad. Cienc. Exactas Fis. Nat., Ser. A: Mat. RACSAM 116 (2), 1–13 (2022).

[18] K. Demirci, S. Yıldız, and F. Dirik, ‘‘Approximation via power series method in two-dimensional weighted spaces,’’ Bull. Malays. Math. Sci. Soc. 43, 3871–3883 (2020).

[19] F. Dirik, O. Duman, and K. Demirci, ‘‘Statistical approximation to Bögel-type continuous and periodic functions,’’ Centr. Eur. J. Math. 7, 539–549 (2009).

[20] F. Dirik, O. Duman, and K. Demirci, ‘‘Approximation in statistical sense to \(B\)-continuous functions by positive linear operators,’’ Studia Sci. Math. Hung. 47, 289–298 (2010).

[21] O. Duman, M. K. Khan, and C. Orhan, ‘‘\(A\)-statistical convergence of approximating operators,’’ Math. Inequal. Appl. 6, 689–700 (2003).

[22] H. Fast, ‘‘Sur la convergence statistique,’’ Colloq. Math. 2, 241–244 (1951).

[23] A. D. Gadjiev and C. Orhan, ‘‘Some approximation theorems via statistical convergence,’’ Rocky Mountain J. Math. 32, 129–138 (2002).

[24] P. P. Korovkin, Linear Operators and Approximation Theory (Hindustan Publ., Delhi, 1960).

[25] F. Moricz, ‘‘Statistical convergence of multiple sequences,’’ Arch. Math. (Basel) 81, 82–89 (2004).

[26] S. Orhan and K. Demirci, ‘‘Statistical approximation by double sequences of positive linear operators on modular spaces,’’ Positivity 19, 23–36 (2015).

[27] A. Pringsheim, ‘‘Zur theorie der zweifach unendlichen zahlenfolgen,’’ Math. Ann. 53, 289–321 (1900).

[28] P. O. Şahin and F. Dirik, ‘‘A Korovkin-type theorem for double sequences of positive linear operators via power series method,’’ Positivity 22, 209–218 (2018).

[29] N. Şahin Bayram and C. Orhan, ‘‘Abel convergence of the sequence of positive linear operators in \(L_{p,q}\left(loc\right)\),’’ Bull. Belg. Math. Soc.-Simon Stevin 26, 71–83 (2019).

[30] N. Şahin Bayram and S. Yıldız, ‘‘Approximation by statistical convergence with respect to power series methods,’’ Hacet. J. Math. Stat. 51, 1108–1120 (2022).

[31] L. L. Schumaker, Spline Functions: Basic Theory (Wiley, New York, 1981).

[32] H. Steinhaus, ‘‘Sur la convergence ordinaire et la convergence asymptotique,’’ Colloq. Math. 2, 73–74 (1951).

[33] M. Ünver and C. Orhan, ‘‘Statistical convergence with respect to power series methods and applications to approximation theory,’’ Numer. Funct. Anal. Optim. 40, 535–547 (2019).

[34] S. Yıldız, K. Demirci, and F. Dirik, ‘‘Korovkin theory via \(P_{p}\)-statistical relative modular convergence for double sequences,’’ Rend. Circ. Mat. Palermo, II Ser. (2022, in press). https://doi.org/10.1007/s12215-021-00681-z

[35] T. Yurdakadim, E. Taş, and Ö. G. Atlihan, ‘‘Statistical approximation properties of convolution operators for multivariables,’’ AIP Conf. Proc. 1558, 1156–1159 (2013).


(Submitted by A. I. Volodin)


Demirci, K., Yıldız, S. & Dirik, F. Approximation via Statistical Convergence in the Sense of Power Series Method of Bögel-Type Continuous Functions. Lobachevskii J Math 43, 2423–2432 (2022). https://doi.org/10.1134/S1995080222120095
