Abstract

An algorithm is presented for solving the convolution type Volterra integral equation of the first kind by the quadrature-sum method. We assume that the integral equation of the first kind cannot be reduced to an integral equation of the second kind, and we do not assume that the kernel or some of its derivatives is nonzero at zero. An estimate of the error of the computed solution is given for the proposed relations. Numerical experiments are presented to demonstrate the efficiency of the algorithm.

INTRODUCTION

Solving the heat equation with data on a timelike boundary (see, for example, [1]) or some inverse problems for the heat equation (see [2]), we have to solve the convolution type Volterra integral equation of the first kind

\[ \int_0^t K(t-s)\,f(s)\,ds = g(t), \qquad t\in[0,T]. \qquad (1) \]

A distinctive feature of such applications is that (1) cannot be reduced to a Volterra integral equation of the second kind, since the function \(K(t)\), as well as all its derivatives, vanishes at \(t=0\).

Example 1. Consider the function \(K(t) = t^{-1/2} e^{-a/t}\), \(a>0\) (see Fig. 1). In [1, 2], the kernel is either the same or more complicated, but \(K(t)\) behaves similarly in a neighborhood of \(t=0\).

In this case, the regularization methods applied in [3-6] cannot be used to solve (1), since these methods require the condition \(K^{(n)}(0)\ne 0\) for some natural \(n\). The Denisov method [4] requires \(f(0)\) to be known, which is usually not the case in practice. The Tikhonov regularization method leads to the solution of a Fredholm equation of the first kind [7].

Based on the quadrature method, we propose a numerical method for solving integral equation (1) and obtain an estimate of the error of the solution. Examples of a numerical implementation of the method are presented.

A specific feature of solving the convolution type Volterra integral equation of the first kind by the quadrature method is that we must use a fixed time meshsize. The time meshsize cannot be too small, since otherwise solving the integral equation would take more time than we can afford. The second feature to be taken into account in our case is the behavior of \(K(t)\): usually, this function changes significantly in a small neighborhood of \(t=0\) and then behaves fairly “calmly”.

Example 2. The kernel \(K(t) = t^{-1/2}e^{-a/t}\) attains its maximum at \(t_{\max } = 2a\), after which \(K(t)\) decreases smoothly as \(t^{-1/2}\) (see Fig. 1). In practice, the parameter \(a\) is very small (for example, \(a = 10^{-6}\) [2]); i.e., \(a\) is smaller than any reasonable time meshsize. This imposes constraints on the construction of the quadrature formula.
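Setting \(\frac{d}{dt}\ln K(t) = -\frac{1}{2t} + \frac{a}{t^{2}}\) to zero gives \(t = 2a\), the only critical point. As an illustration (our addition, not part of the method), the Python check below finds the maximizer by a brute-force grid search and compares \(K\) at a sample meshsize with its peak value. The value \(a = 10^{-6}\) follows the example above; \(h = 10^{-2}\) is the meshsize used in Section 5 and serves here only as a sample value.

```python
import math


def kernel(t: float, a: float) -> float:
    """Kernel of Example 1: K(t) = t**(-1/2) * exp(-a/t) for t > 0."""
    return t ** -0.5 * math.exp(-a / t)


def grid_argmax(a: float, t_end: float, n: int) -> float:
    """Grid point t_i = i * t_end / n (i = 1..n) at which K(t_i) is largest."""
    return max((t_end * i / n for i in range(1, n + 1)), key=lambda t: kernel(t, a))


a, h = 1e-6, 1e-2
t_peak = grid_argmax(a, 10 * a, 10_000)    # grid spacing 1e-9
print(t_peak / a)                           # close to 2, i.e. t_max = 2a
print(kernel(h, a) / kernel(2 * a, a))      # about 0.023
```

With these values \(K(h)\) is only about 2% of the peak, so the whole sharp peak lies inside the first cell \([0,h]\).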

In [8], another technique is proposed for solving this integral equation. It is based on an optimization approach and on an existence theorem (proved in [8]) for a solution of this equation in the class of functions representable by a Fourier series whose coefficients decay as \(k^{-(1+\gamma )}\), \(\gamma >0\). Fairly complete information on the theory and practice of solving integral equations of various types is collected in the books [7, 9, 10] and their electronic version [11].

1. CONSTRUCTION OF A QUADRATURE FORMULA

We follow the standard scheme for constructing a quadrature formula for the solution of integral equation (1) (for example, see [7]). We will seek a solution on the interval \([0,T] \). To construct a quadrature formula, it suffices to assume that

Let the number of nodes of the interval \([0,T] \) be \(N+1 \). We denote the nodes of the mesh by \(t_n \), \(n=\overline {0,N}\), and the meshsize by \(h = T/N\), \(t_n = hn \). Put \(K^n = K(hn) \), \(f^n = f(hn)\), and \(g^n = g(hn) \).

To construct a quadrature formula, we write (1) at \(t_k \) as follows:

or

Before proceeding with the construction of the quadrature formula, we make some assumptions:

1. Assume that \(K(t)\ne 0\) for \(t\in (0,\tau ]\), where \(\tau\) is some positive real number. This assumption is related to the Titchmarsh theorem:

Theorem 1 [12, p. 352]. Let \(f(t)\) and \(K(t)\) belong to \(L(0,T)\), and let

\[ \int_0^t K(t-s)\,f(s)\,ds = 0 \]

for almost all \(t\in (0,T)\). Then there exists \(\theta \in [0,T]\) such that \(f(t)=0\) for almost all \(t \in (0,\theta )\) and \(K(t)=0\) for almost all \(t\in (0,T-\theta )\).

This theorem implies that the solution of (1) on \([0,T]\) is unique if \(K(t)\) does not vanish identically in any neighborhood of zero.

2. Assume that \(N \) is chosen so that \(h\le \tau \). We will need this assumption to use the mean value theorem below (see, for instance, [13]).

Theorem 2. If \(K(t)\) is of constant sign in the interval \([0,\tau ]\), then there is \(\vartheta \in (0,\tau )\) such that

To construct the quadrature formula, we assume that the kernel \(K(t)\) is given analytically, while the function \(g(t)\) is the result of measurements and is given in tabular form.

As noted above, \(K(t)\) varies rapidly in a small neighborhood of zero (whose radius is much less than \(h\)) and changes smoothly on the rest of the interval. Using the above assumptions, we approximate each integral by the midpoint rectangle formula:

where \(r^n_j\) is the approximation error and \(j < n\). The last integral is approximated as follows:

3. Suppose that for \(K(t) \) the following holds:

Example 3. Let \(K(t) = t^{-1/2} e^{-a/t} \). Then

Since \(a \ll h\), we have \(|k(h)|\ge h^{1/2}\).
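Reading \(k(h)\) as \(\int_0^h K(s)\,ds\) (our interpretation of the notation, consistent with the bound just stated), this kernel admits the closed form \(k(h) = 2\sqrt{h}\,e^{-a/h} - 2\sqrt{\pi a}\,\operatorname{erfc}\sqrt{a/h}\), which can be verified by differentiation. The Python sketch below evaluates it, cross-checks it against a composite Simpson rule after the substitution \(s = u^2\) (which removes the factor \(s^{-1/2}\)), and confirms the bound for the sample values \(a = 10^{-5}\), \(h = 10^{-2}\).

```python
import math


def k_closed(h: float, a: float) -> float:
    """k(h) = int_0^h s**(-1/2) * exp(-a/s) ds in closed form (a > 0, h > 0)."""
    return (2.0 * math.sqrt(h) * math.exp(-a / h)
            - 2.0 * math.sqrt(math.pi * a) * math.erfc(math.sqrt(a / h)))


def k_simpson(h: float, a: float, n: int = 2000) -> float:
    """Same integral via s = u**2: int_0^sqrt(h) 2 exp(-a/u**2) du, composite Simpson."""
    if n % 2:
        raise ValueError("Simpson's rule needs an even number of subintervals")
    b = math.sqrt(h)
    du = b / n

    def integrand(u: float) -> float:
        # The integrand and all its derivatives tend to 0 as u -> 0+.
        return 0.0 if u == 0.0 else 2.0 * math.exp(-a / (u * u))

    total = integrand(0.0) + integrand(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * integrand(i * du)
    return total * du / 3.0


a, h = 1e-5, 1e-2
print(k_closed(h, a), k_simpson(h, a), math.sqrt(h))  # k(h) is about 0.189 >= 0.1
```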

Remark. If \(K(t)\) has no singularity in a neighborhood of zero, we put \(\alpha = 1\). In this case, the approximation of the integral over \([t_{n-1}, t_n]\) does not differ from the approximation over each of the other intervals \([t_{j-1}, t_j]\), \(j < n\).

From (2) we have

where

is the approximation error (the remainder term in the quadrature) for (2).

Subtracting equation (4) for \(n-1\) from equation (4) for \(n\), we obtain

4. Assuming that the remainder terms \(R^j \) are small, we obtain

which can be used for solving (1).
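The scheme can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not a transcription of (7): every cell \([t_{j-1},t_j]\), \(j<n\), uses the midpoint rectangle rule, which gives the weights \(hK^{n-j+1/2}\); on the last cell the mean value theorem is applied with \(f(\vartheta)\) replaced by \(f^{n-1/2}\), so this integral becomes \(k(h)f^{n-1/2}\), where we take \(k(h)=\int_0^h K(s)\,ds\). The resulting lower triangular system has diagonal \(k(h)\) and is solved by forward substitution. If (7) is the row-by-row difference of these equations, as the derivation above suggests, it has the same solution in exact arithmetic. The test problem \(f\equiv 1\), for which \(g(t)=k(t)\) exactly, and the values \(a=10^{-5}\), \(T=1\), \(N=100\) are our choices.

```python
import math
from typing import List, Sequence


def kernel(t: float, a: float) -> float:
    """K(t) = t**(-1/2) * exp(-a/t) for t > 0 (the kernel of Example 1)."""
    return t ** -0.5 * math.exp(-a / t)


def kernel_integral(t: float, a: float) -> float:
    """k(t) = int_0^t K(s) ds in closed form, with k(0) = 0."""
    if t <= 0.0:
        return 0.0
    return (2.0 * math.sqrt(t) * math.exp(-a / t)
            - 2.0 * math.sqrt(math.pi * a) * math.erfc(math.sqrt(a / t)))


def solve_volterra_first_kind(g: Sequence[float], h: float, a: float) -> List[float]:
    """Approximate f at the half-nodes t_{n-1/2}, n = 1..N, given g[n] = g(n*h).

    Assumed row n:
        k(h) f^{n-1/2} + h * sum_{j=1}^{n-1} K((n - j + 1/2) h) f^{j-1/2} = g^n.
    The matrix is lower triangular with diagonal k(h): forward substitution.
    """
    N = len(g) - 1
    kh = kernel_integral(h, a)
    # Convolution structure: the midpoint weight depends only on m = n - j.
    w = [h * kernel((m + 0.5) * h, a) for m in range(N)]
    f: List[float] = []  # f[i] approximates f(t_{i+1/2})
    for n in range(1, N + 1):
        history = sum(w[n - 1 - i] * f[i] for i in range(n - 1))
        f.append((g[n] - history) / kh)
    return f


# Test problem (our choice): f(t) = 1, for which g(t) = k(t) exactly.
a, T, N = 1e-5, 1.0, 100
h = T / N
g = [kernel_integral(n * h, a) for n in range(N + 1)]
f = solve_volterra_first_kind(g, h, a)
print(max(abs(v - 1.0) for v in f))  # largest deviation is expected near t = 0
```

Each step costs \(O(n)\) operations, so the whole solve is \(O(N^2)\), which is one reason the time meshsize cannot be taken too small.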

2. PRELIMINARY ESTIMATES

To obtain the estimates, we need to assume that

1. Estimate the difference \(h K^{3/2} - k(h)\). It is not hard to see that

From these two equalities we have

where \(c_1 = c_1 (M_1, M_2) \).

2. Estimate the difference \(K^{n-j+1/2} - K^{n-j-1/2}\). From

we obtain

3. Consider the remainder term of the quadrature when the integral over \([t_{j-1}, t_j]\) is approximated by the midpoint rectangle formula

We have the chain of equalities

where \(\xi _j\) is some point in \((t_{j-1},t_j) \).

Hence, for the quadrature remainder \(r^n_j \) it is easy to obtain the estimate

Note that since \(u(s)\) is the product of the two functions \(K(t-s)\) and \(f(s)\) (here \(t\) plays the role of a parameter), we have \(c_3 = c_3(M_0,M_1,M_2,F_0,F_1,F_2)\).
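For reference, the standard remainder of the midpoint rule on one cell is \(\int_{t_{j-1}}^{t_j} u(s)\,ds - h\,u(t_{j-1/2}) = \frac{h^{3}}{24}u''(\xi_j)\) for \(u\in C^2\); summed over at most \(N = T/h\) cells, it gives an \(O(h^2)\) total. The Python check below, using the test integrand \(u(s)=e^{s}\) (our choice), verifies both the single-cell constant \(1/24\) and the second-order behavior of the composite rule.

```python
import math


def midpoint_cell_error(left: float, h: float) -> float:
    """Exact integral minus midpoint value for u(s) = exp(s) on [left, left + h]."""
    exact = math.exp(left + h) - math.exp(left)
    return exact - h * math.exp(left + 0.5 * h)


def composite_midpoint_error(T: float, n: int) -> float:
    """Exact integral minus composite midpoint value for exp on [0, T], n cells."""
    h = T / n
    approx = h * sum(math.exp((i + 0.5) * h) for i in range(n))
    return (math.exp(T) - 1.0) - approx


for h in (0.1, 0.05, 0.025):
    leading = h**3 / 24.0 * math.exp(0.3 + 0.5 * h)  # h^3/24 * u''(midpoint)
    print(h, midpoint_cell_error(0.3, h) / leading)   # tends to 1 as h -> 0

# Second order: halving h divides the composite error by about 4.
print(composite_midpoint_error(1.0, 10) / composite_midpoint_error(1.0, 20))
```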

3. AN ERROR ESTIMATE OF A SOLUTION

Let \(f(t)\) be the solution of (1) such that (6) holds at \(t_{j-1/2}\), \(j=\overline {1,N}\). Relation (7) is a system of linear algebraic equations with a triangular matrix, which can be solved by forward substitution. Let its solution be \(\tilde {f}^{j-1/2}\). Put

Assume that \(g(t)\in C[0,T]\) and that \(g(t)\) is given with an error \(\tilde {\delta }(t)\); i.e.,

Subtracting (7) from (6), we obtain that for \(\Delta f^{j-1/2}\) the following relations hold:

From (11) we infer

Taking (5) and (8)-(10) into account, we obtain

Since \(T=Nh \) and \(n \le N \), we can simplify the estimate:

Dividing this by \(c_{0}h^{\alpha }\) and applying Gronwall’s lemma, we get

where \(c_4 = 2 T c_3/c_0\), \(c_5 = 2/c_0\), and \(c_6 = c_1/c_0\). On the right-hand side of the inequality, one term tends to zero as \(h \to 0\), while the other may behave differently depending on how \(\delta\) and \(h\) tend to zero relative to each other. Therefore, \(h\) must be matched to the error level \(\delta\); i.e., \(h\) must be chosen quasi-optimally [14]. Taking \(h_{*}=(\alpha c_{4}/(2-\alpha )c_{5})^{1/2}\delta ^{1/2}\), we obtain the estimate

where \(c_7 = 2(c_4 c_5/(2-\alpha ))^{1/2}{\rm e}^{h_{*}^{1-\alpha } T c_6}\).
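For reference, a standard discrete form of Gronwall's lemma that produces exponential factors of this kind is stated below; the precise variant applied above may differ, so this is only an illustration. If \(y_j\ge 0\), \(A, B\ge 0\), and \(nh \le T\), then

\[ y_n \le A + Bh\sum_{j=1}^{n-1} y_j \ \ (n\ge 1) \quad\Longrightarrow\quad y_n \le A(1+Bh)^{n-1} \le A\,e^{BT}. \]

Indeed, \(S_n = A + Bh\sum_{j=1}^{n-1} y_j\) satisfies \(S_1 = A\), \(y_n \le S_n\), and \(S_{n+1} = S_n + Bh\,y_n \le (1+Bh)S_n\); it remains to use \(1+x\le e^{x}\).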

4. A TECHNIQUE FOR IMPROVING ESTIMATE (12)

Estimate (12) can be improved by assuming

and/or

In this case, the following equality holds (see the derivation of (10)):

where, for brevity, \(t_{*} = t_{j-1/2}\) and \(\xi _j \in (t_{j-1},t_j)\). From (13) we obtain the following estimate:

Since the integrand \(u(s)\) is the product of the two functions \(K(s)\) and \(f(t_n - s)\), we have

From (11) it follows that

Further, (14) allows us to estimate \(|R^n - R^{n-1}|\) and conclude that

Then

Dividing this inequality by \(c_0 h^{\alpha }\) and applying Gronwall’s lemma, we obtain

where \(c_9 = (Tc_8/1920)/c_0\), \(c_{10}=1/c_0\), and \(c_{11}=c_1/c_0\). Since both terms on the right-hand side of (15) decrease as \(h\) and \(\varepsilon\) tend to zero, there is no need to choose a quasi-optimal meshsize.
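The constant 1920 appearing in \(c_9\) matches the next term of the standard midpoint-rule expansion: for \(u\in C^4\), \(\int_{t_*-h/2}^{t_*+h/2} u(s)\,ds = h\,u(t_*) + \frac{h^{3}}{24}u''(t_*) + \frac{h^{5}}{1920}u^{(4)}(\xi)\), since \(\int_{-h/2}^{h/2} s^4/24\,ds = h^5/1920\). We assume this is the form of (13). The Python check below, with the test integrand \(u(s)=e^{s}\) chosen by us, confirms that once the \(h^3/24\) term is removed, the remainder behaves like \(\frac{h^5}{1920}u^{(4)}\).

```python
import math


def second_order_remainder(mid: float, h: float) -> float:
    """Remainder after h*u(mid) + h^3/24*u''(mid) for u = exp on [mid - h/2, mid + h/2]."""
    exact = 2.0 * math.exp(mid) * math.sinh(0.5 * h)
    return exact - math.exp(mid) * (h + h**3 / 24.0)


for h in (0.2, 0.1, 0.05):
    fourth_order_term = h**5 / 1920.0 * math.exp(0.5)  # h^5/1920 * u''''(mid)
    print(h, second_order_remainder(0.5, h) / fourth_order_term)  # tends to 1
```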

5. A NUMERICAL EXPERIMENT

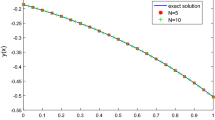

Let us give three examples of solving (1) by using (7). Consider \(K(t) = t^{-1/2}e^{-a/t}\) with \(a=10^{-5}\).

Put \(T=10\) and \(h=10^{-2} \). Consider the following three solutions of (1):

The functions \(f(t)\) are chosen so that the corresponding functions \(g(t)\) are easy to calculate by using the equality

and the property \(f(t-s) = f_{11}(t) f_{12}(s) - f_{21}(s) f_{22}(t)\). The corresponding integrals

are calculated analytically or numerically by the trapezoid formula with step \(10^{-6}\). The calculation results are shown in Fig. 2. The left-hand side of the figure shows the exact \(f_e(t)\) and computed \(f(t)\) solutions, and the right-hand side shows the relative calculation error \(\Delta (t) = |f_e(t)-f(t)|/|f_e(t)|\). As \(t\) grows, the relative error decreases. The largest relative error occurs in a neighborhood of zero and, as the numerical experiments show, it decreases as \(h\) decreases.
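Assuming the equality used here is the change of variables \(g(t)=\int_0^t K(s)f(t-s)\,ds\), the separable property gives \(g(t) = f_{11}(t)\int_0^t K(s)f_{12}(s)\,ds - f_{22}(t)\int_0^t K(s)f_{21}(s)\,ds\), so only integrals of \(K\) against fixed functions are needed. The Python sketch below computes them with the trapezoid rule after the substitution \(s=u^{2}\), which removes the factor \(s^{-1/2}\); this substitution is our replacement for the plain trapezoid formula with step \(10^{-6}\). The test solution \(f(t)=\sin t\) (with \(f_{11}=f_{21}=\sin\), \(f_{12}=f_{22}=\cos\)) is a hypothetical choice of ours.

```python
import math
from typing import Callable

Fn = Callable[[float], float]


def weighted_kernel_integral(t: float, phi: Fn, a: float, n: int = 4000) -> float:
    """Approximate int_0^t K(s) phi(s) ds for K(s) = s**(-1/2) * exp(-a/s).

    With s = u**2 the integral becomes int_0^sqrt(t) 2 exp(-a/u**2) phi(u**2) du,
    whose integrand is smooth; the composite trapezoid rule is applied to it.
    """
    b = math.sqrt(t)
    du = b / n
    total = 0.0
    for i in range(n + 1):
        u = i * du
        value = 0.0 if u == 0.0 else 2.0 * math.exp(-a / (u * u)) * phi(u * u)
        total += 0.5 * value if i in (0, n) else value  # trapezoid end weights
    return total * du


def g_separable(t: float, f11: Fn, f12: Fn, f21: Fn, f22: Fn, a: float) -> float:
    """g(t) when f(t - s) = f11(t) f12(s) - f21(s) f22(t)."""
    return (f11(t) * weighted_kernel_integral(t, f12, a)
            - f22(t) * weighted_kernel_integral(t, f21, a))


# Hypothetical test solution f(t) = sin t: sin(t - s) = sin t cos s - sin s cos t.
a, t = 1e-5, 1.0
g_sep = g_separable(t, math.sin, math.cos, math.sin, math.cos, a)
g_direct = weighted_kernel_integral(t, lambda s: math.sin(t - s), a)
print(g_sep, g_direct)  # agree up to rounding
```

The separable form also lets the two integrals be accumulated along a grid of \(t\) values instead of recomputing a full convolution for each \(t\).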

Thus, \(f(t)\) is calculated at \(t_{j-1/2}\), \(j=\overline {1,N}\). From a practical point of view, this is quite sufficient to evaluate the behavior of the unknown function. Nevertheless, if for some purpose we need the values of \(f(t)\) at the points \(t_j\), \(j=\overline {1,N-1}\), we can use interpolation. Extrapolation can be used to find the value of \(f(t)\) at \(t_N\). However, judging by the behavior of the relative error (see Fig. 2), extrapolation will not give a satisfactory result at \(t_0 = 0\).
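The text does not specify the interpolation and extrapolation procedures. A minimal choice, assumed here for illustration, is linear: \(f(t_j) \approx (f^{j-1/2} + f^{j+1/2})/2\) for \(j = 1,\dots,N-1\), and \(f(t_N) \approx (3f^{N-1/2} - f^{N-3/2})/2\). Both are exact for linear \(f\) and second-order accurate for smooth \(f\); the value at \(t_0 = 0\) is deliberately not produced.

```python
from typing import List, Sequence


def half_to_integer_nodes(f_half: Sequence[float]) -> List[float]:
    """Map values at t_{j-1/2}, j = 1..N, to values at t_j, j = 1..N.

    Interior nodes use the average of the two neighbouring half-node values;
    the last node t_N uses linear extrapolation from the last two half-nodes.
    """
    if len(f_half) < 2:
        raise ValueError("need at least two half-node values")
    interior = [0.5 * (f_half[j] + f_half[j + 1]) for j in range(len(f_half) - 1)]
    last = 1.5 * f_half[-1] - 0.5 * f_half[-2]
    return interior + [last]


# Usage: f(t) = 2t + 1 sampled at the half-nodes of a mesh with h = 0.1, N = 5.
h = 0.1
f_half = [2 * (j - 0.5) * h + 1 for j in range(1, 6)]
print(half_to_integer_nodes(f_half))  # values of 2t + 1 at t = 0.1, ..., 0.5
```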

To find the value of \(f(0)\), we use the equality that follows from (1):

Let \(t=h\), and let \(f(t)\) on the interval \([0,h]\) be approximated by the straight line \(at+b\). From (1) and (19), we obtain two equalities:

where

From these it is easy to obtain that

Obviously,

It remains to determine what value to take for \(g^{\prime }\). Since \(g(t)\) is given in tabular form, there are two choices: (1) \(g^{\prime }=g^1 /h\) and (2) \(g^{\prime }=(g^2 - g^1)/h\). Judging by the behavior of \(K(t)\) on the interval \([0,2h]\) (see Fig. 1), the second choice is preferable, and numerical experiments have shown that it leads to smaller computational errors. Which expression is preferable for calculating \(f^{1/2}\): (1) the first formula of (7) or (2) the second expression of (21)? Numerical calculations have shown that both give comparable accuracy when calculating \(f(t)\) in a neighborhood of zero, and farther from zero the calculation error decreases in the same way. Nevertheless, the second choice is preferable, since it additionally allows us to compute \(f(0)\).

Figure 3 presents a solution of (1) when \(g(t)\) is given with an error; i.e., instead of \(g(t)\), we use \(g_{\varepsilon }(t)\) defined as follows:

Here \(\zeta _j\) is a random variable uniformly distributed in \([-1,1]\), \(P\) is the error percentage, and the factor \(h\) ensures the inequality \(|g_{\varepsilon }(t_{j})-g^{\prime }(t_{j})| \le \varepsilon\). In the numerical experiment shown in Fig. 3, \(P = 20\%\), and the error of the computed solution of (1) is at most \(10\%\).

CONCLUSION

In this article, an algorithm is presented for solving the convolution type Volterra integral equation of the first kind by the quadrature-sum method. It is assumed that this integral equation of the first kind cannot be reduced to an integral equation of the second kind. An estimate of the error of the numerical solution is given for the proposed relations.

Numerical experiments demonstrating the efficiency of the proposed algorithm are presented. In the calculations, the solution of (1) was obtained at the points \(t_{j-1/2}\), \(j=\overline {1,N}\).

In practice, this is quite sufficient to evaluate the behavior of the desired function. Nevertheless, if for some purpose we need the values of \(f(t)\) at \(t_j\) (\(j=\overline {1,N-1}\)), we can use an interpolation procedure. An extrapolation procedure can be used to find \(f(t)\) at \(t_N\). To find \(f(0)\), a simple formula is given in Section 5.

REFERENCES

[1] A. L. Karchevsky, “Development of the Heated Thin Foil Technique for Investigating Nonstationary Transfer Processes,” Interfacial Phenomena and Heat Transfer 6 (3), 179–185 (2018).

[2] A. L. Karchevsky, Y. M. Turganbayev, S. J. Rakhmetullina, and Zh. T. Beldeubayeva, “Numerical Solution of an Inverse Problem of Determining the Parameters of a Source of Groundwater Pollution,” Eurasian J. Math. Comput. Appl. 5 (1), 53–73 (2017).

[3] V. O. Sergeev, “Regularization of the Volterra Equation of the First Kind,” Dokl. Akad. Nauk SSSR 197 (3), 531–534 (1971).

[4] A. M. Denisov, “On Approximate Solution of the Volterra Equation of the First Kind,” Zh. Vychisl. Mat. Mat. Fiz. 15 (4), 1053–1056 (1975).

[5] N. A. Magnitskii, “An Approach to Regularization of the Volterra Equations of the First Kind,” Zh. Vychisl. Mat. Mat. Fiz. 15 (5), 1317–1323 (1975).

[6] A. S. Apartsin, “On Numerical Solution of Integral Volterra Equations of the First Kind by the Regularized Quadrature Method,” Metody Optimizatsii i Ikh Prilozheniya No. 9, 99–107 (1979).

[7] A. F. Verlan’ and V. S. Sizikov, Integral Equations: Methods, Algorithms, and Programs. Handbook (Naukova Dumka, Kiev, 1986) [in Russian].

[8] A. L. Karchevsky, “On a Solution of the Convolution Type Volterra Equation of the 1st Kind,” Advanced Math. Models & Applications 2 (1), 1–5 (2017).

[9] A. V. Manzhirov and A. D. Polyanin, Integral Equations: Methods of Solution. Handbook (Faktorial, Moscow, 2000) [in Russian].

[10] A. D. Polyanin and A. V. Manzhirov, Integral Equations. Handbook (Fizmatlit, Moscow, 2003) [in Russian].

[11] EqWorld, http://eqworld.ipmnet.ru.

[12] E. Ch. Titchmarsh, Introduction to the Theory of Fourier Integrals (Oxford University Press, Oxford, 1937; OGIZ Gostekhizdat, Moscow, 1948).

[13] G. M. Fikhtengol’ts, Fundamentals of Mathematical Analysis (Lan’, Moscow, 2008) [in Russian].

[14] A. S. Apartsin and A. B. Bakushinskii, “Approximate Solution of the Integral Volterra Equations of the First Kind by the Method of Quadrature Sums,” Differential and Integral Equations No. 1, 248–258 (1972).

Funding

The author was supported by the State Task to the Sobolev Institute of Mathematics (project no. 0314–2019–0011).

Additional information

Translated by G.A. Chumakov

Cite this article

Karchevsky, A.L. Solution of the Convolution Type Volterra Integral Equations of the First Kind by the Quadrature-Sum Method. J. Appl. Ind. Math. 14, 503–512 (2020). https://doi.org/10.1134/S1990478920030096

DOI: https://doi.org/10.1134/S1990478920030096