Abstract

This paper focuses on the optimal minimum mean square error estimation of a nonlinear function of state (NFS) in linear Gaussian continuous-time stochastic systems. The NFS is a multivariate function of the state variables that carries useful information about a target system for control. The proposed optimal estimation algorithm consists of two stages: the optimal Kalman estimate of the state vector, computed at the first stage, is nonlinearly transformed at the second stage according to the NFS and the minimum mean square error (MMSE) criterion. Some challenging theoretical aspects of the analytic calculation of the optimal MMSE estimate are resolved using multivariate Gaussian integrals for special NFS such as the Euclidean norm, the maximum, and the absolute value. Polynomial functions are studied in detail; in this case the polynomial MMSE estimator has a simple closed form and is easy to implement in practice. We derive effective matrix formulas for the true mean square error of the optimal and suboptimal quadratic estimators. The results are demonstrated on theoretical and practical examples with different types of NFS, and a comparative analysis of the optimal and suboptimal nonlinear estimators is presented. These applications demonstrate the effectiveness of the proposed estimators.


INTRODUCTION

Estimation and filtering are powerful techniques for building models of complex control systems. Kalman filtering and its variants are well-known state estimation techniques widely used in applications such as navigation, target tracking, communications engineering, biomedical and chemical processing, and other areas [1–6]. However, in many applications it is of interest to estimate not only the state vector \({{x}_{t}} \in {{\mathbb{R}}^{n}}\) but also a nonlinear function of the state vector (NFS), \({{z}_{t}} = f\left( {{{x}_{t}}} \right),\) which carries practically useful information for control systems. A first motivating example of an NFS is the relative location of a target and a radar: the angle (φ) and distance (d) from the radar to the target, shown in Fig. 1.

A second example is an arbitrary quadratic form, \(f({{x}_{t}}) = x_{t}^{T}{\Omega }{{x}_{t}}\), representing an energy-like function of an object [7], or the Euclidean distance (2-norm), \(f({{x}_{t}}) = {\text{||}}{{x}_{t}} - x_{t}^{n}{\text{||}}\), between the current state \({{x}_{t}}\) and the nominal state \(x_{t}^{n}\). Hence, estimation and prediction of quantities represented by an NFS are helpful in various applications, such as system control and target tracking.

The problem of estimating nonlinear functions with unknown parameters and signals on the basis of minimax theory has been studied by many authors; see [8–11] and the references therein. Estimation of the parameters of a nonlinear functional model with a known error covariance matrix is presented in [12]. A minimax quadratic estimate of the integrated squared derivative of a periodic function is derived in [13]. In [14, 15], the optimal matrix of a quadratic form is sought on the basis of cumulant criteria. Estimation with a penalty in the form of a quadratic cost functional for quantum harmonic oscillators is considered in [16]. Estimators for integrals of nonlinear functions of a probability density are developed in [17, 18]. We also mention estimation of nonlinear functions of the spectral density and periodogram of stationary linear signals [19–21]; some extensions of these results are obtained in [22]. In [23], an unknown distance between a target and a radar is approximated by a Taylor polynomial whose coefficients are subsequently estimated. For algorithms and theory of estimating information measures, which represent nonlinear functions of signals, the reader is referred to [24, 25]. However, most authors have not focused on estimating an NFS for vector signals defined by dynamical models, such as stochastic differential systems.

The aim of this paper is to develop an optimal two-stage minimum mean square error (MMSE) estimator for an arbitrary NFS in linear Gaussian stochastic differential systems, and to study in detail special polynomial estimators for which important mean square estimation results can be obtained. The main contributions of the paper are as follows:

1. This paper studies the estimation problem of an NFS within the continuous Kalman filtering framework. Using the mean square estimation approach, an optimal two-stage nonlinear estimator is proposed.

2. The optimal MMSE estimator for polynomial functions (quadratic, cubic, and quartic) is derived. We establish that the polynomial estimator has a compact closed form that depends only on the Kalman estimate and its error covariance.

3. An important class of quadratic estimators is comprehensively investigated, including the derivation of a matrix equation for its true mean square error (MSE).

4. The performance of the proposed estimators for real NFS illustrates their theoretical and practical usefulness.

This paper is organized as follows. Section 1 states the MMSE estimation problem for an NFS within the Kalman filtering framework. In Section 2, the general optimal MMSE estimator is proposed, and the optimal and suboptimal estimators are compared on several theoretical examples with practical NFS. Section 3 is devoted to optimal estimators in closed form: an optimal polynomial estimator is expressed in closed form in terms of the Kalman estimate and its error covariance, and matrix formulas for the true MSEs of the optimal and suboptimal quadratic estimators are derived. The efficiency of the quadratic estimators is studied for a scalar random signal and on a real model of a wind tunnel system.

1. PROBLEM STATEMENT

The Kalman framework involves estimation of the state of a continuous-time linear Gaussian dynamic system with additive white noise,

\[ \dot x_t = F_t x_t + G_t v_t,\qquad y_t = H_t x_t + w_t,\qquad t \geqslant 0. \qquad (1.1) \]

Here, \({{x}_{t}} \in {{\mathbb{R}}^{n}}\) is a state vector, \({{y}_{t}} \in {{\mathbb{R}}^{m}}\) is an observation vector, \({{v}_{t}} \in {{\mathbb{R}}^{r}}\) and \({{w}_{t}} \in {{\mathbb{R}}^{m}}\) are zero-mean Gaussian white noises with intensities Qt and Rt, respectively, i.e., \({\mathbf{E}}({{v}_{t}}v_{s}^{{\text{T}}}) = {{Q}_{t}}{{\delta }_{{t - s}}}\), \({\mathbf{E}}({{w}_{t}}w_{s}^{{\text{T}}}) = {{R}_{t}}{{\delta }_{{t - s}}}\), \({{\delta }_{t}}\) is the Dirac delta-function, \({{F}_{t}} \in {{\mathbb{R}}^{{n \times n}}}\), \({{G}_{t}} \in {{\mathbb{R}}^{{n \times r}}}\), \({{Q}_{t}} \in {{\mathbb{R}}^{{r \times r}}}\), \({{R}_{t}} \in {{\mathbb{R}}^{{m \times m}}}\) and \({{H}_{t}} \in {{\mathbb{R}}^{{m \times n}}}\), \({\mathbf{E}}(X)\) is the expectation of a random vector X.

We assume that the initial state \({{x}_{0}}\sim \mathbb{N}\left( {{{{\bar {x}}}_{0}},{{P}_{0}}} \right)\) and the system and observation noises \({{v}_{t}}\), \({{w}_{t}}\) are mutually uncorrelated.

The problem associated with system (1.1) is to estimate the nonlinear function of the state vector

\[ z_t = f(x_t) \qquad (1.2) \]

from the overall noisy observations \(y_{0}^{t} = \left\{ {{{y}_{s}}:0 \leqslant s \leqslant t} \right\}.\)

There is a multitude of statistics-based methods to estimate an unknown function \(z = f(x)\) from real sensor observations \(y_{0}^{t}.\) We focus on choosing the best estimate \(\hat {z}\) that minimizes the MSE: \(\mathop {\min }\limits_{\hat {z}} {\mathbf{E}}[{{\left\| {z - \hat {z}} \right\|}^{2}}].\) In general, the optimal MMSE solution (hereafter “estimate” or “estimator”) is given by the conditional mean, \(\hat {z} = {\mathbf{E}}(z {\text{|}}{\kern 1pt} y_{0}^{t})\) [26, 27]. The main challenge is how to calculate this conditional mean. In this paper, we solve this problem for the NFS (1.2) within the Kalman filtering framework.

We propose optimal and suboptimal MMSE estimation algorithms for the NFS and discuss their implementation in the next section.

2. OPTIMAL TWO-STAGE MMSE ESTIMATOR FOR GENERAL NFS

Here the optimal two-stage estimator for the general NFS is derived, and a simple suboptimal estimator is also proposed. Both estimators consist of two stages: the optimal Kalman estimate of the state vector \({{\hat {x}}_{t}}\), computed at the first stage, is used at the second stage to estimate the NFS (1.2).

2.1. Optimal Two-Stage Algorithm

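First stage (calculation of Kalman estimate). The estimate \({{\hat {x}}_{t}} = {\mathbf{E}}({{x}_{t}}|y_{0}^{t})\) of the state xt based on the observations \(y_{0}^{t}\), and its error covariance \({{P}_{t}} = {\mathbf{E}}({{e}_{t}}e_{t}^{{\text{T}}}),~\;{{e}_{t}} = {{x}_{t}} - {{\hat {x}}_{t}},\) are given by the continuous Kalman-Bucy filter (KBF) equations [4–6]:

\[ \dot{\hat x}_t = F_t\hat x_t + K_t\,(y_t - H_t\hat x_t),\quad \hat x_0 = \bar x_0,\qquad K_t = P_tH_t^{\text{T}}R_t^{-1}, \]
\[ \dot P_t = F_tP_t + P_tF_t^{\text{T}} + G_tQ_tG_t^{\text{T}} - P_tH_t^{\text{T}}R_t^{-1}H_tP_t,\qquad P_t\big|_{t=0} = P_0. \qquad (2.1) \]

Second stage (optimal estimator for NFS). The optimal estimate of the NFS \({{z}_{t}} = f({{x}_{t}})\) based on the observations \(y_{0}^{t}\) is also a conditional mean, that is,

\[ \hat z_t = \mathbf{E}(z_t\,|\,y_0^t) = \int_{\mathbb{R}^n} f(x)\,p_t(x\,|\,y_0^t)\,dx, \qquad (2.2) \]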

where \({{p}_{t}}(x {\text{|}}{\kern 1pt} y_{0}^{t}) = \mathbb{N}\left( {{{{\hat {x}}}_{t}},{{P}_{t}}} \right)\) is the multivariate conditional Gaussian probability density function determined by the conditional mean \({{\hat {x}}_{t}} = {\mathbf{E}}({{x}_{t}}|y_{0}^{t})\) and covariance \({{P}_{t}} = {\text{cov}}({{x}_{t}},{{x}_{t}} {\text{|}}{\kern 1pt} y_{0}^{t}) = {\mathbf{E}}({{e}_{t}}e_{t}^{{\text{T}}})\).

Thus, the estimate in (2.2) represents the optimal MMSE estimator for the general NFS which depends on the Kalman estimate \({{\hat {x}}_{t}}\) and its error covariance Pt.
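When the integral (2.2) has no closed form, it can be approximated numerically. The following Python sketch is our illustration, not the authors' method. It approximates \(\hat z_t = \mathbf{E}(f(x_t)\,|\,y_0^t)\) for n = 2 by Monte Carlo sampling from \(\mathbb{N}(\hat x_t, P_t)\), using a hand-written 2 × 2 Cholesky factor. The function names `cholesky_2x2` and `mmse_nfs_mc`, the sample size, and the seed are assumptions.

```python
import math
import random


def cholesky_2x2(P):
    """Lower-triangular L with L L^T = P for a symmetric positive definite 2x2 P."""
    l11 = math.sqrt(P[0][0])
    l21 = P[1][0] / l11
    l22 = math.sqrt(P[1][1] - l21 * l21)
    return [[l11, 0.0], [l21, l22]]


def mmse_nfs_mc(f, x_hat, P, n_samples=200_000, seed=0):
    """Monte Carlo approximation of E[f(x1, x2) | y] with x | y ~ N(x_hat, P)."""
    rng = random.Random(seed)
    L = cholesky_2x2(P)
    total = 0.0
    for _ in range(n_samples):
        u1 = rng.gauss(0.0, 1.0)
        u2 = rng.gauss(0.0, 1.0)
        # x = x_hat + L u, with u a standard normal vector
        x1 = x_hat[0] + L[0][0] * u1
        x2 = x_hat[1] + L[1][0] * u1 + L[1][1] * u2
        total += f(x1, x2)
    return total / n_samples
```

Take \(\hat x_t = (1, 2)\), \(P_t = [[2, 0.5], [0.5, 1]]\), and \(f(x) = x_1^2 + x_2^2\). The Monte Carlo value is then close to \(\|\hat x_t\|^2 + \mathrm{tr}\,P_t = 8\), whereas the plug-in value \(f(\hat x_t)\) is 5. Sampling is only a fallback: where a closed form exists, as in the examples of this section, it is preferable.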

In practice, the nonlinear function (1.2) may depend not only on the state vector but also on its estimate. The NFS then takes the form

\[ z_t = f(x_t, \hat x_t). \]

Since the state estimate \({{\hat {x}}_{t}}\) is a known function of the observations, \({{\hat {x}}_{t}}\) = \({{\hat {x}}_{t}}(y_{0}^{t})\), the MMSE estimate of the unknown function \({{z}_{t}} = f({{x}_{t}},{{\hat {x}}_{t}})\) is given by a formula similar to (2.2), namely,

\[ \hat z_t = \mathbf{E}\big[f(x_t,\hat x_t)\,|\,y_0^t\big] = \int_{\mathbb{R}^n} f(x,\hat x_t)\,p_t(x\,|\,y_0^t)\,dx. \]

Next, we consider several theoretical examples of the application of the general nonlinear estimator (2.2).

2.2. Examples of Two-Stage Estimator

Let \({{x}_{t}} = {{\left[ {\begin{array}{*{20}{c}} {{{x}_{{1,t}}}}&{{{x}_{{2,t}}}} \end{array}} \right]}^{{\text{T}}}}\) be a bivariate Gaussian vector; and \({{\hat {x}}_{t}} \in {{\mathbb{R}}^{2}}\) and \({{P}_{t}} \in {{\mathbb{R}}^{{2 \times 2}}}\) are the Kalman estimate and error covariance, respectively.

Example 1 (expected value of the Euclidean norm). The overall estimation error is defined as

\[ z_t = \|e_t\| = \sqrt{e_{1,t}^2 + e_{2,t}^2},\qquad e_t = x_t - \hat x_t. \]

Since the Euclidean norm of the error depends only on the difference between the state vector and its estimate, \({\text{||}}{{e}_{t}}{\text{||}} = \sqrt {e_{t}^{{\text{T}}}{{e}_{t}}} = \sqrt {{{{({{x}_{t}} - {{{\hat {x}}}_{t}})}}^{{\text{T}}}}({{x}_{t}} - {{{\hat {x}}}_{t}})} ,\) formula (2.2) can be transformed as follows:

\[ \hat z_t = \mathbf{E}(\|e_t\|\,|\,y_0^t) = \int_{\mathbb{R}^2} \|e\|\,p_t(e\,|\,y_0^t)\,de, \]

where \({{p}_{t}}(e {\text{|}}{\kern 1pt} y_{0}^{t}) = \mathbb{N}\left( {0,{{P}_{t}}} \right)\) is the conditional probability density function of the error \({{e}_{t}} = {{x}_{t}} - {{\hat {x}}_{t}}.\)

Next, using [28], we obtain an analytical expression for the MMSE estimate of the Euclidean norm \(z_t = \|e_t\|\),

where \({}_{2}F_{1}(a,b;c;x)\) is the Gauss hypergeometric function, and λ1,t and λ2,t are the eigenvalues of Pt.
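The display formula for this estimate is not reproduced here. One closed form consistent with the description above (the eigenvalues of \(P_t\) and the Gauss hypergeometric function) follows from writing \(e_t\) in the eigenbasis of \(P_t\):
\(\mathbf{E}\|e_t\| = \sqrt{\pi\lambda_{\max}/2}\;{}_2F_1\!\left(-\tfrac12, \tfrac12; 1; 1 - \lambda_{\min}/\lambda_{\max}\right)\).

This is our own derivation and may differ in form from the expression in [28]. The Python sketch below evaluates it by summing the hypergeometric series directly. The function names are hypothetical, and a nonsingular \(P_t\) is assumed; for \(\lambda_{\min} = 0\) the series still converges, but slowly.

```python
import math


def hyp2f1(a, b, c, x, tol=1e-15, max_terms=100_000):
    """Gauss hypergeometric series 2F1(a, b; c; x), summed term by term (|x| < 1)."""
    term, total = 1.0, 1.0
    for n in range(max_terms):
        # ratio of consecutive series terms: (a+n)(b+n) / ((c+n)(n+1)) * x
        term *= (a + n) * (b + n) / ((c + n) * (n + 1)) * x
        total += term
        if abs(term) < tol:
            break
    return total


def expected_norm_2d(lam1, lam2):
    """E||e|| for e ~ N(0, P) in R^2, given the eigenvalues lam1, lam2 > 0 of P."""
    lam_max, lam_min = max(lam1, lam2), min(lam1, lam2)
    k2 = 1.0 - lam_min / lam_max  # lies in [0, 1) for a nonsingular P
    return math.sqrt(math.pi * lam_max / 2.0) * hyp2f1(-0.5, 0.5, 1.0, k2)
```

For equal eigenvalues the formula reduces to the Rayleigh mean \(\sqrt{\pi\lambda/2}\). For eigenvalues 2 and 1 it gives approximately 1.524.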

Example 2 (feedback control depending on the maximum of the state coordinates). In mechanical systems, a piecewise feedback control law is given by

where D is a distance threshold. Then the MMSE estimate of the maximum \({{z}_{t}} = {\text{max}}\left( {{{x}_{{1,t}}},{{x}_{{2,t}}}} \right)\) is obtained from [29],

where ϕ(⋅) and Φ(⋅) are the standard Gaussian probability density and cumulative distribution functions, respectively.
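The formula from [29] is not reproduced here. For reference, the classical closed-form mean of the maximum of two jointly Gaussian variables is \(\mathbf{E}\max(x_1,x_2) = \mu_1\Phi(\alpha) + \mu_2\Phi(-\alpha) + s\,\phi(\alpha)\), with \(s^2 = P_{11} + P_{22} - 2P_{12}\) and \(\alpha = (\mu_1 - \mu_2)/s\). We assume it agrees with the expression used in the paper up to notation. The Python sketch below applies it with \((\mu_1,\mu_2) = \hat x_t\) and covariance \(P_t\); the function names are ours.

```python
import math


def std_normal_pdf(u):
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)


def std_normal_cdf(u):
    return 0.5 * (1.0 + math.erf(u / math.sqrt(2.0)))


def mmse_max(x_hat, P):
    """E[max(x1, x2) | y] for (x1, x2) | y ~ N(x_hat, P)."""
    s = math.sqrt(P[0][0] + P[1][1] - 2.0 * P[0][1])  # standard deviation of x1 - x2
    m1, m2 = x_hat
    if s == 0.0:  # x1 - x2 is deterministic, so the maximum is known exactly
        return max(m1, m2)
    alpha = (m1 - m2) / s
    return m1 * std_normal_cdf(alpha) + m2 * std_normal_cdf(-alpha) + s * std_normal_pdf(alpha)
```

The optimal estimate is never smaller than the plug-in value \(\max(\hat x_{1,t}, \hat x_{2,t})\), and the gap is largest when the two estimated coordinates are close relative to \(s\).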

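Example 3 (estimation of a sine function). Let θ be a random unknown angle measured in the presence of additive white noise. Then

\[ \dot x_t = 0,\quad x_0 = \theta \sim \mathbb{N}(m_\theta,\sigma_\theta^2),\qquad y_t = x_t + w_t, \qquad (2.7) \]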

where \({{x}_{t}} = \theta ,\) and wt is a zero-mean Gaussian white noise with intensity r.

The KBF equations (2.1) become

\[ \dot{\hat x}_t = \frac{P_t}{r}\,(y_t - \hat x_t),\quad \hat x_0 = m_\theta,\qquad \dot P_t = -\frac{P_t^2}{r},\quad P_0 = \sigma_\theta^2. \qquad (2.8) \]

Integrating the Riccati equation for \({{P}_{t}} = {\mathbf{E}}[{{(\theta - {{\hat {x}}_{t}})}^{2}}]\), we obtain \({{P}_{t}} = \sigma _{\theta }^{2}{\text{/}}(1 + t\sigma _{\theta }^{2}{\text{/}}r)\). Next, consider the sine of the unknown angle θ, so that the NFS becomes \(z = f(\theta ) = \sin \theta \).
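For this model the variance part of the KBF is the Riccati equation \(\dot P_t = -P_t^2/r\), \(P_0 = \sigma_\theta^2\). It does not involve the data, so \(P_t\) can also be obtained numerically; that is how one would proceed for models without a closed-form solution. The sketch below is an illustration with hypothetical function names and an assumed step size. It integrates the Riccati equation by the explicit Euler method and compares the result with \(P_t = \sigma_\theta^2/(1 + t\sigma_\theta^2/r)\).

```python
def riccati_euler(sigma2, r, t_end, dt=1e-4):
    """Integrate dP/dt = -P**2 / r, P(0) = sigma2, with explicit Euler steps of size dt."""
    P = sigma2
    for _ in range(int(round(t_end / dt))):
        P -= dt * P * P / r
    return P


def riccati_closed_form(sigma2, r, t):
    """Closed-form solution P_t = sigma2 / (1 + t * sigma2 / r)."""
    return sigma2 / (1.0 + t * sigma2 / r)
```

With \(\sigma_\theta^2 = 2\), r = 1, and t = 1, both functions give approximately 2/3. The Euler error shrinks in proportion to the step size.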

1. Optimal estimate. Using (2.2), the optimal estimate of the sine is

\[ \hat z_t = \mathbf{E}(\sin\theta\,|\,y_0^t) = \sin\hat\theta_t\,\exp(-P_t/2), \qquad (2.9) \]

where \({{\hat {\theta }}_{t}} = {{\hat {x}}_{t}}\) and Pt are determined by (2.8).

2. Suboptimal estimate. In parallel with the optimal mean square estimate (2.9), we consider the simple suboptimal estimate \({{\tilde {z}}_{t}} = f({{\hat {\theta }}_{t}}) = {\text{sin}}{{\hat {\theta }}_{t}}\).
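For a Gaussian conditional density, the integral (2.2) for \(f(\theta) = \sin\theta\) has a well-known closed form. Since \(\mathbf{E}(e^{i\theta}\,|\,y_0^t) = e^{i\hat\theta_t - P_t/2}\), taking the imaginary part gives \(\hat z_t = \sin\hat\theta_t\,e^{-P_t/2}\). In other words, the optimal estimate shrinks the plug-in value \(\sin\hat\theta_t\) toward zero. The Python sketch below, with hypothetical function names, computes both estimates and cross-checks the optimal one by Monte Carlo.

```python
import math
import random


def sine_estimates(theta_hat, P):
    """Optimal (conditional-mean) and suboptimal (plug-in) estimates of sin(theta)."""
    z_opt = math.sin(theta_hat) * math.exp(-0.5 * P)  # E[sin theta | y], theta | y ~ N(theta_hat, P)
    z_sub = math.sin(theta_hat)                       # f(theta_hat)
    return z_opt, z_sub


def sine_mmse_mc(theta_hat, P, n=200_000, seed=1):
    """Monte Carlo cross-check of the optimal estimate."""
    rng = random.Random(seed)
    s = math.sqrt(P)
    return sum(math.sin(rng.gauss(theta_hat, s)) for _ in range(n)) / n
```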

To compare the estimation accuracy of the two estimates, we derive analytical formulas for their MSEs, \(P_{{z,t}}^{{{\text{opt}}}} = {\mathbf{E}}[{{({\text{sin}}\theta - {{\hat {z}}_{t}})}^{2}}]\) and \(P_{{z,t}}^{{{\text{sub}}}} = {\mathbf{E}}[{{\left( {\sin \theta - {{{\tilde {z}}}_{t}}} \right)}^{2}}],\) and carry out a comparative analysis. We obtain

where

Figure 2 illustrates the exact MSEs \(P_{{z,t}}^{{{\text{opt}}}}\) and \(P_{{z,t}}^{{{\text{sub}}}}\) for the parameters \({{m}_{\theta }} = 0\), \(\sigma _{\theta }^{2} = 2\), and \(r = 1\), and Fig. 3 shows the relative error \({{\Delta }_{t}} = {\text{|}}(P_{{z,t}}^{{{\text{opt}}}} - P_{{z,t}}^{{{\text{sub}}}}){\text{/}}P_{{z,t}}^{{{\text{opt}}}}{\text{|}} \cdot 100\% .\) As expected, Figs. 2 and 3 show that the optimal estimate is better than the suboptimal one, i.e., \(P_{{z,t}}^{{{\text{opt}}}} < P_{{z,t}}^{{{\text{sub}}}}.\) We also observe that the difference between the two estimates becomes negligible as time increases.
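The MSE expressions behind Figs. 2 and 3 are not reproduced here. The following sketch gives one way to compute them exactly under the model of this example; it is our own derivation, not necessarily the paper's form. It relies on three facts:

- marginally, \(\hat\theta_t \sim \mathbb{N}(m_\theta, \sigma_\theta^2 - P_t)\);
- because \(\hat z_t\) is the conditional mean, \(P_{z,t}^{\text{opt}} = \mathbf{E}\sin^2\theta - \mathbf{E}\hat z_t^2\);
- likewise, \(P_{z,t}^{\text{sub}} = P_{z,t}^{\text{opt}} + \mathbf{E}(\hat z_t - \tilde z_t)^2\).

The Gaussian identity \(\mathbf{E}\cos 2u = \cos(2\,\mathbf{E}u)\,e^{-2\operatorname{Var}u}\) then yields closed forms. The function name `sine_mses` is hypothetical.

```python
import math


def sine_mses(t, m, s2, r):
    """Exact MSEs of z_hat = sin(th_hat) exp(-P/2) and z_tilde = sin(th_hat) at time t.

    Model: theta ~ N(m, s2) observed in white noise of intensity r, so that
    P_t = s2 / (1 + t s2 / r), th_hat ~ N(m, s2 - P_t), theta | y ~ N(th_hat, P_t).
    """
    P = s2 / (1.0 + t * s2 / r)
    # E[sin^2 u] = (1 - E[cos 2u]) / 2 and E[cos 2u] = cos(2 mean) exp(-2 var) for Gaussian u
    e_sin2_theta = 0.5 * (1.0 - math.cos(2.0 * m) * math.exp(-2.0 * s2))
    e_sin2_hat = 0.5 * (1.0 - math.cos(2.0 * m) * math.exp(-2.0 * (s2 - P)))
    p_opt = e_sin2_theta - math.exp(-P) * e_sin2_hat              # E[sin^2 theta] - E[z_hat^2]
    p_sub = p_opt + (1.0 - math.exp(-0.5 * P)) ** 2 * e_sin2_hat  # + E[(z_hat - z_tilde)^2]
    return p_opt, p_sub
```

With \(m_\theta = 0\), \(\sigma_\theta^2 = 2\), and r = 1, the relative gap \((P^{\text{sub}} - P^{\text{opt}})/P^{\text{opt}}\) is about 15% at t = 1 and about 2% at t = 10. This is consistent with the observation that the difference fades with time.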

Example 4 (estimation of the distance between a random location θ and a given point a). In this case, the NFS is \(z = \left| {\theta - a} \right|\). Under the model (2.7) for a random location \({{x}_{t}} = \theta \), we use the optimal estimate (2.2) for the distance \(\left| {\theta - a} \right|\). Then

where \({{\hat {\theta }}_{t}} = {{\hat {x}}_{t}}\) and Pt are determined by (2.8).

In the particular case a = 0, the MMSE estimate of the unknown modulus, \(z = f\left( \theta \right) = \left| \theta \right|\), takes the form

In addition, we consider the simple suboptimal estimate \({{\tilde {z}}_{t}} = f({{\hat {\theta }}_{t}}) = {\text{|}}{{\hat {\theta }}_{t}}{\text{|}}.\)

To study the behavior of the MSEs \(P_{t}^{{{\text{opt}}}} = {\mathbf{E}}[{{\left( {\left| \theta \right| - {{{\hat {z}}}_{t}}} \right)}^{2}}]\) and \(P_{t}^{{{\text{sub}}}} = {\mathbf{E}}[{{\left( {\left| \theta \right| - {{{\tilde {z}}}_{t}}} \right)}^{2}}]\), we set \({{m}_{\theta }} = 1\), \(\sigma _{\theta }^{2} = 1\), and \(r = 0.2\). The MSEs were computed by Monte Carlo simulation with 1000 runs. As shown in Fig. 4, the optimal estimate \({{\hat {z}}_{t}}\) substantially outperforms the suboptimal one \({{\tilde {z}}_{t}}\).
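The display formulas for this example are not reproduced here. Since \(\theta\) given \(y_0^t\) is \(\mathbb{N}(\hat\theta_t, P_t)\), the estimate is the mean of a folded normal distribution:

\[ \mathbf{E}(|\theta - a|\,|\,y_0^t) = \sqrt{2P_t/\pi}\,e^{-(\hat\theta_t - a)^2/(2P_t)} + (\hat\theta_t - a)\bigl[1 - 2\Phi\bigl(-(\hat\theta_t - a)/\sqrt{P_t}\bigr)\bigr]. \]

We state this standard result ourselves and assume it matches the paper's expression up to notation. The Python sketch below implements it alongside the suboptimal estimate; the names are hypothetical.

```python
import math


def std_normal_cdf(u):
    return 0.5 * (1.0 + math.erf(u / math.sqrt(2.0)))


def mmse_abs_distance(theta_hat, P, a=0.0):
    """E[|theta - a| | y] for theta | y ~ N(theta_hat, P): mean of a folded normal."""
    s = math.sqrt(P)
    d = theta_hat - a
    return (s * math.sqrt(2.0 / math.pi) * math.exp(-d * d / (2.0 * P))
            + d * (1.0 - 2.0 * std_normal_cdf(-d / s)))


def suboptimal_abs_distance(theta_hat, a=0.0):
    """Plug-in estimate |theta_hat - a|."""
    return abs(theta_hat - a)
```

By Jensen's inequality, the optimal value is never smaller than \(|\hat\theta_t - a|\). The two differ most when \(\hat\theta_t\) is close to a relative to \(\sqrt{P_t}\), which is where the suboptimal estimate loses accuracy.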

2.3. Alternative Idea of Suboptimal Estimation of NFS

An alternative to the proposed optimal MMSE solution (2.1), (2.2) is to treat the NFS, \({{z}_{t}} = f({{x}_{t}})\), as an additional state variable zt determined by the nonlinear stochastic equation \({{\dot {z}}_{t}} = a\left( {{{x}_{t}},{{z}_{t}}} \right) + b\left( {{{x}_{t}},{{z}_{t}}} \right){{v}_{t}}.\) Here the drift coefficient \(a\left( {{{x}_{t}},{{z}_{t}}} \right)\) and the diffusion matrix \(b\left( {{{x}_{t}},{{z}_{t}}} \right)\) are obtained by applying the Ito formula to the composite function f(xt), whose argument satisfies \({{\dot {x}}_{t}} = {{F}_{t}}{{x}_{t}} + {{G}_{t}}{{v}_{t}}\) [30]. Including the variable zt in the state \({{x}_{t}} \in {{\mathbb{R}}^{n}}\), we obtain a system with the augmented state \({{X}_{t}} = [\begin{array}{*{20}{c}} {x_{t}^{{\text{T}}}}&{{{z}_{t}}} \end{array}]^{\text{T}} \in {{\mathbb{R}}^{{n + 1}}}\). Thus, the problem of estimating the NFS reduces to a nonlinear filtering problem in which the original state xt is replaced by the augmented state Xt, and approximate nonlinear filtering techniques can be used to estimate xt and zt simultaneously. Many approximate filters have been proposed [5, 6, 30–35]. Among them we single out the extended Kalman filter [5], the conditionally optimal Pugachev filters with given and optimal structures [30–32], and the unscented Kalman filter [33]. However, the computational complexity of approximate nonlinear filters is considerably greater than that of the linear Kalman-Bucy filter. For this reason, the proposed two-stage procedure (2.1), (2.2) is more promising than estimation of the augmented state Xt.
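As a simple illustration of this construction (our example, not taken from the paper), let n = 1 and f(x) = x², with scalar \(F_t\), \(G_t\), \(Q_t\). Interpreting the state equation in the Ito sense, \(d(x_t^2) = 2x_t\,dx_t + G_t^2Q_t\,dt\), so that

\[ \dot z_t = \underbrace{2F_t z_t + G_t^2 Q_t}_{a(x_t,\,z_t)} + \underbrace{2G_t x_t}_{b(x_t,\,z_t)}\,v_t . \]

Even in this simplest case the diffusion coefficient depends on the state. The augmented model for \(X_t\) is therefore no longer linear Gaussian, and the Kalman-Bucy filter cannot be applied to it directly.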

2.4. Simple Suboptimal Estimator for NFS

In parallel with the optimal estimator \({{\hat {z}}_{t}} = {\mathbf{E}}({{z}_{t}} {\text{|}}{\kern 1pt} y_{0}^{t})\), we propose a simple suboptimal estimate of the NFS, \({{\tilde {z}}_{t}} = f({{\hat {x}}_{t}})\). It depends only on the Kalman estimate of the state \({{\hat {x}}_{t}}\) and, unlike the optimal estimate \({{\hat {z}}_{t}}\), does not require the error covariance Pt. The numerical results show that the suboptimal estimate \({{\tilde {z}}_{t}}\) may be either close to the optimal one (Example 3) or considerably worse (Example 4 and, below, Example 6).

2.5. Real-Time Implementation of MMSE Estimator

The error covariance Pt can be precomputed because it does not depend on the sensor observations \(y_{0}^{t} = \left\{ {{{y}_{s}}:0 \leqslant s \leqslant t} \right\}.\) It depends only on the noise intensities \({{Q}_{t}}\), Rt, the system matrices \({{F}_{t}},\;{{G}_{t}},\;{{H}_{t}}\), and the initial condition P0, which are part of the system and observation model (1.1). Thus, once the observation schedule has been fixed, the real-time implementation of the MMSE estimator \({{\hat {z}}_{t}} = {{\hat {z}}_{t}}\left( {{{{\hat {x}}}_{t}},{{P}_{t}}} \right)\) requires only the computation of the Kalman estimate \({{\hat {x}}_{t}}\).

2.6. Closed-Form MMSE Estimator

For the general NFS, \({{z}_{t}} = f\left( {{{x}_{t}}} \right)\), calculation of the optimal MMSE estimate reduces to evaluation of the multivariate integral (2.2). Analytic evaluation of this integral (a closed-form MMSE estimator) is possible only in special cases, such as Examples 1–4.

Next, we consider a polynomial function of the state (polynomial form), for which simple closed-form MMSE estimators that depend only on the Kalman statistics \(\left( {{{{\hat {x}}}_{t}},{{P}_{t}}} \right)\) can be obtained.

3. OPTIMAL CLOSED-FORM MMSE ESTIMATOR FOR POLYNOMIAL FUNCTIONS

Consider a special NFS (1.2) that is an arbitrary multivariate polynomial function (form), such as

where \(x \in {{\mathbb{R}}^{n}}\) and \(A,B \in {{\mathbb{R}}^{{n \times n}}}\). For simplicity, we omit the subscript t in this section.

3.1. Optimal Polynomial Estimators

In the case of the polynomial forms (3.1), the optimal estimate \(\hat {z} = {\mathbf{E}}[f\left( x \right) {\text{|}}{\kern 1pt} y_{0}^{t}]\) has a closed-form solution. The reason is that the conditional expectation depends on higher-order moments of the conditional Gaussian distribution \(\mathbb{N}(\hat {x},P)\), and these can be expressed explicitly in terms of the first- and second-order moments, namely, the Kalman estimate and its error covariance \((\hat {x},P)\). The following theorem gives the optimal polynomial estimators.

Theorem 1. The optimal MMSE estimators \(\hat {z} = {\mathbf{E}}[f\left( x \right) {\text{|}}{\kern 1pt} y_{0}^{t}]\) for the polynomial forms (3.1) are given by the following analytical formulas:

The derivation of the estimators (3.2) is given in Appendix.
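Formulas (3.1) and (3.2) are not reproduced here. To illustrate why polynomial estimators have closed forms, the scalar sketch below (our illustration, with hypothetical names) uses the standard Gaussian moments \(\mathbf{E}x^3 = \hat x^3 + 3\hat xP\) and \(\mathbf{E}x^4 = \hat x^4 + 6\hat x^2P + 3P^2\) for \(x \sim \mathbb{N}(\hat x, P)\). We assume the multivariate estimators of Theorem 1 rest on analogous matrix moment identities.

```python
def gaussian_raw_moments(mu, p):
    """E[x^k], k = 1..4, for scalar x ~ N(mu, p), expressed through (mu, p) only."""
    return {
        1: mu,
        2: mu**2 + p,
        3: mu**3 + 3 * mu * p,
        4: mu**4 + 6 * mu**2 * p + 3 * p**2,
    }


def mmse_polynomial(coeffs, mu, p):
    """E[c0 + c1 x + ... + c4 x^4 | y] for x | y ~ N(mu, p); coeffs[k] = c_k."""
    if len(coeffs) > 5:
        raise ValueError("this sketch handles polynomials of degree <= 4")
    moments = gaussian_raw_moments(mu, p)
    return coeffs[0] + sum(c * moments[k] for k, c in enumerate(coeffs) if k > 0)
```

Take \(f(x) = 1 + x^3\) with \(\hat x = 1\) and P = 2. The optimal estimate is 1 + 7 = 8, while the plug-in value \(f(\hat x)\) is 2.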

Example 5 (optimal and suboptimal estimation of the Euclidean norm). To determine an optimal relative location in wireless sensor networks, we need to evaluate a cost function representing the Euclidean norm of an overall error,

\[ z = \|e\| = \sqrt{e^{\text{T}}e}, \]

where e is an n-dimensional random error, \(e\sim \mathbb{N}(0,P)\).

If n = 2, the optimal MMSE estimate \(\hat {z}\) is determined by formulas (2.5) and (2.6):

If n > 2, it is difficult to derive an analytical formula for the optimal estimate \(\hat {z}\) similar to (3.3). In this case, we propose a simple suboptimal estimate \(\tilde {z}\) of the Euclidean norm. First, using the quadratic estimator (3.2), we calculate the MMSE estimate of the squared norm \({{\left\| {{\kern 1pt} e{\kern 1pt} } \right\|}^{2}} = {{e}^{{\text{T}}}}e\); then we take the square root. Since the error has zero mean, \(\mathbf{E}(e^{\text{T}}e) = \operatorname{tr}(P)\), and we have

\[ \tilde z = \sqrt{\operatorname{tr}(P)}, \qquad (3.4) \]

where tr(P) denotes the trace of a matrix P.
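The gap between (3.4) and the exact value is easy to check numerically for n > 2. By Jensen's inequality, \(\sqrt{\operatorname{tr}P} = \sqrt{\mathbf{E}\|e\|^2} \ge \mathbf{E}\|e\|\), so the suboptimal estimate always overestimates the expected norm. The Python sketch below compares \(\sqrt{\operatorname{tr}P}\) with a Monte Carlo estimate of \(\mathbf{E}\|e\|\). For simplicity it is restricted to a diagonal P, which is an assumption; the names, sample size, and seed are hypothetical.

```python
import math
import random


def suboptimal_norm(p_diag):
    """z_tilde = sqrt(tr P): square root of the MMSE estimate of ||e||^2 = e^T e."""
    return math.sqrt(sum(p_diag))


def expected_norm_mc(p_diag, n=100_000, seed=2):
    """Monte Carlo estimate of E||e|| for e ~ N(0, diag(p_diag))."""
    rng = random.Random(seed)
    sds = [math.sqrt(p) for p in p_diag]
    total = 0.0
    for _ in range(n):
        total += math.sqrt(sum(rng.gauss(0.0, s) ** 2 for s in sds))
    return total / n
```

For \(P = I_3\), the exact value is the mean of a chi distribution with three degrees of freedom, \(2\sqrt{2/\pi} \approx 1.596\), versus \(\sqrt 3 \approx 1.732\).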

To study the behavior of the optimal and suboptimal estimates (3.3) and (3.4) for n = 2, we set

Then \(\hat {z} = 1.524\) and \(\tilde {z} = 1.732\). The relative error \(\left| {\frac{{\tilde {z} - \hat {z}}}{{\hat {z}}}} \right| \cdot 100\% = 13.6\% \) shows that the suboptimal estimate \(\tilde {z}\) is noticeably worse than the optimal one \(\hat {z}\).

3.2. Exact Matrix Formulas for Quadratic Estimators

Consider an arbitrary quadratic form (QF)

\[ z_t = x_t^{\text{T}}Ax_t. \qquad (3.5) \]

Using Theorem 1, the optimal quadratic estimator (3.2) can be calculated explicitly in terms of the state estimate \({{\hat {x}}_{t}}\) and the error covariance Pt:

\[ \hat z_t = \hat x_t^{\text{T}}A\hat x_t + \operatorname{tr}(AP_t). \qquad (3.6) \]

In parallel with the optimal estimate, we propose a suboptimal estimate \(\tilde {z}\) of the QF. It depends only on the Kalman estimate \({{\hat {x}}_{t}}\) and, unlike the optimal estimate (3.6), does not require the error covariance Pt. The suboptimal estimate is obtained by evaluating the QF directly at the point \({{x}_{t}} = {{\hat {x}}_{t}}\):

\[ \tilde z_t = \hat x_t^{\text{T}}A\hat x_t. \qquad (3.7) \]

Let us compare the estimation accuracy of the optimal (3.6) and suboptimal (3.7) estimates. The following result gives their MSEs explicitly.
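Both quadratic estimators need only a quadratic form and a trace: the optimal estimate is \(\hat z_t = \hat x_t^{\text{T}}A\hat x_t + \operatorname{tr}(AP_t)\), and the suboptimal one is \(\tilde z_t = \hat x_t^{\text{T}}A\hat x_t\). The sketch below, which uses plain Python lists and hypothetical function names, computes both. Their difference is the deterministic correction \(\operatorname{tr}(AP_t)\).

```python
def quad_form(x, A):
    """x^T A x for a vector x and a square matrix A given as nested lists."""
    n = len(x)
    return sum(x[i] * A[i][j] * x[j] for i in range(n) for j in range(n))


def trace_of_product(A, P):
    """tr(A P) = sum over i, k of A[i][k] * P[k][i]."""
    n = len(A)
    return sum(A[i][k] * P[k][i] for i in range(n) for k in range(n))


def quadratic_estimates(x_hat, P, A):
    """Return (optimal, suboptimal) estimates of z = x^T A x given the Kalman pair (x_hat, P)."""
    z_sub = quad_form(x_hat, A)
    z_opt = z_sub + trace_of_product(A, P)
    return z_opt, z_sub
```

Take \(\hat x_t = (1, 2)\), \(P_t = [[2, 0.5], [0.5, 1]]\), and A = I. The optimal estimate is 8 and the suboptimal one is 5.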

Theorem 2. The mean square errors \(P_{{z,t}}^{{{\text{opt}}}}\) and \(P_{{z,t}}^{{{\text{sub}}}}\) are given by

and

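respectively. Here the unconditional mean \({{\mu }_{t}} = {\mathbf{E}}\left( {{{x}_{t}}} \right)\) and covariance \({{C}_{t}} = {\text{cov}}\left( {{{x}_{t}},{{x}_{t}}} \right)\) of the state vector xt are determined by the equations of the method of moments [6]:

\[ \dot\mu_t = F_t\mu_t,\quad \mu_0 = \bar x_0,\qquad \dot C_t = F_tC_t + C_tF_t^{\text{T}} + G_tQ_tG_t^{\text{T}},\quad C_0 = P_0. \qquad (3.10) \]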

The derivation of the MSEs (3.8) and (3.9) is given in Appendix.

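Note that the difference between \(P_{{z,t}}^{{{\text{opt}}}}\) and \(P_{{z,t}}^{{{\text{sub}}}}\) is \(P_{{z,t}}^{{{\text{sub}}}} - P_{{z,t}}^{{{\text{opt}}}} = \operatorname{tr}^{2}(AP_{t}).\) This holds because \(\tilde z_t = \hat z_t - \operatorname{tr}(AP_t)\), where \(\operatorname{tr}(AP_t)\) is deterministic, and the error \(z_t - \hat z_t\) of the conditional mean has zero mean.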

Thus, equations (3.8)–(3.10) completely define the true MSEs of the optimal and suboptimal quadratic estimators, respectively.

3.3. Theoretical and Practical Examples of Application of Quadratic Estimators

Example 6 (theoretical example: estimation of the power of a scalar signal). Let xt be a scalar random signal measured in additive white noise. Then the system model with a constant drift coefficient a is

\[ \dot x_t = a x_t + v_t,\qquad y_t = x_t + w_t, \]

where \({{{v}}_{t}}\) and wt are the uncorrelated white Gaussian noises with intensities q and r, respectively, and x0 ~ \(\mathbb{N}({{m}_{0}},\sigma _{0}^{2}).\)

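The KBF equations (2.1) give the following:

\[ \dot{\hat x}_t = a\hat x_t + \frac{P_t}{r}\,(y_t - \hat x_t),\quad \hat x_0 = m_0,\qquad \dot P_t = 2aP_t + q - \frac{P_t^2}{r},\quad P_0 = \sigma_0^2. \]

Consider the power of the signal xt, which is proportional to its square. Then \({{z}_{t}} = f({{x}_{t}}) = x_{t}^{2}\).

Using (3.6) and (3.7), we obtain the optimal and suboptimal estimates of the signal power, respectively,

\[ \hat z_t = \hat x_t^2 + P_t,\qquad \tilde z_t = \hat x_t^2. \]

Let us compare the accuracy of the estimates. Using Theorem 2, we obtain the true MSEs,

\[ P_{z,t}^{\text{opt}} = 2P_t^2 + 4P_t\,(\mu_t^2 + C_t - P_t),\qquad P_{z,t}^{\text{sub}} = P_{z,t}^{\text{opt}} + P_t^2, \]

where the mean μt and covariance Ct of the signal xt are determined by (3.10) as follows:

\[ \dot\mu_t = a\mu_t,\quad \mu_0 = m_0,\qquad \dot C_t = 2aC_t + q,\quad C_0 = \sigma_0^2. \]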

Figure 5 shows the relative error \({{\Delta }_{t}} = {\text{|}}(P_{{z,t}}^{{{\text{opt}}}} - P_{{z,t}}^{{{\text{sub}}}}){\text{/}}P_{{z,t}}^{{{\text{opt}}}}{\text{|}} \cdot 100\% \) for \(a = - 1\), \(q = 0.5\), \({{m}_{0}} = 0\), \(\sigma _{0}^{2} = 4\), and \(r = 0.1\). From Fig. 5 we observe that the relative error Δt varies from 3 to 6% within the interval \(t \in [0.1;~~1.1]\) and then increases. In the steady-state regime (t > 4), the relative error reaches \({{\Delta }_{\infty }} = 20.4{{\% }}\), and the MSEs are \(P_{\infty }^{{{\text{opt}}}} = 0.1029\) and \(P_{\infty }^{{{\text{sub}}}} = 0.1239\). Thus, the numerical results show that the suboptimal estimate \({{\tilde {z}}_{t}} = \hat {x}_{t}^{2}\) may be considerably worse than the optimal one \({{\hat {z}}_{t}} = \hat {x}_{t}^{2} + {{P}_{t}}.\)
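The steady-state numbers can be reproduced from the scalar model; the sketch below is our own specialization, not the paper's formula. For \(x_t\,|\,y_0^t \sim \mathbb{N}(\hat x_t, P_t)\), \(\operatorname{Var}(x_t^2\,|\,y_0^t) = 2P_t^2 + 4\hat x_t^2P_t\), and \(\mathbf{E}\hat x_t^2 = \mu_t^2 + C_t - P_t\). This gives \(P_{z,t}^{\text{opt}} = 2P_t^2 + 4P_t(\mu_t^2 + C_t - P_t)\) and \(P_{z,t}^{\text{sub}} = P_{z,t}^{\text{opt}} + P_t^2\). In steady state (a < 0), \(P_\infty = r\bigl(a + \sqrt{a^2 + q/r}\bigr)\), \(\mu_\infty = 0\), and \(C_\infty = q/(2|a|)\). The function name is hypothetical.

```python
import math


def example6_steady_state(a, q, r):
    """Steady-state P, MSE of z_hat = x_hat**2 + P, and MSE of z_tilde = x_hat**2."""
    if a >= 0:
        raise ValueError("a steady state requires a stable signal model (a < 0)")
    # Positive root of the algebraic Riccati equation 2*a*P + q - P**2 / r = 0
    P = r * (a + math.sqrt(a * a + q / r))
    # Steady-state moments of x_t from the method of moments: mu -> 0, C -> q / (2|a|)
    mu, C = 0.0, q / (-2.0 * a)
    e_xhat2 = mu**2 + C - P                  # E[x_hat^2] = E[x^2] - P (orthogonality)
    p_opt = 2.0 * P**2 + 4.0 * P * e_xhat2   # E[Var(x^2 | y)] for Gaussian x | y
    p_sub = p_opt + P**2                     # extra term tr^2(A P) with A = 1
    return P, p_opt, p_sub
```

With \(a = -1\), q = 0.5, and r = 0.1, this yields \(P_\infty^{\text{opt}} \approx 0.1029\), \(P_\infty^{\text{sub}} \approx 0.1239\), and a relative error of about 20.4%. These match the values reported above.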

Example 7 (practical example: wind tunnel system). Here the quadratic estimators are analyzed experimentally using the total kinetic energy of the high-speed closed-air unit wind tunnel system [36]. The state vector \({{x}_{t}} \in {{\mathbb{R}}^{3}}\) consists of the state variables \({{x}_{{1t}}},\;~{{x}_{{2t}}}\), and \({{x}_{{3t}}},\) representing deviations from a chosen equilibrium point of the following quantities: x1, the Mach number; x2, the actuator position (guide vane angle in the driving fan); and x3, the actuator rate. Then the system model is given by

where the initial conditions are \({{\bar {x}}_{0}} = {{\left[ {3~\;28\;10} \right]}^{{\text{T}}}}\) and \({{P}_{0}} = {\text{diag}}\left[ {1\;~1\;~0} \right]\).

The two-sensor measurement model is given by

The intensities of the white noises \({{v}_{t}} \in {{\mathbb{R}}^{3}}\) and \({{w}_{t}} \in {{\mathbb{R}}^{2}}\) are \(Q = {\text{diag}}\left[ {0.01\;0.01~\;0.01} \right]\) and \(R = {\text{diag[}}0.05\,\,0.05{\text{]}}\), respectively.

The total kinetic energy of the actuator can be expressed as the sum of the translational kinetic energy of the center of mass, \({{E}^{{tr}}} = mv_{t}^{2}{\text{/}}2\), and the rotational kinetic energy about the center of mass, \({{E}^{r}} = {\mathbf{I}}\omega _{t}^{2}{\text{/}}2\). Here I is the rotational inertia, \({{\omega }_{t}} = {{\dot {x}}_{{2t}}}\) is the angular velocity, m is the mass, and \({{v}_{t}} = {{x}_{{3t}}}\) is the linear velocity. The energy can then be expressed as the following QF:

where \({{X}_{t}} \in {{\mathbb{R}}^{4}}\) is the extended state vector, I = 0.136 kg·m², and m = 7.39 kg.

Using Theorem 1, the optimal and suboptimal quadratic estimators take the form

where the estimate of the state \({{\hat {X}}_{t}} \in {{\mathbb{R}}^{4}}\) and error covariance \({{P}_{t}} \in {{\mathbb{R}}^{{4 \times 4}}}\) are determined by (2.1).

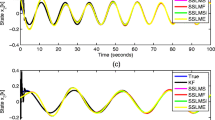

We are interested in the behavior of the MSEs \(P_{z,t}^{\text{opt}} = \mathbf{E}[(z_t - \hat z_t)^2]\) and \(P_{z,t}^{\text{sub}} = \mathbf{E}[(z_t - \tilde z_t)^2]\), which can be calculated using Theorem 2. Figure 6 shows that the optimal estimator performs better than the suboptimal one, i.e., \(P_{z,t}^{\text{opt}} < P_{z,t}^{\text{sub}}\). The relative error \(\Delta_t\) varies from 6.2 to 10% within the initial interval of system operation, t ∈ [0.02; 0.07], and then decreases. For t > 0.07, the MSEs and the relative error are \(P_{z,t}^{\text{opt}} = 0.89\), \(P_{z,t}^{\text{sub}} = 0.94\), and \(\Delta_t = 5.6\%\), respectively.

These results confirm that the proposed optimal quadratic estimator is more suitable for practical data processing.

CONCLUSIONS

In some applications, nonlinear functions of state variables contain useful information about the target systems for control. To estimate an arbitrary NFS, an optimal two-stage MMSE estimation algorithm is proposed. At the first stage, the state estimate is computed by the standard KBF; at the second stage, this estimate is used for MMSE estimation of the NFS.

Particular attention is paid to polynomial functions of the state. In this case, it is possible to derive a closed-form polynomial estimator that depends only on the KBF outputs, namely, the state estimate and its error covariance. Interpretations of a quadratic functional as power or energy are considered in Examples 6 and 7.

In view of the practical importance of NFS, the proposed estimation algorithms are illustrated on theoretical and numerical examples with real NFS. The examples show that the optimal MMSE estimator yields reasonably good estimation accuracy.

Using the MMSE method, an optimal two-stage nonlinear estimator is proposed. We establish that the polynomial estimators (quadratic, cubic, and quartic) can be represented in compact closed forms that depend only on the KBF characteristics (Theorem 1). An important class of quadratic functionals is comprehensively investigated, including the derivation of matrix equations for the true MSE (Theorem 2). The performance of the proposed estimators for real NFS illustrates their theoretical and practical usefulness.

REFERENCES

N. Davari and A. Gholami, “An asynchronous adaptive direct Kalman filter algorithm to improve underwater navigation system performance,” IEEE Sens. J. 17, 1061–1068 (2017).

T. Rajaram, J. M. Reddy, and Y. Xu, “Kalman filter based detection and mitigation of subsynchronous resonance with SSSC,” IEEE Trans. Power Syst. 32, 1400–1409 (2017).

X. Deng and Z. Zhang, “Automatic multihorizons recognition for seismic data based on Kalman filter tracker,” IEEE Geosci. Remote Sensing Lett. 14, 319–323 (2017).

M. S. Grewal, A. P. Andrews, and C. G. Bartone, Global Navigation Satellite Systems, Inertial Navigation, and Integration (Wiley, NJ, 2013).

D. Simon, Optimal State Estimation (Wiley, NJ, 2006).

Y. Bar-Shalom, X. R. Li, and T. Kirubarajan, Estimation with Applications to Tracking and Navigation (Wiley, New York, 2001).

V. I. Arnold, Mathematical Methods of Classical Mechanics (Springer, New York, 1989).

T. T. Cai and M. G. Low, “Optimal adaptive estimation of a quadratic functional,” Ann. Stat. 34, 2298–2325 (2006).

J. Robins, L. Li, E. Tchetgen, and A. van der Vaart, “Higher order influence functions and minimax estimation of nonlinear functionals,” Prob. Stat. 2, 335–421 (2008).

J. Jiao, K. Venkat, Y. Han, and T. Weissman, “Minimax estimation of functionals of discrete distributions,” IEEE Trans. Inform. Theory 61, 2835–2885 (2015).

J. Jiao, K. Venkat, Y. Han, and T. Weissman, “Maximum likelihood estimation of functionals of discrete distributions,” IEEE Trans. Inform. Theory 63, 6774–6798 (2017).

Y. Amemiya and W. A. Fuller, “Estimation for the nonlinear functional relationship,” Ann. Stat. 16, 147–160 (1988).

D. L. Donoho and M. Nussbaum, “Minimax quadratic estimation of a quadratic functional,” J. Complexity 6, 290–323 (1990).

D. S. Grebenkov, “Optimal and suboptimal quadratic forms for noncentered Gaussian processes,” Phys. Rev. E 88, 032140 (2013).

B. Laurent and P. Massart, “Adaptive estimation of a quadratic functional by model selection,” Ann. Stat. 28, 1302–1338 (2000).

I. G. Vladimirov and I. R. Petersen, “Directly coupled observers for quantum harmonic oscillators with discounted mean square cost functionals and penalized back-action,” in Proceedings of the IEEE Conference on Norbert Wiener in the 21st Century, Melbourne, Australia, 2016, pp. 78–83.

K. Sricharan, R. Raich, and A. O. Hero, “Estimation of nonlinear functionals of densities with confidence,” IEEE Trans. Inform. Theory 58, 4135–4159 (2012).

A. Wisler, V. Berisha, A. Spanias, and A. O. Hero, “Direct estimation of density functionals using a polynomial basis,” IEEE Trans. Signal Process. 66, 558–588 (2018).

M. Taniguchi, “On estimation of parameters of Gaussian stationary processes,” J. Appl. Prob. 16, 575–591 (1979).

C. Zhao-Guo and E. J. Hannan, “The distribution of periodogram ordinates,” J. Time Ser. Anal. 1, 73–82 (1980).

D. Janas and R. Sachs, “Consistency for non-linear functions of the periodogram of tapered data,” J. Time Ser. Anal. 16, 585–606 (1995).

G. Fay, E. Moulines, and P. Soulier, “Nonlinear functionals of the periodogram,” J. Time Ser. Anal. 23, 523–553 (2002).

C. Noviello, G. Fornaro, P. Braca, and M. Martorella, “Fast and accurate ISAR focusing based on a Doppler parameter estimation algorithm,” IEEE Geosci. Remote Sens. Lett. 14, 349–353 (2017).

Y. Wu and P. Yang, “Minimax rates of entropy estimation on large alphabets via best polynomial approximation,” IEEE Trans. Inform. Theory 62, 3702–3720 (2016).

Y. Wu and P. Yang, “Optimal entropy estimation on large alphabets via best polynomial approximation,” in Proceedings of the IEEE International Symposium on Information Theory, Hong Kong, 2015, pp. 824–828.

S. O. Haykin, Adaptive Filtering (Prentice Hall, NJ, 2013).

T. K. Moon and W. C. Stirling, Mathematical Methods and Algorithms for Signal Processing (Prentice Hall, NJ, 2000).

A. Coluccia, “On the expected value and higher-order moments of the Euclidean norm for elliptical normal variates,” IEEE Commun. Lett. 17, 2364–2367 (2013).

S. Nadarajah and S. Kotz, “Exact distribution of the max/min of two Gaussian random variables,” IEEE Trans. Very Large Scale Integr. Syst. 16, 210–212 (2008).

V. S. Pugachev and I. N. Sinitsyn, Stochastic Differential Systems. Analysis and Filtering, 2nd ed. (Nauka, Moscow, 1990) [in Russian].

V. S. Pugachev, “Assessment of the state and parameters of continuous nonlinear systems,” Avtom. Telemekh., No. 6, 63–79 (1979).

E. A. Rudenko, “Optimal structure of continuous nonlinear reduced-order Pugachev filter,” J. Comput. Syst. Sci. Int. 52, 866 (2013).

S. J. Julier and J. K. Uhlmann, “Unscented filtering and nonlinear estimation,” Proc. IEEE 92, 401–422 (2004).

K. Ito and K. Xiong, “Gaussian filters for nonlinear filtering problems,” IEEE Trans. Autom. Control 45, 910–927 (2000).

A. Doucet, N. de Freitas, and N. Gordon, Sequential Monte Carlo Methods in Practice (Springer, London, 2001).

E. S. Armstrong and J. S. Tripp, “An application of multivariable design techniques to the control of the national transonic facility,” NASA Technical Paper, No. 1887 (NASA, Washington, DC, 1981), pp. 1–36.

R. Kan, “From moments of sum to moments of product,” J. Multivar. Anal. 99, 542–554 (2008).

B. Holmquist, “Expectations of products of quadratic forms in normal variables,” Stoch. Anal. Appl. 14, 149–164 (1996).


This work was supported by the Incheon National University Research Grant in 2015–2016.

APPENDIX


Proof of Theorem 1. The derivation of the polynomial estimators (3.2) is based on Lemma 1.

Lemma 1. Let \(x \in {{\mathbb{R}}^{n}}\) be a Gaussian random vector, \(x \sim \mathbb{N}(\mu ,S)\), and let \(A,B \in {{\mathbb{R}}^{{n \times n}}}\) be arbitrary matrices. Then it holds that

The derivation of formulas (A.1) is based on their scalar versions given in [37, 38] and on standard transformations of random vectors.

This completes the proof of Lemma 1.

Next, replacing the unconditional expectation and covariance in (A.1) by their conditional versions, \(\mu \to {\mathbf{E}}(x\,{\text{|}}\,y_{0}^{t}) = \hat {x}\) and \(S \to {\text{cov}}(x,x\,{\text{|}}\,y_{0}^{t}) = P\), we obtain (3.2).

This completes the proof of Theorem 1.
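As a concrete instance of the substitution \(\mu \to \hat{x}\), \(S \to P\), consider the simplest polynomial NFS, a quadratic form \(z = x^{\text{T}}Ax\). The standard Gaussian identity \({\mathbf{E}}(x^{\text{T}}Ax) = \mu^{\text{T}}A\mu + {\text{tr}}(AS)\) then gives \(\hat{z} = \hat{x}^{\text{T}}A\hat{x} + {\text{tr}}(AP)\). The Python sketch below assumes that (3.2) covers this case; the function names and numerical values are hypothetical, and the plug-in value \(\hat{x}^{\text{T}}A\hat{x}\) is included only for comparison. A Monte Carlo average over \(x \sim \mathbb{N}(\hat{x}, P)\) checks the closed form.

```python
import numpy as np


def quadratic_mmse(x_hat, P, A):
    """Optimal estimate E(x^T A x | y) when x | y ~ N(x_hat, P).

    Applies E(x^T A x) = mu^T A mu + tr(A S) with mu -> x_hat, S -> P.
    """
    return float(x_hat @ A @ x_hat + np.trace(A @ P))


def quadratic_plugin(x_hat, A):
    """Suboptimal plug-in estimate f(x_hat) = x_hat^T A x_hat (biased by tr(AP))."""
    return float(x_hat @ A @ x_hat)


def mc_conditional_mean(x_hat, P, A, n_samples=200_000, seed=0):
    """Monte Carlo approximation of E(x^T A x | y) using draws x ~ N(x_hat, P)."""
    rng = np.random.default_rng(seed)
    x = rng.multivariate_normal(x_hat, P, size=n_samples)
    # Row-wise quadratic forms x_i^T A x_i, then their sample mean
    return float(np.einsum("ij,jk,ik->i", x, A, x).mean())


if __name__ == "__main__":
    x_hat = np.array([1.0, -0.5])            # hypothetical Kalman estimate
    P = np.array([[0.4, 0.1], [0.1, 0.3]])   # hypothetical error covariance
    A = np.eye(2)                            # z = ||x||^2
    print(quadratic_mmse(x_hat, P, A))       # approx. 1.25 + 0.7 = 1.95
    print(quadratic_plugin(x_hat, A))        # 1.25
    print(mc_conditional_mean(x_hat, P, A))  # close to 1.95
```

The plug-in value falls short of the conditional mean by exactly \({\text{tr}}(AP)\), so the two estimates differ even though both are built from the same Kalman output \(\hat{x}\), \(P\).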

Proof of Theorem 2. The derivation of the MSEs is based on Lemma 2.

Lemma 2. Let \(X \in {{\mathbb{R}}^{{3n}}}\) be a composite multivariate Gaussian vector, \({{X}^{{\text{T}}}} = [{{U}^{{\text{T}}}}\;{{V}^{{\text{T}}}}\;{{W}^{{\text{T}}}}]\):

Then the third- and fourth-order vector moments of the composite random vector are given by

The derivation of the vector formulas (A.3) is based on their scalar versions and on standard matrix manipulations,

where \({{\mu }_{h}} = {\mathbf{E}}({{x}_{h}})\), \({{S}_{{pq}}} = {\text{cov(}}{{x}_{p}},{{x}_{q}}{\text{)}}\).

This completes the proof of Lemma 2.
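The scalar versions used in the proof of Lemma 2 are presumably the standard Gaussian moment identities (Isserlis' theorem with a nonzero mean), written in the notation \(\mu_h\), \(S_{pq}\) above. The third-order one reads \({\mathbf{E}}(x_p x_q x_r) = \mu_p\mu_q\mu_r + \mu_p S_{qr} + \mu_q S_{pr} + \mu_r S_{pq}\); the fourth-order one has ten terms: the product of the four means, six terms with one covariance and two means, and three products of two covariances. The Python sketch below codes both identities and compares them with sample averages. It is an illustration of the scalar formulas, not a reproduction of the vector formulas (A.3); the mean, covariance factor, and seed are hypothetical.

```python
import numpy as np


def third_moment(mu, S, p, q, r):
    """E(x_p x_q x_r) for a Gaussian vector x ~ N(mu, S)."""
    return (mu[p] * mu[q] * mu[r]
            + mu[p] * S[q, r] + mu[q] * S[p, r] + mu[r] * S[p, q])


def fourth_moment(mu, S, p, q, r, s):
    """E(x_p x_q x_r x_s) for x ~ N(mu, S) (Isserlis' theorem with nonzero mean)."""
    return (mu[p] * mu[q] * mu[r] * mu[s]
            # one covariance times the two remaining means (six terms)
            + mu[p] * mu[q] * S[r, s] + mu[p] * mu[r] * S[q, s]
            + mu[p] * mu[s] * S[q, r] + mu[q] * mu[r] * S[p, s]
            + mu[q] * mu[s] * S[p, r] + mu[r] * mu[s] * S[p, q]
            # the three pairings of four indices into two covariances
            + S[p, q] * S[r, s] + S[p, r] * S[q, s] + S[p, s] * S[q, r])


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    mu = np.array([0.5, -1.0, 0.2])                     # hypothetical mean
    L = np.array([[1.0, 0.0, 0.0], [0.3, 0.8, 0.0], [-0.2, 0.1, 0.6]])
    S = L @ L.T                                         # positive definite covariance
    x = rng.multivariate_normal(mu, S, size=500_000)
    # Analytic value next to the sample average
    print(third_moment(mu, S, 0, 1, 2), np.mean(x[:, 0] * x[:, 1] * x[:, 2]))
    print(fourth_moment(mu, S, 0, 1, 1, 2),
          np.mean(x[:, 0] * x[:, 1] ** 2 * x[:, 2]))
```

Repeated indices are allowed, so the same two functions also give mixed moments such as \({\mathbf{E}}(x_p x_q^2 x_s)\), which is how the scalar identities feed into vector moments.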

Next, we derive formula (3.8). Using (3.5) and (3.6), the error can be written as

Next, using the unbiasedness and orthogonality properties of the Kalman estimate, \({\mathbf{E}}\left( e \right) = 0\) and \({\mathbf{E}}(e{{\hat {x}}^{{\text{T}}}}) = 0\), we obtain

Using Lemma 2, we can calculate the higher-order moments in (A.6). We have

where

Substituting (A.7) into (A.6) and simplifying, we obtain the optimal MSE (3.8).

In the case of the suboptimal estimate \(\tilde {z},\) the derivation of the MSE (3.9) is similar.

This completes the proof of Theorem 2.
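The structure of this MSE derivation can be checked numerically in the special case \(z = x^{\text{T}}Ax\) with symmetric \(A\). There \(z - \hat{z} = 2\hat{x}^{\text{T}}Ae + (e^{\text{T}}Ae - {\text{tr}}(AP))\). Because \(e\) is zero-mean, Gaussian, and independent of \(\hat{x}\), the cross term vanishes, which gives \({\mathbf{E}}(z-\hat{z})^2 = 4\,{\text{tr}}(APA(C + mm^{\text{T}})) + 2\,{\text{tr}}(APAP)\), where \(m\) and \(C\) are the mean and covariance of \(\hat{x}\). Taking the suboptimal estimate to be the plug-in value \(\tilde{z} = \hat{x}^{\text{T}}A\hat{x}\) (an assumption) adds \(({\text{tr}}(AP))^2\). These expressions are derived here for illustration and are not claimed to reproduce (3.8) and (3.9). The Python sketch below replaces the continuous-time Kalman filter by a single static linear-Gaussian measurement update with hypothetical `m`, `Sigma`, `H`, `R`, checks \({\mathbf{E}}(e) = 0\) and \({\mathbf{E}}(e\hat{x}^{\text{T}}) = 0\) empirically, and compares Monte Carlo MSEs with the closed forms.

```python
import numpy as np


def quadratic_mse(A, P, C, m):
    """Closed-form MSEs for z = x^T A x (A symmetric).

    Assumes x_hat ~ N(m, C) and e = x - x_hat ~ N(0, P) independent of x_hat.
    Returns (MSE of z_hat = x_hat^T A x_hat + tr(AP),
             MSE of plug-in z_tilde = x_hat^T A x_hat).
    """
    APA = A @ P @ A
    mse_opt = 4.0 * np.trace(APA @ (C + np.outer(m, m))) + 2.0 * np.trace(APA @ P)
    mse_sub = mse_opt + np.trace(A @ P) ** 2
    return float(mse_opt), float(mse_sub)


def simulate(n=400_000, seed=2):
    rng = np.random.default_rng(seed)
    m = np.array([1.0, 0.5])                      # hypothetical prior mean
    Sigma = np.array([[1.0, 0.3], [0.3, 0.5]])    # hypothetical prior covariance
    H = np.array([[1.0, 0.0]])                    # observe the first component only
    R = np.array([[0.2]])                         # measurement noise variance
    A = np.eye(2)                                 # z = ||x||^2

    # Static linear-Gaussian update (one-step stand-in for the Kalman estimate)
    K = Sigma @ H.T @ np.linalg.inv(H @ Sigma @ H.T + R)
    P = Sigma - K @ H @ Sigma                     # error covariance cov(e)
    C = Sigma - P                                 # covariance of x_hat

    x = rng.multivariate_normal(m, Sigma, size=n)
    v = rng.normal(0.0, np.sqrt(R[0, 0]), size=(n, 1))
    y = x @ H.T + v
    x_hat = m + (y - H @ m) @ K.T                 # row-wise conditional means
    e = x - x_hat

    z = np.einsum("ij,jk,ik->i", x, A, x)
    z_tilde = np.einsum("ij,jk,ik->i", x_hat, A, x_hat)
    z_hat = z_tilde + np.trace(A @ P)

    return {
        "mean_e": e.mean(axis=0),                 # should be close to 0
        "cross": e.T @ x_hat / n,                 # sample E(e x_hat^T), close to 0
        "mc": (np.mean((z - z_hat) ** 2), np.mean((z - z_tilde) ** 2)),
        "analytic": quadratic_mse(A, P, C, m),
    }


if __name__ == "__main__":
    print(simulate())
```

In this setting the gap between the two MSEs is the squared bias \(({\text{tr}}(AP))^2\) of the plug-in value, so it grows with the estimation error covariance \(P\).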


Cite this article

Choi, W., Song, I.Y. & Shin, V. Two-Stage Algorithm for Estimation of Nonlinear Functions of State Vector in Linear Gaussian Continuous Dynamical Systems. J. Comput. Syst. Sci. Int. 58, 869–882 (2019). https://doi.org/10.1134/S1064230719060169
