Abstract

This paper considers the use of Python modules for solving resource-intensive tasks on a high-performance computing cluster. It describes the application of the cluster to complex problems in nuclear physics and demonstrates high-performance computing methods based on mathematical coprocessors and graphics accelerators, using the multiplication of real matrices as an example. In many cases, using Python in high-performance computing makes creating, debugging, and testing program code considerably more convenient.

Many modern computing systems in the Top500 list of the world’s leading supercomputers are built according to a hybrid scheme: they include not only general-purpose processors but also energy-efficient mathematical coprocessors such as Intel Xeon Phi or Nvidia Tesla. These coprocessors are designed specifically for massively parallel computing. For example, the Intel Xeon Phi coprocessor can perform calculations on up to 240 logical cores, and the Nvidia Tesla A100 includes 6912 CUDA cores. Such supercomputers have a rather complex architecture of interaction between processor and coprocessor, which greatly complicates the preparation and maintenance of program code. Programming these systems so that they exploit the full range of parallelization facilities has its own peculiarities, since modern GPUs, unlike central processors, are massively parallel devices with a relatively large number of computing cores and their own hierarchically organized memory.

The Supercomputer Center of Voronezh State University [1] has a high-performance computing cluster consisting of 10 nodes. Seven nodes each contain two Intel Xeon Phi accelerators, and the other three each contain two Nvidia Tesla accelerators. The cluster nodes are connected by an InfiniBand network.

Immediately after its creation in 2002, the computing cluster began to be used for complex problems in nuclear physics. For example, the calculation of the effective numbers \(W\) [2] of \(^{8}\)Be and \(^{16}\)O nucleon clusters in nuclei, which involves 20-fold summation, is significantly accelerated by parallel algorithms. Obtaining the values of \(W\) often requires solving the Schrödinger equation for various, often very complex, types of nuclear interaction, and Numerov’s method is widely used for this purpose [3]. When “folding potentials” [2] are used, calculating the interaction potential \(V\), which enters the equation and determines the behavior of the nuclear system, leads to multiple integration over several variables and hence to a large expenditure of machine time. Therefore, the developed parallel version of Numerov’s method, together with algorithms for efficient parallel summation, is used to obtain the probabilities of cluster decays [2] and the spectra of internal bremsstrahlung in \(\alpha\), cluster, and proton decays [4], to evaluate the effect of quark enhancement of “hard” processes with deuteron emission [5], to analyze the properties of \(\alpha\)-particle solutions of the many-nucleon problem [6], etc.
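For orientation, the Numerov recurrence mentioned above can be sketched in a few lines of Python. This is a minimal sequential illustration, not the authors' parallel algorithm. It assumes the equation has already been brought to the form \(y''(x) + k^2(x)\,y(x) = 0\) (for the radial Schrödinger equation with zero orbital momentum, \(k^2 = 2\mu(E - V)/\hbar^2\)); the function and parameter names are hypothetical.

```python
import math


def numerov(k2, x0, h, n_steps, y0, y1):
    """Integrate y''(x) + k2(x) * y(x) = 0 with the Numerov recurrence.

    k2      -- callable returning k^2(x) (hypothetical name)
    y0, y1  -- solution values at x0 and x0 + h
    Returns the list [y(x0), y(x0 + h), ..., y(x0 + n_steps * h)].
    """
    c = h * h / 12.0
    ys = [y0, y1]
    for n in range(1, n_steps):
        x_prev = x0 + (n - 1) * h
        x_cur = x0 + n * h
        x_next = x0 + (n + 1) * h
        # (1 + c*k2_next) * y_next = 2*(1 - 5*c*k2_cur) * y_cur - (1 + c*k2_prev) * y_prev
        numerator = (2.0 * (1.0 - 5.0 * c * k2(x_cur)) * ys[n]
                     - (1.0 + c * k2(x_prev)) * ys[n - 1])
        ys.append(numerator / (1.0 + c * k2(x_next)))
    return ys


def check_against_sine(h=0.01, n_steps=300):
    """With k^2 = 1 and y(0) = 0 the exact solution is sin(x); return the end-point error."""
    ys = numerov(lambda x: 1.0, 0.0, h, n_steps, 0.0, math.sin(h))
    return abs(ys[-1] - math.sin(n_steps * h))
```

Each step needs only the two previous values, so a single integration is inherently sequential; in a setting like the one described, the parallelism would more plausibly come from running many independent integrations (different energies or potentials) at once, which is an assumption here rather than a statement about the authors' code.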

Because the nodes of the computing cluster contain accelerators of both types, ensuring their effective use is currently an important task.

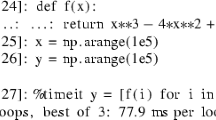

Modern trends stimulate the development of higher-level software tools that facilitate the programming of complex computing systems. In this context, special Python modules have been created that simplify work with a coprocessor; examples are PyMIC and PyCUDA [7]. Both modules operate on the same principle: they provide an interface to the main processor/coprocessor operations. A pure-Python implementation of real-matrix multiplication cannot achieve high computational speed, so mathematical operations of this kind are usually performed by third-party libraries, most often written in C or Fortran [8]. Calculations with the NumPy package are an example; in this case, routines implemented in Fortran serve as the third-party libraries. Accordingly, the first step in transferring calculations to a coprocessor is to implement a user library in a compiled language and integrate it into the Python code.
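The gap between interpreted loops and a compiled library can be observed with a short sketch such as the following. It assumes NumPy is installed; the helper names `matmul_pure` and `compare` are hypothetical, and the timings depend entirely on the machine, so none are quoted here.

```python
import time

import numpy as np


def matmul_pure(a, b):
    """Multiply two matrices given as lists of rows, using plain Python loops."""
    n, m, p = len(a), len(b), len(b[0])
    c = [[0.0] * p for _ in range(n)]
    for i in range(n):
        for k in range(m):
            aik = a[i][k]
            for j in range(p):
                c[i][j] += aik * b[k][j]
    return c


def compare(n=120, seed=0):
    """Time both implementations on random n x n matrices.

    Returns (pure-Python seconds, NumPy seconds, whether the results agree).
    """
    rng = np.random.default_rng(seed)
    a = rng.random((n, n))
    b = rng.random((n, n))
    t0 = time.perf_counter()
    c_pure = matmul_pure(a.tolist(), b.tolist())
    t1 = time.perf_counter()
    c_np = a @ b  # dispatched to the compiled linear algebra backend
    t2 = time.perf_counter()
    return t1 - t0, t2 - t1, bool(np.allclose(c_pure, c_np))
```

Calling `compare()` returns the two timings and a flag confirming that both products match to floating-point tolerance.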

Calculations on the Intel Xeon Phi mathematical coprocessor are the most accessible in terms of programming simplicity. The reason is the similarity of the coprocessor’s architecture to that of general-purpose processors from Intel and AMD, which allows familiar programming techniques to be reused. Work with such a coprocessor is easy to demonstrate on the problem of multiplying two real matrices. The authors have developed the source code of a user library for integration with the PyMIC module (library libmlt.so). In this case, the code does not differ significantly from a standard implementation of matrix multiplication in C.

This library receives data from a Python program, performs the calculations on the Intel Xeon Phi coprocessor, and returns the result to the Python environment. The numerical program that calls libmlt.so uses the NumPy package to generate the matrices.

With up to 240 threads available for computing (one physical core is reserved for the operating system), the Intel Xeon Phi coprocessor offers ample opportunities to study parallel computing without requiring a deep understanding of its architecture or of special dialects of the C language.
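One common way to share a matrix product among many threads is to give each thread a contiguous block of rows of the result. The sketch below illustrates only that decomposition; it is not the libmlt.so code, and all names are hypothetical. Note that CPython threads do not speed up pure-Python arithmetic because of the global interpreter lock, so the example shows how the work is divided rather than the speedup a coprocessor would deliver.

```python
from concurrent.futures import ThreadPoolExecutor


def row_blocks(n_rows, n_workers):
    """Split range(n_rows) into at most n_workers contiguous, near-equal blocks."""
    base, extra = divmod(n_rows, n_workers)
    blocks, start = [], 0
    for w in range(n_workers):
        size = base + (1 if w < extra else 0)
        if size:
            blocks.append((start, start + size))
        start += size
    return blocks


def multiply_block(a, b, lo, hi):
    """Compute rows lo..hi-1 of the product of a and b (both lists of rows)."""
    cols = list(zip(*b))  # columns of b, so each entry is a row-by-column dot product
    return [[sum(x * y for x, y in zip(a[i], col)) for col in cols]
            for i in range(lo, hi)]


def parallel_matmul(a, b, n_workers=4):
    """Multiply a by b, handing one block of result rows to each worker."""
    blocks = row_blocks(len(a), n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        parts = pool.map(lambda blk: multiply_block(a, b, *blk), blocks)
        # pool.map preserves block order, so the rows come back in sequence
        return [row for part in parts for row in part]
```

For instance, `row_blocks(10, 4)` yields blocks of 3, 3, 2, and 2 rows, so no worker receives more than one row above the average.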

Preparing code for Nvidia GPUs is much more difficult. The main difficulty of writing code for the Nvidia Tesla coprocessor stems from the peculiarities of the CUDA cores.

The program for multiplying two matrices on the Nvidia Tesla coprocessor with the PyCUDA module requires more time for preparation and debugging.

As with the Intel Xeon Phi code, the program consists of data initialization, data transfer to the coprocessor and back, and a kernel that performs the calculations. However, because of the difference in architectures, the computational kernel differs greatly from its counterpart for the Intel coprocessor.
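The difference in kernel structure can be illustrated without a GPU. The authors' PyCUDA kernel is not reproduced in the text, so the following is a CPU emulation in plain Python of the usual one-thread-per-element mapping, under the assumption of square matrices stored as flat row-major arrays; all names are hypothetical. On a real device the threads run concurrently, whereas here they are visited one after another.

```python
def matmul_thread(a, b, c, n, block_idx, thread_idx, block_dim):
    """Work of one emulated GPU thread: a single element of c = a * b.

    a, b, c are flat row-major lists holding n x n matrices;
    block_idx, thread_idx, block_dim are (x, y) pairs.
    """
    row = block_idx[1] * block_dim[1] + thread_idx[1]
    col = block_idx[0] * block_dim[0] + thread_idx[0]
    if row >= n or col >= n:  # threads that fall outside the matrix do nothing
        return
    acc = 0.0
    for k in range(n):
        acc += a[row * n + k] * b[k * n + col]
    c[row * n + col] = acc


def launch(a, b, n, block_dim=(4, 4)):
    """Emulate a kernel launch by visiting every (block, thread) pair in turn."""
    # Ceiling division: enough blocks to cover the whole n x n result
    grid_dim = (-(-n // block_dim[0]), -(-n // block_dim[1]))
    c = [0.0] * (n * n)
    for by in range(grid_dim[1]):
        for bx in range(grid_dim[0]):
            for ty in range(block_dim[1]):
                for tx in range(block_dim[0]):
                    matmul_thread(a, b, c, n, (bx, by), (tx, ty), block_dim)
    return c
```

In contrast to a loop-based CPU routine, the kernel body contains no loops over rows and columns: the position of each thread in the grid selects the element it computes, and the bounds check handles matrices whose size is not a multiple of the block size.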

In many cases, using Python in high-performance computing makes creating, debugging, and testing program code considerably more convenient. Moreover, when training specialists to work on supercomputers, it is advisable to start with the simpler Intel coprocessors and then move on to Nvidia coprocessors, which have become a recognized industry standard. The high-level operations of the PyMIC and PyCUDA modules significantly simplify work with a supercomputer and provide seamless integration with the tools of the popular Python programming language.

REFERENCES

1. S. D. Kurgalin and S. V. Borzunov, “Using the resources of the Supercomputer Center of Voronezh State University in learning processes and scientific researches,” in Proceedings of the International Conference “Supercomputer Days in Russia”, Moscow, 2018 (Mosk. Gos. Univ., 2018), pp. 972–977.

2. S. G. Kadmensky, S. D. Kurgalin, and Yu. M. Tchuvil’sky, “Cluster states in atomic nuclei and cluster-decay processes,” Phys. Part. Nucl. 38, 699–742 (2007).

3. R. W. Hamming, Numerical Methods for Scientists and Engineers, 2nd ed. (Dover Publ., 1987).

4. S. D. Kurgalin, Yu. M. Tchuvil’sky, and T. A. Churakova, “Internal bremsstrahlung of strongly interacting charged particles,” Phys. At. Nucl. 79, 943–950 (2016).

5. S. D. Kurgalin and Yu. M. Tchuvil’sky, “Distributions of 6q-fluctuons in nuclei and quark amplification of rigid processes with deuteron departure,” Phys. At. Nucl. 49, 126–134 (1989).

6. I. A. Gnilozub, S. D. Kurgalin, and Yu. M. Tchuvil’sky, “Properties of alpha-particle solutions to the many-nucleon problem,” Phys. At. Nucl. 69, 1014–1029 (2006).

7. PyCUDA 2022.2.2 documentation. https://documen.tician.de/pycuda/. Accessed May 12, 2023.

8. S. Kurgalin and S. Borzunov, A Practical Approach to High-Performance Computing (Springer Int. Publ., 2019).

Funding

This work was supported by ongoing institutional funding. No additional grants to carry out or direct this particular research were obtained.

Ethics declarations

The authors of this work declare that they have no conflicts of interest.

Additional information

Publisher’s Note.

Pleiades Publishing remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Borzunov, S., Romanov, A., Kurgalin, S. et al. High-Performance Computing on Mathematical Coprocessors and Graphics Accelerators Using Python. Phys. Part. Nuclei 55, 472–473 (2024). https://doi.org/10.1134/S1063779624030250

DOI: https://doi.org/10.1134/S1063779624030250