Abstract

In the context of tropical mathematics, the problem of solving a vector equation with two given matrices and unknown vectors, each part of which has the form of a product of one of the matrices and an unknown vector, is considered. Such an equation, which has an unknown vector on either side of the equal sign, is often called a two-sided equation. A new procedure for solving two-sided equations is proposed based on minimizing some distance function between the vectors of tropical vector spaces that are generated by the columns of each of the matrices. As a result of the procedure, a pair of vectors is obtained, which provides a minimum distance between the spaces and the value of the distance itself. If the equation has solutions, then the resulting vectors are the solution to the equation. Otherwise, these vectors define a pseudo-solution that minimizes the deviation of one side of the equation from the other. Execution of the procedure consists in constructing a sequence of vectors that are pseudo-solutions of the two-sided equation in which the left and right sides are alternately replaced by constant vectors. Unlike the well-known alternating algorithm, in which the corresponding inequalities are solved one by one instead of equations, the proposed procedure uses a different argument, looks simpler, and allows one to establish natural criteria for completing calculations. If the equation has no solutions, the procedure also finds a pseudo-solution and determines the value of the error associated with it, which can be useful in solving approximation problems.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 INTRODUCTION

In this study, in the context of tropical mathematics, the problem of solving a vector equation that has the form

where A and B are given matrices; x and y are unknown vectors; the product of a matrix and a vector is defined in terms of operations of some tropical algebra, is considered. This equation has unknown vectors on both sides of the equal sign; therefore, it is often called a two-sided equation in scientific publications.

In tropical (idempotent) algebra, which studies the theory and applications of semirings and semifields with idempotent addition [1–6], the problem of solving a two-sided equation is of significant interest. In particular, this is related to the fact that the procedure of solving many other vector equations, for example, an equation of the form Ax = Bx with one unknown vector x is reduced to such a problem.

The issues of studying and solving two-sided equations were considered in some works, including the first publications on this problem by P. Butkovich [7–9] and other works published later [10–13]. The most often used applications are the problems of optimal planning of production processes [9, 11, 13]. Usually, the results known in publications propose algorithmic methods that, using an iterative computational procedure, make it possible to find one of the solutions, if they exist, or conclude that solutions are absent, otherwise (see, for example, the review of the methods for solving the two-sided equation in ([5], Section 7)).

Existing methods and approaches used to solve the two-sided equation include the following: combinatorial algorithms [8, 14], the elimination method [9], the upper-bound method [15], the algorithm based on the properties of mappings with division on partially ordered sets [16], the alternating algorithm [10], the combinatorial algorithm for equations in the field of rational numbers [11], the method of reducing to systems of equations and inequalities of two variables [12], and the method for solving equations with quadratic matrices of a special type [13]. However, in practice, the mentioned solution algorithms generally prove to be not efficient enough, for example, because of their computational complexity or additional constraints imposed on the condition and solution of the problem. Therefore, the development of new efficient procedures for solving two-sided equations seems to be quite a relevant problem.

In this study, a new procedure is proposed for solving two-sided equations based on the minimization of some function of the distance between the vectors of tropical vector spaces that are generated by the columns of each matrix of the equation. As a result of application of this procedure, one obtains a pair of vectors that provide the minimum distance between the spaces and the value of the distance itself. If the equation has solutions, the obtained vectors are the solution of the equation. Otherwise, these vectors determine a pseudo-solution that minimizes the deviation of one side of the equation from the other.

Execution of this procedure consists in constructing a sequence of vectors that are pseudo-solutions of the two-sided equation, in which the left and right sides are alternately replaced by constant vectors. Unlike the known alternating algorithm [10], in which the corresponding inequalities are alternately solved instead of solving the equations, the proposed procedure uses a different argument, seems to be simpler, and makes it possible to establish natural criteria of completion of calculations. If solutions do not exits, the procedure finds also a pseudo-solution and determines the value of the error associated with it, which can be useful when solving approximation problems.

2 PRELIMINARY INFORMATION

In this section, we present the main definitions, used denotations, and preliminary results of tropical algebra [17] necessary for further analysis and solving the two-sided equation. Further information on the theory and applications of tropical algebra can be found in a number of papers published over the last 25 years, including [1–6].

2.1 Idempotent Semifield

Let us consider a set \(\mathbb{X}\), which is closed with respect to the operations of addition ⊕ and multiplication ⊗ and contains their neutral elements: zero \(\mathbb{O}\) and unit \(\mathbb{1}\). The operations are set so that (\(\mathbb{X}\), ⊕, \(\mathbb{O}\)) forms an idempotent commutative semigroup with zero; (\(\mathbb{X}\)\{\(\mathbb{O}\)}, ⊗, \(1\)) is a commutative group; and multiplication is distributive with respect to addition. The algebraic system (\(\mathbb{X}\), ⊕, ⊗, \(\mathbb{O}\), \(1\)) is usually called an idempotent semifield.

In the considered semifield, the operation of addition is idempotent, i.e., for any x ∈ \(\mathbb{X}\), the equality x ⊕ x = x is satisfied; the operation of multiplication is invertible, i.e., for any x ≠ \(\mathbb{O}\), there is an inverse element x–1 such that x ⊗ x–1 = \(1\). Below, the sign of the multiplication operation is omitted, implying that xy = x ⊗ y.

The denotation of powers with an integer exponent is understood in the sense of multiplication ⊗. In addition, it is assumed that the equation xp = a has a unique solution x at any a ∈ \(\mathbb{X}\) and integer p > 0. In this case, the power with a rational exponent are also determined; the semifield is called algebraically complete.

The operation of idempotent addition induces a partial order on \(\mathbb{X}\), according to the rule: x ≤ y then and only then, when x ⊕ y = y. With respect to this order, the operation of summation has an extremal property in the form of inequalities x ≤ x ⊕ y and y ≤ x ⊕ y, which are valid for any x and y. The inequality x ⊕ y ≤ z is equivalent to the system of inequalities x ≤ z and y ≤ z. The operations of addition and multiplication are monotone: the inequality x ≤ y implies inequalities \(x \oplus z\) ≤ \(y \oplus z\) and xz ≤ yz for any z. The operator of inversion is antitone: the inequality x ≤ y for x, y ≠ \(\mathbb{O}\) implies the inequality x–1 ≥ y–1. Finally, it is assumed that the described partial order is completed to the linear order so as to consider that the semifield is linearly ordered.

The examples of real, linearly ordered, algebraically complete idempotent semifields include the following:

where \(\mathbb{R}\) is a set of real numbers and \({{\mathbb{R}}_{ + }} = \{ x \in \mathbb{R}\,|\,x > 0\} \).

In the idempotent semifield \({{\mathbb{R}}_{{\max , + }}}\), which is usually called (max, +) algebra, addition ⊕ is determined as the operation max; multiplication ⊗ as arithmetic summation +. The role of \(\mathbb{O}\) is played by –∞; the role of unit \(1\) by the number 0. The power xy corresponds to arithmetic product xy and is defined for any x, y ∈ \(\mathbb{R}\). The inverse element x–1 for any x ≠ \(\mathbb{O}\) coincides with the opposite number –x in conventional arithmetic. The order relation induced by idempotent addition corresponds to the natural linear order for the set \(\mathbb{R}\).

In the semifield \({{\mathbb{R}}_{{\min }}}\) (known also as min algebra), the operations are defined as ⊕ = min and ⊗ = × with neutral elements \(\mathbb{O}\) = +∞ and \(1\) = 1. The notions of power and inverse element have the usual sense. The order set by idempotent addition is inverse to the standard linear order on the set \(\mathbb{R}\). All the above idempotent semifields are isomorphic to each other. For example, the semifield \({{\mathbb{R}}_{{\max , + }}}\) is related to \({{\mathbb{R}}_{{\min }}}\) by means of the isomorphism x \( \mapsto \) e–x.

2.2 Algebra of Matrices and Vectors

Let us denote by \({{\mathbb{X}}^{{m \times n}}}\) the set of matrices consisting of m rows and n columns with elements from \(\mathbb{X}\). The matrix, all elements of which are \(\mathbb{O}\), is zero and is denoted by 0. The matrix that does not have zero columns (rows) is called regular with respect to columns (rows). The matrix with neither zero rows nor zero columns is called regular. The quadratic matrix with elements equal to \(1\) at its diagonal and \(\mathbb{O}\) at all other positions is a unit matrix and is denoted by I.

The addition and multiplication of matrices and multiplication of a matrix by a scalar is executed according to the conventional rules with replacement of the operation of arithmetic addition and multiplication with the operations ⊕ and ⊗. The monotony and other properties of scalar operations ⊕ and ⊗ associated with the order relation on \(\mathbb{X}\) are generalized to operations on matrices with which the inequalities are understood component-wise.

As usual, the matrix consisting of one column (row) forms a column vector (row vector). In what follows, all vectors are considered to be column vectors, unless otherwise indicated. The set of column vectors of n elements is denoted by \({{\mathbb{X}}^{n}}\). The vector, all elements of which are \(\mathbb{O}\), is the zero vector and is denoted by 0. A vector is called regular if it does not have zero elements.

For any nonzero vector x = (xi) ∈ \({{\mathbb{X}}^{n}}\), the multiplicative conjugate row vector x– = (\(x_{i}^{ - }\)) with elements \(x_{i}^{ - }\) = \(x_{i}^{{ - 1}}\), if xi ≠ \(\mathbb{O}\), and \(x_{i}^{ - } = 0\) otherwise, is defined.

It is easy to verify that for a regular vector x, the following relations are valid:

while, for the latter equality to be satisfied, the sufficient condition is x ≠ 0.

For regular vectors x and y, the inequality x ≤ y implies x– ≥ y–.

If the matrix A ∈ \({{\mathbb{X}}^{{m \times n}}}\) and vectors x ∈ \({{\mathbb{X}}^{n}}\) and y ∈ \({{\mathbb{X}}^{m}}\) are regular, then the products Ax and y–A are regular vectors.

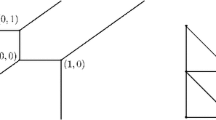

2.3 Vector Space

Let there be a system of vectors a1, …, an ∈ \({{\mathbb{X}}^{m}}\). The vector b ∈ \({{\mathbb{X}}^{m}}\) is a linear combination of vectors of this system if the equality b = x1a1 ⊕ … ⊕ xnan holds for some x1, …, xn ∈ \(\mathbb{X}\). The set of all linear combinations of the system of vectors forms their linear span

It is easy to see that the set of vectors \(\mathcal{A}\) is closed under vector addition and scalar multiplication. The set \(\mathcal{A}\) is called the tropical vector space over the semifield \(\mathbb{X}\) generated by the system of vectors a1, …, an.

2.4 Metric

Let us introduce the distance function on the set of vectors \({{\mathbb{X}}^{m}}\). For any vector a = (ai), let us consider the support supp(a) = {i | ai ≠ \(\mathbb{O}\), 1 ≤ i ≤ m}, i.e., the set of indices of its nonzero elements.

For any nonzero vectors a = (ai) and b = (bi) for which the condition supp(a) = supp(b) is satisfied, we define the distance

In the case when supp(a) ≠ supp(b), we will assume that the value of the function is larger than any value from \(\mathbb{X}\) and write d(a, b) = ∞. If a = b = 0, then we set d(a, b) = \(1\).

In the context of the semifield \({{\mathbb{R}}_{{\max , + }}}\), where \(1\) = 0, the function d for all a, b ∈ \({{\mathbb{R}}^{m}}\) coincides with the usual Chebyshev distance (metric)

For other semifields isomorphic to \({{\mathbb{R}}_{{\max , + }}}\), the distance function d becomes a metric after applying the corresponding isomorphic mapping. For example, in the case of the semifield \({{\mathbb{R}}_{{\min }}}\), the metric is the function d′(a, b) = –lnd(a, b).

The function d defined in terms of any of the above semifields can be considered as a generalized metric with values in the set [\(1\), ∞). Below, when measuring distances, we will use the generalized metric d.

3 MEASUREMENT OF DISTANCES AND SOLVING EQUATIONS

Let us consider the distance from the vector b ∈ \({{\mathbb{X}}^{m}}\) to the set \(\mathcal{A} \subset {{\mathbb{X}}^{m}}\), which is defined as follows:

Let \(\mathcal{A}\) be a tropical vector space generated by the system of nonzero vectors a1, …, an ∈ \({{\mathbb{X}}^{m}}\). Any vector a ∈ \(\mathcal{A}\) can be represented in the form of the linear combination a = x1a1 ⊕ … ⊕ xnan with coefficients x1, …, xn ∈ \(\mathbb{X}\), as well as with the help of the matrix A = (a1, …, an) composed of vectors of the system, which are taken as column vectors, and the vector x = (x1, …, xn)T in the form of the equality a = Ax.

The distance from the vector b to the vector space \(\mathcal{A}\) takes the form

It can be shown (see, for example, [17, 18]) that for a regular vector b, it is sufficient to find the minimum on the right only over the regular vectors x, i.e., the equality

is satisfied.

It is evident that the equality \(d(\mathcal{A},{\mathbf{b}}) = 1\) is equivalent to the condition b ∈ \(\mathcal{A}\), while the inequality \(d(\mathcal{A},{\mathbf{b}}) \ne 1\) implies that \({\mathbf{b}} \notin \mathcal{A}\).

Let us assume that A ∈ \({{\mathbb{X}}^{{m \times n}}}\) is a regular matrix; b ∈ \({{\mathbb{X}}^{m}}\) is a regular vector. Let us define the function

The following statement was proved in [19] (see also [17, 18]).

Lemma 1. Let A be a regular matrix and b be a regular vector. Then the equality

is satisfied; while, the minimum is reached at x = \(\sqrt {{{\Delta }_{{\mathbf{A}}}}({\mathbf{b}})} {{({{{\mathbf{b}}}^{ - }}{\mathbf{A}})}^{ - }}\).

If \(\mathcal{A}\) is a tropical vector space generated by the columns of matrix A, then the distance from the vector b to \(\mathcal{A}\) is defined as \(d(\mathcal{A},{\mathbf{b}})\) = \(\sqrt {{{\Delta }_{{\mathbf{A}}}}({\mathbf{b}})} \); the vector closest to b in the space \(\mathcal{A}\) has the form y = \(\sqrt {{{\Delta }_{{\mathbf{A}}}}({\mathbf{b}})} {\mathbf{A}}{{({{{\mathbf{b}}}^{ - }}{\mathbf{A}})}^{ - }}\).

It should be noted that the condition b ∈ \(\mathcal{A}\) corresponds to the equality ΔA(b) = \(1\) and the condition b ∉ \(\mathcal{A}\), to the inequality ΔA(b) > \(1\).

The results of analysis of the distances in the tropical vector space can be applied to solving the equation in the form

with respect to the vector x ∈ \({{\mathbb{X}}^{n}}\).

This equation has an unknown vector on one side from the equal sign and is often called a one-sided equation.

This equation was studied in [17–19], where the following result was obtained.

Theorem 2. Let A be a regular matrix and b, a regular vector. Then, the following statements are valid.

1. If ΔA(b) = \(1\), then equation (1) has a solution and the vector x = (b–A)– is the maximum solution.

2. If ΔA(b) > \(1\), then equation (1) does not have solutions. The best approximation to the solution in terms of the distance function d is the vector x = \(\sqrt {{{\Delta }_{{\mathbf{A}}}}({\mathbf{b}})} {{({{{\mathbf{b}}}^{ - }}{\mathbf{A}})}^{ - }}\).

It should be noted that the quantity \(\sqrt {{{\Delta }_{{\mathbf{A}}}}({\mathbf{b}})} \) has the sense of the minimum achievable deviation between the left- and right-hand sides of equation (1) measured in accordance with the scale of the distance function d.

Let us assume that A ∈ \({{\mathbb{X}}^{{m \times n}}}\) and B ∈ \({{\mathbb{X}}^{{m \times k}}}\) are given regular matrices; x ∈ \({{\mathbb{X}}^{n}}\) and y ∈ \({{\mathbb{X}}^{k}}\) are unknown regular vectors. Let us investigate the following two-sided equation in which unknown vectors appear in both sides:

As in the case of equation (1), the problem of analysis of equation (2) can be reduced to determining the distances between tropical vector spaces. Let us consider the columns of matrices A = (a1, …, an) and B = (b1, …, bk). Let us denote by \(\mathcal{A}\) the tropical space generated by the system of vectors a1, …, an; by \(\mathcal{B}\), the space generated by b1, …, bk. Let us determine the distance between the spaces as follows:

If condition d(\(\mathcal{A}\), \(\mathcal{B}\)) = \(1\) is satisfied, this implies that the spaces \(\mathcal{A}\) and \(\mathcal{B}\) have a nonempty intersection; then equation (2) will have a solution (x, y).

It the value of the distance d(\(\mathcal{A}\), \(\mathcal{B}\)) > \(1\), this corresponds to the absence of common points of spaces \(\mathcal{A}\) and \(\mathcal{B}\) (and, thus, the equation does not have a solution); its value shows the minimum distance between the vectors of these spaces (the minimum possible deviation between both sides of the equation).

Let us fix some regular vector x ∈ \({{\mathbb{X}}^{n}}\). It has a corresponding vector a = Ax of the space \(\mathcal{A}\). According to Lemma 1, the minimum distance between the vector a and the vectors of the space \(\mathcal{B}\) is the square root of the quantity

The vector of the space \(\mathcal{B}\) closest to the vector a can be written in the form

Similarly, for the fixed y ∈ \({{\mathbb{X}}^{k}}\), the squared distance from vector b = By to the space \(\mathcal{A}\) and the vector a ∈ \(\mathcal{A}\) closest to vector b are defined by the formulas

Let us assume that for the vectors \({{{\mathbf{x}}}_{*}} \in {{\mathbb{X}}^{n}}\) and \({{{\mathbf{y}}}_{*}} \in {{\mathbb{X}}^{k}}\), the following is true:

It is evident that in this case, the equalities

hold.

Let us introduce the denotation \({{\Delta }_{*}}\) = \({{\Delta }_{{\mathbf{B}}}}({\mathbf{A}}{{{\mathbf{x}}}_{*}})\) = \({{\Delta }_{{\mathbf{A}}}}({\mathbf{B}}{{{\mathbf{y}}}_{*}})\) and note that, according to Theorem 2, the equality \({{\Delta }_{*}}\) = \(1\) implies that equation (2) has regular solutions. The value of \({{\Delta }_{*}}\), which is not equal (larger than) \(1\), shows that the equality in (2) is not satisfied for any regular vectors x and y; its value determines the minimum possible deviation between both sides of the equation, which is reached at x = \({{{\mathbf{x}}}_{*}}\) and y = \({{{\mathbf{y}}}_{*}}\).

4 PROCEDURE OF SOLVING THE TWO-SIDED EQUATION

The above analysis of two-sided equation (2) provides a background for development of the following procedure for solving. This procedure is based on constructing a sequence of vectors in spaces \(\mathcal{A}\) and \(\mathcal{B}\), which are generated by columns of the matrices A and B. Vectors are examined taken alternately from each space so that after the choice of some vector from one space, the next vector is chosen in the other space to provide the minimum distance to the previous vector. In the context of solving equation (2), along with the indicated vectors, the vectors x and y of the coefficients of their decomposition as linear combinations of the columns of the corresponding matrices A and B are considered.

Let us assume that we have chosen an arbitrary regular vector x0 ∈ \({{\mathbb{X}}^{n}}\). This vector has the corresponding vector a0 = Ax0 ∈ \(\mathcal{A}\). Applying Theorem 2, we find the least distance from the vector a0 to the vectors of the space \(\mathcal{B}\), according to the formulas

This value is reached at the vector b1 ∈ \(\mathcal{B}\), which has the form

The minimum distance from the vector b1 to the vectors of the space \(\mathcal{A}\) is

and is attained at a vector a2 ∈ \(\mathcal{A}\) such that

Similarly, we determine the distance d(a2, \(\mathcal{B}\)) based on the value of Δ2, which is used further to find vectors y3 and b3. Next, we calculate the value of Δ2 to determine the distance d(b3, \(\mathcal{A}\)) and find vectors x4 and a4.

As a result of repeating the above calculations, the sequence of vectors a0, b1, a2, b3, a4, …, which are chosen one by one from the spaces \(\mathcal{A}\) and \(\mathcal{B}\) so as to minimize the distance between successive vectors, is constructed. At the same time, the sequence of the pairs of vectors (x0, y1), (x2, y3), … is generated; they represent some approximations of the solution to equation (2).

Let us investigate the sequence Δ0, Δ1, Δ1, … and note that Δi ≥ \(1\) for all i = 0, 1, …, i.e., this sequence is bounded from below. Now, let us verify that this sequence is not increasing.

Let us show that Δ1 ≤ Δ0 and consider the quantity Δ1 = \({{({\mathbf{A}}{{({{({\mathbf{B}}{{{\mathbf{y}}}_{1}})}^{ - }}{\mathbf{A}})}^{ - }})}^{ - }}{\mathbf{B}}{{{\mathbf{y}}}_{1}}\).

By virtue of the regularity of vector y1, the regularity of matrix B with respect to its rows, and the regularity of matrix A with respect to its columns, the row vector (By1)–A is regular. Taking into account the regularity of the vector x0, we get the inequality \({{{\mathbf{x}}}_{0}}{\mathbf{x}}_{0}^{ - } \geqslant {\mathbf{I}}\). Premultiplying this inequality by \({{({\mathbf{B}}{{{\mathbf{y}}}_{1}})}^{ - }}{\mathbf{A}}\), we come to the inequality \({{({\mathbf{B}}{{{\mathbf{y}}}_{1}})}^{ - }}{\mathbf{A}}\) ≤ \({{({\mathbf{B}}{{{\mathbf{y}}}_{1}})}^{ - }}{\mathbf{A}}{{{\mathbf{x}}}_{0}}{\mathbf{x}}_{0}^{ - }\), both sides of which are regular. After multiplicative conjugate transposition of both sides of the latter inequality, we obtain

As a result of premultiplication of both sides of the inequality by A, one more passage to the conjugate vectors and postmultiplication by By1, we get

Applying the inequality obtained together with the equality y1 = \(\sqrt {{{\Delta }_{0}}} {{({{({\mathbf{A}}{{{\mathbf{x}}}_{0}})}^{ - }}{\mathbf{B}})}^{ - }}\), which implies that By1 = \(\sqrt {{{\Delta }_{0}}} {\mathbf{B}}{{({{({\mathbf{A}}{{{\mathbf{x}}}_{0}})}^{ - }}{\mathbf{B}})}^{ - }}\), and then using the evident equality \({{({\mathbf{A}}{{{\mathbf{x}}}_{0}})}^{ - }}{\mathbf{B}}{{({{({\mathbf{A}}{{{\mathbf{x}}}_{0}})}^{ - }}{\mathbf{B}})}^{ - }}\) = \(1\), we get

Similarly, we can verify that the inequalities Δ2 ≤ Δ1 and, thus, the inequalities Δi + 1 ≤ Δi for all i are fulfilled. Therefore, the sequence Δ0, Δ1, … is not increasing. Taking into account the fact that this sequence is bounded from below, it converges to some limit \({{\Delta }_{*}} \geqslant 1\).

It should be noted that each element of the considered sequence has the sense of the squared distance from some vector of one of the spaces \(\mathcal{A}\) and \(\mathcal{B}\) to the closest vector from another space. Therefore, if the equality Δi = \(1\) is satisfied for some i, this implies that the spaces \(\mathcal{A}\) and \(\mathcal{B}\) have a nonempty intersection and equation (2) is solvable. At the same time, if i is an even number, then the intersection contains the vector ai = Axi and the pair of vectors (xi, yi + 1) is the solution of the equation. If i is odd, then the intersection contains the vector bi = Byi and the solution is the pair (xi + 1, yi).

Attainment of the equality Δi = \(1\) indicates that the limit \({{\Delta }_{*}}\) = Δi of the sequence Δ0, Δ1, … is found and can be used for determining the conditions of completion of the calculation procedure in practical calculations.

If the intersection of the spaces \(\mathcal{A}\) and \(\mathcal{B}\) is empty, then the value of the limit satisfies the inequality \({{\Delta }_{*}}\) > \(1\) and equation (2) does not have solutions. In this case, the procedure should be stopped as soon as a repeating element appears in any of the sequences x0, x2, … or y1, y3, ….

The above procedure gives us the following algorithm for the analysis of equation (2).

Algorithm 1.

(1) Set i = 0 and choose a regular vector x0.

(2) Calculate

(3) If Δi = \(1\) or yi + 1 = yj for some j < i, then set

and stop the procedure; otherwise, set i = i + 1.

(4) Calculate

(5) If Δi = \(1\) or xi + 1 = xj for some j < i, then set

and stop; otherwise, set i = i + 1.

(6) Return to step (2).

If, as a result of operation of this algorithm, we obtain \({{\Delta }_{*}}\) = \(1\), then equation (2) has solutions, including the found pair of vectors (\({{{\mathbf{x}}}_{*}}\), \({{{\mathbf{y}}}_{*}}\)). In the case, when \({{\Delta }_{*}}\) > \(1\), the equation does not have solutions; the value of \({{\Delta }_{*}}\) shows the minimal possible deviation between both sides of the equation, which is reached at vectors (\({{{\mathbf{x}}}_{*}}\), \({{{\mathbf{y}}}_{*}}\)).

5 EXAMPLES OF SOLVING A TWO-SIDED EQUATION

Let as first present the example of solving the equation from [10], which is set in the context of (max, +) algebra (the semifield \({{\mathbb{R}}_{{\max , + }}}\)). The matrices of the equation and the initial approximation are defined (with the use of the denotation \(\mathbb{O}\) = –∞) as follows:

To solve the equation, we apply Algorithm 1. Taking into account the fact that the operation of extraction of the square root in (max, +) algebra corresponds to the arithmetic halving, we get the following results for each calculation step:

The value \({{\Delta }_{*}}\) = Δ3 = 0 = \(1\) obtained shows that the equation has solutions. In addition, the solution is found to be

Let us consider the equation with matrices from the previous example, in each of them, one element in the last row is replaced as follows:

Provided that the vector of initial approximation x0 does not change, Algorithm 1 gives the following results:

Taking into account the fact that the equality x4 = x2 is fulfilled, provided that Δ3 = 1 > \(1\), the equation does not have solutions. The value \({{\Delta }_{*}}\) = Δ3 = 1 shows the minimum deviation between both sides of the equation, which is reached at the vectors

6 CONCLUSIONS

In this work, a new computational procedure of solving a two-sided vector equation in idempotent algebra is presented; it is based on analysis of the distances between tropical vector spaces. The procedure is realized as an iterative calculation algorithm described in terms of an arbitrary, linearly ordered, algebraically complete idempotent semifield. If the equation does not have solutions, this procedure finds its pseudo-solution, which provides the minimum deviation of one side of the equation from another and determines the value of this deviation.

Analysis and estimation of the computation complexity of the proposed algorithm is of special interest for further research.

REFERENCES

V. N. Kolokoltsov and V. P. Maslov, Idempotent Analysis and Its Applications (Springer-Verlag, Dordrecht, 1997), in Ser.: Mathematics and Its Applications, Vol. 401. https://doi.org/10.1007/978-94-015-8901-7

J. S. Golan, Semirings and Affine Equations over Them (Springer-Verlag, New York, 2003), in Ser.: Mathematics and Its Applications, Vol. 556. https://doi.org/10.1007/978-94-017-0383-3

B. Heidergott, G. J. Olsder, and J. van der Woude, Max Plus at Work (Princeton Univ. Press, Princeton, 2006), in Ser.: Princeton Series in Applied Mathematics.

M. Gondran and M. Minoux, Graphs, Dioids and Semirings: New Models and Algorithms (Springer-Verlag, New York, 2008), in Ser.: Operations Research/Computer Science Interfaces Series, Vol. 41. https://doi.org/10.1007/978-0-387-75450-5

P. Butkovič, Max-Linear Systems: Theory and Algorithms (Springer-Verlag, London, 2010), in Ser.: Springer Monographs in Mathematics. https://doi.org/10.1007/978-1-84996-299-5

D. Maclagan and B. Sturmfels, Introduction to Tropical Geometry (American Mathematical Society, Providence, R.I., 2015), in Ser.: Graduate Studies in Mathematics, Vol. 161. https://doi.org/10.1090/gsm/161

P. Butkovič, “On certain properties of the systems of linear extremal equations,” Ekon.-Mat. Obz. 14, 72–78 (1978).

P. Butkovič, “Solution of systems of linear extremal equations,” Ekon.-Mat. Obz. 17, 402– 416 (1981).

P. Butkovič and G. Hegedüs, “An elimination method for finding all solutions of the system of linear equations over an extremal algebra,” Ekon.-Mat. Obz. 20, 203–215 (1984).

R. A. Cuninghame-Green and P. Butkovič, “The equation A⊗x = B⊗y over (max,+),” Theor. Comput. Sci. 293, 3–12 (2003). https://doi.org/10.1016/S0304-3975(02)00228-1

P. Butkovič and K. Zimmermann, “A strongly polynomial algorithm for solving two-sided linear systems in max-algebra,” Discrete Appl. Math. 154, 437–446 (2006). https://doi.org/10.1016/j.dam.2005.09.008

E. Lorenzo and M. J. de la Puente, “An algorithm to describe the solution set of any tropical linear system A☉x = B☉x,” Linear Algebra Appl. 435, 884–901 (2011). https://doi.org/10.1016/j.laa.2011.02.014

D. Jones, “On two-sided max-linear equations,” Discrete Appl. Math. 254, 146–160 (2019). https://doi.org/10.1016/j.dam.2018.06.011

P. Butkovič, “On properties of solution sets of extremal linear programs,” in Algebraic and Combinatorial Methods in Operations Research (North-Holland, Amsterdam, 1984), in Ser.: North-Holland Mathematics Studies, vol. 95, pp. 41–54. https://doi.org/10.1016/S0304-0208(08)72952-9

E. A. Walkup, G. Borriello, J. M. Taylor, and M. A. Atiyah, “A general linear max-plus solution technique,” in Idempotency (Cambridge Univ. Press, Cambridge, 1998), in Ser.: Publications of the Newton Institute, pp. 406–415. https://doi.org/10.1017/CBO9780511662508.024

R. A. Cuninghame-Green and K. Zimmermann, “Equation with residuated functions,” Comment. Math. Univ. Carol. 42, 729–740 (2001).

N. K. Krivulin, Methods of Idempotent Algebra for Problems in Modeling and Analysis of Complex Systems (S.-Peterb. Gos. Univ., St. Petersburg, 2009) [in Russian].

N. K. Krivulin, “On solution of a class of linear vector equations in idempotent algebra,” Vestn. S.-Peterb. Univ.: Prikl. Mat. Inf. Protsessy Upr. 5 (3), 63–76 (2009).

N. K. Krivulin, “On solution of linear vector equations in idempotent algebra,” in Mathematical Models: Theory and Applications (VVM, St. Petersburg, 2004), Vol. 5, pp. 105–113 [in Russian].

ACKNOWLEDGMENTS

The author is grateful to the reviewers for some important remarks and suggestions that were considered when preparing the final version of this paper.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

The author declares that he has no conflicts of interest.

Additional information

Translated by E. Smirnova

About this article

Cite this article

Krivulin, N.K. On the Solution of a Two-Sided Vector Equation in Tropical Algebra. Vestnik St.Petersb. Univ.Math. 56, 172–181 (2023). https://doi.org/10.1134/S1063454123020103

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1134/S1063454123020103