Abstract

Melanoma, which starts growing in melanocytes, is less common but more serious and aggressive than the other types of skin cancer found in humans. Melanoma can be completely cured if it is diagnosed and treated at an early stage. Biopsy, the confirmatory test for melanoma, is invasive, time consuming, costly and painful. To address this problem, computerized analysis of skin cancer from dermoscopy images has become an increasingly popular research topic over the last few years. In this research, we extract pertinent features related to the shape, size and color of lesions in dermoscopy images based on the ABCD rule. Although ABCD features have been used before, they were mostly computed as asymmetry, the compactness index (as a measure of border irregularity), color variegation and average diameter. This paper proposes seven distinctive and pertinent features: one asymmetry feature, three border irregularity features, one color feature and two diameter features. Experiments show that each proposed feature can individually detect melanoma lesions with over 72% accuracy, and the overall diagnostic system achieves 98% classification accuracy with 97.5% sensitivity and 98.75% specificity. Therefore, this method could assist dermatologists in clinical decision making.


1. INTRODUCTION

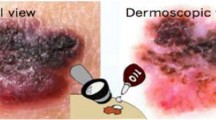

Melanoma is less common than other skin cancers but is the most lethal form: 75% of skin cancer deaths are due to melanoma [1]. According to the American Cancer Society [2], about 91 270 new cases of melanoma were expected to be diagnosed in the United States in 2018 (about 55 150 in men and 36 120 in women), and about 9320 people were expected to die of melanoma (about 5990 men and 3330 women). Fortunately, if melanoma is detected and diagnosed properly at an early stage, the survival rate is very high. Visual examination can detect melanoma at its initial stage, but it may be inaccurate even when performed by expert dermatologists [3], because the high degree of visual similarity between melanoma and benign lesions limits the accuracy of human visual inspection.

Melanoma arises in the pigment-producing cells of the outer layer of the skin, which is heavily exposed to ultraviolet (UV) radiation from the sun. Excessive UV exposure and sunburns over a lifetime are estimated to cause about 90% of melanomas [4]. When unrepaired DNA damage caused by UV radiation makes skin cells multiply rapidly, they grow out of control and form malignant tumors. Malignant melanomas tend to spread to the surrounding tissue and very often to nearby lymph nodes, the lungs and the brain. Therefore, early diagnosis and treatment are essential for melanoma. Various imaging methods have been developed to detect melanoma skin lesions because they are noninvasive and fast. Because dermoscopic images can be acquired easily with digital cameras, they are commonly used in the analysis of melanoma skin lesions.

Image preprocessing, segmentation, feature extraction and classification are the essential steps for automated diagnosis of skin lesions from dermoscopy images. Several artifact and hair removal algorithms have been proposed for preprocessing to improve segmentation accuracy. Razmjooy et al. [5] used an edge detection method to denoise the images, an intensity threshold for image segmentation and an SVM classifier to classify melanoma. The Dull–Razor algorithm was developed by Lee et al. [6] to remove dark hair from skin images.

Sumithra et al. [7] proposed a region growing algorithm that initializes the seed point automatically to segment the skin lesion from the background, and then extracted texture and color features from the lesion. Wong et al. [8] proposed an iterative stochastic region merging algorithm for lesion segmentation. Fuzzy clustering techniques have also been used to segment skin lesions, such as the fuzzy c-means (FCM) algorithm [9] and the anisotropic mean shift based FCM algorithm (AMSFCM) [10]. Many authors have proposed algorithms based on the active contour model (ACM) without edges [11] for skin lesion segmentation.

Various feature extraction algorithms have been proposed to distinguish malignant from benign skin lesions, such as the ABCD rule [12, 13], the seven-point checklist [8], the three-point checklist [14] and the CASH algorithm [15]. These methods are based on the shape, geometry, color, texture and structure of the skin lesion. The ABCD rule, a measure based on the Asymmetry (A), Border (B), Colour (C) and Diameter (D) properties of a lesion, is a useful screening tool for melanoma with reasonable sensitivity and specificity [13]. Methods for extracting the A, B, C and D parameters from dermoscopy images include the following.

• Asymmetry (A): Asymmetry is computed from the axes of symmetry [16, 17] or from geometrical descriptors [18].

• Border (B): Border irregularity is described by geometrical characteristics based on border gradients and edge properties [18].

• Color (C): Color is described by statistical parameters based on color models [16–18], color pixel intensities [18] and relative color descriptors [16, 19].

• Diameter (D): Diameter is taken as the major axis of the best-fit ellipse [17] or the longest distance between any two points on the lesion border [20].

Rastgoo et al. [21] extracted shape, color and texture features of the lesion and used three classifiers to detect melanomas. They reported results for the features both individually and in combination, and achieved a sensitivity of 98% and a specificity of 70% for texture features with a random forest classifier. Kasmi et al. [22] developed an ABCD-based algorithm, evaluated it on 200 dermoscopic images and achieved an overall accuracy of 90%. Ferris et al. [23] extracted 54 features, including border irregularity, eccentricity and color histograms, and achieved 97.4% sensitivity and 44.2% specificity with these features using a forest classifier. Sheha et al. [24] used gray level co-occurrence matrix (GLCM) parameters with a multilayer perceptron classifier to detect malignant and benign lesions. Jaworek-Korjakowska [25] extracted shape, color and texture features and proposed a feature selection algorithm to detect micro-malignant melanomas (diameter under 5 mm) at their initial stage, achieving a sensitivity of 90% and a specificity of 96% on 200 dermoscopic images.

At the classification stage, several classification goals have been pursued, such as benign versus malignant [26], melanoma versus nonmelanoma [27, 28], dysplastic versus nondysplastic versus melanotic [28], and regular versus irregular lesion border [29]. Various classification methods have also been proposed to detect melanoma, based on decision trees [28, 30], Bayesian learning [26, 28], support vector machines (SVM) [26, 31], artificial neural networks (ANN) [27, 28, 32, 33], genetic algorithms (GAs) [34] and a two-stage classifier (K-nearest neighbor (KNN) and maximum likelihood) [35]. Yu et al. [36] proposed a deep learning network for melanoma recognition, and a recent paper [37] proposed fusing a deep convolutional neural network with handcrafted methods for melanoma diagnosis.

Many authors have used a large number of features for classification, although not all of these features effectively distinguish benign from malignant lesions. The literature review also shows that an increase in sensitivity (percentage of correctly classified melanomas) usually comes at some cost in specificity (percentage of correctly classified benign lesions), and vice versa. With this problem in mind, we developed an automated diagnostic system that improves classification accuracy in terms of both sensitivity and specificity for the early diagnosis of malignant melanoma. In this paper, one asymmetry feature, three border irregularity features, one color feature and two diameter features are proposed to classify melanoma lesions. The features extracted from 200 images are fed to a feedforward neural network classifier trained with the backpropagation algorithm (BNN), which classifies each skin lesion as malignant or benign. Each of the proposed features can individually distinguish malignant from benign lesions with over 72% accuracy, and all features together achieve 98% accuracy with 97.5% sensitivity and 98.75% specificity.

2. DATASET DESCRIPTION

Acquiring a suitable dataset is an important task in developing an automated diagnosis system for melanoma skin lesions, and the dataset needs to contain all possible types of images. In this work, the PH2 database [38] is adopted for dermoscopy images; it was constructed through a joint collaboration between the Dermatology Service of Pedro Hispano Hospital in Portugal and the University of Porto. The dermoscopic images were acquired under the same conditions with the Tuebinger Mole Analyzer system at 20× magnification. They are 8-bit RGB color images with a resolution of 768 × 560 pixels. The PH2 database includes 200 dermoscopic images: 80 common nevi, 80 atypical nevi and 40 melanomas. In this work, we group these images into two categories: 80 benign lesions and 120 malignant lesions (80 atypical nevi and 40 melanomas). Classifying melanocytic lesions into three categories (benign, atypical and melanoma) from their dermoscopy images is very challenging, because some atypical lesions resemble benign lesions and others resemble melanomas. We include atypical lesions in the malignant class because they are suspicious for melanoma and require a biopsy to confirm it. This grouping helps patients stay careful about their suspicious skin lesions and directs them to undergo regular inspection or take other precautions. Since our approach distinctly identifies benign lesions, no biopsy would be required for this category, which would reduce the overall number of biopsies in the detection process of melanoma skin cancer. Some sample dermoscopy images from the PH2 dataset are shown in Fig. 1.

3. PROPOSED APPROACH FOR SKIN LESION DETECTION

The main goal of this paper is to develop a computational approach to identify and classify melanoma skin lesions from dermoscopy images. Figure 2 illustrates the workflow of the proposed system, which consists of the following steps: (1) image acquisition, (2) preprocessing of the image under study, (3) image segmentation, (4) extraction of distinctive features and (5) classification of the skin lesion. Each step of the proposed approach is presented in detail in this section.

3.1 Image Preprocessing

The collected images may contain artifacts that reduce the precision of image segmentation and, eventually, of image classification. Hair is the most common and most disruptive artifact in dermoscopy images. Moreover, other sources of noise, such as variable lighting conditions and camera distance during acquisition, cause problems for image segmentation. Therefore, preprocessing is needed to enable accurate segmentation of the skin lesion. The Dull–Razor algorithm [6] is applied to remove dark hair, and a median filter is used to remove thin hair and other artifacts from the skin images. Results of hair removal for two sample images are shown in Fig. 3.
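The hair-removal step can be sketched as follows, assuming an 8-bit RGB image held in a NumPy array. The sketch follows the general Dull–Razor idea of detecting dark hair as the difference between each color channel and the maximum of its grayscale closings with horizontal, diagonal and vertical line structuring elements, but it is a simplification: hair pixels are replaced by the closed (background) values rather than by Dull–Razor's interpolation, there is no check that candidate regions are thin and elongated, and a plain 3 × 3 median filter follows. The line length of 11 pixels, the threshold of 24 gray levels and all function names are hypothetical choices for illustration, not parameters reported in this paper.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def line_offsets(length, angle_deg):
    """(dy, dx) offsets of a centred straight-line structuring element."""
    t = np.arange(-(length // 2), length // 2 + 1)
    a = np.deg2rad(angle_deg)
    dy = np.rint(t * np.sin(a)).astype(int)
    dx = np.rint(t * np.cos(a)).astype(int)
    return list(zip(dy.tolist(), dx.tolist()))


def shift_reduce(channel, offsets, reduce):
    """Max (dilation) or min (erosion) over shifted copies of a 2-D channel."""
    r = max(max(abs(dy), abs(dx)) for dy, dx in offsets)
    padded = np.pad(channel, r, mode="edge")
    h, w = channel.shape
    shifted = [padded[r + dy:r + dy + h, r + dx:r + dx + w] for dy, dx in offsets]
    return reduce(np.stack(shifted), axis=0)


def grey_closing(channel, offsets):
    """Dilation then erosion: fills dark structures narrower than the line."""
    return shift_reduce(shift_reduce(channel, offsets, np.max), offsets, np.min)


def median_filter2d(channel, k=3):
    """Plain k x k median filter with edge padding."""
    padded = np.pad(channel, k // 2, mode="edge")
    return np.median(sliding_window_view(padded, (k, k)), axis=(-2, -1))


def remove_hair(rgb, length=11, threshold=24):
    """Simplified Dull-Razor-style hair removal; returns (cleaned image, hair mask)."""
    img = rgb.astype(float)
    background = np.empty_like(img)
    hair = np.zeros(img.shape[:2], dtype=bool)
    for c in range(3):
        # Maximum of the closings with horizontal, 45-degree and vertical lines.
        closings = [grey_closing(img[..., c], line_offsets(length, ang)) for ang in (0, 45, 90)]
        background[..., c] = np.max(closings, axis=0)
        hair |= (background[..., c] - img[..., c]) > threshold
    cleaned = img.copy()
    cleaned[hair] = background[hair]          # replace hair pixels by the background estimate
    for c in range(3):
        cleaned[..., c] = median_filter2d(cleaned[..., c])  # smooth thin residue
    return np.clip(np.rint(cleaned), 0, 255).astype(np.uint8), hair
```

Without the shape-verification step, sharp dark edges (for example, corners of a lesion boundary) can also be flagged, which is why the original algorithm verifies that candidate hair regions are thin and long.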

3.2 Image Segmentation

Once preprocessing is complete, the region of interest (the lesion) is separated from the skin background for the subsequent feature extraction step. Segmentation divides the image into a set of distinct regions so that the image is represented in a more meaningful way that is easier to analyze. Each region should have consistent characteristics, such as intensity, color or texture, that differ from those of the other regions in the image. In this paper, the active contour model (ACM) without edges proposed by Chan and Vese [11] is employed for lesion segmentation. This region-based method evolves a contour so that it separates the image into two regions of approximately uniform intensity, rather than relying on image gradients. Formulating segmentation as a minimal partition problem, Chan and Vese proposed minimizing the energy in Eq. (1) with respect to c1, c2 and E:

\[ F(c_1, c_2, E) = \mu \cdot \mathrm{Length}(E) + \nu \cdot \mathrm{Area}(\mathrm{inside}(E)) + \lambda_1 \int_{\mathrm{inside}(E)} |u_0(x, y) - c_1|^{2}\,dx\,dy + \lambda_2 \int_{\mathrm{outside}(E)} |u_0(x, y) - c_2|^{2}\,dx\,dy. \tag{1} \]

Here, E is the contour (evolved from an initial contour), c1 and c2 are the mean values of u0 inside and outside E, respectively, u0 is the input image, and μ, ν, λ1, λ2 are fixed parameters selected by the user to fit a particular class of images. In our experiment, λ1 = λ2 = 1 and ν = 0, as suggested in the original paper, and μ is set to 0.2 for the considered set of images. The last two terms on the right-hand side of Eq. (1) act as two forces: the first tends to shrink the contour and the second tends to expand it. These two forces balance when the contour reaches the boundary of the region of interest. Two sample images segmented with this method are shown in Fig. 3.
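The Chan–Vese energy can be minimized by evolving a level-set function \(\phi\) whose positive region is the inside of E. The NumPy sketch below uses a simple explicit gradient-descent update of the Euler–Lagrange equation from [11], \(\partial\phi/\partial t = \delta_\varepsilon(\phi)\,[\mu\kappa - \nu - \lambda_1 (u_0 - c_1)^2 + \lambda_2 (u_0 - c_2)^2]\) with \(\kappa = \nabla\cdot(\nabla\phi/|\nabla\phi|)\), and the parameter values stated above (λ1 = λ2 = 1, ν = 0, μ = 0.2). The centred circular initial contour, time step, ε, iteration count and function name are assumptions for illustration, and this explicit update is a simplification of the numerical scheme in [11], not the authors' MATLAB code.

```python
import numpy as np


def chan_vese(u0, mu=0.2, nu=0.0, lam1=1.0, lam2=1.0, dt=0.5, eps=1.0, n_iter=200):
    """Explicit gradient-descent sketch of the Chan-Vese model.

    u0 is a 2-D grayscale image scaled to [0, 1]. Returns a boolean mask of
    the region where phi > 0, i.e. the inside of the evolved contour E.
    """
    u0 = np.asarray(u0, dtype=float)
    rows, cols = u0.shape
    y, x = np.mgrid[:rows, :cols]
    # Assumed initial contour: a centred circle; phi > 0 inside, scaled so |phi| is about 1.
    r0 = min(rows, cols) / 3.0
    phi = (r0 - np.hypot(x - cols / 2.0, y - rows / 2.0)) / r0
    for _ in range(n_iter):
        inside = phi > 0
        c1 = u0[inside].mean() if inside.any() else 0.0      # mean of u0 inside E
        c2 = u0[~inside].mean() if (~inside).any() else 0.0  # mean of u0 outside E
        # Curvature kappa = div(grad phi / |grad phi|), from the length term.
        gy, gx = np.gradient(phi)
        norm = np.hypot(gx, gy) + 1e-8
        kappa = np.gradient(gx / norm, axis=1) + np.gradient(gy / norm, axis=0)
        # Regularised Dirac delta: eps / (pi * (eps^2 + phi^2)).
        delta = eps / (np.pi * (eps ** 2 + phi ** 2))
        force = mu * kappa - nu - lam1 * (u0 - c1) ** 2 + lam2 * (u0 - c2) ** 2
        phi = phi + dt * delta * force
    return phi > 0
```

For a dermoscopy image, a grayscale version scaled to [0, 1] would be passed as `u0` (which channel or conversion to use is another assumption); the returned mask is the lesion when the lesion lies inside the initial circle.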

3.3 Feature Formulation and Extraction

After the region of interest is separated, the next step is to extract effective features from the segmented lesion. The features should distinguish between the benign and malignant categories, so extracting distinctive features is the most important step for classifying lesions properly. In this paper, we develop shape, size and color features of the lesion based on the ABCD rule [12], which is considered a fundamental framework for detecting melanoma skin lesions.

The following seven features are calculated in this work:

• One asymmetry feature: the asymmetry score along both principal axes (AS1 and AS2).

• Three border irregularity features: irregularity with respect to the best-fit ellipse (BI1), the area-to-perimeter ratio (BI2) and the compactness index (BI3).

• Two diameter features: the average diameter of the lesion (D1) and the difference between the principal axis lengths (D2).

• One color feature: color variegation in the lesion (C).

These features fall into two categories: those reflecting the shape properties of a lesion (A, B and D) and those reflecting color information (C). To compute the shape features, some properties of the lesion, such as its area, centroid, perimeter and orientation angle, must be determined first.

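The area (A) is given by the zeroth-order moment \(M_{00}\) of the lesion. The raw moments and central moments of order (i + j) of a binary image f(x, y) are calculated by Eqs. (2) and (3), respectively, and the centroid \((x_0, y_0)\) of the region is then computed using Eq. (4):

\[ M_{ij} = \sum_{x}\sum_{y} x^{i} y^{j} f(x, y), \tag{2} \]

\[ \mu_{ij} = \sum_{x}\sum_{y} (x - x_0)^{i} (y - y_0)^{j} f(x, y), \tag{3} \]

\[ x_0 = \frac{M_{10}}{M_{00}}, \qquad y_0 = \frac{M_{01}}{M_{00}}. \tag{4} \]

The eigenvalues \(\lambda_1 \ge \lambda_2\) of the covariance matrix of the binary image, whose entries are the normalized central moments \(\mu'_{ij} = \mu_{ij}/M_{00}\), are calculated by Eq. (5). The semi-major axis length of the best-fit ellipse is \(a = 2\sqrt{\lambda_1}\), and the semi-minor axis length (orthogonal to the semi-major axis) is \(b = 2\sqrt{\lambda_2}\). The orientation angle \(\theta\) of the lesion is determined by Eq. (6):

\[ \lambda_{1,2} = \frac{\mu'_{20} + \mu'_{02}}{2} \pm \frac{\sqrt{4\mu'^{2}_{11} + (\mu'_{20} - \mu'_{02})^{2}}}{2}, \tag{5} \]

\[ \theta = \frac{1}{2}\arctan\left(\frac{2\mu'_{11}}{\mu'_{20} - \mu'_{02}}\right). \tag{6} \]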
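A NumPy sketch of these lesion properties for a binary mask (True = lesion) follows, assuming x runs along columns and y along rows. Because y grows downward in image arrays, the angle is measured clockwise on screen; `arctan2` is used instead of a plain arctangent so that the quadrant is resolved. The function name is hypothetical.

```python
import numpy as np


def ellipse_params(mask):
    """Area, centroid, semi-axes (a, b) and orientation of a binary lesion mask."""
    f = np.asarray(mask, dtype=float)
    y, x = np.mgrid[:f.shape[0], :f.shape[1]]      # y = row index, x = column index
    m00 = f.sum()                                   # area A = M00
    x0 = (x * f).sum() / m00                        # centroid: M10 / M00
    y0 = (y * f).sum() / m00                        #           M01 / M00
    # Normalised second-order central moments = covariance matrix entries.
    mu20 = ((x - x0) ** 2 * f).sum() / m00
    mu02 = ((y - y0) ** 2 * f).sum() / m00
    mu11 = ((x - x0) * (y - y0) * f).sum() / m00
    root = np.sqrt(4 * mu11 ** 2 + (mu20 - mu02) ** 2)
    lam1 = (mu20 + mu02 + root) / 2                 # larger eigenvalue
    lam2 = (mu20 + mu02 - root) / 2                 # smaller eigenvalue
    a, b = 2 * np.sqrt(lam1), 2 * np.sqrt(max(lam2, 0.0))
    # Major-axis angle from the x (column) axis; y points down in image arrays.
    theta = 0.5 * np.arctan2(2 * mu11, mu20 - mu02)
    return m00, (x0, y0), a, b, theta
```

For a filled ellipse with semi-axes 40 and 20 pixels, this returns a ≈ 40 and b ≈ 20, since the variance of a uniform ellipse along its major axis is a²/4.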

Using the lesion properties determined above, the shape features (A, B, and D) are computed below.

3.3.1. Asymmetry index. The image coordinate system is first aligned with the segmented region: its origin is placed at the centroid of the region, and it is rotated by the orientation angle so that the major axis coincides with the x-axis. Once the coordinate system is aligned with the region, the object is flipped across the major axis, and the nonoverlapping region (ΔBx) between the segmented lesion (B) and the flipped lesion (Bx) is computed by Eq. (7). The asymmetry index across the major axis (AS1) is the ratio of the area of ΔBx to the area of B. Similarly, the asymmetry score across the minor axis (AS2) is computed using Eq. (8). The steps for determining AS1 are illustrated for a segmented lesion in Fig. 4.
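The asymmetry procedure can be sketched in NumPy as follows. Instead of rotating the image (which requires interpolation), every pixel is reflected across the major axis (for AS1) or the minor axis (for AS2) through the centroid, and the mask is sampled at the nearest reflected pixel; because a reflection is its own inverse, this yields the flipped lesion directly. The scores are the area of the pixels in exactly one of B and its flip, divided by the area of B. This is an illustrative sketch under these assumptions, not the authors' implementation, and the function name is hypothetical.

```python
import numpy as np


def asymmetry_scores(mask):
    """AS1 (flip across the major axis) and AS2 (flip across the minor axis)."""
    mask = np.asarray(mask, dtype=bool)
    f = mask.astype(float)
    rows, cols = mask.shape
    y, x = np.mgrid[:rows, :cols]
    area = f.sum()
    x0, y0 = (x * f).sum() / area, (y * f).sum() / area
    mu20 = ((x - x0) ** 2 * f).sum()
    mu02 = ((y - y0) ** 2 * f).sum()
    mu11 = ((x - x0) * (y - y0) * f).sum()
    theta = 0.5 * np.arctan2(2 * mu11, mu20 - mu02)   # major-axis angle

    def flip_across(angle):
        # Reflect each pixel across the line through the centroid at `angle`
        # (p' = 2 (d . u) u - d) and sample the mask there (nearest neighbour).
        ux, uy = np.cos(angle), np.sin(angle)
        dx, dy = x - x0, y - y0
        dot = dx * ux + dy * uy
        rx = np.rint(x0 + 2 * dot * ux - dx).astype(int)
        ry = np.rint(y0 + 2 * dot * uy - dy).astype(int)
        valid = (rx >= 0) & (rx < cols) & (ry >= 0) & (ry < rows)
        flipped = np.zeros_like(mask)
        flipped[valid] = mask[ry[valid], rx[valid]]
        return flipped

    as1 = np.logical_xor(mask, flip_across(theta)).sum() / area
    as2 = np.logical_xor(mask, flip_across(theta + np.pi / 2)).sum() / area
    return as1, as2
```

A perfectly symmetric ellipse scores 0 on both axes, while adding a bump to one side raises AS1.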

3.3.2. Border irregularity. Border irregularity is one of the primary signs of malignant melanoma [12, 13]. The degree to which an object deviates from a regular shape indicates its nonuniformity, or irregularity. To measure border irregularity, we use regular elliptical and circular shapes as references. The following three features are determined to represent border irregularity.

(A) Irregularity with Ellipse (BI1)

To determine border irregularity with respect to an ellipse, the best-fit ellipse is constructed from the centroid, orientation angle, and major and minor axis lengths of the lesion determined at the beginning of Section 3.3. The nonoverlapping region between the lesion and its best-fit ellipse defines the nonuniformity, or border irregularity (BI1), which is calculated by Eq. (9).

Here, B represents the skin lesion and E its best-fit elliptical region; ΔE and ΔB denote the areas of the nonoverlapping region and of the lesion, respectively. Figures 5a and 5b show the best-fit ellipse for a lesion and the corresponding nonoverlapping region, respectively.
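BI1 can be sketched in the same way: the best-fit ellipse E is rasterized from the lesion's centroid, semi-axes a and b, and orientation θ, and ΔE is taken as the number of pixels that belong to exactly one of B and E. Taking BI1 as ΔE divided by the lesion area ΔB is our reading of the definitions above; the function name is hypothetical.

```python
import numpy as np


def border_irregularity_ellipse(mask):
    """BI1: area of (lesion XOR best-fit ellipse) divided by lesion area."""
    mask = np.asarray(mask, dtype=bool)
    f = mask.astype(float)
    y, x = np.mgrid[:mask.shape[0], :mask.shape[1]]
    area = f.sum()
    x0, y0 = (x * f).sum() / area, (y * f).sum() / area
    mu20 = ((x - x0) ** 2 * f).sum() / area
    mu02 = ((y - y0) ** 2 * f).sum() / area
    mu11 = ((x - x0) * (y - y0) * f).sum() / area
    root = np.sqrt(4 * mu11 ** 2 + (mu20 - mu02) ** 2)
    a = 2 * np.sqrt((mu20 + mu02 + root) / 2)              # semi-major axis
    b = 2 * np.sqrt(max((mu20 + mu02 - root) / 2, 0.0))    # semi-minor axis
    theta = 0.5 * np.arctan2(2 * mu11, mu20 - mu02)
    # Rasterise the best-fit ellipse E in the lesion's own rotated frame.
    u = (x - x0) * np.cos(theta) + (y - y0) * np.sin(theta)
    v = -(x - x0) * np.sin(theta) + (y - y0) * np.cos(theta)
    ellipse = (u / a) ** 2 + (v / b) ** 2 <= 1
    return np.logical_xor(mask, ellipse).sum() / area
```

An elliptical lesion gives a value close to 0, whereas a square gives about 0.2, because its corners fall outside, and the ellipse bulges past its sides.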

(B) Area to Perimeter ratio (BI2)

Another measure of border irregularity is the ratio of the area to the perimeter of the segmented binary lesion, \(BI2 = A/P\), which is one of the early distinctive signs of melanoma. Malignant lesions have a greater area-to-perimeter ratio than benign lesions. In this paper, the perimeter is computed with the Kimura–Kikuchi–Yamasaki method for boundary path length measurement [39].
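The Kimura–Kikuchi–Yamasaki estimator itself is not reproduced here. As a plainly swapped-in alternative, the sketch below estimates the perimeter P with a four-direction Cauchy–Crofton formula: it counts how often rows, columns and the two diagonals cross the lesion boundary, weights the diagonal counts by their 1/√2 line spacing, and then computes BI2 = A/P with A as the pixel count. Its values will differ slightly from the perimeter used in this paper; the function names are hypothetical.

```python
import numpy as np


def crofton_perimeter(mask):
    """Four-direction Cauchy-Crofton perimeter estimate of a binary mask, in pixels."""
    m = np.pad(np.asarray(mask, dtype=bool), 1)         # zero border so all crossings count
    n0 = np.count_nonzero(m[:, 1:] != m[:, :-1])        # crossings along rows
    n90 = np.count_nonzero(m[1:, :] != m[:-1, :])       # crossings along columns
    n45 = np.count_nonzero(m[1:, 1:] != m[:-1, :-1])    # along one diagonal direction
    n135 = np.count_nonzero(m[1:, :-1] != m[:-1, 1:])   # along the other diagonal
    # Angular step pi/4 over [0, pi); diagonal lines are 1/sqrt(2) apart.
    return np.pi / 8 * (n0 + n90 + (n45 + n135) / np.sqrt(2))


def area_perimeter_ratio(mask):
    """BI2 = A / P, with A the lesion pixel count and P the Crofton estimate."""
    return np.count_nonzero(mask) / crofton_perimeter(mask)
```

For a digital disk of radius 30 pixels, the estimate is within about 1% of 2π·30, and BI2 is close to r/2 = 15.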

(C) Compactness Index (BI3)

Compactness measures how closely the pixels of a shape are packed around its center, and it reflects the smoothness of the lesion border. Since the most compact shape is a circle, we define compactness as \(BI3 = 4\pi A/P^{2}\). A circle has a compactness of 1, and every other shape has a compactness between 0 and 1. Melanoma lesions are considered to have ragged, uneven, blurred and irregular borders, so their compactness score deviates from one toward zero.
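A quick numerical check of the compactness index on ideal shapes shows why values below 1 indicate departure from a circle: analytically, a circle gives 1, a square gives π/4 and a 2:1 rectangle gives 2π/9. The shapes and sizes are illustrative only, not lesion data.

```python
import math


def compactness(area, perimeter):
    """BI3 = 4*pi*A / P**2: 1 for a circle, below 1 for every other shape."""
    return 4 * math.pi * area / perimeter ** 2


r, s = 10.0, 10.0  # illustrative radius and side length (any units)
shapes = {
    "circle": (math.pi * r ** 2, 2 * math.pi * r),
    "square": (s * s, 4 * s),
    "2:1 rectangle": (2 * s * s, 6 * s),
}
for name, (area, perimeter) in shapes.items():
    print(f"{name}: {compactness(area, perimeter):.3f}")
# circle: 1.000
# square: 0.785
# 2:1 rectangle: 0.698
```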

3.3.3. Diameter. Since a melanoma lesion usually grows larger than a common (benign) lesion, diameter is considered one of the most important distinguishing parameters. To quantify it, we consider two features of the lesion: the average diameter of the lesion (D1) and the difference between the major and minor axis lengths (D2) of the best-fit ellipse.

(A) Lesion Diameter (D1)

Diameter defines the size of the lesion. A melanoma lesion generally has a diameter greater than 6 mm [40]. In this paper, we determine the average diameter of a lesion from its area (A) and the major (2a) and minor (2b) axis lengths of its best-fit ellipse as \(D1 = (d1 + d2)/2\), where \(d1 = \sqrt{4A/\pi}\) is the diameter of the circle with the same area as the lesion and \(d2 = (2a + 2b)/2\) is the mean of the two axis lengths.

(B) Difference between Principal Axes Lengths (D2)

One pertinent feature of malignant melanoma is the difference between the major and minor axis lengths of the skin lesion, \(D2 = 2(a - b)\). Malignant lesions are characterized by larger D2 values, whereas benign lesions have lower values.
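D1 and D2 follow directly from the lesion area and its best-fit ellipse. The NumPy sketch below recomputes the moments so that it is self-contained; the results are in pixels, and converting them to millimetres (for comparison with the 6 mm criterion) would require the image's pixel spacing, which is not given here. The function name is hypothetical.

```python
import numpy as np


def diameter_features(mask):
    """D1 (average diameter) and D2 (major minus minor axis length), in pixels."""
    f = np.asarray(mask, dtype=float)
    y, x = np.mgrid[:f.shape[0], :f.shape[1]]
    area = f.sum()
    x0, y0 = (x * f).sum() / area, (y * f).sum() / area
    mu20 = ((x - x0) ** 2 * f).sum() / area
    mu02 = ((y - y0) ** 2 * f).sum() / area
    mu11 = ((x - x0) * (y - y0) * f).sum() / area
    root = np.sqrt(4 * mu11 ** 2 + (mu20 - mu02) ** 2)
    a = 2 * np.sqrt((mu20 + mu02 + root) / 2)              # semi-major axis
    b = 2 * np.sqrt(max((mu20 + mu02 - root) / 2, 0.0))    # semi-minor axis
    d1 = np.sqrt(4 * area / np.pi)   # diameter of the circle with the lesion's area
    d2 = (2 * a + 2 * b) / 2         # mean of the major and minor axis lengths
    return (d1 + d2) / 2, 2 * (a - b)
```

For an ellipse with semi-axes 40 and 20 pixels, d1 = √3200 ≈ 56.6 and d2 = 60, so D1 ≈ 58.3 and D2 ≈ 40 pixels.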

3.3.4. Color feature. To represent the C of the ABCD rule, we start by computing the number of colors present in the skin lesion. A benign lesion usually has a uniform color [26], often brown or black. One early sign of melanoma is the emergence of color variation within the lesion: malignant melanomas usually contain three or more distinct colors, and five or six colors may even be present. To measure color variegation, six colors are considered in this work, following the PH2 database [38]: white, red, light brown, dark brown, blue-gray and black. To estimate the color score, the segmented binary mask is overlaid on the original RGB image, and the colors of the lesion are identified in the RGB color space. We tried several combinations of red, green and blue channel values to detect these six colors in our 200 images; the best thresholds found in the experiment are given in Table 1.

First, the image is scanned, and the number of lesion pixels belonging to each color is counted. If the pixels of an individual color make up at least 5% of all pixels in the lesion, that color is considered present, and the color score is the total number of colors present in the lesion. This score ranges from 1 to 6. Figure 6 shows two sample images with color scores of 2 and 4.
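The color score can be sketched as counting, inside the lesion mask, the pixels that fall within an RGB box for each of the six colors and keeping the colors that cover at least 5% of the lesion. The RGB ranges below are hypothetical placeholders, not the thresholds of Table 1, and a pixel may fall into more than one box. With these placeholders, the score can also be 0, whereas the score reported in the paper ranges from 1 to 6. The names `COLOR_BOXES` and `color_score` are illustrative.

```python
import numpy as np

# HYPOTHETICAL inclusive (min, max) RGB ranges per color, for illustration only;
# the thresholds actually used in this work are those listed in Table 1.
COLOR_BOXES = {
    "white":       ((200, 255), (200, 255), (200, 255)),
    "red":         ((150, 255), (0, 90),    (0, 90)),
    "light brown": ((150, 220), (90, 160),  (40, 120)),
    "dark brown":  ((60, 150),  (30, 90),   (0, 70)),
    "blue gray":   ((0, 130),   (90, 160),  (110, 200)),
    "black":       ((0, 59),    (0, 59),    (0, 59)),
}


def color_score(rgb, mask, boxes=COLOR_BOXES, min_fraction=0.05):
    """Number (and names) of colors covering at least min_fraction of the lesion."""
    pixels = rgb[np.asarray(mask, dtype=bool)].astype(int)   # (N, 3) lesion pixels
    n = len(pixels)
    present = []
    for name, box in boxes.items():
        inside = np.ones(n, dtype=bool)
        for ch, (lo, hi) in enumerate(box):
            inside &= (pixels[:, ch] >= lo) & (pixels[:, ch] <= hi)
        if inside.sum() >= min_fraction * n:
            present.append(name)
    return len(present), present
```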

3.4 Lesion Classification

Once the feature set is built, the next step is to classify the lesions to detect melanoma. We use supervised learning, in which the desired outputs are known in advance, with a feedforward neural network trained by the backpropagation algorithm (BNN). The dataset is split into three subsets: 70% for training, 15% for validation and 15% for testing. The weights and biases are initialized with small random numbers, for example between –1 and +1. The network output is then calculated from these weights, biases and inputs and compared with the desired output; any mismatch generates an error signal, and the weights and biases are adjusted iteratively to minimize this error. In our work, the network consists of one input layer, one hidden layer with 100 neurons and one output layer. Target outputs are encoded as 1 for malignant and 0 for benign lesions.
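A minimal NumPy sketch of such a classifier follows: one hidden layer of 100 units, weights and biases initialized uniformly in [−1, +1], a 70/15/15 split and targets 1 (malignant) and 0 (benign). The tanh hidden activation, sigmoid output, cross-entropy error, full-batch gradient descent, learning rate, epoch count and feature standardization are all assumptions. MATLAB's training functions are not reproduced, and the validation subset is only evaluated here rather than used for early stopping. The data in the usage example are synthetic.

```python
import numpy as np


def split_70_15_15(n, rng):
    """Random 70/15/15 train/validation/test split of n sample indices."""
    idx = rng.permutation(n)
    n_tr, n_va = int(round(0.70 * n)), int(round(0.15 * n))
    return idx[:n_tr], idx[n_tr:n_tr + n_va], idx[n_tr + n_va:]


def train_bnn(X, y, hidden=100, lr=0.05, epochs=2000, seed=0):
    """One-hidden-layer network trained by full-batch backpropagation."""
    rng = np.random.default_rng(seed)
    W1 = rng.uniform(-1, 1, (X.shape[1], hidden))   # initial weights in [-1, 1]
    b1 = rng.uniform(-1, 1, hidden)
    W2 = rng.uniform(-1, 1, (hidden, 1))
    b2 = rng.uniform(-1, 1, 1)
    t = y.reshape(-1, 1).astype(float)
    for _ in range(epochs):
        h = np.tanh(X @ W1 + b1)                     # forward pass, hidden layer
        out = 1.0 / (1.0 + np.exp(-(h @ W2 + b2)))   # forward pass, output layer
        err = (out - t) / len(X)                     # error signal at the output
        grad_h = (err @ W2.T) * (1.0 - h ** 2)       # propagated back through tanh
        W2 -= lr * (h.T @ err)
        b2 -= lr * err.sum(axis=0)
        W1 -= lr * (X.T @ grad_h)
        b1 -= lr * grad_h.sum(axis=0)
    return W1, b1, W2, b2


def predict(params, X):
    """Label 1 (malignant) when the network output is at least 0.5."""
    W1, b1, W2, b2 = params
    out = 1.0 / (1.0 + np.exp(-(np.tanh(X @ W1 + b1) @ W2 + b2)))
    return (out.ravel() >= 0.5).astype(int)


if __name__ == "__main__":
    # Synthetic stand-in for 8 feature values of 80 benign / 120 malignant lesions.
    rng = np.random.default_rng(1)
    X = np.vstack([rng.normal(0.0, 1.0, (80, 8)), rng.normal(2.0, 1.0, (120, 8))])
    y = np.r_[np.zeros(80, dtype=int), np.ones(120, dtype=int)]
    tr, va, te = split_70_15_15(len(X), rng)
    mean, std = X[tr].mean(axis=0), X[tr].std(axis=0)   # scale with training statistics
    Xs = (X - mean) / std
    params = train_bnn(Xs[tr], y[tr])
    print("validation accuracy:", (predict(params, Xs[va]) == y[va]).mean())
    print("test accuracy:", (predict(params, Xs[te]) == y[te]).mean())
```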

4. RESULTS AND DISCUSSION

The proposed method was implemented in MATLAB R2016a on a Core i5 CPU with 8 GB of RAM. In this section, experimental results for the proposed approach are presented and discussed.

4.1 Feature Analysis

To illustrate the implementation results, the values of all features for ten benign and ten malignant sample images randomly selected from the PH2 dataset [38] are shown in Tables 2 and 3, respectively. Based on the feature values, images are classified as benign or malignant. Approximate ranges of feature values for benign and malignant lesions, determined from the feature values of the entire dataset (200 images), are given in the last rows of Tables 2 and 3, respectively. If the feature values of a particular image lie in the malignant ranges, the lesion is considered malignant, and vice versa. However, because not all feature values of every image lie in these ranges, an artificial neural network is employed for the overall decision.

Tables 2 and 3 show that malignant lesions have a higher asymmetry index than benign lesions along both image axes (>0.15 for most malignant lesions). The irregularity index with respect to the best-fit ellipse (BI1) behaves similarly to the asymmetry index: for malignant lesions, it is greater than 0.15 in nearly all images. The area-to-perimeter ratio (BI2) increases with malignancy, whereas the compactness index (BI3) decreases. In our experiment, most malignant cases have a BI2 score greater than 90 and a BI3 score less than 0.8. Both diameter parameters (D1, D2) are larger for malignant lesions, with approximate thresholds of about 350 pixels for the average diameter and 90 pixels for the difference between principal axes. In addition, malignant lesions are found to contain three or more colors.
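The approximate thresholds quoted above can be written as simple per-feature rules. The sketch below only flags, for each feature, whether a value lies on the malignant side; it does not make the final decision, which in this work is left to the neural network because not every image falls inside all ranges. The dictionary keys, function name and example values are hypothetical.

```python
# Approximate malignant-side thresholds quoted in Section 4.1 (D1 and D2 in pixels).
MALIGNANT_RULES = {
    "AS1": lambda v: v > 0.15,
    "AS2": lambda v: v > 0.15,
    "BI1": lambda v: v > 0.15,
    "BI2": lambda v: v > 90,
    "BI3": lambda v: v < 0.8,
    "C":   lambda v: v >= 3,
    "D1":  lambda v: v > 350,
    "D2":  lambda v: v > 90,
}


def malignant_flags(features):
    """Return, per feature, whether its value lies in the malignant range."""
    return {name: rule(features[name]) for name, rule in MALIGNANT_RULES.items()}


# Hypothetical feature values for one lesion.
example = {"AS1": 0.22, "AS2": 0.12, "BI1": 0.18, "BI2": 95,
           "BI3": 0.75, "C": 3, "D1": 300, "D2": 100}
flags = malignant_flags(example)
print(sum(flags.values()), "of", len(flags), "features on the malignant side")
```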

The percentage of images (out of 80 benign and 120 malignant) lying within the defined benign and malignant threshold ranges is presented for each feature in Figs. 7–13. For five features (BI1, BI2, C, D1, D2), more than 64% of benign images and 65% of malignant images lie in the corresponding benign and malignant ranges, respectively, whereas for AS1, AS2 and BI3, more than 59% of benign images and 55% of malignant images lie in these ranges. The difference between principal axes (D2) gives the highest accuracy among all features (78.5% for benign and 78% for malignant), followed by the color feature (83% for benign and 64% for malignant) and the area-to-perimeter ratio BI2 (77% for benign and 67% for malignant). Since each of these features takes values in two distinct ranges for malignant and benign images, it can distinguish malignant from benign lesions and can therefore be considered a pertinent feature.

4.2 Classification Accuracy of Overall System

The performance of the diagnostic system is assessed with accuracy (ACC), sensitivity (SEN) and specificity (SPC), which are calculated using Eqs. (10), (11) and (12), respectively:

\[ ACC = \frac{TP + TN}{TP + TN + FP + FN}, \tag{10} \]

\[ SEN = \frac{TP}{TP + FN}, \tag{11} \]

\[ SPC = \frac{TN}{TN + FP}. \tag{12} \]

Here, TP (true positive) is the number of malignant lesions correctly classified as malignant, FN (false negative) is the number of malignant lesions incorrectly classified as benign, TN (true negative) is the number of benign lesions correctly classified as benign, and FP (false positive) is the number of benign lesions incorrectly classified as malignant. Table 4 shows the classification performance of the proposed approach as a confusion matrix: the system classifies lesions with 98% overall accuracy, and its accuracy on malignant lesions (sensitivity) is 97.5%.
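Eqs. (10)–(12) amount to three ratios, sketched below in plain Python with malignant as the positive class. The counts in the example are derived arithmetically from the reported percentages and the class sizes (117/120 = 97.5%, 79/80 = 98.75%, 196/200 = 98%); they are not copied from Table 4. The function name is hypothetical.

```python
def diagnostic_metrics(tp, fn, tn, fp):
    """Accuracy, sensitivity and specificity with malignant as the positive class."""
    acc = (tp + tn) / (tp + tn + fp + fn)   # Eq. (10)
    sen = tp / (tp + fn)                    # Eq. (11): malignant lesions correctly detected
    spc = tn / (tn + fp)                    # Eq. (12): benign lesions correctly detected
    return acc, sen, spc


# Hypothetical counts: 117 of 120 malignant and 79 of 80 benign classified correctly.
print(diagnostic_metrics(tp=117, fn=3, tn=79, fp=1))  # (0.98, 0.975, 0.9875)
```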

4.3 Accuracy of the Proposed Features

Each individual feature considered in this research contributes significantly to the classification process. The classification accuracy of each individual feature is also determined with the neural network, and the percentage of images (malignant and benign) correctly classified by each feature is presented in Table 5. Each feature provides over 72% classification accuracy. D2 classifies lesions with the highest accuracy (90%) among all features, followed by BI2 (87.5%). Table 5 also lists the sensitivity and specificity: individually, every feature diagnoses malignant lesions with over 72% accuracy and benign lesions with over 81% accuracy. Overall, these results indicate that the proposed features are promising for effectively identifying malignant lesions.

4.4 Comparison with Other Published Works

A comparison of the proposed approach with relevant published works is given in Table 6. For all previous methods listed in the table, an increase in sensitivity (percentage of correctly classified malignant images) comes with a decrease in specificity (percentage of correctly classified benign images), and vice versa. In contrast, the proposed features achieve satisfactory results for both sensitivity and specificity.

5. CONCLUSION AND FUTURE WORKS

Because the number of melanoma patients is growing rapidly, skin cancer has become an active research field, and developing new techniques for the early diagnosis of melanoma has become very important. Several automated methods for skin lesion detection have been reported in the literature. However, most of these works use numerous features for classification, many of which do not effectively distinguish benign from malignant lesions. In this study, a pertinent feature extraction approach based on the ABCD rule is presented for the classification of dermoscopy images. We proposed seven features associated with the asymmetry, border irregularity, color and diameter of a skin lesion, and we reported the effectiveness and classification accuracy of the entire system as well as of the individual features. The results indicate that the proposed features classify lesions with a satisfactory level of accuracy, sensitivity and specificity. In our system, the same trained BNN weights are used for all images, and the method was verified on all lesion types in the PH2 dataset. Given this performance, the approach could be an effective and accurate tool for melanoma detection. The classification results may be improved further with deep neural networks, which can learn from large amounts of data; therefore, deep learning algorithms will be considered for skin lesion classification in future work.

REFERENCES

P. Trovitch, A. Gupte, and K. Ciftci, “Early detection and treatment of skin cancer,” Turk. J. Cancer 32 (4), 129–137 (2002).

American Cancer Society, “Melanoma Skin Cancer” [Online]. Available: https://www.cancer.org/cancer/melanoma-skin-cancer. [Accessed: 25-Jun-2018].

H. Kittler, H. Pehamberger, K. Wolff, and M. Binder, “Diagnostic accuracy of dermoscopy,” Lancet Oncol. 3 (3), 159–165 (2002).

Canadian Dermatology Association, “Melanoma” [Online]. Available: https://dermatology.ca/public-patients/skin/melanoma. [Accessed: 11-Jun-2018].

N. Razmjooy, B. Somayeh Mousavi, F. Soleymani, and M. Hosseini Khotbesara, “A computer-aided diagnosis system for malignant melanomas,” Neural Comput. Appl. 23 (7–8), 2059–2071 (2013).

T. Lee, V. Ng, R. Gallagher, A. Coldman, and D. McLean, “Dullrazor: A software approach to hair removal from images,” Comput. Biol. Med. 27 (6), 533–543 (1997).

R. Sumithra, M. Suhil, and D. S. Guru, “Segmentation and classification of skin lesions for disease diagnosis,” Procedia Comput. Sci. 45, 76–85 (2015).

A. Wong, J. Scharcanski, and P. Fieguth, “Automatic skin lesion segmentation via iterative stochastic region merging,” IEEE Trans. Inf. Technol. Biomed. 15 (6), 929–936 (2011).

Md. M. Rahman, P. Bhattacharya, and B. C. Desai, “A multiple expert-based melanoma recognition system for dermoscopic images of pigmented skin lesions,” in Proc. 8th IEEE Int. Conf. on BioInformatics and BioEngineering (BIBE 2008) (Athens, Greece, 2008), IEEE, pp. 1–6.

H. Zhou, G. Schaefer, A. H. Sadka, and M. E. Celebi, “Anisotropic mean shift based fuzzy C-means segmentation of dermoscopy images,” IEEE J. Sel. Top. Signal Process. 3 (1), 26–34 (2009).

T. F. Chan and L. A. Vese, “Active contours without edges,” IEEE Trans. Image Process. 10 (2), 266–277 (2001).

A. B. Cognetta, T. Vogt, M. Landthaler, O. Braun-Falco, and G. Plewig, “The ABCD rule of dermatoscopy: High prospective value in the diagnosis of doubtful melanocytic skin lesions,” J. Am. Acad. Dermatol. 30 (4), 551–559 (1994).

N. R. Abbasi, et al., “Early diagnosis of cutaneous melanoma: revisiting the ABCD criteria,” J. Am. Med. Assoc. 292 (22), 2771–2776 (2004).

I. Zalaudek, et al., “Three-point checklist of dermoscopy: An open internet study,” Br. J. Dermatol. 154 (3), 431–437 (2006).

J. S. Henning, et al., “The CASH (color, architecture, symmetry, and homogeneity) algorithm for dermoscopy,” J. Am. Acad. Dermatol. 56 (1), 45–52 (2007).

Y. Chang, R. J. Stanley, R. H. Moss, and W. V. Stoecker, “A systematic heuristic approach for feature selection for melanoma discrimination using clinical images,” Skin Res. Technol. 11 (3), 165–178 (2005).

Z. She, Y. Liu, and A. Damatoa, “Combination of features from skin pattern and ABCD analysis for lesion classification,” Skin Res. Technol. 13 (1), 25–33 (2007).

P. G. Cavalcanti and J. Scharcanski, “Macroscopic pigmented skin lesion segmentation and its influence on lesion classification and diagnosis,” in Color Medical Image Analysis, Ed. by M. Celebi and G. Schaefer, Lecture Notes in Computational Vision and Biomechanics (Springer, Dordrecht, 2013), Vol. 6, pp. 15–39.

R. LeAnder, P. Chindam, M. Das, and S. E. Umbaugh, “Differentiation of melanoma from benign mimics using the relative-color method,” Skin Res. Technol. 16 (3), 297–304 (2010).

A. H. AlAsadi and B. M. Al-Safy, “Early detection and classification of melanoma skin cancer,” Int. J. Inf. Technol. Comput. Sci. 7 (12), 67–74 (2015).

M. Rastgoo, R. Garcia, O. Morel, and F. Marzani, “Automatic differentiation of melanoma from dysplastic nevi,” Comput. Med. Imaging Graphics 43, 44–52 (2015).

K. Mokrani and R. Kasmi, “Classification of malignant melanoma and benign skin lesions: implementation of automatic ABCD rule,” IET Image Process. 10 (6), 448–455 (2016).

L. K. Ferris, et al., “Computer-aided classification of melanocytic lesions using dermoscopic images,” J. Am. Acad. Dermatol. 73 (5), 769–776 (2015).

M. A. Sheha, M. S. Mabrouk, and A. Sharawy, “Automatic detection of melanoma skin cancer using texture analysis,” Int. J. Comput. Appl. 42 (20), 22–26 (2012).

J. Jaworek-Korjakowska, “Computer-aided diagnosis of micro-malignant melanoma lesions applying Support Vector Machines,” BioMed Res. Int. 2016, Article ID 4381972, 8 pages (2016).

R. Garnavi, M. Aldeen, and J. Bailey, “Computer-aided diagnosis of melanoma using border- and wavelet-based texture analysis,” IEEE Trans. Inf. Technol. Biomed. 16 (6), 1239–1252 (2012).

H. Iyatomi, et al., “An improved Internet-based melanoma screening system with dermatologist-like tumor area extraction algorithm,” Comput. Med. Imaging Graphics 32 (7), 566–579 (2008).

I. Maglogiannis and C. N. Doukas, “Overview of advanced computer vision systems for skin lesions characterization,” IEEE Trans. Inf. Technol. Biomed. 13 (5), 721–733 (2009).

K. M. Clawson, P. Morrow, B. Scotney, J. McKenna, and O. Dolan, “Analysis of pigmented skin lesion border irregularity using the harmonic wavelet transform,” in Proc. 2009 13th International Machine Vision and Image Processing Conference (IMVIP 2009) (Dublin, Ireland, 2009), IEEE, pp. 18–23.

M. E. Celebi, et al., “Automatic detection of blue-white veil and related structures in dermoscopy images,” Comput. Med. Imaging Graphics 32 (8), 670–677 (2008).

R. B. Oliveira, N. Marranghello, A. S. Pereira, and J. M. R. S. Tavares, “A computational approach for detecting pigmented skin lesions in macroscopic images,” Expert Syst. Appl. 61, 53–63 (2016).

F. Dalila, A. Zohra, K. Reda, and C. Hocine, “Segmentation and classification of melanoma and benign skin lesions,” Optik (Int. J. Light Electron Opt.) 140, 749–761 (2017).

M. Silveira, et al., “Comparison of segmentation methods for melanoma diagnosis in dermoscopy images,” IEEE J. Sel. Top. Signal Process. 3 (1), 35–45 (2009).

M. E. Roberts and E. Claridge, “An artificially evolved vision system for segmenting skin lesion images,” in Medical Image Computing and Computer-Assisted Intervention – MICCAI 2003, Proc. 6th Int. Conf., Ed. by R. E. Ellis and T. M. Peters, Lecture Notes in Computer Science (Springer, Berlin, Heidelberg, 2003), Vol. 2878, pp. 655–662.

P. G. Cavalcanti, J. Scharcanski, and G. V. G. Baranoski, “A two-stage approach for discriminating melanocytic skin lesions using standard cameras,” Expert Syst. Appl. 40 (10), 4054–4064 (2013).

L. Yu, H. Chen, Q. Dou, J. Qin, and P. Heng, “Automated melanoma recognition in dermoscopy images via very deep residual networks,” IEEE Trans. Med. Imaging 36 (4), 994–1004 (2017).

J. Hagerty, et al., “Deep learning and handcrafted method fusion: higher diagnostic accuracy for melanoma dermoscopy images,” IEEE J. Biomed. Health Inf. (2019).

T. Mendonca, P. M. Ferreira, J. S. Marques, A. R. S. Marcal, and J. Rozeira, “PH2 – A dermoscopic image database for research and benchmarking,” in Proc. 35th Annual Int. Conf. IEEE Engineering in Medicine and Biology Society (EMBS 2013) (Osaka, Japan, 2013), IEEE, pp. 5437–5440.

K. Kimura, S. Kikuchi, and S.-I. Yamasaki, “Accurate root length measurement by image analysis,” Plant Soil 216 (1–2), 117–127 (1999).

B. K. Rao, et al., “Can early malignant melanoma be differentiated from atypical melanocytic nevi by in vivo techniques? Part I. Clinical and dermoscopic characteristics,” Skin Res. Technol. 3 (1), 8–14 (1997).

Ethics declarations

The authors declare that they have no conflicts of interest. The article does not contain any studies involving human participants performed by any of the authors.

Additional information

Sharmin Majumder received her B.Sc. in electrical and electronic engineering (EEE) from Chittagong University of Engineering and Technology (CUET), Bangladesh, and her M.Sc. in EEE from the same university. She is currently an Assistant Professor in the Department of EEE, CUET. Her research interests include signal and image processing, biomedical imaging, neuroengineering, machine learning, and human-computer interaction.

Muhammad Ahsan Ullah received his B.Sc. degree in electrical and electronic engineering from Chittagong University of Engineering and Technology, Bangladesh, in 2002, his M.E. degree in Electronic and Information Engineering from Kyung Hee University, Republic of Korea, in 2007, and his Ph.D. degree in Information and Control Engineering from Nagaoka University of Technology in 2011. His research interests include signal processing, coding theory, threshold decoding, MC-CDMA, OFDMA, MIMO, control systems, embedded system design, and image processing.

About this article

Cite this article

Majumder, S., Ullah, M.A. A Computational Approach to Pertinent Feature Extraction for Diagnosis of Melanoma Skin Lesion. Pattern Recognit. Image Anal. 29, 503–514 (2019). https://doi.org/10.1134/S1054661819030131

DOI: https://doi.org/10.1134/S1054661819030131