Abstract

We consider a zero-sum differential game on a finite interval in which the players not only control the system’s trajectory but also influence the terminal time of the game. The time of early termination is assumed to be an absolutely continuous random variable whose conditional density is determined by bounded measurable functions of time assigned by the players (the intensities with which each player influences the termination of the game). The payoff function may depend on the terminal time of the game, on the position of the system at this time, and on the player who initiates the termination. The strategies are formalized by using nonanticipating càdlàg processes. The existence of the game value is shown under the Isaacs condition. For this, the original game is approximated by an auxiliary game based on a continuous-time Markov chain, which depends on the controls and intensities of the players. Based on the strategies optimal in this Markov game, a control procedure with a stochastic guide is proposed for the original game. It is shown that, as the number of points in the Markov game increases without bound, this procedure yields a near-optimal strategy in the original game.



INTRODUCTION

This work is devoted to the development of methods for solving differential games with random terminal time. We consider a zero-sum differential game on a finite interval \([0;T]\) in which the players can influence, in a nonanticipating way, the density of the moment of early (before the time \(T\)) termination of the game: at each time, each player sets their own part of the intensity (conditional density) of termination. The cost function depends on the terminal time, on the position of the system at that moment, and on the player who initiates the early termination; thus, this function becomes a random variable. For this reason, the actions of the players are considered to be aimed at optimizing the expected value of the cost function. Each player can still rely on their knowledge of the position of the system when assigning their own control and intensity during the game. It should also be noted, however, that the game may be played repeatedly and the actions of the players may change over such a series of games; in particular, their actions may be nondeterministic, resulting from certain random processes. That is why we assume, on the one hand, that the players can choose the distributions of their controls rather than the controls per se. On the other hand, by collecting statistics over a sufficiently long series of games, the players can recover the distribution used by the opponent with any predetermined accuracy; in particular, even knowing nothing during the game about the intensity of early termination already assigned by the opponent, each of them can recover the probabilistic law governing it.

In connection with the above, we assume in this paper that the strategies are random processes that depend in a nonanticipating way on the realized trajectory of the system and, in the case of a counterstrategy, on the actions of the opponent. A detailed definition of strategies and counterstrategies is given in Section 2; here we only note that these definitions are not new in principle. For a continuous-time Markov chain, such general definitions can be found, for example, in [26], where more constructive special cases of the definitions are also described in detail. For example, in our setting, each player can use the classical deterministic strategies: piecewise continuous program strategies or piecewise constant positional strategies. We also note that the requirement imposed in this paper that the strategies employ only càdlàg processes is rather technical; to some extent, it is justified by the facts that in control theory piecewise continuous controls are everywhere dense, whereas in the theory of differential games, within the classical setting [20, 30, 21], piecewise constant positional controls of the players implement the value function with any predetermined accuracy.

Let us discuss similar settings studied in the literature. The general statement of a game in which a player controls the moment of exiting the process goes back to Dynkin’s classic paper [10]. In such games (Dynkin games, stopping games), it is usually assumed that there is some conflict-control process and at least one of the players must choose by their actions a convenient time for stopping the entire game. Such game settings have numerous applications, in particular, in financial mathematics (see, for example, [1, 7, 15]); for them, the questions of the existence and description of the value of the game, the construction of optimal strategies, and the structure of such strategies are investigated. Starting with the classical works [5, 6], the dynamics of the game is usually described by a stochastic differential equation, but Lévy–Feller processes [4], as well as discrete-time (see, for example, [24, 29]) or continuous-time [11, 22] Markov chains, are also used. Finally, a differential game in which the distribution of the terminal time (and hence the intensity) is known to the players in advance was considered, for example, in [13, 23].

In this paper, for the game under consideration, we prove the existence of both the value and approximately optimal strategies that implement the value with any predetermined accuracy. The construction of the strategies is based on the technique of a stochastic guide; the idea of the technique is as follows. For any motion \(y\) of the original conflict-control system, a player uniquely reconstructs step-by-step a certain distribution \(\mathbb{P}^{Z}\) of auxiliary motions \(z\) calculated by this player. At each time \(t_{k}\) of some partition, the player assigns for the following time interval a control \(\bar{u}\) using the realized position \(z(t_{k})\), which is in fact a random variable, by the rule

where \({u}^{\mathrm{to}\,z}\) is a control that maximally shifts the position of the original system to the point \(z\). This technique solves the original problem and goes back conceptually to the classical methods for differential games [20] proposed by Krasovskii and Subbotin. For example, the rule \(\bar{u}^{\mathrm{to}\,z}\) is fundamental to the extremal shift method, and the rule (0.1) with substitution \(y\equiv z\) is used in the positional formalization of a differential game introduced by Krasovskii and Subbotin in [20]. The method of control with a guide proposed by the same authors originally presumed a one-to-one dependence of \(z\) on \(y\), but later a nondeterministic rule was proposed for constructing \(z\) in Krasovskii’s paper [17]. In [18, 19] a stochastic guide was constructed with the use of the Itô differential equation. In [2, 3] the trajectory of a continuous-time Markov game is considered as \(z\). In the present paper, \(z\) is also generated by an approximately optimal strategy in a continuous-time Markov game. In particular, the question of existence of the value in the original game is reduced to a similar fact for a Markov game, which was obtained in [14].
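To make the extremal shift concrete, the following Python sketch computes, on finite grids of control values, a control that pushes the current position \(y\) towards a guide point \(z\) as strongly as possible against any reply of the opponent, namely a minimizer over \(u\) of \(\max_{v}\langle y-z,f(t,y,u,v)\rangle\). This is the textbook Krasovskii–Subbotin form of the shift, not necessarily the paper's rule (0.1); the dynamics `f`, the grids `U` and `V`, and the helper names are hypothetical, and replacing the compact sets \(\mathbb{U}\), \(\mathbb{V}\) by finite grids is a simplification.

```python
# Extremal shift towards a guide point on finite control grids (illustrative sketch).
# The dynamics f and the grids U, V are hypothetical examples.

def inner(a, b):
    """Euclidean inner product of two equal-length sequences."""
    return sum(x * y for x, y in zip(a, b))


def extremal_shift_control(t, y, z, f, U, V):
    """Return the u in U minimizing max_{v in V} <y - z, f(t, y, u, v)>,
    i.e. the control that drives y towards z hardest against any reply v."""
    gap = [yi - zi for yi, zi in zip(y, z)]

    def worst_case(u):
        return max(inner(gap, f(t, y, u, v)) for v in V)

    return min(U, key=worst_case)


# Hypothetical scalar dynamics dy/dt = u + v, u in {-1, 0, 1}, v in {-0.5, 0.5}.
def f(t, y, u, v):
    return (u + v,)


U = [-1, 0, 1]
V = [-0.5, 0.5]
print(extremal_shift_control(0.0, (0.0,), (1.0,), f, U, V))   # 1: guide lies above y
print(extremal_shift_control(0.0, (0.0,), (-1.0,), f, U, V))  # -1: guide lies below y
```

Using the worst case over \(v\) is what makes the shift robust: whatever the opponent does on the next step, the selected \(u\) gives the smallest possible growth rate of \(\langle y-z,\cdot\rangle\).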

The paper is organized as follows. In Section 1 we formulate the original differential game and state (up to the definition of the strategies) Theorem 1, which guarantees the existence of the value in this game and is the main result of the paper. In Section 2 we give some basic definitions from the theory of random processes and introduce formalizations of the strategies used in the paper. In the next section, we extend the dynamics of the original game and represent the original objective functional as a discounted payoff. Section 4 is devoted to an auxiliary Markov game. In Section 5 the technique of a stochastic guide for the first player is considered: a random process (double game) is introduced, in which the first component corresponds to the state of the original game and the second component corresponds to the state of the stochastic guide; then, with the use of an extremal shift, the control of the first player is written in each component and a fictitious control is written for the second player in the Markov game. The last section is devoted to the proof of the theorem, which reduces to estimating the difference between the components of the random process, i.e., the trajectories of the original game and of the stochastic guide.

1. PROBLEM STATEMENT

1.1. Dynamics of the original game

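Consider a conflict-control system in \(\mathbb{R}^{d}\)
\[
\dot{y}(t)=f\big(t,y(t),u(t),v(t)\big),\qquad u(t)\in\mathbb{U},\quad v(t)\in\mathbb{V},\quad t\in[0;T]. \tag{1.1}
\]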

Assume that \(\mathbb{U}\) and \(\mathbb{V}\) are compact sets in some finite-dimensional Euclidean spaces and the function \(f:\mathbb{R}_{+}\times\mathbb{R}^{d}\times{{\mathbb{U}}}\times{{\mathbb{V}}} \to\mathbb{R}^{d}\) is Lipschitz in all variables. We also require the saddle point condition in the small game (the Isaacs condition) [20, 21]: for all \(t\in[0;T]\) and \(x,w\in\mathbb{R}^{d}\),
\[
\min_{u\in\mathbb{U}}\max_{v\in\mathbb{V}}\langle w,f(t,x,u,v)\rangle=\max_{v\in\mathbb{V}}\min_{u\in\mathbb{U}}\langle w,f(t,x,u,v)\rangle. \tag{1.2}
\]
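The Isaacs condition can be probed numerically by comparing the upper and lower values of the small game with payoff \(\langle w,f(t,x,u,v)\rangle\). The Python sketch below does this on finite grids, which only approximate the compact sets \(\mathbb{U}\) and \(\mathbb{V}\); both example right-hand sides are hypothetical. A separable right-hand side \(f=f_{1}(u)+f_{2}(v)\) always satisfies the condition, whereas the bilinear one \(uv\) on \(\{-1,1\}^{2}\) does not.

```python
# Finite-grid check of the Isaacs (saddle point) condition of the small game.
# The right-hand sides and grids are hypothetical examples.

def inner(a, b):
    return sum(x * y for x, y in zip(a, b))


def isaacs_gap(f, t, x, w, U, V):
    """min_u max_v <w, f> minus max_v min_u <w, f> over finite grids U, V.
    The difference is always >= 0; the Isaacs condition asks for it to vanish."""
    upper = min(max(inner(w, f(t, x, u, v)) for v in V) for u in U)
    lower = max(min(inner(w, f(t, x, u, v)) for u in U) for v in V)
    return upper - lower


def separable(t, x, u, v):   # f = f1(u) + f2(v): the condition holds
    return (u + v,)


def bilinear(t, x, u, v):    # f = u * v on {-1, 1}^2: the condition fails
    return (u * v,)


grid = [-1, 1]
print(isaacs_gap(separable, 0.0, (0.0,), (1.0,), grid, grid))  # 0.0
print(isaacs_gap(bilinear, 0.0, (0.0,), (1.0,), grid, grid))   # 2.0
```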

In addition to controlling the motion in \(\mathbb{R}^{d}\), each player can influence the terminal time of the game. Let us fix closed intervals \([\varphi_{-};\varphi_{+}],[\psi_{-};\psi_{+}]\subset\mathbb{R}_{+}\), which are sets of parameters that control the intensity of game termination on the part of each player. Assume that the first and second players have chosen piecewise continuous functions \([0;T]\ni t\mapsto\varphi(t)\in[\varphi_{-};\varphi_{+}]\) and \([0;T]\ni t\mapsto\psi(t)\in[\psi_{-};\psi_{+}]\), respectively. Then, for almost all \(t\in[0;T)\), the conditional probability of termination of the game on the interval \([t;t+\Delta t)\) if the game was still continuing at the time \(t\) is \((\varphi(t)+\psi(t))\Delta t+o(\Delta t)\). Solving the corresponding differential equation for the total intensity \(\varphi+\psi\), we find that, for any time \(\tau\in[0;T)\), the probability of termination of the game before the time \(\tau\) is

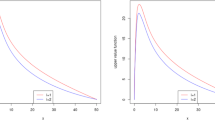

in particular, for \(\varphi(t)+\psi(t)\equiv C\), the terminal time of the game has the same distribution as \(\min(\theta,T)\), where \(\theta\) is distributed exponentially with parameter \(C\). Using (1.3) and the corresponding differential equations for the probabilities of termination of the game before the moment \(\tau<T\) on the initiative of the first and second player, respectively, we obtain for them the values
\[
\int_{0}^{\tau}\varphi(t)\,e^{-\int_{0}^{t}(\varphi(s)+\psi(s))\,ds}\,dt,\qquad \int_{0}^{\tau}\psi(t)\,e^{-\int_{0}^{t}(\varphi(s)+\psi(s))\,ds}\,dt. \tag{1.4}
\]

As a corollary, we find that, in the case of termination of the game at a time \(t<T\), the player responsible for this is the first with probability \(\varphi(t)/(\varphi(t)+\psi(t))\) and the second with probability \(\psi(t)/(\varphi(t)+\psi(t))\). It remains to formalize formulas (1.3) and (1.4).
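As a numerical cross-check of (1.3) and (1.4), the Python sketch below evaluates, by the midpoint rule, the probability \(1-\exp\big(-\int_{0}^{\tau}(\varphi+\psi)\,dt\big)\) of termination before \(\tau\) and the probabilities \(\int_{0}^{\tau}\varphi(t)S(t)\,dt\) and \(\int_{0}^{\tau}\psi(t)S(t)\,dt\) that the first or the second player initiates it, where \(S(t)=\exp\big(-\int_{0}^{t}(\varphi+\psi)\,ds\big)\) is the probability that the game is still running at \(t\). The intensities in the usage example are hypothetical; the last two quantities should add up to the first, in agreement with the corollary on the initiator.

```python
import math


def termination_probabilities(phi, psi, tau, n=10_000):
    """Midpoint-rule approximation of
    total = 1 - exp(-int_0^tau (phi + psi) dt),
    p1    = int_0^tau phi(t) S(t) dt,  p2 = int_0^tau psi(t) S(t) dt,
    where S(t) = exp(-int_0^t (phi + psi) ds) is the survival probability."""
    h = tau / n
    cum = 0.0                  # running value of int_0^t (phi + psi) ds
    p1 = p2 = 0.0
    for k in range(n):
        t = (k + 0.5) * h      # midpoint of the k-th cell
        a, b = phi(t), psi(t)
        survival = math.exp(-(cum + 0.5 * h * (a + b)))  # S at the midpoint
        p1 += a * survival * h
        p2 += b * survival * h
        cum += h * (a + b)
    return 1.0 - math.exp(-cum), p1, p2


# Hypothetical intensities: phi(t) = 1 + t, psi(t) = 0.5, so int_0^1 (phi + psi) = 2.
total, p1, p2 = termination_probabilities(lambda t: 1.0 + t, lambda t: 0.5, 1.0)
print(total, 1.0 - math.exp(-2.0))  # both approximately 0.8647
print(p1 + p2)                      # approximately equal to total
```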

For this, we equip the square \([0;1]^{2}\) with the \(\sigma\)-algebra \(\mathcal{B}([0;1]^{2})\) of Borel sets and consider the Lebesgue measure \(\lambda\) on it. We obtain the probability space
\[
\big([0;1]^{2},\mathcal{B}([0;1]^{2}),\lambda\big),
\]

which assigns a random number \(\omega_{i}\) to each player. Now, for all Borel mappings \(\varphi:[0;T)\to[\varphi_{-};\varphi_{+}]\) and \(\psi:[0;T)\to[\psi_{-};\psi_{+}]\), we introduce random variables \(\omega\mapsto\theta_{1}(\omega)\) and \(\omega\mapsto\theta_{2}(\omega)\) by the following rule: for all \(\omega=(\omega_{1},\omega_{2})\in[0;1]^{2}\),

Note that, for any \(\tau\in[0;T)\), the probability of the event \(\theta_{1}<\tau\) coincides with (1.3) in the case \(\psi\equiv 0\). Similarly, the probability of the event \(\theta_{2}<\tau\) coincides with (1.3) in the case \(\varphi\equiv 0\). Thus, the random variable \(\theta_{i}\) coincides with the time of the possible termination of the game on the initiative of the \(i\)th player in the case of zero intensity of the opponent. Moreover, for any mappings \(\varphi:[0;T)\to[\varphi_{-};\varphi_{+}]\) and \(\psi:[0;T)\to[\psi_{-};\psi_{+}]\), the random variables \(\theta_{1}\) and \(\theta_{2}\) are independent because the random variables \(\omega_{1}\) and \(\omega_{2}\) are independent; hence, for the time of the early termination of the game
\[
\theta_{\min}\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}\min(\theta_{1},\theta_{2}), \tag{1.6}
\]

which is a random variable, the probability of the event \(\theta_{\min}<\tau\) is found by formula (1.3), and the probabilities of the events \(\theta_{1}=\theta_{\min}<\tau\) and \(\theta_{2}=\theta_{\min}<\tau\) (early termination before \(\tau\) initiated by the first and by the second player, respectively) are found by formula (1.4), as required. In what follows, we suppose that \((\omega_{1},\omega_{2})\) is chosen before the start of the game independently of the actions of the players, who have no information about \((\omega_{1},\omega_{2})\) before the terminal time. Thus, the players know formulas (1.3) and (1.4) and know (or hypothesize) their own intensity and/or the intensity of the opponent, but have no other information about the terminal time \(\theta_{\min}\).
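The rule that defines \(\theta_{1}\) and \(\theta_{2}\) from \((\omega_{1},\omega_{2})\) is not reproduced here; the following Python sketch uses one standard construction with the properties described above, inverse-transform sampling, and restricts itself to constant intensities for simplicity. For a constant \(\varphi>0\) it sets \(\theta_{1}=\min\big(-\ln(1-\omega_{1})/\varphi,\,T\big)\), and similarly for \(\theta_{2}\); it then compares Monte Carlo frequencies with \(1-e^{-(\varphi+\psi)\tau}\) and with the probability \(\frac{\varphi}{\varphi+\psi}\big(1-e^{-(\varphi+\psi)T}\big)\) that the first player terminates the game early. Function names and parameter values are hypothetical.

```python
import math
import random


def early_termination(omega, phi, psi, T):
    """Constant intensities phi, psi >= 0 (a simplifying assumption).
    Map omega = (omega1, omega2) in [0, 1)^2 to (theta1, theta2, theta_min) via
    theta_i = min(E_i / rate_i, T), where E_i = -log(1 - omega_i) ~ Exp(1)."""
    def theta(w, rate):
        if rate == 0:
            return T                          # this player never terminates early
        return min(-math.log(1.0 - w) / rate, T)

    t1, t2 = theta(omega[0], phi), theta(omega[1], psi)
    return t1, t2, min(t1, t2)


def monte_carlo(phi, psi, T, tau, n=100_000, seed=0):
    """Empirical P(theta_min < tau) and P(player 1 terminates the game before T)."""
    rng = random.Random(seed)
    before_tau = first = 0
    for _ in range(n):
        t1, t2, t_min = early_termination((rng.random(), rng.random()), phi, psi, T)
        before_tau += t_min < tau
        first += t1 < T and t1 <= t2
    return before_tau / n, first / n


phi, psi, T, tau = 1.0, 2.0, 1.0, 0.5
emp_tau, emp_first = monte_carlo(phi, psi, T, tau)
print(emp_tau, 1 - math.exp(-(phi + psi) * tau))
print(emp_first, phi / (phi + psi) * (1 - math.exp(-(phi + psi) * T)))
```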

For simplicity of notation, we introduce the sets of control parameters of the first and second players: \(\bar{{\mathbb{U}}}\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}{{\mathbb {U}}}\times[\varphi_{-};\varphi_{+}]\) and \(\bar{{\mathbb{V}}}\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}{{\mathbb {V}}}\times[\psi_{-};\psi_{+}]\).

1.2. Goals of the players and the value of the game

Let us define the goals of the players. Let functions \(\sigma_{0}:\mathbb{R}^{d}\to\mathbb{R}\), \(\sigma_{1}:[0;T]\times\mathbb{R}^{d}\to\mathbb{R}\), and \(\sigma_{2}:[0;T]\times\mathbb{R}^{d}\to\mathbb{R}\) be given. Assume that these functions are locally Lipschitz in \((t,x)\) and bounded in absolute value by \(1\). Let \(\sigma_{0}(y(T))\) be the payoff of the first player to the second if there has been no early termination, i.e., the game has terminated at the time \(T\). Let the number \(\sigma_{1}(\theta_{1},y(\theta_{1}))\) be the payoff of the first player to the second if the first player has initiated an early termination of the game (at the time \(\theta_{1}\)). Similarly, \(\sigma_{2}(\theta_{2},y(\theta_{2}))\) is the payoff of the first player to the second if the termination of the game (at the time \(\theta_{2}\)) has been initiated by the second player. Thus, for a known trajectory \(y(\cdot)\) and known actions \((\bar{u},\bar{v})(\cdot)\) of the players, the objective function is defined for almost all \(\omega\in[0;1]^{2}\); it is the random variable
\[
J(\omega)=\begin{cases}
\sigma_{0}\big(y(T)\big), & \theta_{\min}(\omega)=T,\\
\sigma_{1}\big(\theta_{1}(\omega),y(\theta_{1}(\omega))\big), & \theta_{1}(\omega)=\theta_{\min}(\omega)<T,\\
\sigma_{2}\big(\theta_{2}(\omega),y(\theta_{2}(\omega))\big), & \theta_{2}(\omega)=\theta_{\min}(\omega)<T.
\end{cases}
\]

Since the players do not know the outcome \(\omega\in[0;1]^{2}\), they can only optimize the expected value of the objective function \(J\)

which, similarly to the trajectory \(y(\cdot)\) of system (1.1), is uniquely recovered from the players’ controls \(\bar{u}(\cdot)\) and \(\bar{v}(\cdot)\).
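The objective function and its expectation can be sketched in Python as follows, under the simplifying assumptions of constant positive intensities and a fixed trajectory \(y(\cdot)\) (in the game, \(y\) itself depends on the controls). The function `payoff` evaluates the realized value of \(J\) from \(\theta_{1}\) and \(\theta_{2}\), and `expected_payoff` averages it over independent exponential clocks truncated at \(T\). With the hypothetical choice \(\sigma_{0}\equiv 0\), \(\sigma_{1}\equiv 1\), \(\sigma_{2}\equiv -1\), the exact value is \(\frac{\varphi-\psi}{\varphi+\psi}\big(1-e^{-(\varphi+\psi)T}\big)\).

```python
import math
import random


def payoff(theta1, theta2, T, y, sigma0, sigma1, sigma2):
    """Realized value of J: sigma0(y(T)) without early termination,
    sigma_i(theta_i, y(theta_i)) if player i terminates the game first.
    The tie theta1 == theta2 < T has probability zero; it goes to player 1."""
    if min(theta1, theta2) >= T:
        return sigma0(y(T))
    if theta1 <= theta2:
        return sigma1(theta1, y(theta1))
    return sigma2(theta2, y(theta2))


def expected_payoff(phi, psi, T, y, sigma0, sigma1, sigma2, n=100_000, seed=0):
    """Monte Carlo estimate of E J for constant intensities phi, psi > 0."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        theta1 = min(rng.expovariate(phi), T)   # exponential clock of player 1
        theta2 = min(rng.expovariate(psi), T)   # exponential clock of player 2
        total += payoff(theta1, theta2, T, y, sigma0, sigma1, sigma2)
    return total / n


# Hypothetical data: y(t) = t, sigma0 = 0, sigma1 = 1, sigma2 = -1.
phi, psi, T = 1.0, 2.0, 1.0
estimate = expected_payoff(phi, psi, T, lambda t: t,
                           lambda x: 0.0, lambda t, x: 1.0, lambda t, x: -1.0)
exact = (phi - psi) / (phi + psi) * (1 - math.exp(-(phi + psi) * T))
print(estimate, exact)  # close to each other
```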

Formalizations of the strategies will be given in the following section; we only note that one of the players will be allowed to use as their control a càdlàg (right-continuous with left limits) trajectory of some random process that depends on the trajectory of (1.1) in a nonanticipating way. The other player will be allowed to know not only the trajectory but also the control used by the opponent; as a consequence, the control of this player is a random process that depends on this trajectory and this control in a nonanticipating way. Let us introduce the necessary notation.

Let \(\mathfrak{S}^{\mathrm{I}}\) and \(\mathfrak{S}^{\mathrm{II}}\) be the sets of admissible strategies of the players considered as distributions of random processes that depend in a nonanticipating way on the trajectory \(y(\cdot)\). Denote the sets of admissible counterstrategies of the players by \(\mathfrak{Q}^{\mathrm{I}}_{x_{*}}\) and \(\mathfrak{Q}^{\mathrm{II}}_{x_{*}}\), and denote by \(\mathfrak{R}_{x_{*}}({\mathbb{P}}^{\mathrm{I+II}})\) the set of all possible distributions \(\mathbb{P}^{\mathrm{all}}_{x_{*}}\) of random processes in the game where the players adhere to a joint strategy \({\mathbb{P}}^{\mathrm{I+II}}\). Naturally, any stepwise strategies (in particular, program strategies) are admissible strategies, and any admissible strategy is a counterstrategy.

Depending on which of the players is informationally discriminated, i.e., which of them has in advance informed the opponent about their strategy, we obtain two values of the game:

Theorem 1

The equality \(V^{-}=V^{+}\) is true. Moreover, for each positive \(\varepsilon\), the players have admissible strategies \(\mathbb{P}^{\mathrm{I}}_{\varepsilon}\in\mathfrak{S}_{x_{*}}^{\mathrm{I}}\) and \(\mathbb{P}^{\mathrm{II}}_{\varepsilon}\in\mathfrak{S}_{x_{*}}^{\mathrm{II}}\) that guarantee

This theorem will be proved in the last section. Before that we recall some definitions from the theory of random processes and give the definitions of strategies promised earlier.

2. STRATEGIES AS DISTRIBUTIONS OF RANDOM PROCESSES

2.1. General facts from the theory of random processes

Recall that, for any pair of probability spaces \((\Omega^{\prime},\mathcal{F}^{\prime},\mathbb{P}^{\prime})\) and \((\Omega^{\prime\prime},\mathcal{F}^{\prime\prime},\mathbb{P}^{\prime\prime})\), we can consider the \(\sigma\)-algebra \(\mathcal{F}^{\prime}\otimes\mathcal{F}^{\prime\prime}\) of subsets of \(\Omega^{\prime}\times\Omega^{\prime\prime}\), i.e., the smallest \(\sigma\)-algebra containing all products of the form \(A\times B\) with \(A\in\mathcal{F}^{\prime}\) and \(B\in\mathcal{F}^{\prime\prime}\). On this \(\sigma\)-algebra, a probability \(\mathbb{P}^{\prime}\otimes\mathbb{P}^{\prime\prime}\) is uniquely determined by the rule
\[
(\mathbb{P}^{\prime}\otimes\mathbb{P}^{\prime\prime})(A\times B)=\mathbb{P}^{\prime}(A)\,\mathbb{P}^{\prime\prime}(B),\qquad A\in\mathcal{F}^{\prime},\ B\in\mathcal{F}^{\prime\prime}.
\]

We obtain the product \((\Omega^{\prime}\times\Omega^{\prime\prime},\mathcal{F}^{\prime}\otimes \mathcal{F}^{\prime\prime},\mathbb{P}^{\prime}\otimes\mathbb{P}^{\prime\prime})\) of the original probability spaces. In addition, if a mapping \(g\) from a probability space \((\Omega_{1},\mathcal{F}_{1},\mathbb{P}_{1})\) to a measurable space \((\Omega_{2},\mathcal{F}_{2})\) is measurable, i.e., \(g^{-1}(A)\in\mathcal{F}_{1}\) for all \(A\in\mathcal{F}_{2}\), then it defines the push-forward of the measure \(\mathbb{P}_{1}\), namely the probability \(\mathbb{P}_{2}\) on \((\Omega_{2},\mathcal{F}_{2})\) given by the rule \(\mathbb{P}_{2}(A)\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}\mathbb{P} _{1}(g^{-1}(A))\) for all \(A\in\mathcal{F}_{2}\). In particular, for the product probability introduced above on \(\Omega^{\prime}\times\Omega^{\prime\prime}\), the marginal probabilities \(\mathbb{P}^{\prime}\) and \(\mathbb{P}^{\prime\prime}\) on \(\Omega^{\prime}\) and \(\Omega^{\prime\prime}\) coincide with the push-forwards of the measure \(\mathbb{P}^{\prime}\otimes\mathbb{P}^{\prime\prime}\) by the mappings \((\omega^{\prime},\omega^{\prime\prime})\mapsto\omega^{\prime}\) and \((\omega^{\prime},\omega^{\prime\prime})\mapsto\omega^{\prime\prime}\), respectively.
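On finite probability spaces, where every subset is measurable, both constructions reduce to sums over atoms. The Python sketch below, with hypothetical example spaces, builds \(\mathbb{P}^{\prime}\otimes\mathbb{P}^{\prime\prime}\) from the rectangle rule and confirms that pushing it forward by the two coordinate projections returns the original factors; exact rational arithmetic keeps the comparison free of rounding.

```python
from collections import defaultdict
from fractions import Fraction


def product_measure(p1, p2):
    """P' (x) P'' on finite spaces: the rectangle rule on atoms,
    (P' (x) P'')({(a, b)}) = P'({a}) * P''({b})."""
    return {(a, b): pa * pb for a, pa in p1.items() for b, pb in p2.items()}


def push_forward(p, g):
    """Push-forward of p by g: P2({c}) = P1(g^{-1}({c})), summed over atoms."""
    out = defaultdict(Fraction)
    for w, pw in p.items():
        out[g(w)] += pw
    return dict(out)


coin = {"H": Fraction(1, 2), "T": Fraction(1, 2)}
biased = {1: Fraction(1, 3), 2: Fraction(2, 3)}     # hypothetical second factor
joint = product_measure(coin, biased)
print(push_forward(joint, lambda w: w[0]) == coin)    # True: first marginal
print(push_forward(joint, lambda w: w[1]) == biased)  # True: second marginal
```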

Fix a state space \(\mathbb{X}\) as a closed subset of a finite-dimensional Euclidean space, and equip it with the \(\sigma\)-algebra of Borel sets \(\mathcal{B}(\mathbb{X})\), thus obtaining the measurable space \((\mathbb{X},\mathcal{B}(\mathbb{X}))\). Fix also a time interval \(\mathcal{T}\).

Consider now the Skorokhod space \(D\big{(}\mathcal{T},\mathbb{X}\big{)}\) [8, 16] of all càdlàg functions from \(\mathcal{T}\) to \(\mathbb{X}\) (right-continuous functions with left limits). Equipped with the Skorokhod topology, this space is Polish; endowing it with the corresponding Borel \(\sigma\)-algebra \(\mathcal{B}\big{(}D(\mathcal{T},\mathbb{X})\big{)}\), we obtain a measurable space. On each Skorokhod space, we fix the default canonical filtration
\[
\mathcal{A}^{\mathbb{X}}_{t}\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}\sigma\big(x\mapsto x(s):\ s\in\mathcal{T},\ s\leq t\big),\qquad t\in\mathcal{T},
\]

obtaining the stochastic basis \(\big{(}D\big{(}\mathcal{T},\mathbb{X}\big{)},\mathcal{B}\big{(}D(\mathcal{T}, \mathbb{X})\big{)},(\mathcal{A}^{\mathbb{X}}_{t})_{t\in\mathcal{T}}\big{)}\).

Following [25, Ch. IV, Sect. 1, Definition O2], we define a random process with time interval \(\mathcal{T}\) and state space \((\mathbb{X},\mathcal{B}(\mathbb{X}))\) as a system consisting of a probability space \((\Omega,\mathcal{F},\mathbb{P})\) and a family \((X_{t})_{t\in\mathcal{T}}\) of measurable mappings from \(\Omega\) to \(\mathbb{X}\). Each \(X_{t}\) is called the state of the process at the time \(t\), and the mapping \(t\mapsto X_{t}(\omega)\) is called the trajectory of the process corresponding to the outcome \(\omega\in\Omega\). A random process is called a càdlàg process if \(\mathbb{P}\)-almost all its trajectories are càdlàg. For each process, we can consider the corresponding first canonical process [25], replacing each \(\omega\) by the corresponding trajectory \(t\mapsto X_{t}(\omega)\); in particular, every càdlàg process with state space \(\mathbb{X}\) and time interval \(\mathcal{T}\) is uniquely recovered, in this canonical form, from a probability measure (the distribution of the process [9, Sect. 17.1]) on the measurable space \((D(\mathcal{T},\mathbb{X}),\mathcal{B}(D(\mathcal{T},\mathbb{X})))\). In what follows, we specify most càdlàg processes as first canonical processes, fixing only their distributions in an appropriate Skorokhod space.

We will say that \((X_{t})_{t\in\mathcal{T}}\) is adapted to \((\mathcal{F}_{t})_{t\in\mathcal{T}}\) if, for each \(t\in\mathcal{T}\), the random variable \(\omega\mapsto X_{t}(\omega)\) is \(\mathcal{F}_{t}\)-measurable. A random variable \(\tau\) with values in \(\mathcal{T}\) is called a stopping time (with respect to a filtration \((\mathcal{F}_{t})_{t\in\mathcal{T}}\)) if, for all \(t\in\mathcal{T}\), the event \(\{\tau\leq t\}=\{\omega\,|\,\tau(\omega)\leq t\}\) lies in \(\mathcal{F}_{t}\). Note that if the canonical filtration \((\mathcal{A}^{\mathbb{X}}_{t})_{t\in\mathcal{T}}\) is taken as the filtration \((\mathcal{F}_{t})_{t\in\mathcal{T}}\) on \(\Omega\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}D(\mathcal{T},\mathbb {X})\), then the canonical process \(X_{t}(x)\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}x(t)\) is adapted to it, and the stopping times \(\tau\) are exactly the measurable mappings \(\tau:D(\mathcal{T},\mathbb{X})\to\mathcal{T}\) such that, for any \(x,x^{\prime}\in D(\mathcal{T},\mathbb{X})\), the equality \(x(t)=x^{\prime}(t)\) for all \(t\leq\tau(x)\) implies \(\tau(x)=\tau(x^{\prime})\).
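The characterization of stopping times for the canonical filtration (known as Galmarino's test) has a transparent discrete-time analogue, sketched below in Python on finite sampled paths; this is an illustration, not a proof, and the measurability requirement of the continuous-time statement plays no role on finite paths. A first hitting time passes the test, whereas the time at which the path attains its maximum fails it, since it looks into the future. The example paths and function names are hypothetical.

```python
def first_hitting_index(path, level):
    """tau(x): first index k with x[k] >= level, or the last index if the level
    is never reached. Whether tau(x) = k is decided by x[0..k] alone."""
    for k, value in enumerate(path):
        if value >= level:
            return k
    return len(path) - 1


def argmax_index(path):
    """Index of the first maximum: it depends on the whole path,
    so it is not a stopping time."""
    return max(range(len(path)), key=lambda k: path[k])


def galmarino_holds(tau, x, x_prime):
    """Check the implication for one pair of paths: if x and x_prime coincide
    at all times up to and including tau(x), then tau(x) == tau(x_prime)."""
    k = tau(x)
    if x[: k + 1] != x_prime[: k + 1]:
        return True  # premise fails, nothing to check
    return tau(x_prime) == k


x = [0, 1, 3, 2, 0]
x_prime = [0, 1, 3, 5, 0]        # agrees with x up to index 2
hit = lambda p: first_hitting_index(p, 2)
print(galmarino_holds(hit, x, x_prime))           # True
print(galmarino_holds(argmax_index, x, x_prime))  # False
```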

2.2. Strategy sets

Let \(\mathcal{T}\) be an interval closed on the left, and let \(t_{0}\) be its lower bound.

In addition to the Skorokhod space \(D\big{(}\mathcal{T},\mathbb{X}\big{)}\), we consider compact sets \(\Upsilon_{1}\) and \(\Upsilon_{2}\) in some finite-dimensional Euclidean spaces; these are the sets of control parameters of the players. Let \(\Upsilon\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}\Upsilon_{1}\times \Upsilon_{2}\). Program strategies of the first and second players and their joint program strategies are all càdlàg mappings, i.e., the elements of \(D(\mathcal{T},\Upsilon_{1})\), \(D(\mathcal{T},\Upsilon_{2})\), and \(D(\mathcal{T},\Upsilon)\), respectively. As before, we equip these spaces with the \(\sigma\)-algebras of Borel sets and the canonical filtrations.

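For the state space \(\mathbb{X}\) and the set of control parameters \(\Upsilon\), we call a mapping
\[
D(\mathcal{T},\Upsilon)\ni\upsilon(\cdot)\mapsto\mathbb{P}^{G}_{\upsilon(\cdot)}[\varrho]\in\mathcal{P}\big(D(\mathcal{T},\mathbb{X})\big)
\]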

an admissible dynamics \(\mathbb{P}^{G}\) with initial distribution \(\varrho\in\mathcal{P}(\mathbb{X})\) if it satisfies the initial condition \(\mathbb{P}^{G}_{\upsilon(\cdot)}[\varrho](X(t_{0})\in A)\equiv\varrho(A)\) for all \(A\in\mathcal{B}(\mathbb{X})\) and \(\upsilon(\cdot)\in D\big{(}\mathcal{T},{\Upsilon}\big{)}\), and is nonanticipating, i.e., for any \(\upsilon^{\prime}(\cdot),\upsilon^{\prime\prime}(\cdot)\in D\big{(}\mathcal{T},{\Upsilon}\big{)}\) and time \(t\in\mathcal{T}\), the equality \(\upsilon^{\prime}|_{[t_{0};t)}=\upsilon^{\prime\prime}|_{[t_{0};t)}\) implies \(\mathbb{P}^{G}_{\upsilon^{\prime}(\cdot)}[\varrho](A)=\mathbb{P}^{G}_{\upsilon^{\prime\prime}(\cdot)}[\varrho](A)\) for all \(A\in{\mathcal{A}}^{\mathbb{X}}_{t}\). Note that every probability \(\mathbb{P}^{G}_{\upsilon(\cdot)}[\varrho]\) is regular, since \(D\big{(}\mathcal{T},\mathbb{X}\big{)}\) is a Polish space.

A strategy \(\mathbb{P}^{i}\) of the \(i\)th player is a mapping taking each \(x\in D\big{(}\mathcal{T},\mathbb{X}\big{)}\) to a distribution \(\mathbb{P}^{i}_{x(\cdot)}\) on \(D(\mathcal{T},{\Upsilon}_{i})\) (and, hence, to a first canonical process \(({\upsilon}^{i}_{x(\cdot)}(t))_{t\in\mathcal{T}}\) with values in \(\Upsilon_{i}\)) such that the nonanticipation condition holds for all \(t\in\mathcal{T}\): if \(x^{\prime}(s)=x^{\prime\prime}(s)\) for all \(s\in\mathcal{T}\) with \(s\leq t\), then \(\mathbb{P}^{i}_{x^{\prime}(\cdot)}(A)=\mathbb{P}^{i}_{x^{\prime\prime}(\cdot)}(A)\) for all \(A\in\mathcal{A}_{t}^{\Upsilon_{i}}\). The notion of a joint strategy \({\mathbb{P}}^{\mathrm{I+II}}\) of the players, which maps trajectories from \(D\big{(}\mathcal{T},\mathbb{X}\big{)}\) to distributions on \(D(\mathcal{T},{\Upsilon})\), is introduced similarly.

Note that any program strategy \(\upsilon_{i}(\cdot)\in D(\mathcal{T},\Upsilon_{i})\), being a mapping \(x(\cdot)\mapsto\upsilon_{i}(\cdot)\) from \(D\big{(}\mathcal{T},\mathbb{X}\big{)}\) to \(D(\mathcal{T},{\Upsilon}_{i})\) independent of the trajectory \(x(\cdot)\), is a strategy of the \(i\)th player.

For any initial condition \(\varrho\in\mathcal{P}(\mathbb{X})\), we call a random process \((X_{t})_{t\in\mathcal{T}}\) with values in \(\mathbb{X}\) a realization of a joint strategy \({\mathbb{P}}^{\mathrm{I+II}}\) for an admissible dynamics \(\mathbb{P}^{G}\) if there is a process \((\upsilon_{t},X_{t})_{t\in\mathcal{T}}\) given by some distribution \(\mathbb{P}^{\mathrm{all}}_{\varrho}\) on \(D(\mathcal{T},\Upsilon)\times D\big{(}\mathcal{T},\mathbb{X}\big{)}\) for which the mappings \(\upsilon(\cdot)\mapsto\mathbb{P}^{G}_{\upsilon(\cdot)}[\varrho]\) and \(x(\cdot)\mapsto\mathbb{P}^{\mathrm{I+II}}_{x(\cdot)}\) are the Radon–Nikodym derivatives of the marginal distributions \(\mathbb{P}^{\chi}_{\varrho}\) and \(\mathbb{P}^{\Upsilon}_{\varrho}\) (on \(D\big{(}\mathcal{T},\mathbb{X}\big{)}\) and \(D(\mathcal{T},\Upsilon)\), respectively) with respect to \(\mathbb{P}^{\mathrm{all}}_{\varrho}\); i.e.,

We will denote the set of all possible realizations for a strategy \({\mathbb{P}}^{\mathrm{I+II}}\) by \(\mathfrak{R}_{\varrho}({\mathbb{P}}^{\mathrm{I+II}})\).

We call a strategy \(\mathbb{P}^{i}\) of the \(i\)th player admissible for an initial condition \(\varrho\in\mathcal{P}(\mathbb{X})\) if the pair of strategies \((\mathbb{P}^{i},\delta_{\upsilon_{3-i}})\) has a realization for any program strategy \(\upsilon_{3-i}\in D(\mathcal{T},\Upsilon_{3-i})\) of the opponent. In particular, the program strategies themselves are admissible: for any choice of program strategies \((\upsilon_{1},\upsilon_{2})\) of the players, we can take \(\delta_{(\upsilon_{1},\upsilon_{2})}\otimes\mathbb{P}^{G}_{(\upsilon_{1},\upsilon_{2})(\cdot)}[\varrho]\) as a realization of their joint strategy.

A counterstrategy \(\mathbb{Q}^{i}[\mathbb{P}^{\mathrm{III}-i}]\) of the \(i\)th player is a mapping taking each admissible strategy of the opponent \(\mathbb{P}^{\mathrm{\mathrm{III}}-i}\) to some joint strategy \({x(\cdot)}\mapsto\mathbb{Q}^{i}[\mathbb{P}^{\mathrm{III}-i}]_{x(\cdot)}\) on \(D(\mathcal{T},{\Upsilon}_{1}\times\Upsilon_{2})\) (and, hence, to a random process \(({\upsilon}_{x(\cdot)}(t))_{t\in\mathcal{T}}\) with values in \(\Upsilon_{1}\times\Upsilon_{2}\)) such that the corresponding marginal distribution on \(\Upsilon_{3-i}\) coincides with \(\mathbb{P}^{\mathrm{III}-i}_{x(\cdot)}\). A counterstrategy is called admissible if the strategy \(x(\cdot)\mapsto\mathbb{Q}^{i}[\mathbb{P}^{{\mathrm{III}-i}}]_{x(\cdot)}\) is admissible for any admissible strategy of the opponent \(\mathbb{P}^{\mathrm{III}-i}\). It is clear that any admissible strategy \(\mathbb{P}^{i}\) is also an admissible counterstrategy because it assigns to a strategy of the opponent \(\mathbb{P}^{\mathrm{III}-i}\) some joint strategy \({x(\cdot)}\mapsto\mathbb{P}^{\mathrm{I}}_{x(\cdot)}\otimes\mathbb{P}^{\mathrm{ II}}_{x(\cdot)}\).

Denote by \(\mathfrak{S}^{\mathrm{I}}_{x_{*}}\) and \(\mathfrak{S}^{\mathrm{II}}_{x_{*}}\) the sets of admissible (for an initial condition \(\varrho=\delta_{x_{*}}\)) strategies of the first and second players. Similarly, denote by \(\mathfrak{Q}^{\mathrm{I}}_{x_{*}}\) and \(\mathfrak{Q}^{\mathrm{II}}_{x_{*}}\) the sets of admissible (for the same condition) counterstrategies of the players. The above implies the chain of embeddings \(D(\mathcal{T},\Upsilon_{i})\hookrightarrow\mathfrak{S}^{i}_{x_{*}} \hookrightarrow\mathfrak{Q}^{i}_{x_{*}}\).

We construct a stepwise strategy, for example, for the first player, and show at the same time that any such strategy is admissible. Consider a partition \(\Delta=(t_{k})_{k\in\mathbb{N}}\) of the time interval \(\mathcal{T}\), and let an admissible (for example, program) strategy \(\mathbb{P}^{\mathrm{I}:k}\) of the first player be given on the interval \([t_{k-1};t_{k})\) for each \(k\in\mathbb{N}\). In particular, for any program strategy \(\upsilon_{k}\in D([t_{k-1};t_{k}),\Upsilon_{2})\) of the second player, there is a realization \(\mathbb{P}^{\mathrm{all}:k}\) of their joint strategy \(\delta_{\upsilon_{k}}\otimes\mathbb{P}^{\mathrm{I}:k}\) on this interval. Now, each initial (at the time \(t_{k-1}\)) distribution \(\varrho_{k-1}\) corresponds to the probability \(\mathbb{P}^{\mathrm{all}:k}_{\varrho_{k-1}}(X(\cdot)\in B)\) for all Borel subsets \(B\) of the set \(D([t_{k-1};t_{k}),\mathbb{X})\) and, hence, to the marginal probability \(\varrho_{k}[\varrho](B)\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}} \mathbb{P}^{\chi:k}_{\varrho_{k-1}}(X(t_{k})\in B)\) depending on \(\varrho\). Thus, we have constructed a sequence of transition probabilities \(\varrho_{k}:\mathcal{P}(\mathbb{X})\to\mathcal{P}(\mathbb{X})\) depending on \(\varrho_{0}\); after that, we can introduce a distribution \(\mathbb{P}^{\mathrm{all}}_{\varrho_{0}}\) as the probability \(\otimes_{k\in\mathbb{N}}\mathbb{P}^{\mathrm{all}:k}_{\varrho_{k-1}}\) on Borel subsets of \(\prod_{k\in\mathbb{N}}D([t_{k-1};t_{k}),\Upsilon\times\mathbb{X})\). Thus, a realization \(\mathbb{P}^{\mathrm{all}}_{\varrho_{0}}\) of the joint strategy has been constructed, and any strategy constructed in this way (any stepwise strategy) is admissible.
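A stepwise positional strategy of the kind just constructed can be simulated as in the following Python sketch: at each partition point \(t_{k}\) the first player reads the current position and freezes a control on \([t_{k};t_{k+1})\), while the second player uses a program control. The scalar dynamics \(\dot y=u+v\), the steering rule, the partition, and the Euler discretization are hypothetical choices, and the intensities \(\varphi,\psi\) are omitted. Because the control is updated only at partition points, the state chatters around the target with an amplitude of the order of the partition step.

```python
def simulate_stepwise(f, y0, partition, rule, v_program, substeps=50):
    """Euler scheme for dy/dt = f(t, y, u, v) under a stepwise strategy of player 1:
    u = rule(t_k, y(t_k)) is frozen on each [t_k, t_{k+1}); player 2 uses the
    program control v_program(t). Returns y at the last partition point and the
    list of controls chosen at the partition points."""
    y, controls = y0, []
    for t_start, t_end in zip(partition[:-1], partition[1:]):
        u = rule(t_start, y)            # depends only on the position at t_k
        controls.append(u)
        h = (t_end - t_start) / substeps
        for j in range(substeps):
            t = t_start + j * h
            y = y + h * f(t, y, u, v_program(t))
    return y, controls


# Hypothetical scalar example: dy/dt = u + v, u in {-1, 1}, v = 0.25,
# player 1 steers towards 0 from the last observed position.
f = lambda t, y, u, v: u + v
rule = lambda t, y: -1.0 if y > 0 else 1.0
partition = [0.1 * k for k in range(21)]          # t_k on [0, 2]
y_T, controls = simulate_stepwise(f, 1.0, partition, rule, lambda t: 0.25)
print(len(controls), abs(y_T) <= 0.125 + 1e-9)    # 20 True
```

In this run the terminal state stays within \(0.125\) of zero, which is the largest displacement \(|u+v|\) over a single partition step of length \(0.1\).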

2.3. Admissibility of the original dynamics

Let us show that the original game defines an admissible dynamics with \(\mathbb{X}\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}\mathbb{R}^{d+1}\), \(\mathcal{T}\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}[0;T]\), and \(\Upsilon\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}\bar{\mathbb{U}} \times\bar{\mathbb{V}}\). Indeed, if we fix program strategies, i.e., some càdlàg controls \(\bar{u}=(u,\varphi)\) and \(\bar{v}=(v,\psi)\), then, under a Dirac initial condition \(\varrho\), the corresponding deterministic process \(t\mapsto y(t)\) uniquely defines the Dirac measure \(\mathbb{P}_{(u,v)}[\varrho]=\delta_{y}\) on the set of its trajectories \(D(\mathcal{T},\mathbb{R}^{d})\). Extending these distributions \(\mathbb{P}_{(u,v)}[\varrho]\) linearly in \(\varrho\), we define them for all possible initial conditions \(\varrho\). Further, each pair \((\varphi,\psi)\) defines the stopping times \(\theta_{i}\) and, together with them, \(\theta_{\min}\) by means of formulas (1.5), (1.6). Let a random variable \(s\) be zero if there is no early termination (\(\theta_{\min}=T\)), be \(1+\theta_{\min}\) if \(\theta_{1}=\theta_{\min}\in[0;T)\), and be \(-1-\theta_{\min}\) if \(\theta_{2}=\theta_{\min}\in[0;T)\). To each pair

we assign a trajectory \(y_{(x(\cdot),s)}(\cdot)\in D([0;T],\mathbb{X})\) by the equalities

Then the push-forward of the measure \(\mathbb{P}_{({u},{v})}[\varrho]\otimes\mathbb{P}_{(\varphi,\psi)}\) by this mapping specifies a distribution \({\mathbb{P}}^{G}_{(\bar{u},\bar{v})}[\varrho]\) on \(D(\mathcal{T},\mathbb{X})\). In particular, the last coordinate now switches from the zero value to the value of the random variable \(s\) at the time of the early termination of the game. Now the distribution \({\mathbb{P}}^{\mathrm{all}}_{\varrho}\) on \(D(\mathcal{T},\bar{\mathbb{U}}\times\bar{\mathbb{V}})\times D(\mathcal{T}, \mathbb{X})\) is uniquely recovered by the following rule: for all Borel subsets \(B\subset D(\mathcal{T},\bar{\mathbb{U}}\times\bar{\mathbb{V}})\) and \(D\subset D(\mathcal{T},\mathbb{X})\),

Since a realization is constructed in the original game for an arbitrary joint program strategy, i.e., a pair of càdlàg controls \(\bar{u}=(u,\varphi)\) and \(\bar{v}=(v,\psi)\), it follows that the dynamics of the original game is admissible. Consequently, stepwise strategies in the original game are also admissible.
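A short Monte Carlo sketch illustrates the construction of \(\theta_{1}\), \(\theta_{2}\), \(\theta_{\min}\), and \(s\) above. Formulas (1.5) and (1.6) are not reproduced here. The sketch therefore assumes that \(\theta_{1}\) (respectively, \(\theta_{2}\)) is the first time the cumulative intensity \(\int_{0}^{t}\varphi\) (respectively, \(\int_{0}^{t}\psi\)) reaches an independent unit-exponential threshold. This is the hazard-rate reading that matches the conditional termination probability \(\varphi(t)\Delta t+o(\Delta t)\) used in Sect. 3.2. The intensities are taken to be piecewise constant on a uniform grid, which is one simple instance of càdlàg intensities. The helper names `first_hitting` and `sample_termination` are hypothetical.

```python
import math
import random


def first_hitting(rates, dt, threshold):
    """First time at which the cumulative intensity of a piecewise-constant rate
    (value rates[k] on [k*dt, (k+1)*dt)) reaches `threshold`; None if it stays
    below the threshold over the whole horizon."""
    acc = 0.0
    for k, r in enumerate(rates):
        if r > 0 and acc + r * dt >= threshold:
            return k * dt + (threshold - acc) / r
        acc += r * dt
    return None


def sample_termination(phi, psi, T, rng):
    """Sample (theta_1, theta_2, theta_min, s) for piecewise-constant intensities
    phi, psi (lists of equal length covering [0, T]).

    A player whose clock does not ring before T gets theta_i = T, so
    theta_min = T means that the game was not terminated early."""
    dt = T / len(phi)
    hits = [first_hitting(rates, dt, rng.expovariate(1.0)) for rates in (phi, psi)]
    theta1, theta2 = (T if t is None else min(t, T) for t in hits)
    theta_min = min(theta1, theta2)
    if theta_min >= T:
        s = 0.0                  # the game lasted until T
    elif theta1 < theta2:
        s = 1.0 + theta_min      # early termination by the first player
    else:
        s = -1.0 - theta_min     # early termination by the second player
    return theta1, theta2, theta_min, s


# Constant intensities phi = 1 and psi = 2 on [0, 1].
rng = random.Random(0)
draws = [sample_termination([1.0] * 4, [2.0] * 4, 1.0, rng) for _ in range(20000)]
early = [d for d in draws if d[3] != 0.0]
share_first = sum(d[3] > 0 for d in early) / len(early)
expected_early = 1.0 - math.exp(-3.0)   # P(theta_min < 1) for total rate 3
```

With constant intensities, the share of early terminations initiated by the first player should be close to \(\varphi/(\varphi+\psi)=1/3\).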

3. EXTENSION OF THE ORIGINAL GAME

Although the game process corresponding to the original game is completely defined, it is convenient to extend its definition so that the process is defined on an unbounded time interval and in an extended state space. For this, we put \(\mathcal{X}\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}\mathbb{R}_{+} \times\mathbb{R}^{d+1}\) and define the unit vectors of this space:

Now, for any vector \(z\in\mathcal{X}\), its \(i\)th coordinate can be written in the form \(\pi_{i}z\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}\langle\pi_{i},z\rangle\). Finally, denote by \(||\cdot||_{d}\) the norm of a vector without the last coordinate: \(||z||_{d}\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}||z-(\pi_{d+1}z) \pi_{d+1}||\).

3.1. Required constants

Since the right-hand side of system (1.1) is bounded, there exists a compact set \(K_{<}\subset\mathbb{R}^{d}\) such that any solution \(y\) of system (1.1) with an initial condition from the unit ball centered at \(x_{*}\) stays within this set on the interval \([0;T]\). Enlarging this set to a compact set \(K_{>}\) if necessary, we can assume that, at every time from \([0;T]\), the distance from any solution of system (1.1) with the initial condition \(x_{*}\) to the boundary of \(K_{>}\) is greater than 1. Now we can find a sufficiently large number \(L\geq 2\) such that the functions \(f|_{[0;T]\times K_{>}\times{{\mathbb{U}}}\times{{\mathbb{V}}}}\), \(\sigma_{0}|_{K_{>}}\), \(\sigma_{1}|_{[0;T]\times{K_{>}}}\), and \(\sigma_{2}|_{[0;T]\times{K_{>}}}\) are Lipschitz with constant \(L\). We can also assume that the norm of \(f|_{[0;T]\times K_{>}\times\mathbb{U}\times\mathbb{V}}\) and the numbers \(\varphi_{+}\) and \(\psi_{+}\) do not exceed \(L-1\). Recall that, by our assumptions, the functions \(\sigma_{0}\), \(\sigma_{1}\), and \(\sigma_{2}\) do not exceed 1 in absolute value. For definiteness, we extend the mappings \(\sigma_{1}|_{[0;T]\times{\mathbb{R}^{d}}}\) and \(\sigma_{2}|_{[0;T]\times{\mathbb{R}^{d}}}\) to \([T;\infty)\times\mathbb{R}^{d}\), preserving both their Lipschitz constant and their sup norm; this is possible by [28, Theorem 9.58].

Fix a smooth nonincreasing \(2\)-Lipschitz scalar function \(a:\mathbb{R}\to[0;1]\) such that \(a(0)=1\), \(a(1)=0\), and the function \(r\mapsto a(r+1/2)-1/2\) is odd. We also define \(a_{K}(x)\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}1-a(\operatorname*{ dist}(x;\mathbb{R}^{d}\setminus K_{>}))\) for all \(x\in\mathbb{R}^{d}\).
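The text only needs such a function \(a\) to exist. One standard construction is sketched below. It is an assumption, not necessarily the authors' choice: \(a(r)=g(1-r)/(g(1-r)+g(r))\) with \(g(t)=e^{-1/t}\) for \(t>0\) and \(g(t)=0\) otherwise. This function is \(C^{\infty}\), equals 1 on \((-\infty;0]\) and 0 on \([1;\infty)\), and satisfies \(a(1/2+r)+a(1/2-r)=1\). Its steepest slope is \(a'(1/2)=-2\). The 2-Lipschitz property is checked numerically below rather than proved. The names `a` and `a_K` mirror the text. The function `a_K` takes the distance \(\operatorname{dist}(x;\mathbb{R}^{d}\setminus K_{>})\) as its input, because computing that distance depends on the set \(K_{>}\).

```python
import math


def _g(t: float) -> float:
    # C^infinity building block: exp(-1/t) for t > 0 and 0 otherwise.
    return math.exp(-1.0 / t) if t > 0 else 0.0


def a(r: float) -> float:
    """Smooth nonincreasing step: 1 on (-inf, 0], 0 on [1, inf)."""
    left = _g(1.0 - r)
    return left / (left + _g(r))   # r and 1 - r cannot both be <= 0, so this is > 0


def a_K(dist_to_complement: float) -> float:
    """a_K(x) = 1 - a(dist(x; R^d minus K_>)), given that distance."""
    return 1.0 - a(dist_to_complement)


# Largest difference quotient on a fine grid: an estimate of the Lipschitz constant.
step = 1e-4
grid = [-0.5 + k * step for k in range(20001)]
max_slope = max(abs(a(r + step) - a(r)) / step for r in grid)
```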

Fix some positive number \(h<\min(1,e^{-2}/T,{56L^{2}Te^{-12LT}}/{d})\) such that \({e^{-{T}/{8h}}}\leq hT\) and \(1/h\in\mathbb{N}\). Define

We have the inequalities
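The two conditions on \(h\) that involve only \(T\) are what the later estimates rely on. The substitutions in Sect. 4.5 indicate that \(\gamma=\sqrt{-2hT\ln(hT)}\) and \(\Gamma=T+\gamma\); the sketch assumes these definitions. Under them, \(2\sqrt{hT}\leq\gamma\) holds if and only if \(hT\leq e^{-2}\). Likewise, \(e^{-T/(8h)}\leq hT\) gives \(\gamma^{2}\leq 2hT\cdot T/(8h)=T^{2}/4\), i.e., \(\gamma\leq T/2\). The hypothetical helper `choose_step` below picks the largest admissible \(h=1/n\). The remaining \(L\)- and \(d\)-dependent upper bound from the text is passed in as `extra_bound` rather than recomputed.

```python
import math


def choose_step(T: float, extra_bound: float = 1.0):
    """Largest h = 1/n with h < min(1, e^{-2}/T, extra_bound) and exp(-T/(8h)) <= hT.

    Returns (h, gamma, Gamma) with gamma = sqrt(-2 h T ln(hT)) and Gamma = T + gamma."""
    bound = min(1.0, math.exp(-2.0) / T, extra_bound)
    n = max(1, math.floor(1.0 / bound))
    while 1.0 / n >= bound:                  # enforce the strict inequality h < bound
        n += 1
    while math.exp(-T * n / 8.0) > T / n:    # enforce e^{-T/(8h)} <= hT for h = 1/n
        n += 1
    h = 1.0 / n
    gamma = math.sqrt(-2.0 * h * T * math.log(h * T))
    return h, gamma, T + gamma


h, gamma, Gamma = choose_step(1.0)   # gives h = 1/27 for T = 1
```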

3.2. Extended dynamics of the original system

Recall that, according to the formalization proposed in the preceding section, the termination of the game on the initiative of the first or the second player at a time \(\theta\) consists in shifting the position of the system along the additional \((d+1)\)th coordinate by the vector \((1+\theta)\pi_{d+1}\) or \(-(1+\theta)\pi_{d+1}\), respectively. Thus, the state space of the original dynamic system (1.1), extended by time, is identified with \(\mathbb{R}^{d+1}\times\{s=0\}\). The set \(\mathbb{R}^{d+1}\times\{s\geq 1\}\) contains all possible terminal positions of the system if the game has been terminated on the initiative of the first player. Similarly, the set \(\mathbb{R}^{d+1}\times\{s\leq-1\}\) contains all terminal positions if it has been terminated on the initiative of the second player. Consequently, we extend the dynamics of (1.1) to the whole space \(\mathcal{X}=\mathbb{R}_{+}\times\mathbb{R}^{d+1}\).

For this, to each \(w=(t,x,s)\in\mathcal{X}\), \((u,\varphi)\in\bar{{{\mathbb{U}}}}\), and \((v,\psi)\in\bar{\mathbb{V}}\), we assign a vector

It is easy to verify that the function \({\hat{f}}\) is bounded in the norm by the number \(L\) and is \(3L\)-Lipschitz in each of the domains \([0;T)\times\mathbb{R}^{d+1}\) and \([T;\infty)\times\mathbb{R}^{d+1}\). In addition, \({\hat{f}}(w,\bar{u},\bar{v})\) coincides with \((1,f(t,x,u,v),0)\) if \(w=(t,x,s)\in[0;T)\times K_{<}\times\{0\}\), \((u,\varphi)\in\bar{{{\mathbb{U}}}}\), and \((v,\psi)\in\bar{\mathbb{V}}\). Moreover, outside the set \(\mathcal{X}_{<}\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}\mathbb{R}_{ +}\times K_{>}\times[-2-T;2+T]\), the function \({\hat{f}}\) is zero in all the coordinates except for the zero coordinate.

Consider a conflict-control system

If the dynamics in this system is defined by (3.2) and the initial conditions are in the set \(\mathcal{X}_{<}\), then the trajectories of (3.3) stay inside this set; in addition, for the original initial conditions and any controls of the players, the corresponding coordinates of the trajectories of (3.3) coincide with the coordinates of the trajectories of (1.1), and the time coincides with the zero coordinate of the solution of (3.3). Note also that, after the terminal time of the game, i.e., for \(\pi_{0}y(t)=t\geq T\) or for \(|\pi_{d+1}y(t)|\geq 1\), only the zero coordinate of the solution of system (3.3), which corresponds to time, changes. Finally, by the boundedness of the right-hand side for any solution \(y\) with dynamics (3.2), we have

In order to describe the jump in the last coordinate, we will require that the conditional probability of termination of the game on the initiative of the first (the second) player during a small interval \([t;t+\Delta t)\) is \(a(|\pi_{d+1}y|)1_{[0;T)}(\pi_{0}y)\varphi(t)\Delta t+o(\Delta t)\) (\(a(|\pi_{d+1}y|)1_{[0;T)}(\pi_{0}y)\psi(t)\Delta t+o(\Delta t)\), respectively). Then the times of positive and negative jumps in the last coordinate, which are the stopping times \(\theta_{1}\) and \(\theta_{2}\), are described by the rules

which in the case of the original initial conditions correspond to the rules (1.5) and (1.6) with the zero intensity of the opponent. In the general case, in view of the equality \(t=\pi_{0}y(t)\), the nondeterministic transition of the system corresponding to an early termination of the game and, hence, the value \(\theta_{\min}=\min(\theta_{1},\theta_{2})<T\), can be described by the Lévy measure

depending on \(z\), \(\bar{u}=(u,\varphi)\), and \(\bar{v}=(v,\psi)\).

Let us show that the dynamics extended in this way is admissible. Once again, for \(\mathcal{T}\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}\mathbb{R}_{+}\), we assign to each pair of controls \(\bar{u}=(u,\varphi)\), \(\bar{v}=(v,\psi)\) the Dirac measures \({\hat{\mathbb{P}}}_{(\bar{u},\bar{v})}[\varrho]\) supported by solutions \(y\) with dynamics (3.2) generated by these controls on the entire semiaxis. For the intensities \((\varphi,\psi)\), we find the stopping times \(\theta_{1}\) and \(\theta_{2}\) and, hence, \(\theta_{\min}\). As in the preceding section, we define for \(\theta_{1},\theta_{2}\) a random variable \(s\) and assign to each pair \((x(\cdot),s)\in D(\mathbb{R}_{+},\mathbb{X})\times([-1-T;-1]\cup\{0\}\cup[1;1+ T])\) a path \(y_{(x(\cdot),s)}(\cdot)\in D(\mathbb{R}_{+},\mathcal{X})\) by the rule (2.1). Once again, the push-forward of the measure \({\hat{\mathbb{P}}}_{(\bar{u},\bar{v})}\otimes\mathbb{P}_{(\varphi,\psi)}\) by this mapping specifies a distribution \({\hat{\mathbb{P}}}^{G}_{(\bar{u},\bar{v})}[\varrho]\) on \(D(\mathcal{T},\mathcal{X})\); then, the distribution \({\hat{\mathbb{P}}}^{\mathrm{all}}_{\varrho}\) on \(D(\mathcal{T},\bar{\mathbb{U}}\times\bar{\mathbb{V}})\times D(\mathcal{T}, \mathcal{X})\) is uniquely recovered by an analog of (2.2), i.e., by the rule

Thus, in the extended game, we have constructed a realization for an arbitrary pair of càdlàg controls \(\bar{u}=(u,\varphi)\), \(\bar{v}=(v,\psi)\); hence, the extended dynamics is admissible. Therefore, as before, program and stepwise strategies are admissible.

3.3. Payoff function in an integral form

We define a function \(W:\mathcal{X}\to\mathbb{R}\) by the following rule: for any \(z=(t,x,s)\in\mathcal{X}\),

Note that the function \({W}\) does not exceed \(1\) in absolute value, is independent of the zero coordinate, and is \(3L\)-Lipschitz because \(L\geq 2\).

To any path \(y\in D\big{(}\mathbb{R}_{+},\mathcal{X}\big{)}\), we assign a payoff

Let us establish the following representation of the expected value of the payoff function: for any random process with distribution \({\hat{\mathbb{P}}}^{G}_{\bar{u},\bar{v}}[\delta_{(0,x_{0},0)}]\in\mathcal{P}(D (\mathbb{R}_{+},\mathcal{X}))\) of trajectories \(t\mapsto y(t)=(t,x(t),s(t))\) with initial conditions \(x(0)\in K_{<}\), \(s(0)=0\),

Indeed, consider an arbitrary trajectory \(t\mapsto y(t)=(t,x(t),s(t))\) of the process. This trajectory is defined on the entire semiaxis, but, starting from some stopping time \(\tau_{T}\), all its coordinates except the zero one remain constant. This happens in one of three ways. First, the time of the game may expire; then \(\tau_{T}=T\) and \(W(y(t))=\sigma_{0}(x(T))\) for all \(t\geq\tau_{T}\). Second, the game may be terminated on the initiative of the first player; then \(\tau_{T}=\theta_{1}\) is the first time with \(s(t)>0\), \(y(\tau_{T})=(\tau_{T},x(\tau_{T}),\tau_{T}+1)\), and \(W(y(t))=\sigma_{1}(\tau_{T},x(\tau_{T}))\) for all \(t\geq\tau_{T}\). Third, the game may be terminated on the initiative of the second player; then \(\tau_{T}=\theta_{2}\) is the first time with \(s(t)<0\), and \(W(y(t))=\sigma_{2}(\tau_{T},x(\tau_{T}))\) for all \(t\geq\tau_{T}\). Since \(e^{h(T+2\gamma-t)}1_{[T+2\gamma;\infty)}(\pi_{0}y(t))W(y(t))\equiv 0\) along the trajectory \(y(\cdot)\) until the time \(T+2\gamma\), it follows that \(\bar{\sigma}(y)\) equals \(\sigma_{0}(x(T))\), \(\sigma_{1}(\theta_{1},x(\theta_{1}))\), and \(\sigma_{2}(\theta_{2},x(\theta_{2}))\) in the respective cases. Thus, equality (3.6) is established.

Since the expected values of the payoff functions coincide by (3.6), the constructed game with the extended dynamics and the initial condition \((0,x_{*},0)\) is equivalent to the original game.

4. AUXILIARY MARKOV GAME

Let us now construct an approximating Markov game depending on the step \(h\). For this, we consider the lattice \(\mathcal{Z}\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}h\mathbb{Z}^{d+2 }\cap\mathcal{X}\) with step \(h\). It has dimension \(d+2\) and a nonnegative zero coordinate. For the set of states, we take \(\mathcal{Z}_{<}\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}\mathcal{Z} \cap\mathcal{X}_{<}\). The space of paths is the Skorokhod space \(D\big{(}\mathbb{R}_{+},\mathcal{Z}_{<}\big{)}\) equipped with the \(\sigma\)-algebra of Borel sets and the canonical filtration.

4.1. Randomized strategies in the Markov game

We take randomized strategies as strategies of the players.

A randomized strategy \(\bar{\mu}\) of the first player is a pair \(({\mu},\varphi_{\mu})\) of mappings taking each \(w\in\mathcal{Z}_{<}\) to a time-dependent probability measure \({\mu}[w]\) on Borel subsets of \({\mathbb{U}}\) and a Borel measurable function \({\varphi}_{\mu}[w]\) with values in \([\varphi_{-};\varphi_{+}]\). A randomized strategy \(\bar{\nu}\) of the second player is a pair \(({\nu},\psi_{\nu})\) of mappings taking each \(w\in\mathcal{Z}_{<}\) to a time-dependent probability measure \({\nu}[w]\) on Borel subsets of \({{\mathbb{V}}}\) and a Borel measurable function \({\psi}_{\nu}[w]\) with values in \([\psi_{-};\psi_{+}]\). Denote the sets of all randomized strategies of the first and second players by \(\check{\mathbb{U}}_{\varpi}\) and \(\check{\mathbb{V}}_{\varpi}\), respectively. If an element of \(\check{\mathbb{U}}_{\varpi}\) (or \(\check{\mathbb{V}}_{\varpi}\)) is independent of time, we call it a stationary strategy. Denote the sets of all stationary strategies of the first and second players by \(\check{\mathbb{U}}_{\varsigma}\) and \(\check{\mathbb{V}}_{\varsigma}\), respectively, and denote by \({\mathbb{U}}_{\varsigma}\) and \({\mathbb{V}}_{\varsigma}\) their projections, i.e., the sets of mappings \(w\mapsto{\mu}[w]\) and \(w\mapsto{\nu}[w]\), respectively.

Define a mapping \(\check{f}:\mathcal{Z}_{<}\times\check{\mathbb{U}}_{\varsigma}\times\check{ \mathbb{V}}_{\varsigma}\to\mathcal{Z}_{<}\) as follows: for any pair of strategies \((\bar{\mu},\bar{\nu})\in\check{\mathbb{U}}_{\varsigma}\times\check{\mathbb{V}} _{\varsigma}\) and a point \(w\in\mathcal{Z}_{<}\),

We also define for any \(i\in[0\,:\,d+1]\) the projections \(\pi^{+}_{i}\check{f}\) and \(\pi^{-}_{i}\check{f}\) of the mapping \(\check{f}\) by the rules

Note that we will substitute into \(\check{f}\) (and into other objects) values of time-dependent randomized strategies \(\bar{\mu}\) and \(\bar{\nu}\) in place of stationary randomized strategies, but in this case the argument responsible for time will be omitted for simplicity of notation.

4.2. Dynamics of the Markov game

For all points \(w\in\mathcal{Z}_{<}\) and randomized strategies \(\bar{\mu}\) and \(\bar{\nu}\) of the players, we define the Itô measure \(\check{\eta}(w,\bar{\mu},\bar{\nu};\cdot)\): for all Borel subsets \(A\subset\mathcal{Z}_{<}\),

For this measure, one can easily verify the following equalities, which will be needed below in the construction of the Lévy–Khinchin generator: for all \(w\in\mathcal{Z}_{<}\),

The introduced measure \(\check{\eta}\) corresponds to a continuous-time Markov chain given by the Kolmogorov matrix \((\bar{Q}_{wy}(\bar{\mu},\bar{\nu}))_{w,y\in\mathcal{Z}_{<}}\): for all time-dependent randomized strategies \(\bar{\mu},\bar{\nu}\),

Note that, for each \(w\in\mathcal{Z}_{<}\), both the measure \(\check{\eta}(w,\bar{\mu},\bar{\nu};\cdot)\) on the power set of \(\mathcal{Z}_{<}\) and the operator corresponding to \(\bar{Q}_{wy}\) depend linearly on \(\bar{\mu}\) and \(\bar{\nu}\). Now, for any mapping \(q:\mathcal{Z}_{<}\to\mathbb{R}\), the minimax theorem gives the equality

For any mapping \(q:\mathcal{Z}_{<}\to\mathbb{R}\), we introduce (and fix) stationary strategies \(\bar{\mu}^{\downarrow q}\) and \(\bar{\nu}^{\uparrow q}\) implementing this minimax at each point.
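The expression inside the minimax is bilinear in the players' randomized strategies. So, at a fixed lattice point and for finite action sets, the minimax equality is the minimax theorem for a matrix game. The toy sketch below uses a hypothetical function `solve_2x2`; in the paper, \(\mathbb{U}\) and \(\mathbb{V}\) are general sets, not two-element ones. It computes the value and the minimizing player's optimal mixture for a \(2\times 2\) payoff matrix. Following the notation \(\bar{\mu}^{\downarrow q}\), the rows belong to the minimizing first player.

```python
def solve_2x2(A):
    """Value of a 2x2 zero-sum game and the minimizer's optimal mixed strategy.

    Rows belong to the minimizing player, columns to the maximizing player.
    Returns (value, p), where p is the probability of playing the first row."""
    (a, b), (c, d) = A
    upper = min(max(a, b), max(c, d))   # min over rows of max over columns
    lower = max(min(a, c), min(b, d))   # max over columns of min over rows
    if upper == lower:                  # saddle point in pure strategies
        return upper, 1.0 if max(a, b) == upper else 0.0
    # No pure saddle point: the minimizer equalizes the two column payoffs.
    denom = a - b - c + d               # nonzero for 2x2 games without a saddle point
    return (a * d - b * c) / denom, (d - c) / denom


print(solve_2x2([[1, -1], [-1, 1]]))   # matching pennies: (0.0, 0.5)
```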

4.3. Objective function and the value of the Markov game

Since all matrices \(\bar{Q}_{wy}\), as well as the lengths of the jumps, are uniformly bounded, all assumptions of [ 14, Remark 4.2(b); 26, Theorem 5.1; 27, Assumptions 2.1, 2.2] are satisfied. Now, by [ 14, Proposition 3.1(a); 27, Sect. 2.3], consider each pair of time-dependent randomized strategies \((\bar{\mu},\bar{\nu})\in\check{\mathbb{U}}_{\varpi}\times\check{\mathbb{V}}_{\varpi}\) and each initial condition \(\varrho\) (a probability measure on \(\mathcal{Z}_{<}\)). For them, there exist a process \((\check{Y}(t))_{t\geq 0}\) generated by them and, hence, its distribution \(\check{\mathbb{P}}^{G}_{\bar{\mu},\bar{\nu}}[\varrho]\in\mathcal{P}(D(\mathbb{ R}_{+},\mathcal{Z}_{<}))\). Then each randomized strategy is admissible, and we can assign to it a process \((\check{Y}(t))_{t\geq 0}\) with distribution

Once again, to each element of the path space \(z\in D\big{(}\mathbb{R}_{+},\mathcal{Z}_{<}\big{)}\subset D\big{(}\mathbb{R}_{ +},\mathcal{X}_{<}\big{)}\), we assign the payoff \(\bar{\sigma}(z)\) by the rule (3.5). For each initial position \(z_{0}\in\mathcal{Z}_{<}\), by choosing their randomized strategies at time \(0\), the players can guarantee one of the following values, depending on which of them is informationally discriminated:

Moreover, as follows from [ 14, Theorem 5.1; 26, Theorem 2], the system of equations (see [ 14, (5.4); 26, (11)])

has a unique solution in view of equality (4.3), and this solution coincides with \(\check{\mathcal{V}}\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}\check{ \mathcal{V}}^{-}=\check{\mathcal{V}}^{+}\). Note that, by the construction of the matrix \(\bar{Q}\), each equation of the system contains at most \(2d+4\) nonzero terms. Moreover, for \(\pi_{0}w\geq\Gamma\), the value \(\check{\mathcal{V}}(w)\) is computed explicitly and equals \(e^{h(T+2\gamma-\pi_{0}w)}W(w)\). Since \(\mathcal{Z}_{<}\) contains only finitely many points with zero coordinate less than \(\Gamma\), the system thus reduces to finitely many equations.

4.4. Optimal stationary strategies

It follows from [ 26, (6); 14, (5.5)–(5.7)] that any stationary strategies \(\bar{\mu}^{\mathrm{opt}}\) and \(\bar{\nu}^{\mathrm{opt}}\) that solve for all \(w\in\mathcal{Z}_{<}\) the system

are optimal in this problem. Thus, now we can take \(\bar{\mu}^{\mathrm{opt}}\equiv\bar{\mu}^{\downarrow\check{\mathcal{V}}}\) and \(\bar{\nu}^{\mathrm{opt}}\equiv\bar{\nu}^{\uparrow\check{\mathcal{V}}}\) as optimal strategies; moreover, we can choose them so that they take values among Dirac measures over \({\hat{\mathbb{U}}}\) and \({\hat{\mathbb{V}}}\), respectively. In this case, the second component in \(\bar{\mu}^{\mathrm{opt}}=({u}^{\mathrm{opt}},\varphi^{\mathrm{opt}})\) is uniquely recovered by the rule

if \(|\pi_{d+1}w|<1\), \(\pi_{0}w\in[0;\Gamma)\), and \(\check{\mathcal{V}}(w+\pi_{d+1}(1+\pi_{0}w))\neq\check{\mathcal{V}}(w)\) and can be arbitrary if at least one of these conditions does not hold. A similar property holds for \(\bar{\nu}^{\mathrm{opt}}=({v}^{\mathrm{opt}},\psi^{\mathrm{opt}})\).
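The bang-bang shape of \(\varphi^{\mathrm{opt}}\) can be sketched as follows. The sketch relies on our reading of the elided rule. It assumes that the first player's intensity enters the minimized expression affinely, with a coefficient equal to \(\check{\mathcal{V}}(w+\pi_{d+1}(1+\pi_{0}w))-\check{\mathcal{V}}(w)\) times a nonnegative factor. Under that assumption, the minimizing player terminates as fast as allowed when termination lowers the value, and as slowly as allowed when it raises the value. The hypothetical helper `phi_opt` returns `None` wherever the text says the choice is arbitrary.

```python
def phi_opt(v_here, v_jump, pi0_w, last_w, Gamma, phi_minus, phi_plus):
    """Bang-bang termination intensity of the minimizing first player (hypothetical).

    v_here = V(w) and v_jump = V(w + pi_{d+1}(1 + pi_0 w)); pi0_w and last_w are the
    zero and last coordinates of w. Returns None where any value is optimal."""
    if abs(last_w) >= 1 or not (0 <= pi0_w < Gamma) or v_jump == v_here:
        return None
    # phi * (v_jump - v_here) is affine in phi, so it is minimized at an endpoint.
    return phi_plus if v_jump < v_here else phi_minus


# Brute-force check on a grid of [0.1, 2.0] for V(w) = 0.5 and V(jump) = 0.2.
grid = [0.1 + k * 0.019 for k in range(101)]
best = min(grid, key=lambda phi: phi * (0.2 - 0.5))
```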

4.5. Estimates for the zero coordinate, which is independent of the players’ actions

Fix some \(z_{0}\in\mathcal{Z}_{<}\) with \(\pi_{0}z_{0}=0\) and the Dirac measure \(\varrho\) supported at this point. Then, for arbitrary (possibly time-dependent) randomized strategies \(\bar{\mu}\) and \(\bar{\nu}\) of the players, there exists a distribution \(\check{\mathbb{P}}^{\mathrm{all}}_{\delta_{z_{0}}}\) of the process \((\check{Y}(t))_{t\geq 0}\) that depends linearly on this Dirac measure. For simplicity of notation, until the end of this section we write \(\check{\mathbb{P}}\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}\check{ \mathbb{P}}^{\mathrm{all}}_{\delta_{z_{0}}}\).

Note that, regardless of the players’ actions, the process \(\pi_{0}\check{Y}(t)\) has independent increments, and the random variables \(\pi_{0}(\check{Y}(t_{0}+t)-\check{Y}(t_{0}))/h\) have the Poisson distribution with parameter \(t/h\). In particular, their expected value and variance both equal \(t/h\). Since \(\pi_{0}\check{Y}(0)=\pi_{0}z_{0}=0\), this gives \(\check{\mathbb{E}}\pi_{0}\check{Y}(t)=t\) and \(\check{\mathbb{E}}|\pi_{0}\check{Y}(t)-t|^{2}=h^{2}\cdot t/h=ht\); in particular, for \(t=T+2\gamma\),
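The claims about the zero coordinate are easy to check by simulation. Whatever the players do, this coordinate jumps by \(h\) after independent exponential holding times with mean \(h\), i.e., at rate \(1/h\). The sketch below uses a hypothetical helper `clock_at` and illustrative values \(h=0.05\), \(t=1\). It estimates \(\check{\mathbb{E}}\pi_{0}\check{Y}(t)\) and \(\check{\mathbb{E}}|\pi_{0}\check{Y}(t)-t|^{2}\), which should be close to \(t\) and \(ht\), respectively.

```python
import random


def clock_at(t: float, h: float, rng: random.Random) -> float:
    """Zero coordinate at time t, started from 0: jumps of size h at rate 1/h."""
    elapsed, jumps = 0.0, 0
    while True:
        elapsed += rng.expovariate(1.0 / h)   # holding time with mean h
        if elapsed > t:
            return jumps * h
        jumps += 1


rng = random.Random(1)
h, t, n = 0.05, 1.0, 20000
samples = [clock_at(t, h, rng) for _ in range(n)]
mean = sum(samples) / n
msd = sum((x - t) ** 2 for x in samples) / n   # estimates E|pi_0 Y(t) - t|^2 = h t
```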

Finally, since \(\pi_{0}\check{Y}(t)-t\) is also a martingale, it follows by Doob’s inequality that

Now, let us consider the random variable \(\xi=\pi_{0}\check{Y}(\Gamma)/h\), which has Poisson distribution with parameter \(\Gamma/h\). According to the Chernoff bound (a variant of Markov’s inequality), for any negative \(\delta\), we have

The equality here is obtained by substituting the moment generating function of the Poisson distribution with parameter \(\Gamma/h\), namely, \(\check{\mathbb{E}}e^{\delta\xi}=\exp\big((\Gamma/h)(e^{\delta}-1)\big)\). Now, we use the inequality \(e^{\delta}-1\leq\delta+\delta^{2}/2\), which holds for \(\delta<0\), and substitute \({\delta}=-{\gamma}/{\Gamma}\), \(T=\Gamma-\gamma\), and \(\gamma=\sqrt{-2hT\ln(hT)}\). This finally yields

Similarly, for \(\check{\mathbb{P}}(\pi_{0}\check{Y}(\Gamma+\gamma)\leq{\Gamma})\) and \({\delta}=-{\gamma}/({\Gamma+\gamma})\), we have

Once again, using \(\gamma=\sqrt{-2hT\ln(hT)}<T/2\) and \(2\sqrt{hT}\leq\gamma\) (see (3.1)), we obtain
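The first tail bound can be checked numerically. The substitution \(T=\Gamma-\gamma\) indicates that the estimated event is \(\{\pi_{0}\check{Y}(\Gamma)\leq T\}=\{\xi\leq T/h\}\); the sketch assumes this. The Chernoff bound with \(\delta=-\gamma/\Gamma\) and \(e^{\delta}-1\leq\delta+\delta^{2}/2\) then gives \(\exp\big((\Gamma/h)(e^{\delta}-1)-\delta T/h\big)\leq\exp\big(-\gamma^{2}/(2h\Gamma)\big)=(hT)^{T/\Gamma}\). The sketch compares these quantities with the exact Poisson probability. The helper `poisson_cdf` and the values \(T=1\), \(h=0.01\) are illustrative.

```python
import math


def poisson_cdf(k: int, lam: float) -> float:
    """P(Poisson(lam) <= k), with terms computed in log space to avoid overflow."""
    return sum(math.exp(j * math.log(lam) - lam - math.lgamma(j + 1)) for j in range(k + 1))


T, h = 1.0, 0.01
gamma = math.sqrt(-2.0 * h * T * math.log(h * T))
Gamma = T + gamma
exact = poisson_cdf(math.floor(T / h), Gamma / h)        # P(xi <= T/h)
delta = -gamma / Gamma
chernoff = math.exp((Gamma / h) * (math.exp(delta) - 1.0) - delta * T / h)
bound = math.exp(-gamma ** 2 / (2.0 * h * Gamma))        # after e^d - 1 <= d + d^2/2
```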

4.6. Estimates for the trajectories in the Markov game

In this section, we derive an analog of the estimate [2, Lemma 12] for the classes of strategies we need.

Fix some pair of randomized strategies \(\bar{\mu}=(\mu,\varphi_{\mu})\), \(\bar{\nu}=(\nu,\psi_{\nu})\) and, hence, the distribution \(\check{\mathbb{P}}\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}\check{ \mathbb{P}}^{\mathrm{all}}_{\varrho}\) and the Itô measure \({\eta}(z;\cdot)\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}\check{\eta} (z,\bar{\mu},\bar{\nu};\cdot)\). This Itô measure corresponds to a Lévy–Khinchin generator (see [ 16, (5.1); 27, (2.14)]), which takes each function \(g\in C^{2}_{c}(\mathcal{Z}_{<})\) to a mapping \(x\mapsto\check{\Lambda}g(x)\) by the rule

Note that all the numbers \(\eta(x;\mathbb{X})\) are bounded uniformly in \(x\), the supports of the measures \(\eta(x;\cdot)\) are uniformly bounded, and \(\eta(x;G)\equiv 0\) for some neighborhood \(G\) of zero. Thus, the conditions [27, Assumptions 1, 2] are satisfied, and we have Dynkin’s formula [27, Proposition 2.3]:

for all \(g\in C^{2}_{c}(\mathcal{Z}_{<})\) and all nonnegative \(t\) and \(r\) (\(t\geq r\)); i.e., the process

is a martingale. By the uniform boundedness of the supports of the Itô measure, Dynkin’s formula (4.7) also holds for all \(g\in C^{2}(\mathcal{X})\), since these functions can always be assumed to be zero outside a compact neighborhood of the union of all supports.
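A minimal worked instance of Dynkin's formula (4.7) uses the zero coordinate alone, which jumps by \(h\) at rate \(1/h\) (Sect. 4.5). For any \(g\) depending only on \(\pi_{0}w\), the generator therefore reduces to \(\check{\Lambda}g(w)=\frac{1}{h}\big[g(w+h\pi_{0})-g(w)\big]\). Take \(g_{1}(w)=\pi_{0}w\) and \(g_{2}(w)=(\pi_{0}w)^{2}\); both lie in \(C^{2}(\mathcal{X})\), for which (4.7) was just shown to hold. Starting from \(\pi_{0}\check{Y}(0)=0\), the formula reproduces the moments used in Sect. 4.5:

```latex
\begin{aligned}
\check{\Lambda}g_{1}(w)&=\tfrac{1}{h}\big[(\pi_{0}w+h)-\pi_{0}w\big]=1,
&\quad \check{\mathbb{E}}\,\pi_{0}\check{Y}(t)&=\int_{0}^{t}1\,ds=t,\\
\check{\Lambda}g_{2}(w)&=\tfrac{1}{h}\big[(\pi_{0}w+h)^{2}-(\pi_{0}w)^{2}\big]=2\pi_{0}w+h,
&\quad \check{\mathbb{E}}\,(\pi_{0}\check{Y}(t))^{2}&=\int_{0}^{t}(2s+h)\,ds=t^{2}+ht,\\
&&\check{\mathbb{E}}\,|\pi_{0}\check{Y}(t)-t|^{2}&=(t^{2}+ht)-2t\cdot t+t^{2}=ht.
\end{aligned}
```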

Now, let the strategies \(\bar{\mu}=(\mu,\varphi_{\mu})\) and \(\bar{\nu}=(\nu,\psi_{\nu})\) be stepwise with some partition \((t_{k})_{k\in\mathbb{N}}\). Since such strategies are admissible, we once again have a distribution \(\check{\mathbb{P}}^{\mathrm{all}}_{\varrho}\). Fix some time \(t^{\prime}\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}t_{k-1}\) from the partition and an element \(w^{\prime}\in\mathcal{X}\), which can depend on \(\check{Y}(t^{\prime})\). Now, on the interval \([t^{\prime};t^{\prime\prime})\stackrel{{\scriptstyle\scriptstyle\triangle}}{{= }}[t_{k-1};t_{k})\), the strategies \(\bar{\mu}=(\mu,\varphi_{\mu})\), \(\bar{\nu}=(\nu,\psi_{\nu})\), regarded as program strategies that may depend on \(\check{Y}(\cdot)|_{[0;t^{\prime})}\), also have their Lévy–Khinchin generator (4.6), for which Dynkin’s formula (4.7) holds.

Denote by \({\mathbb{E}}^{\mathrm{all}}_{y^{\prime}}\) the conditional expected value with respect to \(y^{\prime}=\check{Y}(t^{\prime})\). Applying the constructed generator (4.6) to the function \(g(w)\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}||w-w^{\prime}||^{2}_{d}\) on the interval \([t^{\prime};t^{\prime\prime})\), we conclude that, according to (4.8),

is a martingale; therefore, for all \(t\in[t^{\prime};t^{\prime\prime})\),

Thus,

Define \(M\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}\sqrt{hL\sqrt{d+1}+L^{2}}\). We estimate the cross term in (4.10) by the sum of squares. Since the norms of (4.1) and (4.2) are bounded by \(L\) and \(hL\sqrt{d+1}\), respectively, we have

Hence, by Gronwall’s inequality, we have

for all \(t\in[t^{\prime};t^{\prime\prime})\); i.e.,

Thus, on any interval \([t^{\prime};t^{\prime\prime})\stackrel{{\scriptstyle\scriptstyle\triangle}}{{= }}[t_{k-1};t_{k})\) of the partition, the process

is a supermartingale. A stepwise strategy switches only at times specified in advance. Therefore, summing over all intervals of the partition, we obtain the supermartingale (4.12), as well as inequality (4.11), for all \(t\geq t^{\prime}\). Moreover, for \(w^{\prime}=y^{\prime}=\check{Y}(t^{\prime})\), (4.11) implies

By \(||\check{f}||_{d}\leq L\) and the upper bound \(hL\sqrt{d+1}\) for the norm of (4.2), we find from (4.10) that

for all \(t\) from the partition interval \([t^{\prime};t^{\prime\prime})\). Substituting estimate (4.13) here for \(w^{\prime}=y^{\prime}=\check{Y}(t^{\prime})\), we obtain

where, in the last step, we have used the inequality \(\arctan x\geq x-x^{3}/3\), which is valid for \(x\geq 0\). Thus, by (4.14), we have

for any \(t\in[t^{\prime};t^{\prime\prime})\), where \([t^{\prime};t^{\prime\prime})\) is an arbitrary interval from the partition chosen for the stepwise strategy.

Finally, since the functions \(g\) under consideration are independent of the last coordinate, the calculations and the resulting inequalities are independent of the choice of \(\varphi_{\mu}\) and \(\psi_{\nu}\).

5. SCHEME OF THE GUIDE AND A DOUBLE GAME

We take the product \(\mathbb{X}\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}\mathcal{X}_{<} \times\mathcal{Z}_{<}\) as the state space of a new game; then, the space of paths is the Skorokhod space \(D({\mathbb{R}_{+}},\mathcal{X}_{<}\times\mathcal{Z}_{<})\). The component \(\mathcal{X}_{<}\) carries the original game. The game on the component \(\mathcal{Z}_{<}\) (except for the last coordinate) is computed by the first player, and its position is the guide that helps to construct a strategy in \(\mathcal{X}_{<}\). The sets of control parameters of the players are \(\Upsilon_{1}\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}\mathbb{U} \times\check{\mathbb{U}}_{\varsigma}\times{\mathbb{V}}_{\varsigma}\) and \(\Upsilon_{2}\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}\bar{\mathbb{V}}\). Then all possible joint instantaneous controls form the set \(\Upsilon\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}\mathbb{U}\times \check{\mathbb{U}}_{\varsigma}\times{\mathbb{V}}_{\varsigma}\times\bar{\mathbb {V}}\). To describe an admissible dynamics of the double game, we construct a distribution \(\widetilde{\mathbb{P}}^{G}_{\upsilon}[\varrho]\) for each program strategy \(\upsilon(\cdot)\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}(u,\mu, \varphi,\nu,v,\psi)(\cdot)\in D(\mathbb{R}_{+},\Upsilon)\). This distribution depends linearly on the initial condition \(\varrho\in\mathcal{P}(\mathbb{X})\). Because of this linearity, it suffices to consider the case where \(\varrho\) is a Dirac measure supported at some point \((x,z)\in\mathcal{X}_{<}\times\mathcal{Z}_{<}\).

Let us first construct auxiliary processes \(({\hat{Y}}_{t})_{t\geq 0}\) and \((\check{Y}_{t})_{t\geq 0}\). For this, we consider on \(\mathcal{X}_{<}\) the dynamics \({\hat{\mathbb{P}}}^{G}_{(u,0,v,\psi)}[\delta_{x}](\cdot)\) of a differential game with zero intensity of the first player in the last coordinate. For \(\mathcal{Z}_{<}\), we introduce the dynamics \(\check{\mathbb{P}}^{G}_{(\mu,\varphi,\nu,0)}[\delta_{z}](\cdot)\) of a Markov game with zero intensity of the second player in the last coordinate. Both dynamics are admissible, and the corresponding processes \(({\hat{Y}}_{t})_{t\geq 0}\) and \((\check{Y}_{t})_{t\geq 0}\) exist. Since \(\pi_{d+1}{\hat{Y}}\) and \(\pi_{d+1}\check{Y}\) are governed by the intensities \(\psi\) and \(\varphi\), respectively, we can define \({\hat{\theta}}_{2}\) and \(\check{\theta}_{1}\) as the times at which the second and the first player, respectively, terminate the game. Hence, we obtain the stopping time \({\theta}_{\min}\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}\min(\check{ \theta}_{1},{\hat{\theta}}_{2})\). Further, each pair of trajectories \(({\hat{Y}}_{t},\check{Y}_{t})_{t\geq 0}\), together with the stopping times \({\hat{\theta}}_{2}\) and \(\check{\theta}_{1}\), corresponds to a trajectory \(({\hat{w}},\check{w})(\cdot)\in D([0;\infty),\mathbb{R}^{2})\) according to the following rules: \(({\hat{w}},\check{w})\equiv(0,0)\) for \(\theta_{\min}\geq T\);

for \({\theta}_{\min}={\hat{\theta}}_{2}<T\) and \({\theta}_{\min}=\check{\theta}_{1}<T\), respectively.

Finally, we define the process \((Y^{\prime}(t),Y^{\prime\prime}(t))_{t\geq 0}\) with values in \(\mathcal{X}_{<}\times\mathcal{Z}_{<}\) as the push-forward of the process \(({\hat{Y}}_{t},\check{Y}_{t},({\hat{w}},\check{w})(t))_{t\geq 0}\) by the nonanticipating mapping

The resulting process specifies a dynamics for each joint program strategy from \(D(\mathbb{R}_{+},\Upsilon)\). Thus, an admissible dynamics is constructed.

Let us mention some properties of the process. First, in each component, the dynamics with respect to all coordinates except the last one coincides completely with the dynamics described earlier for the extended original game and for the Markov game, respectively. Second, in each component, the lengths of the jumps and their intensities up to the time \(\min\big(\{t\,|\,\pi_{0}Y^{\prime\prime}(t)\geq\Gamma\}\cup\{T\}\big)\) obey the same Lévy measures as in these games. Third, before the time \(T\), the last coordinates of the two components, both zero at the initial moment, can become nonzero only simultaneously. Thus, the randomness of the component \(Y^{\prime}(t)\) is completely described by the randomness of the last coordinate of the component \(Y^{\prime\prime}(t)\). Hence, the first player does not need to know the intensity of the second player during the game to compute the last coordinate of \(Y^{\prime\prime}(t)\), since this coordinate can change only at the moment the game terminates. Finally, this means that the control of the second player can be given in the format of a control of the original game, which is ensured by the choice \(\Upsilon_{1}=\mathbb{U}\times\check{\mathbb{U}}_{\varsigma}\times{\mathbb{V}}_ {\varsigma}\), \(\Upsilon_{2}=\bar{\mathbb{V}}\).

5.1. Aiming

To construct the strategy of the first player and the response of the second player as imagined by the first player, we need controls that aim at, or steer away from, positions on each component. Let us introduce the required functions.

First note that, in view of (1.2), for any vectors \(x,w\in\mathcal{X}\), there exist controls \(u^{\mathrm{to}\ w}(x)\in\mathbb{U}\) and \(v^{\mathrm{from}\,w}(x)\in\mathbb{V}\) such that

for all \((\varphi,\psi)\in[\varphi_{-};\varphi_{+}]\times[\psi_{-};\psi_{+}]\). For any vector \(w\in\mathcal{X}\), we introduce a control of the first player by the rule

For all \(x,w\in[0;T)\times\mathbb{R}^{d+1}\), since \({\hat{f}}\) is Lipschitz, we have

where the inequality holds due to the substitution \(u=u^{\prime}\), \(v^{\prime}=v\).
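Over finite control sets, the aiming controls can be computed by enumeration. The sketch below relies on our reading of the elided displays (1.2) and (5.1) and assumes the standard extremal-aiming rule. Under this rule, \(u^{\mathrm{to}\,w}(x)\) minimizes \(\max_{v}\langle x-w,f\rangle\), \(v^{\mathrm{from}\,w}(x)\) maximizes \(\min_{u}\langle x-w,f\rangle\), and the two values coincide under Isaacs' condition. The dynamics `f`, the control sets, and the helper `aiming_controls` are hypothetical. As in \(\mathcal{X}\), time is treated as part of the state.

```python
def dot(p, q):
    return sum(pi * qi for pi, qi in zip(p, q))


def aiming_controls(x, w, f, U, V):
    """Extremal aiming over finite control sets (hypothetical helper).

    Returns (u_to, v_from, upper, lower): u_to minimizes max_v <x - w, f(x, u, v)>,
    v_from maximizes min_u <x - w, f(x, u, v)>; upper == lower under Isaacs' condition."""
    s = [xi - wi for xi, wi in zip(x, w)]            # direction from w to x
    u_to = min(U, key=lambda u: max(dot(s, f(x, u, v)) for v in V))
    v_from = max(V, key=lambda v: min(dot(s, f(x, u, v)) for u in U))
    upper = max(dot(s, f(x, u_to, v)) for v in V)
    lower = min(dot(s, f(x, u, v_from)) for u in U)
    return u_to, v_from, upper, lower


# Toy separable dynamics f = u + v in the plane, for which Isaacs' condition holds.
def f(x, u, v):
    return (u[0] + v[0], u[1] + v[1])


U = [(-1.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
V = [(0.5, 0.0), (-0.5, 0.0)]
result = aiming_controls((1.0, 0.0), (0.0, 0.0), f, U, V)
```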

In order to define the behavior of the second player on the component \(\mathcal{Z}_{<}\), we assign for each \(x\in\mathcal{X}\) a stationary randomized strategy of the second player in the form

Then, for any stationary randomized strategy \(\bar{\mu}\) of the first player, we have

Therefore, for all \(\bar{v}\), \(\bar{\mu}\), \(\varphi\), and \(\psi\) in the case \(x,w\in[0;T)\times\mathbb{R}^{d+1}\) we have

Fix a pair \(({x^{\prime},w^{\prime}})\in\mathcal{X}\times\mathcal{Z}_{<}\). Consider a mapping \(R_{x^{\prime},w^{\prime}}:\mathcal{X}\times\mathcal{X}\to\mathbb{R}_{+}\) independent of the \((d+1)\)th coordinates of its arguments and given by the rule

To obtain an analog of (4.9), to each stationary strategy \(\bar{\mu}\) we assign the generator (4.6) corresponding to the joint stationary strategy \((\bar{\mu},\bar{\nu}^{\mathrm{from}\,x^{\prime}}(w^{\prime}))\) for the function \(g(w)\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}{R}_{w^{\prime},x^{ \prime}}(w)\):

Now, for \(x,w,x^{\prime},w^{\prime}\in[0;T]\times\mathbb{R}^{d+1}\), since \({\hat{f}}\) is Lipschitz, we have

For any solution \(t\mapsto y(t)\) of system (3.3) generated by the joint control \((\bar{u},\bar{v})\), we have

for any smooth function \(g\). Hence, similarly to (4.9), for \({\hat{\Lambda}}[\bar{u},\bar{v}]g(x)\stackrel{{\scriptstyle\scriptstyle \triangle}}{{=}}\Big{\langle}\dfrac{\partial g(x)}{\partial x}|_{x=y(t)},{\hat {f}}(x,\bar{u},\bar{v})\Big{\rangle}\), the process

is a martingale for all smooth functions \(g:\mathcal{X}\to\mathbb{R}\) independent of the last coordinate; in particular, Dynkin’s formula (4.7) holds. In addition, we have

in the case \(x,w,x^{\prime},w^{\prime}\in[0;T]\times\mathbb{R}^{d+1}\). Adding (5.4) and (5.2), we obtain for all such \(x\), \(w\), \(x^{\prime}\), and \(w^{\prime}\) and any \(\bar{\mu}\in\check{\mathbb{U}}_{\varsigma}\), \(\bar{v}\in\bar{\mathbb{V}}\) the estimate

5.2. Construction of a guide for the first player

Suppose that the first player learns the position of the trajectory \(x(\cdot)\) of the real game at some times \((t_{k})_{k\in\mathbb{N}}\) given in advance. We assume that this sequence of times is increasing, \(t_{0}=0\), and some \(t_{k}\) equals \(T\). For this sequence of times, we define the fineness of the partition \(r\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}\max_{k\in\mathbb{N},t_{k} \leq T}|t_{k}-t_{k-1}|\) and the mapping \(\mathbb{R}_{+}\ni t\mapsto\tau_{\Delta}(t)\stackrel{{\scriptstyle\scriptstyle \triangle}}{{=}}\max\{t_{i}\,|\,t_{i}\leq t\}\).
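Both partition quantities are straightforward to compute. The sketch below uses a finite, increasing list of partition times starting at 0 and finds \(\tau_{\Delta}(t)\) by binary search. The helper names `fineness` and `tau_delta` are hypothetical.

```python
import bisect


def fineness(ts, T):
    """r = max |t_k - t_{k-1}| over the steps with t_k <= T."""
    return max(b - a for a, b in zip(ts, ts[1:]) if b <= T)


def tau_delta(ts, t):
    """tau_Delta(t) = max{t_i : t_i <= t}; requires ts increasing, ts[0] = 0, t >= 0."""
    return ts[bisect.bisect_right(ts, t) - 1]


ts = [0.0, 0.25, 0.5, 1.0, 1.5]
```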

Let us describe a stepwise strategy of the first player for the chosen partition \((t_{k})_{k\in\mathbb{N}}\).

Let the first player apply an optimal stationary strategy \(\mu^{\mathrm{opt}}\) at each time on the component \(\mathcal{Z}_{<}\); in particular, let the first player use \(\varphi^{\mathrm{opt}}[z(t)]\) as their intensity of termination of the game. The first player then uses the same intensity \(\varphi^{\mathrm{opt}}[z(t)]\) on the first component as well, i.e., in the real game.

Let the first player try to minimize the distance from \(z(\cdot)\) to \(x(\cdot)\) on the first (real) component as follows: on the interval \([t_{k-1};t_{k})\), this player uses a control that is best (at the time \(t_{k-1}\)) for shifting \(x\) toward \(z(t_{k-1})\). Thus, the first player applies the rule \(\mathbb{R}_{+}\ni t\mapsto\big(u^{\mathrm{to}\ z(\tau_{\Delta}(t))}(x(\tau_{\Delta}(t))),\mu^{\mathrm{opt}}[z],\varphi^{\mathrm{opt}}[z]\big)\in\Upsilon_{1}\stackrel{\triangle}{=}\mathbb{U}\times\check{\mathbb{U}}_{\varsigma}\). Further, within the subgame on \(\mathcal{Z}_{<}\) computed by the first player, let the second player, as modeled by the first, try to maximize the distance from \(z(\cdot)\) to \(x(\cdot)\) by applying a stationary strategy that is worst for shifting \(z\) toward \(x(\tau_{\Delta}(t))\). Accordingly, the strategy \(\mathbb{R}_{+}\ni t\mapsto\nu^{\mathrm{from}\,x(\tau_{\Delta}(t))}\), which depends on \(x\) in a nonanticipating way and on \(z\) in a stationary way, is used as \(\nu\). Thus, the following strategy of the first player is specified: at each time,

Since this strategy is stepwise, it is admissible.
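The stepwise (sample-and-hold) structure of this rule can be illustrated on a toy deterministic system. The sketch below is only an illustration under several assumptions not made in the paper: the control sets are finite, the guide \(z(\cdot)\) is a given deterministic function of time rather than a simulated Markov chain, termination intensities and the strategies \(\mu^{\mathrm{opt}}\), \(\nu^{\mathrm{from}\,x}\) are omitted, and "the control best for shifting \(x\) toward \(z\)" is realized by a Krasovskii-style extremal shift (minimizing over \(u\) the worst case over \(v\) of \(\langle x-z,f(x,u,v)\rangle\)). The names `extremal_shift_control` and `stepwise_play` are hypothetical.

```python
def extremal_shift_control(x, z, f, U, V):
    """Hypothetical realization of 'the control best for shifting x toward z':
    choose u in the finite set U minimizing the worst case over v in V of the
    rate <x - z, f(x, u, v)> (Krasovskii-style extremal shift)."""
    def worst_rate(u):
        return max(
            sum((xi - zi) * fi for xi, zi, fi in zip(x, z, f(x, u, v)))
            for v in V
        )
    return min(U, key=worst_rate)


def stepwise_play(x0, guide, partition, f, U, V, opponent, dt=1e-3):
    """Sample-and-hold play: the control chosen at t_{k-1} from x(t_{k-1}) and
    guide(t_{k-1}) is kept on [t_{k-1}, t_k); the opponent may change v freely.
    The state equation x' = f(x, u, v) is integrated by the explicit Euler method."""
    x, t = list(x0), partition[0]
    for t_prev, t_next in zip(partition, partition[1:]):
        u = extremal_shift_control(x, guide(t_prev), f, U, V)  # frozen on the step
        while t < t_next - 1e-12:
            step = min(dt, t_next - t)
            v = opponent(t, x)
            x = [xi + step * fi for xi, fi in zip(x, f(x, u, v))]
            t += step
    return x


f = lambda x, u, v: [u + v]          # toy scalar dynamics (assumption)
part = [k / 10 for k in range(11)]   # uniform partition of [0; 1]
print(stepwise_play([1.0], lambda t: [0.0], part, f, [-1.0, 1.0], [-0.5, 0.5],
                    lambda t, x: 0.5))
```

In the usage line the opponent always pushes away from the guide at \(z\equiv 0\), yet the extremal shift keeps choosing \(u=-1\), so the state moves toward the guide at rate \(1/2\). The point of the design is that the control is recomputed only at the partition times, which is what makes the strategy stepwise.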

With all the required constructions in place, we now proceed to the proof of the theorem.

6. PROOF OF THEOREM 1

Assume that the second player has full information about the strategy of the first player; in particular, the second player knows both the rule (5.6) and the positions \(x\) and \(z\) at each time \(t\) and, hence, knows the trajectory of the first player’s double game realized in the course of the game. Fix an arbitrary counterstrategy of the second player, which assigns in a nonanticipating way to each trajectory \((x,z)(\cdot)\) of the double game a distribution \(\widetilde{\mathbb{Q}}^{\mathrm{II}}[\widetilde{\mathbb{P}}^{\mathrm{I}}]_{(x,z)(\cdot)}\) on the Skorokhod space \(D(\mathbb{R}_{+},\mathbb{U}\times\check{\mathbb{U}}_{\varsigma}\times\mathbb{V}_{\varsigma}\times\bar{\mathbb{V}})\) whose projection onto \(D(\mathbb{R}_{+},\mathbb{U}\times\check{\mathbb{U}}_{\varsigma}\times\mathbb{V}_{\varsigma})\) coincides with the distribution \(\widetilde{\mathbb{P}}^{\mathrm{I}}\) chosen by the first player together with the rule (5.6). In particular, as the counterstrategy \(\widetilde{\mathbb{Q}}^{\mathrm{II}}\), one can take an arbitrary element \(\mathbb{Q}^{\mathrm{II}}\in\mathfrak{Q}^{\mathrm{II}}\), i.e., a counterstrategy of the original game; in this case, the above mapping does not depend on \(z\).

Fix initial conditions \((x_{0}\stackrel{\triangle}{=}(0,x_{*},0),z_{0}=(0,y_{0},0))\) such that \(\|x_{*}-y_{0}\|^{2}\leq 7L^{2}\gamma^{2}e^{-12LT}\) and \(y_{0}\in h\mathbb{Z}^{d}\). For this, it suffices (see (3.1)) to choose as \(y_{0}\) the point of \(h\mathbb{Z}^{d}\) nearest to \(x_{*}\). As the strategy \(\mathbb{P}^{\mathrm{I}}\) of the first player, we take the stepwise strategy described above and fix an arbitrary realization \(\widetilde{\mathbb{P}}^{\mathrm{all}}_{\delta_{(x_{0},z_{0})}}\) of the resulting joint strategy \(\widetilde{\mathbb{P}}^{\mathrm{I+II}}\). This specifies both the probability \(\widetilde{\mathbb{P}}^{\chi}_{\delta_{(x_{0},z_{0})}}\), which tracks only the position of the system, and the realization \(\hat{\mathbb{P}}^{\mathrm{all}}_{\delta_{x_{0}}}\), which does not contain the guide. In particular, if the second player chooses the counterstrategy \(\mathbb{Q}^{\mathrm{II}}=\widetilde{\mathbb{Q}}^{\mathrm{II}}\) among the counterstrategies of the original game, then the distribution \(\hat{\mathbb{P}}^{\mathrm{all}}_{\delta_{x_{0}}}\) is a realization of some joint strategy from \(\mathbb{Q}^{\mathrm{II}}[\mathbb{P}^{\mathrm{I}}]\).
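Choosing the nearest grid point amounts to coordinatewise rounding. The minimal sketch below (function names are illustrative) shows that each coordinate of \(x_{*}-y_{0}\) is then at most \(h/2\) in absolute value, so \(\|x_{*}-y_{0}\|^{2}\leq dh^{2}/4\); that this is below \(7L^{2}\gamma^{2}e^{-12LT}\) is the content of (3.1), which is not reproduced in this excerpt, so the sketch only checks the \(dh^{2}/4\) bound.

```python
def nearest_lattice_point(x, h):
    """Nearest point of the grid h*Z^d to x, obtained by rounding each coordinate.

    Python's round() breaks ties to even, which is still a nearest point.
    """
    return [h * round(xi / h) for xi in x]


def sq_dist(a, b):
    """Squared Euclidean distance between two points of R^d."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


x_star, h = [0.23, -1.07, 3.5], 0.1
y0 = nearest_lattice_point(x_star, h)
print(y0, sq_dist(x_star, y0), len(x_star) * h * h / 4)
```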

Since the initial position is fixed, we will omit the corresponding lower indices.

6.1. Divergence of trajectories

Define a stopping time \(\tau\stackrel{\triangle}{=}\min\big(\{t\,|\,\pi_{0}Y^{\prime\prime}(t)\geq T\}\cup\{T\}\big)\) and consider two consecutive times \(t_{k-1}\) and \(t_{k}\) from the partition and, together with them, stopping times \(t^{\prime}\stackrel{\triangle}{=}\min(t_{k-1},\tau)\) and \(t^{\prime\prime}\stackrel{\triangle}{=}\min(t_{k},\tau)\). On the interval \([t^{\prime};t^{\prime\prime})\), the first player implements a constant control \((\bar{u}^{\mathrm{to}\ w^{\prime}}(x^{\prime}),\,\bar{\mu}^{\mathrm{opt}},\nu^{\mathrm{from}\,x^{\prime}})\), where \(x^{\prime}=Y^{\prime}(t^{\prime})\) and \(w^{\prime}=Y^{\prime\prime}(t^{\prime})\). Disintegrating \(\widetilde{\mathbb{P}}^{\chi}\) over \(x^{\prime}=Y^{\prime}(t^{\prime})\) and \(w^{\prime}=Y^{\prime\prime}(t^{\prime})\), we consider for all \(x^{\prime}\in\mathcal{X}\) and \(w^{\prime}\in\mathcal{Z}_{<}\) the corresponding conditional expected value \(\widetilde{\mathbb{E}}^{\chi}_{t^{\prime},x^{\prime},w^{\prime}}\) of the difference \(\|Y^{\prime\prime}(t^{\prime\prime})-Y^{\prime}(t^{\prime\prime})\|^{2}_{d}-\|Y^{\prime\prime}(t^{\prime})-Y^{\prime}(t^{\prime})\|^{2}_{d}\).
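The stopping times \(\tau\), \(t^{\prime}\), and \(t^{\prime\prime}\) can be evaluated on a sample path of the guide’s time coordinate. The sketch assumes, for illustration only, that \(t\mapsto\pi_{0}Y^{\prime\prime}(t)\) is given as a right-continuous piecewise-constant path by its jump times and values (the name `stopping_times` is hypothetical). It also checks the bound \(t^{\prime\prime}-t^{\prime}\leq t_{k}-t_{k-1}\) that is used later when \(t^{\prime\prime}\) is substituted for \(t\).

```python
def stopping_times(clock, T, t_prev, t_next):
    """clock: time-sorted list of (t, c) pairs describing a piecewise-constant,
    right-continuous path t -> pi_0 Y''(t) that jumps to value c at time t.

    Returns (tau, t', t'') with tau = min({t : pi_0 Y''(t) >= T} U {T}),
    t' = min(t_prev, tau) and t'' = min(t_next, tau).
    """
    hits = [t for t, c in clock if c >= T]  # jump times at which the clock is >= T
    tau = min(hits + [T])
    return tau, min(t_prev, tau), min(t_next, tau)


clock = [(0.0, 0.0), (0.4, 0.45), (0.9, 1.02), (1.3, 1.4)]
part = [0.0, 0.25, 0.5, 1.0, 1.5]
for a, b in zip(part, part[1:]):
    tau, t1, t2 = stopping_times(clock, 1.0, a, b)
    print(a, b, tau, t1, t2, t2 - t1 <= b - a)
```

For every step the increment \(t^{\prime\prime}-t^{\prime}\) is either \(t_{k}-t_{k-1}\) (if \(\tau\geq t_{k}\)), \(\tau-t_{k-1}\) (if \(t_{k-1}\leq\tau<t_{k}\)), or \(0\) (if \(\tau<t_{k-1}\)).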

From the definitions of \(R_{x^{\prime},w^{\prime}}\) and \(R_{w^{\prime},x^{\prime}}\) (see (5.1)) and the chain of equalities

we have the inequality \(\big{(}||x-w||^{2}_{d}-||x^{\prime}-w^{\prime}||^{2}_{d}\big{)}/2\leq||x-x^{ \prime}||^{2}_{d}+||w-w^{\prime}||^{2}_{d}+R_{x^{\prime},w^{\prime}}(x)+R_{w^{ \prime},x^{\prime}}(w)\). Putting \(S(x,w,x^{\prime},w^{\prime})\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=} }||x-x^{\prime}||^{2}_{d}+R_{x^{\prime},w^{\prime}}(x)+R_{w^{\prime},x^{\prime }}(w)+||w-w^{\prime}||^{2}_{d}\), we get

Let us estimate the expected values of \(R\) and \(S\). Since (4.8) and (5.3) are martingales, substituting the stopping time \(t^{\prime\prime}\) yields

Adding these two relations and using inequality (5.5), we get

Thus, the following inequality holds for \(S\):

By the estimates (3.4) and (4.15), we have

for all \(t\in[t_{k-1};t_{k}]\), where \(M^{2}=hL\sqrt{d+1}+L^{2}\). To estimate \(\widetilde{\mathbb{E}}^{\chi}_{t^{\prime},x^{\prime},w^{\prime}}S(Y^{\prime}(t^{\prime\prime}),Y^{\prime\prime}(t^{\prime\prime}),x^{\prime},w^{\prime})\), we substitute as \(t\) the stopping time \(t^{\prime\prime}\leq t^{\prime}+t_{k}-t_{k-1}\) and define the function \(g(r)\stackrel{\triangle}{=}(h\sqrt{d+1}+4M(e^{r}-1)^{3/2}/3r+Lr)(1+Lr)/6\), where \(r=\max_{t_{i}\leq T}(t_{i}-t_{i-1})\) is the fineness of the partition. Then

Now, returning to (6.1), we obtain

Integrating the resulting estimate over all \(t^{\prime}=\min(t_{k-1},\tau)\) and \((x^{\prime},w^{\prime})=(Y^{\prime}(t^{\prime}),Y^{\prime\prime}(t^{\prime}))\), we get

now, substituting \(t^{\prime}=\min(t_{k-1},\tau)\) and \(t^{\prime\prime}=\min(t_{k},\tau)\), we obtain

Since this holds for every interval \([t_{i-1};t_{i})\), multiplying the corresponding inequality by \(e^{12L(t_{k}-t_{i})}\), we also obtain

Successively substituting these inequalities into each other, we get

bounding the lower Riemann sum here by the corresponding integral, we obtain

for all natural \(k\). Now, from \(||Y^{\prime}(0)-Y^{\prime\prime}(0)||_{d}^{2}\leq 7L^{2}e^{-12LT}\gamma^{2}\), since \(T\) belongs to the partition, we finally derive
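The passage from the one-step estimates to the final bound is a discrete Grönwall argument. The sketch below assumes, as an illustration only, a one-step estimate of the generic form \(a_{i}\leq e^{\lambda(t_{i}-t_{i-1})}a_{i-1}+C(t_{i}-t_{i-1})\) with \(\lambda=12L\) and a placeholder constant \(C\geq 0\); the paper’s actual constants are in the displays omitted from this excerpt. Unrolling gives \(a_{k}\leq e^{\lambda t_{k}}a_{0}+C\sum_{i}e^{\lambda(t_{k}-t_{i})}(t_{i}-t_{i-1})\), and since \(t\mapsto e^{\lambda(t_{k}-t)}\) is decreasing, evaluating it at right endpoints gives a lower Riemann sum, which is at most \(\int_{0}^{t_{k}}e^{\lambda(t_{k}-t)}\,dt=(e^{\lambda t_{k}}-1)/\lambda\).

```python
import math


def unroll(a0, steps, lam, C):
    """Iterate the worst case a_i = exp(lam*dt_i) * a_{i-1} + C*dt_i of the
    assumed one-step estimate over the step lengths dt_i."""
    a = a0
    for dt in steps:
        a = math.exp(lam * dt) * a + C * dt
    return a


def closed_form_bound(a0, t_k, lam, C):
    """exp(lam*t_k)*a0 + C*(exp(lam*t_k) - 1)/lam, obtained by replacing the
    lower Riemann sum sum_i exp(lam*(t_k - t_i))*dt_i with its integral."""
    return math.exp(lam * t_k) * a0 + C * (math.exp(lam * t_k) - 1.0) / lam


steps = [0.1, 0.25, 0.05, 0.3]  # a nonuniform partition of [0; 0.7]
print(unroll(0.5, steps, 12.0, 2.0), closed_form_bound(0.5, sum(steps), 12.0, 2.0))
```

As the partition is refined, the unrolled value approaches the closed-form bound from below, which is why the integral form costs nothing in the limit.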

Recall that, as shown in (4.4),

Then the estimate (3.4) implies

Further, recall that \(e^{-t}(||\check{Y}(t)-w^{\prime}||_{d}^{2}+M^{2})\) is a supermartingale for \(M^{2}=hL\sqrt{d+1}+L^{2}\) (see (4.12)). Then, for the stopping times \(\min(T,\tau)\) and \(T+2\gamma\), we have the inequality

Estimating its right-hand side by means of

and putting \(H(\gamma)\stackrel{{\scriptstyle\scriptstyle\triangle}}{{=}}(hL\sqrt{d+1}+L^{2 })\gamma e^{2\gamma}(2+\sqrt{2}e^{T})\), we obtain

and

Together with (6.2) and (6.4), since \(\sqrt{7}+3\sqrt{2}<7\), we get
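The numerical fact \(\sqrt{7}+3\sqrt{2}<7\) used here can be checked exactly. Since \(7-\sqrt{7}>0\), it is equivalent to \(18<(7-\sqrt{7})^{2}=56-14\sqrt{7}\), i.e., to \(14\sqrt{7}<38\), i.e., to \(196\cdot 7<38^{2}\); the short check below confirms this integer inequality and the floating-point value.

```python
import math

# sqrt(7) + 3*sqrt(2) < 7  <=>  3*sqrt(2) < 7 - sqrt(7)   (both sides positive)
#                          <=>  18 < 56 - 14*sqrt(7)      (squaring)
#                          <=>  14*sqrt(7) < 38           <=>  196*7 < 38**2
exact_holds = 196 * 7 < 38 ** 2          # 1372 < 1444
numeric_lhs = math.sqrt(7) + 3 * math.sqrt(2)
print(exact_holds, numeric_lhs)
```

The margin is small (\(14\sqrt{7}\approx 37.04\)), which is why the integer comparison is a useful sanity check.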

6.2. Divergence of payoffs

Denote by \(A\) the event

here \({\hat{Y}}(\cdot)\) is the trajectory of the original game. Note that \({\hat{Y}}(\cdot)\) can differ from \({Y}^{\prime}(\cdot)\) only on \([T;\infty)\) and only because of a jump of \({Y}^{\prime}\) in the last coordinate. Jumps are impossible after the stopping time \(\tau^{\prime}=\min\{t\,|\,\pi_{0}Y^{\prime\prime}(t)>T+\gamma\}\). Since the intensities of the players do not exceed \(L\), we find that the probability of such a jump does not exceed

consequently, the probability of the event \((\bar{\sigma}(\hat{Y})\neq\bar{\sigma}(Y^{\prime}))\) does not exceed this bound either. On the other hand, (4.5) implies \(\widetilde{\mathbb{P}}^{\mathrm{all}}((\pi_{0}Y^{\prime\prime}(T+2\gamma)>T+\gamma)\,\&\,(\pi_{0}Y^{\prime\prime}(T+\gamma)>T))\geq 1-\gamma\). Since \(L\geq 2\) and the absolute values of the functions \(W\) and \(\bar{\sigma}\) are bounded by 1, we have

Consider a trajectory for which the event \(A\) occurs. Recall that \(\hat{Y}(t)=Y^{\prime}(t)=Y^{\prime}(t_{T})\) and \(Y^{\prime\prime}(t)=Y^{\prime\prime}(t_{T})\) for all \(t\geq t_{T}\stackrel{\triangle}{=}\sup\big(\{t\,|\,\pi_{0}Y^{\prime\prime}(t)<T+\gamma\}\cup\{T\}\big)\). Now, on the event \(A\), we have

Moreover, on the event \(A\), a jump in the last coordinate occurs at most once, and it occurs simultaneously in both components, with sizes \(1+\pi_{0}Y^{\prime}\) and \(1+\pi_{0}Y^{\prime\prime}\), respectively. Hence, it follows from (6.3) that

whence, in particular, \(\widetilde{\mathbb{P}}^{\mathrm{all}}(A)\,\widetilde{\mathbb{E}}^{\mathrm{all}}\big(|\pi_{d+1}(Y^{\prime}(t_{T})-Y^{\prime\prime}(t_{T}))|\,\big|\,A\big)\leq\sqrt{2}\gamma\leq L\gamma\) (recall that \(L\geq 2\)). Then, since the mapping \(y\mapsto W(y)\) is \(3L\)-Lipschitz, it follows from (6.5) that

Together with (6.6), this gives

Recall that, by the construction of the double game, \(Y^{\prime\prime}\) is the process generated in the Markov game in which the first player uses the strategy \(\bar{\mu}^{\mathrm{opt}}\equiv\bar{\mu}^{\downarrow\check{\mathcal{V}}}\) on each interval \([t_{k-1};t_{k})\). Hence, \(\check{\mathcal{V}}(Y^{\prime\prime}(t))\) is a supermartingale on each of these intervals; i.e.,

Combining these inequalities over all intervals, we find that \(\check{\mathcal{V}}(z_{0})\geq\widetilde{\mathbb{E}}^{\mathrm{all}}\bar{\sigma}(Y^{\prime\prime})\); hence, by (6.7),

for any actions \(\bar{v}\) of the opponent. Since \(\widetilde{\mathbb{E}}^{\mathrm{all}}\bar{\sigma}(\hat{Y})\) is exactly the objective function of the original game, and playing with a guide within the double game is one of the ways in which the first player can play the original game when discriminated by the second player, we have shown that

Similarly, using a guide, we construct a strategy for the second player with a symmetric estimate. Since \(\gamma=\sqrt{2hT\ln(hT)}\), and the grid step \(h\) and partition fineness \(r\) can be chosen arbitrarily small, the equality \(V^{+}=V^{-}\) is also shown.

REFERENCES

R. Amir, I. V. Evstigneev, and K. R. Schenk-Hoppé, “Asset market games of survival: A synthesis of evolutionary and dynamic games,” Annals Finance 9 (2), 121–144 (2013). https://doi.org/10.1007/s10436-012-0210-5

Y. Averboukh, “Approximate solutions of continuous-time stochastic games,” SIAM J. Control Optim. 54 (5), 2629–2649 (2016). https://doi.org/10.1137/16M1062247

Y. Averboukh, “Approximate public-signal correlated equilibria for nonzero-sum differential games,” SIAM J. Control Optim. 57 (1), 743–772 (2019). https://doi.org/10.1137/17M1161403

A. Basu and L. Stettner, “Zero-sum Markov games with impulse controls,” SIAM J. Control Optim. 58 (1), 580–604 (2020). https://doi.org/10.1137/18M1229365

A. Bensoussan and A. Friedman, “Nonlinear variational inequalities and differential games with stopping times,” J. Functional Anal. 16 (3), 305–352 (1974). https://doi.org/10.1016/0022-1236(74)90076-7

A. Bensoussan and A. Friedman, “Nonzero-sum stochastic differential games with stopping times and free boundary problems,” Trans. Amer. Math. Soc. 231 (2), 275–327 (1977). https://doi.org/10.1090/S0002-9947-1977-0453082-7

T. R. Bielecki, S. Crépey, M. Jeanblanc, and M. Rutkowski, “Arbitrage pricing of defaultable game options with applications to convertible bonds,” Quantitative Finance 8 (8), 795–810 (2008). https://doi.org/10.1080/14697680701401083

P. Billingsley, Convergence of Probability Measures (Wiley, New York, 1968; Nauka, Moscow, 1977).

A. A. Borovkov, Probability Theory (URSS, Moscow, 1999; Springer, London, 2013).

E. B. Dynkin, “Game variant of a problem on optimal stopping,” Soviet Math. Dokl. 10, 270–274 (1969).

E. Ekström and G. Peskir, “Optimal stopping games for Markov processes,” SIAM J. Control Optim. 47 (2), 684–702 (2008). https://doi.org/10.1137/060673916

F. Gensbittel and C. Grün, “Zero-sum stopping games with asymmetric information,” Math. Oper. Res. 44 (1), 277–302 (2019). https://doi.org/10.1287/moor.2017.0924

E. Gromova, A. Malakhova, and A. Palestini, “Payoff distribution in a multi-company extraction game with uncertain duration,” Math. 6 (9), article no. 165 (2018). https://doi.org/10.3390/math6090165

X. Guo and O. Hernández-Lerma, “Zero-sum continuous-time Markov games with unbounded transition and discounted payoff rates,” Bernoulli 11 (6), 1009–1029 (2005). https://doi.org/10.3150/bj/1137421638

S. Hamadène, “Mixed zero-sum stochastic differential game and American game options,” SIAM J. Control Optim. 45 (2), 496–518 (2006). https://doi.org/10.1137/S036301290444280X

V. N. Kolokoltsov, Markov Processes, Semigroups and Generators (De Gruyter, Berlin, 2011), Ser. De Gruyter Studies in Mathematics, Vol. 38.

N. N. Krasovskii, “An approach–evasion game with a stochastic guide,” Dokl. Akad. Nauk SSSR 237 (5), 1020–1023 (1977).

N. N. Krasovskii and A. N. Kotel’nikova, “On a differential interception game,” Proc. Steklov Inst. Math. 268 (1), 161–206 (2010). https://doi.org/10.1134/S008154381001013X