Abstract

Consumers often rely on online review sites (e.g., Yelp, TripAdvisor) to read reviews on service products they may consider choosing. Despite the usefulness of such reviews, consumers may have difficulty finding reviews suitable for their own preferences because those reviews reflect substantial preference heterogeneity among reviewers. Differences in the four dimensions of individual reviewers (Experience, Productivity, Details, and Criticalness) lead them to have differential impacts on various consumers. Thus, this research is aimed at understanding the profiles of multiple influential reviewer segments in the service industries under two fundamental evaluation principles (Quality and Likeness). Based on the results, we theorize that reviewer influence arises from writing either reviews of high quality (Quality) or reviews reflecting a significant segment of consumers’ common preferences (Likeness). Using two service product categories (restaurants and hotels), we empirically identify and profile four specific influential reviewer segments: (1) top-tier quality reviewers, (2) second-tier quality reviewers, (3) likeness (common) reviewers, and (4) less critical likeness reviewers. By using our approach, product review website managers can present product reviews in such a way that users can easily choose reviews and reviewers that match their preferences out of a vast collection of reviews containing all kinds of preferences.

Online reviews contain enriched purchase experiences covering all sorts of service products. Recent research has shown that such reviews help consumers make choices from multiple product alternatives (Chevalier and Mayzlin 2006; Choi and Leon 2020; Grewal and Stephen 2019; Ismagilova et al. 2020; Leong et al. 2021; Lopez et al. 2020; Proserpio and Zervas 2017; Ren and Nickerson 2019; Shihab and Putri 2019; Siddiqi et al. 2020; Thomas et al. 2019; Vana and Lambrecht 2021; Zablocki et al. 2019; Zheng 2021; Zhu and Zhang 2010). Because online reviews are created by reviewers, it is important for businesses to understand how reviewers interact with other consumers so that they can reach out to their target consumers through product promotion and customer retention efforts on review platforms (Trusov et al. 2010). To reflect the importance of reviewers on general consumers, many social media platforms evaluate, recognize, and even feature influential reviewers to their platform users (Rust et al. 2021). For example, a reviewer’s rank on Amazon.com is determined by the overall helpfulness of all reviews by the reviewer, factoring in the number of reviews by the reviewer (Yazdani et al. 2018). The helpfulness of each review is determined by votes for the review from general customers (www.amazon.com/review/top-reviewers). According to Amazon, a top-ranked reviewer, Wm (Andy) Anderson, has received more than 40,000 helpful votes for his various posted book reviews. By contrast, another reviewer, PhotoGraphics, has mostly written about everyday life products such as cat food, mug suction cups, and almonds. Importantly, these examples demonstrate that different types of influential reviewers impact different consumers.

To understand these various types of reviewers, researchers have recently examined the characteristics and roles of reviewers in generating such reviews (Ghose and Ipeirotis 2011; Hoskins et al. 2021; Ismagilova et al. 2020; Karimi and Wang 2017; Lo and Yao 2019; Ozanne et al. 2019; Sunder et al. 2019; Wu et al. 2021). Given the importance of reviewers on social media, researchers have characterized these reviewers as opinion leaders in their interactions with general consumers (Flynn et al. 1996; Mu et al. 2018). Although this stream of research enhances our understanding of different reviewers, it falls short of uncovering the vast amounts of reviewers’ preference heterogeneity buried in their posted reviews. Compared to the traditional consumer segmentation methods based on a limited number of structured variables (e.g., price, promotion) (Suay-Pérez et al. 2021; Kamakura and Russell 1989), our research takes advantage of extremely enriched and readily accessible unstructured information from a large number of reviewers for reviewer segmentation.

Importantly, unlike existing studies, the primary objective of this research is to methodically profile multiple influential reviewer segments through reviewer segmentation to help service businesses establish effective segment-specific business strategies (see Table 1). In our research, we find that there are two principles under which reviewers become influential [raising the quality of reviews (Quality) and showing similar preferences to a significant size of consumers (Likeness)]. Further, we show how reviewers’ four dimensions (Experience, Productivity, Details, and Criticalness) in their textual product reviews explain their influence on social media. Practically, using the results of our reviewer segmentation, review platforms can present the essential properties of multiple influential reviewer segments, which can allow their users to choose reviews that match their preferences out of a vast collection of cluttered reviews.

In the context of social media, we theorize that users may take advantage of two fundamentally distinct types of reviewers: one type writing high-quality reviews (Quality) and the other type appealing to consumers by sharing similar preferences (Likeness). The coexistence of professional reviewers (e.g., power bloggers) and ordinary amateur reviewers on many online review platforms covering various industries attests to these two types of reviewers (Yazdani et al. 2018). In particular, we find a notable example of this case in the movie industry (Jacobs et al. 2015; Tsao 2014), where reviews by both professional critics (e.g., Metacritic, Rotten Tomatoes) and ordinary moviegoers (e.g., IMDb, Yahoo! Movies) strongly influence movie revenues. In their meta-analysis on the source credibility effect, Ismagilova et al. (2020) found that both source expertise and homophily between an information source and a receiver significantly influence information adoption and purchase intention.

On online review sites, one common way for consumers to reduce their transaction costs and uncertainties is to rely on like-minded reviewers who share similar preferences with them (Likeness). Social media studies show that ordinary users’ recommendations influence other consumers more effectively than experts’ recommendations because ordinary users are perceived as being more credible, which evokes stronger empathy due to the homophily of users sharing similar identities and preferences (Filieri et al. 2018; Huang and Chen 2006; Ladhari et al. 2020; Sokolova and Kefi 2020; Tsao 2014). Further, reviewers whose preferences reflect a significant proportion of consumer groups will be more influential because they can appeal to a larger consumer group. The other way to be influential on review sites is to post high-quality reviews consistently through accumulated experience and knowledge (Akdeniz et al. 2013; Kamakura et al. 2006). In their research on word-of-mouth, Bansal and Voyer (2000) found that the sender’s expertise has a positive influence on the receiver’s purchase decision (Gilly et al. 1998; Hovland and Weiss 1951).

Given the stated research findings, this research is aimed at identifying and profiling diverse reviewer groups grounded in the two principles of Quality and Likeness. Furthermore, to profile influential reviewer segments, we use four dimensions of reviewer characteristics, taking advantage of the common structure of typical online review sites: (1) Experience (Banerjee et al. 2017; Zhu and Zhang 2010), (2) Productivity (Ngo-Ye and Sinha 2014; You et al. 2015), (3) Details (Chevalier and Mayzlin 2006; Pan and Zhang 2011), and (4) Criticalness (Mudambi and Schuff 2010). Most of these studies have focused on the reviews themselves and have examined some of the factors selectively without a general framework. By contrast, we examine the four reviewer dimensions from a comprehensive perspective to understand how they play out in determining various types of reviewer influence.

From a managerial perspective, this research can help review platform managers oversee their user information (Yee et al. 2021). For example, if a platform can classify its reviewers into several homogeneous groups, platform users can more efficiently find relevant purchase and consumption information from reviewers sharing similar preferences. When a platform provides more customized reviewer matching through reviewer segmentation, its users’ visit frequencies will increase (Baird and Parasnis 2011).

Literature review

Individual reviewers’ preferences and writing styles revealed in their product reviews have differential impacts on other consumers. For example, Naylor et al. (2011) found that consumers use an accessibility-based egocentric anchor to infer that ambiguous reviewers have similar tastes to their own, leading consumers to be similarly persuaded by reviews written by ambiguous and similar reviewers. Opinion leaders are identified as having high product expertise and strong social connections. In the context of online review sites, opinion leaders can be perceived as influential reviewers with product expertise supported by other consumers’ votes for their reviews (Agnihotri and Bhattacharya 2016; Casaló et al. 2020; Chan and Misra 1990; Farivar et al. 2021; Kuksov and Liao 2019; Risselada et al. 2018; Vrontis et al. 2021). In measuring how effective reviewers are in assisting other consumers, cue-based trust theory may be applicable (Wang et al. 2004). Trust is commonly studied with a two-dimensional view based on a rational evaluation process and an emotional response (Komiak and Benbasat 2006; Ozdemir et al. 2020; Punyatoya 2019). Following this view, Johnson and Grayson (2005) identified two opposing types of trust in evaluating interpersonal trust—affective trust and cognitive trust—in consumer-level service relationships. Affective trust rests on emotional ties: in the social media context, value-expressive signals such as attitudes, hobbies, and preferences are revealed in consumer reviews, which suggests that social media members interact to experience a psychological connection or emotional affiliation with other like-minded members (Mu et al. 2018). On the other hand, cognitive trust is based on accumulated knowledge that allows consumers to make predictions with some level of confidence. Online content with high credibility (such as consumer reviews) may appeal to uncertain consumers as a source of cognition-based trust (Moorman et al. 1992). The empirical results by Johnson and Grayson (2005) supported the relationship between trust and sales (Grimm 2005; Lewis and Weigert 1985).

Reviewers with significant influence may be regarded as opinion leaders on social media. Opinion leadership may take place when reviewers influence other consumers’ purchasing behavior in specific product categories rather than across categories (Flynn et al. 1996; Vrontis et al. 2021). Hennig-Thurau et al. (2004) identified four online consumer segments based on eight motives of consumer online articulation (e.g., self-enhancement, social benefits): self-interested helpers, multiple-motive consumers, consumer advocates, and true altruists. A major distinction regarding these reviewers can be made in terms of the magnitude of their influence on other consumers.

The success of social media sites relies on the number and activity levels of their users (Algharabat et al. 2020; Lim et al. 2020). Although users typically have numerous connections to other site users, only a fraction of these so-called social media friends actually influence other members’ site usage (Trusov et al. 2010). Lee et al. (2011) found that helpful reviewers on TripAdvisor.com are those who travel more, actively post reviews, and give lower hotel ratings. Mu et al. (2018) examined the differential effects of online group influence on digital product consumption in online music listening, with a distinction between mainstream music and niche music. When consumers read online reviews to find satisfactory products, the combination of varied preferences and the unique writing characteristics of each distinct reviewer segment will exert differential influences on various consumers (Algesheimer et al. 2005; Flynn et al. 1996; Lee et al. 2011; Mu et al. 2018).

Our review of the online reviewer literature identified four major reviewer characteristics that can determine reviewer influence on online review platforms: (1) Experience, (2) Productivity, (3) Details, and (4) Criticalness. First, in the context of online reviews, experience can be measured by the length of time for which the reviewer has been a member of the review community. Studies have found that reviewers’ experience on online review sites is positively associated with reviewer trustworthiness perceived by consumers and review helpfulness (Ku et al. 2012; Malik and Hussain 2020; Zhu and Zhang 2010).

Second, many studies have examined the positive impact of review volume on sales (Chintagunta et al. 2010; Kim et al. 2019), as consumers may perceive the volume signal as credible (Banerjee et al. 2017; You et al. 2015; Zhu and Zhang 2010). In addition, Ngo-Ye and Sinha (2014) focused on reviewers rather than reviews and found that the reviewer’s review frequency helps predict review helpfulness.

Third, previous research has found that word-of-mouth recommendations with details about products are more persuasive than broad and general reviews because detailed reviews are more diagnostic in making purchase decisions (Dholakia and Sternthal 1977; Herr et al. 1991). Studies have shown that longer reviews include more details on product information, product usage, and consumers’ overall consumption experiences (Ren and Hong 2019). Therefore, detailed reviews are likely to be perceived to be more helpful in assessing product quality and performance (Mudambi and Schuff 2010). Chevalier and Mayzlin’s (2006) study on Amazon reviews also showed that the amount of information in reviews has a positive correlation with overall product sales. Further, consumers consider a reviewer who spent more time writing a long review as being more credible (Pan and Zhang 2011).

Lastly, the reviewer’s summary rating of the product indicates his or her overall assessment of the product under review (Mudambi and Schuff 2010; Sánchez-Pérez et al. 2021). The rating is regarded as a useful cue for consumers in evaluating product quality (Krosnick et al. 1993). Rating valence is expected to affect review helpfulness, but previous studies have reported inconsistent results for the direction of this relationship (Pan and Zhang 2011; Racherla and Friske 2012). Interestingly, psychology and word-of-mouth studies suggest that humans are drawn to and put more emphasis on negative (compared to positive) information due to the negativity bias (Jordan 1965; Lo and Yao 2019; Mahajan et al. 1984; Skowronski and Carlston 1989; Tata et al. 2020).

Empirical analyses and results

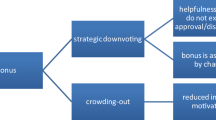

Our empirical analyses for reviewer segmentation and profiling using data from two service industries (hotels and restaurants) take multiple successive steps. To give an overview of these empirical analyses, we provide a flowchart in Fig. 1. Each step in the figure is explained in detail in this empirical section.

Data from TripAdvisor (Hotels) and Yelp (Restaurants)

We collected the necessary data from two representative service industries: hotel reviews from TripAdvisor and restaurant reviews from the Yelp Dataset Challenge (www.yelp.com/dataset_challenge). We chose these two industries because they are representative consumer service industries that can be easily understood by most people.

First, we collected hotel service reviews from TripAdvisor (Moon and Kamakura 2017). We determined that choosing a specific geographical region is not suitable for hotel reviews because individuals travel to multiple destinations and, accordingly, generate multiple reviews from everywhere. Therefore, we adopted snowball sampling, where we began with all hotel reviews in a single city (Phoenix, Arizona) as well as the profile information of the corresponding reviewers. We then collected all other hotel reviews that these reviewers had posted on TripAdvisor. Concurrently, we collected the hotel characteristics reported by the hotels, as shown in the Business-Reported Variables section of Table 2. As a result, although we initiated the sampling from a single city, we were able to collect a large number of reviews around the globe. Then, we selected those reviewers with at least ten hotel reviews in the resulting dataset for methodologically stable and practically meaningful segmentation profiling (Allaway et al. 2014). Because the primary purpose of our analysis is to identify influential reviewers on social media, our selection of relatively high-volume review writers seems fitting, given that reviewers’ influence is heavily associated with their writing productivity (Lee et al. 2011). Our sampling process resulted in a total of 183,987 hotel reviews posted by 5,906 hotel reviewers for 58,964 hotels.
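The sampling logic described above can be sketched in a few lines. This is only an illustration under stated assumptions: the record keys (`reviewer_id`, `hotel_id`, `city`), the function name `snowball_sample`, and the toy data are hypothetical and do not come from the TripAdvisor data themselves.

```python
from collections import Counter

def snowball_sample(all_reviews, seed_city, min_reviews=10):
    """Snowball from one seed city, then keep high-volume reviewers.

    `all_reviews` is a list of dicts with hypothetical keys
    "reviewer_id", "hotel_id", and "city".
    """
    # Step 1: reviewers who reviewed at least one hotel in the seed city.
    seed_reviewers = {r["reviewer_id"] for r in all_reviews if r["city"] == seed_city}
    # Step 2: every review those reviewers posted, in any city.
    expanded = [r for r in all_reviews if r["reviewer_id"] in seed_reviewers]
    # Step 3: keep reviewers with at least `min_reviews` reviews in the expanded set.
    counts = Counter(r["reviewer_id"] for r in expanded)
    return [r for r in expanded if counts[r["reviewer_id"]] >= min_reviews]

# Toy data (made up): "a" has 11 reviews, "b" has 3, "c" never reviewed in the seed city.
toy = (
    [{"reviewer_id": "a", "hotel_id": 0, "city": "Phoenix"}]
    + [{"reviewer_id": "a", "hotel_id": i, "city": "Paris"} for i in range(1, 11)]
    + [{"reviewer_id": "b", "hotel_id": 0, "city": "Phoenix"}]
    + [{"reviewer_id": "b", "hotel_id": i, "city": "Rome"} for i in range(20, 22)]
    + [{"reviewer_id": "c", "hotel_id": i, "city": "Tokyo"} for i in range(30, 45)]
)
sample = snowball_sample(toy, seed_city="Phoenix")
```

In this toy case only reviewer "a" survives: "b" falls below the ten-review threshold, and "c" is never reached because the snowball starts from the seed city.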

The business attribute information provided by businesses is basic and invariable across all reviews pertaining to the same business. Accordingly, the business-reported information cannot reflect consumers’ diverse preferences for the business. By contrast, consumers’ reviews have an extensive amount of information on both reviewers and hotels in an unstructured format. Such a data structure, common on social media, explains why marketing practitioners can use our procedure in this research to learn about different reviewers representing consumer heterogeneity in hotel preferences and choices. Furthermore, each review has an overall rating for the particular hotel experience on a 5-point scale. Although product sales are not available on social media, product ratings are readily available. These ratings are influential in consumers’ product choices as post-consumption satisfaction evaluations of the products consumed. In the current social media era, consumers’ product choices are strongly influenced by such ratings from other trustworthy consumers (Simonson and Rosen 2014).

Second, we also chose restaurant reviews from Yelp (Luca and Zervas 2016). The industry, with various price levels and various cuisines, is diverse enough to show complicated heterogeneity in consumer preferences. In the Yelp data covering many regions over the period between 2006 and 2016, we selected restaurants in the state of Arizona over the same period. Further, we removed reviewers who posted fewer than ten reviews on restaurants over the 11 years in the state to capture sufficient information on the individual reviewers with respect to their preferences and writing styles. As a result, we ended up with 356,199 reviews from 13,006 reviewers on 12,396 restaurants for our segmentation analysis. These reviewers account for more than 70% of all the reviews from Arizona.

Importantly, our empirical application is expected to demonstrate that the Quality and Likeness principles prevail in profiling influential online reviewer segments in terms of the four reviewer characteristics (Experience, Productivity, Details, and Criticalness) consistently between the two product categories. Further, we applied distinct sampling approaches for the two product category cases: hotel review sampling covering the world through snowball sampling and restaurant review sampling based on a specific state (Arizona). Showing consistent results from the two cases with two different sampling approaches would provide strong external and content validity for our primary results, as demonstrated below.

Procedure for reviewer segmentation and profiling

This research presents a procedure that segments and profiles reviewers using consumers’ reviews on businesses. Specifically, the procedure goes through the following stages: first, we develop a business domain taxonomy (hotels and restaurants, respectively) using consumers’ textual reviews on the focal business (ontology learning). This taxonomy summarizes product features directly elicited from the reviews in a structured manner. Second, using the elicited product features, we generate a review × topic matrix, where each cell indicates how much the review covers the product feature (text categorization). Third, using both the consumer-review variables (from the review × topic matrix) and business-reported variables, we segment reviewers into the optimal number of reviewer segments (finite-mixture modeling). Lastly, we identify and profile influential reviewer segments.

Stage 1: Building a business domain taxonomy (ontology learning). We develop a business domain taxonomy (hotels or restaurants) based on consumers’ reviews on the domain business using ontology learning. The taxonomy summarizes the product features elicited from the reviews (see the Consumer-Review Variables section of Table 2 for hotel reviews). Each product feature identified (e.g., pleasant room amenities) is defined by a number of descriptive terms directly elicited from consumers’ own words in reviews (e.g., excellent shower, high-quality linen, huge bed). To execute this task, we construct a taxonomy vocabulary for the product category by mining online reviews on the product using ontology learning. An ontology is an explicit, formal specification of a shared conceptualization within the focal domain, where “formal” implies that the ontology should be machine-readable and shared with the broad domain society (Buitelaar et al. 2005). Automatic support in ontology development is referred to as “ontology learning” (Missikoff et al. 2002).

To develop a detailed taxonomy of product features, we develop a semi-automatic text-mining framework based on ontology learning. In this ontology learning task, we combine natural language processing (such as part-of-speech tagging and the stemming function) and our domain knowledge through research. We begin with unsupervised topic modeling driven by Latent Dirichlet Allocation (LDA) using enormous volumes of textual reviews (Tirunillai and Tellis 2014). This unsupervised step would generate a large number of product features as topics and associated descriptive terms defining each topic. A critical issue in producing a taxonomy from a huge amount of information is scalability, that is, how to process large amounts of textual information (Soucek 1991). When marketers collect online product reviews, they often deal with large amounts of unstructured textual reviews. For this reason, ontology learning needs to be an automatic or semi-automatic text-mining approach instead of a manual approach. This automatic LDA step can efficiently reduce the amount of effort needed to generate a complete taxonomy because it can automatically remove a large portion of unnecessary noise in the textual corpus.

LDA primarily considers the relationships among the terms used in the corpus; however, it cannot integrate substantive meanings within the given domain. In particular, LDA cannot fully grasp the hierarchical structure of the domain. For example, our restaurant domain taxonomy generated four grand topics (Amenities, Dining Occasions, Cuisines, and Evaluations), where each grand topic has its own multiple subtopics. LDA cannot capture this hierarchical structure without human intervention. Given this limitation, we reorganized and refined the initial LDA-generated taxonomy by adding our domain knowledge obtained from our own research on the domain industry. To obtain the necessary domain knowledge, we studied the content of multiple hotel and restaurant review sites.

Stage 2: Generating the review-by-feature matrix (text categorization). The product taxonomy in the first stage produces a number of product features as topics, as shown in the Consumer-Review Variables section in Table 2. Then, using text categorization, we measured the amount of content pertaining to the particular topic in each review (Feldman and Sanger 2007). This task resulted in a review-by-topic matrix, where each cell indicates the degree to which the review covers the product feature topic. That is, based on our final domain taxonomy, we counted the number of appearances of all reviewers’ descriptive terms pertaining to each product feature topic. Our count for the topic indicates how many times these terms were used in the given review, allowing for multiple uses of the same term in each review. We converted this topic-term count into the weight of the topic discussion in the review using the term frequency-inverse document frequency (TF-IDF) scheme.
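A minimal sketch of this counting-and-weighting step follows. It assumes one common TF-IDF variant (raw topic count multiplied by the natural log of the number of reviews over the number of reviews mentioning the topic); the exact weighting used in the study is not specified here. The mini-taxonomy, the topic keys, and the terms listed under `good_location` are hypothetical.

```python
import math
import re

# Hypothetical mini-taxonomy: topic -> descriptive terms.
TAXONOMY = {
    "room_amenities": ["excellent shower", "high-quality linen", "huge bed"],
    "good_location": ["close to downtown", "great location"],
}

def topic_counts(review_text, taxonomy):
    """Count every occurrence of each topic's terms, allowing repeated terms."""
    text = review_text.lower()
    return {
        topic: sum(len(re.findall(re.escape(term), text)) for term in terms)
        for topic, terms in taxonomy.items()
    }

def tfidf_matrix(reviews, taxonomy):
    """Return one {topic: weight} row per review (assumed TF-IDF variant)."""
    counts = [topic_counts(text, taxonomy) for text in reviews]
    n_reviews = len(reviews)
    idf = {}
    for topic in taxonomy:
        doc_freq = sum(1 for row in counts if row[topic] > 0)
        # Topics that never appear get zero weight instead of a division error.
        idf[topic] = math.log(n_reviews / doc_freq) if doc_freq else 0.0
    return [{topic: row[topic] * idf[topic] for topic in taxonomy} for row in counts]

toy_reviews = [
    "Huge bed and an excellent shower. Did I mention the huge bed?",
    "Great location, close to downtown.",
    "Nothing special.",
]
matrix = tfidf_matrix(toy_reviews, TAXONOMY)
```

Each row of `matrix` corresponds to one review and each key to one product feature topic, which mirrors the review-by-topic structure used as input to the segmentation stage.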

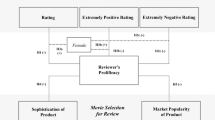

Stage 3: Segmenting reviewers (finite-mixture modeling). We conducted reviewer segmentation based on finite-mixture modeling with the review-by-feature matrix as input data. Let \(y_{hi}\) be the rating variable for review \(i\) by reviewer \(h\). Within segment \(s\), the rating is modeled as

\[ y_{hi} = \alpha_s + \mathbf{x}_{hi}^{\top} \boldsymbol{\beta}_s + u_{hi}, \]

where \(\mathbf{x}_{hi}\) is the set of independent variables from the review-by-topic matrix and the characteristic variables reported by the businesses. \(\alpha_s\) and \(\boldsymbol{\beta}_s\) are segment-specific parameters to be estimated, where \(\alpha_s\) is the intercept parameter. \(u_{hi}\) follows the normal distribution, turning the model into a linear regression model within each segment. The well-known Expectation–Maximization (EM) algorithm is implemented for estimation.
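For readers less familiar with finite-mixture regression, the EM estimation can be sketched in standard textbook notation. This is an illustrative formulation, not necessarily the exact specification estimated here: it assumes that all \(n_h\) reviews by reviewer \(h\) share one segment membership, and the segment index \(s = 1, \dots, S\), mixing proportions \(\pi_s\), error variances \(\sigma_s^2\), posterior probabilities \(p_{hs}\), number of reviewers \(H\), and normal density \(\phi\) are notation introduced for this sketch.

\[ L_h = \sum_{s=1}^{S} \pi_s \prod_{i=1}^{n_h} \phi\!\left(y_{hi};\ \alpha_s + \mathbf{x}_{hi}^{\top}\boldsymbol{\beta}_s,\ \sigma_s^2\right), \qquad \sum_{s=1}^{S} \pi_s = 1 \]

\[ \text{E-step:}\quad p_{hs} = \frac{\pi_s \prod_{i=1}^{n_h} \phi\!\left(y_{hi};\ \alpha_s + \mathbf{x}_{hi}^{\top}\boldsymbol{\beta}_s,\ \sigma_s^2\right)}{\sum_{s'=1}^{S} \pi_{s'} \prod_{i=1}^{n_h} \phi\!\left(y_{hi};\ \alpha_{s'} + \mathbf{x}_{hi}^{\top}\boldsymbol{\beta}_{s'},\ \sigma_{s'}^2\right)} \]

\[ \text{M-step:}\quad \pi_s = \frac{1}{H}\sum_{h=1}^{H} p_{hs}, \qquad (\alpha_s, \boldsymbol{\beta}_s) = \arg\min_{\alpha,\ \boldsymbol{\beta}} \sum_{h=1}^{H} p_{hs} \sum_{i=1}^{n_h} \left(y_{hi} - \alpha - \mathbf{x}_{hi}^{\top}\boldsymbol{\beta}\right)^2 \]

The two steps alternate until the log-likelihood \(\sum_h \ln L_h\) stops improving. The M-step for each segment is a weighted least-squares regression in which every review by reviewer \(h\) carries the weight \(p_{hs}\), and each reviewer is finally assigned to the segment with the largest posterior probability.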

Whereas traditional segmentation methods use consumers’ brand choices as the dependent variable (Kamakura and Russell 1989), we adopt reviewers’ brand (product) rating as the dependent variable because the rating is known to be positively associated with consumers’ eventual product choices (Mudambi and Schuff 2010; Sun 2012). Further, ratings are commonly included on social media, but sales are not disclosed. On review platforms, each review (as the analysis unit in our finite-mixture model) generates a rating, where a number of reviews typically cover the same product. Because we segment reviewers writing multiple reviews on multiple products in this procedure, the rating is considered to be a proper and effective measure of how each product feature explains diverse consumer preferences.

Results on the domain taxonomy and the review-by-feature matrix

Using the hotel reviews, we conducted the text-mining part of our analysis. (We conducted the same procedure for the restaurant reviews. However, for the purpose of exposition, we use only the hotel case in this subsection because the same patterns apply to the restaurant case.) First, this analysis generated the hotel taxonomy, which resulted in the Consumer-Review Variables in Table 2. This taxonomy consists of six broad categories (travel type, hotel facility, bedroom, extra service, staff service, and overall experience). Each category has multiple product feature topics. The taxonomy has a total of 27 product features on the entire hotel business, ranging from personal travel to expensive stay to pleasant experiences. In particular, the two topics in the overall evaluation category (Back Next Time & No Recommendation) control for both positive and negative evaluations across all the hotel business features. Each feature topic is defined by a set of descriptive terms elicited directly from the consumers’ reviews. The range of the variables demonstrates the richness and profundity of the elicited information.

Results on reviewer segmentation

Table 3 summarizes our determination of the best model with the optimal number of segments in terms of the Fit of Entropy (FOE) and four information criteria (BIC, AIC, AIC3, and CAIC) for hotels. FOE, which ranges from 0 to 1, measures the goodness of segment classification (Celeux and Soromenho 1996). FOE = 0 when all the posterior probabilities are equal for each segment (maximum entropy), which gives cause for concern. FOE = 1 indicates that each reviewer belongs to one of the segments without any degree of fuzziness. Table 2 shows that we have two types of variables for reviewer segmentation in hotels: (1) business-reported variables, and (2) consumer-review variables. The business-reported variables include information reported by the businesses themselves. Variations of these variables across reviewers still occur because reviewers visit different businesses and choose to write about various businesses. Traditional consumer segmentation does not consider this part of heterogeneity across consumers, despite the fact that consumers choose different businesses or brands. The consumer-review variables coming from our hotel domain taxonomy were elicited from consumer reviews. Given that these two sources of information differ from each other, we applied segmentation based on (1) business-reported variables only, (2) consumer-review variables only, and (3) both types of variables (the combo model).
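To make the FOE endpoints described above concrete, the sketch below uses a common entropy-based formulation: one minus the total posterior entropy divided by its maximum, N ln S, for N reviewers and S segments. It reproduces the 0 and 1 endpoints, but the exact formula used in the study is not given here, so the function `fit_of_entropy` and the toy posteriors are illustrative assumptions.

```python
import math

def fit_of_entropy(posteriors):
    """Entropy-based classification fit in [0, 1] (assumed formulation).

    `posteriors` holds one row per reviewer; each row lists that reviewer's
    membership probabilities over S >= 2 segments and sums to 1.
    """
    n_reviewers = len(posteriors)
    n_segments = len(posteriors[0])
    # Zero probabilities contribute nothing to the entropy (p * ln p -> 0).
    entropy = -sum(p * math.log(p) for row in posteriors for p in row if p > 0)
    return 1.0 - entropy / (n_reviewers * math.log(n_segments))

crisp = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]   # every reviewer clearly assigned
fuzzy = [[1 / 3, 1 / 3, 1 / 3]] * 2          # equal posteriors, maximum entropy
mixed = [[0.9, 0.05, 0.05], [0.1, 0.8, 0.1]]

foe_crisp = fit_of_entropy(crisp)
foe_fuzzy = fit_of_entropy(fuzzy)
foe_mixed = fit_of_entropy(mixed)
```

Crisp memberships give a value of 1 and equal posteriors give 0, with partially fuzzy memberships falling in between.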

Because our model has a large number of reviews from a sizeable number of reviewers with a large number of parameters to be estimated (as shown in Table 4), it was challenging to find the converged optimal solution in each model, not to mention the time taken for convergence. Therefore, when an additional segment did not improve the information criterion value by more than 0.1%, we stopped extending the model in terms of the number of segments (Dziak et al. 2019). Simultaneously, we considered the FOE measure because each added segment increases the overall fuzziness in allocating individual reviewers to specific segments. Overall, in each product category, the combo models demonstrate better (that is, smaller) information criterion values than the business-reported variable model and the consumer-review variable model. Among the combo models, we chose the five-segment case as the best model in hotels and the six-segment case in restaurants for two reasons. First, the information criterion value improvement from another added segment is negligible after the chosen number of segments in each category. Second, the FOE value (0.708 in hotels and 0.690 in restaurants) is sufficiently high, indicating that the segment memberships were clearly determined.
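The model-selection logic can be illustrated with the standard definitions of the four information criteria and a simple version of the 0.1% stopping rule. The function names and the criterion values in the toy example are hypothetical, and whether the sample size counts reviews or reviewers is left open as a parameter.

```python
import math

def information_criteria(log_lik, n_params, n_obs):
    """Standard definitions; smaller values indicate a better model."""
    deviance = -2.0 * log_lik
    return {
        "AIC": deviance + 2 * n_params,
        "AIC3": deviance + 3 * n_params,
        "BIC": deviance + n_params * math.log(n_obs),
        "CAIC": deviance + n_params * (math.log(n_obs) + 1),
    }

def choose_num_segments(criterion_by_k, min_rel_improvement=0.001):
    """Stop at the first extra segment that improves the criterion by <= 0.1%.

    `criterion_by_k` maps the number of segments to one criterion value.
    """
    ks = sorted(criterion_by_k)
    best = ks[0]
    for prev_k, next_k in zip(ks, ks[1:]):
        prev_val, next_val = criterion_by_k[prev_k], criterion_by_k[next_k]
        improvement = (prev_val - next_val) / abs(prev_val)
        if improvement <= min_rel_improvement:
            break  # the added segment is not worth it; keep the previous model
        best = next_k
    return best

# Hypothetical BIC values for 1 to 5 segments (not from the study).
toy_bic = {1: 1000.0, 2: 950.0, 3: 920.0, 4: 919.5, 5: 900.0}
chosen = choose_num_segments(toy_bic)
toy_ic = information_criteria(log_lik=-100.0, n_params=5, n_obs=50)
```

Because the rule stops at the first negligible gain, it never examines larger models, which keeps estimation time manageable at the cost of possibly missing a later improvement. In the toy values the move from three to four segments improves the criterion by about 0.05%, so the search stops at three segments even though five would score lower.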

Profiles of diverse reviewer segments

Using the best segmentation models selected in both the hotel and restaurant cases, this subsection provides the profiles of multiple influential reviewer segments. First, Table 4 shows the parameter estimation results of the best model for hotels. (The corresponding results for the restaurant case are not provided to avoid repetition.) Table 4 shows substantial differences across reviewer segments, while each segment has a large number of variables that are significant at the 5% level. Because the dependent variable is the review rating on a five-point scale, a positive estimate for any consumer-review variable (as a continuous variable) indicates that increased discussion of the product feature has a positive impact on the review rating for the given segment. In other words, when reviewers in that segment discuss the product feature, they tend to perceive it positively in their reviews. A negative estimate indicates the opposite.

Importantly, the estimation results generally lend content validity to our segmentation results. For example, in the consumer-review variables for hotels, most of the significant estimates of the positive valence topics (e.g., Design & Décor (+); Good Location (+); Great Facilities (+)) have a positive sign. By contrast, most of the significant estimates of the negative valence topics (e.g., Awful Rooms (−); Outdated Bedroom Appliances (−); No Recommendation (−)) have a negative sign. These findings support the content validity of our segmentation results, given that these variable estimates reflect topic-inherent valences consistently but at varying degrees across the segments.

This estimation process provided the segment memberships of all individual reviewers in both product category cases. Using this information, we provide the profile of each segment: Table 5 for the five hotel segments and Table 6 for the six restaurant segments. First, among the reviewer segments for hotels (Table 5), we identify the first four segments as practically influential reviewer segments based on two indices, (1) the reviewer’s number of user votes and (2) the segment size. The four segments are Segment 1 (top-tier quality reviewers), Segment 2 (second-tier quality reviewers), Segment 3 (likeness (common) reviewers), and Segment 4 (less critical likeness reviewers). We also consider the segment size as the segment’s impact measure because reviewers share preferences with general consumers who rely on the review platform. When there are more consumers sharing similar preferences, those reviewers can have more influence.
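
The two indices used to judge a segment's influence (user votes and segment size) can be computed directly from the segment memberships. The sketch below is only an illustration under stated assumptions: the function name profile_segments, the (segment, votes) input layout, and the toy data are hypothetical and do not reproduce Tables 5 or 6.

```python
from collections import defaultdict


def profile_segments(reviewers):
    """Summarize each segment by its size share and mean votes per reviewer.

    `reviewers` is a list of (segment_id, votes) pairs, one per reviewer,
    where segment_id is the segment the reviewer was assigned to.
    Returns {segment_id: (share_of_reviewers, mean_votes)}.
    """
    totals = defaultdict(lambda: [0, 0])  # segment -> [count, vote sum]
    for segment_id, votes in reviewers:
        totals[segment_id][0] += 1
        totals[segment_id][1] += votes
    n = len(reviewers)
    return {
        segment_id: (count / n, vote_sum / count)
        for segment_id, (count, vote_sum) in totals.items()
    }


# Toy memberships (hypothetical, not the paper's data).
toy = [(1, 400), (1, 600), (2, 100), (2, 120), (2, 80),
       (3, 10), (3, 20), (3, 30), (3, 20), (3, 20)]
profiles = profile_segments(toy)
```

In this toy example, Segment 1 is small but vote-heavy while Segment 3 is large with few votes per reviewer, which mirrors the contrast between the two indices.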

Although we use hotels as our primary presentation case, this time we highlight the profile results of the restaurant case (in Table 6) first and briefly summarize the results of the hotels (in Table 5) as a similar case.

First, top-tier quality reviewers (Segment 1) have by far the most votes. These reviewers constitute approximately 12% of the reviewers in the data. They are the most experienced reviewers, and they are extremely productive in terms of the number of reviews they post. They also tend to write the longest reviews by providing deep details on various product features. These reviewers assign the second lowest average rating to the businesses they review, with critical and conscientious comments. Existing studies indicate that expert reviewers tend to be more critical than amateur reviewers because their expertise leads them to consider more complicated elements from a critical standpoint (Beaudouin and Pasquier 2017; Elberse and Eliashberg 2003; Racherla and Friske 2012). These characteristics point to high-quality reviews that stem from those reviewers’ expertise, leading to their extreme popularity regarding user votes. As a result, these reviewers wield strong influence on other users on the review site. In a way, these influential reviewers can be compared to power bloggers with a number of followers.

Second, second-tier quality reviewers (Segment 2) are similar to top-tier quality reviewers in their review characteristics, but with less experience, reduced productivity, shorter reviews, and more lenient ratings. In other words, these reviewers have less expertise than the top-tier segment, but they can exert comparable influence on the market because a larger segment size (21%) allows them to appeal to more consumers. Importantly, we find that these two segments fall into the Quality-based influential reviewer category, as their influence on general consumers arises from high-quality reviews produced by their expertise.

Third, we choose likeness (common) reviewers (Segment 3) as influential because this group accounts for the largest segment size (38%); moreover, these reviewers’ characteristics are close to those of the majority of the general reviewers. In each of the four reviewer characteristics (Experience, Productivity, Details, and Criticalness), these reviewers have the average value of the general reviewers (in the Ave. column). (Notably, the number of reviews (86) in this segment is much less than the overall average (189), but its segment rank (fourth out of six) of the variable is still in the middle. This pattern occurs because the average is skewed by the extremely large value (449) of the top-tier quality reviewer segment.) Because their influence arises from being similar to the majority of average consumers, we refer to the segment as Likeness (common) reviewers.

Lastly, we choose less critical likeness reviewers (Segment 4) as another group of influential reviewers. These reviewers have a modest level of user votes and a modest segment size. Their characteristics are similar to those of likeness (common) reviewers. One notable distinction between the two segments lies in the fact that these reviewers have a much higher rating average than likeness reviewers (4.29 > 3.85). In other words, they tend to be lenient evaluators and more focused on affection than cognition, hence the segment name less critical likeness reviewers. In brief, we find that these two segments fall into the Likeness reviewer category, as their influence on review sites arises from their reflection of the preferences of a majority of consumers. The size of the two segments is approximately 50% in our restaurant case.

Table 5 summarizes the profiles of the five segments in our hotel reviews. The results strongly replicate the primary results of our restaurant case, which shows that there are two segments falling into Quality and another two segments falling into Likeness. This result lends significant validity to our empirical findings because we see the same pattern of results in two distinct product categories. Furthermore, the two datasets are sampled in two different ways: restaurant review sampling based on a specific state (Arizona) and hotel review sampling with a global reach through snowball sampling. These dual sampling methods show that our primary results are not artifacts from a particular sampling approach.

Four determinants of the reviewer’s influence (robustness check)

Reviewers who generate high volumes of influential word-of-mouth can be considered opinion leaders on social media. Because such influence cannot be measured directly, marketing studies have often used the number of votes in review helpfulness as a proxy measure of the review’s importance. Accordingly, reviewers with a large number of votes in review helpfulness can be regarded as influential reviewers (Agnihotri and Bhattacharya 2016; Risselada et al. 2018; Yazdani et al. 2018). To verify the aforementioned four reviewer characteristic dimensions (Experience, Productivity, Details, and Criticalness) as criteria for the reviewer influence measure, we run the regression models specified in Table 7. In the regression analysis, for each product category, three variants of the reviewer’s number of votes serve as the dependent variables, while the four reviewer characteristics are part of the independent variables. A number of consumer-review variables for both hotels (in Table 2) and restaurants are used as control variables to account for heterogeneity among reviews in all the regression variants. Similarly, a number of business-reported variables in both categories (in Table 2) are also used as control variables to account for heterogeneity among businesses. Those control variables’ estimates were omitted to avoid cluttering Table 7.

Model A with the number of votes as the dependent variable shows significantly positive predictors of Experience, Productivity, and Details, while the model has a significantly negative predictor in Review Criticalness. This pattern is consistent in both product categories. There are several noteworthy points regarding the results from Model A. First, the Months on Platform variable indicates that reviewers’ Experience matters, given that reviewers who started earlier on the platform have more opportunities to write additional reviews, and accordingly receive more votes (Ku et al. 2012). This effect is consistent across all the regression cases in the table. Next, when reviewers write more reviews (# of Reviews), they tend to receive more votes (Floyd et al. 2014). Next, the average Review Length (Details) has a positive impact on votes in both categories (Mudambi and Schuff 2010). This finding implies that when a longer review provides more detailed and specific information, it is more likely that users will find it useful. Lastly, the negative predictor in Review Criticalness indicates that more critical reviewers tend to receive more votes. This pattern is consistent with the finding from existing studies that negative information tends to be more influential than positive information on social media (Pan and Chiou 2011). In particular, when consumers view critical negative information (e.g., bad service, high price, low-quality ingredients in restaurant reviews) about a business, they will most likely remove that business from their consideration set. Then, consumers can focus on alternative businesses for their final choices.

Model B has the ratio of Votes to the # of Reviews as the dependent variable, which measures the efficiency of the reviewer’s review writing. In this model, we lose # of Reviews as an independent variable because it became part of the revised dependent variable. The results of the model show that Experience and Details are positive predictors in both product categories.
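
The efficiency measure used as Model B's dependent variable is a simple ratio, sketched below. The function name votes_per_review and the two example reviewers are hypothetical and are not drawn from the study's data.

```python
def votes_per_review(votes, num_reviews):
    """Votes earned per review written (the ratio used as Model B's outcome)."""
    if num_reviews <= 0:
        raise ValueError("a reviewer must have at least one review")
    return votes / num_reviews


# Two hypothetical reviewers (not from the paper's data).
prolific = votes_per_review(votes=900, num_reviews=450)   # many reviews
selective = votes_per_review(votes=300, num_reviews=60)   # fewer reviews
```

Here the prolific reviewer has three times the total votes (the Model A outcome) but earns fewer votes per review than the selective reviewer, which is the distinction Model B is meant to capture.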

The regression results indicate that influential reviewers are those who have rich experiences with the social media platform, who write frequently, who express specific details, and who tend to evaluate businesses critically. This amounts to a brief profile of influential reviewers at the aggregate level, which is consistent with the specific profiles from our segmentation and profiling results.

Discussion

Summary of empirical results

The flowchart of Fig. 1 summarizes our empirical analyses for reviewer segmentation and profiling with the hotel industry application. We collected rich data related to consumers’ hotel reviews from TripAdvisor. To structure the qualitative textual reviews, we built a business domain taxonomy for the industry, which generated 27 product features. To integrate the data into our finite-mixture segmentation model, we created the review-by-feature matrix using ontology learning and text categorization. Our reviewer segmentation led to strong reviewer profiling patterns pertaining to two fundamental evaluation principles: quality and likeness (refer to Table 5). We replicated the same patterns involving quality and likeness in the second product category, restaurants (refer to Table 6).

Theoretical implications

Social media research on service products enhances our understanding of how reviews play a role in consumers’ decision making for those products. By contrast, our understanding of individual reviewers posting multiple reviews on a variety of businesses has been relatively limited (Moon and Kamakura 2017). Notably, Li and Du (2011) indicated that although many theories have been proposed about social networks, the issue of opinion leader identification needs to be further examined. Based on this observation, they built an ontology for a marketing product and then generated characteristics from the blog content, authors, readers, and their relationships. Then, they associated the features with opinion leaders. To fill this research void in the social media literature, our research concentrated on reviewers rather than reviews. Furthermore, we segmented reviewers to account for their differences in choosing discussion topics (e.g., price vs. quality) and writing styles (e.g., long and specific vs. short and general). We use this reviewer segmentation utilizing online reviews as a way for platform managers to reach out to their platform users, given that a certain group of reviewers represents and influences a segment of consumers sharing similar preferences for the given products.

Our empirical application demonstrated that the Quality and Likeness principles prevail in profiling diverse online reviewer segments in terms of four reviewer characteristics (Experience, Productivity, Details, and Criticalness) consistently between hotels and restaurants (Ismagilova et al. 2020; Yazdani et al. 2018). Further, the two product cases applied a distinct sampling approach: hotel review sampling with a global reach through snowball sampling and restaurant review sampling based on a specific state. Showing consistent results from the two product categories with the two different sampling approaches provided content and external validity for our research results.

To identify and profile multiple reviewer segments, we used the abovementioned four dimensions of reviewer characteristics directly elicited from the common structure of online review platforms. The roles of these four dimensions in online reviews have been intensively examined by many studies: (1) Experience (Banerjee et al. 2017; Ku et al. 2012), (2) Productivity (Chintagunta et al. 2010; You et al. 2015), (3) Details (Chevalier and Mayzlin 2006), and (4) Criticalness (Elberse and Eliashberg 2003; Mahajan et al. 1984). However, whereas most studies have examined the reviews themselves along with some chosen factors without a general framework, our research viewed reviewers’ four dimensions from a comprehensive perspective to understand how they function in examining various types of reviewer influence.

In the context of social media, we theorize that users respond to two distinct types of reviewers: one type writing high-quality reviews (Quality) and the other type appealing to consumers by expressing similar preferences (Likeness) (Ismagilova et al. 2020). On online review sites, one common way for consumers to reduce their transaction costs and uncertainties is to rely on the homophily of like-minded reviewers who share similar preferences with them (Likeness) (De Bruyn and Lilien 2008; Filieri et al. 2018). The other way to be influential is by posting high-quality reviews consistently through accumulated experience and knowledge (Gilly et al. 1998; Hovland and Weiss 1951; Kamakura et al. 2006). Such expertise tends to make those reviewers more critical than amateur reviewers (Beaudouin and Pasquier 2017).

Importantly, this research identified and profiled influential reviewer groups grounded in these two principles of Quality and Likeness driving reviewers’ influence. Our empirical analysis demonstrated that each principle is consistently represented in two reviewer segments between two product categories. Specifically, Quality reviewers comprise two segments that differ in the level of Quality (top-tier quality reviewers and second-tier quality reviewers). By contrast, Likeness reviewers comprise two segments that differ in criticalness (likeness (common) reviewers and less critical likeness reviewers).

Managerial implications

Managers seek to understand how the opinions of diverse reviewer groups influence product sales to effectively target these influencers on social media. We note that the task poses a major challenge due to reviewer heterogeneity in multiple sources such as: (1) the choices of products and businesses they decide to write about, (2) the choices of product/business features in their reviews, and (3) their writing styles (including description details and word choice). For example, one reviewer may write long reviews with many descriptive terms on a number of product features, while another reviewer may write concise reviews on only a few select features (Moon and Kamakura 2017).

When small-sized retailers cannot directly reach out to a large number of individual consumers due to their limited resources, they often implement social media-based marketing strategies, targeting impactful reviewers (Rapp et al. 2013). These reviewers help retailers eventually reach out to a broader customer base, sharing similar preferences with those target reviewers. For example, restaurant managers conduct different marketing strategies on social media in terms of serving price-oriented consumers as opposed to quality-oriented consumers. Our empirical analysis of restaurant and hotel reviewers provided the profiles of four different segments of influential reviewers: top-tier quality reviewers, second-tier quality reviewers, likeness (common) reviewers, and less critical likeness reviewers (see Tables 5, 6). Given each group’s unique traits and product feature preferences, marketers can utilize each group differently. For example, if the business is a common type of restaurant targeting typical American consumers (e.g., Jason’s Deli), managers may utilize average reviewers to take advantage of their preference similarity with a majority of consumers. If managers wish to focus on a small number of influential reviewers due to their limited promotion resources (e.g., high-end local restaurants), they may take advantage of top-quality reviewers.

Realistically, most small businesses have limited resources and will not be able to implement segment-specific social media campaigns on their own. To help these retailers find and reach their suitable influential reviewer segments, review platforms can showcase reviewer segments on their websites (Baird and Parasnis 2011). One way of doing so is to feature some selected Quality reviewers and some Likeness reviewers.

On the consumer side, consumers also heavily rely on online product reviews to make product choices (Ngo-Ye and Sinha 2014). In doing so, they rely on reliable and trustworthy reviewers sharing their preferences. Although they can usually identify some popular reviewers by using their review records (including the number of votes and the number of reviews they posted), it is usually unclear which reviewers they can relate to in terms of shared preferences. Therefore, review platforms can provide more relevant reviews for their users by connecting them with reviewers who share similar preferences.

If a social media platform can systematically group its reviewers into several groups without bias (as we did for restaurant and hotel reviewers), consumers can more efficiently find reviewers sharing similar preferences and providing relevant purchase and consumption information. For example, when we go to a particular product page on Amazon, we can find that the product reviews are categorized according to the overall product rating (5 star, 4 star, 3 star, 2 star, and 1 star). We can also find some information on reviewers (e.g., reviewer rankings) based on the number of reviews and the number of user votes. Similarly, Expedia classifies its customer reviews by traveler type (e.g., couples, business travelers, families).

Likewise, a platform can provide preference-based reviewer segmentation to help users find their matching reviewers and reviews out of an enormous volume of cluttered reviews. Thus, social media platforms can unearth diverse reviewers as featured reviewers on their websites (Yang et al. 2020). Furthermore, with this segment-based presentation of reviewers and reviews, reviewers can more easily see how they are connected to or compared with other reviewers.

To conclude, when platforms present diverse influential reviewers (as revealed by reviewer segmentation), they provide their platform users with more customized reviewer matching and more successful review recommendations, which helps these platforms retain their users longer (Filieri et al. 2018; Huang 2003; Khalifa and Liu 2007).

Limitations and future research suggestions

Our research on diverse reviewer segmentation assumes that such segmentation is similar to consumer segmentation. Although this assumption seems reasonable because reviewers are also consumers, the exact relationship between reviewers and general consumers needs to be empirically demonstrated with proper data. In particular, when making connections with reviewer segmentation and consumer segmentation, researchers must consider social media bias. It is widely known that there is a significant amount of bias and manipulation in consumer-generated content on review sites, which primarily takes two forms—sampling bias and false information bias. Sampling bias indicates inaccurate information caused by the under- or over-representation of certain consumer groups, given that social media activities differ across specific consumer groups (e.g., young vs. old, males vs. females) (Li and Hitt 2008). On the other hand, false information bias takes place when some businesses and consumers post fake reviews to influence target businesses (Goh et al. 2013). These biases weaken our assumption that reviewers represent consumers with similar preferences. It would be helpful to determine how such biases influence the effectiveness of our segmentation approach based on online reviews.

References

Agnihotri, Arpita, and Saurabh Bhattacharya. 2016. Online Review Helpfulness: Role of Qualitative Factors. Psychology and Marketing 33 (11): 1006–1017.

Akdeniz, Billur, Roger J. Calantone, and Clay M. Voorhees. 2013. Effectiveness of Marketing Cues on Consumer Perceptions of Quality: The Moderating Roles of Brand Reputation and Third-Party Information. Psychology & Marketing 30 (1): 76–89.

Algesheimer, René, Utpal M. Dholakia, and Andreas Herrmann. 2005. The Social Influence of Brand Community: Evidence from European Car Clubs. Journal of Marketing 69 (3): 19–34.

Algharabat, Raed, Nripendra P. Rana, Ali Abdallah Alalwan, Abdullah Baabdullah, and Ashish Gupta. 2020. Investigating the Antecedents of Customer Brand Engagement and Consumer-Based Brand Equity in Social Media. Journal of Retailing and Consumer Services 53: 101767.

Allaway, Arthur W., Giles D’Souza, David Berkowitz, and Kyoungmi Kim. 2014. Dynamic Segmentation of Loyalty Program Behavior. Journal of Marketing Analytics 2: 18–32.

Baird, Carolyn Heller, and Gautam Parasnis. 2011. From Social Media to Social Customer Relationship Management. Strategy & Leadership 39 (5): 30–37.

Ball, Leslie, and Jennifer Elworthy. 2014. Fake or Real? The Computational Detection of Online Deceptive Text. Journal of Marketing Analytics 2: 187–201.

Banerjee, Shankhadeep, Samadrita Bhattacharyya, and Indranil Bose. 2017. Whose Online Reviews to Trust? Understanding Reviewer Trustworthiness and Its Impact on Business. Decision Support Systems 96 (2): 17–26.

Bansal, Harvir S., and Peter A. Voyer. 2000. Word-of-Mouth Processes within a Services Purchase Decision Context. Journal of Service Research 3 (2): 166–177.

Beaudouin, Valérie, and Dominique Pasquier. 2017. Forms of Contribution and Contributors’ Profiles: An Automated Textual Analysis of Amateur on Line Film Critics. New Media & Society 19 (11): 1810–1828.

Buitelaar, Paul, Philipp Cimiano, and Bernardo Magnini. 2005. Ontology Learning from Text: Methods, Evaluation and Applications. Amsterdam: IOS Press.

Casaló, Luis V., Carlos Flavián, and Sergio Ibáñez-Sánchez. 2020. Influencers on Instagram: Antecedents and Consequences of Opinion Leadership. Journal of Business Research 117: 510–519.

Celeux, Gilles, and Gilda Soromenho. 1996. An Entropy Criterion for Assessing the Number of Clusters in a Mixture Model. Journal of Classification 13 (2): 195–212.

Chan, Kenny K., and Shekhar Misra. 1990. Characteristics of the Opinion Leader: A New Dimension. Journal of Advertising 19 (3): 53–60.

Chevalier, Judith A., and Dina Mayzlin. 2006. The Effect of Word of Mouth on Sales: Online Book Reviews. Journal of Marketing Research 43 (3): 345–354.

Chintagunta, Pradeep K., Shyam Gopinath, and Sriram Venkataraman. 2010. The Effects of Online User Reviews on Movie Box Office Performance: Accounting for Sequential Rollout and Aggregation across Local Markets. Marketing Science 29 (5): 944–957.

Choi, Hoon S., and Steven Leon. 2020. An Empirical Investigation of Online Review Helpfulness: A Big Data Perspective. Decision Support Systems 139: 113403.

Cimiano, Philipp. 2006. Ontology Learning and Population from Text: Algorithms, Evaluation and Applications. Germany: Springer.

De Bruyn, Arnaud, and Gary L. Lilien. 2008. A Multi-Stage Model of Word-of-Mouth Influence through Viral Marketing. International Journal of Research in Marketing 25 (3): 151–163.

Dholakia, Ruby Roy, and Brian Sternthal. 1977. Highly Credible Sources: Persuasive Facilitators or Persuasive Liabilities? Journal of Consumer Research 3 (4): 223–232.

Dziak, John J., Donna L. Coffman, Stephanie T. Lanza, Runze Li, and Lars S. Jermiin. 2019. Sensitivity and Specificity of Information Criteria. Briefings in Bioinformatics 20: 1–13.

Elberse, Anita, and Jehoshua Eliashberg. 2003. Demand and Supply Dynamics for Sequentially Released Products in International Markets: The Case of Motion Pictures. Marketing Science 22 (3): 329–354.

Farivar, Samira, Fang Wang, and Yufei Yuan. 2021. Opinion Leadership vs. Para-Social Relationship: Key Factors in Influencer Marketing. Journal of Retailing and Consumer Services 59: 102371.

Feldman, Ronen, and James Sanger. 2007. The Text Mining Handbook: Advanced Approaches in Analyzing Unstructured Data. Cambridge: Cambridge University Press.

Filieri, Raffaele, Fraser McLeay, Bruce Tsui, and Zhibin Lin. 2018. Consumer Perceptions of Information Helpfulness and Determinants of Purchase Intention in Online Consumer Reviews of Services. Information & Management 55 (8): 956–970.

Floyd, Kristopher, Ryan Freling, Saad Alhoqail, Hyun Young Cho, and Traci Freling. 2014. How Online Product Reviews Affect Retail Sales: A Meta-Analysis. Journal of Retailing 90 (2): 217–232.

Flynn, Leisa Reinecke, Ronald E. Goldsmith, and Jacqueline K. Eastman. 1996. Opinion Leaders and Opinion Seekers: Two New Measurement Scales. Journal of the Academy of Marketing Science 24 (2): 137–147.

Ghose, Anindya, and Panagiotis G. Ipeirotis. 2011. Estimating the Helpfulness and Economic Impact of Product Reviews: Mining Text and Reviewer Characteristics. IEEE Transactions on Knowledge and Data Engineering 23 (10): 1498–1512.

Gilly, Mary C., John L. Graham, Mary Finley Wolfinbarger, and Laura J. Yale. 1998. A Dyadic Study of Interpersonal Information Search. Journal of the Academy of Marketing Science 26 (2): 83–100.

Goh, Khim-Yong, Cheng-Suang Heng, and Zhijie Lin. 2013. Social Media Brand Community and Consumer Behavior: Quantifying the Relative Impact of User- and Marketer-Generated Content. Information Systems Research 24 (1): 88–107.

Grewal, Lauren, and Andrew T. Stephen. 2019. In Mobile We Trust: The Effects of Mobile Versus Nonmobile Reviews on Consumer Purchase Intentions. Journal of Marketing Research 56 (5): 791–808.

Grimm, Pamela E. 2005. Ab Components’ Impact on Brand Preference. Journal of Business Research 58 (4): 508–517.

Hennig-Thurau, Thorsten, Kevin P. Gwinner, Gianfranco Walsh, and Dwayne D. Gremler. 2004. Electronic Word-of-Mouth via Consumer-Opinion Platforms: What Motivates Consumers to Articulate Themselves on the Internet? Journal of Interactive Marketing 18 (1): 38–52.

Herr, Paul M., Frank R. Kardes, and John Kim. 1991. Effects of Word-of-Mouth and Product-Attribute Information on Persuasion: An Accessibility-Diagnosticity Perspective. Journal of Consumer Research 17 (4): 454–462.

Hoskins, Jake, J. Shyam Gopinath, Cameron Verhaal, and Elham Yazdani. 2021. The Influence of the Online Community, Professional Critics, and Location Similarity on Review Ratings for Niche and Mainstream Brands. Journal of the Academy of Marketing Science 49 (6): 1065–1087.

Hovland, Carl I., and Walter Weiss. 1951. The Influence of Source Credibility on Communication Effectiveness. Public Opinion Quarterly 15 (4): 635–650.

Huang, Ming-Hui. 2003. Designing Website Attributes to Induce Experiential Encounters. Computers in Human Behavior 19 (4): 425–442.

Huang, Jen-Hung, and Yi-Fen Chen. 2006. Herding in Online Product Choice. Psychology & Marketing 23 (5): 413–428.

Ismagilova, Elvira, Emma Slade, Nripendra P. Rana, and Yogesh K. Dwivedi. 2020. The Effect of Characteristics of Source Credibility on Consumer Behaviour: A Meta-Analysis. Journal of Retailing and Consumer Services 53: 101736.

Jacobs, Ruud S., Ard Heuvelman, Somaya Ben Allouch, and Oscar Peters. 2015. Everyone’s a Critic: The Power of Expert and Consumer Reviews to Shape Readers’ Post-Viewing Motion Picture Evaluations. Poetics 52: 91–103.

Johnson, Devon, and Kent Grayson. 2005. Cognitive and Affective Trust in Service Relationships. Journal of Business Research 58 (4): 500–507.

Kamakura, Wagner A., and Gary J. Russell. 1989. A Probabilistic Choice Model for Market Segmentation and Elasticity Structure. Journal of Marketing Research 26 (4): 379–390.

Kamakura, Wagner A., Suman Basuroy, and Peter Boatwright. 2006. Is Silence Golden? An Inquiry into the Meaning of Silence in Professional Product Evaluations. Quantitative Marketing and Economics 4 (2): 119–141.

Karimi, Sahar, and Fang Wang. 2017. Online Review Helpfulness: Impact of Reviewer Profile Image. Decision Support Systems 96: 39–48.

Khalifa, Mohamed, and Vanessa Liu. 2007. Online Consumer Retention: Contingent Effects of Online Shopping Habit and Online Shopping Experience. European Journal of Information Systems 16: 780–792.

Kim, Kacy, Sukki Yoon, and Yung Kyun Choi. 2019. The Effects of eWOM Volume and Valence on Product Sales—An Empirical Examination of the Movie Industry. International Journal of Advertising 38 (3): 471–488.

Komiak, Sherrie Y.X., and Izak Benbasat. 2006. The Effects of Personalization and Familiarity on Trust and Adoption of Recommendation Agents. MIS Quarterly 30 (4): 941–960.

Krosnick, Jon A., David S. Boninger, Yao C. Chuang, Matthew K. Berent, and Catherine G. Carnot. 1993. Attitude Strength: One Construct or Many Related Constructs? Journal of Personality and Social Psychology 65 (6): 1132–1151.

Ku, Yi-Cheng, Chih-Ping Wei, and Han-Wei Hsiao. 2012. To Whom Should I Listen? Finding Reputable Reviewers in Opinion-Sharing Communities. Decision Support Systems 53 (3): 534–542.

Kuksov, Dmitri, and Chenxi Liao. 2019. Opinion Leaders and Product Variety. Marketing Science 38 (5): 812–834.

Ladhari, Riadh, Elodie Massa, and Hamida Skandrani. 2020. YouTube Vloggers’ Popularity and Influence: The Roles of Homophily, Emotional Attachment, and Expertise. Journal of Retailing and Consumer Services 54: 102027.

Lee, Hee, Rob Law, and Jamie Murphy. 2011. Helpful Reviewers in TripAdvisor, an Online Travel Community. Journal of Travel & Tourism Marketing 28 (7): 675–688.

Leong, Choi-Meng, Alexa Min-Wei Loi, and Steve Woon. 2021. The Influence of Social Media eWOM Information on Purchase Intention. Journal of Marketing Analytics, Forthcoming.

Lewis, J. David, and Andrew Weigert. 1985. Trust as a Social Reality. Social Forces 63 (4): 967–985.

Li, Feng, and Timon C. Du. 2011. Who Is Talking? An Ontology-Based Opinion Leader Identification Framework for Word-of-Mouth Marketing in Online Social Blogs. Decision Support Systems 51 (1): 190–197.

Li, Xinxin, and Lorin M. Hitt. 2008. Self-Selection and Information Role of Online Product Reviews. Information Systems Research 19 (4): 456–474.

Li, Yung-Ming, Chia-Hao Lin, and Cheng-Yang Lai. 2010. Identifying Influential Reviewers for Word-of-Mouth Marketing. Electronic Commerce Research and Applications 9 (4): 294–304.

Lim, Jeen-Su, Phuoc Pham, and John H. Heinrichs. 2020. Impact of Social Media Activity Outcomes on Brand Equity. Journal of Product & Brand Management 29 (7): 927–937.

Lo, Ada S., and Sharon Siyu Yao. 2019. What Makes Hotel Online Reviews Credible? An Investigation of the Roles of Reviewer Expertise, Review Rating Consistency and Review Valence. International Journal of Contemporary Hospitality Management 31 (1): 41–60.

Lopez, Alberto, Eva Guerra, Beatriz Gonzalez, and Sergio Madero. 2020. Consumer Sentiments Toward Brands: The Interaction Effect between Brand Personality and Sentiments on Electronic Word of Mouth. Journal of Marketing Analytics 8 (4): 203–223.

Luca, Michael, and Georgios Zervas. 2016. Fake It Till You Make It: Reputation, Competition, and Yelp Review Fraud. Management Science 62 (12): 3412–3427.

Mahajan, Vijay, Eitan Muller, and Roger A. Kerin. 1984. Introduction Strategy for New Products with Positive and Negative Word-of-Mouth. Management Science 30 (12): 1389–1404.

Malik, M.S.I., and Ayyaz Hussain. 2020. Exploring the Influential Reviewer, Review and Product Determinants for Review Helpfulness. Artificial Intelligence Review 53: 407–427.

Mathwick, Charla, and Jill Mosteller. 2017. Online Reviewer Engagement: A Typology Based on Reviewer Motivations. Journal of Service Research 20 (2): 204–218.

Missikoff, Michele, Roberto Navigli, and Paola Velardi. 2002. Integrated Approach to Web Ontology Learning and Engineering. IEEE Computer 35 (11): 60–63.

Moon, Sangkil, and Wagner A. Kamakura. 2017. A Picture is Worth a Thousand Words: Translating Product Reviews into a Product Positioning Map. International Journal of Research in Marketing 34 (1): 265–285.

Moon, Sangkil, Nima Jalali, and Sunil Erevelles. 2021. Segmentation of Both Reviewers and Businesses on Social Media. Journal of Retailing and Consumer Services 61: 102524.

Moorman, Christine, Gerald Zaltman, and Rohit Deshpande. 1992. Relationships between Providers and Users of Market Research: The Dynamics of Trust within and between Organizations. Journal of Marketing Research 29 (3): 314–328.

Mu, Jifeng, Ellen Thomas, Jiayin Qi, and Yong Tan. 2018. Online Group Influence and Digital Product Consumption. Journal of the Academy of Marketing Science 46 (5): 921–947.

Mudambi, Susan M., and David Schuff. 2010. Research Note: What Makes a Helpful Online Review? A Study of Customer Reviews on Amazon.com. MIS Quarterly 34 (1): 185–200.

Naylor, Rebecca Walker, Cait Poynor Lamberton, and David A. Norton. 2011. Seeing Ourselves in Others: Reviewer Ambiguity, Egocentric Anchoring, and Persuasion. Journal of Marketing Research 48 (3): 617–631.

Ngo-Ye, Thomas L., and Atish P. Sinha. 2014. The Influence of Reviewer Engagement Characteristics on Online Review Helpfulness: A Text Regression Model. Decision Support Systems 61: 47–58.

Ozanne, Marie, Stephanie Q. Liu, and Anna S. Mattila. 2019. Are Attractive Reviewers More Persuasive? Examining the Role of Physical Attractiveness in Online Reviews. Journal of Consumer Marketing 36 (6): 728–739.

Ozdemir, Sena, ShiJie Zhang, Suraksha Gupta, and Gaye Bebek. 2020. The Effects of Trust and Peer Influence on Corporate Brand—Consumer Relationships and Consumer Loyalty. Journal of Business Research 117: 791–805.

Pan, Lee-Yun, and Jyh-Shen Chiou. 2011. How Much Can You Trust Online Information? Cues for Perceived Trustworthiness of Consumer-Generated Online Information. Journal of Interactive Marketing 25 (2): 67–74.

Pan, Yue, and Jason Q. Zhang. 2011. Born Unequal: A Study of the Helpfulness of User-Generated Product Reviews. Journal of Retailing 87 (4): 598–612.

Proserpio, Davide, and Georgios Zervas. 2017. Online Reputation Management: Estimating the Impact of Management Responses on Consumer Reviews. Marketing Science 36 (5): 645–665.

Punyatoya, Plavini. 2019. Effects of Cognitive and Affective Trust on Online Customer Behavior. Marketing Intelligence & Planning 37 (1): 80–96.

Racherla, Pradeep, and Wesley Friske. 2012. Perceived ‘Usefulness’ of Online Consumer Reviews: An Exploratory Investigation across Three Services Categories. Electronic Commerce Research and Applications 11 (6): 548–559.

Rapp, Adam, Lauren Skinner Beitelspacher, Dhruv Grewal, and Douglas E. Hughes. 2013. Understanding Social Media Effects across Seller, Retailer, and Consumer Interactions. Journal of the Academy of Marketing Science 41 (5): 547–566.

Ren, Gang, and Taeho Hong. 2019. Examining the Relationship Between Specific Negative Emotions and the Perceived Helpfulness of Online Reviews. Information Processing & Management 56 (4): 1425–1438.

Ren, Jie, and Jeffrey V. Nickerson. 2019. Arousal, Valence, and Volume: How the Influence of Online Review Characteristics Differs with Respect to Utilitarian and Hedonic Products. European Journal of Information Systems 28 (3): 272–290.

Risselada, Hans, Lisette de Vries, and Mariska Verstappen. 2018. The Impact of Social Influence on the Perceived Helpfulness of Online Consumer Reviews. European Journal of Marketing 52 (3/4): 619–636.

Rust, Roland T., William Rand, Ming-Hui Huang, Andrew T. Stephen, Gillian Brooks, and Timur Chabuk. 2021. Real-Time Brand Reputation Tracking Using Social Media. Journal of Marketing 85 (4): 21–43.

Sánchez-Pérez, Manuel, María D. Illescas-Manzano, and Sergio Martínez-Puertas. 2021. Online Review Ratings: An Analysis of Product Attributes and Competitive Environment. Journal of Marketing Communications, Forthcoming.

Shihab, Muhammad Rifki, and Audry Pragita Putri. 2019. Negative Online Reviews of Popular Products: Understanding the Effects of Review Proportion and Quality on Consumers’ Attitude and Intention to Buy. Electronic Commerce Research 19 (1): 159–187.

Siddiqi, Umar Iqbal, Jin Sun, and Naeem Akhtar. 2020. The Role of Conflicting Online Reviews in Consumers’ Attitude Ambivalence. The Service Industries Journal 40 (13–14): 1003–1030.

Simonson, Itamar, and Emanuel Rosen. 2014. What Marketers Misunderstand about Online Reviews. Harvard Business Review 92 (1/2): 23–25.

Skowronski, John J., and Donal E. Carlston. 1989. Negativity and Extremity Biases in Impression Formation: A Review of Explanations. Psychological Bulletin 105 (1): 17–22.

Sokolova, Karina, and Hajer Kefi. 2020. Instagram and YouTube Bloggers Promote It, Why Should I Buy? How Credibility and Parasocial Interaction Influence Purchase Intentions. Journal of Retailing and Consumer Services 53: 101742.

Soucek, Branko. 1991. Neural and Intelligent Systems Integration. New York: Wiley.

Suay-Pérez, Francisco, Gabriel I. Penagos-Londoño, Lucia Porcu, and Felipe Ruiz-Moreno. 2021. Customer Perceived Integrated Marketing Communications: A Segmentation of the Soda Market. Journal of Marketing Communications, Forthcoming, 1–17.

Sunder, Sarang, Kihyun Hannah Kim, and Eric A. Yorkston. 2019. What Drives Herding Behavior in Online Ratings? The Role of Rater Experience, Product Portfolio, and Diverging Opinions. Journal of Marketing 83 (6): 93–112.

Sun, Monic. 2012. How Does the Variance of Product Ratings Matter? Management Science 58 (4): 696–707.

Tata, Sai Vijay, Sanjeev Prashar, and Sumeet Gupta. 2020. An Examination of the Role of Review Valence and Review Source in Varying Consumption Contexts on Purchase Decision. Journal of Retailing and Consumer Services 52: 101734.

Thomas, Marc-Julian, Bernd W. Wirtz, and Jan C. Weyerer. 2019. Determinants of Online Review Credibility and Its Impact on Consumers’ Purchase Intention. Journal of Electronic Commerce Research 20 (1): 1–20.

Tirunillai, Seshadri, and Gerard J. Tellis. 2014. Mining Marketing Meaning from Online Chatter: Strategic Brand Analysis of Big Data Using Latent Dirichlet Allocation. Journal of Marketing Research 51 (4): 463–479.

Trusov, Michael, Anand V. Bodapati, and Randolph E. Bucklin. 2010. Determining Influential Users in Internet Social Networks. Journal of Marketing Research 47 (4): 643–658.

Tsao, Wen-Chin. 2014. Which Type of Online Review Is More Persuasive? The Influence of Consumer Reviews and Critic Ratings on Moviegoers. Electronic Commerce Research 14: 559–583.

Vana, Prasad, and Anja Lambrecht. 2021. The Effect of Individual Online Reviews on Purchase Likelihood. Marketing Science 40 (4): 708–730.

Vrontis, Demetris, Anna Makrides, Michael Christofi, and Alkis Thrassou. 2021. Social Media Influencer Marketing: A Systematic Review, Integrative Framework and Future Research Agenda. International Journal of Consumer Studies 45 (4): 617–644.

Wang, Sijun, Sharon E. Beatty, and William Foxx. 2004. Signaling the Trustworthiness of Small Online Retailers. Journal of Interactive Marketing 18 (1): 53–69.

Williamson, Oliver E. 1979. Transaction-Cost Economics: The Governance of Contractual Relations. Journal of Law and Economics 22 (2): 233–261.

Wu, Xiaoyue, Liyin Jin, and Qian Xu. 2021. Expertise Makes Perfect: How the Variance of a Reviewer’s Historical Ratings Influences the Persuasiveness of Online Reviews. Journal of Retailing 97 (2): 238–250.

Xu, Qian. 2014. Should I Trust Him? The Effects of Reviewer Profile Characteristics on eWOM Credibility. Computers in Human Behavior 33: 136–144.

Yang, Zhi, Zihe Diao, and Jun Kang. 2020. Customer Management in Internet-Based Platform Firms: Review and Future Research Directions. Marketing Intelligence & Planning, Forthcoming.

Yazdani, Elham, Shyam Gopinath, and Steve Carson. 2018. Preaching to the Choir: The Chasm Between Top-Ranked Reviewers, Mainstream Customers, and Product Sales. Marketing Science 37 (5): 838–851.

Yee, Wong Foong, Siew Imm Ng, Kaixin Seng, Xin-Jean Lim, and Thanuja Rathakrishnan. 2021. How Does Social Media Marketing Enhance Brand Loyalty? Identifying Mediators Relevant to the Cinema Context. Journal of Marketing Analytics, Forthcoming.

You, Ya, Gautham G. Vadakkepatt, and Amit M. Joshi. 2015. A Meta-Analysis of Electronic Word-of-Mouth Elasticity. Journal of Marketing 79 (2): 19–39.

Zablocki, Agnieszka, Bodo Schlegelmilch, and Michael J. Houston. 2019. How Valence, Volume and Variance of Online Reviews Influence Brand Attitudes. AMS Review 9 (1): 61–77.

Zheng, Lili. 2021. The Classification of Online Consumer Reviews: A Systematic Literature Review and Integrative Framework. Journal of Business Research 135 (11): 226–251.

Zhu, Feng, and Xiaoquan Zhang. 2010. Impact of Online Consumer Reviews on Sales: The Moderating Role of Product and Consumer Characteristics. Journal of Marketing 74 (2): 133–148.

Funding

Funding was provided by the Hankuk University of Foreign Studies Research Fund of 2022.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Jalali, N., Moon, S. & Kim, MY. Profiling diverse reviewer segments using online reviews of service industries. J Market Anal 11, 130–148 (2023). https://doi.org/10.1057/s41270-022-00163-w

DOI: https://doi.org/10.1057/s41270-022-00163-w