Abstract

In many engineering optimization problems, the number of function evaluations is severely limited by time or cost constraints. These limitations present a significant challenge in global optimization, because existing metaheuristic methods typically require a substantial number of function evaluations to find optimal solutions. This paper presents a new metaheuristic optimization algorithm that uses the information a radial basis function neural network (RBFNN) captures about the objective function to guide the search process. Initially, the algorithm uses the maximum design approach to strategically distribute a set of solutions across the entire search space. It then enters a cycle in which the RBFNN models the objective function values from the current solutions. The algorithm identifies the key neurons in the hidden layer that correspond to the highest objective function values and uses them to generate new solutions. The centroids and standard deviations of these neurons guide the sampling process, which continues until the desired number of solutions is reached. By focusing on the areas of the search space that yield high objective function values, the algorithm avoids exhaustive solution evaluations and significantly reduces the number of function evaluations. The effectiveness of the method is demonstrated through a comparison with popular metaheuristic algorithms across several test functions, where it consistently outperforms existing techniques, delivers higher-quality solutions, and improves convergence rates.

Introduction

Optimization1 is the mathematical discipline focused on finding the best solution to a problem from a set of available alternatives, guided by a specific set of criteria or objectives. It involves selecting the most effective option among various possibilities with the aim of achieving the maximum efficiency, minimum cost, or other desired outcomes. This process is integral to decision-making across a wide range of fields, including engineering, economics, finance, logistics, and more. At its core, optimization seeks to identify the optimal conditions or variables that satisfy given constraints, maximizing or minimizing a target function.

In the past, classical optimization methods2, such as gradient descent and linear programming, were sufficient to tackle the limited set of optimization problems that could be posed with the modest computational resources then available. These traditional techniques are applicable only when the problem is convex and differentiable, which ensures that solutions can be found within the mathematical constraints upon which these methods are based3. However, with the significant advancements in computational power, the scope and complexity of problems that can be optimized have expanded. This development has led to the emergence of complex problems that fall outside the applicability of classical methods due to their inherent limitations and the specific requirements of these new challenges4. As a result, there is a need to develop more advanced and flexible optimization strategies that can navigate the complex landscapes of modern optimization problems.

A metaheuristic method5 is an advanced optimization strategy designed to solve complex problems that are beyond the reach of classical optimization techniques. Unlike classical methods, which often require specific conditions like convexity and differentiability to guarantee finding an optimal solution, metaheuristics do not rely on such strict mathematical properties6. These methods provide flexible, heuristic solutions to a wide range of optimization problems, often using iterative processes to explore and exploit the search space. Most metaheuristic methods draw inspiration from metaphors7, often rooted in natural phenomena or biological processes, to guide their search strategies for optimization solutions. These algorithms mimic the underlying principles of nature, such as evolution, social behavior, and physical processes, to explore complex solution spaces8. Some metaheuristic algorithms are inspired by Darwin’s theory of evolution, aligning with the concepts of natural selection and adaptation9,10. These algorithms, known as evolutionary algorithms (EAs), include prominent examples like the genetic algorithm (GA) developed by Holland11, bird mating optimizer (BMO) by Askarzadeh12, evolution-based strategies (ES) by Schwefel13, and improved multi-operator differential evolution (IMODE) introduced by Sallam et al.14. Additionally, there are algorithms based on swarm intelligence (SI), which emulate the collective intelligence behaviors observed in certain insects and animals critical for their survival15. Well-known SI algorithms include the artificial bee colony (ABC) by Karaboga and Basturk16, ant colony optimization (ACO) by Dorigo and Di Caro17, particle swarm optimization (PSO) by Eberhart and Kennedy18, tuna swarm optimization (TSO) by Xie et al.19, and integrations of particle filter (PF) with PSO by Pozna et al.20. 
Beyond biological inspirations, some metaheuristics draw from physical phenomena, attempting to replicate natural laws like gravity, electromagnetism, and inertia21. Examples of these physics-based algorithms include the gravitational search algorithm (GSA) by Rashedi, Nezamabadi-pour, and Saryazdi22, water evaporation optimization (WEO) by Kaveh and Bakhshpoori23, and the Black Hole (BH) algorithm by Hatamlou24. This diversity of inspirations showcases the breadth of approaches in metaheuristic algorithm development, from biological evolution and social behaviors to the principles governing physical phenomena.

Most metaheuristic methods operate primarily using the information provided by the best solution found in each iteration to guide their strategy25. This characteristic is a defining aspect of these algorithms. Although these methods produce many solutions that could provide information about the shape and structure of the objective function, this information is discarded entirely26. The lack of information about the objective function’s landscape can lead to increased search times, as these methods may need to generate and assess a very large number of solutions to thoroughly explore the search space27. They navigate through the solution space iteratively, making decisions and adjustments based solely on the performance of the current best solution. This approach means that the algorithms generate potential solutions without any preliminary indication of their quality or effectiveness. The information carried by each produced solution only becomes apparent after it has been evaluated against the objective function28. While this strategy allows for flexibility and the ability to tackle a wide range of problems, it can also result in less efficient search processes, especially when dealing with complex or high-dimensional optimization challenges. The need to extensively sample the search space with scarce information to identify promising regions often translates into higher computational costs and longer times to find optimal or near-optimal solutions. Given these limitations of traditional metaheuristic methods, there is a significant demand for algorithms that can intelligently guide the search process by utilizing information about the objective function obtained during the generation of solutions. Incorporating this information can substantially enhance the search efficiency, as it enables the algorithm to focus on more promising regions of the search space.

A radial basis function neural network (RBFNN)29 is an artificial neural network structured into three layers: an input layer, a hidden layer equipped with radial basis functions, and an output layer. The hidden layer’s activation functions are radial basis functions, which are unique mathematical functions determined by the distance between the input data points and certain center points. These center points, along with their corresponding weights, are optimized during the training process, enabling the RBFNN to accurately approximate complex functions and unravel intricate patterns within the data. A notable feature of RBFNNs is their transparency30,31; once trained, each neuron in the hidden layer corresponds to a specific region of the data space, indicating its sensitivity and contribution to the model’s output. This attribute allows for a clear understanding of how different regions of the data space influence the output, effectively distinguishing between areas that are likely to yield higher output values from those that are not. Such insights can be invaluable for an optimization algorithm, guiding it to prioritize sampling from regions of the space that are more promising, thereby enhancing the efficiency and effectiveness of the search process.

Maximum designs32 are experimental design techniques focused on ensuring comprehensive coverage across the full spectrum of possible experimental conditions or factors. The primary goal is to maximize the spread of experimental points throughout the design space, thus facilitating a thorough exploration of all potential interactions among the decision variables. These techniques utilize an iterative process where, in each iteration, the position of an experimental point is altered by randomly varying its decision variables33. This modification is evaluated against a specific criterion that measures the distribution of points. If the new configuration improves this distribution, the change is accepted; otherwise, it’s rejected, and the experimental point reverts to its original position. This cycle repeats until the distribution of all points meets a predetermined threshold of desired dispersion. Consequently, this method ensures that the experimental points are optimally distributed across the design space, allowing for a comprehensive understanding of the process’s responses to all variations in the decision variables. While maximum designs have been widely applied in experimental design, their use as an initialization method in metaheuristic algorithms remains almost unexplored.

Expensive optimization34,35 is a setting frequently encountered in engineering practice, in which the quality of each candidate solution is computed by running a computationally intensive model. Owing to the significant time each evaluation requires, only a limited number of function evaluations is typically available. This limitation significantly restricts the application of many optimization algorithms, including popular evolutionary and metaheuristic algorithms, which generally require a large number of function evaluations to achieve satisfactory solutions36. Consequently, there is a need for more efficient optimization methods that can deliver high-quality results with fewer evaluations, making them suitable for expensive optimization scenarios.

This paper introduces a novel metaheuristic optimization algorithm that utilizes the capabilities of a radial basis function neural network (RBFNN) to guide the search process. The algorithm starts by employing the maximum design approach to initialize a set of solutions that are strategically positioned to cover the entire search space comprehensively. Subsequently, the algorithm enters a cyclical phase. In each cycle, the RBFNN is trained to model the objective function values based on the current set of solutions. The algorithm identifies the neurons in the hidden layer corresponding to the highest values of the objective function and utilizes the parameters of these crucial neurons, such as their centroids and standard deviations, to generate new solutions by sampling in the order of importance until the desired number of solutions is reached. As the algorithm focuses on the promising regions of the search space that yield high values of the objective function, it avoids the exhaustive evaluation of solutions and enables a more informed exploration of the search space. The proposed method’s efficiency was assessed by comparing it with several popular metaheuristic algorithms on a set of 30 test functions. The results showed that the new approach outperformed the existing techniques, achieving superior-quality solutions and better convergence rates.

The rest of this paper is organized as follows. Section “Radial basis functions neural network (RBFNN)” describes the attributes and features of the Radial Basis Function Neural Network (RBFNN). Section “Maximum designs” covers the maximum designs method and its role in exploring the search space. Section “Proposed algorithm” outlines the proposed methodology. Section “Experimental results” presents the experimental outcomes. Section “The proposed RBFNN-A method in expensive optimization” conducts a time complexity analysis of the proposed method. Lastly, Section “Conclusions” presents the conclusions.

Radial basis functions neural network (RBFNN)

Structure and operation of a RBFNN

A Radial Basis Function Neural Network (RBFNN)29 is a specific type of artificial neural network that stands out due to its distinct architecture. It has three layers: an input layer, a hidden layer with radial basis functions, and an output layer (as illustrated in Fig. 1). The input layer represents the input variables of the network, with each node corresponding to a specific feature or dimension of the input data. The hidden layer of an RBFNN is where the network’s unique characteristics lie, comprising radial basis functions (RBFs) associated with individual neurons. Each neuron is defined by a center point and a radial basis function that quantifies its influence based on the distance from the center. The RBFs exhibit local receptive regions, meaning they are sensitive primarily to data points located near their respective centers. This characteristic enables the network to capture particular patterns or features in the input data. The output layer typically consists of a linear combination of the hidden layer outputs, transforming the information extracted by the RBFs into the network’s final output.

Fig. 1 depicts a schematic representation of a generic Radial Basis Function Neural Network (RBFNN). As with any neural network, the objective is to model the mapping \(\mathbf{Y}=f(\mathbf{X})\) by considering a set \(\mathbf{D}\) of training data \(\left(\mathbf{X}\left(n\right),\mathbf{Y}(n)\right)\), where \(n\) indexes the elements of \(\mathbf{D}\). The network comprises \(p\) neurons in the input layer, \(m\) neurons in the hidden layer, and an output layer with \(r\) neurons. Thus, the output \(k\), denoted as \({y}_{k}(n)\), which is generated based on the input pattern \(\mathbf{X}\left(n\right)=\left\{{x}_{1}(n),\dots ,{x}_{p}(n)\right\}\), can be expressed and modeled as follows:
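Consistent with the weights and activations defined in the surrounding text, this output takes the standard weighted-sum form of an RBFNN, written here without a bias term (an assumption):

\[{y}_{k}(n)=\sum_{i=1}^{m}{w}_{ik}\,{\phi }_{i}(n),\quad k=1,\dots ,r \tag{1}\]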

In Eq. (1), the term \({w}_{ik}\) signifies the weight attached to the hidden neuron \(i\) with respect to the output neuron \(k\), while \({\phi }_{i}\left(n\right)\) denotes the activation levels of the hidden neurons (\(i=1,\dots ,m\)) that correspond to the input \(\mathbf{X}\left(n\right)\). The activation value of each hidden neuron \(i\), denoted as \({\phi }_{i}\left(n\right)\), is determined by the following equation:
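In terms of the center and width of each hidden neuron, a common way to write this activation is the following, where treating the width \({\sigma }_{i}\) as a single scale per neuron is an assumption:

\[{\phi }_{i}(n)=\phi \left(\frac{\Vert \mathbf{X}(n)-{C}_{i}\Vert }{{\sigma }_{i}}\right),\quad i=1,\dots ,m \tag{2}\]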

In Eq. (2), \(\phi (\cdot )\) is referred to as the radial basis function, while \({C}_{i}=\left({c}_{i1}, \dots , {c}_{ip}\right)\) represents the centers of the radial basis function. Additionally, \({\sigma }_{i}=\left({\sigma }_{i1},\dots ,{\sigma }_{ip}\right)\) denotes the deviation or width, and ‖∙‖ represents the Euclidean distance between the input data \(\mathbf{X}\left(n\right)\) and the center \({C}_{i}\).

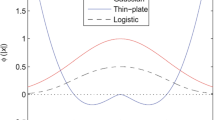

The activation value of a neuron in the hidden layer is determined by the proximity of the input data \(\mathbf{X}\left(n\right)\) to the center \({C}_{i}\). In general, a radial basis function \(\phi (\cdot )\) attains a value close to its maximum when the input \(\mathbf{X}\left(n\right)\) is in close proximity to the center \({C}_{i}\) of the neuron; conversely, it assumes a minimum value when \(\mathbf{X}\left(n\right)\) is far from the center. There are several functions that exhibit these characteristics. However, one of the most commonly used is the Gaussian function. Under these conditions, the activation value of a neuron i in the hidden layer can be calculated as follows:
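For the Gaussian case, the activation is usually written as

\[{\phi }_{i}(n)=\exp \left(-\frac{{\Vert \mathbf{X}(n)-{C}_{i}\Vert }^{2}}{2{\sigma }_{i}^{2}}\right) \tag{3}\]

where a single scalar width \({\sigma }_{i}\) per neuron is assumed. The NumPy sketch below illustrates this activation together with the linear output layer. The function names `rbf_activations` and `rbf_forward` and the toy values are hypothetical and serve only as an illustration.

```python
import numpy as np

def rbf_activations(X, centers, widths):
    """Gaussian activations phi_i(n) for every input row and hidden neuron.

    X: (n_samples, p) inputs, centers: (m, p), widths: (m,).
    Assumption: one scalar width per neuron.
    """
    # Squared Euclidean distance between every input and every center.
    diff = X[:, None, :] - centers[None, :, :]
    sq_dist = np.sum(diff ** 2, axis=2)
    return np.exp(-sq_dist / (2.0 * widths[None, :] ** 2))

def rbf_forward(X, centers, widths, W):
    """Outputs y_k(n) as a linear combination of the hidden activations.

    W: (m, r) weights w_ik from hidden neuron i to output neuron k.
    """
    return rbf_activations(X, centers, widths) @ W

# Toy network: two hidden neurons, one output (illustrative values only).
centers = np.array([[0.0, 0.0], [1.0, 1.0]])
widths = np.array([0.5, 0.5])
W = np.array([[2.0], [-1.0]])
X = np.array([[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]])

phi = rbf_activations(X, centers, widths)
y = rbf_forward(X, centers, widths, W)
```

An input that coincides with a center gives that neuron an activation of exactly one, while the distant input `[5, 5]` activates neither neuron, which is the local receptive behavior described above.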

A key attribute of the radial basis function neural network (RBFNN) is its inherently interpretable structure, especially evident in the hidden layer. During the training process, the RBFNN adjusts the center points and shapes of the radial basis functions, allowing it to adeptly approximate complex nonlinear relationships present in the data. This adjustability is crucial, as it enables the network to effectively model intricate patterns and dependencies between variables. The transparency of this process is a significant advantage; it allows for a clear understanding of how the network processes information. By observing the parameters in the radial basis functions and their influence on the network’s output, it becomes possible to determine the specific associations and dependencies between the input and output data. This level of interpretability is particularly valuable in applications where understanding the underlying decision-making process of the model is as important as the accuracy of its predictions.

Training of a RBFNN

The training process of a Radial Basis Function Neural Network (RBFNN) consists of two stages37 that facilitate the network’s ability to approximate complex functions with precision. Initially, an unsupervised phase is employed wherein the centers \({C}_{i}\) and widths \({d}_{i}\) of the radial basis functions (RBFs) in the hidden layer are initialized using the \(k\)-means clustering method (\(i=1,\dots ,m\)).

The supervised learning phase is the next step in the network’s training process. During this phase, input data points are provided to the network, and the radial basis functions (RBFs) compute their output values. These outputs are then compared to the target values from the training dataset, and a common optimization technique, such as gradient descent, is employed to adjust the weights connecting the hidden layer (\(i=1,\dots ,m\)) and output layer (\(k=1,\dots ,r\)) neurons so that the error between the computed and target outputs is minimized.

The process of training the network is repeated iteratively on the training dataset to ensure that it converges to a satisfactory approximation of the target function. Properly trained, the radial basis functions of the RBFNN are positioned and shaped to capture the essential patterns and relationships within the data, thereby enabling the network to generalize well to unseen data points. Once the RBFNN has been trained, it can be applied to perform a variety of tasks, including function approximation, pattern recognition, and regression, with a high degree of accuracy and transparency. The key advantage of the RBFNN is its ability to approximate complex nonlinear relationships between inputs and outputs, making it a powerful tool for data analysis and modeling.
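The two training stages can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: k-means is written out as plain Lloyd iterations, each width is set to the distance to the nearest other center (a common heuristic, so `m` must be at least 2), the network has a single output, and the names `kmeans`, `hidden_outputs`, and `train_rbfnn`, as well as the learning rate and epoch count, are hypothetical.

```python
import numpy as np

def kmeans(X, m, iters=50, seed=0):
    """Plain Lloyd's k-means; returns the m cluster centers."""
    rng = np.random.default_rng(seed)
    centers = X[rng.choice(len(X), size=m, replace=False)].copy()
    for _ in range(iters):
        dist = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
        labels = np.argmin(dist, axis=1)
        for i in range(m):
            if np.any(labels == i):  # keep the old center if a cluster empties
                centers[i] = X[labels == i].mean(axis=0)
    return centers

def hidden_outputs(X, centers, widths):
    """Gaussian activations of the hidden layer, shape (n_samples, m)."""
    sq = np.sum((X[:, None, :] - centers[None, :, :]) ** 2, axis=2)
    return np.exp(-sq / (2.0 * widths ** 2))

def train_rbfnn(X, y, m, epochs=500, lr=0.1, seed=0):
    # Stage 1 (unsupervised): centers from k-means, widths from center spacing.
    centers = kmeans(X, m, seed=seed)
    gaps = np.linalg.norm(centers[:, None, :] - centers[None, :, :], axis=2)
    np.fill_diagonal(gaps, np.inf)
    widths = np.maximum(gaps.min(axis=1), 1e-6)
    # Stage 2 (supervised): gradient descent on the mean squared error
    # with respect to the hidden-to-output weights only.
    phi = hidden_outputs(X, centers, widths)
    w = np.zeros(m)
    for _ in range(epochs):
        error = phi @ w - y
        w -= lr * (phi.T @ error) / len(X)
    return centers, widths, w

# Toy regression problem (illustrative only).
rng = np.random.default_rng(1)
X = rng.uniform(0.0, 1.0, size=(60, 2))
y = np.sin(3.0 * X[:, 0]) + X[:, 1] ** 2

centers, widths, w = train_rbfnn(X, y, m=6)
mse_before = np.mean(y ** 2)  # error of the untrained network (w = 0)
mse_after = np.mean((hidden_outputs(X, centers, widths) @ w - y) ** 2)
```

Because the output layer is linear in the weights, the supervised stage is a convex least-squares problem. A direct solver such as `np.linalg.lstsq` would be an alternative to gradient descent in this sketch.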

Maximum designs

Maximum designs32,33 represent a class of experimental design techniques dedicated to achieving extensive coverage throughout the entire range of potential experimental conditions or factors. The central aim of these techniques is to distribute experimental points as widely as possible across the design space. This broad dispersion is crucial for enabling a comprehensive exploration of the experiment’s landscape, ensuring that all potential interactions between decision variables are thoroughly investigated. By maximizing the spread of these points, maximum designs ensure that no area of the design space is overlooked, allowing for a complete and detailed understanding of how different factors interact and influence the outcomes. This approach is particularly important in experiments where capturing the full complexity of the system or process under study is essential for drawing accurate and reliable conclusions.

This section is divided into two parts. The first part explains the index used by the maximum designs method to measure data dispersion. The second part analyzes the maximum designs method in detail.

Evaluation of dispersion

To assess how well a set of \(N\) elements \(\left\{{\mathbf{x}}_{1},\dots ,{\mathbf{x}}_{N}\right\}\) is distributed within its space, the determinant of a covariance matrix \(Co\) serves as the index. A covariance function captures the relation between pairs of elements, indicating their level of differentiation. The covariance matrix \(Co\) is an \(N\times N\) matrix that encapsulates the relationships between all \(N\) elements within the set. Each element \({co}_{i,j}\) of the matrix \(Co\) is defined as follows:
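A form that matches the behavior described for \({co}_{i,j}\) (close to one for nearby points, decaying to zero with distance) is the Gaussian correlation function, where the scale parameter \(\theta >0\) is an assumption introduced here:

\[{co}_{i,j}=\exp \left(-\theta {\Vert {\mathbf{x}}_{i}-{\mathbf{x}}_{j}\Vert }^{2}\right),\quad i,j=1,\dots ,N \tag{4}\]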

The relation between \({\mathbf{x}}_{i}\) and \({\mathbf{x}}_{j}\) is represented by the value \({co}_{i,j}\). When \({\mathbf{x}}_{i}\) and \({\mathbf{x}}_{j}\) are close, the value of \({co}_{i,j}\) is nearly one, but it decreases to zero as they move further apart. Once the matrix \(Co\) is calculated, the value of the determinant \(\left|Co\right|\) represents the degree of dispersion of \(\left\{{\mathbf{x}}_{1},\dots ,{\mathbf{x}}_{N}\right\}\). A high value of \(\left|Co\right|\) indicates a higher dispersion of the data.
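The dispersion index can be illustrated with a short NumPy sketch. It assumes a Gaussian correlation function with a hypothetical scale parameter `theta`, and it returns the logarithm of the determinant rather than the determinant itself, because the determinant of a nearly singular matrix underflows easily. The logarithm is monotonic, so comparisons between configurations are unchanged. The names `correlation_matrix` and `dispersion` are illustrative.

```python
import numpy as np

def correlation_matrix(points, theta=10.0):
    """N x N matrix Co with entries exp(-theta * ||x_i - x_j||^2).

    Assumption: Gaussian correlation; theta is a hypothetical scale parameter.
    """
    diff = points[:, None, :] - points[None, :, :]
    return np.exp(-theta * np.sum(diff ** 2, axis=2))

def dispersion(points, theta=10.0):
    """log|Co|; higher means the points are more spread out."""
    sign, logdet = np.linalg.slogdet(correlation_matrix(points, theta))
    # A non-positive sign means Co is numerically singular: no dispersion.
    return logdet if sign > 0 else -np.inf

# A 3 x 3 grid covering the unit square versus the same grid shrunk
# into a tiny cluster around the center.
grid = np.array([[a, b] for a in (0.0, 0.5, 1.0) for b in (0.0, 0.5, 1.0)])
cluster = 0.5 + 0.01 * (grid - 0.5)

spread_score = dispersion(grid)
cluster_score = dispersion(cluster)
```

For the clustered points all entries of the matrix are close to one, so its rows are nearly identical and the determinant collapses toward zero. The spread-out grid yields a matrix close to the identity and therefore a larger index.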

The method of maximum designs

In maximum designs, the central objective is to adjust the positions of a set of \(N\) points \(\left\{{\mathbf{x}}_{1},\dots ,{\mathbf{x}}_{N}\right\}\) within a \(d\)-dimensional space \(\left({\mathbf{x}}_{i}=\left\{{x}_{i,1},\dots ,{x}_{i,d}\right\},i\in 1,\dots ,N\right)\) to maximize the coverage of the design space \(\mathbf{S}\), defined by the minimum (\({min}_{j}\)) and maximum (\({max}_{j}\)) values of each decision variable \(j\), where \(j\in 1,\dots ,d\). Initially, the technique randomly positions the set of \(N\) points within the search space \(\mathbf{S}\). An iterative process follows. In each iteration \(t\), the position of one of the points \({\mathbf{x}}_{i}\) is altered by randomly changing its decision variables, producing the new position \({\mathbf{x}}_{i}^{n}\). The change is then evaluated: the dispersion of the new configuration \(\left|Co({\mathbf{x}}_{i}^{n})\right|\) (which includes the modified point \({\mathbf{x}}_{i}^{n}\)) is compared with the original dispersion \(\left|Co({\mathbf{x}}_{i})\right|\). If the new configuration improves the distribution \(\left(\left|Co({\mathbf{x}}_{i}^{n})\right|>\left|Co({\mathbf{x}}_{i})\right|\right)\), the change \({\mathbf{x}}_{i}^{n}\) is accepted; otherwise, it is rejected, and the point reverts to its original position \({\mathbf{x}}_{i}\). This cycle continues until one of two criteria is met: either a predefined number of iterations \(iterini\) has been completed, or the set of \(N\) points has achieved a specified level of dispersion \(\left|Co\right|\) in its last configuration.
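The accept-or-revert cycle described above can be sketched as follows. Several details are assumptions made for illustration: the dispersion is a log-determinant of a Gaussian correlation matrix with a hypothetical scale `theta`, a point is perturbed by resampling it uniformly within the bounds, and the points are handled in the unit hypercube so that one `theta` suits every variable range. The names `log_dispersion` and `maximum_design` are hypothetical.

```python
import numpy as np

def log_dispersion(points, theta=10.0):
    """log|Co| for a Gaussian correlation matrix (assumed form)."""
    diff = points[:, None, :] - points[None, :, :]
    co = np.exp(-theta * np.sum(diff ** 2, axis=2))
    sign, logdet = np.linalg.slogdet(co)
    return logdet if sign > 0 else -np.inf

def maximum_design(n_points, lower, upper, iters=1000, target=None, seed=0):
    """Spread n_points over the box [lower, upper] by accept-or-revert moves."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    # Random initial configuration, stored in unit-hypercube coordinates.
    unit = rng.uniform(0.0, 1.0, size=(n_points, lower.size))
    best = log_dispersion(unit)
    for _ in range(iters):
        # Second stopping criterion: a specified dispersion level is reached.
        if target is not None and best >= target:
            break
        i = rng.integers(n_points)              # pick one point to move
        candidate = unit.copy()
        candidate[i] = rng.uniform(0.0, 1.0, size=lower.size)
        score = log_dispersion(candidate)
        if score > best:                        # accept only improvements
            unit, best = candidate, score
    return lower + unit * (upper - lower), best

lower, upper = [-5.0, 0.0], [5.0, 2.0]
# With the same seed, iters=0 returns the initial random configuration.
start, start_score = maximum_design(20, lower, upper, iters=0, seed=3)
final, final_score = maximum_design(20, lower, upper, iters=300, seed=3)
```

Since a move is kept only when it increases the index, the dispersion of the final configuration can never be lower than that of the starting one.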

Figure 2 provides an example of how the method of maximum designs operates in practice. Fig. 2a shows an initial configuration of 50 points in two dimensions \({x}_{1}\) and \({x}_{2}\), each defined within \([0,1]\). These points are deliberately clustered around the center of the space, a setup designed to highlight the impact of the method. Fig. 2b depicts the outcome after 100 iterations. The final arrangement of points demonstrates how the method achieves comprehensive and efficient coverage of the space. This transformation from a centralized cluster to a well-distributed set of points across the entire space underscores the effectiveness of the maximum designs method in optimizing the spread of points for better exploration and analysis of the given area.

Proposed algorithm

This study introduces a novel metaheuristic optimization algorithm that incorporates the information derived from a radial basis function neural network (RBFNN) as a means of informing the search process. The aim of the proposed method is to identify the optimal solution for a nonlinear objective function \(f\), which can be described as follows:
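Because the algorithm targets the regions that yield high objective function values, the problem can be written as a bound-constrained maximization (the maximization form is inferred from that description):

\[\underset{\mathbf{x}\in \mathbf{S}}{\text{maximize}}\; f(\mathbf{x}),\quad \mathbf{x}=\left({x}_{1},\dots ,{x}_{d}\right) \tag{5}\]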

where the function is defined as \(f:{\mathbb{R}}^{d}\to {\mathbb{R}}\), within a search space represented by \(\mathbf{S}\) \(\left(\mathbf{x}\in {\mathbb{R}}^{d}|{min}_{i}\le {x}_{i}\le {max}_{i}, i=1,\dots ,d\right)\), which is bounded by lower (\({min}_{i}\)) and upper (\({max}_{i}\)) limits for each dimension. Our proposed approach consists of three essential components: (4.1) initialization, (4.2) training of the Radial Basis Function Neural Network (RBFNN), and (4.3) generation of solutions. Each of these elements plays a critical role in the effectiveness of our optimization strategy. In the subsequent subsections, we provide a detailed explanation of each component, highlighting its significance and how the components collectively contribute to the success of our approach. At the end of this section, in subsection “Computational procedure”, a summary of the computational procedure of the complete method is given.

Initialization

Our methodology uses the information obtained from the neural network’s training to develop the search strategy. For this reason, it is essential to have an initial sample set that is comprehensive and representative of the entire search space. This is achieved with the maximum designs method (as outlined in Section “Maximum designs”). The initialization stage is the first operation of the algorithm and establishes an initial population consisting of \(N\) elements (\(P=\left\{{\mathbf{x}}_{1},\dots , {\mathbf{x}}_{N}\right\}\)). Each solution \({\mathbf{x}}_{i}\) in the population represents a specific combination of decision variables \(\left\{{x}_{i,1},\dots ,{x}_{i,d}\right\}\), which provides a starting value for the optimization process. In our approach, the initial position of each element \({\mathbf{x}}_{i}\) is determined by the maximum designs method. The method has been configured to run for 1000 iterations (\(iterini=1000\)), so that the candidate solutions are spread widely over the search space \(\mathbf{S}\). Note that the computational cost of initialization is incurred only once in the optimization process.

Training the RBFNN

Given a set \(\left\{{\mathbf{x}}_{1},\dots , {\mathbf{x}}_{N}\right\}\) of \(N\) points sourced from the input space \(\mathbf{S}\)—either as initial configuration or as results from prior iterations—the next step involves evaluating these points against the objective function \(f\). This evaluation yields \(N\) training pairs, each linking a position \({\mathbf{x}}_{i}\) to its respective outcome \(f({\mathbf{x}}_{i})\) produced by the objective function. This collection of pairs forms the dataset on which the neural network is trained, aiming to closely approximate the objective function \(f\). Through this training, the network learns to correlate input positions with their corresponding function values. This capability allows the network to grasp the intricate patterns and relationships present in the data. Consequently, this understanding significantly improves the network’s prediction accuracy, thereby enhancing its role in steering the optimization process towards more effective solutions.

In the training phase, the neural network is structured to include \(d\) neurons in the input layer, correlating to each decision variable. The hidden layer contains \(M\) units. This layer is important for understanding the complex relationships within the data. A singular output neuron is assigned for generating the network’s predictions of the objective function \(f\). The training follows the procedures detailed in Section “Radial basis functions neural network (RBFNN)”, employing a structured methodology to ready the network for its optimization role. This arrangement guarantees that the neural network is in harmony with the problem’s specific attributes, facilitating its capacity to accurately learn and predict the objective function. Such an alignment is essential, as it equips the network to significantly influence the optimization process, steering it toward favorable results.

The performance of a Radial Basis Function Neural Network (RBFNN) is significantly influenced by the number of neurons, \(M\), in its hidden layer. A higher number of neurons enhances the network’s ability to precisely approximate complex functions and capture intricate details. However, this advantage comes with the risk of overfitting to the training data, which can undermine the network’s ability to perform well on unseen data. On the other hand, a smaller number of neurons simplifies the model and reduces computational demands but may compromise the quality of function approximation due to insufficient complexity. Determining the number of neurons in the hidden layer is therefore a delicate balance that depends on the scope of the search space. Achieving this balance is essential for maximizing RBFNN performance, ensuring the network can accurately model the data patterns without unnecessary complexity. Our methodology for selecting the appropriate number of neurons in the hidden layer follows the model considered in Eq. (6), tailored to align with these considerations.

In our Radial Basis Function Neural Network (RBFNN) model, the selection of the number of hidden neurons \(M\), is influenced by both the dimensions \(d\) of the data and the quantity of training data available \(N\). The complexity inherent in the data, which guides the determination of the optimal number of hidden neurons, is directly affected by these two factors. As the dimensionality of the data increases, a correspondingly larger number of hidden neurons may be required to effectively capture and replicate the patterns present. High dimensional data, in particular, demands a more substantial neuron count in the hidden layer to ensure the network can accurately learn and represent the underlying relationships. Utilizing the approach defined in Eq. (6), we calibrate the number of neurons in the hidden layer to achieve a balance. This balance is crucial for providing a robust approximation of the objective function, ensuring generalization while avoiding the problem of overfitting. This methodology ensures that our RBFNN model can adapt to the complexity and scale of the data it is trained on, offering an optimal level of performance.

After finalizing the network architecture, the next step is to carry out the training process as outlined in Section “Radial basis functions neural network (RBFNN)”. Conducted over 100 iterations, this phase gives the neural network the ability to closely represent the objective function. With this information, the neural network can be used to explore the search space. Upon completion of the training, the network parameters encode the associations between the input space data points and their related outputs. The trained network provides two kinds of information: (a) which regions of the input space affect the output significantly, and (b) how much each of these regions contributes to the outcome. These components enable the network to uncover the complex dynamics between variables, offering a refined analysis of the data. This information paves the way for devising precise and powerful optimization strategies.

The influence of regions of the input space on particular outputs is defined by the outputs of the radial basis functions \({\phi }_{i}\), where each function is associated with a neuron \(i\) in the hidden layer. These functions act as specialized filters, with each one exhibiting distinct sensitivity to certain areas of the input space. When a radial basis function’s output nears one for a specific input, this indicates that the input lies within a region to which the function is highly responsive. On the other hand, a function output close to zero suggests that the input falls outside the function’s sensitive region. This capability of the network to discriminate and evaluate the relevance of various parts of the input space is crucial. It enables the network to deeply grasp the intricate dynamics present in the data. As a result, the network can enhance the precision of its predictions and the efficacy of its optimization approaches, leveraging this nuanced understanding to navigate the complexities of the input space more effectively.

The weights connecting the neurons in the hidden layer to the output neuron also play a pivotal role in highlighting the importance of specific regions within the input space for determining the final output. A high and positive weight, denoted by \({w}_{i}\), indicates that the region of the input space associated with the radial basis function \({\phi }_{i}\) significantly influences the generation of high values or peaks in the network’s output. Conversely, a high but negative weight \({w}_{i}\) suggests that the region delineated by \({\phi }_{i}\) is instrumental in producing low values or valleys in the output. These weightings are crucial for the network’s ability to prioritize different areas within the input space, effectively mapping the complex interplay between inputs and outputs. By understanding these relationships, the network enhances its ability to deliver accurate predictions and make informed optimization decisions, leveraging the nuanced insights provided by the weight assignments to navigate the optimization landscape more effectively.

Figure 3 demonstrates how the neural network’s structure, post-training, captures essential details about the function it seeks to approximate. In the depicted scenario, the network aims to model a function \(f\), utilizing a dataset composed of points (\(\mathbf{x},J(\mathbf{x})\)), where \(\mathbf{x}\) encompasses two decision variables, \({x}_{1}\) and \({x}_{2}\). The figure highlights the pivotal role of the centroids of the hidden layer’s neurons (\(M=3\)) in determining the locations of the function’s peaks and valleys after approximation. Notably, the most significant peak is at position \({C}_{3}\), coinciding with the highest positive weight \({w}_{3}=2\), whereas the valley is located at the centroid linked to the sole negative weight, whose value is \(-1\). The standard deviations, \({\sigma }_{1}\), \({\sigma }_{2}\), and \({\sigma }_{3}\), are crucial for understanding how the function \(f\) is distributed around key positions \({C}_{1}\), \({C}_{2}\), and \({C}_{3}\) within the network. In this example, each standard deviation is the same in both dimensions, \({x}_{1}\) and \({x}_{2}\), indicating a consistent spread. The larger values of \({\sigma }_{1}\) and \({\sigma }_{2}\) (\({\sigma }_{1}={\sigma }_{2}=0.8\)) suggest a broader, more gentle distribution, whereas the smaller value of \({\sigma }_{3}\) (\({\sigma }_{3}=0.5\)) indicates a sharper peak around \({C}_{3}\). This variation in dispersion values underlines the network’s ability to differentiate and detail the function’s local behaviors and characteristics, offering insight into the objective function’s nature. Such detailed understanding is crucial for refining optimization strategies and making informed decisions.
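The roles of the centroids, standard deviations, and weights discussed above can be illustrated with a minimal sketch. It assumes a Gaussian kernel with one standard deviation per dimension; the function names, the centroid positions, and the weights \({w}_{1}\) and \({w}_{2}\) are hypothetical values chosen for illustration and are not taken from Fig. 3.

```python
import math

def rbf_activation(x, centroid, sigma):
    """Output of one hidden neuron (assumed Gaussian kernel).

    Equals 1 at the centroid and decays toward 0 away from it.
    """
    scaled_sq = sum(((xi - ci) / si) ** 2
                    for xi, ci, si in zip(x, centroid, sigma))
    return math.exp(-0.5 * scaled_sq)

def rbf_output(x, centroids, sigmas, weights):
    """Network output: weighted sum of the hidden-layer activations."""
    return sum(w * rbf_activation(x, c, s)
               for w, c, s in zip(weights, centroids, sigmas))

# Hypothetical network with two decision variables and M = 3 neurons.
centroids = [(-2.0, -2.0), (2.0, -2.0), (0.0, 2.0)]   # assumed positions
sigmas = [(0.8, 0.8), (0.8, 0.8), (0.5, 0.5)]         # spreads as in the text
weights = [1.0, -1.0, 2.0]                            # one negative weight

# A large positive weight produces a peak at its centroid,
# a negative weight produces a valley at its centroid.
peak = rbf_output(centroids[2], centroids, sigmas, weights)
valley = rbf_output(centroids[1], centroids, sigmas, weights)
```

Because the three centroids are far apart relative to their spreads, the output at each centroid is dominated by the weight of the corresponding neuron.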

Production of the new solutions

In our methodology, at each iteration \(k\), we create \(N\) new solutions by extracting information from the preceding \(N\) solutions used to train the neural network. To generate the \(N\) new solutions, we first identify the \(b\) most significant solutions from the current population. The knee point technique38,39 is employed to determine the number and identity of the most significant solutions. From these \(b\) solutions, the \(N\) new solutions are generated. To perform this process, one of the \(b\) solutions is randomly selected. Assuming that solution \(i\) has been chosen, the next step is to determine which hidden-layer neuron this solution belongs to. The neuron responsible for this solution is the one that yields the highest output value for it, since a higher output indicates that the solution lies closer to that neuron’s centroid. Assuming that solution \(i\) belongs to the region corresponding to neuron \(j\), the new solution is generated by sampling a Gaussian function with the parameters of the radial basis function corresponding to neuron \(j\). This process is repeated until \(N\) new solutions are generated. All these operations are described in the following paragraphs.

Identification of the most significant solutions

The knee point38,39 is used in this work to identify the number \(b\) of significant solutions among a set of \(N\) solutions. In systems engineering, the knee point refers to a critical position in a performance curve or trade-off analysis at which the value of the elements changes significantly. This point is used to identify the optimal balance between different factors, such as value versus significance, in the design and development of systems. Beyond the knee point, the values of the elements diminish so strongly that they can be regarded as insignificant compared with the first elements. Identifying this point helps decision makers select the most effective and efficient option among various alternatives.

To identify the knee point among the \(N\) solutions, we begin by organizing these solutions in descending order based on their performance against the objective function. In this arrangement, solution \({\mathbf{x}}_{1}\) is the one yielding the highest value \(f({\mathbf{x}}_{1})\), indicating optimal performance, while solution \({\mathbf{x}}_{N}\) is at the other end, offering the least desirable outcome \(f({\mathbf{x}}_{N})\). Following this organization, we construct two sequences. The first sequence, labeled \({s}_{1}\), incorporates the objective function values generated by each solution \(\left({s}_{1}=\left\{f\left({\mathbf{x}}_{1}\right),\dots ,f({\mathbf{x}}_{N})\right\}\right)\). The second sequence, named \({s}_{2}\), consists of the indices corresponding to each solution’s position in the ordered list \(\left({s}_{2}=\left\{1,\dots ,N\right\}\right)\). The subsequent phase involves normalizing these sequences to ensure their values range from 0 to 1. This normalization is achieved by dividing the sequences by their respective maximum values, resulting in \({s}_{1}\) being scaled by \(f({\mathbf{x}}_{1})\), the highest objective function value, and \({s}_{2}\) being divided by \(N\), the total number of solutions.

Taking into account the values of \({s}_{1}\) and \({s}_{2}\), we can define the objective function \(J\) that connects the data from \({s}_{2}\) in terms of the significance of \({s}_{1}\). The structure of \(J\) is modeled as follows:

This objective function \(J\), consisting of values \({J}_{1},\dots ,{J}_{N}\), has a size of \(N\), and one of its key features is that it possesses only one global minimum value. This minimum value signifies the knee point \(KP\), which is determined as \(KP=\text{min}(J)\). Figure 4 shows the estimation of \(KP\). In the example, a set of eleven points (\(N=11\)) that associates the normalized variables \({s}_{1}\) and \({s}_{2}\) is assumed. In Fig. 4, the point \(KP\) divides the graph into two parts: the first 4 points represent the most significant solutions \(\left\{{\mathbf{x}}_{1},{\mathbf{x}}_{2},{\mathbf{x}}_{3},{\mathbf{x}}_{4}\right\}\) (\(b=4\)), while the other 7 points \(\left\{{\mathbf{x}}_{5},\dots ,{\mathbf{x}}_{11}\right\}\) represent the insignificant solutions. In our approach, these 4 solutions are used as the significant ones to produce the 11 new solutions.
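The knee-point selection can be sketched as follows. Since the defining expression of \(J\) is not reproduced in this text, the sketch assumes one common choice, the Euclidean distance of each normalized point \(({s}_{2},{s}_{1})\) to the origin; this form is an assumption and may differ from the one used by the authors. The sketch also assumes strictly positive objective values, so that dividing by the maximum yields values in \((0, 1]\). The function name and the sample data are hypothetical.

```python
import math

def knee_point(values):
    """Return (b, significant_values) for a list of objective values.

    Values are sorted in descending order (best first), both sequences
    are normalized by their maxima, and the index minimizing J is taken
    as the knee point.
    """
    ordered = sorted(values, reverse=True)
    n = len(ordered)
    s1 = [v / ordered[0] for v in ordered]      # normalized objective values
    s2 = [(i + 1) / n for i in range(n)]        # normalized positions 1..N
    # ASSUMED form of J: distance of (s2_i, s1_i) to the origin.
    j_values = [math.hypot(a, b) for a, b in zip(s1, s2)]
    b = min(range(n), key=j_values.__getitem__) + 1
    return b, ordered[:b]

# Hypothetical set of N = 11 objective values with a visible knee.
sample = [10.0, 6.0, 3.5, 2.0, 1.6, 1.3, 1.1, 0.9, 0.8, 0.7, 0.6]
b, significant = knee_point(sample)
```

For this sample the minimum of the assumed \(J\) falls on the fourth ordered solution, so the first four values are kept as significant.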

Generation of solutions

The generation of a new set of \(N\) solutions begins by identifying the \(b\) most significant solutions. These \(b\) solutions \(\left\{{\mathbf{x}}_{1},\dots ,{\mathbf{x}}_{b}\right\}\) are then utilized as the basis for the subsequent set of \(N\) new solutions. The generation process begins with the random selection of one solution from the identified \(b\) significant solutions. If solution \(i\) is selected, the next step is to determine the specific hidden-layer neuron with which this solution is most closely associated. This is determined by identifying the neuron that produces the highest output value for solution \(i\), indicating a closer proximity to its corresponding centroid. If it is determined that solution \(i\) falls within the domain influenced by neuron \(j\), then the new solution is created considering the parameters associated with this neuron. Under these conditions, the new solution \({\mathbf{x}}_{new}\) is produced by sampling from a normal distribution considering the following model:

where \({C}_{j}\) and \({\sigma }_{j}\) (\({\sigma }_{j}=\left({\sigma }_{j1},\dots ,{\sigma }_{jd}\right)\)) represent the centroid and standard deviation of the radial basis function associated with neuron \(j\). The process of generating a new solution is repeated until \(N\) new solutions are generated.
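The generation step described above can be sketched as follows, under stated assumptions: a Gaussian kernel with per-dimension standard deviations, independent sampling in each dimension, and no handling of search-space bounds (the text does not describe any). The function names and the example data are hypothetical.

```python
import random

def closest_neuron(x, centroids, sigmas):
    """Index of the neuron with the highest activation for x.

    For an (assumed) Gaussian kernel, the highest activation corresponds
    to the smallest sigma-scaled squared distance, which avoids numerical
    underflow of the exponential for distant points.
    """
    def scaled_sq(k):
        return sum(((a - c) / s) ** 2
                   for a, c, s in zip(x, centroids[k], sigmas[k]))
    return min(range(len(centroids)), key=scaled_sq)

def generate_solutions(significant, centroids, sigmas, n_new, rng):
    """Create n_new solutions from the b significant ones."""
    new_solutions = []
    while len(new_solutions) < n_new:
        x = rng.choice(significant)                 # random significant solution
        j = closest_neuron(x, centroids, sigmas)    # neuron it belongs to
        # Sample each coordinate from N(C_j, sigma_j), dimension by dimension.
        new_solutions.append([rng.gauss(c, s)
                              for c, s in zip(centroids[j], sigmas[j])])
    return new_solutions

# Hypothetical example: both significant solutions lie near neuron 0.
rng = random.Random(0)
centroids = [(0.0, 0.0), (5.0, 5.0)]
sigmas = [(0.5, 0.5), (0.5, 0.5)]
significant = [(0.1, -0.1), (0.2, 0.0)]
population = generate_solutions(significant, centroids, sigmas, 200, rng)
```

In this example every new solution is drawn around the first centroid, since both significant solutions fall in its region; neurons that attract no significant solution are never sampled.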

Figure 5 presents a visual illustration of the sampling process, showing the specific regions within the input space that each neuron in the hidden layer is responsive to. These designated areas are targeted during the sampling phase, utilizing the parameters that correspond to the radial basis functions associated with each neuron. This graphical representation effectively highlights how the neural network’s structure facilitates the identification and sampling of distinct areas, based on the neurons’ sensitivity to particular segments of the input space. By employing the parameters of the radial basis functions, the process ensures that samples are drawn from regions most relevant to the neurons’ activation patterns, offering insight into the network’s method of approximating and exploring the input space through its hidden layer dynamics.

Computational procedure

The computational methodology of our approach is encapsulated in Algorithm 1, presented as pseudocode. Initially, the algorithm involves setting up input parameters, including the population size \(N\), the number of initialization iterations \(iterini\), and the number of evolution iterations \(itermax\). Following this setup, the population is initialized within the search space boundaries using the maximum designs method (line 2). The algorithm then enters a cycle (line 3) that repeats until the predefined number of iterations has been met (\(itermax\)). The cycle commences with training the radial basis function network with the current \(N\) elements (line 4). Subsequent to this training phase, the algorithm identifies the \(b\) most significant solutions out of the \(N\), utilizing the knee-point method for selection (line 5). The generation of the next set of \(N\) solutions is based on these \(b\) significant solutions (line 6), necessitating the alignment of each significant solution with the neuron in the hidden layer to which it is most closely related. Leveraging this alignment, new solutions are crafted by sampling a normal distribution with parameters derived from the associated neuron. This iterative process culminates in the generation of \(N\) new solutions. Upon completion of the cycle, the algorithm deems the best-performing solution as the answer to the optimization challenge, effectively iterating through initialization, training, selection, and generation phases to arrive at an optimal solution.

Pseudocode of the proposed method.

Experimental results

This section details a suite of experiments designed to evaluate the efficacy of our approach, herein referred to as RBFNN-A (RBFNN approach). For a comprehensive evaluation of our algorithm’s performance, several elements were considered. First, a set of 29 functions traditionally used to evaluate the accuracy and efficiency of metaheuristic methods was included. These functions were chosen to maintain compatibility with other previous studies and are listed in Table A of the Appendix. Second, the CEC 2022 test function set, which includes 12 functions with varying levels of complexity, was utilized. This set allows for the consideration of composite and hybrid functions, providing a robust test for the algorithm’s capabilities. These functions are detailed in Table B of the Appendix. Finally, a set of engineering problems was used in the comparisons to demonstrate the method’s effectiveness on practical problems commonly encountered in practice. A description of these engineering problems is provided in Table C of the Appendix. This multi-faceted approach ensures a thorough and relevant assessment of our algorithm.

To facilitate a comprehensive evaluation of our proposed approach’s effectiveness, we have incorporated a range of well-established metaheuristic algorithms into our analysis. These selected algorithms span from traditional to more contemporary optimization techniques, thereby covering a wide spectrum of methodologies. The comparative analysis includes the Artificial Bee Colony (ABC)16, Crow Search Algorithm (CSA)40, Differential Evolution (DE)41, Estimation of Distribution Algorithm (EDA)42, Moth-Flame Optimization (MFO)43, Harmony Search (HS)44, Simulated Annealing (SA)45, and State of Matter Search (SMS)46. To ensure a fair and consistent comparison across all methods, we have adhered to the parameter settings recommended by the original authors of each algorithm, as cited in their respective publications. By aligning each algorithm’s configuration with the suggestions of its authors, we aim to present each method in its best light, so that the performance metrics reflect the capabilities intended by their developers. This approach supports the integrity of the comparison, offering a clear assessment of how our proposed method compares with established benchmarks in the field.

This section is divided into four subsections. The first, “Assessment of performance in relation to its parameters”, evaluates the performance of the proposed method in terms of its own parameters. The second, “Performance comparison considering the 29 functions from table A”, presents a comprehensive performance analysis that compares the proposed method with established metaheuristic algorithms on the 29 functions of Table A. This comparison is intended to highlight the unique strengths and efficiency of the proposed approach. In the third, “Performance comparison considering the 12 CEC 2022 functions from table B”, the performance of all metaheuristic methods is analyzed on the CEC 2022 function set from Table B. Finally, in Section “Engineering design problems”, we analyze the performance of the metaheuristic algorithms when they attempt to solve the engineering problems described in Table C of the Appendix.

Assessment of performance in relation to its parameters

The two parameters, the number of elements \(N\) and the number of hidden neurons \(M\), significantly influence the expected performance of the proposed optimization algorithm. In this sub-section, we analyze how the algorithm behaves under different settings of these parameters. To keep the analysis straightforward, we consider only a subset of functions, namely \({f}_{2}, {f}_{5}, {f}_{13},{f}_{20}\) and \({f}_{25}\), which includes both unimodal and multimodal functions. These functions are described in Table A of the Appendix. During simulations, all functions are evaluated with a dimension \(n=50\). Initially, the parameters \(N\) and \(M\) are set to their default values, with \(N=50\) and \(M=10\). Our analysis then examines the impact of each parameter independently, keeping the other parameter fixed at its default value. To reduce the stochastic effects inherent to the algorithm, each benchmark function is executed independently 10 times. The termination criterion for the optimization process is set to a maximum of 1000 iterations (\(itermax=1000\)). This systematic approach ensures a thorough evaluation of how \(N\) and \(M\) affect the performance of the proposed algorithm.

In the first stage, the behavior of the proposed algorithm is analyzed by considering different values for \(N\). In this analysis, the values of \(N\) vary from 10 to 100, while the value of \(M\) remains fixed at \(10\). The results, recorded in Table 1, are presented in terms of the Average Best Fitness value (AB). The AB is the best (lowest) fitness value obtained in each run, averaged over the independent runs, and reflects the quality of the solutions generated. Lower values indicate superior performance. From Table 1, it is evident that the proposed algorithm maintains better performance on all functions when \(N\ge 50\). Conversely, when \(N<50\), the performance of the algorithm is inconsistent, generally producing suboptimal results. This analysis highlights the importance of adequately setting the parameter \(N\) to ensure the algorithm’s robustness and effectiveness.

In the second stage, the performance of the proposed algorithm is evaluated by considering different numbers of hidden neurons \(M\). In this experiment, the values of \(M\) are varied from 2 to 20, while the value of \(N\) remains fixed at 50. The statistical results obtained by the proposed method using different values of \(M\) are presented in Table 2. From Table 2, it is clear that our optimization algorithm performs best when \(M\ge 10\), consistently obtaining superior results across all functions. Conversely, when \(M<10\), the performance of the algorithm declines significantly, resulting in suboptimal outcomes.

In general, the experimental results shown in Tables 1 and 2 suggest that a proper combination of the parameter values \(N\) and \(M\) can significantly improve the performance of the proposed method and the quality of the solutions. From this experiment, we can conclude that the optimal parameter set consists of \(M\ge 10\) and \(N\ge 50\). Consequently, to avoid unnecessary computational load while maintaining high performance, the values of \(M\) and \(N\) were set to their minimum effective values, \(M=10\) and \(N=50\). This finding not only enhances the efficiency of the computational process but also validates the practical application of Eq. (6), which has been proposed in several studies in the literature for the training of radial basis neural networks. This validation demonstrates the robustness and reliability of the proposed algorithm within the tested parameter range.

Performance comparison considering the 29 functions from table A

For a broad evaluation, we utilized a diverse set of 29 benchmark functions, encompassing types such as unimodal, multimodal, and hybrid, with their mathematical representations provided in Table A of the Appendix. These functions were chosen to maintain compatibility with other previous studies. From Table A, \(f\left({x}^{*}\right)\) represents the optimal function value obtained at the position \({x}^{*}\), and \(\mathbf{S}\) denotes the defined lower and upper bounds of the search space. This structured approach aims to offer a detailed insight into the RBFNN-A’s capabilities, highlighting its strengths and areas of robustness across a variety of challenging functions.

In our comparative analysis of various algorithms, we concentrate on analyzing the fitness values produced by each method, attempting to minimize these values throughout the optimization process. The optimization efforts are regulated by a predefined stopping criterion of a maximum of 1000 iterations (\(itermax=1000\)). Additionally, the analysis examines the algorithms’ performance at different dimensional scales, namely 50 and 100 dimensions, to evaluate the scalability of our proposed approach. For consistency, each metaheuristic algorithm is configured with a population size of 50 individuals, which dictates the number of candidate solutions explored in each iteration. Considering the inherent stochastic nature of these algorithms, we conduct 30 independent runs for each benchmark function to achieve a robust evaluation. These repeated runs are crucial for understanding the impact of randomness and variability on the performance of the algorithms, thereby strengthening the statistical analysis. This methodology facilitates a detailed comparison of the algorithms’ efficiency across various operational scenarios and constraints.

The outcomes of each algorithm are detailed in Tables 3 and 4, which provide the numerical data for evaluating the performance of each approach for 50 and 100 dimensions, respectively. These measurements include the average best fitness values AB, the median fitness values MD, and the standard deviation SD of the fitness values. The Average Best Fitness value (AB) is the best (lowest) fitness value obtained in each run, averaged over all runs, and reflects the quality of the solutions each method generates. Lower values indicate superior performance. Furthermore, the Median Fitness value (MD) is the median fitness value computed over all runs for each method, providing an overall perspective on the algorithm’s performance and consistency. Both statistics are calculated from 30 separate runs to minimize the effect of randomness inherent in these algorithms. Lastly, the Standard Deviation (SD) quantifies the variability in the fitness values across the 30 executions for each method, with a lower standard deviation indicating more consistent and dependable performance. To aid in rapid identification of the most efficient algorithms, the entries in these tables that represent the best performance are highlighted in boldface, emphasizing the methods that yield the lowest fitness values.
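As a minimal illustration of how the three indicators are obtained from the best fitness value of each independent run, consider the sketch below. The function name and the sample values are hypothetical, and the use of the sample standard deviation (dividing by the number of runs minus one) is an assumption, since the text does not state which estimator is used.

```python
import statistics

def summarize_runs(best_fitness_per_run):
    """Compute AB, MD, and SD from the best fitness of each run."""
    return {
        "AB": statistics.mean(best_fitness_per_run),    # average best fitness
        "MD": statistics.median(best_fitness_per_run),  # median fitness
        "SD": statistics.stdev(best_fitness_per_run),   # sample std (assumed)
    }

# Hypothetical best fitness values from five runs (the study uses 30).
summary = summarize_runs([1.0, 2.0, 3.0, 4.0, 10.0])
```

The single poor run (10.0) raises AB and SD noticeably while leaving MD unchanged, which is why the median is reported alongside the average.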

The optimization outcomes for all 29 functions at 50 dimensions, detailed in Table 3, highlight the RBFNN-A algorithm’s superior performance across a broad spectrum of cases, specifically for functions \({f}_{1}\), \({f}_{2}\), \({f}_{3}\), \({f}_{4}\), \({f}_{5}\), \({f}_{9}\), \({f}_{10}\), \({f}_{11}\), \({f}_{12}\), \({f}_{15}\), \({f}_{16}\), \({f}_{17}\), \({f}_{19}\), \({f}_{20}\), \({f}_{21}\), \({f}_{22}\), \({f}_{23}\), \({f}_{25}\), \({f}_{26}\), \({f}_{27}\), and \({f}_{28}\). This demonstrates a notable enhancement in the proposed algorithm’s efficiency over traditional metaheuristic approaches. Nevertheless, the algorithm did not achieve the best results for functions \({f}_{6}\), \({f}_{7}\), \({f}_{8}\), \({f}_{13}\), \({f}_{14}\), \({f}_{18}\), \({f}_{24}\), and \({f}_{29}\). In direct comparison with the Crow Search Algorithm (CSA), RBFNN-A notably outperforms it on functions \({f}_{1}\), \({f}_{2}\), and \({f}_{4}\), with significant differences in the average best solution (AB) values of 1.49E+01, 8.05E+05, and 5.08E−01, respectively. Moreover, on functions \({f}_{3}\), \({f}_{5}\), and \({f}_{10}\), RBFNN-A still shows superior efficacy, albeit with smaller margins when compared to CSA. Similarly, against the Differential Evolution (DE) algorithm, RBFNN-A exhibits enhanced performance on the aforementioned functions, with a marked difference in AB values; for instance, a 1.66E+01 difference for function \({f}_{3}\). By using the information about promising regions within the search space, which are effectively delineated by the hidden neurons in the proposed algorithm, a significant enhancement in search efficiency is achieved. This feature enables the algorithm to discern and prioritize areas of the search space that are most likely to yield high-quality solutions, thereby streamlining the path towards discovering optimal or near-optimal solutions. 
Such an approach stands in stark contrast to traditional metaheuristic strategies, which predominantly hinge on the information derived from the best-performing element within the population. Consequently, this nuanced method of leveraging insights from the broader search landscape, rather than focusing narrowly on the peak performers, facilitates a more informed and strategic exploration. This, in turn, fosters faster convergence rates by effectively bypassing less promising regions and concentrating computational efforts on areas with the highest potential for success.

The analysis of the 29 functions at 100 dimensions, as detailed in Table 4, reveals that our algorithm, RBFNN-A, surpasses most competing methods, exhibiting consistent performance in solving optimization challenges. Despite not achieving top results in eight instances, its overall performance and its potential as a robust metaheuristic solution are clearly demonstrated, especially when considering the average best outcomes. These results further support RBFNN-A’s efficacy as an approach for complex optimization problems in metaheuristic research. Specifically, RBFNN-A outperformed other algorithms in 21 out of the 29 functions tested, notably excelling in functions \({f}_{1}\), \({f}_{2}\), \({f}_{3}\), \({f}_{4}\), \({f}_{5}\), \({f}_{7}\), \({f}_{9}\), \({f}_{10}\), \({f}_{11}\), \({f}_{12}\), \({f}_{15}\), \({f}_{17}\), \({f}_{19}\), \({f}_{20}\), \({f}_{21}\), \({f}_{22}\), \({f}_{23}\), \({f}_{25}\), \({f}_{26}\), \({f}_{27}\), and \({f}_{28}\). Its performance fell short in functions \({f}_{6}\), \({f}_{8}\), \({f}_{13}\), \({f}_{14}\), \({f}_{16}\), \({f}_{18}\), \({f}_{24}\), and \({f}_{29}\). In comparison with algorithms such as ABC, CAB, CSA, DE, EDA, HS, MFO, SA, and SMS, RBFNN-A demonstrated significant advantages, especially in the average best (AB) scores for the evaluated functions. For instance, in function \({f}_{1}\), RBFNN-A achieved an AB of 3.95, significantly better than the ABC algorithm, which scored 20.7. The design of our proposed algorithm (RBFNN-A) plays a pivotal role in enhancing the optimization process by identifying the most promising regions within the search space, as determined by its hidden neurons. This capability is crucial for achieving a balance between exploration and exploitation, two fundamental aspects of search strategies in optimization algorithms. 
Exploration involves venturing into new, uncharted areas of the search space to uncover potential solutions, while exploitation focuses on thoroughly investigating known, promising regions to refine existing solutions. By leveraging the predictive power of RBFNN-A to assess the potential of unexplored areas, our method intelligently decides when to embark on exploration to discover new possibilities and when to concentrate on exploitation to maximize the utility of already identified promising zones. This strategic balance ensures that computational resources are utilized efficiently, leading to a more effective and systematic approach to reaching optimal solutions.

Statistical analysis

Metaheuristic algorithms, characterized by their stochastic optimization processes, leverage randomness to navigate complex problem spaces in search of optimal solutions. Due to their inherent stochasticity, the performance outcomes of these algorithms can fluctuate across various executions or datasets. To establish the statistical significance of the results yielded by different methods, the Wilcoxon signed-rank test47,48 is employed. This non-parametric statistical test is designed to assess whether the performance differences observed between two algorithms are statistically significant or merely the result of random variation. Unlike parametric tests, the Wilcoxon test does not assume a specific distribution for the data. Instead, it ranks the absolute differences of paired observations, typically the performance metrics of two algorithms tested against the same suite of functions, and compares the rank sums of the positive and negative differences. From these ranks, the test calculates a \(p\)-value, that is, the probability of observing a discrepancy at least as large as the one measured if the two algorithms actually performed equally. Should this \(p\)-value fall below a certain threshold, such as 0.05, the differences are considered statistically significant, indicating that one algorithm consistently outperforms or underperforms the other in a meaningful way.
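The mechanics of the signed-rank test can be sketched in a few lines of plain Python. This is an illustrative sketch rather than the procedure used in the study: it drops zero differences, assigns average ranks to tied absolute differences, and computes an exact two-sided \(p\)-value that is only valid when there are no ties. The function name and the sample data are hypothetical; in practice a library routine such as `scipy.stats.wilcoxon` would normally be preferred.

```python
def wilcoxon_signed_rank(a, b):
    """Return (W_plus, W_minus, p) for paired samples a and b."""
    diffs = [x - y for x, y in zip(a, b) if x != y]   # drop zero differences
    n = len(diffs)
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:                                      # average ranks for ties
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    # Exact null distribution: number of subsets of {1..n} per rank sum.
    total = n * (n + 1) // 2
    counts = [1] + [0] * total
    for r in range(1, n + 1):
        for s in range(total, r - 1, -1):
            counts[s] += counts[s - r]
    w = min(w_plus, w_minus)
    p = min(1.0, 2 * sum(counts[: int(w) + 1]) / 2 ** n)
    return w_plus, w_minus, p

# Hypothetical paired results of two algorithms on six functions
# (lower is better); the first algorithm is better on every function.
alg_a = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
alg_b = [1.5, 3.0, 4.5, 6.0, 7.5, 9.0]
w_plus, w_minus, p_value = wilcoxon_signed_rank(alg_a, alg_b)
```

With six pairs that all favor the same algorithm, the exact two-sided \(p\)-value is \(2/{2}^{6}=0.03125\), which is below the 0.05 threshold.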

In our study, the Wilcoxon signed-rank test is applied to the results listed in Table 5, which features the performance of various algorithms on functions evaluated at 100 dimensions. This table facilitates a statistical comparison between our proposed RBFNN-A method and other metaheuristic approaches, offering a robust statistical validation of the conducted experiments. For clarity, Table 5 employs specific symbols to denote the outcomes of the Wilcoxon test: the symbol ▲ signifies that RBFNN-A has outperformed a competing algorithm, ▼ indicates instances where RBFNN-A was outperformed by another method, and ► denotes cases where no statistically significant difference was observed between RBFNN-A and another algorithm according to the Wilcoxon test. Analysis of Table 5 reveals that RBFNN-A demonstrates superior performance over competing algorithms across a wide array of functions, specifically \({f}_{1}\), \({f}_{2}\), \({f}_{3}\), \({f}_{4}\), \({f}_{5}\), \({f}_{7}\), \({f}_{9}\), \({f}_{10}\), \({f}_{11}\), \({f}_{12}\), \({f}_{13}\), \({f}_{15}\), \({f}_{16}\), \({f}_{17}\), \({f}_{19}\), \({f}_{20}\), \({f}_{21}\), \({f}_{22}\), \({f}_{23}\), \({f}_{24}\), \({f}_{25}\), \({f}_{26}\), \({f}_{27}\), and \({f}_{28}\). RBFNN-A showed no significant difference when compared with the MFO algorithm at \({f}_{8}\) and faced challenges outperforming the ABC, CSA, EDA, HS, MFO, and SA algorithms at functions \({f}_{18}\) and \({f}_{29}\), and the DE algorithm at \({f}_{6}\), \({f}_{8}\), \({f}_{14}\), \({f}_{18}\) and \({f}_{29}\), highlighting the nuanced landscape of algorithmic performance across different optimization challenges. Our approach has yielded substantial advantages in terms of scalability. By using the information encoded within the neural network, our algorithm is capable of effectively addressing larger and more complex problems. 
This is particularly beneficial in overcoming the curse of dimensionality, a prevalent challenge in high-dimensional optimization tasks. The neural network’s capacity to compactly represent and utilize intricate patterns and relationships within the data allows our method to navigate these vast search spaces more efficiently. Our algorithm can identify relevant features and dynamics of the optimization problem by leveraging the neural network’s encoded knowledge, thus facilitating a more targeted and informed search process. As a result, our method is well-equipped to manage the complexities inherent in high-dimensional optimization problems, making it a reliable solution for a range of challenging scenarios.

Convergence analysis

Convergence analysis49 is an examination that aims to evaluate how metaheuristic algorithms approach an optimal solution to a given problem over time. The importance of convergence analysis lies in its ability to provide insights into the reliability, efficiency, and effectiveness of a metaheuristic algorithm. This analysis is crucial for algorithm selection and tuning in practical applications, as it helps to guarantee that the chosen metaheuristic will perform well on specific classes of problems, thereby saving computational resources and improving solution quality.

To evaluate the convergence of the metaheuristic algorithms, we conducted an experiment in which all participating algorithms were tasked with optimizing the same set of 29 functions. The primary metric for assessing each algorithm’s performance was the point at which it reached its best solution. However, instead of measuring this point in iterations, we quantified it by the number of function accesses (evaluations of the objective function) required to achieve that solution. This approach was chosen over counting iterations because metaheuristic algorithms vary widely in their design, with some algorithms inherently making more function accesses per iteration than others. This variance could skew the results if iterations were the sole metric. By focusing on function accesses, we ensure a more equitable and objective comparison, accurately reflecting each algorithm’s efficiency in finding the best solution without bias towards its operational details.
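One simple way to record this metric independently of the algorithm’s internal structure is to wrap the objective function in a counter. The sketch below is illustrative only; the class name, its attributes, and the test function are hypothetical and do not come from the study.

```python
import math

class CountingObjective:
    """Wrap an objective function and count how often it is accessed.

    Also records the access count at which the best (lowest) value
    seen so far was first obtained.
    """

    def __init__(self, func):
        self.func = func
        self.accesses = 0
        self.best = math.inf
        self.accesses_at_best = 0

    def __call__(self, x):
        self.accesses += 1
        value = self.func(x)
        if value < self.best:            # strict improvement only
            self.best = value
            self.accesses_at_best = self.accesses
        return value

def sphere(x):
    """Simple minimization test function: sum of squares."""
    return sum(v * v for v in x)

objective = CountingObjective(sphere)
for candidate in [(3.0,), (1.0,), (2.0,), (1.0,)]:
    objective(candidate)
```

Any optimizer can then be given `objective` in place of the raw function, and `objective.accesses_at_best` reports how many accesses were needed to reach its best solution, regardless of how many accesses the optimizer makes per iteration.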

Table 6 compares the number of function accesses required by each algorithm to converge on the test functions in 50 dimensions. The proposed algorithm converges with markedly fewer function accesses than its competitors. This efficiency is attributed to the algorithm’s strategy of progressively narrowing the search area within the function landscape, focusing on the regions identified as most promising for locating the global solution. Through this iterative process, the algorithm systematically reduces the search space until no promising regions remain to be explored or exploited, at which point convergence is achieved. The difference is clearest in the comparison with the next-best algorithm, SMS, which required 81,461 function accesses to converge, whereas the proposed algorithm converged with approximately 1400. This reduction demonstrates the convergence efficiency of the proposed algorithm and makes it a valuable tool for high-dimensional optimization problems across scientific and engineering fields.

Performance comparison considering the 12 CEC 2022 functions from Table B

The CEC 2022 Special Session on Single Objective Optimization50 provides a diverse set of benchmark functions designed to test the performance of optimization algorithms across a range of challenges. These functions are chosen to represent the difficulties encountered in real-world optimization problems and are summarized in Table B. The CEC 2022 functions are built by shifting and rotating basic functions and by combining several of them into a single function. These modifications make the functions significantly harder to optimize than traditional benchmarks. Shifts and rotations make the search space more complex and less predictable, so the global optimum is harder to locate. Combining multiple basic functions into hybrid and composite functions adds further difficulty, because the algorithm must navigate varied landscapes within a single problem. This increased difficulty provides a more rigorous test of the robustness, adaptability, and overall performance of optimization methods.

The CEC 2022 functions were optimized in the standard 20-dimensional setting. To mitigate the random effects inherent in the stochastic nature of the methods, each function was executed 30 times for each metaheuristic algorithm. The results, presented in Table 7, report the average of the best values (AB) across the 30 runs, along with the dispersion in terms of standard deviation (SD). This protocol allows the effectiveness and consistency of each algorithm on the CEC 2022 functions to be compared reliably.
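As an illustration of how the two statistics are obtained from repeated runs, the following minimal sketch computes AB and SD from a list of best values. The helper name `summarize_runs` and the synthetic data are hypothetical, and the use of the sample (n - 1) standard deviation is an assumption, since the text does not state which estimator was used.

```python
import random
import statistics


def summarize_runs(best_values):
    """Return (AB, SD): mean and standard deviation of the per-run best values."""
    ab = statistics.mean(best_values)
    sd = statistics.stdev(best_values)  # sample standard deviation (assumption)
    return ab, sd


# Synthetic stand-in for the best value found in each of 30 independent runs.
rng = random.Random(1)
best_values = [abs(rng.gauss(0.0, 1.0)) for _ in range(30)]

ab, sd = summarize_runs(best_values)
print(f"AB = {ab:.4f}, SD = {sd:.4f}")
```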

According to the values in Table 7, it is clear that the proposed RBFNN-A method produces the best solutions in terms of accuracy (AB). The DE and ABC methods also perform well, whereas the other methods show poor performance. A more detailed analysis of the table reveals that, generally, the methods perform better on functions \({F}_{1}\) to \({F}_{5}\), which are basic unimodal and multimodal functions. In contrast, functions \({F}_{6}\) to \({F}_{12}\) pose greater challenges, as they represent combinations of several functions that generate higher complexity. It is in these more complex functions that the proposed algorithm’s performance stands out the most, significantly differing from the other methods and highlighting its superior capability in handling difficult optimization problems.

Table 7 also shows that the proposed RBFNN-A method is more robust in terms of standard deviation (SD), as it produces a smaller dispersion of solutions across the set of runs. To illustrate the distribution of the solutions more clearly, Fig. 6 shows the distributions for each metaheuristic algorithm on three selected functions: (a) \({F}_{2}\), (b) \({F}_{8}\) and (c) \({F}_{12}\). These functions were chosen to represent different types of functions in the CEC 2022 set. Specifically, \({F}_{2}\) is a basic multimodal function, \({F}_{8}\) is a hybrid function, and \({F}_{12}\) is a composite function. The figure shows that the proposed method has the narrowest distribution for each of these functions, indicating consistent performance, whereas the other methods exhibit varying degrees of dispersion. This highlights the robustness and reliability of the proposed method in producing stable and accurate solutions across different types of optimization problems.

To assess the performance of the methods examined in our study, we used the Friedman ranking test51. The Friedman ranking test is a non-parametric statistical test suitable for evaluating and ranking multiple techniques across different datasets or scenarios, particularly when the underlying data do not meet the normality assumption. It offers a dependable alternative to parametric tests such as ANOVA. In the Friedman test, each method is ranked based on its performance within each dataset, with the best-performing method receiving a rank of 1, the second-best a rank of 2, and so on. If two methods perform equally, they are assigned the average of the ranks they would occupy. Table 8 presents the results of the Friedman analysis, computed from the AB values in Table 7. Based on these results, the RBFNN-A method is the top performer, followed by DE and ABC, while the other methods receive lower rankings, indicating weaker performance.
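The ranking step of the Friedman procedure, including the averaging of tied ranks, can be sketched as follows. This is only an illustration: the function names `rank_row` and `friedman_mean_ranks` and the small table are hypothetical and do not reproduce the values of Table 7, and lower AB is assumed to be better.

```python
def rank_row(values):
    """Rank one row ascending (1 = best); tied entries share the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the group while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        average_rank = (pos + end) / 2.0 + 1.0
        for k in range(pos, end + 1):
            ranks[order[k]] = average_rank
        pos = end + 1
    return ranks


def friedman_mean_ranks(table):
    """table[f][m] is the AB value of method m on function f; returns mean rank per method."""
    n_methods = len(table[0])
    totals = [0.0] * n_methods
    for row in table:
        for m, rank in enumerate(rank_row(row)):
            totals[m] += rank
    return [total / len(table) for total in totals]


# Hypothetical AB values: 3 functions (rows) by 3 methods (columns).
example = [
    [0.10, 0.30, 0.30],
    [0.05, 0.02, 0.40],
    [1.00, 2.00, 3.00],
]
print(friedman_mean_ranks(example))
```

The method with the lowest mean rank is the top performer. Computing the test statistic and its p-value from these ranks is a separate step that this sketch omits.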

Engineering design problems

Validating the performance of a new metaheuristic method in engineering design problems is crucial for several reasons. First, engineering design problems often involve complex and nonlinear optimization tasks that require robust and efficient solution strategies. By testing a new metaheuristic method in these scenarios, researchers can assess its effectiveness, reliability, and performance under realistic conditions. Second, validation helps ensure that the new method can handle the specific constraints and requirements inherent in engineering problems. This process also highlights the method’s advantages over existing approaches, potentially leading to more innovative and optimized design solutions.

This subsection applies the proposed method to three engineering design problems: the Three-bar truss, the Pressure vessel, and the Welded-beam. The objective is to test the effectiveness of the proposed metaheuristic method against other techniques in these practical scenarios. By evaluating the methods on these diverse engineering problems, we aim to demonstrate the robustness and applicability of the proposed approach in real-world situations. For detailed mathematical descriptions of each problem, readers are referred to Table C in the appendix.

In the analysis of engineering design problems, the same metaheuristic algorithms from the previous analysis (ABC, CAB, CSA, DE, EDA, HS, MFO, SA, SMS, and RBFNN-A) were employed. Each experiment was conducted with a population of 50 individuals and a stopping criterion of 1000 iterations for every algorithm. Given the stochastic nature of metaheuristic algorithms, each engineering problem was independently executed 30 times to ensure reliable results. The numerical outcomes for each engineering challenge are detailed, considering their specific decision variables, constraints, and fitness values. Additionally, the statistical evaluation includes the averaged best fitness values (AB) and the standard deviation (SD) to provide a comprehensive understanding of each algorithm’s performance. This thorough analysis ensures that the results are robust and that the effectiveness of each algorithm in solving engineering design problems is accurately assessed.

Optimization problems in engineering often have constraints on their decision variables, explicitly defined by constraint functions. To handle these constraints, the constraint separation technique has been employed in the following experiments. This technique involves treating constraints independently from the objective function during the optimization process. With this methodology, each candidate solution generated by the metaheuristic algorithm is checked for feasibility by evaluating it against all constraints. Only those solutions that satisfy all the constraints are considered feasible and are passed on to the next phase of the optimization process. Conversely, infeasible solutions are discarded and replaced by new ones. This approach ensures that the optimization focuses solely on viable solutions, enhancing efficiency and effectiveness in finding the optimal solution.
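The feasibility check and the replacement of infeasible candidates can be sketched as follows. This is a minimal illustration under stated assumptions: constraints are written in the form g(x) <= 0, and the names `is_feasible` and `sample_feasible`, the retry limit, and the toy constraints are hypothetical rather than taken from the experiments.

```python
import random


def is_feasible(x, constraints):
    """A candidate is feasible when every constraint g(x) <= 0 holds."""
    return all(g(x) <= 0.0 for g in constraints)


def sample_feasible(generate, constraints, max_tries=10_000):
    """Draw candidates until one is feasible; infeasible ones are discarded."""
    for _ in range(max_tries):
        x = generate()
        if is_feasible(x, constraints):
            return x
    raise RuntimeError("no feasible candidate found within max_tries")


# Toy constraints on the unit square: inside the unit disk and x[0] >= 0.2.
rng = random.Random(0)
constraints = [
    lambda x: x[0] ** 2 + x[1] ** 2 - 1.0,
    lambda x: 0.2 - x[0],
]

population = [
    sample_feasible(lambda: [rng.random(), rng.random()], constraints)
    for _ in range(20)
]
print(len(population), all(is_feasible(x, constraints) for x in population))
```

Because the constraints are evaluated separately from the objective, the objective function is only called on feasible candidates, which matters when each evaluation is expensive.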

A) Three-bar truss design problem

The Three-bar truss design problem52 involves a 2-dimensional search space, with the objective of minimizing the volume of a loaded three-bar truss structure. This truss structure is depicted in Fig. 7. The optimization is subject to three constraints, detailed in Table C in the appendix. The results for the Three-bar truss problem are summarized in Table 9. These results show that the proposed method finds the optimal solution for this engineering problem. Its ability to handle the constraints of this problem highlights its practical applicability to tangible engineering problems.

B) Pressure vessel design problem

The Pressure Vessel Design problem53 is depicted in Fig. 8 and aims to minimize the total cost of material, forming, and welding, taking into account four design parameters: the thickness of the shell (\({T}_{s}={x}_{1}\)), the thickness of the head (\({T}_{h}={x}_{2}\)), the inner radius (\(R={x}_{3}\)), and the length of the shell (\(L={x}_{4}\)). A detailed mathematical description of this problem can be found in Table C of the appendix. Table 10 summarizes the results for this design problem, which show that the RBFNN-A method, along with the DE and MFO methods, obtains the best solution, while the other methods show varying levels of performance.
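For orientation, the sketch below encodes the pressure vessel problem in the form most commonly cited in the literature, with constraints written as g(x) <= 0. The coefficients are an assumption in the sense that they follow that common formulation; the authoritative definition for this study is the one in Table C, which may differ. The function names and the sample design are hypothetical, and the sample is not claimed to be optimal.

```python
import math


def pressure_vessel_cost(x):
    """Total cost of material, forming, and welding (common literature form)."""
    x1, x2, x3, x4 = x  # Ts, Th, R, L
    return (
        0.6224 * x1 * x3 * x4
        + 1.7781 * x2 * x3 ** 2
        + 3.1661 * x1 ** 2 * x4
        + 19.84 * x1 ** 2 * x3
    )


def pressure_vessel_constraints(x):
    """Constraint values g_i(x); the design is feasible when all are <= 0."""
    x1, x2, x3, x4 = x
    return [
        -x1 + 0.0193 * x3,                      # minimum shell thickness
        -x2 + 0.00954 * x3,                     # minimum head thickness
        -math.pi * x3 ** 2 * x4
        - (4.0 / 3.0) * math.pi * x3 ** 3
        + 1296000.0,                            # minimum enclosed volume
        x4 - 240.0,                             # maximum shell length
    ]


# Hypothetical sample design, used only to exercise the functions.
design = [1.0, 0.5, 50.0, 100.0]
print(pressure_vessel_cost(design))
print(all(g <= 0.0 for g in pressure_vessel_constraints(design)))
```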

C) Welded-beam design problem

The Welded-Beam Design problem54 focuses on minimizing manufacturing costs by optimizing four design variables (see Fig. 9): width (\(h={x}_{1}\)), length (\(l={x}_{2}\)), depth (\(t={x}_{3}\)), and thickness (\(b={x}_{4}\)). Governed by seven constraints, this problem presents a complex scenario typical of real-world engineering design. The detailed specification of the Welded-Beam design is given in Table C of the appendix. The results obtained by the compared algorithms, including the proposed method, are reported in Table 11. The data in this table show that the proposed method outperforms the other well-known metaheuristic methods, achieving the best solution for this design problem. This result supports the practical applicability of the proposed method to real-world engineering problems, particularly cost-efficient design optimization.

Time complexity

In many engineering optimization problems, the cost of function evaluations is a major constraint that limits the number of evaluations available. This limitation poses a significant challenge to the field of global optimization, as conventional metaheuristic techniques typically require a substantial number of function evaluations to identify optimal solutions. Consequently, the high computational demand of these methods often exceeds what is feasible within the given resource constraints, necessitating the development of more efficient algorithms that can deliver high-quality results with fewer function evaluations. This underscores the need for innovative approaches that balance accuracy and efficiency in resource-constrained optimization scenarios.

In the proposed approach, the RBFNN is trained to model the objective function values based on the current set of solutions. The algorithm identifies the neurons in the hidden layer that correspond to the highest objective function values. It then uses the parameters of these key neurons, namely their centroids and standard deviations, to generate new solutions by sampling in order of importance until the desired number of solutions is reached. By focusing on the promising regions of the search space that yield high objective function values, the algorithm avoids the need for exhaustive evaluation of all possible solutions. This strategy enables a more informed and efficient exploration of the search space. Consequently, the proposed method can find the global solution with a significantly reduced number of objective function evaluations.
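The sampling step can be sketched as follows, assuming the RBFNN has already been trained. This is not the authors’ implementation: the function `sample_from_neurons`, the per-neuron `scores` (standing in for the objective value associated with each hidden neuron), the `top_k` cutoff, the isotropic Gaussian sampling, and the clipping to bounds are all illustrative assumptions.

```python
import random


def sample_from_neurons(centroids, sigmas, scores, n_solutions, bounds, rng, top_k=2):
    """Generate solutions around the top_k neurons, visited in order of importance."""
    # Neurons sorted from highest to lowest score; only the key ones are kept.
    order = sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)[:top_k]
    solutions = []
    while len(solutions) < n_solutions:
        for j in order:
            if len(solutions) >= n_solutions:
                break
            # Gaussian sample centred on the neuron centroid, clipped to the bounds.
            point = [
                min(max(rng.gauss(c, sigmas[j]), lo), hi)
                for c, (lo, hi) in zip(centroids[j], bounds)
            ]
            solutions.append(point)
    return solutions


# Hypothetical trained parameters for three hidden neurons in two dimensions.
centroids = [[0.0, 0.0], [5.0, 5.0], [-5.0, -5.0]]
sigmas = [0.5, 0.5, 0.5]
scores = [0.1, 0.9, 0.4]
bounds = [(-10.0, 10.0), (-10.0, 10.0)]

new_solutions = sample_from_neurons(
    centroids, sigmas, scores, n_solutions=10, bounds=bounds, rng=random.Random(0)
)
print(len(new_solutions))
```

Only the newly sampled solutions need to be evaluated on the true objective function, which is where the saving in function evaluations comes from.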